Estimating g-Leakage via Machine Learning

Abstract.

This paper considers the problem of estimating the information leakage of a system in the black-box scenario, i.e. when the system’s internals are unknown to the learner, or too complicated to analyze, and the only available information are pairs of input-output data samples, obtained by submitting queries to the system or provided by a third party. The frequentist approach relies on counting the frequencies to estimate the input-output conditional probabilities, however this method is not accurate when the domain of possible outputs is large. To overcome this difficulty, the estimation of the Bayes error of the ideal classifier was recently investigated using Machine Learning (ML) models, and it has been shown to be more accurate thanks to the ability of those models to learn the input-output correspondence. However, the Bayes vulnerability is only suitable to describe one-try attacks. A more general and flexible measure of leakage is the -vulnerability, which encompasses several different types of adversaries, with different goals and capabilities. We propose a novel approach to perform black-box estimation of the -vulnerability using ML which does not require to estimate the conditional probabilities and is suitable for a large class of ML algorithms. First, we formally show the learnability for all data distributions. Then, we evaluate the performance via various experiments using k-Nearest Neighbors and Neural Networks. Our approach outperform the frequentist one when the observables domain is large.

1. Introduction

The information leakage of a system is a fundamental concern of computer security, and measuring the amount of sensitive information that an adversary can obtain by observing the outputs of a given system is of the utmost importance to understand whether such leakage can be tolerated or must be considered a major security flaw. Much research effort has been dedicated to studying and proposing solutions to this problem, see for instance (Clark et al., 2001; Köpf and Basin, 2007; Chatzikokolakis et al., 2008a; Boreale, 2009; Smith, 2009; Alvim et al., 2012, 2014; Chatzikokolakis et al., 2019). So far, this area of research, known as quantitative information flow (QIF), has mainly focused on the so-called white-box scenario, i.e. assuming that the channel of the system is known, or can be computed by analyzing the system’s internals. This channel consists of the conditional probabilities of the outputs (observables) given the inputs (secrets).

The white-box assumption, however, is not always realistic: sometimes the system is unknown, or anyway it is too complex, so that an analytic computation becomes hard if not impossible to be performed. Therefore, it is important to consider also black-box approaches where we only assume the availability of a finite set of input-output pairs generated by the system. A further specification of a black box model is the “degree of inaccessibility”, namely whether or not we are allowed to interact with the system to obtain these pairs, or they are just provided by a third party. Both scenarios are realistic, and in our paper we consider the two cases.

The estimation of the internal probabilities of a system’s channel have been investigated in (Chothia and Guha, 2011) and (Chothia et al., 2014) via a frequentist paradigm, i.e., relying on the computation of the frequencies of the outputs given some inputs. However, this approach does not scale to applications for which the output space is very large since a prohibitively large number of samples would be necessary to achieve good results and fails on continuous alphabets unless some strong assumption on the distributions are made. In order to overcome this limitation, the authors of (Cherubin et al., 2019) exploited the fact that Machine Learning (ML) algorithms provide a better scalability to black-box measurements. Intuitively, the advantage of the ML approach over the frequentist one is its generalization power: while the frequentist method can only draw conclusions based on counts on the available samples, ML is able to extrapolate from the samples and provide better prediction (generalization) for the rest of the universe.

In particular, (Cherubin et al., 2019) proposed to use k-Nearest Neighbors (k-NN) to measure the basic QIF metrics, the Bayes vulnerability (Smith, 2009). This is the expected probability of success of an adversary that has exactly one attempt at his disposal (one-try), and tries to maximize the chance of guessing the right secret. The Bayes vulnerability corresponds to the converse of the error of the ideal Bayes classifier that, given any sample (observable), tries to predict its corresponding class (secret). Hence the idea is to build a model that approximates such classifier, and estimate its expected error. The main takeaway is that any ML rule which is universally consistent (i.e., approximates the ideal Bayes classifier) has a guarantee on the accuracy of the estimation, i.e., the error in the estimation of the leakage tends to vanish as the number of training samples grows large.

The method of (Cherubin et al., 2019), however, is limited to the Bayes vulnerability, which models only one particular kind of adversary. As argued in (Alvim et al., 2012), there are many other realistic ones. For instance, adversaries whose aim is to guess only a part of the secret, or a property of the secret, or that have multiple tries at their disposal. To represent a larger class of attacks, (Alvim et al., 2012) introduced the so-called -vulnerability. This metric is very general, and in particular it encompasses the Bayes vulnerability.

| Symbol | Description |

|---|---|

| a secret | |

| a guess | |

| an observable output by the system | |

| random var. for secrets taking values | |

| random var. for guesses taking values | |

| random var. for observables taking values | |

| size of a set | |

| Distribution over a set of symbols | |

| class of learning functions | |

| , | prior distribution over the secret space |

| , | empirical prior distribution over the secret space |

| Channel matrix | |

| joint distribution from prior and channel | |

| joint probability distribution | |

| empirical joint probability distribution | |

| conditional probability of given | |

| empirical conditional probabilities | |

| probability measure | |

| expected value | |

| gain function: guess and secret as inputs | |

| gain matrix of size for a specific | |

| -vulnerability | |

| -vulnerability functional | |

| empirical -vuln. functional evaluated on samples |

In this paper, we propose an approach to the black-box estimation of -vulnerability via ML. The idea is to reduce the problem to that of approximating the Bayes classifier, so that any universally consistent ML algorithm can be used for the purpose. This reduction essentially takes into account the impact of the gain function in the generation of the training data, and we propose two methods to obtain this effect, which we call channel pre-processing and data pre-processing, respectively. We evaluate our approach via experiments on various channels and gain functions. In order to show the generality of our approach, we use two different ML algorithms, namely k-NN and Artificial Neural Networks (ANN), and we compare their performances. The experimental results show that our approach provides accurate estimations, and that it outperforms by far the frequentist approach when the observables domain is large.

1.1. Our contribution

-

•

We propose a novel approach to the black-box estimation of -vulnerability based on ML. To the best of our knowledge, this is the first time that a method to estimate -vulnerability in a black-box fashion is introduced.

-

•

We provide statistical guarantees showing the learnability of the -vulnerability for all distributions and we derive distribution-free bounds on the accuracy of its estimation.

-

•

We validate the performance of our method via several experiments using k-NN and ANN models. The code is available at the URL https://github.com/LEAVESrepo/leaves.

1.2. Related work

One important aspect to keep in mind when measuring leakage is the kind of attack that we want to model. In (Köpf and Basin, 2007), Köpf and Basin identified various kinds of adversaries and showed that they can be captured by known entropy measures. In particular it focussed on the adversaries corresponding to Shannon and Guessing entropy. In (Smith, 2009) Smith proposed another notion, the Rényi min-entropy, to measure the system’s leakage when the attacker has only one try at its disposal and attempts to make its best guess. The Rényi min-entropy is the logarithm of the Bayes vulnerability, which is the expected probability of the adversary to guess the secret correctly. The Bayes vulnerability is the converse of the Bayes error, which was already proposed as a measure of leakage in (Chatzikokolakis et al., 2008b).

Alvim et al. (Alvim et al., 2012) generalized the notion of Bayes vulnerability to that of -vulnerability, by introducing a parameter (the gain function ) that describes the adversary’s payoff. The -vulnerability is the expected gain of the adversary in a one-try attack.

The idea of estimating the -vulnerability in the using ML techniques is inspired by the seminal work (Cherubin et al., 2019), which used k-NN algorithms to estimate the Bayes vulnerability, a paradigm shift with respect to the previous frequentist approaches (Chatzikokolakis et al., 2010; Chothia and Guha, 2011; Chothia et al., 2014). Notably, (Cherubin et al., 2019) showed that universally consistent learning rules allow to achieve much more precise estimations than the frequentist approach when considering large or even continuous output domains which would be intractable otherwise. However, (Cherubin et al., 2019) was limited to the Bayes adversary. In contrast, our approach handles any adversary that can be modeled by a gain function . Another novelty w.r.t. (Cherubin et al., 2019) is that we consider also ANN algorithms, which in various experiments appear to perform better than the k-NN ones.

Bordenabe and Smith (Bordenabe and Smith, 2016) investigated the indirect leakage induced by a channel (i.e., leakage on sensitive information not in the domain of the channel), and proved a fundamental equivalence between Dalenius min-entropy leakage under arbitrary correlations and g-leakage under arbitrary gain functions. This result is similar to our Theorem 4.2, and it opens the way to the possible extension of our approach to this more general leakage scenario.

2. Preliminaries

In this section, we recall some useful notions from QIF and ML.

2.1. Quantitative information flow

Let be a set of secrets and a set of observations. The adversary’s initial knowledge about the secrets is modeled by a prior distribution (namely ). A system is modeled as a probabilistic channel from to , described by a stochastic matrix , whose elements give the probability to observe when the input is (namely ). Running with input induces a joint distribution on denoted by .

In the -leakage framework (Alvim et al., 2012) an adversary is described by a set of guesses (or actions) that it can make about the secret, and by a gain function expressing the gain of selecting the guess when the real secret is . The prior -vulnerability is the expected gain of an optimal guess, given a prior distribution on secrets:

| (1) |

In the posterior case, the adversary observes the output of the system which allows to improve its guess. Its expected gain is given by the posterior -vulnerability, according to

| (2) |

Finally, the multiplicative111Originally the multiplicative version of -leakage was defined as the of the definition given here. In recent literature the is not used anymore. Anyway, the two definitions are equivalent for system comparison, since is monotonic. and additive -leakage quantify how much a specific channel increases the vulnerability of the system:

| (3) |

The choice of the gain function allows to model a variety of different adversarial scenarios. The simplest case is the identity gain function, given by , iff and otherwise. This gain function models an adversary that tries to guess the secret exactly in one try; is the Bayes-vulnerability, which corresponds to the complement of the Bayes error (cfr. (Alvim et al., 2012)).

However, the interest in -vulnerability lies in the fact that many more adversarial scenarios can be captured by a proper choice of . For instance, taking with iff and otherwise, models an adversary that tries to guess the secret correctly in tries. Moreover, guessing the secret approximately can be easily expressed by constructing from a metric on ; this is a standard approach in the area of location privacy (Shokri et al., 2011, 2012) where is taken to be inversely proportional to the Euclidean distance between and . Several other gain functions are discussed in (Alvim et al., 2012), while (Alvim et al., 2016) shows that any vulnerability function satisfying basic axioms can be expressed as for a properly constructed .

The main focus of this paper is estimating the posterior -vulnerability of the system from such samples. Note that, given , estimating and is straightforward, since only depends on the prior (not on the system) and it can be either computed analytically or estimated from the samples.

2.2. Artificial Neural Networks

We provide a short review of the aspects of ANN that are relevant for this work. For further details, we refer to (Bishop, 2007; Goodfellow et al., 2016; Hastie et al., 2001). Neural networks are usually modeled as directed graphs with weights on the connections and nodes that forward information through “activation functions”, often introducing non-linearity (such as sigmoids or soft-max). In particular, we consider an instance of learning known as supervised learning, where input samples are provided to the network model together with target labels (supervision). From the provided data and by means of iterative updates of the connection weights, the network learns how the data and respective labels are distributed. The training procedure, known as back-propagation, is an optimization process aimed at minimizing a loss function that quantifies the quality of the network’s prediction w.r.t. the data.

Classification problems are a typical example of tasks for which supervised learning works well. Samples are provided together with target labels which represent the classes they belong to. A model can be trained using these samples and, later on, it can be used to predict the class of new samples.

According to the No Free Lunch theorem (NFL) (Wolpert, 1996), in general, it cannot be said that ANN are better than other ML methods. However, it is well known that the NFL can be broken by additional information on the data or the particular problem we want to tackle, and, nowadays, for most applications and available data, especially in multidimensional domains, ANN models outperform other methods therefore representing the state-of-the-art.

2.3. k-Nearest Neighbors

The k-NN algorithm is one of the simplest algorithms used to classify a new sample given a training set of samples labelled as belonging to specific classes. This algorithm assumes that the space of the features is equipped with a notion of distance. The basic idea is the following: every time we need to classify a new sample, we find the samples whose features are closest to those of the new one (nearest neighbors). Once the nearest neighbors are selected, a majority vote over their class labels is performed to decide which class should be assigned to the new sample. For further details, as well as for an extensive analysis of the topic, we refer to the chapters about k-NN in (Shalev-Shwartz and Ben-David, 2014; Hastie et al., 2001).

3. Learning -vulnerability: Statistical Bounds

This section introduces the mathematical problem of learning -vulnerability, characterizing universal learnability in the present framework. We derive distribution-free bounds on the accuracy of the estimation, implying the estimator’s statistical consistence.

3.1. Main definitions

We consider the problem of estimating the -vulnerability from samples via ML models, and we show that the analysis of this estimation can be conducted in the general statistical framework of maximizing an expected functional using observed samples. The idea can be described using three components:

-

•

A generator of random secrets with , drawn independently from a fixed but unknown distribution ;

-

•

a channel that returns an observable with for every input , according to a conditional distribution , also fixed and unknown;

-

•

a learning machine capable of implementing a set of rules , where denotes the class of functions and is the finite set of guesses.

Moreover let us note that

| (4) |

where and are finite real values, and and are finite sets. The problem of learning the -vulnerability is that of choosing the function which maximizes the functional , representing the expected gain, defined as:

| (5) |

Note that corresponds to the “guess” , for the given , in (2). The maximum of is the -vulnerability, namely:

| (6) |

3.2. Principle of the empirical -vulnerability maximization

Since we are in the black box scenario, the joint probability distribution is unknown. We assume, however, the availability of independent and identically distributed (i.i.d.) samples drawn according to that can be used as a training set to solve the maximization of over and additionally i.i.d. samples are available to be used as a validation222We call validation set rather than test set, since we use it to estimate the -vulnerability with the learned , rather than to measure the quality of . set to estimate the average in (5). Let us denote these sets as: and , respectively.

In order to maximize the -vulnerability functional (5) for an unknown probability measure , the following principle is usually applied. The expected -vulnerability functional is replaced by the empirical -vulnerability functional:

| (7) |

which is evaluated on rather than . This estimator is clearly unbiased in the sense that

Let denote the empirical optimal rule given by

| (8) |

which is evaluated on rather than . The function is the optimizer according to , namely the best way among the functions to approximate by maximizing over the class of functions . This principle is known in statistics as the Empirical Risk Maximization (ERM).

Intuitively, we would like to give a good approximation of the -vulnerability, in the sense that its expected gain

| (9) |

should be close to . Note that the difference

| (10) |

is always non negative and represents the gap by selecting a possible suboptimal function . Unfortunately, we are not able to compute either, since is unknown. In its place, we have to use its estimation Hence, the real estimation error is . Note that:

| (11) |

where the first term in the right end side represents the error induced by using the trained model and the second represents the error induced by estimating the -vulnerability over the samples in the validation set.

By using basics principles from statistical learning theory, we study two main questions:

-

•

When does the estimator work? What are the conditions for its statistical consistency?

-

•

How well does approximate ? In other words, how fast does the sequence of largest empirical g-leakage values converge to the largest g-leakage function? This is related to the so called rate of generalization of a learning machine that implements the ERM principle.

3.3. Bounds on the estimation accuracy

In the following we use the notation , where stands for the variance of the random variable . Namely, is the variance of the gain for a given function . We also use to represent the probability induced by sampling the training and validation sets over the distribution .

Next proposition, proved in Appendix B.1, states that we can probabilistically delimit the two parts of the bound in (11) in terms of the sizes and of the training and validation sets.

Proposition 3.1 (Uniform deviations).

For all ,

| (12) |

where the expectation is taken w.r.t. the random training set, and

| (13) |

Inequality (12) shows that the estimation error due to the use of a validation set in instead of the true expected gain vanishes with the number of validation samples. On the other hand, expression (13) implies ‘learnability’ of an optimal , i.e., the suboptimality of learned using the training set vanishes with the number of training samples.

On the other hand the bound in eq. 13 depends on the underlying distribution and the structural properties of the class through the variance. However, it does not explicitly depend on the optimization algorithm (e.g., stochastic gradient descent) used to learn the function from the training set, which just comes from assuming the worst-case upper bound over all optimization procedures. The selection of a “good” subset of candidate functions, having a high probability of containing an almost optimal classifier, is a very active area of research in ML (Chizat and Bach, 2020), and hopefully there will be some result available soon, which together with our results will provide a practical method to estimate the bound on the errors. In appendix F we discuss heuristics to choose a “good” model, together with a new set of experiments showing the impact of different architectures.

Theorem 3.2.

The averaged estimation error of the -vulnerability can be bounded as follows:

| (14) |

where the expectations are understood over all possible training and validation sets drawn according to . Furthermore, let , then :

| (15) |

where for , and, otherwise,

| (16) |

with .

Interestingly, the term is the average error induced when estimating the function using samples from the training set while indicates the average error incurred when estimating the -vulnerability using samples from the validation set. Clearly, in eq. 15, the scaling of these bounds with the number of samples are very different which can be made evident by using the order notation:

| (17) | ||||

| (18) |

These bounds indicate that the error in (17) vanishes much faster than the error in (18) and thus, the size of the training set, in general, should be kept larger than the size of the validation set, i.e., .

3.4. Sample complexity

We now study how large the validation set should be in order to get a good estimation. For , we define the sample complexity as the set of smallest integers and sufficient to guarantee that the gap between the true -vulnerability and the estimated is at most with at least probability:

Definition 3.3.

For , let all pairs be the set of smallest sizes of training and validation sets such that:

| (19) |

Next result says that we can bound the sample complexity in terms of , and (see Appendix B.3 for the proof).

Corollary 3.4.

The sample complexity of the ERM algorithm -vulnerability is bounded from above by the set of values satisfying:

| (20) | ||||

| (21) |

for all such that .

The theoretical results of this section are very general and cannot take full advantage of the specific properties of a particular model or data distribution. In particular, it is important to emphasize that the upper bound in (11) is independent of the learned function because and because of (39), and thus these bounds are independent of the specific algorithm and training sets in used to solve the optimization in (8). Furthermore, the maximizing in those in-equations is not necessarily what the algorithm would choose. Hence the bounds given in Theorem 3.2 and 3.4 in general are not tight. However, these theoretical bounds provide a worst-case measure from which learnability holds for all data sets.

In the next section, we will propose an approach for selecting and estimating . The experiments in Section 5 suggest that our method usually estimates much more accurately than what is indicated by Theorem 3.2.

4. From -vulnerability to Bayes vulnerability via pre-processing

This is the core section of the paper, which describes our approach to select the to estimate .

In principle one could train a neural network to learn by using as the loss function, and minimizing it over the training samples (cfr. Equation 8). However, this would require to be a differentiable function of the weights of the neural network, so that its gradient can be computed during the back-propagation. Now, the problem is that the component of is essentially a non-differentiable function, so it would need to be approximated by a suitable differentiable (surrogate) function, (e.g., as it is the case of the Bayes error via the cross-entropy). Finding an adequate differentiable function to replace each possible may be a challenging task in practice. If this surrogate does not preserve the original dynamic of the gradient of with respect to , the learned will be far from being optimal.

In order to circumvent this issue, we propose a different approach, which presents two main advantages:

-

(1)

it reduces the problem of learning to a standard classification problem, therefore it does not require a different loss function to be implemented for each adversarial scenario;

-

(2)

it can be implemented by using any universally consistent learning algorithm (i.e., any ML algorithm approximating the ideal Bayes classifier).

The reduction described in the above list (item 1) is based on the idea that, in the -leakage framework, the adversary’s goal is not to directly infer the actual secret , but rather to select the optimal guess about the secret. As a consequence, the training of the ML classifier to produce should not be done on pairs of type , but rather of type , expressing the fact that the best guess, in the particular run which produced , is . This shift from to is via a pre-processing and we propose two distinct and systematic ways to perform this transformation, called data and channel pre-processing, respectively. The two methods are illustrated in the following sections.

We remind that, according to section 3, we restrict, wlog, to non-negative ’s. If takes negative values, then it can be shifted by adding , without consequences for the -leakage value (cfr. (Alvim et al., 2012, 2014)). Furthermore we assume that there exists at least a pair such that . Otherwise would be and the problem of estimating it will be trivial.

4.1. Data pre-processing

The data pre-processing technique is completely black-box in the sense that it does not need access to the channel. We only assume the availability of a set of pairs of type , sampled according to , the input-output distribution of the channel. This set could be provided by a third party, for example. We divide the set in (training) and (validation), containing and pairs, respectively.

For the sake of simplicity, to describe this technique we assume that takes only integer values, in addition to being non-negative. The construction for the general case is discussed in Appendix C.3.

The idea behind the data pre-processing technique is that the effect of the gain function can be represented in the transformed dataset by amplifying the impact of the guesses in proportion to their reward. For example, consider a pair in , and assume that the reward for the guess is , while for another guess is . Then in the transformed dataset this pair will contribute with copies of and only copy of . The transformation is described in Algorithm 1. Note that in general it causes an expansion of the original dataset.

Estimation of

Given , we construct the set of pairs according to Algorithm 1. Then, we use to train a classifier , using an algorithm that approximates the ideal Bayes classifier. As proved below, gives the same mapping as the optimal empirical rule on (cfr. subsection 3.2). Finally, we use and to compute the estimation of as in (7), with replaced by .

Correctness

We first need some notation. For each , define:

| (22) |

which represents the “ideal” proportion of copies of that should contain. From we can now derive the ideal joint distribution on and the marginal on :

| (23) | where |

(note that because of the assumption on and ),

| (24) |

The channel of the conditional probabilities of given is:

| (25) |

Note that . By construction, it is clear that the generated by Algorithm 1 could have been generated, with the same probability, by sampling . The following theorem, whose proof is in Appendix C.1, establishes that the -vulnerability of is equivalent to the Bayes vulnerability of , and hence it is correct to estimate as an empirical Bayes classifier trained on .

Estimation error

To reason about the error we need to consider the optimal empirical classifiers. Assuming that we can perfectly match the above with the of Algorithm 1, we can repeat the same reasoning as above, thus obtaining , where is the empirical functional defined in (8), and is the corresponding empirical functional for evaluated in :

| (26) |

is the maximizer of this functional, i.e. . Therefore we have:

A bound on the estimation error of this method can therefore be obtained by using the theory developed in previous section, applied to the Bayes classification problem. Remember that the estimation error is is . With respect to the estimation error of the corresponding Bayes classifier, we have a magnification of a factor as shown by the following formula, where represents the empirical functional for the Bayes classifier:

However, the normalized estimation error (cfr. section 5) remains the same because both numerator and denominator are magnified by a factor .

Concerning the probability that the error is above a certain threshold , we have the same bound as those for the Bayes classifier in 3.1 and the other results of previous section, where is replaced by , by , by , and (because it’s a Bayes classifier). It may sounds a bit surprising that the error for the estimation of the -vulnerability is not much worse than for the estimation of the Bayes error, but we recall that we are essentially solving the same problem, only every quantity is magnified by a factor . Also, we are assuming that we can match perfectly by . When ranges in a large domain this may not be possible, and we should rather resort to the channel pre-processing method described in the next section.

4.2. Channel pre-processing

For this technique we assume black-box access to the system, i.e., that we can execute the system while controlling each input, and collect the corresponding output.

The core idea behind this technique is to transform the input of into entries of type , and to ensure that the distribution on the ’s reflects the corresponding rewards expressed by .

More formally, let us define a distribution on as follows:

| (27) | where |

(note that is strictly positive because of the assumptions on and ), and let us define the following matrix from to :

| (28) |

It is easy to check that is a stochastic matrix, hence the composition is a channel.

It is important to emphasize the following:

Remark

In the above definitions, and depend solely on and , and not on .

The above property is crucial to our goals, because in the black-box approach we are not supposed to rely on the knowledge of ’s internals.

We now illustrate how we can estimate using the pre-processed channel .

Estimation of

Given and , we build a set consisting of pairs of type sampled from . We also construct a set of pairs of type sampled from . Then, we use to train a classifier , using an algorithm that approximates the ideal Bayes classifier. Finally, we use and to compute the estimation of as in (7), with replaced by .

Alternatively, we could estimate by computing the empirical Bayes error of on a validation set of type sampled from , but the estimation would be less precise. Intuitively, this is because is more “noisy” than .

Correctness

The correctness of the channel pre-processing method is given by the following theorem, which shows that we can learn by training a Bayesian classifier on a set sampled from .

Theorem 4.2 (Correctness of channel pre-processing).

Interestingly, a result similar to Theorem 4.2 is also given in (Bordenabe and Smith, 2016), although the context is completely different from ours: the focus of (Bordenabe and Smith, 2016), indeed, is to study how the leakage of on may induce also a leakage of other sensitive information that has nothing to do with (in the sense that is not information manipulated by ). We intend to explore this connection in the context of a possible extension of our approach to this more general scenario.

Estimation error

Concerning the estimation error, the results are essentially the same as in previous section (with replaced by ). As for the bound on the probability of error, the results are worse, because the original variance is magnified by the channel pre-processing, which introduces a further factor of randomness in the sampling of training data in 12, which means that in practice this bound is more difficult to estimate.

4.3. Pros and cons of the two methods

The fundamental advantage of data pre-processing is that it allows to estimate from just samples of the system, without even black-box access. In contrast to channel pre-processing, however, this method is particularly sensitive to the values of the gain function . Large gain values will increase the size of , with consequent increase of the computational cost for estimating the -vulnerability. Moreover, if takes real values then we need to apply the technique described in Appendix C.3, which can lead to a large increase of the dataset as well. In contrast, the channel pre-processing method has the advantage of controlling the size of the training set, but it can be applied only when it is possible to interact with the channel by providing input and collecting output. Finally, from the precision point of view, we expect the estimation based on data pre-processing to be more accurate when consists of small integers, because the channel pre-processing introduces some extra noise in the channel.

5. Evaluation

In this section we evaluate our approach to the estimation of -vulnerability. We consider four different scenarios:

-

(1)

is a set of (synthetic) numeric data, the channel consists of geometric noise, and is the multiple guesses gain function, representing an adversary that is allowed to make several attempts to discover the secret.

-

(2)

is a set of locations from the Gowalla dataset (Gow, 2011), is the optimal noise of Shokri et al. (Shokri et al., 2012), and is one of the functions used to evaluate the privacy loss in (Shokri et al., 2012), namely a function anti-monotonic on the distance, representing the idea that the more the adversary’s guess is close to the target (i.e., the real location), the more he gains.

-

(3)

is the Cleveland heart disease dataset (Dua and Graff, 2017), is a differentially private (DP) mechanism (Dwork et al., 2006; Dwork, 2006), and assigns higher values to worse heart conditions, modeling an adversary that aims at discovering whether a patient is at risk (for instance, to deny his application for health insurance).

-

(4)

is a set of passwords of bits and is a password checker that leaks the time before the check fails, but mitigates the timing attacks by applying some random delay and the bucketing technique (see, for example, (Köpf and Dürmuth, 2009)). The function represents the part of the password under attack.

For each scenario, we proceed in the following way:

-

•

We consider different samples sizes for the training sets that are used to train the ML models and learn the remapping. This is to evaluate how the precision of the estimate depends on the amount of data available, and on its relation with the size of .

-

•

In order to evaluate the variance of the precision, for each training size we create different training sets, and

-

•

for each trained model we estimate the -vulnerability using different validation sets.

5.1. Representation of the results and metrics

We graphically represent the results of the experiment as box plots, using one box for each size. More precisely, given a specific size, let be the -vulnerability estimation on the -th validation set computed with a model trained over the -th training set (where and ). Let be the real -vulnerability of the system. We define the normalized estimation error and the mean value of the ’s as follows:

| (29) |

In the graphs, the ’s are reported next to the box corresponding to the size, and is the black horizontal line inside the box.

Thanks to the normalization the ’s allow to compare results among different scenario and different levels of (real) -vulnerability. Also, we argue that the percentage of the error is more meaningful than the absolute value. The interested reader, however, can find in Appendix H also plots showing the estimations of the -vulnerability and their distance from the real value.

We also consider the following typical measures of precision:

| (30) | |||

| (31) |

The dispersion is an average measure of how far the normalized estimation errors are from their mean value when using same-size training and validation sets. On the other hand, the total error is an average measure of the normalized estimation error, when using same-size training and validation sets.

In order to make a fair comparison between the two pre-processing methods, intuitively we need to use training and validation sets of “equivalent size”. For the validation part, since the “best” function has been already found and therefore we do not need any pre-processing anymore, “equivalent size” just means same size. But what does it mean, in the case of training sets built with different pre-processing methods? Assume that we have a set of data coming from a third party collector (recall that represents the size of the set), and let be the result of the data pre-processing on . Now, let be the dataset obtained drawing samples according to the channel pre-processing method. Should we impose or ? We argue that the right choice is the first one, because the amount of “real” data collected is . Indeed, is generated synthetically from and cannot contain more information about than , despite its larger size.

5.2. Learning algorithms

We consider two ML algorithms in the experiments: k-Nearest Neighbors (k-NN) and Artificial Neural Networks (ANN). We have made however a slight modification of k-NN algorithm, due to the following reason: recall that, depending on the particular gain function, the data pre-processing method might create many instances where a certain observable is repeated multiple times in pair with different ’s. For the k-NN algorithm, a very common choice is to consider a number of neighbors which is equivalent to natural logarithm of the total number of training samples. In particular, when the data pre-processing is applied, this means that nearest neighbors will be considered for the classification decision. Since grows slowly with respect to , it might happen that k-NN fails to find the subset of neighbors from which the best remapping can be learned. To amend this problem, we modify the k-NN algorithm in the following way: instead of looking for neighbors among all the samples, we only consider a subset of samples, where each value only appears once. After the neighbors have been detected among the samples, we select according to a majority vote over the tuples created through the remapping.

The distance on which the notion of neighbor is based depends on the experiments. We have considered the standard distance among numbers in the first and fourth experiments, the Euclidean distance in the second one, and the Manhattan distance in the third one, which is a stand choice for DP.

Concerning the ANN models, their specifics are in Appendix D. Note that, for the sake of fairness, we use the same architecture for both pre-processing methods, although we adapt number of epochs and batch size to the particular dataset we are dealing with.

5.3. Frequentist approach

In the experiments, we will compare our method with the frequentist one. This approach has been proposed originally in (Chatzikokolakis et al., 2010) for estimating mutual information, and extended successively also to min-entropy leakage (Chothia et al., 2013). Although not considered in the literature, the extension to the case of -vulnerability is straightforward. The method consists in estimating the probabilities that constitute the channel matrix , and then calculating analytically the -vulnerability on . The precise definition is in Appendix E.

In (Cherubin et al., 2019) it was observed that in the case of the Bayes error the frequentist approach performs poorly when the size of the observable domain is large with respect to the available data. We want to study whether this is the case also for -vulnerability.

For the experiment on the multiple guesses the comparison is illustrated in the next section. For the other experiments, because of lack of space, we have reported it in the Appendix H.

5.4. Experiment : multiple guesses

We consider a system in which the secrets are the integers between and , and the observables are the integers between and . Hence and K. The rows of the channel are geometric distributions centered on the corresponding secret:

| (32) |

where:

-

•

is a parameter that determines how concentrated around the distribution is. In this experiment we set ;

-

•

is an auxiliary function that reports to the same scale of , and centers on . Here ;

-

•

is a normalization factor

Figure 1 illustrates the shape of , showing the distributions for two adjacent secrets and . We consider an adversary that can make two attempts to discover the secret (two-tries adversary), and we define the corresponding gain function as follows. A guess is one of all the possible combinations of different secrets from , i.e., with and . Therefore . The gain function is then

| (33) |

For this experiment we consider a uniform prior distribution on . The true -vulnerability for these particular and , results to be . As training sets sizes we consider K, K and K, and 50K for the validation sets.

5.4.1. Data pre-processing

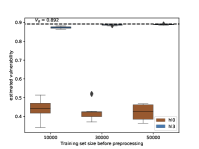

(cfr. Section 4.1). The plot in Figure 3 shows the performances of the k-NN and ANN models in terms of normalized estimation error, while Figure 2 shows the comparison with the frequentist approach. As we can see, the precision of the frequentist method is much lower, thus confirming that the trend observed in (Cherubin et al., 2019) for the Bayes vulnerability holds also for -vulnerability.

It is worth noting that, in this experiment, the pre-processing of each sample creates samples (matching with each possible such that with ). This means that the sample size of the pre-processed sets is times the size of the original ones. For functions representing more than tries this pre-processing method may create training sets too large. In the next section we will see that the alternative channel pre-processing method can be a good compromise.

5.4.2. Channel pre-processing

(cfr. Section 4.2) The results for hannel pre-processing are reported in Figure 4. As we can see, the estimation is worse than with data pre-processing, especially for the k-NN algorithm. This was to be expected, since the random sampling to match the effect of introduces a further level of confusion, as explained in Section 4.2. Nevertheless, these results are still much better than the frequentist case, so it is a good alternative method to apply when the use of data pre-processing would generate validation sets that are too large, which could be the case when the matrix representing contains large numbers with a small common divider.

Additional plots are provided in Appendix H.

5.5. Experiment 2: location privacy

In this section we estimate the -vulnerability of a typical system for location privacy protection. We use data from the open Gowalla dataset (Gow, 2011), which contains the coordinates of users’ check-ins. In particular, we consider a square region in San Francisco, USA, centered in (latitude, longitude) = (, ), and with Km long sides. In this area Gowalla contains check-ins.

We discretize the region in 400 cells of 250m long side, and we assume that the adversary’s goal is to discover the cell of a check-in. The frequency of the Gowalla check-ins per cell is represented by the heat-map in Figure 5. From these frequencies we can directly estimate the distribution representing the prior of the secrets (ElSalamouny and Palamidessi, 2020).

The channel that we consider here is the optimal obfuscation mechanism proposed in (Shokri et al., 2012) to protect location privacy under a utility constraint. We recall that the framework of (Shokri et al., 2012) assumes two loss functions, one for utility and one for privacy. The utility loss of a mechanism, for a certain prior, is defined as the expected utility loss of the noisy data generated according to the prior and the mechanism. The privacy loss is defined in a similar way, except that we allow the attacker to “remap” the noisy data so to maximize the privacy loss. For our experiment, we use the Euclidean distance as loss function for the utility, and the function defined in the next paragraph as loss function for the privacy. For further details on the construction of the optimal mechanism we refer to (Shokri et al., 2012).

We define and to be the set of the cells. Hence . We consider a function representing the precision of the guess in terms of Euclidean distance: the closer the guess is to the real location, the higher is the attacker’s gain. Specifically, our is illustrated in Figure 6, where the central cell represents the real location . For a generic “guess” cell , the number written in represent . 333Formally, is defined as where is the maximal gain, is a normalization coefficient to control the skewness of the exponential, is the euclidean distance and is the length of the cells’ side. The symbol in this context represents the rounding to the closest integer operation.

In this experiment we consider training set sizes of , k and K samples respectively. After applying the data pre-processing transformation, the size of the resulting datasets is approximately times that of the original one. This was to be expected, since the sum of the values of in Figure 6 is . Note that this sum and the increase factor in the dataset do not necessarily coincide, because the latter is also influenced by the prior and by the mechanism.

Figure 7 and Figure 8 show the performance of k-NN and ANN for both data and channel pre-processing. As expected, the data pre-processing method is more precise than the channel pre-processing one, although only slightly. The ANN model is also slightly better than the k-NN in most of the cases.

5.6. Experiment 3: differential privacy

In this section we consider a popular application of DP: individual data protection in medical datasets from which we wish to extract some statistics via counting queries. It is well known that the release of exact information from the database, even if it is only the result of statistical computation on the aggregated data, can leak sensitive information about the individuals. The solution proposed by DP is to obfuscate the released information with carefully crafted noise that obeys certain properties. The goal is to make it difficult to detect whether a certain individual is in the database or not. In other words, two adjacent datasets (i.e., datasets that differ only for the presence of one individual) should have almost the same likelihood to produce a certain observable result.

In our experiment, we consider the Cleveland heart disease dataset (Dua and Graff, 2017) which consist of records of patients with a medical heart condition. Each condition is labeled by an integer number indicating the severity: from , representing a healthy patient, to , representing a patient whose life is at risk.

We assume that the hospital publishes the histogram of the patients’ records, i.e., the number of occurrences for each label. To protect the patients’ privacy, the hospital sanitizes the histogram by adding geometric noise (a typical DP mechanism) to each label’s count. More precisely, if the count of a label is , the probability that the corresponding published number is is defined by the distribution in (32), where and are replaced by and respectively, and is . Note that , the real count, is an integer between and , while its noisy version ranges on all integers. As for the value of , in this experiment we set it to .

The secrets space is set to be a set of two elements: the full dataset, and the dataset with one record less. These are adjacent in the sense of DP, and, as customary in DP, we assume that the record on which the two databases differ is the target of the adversary. The observables space is the set of the -tuples produces by the noisy counts of the labels. is set to be the same as .

We assume that the adversary is especially interested in finding out whether the patient has a serious condition. The function reflects this preference by assigning higher value to higher labels. Specifically, we set and

| (34) |

For the estimation, we consider 10K, 30K and 50K samples for the training sets, and 50K samples for the validation set.

For the experiments with k-NN we choose the Manhattan distance, which is typical for DP444The Manhattan distance on histograms corresponds to the total variation distance on the distributions resulting from the normalization of these histograms.. In the case of data pre-processing the size of the transformed training set is about times the original . The performances of the data and channel pre-processing are shown in Figures 9 and Figure 10 respectively. Surprisingly, in this case the data pre-processing method outperforms the channel pre-processing one, although only slightly. Additional plots, including the results for the frequentist approach, can be found in Appendix H.

5.7. Experiment 4: password checker

In this experiment we consider a password checker, namely a program that tests whether a given string corresponds to the password stored in the system. We assume that string and password are sequences of bits, an that the program is “leaky”, in the sense that it checks the two sequences bit by bit and it stops checking as soon as it finds a mismatch, reporting failure. It is well known that this opens the way to a timing attack (a kind of side-channel attack), so we assume that the system tries to mitigate the threat by adding some random delay, sampled from a Laplace distribution and then bucketing the reported time in 128 bins corresponding to the positions in the sequence (or equivalently, by sampling the delay from a Geometric distribution, cfr. Equation 32). Hence the channel is a stochastic matrix.

The typical attacker is an interactive one, which figures out larger and larger prefixes of the password by testing each bit at a time. We assume that the attacker has already figured out the first bits of the sequence and it is trying to figure out the -th. Thus the prior is distributed (uniformly, we assume) only on the sequences formed by the known -bits prefix and all the possible remaining bits, while the function assigns to the sequences whose -th bit agrees with the stored password, and otherwise. Thus is a partition gain function (Alvim et al., 2012), and its particularity is that for such kind of functions data pre-processing and channel pre-processing coincide. This is because is either or , so in both cases we generate exactly one pair for each pair for which . Note that in this case the data pre-processing transformation does not increase the training set, and the channel pre-processing transformation does not introduce any additional noise. The matrix (cfr. Section 4.1) is a stochastic matrix.

The experiments are done with training sets of 10K, 30K and 50K samples. The results are reported in Figure 11. We note that the estimation error is quite small, especially in the ANN case. This is because the learning problem is particularly simple since, by considering the -leakage and the preprocessing, we have managed to reduce the problem to learning a function of type , rather than , and there is a huge difference in size between and (the first is and the latter is ). Also the frequentist approach does quite well (cfr. Appendix H) , and this is because is small. With a finer bucketing (on top of the Laplace delay), or no bucketing at all, we expect that the difference between the accuracy of the frequentist and of the ML estimation would be much larger.

5.8. Discussion

In almost all the experiments, our method gives much better result than the frequentist approach (see Figure 2 and the other plots in the appendix Appendix H). The exception of the second experiment can be explained by the fact that the observable space is not very large, which is a scenario where the frequentist approach can be successful because the available data is enough to estimate the real distribution. In general, with the frequentist approach there is no real learning, therefore, if is large and the training set contains few samples, we cannot make a good guess with the observables never seen before (Cherubin et al., 2019). In ML, on the contrary, we can still make an informed guess, as ML models are able to generalize from samples, especially when the Bayes error is small.

We observe that ANN outperforms the k-NN in all experiments. This is because usually ANN models are better at generalizing, and hence provide better classifiers. In particular, k-NN are not very good when the distributions are not smooth with respect to the metric with respect to which the neighbor relation is evaluated (Cherubin et al., 2019). The data pre-processing method gives better results, than the channel pre-processing in all experiments except the third one (DP), in which the difference is very small. The main advantage of using the channel pre-processing method is when the gain function is such that the data pre-processing would generate a set too large, as explained in Section 4.3.

The experiments show that our method is not too sensitive to the size of . On the other hand, the size of is important, because the ML classifiers are in general more precise (approximate better the ideal Bayes classifier) when the number of classes are small. This affects the estimation error of both the Bayes vulnerability and the -vulnerability. However, for the latter there is also the additional problem of the magnification due to the . To better understand this point, consider a modification of the last experiment, and assume that the password checker is not leaky, i.e., the observables are only or . A pair would have a negligible probability of appearing in the training set, hence our method, most likely, would estimate the vulnerability to be . This is fine if we are trying to estimate the Bayes vulnerability, which is also negligible. But the -vulnerability may not be negligible, in particular if we consider a that gives an enormous gain for the success case. If we can ensure that all the pairs are represented in the training set in proportion to their probability in , then the above ”magnification” in -vulnerability is not a problem, because our method will ensure the also the pairs would be magnified (with respect to the the pairs ) in the same proportion.

6. Conclusion and future work

We have proposed an approach to estimate the -vulnerability of a system under the black-box assumption, using machine learning. The basic idea is to reduce the problem to learn the Bayes classifier on a set of pre-processed training data, and we have proposed two techniques for this transformation, with different advantages and disadvantages. We have then evaluated our approach on various scenarios, showing favorable results. We have compared our approach to the frequentist one, showing that the performances are similar on small observable domains, while ours performs better on large ones. This is in line with what already observed in (Cherubin et al., 2019) for the estimation of the Bayes error.

As future work, we plan to test our framework on more real-life scenarios such as the web fingerprinting attacks (Cherubin et al., 2017; Cherubin, 2017) and the AES cryptographic algorithm (de Chérisey et al., 2019). We also would like to consider the more general case, often considered in Information-flow security, of channels that have both “high” and “low” inputs, where the first are the secrets and the latter are data visible to, or even controlled by, the adversary. Finally, a more ambitious goal is to use our approach to minimize the -vulnerability of complex systems, using a GAN based approach, along the lines of (Romanelli et al., 2020).

Acknowledgements.

This research was supported by DATAIA “Programme d’Investissement d’Avenir” (ANR-17-CONV-0003). It was also supported by the ANR project REPAS, and by the Inria/DRI project LOGIS. The work of Catuscia Palamidessi was supported by the project HYPATIA, funded by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program, grant agreement n. 835294. This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 792464.References

- (1)

- Gow (2011) 2011. Gowalla dataset. https://snap.stanford.edu/data/loc-Gowalla.html.

- Alvim et al. (2014) Mário S. Alvim, Konstantinos Chatzikokolakis, Annabelle McIver, Carroll Morgan, Catuscia Palamidessi, and Geoffrey Smith. 2014. Additive and Multiplicative Notions of Leakage, and Their Capacities. In IEEE 27th Computer Security Foundations Symposium, CSF 2014, Vienna, Austria, 19-22 July, 2014. IEEE, 308–322. https://doi.org/10.1109/CSF.2014.29

- Alvim et al. (2016) Mário S. Alvim, Konstantinos Chatzikokolakis, Annabelle McIver, Carroll Morgan, Catuscia Palamidessi, and Geoffrey Smith. 2016. Axioms for Information Leakage. In Proceedings of the 29th IEEE Computer Security Foundations Symposium (CSF). 77–92. https://doi.org/10.1109/CSF.2016.13

- Alvim et al. (2012) Mário S. Alvim, Konstantinos Chatzikokolakis, Catuscia Palamidessi, and Geoffrey Smith. 2012. Measuring Information Leakage Using Generalized Gain Functions. In 25th IEEE Computer Security Foundations Symposium, CSF 2012, Cambridge, MA, USA, June 25-27, 2012, Stephen Chong (Ed.). IEEE Computer Society, 265–279. http://ieeexplore.ieee.org/xpl/mostRecentIssue.jsp?punumber=6265867

- Bishop (2007) Christopher M. Bishop. 2007. Pattern recognition and machine learning, 5th Edition. Springer. I–XX, 1–738 pages. http://www.worldcat.org/oclc/71008143

- Bordenabe and Smith (2016) Nicolás E. Bordenabe and Geoffrey Smith. 2016. Correlated Secrets in Quantitative Information Flow. In CSF. IEEE Computer Society, 93–104. http://ieeexplore.ieee.org/xpl/mostRecentIssue.jsp?punumber=7518122

- Boreale (2009) Michele Boreale. 2009. Quantifying information leakage in process calculi. Inf. Comput 207, 6 (2009), 699–725.

- Boucheron et al. (2013) S. Boucheron, G. Lugosi, and P. Massart. 2013. Concentration Inequalities: A Nonasymptotic Theory of Independence. OUP Oxford.

- Chatzikokolakis et al. (2010) Konstantinos Chatzikokolakis, Tom Chothia, and Apratim Guha. 2010. Statistical Measurement of Information Leakage. In TACAS (Lecture Notes in Computer Science, Vol. 6015). Springer, 390–404.

- Chatzikokolakis et al. (2019) Konstantinos Chatzikokolakis, Natasha Fernandes, and Catuscia Palamidessi. 2019. Comparing systems: max-case refinement orders and application to differential privacy. In Proceedings of the 32nd IEEE Computer Security Foundations Symposium. Hoboken, United States, 442–457. https://doi.org/10.1109/CSF.2019.00037

- Chatzikokolakis et al. (2008a) Konstantinos Chatzikokolakis, Catuscia Palamidessi, and Prakash Panangaden. 2008a. Anonymity protocols as noisy channels. Inf. Comput 206, 2-4 (2008), 378–401.

- Chatzikokolakis et al. (2008b) Konstantinos Chatzikokolakis, Catuscia Palamidessi, and Prakash Panangaden. 2008b. On the Bayes risk in information-hiding protocols. Journal of Computer Security 16, 5 (2008), 531–571. https://doi.org/10.3233/JCS-2008-0333

- Cherubin (2017) Giovanni Cherubin. 2017. Bayes, not Naïve: Security Bounds on Website Fingerprinting Defenses. PoPETs 2017, 4 (2017), 215–231.

- Cherubin et al. (2019) Giovanni Cherubin, Konstantinos Chatzikokolakis, and Catuscia Palamidessi. 2019. F-BLEAU: Fast Black-box Leakage Estimation. IEEE Symposium on Security and Privacy abs/1902.01350 (2019). http://arxiv.org/abs/1902.01350

- Cherubin et al. (2017) Giovanni Cherubin, Jamie Hayes, and Marc Juárez. 2017. Website Fingerprinting Defenses at the Application Layer. PoPETs 2017, 2 (2017), 186–203. https://doi.org/10.1515/popets-2017-0023

- Chizat and Bach (2020) Lénaïc Chizat and Francis Bach. 2020. Implicit Bias of Gradient Descent for Wide Two-layer Neural Networks Trained with the Logistic Loss. In Conference on Learning Theory, COLT 2020, 9-12 July 2020, Virtual Event [Graz, Austria] (Proceedings of Machine Learning Research, Vol. 125), Jacob D. Abernethy and Shivani Agarwal (Eds.). PMLR, 1305–1338. http://proceedings.mlr.press/v125/chizat20a.html

- Chothia and Guha (2011) Tom Chothia and Apratim Guha. 2011. A Statistical Test for Information Leaks Using Continuous Mutual Information. In CSF. IEEE Computer Society, 177–190. http://ieeexplore.ieee.org/xpl/mostRecentIssue.jsp?punumber=5991608

- Chothia et al. (2013) Tom Chothia, Yusuke Kawamoto, and Chris Novakovic. 2013. A tool for estimating information leakage. In International Conference on Computer Aided Verification (CAV). Springer, 690–695.

- Chothia et al. (2014) Tom Chothia, Yusuke Kawamoto, and Chris Novakovic. 2014. LeakWatch: Estimating Information Leakage from Java Programs. In ESORICS (2) (Lecture Notes in Computer Science, Vol. 8713), Miroslaw Kutylowski and Jaideep Vaidya (Eds.). Springer, 219–236.

- Clark et al. (2001) David Clark, Sebastian Hunt, and Pasquale Malacaria. 2001. Quantitative Analysis of the Leakage of Confidential Data. Electr. Notes Theor. Comput. Sci 59, 3 (2001), 238–251.

- Cybenko (1992) George Cybenko. 1992. Approximation by superpositions of a sigmoidal function. MCSS 5, 4 (1992), 455.

- de Chérisey et al. (2019) Eloi de Chérisey, Sylvain Guilley, Olivier Rioul, and Pablo Piantanida. 2019. Best Information is Most Successful - Mutual Information and Success Rate in Side-Channel Analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2019, 2 (2019), 49–79. https://doi.org/10.13154/tches.v2019.i2.49-79

- Devroye et al. (1996) Luc Devroye, László Györfi, and Gábor Lugosi. 1996. Vapnik-Chervonenkis Theory. Springer New York, New York, NY, 187–213. https://doi.org/10.1007/978-1-4612-0711-5_12

- Dua and Graff (2017) Dheeru Dua and Casey Graff. 2017. UCI Machine Learning Repository (Heart Disease Data Set). https://archive.ics.uci.edu/ml/datasets/heart+Disease

- Dwork (2006) Cynthia Dwork. 2006. Differential Privacy. In 33rd International Colloquium on Automata, Languages and Programming (ICALP 2006) (Lecture Notes in Computer Science, Vol. 4052), Michele Bugliesi, Bart Preneel, Vladimiro Sassone, and Ingo Wegener (Eds.). Springer, 1–12. http://dx.doi.org/10.1007/11787006_1

- Dwork et al. (2006) Cynthia Dwork, Frank Mcsherry, Kobbi Nissim, and Adam Smith. 2006. Calibrating noise to sensitivity in private data analysis. In In Proceedings of the Third Theory of Cryptography Conference (TCC) (Lecture Notes in Computer Science, Vol. 3876), Shai Halevi and Tal Rabin (Eds.). Springer, 265–284.

- ElSalamouny and Palamidessi (2020) Ehab ElSalamouny and Catuscia Palamidessi. 2020. Full Convergence of the Iterative Bayesian Update and Applications to Mechanisms for Privacy Protection. arXiv:1909.02961 [cs.CR] To appear in the proceedings of EuroS&P.

- Goodfellow et al. (2016) Ian J. Goodfellow, Yoshua Bengio, and Aaron C. Courville. 2016. Deep Learning. MIT Press. 1–775 pages. http://www.deeplearningbook.org/

- Hastie et al. (2001) T. Hastie, R. Tibshirani, and J. Friedman. 2001. The Elements of Statistical Learning: Data Mining, Inference and Prediction. Springer-Verlag.

- Köpf and Basin (2007) Boris Köpf and David A. Basin. 2007. An information-theoretic model for adaptive side-channel attacks. In Proceedings of the 2007 ACM Conference on Computer and Communications Security, CCS 2007, Alexandria, Virginia, USA, October 28-31, 2007, Peng Ning, Sabrina De Capitani di Vimercati, and Paul F. Syverson (Eds.). ACM, 286–296.

- Köpf and Dürmuth (2009) Boris Köpf and Markus Dürmuth. 2009. A Provably Secure and Efficient Countermeasure against Timing Attacks. In Proceedings of the 2009 22nd IEEE Computer Security Foundations Symposium (CSF ’09). IEEE Computer Society, USA, 324–335. https://doi.org/10.1109/CSF.2009.21

- Lecun et al. (1998) Y. Lecun, L. Bottou, Y. Bengio, and P. Haffner. 1998. Gradient-based learning applied to document recognition. Proc. IEEE 86, 11 (1998), 2278–2324.

- Romanelli et al. (2020) Marco Romanelli, Catuscia Palamidessi, and Konstantinos Chatzikokolakis. 2020. Generating Optimal Privacy-Protection Mechanisms via Machine Learning, In Proceedings of the IEEE International Symposium on Computer Security Foundations (CSF). CoRR. arXiv:1904.01059 http://arxiv.org/abs/1904.01059

- Sekeh et al. (2020) S. Y. Sekeh, B. Oselio, and A. O. Hero. 2020. Learning to Bound the Multi-Class Bayes Error. IEEE Transactions on Signal Processing 68 (2020), 3793–3807.

- Shalev-Shwartz and Ben-David (2014) Shai Shalev-Shwartz and Shai Ben-David. 2014. Understanding machine learning : from theory to algorithms. http://www.worldcat.org/search?qt=worldcat_org_all&q=9781107057135

- Shokri et al. (2011) Reza Shokri, George Theodorakopoulos, Jean-Yves Le Boudec, and Jean-Pierre Hubaux. 2011. Quantifying Location Privacy. In IEEE Symposium on Security and Privacy. IEEE Computer Society, 247–262. http://ieeexplore.ieee.org/xpl/mostRecentIssue.jsp?punumber=5955408;http://www.computer.org/csdl/proceedings/sp/2011/4402/00/index.html

- Shokri et al. (2012) Reza Shokri, George Theodorakopoulos, Carmela Troncoso, Jean-Pierre Hubaux, and Jean-Yves Le Boudec. 2012. Protecting location privacy: optimal strategy against localization attacks. In the ACM Conference on Computer and Communications Security, CCS’12, Raleigh, NC, USA, October 16-18, 2012, Ting Yu, George Danezis, and Virgil D. Gligor (Eds.). ACM, 617–627. http://dl.acm.org/citation.cfm?id=2382196

- Smith (2009) Geoffrey Smith. 2009. On the Foundations of Quantitative Information Flow. In Foundations of Software Science and Computational Structures, 12th International Conference, FOSSACS 2009, Held as Part of the Joint European Conferences on Theory and Practice of Software, ETAPS 2009, York, UK, March 22-29, 2009. Proceedings (Lecture Notes in Computer Science, Vol. 5504), Luca de Alfaro (Ed.). Springer, 288–302.

- Tumer and Ghosh (2003) Kagan Tumer and Joydeep Ghosh. 2003. Bayes Error Rate Estimation Using Classifier Ensembles. International Journal of Smart Engineering System Design 5, 2 (2003), 95–109. https://doi.org/10.1080/10255810305042 arXiv:https://doi.org/10.1080/10255810305042

- Wolpert (1996) David H. Wolpert. 1996. The Lack of A Priori Distinctions Between Learning Algorithms. Neural Computation 8, 7 (1996), 1341–1390.

Appendix A Auxiliary Results

Proposition A.1 (Bernstein’s inequality (Boucheron et al., 2013)).

Let be i.i.d. random variables such that almost surely and let and the variance of . Then, for any , we have:

| (35) |

Compared to the Hoeffding’s inequality, it is easy to check that, for regimes where is small, Bernstein’s inequality offers tighter bounds for .

Lemma A.2.

Let and let be a real-valued random variable such that for all ,

| (36) |

Then,

| (37) |

where, for large ,

| (38) |

Proof.

and eq. 38 follows from the Taylor’s expansion of the function. ∎

Appendix B Proofs for the statistical bounds

The following lemma is a simple adaption of the uniform deviations of relative frequencies from probabilities theorems in (Devroye et al., 1996).

Lemma B.1.

The following inequality holds:

| (39) |

Proof.

| (40) | ||||

| (41) | ||||

| (42) | ||||

| (43) | ||||

| (44) |

∎

B.1. Proof of 3.1

See 3.1

Proof.

We first prove (12). We have:

| (45) | |||

| (46) |

where (45) follows from the definition of : we consider the expectation of the probability over all training sets sampled from , and then for each we take the probability over all possible validation sets sampled again from . Consider the series of ’s sampled from that constitute the validation set, and define the random variables . Note that determines , hence, for a given the are i.i.d.. The inequality (46) then follows by applying A.1. Indeed, since the ’s are i.i.d., they all have the same expectation and the same variance, hence , and . The factor in front of the is because we consider the absolute value of .

As for the bound (13), we have:

| (47) | ||||

| (48) | ||||

| (49) | ||||

| (50) |

where (47) follows from B.1, steps (48) and (49) are standard, and (50) follows from the same reasoning that we have applied to prove (12). Here we do not take the expectation on the training sets because in each term of the summation the is fixed. ∎

B.2. Proof of Theorem 3.2

See 3.2

Proof.

Observe that

which follows from the triangular inequality.

First, let us call the worst case variance defined above which, according to Popoviciu’s inequality is upper-bounded by . Second, let us consider that one main advantage of deriving bounds from Bernstein’s inequality is that it allows allow the upper-bound in eq. 35 grows as instead of if . Moreover eq. 46 is upper-bounded by . This said we are going to consider two cases:

i) :

| (51) | |||

| (52) | |||

| (53) |

and then,

| (54) | |||

| (55) | |||

| (56) | |||

| (57) |

ii) :

| (58) | |||

| (59) | |||

| (60) |

considering , and applying lemma A.2.

And finally,

| (61) | |||

| (62) | |||

| (63) | |||

| (64) |

according to lemma A.2 with and .

∎

B.3. Proof of 3.4

See 3.4

Proof.

We first notice that

Appendix C Pre-processing

C.1. Data pre-processing

See 4.1

Proof.

| (72) | |||

| (73) | |||

| (74) |

∎

C.2. Channel pre-processing

See 4.2

Proof.

In this proof we use a notation that highlights the structure of the preprocessing. We will denote by be the matrix form of , i.e., , and by the square matrix with in its diagonal and elsewhere. We have that , which is strictly positive because of the assumptions on and . Furthermore, we have

where is the vector of s and represents the transposition of vector . Note that is a diagonal matrix with entries in its diagonal. If then the row is not properly defined; but its choice does not affect since the corresponding prior is ; so we can choose arbitrarily (or equivalently remove the action , it can never be optimal since it gives gain). It is easy to check that is a proper distribution and is a proper channel:

Moreover, it holds that:

The main result follows from the trace-based formulation of posterior -vulnerability (Alvim et al., 2012), since for any channel and strategy , the above equation directly yields

where is the matrix trace. ∎

C.3. Data pre-processing when is not integer

Approximating so that it only takes values allows us to represent each gain as a quotient of two integers, namely

Let us also define

| (75) |

where is the least common multiple. Multiplying by gives the integer version of the gain matrix that can replace the original one. It is clear that the calculation of the least common multiplier, as well as the increase in the amount of data produced during the dataset building using a gain matrix forced to be integer, might constitute a relevant computational burden.

Appendix D ANN models

We list here the specifics for the ANNs models used in the experiments. All the models are simple feed-forward networks whose layers are fully connected. The activation functions for the hidden neurons are rectifier linear functions, while the output layer has softmax activation function.

The loss function minimized during the training is the cross entropy, a popular choice in classification problems. The remapping can be in fact considered as a classification problem such that, given an observable, a model learns to make the best guess.

For each experiments, the models have been tuned by cross-validating them using one randomly chosen training sets among the available ones choosing among the largest in terms of samples. The specs are listed experiment by experiment in table 2.

| Hyper-parameters | ||||||

|---|---|---|---|---|---|---|

| Experiment | Pre-processing | learning rate | hidden layers | epochs | hidden units per layer | batch size |

| Multiple guesses | Data | 700 | 1000 | |||

| Channel | 500 | 1000 | ||||

| Location Priv. | Data | 1000 | ||||

| Channel | ||||||

| Diff. Priv. | Data | 500 | ||||

| Channel | 500 | |||||

| Psw SCA | - | 700 | ||||

Appendix E Frequentist approach description

In the frequentist approach the elements of the channel, namely the conditional probabilities , are estimated directly in the following way: the empirical prior probability of , , is computed by counting the number of occurrences of in the training set and dividing the result by the total number of elements. Analogously, the empirical joint probability is computed by counting the number of occurrences of the pair and dividing the result by the total number of elements in the set. The estimation of is then defined as

| (76) |

In order to have a fair comparison with our approach, which takes advantage of the fact that we have several training sets and validation sets at our disposal, while preserving at the same time the spirit of the frequentist approach, we proceed as follows: Let us consider a training set , that we will use to learn the best remapping , and a validation set which is then used to actually estimate the -vulnerability. We first compute using . For each in and for each , the empirical probability is computed using as well. In particular, is given by the number of times appears in pair with divided by the number of occurrences of . In case a certain is in but not in , it is assigned the secret so that and . It is now possible to find the best mapping for each defined as . Now we compute the empirical joint distribution for each in , namely , as the number of occurrences of divided by the total number of samples in . We now estimate the -vulnerability on the validation samples according to:

| (77) |

Appendix F ANN: Model selection and impact on the estimation

In this section we are going to:

-

•

briefly summarize the widely known background of the model selection problem from the machine learning standpoint;

-

•

show, through a new set of experiments, how this problem affects the leakage estimation;

-

•

propose a heuristic which can help the practitioner in the choice of the model on the same line as classical machine learning techniques.

The problem of model selection in machine learning is still an open one and, although a state-of-the art algorithm does not exists, heuristics can be proposed to lead the practitioner in the hard task of choosing a model over others. First, let us underline that the choice of a specific model in the context of machine learning, and specifically neural networks (and deep learning), must go through the hyper-parameter optimization procedure. In fact, if nowadays neural nets and especially deep models represent the state-of-the-art solutions to most of the automatic decision problem, it is also true that, with respect to other simpler methods, they introduce the need for hyper-parameters optimization. Some techniques, such as grid and random search as well as Bayesian optimization have been suggested during the years, especially when not so many parameters need to be tuned. Two aspects must be considered:

-

(1)

the hyper-parameter optimization relies on try and error strategy,

-

(2)

the results are highly dependent on the data distribution and how well the samples represent such distribution.

In particular, if we consider the typical classification problem framework in neural nets (which we build on to create our framework) we expect the network to reproduce in output the distribution from the observed data. In this context, the practitioner should be careful to avoid two main problems which might affect the models, namely under-fitting and over-fitting. Both problems undermine the generalization capabilities of the models: the former occurs when the model is too simple to correctly represent the distribution; the latter occurs when the model is over-complicated, especially for the given amount of samples, and it fits the training data “too well” but this does not translates into good performances on other samples drawn from the same distribution.