Enhancing interval observers for state estimation using constraints

This work was funded by Novartis Pharmaceuticals as part of the Novartis-MIT Center for Continuous Manufacturing.

Abstract

This work considers the problem of calculating an interval-valued state estimate for a nonlinear system subject to bounded inputs and measurement errors. Such state estimators are often called interval observers, and they can be constructed using methods from reachability theory. The present work is inspired by recent advances in the construction of interval enclosures of reachable sets for nonlinear systems. These advances incorporate constraints on the states to produce tighter interval enclosures. When they are applied to the state estimation problem, bounded-error measurements can be used as state constraints. The result is a method that is easy to implement and that generally produces tighter interval state estimates. Furthermore, a novel linear programming-based method is proposed for calculating the observer gain, which must be tuned in practice. In contrast with previous approaches, this method does not rely on special system structure. The new approaches are demonstrated with numerical examples.

1 Introduction

This work considers the problem of bounded-error state estimation for nonlinear dynamic systems with continuous-time measurements. The goal of state estimation is to determine or estimate the internal state of a real system. Typically only certain states, or functions of the states, can be measured directly, but a mathematical model of the system is available. When the system is dynamic, a history of measurements is typically available as well. Estimating the state using a dynamic model and measurements goes back to the Kalman filter [12], which yields a statistical estimate of the state. More recently, work has focused on estimates in the form of guaranteed bounds on the state in the presence of bounded uncertainties and measurement errors [5, 11, 19, 21, 26]. This work continues in this direction.

Interval observers are the type of state estimator most closely related to the present work. The approach to constructing interval observers taken in [19, 18, 20, 21] is similar to the theory that we use in the proposed approach. Underpinning these methods are theorems for the general estimation of reachable sets, in the vein of comparison theorems involving differential inequalities. Such theorems have a long history, going back to “Müller’s theorem” [27], which was subsequently generalized to control systems in [6]. Recent work [9, 7, 8, 24] develops these kinds of comparison theorems further. In particular, this work incorporates state constraints into the bounding theory in a fundamental way; the constraint information typically produces a tighter estimate of the reachable set without significant extra computational effort.

While the work in [19, 18, 20, 21] relies on Müller’s classic theorem or the theory of cooperative systems, the present work depends on the more recent method for reachability analysis in [8]. When this theory is applied to state estimation, bounded-error measurements take the role of state constraints, and tighter state estimates result.

Reachability analysis with state constraints has also been addressed in [16], with a focus on linear systems and ellipsoidal enclosures of the reachable sets. Other related work deals with control problems with state constraints [1, 15], whose theoretical basis is the Hamilton-Jacobi-Bellman partial differential equation (PDE); the authors of [15] note that solving such a PDE is in general complicated for nonlinear systems.

The contributions and structure of this work are as follows. Section 2 introduces notation and formally states the problem of interest. Section 3 briefly discusses some of the ideas behind interval state estimates and then details the proposed method. The novelty of the proposed state estimation method is that it incorporates measurements as constraints and applies recent advances in reachability analysis. Further, a new procedure for calculating the “gain” of the observer system is discussed; in contrast with previous work, this procedure does not rely on any special system structure such as cooperativity. Section 4 demonstrates a numerical implementation of the new method for state estimation and compares it with related methods, showing that tighter state estimates can be obtained with the new method. Section 5 concludes with some final remarks.

2 Problem statement and preliminaries

2.1 Notation

The notation used in this work includes lowercase bold letters for vectors and uppercase bold letters for matrices. The transposes of a vector and matrix are denoted and , respectively. The exception is that may denote either a matrix or vector of zeros, but it should be clear from context what the appropriate dimensions are. Similarly, denotes a vector of ones whose dimension should be clear from context. The component of a vector will be denoted . For a matrix , the notation may be used to emphasize that the row of is , for . Similarly, emphasizes that the element in the row and column is . Inequalities between vectors hold componentwise. For , such that , denotes a nonempty interval in . For a set , denote the set of nonempty interval subsets of by . The equivalence of norms on is used often; when a statement or result holds for any choice of norm, it is denoted . In some cases, it is useful to reference a specific norm, in which case it is subscripted; for instance, denotes the 1-norm. The dual norm of a norm is denoted . In a metric space, a neighborhood of a point is denoted and refers to an open ball centered at with some nonzero radius.

2.2 Problem statement

The problem of interest is estimating the state of a continuous-time dynamic system; in particular, we seek a rigorous enclosure of the states, or a bounded-error estimate. The dynamic model of the system is defined by: for positive integers , nonempty interval , , , , and . The system obeys the following equations:

(1a)

(1b)

where is the state of the system, is the measurement or output of the system, is a disturbance to the system, and is the measurement noise.

In this work, we consider only absolutely continuous solutions of the IVP in ODEs (1a). Further, we assume that the initial conditions satisfy , is measurable and for all , is measurable and for all , where and , for all , for compact and . In other words, interval enclosures of the possible initial conditions, measurement noise, and disturbance values are known. A fundamental assumption on the dynamics is a local Lipschitz condition of the following kind: for any , there exists a neighborhood and such that for almost every and every

for every .

The challenge, then, is to construct an interval such that

for any possible realization of noise , disturbance , and initial condition.

For future reference, since and are interval-valued, we can write and for appropriate functions.

3 State estimation

3.1 Motivation

Previous work on bounded-error state estimation includes interval observers; we refer to [4] for a recent review of such work and some related topics. The essence of this type of method is easily understood for a linear system. Consider

(2)

(3)

Then for any matrix , we can write

and subsequently

The theory of interval observers often focuses on the case in which the “gain matrix” is chosen so that has nonnegative off-diagonal components, and that , for all , for all (so that , implying ). In this case one can write

Then assuming , the theory of monotone dynamic systems ensures that for any initial condition and realization of measurement noise, , for all .

Our approach is to extend this idea with more general theorems for reachability analysis based on the theory of differential inequalities, and in particular, to leverage the recent developments that take advantage of constraint information to tighten the estimates of the reachable sets. To this end, we interpret the state estimation problem as a reachability problem for an IVP in constrained ODEs. This has been considered most recently in [8]. Similar techniques from [9, 7, 24] also apply; those articles use “a priori enclosures” of the reachable set, which are in effect constraints, although they do not necessarily exclude any solutions. As demonstrated in [8], these techniques can be applied to constrained systems, if one is only interested in solutions that also satisfy the constraints.

Thus, the proposed approach is to apply reachability analysis to the system

for some choice of matrix , and constraint mapping defined by .

Recent developments in the design of interval observers from [5, 26] allow the gain matrix to vary, depending on time and measurements. There is no significant hurdle to incorporating such a generalization in the method that follows; we choose not to do so in order to keep notation simple and to highlight the essence of our contribution.

3.2 Proposed method

To state the method, we must introduce a few definitions and operations. Central to the method from [24] for estimating reachable sets, which we will use, is the ability to overestimate, or bound, the dynamics. We do so with an inclusion function constructed using principles from interval arithmetic; see [22] for an introduction to interval arithmetic and inclusion functions. As in the discussion above, the dynamics of interest are those of a modified system that depends on the gain matrix .

Definition 1.

For , define

and let and be the endpoints of an inclusion monotonic interval extension of .

The critical property of an inclusion monotonic interval extension is that it is an inclusion function; that is

for any , , , and . Inclusion functions for a broad class of functions can be evaluated automatically and cheaply with a number of numerical libraries implementing interval arithmetic, for instance, MC++ [2], INTLAB [23], or PROFIL [14]. The proposed method relies on these automatically computable inclusion functions, which reduces the amount of problem-specific analysis required compared with other work. For instance, the work in [19, 18] requires analysis of the dynamics to derive a hybrid automaton, which is then used to construct the state estimate.

Since it will be useful later, we mention that the natural interval extension of a function is an inclusion monotonic interval extension. For a linear function , the natural interval extension is computed by applying the rules of interval arithmetic:
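To make the linear case concrete, the following sketch (our illustration; the function name `linear_natural_extension` is hypothetical) evaluates the natural interval extension of x ↦ a^T x on a box by applying interval multiplication and addition term by term. It uses plain floating point without outward rounding, so, unlike MC++, INTLAB, or PROFIL, it is not a rigorous enclosure in finite precision.

```python
def linear_natural_extension(a, lo, hi):
    """Natural interval extension of x -> sum_i a_i * x_i on the box [lo, hi].

    Interval arithmetic gives a_i * [lo_i, hi_i] = [min(a_i*lo_i, a_i*hi_i),
    max(a_i*lo_i, a_i*hi_i)]; interval addition then adds the endpoints.
    """
    if not (len(a) == len(lo) == len(hi)):
        raise ValueError("dimension mismatch")
    lower, upper = 0.0, 0.0
    for ai, li, ui in zip(a, lo, hi):
        if li > ui:
            raise ValueError("each [lo_i, hi_i] must be a nonempty interval")
        p, q = ai * li, ai * ui
        lower += min(p, q)
        upper += max(p, q)
    return lower, upper


# f(x) = 2*x_1 - 3*x_2 on the box [0, 1] x [-1, 2]
print(linear_natural_extension([2.0, -3.0], [0.0, -1.0], [1.0, 2.0]))  # (-6.0, 5.0)
```

Because each variable appears exactly once in a linear expression, this bound is the exact range of the function on the box; for nonlinear expressions the natural interval extension generally overestimates the range.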

We will also require the following definition. If , then returns the lower face of the interval . If is empty, then a nonempty interval is constructed and the faces of that interval are returned.

Definition 2.

Define for each

where , are given componentwise by and .

The operation defined in Algorithm 1 is required. Originally from Definition 4 in [24], this algorithm defines the operation , which tightens an interval by excluding points which cannot satisfy a given set of linear constraints . Specifically, the discussion in §5.2 of [24] establishes that the tightened interval satisfies

With the definition of the interval tightening operator, we can define an operation specific to our problem which tightens an interval based on the constraints , .
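Algorithm 1 is not reproduced here. As a hedged stand-in, the sketch below implements a generic single pass of interval constraint propagation for two-sided linear constraints bl ≤ Mz ≤ bu. It is not claimed to match Algorithm 1 of [24] step for step, but it has the same enclosure property: every point of the original interval that satisfies the constraints remains in the tightened interval (up to floating-point rounding, since no outward rounding is performed). The name `tighten` is hypothetical. In the usage line we assume, for illustration, a linear measurement model y = Cx + v with v ∈ [v^L, v^U], so that a measurement yields the constraint y − v^U ≤ Cz ≤ y − v^L.

```python
def tighten(lo, hi, M, bl, bu):
    """Shrink the box [lo, hi] using the linear constraints bl <= M z <= bu.

    Every z in [lo, hi] that satisfies the constraints is kept in the result.
    Returns None if the box provably contains no feasible point.
    """
    lo, hi = list(lo), list(hi)
    n = len(lo)
    for row, b_lo, b_hi in zip(M, bl, bu):
        for j in range(n):
            mj = row[j]
            if mj == 0.0:
                continue
            # Range [rl, ru] of sum_{k != j} m_k z_k over the current box.
            rl = sum(min(row[k] * lo[k], row[k] * hi[k]) for k in range(n) if k != j)
            ru = sum(max(row[k] * lo[k], row[k] * hi[k]) for k in range(n) if k != j)
            # m_j z_j must lie in [b_lo - ru, b_hi - rl]; dividing by m_j may
            # swap the endpoints, which min/max handles for either sign.
            a, b = (b_lo - ru) / mj, (b_hi - rl) / mj
            lo[j] = max(lo[j], min(a, b))
            hi[j] = min(hi[j], max(a, b))
            if lo[j] > hi[j]:
                return None  # no point of the box satisfies this row
    return lo, hi


# Hypothetical measurement of the first state: y = 1.0, v in [-0.25, 0.25],
# C = [[1, 0]], giving 0.75 <= z_1 <= 1.25.
print(tighten([0.0, 0.0], [2.0, 5.0], [[1.0, 0.0]], [0.75], [1.25]))
```

Here the measurement of the first state shrinks its bounds from [0, 2] to [0.75, 1.25] and leaves the unmeasured state untouched; for a constraint that couples several states, the propagation also tightens the other components.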

Definition 3.

Let

Define

We can now state the specific method for constructing a state estimator. The main difference between this method and a classic comparison theorem for reachability (see [6]) is the application of the operator. While the classic method would overestimate, for instance, on the set (the upper face of the interval), the proposed method first applies to that set and then overestimates on the resulting (no larger) interval.

Theorem 1.

For any , let be absolutely continuous and satisfy

for each , and with initial conditions . Then for all ; that is, is an interval estimate of for all .

Proof.

As mentioned earlier, the claim follows by applying an established method for estimating reachable sets for the system

where , . The specific method is from [24, §5.2]. With the assumptions on the problem setting in §2.2 and the definitions of the dynamics defining , all the assumptions and hypotheses of the method from [24] are satisfied, and the conclusion holds that for any solution satisfying . ∎

We note that Theorem 1 does not guarantee the existence of and ; it provides a construction that, if successful, yields an interval estimate. Conditions that we have not stated explicitly, such as continuity of and and measurability of , , , (defining the set-valued mappings and ), may be required to apply standard existence results for the solutions of IVPs in ODEs. Perhaps more important are the typically stronger conditions that guarantee the applicability of numerical methods for the solution of IVPs in ODEs; see, for instance, Theorem 1.1 of Section 1.4 of [17]. For example, the numerical examples that follow use measurements that are continuous functions on .

Comparisons

In §4, we compare the proposed method established above with its variants and with other methods from the literature. The method from Theorem 1 with is called the “No Measurements” method; while it still uses measurement information as constraints, it does not use the measurement values directly in the dynamics. Meanwhile, the “No Constraints” method is a variant of the proposed method that does not use the constraint information; that is, the endpoints of the interval estimate satisfy the differential equations

Note that application of the operation has been omitted.
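The following sketch shows how the pieces fit together when evaluating the right-hand sides of the bounding ODEs at a single time instant, both with tightening (as in GMAC) and without it (as in the No Constraints variant). It assumes, for illustration only, linear dynamics ẋ = Ax without disturbances and a linear measurement model y = Cx + v with v ∈ [v^L, v^U], so that the modified system is ż = (A − GC)z + Gy − Gv and the natural interval extension of a linear function supplies the bounds. The tightening step is a generic one-pass interval constraint propagation, not necessarily Algorithm 1 of [24]; when it shows that no feasible point lies on a face, the sketch falls back to the untightened face, which is conservative but still valid. All function names and numbers are hypothetical.

```python
import numpy as np


def tighten(lo, hi, C, bl, bu):
    """Generic one-pass interval propagation for bl <= C z <= bu.

    Returns the tightened (lo, hi), or None if no point of the box is feasible.
    """
    lo, hi = lo.copy(), hi.copy()
    idx = np.arange(len(lo))
    for row, b_lo, b_hi in zip(C, bl, bu):
        for j in idx[row != 0.0]:
            rest = idx != j
            p, q = row[rest] * lo[rest], row[rest] * hi[rest]
            rl, ru = np.minimum(p, q).sum(), np.maximum(p, q).sum()
            a, b = (b_lo - ru) / row[j], (b_hi - rl) / row[j]
            lo[j], hi[j] = max(lo[j], min(a, b)), min(hi[j], max(a, b))
            if lo[j] > hi[j]:
                return None
    return lo, hi


def bounding_rhs(A, C, G, y, vL, vU, xL, xU, use_constraints=True):
    """Right-hand sides (dxL, dxU) of the bounding ODEs at one time instant."""
    M = A - G @ C
    p, q = -G * vL, -G * vU                      # entries -G_ik * v_k at both ends
    g_lo = G @ y + np.minimum(p, q).sum(axis=1)  # lower bound of G y - G v
    g_hi = G @ y + np.maximum(p, q).sum(axis=1)  # upper bound of G y - G v
    bl, bu = y - vU, y - vL                      # measurement constraints on C z
    n = len(xL)
    dL, dU = np.empty(n), np.empty(n)
    for i in range(n):
        for upper in (False, True):
            lo, hi = xL.copy(), xU.copy()
            if upper:
                lo[i] = xU[i]                    # upper face: z_i = xU_i
            else:
                hi[i] = xL[i]                    # lower face: z_i = xL_i
            if use_constraints:
                box = tighten(lo, hi, C, bl, bu)
                if box is not None:              # None: keep the untightened face
                    lo, hi = box
            r, s = M[i] * lo, M[i] * hi
            if upper:
                dU[i] = np.maximum(r, s).sum() + g_hi[i]
            else:
                dL[i] = np.minimum(r, s).sum() + g_lo[i]
    return dL, dU


A = np.array([[0.0, 1.0], [-2.0, -3.0]])
C = np.array([[1.0, 0.0]])
G = np.zeros((2, 1))
y, vL, vU = np.array([1.0]), np.array([-0.25]), np.array([0.25])
xL, xU = np.array([0.0, 0.0]), np.array([2.0, 2.0])
print(bounding_rhs(A, C, G, y, vL, vU, xL, xU))                         # with tightening
print(bounding_rhs(A, C, G, y, vL, vU, xL, xU, use_constraints=False))  # without
```

With G = 0, the call with tightening corresponds to the No Measurements method. For the second state, tightening raises the lower-bound rate from −4 to −2.5 and lowers the upper-bound rate from −6 to −7.5, so that interval contracts faster; for the first state, both faces are inconsistent with the measurement, and the sketch falls back to the untightened faces.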

3.3 Linear stability analysis and automatic gain calculation

Methods from the literature for calculating the gain matrix often focus on the case that the system is linear, e.g. . The methods often involve finding a gain matrix so that has nonnegative off-diagonal components; a matrix with this last property is often called a Metzler matrix. Consequently, the theory of cooperative or monotone systems can be applied to derive interval estimates.

In the present section, our goal is to state a method for calculating the gain matrix that does not depend on the system being cooperative. We begin with a fundamental result which will motivate the method; we show that the No Constraints method with a gain matrix satisfying certain constraints will produce an asymptotically exact interval estimate for an idealized linear system.

Theorem 2.

Suppose that the system of interest has a homogeneous, linear time invariant form, with exact measurements:

Consider the No Constraints method for calculating an interval state estimate; assume that the natural interval extension is used to calculate the inclusion monotonic interval extension function required in Definition 1. Let the columns of be . Then for any gain matrix which satisfies

| (4) |

the interval state estimate resulting from the No Constraints method is asymptotically exact; i.e. as .

Proof.

For any , let (clearly depends on but we will suppress this dependence in the notation). Let so that the state estimates resulting from the No Constraints method satisfy the following differential equations for each and almost every :

(5)

(6)

Here, is a linear mapping whose effect is the following: if then

Simply, is exactly the value of the lower bound of the natural interval extension of on the set , as we would expect of the No Constraints method. Similarly, if then

and again, equals the upper bound of the natural interval extension of on the set .

Then we have that for each

or in matrix form,

where each off-diagonal component of equals the absolute value of the corresponding component of , and the diagonals of and are equal.

If every eigenvalue of has negative real part, then as (see, for instance, [13, Thm. 3.5]). Gershgorin’s circle theorem [25, §7.4] provides a way to bound the eigenvalues: for any eigenvalue , there is some such that

Since each is real, if we require that for all

then we can be sure that all eigenvalues of have negative real part.

These conditions simplify to for all . Finally, recall the definition , let the rows of be , and let the columns of be . Then these conditions become

∎
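The mechanism behind Theorem 2 can be checked numerically. The sketch below is an illustration under our own choices, not the implementation used in this work: it integrates the No Constraints bounds for a hypothetical two-state system with one exact measurement, using explicit Euler. For a step h small enough that 1 + h·m_ii ≥ 0, the discrete bounds keep the enclosure property in exact arithmetic. The matrices and the gain are hypothetical; the gain is chosen so that each row satisfies a_ii − g_i^T c_i + Σ_{j≠i} |a_ij − g_i^T c_j| < 0, which is our reading of conditions (4).

```python
import numpy as np


def no_constraints_rhs(M, Gy, xL, xU):
    """Bounding-ODE right-hand sides for dz/dt = M z + G y (exact measurements),
    using the natural interval extension on the lower and upper faces."""
    n = len(xL)
    dL, dU = np.empty(n), np.empty(n)
    for i in range(n):
        off = np.arange(n) != i
        p, q = M[i, off] * xL[off], M[i, off] * xU[off]
        dL[i] = M[i, i] * xL[i] + np.minimum(p, q).sum() + Gy[i]
        dU[i] = M[i, i] * xU[i] + np.maximum(p, q).sum() + Gy[i]
    return dL, dU


def simulate(A, C, G, x0, xL0, xU0, h=1e-3, T=5.0):
    """Explicit Euler for the true state and the bounds (illustration only)."""
    M = A - G @ C
    x, xL, xU = (np.array(v, dtype=float) for v in (x0, xL0, xU0))
    for _ in range(int(round(T / h))):
        y = C @ x                      # exact measurement at the current time
        dL, dU = no_constraints_rhs(M, G @ y, xL, xU)
        x = x + h * (A @ x)
        xL, xU = xL + h * dL, xU + h * dU
    return x, xL, xU


A = np.array([[0.0, 1.0], [-2.0, -3.0]])
C = np.array([[1.0, 0.0]])
G = np.array([[3.0], [-2.0]])  # rows: 0 - 3 + |1| = -2 < 0 and -3 + |-2 + 2| = -3 < 0
x, xL, xU = simulate(A, C, G, x0=[1.0, 0.0], xL0=[0.0, -1.0], xU0=[2.0, 1.0])
print(xU - xL)                 # widths of the interval estimate at T = 5
```

With this gain, the widths obey ė = M̃e with M̃ = [[−3, 1], [0, −3]], so they decay to zero at an exponential rate while the true state stays inside the bounds, consistent with the asymptotic exactness claimed in Theorem 2.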

Related results appear in the literature, for instance [4, Thm. 1]. Stability and asymptotic properties of the interval state estimate are often stated as requiring to have eigenvalues with negative real part; again by Gershgorin’s circle theorem, conditions (4), if satisfied, imply exactly this. However, it is not the stability of the modified system that matters (this is largely a coincidence); rather, it is the stability of the dynamics defining that determines their asymptotic properties.

To turn the conditions in Theorem 2 into an implementable numerical procedure, the next result states that a gain matrix satisfying the conditions may be found as the solution of a linear program (LP).

Corollary 3.

Let the columns of be . Consider the LP

(7)

where the variables are , , and . Any which is a solution of this LP with optimal objective value satisfies conditions (4).

Proof.

Note that the first two sets of constraints imply , for all . Then, with the last set of constraints, we get

for each . Thus, if the optimal is negative, a corresponding solution satisfies conditions (4). ∎

As a practical note, we add a (negative) lower bound on to the above LP to prevent it from being unbounded. Further, the variables do not appear in the constraints or objective and could be eliminated; most numerical LP solvers will detect and remove such variables in a presolve step.
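A possible implementation of LP (7) with SciPy's `linprog` (HiGHS backend) is sketched below. Because the LP itself is not reproduced here, the formulation in the code is our reconstruction from the proofs of Theorem 2 and Corollary 3: minimize t subject to |a_ij − g_i^T c_j| ≤ s_ij for i ≠ j and a_ii − g_i^T c_i + Σ_{j≠i} s_ij ≤ t for each i, with the unused s_ii eliminated and a negative lower bound t ≥ t_min added as suggested above. Treat it as a sketch; the names `gain_lp` and `row_margins` and the default t_min = −10 are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def gain_lp(A, C, t_min=-10.0):
    """min t  s.t.  |a_ij - g_i^T c_j| <= s_ij (i != j),
                    a_ii - g_i^T c_i + sum_{j != i} s_ij <= t,  t >= t_min.
    Variables: G (n x m, row-major), s_ij for i != j only, then t."""
    A, C = np.asarray(A, float), np.asarray(C, float)
    n, m = A.shape[0], C.shape[0]
    off = [(i, j) for i in range(n) for j in range(n) if i != j]
    s_col = {p: n * m + k for k, p in enumerate(off)}
    t_col = n * m + len(off)
    rows, rhs = [], []
    for i, j in off:
        for sign in (1.0, -1.0):           # sign * (a_ij - g_i^T c_j) <= s_ij
            r = np.zeros(t_col + 1)
            r[i * m:(i + 1) * m] = -sign * C[:, j]
            r[s_col[(i, j)]] = -1.0
            rows.append(r)
            rhs.append(-sign * A[i, j])
    for i in range(n):                     # a_ii - g_i^T c_i + sum_j s_ij <= t
        r = np.zeros(t_col + 1)
        r[i * m:(i + 1) * m] = -C[:, i]
        for j in range(n):
            if j != i:
                r[s_col[(i, j)]] = 1.0
        r[t_col] = -1.0
        rows.append(r)
        rhs.append(-A[i, i])
    cost = np.zeros(t_col + 1)
    cost[t_col] = 1.0
    bounds = [(None, None)] * (n * m) + [(0.0, None)] * len(off) + [(t_min, None)]
    res = linprog(cost, A_ub=np.array(rows), b_ub=np.array(rhs),
                  bounds=bounds, method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    return res.x[:n * m].reshape(n, m), res.x[t_col]


def row_margins(A, C, G):
    """m_ii + sum_{j != i} |m_ij| for M = A - G C (all negative => row conditions hold)."""
    M = np.asarray(A, float) - np.asarray(G, float) @ np.asarray(C, float)
    return np.diag(M) + np.abs(M).sum(axis=1) - np.abs(np.diag(M))


A = np.array([[0.0, 1.0], [-2.0, -3.0]])
C = np.array([[1.0, 0.0]])
G, t = gain_lp(A, C)
print(t, row_margins(A, C, G))   # t is approximately -3.0 for this system
```

For this hypothetical two-state system, the second row cannot do better than a_22 = −3, so the optimal value is approximately −3, attained with g_2 = −2. Any negative optimum certifies the row conditions, which `row_margins` checks directly.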

Other results for calculating the gain matrix based on the solution of an LP have been proposed; see, for instance, [3]. Again, these results rely on structure such as being Metzler, although this extra structure permits more detailed claims about the input-output gains of the system.

4 Examples

We evaluate the performance of the proposed estimation method on some examples. At the heart of the method is the solution of the IVP in ODEs in Theorem 1. This IVP is solved with C/C++ code employing the CVODE component of the SUNDIALS suite [10]. Specifically, the numerical method uses the CVODE implementation of the Backward Differentiation Formulas (BDF), with a Newton iteration for the corrector and with relative and absolute integration tolerances equal to . The implementation of interval arithmetic in MC++ [2] provides the inclusion function in Definition 1.
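Readers without a CVODE setup can approximate this solver configuration in Python. The sketch below uses SciPy's `solve_ivp` with `method="BDF"`, a variable-order BDF implementation that solves its implicit stages with a Newton-type iteration; it is a stand-in, not CVODE. The stiff test problem and the tolerance value are hypothetical, since the tolerances used in this work are not reproduced here.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical stiff test problem: two decoupled linear modes with very
# different time scales, z' = J z with J = diag(-1, -1000).
J = np.diag([-1.0, -1000.0])


def rhs(t, z):
    return J @ z


def jac(t, z):
    return J  # constant Jacobian, used by the Newton iteration of the implicit steps


tol = 1e-8  # hypothetical relative and absolute tolerance
sol = solve_ivp(rhs, (0.0, 1.0), [1.0, 1.0], method="BDF",
                jac=jac, rtol=tol, atol=tol)
print(sol.success, sol.y[:, -1])  # compare with exp(-1) and exp(-1000)
```

Supplying the Jacobian avoids finite-difference approximations inside the Newton iteration; for the bounding systems of Theorem 1, which are only piecewise smooth because of the min/max operations in interval arithmetic, an implicit solver may still need many step-size reductions.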

The proposed method will be referred to as GMAC, indicating that it incorporates Gained Measurement And Constraint information when constructing the state estimate. It is compared with the No Constraints and No Measurements methods (recall the discussion following Theorem 1).

4.1 Bioreactor

We consider an example involving a bioreactor originally from [20, 19]. The dynamic model describes the evolution in time of the concentrations of biomass and feed substrate. The dynamic equations on the time domain (day) are

where and are the biomass and substrate concentrations, respectively, at time , and the known parameters are

Meanwhile, the unknown parameters/disturbances are which satisfy for all

where (so take ).

Continuous measurements of , the biomass concentration, are available (so ). The error¹ in these measurements satisfies . For simulation purposes, we obtain measurements from a numerical solution with for all , for all , for all , and initial conditions and . These measurements are obtained at 500 equally spaced time points from the interval , and then made continuous by linearly interpolating between them.

¹ We note that the error in the initial measurements of the biomass concentration is much larger than the error in the subsequent measurements. While in practice the initial measurements would likely be known at least as accurately as any subsequent online measurements, for consistency we follow this example as it appears in [20, 19].
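The construction of continuous measurements from samples can be sketched with NumPy's `np.interp`, which performs piecewise-linear interpolation. The horizon [0, 20] and the measured signal below are hypothetical placeholders, since the data of this example are not reproduced here; the helper name `continuous_measurement` is also ours.

```python
import numpy as np


def continuous_measurement(t_samples, y_samples):
    """Return a function y(t) that linearly interpolates the sampled measurements.

    Outside [t_samples[0], t_samples[-1]], np.interp holds the end values constant.
    """
    t_samples = np.asarray(t_samples, dtype=float)
    y_samples = np.asarray(y_samples, dtype=float)

    def y(t):
        return np.interp(t, t_samples, y_samples)

    return y


# Hypothetical horizon and signal, standing in for the simulated biomass data.
t_grid = np.linspace(0.0, 20.0, 500)   # 500 equally spaced sample times
y_grid = 1.0 + 0.1 * np.sin(t_grid)    # placeholder measurement values
y = continuous_measurement(t_grid, y_grid)
print(y(t_grid[10]), y(0.5 * (t_grid[10] + t_grid[11])))
```

Linear interpolation yields a continuous measurement function, in line with the continuity requirement noted after Theorem 1; between two samples it returns the corresponding weighted average of the neighboring values.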

In [20], a “bundle” of interval observers is constructed, essentially by running independent estimates with different gain matrices . We choose one of these gain matrices, .

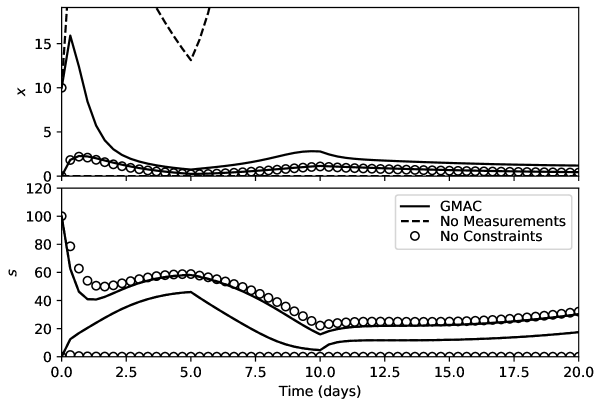

Figure 1 summarizes the results; the upper and lower bounds of the interval estimate for each state are plotted versus time. The proposed GMAC method is strictly tighter than either the No Measurements or the No Constraints method for at least one of the states. The interval estimates at the final time are given in Table 1.

Compared with the bundle of observers from [20], the estimates from GMAC are of comparable quality; however, as mentioned, we achieve our results with only one observer gain matrix. The same example is considered in [19], and again we achieve similar state estimates. The work in [19] is based on a hybrid automata approach, which requires some extra analysis to determine the switching conditions. Meanwhile, we rely on the automatic construction of inclusion functions through implementations of interval arithmetic, so little extra analysis is required.

Table 1: Interval estimates at the final time.

| method | | |
|---|---|---|
| GMAC | | |
| No Measurements | | |
| No Constraints | | |

4.2 Linearized system

This example comes from Example 1 of [4]. We have a three-state system with nonlinear dynamics; satisfies

where

The time interval of interest is , with the state known at : . The disturbances satisfy . Measurements of the first state are available, so that . The error in these measurements satisfies . For simulation purposes, we obtain measurements from a numerical solution of the system with for all , for all , and . These measurements are obtained at 500 equally spaced time points from the interval , and then made continuous by linearly interpolating between them.

Although this is a nonlinear system, we attempt to design an observer gain matrix based on the linear part. The original analysis in [4, Example 1] suggests

However, solving LP (7) yields

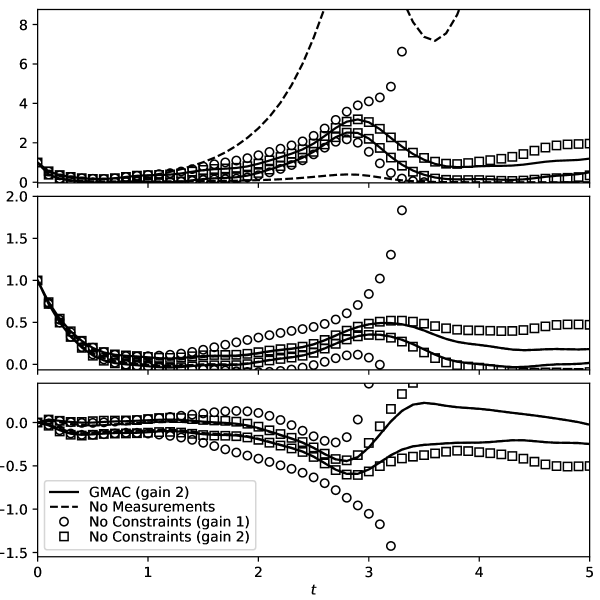

and a negative optimal objective value is obtained. We will calculate state estimates with both separately. Refer to these as “gain 1” and “gain 2,” respectively. Recall that the No Measurements method is defined by using , and so it remains the same.

Figure 2 summarizes the results; the upper and lower bounds of the interval estimate for each state are plotted versus time. For this example, omitting constraints produces a much more conservative interval state estimate. In fact, the bounds produced by the No Constraints method with gain 1 diverge, and the numerical integration procedure terminates prematurely shortly after (specifically, the corrector iteration of the BDF method in CVODE fails). Using gain 2, the No Constraints method performs much better and produces state estimates for the entire time interval.

Meanwhile, the proposed GMAC method (with either gain; the results are largely the same) more consistently produces a tight interval estimate for each state. The No Measurements method performs comparably. Table 2 lists specific values at the final time. The main difference is that the estimate of the first state from GMAC is much tighter. This is somewhat moot, however, since bounded-error measurements of the first state are directly available; for our simulated measurement values, these imply the interval estimate .

Table 2: Interval estimates at the final time.

| method | | | |
|---|---|---|---|
| GMAC (gain 2) | | | |
| No Measurements | | | |
| No Constraints (gain 2) | | | |

5 Conclusions

This work has considered the problem of bounded-error state estimation for nonlinear systems. Using recent work on computing the reachable sets of constrained dynamic systems, we proposed a method that uses measurements in a novel way to construct interval state estimates. Numerical examples demonstrate that the method is effective in practice and can improve on existing approaches. The proposed approach also benefits from its reliance on interval arithmetic and its implementation in numerical libraries, which reduces the amount of manual, problem-specific analysis one must perform. Finally, we proposed a new way of calculating the gain matrix and showed that it can produce asymptotically exact interval estimates in ideal cases.

References

- [1] Olivier Bokanowski, Nicolas Forcadel, and Hasnaa Zidani. Reachability and minimal times for state constrained nonlinear problems without any controllability assumption. SIAM Journal on Control and Optimization, 48(7):4292–4316, 2010.

- [2] Benoît Chachuat. MC++: A Versatile Library for McCormick Relaxations and Taylor Models. http://www.imperial.ac.uk/people/b.chachuat/research.html, 2015.

- [3] Stanislav Chebotarev, Denis Efimov, Tarek Raïssi, and Ali Zolghadri. Interval observers for continuous-time lpv systems with l1/l2 performance. Automatica, 58:82–89, 2015.

- [4] Denis Efimov and Tarek Raïssi. Design of interval observers for uncertain dynamical systems. Automation and Remote Control, 77(2):191–225, 2016.

- [5] Denis Efimov, Tarek Raïssi, Stanislav Chebotarev, and Ali Zolghadri. Interval state observer for nonlinear time varying systems. Automatica, 49(1):200–205, 2013.

- [6] Gary W. Harrison. Dynamic models with uncertain parameters. In X. J. R. Avula, editor, Proceedings of the First International Conference on Mathematical Modeling, volume 1, pages 295–304. University of Missouri Rolla, 1977.

- [7] Stuart M. Harwood and Paul I. Barton. Efficient polyhedral enclosures for the reachable set of nonlinear control systems. Mathematics of Control, Signals, and Systems, 28(1):8, 2016.

- [8] Stuart M. Harwood and Paul I. Barton. Affine relaxations for the solutions of constrained parametric ordinary differential equations. Optimal Control Applications and Methods, 2017.

- [9] Stuart M. Harwood, Joseph K Scott, and Paul I. Barton. Bounds on reachable sets using ordinary differential equations with linear programs embedded. IMA Journal of Mathematical Control and Information, 33(2):519–541, 2016.

- [10] Alan C. Hindmarsh, Peter N. Brown, Keith E. Grant, Steven L. Lee, Radu Serban, Dan E. Shumaker, and Carol S. Woodward. SUNDIALS: Suite of Nonlinear and Differential/Algebraic Equation Solvers. ACM Transactions on Mathematical Software, 31(3):363–396, 2005.

- [11] L. Jaulin. Nonlinear bounded-error state estimation of continuous-time systems. Automatica, 38(6):1079–1082, 2002.

- [12] R. E. Kalman. A New Approach to Linear Filtering and Prediction Problems. Journal of Basic Engineering, 82:35–45, 1960.

- [13] Hassan K. Khalil. Nonlinear Systems. Prentice-Hall, Upper Saddle River, NJ, second edition, 1996.

- [14] O. Knüppel. PROFIL/BIAS — A fast interval library. Computing, 53(3):277–287, Sep 1994.

- [15] A. B. Kurzhanski, I. M. Mitchell, and P. Varaiya. Optimization techniques for state-constrained control and obstacle problems. Journal of Optimization Theory and Applications, 128(3):499–521, 2006.

- [16] A. B. Kurzhanski and P. Varaiya. Ellipsoidal techniques for reachability under state constraints. SIAM Journal on Control and Optimization, 45(4):1369–1394, January 2006.

- [17] J. D. Lambert. Numerical Methods for Ordinary Differential Systems: The Initial Value Problem. John Wiley & Sons, New York, 1991.

- [18] N. Meslem and N. Ramdani. Interval observer design based on nonlinear hybridization and practical stability analysis. International Journal of Adaptive Control and Signal Processing, 25:228–248, 2011.

- [19] N. Meslem, N. Ramdani, and Y. Candau. Using hybrid automata for set-membership state estimation with uncertain nonlinear continuous-time systems. Journal of Process Control, 20:481–489, 2010.

- [20] M. Moisan and O. Bernard. Interval observers for non-monotone systems. Application to bioprocess models. 16th IFAC World Congress, 16:43–48, 2005.

- [21] M. Moisan and O. Bernard. An interval observer for non-monotone systems: application to an industrial anaerobic digestion process. 10th International IFAC Symposium on Computer Applications in Biotechnology, 1:325–330, 2007.

- [22] Ramon E. Moore, R. Baker Kearfott, and Michael J. Cloud. Introduction to Interval Analysis. SIAM, Philadelphia, 2009.

- [23] Siegfried M. Rump. INTLAB — INTerval LABoratory. In Tibor Csendes, editor, Developments in Reliable Computing, pages 77–104. Springer Netherlands, Dordrecht, 1999.

- [24] Joseph K. Scott and Paul I. Barton. Bounds on the reachable sets of nonlinear control systems. Automatica, 49(1):93–100, 2013.

- [25] Gilbert Strang. Linear Algebra and its Applications. Thomson Brooks/Cole, fourth edition, 2006.

- [26] Rihab El Houda Thabet, Tarek Raïssi, Christophe Combastel, Denis Efimov, and Ali Zolghadri. An effective method to interval observer design for time-varying systems. Automatica, 50(10):2677–2684, 2014.

- [27] Wolfgang Walter. Differential and Integral Inequalities. Springer, New York, 1970.