Enhancing Breast Cancer Diagnosis in Mammography: Evaluation and Integration of Convolutional Neural Networks and Explainable AI

Abstract

The Deep learning (DL) models for diagnosing breast cancer from mammographic images often operate as ”black boxes,” making it difficult for healthcare professionals to trust and understand their decision-making processes. The study presents an integrated framework combining Convolutional Neural Networks (CNNs) and Explainable Artificial Intelligence (XAI) for the enhanced diagnosis of breast cancer using the CBIS-DDSM dataset. The methodology encompasses an elaborate data preprocessing pipeline and advanced data augmentation techniques to counteract dataset limitations and transfer learning using pre-trained networks such as VGG-16, Inception-V3 and ResNet was employed. A focal point of our study is the evaluation of XAI’s effectiveness in interpreting model predictions, highlighted by utilising the Hausdorff measure to assess the alignment between AI-generated explanations and expert annotations quantitatively. This approach is critical for XAI in promoting trustworthiness and ethical fairness in AI-assisted diagnostics. The findings from our research illustrate the effective collaboration between CNNs and XAI in advancing diagnostic methods for breast cancer, thereby facilitating a more seamless integration of advanced AI technologies within clinical settings. By enhancing the interpretability of AI-driven decisions, this work lays the groundwork for improved collaboration between AI systems and medical practitioners, ultimately enriching patient care. Furthermore, the implications of our research extended well beyond the current methodologies. It encourages further research into how to combine multimodal data and improve AI explanations to meet the needs of clinical practice.

Keywords— Breast Cancer Diagnosis, Mammography, CNNs, XAI, ResNet50, Grad-CAM, LIME, SHAP, Data Augmentation, Transfer Learning

1 Introduction

Breast cancer remains the predominant oncological challenge among women globally, characterized by the uncontrolled growth of abnormal cells within the mammary gland. In 2020, this malignancy was diagnosed in approximately 2.3 million women worldwide, culminating in 685,000 fatalities [1]. Computer-aided detection (CAD) systems have been developed in response to this global health issue, leveraging artificial intelligence (AI) algorithms to enhance mammogram interpretation accuracy. These systems facilitate the identification of suspicious regions within digital imaging, subsequently offering diagnostic classifications to assist physicians, thereby acting as an adjunctive opinion [2].

AI methodologies employed in these systems are divided into two principal categories: conventional machine learning (ML) algorithms, which use hand-crafted features, and DL, which autonomously extract pertinent features from data [3]. Traditional techniques, such as the K-Nearest Neighbor and Decision Tree algorithms, exhibit limitations in managing voluminous and high-dimensional datasets due to the intricate requirement for robust feature identification and extraction, demanding extensive domain expertise [4]. In contrast, Deep Neural Networks (DNNs), characterized by multiple hidden layers [5], automatically extract features from raw inputs to yield accurate predictions, adjusting to the varied features identified. This automatic feature extraction and classification fusion attribute of DL algorithms has been instrumental in attaining unprecedented efficacy in breast cancer detection via imaging modalities [6].

However, the interpretability of DNNs poses a significant challenge due to their ”black-box” nature, an attribute referencing their operational opacity. These networks integrate millions of parameters across numerous layers; thus, their complex nature hinders the ability to find an explanation [7]. Although DNNs’ performance in clinical diagnostics is commendable, the lack of transparency and explainability impedes their integration into routine healthcare applications [8]. The emergence of XAI aims to address these issues of transparency and explainability, advocating the creation of accurate AI models that are comprehensible and trustworthy to users [9].

XAI is characterized as a system that generates models while maintaining high accuracy and enabling human users to comprehend and trust these models effectively. Incorporating XAI into a model boosts its credibility, aids in identifying causal relationships within the data, enhances user knowledge, empowers more assured decision-making, and fosters ethical fairness by enhancing transparency [10, 11]. XAI techniques are fundamentally classified into two categories: transparent ML and post-hoc models. Transparent models, such as decision trees or K-Nearest Neighbors, are directly interpretable to users due to their inherent simplicity and straightforwardness [12]. Conversely, post-hoc models, encompassing complex DL networks like CNNs and RNNs, necessitate additional explanations to comprehend the logic behind their outputs [7], [13]. Explanations generated by post-hoc methods fall into two primary categories: global and local interpretability. Global interpretability provides a comprehensive view of the model’s functioning, incorporating its overall architecture, training, and data. Local interpretability, however, zeroes in on the rationale for specific predictions, analyzing relevant features and variables [9]. Post-hoc explanatory techniques are also further classified into model-specific and model-agnostic approaches. Model-specific methods, exemplified by Grad-CAM, are devised for particular models and are not transferable across different architectures. Model-agnostic techniques, such as LIME and SHAP, are designed for broader applicability and are capable of being deployed across a variety of models. Grad-CAM, LIME, and SHAP are among the leading explainability techniques employed in this research.

The methodology adopted in this research involves a comprehensive preprocessing of mammographic images to enhance their quality for AI analysis, followed by deploying a CNN model for classification. The selection of the CNN architecture is informed by a literature review and the model’s suitability for processing high-dimensional imaging data. To address the challenge of interpretability, this study employs leading XAI techniques, including both model-specific and model-agnostic approaches, to provide insights into the decision-making process of CNN [9]. The efficacy of these explanatory mechanisms is assessed based on a framework that emphasizes security, transparency, and user comprehensibility [14]. This paper contributes to the growing field of AI in healthcare by providing empirical evidence on the effectiveness of combining CNNs with XAI models for breast cancer detection. It underscores the potential of such integrated approaches to not only enhance diagnostic accuracy but also foster trust and ethical fairness by making AI decisions in healthcare more transparent and understandable. [10].

The remainder of the paper is organized as follows: Section II details the materials and methods, including data preprocessing, the CNN architecture, and the selection of XAI techniques. Section III presents the results of the classification and explanation tasks, followed by a discussion in Section IV that contextualizes the findings within the broader implications for AI in healthcare. Finally, Section V concludes the paper with a summary of the findings, limitations, and future research directions. By bridging the gap between advanced AI technologies and clinical applicability through explainability, this research aspires to pave the way for more ethical, transparent, and effective AI-assisted diagnostics in breast cancer detection, ultimately contributing to better patient outcomes and the advancement of AI in medicine.

1.1 Explainability

Interpretability in ML is about comprehending how a model operates, especially when predicting variables. It provides insight into the model’s prediction mechanisms without necessarily unraveling the causal relationships behind those predictions. While interpretability contributes to a model’s overall explainability, not every interpretable model can be deemed fully explainable. It also encompasses the evaluation of hypothetical scenarios, allowing us to speculate on the outcomes had certain inputs been altered. The aim is to achieve a thorough understanding of the ML model, factoring in both visible and hidden elements, to construct a broad explanation of its functionality. The opaque nature of many AI models often obscures the rationale behind their outputs, complicating efforts to understand their decision-making processes. However, interpretability primarily concerns grasping the model’s functional details, whereas explainability extends this by elucidating how the model reacts to new data and the implications of specific changes in its predictions. This distinction becomes particularly significant in healthcare, where the stakes of decision-making are high. A deep comprehension of how AI algorithms derive their suggestions is essential. Without such transparency, healthcare practitioners may hesitate to rely on and embrace AI technologies, fearing the inability to confirm the validity of their recommendations [15]. Thus, explainability and interpretability are essential for verifying the reliability and fairness of AI systems, especially in areas like healthcare, finance, and the legal system where decision-making has significant consequences.

XAI models are primarily divided into two main categories: intrinsic and post-hoc. Intrinsic models, also known as transparent models, are naturally understandable and explainable.

Conversely, post-hoc explainability techniques are further divided into model-specific and model-agnostic methods. Models lacking inherent transparency utilize post-hoc or surrogate models to clarify their decision-making processes. Model-specific explanations are custom-made to fit a model’s unique architecture, with Grad-CAM and saliency maps being notable examples. In contrast, model-agnostic explanations are more versatile and can be applied across different models to explain the prediction process, with SHAP and LIME being widely recognized methods in this category. XAI explanations can also be distinguished as local or global. Local explanations focus on understanding individual decisions made by a model, while global explanations provide insight into the overall behavior and rules of the model across all scenarios.

The subsequent sections delve into various XAI models, with an emphasis on those most commonly applied alongside ML/Deep Learning (DL) models in the context of breast cancer research. A thorough discussion of XAI models follows, including an overview of relevant research within the breast cancer domain.

1.2 SHapley Additive exPlanations (SHAP)

Introduced by Lundberg, SHAP [16] offers a coherent framework for the interpretation of model predictions, attributing significance to each feature in the context of a specific prediction. SHAP elucidates the influence of individual features on the output of any ML model, providing a tool for both global and local model understanding.

Game theory, particularly as seen through Shapley values, serves as the foundation for the SHAP methodology. It aims to distribute the prediction outcome fairly among the features, akin to dividing a payout among players based on their contribution.

SHAP Formalism: The SHAP value equation is presented as follows:

| (1) |

Here, symbolizes the SHAP value for a model prediction with input , where denotes a subset of features, the total number of features, and and represent the model’s predictions for the subset and for the subset excluding a specific feature, respectively.

Key principles of SHAP include:

-

1.

Local Accuracy: This principle asserts that the output from the explanation model should align with the original model’s output for any given input , as follows:

(2) Here, is the model’s base value, and are the SHAP values associated with each feature.

-

2.

Missingness: According to this principle, an absent feature (with a value of zero) in the input should not influence the model’s prediction, formalized as:

(3) -

3.

Consistency: The consistency principle states that SHAP values should accurately reflect a feature’s impact. If a feature’s presence or constancy enhances the model’s output, its SHAP value should not decrease. For any two models, and , if

(4) for all inputs , then .

SHAP thus provides a mathematically rigorous framework for fair and consistent feature importance attribution, enhancing the interpretability of ML models.

1.3 Gradient-weighted Class Activation Mapping (Grad-CAM)

Grad-CAM [17], introduced by Selvaraju et al., serves as a visual explanation method for a wide spectrum of CNN models. It operates by identifying and illuminating significant areas within an input image that are pivotal for the model’s class predictions. This is achieved by calculating the gradients of any target class relative to the activations in the final convolutional layer, thereby creating a heatmap of these key regions. Distinguished from CAM, Grad-CAM boasts adaptability across various CNN architectures, enabling model interpretability without necessitating architectural modifications. As a method that discriminates between classes, Grad-CAM conducts gradient calculations and manipulates the feature maps of the concluding convolutional layer to produce meaningful visualizations.

Grad-CAM Computation Steps:

-

1.

Gradient Calculation: The first step of Grad-CAM involves the calculation of the gradient of the loss concerning the final convolutional layer’s activations:

(5) This step discerns the contribution of each segment within the activation maps towards the model’s loss, delineating their significance in the decision-making process.

-

2.

Global Average Pooling (GAP) of Gradients: Following, a Global Average Pooling (GAP) operation is applied to these gradients to ascertain the importance weights () for each channel within the activation maps:

(6) Herein, represents the total count of elements in the activation map , with elucidating the weightage of each channel’s impact on the model’s output.

-

3.

Final Grad-CAM Equation: The construction of the Grad-CAM heatmap is then achieved by applying these weights to the activation maps and incorporating the ReLU function:

(7)

This formula emphasizes the collaborative effect of the weighted activation maps on illuminating the predictive regions of the input image. The ReLU ensures visualization of only those features positively affecting the target class, thereby aiding in the interpretability of the model’s predictive behavior.

1.4 Local Interpretable Model-Agnostic Explanations (LIME)

Ribeiro et al. introduced Local Interpretable Model-Agnostic Explanations (LIME) [18], which generate explanations that are both locally accurate and comprehensible for individual predictions by simulating the model’s decision boundary to be linear in the proximity of the instance being explained. It explicates an instance by fitting an interpretable model to a perturbed dataset around the input instance, shedding light on the prediction mechanisms of the original model.

LIME Formalism:

-

1.

Interpretable Data Representations: The distinction between the actual features used by the model and those used for explanations is pivotal. For comprehensibility, explanations utilize representations that are understandable to humans, which might be different from the complex features the model employs. For text classification, this could be a binary vector denoting word presence, and for image classification, it might indicate the presence or absence of super-pixels, despite the model using complex features like word embeddings or pixel tensors.

(8) This represents the original instance, and

(9) This is its binary vector form for interpretable representation.

-

2.

Fidelity-Interpretability Trade-off: The explanation model, , where encompasses models such as linear models, decision trees, or lists, is chosen for its simplicity. The domain of is , acting over the binary vector. The complexity of an explanation model, , varies; for instance, it might be the depth of a decision tree or the count of non-zero weights in a linear model. The fidelity function, , assesses how accurately the explanation model approximates the original model near instance , with defining proximity to . To balance interpretability and local fidelity, the optimal explanation minimizes:

(10) -

3.

Sampling for Local Exploration: LIME approximates the loss , weighted by , by sampling around without presupposing any structure for . This sampling constructs a dataset of perturbed samples with labels generated by the model . Optimizing equation (10) with dataset yields a locally faithful explanation , emphasizing the need for a balance between simplicity and fidelity.

-

4.

Sparse Linear Explanations: Considering as the class of linear models and as the locally weighted square loss, the aim is to derive a sparse linear model that remains interpretable. This is facilitated using a kernel function to stress local proximity around :

(11) To ensure interpretability, limitations on the number of features in the explanation are imposed, typically achieved through regularization methods like Lasso for feature selection, followed by least squares for weight determination, known as K-LASSO.

(12)

LIME strategically creates explanations that are both locally accurate and interpretable, offering insights into the operational behaviors of complex models through interpretable approximations.

2 Related Work

Breast cancer detection often relies on ML/DL models to analyze medical imagery, such as mammograms, for diagnosis. These models, though powerful, are frequently viewed as ”black boxes” due to the opaque nature of their decision-making processes. This obscurity can breed mistrust and hinder their adoption in clinical settings. Explainable AI (XAI) seeks to mitigate this problem by making the workings of these models more transparent. For instance, saliency maps are a common XAI technique that illuminates the key features within an image that influence the model’s predictions. This enables healthcare practitioners to better comprehend the basis of a model’s diagnostic suggestions, leading to more informed clinical judgments. Moreover, methods like SHAP (SHapley Additive exPlanations) further aid in explaining the model predictions for breast cancer detection, offering detailed explanations that healthcare professionals can rely on for making diagnosis decisions. Moncada et al. [19] benchmarked the performance of ML models such as Random Survival Forest (RSF), Survival Support Vector Machine (SSVM), and Extreme Gradient Boosting (XGBoost) against the CPH model for predicting survival outcomes in breast cancer patients. The study focused on female patients from the Netherlands diagnosed with primary invasive non-metastatic breast cancer between 2005 and 2008, highlighting the predictive prowess of XGBoost with a c-index of 0.73, facilitated by SHAP for model explainability.

While in [20], a hybrid model was introduced that integrates ML with explainable AI (XAI), specifically using XGBoost and SHAP for both prediction and explanation. This approach emphasized the relevance of lifestyle and biological factors, such as a high-fat diet and breastfeeding, in breast cancer risk stratification.

Moreover, the integration of radiomics and XAI has opened new avenues in the molecular subtype classification of breast cancer, with studies demonstrating the potential of imaging features to discriminate between luminal and non-luminal subtypes [21]. SHAP dependence plots have provided insights into the contribution of specific radiomic features to model predictions. It describes how a single feature affects the output of the LASSO SVM prediction model. The SHAP value is used to estimate the contribution of each feature to the predicted result [22].

For visual explanations of DL models’ decisions in medical imaging, Grad-CAM has profound significance in breast cancer diagnosis. By generating heatmaps that highlight key regions in mammograms, Grad-CAM offers clinicians intuitive insights into the features driving model predictions. Its accessibility and ability to bridge the gap between AI-driven diagnostics and clinical practice make Grad-CAM invaluable for enhancing the trust and acceptance of AI systems in healthcare. This visual aspect of Grad-CAM significantly contributes to the interpretability and transparency of medical results, facilitating more informed clinical decisions.

Masud et al. [23] employed pre-trained CNN models for classifying breast cancer from ultrasound images, where ResNet50 and VGG16 stood out for their performance, achieving an accuracy of 92.4% and an AUC score of 0.97, respectively. The application of Grad-CAM heatmaps in their study illuminated the pivotal areas for cancer classification, thereby enhancing the model’s transparency.

Subsequently, [24] utilized DenseNet-121 for classifying primary breast lesions from 2D ultrasound images, introducing Grad-CAM visualizations as a novel tool for interpreting AI decisions. This methodology yielded impressive outcomes, with accuracy, sensitivity, and specificity rates of 88.4%, 87.9%, and 89.2%, respectively, for coarse regions of interest (ROIs), and 86.1%, 87.9%, and 83.8%, for fine ROIs. Such visual insights facilitated a deeper understanding of the AI’s reasoning process.

Similarly, in [25] authors employed DenseNet-169 and EfficientNet-B5 to automate malignancy detection, achieving accuracy levels of 88.1% and 87.9%, respectively. Here, Grad-CAM played a crucial role in highlighting the significant regions leading to malignancy predictions, offering insights into the importance of both the tumor and adjacent areas.

Lou et al.[26] introduced the multi-level global-guided branch-attention network (MGBN) for mass classification in mammograms, incorporating Grad-CAM to verify the network’s accuracy in identifying mass regions. This method not only enhanced the model’s reliability and interpretability but also achieved noteworthy AUC scores of 0.8375 and 0.9311 on the DDSM and INbreast databases, respectively. Building on the theme of enhancing model transparency and efficacy, Wang et al.[27] took a multitask approach with their MIB-Net, which merged classification and tumor segmentation tasks. Utilizing Grad-CAM visualizations, they provided insights into how the model focuses on significant areas across various modalities, showcasing the model’s capability to efficiently prioritize critical information for accurate predictions. Together, these studies highlight the evolving landscape of breast cancer diagnostics, where advanced ML models are increasingly leveraged for their precision, reliability, and interoperability.

LIME provides localized insights into the models’ predictions, including breast cancer diagnosis, by highlighting specific regions of interest in mammograms contributing to the decisions.

The authors in [28] present an automated breast cancer cell image analysis system using the public BreakHis dataset[29]. Utilizing Zernike image moments to extract intricate features from cancer cell images, they employ simple neural networks for binary classification (benign vs. malignant classes). Subsequently, they utilize the LIME technique for explaining the test results, highlighting significant regions responsible for the ML algorithm’s decision-making process. This approach enhances the interpretability of test outcomes and justifies the algorithm’s decisions based on input images, addressing the need for explainable AI in histopathology-based breast cancer detection.

Despite the advancements in utilizing XAI techniques for breast cancer detection through medical imaging analysis, a critical gap remains in the quantitative evaluation of these methods. Current literature, including seminal works by Rezazadeh et al.[30], Lamy et al.[31, 32], and others, vividly illustrates the application of XAI to make the decision-making processes of DL models transparent. However, the absence of a unified framework for the objective measurement and comparison of XAI’s effectiveness limits the ability to ascertain its actual impact on enhancing model interpretability and trustworthiness among healthcare professionals. This gap not only obscures the direct benefits of XAI in clinical settings but also hinders the strategic selection and application of XAI techniques tailored to specific diagnostic challenges in breast cancer detection. It is imperative to establish standardized metrics and benchmarks that enable the rigorous evaluation of XAI techniques across various dimensions, including their influence on diagnostic accuracy, clinician decision-making, and patient outcomes. Such a comprehensive approach would facilitate a deeper understanding of the comparative advantages of different XAI methods, guiding their optimal utilization in clinical practice.

3 Methodology

This section outlines the comprehensive methodology adopted for the detection and classification of breast cancer using a Neural networks with XAI techniques. Given the critical nature of breast cancer as a global health issue, the focus is on developing a model that not only achieves high accuracy but also provides transparency in its decision-making process to healthcare professionals.

The methodology is structured into several key components: the dataset used for training and evaluation, the preprocessing steps to prepare the data, the adoption of transfer learning for model development, and the implementation of XAI techniques to explicate the model’s output. Each component is designed to address specific challenges in the task at hand, from handling medical imaging data to enhancing the interpretability of complex DL models. By integrating these components, this methodology aims to contribute to the early detection and classification of breast cancer, providing a basis for further research and potential clinical applications, as illustrated in Figure 1.

3.1 Dataset

The Curated Breast Imaging Subset of the Digital Database for Screening Mammography (CBIS-DDSM) is an updated and standardized version of the DDSM, containing 2,620 mammography studies categorized into malignant, benign, and normal classifications as depicted in Table 1 . This extensive dataset includes 10,239 images, totaling 163.6 GB, featuring mammograms coupled with accurate pathology annotations through ROI segmentation and bounding boxes. Its comprehensive scale and verified annotations establish CBIS-DDSM as the preferred dataset for this study.

| Characteristic | Total Count | Categories | Origin |

|---|---|---|---|

| Total Images | 10,239 | Malignant, Benign, Normal | USA |

| Total Subjects | 6,671 |

In this study, 2,129 mammograms were selected from the dataset, with each corresponding mammogram’s ROI extracted and converted from DICOM to PNG format. The number of ROIs varies across images, with each highlighting the critical features. All ROIs from an individual mammogram were merged into a single composite image, resulting in a dataset of 2,129 paired mammograms and ROI images.

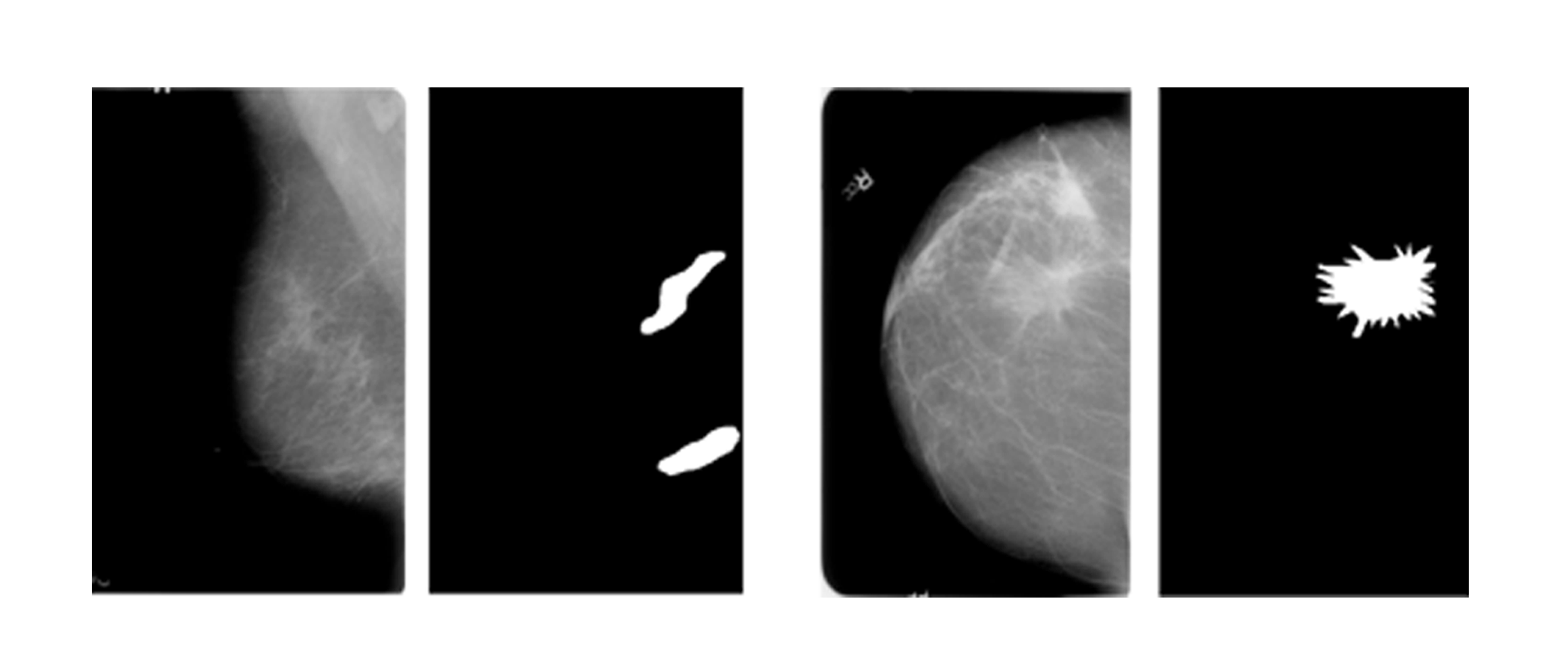

An illustration of this process can be seen in Figure 3, which displays a composite of five ROIs from one mammogram. Accompanying CSV files classify these images into benign and malignant categories, comprising 1,229 benign and 900 malignant cases. Figure 2 exemplifies a benign and a malignant mammogram alongside their respective ROIs from the dataset.

3.2 Image Pre-processing

Preprocessing is crucial in optimizing the efficacy and computational efficiency of DL models applied to medical imaging. For this research, the mammographic images within the dataset were standardized to a resolution of 224x224 pixels. This dimensionality adjustment is followed by a rigorous preprocessing pipeline meticulously designed to expunge extraneous details while simultaneously amplifying diagnostically relevant features within the images. The preprocessing regimen encompasses three principal components: artifact reduction, line removal, and image enhancement, each underpinned by data augmentation strategies to expand the dataset beyond its original volume of 2,129 images.

3.2.1 Artifact Reduction

The integrity of medical images, specifically mammograms, can be compromised by the presence of non-diagnostic elements such as annotative text and extraneous objects, as depicted in Figures 4 and 5. These artifacts, if left unaddressed, could potentially obfuscate the model’s interpretative precision, leading to suboptimal detection and classification performance [33], [34]. To mitigate this, artifact removal methods, which include morphological opening and contour detection,.

3.2.2 Line Removal

The de-noising through binary masking proved insufficient for the complete removal of all peripheral lines, necessitating further refinement. Intensity thresholding, Gabor filtering, and morphological operations were applied to discern and eliminate residual linear artifacts. This ensures the preservation of breast tissue fidelity while removing non-essential linear distractions. [35], [36].

3.2.3 Image Enhancement

The complexity of mammograms, characterized by the unclear separation between tumorous and dense tissues against a background of fatty tissues, poses a substantial challenge for interpretation based on models. This challenge is intensified in the CBIS-DDSM dataset, where dense tissues are predominantly prevalent. To counteract this, a dual-phase image enhancement strategy was adopted, incorporating Gamma correction followed by the application of Contrast Limited Adaptive Histogram Equalization (CLAHE), an evolved variant of adaptive histogram equalization [37]. This two-step enhancement, precisely optimized for medical imaging, significantly improves the diagnostic visibility of mammograms, resulting in better model accuracy. The mathematical foundations and detailed processes of CLAHE have been comprehensively explained in [38], providing a solid foundation for its application in enhancing mammographic image quality for DL-based diagnostic assessments.

Incorporating these preprocessing techniques within the framework has also been established by prior researchers in [33], [34], [35], [36], [37], [38]. This study not only adheres but also enriches the existing body of knowledge focused on refining mammographic image analysis for enhanced breast cancer detection and classification.

3.3 Data Augmentation

In the domain of ML applications within medical imaging analysis, data augmentation emerges as a critical preprocessing step, especially in tasks involving mammogram classification. The essence of data augmentation lies in its capacity to augment the training dataset through a series of transformations, such as flipping, rotating, and adjusting image properties. This process introduces a broader range of scenarios for the model to learn from, significantly enhancing its robustness and preventing overfitting. It is vital in medical imaging, where the ability to accurately recognize diverse patterns and anomalies can dramatically affect diagnostic outcomes. The goal of implementing specific augmentation strategies is to enrich the dataset with complexities and variabilities reflective of real-world conditions, thereby improving the model’s diagnostic performance.

Before the augmentation process begins, a crucial step involves dividing the collection of mammographic images into training and testing groups, adhering to a 90:10 split. This division is essential for ensuring a balanced representation of both benign and malignant cases in the training and testing phases and maintaining the integrity of the dataset. A balanced representation aids in preventing model bias, ensuring that the diagnostic capabilities of the ML model are developed uniformly across different types of cases.

Upon establishing a structured and balanced dataset, the focus shifts to enhancing the robustness and generalizability of the model. This enhancement is achieved by applying a series of data augmentation techniques to the training set. The deployment of these techniques aims to increase the size of the training dataset by generating modified versions of the images, thereby reducing overfitting and improving the model’s ability to generalize from training to unseen data.

To address this, seven distinct data augmentations were implemented on the training dataset. which include horizontal flipping, vertical flipping, and combined flipping in both directions and rotations (both positive and negative 30 degrees), with and without subsequent flipping as shown in Figure 6. Such comprehensive augmentation ensures the model is exposed to a wide array of image orientations and variations, mirroring the diversity encountered in real-world diagnostic settings.

3.4 Transfer Learning

The approach involves the use of models pre-trained on large datasets, which are then slightly adjusted or fine-tuned to perform specific tasks, such as identifying abnormalities in mammogram images. Transfer learning is particularly beneficial in situations where annotated medical datasets are scarce. By leveraging knowledge acquired from related tasks, it allows for the efficient training of robust models on smaller datasets, simply by modifying the model’s final layers to adapt to the new task. This technique’s capacity to overcome the challenges posed by limited data availability has been well documented in the literature of [39]. The ability to transfer learned patterns from one domain to another significantly enhances model performance and accelerates the development process in data-constrained environments. Convolutional neural Networks (CNNs) are at the forefront of image analysis technologies, with several architectures standing out for their performance. Notable examples include VGG [40], Res-Net [41], Dense-Net [42], Inception [43], and Alex-Net [44]. Each architecture offers distinct advantages, making them suitable for a wide range of applications in deciphering image data. This study aimed to evaluate the performance of various transfer learning models on a dataset curated for mammographic image analysis. The models tested were VGG16, Inception V3, Res-Net18, and Res-Net50. Consistency in dataset usage and data split ratios ensured the reliability of performance comparisons. The objective was to identify the model that The evaluations revealed variations across the tested models, with Res-Net50 emerging as the most effective, achieving a test accuracy of 72%. Enhancement of this model through fine-tuning—a process of re-adjusting pre-trained weights for specific tasks as detailed in [45]—led to improved accuracy of 76% as displayed in Table 2.

| Model | Epochs | Batch Size | Train Accuracy | Test Accuracy |

|---|---|---|---|---|

| VGG16 | 50 | 32 | 0.92 | 0.56 |

| Inception V3 | 20 | 32 | 0.67 | 0.56 |

| Res-Net18 | 10 | 32 | 0.71 | 0.58 |

| Res-Net50 | 50 | 32 | 0.95 | 0.72 |

| Res-Net50 (Fine Tuned) | 100 | 32 | 0.95 | 0.76 |

The fine-tuned Res-Net50 model is instrumental in the integration of XAI within the mammographic analysis. XAI aims to increase transparency in AI decision-making processes, making AI-driven diagnoses more understandable. This is particularly important in the medical sector, where clear diagnostic reasoning can significantly affect patient outcomes. The research findings demonstrate the significant impact of transfer learning on improving the accuracy of AI models for medical image analysis. Additionally, this study lays the foundation for advancements in Explainable AI (XAI) to bridge the gap between AI’s capabilities and human interpretability. This increased understanding has the potential to encourage better collaboration between healthcare professionals and AI systems, ultimately leading to improved patient care.

4 Results

In the exploration of advanced diagnostic methodologies for breast cancer, the integration of AI within mammographic analysis has shown promising potential to augment the precision and efficiency of early detection. This section delves into the empirical findings derived from the application of various interpretability techniques— Grad-CAM, LIME, and SHAP values—on mammographic images. These techniques offer a window into the AI model’s cognitive process, revealing how it discerns between benign and malignant findings. Through a meticulous examination of heatmaps, LIME masks, and SHAP value plots, our study explains the model’s ability to identify and prioritize areas of concern within mammographic images. This not only enhances our understanding of AI-driven diagnostics but also paves the way for improvements in the interpretability and reliability of AI models in medical imaging.

Upon analyzing the mammographic images with heatmaps (Grad-CAM), it became evident that the model assigns heightened importance to certain regions, visibly marked by an intensified color spectrum, where red hues denote areas of critical diagnostic value. Notably, these regions often align with denser tissue or anomalies, suggesting that the model’s algorithm is proficient in detecting subtle yet clinically significant patterns that might escape the unaided eye. For instance, in Figure 7, the brighter areas correlate with the precise locations of tissue irregularities, underscoring the model’s potential in highlighting zones warranting closer examination for the presence of malignancies.

The application of LIME masks to the original mammograms further refines our understanding of the model’s diagnostic process. By assigning distinct colors to different segments, the LIME analysis dissects the mammogram into regions of varying relevance to the model’s classification decision. This segmentation reveals that the model not only considers the overall tissue density but also pays detailed attention to specific areas that exhibit unusual textural or structural characteristics. For example, Figure 8 shows how segments marked by LIME correspond to areas where a radiologist might detect signs of pathological changes, thereby validating the model’s accuracy in identifying key diagnostic features.

SHAP value plots offer a granular view of the model’s reasoning by displaying the influence of individual features (pixels or regions) on the classification outcome. Through a color-coded representation—blue for features pushing the classification towards benign and red for those indicating malignancy—these plots articulate the model’s internal assessment of each region’s contribution to the final diagnosis. In the context of breast cancer detection, areas with high red SHAP values not only confirmed the presence of malignancy but also aligned with clinically relevant markers of cancerous tissue. Figure 9 illustrates this phenomenon, where the predictive significance of each pixel is quantified, offering a direct link between the model’s interpretation and identifiable pathological features.

5 Evaluation

Explainability methods such as Grad-CAM, LIME, and SHAP are vital for deciphering the workings of DL models. However, assessing the safety and reliability of these explanations is equally crucial. Our evaluation, based on the explainability factsheet by Sokol et al. [14], primarily focuses on the fourth dimension: safety. This dimension encompasses four critical factors: information leakage, explanation misuse, explanation invariance, and explanation quality. We have systematically evaluated all four of these factors.

5.1 Information Leakage

To ensure the safety and security of any AI system, it is important to consider how much knowledge about the model itself is revealed by the information provided in the explanation. An example of information leakage can be the explanations of a k-nearest neighbor model, which can reveal the information of the training data points of the model. However, when this factor is considered for our model, it is not a concern as we use DL models, which are essentially black-box models and require explanations to understand the results. The explanations provided by our chosen methods of Grad-CAM, LIME, and SHAP also do not reveal any information about the architecture of the model itself; instead, they help us understand the regions of interest chosen by the model in each sample image. Each output of the models provides visual information that highlights the most important regions in the image.

5.2 Explanation Misuse

The factor of information misuse comes into consideration based on the information in the model that is leaked via explanations. A model can be stolen or replicated if considerable information about it can be gathered through the explanations. However, this factor does not apply to our model, as our methods of explanation do not leak any information regarding the architecture of the model.

5.3 Explanation Invarience

The third factor in the dimension of safety is explanation invariance. The primary objective of explanations is to develop an understanding of humans for each sample. Hence, an explainable system is expected to be stable and consistent.

The factor of stability defines that explanations of the same prediction by a model generated multiple times should result in the same explanation as well. This was evaluated in our model as well by implementing the three XAI techniques multiple times on the same image. An explanation generated by LIME results from the process of random perturbation, which generates simulated data in the surroundings of an instance for a simple linear classifier to predict and results in instability as it leads to the generation of different explanations for the same prediction [46]. Hence, while Grad-CAM and SHAP are stable and produce the same explanation for each prediction, the explanation provided by LIME is unstable, which has been shown in Figure 10.

The factor of consistency defines that explanations of a fixed model for similar data points should also be similar. We have evaluated the consistency of our model by using the ROI data provided in the original CBIS DDSM dataset and checking whether images with similar regions of interest have similar XAI results. The Figure 11 shows two similar ROI images with similar Grad-CAM results, which shows the consistency of Grad-CAM. SHAP works by assigning each feature an importance value for a particular prediction [47]. Hence, similar datapoints are assigned similar importance values, which shows the consistency of SHAP. The instability of LIME also makes LIME inconsistent as it generates different explanations for the same prediction; hence, comparison of similar data points does not produce similar explanations.

5.4 Explanation Quality

The final safety measure is the explanation quality, which emphasizes the correctness of the explanation provided. While we have achieved the explainability of the model, it is necessary to analyze the correctness of the explanations provided by the different XAI functions to understand the performance of the black-box DL model. To analyze the explanations provided by XAI techniques for the performance of our model, the ROI images of the CBIS DDSM dataset have been used to compare the areas of importance highlighted by verified pathologists with the areas highlighted by XAI techniques. The ratio of similarity between the results of XAI and the original ROI images indicated how well the model is detecting the correct parts of the images for its classification. To create this ratio, we have created binary masks of the explanations provided by Grad-CAM and LIME which are shown in Figure 12.

Figure 13 shows the original mammogram, the pre-processed image, the Grad-CAM output, the mask generated from the Grad-CAM heatmap, and the original ROI from the dataset.

Figure 14 shows the original mammogram, the pre-processed image, the LIME output, the mask generated from the LIME segments, and the original ROI from the dataset.

The binary masks are then compared to the ROI images using Hausdorff distance. Hausdroff distance is a common measure for comparing the dissimilarity between image segmentation and point sets [48]. This method is commonly used in the medical domain to evaluate segmentation in medical images such as computed tomography (CT) and magnetic resonance imaging (MRI) [49]. The Hausdorff distance between two point sets and is the maximum distance between each point, as shown in the following equation [48].

| (13) |

The degree of resemblance is greater when the value of the Hausdroff distance between two shapes is smaller [50]. The range of Hausdorff distance values depends on the size and complexity of the images being compared. In general, the Hausdorff distance values range from 0 to infinity, where a value of 0 indicates that the two binary masks are identical, and larger values indicate increasing differences between the two masks. After the Hausdroff distance for each image is calculated, the mean distance is calculated to analyze the performance of the model. A lower mean Hausdorff distance indicates a higher level of similarity between all the images, while a higher mean Hausdorff distance indicates a greater level of dissimilarity.

When comparing the ROI images with the masks produced by Grad-CAM, the Hausdorff distance values of our dataset range from 1 to 133, with the mean Hausdorff value being 18.

When comparing the ROI images with the masks produced by Grad-CAM, the Hausdorff distance values of our dataset range from 1 to 160, with the mean Hausdorff value being 86. This indicates that the performance of the Grad-CAM explainability technique is better than the explainability provided by LIME.

6 Conclusion

The study has explored the symbiosis of CNNs and XAI within the context of mammography for breast cancer diagnosis. The core of our research did not only improved the predictive accuracy of CNNs through the deployment of a fine-tuned ResNet50 model but also highlighted the blackbox, a void often criticized in DL methodologies, by integrating XAI techniques—namely Grad-CAM, LIME, and SHAP. These methods addressed the ”black box” nature of DL, offering insightful visual and quantitative interpretations of the model’s decision-making processes.

Our findings highlight the potential of combining CNNs with XAI to not only advance the accuracy of breast cancer diagnosis but also to bridge the gap between AI technologies and clinical applicability. By making AI decisions more understandable, we pave the way for enhanced collaboration between AI systems and medical professionals, ultimately contributing to more informed and trustworthy patient care.

Despite the promising results, this study acknowledges the limitations inherent in our approach, including the variability in explanation consistency observed with certain XAI techniques, notably LIME. Such limitations highlight the necessity for continuous refinement of both models and explanation methodologies to ensure that they meet the rigorous demands of clinical application.

In the future, we have envisioned several trajectories for future research. Firstly, exploring more DL architectures and training techniques could further enhance diagnostic accuracy. Secondly, developing more robust and consistent XAI methodologies will be crucial to improving the reliability of AI explanations. Thirdly, collaboration with medical professionals in designing AI tools can ensure that these technologies align with clinical needs and practices. Finally, extending our research to include multi-modal data, such as patient history and genetic information, could offer a more holistic approach to breast cancer diagnosis.

References

- [1] I. A. for Research on Cancer), “Breast cancer iarc.” https://www.iarc.who.int/cancer-type/breast-cancer. [Accessed 23-Jun-2023].

- [2] M. Moghbel and S. Mashohor, “A review of computer assisted detection/diagnosis CAD in breast thermography for breast cancer detection,” Artificial Intelligence Review, vol. 39, no. 4, pp. 305–313, 2013.

- [3] U. Sajid, D. Khan, R. Ahmed, D. Shah, S. Munir, D. Arif, et al., “Breast cancer classification using deep learned features boosted with handcrafted features,” arXiv preprint arXiv:2206.12815, 2022.

- [4] S. M. Shah, R. A. Khan, S. Arif, and U. Sajid, “Artificial intelligence for breast cancer analysis: Trends & directions,” Computers in Biology and Medicine, p. 105221, 2022.

- [5] M. M. Bejani and M. Ghatee, “A systematic review on overfitting control in shallow and deep neural networks,” Artificial Intelligence Review, vol. 54, no. 8, pp. 6391–6438, 2021.

- [6] J.-Z. Cheng, D. Ni, Y.-H. Chou, J. Qin, C.-M. Tiu, Y.-C. Chang, C.-S. Huang, D. Shen, and C.-M. Chen, “Computer-aided diagnosis with deep learning architecture: applications to breast lesions in us images and pulmonary nodules in CT scans,” Scientific reports, vol. 6, no. 1, pp. 1–13, 2016.

- [7] A. B. Arrieta, N. Díaz-Rodríguez, J. Del Ser, A. Bennetot, S. Tabik, A. Barbado, S. García, S. Gil-López, D. Molina, R. Benjamins, et al., “Explainable artificial intelligence XAI: Concepts, taxonomies, opportunities and challenges toward responsible ai,” Information fusion, vol. 58, pp. 82–115, 2020.

- [8] G. Yang, Q. Ye, and J. Xia, “Unbox the black-box for the medical explainable AI via multi-modal and multi-centre data fusion: A mini-review, two showcases and beyond,” Information Fusion, vol. 77, pp. 29–52, 2022.

- [9] A. Singh, S. Sengupta, and V. Lakshminarayanan, “Explainable deep learning models in medical image analysis,” Journal of Imaging, vol. 6, no. 6, p. 52, 2020.

- [10] F. Emmert-Streib, O. Yli-Harja, and M. Dehmer, “Explainable artificial intelligence and machine learning: A reality rooted perspective,” Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, vol. 10, no. 6, p. e1368, 2020.

- [11] H. Chereda, A. Bleckmann, K. Menck, J. Perera-Bel, P. Stegmaier, F. Auer, F. Kramer, A. Leha, and T. Beißbarth, “Explaining decisions of graph convolutional neural networks: patient-specific molecular subnetworks responsible for metastasis prediction in breast cancer,” Genome medicine, vol. 13, pp. 1–16, 2021.

- [12] J. A. Recio-García, B. Díaz-Agudo, and V. Pino-Castilla, “CBR-LIME: a case-based reasoning approach to provide specific local interpretable model-agnostic explanations,” in Case-Based Reasoning Research and Development: 28th International Conference, ICCBR 2020, Salamanca, Spain, June 8–12, 2020, Proceedings 28, pp. 179–194, Springer, 2020.

- [13] E. M. Kenny, C. Ford, M. Quinn, and M. T. Keane, “Explaining black-box classifiers using post-hoc explanations-by-example: The effect of explanations and error-rates in XAI user studies,” Artificial Intelligence, vol. 294, p. 103459, 2021.

- [14] K. Sokol and P. Flach, “Explainability fact sheets: A framework for systematic assessment of explainable approaches,” in Proceedings of the 2020 conference on fairness, accountability, and transparency, pp. 56–67, 2020.

- [15] S. Nasir, R. A. Khan, and S. Bai, “Ethical framework for harnessing the power of ai in healthcare and beyond,” IEEE Access, vol. 12, pp. 31014–31035, 2024.

- [16] S. M. Lundberg and S.-I. Lee, “A unified approach to interpreting model predictions,” Advances in neural information processing systems, vol. 30, 2017.

- [17] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra, “Grad-CAM: Visual explanations from deep networks via gradient-based localization,” in Proceedings of the IEEE international conference on computer vision, pp. 618–626, 2017.

- [18] M. T. Ribeiro, S. Singh, and C. Guestrin, “”Why should i trust you?” explaining the predictions of any classifier,” in Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pp. 1135–1144, 2016.

- [19] A. Moncada-Torres, M. C. van Maaren, M. P. Hendriks, S. Siesling, and G. Geleijnse, “Explainable machine learning can outperform cox regression predictions and provide insights in breast cancer survival,” Scientific reports, vol. 11, no. 1, p. 6968, 2021.

- [20] F. Silva-Aravena, H. Núñez Delafuente, J. H. Gutiérrez-Bahamondes, and J. Morales, “A Hybrid Algorithm of ML and XAI to Prevent Breast Cancer: A Strategy to Support Decision Making,” Cancers, vol. 15, no. 9, p. 2443, 2023.

- [21] F. Wang, D. Wang, Y. Xu, H. Jiang, Y. Liu, and J. Zhang, “Potential of the non-contrast-enhanced chest ct radiomics to distinguish molecular subtypes of breast cancer: A retrospective study,” Frontiers In Oncology, vol. 12, p. 848726, 2022.

- [22] R. Rodríguez-Pérez and J. Bajorath, “Interpretation of compound activity predictions from complex machine learning models using local approximations and shapley values,” Journal of Medicinal Chemistry, vol. 63, no. 16, pp. 8761–8777, 2019.

- [23] M. Masud, A. E. Eldin Rashed, and M. S. Hossain, “Convolutional neural network-based models for diagnosis of breast cancer,” Neural Computing and Applications, pp. 1–12, 2020.

- [24] F. Dong, R. She, C. Cui, S. Shi, X. Hu, J. Zeng, H. Wu, J. Xu, and Y. Zhang, “One step further into the blackbox: a pilot study of how to build more confidence around an AI-based decision system of breast nodule assessment in 2d ultrasound,” European radiology, vol. 31, pp. 4991–5000, 2021.

- [25] Y. J. Suh, J. Jung, and B.-J. Cho, “Automated breast cancer detection in digital mammograms of various densities via deep learning,” Journal of personalized medicine, vol. 10, no. 4, p. 211, 2020.

- [26] M. Lou, R. Wang, Y. Qi, W. Zhao, C. Xu, J. Meng, X. Deng, and Y. Ma, “MGBN: Convolutional neural networks for automated benign and malignant breast masses classification,” Multimedia Tools and Applications, vol. 80, no. 17, pp. 26731–26750, 2021.

- [27] J. Wang, Y. Zheng, J. Ma, X. Li, C. Wang, J. Gee, H. Wang, and W. Huang, “Information bottleneck-based interpretable multitask network for breast cancer classification and segmentation,” Medical Image Analysis, vol. 83, p. 102687, 2023.

- [28] D. Kaplun, A. Krasichkov, P. Chetyrbok, N. Oleinikov, A. Garg, and H. S. Pannu, “Cancer cell profiling using image moments and neural networks with model agnostic explainability: A case study of breast cancer histopathological (BreakHis) database,” Mathematics, vol. 9, no. 20, p. 2616, 2021.

- [29] F. A. Spanhol, L. S. Oliveira, C. Petitjean, and L. Heutte, “A dataset for breast cancer histopathological image classification,” Ieee transactions on biomedical engineering, vol. 63, no. 7, pp. 1455–1462, 2015.

- [30] A. Rezazadeh, Y. Jafarian, and A. Kord, “Explainable ensemble machine learning for breast cancer diagnosis based on ultrasound image texture features,” Forecasting, vol. 4, no. 1, pp. 262–274, 2022.

- [31] J.-B. Lamy, B. Sekar, G. Guezennec, J. Bouaud, and B. Séroussi, “Hierarchical visual case-based reasoning for supporting breast cancer therapy,” in 2019 Fifth International Conference on Advances in Biomedical Engineering (ICABME), pp. 1–4, IEEE, 2019.

- [32] J.-B. Lamy, B. Sekar, G. Guezennec, J. Bouaud, and B. Séroussi, “Explainable artificial intelligence for breast cancer: A visual case-based reasoning approach,” Artificial intelligence in medicine, vol. 94, pp. 42–53, 2019.

- [33] R. S. Lee, F. Gimenez, A. Hoogi, K. K. Miyake, M. Gorovoy, and D. L. Rubin, “A curated mammography data set for use in computer-aided detection and diagnosis research,” Scientific data, vol. 4, no. 1, pp. 1–9, 2017.

- [34] L. Ahmed, M. M. Iqbal, H. Aldabbas, S. Khalid, Y. Saleem, and S. Saeed, “Images data practices for semantic segmentation of breast cancer using deep neural network,” Journal of Ambient Intelligence and Humanized Computing, pp. 1–17, 2020.

- [35] D. A. Ragab, M. Sharkas, S. Marshall, and J. Ren, “Breast cancer detection using deep convolutional neural networks and support vector machines,” PeerJ, vol. 7, p. e6201, 2019.

- [36] S. Montaha, S. Azam, A. K. M. R. H. Rafid, P. Ghosh, M. Hasan, M. Jonkman, F. De Boer, et al., “Breastnet18: a high accuracy fine-tuned VGG16 model evaluated using ablation study for diagnosing breast cancer from enhanced mammography images,” Biology, vol. 10, no. 12, p. 1347, 2021.

- [37] M. Van Droogenbroeck and M. Buckley, “Morphological erosions and openings: fast algorithms based on anchors,” Journal of Mathematical Imaging and Vision, vol. 22, pp. 121–142, 2005.

- [38] N. Hassan, S. Ullah, N. Bhatti, H. Mahmood, and M. Zia, “The retinex based improved underwater image enhancement,” Multimedia Tools and Applications, vol. 80, pp. 1839–1857, 2021.

- [39] R. A. Khan, A. Crenn, A. Meyer, and S. Bouakaz, “A novel database of children’s spontaneous facial expressions (liris-cse),” Image and Vision Computing, vol. 83, pp. 61–69, 2019.

- [40] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- [41] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition. arxiv preprint arxiv: 151203385,” 2015.

- [42] G. Huang, Z. Liu, L. Van Der Maaten, and K. Q. Weinberger, “Densely connected convolutional networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4700–4708, 2017.

- [43] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich, “Going deeper with convolutions,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1–9, 2015.

- [44] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” Communications of the ACM, vol. 60, no. 6, pp. 84–90, 2017.

- [45] N. Tajbakhsh, J. Y. Shin, S. R. Gurudu, R. T. Hurst, C. B. Kendall, M. B. Gotway, and J. Liang, “Convolutional neural networks for medical image analysis: Full training or fine tuning?,” IEEE transactions on medical imaging, vol. 35, no. 5, pp. 1299–1312, 2016.

- [46] M. R. Zafar and N. M. Khan, “DLIME: A deterministic local interpretable model-agnostic explanations approach for computer-aided diagnosis systems,” arXiv preprint arXiv:1906.10263, 2019.

- [47] S. M. Lundberg and S.-I. Lee, “A unified approach to interpreting model predictions,” Advances in neural information processing systems, vol. 30, 2017.

- [48] A. A. Taha and A. Hanbury, “An efficient algorithm for calculating the exact hausdorff distance,” IEEE transactions on pattern analysis and machine intelligence, vol. 37, no. 11, pp. 2153–2163, 2015.

- [49] P. J. Besl and N. D. McKay, “Method for registration of 3-D shapes,” in Sensor fusion IV: control paradigms and data structures, vol. 1611, pp. 586–606, Spie, 1992.

- [50] I.-S. Kim and W. McLean, “Computing the hausdorff distance between two sets of parametric curves,” Communications of the Korean Mathematical Society, vol. 28, no. 4, pp. 833–850, 2013.