Engineering Reliable Interactions in the Reality-Artificiality Continuum

Abstract

Milgram’s reality-virtuality continuum applies to interaction in the physical space dimension, going from real to virtual. However, interaction also has a social dimension, which can go from real to artificial depending on the companion with whom the user interacts. In this paper we present our vision of the bidimensional Reality-Artificiality Continuum (RAC), identify some challenges in its design and development, and discuss how reliable interactions might be supported inside RAC.

1 Introduction

According to the Merriam-Webster Dictionary, interaction is the “mutual or reciprocal action or influence” [45]; its definition covers both social interaction carried out by humans and socially-capable entities via communication, and physical interaction among physical entities and/or humans.

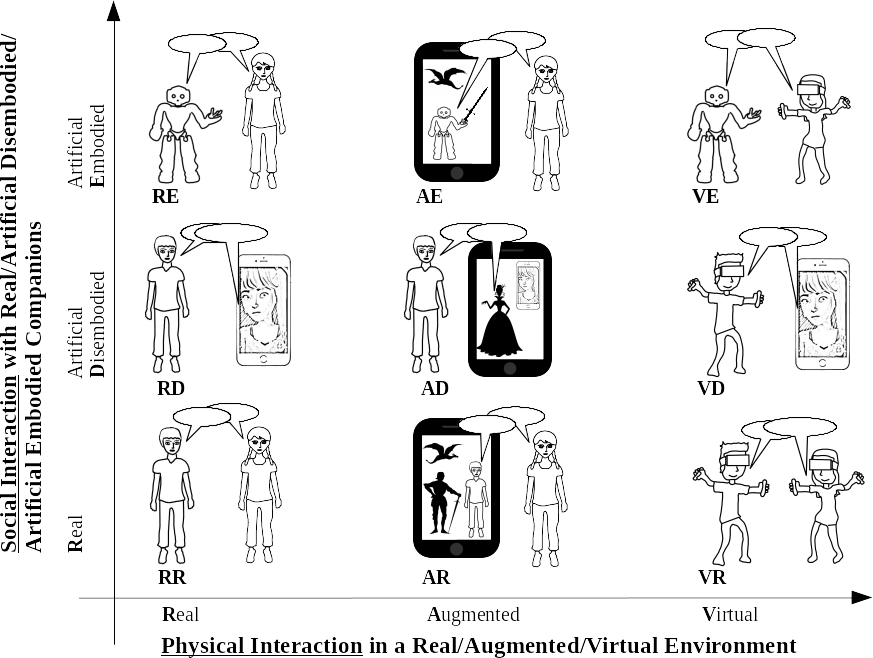

The reality-virtuality continuum was introduced by Milgram in 1994 [46] and applies to interaction in the physical space dimension, going from real to virtual. Given that interaction is also strongly characterized by a social dimension, the reality-virtuality continuum paradigm should be placed inside a bidimensional space, as shown in Figure 1, rather than a unidimensional one. The physical dimension, drawn on the horizontal axis, involves interaction with an environment, be it real, augmented, or virtual. The social dimension, associated with the vertical axis, involves communicative interaction via natural language with companions, be they human or artificial.

A virtual environment is composed only of virtual elements; an augmented reality environment combines virtual and real objects. Although in both augmented and virtual scenes the virtual elements should be consistent with the real world surrounding the user, the perceptual issues and the alignment constraints differ. In immersive virtual reality, the environment is inherently coherent because it is entirely virtual, and only interaction coherence is required. In augmented environments, by contrast, visual misalignment between the virtual and real parts of the scene can arise even from small spatial errors.

While Milgram’s reality-virtuality continuum in the physical dimension is commonly accepted, we are not aware of an analogous “social interaction reality-artificiality continuum” paradigm, as depicted on the vertical axis.

In our vision, social interaction goes from real to artificial, the latter involving interaction between humans and artificial companions, which are further classified as disembodied, like chatbots and software agents, or embodied, like robots and other autonomous systems equipped with a hardware structure. The various forms of social interaction can be combined with the various forms of physical interaction, leading to the different areas identified in Figure 1. Although for most people the best form of interaction takes place in the real physical space and with real persons (the bottom-left RR area in Figure 1), this is sometimes not possible.
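The area labels used throughout this paper (RR, AR, VR, RD, AD, VD, RE, AE, VE) can be read as a two-letter code: the first letter gives the physical dimension and the second the social one. The following Python sketch makes this reading explicit. It is our interpretation of the labels in Figure 1, assuming that the vertical axis goes from real companions at the bottom, through disembodied ones, to embodied ones at the top; the enum and function names are illustrative only.

```python
from enum import Enum
from typing import Dict, List, Tuple


class Physical(Enum):
    """Horizontal axis of Figure 1 (physical dimension), left to right."""
    REAL = "R"
    AUGMENTED = "A"
    VIRTUAL = "V"


class Social(Enum):
    """Vertical axis of Figure 1 (social dimension), bottom to top, as we read it."""
    REAL = "R"         # real human companions
    DISEMBODIED = "D"  # e.g. chatbots and software agents
    EMBODIED = "E"     # e.g. robots and other autonomous systems with a hardware structure


def area(physical: Physical, social: Social) -> str:
    """Two-letter area label: physical letter first, social letter second."""
    return physical.value + social.value


# All nine areas of the bidimensional RAC space, keyed by label.
RAC_AREAS: Dict[str, Tuple[Physical, Social]] = {
    area(p, s): (p, s) for s in Social for p in Physical
}


def grid() -> List[List[str]]:
    """Area labels laid out as in Figure 1: top row first, real environments on the left."""
    return [[area(p, s) for p in Physical] for s in list(Social)[::-1]]
```

Printing `grid()` row by row reproduces the layout described in the text, with RE in the top-left corner and RR in the bottom-left one.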

Before the Covid-19 pandemic, the impossibility of interacting in the RR area mainly concerned people living in rural and alpine areas poorly connected with nearby villages, as well as hospitalized or disabled people, namely fragile categories at risk of social exclusion. Covid-19, instead, made it clear that billions of humans can suddenly be prevented from moving in the RR area for a long time. Today, the frailty of interactions is a global condition, and their lack dramatically affects our well-being: social distancing prevents interaction, and the effects of prolonged isolation may worsen or trigger mental health problems.¹

¹ https://www.sciencenews.org/article/coronavirus-covid-19-social-distancing-psychological-fallout, https://www.nationalgeographic.com/history/2020/04/psychologists-watching-coronavirus-social-distancing-coping/, https://www.forbes.com/sites/traversmark/2020/04/17/social-distancing-is-harming-mental-health-more-than-physical-health-according-to-new-survey-data/

In our futuristic vision, interaction will be possible even when no real environment and no real companions are available: the real or artificial companion may be immersed in a real, augmented, or virtual environment, and the human user may interact with it in natural language. Virtual Reality (VR) and Augmented Reality (AR) may be the media through which users interact with their companions to overcome isolation when the RR area is not accessible. To make our vision feasible, the results already achieved in integrating robotics and VR/AR should be merged with those achieved in integrating chatbots and VR/AR, and should be further extended to cope with the peculiar issues of both areas and with the challenges raised by their merge. We name the vision resulting from this merge, depicted in Figure 1, RAC, for Reality-Artificiality Continuum.

In this position paper, we outline some of the challenges raised by the design and development of the RAC vision. In particular, we discuss some techniques suitable for designing and implementing reliable interactions in RAC, with special attention to applications related to well-being. We borrow the definition of reliability from [22]: “For systems offering a service or function, reliability means that the service or function is available when needed. A software system is reliable to the extent that it meets its requirements consistently, namely that it makes good decisions in all situations.” Among the requirements we envisage for a system implementing the RAC vision, we mention the following:

1. to be available to anyone who needs interaction, anywhere, and hence to be based on free/open-source software and on low-cost technologies; while it is clearly impossible to provide all RAC users with embodied companions, most of them should at least be able to interact with disembodied companions in VR/AR settings;

2. to be safe, and hence to address privacy issues, to avoid dangerous behaviors, and to avoid hurting the users’ feelings by always respecting their sensibility, as represented by their user profile;

3. to be realistic and engaging, in order to provide a believable and rewarding experience to the user, and hence to ensure coherence between the real environment where the user is immersed and the augmented/virtual one built on top of it;

4. to comply with the most recent guidelines for ethics in robotics [10, 29] and in autonomous systems [54, 57].

The above requirements may be further specialized for the different areas of the bidimensional RAC space. On the one hand, the reliability of social interactions can be more easily preserved in immersive VR, since all agents are virtual and hence coherent with one another, whereas in augmented environments users may perceive differences between virtual and real agents. On the other hand, immersive VR may not be well accepted by some people with special needs, since it creates a completely alternative reality.

Despite the differences among these types of interaction, which raise further specific challenges, the four requirements above are the most important ones for our RAC vision and should always be met. Designing and implementing a system that provides (some of) the interaction functionalities sketched in Figure 1 while meeting the four requirements above is what we mean by “engineering reliable interactions in the Reality-Artificiality Continuum”.

2 State of the art

Over the last ten years, the interest of the research community in social inclusion in general, and in the inclusion of fragile people in particular, has grown steadily. Social inclusion was one of the eleven priorities of the Cohesion Policy for 2014-2020 (“thematic objective 9”²), and several European Community research programs have funded, or will soon fund, projects aimed at addressing these needs.³ At the end of April 2020, a third of the world population was under some form of coronavirus lockdown, meaning that their movements were restricted and controlled by their respective governments⁴: many of us became fragile, and the need for social inclusion now affects at least 2.5 billion people. One way to achieve social inclusion is by boosting interaction.

² https://ec.europa.eu/regional_policy/en/policy/how/priorities

³ http://www.europeannetforinclusion.org/, http://www.interreg-alcotra.eu/it/decouvrir-alcotra/les-projets-finances/pro-sol, https://eacea.ec.europa.eu/erasmus-plus/news/social-inclusion-and-common-values-the-contribution-in-the-field-of-education-and-training-2019_en

⁴ https://www.statista.com/chart/21240/enforced-covid-19-lockdowns-by-people-affected-per-country/

The starting point of our vision is hence Milgram’s reality-virtuality continuum [46], or Mixed Reality (MR) continuum, a commonly accepted paradigm that describes Virtual and Augmented Reality (VR/AR) applications within a unitary approach, rather than as distinct concepts, and that is depicted in the RR, AR, and VR areas in Figure 1. The MR continuum is a scale ranging from completely real environments with no computer enhancements to virtual environments entirely generated by the computer. Between these two extremes lie Augmented Reality and Augmented Virtuality (AV), which combine real and virtual worlds. Similarly, devices range from non-immersive, standard visualization devices, e.g. monitors or tablets, to highly immersive systems like head-mounted displays, typically used for VR applications but also for wearable AR [33].

So far, the most critical aspects of engineering VR/AR applications are perceptual issues, undesired effects, and effective use in contexts other than entertainment: these are open and widely discussed problems, e.g. the misestimation of distances [12, 27, 30, 39, 40, 49]. Such perceptual errors negatively affect interaction, for example in industrial or medical training tasks; moreover, the possibility of interacting in a natural way in such environments is also important for their acceptance, especially by fragile people [6]. Indeed, immersion, presence, and interactivity all influence VR acceptance and use [14, 47]. Some studies analyze the acceptance of VR by specific classes of subjects, e.g. people with cognitive deficits caused by various disorders or traumatic brain injury [15], reporting positive results both in terms of patient involvement and of effects on rehabilitation, also with immersive VR head-mounted displays, or elderly users of e-Health systems [9, 13]. The acceptance of VR/AR has recently been studied also in education [16] and tourism [37].

Turning to the acceptance of disembodied artificial companions in the real physical environment (RD area in Figure 1), studies dealing with the adoption and acceptance of chatbots by humans are very recent [50], especially when chatbots are used in the healthcare domain [34], despite the long history of studies on the connections between sentiment, emotions, and natural language interaction with conversational agents and chatbots, a topic also addressed by a dedicated task in the most recent SemEval competition [53]. One requirement for a conversational agent to be accepted by its users is its ability to adapt to them; the literature on conversation adaptation is also very recent and rather limited [28, 31]. Apart from Amazon Sumerian⁵, there seem to be no frameworks for integrating chatbots into VR/AR applications (AD and VD areas in Figure 1), which makes the RAC vision novel and original. Sumerian was launched in 2018 [21], and some authors criticize it for its lack of transparency and its questionable data policy, concluding that it is inappropriate for health-related applications [42]. Moreover, using applications developed with Amazon Sumerian requires a payment, which conflicts with the first RAC requirement.

⁵ https://aws.amazon.com/sumerian/

As far as the AE and VE areas in Figure 1 are concerned, many solid proposals to integrate robotics and VR/AR exist, but they do not take human-robot natural language interaction into account. Seminal works on the integration of robotics and VR/AR date back to the turn of the millennium [11, 24], and recent scientific proposals, some of which are close to market, span many different domains. Training is among the liveliest: in September 2019, Guerin and Hager published a patent application for a system and methods to create an immersive virtual environment using a VR system that receives parameters corresponding to a real-world robot; the robot may be simulated to create a virtual robot based on the received parameters, users may train the virtual robot, and the real-world robot may then be programmed based on that training.⁶ Just one month later, the Toyota Research Institute released a video showing how they use VR to allow human trainers to teach robots in-home tasks.⁷ The recent paper by Makhataeva and Varol [41] surveys about 100 AR applications in medical robotics, robot control and planning, human-robot interaction, and robot swarms from 2015 to 2019. Recent experiments demonstrate the value of using modern VR/AR technologies to mediate human-robot interactions [52] and to boost robot acceptance by users.

⁶ https://patentimages.storage.googleapis.com/e4/81/62/ca61f9719139a0/US20190283248A1.pdf

⁷ https://youtu.be/6IGCIjp2bn4

Finally, we mention the very active field of natural language human-robot interaction, positioned in the top-left RE area in Figure 1. Robots must understand natural language: robotic systems capable of executing complex commands issued in natural language and grounded into robotic percepts have been designed and implemented [26]; grammar-based approaches to human-robot interaction have been tested and compared with data-driven ones [7]; algorithms targeted at recognizing motion verbs such as “follow”, “meet”, and “avoid” have been proposed [32]. However, to be believable and pleasant companions, robots should also generate natural language. The reasons why so few current social robots make use of sophisticated generation techniques are discussed by Foster in her paper on “natural language generation for social robotics: opportunities and challenges” [23].

3 Engineering Reliable Interactions in RAC

The analysis of the state of the art shows that many building blocks needed to implement the vision put forward in Figure 1 are already available to the research community, at least as working prototypes. Nevertheless, the integrated RAC system has yet to be built.

We believe that many years will pass before we see anthropomorphic robots interacting with humans in natural language inside a virtual reality (the VE area in Figure 1, the most challenging one). However, some approaches may help the integrated RAC vision take off and allow its developers to engineer reliable interactions. In this section we review some of them.

The different combinations of physical and social interaction raise different research challenges and offer different opportunities to users. For example, a purely virtual experience could be well accepted by a young user but might not suit elderly people, who might benefit more from a communication-intensive, voice-based interaction in which words are carefully selected from a vocabulary that is familiar to that specific person and that (s)he can easily recognize and understand; for such a person, interaction with augmented reality should not be immersive.

To engineer reliable interactions in RAC, users should be profiled, the real environment where they operate should be recognized and abstracted into a model, and interactions should dynamically adapt to the user profile and to the real environment, both of which may change over time, while following patterns, or protocols, that are “safe by design”.

We do not address the profiling issue, which is already well covered in the literature [17, 51], and discuss how the reliability of interactions could be achieved by exploiting ontologies and runtime verification.

Making virtual and real environments semantically consistent via ontologies. To make interactions believable and suitable for the human user, even in the most challenging VE area, the immersive virtual environment should be consistent with the real world surrounding the user. We believe that ontologies may help achieve this goal. “In the context of computer and information sciences, an ontology defines a set of representational primitives with which to model a domain of knowledge or discourse. The representational primitives are typically classes (or sets), attributes (or properties), and relationships (or relations among class members).” [25].

Once the objects in the real environment have been detected and the corresponding bounding boxes created, the virtual scene should be composed on the fly⁸, in a (semi-)automatic way, by inserting virtual objects with the same shape, position, and spatial properties as the corresponding real ones. These virtual objects should be semantically consistent with the virtual scene. The scene is composed on the fly in order to handle objects that can move in the real scene, which requires an automatic procedure. A human operator can contribute to the creation of a virtual scene by working on the semantic consistency of the objects and on the kind of environment to be implemented. Most of the scene can be built offline to decrease the computational cost, so that only the objects that can change their position in the real scene are handled on the fly.

⁸ The bounding box is the volume that fully encloses the real object. A virtual scene is a digital counterpart of a real scene, where the objects are virtual and not real.

For example, if the virtual scene represents a woodland, and a real object has been detected with a parallelepipedal bounding box starting from the ground and 70 cm tall, it might be replaced in the virtual scene by a shrub; if the height is 1.70 m, it might be replaced by a tree. If the virtual scene represents a shore, the same two objects might be replaced by a chair and a beach umbrella, respectively, and if it is an urban environment, by a trash bin and a pole with a road sign.
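The substitution rules in this example, together with the split between an offline static scene and on-the-fly updates of movable objects described in the previous paragraph, can be sketched in a few lines of Python. This is a minimal sketch under explicit assumptions: the `Detected` record, the 1 m threshold separating “low” from “tall” objects, and the scene names are hypothetical, and in the envisioned system the rule table would be replaced by knowledge stored in an ontology.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Detected:
    """A detected real object (hypothetical fields, lengths in metres)."""
    object_id: str
    position: Tuple[float, float, float]  # centre of the bounding box
    height: float                         # height of the bounding box
    on_ground: bool                       # does the box start from the ground?
    movable: bool                         # can the object move in the real scene?


# Hypothetical threshold separating "low" from "tall" objects (not from the paper).
TALL_THRESHOLD_M = 1.0

# (low object, tall object) for each kind of virtual scene, following the examples in the text.
SUBSTITUTIONS: Dict[str, Tuple[str, str]] = {
    "woodland": ("shrub", "tree"),
    "shore": ("chair", "beach umbrella"),
    "urban": ("trash bin", "pole with road sign"),
}

Placed = Dict[str, Tuple[Optional[str], Tuple[float, float, float]]]


def substitute(scene: str, obj: Detected) -> Optional[str]:
    """Virtual counterpart of a ground-standing object, or None if no rule applies."""
    if scene not in SUBSTITUTIONS or not obj.on_ground:
        return None
    low, tall = SUBSTITUTIONS[scene]
    return tall if obj.height >= TALL_THRESHOLD_M else low


def build_static(scene: str, objects: List[Detected]) -> Placed:
    """Offline step: place once the objects that are not expected to move."""
    return {o.object_id: (substitute(scene, o), o.position) for o in objects if not o.movable}


def update_dynamic(scene: str, static: Placed, frame: List[Detected]) -> Placed:
    """Online step, run per frame: re-place only movable objects on top of the static part."""
    placed = dict(static)
    placed.update({o.object_id: (substitute(scene, o), o.position) for o in frame if o.movable})
    return placed
```

Only movable objects are re-classified at each frame, while the static part is reused as computed offline.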

In order to associate virtual objects with real objects in a semantically consistent way, we need to represent, via one or more ontologies, the knowledge about which virtual objects make sense in a virtual scene, also depending on their geometric features. To the best of our knowledge, the connections between VR/AR and ontologies have been explored only to a very limited extent, and the idea of exploiting ontologies in VR/AR to make virtual and real objects consistent is original. The most similar proposal dates back to 2011 and applies to 3D virtual worlds; it aims at labelling things with tags like “chair” and “table” and associating functional specifications with them to enable computers to reason about them, but it does not foresee any real-to-virtual semantic alignment [18].

Once ontologies enter the RAC, they can also serve as a means to ensure semantic interoperability among different users and to ease natural language interaction. This would be a more standard way to use ontologies in RAC, since the relationships between natural language processing and ontologies have been studied since the advent of the semantic web [43, 44, 56].

The exploitation of ontologies makes both physical and social interaction reliable because, as observed by Uschold and Gruninger more than 20 years ago, “a formal representation makes possible the automation of consistency checking resulting in more reliable software” [55].

As an example, by writing disjointness axioms in the ontology, it would be possible to formally ensure that a physical object is never rendered with a virtual appearance that may hurt a user with a given profile.
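In OWL, such a constraint would be stated with a `DisjointClasses` axiom and checked by a reasoner. The Python sketch below only mimics that consistency check on a hypothetical mini-ontology (all class names, including `SafeForArachnophobes`, are illustrative): the types asserted for a rendered object are closed under the subclass hierarchy, and rendering is refused if the closure contains two classes declared disjoint.

```python
from typing import Dict, FrozenSet, Iterable, Set

# Hypothetical mini-ontology: each class maps to its direct superclasses.
SUBCLASS_OF: Dict[str, Set[str]] = {
    "Spider": {"Arachnid"},
    "Arachnid": {"Animal"},
    "Animal": set(),
    "Shrub": {"Plant"},
    "Tree": {"Plant"},
    "Plant": set(),
    "SafeForArachnophobes": set(),
}

# Disjointness axioms: no individual may be an instance of both classes of a pair.
DISJOINT: Set[FrozenSet[str]] = {frozenset({"Arachnid", "SafeForArachnophobes"})}


def ancestors(cls: str) -> Set[str]:
    """The class itself plus all its transitive superclasses."""
    seen: Set[str] = set()
    stack = [cls]
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(SUBCLASS_OF.get(current, set()))
    return seen


def consistent(asserted: Iterable[str]) -> bool:
    """True if the classes asserted for one individual violate no disjointness axiom."""
    closure: Set[str] = set()
    for cls in asserted:
        closure |= ancestors(cls)
    return not any(pair <= closure for pair in DISJOINT)


def may_render(appearance: str, profile_requirements: Iterable[str]) -> bool:
    """Can a real object take this virtual appearance, given the classes the user profile imposes?"""
    return consistent([appearance, *profile_requirements])
```

For an arachnophobic user whose profile requires every rendered object to be `SafeForArachnophobes`, a spider-shaped appearance is rejected, while a shrub is accepted.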

Similarly, ontologies might ensure that the words selected by the artificial companion when generating sentences are compliant with the user’s profile, and that the words uttered by the user are properly disambiguated. For example, a user with a young profile might use “paw” as a slang acronym for “Parents Are Watching”, while a cat lover would use it with its most common meaning.
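A profile-dependent lexicon is one simple way to picture both checks. The sketch below is hypothetical throughout: the profile labels, the sense glosses, and the per-user vocabulary stand in for what an ontology and the user profile would provide, and they do not correspond to any existing API.

```python
import re
from typing import Dict, Optional, Set

# Hypothetical lexicon: word -> {profile -> sense}; "default" applies when no profile-specific sense exists.
SENSES: Dict[str, Dict[str, str]] = {
    "paw": {
        "young": "Parents Are Watching (slang acronym)",
        "default": "foot of an animal",
    },
}


def disambiguate(word: str, profile: str) -> Optional[str]:
    """Sense of a word uttered by a user with the given profile, or None if the word is unknown."""
    senses = SENSES.get(word.lower())
    if senses is None:
        return None
    return senses.get(profile, senses.get("default"))


def compliant(sentence: str, vocabulary: Set[str]) -> bool:
    """True if every word of a generated sentence belongs to the user's familiar vocabulary."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return all(word in vocabulary for word in words)
```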

By avoiding misunderstandings, as well as words or virtual shapes unsuitable for some categories of users, the reliability of interactions would increase.

Verifying social and physical interactions at runtime. The other approach that we envision to make interactions in RAC more reliable is runtime verification.

Runtime verification is a formal method aimed at checking the current run of a system. It takes as input a data stream of time-stamped sensor or software values and a requirement to verify, expressed as a temporal logic formula, most commonly in Linear Temporal Logic (LTL) [8], Mission-time Linear Temporal Logic (MLTL) [38], or First-Order Linear Temporal Logic [5], or in a trace-based formalism like Trace Expressions [3]; the output is a stream of tuples, each containing a time stamp and the verdict resulting from the evaluation of the requirement over the data stream starting from that time step (true, false, or unknown when a three-valued logic is adopted).
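To make the input and output of a monitor concrete, the following Python sketch implements two hand-written monitors, for “always p” and “eventually p”, over a stream of time-stamped samples. It is a simplification, not a general LTL or Trace Expressions engine: each verdict refers to the prefix observed up to that time stamp, in the spirit of the three-valued semantics of [8], and the sample format and the temperature property used below are assumptions made for illustration.

```python
from enum import Enum
from typing import Callable, Dict, Iterable, Iterator, Tuple


class Verdict(Enum):
    TRUE = "true"
    FALSE = "false"
    UNKNOWN = "unknown"


Sample = Tuple[float, Dict[str, float]]  # (time stamp, sensor or software values)
Prop = Callable[[Dict[str, float]], bool]


def monitor_always(stream: Iterable[Sample], prop: Prop) -> Iterator[Tuple[float, Verdict]]:
    """Verdicts for 'always prop': once violated, no continuation can make it true."""
    violated = False
    for ts, values in stream:
        violated = violated or not prop(values)
        yield ts, Verdict.FALSE if violated else Verdict.UNKNOWN


def monitor_eventually(stream: Iterable[Sample], prop: Prop) -> Iterator[Tuple[float, Verdict]]:
    """Verdicts for 'eventually prop': once satisfied, no continuation can make it false."""
    satisfied = False
    for ts, values in stream:
        satisfied = satisfied or prop(values)
        yield ts, Verdict.TRUE if satisfied else Verdict.UNKNOWN
```

With the stream `[(0.0, {"temp": 20.0}), (1.0, {"temp": 35.0}), (2.0, {"temp": 21.0})]`, the monitor for “always temp < 30” outputs unknown, false, false: once the requirement is violated, no future sample can make it true again.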

The runtime verification formalism and engine we have been developing over the last seven years, Trace Expressions, has proven useful both to monitor agent interaction protocols (AIPs [19, 48]) taking place in purely software multiagent systems [4, 20], also when agent self-adaptation is a requirement [2], and to verify event patterns taking place in physical environments like the Internet of Things [35, 36]. The Runtime Monitoring Language, RML⁹, is a synthesis of these years of research: it decouples monitoring from instrumentation by allowing users to write specifications and synthesize monitors from them, independently of the system under scrutiny and its instrumentation.

⁹ https://rmlatdibris.github.io/

RML would be suitable to monitor physical interactions in any portion of the bidimensional RAC space. For example, inspired by the current lockdown experience, we might require that no more than 2 human users be in the same room at the same time, that they keep a distance of 1 m, and that no user stay in the room for more than 45 minutes. If users were equipped with sensors suitable for indoor localization, RML could monitor the balance of “user X enters the room at time T1” and “user X leaves the room at time T2” events and check that the number of users in the room and the time spent there meet the lockdown rules. A further continuous check, that two users in the room keep the mandatory distance, could also be easily implemented.
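The lockdown rules above are simple enough to be sketched as an ordinary event handler. The Python code below is not RML and does not reproduce its syntax; it is a hypothetical monitor, with invented event kinds (`enter`, `leave`, `move`), times in minutes and positions in metres, that records a violation whenever the occupancy, distance, or stay-time rule is broken. For simplicity, the 45-minute limit is only checked when a user leaves; a deployed monitor would also need a timer to flag users who never leave.

```python
import math
from dataclasses import dataclass, field
from itertools import combinations
from typing import Dict, List, Optional, Tuple

# Lockdown rules from the example in the text.
MAX_OCCUPANTS = 2
MIN_DISTANCE_M = 1.0
MAX_STAY_MIN = 45.0

Point = Tuple[float, float]


@dataclass
class RoomMonitor:
    """Plain-Python sketch of the room rules; event kinds and fields are hypothetical."""
    entered_at: Dict[str, float] = field(default_factory=dict)  # user -> entry time (minutes)
    position: Dict[str, Point] = field(default_factory=dict)    # user -> last known (x, y) in metres
    violations: List[Tuple[float, str]] = field(default_factory=list)

    def on_event(self, time: float, kind: str, user: str, pos: Optional[Point] = None) -> None:
        if kind == "enter":
            self.entered_at[user] = time
            if len(self.entered_at) > MAX_OCCUPANTS:
                self.violations.append((time, f"{len(self.entered_at)} users in the room"))
        elif kind == "leave":
            start = self.entered_at.pop(user, None)
            self.position.pop(user, None)
            # Stay-time rule, checked at exit only (see the note above).
            if start is not None and time - start > MAX_STAY_MIN:
                self.violations.append((time, f"{user} stayed {time - start:g} minutes"))
        # "enter" and "move" events may carry a new position for a user in the room.
        if pos is not None and user in self.entered_at:
            self.position[user] = pos
        self._check_distance(time)

    def _check_distance(self, time: float) -> None:
        """Continuous check: every pair of users in the room keeps the minimum distance."""
        for (u, p), (v, q) in combinations(sorted(self.position.items()), 2):
            if math.dist(p, q) < MIN_DISTANCE_M:
                self.violations.append((time, f"{u} and {v} closer than {MIN_DISTANCE_M} m"))
```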

What would definitely be harder, but worth exploring, is the runtime monitoring of natural language interactions. Let us consider the following situation: Alice is interacting with her artificial companion $c$, which is aware that Alice’s profile is “very polite”. The artificial companion expects that after a greeting, Alice answers with a greeting, and after the accomplishment of some task, Alice thanks it. There are many ways Alice can greet her companion or express her gratitude, so there are many different concrete utterances that should be tagged as “greeting” or “gratitude”. It would be up to some sophisticated natural language processing component to tag utterances in the right way. Assuming that utterances are managed as events, each tagged with its event type ($\mathit{greeting}$, $\mathit{gratitude}$), the interaction protocol with very polite users might require that $T = \mathit{msg}(c, alice, \mathit{greeting}) : \mathit{msg}(alice, c, \mathit{greeting}) : T'$ and $T' = (\mathit{msg}(c, alice, \mathit{done}) : \mathit{msg}(alice, c, \mathit{gratitude}) : T') \lor \epsilon$, where $\mathit{msg}(s, r, t)$ means that $s$ uttered a sentence tagged as $t$ to $r$, $\mathit{done}$ tags the companion’s announcement that a task has been accomplished, and $:$ is the prefix operator meaning that after one event, another must take place. $\epsilon$ represents the end of the interaction.
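Once utterances are tagged, the protocol for very polite users can be checked with a small finite-state machine. The sketch below is a hypothetical Python rendering, not RML: the roles `companion` and `user`, the `done` tag for the companion announcing a completed task, and the verdict strings are assumptions. It is deliberately strict, so any utterance outside the protocol (small talk included) counts as a violation; a real specification would allow such events to be interleaved.

```python
from typing import Dict, Iterable, Tuple

Event = Tuple[str, str, str]  # (sender, receiver, tag), with roles "companion" and "user"

# Protocol for "very polite" users: state -> (only event accepted, next state).
TRANSITIONS: Dict[str, Tuple[Event, str]] = {
    "start": (("companion", "user", "greeting"), "greeted"),
    "greeted": (("user", "companion", "greeting"), "idle"),
    "idle": (("companion", "user", "done"), "awaiting_thanks"),
    "awaiting_thanks": (("user", "companion", "gratitude"), "idle"),
}
FINAL_STATES = {"idle"}  # the interaction may end here


def check(events: Iterable[Event]) -> str:
    """'ok' if the trace is a complete protocol run, 'pending' if it may still become one."""
    state = "start"
    for i, event in enumerate(events):
        expected, next_state = TRANSITIONS[state]
        if event != expected:
            return f"violation at event {i}: expected {expected}, got {event}"
        state = next_state
    return "ok" if state in FINAL_STATES else "pending"
```

For instance, if the companion reports two completed tasks in a row without Alice thanking it in between, `check` reports a violation at the second report.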

Being able to correctly tag utterances on the fly would allow RML to detect violations of the protocol. For example, Alice might not thank her companion for some task it carried out. While this may be due to many reasons, not necessarily serious ones, it might also signal that something is wrong with Alice’s mood, and might require some special action to be taken or some ad hoc sub-protocol to be triggered.

The idea that different sentences can be collapsed into the same category, and hence tagged with the same “event type”, is already supported by many languages and platforms for building chatbots. For example, AIML, the Artificial Intelligence Markup Language¹⁰, provides the srai mechanism for this purpose, and IBM Watson Assistant¹¹ achieves the same goal via the notion of “intent”, exemplified by a list of sentences.

¹⁰ http://www.aiml.foundation/doc.html

¹¹ https://www.ibm.com/cloud/watson-assistant/
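The effect of srai or of intents, mapping many surface forms onto one event type, can be approximated for illustration with plain phrase matching. The Python sketch below does not use the AIML or Watson Assistant APIs; the intent names and example phrases are hypothetical, and production systems would rely on trained intent classifiers rather than on exact phrase matching.

```python
import re
from typing import Dict, List, Optional

# Hypothetical intents, each exemplified by a few phrases (cf. Watson "intents" or AIML srai targets).
INTENT_EXAMPLES: Dict[str, List[str]] = {
    "greeting": ["hello", "hi", "good morning", "good evening"],
    "gratitude": ["thank you", "thanks", "much obliged"],
}


def normalize(text: str) -> str:
    """Lower-case the text and keep only word tokens, separated by single spaces."""
    return " ".join(re.findall(r"[a-z']+", text.lower()))


def tag(utterance: str) -> Optional[str]:
    """Event type of the first intent whose example phrase occurs as whole words, else None."""
    padded = f" {normalize(utterance)} "
    for intent, phrases in INTENT_EXAMPLES.items():
        if any(f" {phrase} " in padded for phrase in phrases):
            return intent
    return None
```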

At the current state of the art, runtime verification of natural language interactions is out of reach. However, by integrating existing approaches to chatbot development with RML, we might be able to take a step forward in this direction.

As a final remark, although the integrated RAC vision is still far from being realized, we believe that it represents a promising direction towards satisfying anywhere-anytime social inclusion needs. Many ethical, social, psychological, and medical aspects, besides the technical ones, would have to be addressed if RAC became available.

As part of our near-future work, we are going to address some of the technical aspects: on the one hand, we will work on the alignment of virtual and real objects via ontologies; on the other hand, we will explore the possibility of integrating runtime verification mechanisms into human-chatbot interactions.

Acknowledgements. We gratefully acknowledge Simone Ancona for the drawings of Figure 1.

References


- [2] Davide Ancona, Daniela Briola, Angelo Ferrando & Viviana Mascardi (2015): Global Protocols as First Class Entities for Self-Adaptive Agents. In Gerhard Weiss, Pinar Yolum, Rafael H. Bordini & Edith Elkind, editors: Proceedings of the 2015 International Conference on Autonomous Agents and Multiagent Systems, AAMAS 2015, Istanbul, Turkey, May 4-8, 2015, ACM, pp. 1019–1029. Available at http://dl.acm.org/citation.cfm?id=2773282.

- [3] Davide Ancona, Angelo Ferrando & Viviana Mascardi (2016): Comparing Trace Expressions and Linear Temporal Logic for Runtime Verification. In Erika Ábrahám, Marcello M. Bonsangue & Einar Broch Johnsen, editors: Theory and Practice of Formal Methods - Essays Dedicated to Frank de Boer on the Occasion of His 60th Birthday, Lecture Notes in Computer Science 9660, Springer, pp. 47–64, 10.1007/978-3-319-30734-3_6.

- [4] Davide Ancona, Angelo Ferrando & Viviana Mascardi (2017): Parametric Runtime Verification of Multiagent Systems. In Kate Larson, Michael Winikoff, Sanmay Das & Edmund H. Durfee, editors: Proceedings of the 16th Conference on Autonomous Agents and MultiAgent Systems, AAMAS 2017, São Paulo, Brazil, May 8-12, 2017, ACM, pp. 1457–1459. Available at http://dl.acm.org/citation.cfm?id=3091328.

- [5] David A. Basin, Felix Klaedtke, Samuel Müller & Birgit Pfitzmann (2008): Runtime Monitoring of Metric First-order Temporal Properties. In: Proceedings of 28th IARCS Conference on Foundations of Software Technology and Theoretical Computer Science (FSTTCS’08), pp. 49–60, 10.4230/LIPIcs.FSTTCS.2008.1740.

- [6] Chiara Bassano, Fabio Solari & Manuela Chessa (2018): Studying Natural Human-computer Interaction in Immersive Virtual Reality: A Comparison between Actions in the Peripersonal and in the Near-action Space. In Paul Richard, Manuela Chessa & José Braz, editors: Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2018) - Volume 2: HUCAPP, Funchal, Madeira, Portugal, January 27-29, 2018, SciTePress, pp. 108–115, 10.5220/0006622701080115.

- [7] Emanuele Bastianelli, Giuseppe Castellucci, Danilo Croce, Roberto Basili & Daniele Nardi (2014): Effective and Robust Natural Language Understanding for Human-Robot Interaction. In Torsten Schaub, Gerhard Friedrich & Barry O’Sullivan, editors: ECAI 2014 - 21st European Conference on Artificial Intelligence, 18-22 August 2014, Prague, Czech Republic - Including Prestigious Applications of Intelligent Systems (PAIS 2014), Frontiers in Artificial Intelligence and Applications 263, IOS Press, pp. 57–62, 10.3233/978-1-61499-419-0-57.

- [8] Andreas Bauer, Martin Leucker & Christian Schallhart (2011): Runtime Verification for LTL and TLTL. ACM Trans. Softw. Eng. Methodol. 20(4), pp. 14:1–14:64, 10.1145/2000799.2000800.

- [9] Cristina Botella, Ernestina Etchemendy, Diana Castilla, Rosa María Baños, Azucena García-Palacios, Soledad Quero, Mariano Alcañiz Raya & José Antonio Lozano (2009): An e-Health System for the Elderly (Butler Project): A Pilot Study on Acceptance and Satisfaction. Cyberpsychology Behav. Soc. Netw. 12(3), pp. 255–262, 10.1089/cpb.2008.0325.

- [10] British Standards Institution (BSI) (2016): BS 8611 – Robots and Robotic Devices — Guide to the ethical design and application. Available at https://shop.bsigroup.com/ProductDetail/?pid=000000000030320089.

- [11] Grigore C. Burdea (1999): Invited review: the synergy between virtual reality and robotics. IEEE Trans. Robotics Autom. 15(3), pp. 400–410, 10.1109/70.768174.

- [12] Andrea Canessa, Paolo Casu, Fabio Solari & Manuela Chessa (2019): Comparing Real Walking in Immersive Virtual Reality and in Physical World using Gait Analysis. In: Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications - Volume 2: HUCAPP, INSTICC, SciTePress, pp. 121–128, 10.5220/0007380901210128.

- [13] Manuela Chessa, Chiara Bassano, Elisa Gusai, Alice E. Martis & Fabio Solari (2018): Human-Computer Interaction Approaches for the Assessment and the Practice of the Cognitive Capabilities of Elderly People. In Laura Leal-Taixé & Stefan Roth, editors: Computer Vision - ECCV 2018 Workshops - Munich, Germany, September 8-14, 2018, Proceedings, Part VI, Lecture Notes in Computer Science 11134, Springer, pp. 66–81, 10.1007/978-3-030-11024-6_5.

- [14] Manuela Chessa, Guido Maiello, Alessia Borsari & Peter J. Bex (2019): The Perceptual Quality of the Oculus Rift for Immersive Virtual Reality. Human-Computer Interaction 34(1), pp. 51–82, 10.1080/07370024.2016.1243478.

- [15] Rosa Maria Esteves Moreira da Costa & Luís Alfredo Vidal de Carvalho (2004): The acceptance of virtual reality devices for cognitive rehabilitation: a report of positive results with schizophrenia. Comput. Methods Programs Biomed. 73(3), pp. 173–182, 10.1016/S0169-2607(03)00066-X.

- [16] Che Samihah Che Dalim, Hoshang Kolivand, Huda Kadhim, Mohd Shahrizal Sunar & Mark Billinghurst (2017): Factors Influencing the Acceptance of Augmented Reality in Education: A Review of the Literature. J. Comput. Sci. 13(11), pp. 581–589, 10.3844/jcssp.2017.581.589.

- [17] Christopher Ifeanyi Eke, Azah Anir Norman, Liyana Shuib & Henry Friday Nweke (2019): A survey of user profiling: state-of-the-art, challenges, and solutions. IEEE Access 7, pp. 144907–144924, 10.1109/ACCESS.2019.2944243.

- [18] Joshua Eno & Craig W. Thompson (2011): Virtual and Real-World Ontology Services. IEEE Internet Comput. 15(5), pp. 46–52, 10.1109/MIC.2011.75.

- [19] Amal El Fallah-Seghrouchni, Serge Haddad & Hamza Mazouzi (1999): Protocol Engineering for Multi-agent Interaction. In Francisco J. Garijo & Magnus Boman, editors: MultiAgent System Engineering, 9th European Workshop on Modelling Autonomous Agents in a Multi-Agent World, MAAMAW ’99, Valencia, Spain, June 30 - July 2, 1999, Proceedings, Lecture Notes in Computer Science 1647, Springer, pp. 89–101, 10.1007/3-540-48437-X_8.

- [20] Angelo Ferrando, Davide Ancona & Viviana Mascardi (2017): Decentralizing MAS Monitoring with DecAMon. In Kate Larson, Michael Winikoff, Sanmay Das & Edmund H. Durfee, editors: Proceedings of the 16th Conference on Autonomous Agents and MultiAgent Systems, AAMAS 2017, São Paulo, Brazil, May 8-12, 2017, ACM, pp. 239–248. Available at http://dl.acm.org/citation.cfm?id=3091164.

- [21] Kirk Fiedler (2019): Virtual Reality in the Cloud: Amazon Sumerian as a Tool and Topic. In: 25th Americas Conference on Information Systems, AMCIS 2019, Cancún, Mexico, August 15-17, 2019, Association for Information Systems. Available at https://aisel.aisnet.org/amcis2019/treo/treos/12.

- [22] Michael Fisher, Viviana Mascardi, Kristin Yvonne Rozier, Bernd-Holger Schlingloff, Michael Winikoff & Neil Yorke-Smith (2020): Towards a Framework for Certification of Reliable Autonomous Systems. CoRR abs/2001.09124. Available at https://arxiv.org/abs/2001.09124.

- [23] Mary Ellen Foster (2019): Natural language generation for social robotics: opportunities and challenges. Philosophical Transactions of the Royal Society B 374(1771), p. 20180027, 10.1098/rstb.2018.0027.

- [24] Eckhard Freund & Jürgen Rossmann (1999): Projective virtual reality: bridging the gap between virtual reality and robotics. IEEE Trans. Robotics Autom. 15(3), pp. 411–422, 10.1109/70.768175.

- [25] Tom Gruber (2009): Definition of Ontology. In Ling Liu & M. Tamer Özsu, editors: Encyclopedia of Database Systems, Springer-Verlag, pp. 1959–1959. Available at https://tomgruber.org/writing/ontology-definition-2007.htm, 10.1016/0004-3702(80)90011-9.

- [26] Sergio Guadarrama, Lorenzo Riano, Dave Golland, Daniel Göhring, Yangqing Jia, Dan Klein, Pieter Abbeel & Trevor Darrell (2013): Grounding spatial relations for human-robot interaction. In: 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, November 3-7, 2013, IEEE, pp. 1640–1647, 10.1109/IROS.2013.6696569.

- [27] Florian Heinrich, Kai Bornemann, Kai Lawonn & Christian Hansen (2019): Depth Perception in Projective Augmented Reality: An Evaluation of Advanced Visualization Techniques. In: 25th ACM Symposium on Virtual Reality Software and Technology, VRST ’19, Association for Computing Machinery, New York, NY, USA, 10.1145/3359996.3364245.

- [28] Evelien Heyselaar & Tibor Bosse (2019): Using Theory of Mind to Assess Users’ Sense of Agency in Social Chatbots. In Asbjørn Følstad, Theo B. Araujo, Symeon Papadopoulos, Effie Lai-Chong Law, Ole-Christoffer Granmo, Ewa Luger & Petter Bae Brandtzæg, editors: Chatbot Research and Design - Third International Workshop, CONVERSATIONS 2019, Amsterdam, The Netherlands, November 19-20, 2019, Revised Selected Papers, Lecture Notes in Computer Science 11970, Springer, pp. 158–169, 10.1007/978-3-030-39540-7_11.

- [29] Institute of Electrical and Electronics Engineers (2017): P7007 – Ontological Standard for Ethically Driven Robotics and Automation Systems. Available at https://standards.ieee.org/project/7007.html.

- [30] Mathias Klinghammer, Immo Schütz, Gunnar Blohm & Katja Fiehler (2016): Allocentric information is used for memory-guided reaching in depth: A virtual reality study. Vision Research 129, pp. 13–24, 10.1016/j.visres.2016.10.004.

- [31] Lorenz Cuno Klopfenstein, Saverio Delpriori & Alessio Ricci (2018): Adapting a Conversational Text Generator for Online Chatbot Messaging. In Svetlana S. Bodrunova, Olessia Koltsova, Asbjørn Følstad, Harry Halpin, Polina Kolozaridi, Leonid Yuldashev, Anna S. Smoliarova & Heiko Niedermayer, editors: Internet Science - INSCI 2018 International Workshops, St. Petersburg, Russia, October 24-26, 2018, Revised Selected Papers, Lecture Notes in Computer Science 11551, Springer, pp. 87–99, 10.1007/978-3-030-17705-8_8.

- [32] Thomas Kollar, Stefanie Tellex, Deb Roy & Nicholas Roy (2010): Grounding Verbs of Motion in Natural Language Commands to Robots. In Oussama Khatib, Vijay Kumar & Gaurav S. Sukhatme, editors: Experimental Robotics - The 12th International Symposium on Experimental Robotics, ISER 2010, December 18-21, 2010, New Delhi and Agra, India, Springer Tracts in Advanced Robotics 79, Springer, pp. 31–47, 10.1007/978-3-642-28572-1_3.

- [33] Ernst Kruijff, J. Edward Swan II & Steven Feiner (2010): Perceptual issues in augmented reality revisited. In: 9th IEEE International Symposium on Mixed and Augmented Reality, ISMAR 2010, Seoul, Korea, 13-16 October 2010, IEEE Computer Society, pp. 3–12, 10.1109/ISMAR.2010.5643530.

- [34] Sven Laumer, Christian Maier & Fabian Tobias Gubler (2019): Chatbot Acceptance in Healthcare: Explaining User Adoption of Conversational Agents for Disease Diagnosis. In Jan vom Brocke, Shirley Gregor & Oliver Müller, editors: 27th European Conference on Information Systems - Information Systems for a Sharing Society, ECIS 2019, Stockholm and Uppsala, Sweden, June 8-14, 2019.

- [35] Maurizio Leotta, Davide Ancona, Luca Franceschini, Dario Olianas, Marina Ribaudo & Filippo Ricca (2018): Towards a Runtime Verification Approach for Internet of Things Systems. In Cesare Pautasso, Fernando Sánchez-Figueroa, Kari Systä & Juan Manuel Murillo Rodriguez, editors: Current Trends in Web Engineering - ICWE 2018 International Workshops, MATWEP, EnWot, KD-WEB, WEOD, TourismKG, Cáceres, Spain, June 5, 2018, Revised Selected Papers, Lecture Notes in Computer Science 11153, Springer, pp. 83–96, 10.1007/978-3-030-03056-8_8.

- [36] Maurizio Leotta, Diego Clerissi, Luca Franceschini, Dario Olianas, Davide Ancona, Filippo Ricca & Marina Ribaudo (2019): Comparing Testing and Runtime Verification of IoT Systems: A Preliminary Evaluation based on a Case Study. In Ernesto Damiani, George Spanoudakis & Leszek A. Maciaszek, editors: Proceedings of the 14th International Conference on Evaluation of Novel Approaches to Software Engineering, ENASE 2019, Heraklion, Crete, Greece, May 4-5, 2019, SciTePress, pp. 434–441, 10.5220/0007745604340441.

- [37] M. Leue, T. H. Jung et al. (2014): A theoretical model of augmented reality acceptance. E-review of Tourism Research 5, 10.1080/13683500.2015.1070801.

- [38] Jianwen Li, Moshe Vardi & Kristin Yvonne Rozier (2019): Satisfiability Checking for Mission-Time LTL. In: Proceedings of 31st International Conference on Computer Aided Verification (CAV’19), LNCS, Springer, 10.1007/978-3-030-25543-5_1.

- [39] Martin Luboschik, Philip Berger & Oliver Staadt (2016): On Spatial Perception Issues In Augmented Reality Based Immersive Analytics. In: Proceedings of the 2016 ACM Companion on Interactive Surfaces and Spaces, ISS ’16 Companion, Association for Computing Machinery, New York, NY, USA, pp. 47–53, 10.1145/3009939.3009947.

- [40] Guido Maiello, Manuela Chessa, Fabio Solari & Peter J. Bex (2015): The (in)effectiveness of simulated blur for depth perception in naturalistic images. PLoS ONE 10(10), 10.1371/journal.pone.0140230.

- [41] Zhanat Makhataeva & Huseyin Atakan Varol (2020): Augmented Reality for Robotics: A Review. Robotics 9(2), 10.3390/robotics9020021.

- [42] Sebastian von Mammen, Andreas Müller, Marc Erich Latoschik, Mario Botsch, Kirsten Brukamp, Carsten Schröder & Michel Wacker (2019): VIA VR: A Technology Platform for Virtual Adventures for Healthcare and Well-Being. In Fotis Liarokapis, editor: 11th International Conference on Virtual Worlds and Games for Serious Applications, VS-Games 2019, Vienna, Austria, September 4-6, 2019, IEEE, pp. 1–2, 10.1109/VS-Games.2019.8864580.

- [43] Diana Maynard, Kalina Bontcheva & Isabelle Augenstein (2016): Natural Language Processing for the Semantic Web. Synthesis Lectures on the Semantic Web: Theory and Technology, Morgan & Claypool Publishers, 10.2200/S00741ED1V01Y201611WBE015.

- [44] Chris Mellish & Xiantang Sun (2006): The Semantic Web as a Linguistic Resource: Opportunities for Natural Language Generation. Knowl. Based Syst. 19(5), pp. 298–303, 10.1016/j.knosys.2005.11.011.

- [45] Merriam-Webster Dictionary (2020): Definition of Interaction. Available at https://www.merriam-webster.com/dictionary/interaction.

- [46] Paul Milgram, Haruo Takemura, Akira Utsumi & Fumio Kishino (1994): Augmented Reality: A Class of Displays on the Reality-Virtuality Continuum. In: Proceedings of Telemanipulator and Telepresence Technologies, 2351, SPIE, pp. 282–292, 10.1117/12.197321.

- [47] Joschka Mütterlein & Thomas Hess (2017): Immersion, Presence, Interactivity: Towards a Joint Understanding of Factors Influencing Virtual Reality Acceptance and Use. In: 23rd Americas Conference on Information Systems, AMCIS 2017, Boston, MA, USA, August 10-12, 2017, Association for Information Systems. Available at http://aisel.aisnet.org/amcis2017/AdoptionIT/Presentations/17.

- [48] James Odell, H. Van Dyke Parunak & Bernhard Bauer (2000): Representing Agent Interaction Protocols in UML. In Paolo Ciancarini & Michael J. Wooldridge, editors: Agent-Oriented Software Engineering, First International Workshop, AOSE 2000, Limerick, Ireland, June 10, 2000, Revised Papers, Lecture Notes in Computer Science 1957, Springer, pp. 121–140, 10.1007/3-540-44564-1_8.

- [49] Grant Pointon, Chelsey Thompson, Sarah Creem-Regehr, Jeanine Stefanucci & Bobby Bodenheimer (2018): Affordances as a measure of perceptual fidelity in augmented reality. In: Proceedings of the IEEE VR 2018 Workshop on Perceptual and Cognitive Issues in AR (PERCAR), pp. 1–6.

- [50] Tim Rietz, Ivo Benke & Alexander Maedche (2019): The Impact of Anthropomorphic and Functional Chatbot Design Features in Enterprise Collaboration Systems on User Acceptance. In Thomas Ludwig & Volkmar Pipek, editors: Human Practice. Digital Ecologies. Our Future. 14. Internationale Tagung Wirtschaftsinformatik (WI 2019), February 24-27, 2019, Siegen, Germany, University of Siegen, Germany / AISeL, pp. 1642–1656. Available at https://aisel.aisnet.org/wi2019/track13/papers/7.

- [51] Silvia Rossi, François Ferland & Adriana Tapus (2017): User profiling and behavioral adaptation for HRI: A survey. Pattern Recognit. Lett. 99, pp. 3–12, 10.1016/j.patrec.2017.06.002.

- [52] Daniel Szafir (2019): Mediating Human-Robot Interactions with Virtual, Augmented, and Mixed Reality. In Jessie Y. C. Chen & Gino Fragomeni, editors: Virtual, Augmented and Mixed Reality. Applications and Case Studies - 11th International Conference, VAMR 2019, Held as Part of the 21st HCI International Conference, HCII 2019, Orlando, FL, USA, July 26-31, 2019, Proceedings, Part II, Lecture Notes in Computer Science 11575, Springer, pp. 124–149, 10.1007/978-3-030-21565-1_9.

- [53] Shabnam Tafreshi & Mona T. Diab (2019): GWU NLP Lab at SemEval-2019 Task 3: EmoContext: Effectiveness of Contextual Information in Models for Emotion Detection in Sentence-level at Multi-genre Corpus. In Jonathan May, Ekaterina Shutova, Aurélie Herbelot, Xiaodan Zhu, Marianna Apidianaki & Saif M. Mohammad, editors: Proceedings of the 13th International Workshop on Semantic Evaluation, SemEval@NAACL-HLT 2019, Minneapolis, MN, USA, June 6-7, 2019, Association for Computational Linguistics, pp. 230–235, 10.18653/v1/s19-2038.

- [54] The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, editor (2019): Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems. IEEE. Available at https://standards.ieee.org/content/ieee-standards/en/industry-connections/ec/autonomous-systems.html.

- [55] Mike Uschold & Michael Gruninger (1996): Ontologies: Principles, methods and applications. The Knowledge Engineering Review 11(2), pp. 93–136, 10.1017/S0269888900007797.

- [56] Yorick Wilks & Christopher Brewster (2009): Natural Language Processing as a Foundation of the Semantic Web. Found. Trends Web Sci. 1(3-4), pp. 199–327, 10.1561/1800000002.

- [57] Alan F. T. Winfield, Katina Michael, Jeremy Pitt & Vanessa Evers (2019): Machine Ethics: The Design and Governance of Ethical AI and Autonomous Systems. Proceedings of the IEEE 107(3), pp. 509–517, 10.1109/JPROC.2019.2900622.