End-to-end Kernel Learning

via Generative Random Fourier Features

Abstract

Random Fourier features (RFFs) provide a promising way for kernel learning in a spectral case. Current RFFs-based kernel learning methods usually work in a two-stage way. In the first-stage process, learning an optimal feature map is often formulated as a target alignment problem, which aims to align the learned kernel with a pre-defined target kernel (usually the ideal kernel). In the second-stage process, a linear learner is conducted with respect to the mapped random features. Nevertheless, the pre-defined kernel in target alignment is not necessarily optimal for the generalization of the linear learner. Instead, in this paper, we consider a one-stage process that incorporates the kernel learning and linear learner into a unifying framework. To be specific, a generative network via RFFs is devised to implicitly learn the kernel, followed by a linear classifier parameterized as a full-connected layer. Then the generative network and the classifier are jointly trained by solving an empirical risk minimization (ERM) problem to reach a one-stage solution. This end-to-end scheme naturally allows deeper features, in correspondence to a multi-layer structure, and shows superior generalization performance over the classical two-stage, RFFs-based methods in real-world classification tasks. Moreover, inspired by the randomized resampling mechanism of the proposed method, its enhanced adversarial robustness is investigated and experimentally verified.

1 Introduction

Kernel methods reveal the non-linear property hidden in data and have been extensively studied in recent decades [1, 2]. The selection of the kernel still remains a non-trivial problem, which requires prior knowledge and directly affects the algorithm performance. Hence, learning a suitable kernel from data has been a universal choice [3, 4]. In recent decades, a series of researches have been devoted to exploit the random Fourier features (RFFs) [5] in the kernel learning task [6, 7].

The pioneering work [5] of RFFs constructed an explicit feature map to approximate a positive definite, shift-invariant kernel. The feature map maps the input point to the so-called random Fourier features , where the associated weights are sampled from the spectral distribution of the kernel function. A linear learner is then conducted on the mapped features to categorize them. Intuitively, RFFs allow us to learn the weights in a spectral sense, i.e., to learn a kernel [6, 7]. To be specific, to involve the data information, typical approaches [6, 7] proposed to solve the following target alignment problem [8] to learn the weights ,

| (1) |

where and denote the -th and the -th training samples respectively. The inner product of the two mapped features denotes the implicit kernel function value . Sinha and Duchi [6] learned an optimal weight subset of by solving Eq.(1) for an optimal feature subset of the vanilla RFFs, and proved the consistency and generalization bound. The proposed algorithm [6] is efficient and highly scalable. Li et al. [7] incorporated a deep neural network to implicitly learn the kernel, and trained the network by solving Eq.(1). The work in [7] shows that the performance could be improved by involving a network to model the kernel distribution.

These kernel learning methods [6, 7] follow a two-stage scheme: They first learn the random features , which is equivalent to learning the weights since the map is explicit, by solving Eq.(1), and then learn a linear classifier on the features. The optimization target of Eq.(1) is to align the learned kernel with the pre-defined ideal kernel . However, this commonly-used ideal kernel in target alignment is not necessarily optimal for the generalization of the linear classifier learned in the second stage. Hence, in such a two-stage way, the random features learned in the first stage perhaps show excellent approximation performance towards the ideal kernel, but do not take much care of the generalization or classification performance, which could be further improved.

To address this issue, towards learning a more well-generalized kernel, we propose to jointly learn the random features and the classifier in an end-to-end way by straightly solving the following expectation risk minimization problem,

| (2) |

where denotes the loss function and denotes the noise random variable. A generative network , a.k.a. a generator, is devised to learn the kernel distribution, which takes a set of noises as input and samples weights from the learned kernel distribution. Then, the corresponding random features are constructed based on the generated weights according to the feature map . A linear classifier parameterized as a full-connected (FC) layer is applied to the random features to categorize them. The generative network and linear classifier are jointly trained by directly minimizing the loss between the true labels and predicted ones. Therefore, we achieve an end-to-end, one-stage solution, which no longer pursues the approximation ability of random features towards any pre-defined kernels. Instead, it is expected that distributions of the underlying kernel can be modeled by the generative network for better classification or generalization performance. The proposed method is called Generative RFFs (GRFFs) since the random features are built via the generative network.

Besides, this end-to-end training strategy naturally allows deeper features, in correspondence to a multi-layer structure of GRFFs. An associated progressively training strategy is designed to empirically guarantee the convergence of the multi-layer GRFFs. The multi-layer structure further boosts the generalization performance, since a good kernel on features is learned in this way. Moreover, the randomized resampling mechanism of GRFFs yields the non-deterministic weights and brings the merit of adversarial robustness gains, which is investigated and experimentally verified.

Our contributions can be summarized as follows,

-

•

To the best of our knowledge, this is the first work in RFF-based kernel learning algorithms to incorporate the kernel learning and the linear learner into a unifying framework via generative models for an end-to-end and one-stage solution. Empirical results indicate the superior generalization performance over the classical two-stage, RFFs-based methods.

-

•

The end-to-end strategy enables us to employ a multi-layer structure for GRFFs, indicating a good kernel on deeper features. A progressively training strategy is devised to efficiently train the multiple generators. The multi-layer structure further advances the generalization performance.

-

•

The adversarial robustness of the proposed method is also investigated. Empirical results show that, to some degree, the randomized resampling mechanism associated with the learned distributions can alleviate the performance decrease against adversarial attacks.

The rest of this paper is organized as follows. We briefly introduce the random Fourier features and the generative models in Section 2. The one-stage framework, multi-layer structure, and progressively training strategy of the proposed method are explained in detail in Section 3. Experiment results on classification tasks and adversarial robustness are shown in Section 4. The discussions and the conclusions are drawn in Section 5.

2 Preliminaries

2.1 Random Fourier Features

In kernel methods, a positive definite kernel with defines a map , which satisfies , where denotes the inner product in a Reproducing Kernel Hilbert Space . However, in large-scale kernel machines, there exist unacceptable high computation and memory costs: kernel evaluations, in space, and even in time to compute the inverse of the kernel matrix. Therefore, randomized features are introduced in large-scale cases to approximate the kernel function [5]. The theoretical foundation is based on the Bochner’s theorem [9], which indicates that the Fourier transform of a kernel function is associated to a probability distribution: A continuous and shift-invariant kernel on is positive definite if and only if is the Fourier transform of a non-negative measure . That is,

| (3) |

Considering a real-valued, shift-invariant and positive definite kernel , by applying the Euler’s formula to Eq.(3), we have

| (4) |

Therefore, one can construct an explicit feature map as follows:

| (5) |

known as the random Fourier features [5]. The set of weights is sampled from the spectral distribution of the kernel function. The mapped random Fourier features satisfy [5]. A detailed analysis of the convergence can be found in [10].

The vanilla RFFs [5] accelerate the large-scale kernel machines a lot and are data-independent. Afterwards, RFFs have been widely utilized, including the kernel approximation [11], extreme learning machines [12], the bias-variance trade-off in machine learning [13] and the kernel learning task [6, 7]. A systematic survey on RFFs-based algorithms can be found in [14].

Inspired by the vanilla RFFs, learning the features is equal to learning the weights , while learning the weights is equal to learning the kernel distribution. Hence, to efficiently learn the distribution, generative models are introduced.

2.2 Generative Models

Generative models have been widely applied in learning distributions from data [15]. Various generative models can be categorized into two types: models that perform explicit probability density estimations, and models that perform as a sampler sampling from the estimated distribution without a precise probability function.

Suppose a training set includes samples from a distribution , then the first type of generative models returns an estimation of . Given a particular value as input, the estimation will output the corresponding probability. While the second type tries to learn the latent as well, but in a rather different way: The learned model actually simulates a sampling process, through which one can generate more samples from the estimated distribution.

There exist lots of researches in the second type of generative models using a deep neural network as the sampler. In computer vision, the family of generative adversarial networks (GANs) [16] has shown great generality in various situations [17, 18]. The generative network in GANs performs as a useful image generator, which generates images by sampling from the learned image distribution. Besides, in Bayesian deep learning, there is a series of hypernetwork-based works, which use a neural network, called hypernet, to generate the parameters in another neural network, called primary net. The hypernet also performs as a sampler to learn the parameter posterior distribution of the primary net [19, 20].

Therefore, the generative networks are employed in the proposed GRFFs due to the powerful distribution learning ability. The resulting merits are two-fold: On the one hand, the GRFFs could learn a well-generalized kernel distribution from data. On the other hand, the generative networks could produce non-deterministic weights, bringing the adversarial robustness gains.

3 Generative Random Fourier Features Model

3.1 One-stage Generative Random Fourier Features

Previous RFFs-based kernel learning methods construct random features via sampling from the spectral distribution of the kernel [5] or via approximating the ideal kernel [6, 7], and then train a classifier on these features. The classification performance of the random features learned in this two-stage manner can perhaps be further improved. Therefore, we propose generative RFFs to jointly learn the features and the classifier by optimizing the expectation risk minimization problem in an end-to-end manner, which leads to a one-stage solution with better classification performance.

We first describe a general framework of our one-stage model, illustrated in Fig.1. A generator is designed to learn and to sample from the latent kernel distribution. Then, the generative random Fourier features of the original data are constructed by the sampled weights from the learned distribution. Finally, a linear classifier is applied to the features to categorize them.

The generator in our method performs as a sampler. It implicitly learns some distribution and generates samples from it. Given an arbitrary noise distribution and a set of noises sampled from , the generator takes them as input and generates the corresponding set of weights sampled from :

| (6) |

Given a training set , the generative random Fourier features of each training point will be constructed with full use of the weights according to Eq.(5):

| (7) |

Notice that the weight and the data point are of the same dimension and that the dimension of equals to . In the end, the linear classifier will be applied on the GRFFs to predict the label .

3.2 Multi-layer Generative Random Fourier Features

The end-to-end training strategy by straightly optimizing the ERM problem naturally allows us to employ a multi-layer structure of GRFFs, shown in Fig.2. To be specific, with one generator, we can build the random features of the original data, which can be viewed as the first layer of features. Then, with another generator, a second layer of random features can be constructed in the same way based on the features from the previous layer. After such a layer-by-layer operation, the random features in the last layer are followed with an FC layer. The total networks, i.e., all the generators and the last FC layer, are again jointly trained to solve the ERM problem. In this way, in each layer, there exists a corresponding generator which models the specific distribution on this layer of features, indicating a good kernel learned on features.

In those two-stage approaches [5, 6, 7], the distribution is associated with a pre-defined kernel, which is restricted to a single-layer structure, since the kernel for the multi-layer case is not clear. By contrast, we cover the multi-layer case, and thus have an advantage in learning a much more linear-separable pattern from data under the guidance of the learned kernels on features.

Without loss of generality, a detailed elaboration on the two-layer structure is presented below as an example, which could be naturally generalized to any number of layers (see Alg.1). Denote and as the two generators in the first and second layers respectively. The two generators, and , take two sets of noises and independently sampled from the same distribution as input respectively, and generate the corresponding weights and :

| (9) |

where and are the distributions modeled by and respectively.

In the first layer, a training sample cooperates together with to construct the generative random Fourier feature according to Eq.(5):

| (10) |

Then, the random feature cooperates together with the generative weights from the second layer to construct the generative random Fourier feature in the second layer in the same way:

| (11) |

Again, notice that and are of the same dimension and that the dimension of equals to . Accordingly, the dimension of equals to and the dimension of equals to . Finally, there is a linear classifier applied to the last random feature to output the prediction.

Now we rewrite the ERM problem in Eq.(8) for the two-layer GRFFs as follows,

| (12) |

Advantages of the multi-layer structure are obvious. The generator in the current layer actually models some distribution on features from the previous layer, indicating a good kernel on features. Besides, associated with the proposed progressively training strategy (introduced in section 3.3), during the whole training process, by updating the generators layer-by-layer, there are distinct improvements on both the loss curves and the accuracy curves (see Fig.3), implying that adding more layers leads to significant performance leaps. More details about the distributions on features are illustrated in section 4.

3.3 Progressively Training

For the multi-layer generative random Fourier features, since there exist several generative networks, it is hard to efficiently update all the parameters in multiple networks simultaneously, which possibly results in a total failure of convergence [21]. Therefore, inspired by the training in ProGAN [18], we propose a progressively training strategy to efficiently train the multiple generators in an inverse and layer-by-layer order.

The progressively training strategy contains several phases. The number of phases equals to the number of generators. In the first phase, we freeze the update of all the parameters except the last generator and the FC layer. After the training converges, we step into the next phase. Now the penultimate generator is unfrozen and gets updated together with the last one and the FC layer until convergence, while the others are still kept fixed. In such an inverse and layer-by-layer order, we progressively unfreeze the generators in each layer, add them to the training sequence one by one, and finally train all these generators together with the FC layer, which is empirically proved as an efficient training way for the multi-layer structure. This progressively training alogrithm is demonstrated in Alg.1.

In addition, more details of the generator are described. Every generator is parameterized as a multi-layer perceptron (MLP), which can be viewed as a composition of several cascaded blocks. Among these blocks, except for the last one, each block sequentially holds a linear layer, a batch normalization [22] layer, and an activation layer with leaky ReLU function. In the last block, we remove the batch normalization layer and replace leaky ReLU with tanh function as the activation. Fig.1 illustrates the described components inside the generator. Furthermore, one can customize the network according to the practical cases. For example, it is allowed to increase the number of blocks in the MLP to enhance its learning ability or to add dropout [23] to alleviate overfitting. The number of neurons in the last FC layer can be modified freely for multi-classification or regression tasks.

The standard normal distribution is set as the input noise distribution , which corresponds to a radial basis function (RBF) kernel, the most widely used universal kernel. At each iteration, we independently resample noises from to optimize the generators on the expectation of the distribution, and update simultaneously the parameters of the generators and the classifier by Adam [24] optimizer. We choose the cross entropy function as the loss function . One can estimate whether the model is trained until convergence by watching the variation of the cross entropy loss value, with which the final classification performance is closely connected.

For the inference process, given a new sample , random noises are first sampled from , then the weights are generated via the generators, then the corresponding generative random Fourier feature is constructed via the weights and by applying Eq.(7) or Eq.(10), Eq.(11). Finally, the linear classifier will predict the label of .

So far, we finish building an end-to-end, one-stage kernel learning method, which implicitly learns the kernel distribution by solving an ERM problem via the generative RFFs. To learn the latent kernel distribution, a generative network is designed to simulate the sampling process without an explicit definition of the probability density function of the distribution. Besides, jointly learning the features and the classifier allows us to increase the depth of features. The resulting multi-layer structure can learn a good kernel on features and thus even boosts the generalization performance. In addition, a progressively training strategy is proposed to efficiently train the multiple generative networks.

Remark We would like to reiterate the following facts. It’s worth noting that the initial motivation of RFFs-based methods is to reduce the time and space complexity in large-scale kernel machines. For instance, the vanilla RFFs [5] speed up the kernel machines a lot, then get even accelerated in [6]. Further, random features are then extended to kernel learning, since RFFs actually provide a good justification for kernel learning in the spectral cases. The researches in [6, 7] are two typical kernel learning algorithms via random features, see a recent survey [14] on the development of RFFs-based algorithms for kernel learning. Regarding to our method, the target is to perform kernel learning via RFFs, but NOT reducing the time-consuming. In fact, since the neural network requires training, our method and the method in [7] are inevitably inferior in the processing speed during training and inference, compared with those algorithms specifically designed for kernel acceleration [5, 6]. Instead, the purpose of our method is to learn a more well-generalized kernel based on the fact that the optimization objective in [6, 7], i.e., solving the target alignment, is not necessarily optimal in classification tasks. To achieve such a purpose, we introduce the generative networks and adopt an end-to-end scheme to reach a one-stage solution. The resulting two merits of this solution, better generalization and stronger robustness, will be empirically illustrated in the next section.

4 Experiments and Results

We evaluate the classification performance of the proposed GRFFs on a wide range of data sets. Following the work in [6, 7], we first test the performance on a synthetic data set. Second, we choose several benchmark data sets from UCI repository111https://archive.ics.uci.edu/ml/datasets.html and LIBSVM data222https://www.csie.ntu.edu.tw/˜cjlin/libsvmtools/datasets/, including both large-scale and small-scale sets, and test the performance on these data sets. Finally, we introduce a variant of the multi-layer GRFFs for image data and conduct an adversarial attack on this variant to illustrate its adversarial robustness.

The simplest version of GRFFs only includes a single layer, i.e., one single generative network, denoted as SL-GRFF. We denote the multi-layer GRFFs including more than one generators as ML-GRFF. We compare SL-GRFF and ML-GRFF with the following approaches.

-

•

RFF. Rahimi and Recht [5] first proposed the vanilla RFFs to approximate the kernel function by sampling from its spectral distribution. This approach is data-independent.

-

•

OPT-RFF. Based on the vanilla RFFs, Sinha and Duchi [6] proposed to optimize the random features by solving a target alignment problem. A feature subset of an optimal size is learned, which shows great superiority in high processing speed in large-scale kernel machines.

-

•

IKL. Li et al. [7] adopted a neural network to model the spectral distribution of the kernel function. The network is trained by optimizing a target alignment problem. A linear classifier is applied on the features.

-

•

MLP. The traditional MLP with ReLU activation function is also included in the comparison. Specifically, the numbers of layers in the MLP equal to that in the ML-GRFF, and the numbers of neurons in each hidden layer in the MLP equal to the numbers of sampled noises in each layer in the ML-GRFF.

In all the experiments, for RFF and OPT-RFF, the random features are generated by sampling weights from a normal distribution, which makes the features approximate an RBF kernel. Regarding to the number of sampled weights, i.e., the value of in Eq.(5), following the implement333https://github.com/duchi-lab/learning-kernels of OPT-RFF [6], a large enough number of weights are initially sampled in OPT-RFF to learn a feature subset of an optimal size . Then this learned is employed to build the vanilla RFFs [5]. The ridge regression is set as the linear classifier in the second stage of RFF and OPT-RFF. We only compare with the results of IKL claimed in the paper [7] on the synthetic data set in section 4.1.

For our proposed GRFFs, in section 4.1 and 4.2, for the multi-layer version, we adopt a two-layer structure including two generators, each of which is parameterized as an MLP. We set and . The structures of the first and second generators are and respectively, where denotes the input data dimension. The FC layer contains neurons. For the single-layer GRFFs in section 4.1, the generator is also parameterized as an MLP and holds a structure of . We set , thus the following FC layer contains neurons. We discuss GRFFs with more than 2 generators in A.

4.1 Performance on Synthetic Data

For the synthetic set, we generate with , where is data dimension. The training set includes samples and the test set contains samples. Fig.4a shows the data distribution when . An RBF kernel is ill-suited for this data set since the Euclidean distance used in this kernel fails to correctly capture the data pattern near the boundary [6]. One may need to carefully tune the kernel width to alleviate the inherent inappropriateness between the RBF kernel and the data set.

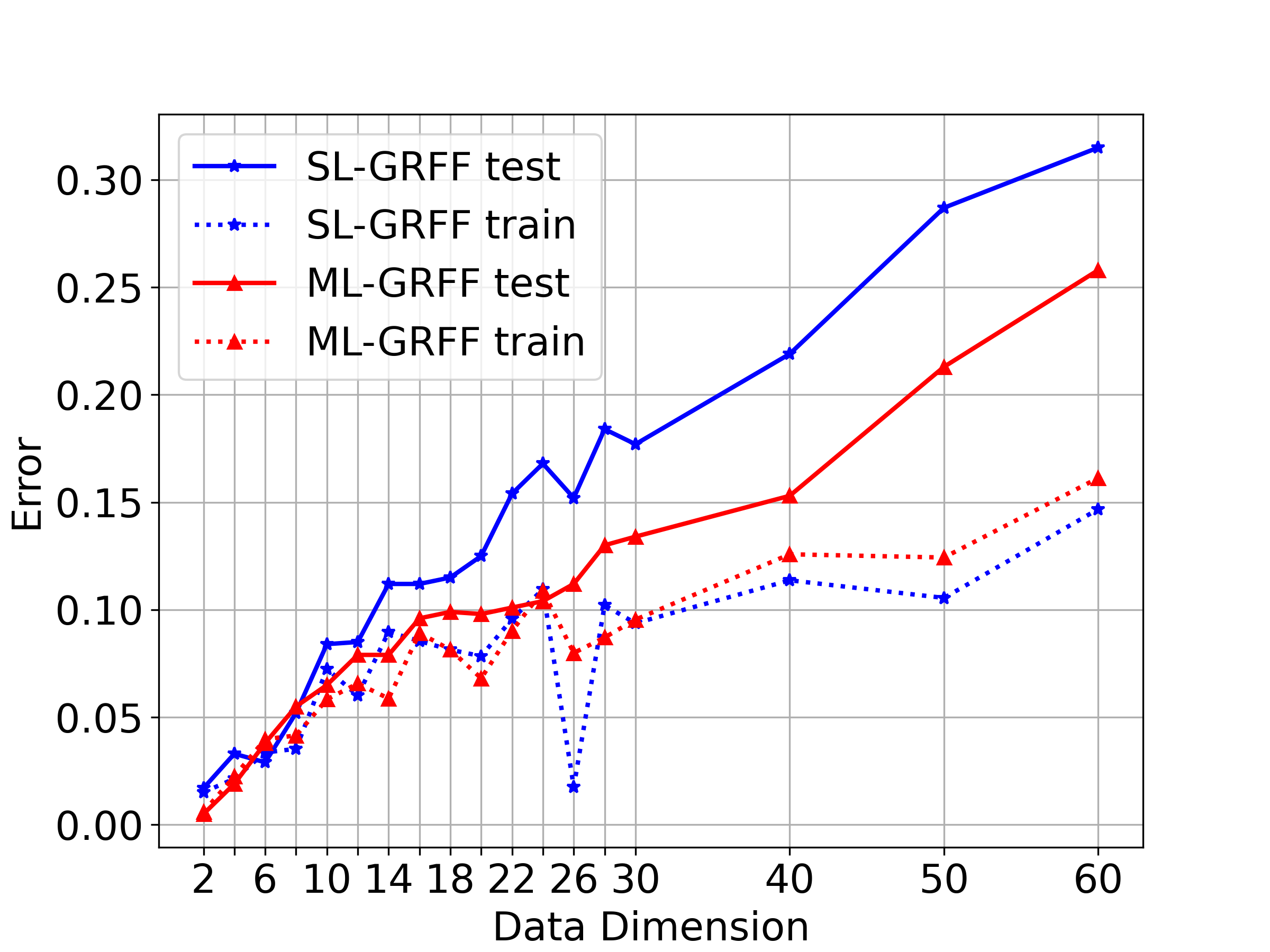

Fig.4b illustrates the test errors of different methods corresponding to different data dimensions . Both RFF and OPT-RFF manifest a sharp performance decrease along with the increase of . By contrast, IKL and ML-GRFF both have rather stable performance as the dimension increases, since they try to learn the kernel distribution via a neural network without any prior kernel function definition. Particularly, by directly optimizing the ERM problem in an end-to-end manner, ML-GRFF characterizes the data pattern much better than the others and achieves the lowest test errors. Fig.4c shows the comparison results on the training and test sets of SL-GRFF and ML-GRFF respectively. In a wide range of data dimensions, ML-GRFF always has an evident superiority of classification errors on the test set over SL-GRFF.

Besides, to visualize the generative RFFs, we take PCA [25] to extract the top-3 principal components of random features in SL-GRFF and different layers of ML-GRFF, shown in Fig.5. SL-GRFF and ML-GRFF are trained on the synthetic data of two dimensions, and , respectively. When , both SL-GRFF and ML-GRFF have similar performance, while when , ML-GRFF performs better than SL-GRFF. For ML-GRFF, the extracted principal components in the 1st layer (see Fig.5b and Fig.5e) do not show any evident linear separability. But, the components in the 2nd layer (see Fig.5c and Fig.5f) follow a clear linear separable distribution. For SL-GRFF, we can also see the linear separability of the extracted components from Fig.5a and Fig.5d, which is obviously weaker than that of the extracted components of the 2nd layer in ML-GRFF. Hence, by going deeper and deeper, ML-GRFF is able to learn a good kernel on features, which results in better performance.

To illustrate the traits of the progressively training strategy, we record the loss and accuracy curves during training on the synthetic data of different dimensions in Fig.6. When the data is easy to be separated, i.e., it is low-dimensional (), the whole training process does not show much differences between the two training phases in Fig.6a and Fig.6b. As the dimension increases, there are obvious improvements, i.e., the drop of loss and the step-up of accuracy, from the 1st training phase to the 2nd training phase in Fig.6c Fig.6f, at the turning point of the 200-th epoch. By progressively training the generators layer by layer, the convergence is achieved and there is good performance.

4.2 Performance on Benchmark Data Sets

The models are trained on small-scale data sets and large-scale data sets. We randomly pick half of the data as the training set, the other half as the test set, except for the data sets of which the training and test sets have already been divided. After normalizing the data to in advance by min-max normalization, of the training data are randomly selected as the validation set. For RFF and OPT-RFF, the validation set is adopted to perform validations on a group of 10 -s ranging from to with an interval of , where is the steep hyper-parameter in the RBF kernel: . For MLP and ML-GRFF, during training, models that achieve best performance on the partitioned validation set are selected. All the experiments on every data set are repeated 5 times.

| method | data set | monks1 | monks2 | monks3 | australia | climate | diabetic | sonar |

|---|---|---|---|---|---|---|---|---|

| (#tr;#te;) | (124;432;6) | (169;432;6) | (122;432;6) | (345;345;14) | (270;270;18) | (576;575;19) | (104;104;60) | |

| RFF | train | |||||||

| test | ||||||||

| OPT-RFF | train | |||||||

| test | ||||||||

| MLP | train | |||||||

| test | ||||||||

| ML-GRFF | train | |||||||

| test |

Tab.1 and Tab.2 illustrate the training and test accuracy of different methods on the small-scale and large-scale data sets respectively. The sizes of the training and test sets and the data dimensions are also listed in the two tables. One can find that in most cases, ML-GRFF outperforms RFF and OPT-RFF a lot and achieves competitive (sometimes even slightly better) results compared with MLP.

| method | data set | adult | ijcnn | phishing |

|---|---|---|---|---|

| (#tr; #te; ) | (32,561; 16,281; 123) | (49,990; 91,701; 22) | (5,528; 5,527; 68) | |

| RFF | train | |||

| test | ||||

| OPT-RFF | train | |||

| test | ||||

| MLP | train | |||

| test | ||||

| ML-GRFF | train | |||

| test |

4.3 Performance on Image Data and Adversarial Robustness

As aforementioned, the promising performance on generalization of the proposed one-stage, end-to-end kernel learning method is empirically validated on both synthetic and real-world data. In the following, we conduct another experiment on adversarial robustness to demonstrate the superiority of our GRFF.

4.3.1 Background and Motivation

The robustness of DNNs is demonstrated to be vulnerable and sensitive to imperceptible perturbations, a.k.a. adversarial examples [26, 27], created by white-box adversarial attackers, which need availability to the complete information of DNNs, e.g., model parameters [27, 28]. Hence, a line of adversarial defenses are based on an observation that adversarial examples created on one model by these attacks might totally lose efficacy on another one of different parameters [29], which inspires us to investigate the adversarial robustness of GRFF.

In GRFF, the independent samplings from the generators yield non-deterministic weights from the learned kernel distributions. In other words, every time the independent sampling produces a GRFF model of different parameters. Intuitively, such non-deterministic weights of GRFF raise the possibility of circumventing adversarial attacks on fixed parameters, thus bringing stronger adversarial robustness. In the remainder of this subsection, we first describe a variant of GRFF for image data, then evaluate its adversarial robustness against an iterative, gradient-based adversarial attack [28].

4.3.2 Variant of GRFF for Image Data

When dealing with image data, an image of size, where ,, denote the number of channels, height and width respectively, can be simply stretched to a 1-dimensional vector. Then multiple 1-dimensional weight vectors of size could be generated to build the corresponding GRFFs of the image vector. However, it is impractical to directly include so many neurons in the output layer of the generator, resulting in extremely numerous parameters and heavy computational burden. Therefore, we design a variant of GRFF to deal with image data. In the following, we elaborate two main changes of this variant in detail: how to generate weights and how to build random features.

To avoid posing neurons at the output layer, the generators are now devised to generate small-size, 2-dimensional weights, which resemble the common convolution kernels and could efficiently reduce computational costs. Accordingly, the generator structure should be modified. Specifically, the generators are parameterized the same as those in DCGAN [17], instead of MLPs. Compared with the cascaded blocks in MLPs described in section 3.3 before, all the linear layers in the blocks are now substituted by transposed convolution [30] layers to generate 2-dimensional weights, and the leaky ReLUs are replaced by ReLUs. The others still remain the same.

The way to build random features for image data still basically follows the feature map of Eq.(5). The inner product between weights and vectors is now replaced by the convolution on image via the generated 2-dimensional weights. The convoluted images then pass through cosine and sine functions respectively as indicated in Eq.(5), and the resulting two pieces are concatenated along the image channels, followed by a pooling operation. These manipulations could also be sequentially executed on the output of a preceding layer to build random features at the current layer, thus forming a multi-layer structure. After such multi-layer operations, i.e., convolution, cosine and sine activation, concatenation and pooling, the last random features are stretched to a 1-dimensional vector and an FC layer will make the predictions.

4.3.3 Performance against Adversarial Attacks

Omitting the generators in the variant of GRFF described above, the model itself will reduce to a Convolution Neural Network (CNN) with cosine and sine activation functions. The generators actually learn the convolution kernels in every layer of the CNN. During inference, by resampling from the learned distributions for many times, the generators will keep generating non-deterministic and independent convolution kernels, which every time will lead to a specific CNN. Hence, inspired by the randomized resampling mechanism associated with the distribution learning ability of GRFF, we investigate its application in defending the adversarial attacks.

In this subsection, we mainly exploit the robustness of GRFF against an iterative, gradient-based attack, called Iteratively, Least-Likely (Iter.L.L.) attack [28], which is developed from a one-step, gradient-based attack, Fast Gradient Sign Method (FGSM, [27]). By taking the gradient on input images and moving the images a small step along the direction of the sign of the gradient, FGSM creates the corresponding adversarial examples. Based on this one-step FGSM, to acquire better attack performance, Kurakin et al. [28] proposed the iterative version, i.e., Iter.L.L. attack. The steps for creating the adversarial example of an original example can be summarized as follows,

| (13) |

At the -th iteration, Iter.L.L. attack computes the loss between the predicted label and the least-likely label , moves the image a small step along the direction of the sign of the gradient of the input, and clips the pixel value of the perturbed image to a specified range . The step size is set as by default. The number of iterations is set as .

Iter.L.L. attack aims at linearizing the loss function around given fixed model parameters. While the weights in GRFF are generated independently and thus are non-deterministic. Hence, we evaluate the ability of GRFF in defending Iter.L.L. attack on MNIST [31] and have the following experiment design.

-

1.

Given an image and noises sampled from , GRFF can predict its label and compute the loss between and the least-likely label.

-

2.

By back propagation, the gradients on are determined. Then the corresponding adversarial example is created according to Eq.(13).

-

3.

GRFF can again take the original noises as input and output a prediction of .

-

4.

By resampling independent noises from , now GRFF can take the new noises as input and output another prediction on .

Based on the predicted results , and above, we could determine the corresponding unattacked accuracy , attacked accuracy with original noises , and attacked accuracy with resampled noises . It is expected that the attacked accuracies and should be smaller than , and that should be larger than since resampling should be helpful in defending the Iter.L.L. attack.

In this experiment, for the variant of GRFF, we also adopt a two-layer structure, the generators of which generate and weights of size in the 1st and 2nd layers respectively. The max-pooling operator takes a perception field of size. The experiment results are shown in Fig.7. By omitting the generators, we acquire a CNN with cosine and sine activation functions. We also record the results of the Iter.L.L. attack on such a CNN in Fig.7.

One can find that under Iter.L.L. attack, by resampling non-deterministic weights, are generally larger than , indicating stronger adversarial robustness brought by the resampling mechanism of GRFF. When equals to with iterations, all the adversarial examples successfully fool CNN and GRFF with original noises and result in zero accuracy. While by resampling independent weights, GRFF successfully identifies nearly of these adversarial examples, which is a promising improvement. Besides, both the attacked accuracies with resampled noises and original noises are higher than the attacked accuracy of CNN. Therefore, due to the randomized resampling mechanism associated with GRFF, our model is able to alleviate the performance decrease brought by the Iter.L.L. attack. It also shows superiority over the common CNN of the same structure in defending the Iter.L.L. attack. Part of the adversarial examples are shown in Fig.8.

5 Discussion and Conclusion

In conclusion, we proposed a one-stage kernel learning approach, which models some latent distribution of the kernel via a generative network based on the random Fourier features. Not like the existing methods that learn the distribution by the target alignment and then train a linear classifier, we directly solved the ERM problem to jointly learn the features and the classifier for better generalization performance. Further, such an end-to-end manner enables the model itself to extend to deeper layers, which leads to a multi-layer structure and implies a good kernel on features. Besides, a progressively training strategy was proposed to efficiently train the multiple generators in an inverse, layer-by-layer order. Empirical results illustrate the superiority of ML-GRFF in classification tasks over the classical two-stage, RFFs-based methods. Meanwhile, GRFF enables us to resample independent and non-deterministic parameters from the generators. Such parameter randomness is helpful in defending adversarial attacks and is empirically verified by the enhanced robustness against the adversarial examples.

One of the limitations of the proposed method is that its performance on large-scale image data and deeper networks is still restricted. In our method, for image data, the generators try to learn the distributions on or weights. However, in deeper network, like ResNet [32], a usual size of kernels in the convolution layer is . The generator cannot include neurons in its output layer since it is a heavy burden for GPUs to compute the gradients. Besides, more layers indicate more generators. Training tens of, or even hundreds of generators still remains a difficult problem.

Since the parameter distribution is modeled by the generative networks, which cost huge memory and computation resources, future work will focus on how to design cheap and efficient mechanism to model the parameter randomness and to achieve better robustness. Besides, based on the existing researches on the convergence of GANs [33, 34], a theoretical guarantee on the convergence of the GRFF will be developed in the future.

References

- Scholkopf and Smola [2001] Bernhard Scholkopf and Alexander J Smola. Learning with kernels: support vector machines, regularization, optimization, and beyond. MIT press, 2001.

- Filippone et al. [2008] Maurizio Filippone, Francesco Camastra, Francesco Masulli, and Stefano Rovetta. A survey of kernel and spectral methods for clustering. Pattern Recognition, 41(1):176–190, 2008.

- Nazarpour and Adibi [2015] Abdollah Nazarpour and Peyman Adibi. Two-stage multiple kernel learning for supervised dimensionality reduction. Pattern Recognition, 48(5):1854–1862, 2015.

- Lauriola et al. [2020] Ivano Lauriola, Claudio Gallicchio, and Fabio Aiolli. Enhancing deep neural networks via multiple kernel learning. Pattern Recognition, 101:107194, 2020.

- Rahimi and Recht [2008] Ali Rahimi and Benjamin Recht. Random features for large-scale kernel machines. In Advances In Neural Information Processing Systems, pages 1177–1184, 2008.

- Sinha and Duchi [2016] Aman Sinha and John C Duchi. Learning kernels with random features. In Advances In Neural Information Processing Systems, pages 1298–1306, 2016.

- Li et al. [2019] Chun-Liang Li, Wei-Cheng Chang, Youssef Mroueh, Yiming Yang, and Barnabas Poczos. Implicit kernel learning. In The 22nd International Conference on Artificial Intelligence and Statistics, pages 2007–2016, 2019.

- Cristianini et al. [2002] Nello Cristianini, John Shawe-Taylor, Andre Elisseeff, and Jaz S Kandola. On kernel-target alignment. In Advances In Neural Information Processing Systems, pages 367–373, 2002.

- Rudin [1962] Walter Rudin. Fourier analysis on groups, volume 121967. Wiley Online Library, 1962.

- Mohri et al. [2018] Mehryar Mohri, Afshin Rostamizadeh, and Ameet Talwalkar. Foundations of machine learning. MIT press, 2018.

- Liu et al. [2020] Fanghui Liu, Xiaolin Huang, Yudong Chen, Jie Yang, and Johan Suykens. Random fourier features via fast surrogate leverage weighted sampling. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pages 4844–4851, 2020.

- Zhang et al. [2019] Wenyu Zhang, Zhenjiang Zhang, Lifu Wang, Han-Chieh Chao, and Zhangbing Zhou. Extreme learning machines with expectation kernels. Pattern Recognition, 96:106960, 2019.

- Belkin et al. [2019] Mikhail Belkin, Daniel Hsu, Siyuan Ma, and Soumik Mandal. Reconciling modern machine-learning practice and the classical bias–variance trade-off. Proceedings of the National Academy of Sciences, 116(32):15849–15854, 2019.

- Liu et al. [2021] Fanghui Liu, Xiaolin Huang, Yudong Chen, and Johan A. K. Suykens. Random features for kernel approximation: A survey on algorithms, theory, and beyond. IEEE Transactions on Pattern Analysis and Machine Intelligence, pages 1–1, 2021. doi: 10.1109/TPAMI.2021.3097011.

- Goodfellow et al. [2016] Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep learning. MIT press, 2016.

- Goodfellow et al. [2014] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Advances In Neural Information Processing Systems, pages 2672–2680, 2014.

- Radford et al. [2016] Alec Radford, Luke Metz, and Soumith Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks. In International Conference on Learning Representations, 2016.

- Karras et al. [2018] Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. Progressive growing of gans for improved quality, stability, and variation. In International Conference on Learning Representations, 2018.

- Ukai et al. [2018] Kenya Ukai, Takashi Matsubara, and Kuniaki Uehara. Hypernetwork-based implicit posterior estimation and model averaging of cnn. In Asian Conference on Machine Learning, pages 176–191, 2018.

- Ratzlaff and Fuxin [2019] Neale Ratzlaff and Li Fuxin. Hypergan: A generative model for diverse, performant neural networks. In International Conference on Machine Learning, pages 5361–5369, 2019.

- Kawaguchi and Huang [2019] Kenji Kawaguchi and Jiaoyang Huang. Gradient descent finds global minima for generalizable deep neural networks of practical sizes. In 2019 57th Annual Allerton Conference on Communication, Control, and Computing (Allerton), pages 92–99. IEEE, 2019.

- Ioffe and Szegedy [2015] Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International Conference on Machine Learning, pages 448–456, 2015.

- Srivastava et al. [2014] Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. Dropout: a simple way to prevent neural networks from overfitting. Journal of Machine Learning Research, 15(1):1929–1958, 2014.

- Kingma and Ba [2015] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In International Conference on Learning Representations, 2015.

- Wold et al. [1987] Svante Wold, Kim Esbensen, and Paul Geladi. Principal component analysis. Chemometrics and intelligent laboratory systems, 2(1-3):37–52, 1987.

- Szegedy et al. [2014] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. In International Conference on Learning Representations, 2014.

- Goodfellow et al. [2015] Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. In International Conference on Learning Representations, 2015.

- Kurakin et al. [2017] Alexey Kurakin, Ian Goodfellow, and Samy Bengio. Adversarial machine learning at scale. In International Conference on Learning Representations, 2017.

- Fang et al. [2020] Kun Fang, Qinghua Tao, Yingwen Wu, Tao Li, Jia Cai, Feipeng Cai, Xiaolin Huang, and Jie Yang. Towards robust neural networks via orthogonal diversity. arXiv preprint arXiv:2010.12190, 2020.

- Zeiler et al. [2010] Matthew D Zeiler, Dilip Krishnan, Graham W Taylor, and Rob Fergus. Deconvolutional networks. In 2010 IEEE Computer Society Conference on computer vision and pattern recognition, pages 2528–2535. IEEE, 2010.

- LeCun et al. [1998] Yann LeCun, Léon Bottou, Yoshua Bengio, Patrick Haffner, et al. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- Liu et al. [2017] Shuang Liu, Olivier Bousquet, and Kamalika Chaudhuri. Approximation and convergence properties of generative adversarial learning. In Advances In Neural Information Processing Systems, pages 5545–5553, 2017.

- Farnia and Ozdaglar [2020] Farzan Farnia and Asuman Ozdaglar. Do GANs always have Nash equilibria? In International Conference on Machine Learning, pages 3029–3039. PMLR, 2020.

Appendix A More discussions on ML-GRFF

In this section, discussions on ML-GRFF including over 2 layers are further raised. Specifically, experiments on synthetic data are designed and executed to illustrate the feasibility of the training algorithm Alg.1 even in the case of more than 2 layers. We show that a two-layer structure is an empirically-optimal choice, simultaneously considering the improved generalization performance and the difficulty of tuning hyper-parameters. The designed experiments and results are outlined below.

Settings:

The experiments are executed on synthetic data, where follows of dimension and with . The training set includes samples and the test set includes samples. A validation set containing 20% of the training data is partitioned to evaluate the model performance during training.

For ML-GRFF with layers, we train multiple models w.r.t. . Important hyper-parameters, e.g., numbers of sampled noises and numbers of epochs , are listed in Table 3. The experiments are repeated 5 times and the average results are recorded in Fig.9.

| # layers | ||

|---|---|---|

Results and discussions:

As indicated in Fig.9, as the number of layers increases over 2, the progressively training strategy could still guarantee the convergence of ML-GRFF and produce better results than RFF, OPT-RFF and single-layer GRFF. On the other hand, the numbers of hyper-parameters of ML-GRFF also increase along with that of layers. It remains a difficult problem to efficiently tune these hyper-parameters to always achieve significant generalization performance improvements in the case of more and more layers. Based on the results in Fig.9, empirically, a two-layer structure is an appropriate choice, and thus we focus on ML-GRFF of two layers in all the experiments.