End-to-End Chinese Landscape Painting Creation Using Generative Adversarial Networks

Abstract

Current GAN-based art generation methods produce unoriginal artwork due to their dependence on conditional input. Here, we propose Sketch-And-Paint GAN (SAPGAN), the first model which generates Chinese landscape paintings from end to end, without conditional input. SAPGAN is composed of two GANs: SketchGAN for generation of edge maps, and PaintGAN for subsequent edge-to-painting translation. Our model is trained on a new dataset of traditional Chinese landscape paintings never before used for generative research. A 242-person Visual Turing Test study reveals that SAPGAN paintings are mistaken as human artwork with 55% frequency, significantly outperforming paintings from baseline GANs. Our work lays a groundwork for truly machine-original art generation.

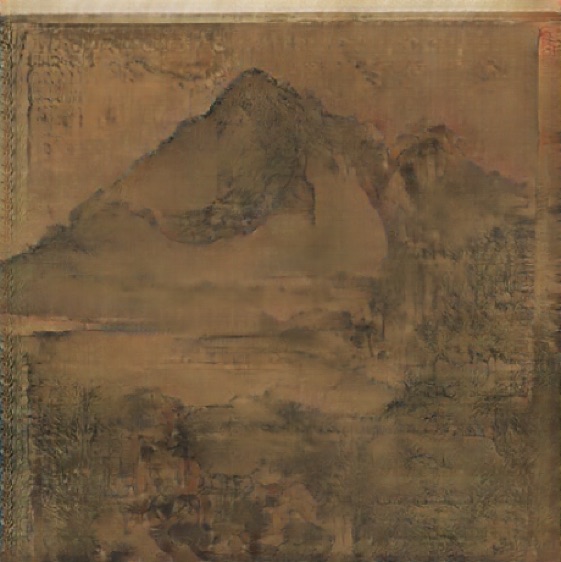

| (a) Human | (b) Baselines | (c) Ours (RaLSGAN+Pix2Pix) | (d) Ours (StyleGAN2+Pix2Pix) |

|---|---|---|---|

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8942d88a-8a34-4ef5-a078-5d79b32de243/human_1.jpg) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8942d88a-8a34-4ef5-a078-5d79b32de243/ralsgan.jpg) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8942d88a-8a34-4ef5-a078-5d79b32de243/ralsgan_p2p_1.jpg) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8942d88a-8a34-4ef5-a078-5d79b32de243/stylegan_p2p_1.jpg) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8942d88a-8a34-4ef5-a078-5d79b32de243/human_2.jpg) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8942d88a-8a34-4ef5-a078-5d79b32de243/stylegan.jpg) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8942d88a-8a34-4ef5-a078-5d79b32de243/ralsgan_p2p_2.jpg) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8942d88a-8a34-4ef5-a078-5d79b32de243/stylegan_p2p_2.jpg) |

1 Introduction

Generative Adversarial Networks (GAN) have been popularly applied for artistic tasks such as turning photographs into paintings, or creating paintings in the style of modern art [23][3]. However, there are two critically underdeveloped areas in art generation research that we hope to address.

First, most GAN research focuses on Western art but overlooks East Asian art, which is rich in both historical and cultural significance. For this reason, in this paper we focus on traditional Chinese landscape paintings, which are stylistically distinctive from and just as aesthetically meaningful as Western art.

Second, popular GAN-based art generation methods such as style transfer rely too heavily on conditional inputs, e.g. photographs [23] or pre-prepared sketches [16][32]. There are several downsides to this. A model dependant upon conditional input is restricted in the number of images it may generate, since each of its generated images is built upon a single, human-fed input. If instead the model is not reliant on conditional input, it may generate an infinite amount of paintings seeded from latent space. Furthermore, these traditional style transfer methods can only produce derivative artworks that are stylistic copies of conditional input. In end-to-end art creation, however, the model can generate not only the style but also the content of its artworks.

In the context of this paper, the limited research dedicated to Chinese art has not strayed from conventional style transfer methods [16][15][18]. To our knowledge, no one has developed a GAN able to generate high-quality Chinese paintings from end to end.

Here we introduce a new GAN framework for Chinese landscape painting generation that mimics the creative process of human artists. How do painters determine their painting’s composition and structure? They sketch first, then paint. Similarly, our 2-stage framework, Sketch-and-Paint GAN (SAPGAN), consists of two stages. The first-stage GAN is trained on edge maps from Chinese landscape paintings to produce original landscape “sketches,” and the second-stage GAN is a conditional GAN trained on edge-painting pairs to “paint” in low-level details.

The final outputs of our model are Chinese landscape paintings which: 1) originate from latent space rather than from conditional human input, 2) are high-resolution, at 512x512 pixels, and 3) possess definitive edges and compositional qualities reflecting those of true Chinese landscape paintings.

In summary, the contributions of our research are as follows:

-

•

We propose Sketch-and-Paint GAN, the first end-to-end framework capable of producing high-quality Chinese paintings with intelligible, edge-defined landscapes.

-

•

We introduce a new dataset of 2,192 high-quality traditional Chinese landscape paintings which are exclusively curated from art museum collections. These valuable paintings are in large part untouched by generative research and are released for public usage at https://github.com/alicex2020/Chinese-Landscape-Painting-Dataset.

-

•

We present experiments from a 242-person Visual Turing Test study. Results show that our model’s artworks are perceived as human-created over half the time.

2 Related Work

2.1 Generative Adversarial Networks

The Generative Adversarial Network (GAN) consists of two models—a discriminator network and a generator model —which are pitted against each other in a minimax two-player game [5]. The discriminator’s objective is to accurately predict if an input image is real or fake; the generator’s objective is to fool the discriminator by producing fake images that can pass off as real. The resulting loss function is:

| (1) |

where is taken from the real images denoted , and is a latent vector from some probability distribution by the generator .

Since its inception, the GAN has been widely undertaken as a dominant research interest for generative tasks such as video frame predictions [14], 3D modeling [21], image captioning [1], and text-to-image synthesis [29]. Improvements to GAN distinguish between fine and coarse image representations to create high-resolution, photorealistic images [7]. Many GAN architectures are framed with an emphasis on a multi-stage, multi-generator, or multi-discriminator network distinguishing between low and high-level refinement [2] [10] [31] [12].

2.2 Neural Style Transfer

Style transfer refers to the mapping of a style from one image to another by preserving the content of a source image, while learning lower-level stylistic elements to match a destination style [4].

A conditional GAN-based model called Pix2Pix performs image-to-image translation on paired data and has been popularly used for edge-to-photo image translation [8]. NVIDIA’s state-of-the-art Pix2PixHD introduced photorealistic image translation operating at up to 1024x1024 pixel resolution [25].

2.2.1 Algorithmic Chinese Painting Generation

Neural style transfer has been the basis for most published research regarding Chinese painting generation. Chinese painting generation has been attempted using sketch-to-paint translation. For instance, a CycleGAN model was trained on unpaired data to generate Chinese landscape painting from user sketches [32]. Other research has obtained edge maps of Chinese paintings using holistically-nested edge detection (HED), then trained a GAN-based model to create Chinese paintings from user-provided simple sketches [16].

Photo-to-painting translation has also been researched for Chinese painting generation. Photo-to-Chinese ink wash painting translation has been achieved using void, brush stroke, and ink wash constraints on a GAN-based architecture [6]. CycleGAN has been used to map landscape painting styles onto photos of natural scenery [18]. A mask-aware GAN was introduced to translate portrait photography into Chinese portraits in different styles such as ink-drawn and traditional realistic paintings [27]. However, none of these studies have created Chinese paintings without an initial conditional input like a photo or edge map.

3 Gap in Research and Problem Formulation

Can a computer originate art? Current methods of art generation fail to achieve true machine originality, in part due to a lack of research regarding unsupervised art generation. Past research regarding Chinese painting generation rely on image-to-image translation. Furthermore, the most popular GAN-based art tools and research are focused on stylizing existing images by using style transfer-based generative models [8][20][23].

Our research presents an effective model that moves away from the need for supervised input in the generative stages. Our model, SAPGAN, achieves this by disentangling content generation from style generation into two distinct networks.

To our knowledge, the most similar GAN architecture to ours is the Style and Structure Generative Adversarial Network () consisting of two GANs: a Structure-GAN to generate the surface normal maps of indoor scenes and Style-GAN to encode the scene’s low-level details [26]. Similar methods have also been used in pose-estimation studies generating skeletal structures as well as mapping final appearances onto those structures [24][30].

However, there are several gaps in research that we address. First, to our knowledge, this style and structure-generating approach has never been applied to art generation. Second, we significantly optimize ’s framework with comparisons between combinations of state-of-the-art GANs such as Pix2PixHD, RaLSGAN, and StyleGAN2, which have each individually allowed for high-quality, photo-realistic image synthesis [25][9][13]. We report a “meta” state-of-the-art model capable of generating human-quality paintings at high resolution, and outperforms current state-of-the-art models. Third, we show that generating minimal structures in the form of HED edge maps is sufficient to produce realistic images. Unlike (which relies on the time-intensive data collection of the XBox Kinect Sensor [26]) or pose estimation GANs (which are specifically tailored for pose and sequential image generation [24][30]), our data processing and models are likely generalizable to any dataset encodable via HED edge detection.

4 Proposed Method

4.1 Dataset

We find current datasets of Chinese paintings ill-suited for our purposes for several reasons: 1) many are predominantly scraped from Google or Baidu image search engines, which often present irrelevant results; 2) none are exclusive to the traditional Chinese landscape paintings; 3) the image quality and quantity are lacking. In the interest of promoting more research in this field, we build a new dataset of high-quality traditional Chinese landscape paintings.

Collection. Traditional Chinese landscape paintings are collected from open-access museum galleries: the Smithsonian Freer Gallery, Metropolitan Museum of Art, Princeton University Art Museum, and Harvard University Art Museum.

Cleaning. We manually filter out non-landscape artworks, and hand-crop large chunks of calligraphy or silk borders out of the paintings.

Cropping and Resizing. Paintings are first oriented vertically and resized by width to 512 pixels while maintaining aspect ratios. A painting with a low height-to-width ratio means that the image is almost square and only a center-crop of 512x512 is needed. Paintings with a height-to-width ratio greater than 1.5 are cropped into vertical, non-overlapping 512x512 chunks. Finally, all cropped portions of reoriented paintings are rotated back to their original horizontal orientation. The final dataset counts are shown in Table 1.

| Source | Image Count |

|---|---|

| Smithsonian | 1,301 |

| Harvard | 101 |

| Princeton | 362 |

| Metropolitan | 428 |

| Total | 2,192 |

|

|

|

Edge Maps. HED performs edge detection using a deep learning model which consists of fully convolutional neural networks, allowing it to learn hierarchical representations of an image by aggregating edge maps of coarse-to-fine features [28]. HED is chosen over Canny edge detection due to HED’s ability to clearly outline higher-level shapes while still preserving some low-level detail. We find from our experiments that Canny often misses important high-level edges as well as produces disconnected low-level edges. Thus, 512x512 HED edge maps are generated and concatenated with dataset images in preparation for training.

4.2 Sketch-And-Paint GAN

We propose a framework for Chinese landscape painting generation which decomposes the process into content then style generation. Our stage-I GAN, which we term “SketchGAN,” generates high-resolution edge maps from a vector sampled from latent space. A stage-II GAN, “PaintGAN,” is dedicated to image-to-image translation and receives the stage-I-generated sketches as input. A full model schema is diagrammed in Figure 3.

Within this framework, we test different combinations of existing architectures. For SketchGAN, we train RaLSGAN and StyleGAN2 on HED edge maps. For PaintGAN, we train Pix2Pix, Pix2PixHD, and SPADE on edge-painting pairs and test these trained models on edges obtained from either RaLSGAN or StyleGAN2.

4.2.1 Stage I: SketchGAN

We test two models to generate HED-like edges, which serve as “sketches.” SketchGAN candidates are chosen due to their ability to unconditionally synthesize high-resolution images:

RaLSGAN. Jolicoeur-Martineau et al. in [9] introduced a relativistic GAN for high-quality image synthesis and stable training. We adopt their Relativistic Average Least-Squares GAN (RaLSGAN) and use a PACGAN discriminator ([17]), architecture following [9].

StyleGAN2. Karras et al in [13] introduced StyleGAN2, a state-of-the-art model for unconditional image synthesis, generating images from latent vectors. We choose StyleGAN2 over its predecessors, StyleGAN [12] and ProGAN [11], because of its improved image quality and removal of visual artifacts arising from progressive growing. To our knowledge, StyleGAN2 has never been researched for Chinese painting generation.

4.2.2 Stage II: PaintGAN

PaintGAN is a conditional GAN trained with HED edges and real paintings. The following image-to-image translation models are our PaintGAN candidates.

Pix2Pix. Like the original implementation, we use a U-net generator and PACGAN discriminator [8]. The main change we make to the original architecture is to account for a generation of higher-resolution, 512x512 images by adding an additional downsampling and upsampling layer to the generator and discriminator.

Pix2PixHD. Pix2PixHD is a state-of-the-art conditional GAN for high-resolution, photorealistic synthesis [25]. Pix2PixHD is composed of a coarse-to-fine generator consisting of a global and local enhancer network, and a multiscale discriminator operating at three different resolutions.

SPADE. SPADE is the current state-of-the-art model for image-to-image translation. Building upon Pix2PixHD, SPADE reduces the “washing-away” effect of the information encoded by the semantic map, reintroducing the input map in a spatially-adaptive layer [22].

5 Experiments

To optimize the SAPGAN framework, we test combinations of GANs for SketchGAN and PaintGAN. In Section 5.3, we assess the visual quality of individual and joint outputs from these models. In Section 5.3.3, we report findings from a user study.

5.1 Training Details

Training of the two GANs occurs in parallel: SketchGAN on edge maps generated from our dataset, and PaintGAN on edge-painting pairings. The outputs of SketchGAN are then loaded into the trained PaintGAN model.

SketchGAN. RaLSGAN: The model is trained for 400 epochs. Adam optimizer is used with betas = 0.9 and 0.999, weight decay = 0, and learning rate = 0.002. StyleGAN2: We use mirror augmentation, training from scratch for 2100 kimgs, with truncation psi of 0.5.

PaintGAN. Pix2Pix: Pix2Pix is trained for 400 epochs with a batch size of 1. Adam optimizer with learning rate = 0.0002 and beta = 0.05 is use for U-net generator. Pix2PixHD: Pix2PixHD is trained for 200 epochs with a global generator, batch size = 1, and number of generator filters = 64. SPADE: SPADE is trained for 225 epochs with batch size of 4, load size of 512x512, and 64 filters in the generator’s first convolutional layer.

5.2 Baselines

DCGAN. We find that DCGAN generates only 512x512 static noise due to vanishing gradients. No DCGAN outputs are shown for comparison, but it is an implied low baseline.

RaLSGAN. RaLSGAN is trained on all landscape paintings from our dataset with same configurations as listed above.

StyleGAN2. StyleGAN2 is trained on all landscape paintings with the same configurations as listed above.

5.3 Visual Quality Comparisons

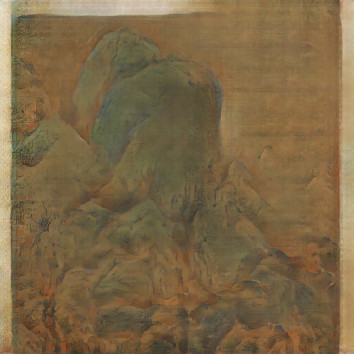

| (a) Human | (b) DCGAN | (c) RaLSGAN | (d) StyleGAN2 |

|---|---|---|---|

|

|

|

|

|

|

|

|

| (a) Edge | (b) SPADE | (c) Pix2PixHD | (d) Pix2Pix |

|---|---|---|---|

|

|

|

|

|

|

|

|

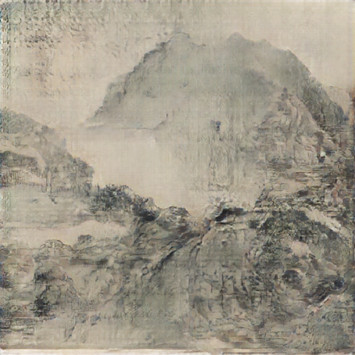

| (a) Human | (b) Baseline (StyleGAN2 [13]) | (c) Baseline (RaLSGAN [9]) | (d) Ours (SAPGAN) | (e) Ours (SAPGAN) |

|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

5.3.1 SketchGAN and PaintGAN Output

We first examine the training results of SketchGAN and PaintGAN separately.

SketchGAN. DCGAN, RaLSGAN, and StyleGAN2 are tested for their ability to synthesize realistic edges. Figure 4 shows sample outputs from these models when trained on HED edge maps. DCGAN edges show little semblance of landscape definition. Meanwhile, StyleGAN and RaLSGAN outputs are clear and high-quality. Their sketches outline high-level shapes of mountains, as well as low-level details such as rocks in the terrain.

PaintGAN. PaintGAN candidates SPADE, Pix2PixHD, and Pix2Pix are shown in Figure 5. StyleGAN2-generated sketches are used as conditional input to a) SPADE, b) Pix2PixHD, and c) Pix2Pix (Figure 5). Noticeably, SPADE outputs’ colors show evidence of over-fitting; the colors are oversaturated, yellow, and unlike those of normal landscape paintings (Figure 5b). Thus, we proceed further SPADE testing without SPADE. In Pix2PixHD, there are also visual artifacts, seen from the halo-like coloring around the edges of the mountains (Figure 5c). Pix2Pix performs the best, with fewer visual artifacts and more varied coloring. PaintGAN candidates do poorly at the granular level needed to “fill in” Chinese calligraphy, producing the blurry characters (Figure 5, bottom row). However, within the scope of this research, we focus on generating landscapes rather than Chinese calligraphy, which merits its own paper.

5.3.2 Baseline Comparisons

Both baseline models underperform in comparison to our SAPGAN models. Baseline RaLSGAN paintings show splotches of color rather than any meaningful representation of a landscape, and baseline StyleGAN2 paintings show distorted, unintelligible landscapes (Figure 6).

Meanwhile, SAPGAN paintings are superior to baseline GAN paintings in regards to realism and artistic composition. The SAPGAN configuration, RaLSGAN edges + Pix2Pix (for brevity, the word “edges” is henceforth omitted when referencing SAPGAN models), would sometimes even separate foreground objects from background, painting distant mountains with lighter colors to establish a fading perspective (Figure 6e, bottom image). RaLSGAN+Pix2Pix also learned to paint mountainous terrains faded in mist and use negative space to represent rivers and lakes (Figure 6e, top image). The structural composition and well-defined depiction of landscapes mimic characteristics of traditional Chinese landscape paintings, adding to the paintings’ realism.

5.3.3 Human Study: Visual Turing Tests

We recruit 242 participants to take a Visual Turing Test. Participants are asked to judge if a painting is human or computer-created, then rate its aesthetic qualities. Among the test-takers, 29 are native Chinese speakers and the rest are native English speakers. The tests consist of 18 paintings each, split evenly between human paintings, paintings from the baseline model RaLSGAN, and paintings from SAPGAN (RaLSGAN+Pix2Pix).

For each painting, participants are asked three questions:

Q1: Was this painting created by a human or computer? (Human, Computer)

Q2: How certain were you about your answer? (Scale of 1-10)

Q3: The painting was: Aesthetically pleasing, Artfully-composed, Clear, Creative. (Each statement has choices: Disagree, Somewhat disagree, Somewhat agree, Agree)

The Student’s two-tailed t-test is used for statistical analysis, with denoting statistical significance.

Results. Among the 242 participants, paintings from our model where mistaken as human-produced over half the time. Table 2 compares the frequency that SAPGAN versus baseline paintings were mistaken for human. While SAPGAN paintings passed off as human art with a 55% frequency, the baseline RaLSGAN paintings did so only 11% of the time ().

Furthermore, as Table 3 shows, our model was rated consistently higher than baseline in all of the artistic categories: “aesthetically pleasing,” “artfully-composed,” “clear,” and “creativity” (all comparisons ). However, in these qualitative categories, both the baseline and SAPGAN models were rated consistently lower than human artwork. The category that SAPGAN had the highest point difference from human paintings was the “Clear” category. Interestingly, though lacking in realism, baseline paintings performed best (relative to their other categories) in “Creativity”—most likely due to the abstract nature of the paintings which deviated typical landscape paintings.

We also compared results of the native Chinese- versus English-speaking participants to see if cultural exposure would allow Chinese participants to judge the paintings correctly. However, the Chinese-speaking test-takers scored 49.2% on average, significantly lower than the English-speaking test-takers, who scored 73.5% on average (). Chinese speakers also mistook SAPGAN paintings for human 70% of the time, compared with the overall 55%. Evidently, regardless of familiarity with Chinese culture, the participants had trouble distinguishing the sources of the paintings, indicating the realism of SAPGAN-generated paintings.

| Average | Stddev | |

|---|---|---|

| Baseline | 0.11 | 0.30 |

| Ours | 0.55 (p 0.0001) | 0.17 |

| Aesthetics | Composition | Clarity | Creativity | |

|---|---|---|---|---|

| Baseline | 1.24 | 1.25 | 1.67 | 0.90 |

| Ours | 0.35* | 0.37* | 0.93* | 0.34* |

| (a) Baseline | (b) Baseline | (c) Ours | (d) Ours | |

| Query |  |

|

|

|

| Nearest Neighbors |  |

|

|

|

|

|

|

|

|

|

|

|

|

5.4 Nearest Neighbor Test

The Nearest Neighbor Test is used to judge a model’s ability to deviate from its training dataset. To find a query’s closest neighbors, we compute pixel-wise distances from the query image to each image in our dataset. Results show that baselines, especially StyleGAN2, produce output that is visually similar to training data. Meanwhile, paintings produced by our models creatively stray from the original paintings (Figure 8). Thus, unlike baseline models, SAPGAN does not memorize its training set and is robust to over-fitting, even on a small dataset.

5.5 Latent Interpolations

Latent walks are shown to judge the quality of interpolations by SAPGAN (Figure 9). With SketchGAN (StyleGAN2), we first generate six frames of sketch interpolations from two random seeds, then feed them into PaintGAN (Pix2Pix) to generate interpolated paintings. Results show that our model can generate paintings with intelligible landscape structures at every step, most likely due to the high quality of StyleGAN2’s latent space interpolations as reported in [13].

6 Future Work

Future work may substitute different GANs for SketchGAN and PaintGAN, allowing for more functionality such as multimodal generation of different painting styles [33]. Combinations GANs that are capable of adding brushstrokes or calligraphy onto the generated paintings may also increase appearances of authenticity [19].

Importantly, apart from being trained on a Chinese landscape painting dataset, our proposed model is not specifically tailored to Chinese paintings and may be generalized to other artistic styles which also emphasize edge definition. Future work may test this claim.

7 Conclusion

We propose the first model that creates high-quality Chinese landscape paintings from scratch. Our proposed framework, Sketch-And-Paint GAN (SAPGAN), splits the generation process into sketch generation to create high-level structures, and paint generation via image-to-image translation. Visual quality assessments find that paintings from the RaLSGAN+Pix2Pix and StyleGAN2+Pix2Pix configurations for SAPGAN are more edge-defined and realistic in comparison to baseline paintings, which fail to evoke intelligible structures. SAPGAN is trained on a new dataset of exclusively museum-curated, high-quality traditional Chinese landscape paintings. Among 242 human evaluators, SAPGAN paintings are mistaken for human art over half of the time (55% frequency), significantly higher than that of paintings from baseline models. SAPGAN is also robust to over-fitting compared with baseline GANs, suggesting that it can creatively deviate from its training images. Our work supports the possibility that a machine may originate artworks.

8 Acknowledgments

We thank Professor Brian Kernighan, the author’s senior thesis advisor, for his guidance and mentorship throughout the course of this research. We also thank Princeton Research Computing for computing resources, and the Princeton University Computer Science Department for funding.

References

- [1] Bo Dai, Sanja Fidler, Raquel Urtasun, and Dahua Lin. Towards diverse and natural image descriptions via a conditional gan. In Proceedings of the IEEE International Conference on Computer Vision, pages 2970–2979, 2017.

- [2] Emily L Denton, Soumith Chintala, Rob Fergus, et al. Deep generative image models using a laplacian pyramid of adversarial networks. In Advances in neural information processing systems, pages 1486–1494, 2015.

- [3] Ahmed Elgammal, Bingchen Liu, Mohamed Elhoseiny, and Marian Mazzone. Can: Creative adversarial networks, generating“ art” by learning about styles and deviating from style norms. arXiv preprint arXiv:1706.07068, 2017.

- [4] Leon A Gatys, Alexander S Ecker, and Matthias Bethge. A neural algorithm of artistic style. arXiv preprint arXiv:1508.06576, 2015.

- [5] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Advances in neural information processing systems, pages 2672–2680, 2014.

- [6] Bin He, Feng Gao, Daiqian Ma, Boxin Shi, and Ling-Yu Duan. Chipgan: A generative adversarial network for chinese ink wash painting style transfer. In Proceedings of the 26th ACM international conference on Multimedia, pages 1172–1180, 2018.

- [7] He Huang, Philip S Yu, and Changhu Wang. An introduction to image synthesis with generative adversarial nets. arXiv preprint arXiv:1803.04469, 2018.

- [8] Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1125–1134, 2017.

- [9] Alexia Jolicoeur-Martineau. The relativistic discriminator: a key element missing from standard gan. arXiv preprint arXiv:1807.00734, 2018.

- [10] Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196, 2017.

- [11] Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196, 2017.

- [12] Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4401–4410, 2019.

- [13] Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8110–8119, 2020.

- [14] Yong-Hoon Kwon and Min-Gyu Park. Predicting future frames using retrospective cycle gan. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1811–1820, 2019.

- [15] Bo Li, Caiming Xiong, Tianfu Wu, Yu Zhou, Lun Zhang, and Rufeng Chu. Neural abstract style transfer for chinese traditional painting. In Asian Conference on Computer Vision, pages 212–227. Springer, 2018.

- [16] Daoyu Lin, Yang Wang, Guangluan Xu, Jun Li, and Kun Fu. Transform a simple sketch to a chinese painting by a multiscale deep neural network. Algorithms, 11(1):4, 2018.

- [17] Zinan Lin, Ashish Khetan, Giulia Fanti, and Sewoong Oh. Pacgan: The power of two samples in generative adversarial networks. In Advances in neural information processing systems, pages 1498–1507, 2018.

- [18] XiaoXuan Lv and XiWen Zhang. Generating chinese classical landscape paintings based on cycle-consistent adversarial networks. In 2019 6th International Conference on Systems and Informatics (ICSAI), pages 1265–1269. IEEE, 2019.

- [19] Pengyuan Lyu, Xiang Bai, Cong Yao, Zhen Zhu, Tengteng Huang, and Wenyu Liu. Auto-encoder guided gan for chinese calligraphy synthesis. In 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), volume 1, pages 1095–1100. IEEE, 2017.

- [20] Mehdi Mirza and Simon Osindero. Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784, 2014.

- [21] Thu Nguyen-Phuoc, Chuan Li, Lucas Theis, Christian Richardt, and Yong-Liang Yang. Hologan: Unsupervised learning of 3d representations from natural images. In Proceedings of the IEEE International Conference on Computer Vision, pages 7588–7597, 2019.

- [22] Taesung Park, Ming-Yu Liu, Ting-Chun Wang, and Jun-Yan Zhu. Semantic image synthesis with spatially-adaptive normalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2337–2346, 2019.

- [23] Wei Ren Tan, Chee Seng Chan, Hernán E Aguirre, and Kiyoshi Tanaka. Artgan: Artwork synthesis with conditional categorical gans. In 2017 IEEE International Conference on Image Processing (ICIP), pages 3760–3764. IEEE, 2017.

- [24] Ruben Villegas, Jimei Yang, Yuliang Zou, Sungryull Sohn, Xunyu Lin, and Honglak Lee. Learning to generate long-term future via hierarchical prediction. arXiv preprint arXiv:1704.05831, 2017.

- [25] Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. High-resolution image synthesis and semantic manipulation with conditional gans. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 8798–8807, 2018.

- [26] Xiaolong Wang and Abhinav Gupta. Generative image modeling using style and structure adversarial networks. In European conference on computer vision, pages 318–335. Springer, 2016.

- [27] Yuan Wang, Weibo Zhang, and Peng Chen. Chinastyle: A mask-aware generative adversarial network for chinese traditional image translation. In SIGGRAPH Asia 2019 Technical Briefs, pages 5–8. 2019.

- [28] Saining Xie and Zhuowen Tu. Holistically-nested edge detection. In Proceedings of the IEEE international conference on computer vision, pages 1395–1403, 2015.

- [29] Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, and Xiaodong He. Attngan: Fine-grained text to image generation with attentional generative adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1316–1324, 2018.

- [30] Yichao Yan, Jingwei Xu, Bingbing Ni, Wendong Zhang, and Xiaokang Yang. Skeleton-aided articulated motion generation. In Proceedings of the 25th ACM international conference on Multimedia, pages 199–207, 2017.

- [31] Jianwei Yang, Anitha Kannan, Dhruv Batra, and Devi Parikh. Lr-gan: Layered recursive generative adversarial networks for image generation. arXiv preprint arXiv:1703.01560, 2017.

- [32] Le Zhou, Qiu-Feng Wang, Kaizhu Huang, and Cheng-Hung Lo. An interactive and generative approach for chinese shanshui painting document. In 2019 International Conference on Document Analysis and Recognition (ICDAR), pages 819–824. IEEE, 2019.

- [33] Jun-Yan Zhu, Richard Zhang, Deepak Pathak, Trevor Darrell, Alexei A Efros, Oliver Wang, and Eli Shechtman. Toward multimodal image-to-image translation. In Advances in neural information processing systems, pages 465–476, 2017.