Encrypted Value Iteration and Temporal Difference Learning over Leveled Homomorphic Encryption

Abstract

We consider an architecture of confidential cloud-based control synthesis based on Homomorphic Encryption (HE). Our study is motivated by the recent surge of data-driven control such as deep reinforcement learning, whose heavy computational requirements often necessitate an outsourcing to the third party server. To achieve more flexibility than Partially Homomorphic Encryption (PHE) and less computational overhead than Fully Homomorphic Encryption (FHE), we consider a Reinforcement Learning (RL) architecture over Leveled Homomorphic Encryption (LHE). We first show that the impact of the encryption noise under the Cheon-Kim-Kim-Song (CKKS) encryption scheme on the convergence of the model-based tabular Value Iteration (VI) can be analytically bounded. We also consider secure implementations of TD(0), SARSA(0) and Z-learning algorithms over the CKKS scheme, where we numerically demonstrate that the effects of the encryption noise on these algorithms are also minimal.

I INTRODUCTION

The growing demand of applications such as smart grids [1] and smart cities [2] assures the important role of data usage and connectivity via advanced data-driven algorithms. However, the utility of advanced data-driven algorithms may be limited in many real-world control systems since components within the network are often resource-constrained. The cloud-based control can be an appealing solution in such scenarios, although a naive outsourcing comes with a steep cost of privacy. Recent literature in control has shown that the use of HE could mitigate the issue of privacy to a certain extent. However, there are many remaining challenges in encrypted control technologies. For instance, in the current encrypted control literature, the potential of FHE is not fully utilized, even though FHE would be necessary to encrypt advanced control algorithms, such as deep reinforcement learning [3]. RL framework has recently accomplished impressive feats with successful applications from AlphaGo Zero to traffic signal control, robot controls and many others, [4, 5, 6]. Its success is also bolstered by the availability of large-scale data and connectivity. Thus, we wish to study a cloud-based control synthesis architecture which can integrate two promising technologies, namely HE and RL. In particular, we examine the effects of using HE on RL both theoretically and numerically.

The first applications of HE to control systems utilized PHE since its relatively low computational overhead was suitable for real-time implementations. On the other hand, FHE or LHE can support more general classes of computations in the ciphertext domain, although its computational overhead makes it less suitable for real-time systems. In this regard, we first make an observation that the encrypted control can be better suited to the control synthesis problems rather than control implementations. For instance, explicit model predictive control (MPC) or RL problems require heavy computations or a large set of data to synthesize the control policy but implementing such policy may be kept local as they are often relatively light computations. In addition, real-time requirement is less stringent in control law synthesis problems than control law implementation counterpart.

I-A Related Work

Various HE schemes were applied to the linear controller in [7, 8, 9]. Importance of quantization and using non-deterministic encryption scheme was identified in [7]. Necessary conditions on encryption parameters for closed-loop stability were shown by [8]. The feasibility of FHE in encrypted control was first shown in [9] by managing multiple controllers. Evaluation of affine control law in explicit MPC was shown by [10]. In [11] encrypted implicit MPC control was shown to be feasible but the cost of privacy as increased complexity was identified. Real-time proximal gradient method was used in [12] to treat encrypted implicit MPC control and it showed undesired computation loads of encrypted control system. Privacy and performance degradation was further highlighted in [13] through experiments on motion control systems. Dynamic controller was encrypted in [14] using FHE exploiting the stability of the system while not relying on bootstrapping. Despite significant progress, encrypted control has been limited to simple computations where the motivation for cloud computing is questionable.

I-B Contribution

To study the feasibility of RL over HE, this paper makes the following contributions. First, as a first step towards more advanced problems of private RL, we formally study the convergence of encrypted tabular VI. We show that the impact of the encryption-induced noise can be made negligible if the -factor is decrypted in each iteration. Second, we present implementation results of temporal difference (TD) learning (namely, TD(0), SARSA(0), and Z-learning [15]) over the CKKS encryption scheme and compare their performances with un-encrypted cases. Although the formal performance analysis for this class of algorithms are difficult with encryption noise, we show numerically that these RL schemes can be implemented accurately over LHE.

I-C Preview

In section II, we summarize the relevant essentials of RL and HE. In section III, we set up the encrypted control synthesis problem and specialize it to the encrypted model-based and model-free RL algorithms. We analyze how the encryption-induced noise influences the convergence of standard VI. In section IV-A, we perform the simulation studies to demonstrate the encrypted model-free RL over HE. Finally, in section V, we summarize our contributions and discuss the future research directions.

II Preliminariess

II-A Reinforcement Learning

RL can be formalized through the finite state Markov decision process (MDP). We define a finite MDP as a tuple , where is a finite state space, is a finite action space, is a Markov state transition probability and is a reward function for the state-transition. A policy can be formalized via a sequence of stochastic kernels , where is a mapping for each state to the probability of selecting the action given the history .

We consider a discounted problem with a discount factor throughout this paper. Under a stationary policy , the Bellman’s optimality equation can be defined through a recursive operator applied on the initial value vector of the form :

| (1) |

Throughout this paper, we adopt the conventional definition for the maximum norm . The following results are standard [16].

Lemma 1

-

(a)

For any vectors and , we have

-

(b)

The optimal value vector is the unique solution to the equation ,

-

(c)

We have

for any vector .

If some policy attains , we call the optimal policy and the goal is to attain the optimal policy for the given MDP environment.

Often the state values are written conveniently as a function of state-action pairs called -values:

| (2) |

and for all state , .

RL is a class of algorithms that solves MDPs when the model of the environment (e.g., and/or ) is unknown. RL can be further classified into two groups: model-based RL and model-free RL [17]. A simple model-based RL first estimates the transition probability and reward function empirically (to build an artificial model) then utilizes the VI to solve the MDP. Model-free RL on the other hand directly computes the value function by averaging over episodes (Monte Carlo methods) or by estimating the values iteratively like TD learning.

TD(0) is one of the simplest TD learning algorithm where the tuple is sampled from the one-step ahead observation [18]. The on-policy iterative update rule for estimating the value is called TD(0) and is written as follows:

| (3) |

denotes the TD error and is computed with the sample as

starting with an initial guess . We use to indicate that the values are estimated. With some standard assumptions on the step size and exploring policies, the convergence of TD(0) is standard in the literature, see [19].

II-B Homomorphic Encryption

HE is a structure-preserving mapping between the plain-text domain and the cipher-text domain . Thus, encrypted data in with a function can be outsourced to the cloud for confidential computations.

PHE supports only the addition or the multiplication. On the other hand, LHE can support both the addition and the multiplication but the noise growth of ciphertext multiplication is significant without the bootstrapping [20]. The bootstrapping operation can promote a LHE scheme to FHE enabling unlimited number of multiplications but it requires a heavy computation resource on its own.

In order to use both addition and multiplication necessary in evaluating RL algorithms, we use an LHE. In particular, we employ CKKS encryption scheme proposed in [21] as it is well suited for engineering applications and also supports parallel computations. Also, there exists a widely available library optimized for implementations. We will only list and describe here several key operations and properties with more detailed information found in Appendix. We are particularly concerned with the fact that the CKKS encryption is noisy (due to error injection for security) but if properly implemented, the noise is manageable.

The security of CKKS scheme assumes the difficulty of the Ring Learning With Errors (RLWE) problem. Security parameters are a power-of-two integer , a ciphertext modulus and the variance used for drawing error from a distribution. The operation KeyGen uses security parameters (, , ) to create a -bit public key pk, a secret key sk, and an evaluation key evk.

III Encrypted learning over the cloud

III-A Cloud-based reinforcement learning

Our control loop consists of the plant (environment) and the controller (client) and the cloud server. We are concerned with a possible data breach at the cloud. In order to prevent the privacy compromise of our data such as training data set and other parameters at play, we add the homomorphic encryption module as seen in Fig. 1. In particular, we ignore the malleability risks [22] inherent to the considered HE scheme, which is beyond the scope of this paper. In our context, can be thought of as a ciphertext domain implementation of RL algorithms. However, is a general control synthesis that can be evaluated remotely. Ideal uses for would include advanced data-driven control synthesis procedures such as MPC, or Deep RL. We emphasize that the encryption adds communication delays for control policy synthesis but does not add an extra computation cost to control implementation. For RL, the controller’s task is to implement the most up-to-date state-action map that is recently synthesized, which can be done locally.

We assume the tabular representation of the state and action pairs. The client explores its plant and samples the information set . Other necessary parameters such as learning rate and are grouped as denoting the hyperparmeters. This set of data is then encrypted by the CKKS encryption module to generate ciphertexts , , , , and , which are encrypted data for , , , , and , and will be used to implement (3). Note that the subscripts refer to the value when these ciphertexts are decrypted. The cloud is instructed on how to evaluate the requested algorithms in ciphertexts. One may concern about the cloud knowing the form of the algorithm, but this can be resolved by the circuit privacy. That is, we can hide the actual computation steps by adding zeros or multiplying ones in ciphertexts on the original algorithm. We also assume that the cloud is semi-honest so that the cloud faithfully performs the algorithm as instructed. The output of the cloud is a newly synthesized policy , which, after a decryption, can be accessed on real-time by the client to produce the control action .

As a first step towards investigating more sophisticated RL algorithms, we specialize the proposed framework to solving RL problems in finite MDP with more elementary methods. In particular, we consider the basic model-based RL and model-free RL using tabular algorithms. For the model-based, we theoretically analyze the convergence of VI under the presence of encryption-induced noise. For the model-free, we implement the encrypted TD algorithms to estimate the values and investigate how the encryption noise affects the output.

III-B Encrypted Model-based RL

The client is assumed to explore the plant, sample the information sets and build the model first. A simple model of the state-transition probability can be in the form

| (4) |

where denotes the number of visits to the triplet and to the pair with . Similarly, the reward function can be quantified by the average rewards accumulated per the state-action pair.

Then, the client can encrypt the initial values of the state along with the model and and the discount rate and request the cloud to perform the computation of (2) over the ciphertext domain. Since comparison over HE is non-trivial, the max operation is difficult to be implemented homomorphically. Thus, the cloud computes the values and the controller will receive the decrypted values and completes the VI process for iteration index :

| (5) |

However, note that the values computed by the cloud will be noisy due to the encryption, hence we use and to denote the noisy value. Let be the encryption-induced noise produced by computations over HE. Then, the noisy values can be written as

| (6) |

where the noise term is bounded such that for some . Appendix shows how depends on the encryption parameter and operations used to evaluate the given algorithm.

We now analyze the discrepancy between two sequences of vectors and . We separate the analysis into the synchronous and the asynchronous cases.

III-B1 Synchronous VI

The synchronous VI means that the VI is applied to all state simultaneously. First note that is computed by the noiseless VI

| (7) | ||||

and is computed by the noisy VI

| (8) |

We will utilize the following simple lemma, whose proof is straightforward and hence omitted.

Lemma 2

For any arbitrary vectors and such that ,

| (9) |

for some constant satisfying .

By applying Lemma on the noisy VI (8),

we obtain

| (10) | ||||

where the vector satisfies . In the vector form, can be written as

| (11) | ||||

We can now quantify the worst-case performance degradation due to the encryption-induced noise. The result is summarized in the next Theorem.

Theorem 1

Proof:

Let be the sequence of vectors computed by the noiseless VI (7) with arbitrary initial conditions and . Then,

| (12a) | ||||

| (12b) | ||||

| (12c) | ||||

| (12d) | ||||

where the first inequality (12b) follows from (11), and (12c) follows from the triangular inequality, and the last inequality is due to Lemma (1). Now define a sequence of positive numbers by

| (13) |

with . By geometric series,

Comparing (12) and (13), we have by induction

Therefore,

| (14) |

Since (14) holds for any , we can pick for which we have for by lemma (1), we obtain the desired result. ∎

III-B2 Asynchronous VI

It is often necessary or beneficial to run the VI algorithm asynchronously, or state-by–state, for simulations. We can define the asynchronous noiseless and noisy VI with the new mapping and , respectively.

| (15) |

| (16) |

In each case, we make the following assumption.

Assumption 1

-

(a)

Each state is visited for updates infinitely often.

-

(b)

There exists a finite constant , which is greater than or equal to the number of updates to sweep through each state at least once.

Assumption 1 is essential for the following theorem as it ensures that the mapping and are contraction operators as long as all the states are visited at least once and that the time it takes for visiting all the states at least once is finite. A common approach to ensure this assumption would be to incorporate exploring actions as seen in -greedy policy.

Now, define the iteration sub-sequence such that and each state is visited at least once between and . For instance, consider a finite MDP with states denoted . If the state trajectory is for , then the sub-sequence formed is .

Theorem 2

Let be the optimal value function as defined previously. Suppose is the sequence of vectors computed by the asynchronous noisy VI (16) under Assumption 1. For an arbitrary initial condition , we have

Proof:

By definition of mapping and , we can write:

| (17) | ||||

Similar to the proof of Theorem , the inequality is due to the bound of the error vector and the triangle inequality. Now, we note that the asynchronous mapping is a contraction mapping with respect to a sequence index . Thus, we have

By forming a sequence with as it is done in the previous proof, and by the Assumption (b), it yields

| (18) |

By expanding the left hand side and applying the reverse traingle inequality

| (19) | ||||

for which we know the second term approaches zero in the limit. Since the left hand side is bounded in the limit with constants, we achieve the proposed result. ∎

Theorem 2 assures that the encrypted VI outsourced to the cloud also guarantees a comparable performance asymptotically.

III-C Encrypted Model-free RL

Consider again the TD(0) update rule, (3). The cloud receives the set of ciphertexts , , , , . Then, the update rule in the ciphertext domain becomes:

| (20) |

Upon decryption of , we need to remember that the computed value is corrupted with the noise. A similar error analysis for the TD algorithms can be performed (perhaps using stochastic approximation theory). However, the formal analysis in this domain presents some difficulties. Whereas a conventional theory on stochastic approximation with an exogenous noise seen in [16] requires the noise to approach zero in the limit and bounded, the encryption-induced noise satisfies only the bounded condition. We thus only provide some implementation results at this time to gain some insight. We hope to rigorously prove this case as in the future work.

IV Simulation

We present implementation results of various model-free (TD(0), SARSA(0), and Z-learning) RL algorithms over the CKKS implementation scheme. The environment used is the grid world with the state size for all three and the action size for the first two. The available actions are up, up-right, right, down-right, down, down-left, left, up-left, and stay. The reward is fixed as randomly set real numbers to simulate unknown environment. The client starts with a policy at the box coordinate (1,1), top-left and the grid world has three trap states and one goal state, marked by letters T and G, which terminate the current episode. In each episode, we set the maximum number of steps at which the current episode terminates as well. The learning parameter set consists of the discount factor, learning rate, and exploration percentage. As soon as the new data set containing the reward (or cost) and values of states and become available, the client uploads the encrypted data to the cloud.

Choosing encryption parameters is not straightforward but there exists a standardization effort, [23]. Also, an open-source library SEAL [24] provides a practical tutorial and accessible tools along with encryption parameter generator. We use the default 128-bit security () encryption parameters () generated by SEAL with the user input of . These are listed in Table I. The size of need not be this large as there is no parallel operation exploited for the particular example application considered in this paper. However, future applications such as multi agents RL or deep RL can find such capability useful as they contain many batch operations.

The CKKS encryption without employing a bootstrapping allows a predetermined depth for multiplication. Thus, for interested users, it is important to note the largest depth of ciphertext multiplications needed to evaluate the algorithm at hand. For example, TD(0) update rule considered in equation (20) requires at the most. This is factored into the design your encryption parameters.

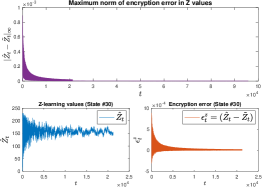

To examine the effect of encryption noise, we created two tables. One keeps track of the values of un-encrypted updates and the other keeps track of the values updated over HE. We recorded the error between two values through each iteration. At final stages of learning, these errors were confirmed to be bounded by some constant of very small magnitude. Although formal convergence analysis of model-free algorithms such as TD(0) are currently not available in this paper, simulation results suggest that they can be performed in the encrypted domain as equally well. Formal analysis of these algorithms based on the analysis already done is left as future research.

| Param. | TD(0) | SARSA(0) | Z-learning |

|---|---|---|---|

| 8192 | 8192 | 16384 | |

| 219 | 219 | 441 | |

IV-A Prediction: TD(0)

We implement a GLIE (greedy in the limit with infinite exploration) type learning policy seen in [25], where the client starts completely exploratory () and slowly becomes greedy () with more episodes. The learning rate is set to be for non-visited states and for visited states, we set , with counting the number of visits to the state at time , to satisfy the standard learning rate assumptions. The discount factor is . *RULE* for TD(0) is the right hand side of equation (20).

Client (Start)

Cloud

Client

IV-B Control: SARSA(0)

The update rule for SARSA(0) is:

| (21) |

where

The policy for SARSA(0) is also a GLIE (greedy in the limit with infinite exploration) type learning policy used in TD(0). The learning rate and the discount factor are unchanged. *RULE* for SARSA(0) is the right hand side of equation (20) after substituting and with and .

.

IV-C Control: Z-Learning

An off-policy learning control called Z-learning was proposed in [15]. It is formulated on the key observation that the control action can be regarded as an effort to change the passive state transition dynamics. The update rule for Z-learrning is:

| (22) |

where . The estimate is named the desirability function and it uses the cost associated with the unknown state-transitions, rather than the reward. Z-learrning by default is exploratory. Thus, we fix the policy to be greedy () but it will continuously explore, too. The learning rate and the discount factor are unchanged. *RULE* for Z-learning is evaluating the right hand side of equation (22) after encrypting. Since evaluating over a ciphertext is not straightforward, we approximate with Taylor series.

IV-D Results

We observed that encryption-induced noise over time approached some small numbers with minimal fluctuations. Moreover, the effects of encryption-induced noise were minimal regarding the convergence of values. This observation complements the analysis on VI in Section III as the TD-algorithms are sampling based value estimation algorithms.

Encryption and decryption are all done at the client’s side and so significant computation time is expected for the client. For this reason, the ideal application will require less frequent needs for uploading and downloading and more advanced synthesis procedure that operate on a large set of data.

V Conclusion and Future Work

We considered an architecture of confidential cloud-based RL over LHE. For the model-based RL, we showed that the impact of the encryption noise on the convergence performance can be analytically bounded. For the model-free RL, we numerically tested implementations of TD(0), SARSA(0), and Z-learning and numerically confirmed that the impacts of the encryption noise on these algorithms are also minimal. Although the applications considered do not necessarily require the cloud, we can develop the framework to adopt to more advanced synthesis algorithms in the future.

There are numerous directions to extend this paper. First, the effort to derive analytical performance guarantees for encrypted RL (including the model-free schemes considered in this paper) is necessary to prove the utility of the encrypted RL concept. Second, an encrypted RL scheme that does not require periodic decryption (similar to the case in [26]) is highly desired as the periodic decryption and communication between the cloud and the controller is costly. Finally, more extensive numerical experiments are needed to fully understand the potential of advanced RL (e.g., deep Q learning) over HE. The interplay between computational overhead, delay, accuracy and security levels must be studied from both theoretical and experimental perspectives.

VI Appendix

VI-A CKKS Encryption Scheme

CKKS encoding procedure maps the vector of complex numbers sized to the message in plain-text space . The plaintext is defined as the set , where denotes the polynomials of whose coefficients are integers modulo , and denotes the degree cyclotomic polynomials. This allows for CKKS encryption scheme to accept multiple complex-valued inputs of a size at once, which is convenient for many computing applications.

Then, encryption on the message yields the ciphertext

| (23) |

Each ciphertext is designated with a level , which indicates how many multiplications you can perform before decryption fails. This is a key limitation in comparison to FHE.

The encryption also creates a noise polynomial . A properly encrypted ciphertext has a bound on the message and also on the noise when the norm is defined on those polynomials. Thus, a CKKS ciphertext can be defined as a tuple . Then, decryption can be defined as follows.

| (24) |

where is the coefficient modulus at level .

For ciphertexts at the same level ,

| (25) | ||||

| (26) |

where .

References

- [1] E. Hossain, I. Khan, F. Un-Noor, S. S. Sikander, and M. S. H. Sunny, “Application of big data and machine learning in smart grid, and associated security concerns: A review,” IEEE Access, vol. 7, pp. 13 960–13 988, 2019.

- [2] M. Mohammadi, A. Al-Fuqaha, M. Guizani, and J. Oh, “Semisupervised deep reinforcement learning in support of IoT and smart city services,” IEEE Internet of Things Journal, vol. 5, no. 2, pp. 624–635, April 2018.

- [3] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, p. 529, 2015.

- [4] D. Silver, J. Schrittwieser, K. Simonyan, I. Antonoglou, A. Huang, A. Guez, T. Hubert, L. Baker, M. Lai, A. Bolton, Y. Chen, T. Lillicrap, F. Hui, L. Sifre, G. van den Driessche, T. Graepel, and D. Hassabis, “Mastering the game of go without human knowledge,” Nature, pp. 354–, Oct. 2017.

- [5] I. Arel, C. Liu, T. Urbanik, and A. G. Kohls, “Reinforcement learning-based multi-agent system for network traffic signal control,” IET Intelligent Transport Systems, vol. 4, no. 2, pp. 128–135, June 2010.

- [6] S. Levine, C. Finn, T. Darrell, and P. Abbeel, “End-to-end training of deep visuomotor policies,” J. Mach. Learn. Res., vol. 17, no. 1, p. 1334–1373, Jan. 2016.

- [7] K. Kogiso and T. Fujita, “Cyber-security enhancement of networked control systems using homomorphic encryption,” 2015 54th IEEE Conference on Decision and Control (CDC), pp. 6836–6843, 2015.

- [8] F. Farokhi, I. Shames, and N. Batterham, “Secure and private cloud-based control using semi-homomorphic encryption,” IFAC-PapersOnLine, vol. 49, no. 22, pp. 163 – 168, 2016.

- [9] J. Kim, C. Lee, H. Shim, J. H. Cheon, A. Kim, M. Kim, and Y. Song, “Encrypting controller using fully homomorphic encryption for security of cyber-physical systems,” IFAC-PapersOnLine, vol. 49, no. 22, pp. 175 – 180, 2016.

- [10] M. Schulze Darup, A. Redder, I. Shames, F. Farokhi, and D. Quevedo, “Towards encrypted MPC for linear constrained systems,” IEEE Control Systems Letters, vol. 2, no. 2, pp. 195–200, 2018.

- [11] A. B. Alexandru, M. Morari, and G. J. Pappas, “Cloud-based MPC with encrypted data,” in 2018 IEEE Conference on Decision and Control (CDC), 2018, pp. 5014–5019.

- [12] M. S. Darup, A. Redder, and D. E. Quevedo, “Encrypted cloud-based MPC for linear systems with input constraints,” IFAC-PapersOnLine, vol. 51, no. 20, pp. 535 – 542, 2018.

- [13] K. Teranishi, M. Kusaka, N. Shimada, J. Ueda, and K. Kogiso, “Secure observer-based motion control based on controller encryption,” in 2019 American Control Conference (ACC), 2019, pp. 2978–2983.

- [14] J. Kim, H. Shim, and K. Han, “Comprehensive introduction to fully homomorphic encryption for dynamic feedback controller via lwe-based cryptosystem,” CoRR, vol. abs/1904.08025, 2019.

- [15] E. Todorov, “Efficient computation of optimal actions,” Proceedings of the National Academy of Sciences, vol. 106, no. 28, pp. 11 478–11 483, 2009.

- [16] D. P. Bertsekas, Neuro-dynamic programmingNeuro-Dynamic Programming. Boston, MA: Springer US, 2009.

- [17] D. Silver, “Lecture 8: Integrating learning and planning.” [Online]. Available: https://www.davidsilver.uk/teaching/

- [18] R. S. Sutton and A. G. Barto, Reinforcement learning: an introduction. The MIT Press, 2018.

- [19] J. N. Tsitsiklis, “Asynchronous stochastic approximation and -learning,” in Proceedings of 32nd IEEE Conference on Decision and Control, Dec 1993, pp. 395–400 vol.1.

- [20] C. Gentry, “A fully homomorphic encryption scheme,” Ph.D. dissertation, Stanford University, 2009, crypto.stanford.edu/craig.

- [21] J. H. Cheon, A. Kim, M. Kim, and Y. Song, “Homomorphic encryption for arithmetic of approximate numbers,” Cryptology ePrint Archive, Report 2016/421, 2016, https://eprint.iacr.org/2016/421.

- [22] K. Teranishi and K. Kogiso, “Control-theoretic approach to malleability cancellation by attacked signal normalization,” 09 2019.

- [23] M. Albrecht, M. Chase, H. Chen, J. Ding, S. Goldwasser, S. Gorbunov, S. Halevi, J. Hoffstein, K. Laine, K. Lauter, S. Lokam, D. Micciancio, D. Moody, T. Morrison, A. Sahai, and V. Vaikuntanathan, “Homomorphic encryption security standard,” HomomorphicEncryption.org, Toronto, Canada, Tech. Rep., November 2018.

- [24] “Microsoft SEAL (release 3.4),” https://github.com/Microsoft/SEAL, Oct. 2019, microsoft Research, Redmond, WA.

- [25] S. Singh, T. Jaakkola, M. L. Littman, and C. Szepesvári, “Convergence results for single-step on-policy reinforcement-learning algorithms,” Machine learning, vol. 38, no. 3, pp. 287–308, 2000.

- [26] J. Kim, H. Shim, and K. Han, “Dynamic controller that operates over homomorphically encrypted data for infinite time horizon,” arXiv preprint arXiv:1912.07362, 2019.