EMV-LIO: An Efficient Multiple Vision aided LiDAR-Inertial Odometry

Abstract

To deal with the degeneration caused by the incomplete constraints of single sensor, multi-sensor fusion strategies especially in the LiDAR-vision-inertial fusion area have attracted much interest from both the industry and the research community in recent years. Considering that a monocular camera is vulnerable to the influence of ambient light from a certain direction and fails, which makes the system degrade into a LiDAR-inertial system, multiple cameras are introduced to expand the visual observation so as to improve the accuracy and robustness of the system. Besides, removing LiDAR’s noise via range image, setting condition for nearest neighbor search, and replacing kd-Tree with ikd-Tree are also introduced to enhance the efficiency. Based on the above, we propose an Efficient Multiple vision aided LiDAR-inertial odometry system (EMV-LIO), and evaluate its performance on both open datasets and our custom datasets. Experiments show that the algorithm is helpful to improve the accuracy, robustness, and efficiency of the whole system compared with LVI-SAM [4]. Our implementation is available at https://github.com/BingqiShen/EMV-LIO.

I Introduction

Recent years have seen great development in the field of application of robots, especially in the domain of navigating uncharted terrains devoid of GPS signals [1]. To localize the mobile robots in unknown environments, different sensors have been adopted while cameras and LiDARs are the most commonly used among them [2]. It is well known that LiDAR-based methods can capture the details of the environment from considerable distances, thus acquiring comprehensive geometric information of the surroundings. However, this method often encounters challenges in degenerated environments such as a long tunnel or an open field, since features extracted by LiDAR are almost the same everywhere, rendering it less effective in such scenarios[3] [24]. While vision-based methods are especially suitable for place recognition tasks and perform well in texture-rich environments, most of them are extremely sensitive to light changes, fast motion, and initialization.

To solve these problems caused by the incomplete constraints of single sensor, multi-sensor fusion strategies for LiDAR-visual-inertial odometry (LVIO) have attracted much attention from both the industry and the research community in recent years and achieved anti-degenerated state estimation in aforementioned environments since LiDAR-inertial odometry (LIO) contributes precise feature depth to avoid initialization failure of visual-inertial odometry (VIO) while VIO offers an initial pose estimation for LIO. Considering that the measurements of a monocular camera are easily limited by its field of vision (FoV), chances are that the monocular vision aided LiDAR-inertial Odometry system (MoV-LIO) will degrade into LIO or even fail due to the fact that the VIO subsystem provides the wrong initial value of pose for the LIO subsystem. Besides, current LIO systems that can achieve high accuracy are mostly optimization-based such as [22] [23]. However, few of them can achieve real-time performance on resource-constrained platforms due to the high computational demands of iterative solving of nonlinear systems. Therefore, it’s essential for us to deal with the issue that how to provide a more accurate and robust fusion method under the condition of limited computing resources.

In this paper, we propose an Efficient Multiple vision aided LiDAR-inertial odometry system (EMV-LIO) based on LVI-SAM [4], introducing multiple cameras in the VIO subsystem to expand the range of visual observation to guarantee the whole system can still maintain the relatively high accuracy in case of the failure of the monocular visual observation. Apart from this, an efficiency-enhanced LVIO system is also introduced to increase the system’s efficiency, including removing LiDAR’s noise via range image, setting condition for nearest neighbor search, and replacing kd-Tree with ikd-Tree. Experiments show that our proposed method is more robust than LVI-SAM and more accurate than LIO-SAM [10] facing challenging scenes without affecting efficiency. In summary, the main contributions of this paper are that:

1) Multiple cameras are introduced in the VIO subsystem for more robust and accurate results.

2) An efficiency-enhanced LVIO system is introduced by removing LiDAR’s noise via range image, setting condition for nearest neighbor search, and replacing kd-Tree with ikd-Tree to improve the efficiency of the whole system.

3) An Efficient Multiple vision aided LiDAR-inertial odometry system (EMV-LIO) is designed and experiments on both open datasets and our custom datasets are carried out. Experiments show that our system outperforms in robustness and accuracy while maintaining efficiency(see Fig. 1).

The rest of this paper is organized as follows: Section II discusses related work on LiDAR-visual-inertial SLAM, and Section III elaborates on our proposed algorithm. To testify to our analysis, we conduct relevant experiments and the results are presented in Section IV. Ultimately, the whole work is concluded in Section V.

II Related Work

II-A Loosely-coupled LiDAR-visual-inertial Odometry

The early research work on LiDAR-visual-inertial fusion was V-LOAM [5] proposed by the author of LOAM [6], Zhang et al, based on their previous research. The issue of robots encountering positioning failures during high-motion scenarios was examined, highlighting its resemblance to the degeneracy challenge stemming from sparse feature data. This observation offers valuable insight for addressing SLAM difficulties in environments characterized by degeneration. In 2019, Wang [7] combined VINS-mono [9] and LOAM in a novel approach. Here, VINS-mono functioned as a Visual-Inertial Odometry (VIO) subsystem to provide the initial pose value. Subsequently, the outcome is fed into LOAM to further optimize the pose by minimizing the residual of extracted feature points. In 2020, Khattack introduced CompSLAM, which is a loosely coupled filter combining LOAM, ROVIO [26], and kinematic-inertial odometry (TSIF [27]), thereby demonstrating a comprehensive fusion of these components. More recently, Wang [25] proposed an advanced SLAM framework that directly fuses visual and LIDAR data. This framework comprises three integral components: a frame-by-frame tracking module, an enhanced sliding window-based refinement module, and a parallel global and local search loop closure detection (PGLS-LCD) module. It ingeniously combines the bag-of-visual-words (BoW) technique with LIDAR iris features to achieve effective location recognition.

II-B Tightly-coupled LiDAR-visual-inertial Odometry

Given the fact that the above fusion methods are loosely-coupled, where the LiDAR measurements are not jointly optimized with the visual or inertial measurements. In order to enhance the anti-degeneration ability and robustness of the system, an increasing number of scholars have directed their efforts towards these tightly-coupled approaches. In 2018, Graeter launched LIMO [8], pioneering the concept of leveraging LiDAR point depth information to estimate the depth of visual feature points. This innovative approach effectively creates a system similar to RGB-D cameras for the first time. Apart from this, LIC-Fusion [28] presents a distinctive real-time spatial and temporal multi-sensor calibration, utilizing MSCKF [29] framework as its foundation. Nonetheless, it is worth mentioning that this work is not publicly available. Inspired by the aforementioned idea, Shan developed LVI-SAM [4], which combines LIO-SAM [10] with VINS-mono. Notably, in scenarios where the LIO subsystem or the VIO subsystem fails, the other system can still remain functional autonomously. This dual-system setup safeguards the overall system’s robustness. At the same time, Lin introduced R2LIVE[12] based on FAST-LIO2 [11] to fuse the measurements of LiDAR, IMU, and camera within the framework of ES-IKF (Error State Iterative Kalman Filter), which achieves better real-time performance compared with LVI-SAM. Additionally, R2LIVE adopts a comprehensive approach by incorporating all measurements into a factor graph. It optimizes the visual landmarks present within the local sliding window, thereby contributing to the refinement of visual features within the map, and consequently enhancing accuracy. Then in 2021, they launched R3LIVE [13] on the basis of the above work. This iteration refined the VIO algorithm and rendered the LiDAR point cloud. Similar two submodules, LIO and VIO are also used in FAST-LIVO [14] and achieve real-time performance at the level of state-of-the-art.

III Methodology

In this section, we describe in detail the proposed EMV-LIO framework. EMV-LIO mainly includes two parts: Multi-camera VIO subsystem and Efficiency-enhanced LVIO system, which will be introduced in the following subsections.

III-A Assumptions and Notations

We assume that the intrinsic parameters of each camera are known. The extrinsic parameters between the three kinds of sensors are calibrated, and they are time synchronized. The relationship of different frames is shown in Fig. 2.

Let us denote the pose of the robot under the World coordinate system at each timestamp as as follows:

| (1) |

where refer to the 3D rotations from base to odometry at timestamp k while refer to the 3D translations.

III-B Multi-camera VIO Subsystem

The Multi-camera VIO in our system develops from VINS-mono. All visual features from multiple cameras are detected using a corner detector [15] first and tracked by the Kanade–Lucas–Tomasi algorithm [16]. Each feature point is endowed with a unique ID which is managed by establishing a one-way mapping between the feature point set and the camera set. After that, either camera will be selected for initialization by performing Structure from Motion (SfM), including estimating the camera’s motion based on epipolar geometry constraint, estimating the depth of feature points by triangulation, and optimizing the camera’s pose and the position of feature points by solving the Perspective-n-Point (PnP) problem by Bundle Adjustment (BA). By setting the position of the first frame of the camera as the origin of the reference coordinate system of SfM, we can calculate the pose of the camera for initialization in the IMU coordinate system on the premise of knowing the camera-IMU external parameters ().

| (2) |

where is the unknown scaling parameter of SfM. () is the relative pose from to , and () is that from to . Considering that it is often inaccurate during initialization, the data association between visual features and LiDAR’s points will be conducted so that the depth information of the LiDAR’s point can be used as the initial value of its corresponding visual feature point for optimization.

Assuming that there are cameras in the system, we perform bundle adjustment in a sliding-window which adds the observation residuals of feature points from all cameras into the following cost function:

| (3) |

where and are residuals for IMU and visual measurements from the camera, respectively. is the prior information from marginalization. is the covariance matrix of IMU from timestamp to timestamp and is the covariance matrix of the visual feature in the frame. All variables above can be deduced from [9]. The Ceres solver [21] is used for solving this nonlinear problem.

III-C Efficiency-enhanced LVIO System

After obtaining the estimation from VIO as mentioned before, it is feasible for us to utilize it as the initial guess of scan-matching in LIO, which can be solved by minimizing the following cost function [4].

| (4) |

where and refer to the distance between a feature and its edge or planar patch correspondence at timestamp relatively. is the edge feature and is the planar feature while and are the set of edge features and planar features at timestamp . All of them can be referred from [10]. To accelerate the process of scan-matching, three tips are proposed as follows:

-

•

Removing LiDAR’s noise via range image: It’s well known that the raw data processing including feature extraction and distortion removal is typically the first step in the LiDAR-based SLAM system before further analysis is performed. Considering that the noise in the raw point cloud will decrease the accuracy of odometry while increasing the processing time for the back-end optimization, it is essential to remove the noise at the very beginning of the LIO. In this paper, a method referred from [17] is adopted. With knowing the vertical scanning angles of a LiDAR, we can project the raw point cloud onto a range image where each valid point is represented by a pixel and the value of it records the Euclidean distance from the point to the LiDAR’s origin. Supposing that the horizontal angular resolutions is , clustering is performed assuming that two neighboring points in the horizontal or vertical direction belong to the same object if the angle between their connected line and the laser beam is above a certain threshold . can be calculated by applying trigonometric equations.

(5) where and are the depths of two adjacent points belonging to the same ring which can be obtained from the range image. Then we discard small clusters since noise may be contained and it will offer unreliable constraints in optimization.

-

•

Setting condition for nearest neighbor search: Most of the LiDAR-based SLAM perform Nearest Neighbor Search (NNS) to find the nearest neighbor point in the previous frame or local map for each point in the current scan during each iteration, which is the most time-consuming step during the whole optimization process. Considering that when the optimization process is about to end, chances are that the result of the current NNS is the same as the result of the previous iteration since the optimization result tends to converge, which leads to unnecessary searches. In this paper, we propose to skip this round of search and adopt the result of the previous round of search once , where is the incremental iteration result of the round at timestamp . is the convergence threshold of iteration and is a ratio which can be set as 2.

-

•

Replacing kd-Tree with ikd-Tree: The data structure used for NNS is usually kd-Tree. However, it does not support incremental updates, which means that after building kd-Tree for the current frame point cloud, new points can be added to the frame point cloud only by building a new kd-Tree, which adds additional computing overhead. Therefore, we adopt incremental kd-Tree (ikd-Tree) proposed by Fast-LIO2 [11], which can incrementally insert and delete points and be automatically re-balanced by partial re-building which enables efficient NNS in later stages.

IV Experiments

For the purpose of ensuring the authenticity and objectivity of the experimental results, we conducted experiments on both open datasets and our custom datasets. Open datasets we choose for accuracy and efficiency test are - and - sequences of the Newer College Multi-camera Dataset (NCD-Multi) [18] which uses an AlphaSense multi-camera sensor with synchronized IMU and Ouster LiDAR. Besides, we name as the front camera, as the right camera, and as the left camera as shown in Fig. 3(a) for convenience. Our custom datasets for efficiency tests are collected by three cameras, a LiDAR and an IMU as shown in Fig. 3(b). The hardware and overview of the above datasets are listed in Table 1, from which we can conclude that each dataset contains fast motion scenes and there are also some challenging scenes like strong light or being occluded by a wall as shown in Fig. 4. Each experiment was conducted using a desktop PC with an Intel Core i7-6700 with 64 GB RAM.

| Dataset | LiDAR | Camera | IMU | Max acc/angv. |

| - | OS0-128 | Alphasense | BMI085 | 13.03/1.54 |

| - | OS0-128 | Alphasense | BMI085 | 12.64/2.34 |

| Custom | Pandar XT-32 | MER2-202 | MTI-300 | 20.21/0.58 |

IV-A Accuracy Test

| Method | RMSE | MEAN | MAX | MIN |

| LIO-SAM | 0.0975 | 0.0857 | 0.3829 | 0.0047 |

| LVI-SAM (front) | - | - | - | - |

| LVI-SAM (right) | - | - | - | - |

| LVI-SAM (left) | 0.0978 | 0.0854 | 0.4123 | 0.0075 |

| EMV-LIO (front + right) | 0.0982 | 0.0864 | 0.4002 | 0.0046 |

| EMV-LIO (front + left) | 0.0969 | 0.0861 | 0.4610 | 0.0073 |

| EMV-LIO (right + left) | 0.0974 | 0.0866 | 0.4857 | 0.0055 |

| EMV-LIO (front + right + left) | 0.0976 | 0.0868 | 0.4602 | 0.0081 |

| Method | RMSE | MEAN | MAX | MIN |

| LIO-SAM | 0.2140 | 0.1862 | 0.7270 | 0.0043 |

| LVI-SAM (front) | - | - | - | - |

| LVI-SAM (right) | - | - | - | - |

| LVI-SAM (left) | 0.3586 | 0.2325 | 1.5116 | 0.0168 |

| EMV-LIO (front + right) | 0.1416 | 0.1294 | 0.4448 | 0.0113 |

| EMV-LIO (front + left) | 0.1411 | 0.1287 | 0.4503 | 0.0083 |

| EMV-LIO (right + left) | 0.1426 | 0.1296 | 0.4175 | 0.0095 |

| EMV-LIO (front + right + left) | 0.1362 | 0.1239 | 0.4000 | 0.0107 |

-

represents failure of odometry.

Experiments on LIO-SAM, LVI-SAM using different cameras, and our proposed EMV-LIO using different combinations of cameras were conducted and Relative Pose Errors (RPE) described in [19] were adopted to evaluate the performances. The RPE information estimated by different methods is summarized in Table II. Since there are many challenging scenes in the two sequences like fast motion or being occluded by the wall, the failure of VIO is likely to occur in LVI-SAM depending on a monocular camera so that a wrong initial guess may be provided for LIO, leading to a worse result compared with LIO-SAM. Nevertheless, for EMV-LIO using multiple cameras proposed in this paper, when one of the above cameras fails due to inadequate trackable features caused by challenging scenes, the feature points tracked by the other cameras are relatively stable at this time, which can provide more accurate visual-inertial odometry result for the LiDAR-inertial odometry as a priori, thus improving the robustness of the overall system, which is a vivid annotation of Do not put all your eggs into one basket. In addition, the introduction of more visual re-projection error items during optimization can bring a certain degree of accuracy improvement.

IV-B Efficiency Test

| Method | LIO-Prehandle | LIO-Optimization | VIO | |||||||

| Number of point | Total time (ms) | CPU (%) | NNS (ms) | Extracting KF | Total time (ms) | CPU (%) | Total time (ms) | CPU(%) | ||

| Method A | 5817.8 | 29.6 | 60.5 | 22.3 | 45.8 | 80.1 | 135.2 | 61.3 | 238.4 | |

| Method B | 4742.7 | 41.6 | 66.1 | 19.8 | 32.4 | 54.2 | 117.2 | 61.3 | 238.4 | |

| Method C | 4742.7 | 41.6 | 66.1 | 17.8 | 32.3 | 52.2 | 117.3 | 61.3 | 238.4 | |

| Method D | 4742.7 | 41.6 | 66.1 | 15.1 | 0.0 | 17.9 | 83.7 | 61.3 | 238.4 | |

We divide the LIO subsystem into two main modules, which are the prehandle module and the optimization module. In order to evaluate the efficiency performance, we report the number of processing points in the prehandle module, the time usage of NNS, the time usage of extracting keyframes in the optimization module, and the total time usage and the CPU utilization percentage of the two modules in the LIO subsystem while the total time usage and the CPU utilization percentage of the VIO subsystem are also recorded. For the sake of convenience, different methods will be defined as follows:

Method A: The original LVI-SAM [4].

Method B: The method with the addition of removing LiDAR’s noise via range image based on Method A

Method C: The method with the addition of setting condition for nearest neighbor search based on Method B

Method D: The method with the addition of replacing based on Method C.

| Method | LIO-SAM | Method A | Method B | Method C | Method D |

| Ravg. rate | 0.0% | -7.6% | -4.3% | -3.2% | +2.1% |

| Method | LIO-SAM | Method A | Method B | Method C | Method D |

| Ravg. rate | 0.0% | -9.7% | -7.5% | -6.4% | -0.2% |

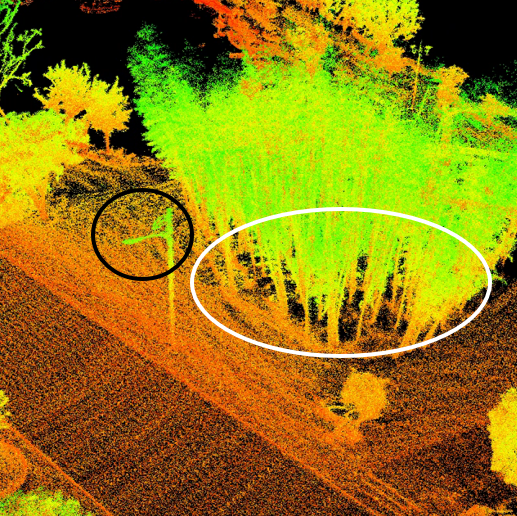

The efficiency information based on different methods is summarized in Table III. The result of Method A and Method B indicates that removing LiDAR’s noise via range image can reduce the number of processing points at the cost of increased time consumption in prehandle step, which can decrease the time consumption in optimization. As shown in Fig. 5 circled by white ovals, the point cloud of the bamboo grove after noise removal is obviously clearer than that of before. Nevertheless, it tends to underperform in reconstructing objects at high places circled by black ovals for instance, since their values in range image change greatly and will be treated as different kinds of objects during clustering and then filtered out. The introduction of setting condition for nearest neighbor search can reduce the search times to help improve the efficiency of the whole system, which can be concluded from the comparison between Method B and Method C. Besides, replacing kd-Tree with ikd-Tree can significantly decrease the CPU utilization percentage and the optimization time consumption. Since the emphasis is only put on the efficiency improvement of the LIO subsystem, the total time usage and the CPU utilization percentage of the VIO subsystem remain the same throughout the experiment.

The relative odometry frame average rate based on different methods is summarized in Table IV. We set the odometry frame average rate of LIO-SAM as the baseline and report the relative values compared with it. The result of LIO-SAM and Method A shows that the introduction of visual observation can reduce the odometry frame rate intuitively. However, our proposed method can help compensate for the reduction and even perform better than LIO-SAM which does not take visual observation into account.

V Conclusions

Given the fact that the monocular vision aided LiDAR-inertial odometry system (MoV-LIO) is easy to degrade into LiDAR-inertial odometry system (LIO) when facing strong light or a texture-less white wall, where degeneration is still prone to happen, we propose an Efficient Multiple vision aided LiDAR-inertial odometry system (EMV-LIO) based on LVI-SAM [4], which introduces visual measurements from multiple cameras to assist odometry. Besides, an efficiency-enhanced LVIO system including removing LiDAR’s noise via range image, setting condition for nearest neighbor search, and replacing kd-Tree with ikd-Tree are also presented in this paper. To testify to our proposed method, experiments on accuracy and efficiency were conducted, and the final results show that our proposed method can help improve the accuracy and efficiency of the whole system.

There are several directions for future work. Since we still initialize the VIO subsystem via a monocular camera, chances are initialization via multiple cameras will be taken into account. Besides, Table III shows that the bottleneck of efficiency improvement of our proposed system now is the VIO subsystem, on which will be put more emphasis. Apart from this, some methods such as changing the way of point cloud downsampling or replacing kd-Tree with IVox [20] can be applied to further improve the efficiency of the whole system.

References

- [1] H. Durrant-Whyte and T. Bailey, ”Simultaneous localization and mapping: Part I,” in IEEE Robotics Automation Magazine, vol. 13, no. 2, pp. 99-110, June 2006, doi: 10.1109/MRA.2006.1638022.

- [2] R. Voges and B. Wagner, ”Interval-Based Visual-LiDAR Sensor Fusion,” in IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 1304-1311, April 2021, doi: 10.1109/LRA.2021.3057572.

- [3] Shao W, Vijayarangan S, Li C, et al. Stereo visual inertial lidar simultaneous localization and mapping[C]//2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). pp. 370-377, 2019.

- [4] T. Shan, B. Englot, C. Ratti and D. Rus, ”LVI-SAM: Tightly-coupled Lidar-Visual-Inertial Odometry via Smoothing and Mapping,” 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 2021, pp. 5692-5698, doi: 10.1109/ICRA48506.2021.9561996.

- [5] J Zhang, S Singh. Laser–visual–inertial odometry and mapping with high robustness and low drift[J]. Journal of field robotics, vol. 35, no. 8, pp. 1242-1264, 2018.

- [6] J Zhang, S Singh. LOAM: Lidar odometry and mapping in real-time[C]//Robotics: Science and Systems. 2014, 2(9): 1-9.

- [7] Z. Wang, J. Zhang, S. Chen, C. Yuan, J. Zhang and J. Zhang, ”Robust High Accuracy Visual-Inertial-Laser SLAM System,” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 2019, pp. 6636-6641, doi: 10.1109/IROS40897.2019.8967702.

- [8] J. Graeter, A. Wilczynski and M. Lauer, ”LIMO: Lidar-Monocular Visual Odometry,” 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 2018, pp. 7872-7879, doi: 10.1109/IROS.2018.8594394.

- [9] T. Qin, P. Li and S. Shen, ”VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator,” in IEEE Transactions on Robotics, vol. 34, no. 4, pp. 1004-1020, Aug. 2018, doi: 10.1109/TRO.2018.2853729.

- [10] T. Shan, B. Englot, D. Meyers, W. Wang, C. Ratti and D. Rus, ”LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping,” 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 2020, pp. 5135-5142, doi: 10.1109/IROS45743.2020.9341176.

- [11] W. Xu, Y. Cai, D. He, J. Lin and F. Zhang, ”FAST-LIO2: Fast Direct LiDAR-Inertial Odometry,” in IEEE Transactions on Robotics, vol. 38, no. 4, pp. 2053-2073, Aug. 2022, doi: 10.1109/TRO.2022.3141876.

- [12] J. Lin, C. Zheng, W. Xu and F. Zhang, ”R 2 LIVE: A Robust, Real-Time, LiDAR-Inertial-Visual Tightly-Coupled State Estimator and Mapping,” in IEEE Robotics and Automation Letters, vol. 6, no. 4, pp. 7469-7476, Oct. 2021, doi: 10.1109/LRA.2021.3095515.

- [13] J. Lin and F. Zhang, ”R3LIVE: A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package,” 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 2022, pp. 10672-10678, doi: 10.1109/ICRA46639.2022.9811935.

- [14] C. Zheng, Q. Zhu, W. Xu, X. Liu, Q. Guo and F. Zhang, ”FAST-LIVO: Fast and Tightly-coupled Sparse-Direct LiDAR-Inertial-Visual Odometry,” 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 2022, pp. 4003-4009, doi: 10.1109/IROS47612.2022.9981107.

- [15] Jianbo Shi and Tomasi, ”Good features to track,” 1994 Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 1994, pp. 593-600, doi: 10.1109/CVPR.1994.323794.

- [16] Lucas B D, Kanade T. An iterative image registration technique with an application to stereo vision[C]//IJCAI’81: 7th international joint conference on Artificial intelligence. 1981, 2: 674-679.

- [17] I. Bogoslavskyi and C. Stachniss, ”Fast range image-based segmentation of sparse 3D laser scans for online operation,” 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea (South), 2016, pp. 163-169, doi: 10.1109/IROS.2016.7759050.

- [18] L Zhang, M Camurri, M Fallon. Multi-camera LiDAR inertial extension to the newer college dataset[J]. arXiv preprint arXiv:2112.08854, 2021.

- [19] J. Sturm, N. Engelhard, F. Endres, W. Burgard and D. Cremers, ”A benchmark for the evaluation of RGB-D SLAM systems,” 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 2012, pp. 573-580, doi: 10.1109/IROS.2012.6385773.

- [20] C. Bai, T. Xiao, Y. Chen, H. Wang, F. Zhang and X. Gao, ”Faster-LIO: Lightweight Tightly Coupled Lidar-Inertial Odometry Using Parallel Sparse Incremental Voxels,” in IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 4861-4868, April 2022, doi: 10.1109/LRA.2022.3152830.

- [21] S.Agarwal et al. “Ceres solver.” [Online] Available: http://ceres-solver.org

- [22] P. Geneva, K. Eckenhoff, Y. Yang and G. Huang, ”LIPS: LiDAR-Inertial 3D Plane SLAM,” 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 2018, pp. 123-130, doi: 10.1109/IROS.2018.8594463.

- [23] H. Ye, Y. Chen and M. Liu, ”Tightly Coupled 3D Lidar Inertial Odometry and Mapping,” 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 2019, pp. 3144-3150, doi: 10.1109/ICRA.2019.8793511.

- [24] F Han, H Zheng, W Huang, et al. DAMS-LIO: A Degeneration-Aware and Modular Sensor-Fusion LiDAR-inertial Odometry[J]. arXiv preprint arXiv:2302.01703, 2023.

- [25] W Wang, J Liu, C Wang, et al. DV-LOAM: Direct visual lidar odometry and mapping[J]. Remote Sensing, vol. 13, no. 16, pp. 3340, 2021.

- [26] S Leutenegger, S Lynen, M Bosse, et al. Keyframe-based visual–inertial odometry using nonlinear optimization[J]. The International Journal of Robotics Research, 2015, 34(3): 314-334.

- [27] R Hartley, M Ghaffari, R M Eustice, et al. Contact-aided invariant extended Kalman filtering for robot state estimation[J]. The International Journal of Robotics Research, vol. 39, no. 4, pp. 402-430, 2020.

- [28] X. Zuo, P. Geneva, W. Lee, Y. Liu and G. Huang, ”LIC-Fusion: LiDAR-Inertial-Camera Odometry,” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 2019, pp. 5848-5854, doi: 10.1109/IROS40897.2019.8967746.

- [29] A. I. Mourikis and S. I. Roumeliotis, ”A Multi-State Constraint Kalman Filter for Vision-aided Inertial Navigation,” Proceedings 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 2007, pp. 3565-3572, doi: 10.1109/ROBOT.2007.364024.