Ekya: Continuous Learning of Video Analytics Models on Edge Compute Servers

Abstract

Video analytics applications use edge compute servers for the analytics of the videos (for bandwidth and privacy). Compressed models that are deployed on the edge servers for inference suffer from data drift where the live video data diverges from the training data. Continuous learning handles data drift by periodically retraining the models on new data. Our work addresses the challenge of jointly supporting inference and retraining tasks on edge servers, which requires navigating the fundamental tradeoff between the retrained model’s accuracy and the inference accuracy. Our solution Ekya balances this tradeoff across multiple models and uses a micro-profiler to identify the models that will benefit the most by retraining. Ekya’s accuracy gain compared to a baseline scheduler is higher, and the baseline requires more GPU resources to achieve the same accuracy as Ekya.

1. Introduction

Video analytics applications, such as for urban mobility and smart cars (bellevue-report, ), are being powered by deep neural network (DNN) models for object detection and classification, e.g., Yolo (yolo9000-1, ), ResNet (deepresidual-2, ) and EfficientNet (efficientnet-3, ). Video analytics deployments stream the videos to edge servers (azure-ase, ; aws-outposts, ) placed on-premise (ieee-computer, ; edgevideo-1, ; edgevideo-2, ; getmobile, ). Edge computation is preferred for video analytics as it does not require expensive network links to stream videos to the cloud (getmobile, ), while also ensuring privacy of the videos (e.g., many European cities mandate against streaming their videos to the cloud (sweden-data, ; azure-data, )).

Edge compute is provisioned with limited resources (e.g., with weak GPUs (aws-outposts, ; azure-ase, )). This limitation is worsened by the mismatch between the growth rate of the compute demands of models and the compute cycles of processors (ion-blog, ; openai-blog, ). As a result, edge deployments rely on model compression (compression-4, ; compression-5, ; compression-6, ). The compressed DNNs are initially trained on representative data from each video stream, but while in the field, they are affected by data drift, i.e., the live video data diverges significantly from the data that was used for training (datadrift-7, ; datadrift-8, ; datadrift-a, ; datadrift-b, ). Cameras in streets and smart cars encounter varying scenes over time, e.g., lighting, crowd densities, and changing object mixes. It is difficult to exhaustively cover all these variations in the training, especially since even subtle variations affect the accuracy. As a result, there is a sizable drop in the accuracy of edge DNNs due to data drift (by 22%; §2.3). In fact, the fewer weights and shallower architectures of compressed DNNs often make them unsuited to provide high accuracy when trained with large variations in the data.

Continuous model retraining. A promising approach to address data drift is continuous learning. The edge DNNs are incrementally retrained on the new video samples even as some knowledge from before is retained (compressiondrift-11, ; continuous-12, ). Continuous learning techniques retrain the DNNs periodically (distribution-20, ; mullapudi2019, ); we refer to the period between two retrainings as the “retraining window” and we use a sample of the data that is accumulated during each window for retraining. Such ongoing learning (incremental-13, ; icarl-14, ; incremental-15, ) helps the compressed edge models maintain high accuracy even with changing data characteristics.

Edge servers use their GPUs (azure-ase, ) for DNN inference on many live video streams (e.g., traffic cameras in a city). Adding continuous training to edge servers presents a tradeoff between the live inference accuracy and drop in accuracy due to data drift. Allocating more resources to the retraining job allows it to finish faster and provide a more accurate model sooner. At the same time, during the retraining, taking away resources from the inference job lowers its accuracy (because it may have to sample the frames of the video to be analyzed).

Central to the resource demand and accuracy of the jobs are their configurations. For retraining jobs, configurations refer to the hyperparameters, e.g., number of training epochs, that substantially impact the resource demand and accuracies (§3.1). The improvement in accuracy due to retraining also depends on how much the characteristics of the live videos have changed. For inference jobs, configurations like frame sampling and resolution impact the accuracy and resources needed to keep up with analyzing the live video (chameleon, ; noscope, ).

Problem statement. We make the following decisions for continuous retraining. () in each retraining window, decide which of the edge models to retrain; () allocate the edge server’s GPU resources among the retraining and inference jobs, and () select the configurations of the retraining and inference jobs. We also constraint our decisions such that the inference accuracy at any point in time does not drop below a minimum value (so that the outputs continue to remain useful to the application). Our objective in making the above three decisions is to maximize the inference accuracy averaged over the retraining window (aggregating the accuracies during and after the retrainings) across all the videos analyzed on the edge server. Maximizing the inference accuracy over the retraining window creates new challenges as it is different from video inference systems that optimize only the instantaneous accuracy (videostorm, ; noscope, ; chameleon, ), model training systems that optimize only the eventual accuracy (hyperparameter-16, ; DBLP:journals/jmlr/BergstraB12, ; DBLP:conf/nips/SnoekLA12, ; Swersky_scalablebayesian, ; DBLP:conf/osdi/XiaoBRSKHPPZZYZ18, ; DBLP:conf/eurosys/PengBCWG18, ).

Addressing the fundamental tradeoff between the retrained model’s accuracy and the inference accuracy is computationally complex. First, the decision space is multi-dimensional consisting of a diverse set of retraining and inference configurations, and choices of resource allocations over time. Second, it is difficult to know the performance of different configurations (in resource usage and accuracy) as it requires actually retraining using different configurations. Data drift exacerbates these challenges because a decision that works well in a retraining window may not do so in the future.

Solution components. Our solution Ekya has two main components: a resource scheduler and a performance estimator.

In each retraining window, the resource scheduler makes the three decisions listed above in our problem statement. In its decisions, Ekya’s scheduler prioritizes retraining the models of those video streams whose characteristics have changed the most because these models have been most affected by data drift. The scheduler decides against retraining the models which do not improve our target metric. To prune the large decision space, the scheduler uses the following techniques. First, it simplifies the spatial complexity by considering GPU allocations only in coarse fractions (e.g., 10%) that are accurate enough for the scheduling decisions, while also being mindful of the granularity achievable in modern GPUs (nvidia-mps, ). Second, it does not change allocations to jobs during the retraining, thus largely sidestepping the temporal complexity. Finally, our micro-profiler (described below) prunes the list of configurations to only the promising options.

To make efficient choices of configurations, the resource scheduler relies on estimates of accuracy after the retraining and the resource demands. We have designed a micro-profiler that observes the accuracy of the retraining configurations on a small subset of the training data in the retraining window with just a few epochs. It uses these observations to extrapolate the accuracies when retrained on a larger dataset for many more epochs. Further, we restrict the micro-profiling to only a small set of promising retraining configurations. Together, these techniques result in Ekya’s micro-profiler being nearly more efficient than exhaustive profiling while still estimating accuracies with an error of . To estimate the resource demands, the micro-profiler measures the retraining duration per epoch when of the GPU is allocated, and scales out the training time for different allocations, number of epochs, and training data sizes.

Implementation and Evaluation. We have implemented and evaluated Ekya using a system deployment and trace-driven simulation. We used video workloads from dashboard cameras of smart cars (Waymo (waymo, ) and Cityscapes (cityscapes, )) as well as from statically mounted traffic and building cameras over 24 hour durations. Ekya’s accuracy compared to competing baselines is . higher. As a measure of Ekya’s efficiency, attaining the same accuracy as Ekya will require more GPU resources on the edge server with the baseline.

Contributions: Our work makes the following contributions.

1) We introduce the metric of inference accuracy averaged over the retraining window for continuous training systems.

2) We design an efficient micro-profiler to estimate the benefits and costs of retraining edge DNN models.

3) We design a scalable resource scheduler for joint retraining and inference on edge servers.

This work does not raise any ethical issues.

2. Continuous training of models on edge compute

2.1. Edge Computing for Video Analytics

Video analytics deployments commonly analyze videos on edge servers placed on-premise (e.g., AWS Outposts (aws-outposts, ) or Azure Stack Edge (azure-ase, )). Due to cost and energy constraints, compute efficiency is one of the key design goals of edge computing. A typical edge server supports tens of video streams (videoedge, ), e.g., on the cameras in a building, with customized analytics and models for each stream (rocket-github, ) (see Figure 1).

Video analytics applications adopt edge computing for reasons of limited network bandwidth to the cloud, unreliability of the network, and privacy of the video content (getmobile, ; edgevideo-1, ; ieee-computer, ).

Edge deployments are often in locations where the uplink network to the cloud is expensive for shipping continuous video streams, e.g., in oil rigs with expensive satellite network or smart cars with data-limited cellular network. 111The uplinks of LTE cellular or satellite links is Mb/s (39-getmobile, ; 57-getmobile, ), which can only support a couple of p 30 fps HD video streams whereas a typical deployment has many more cameras (getmobile, ).

Network links out of the edge locations experience outages (20-getmobile, ; getmobile, ). Edge compute provides robustness against disconnection to the cloud (chick-fill, ) and prevents disruptions (37-getmobile, ).

Videos often contain sensitive and private data that users do not want sent to the cloud (e.g., many EU cities legally mandate that traffic videos be processed on-premise (sweden-data, ; azure-data, )).

Thus, due to reasons of network cost and video privacy, it is preferred to run both inference and retraining on the edge compute device itself without relying on the cloud. In fact, with bandwidths typical in edge deployments, cloud-based solutions are slower and result in lower accuracies (§6.5).

2.2. Compressed DNN Models and Data drift

Advances in computer vision research have led to high-accuracy DNN models that achieve high accuracy with a large number of weights, deep architectures, and copious training data. While highly accurate, using these heavy and general DNNs for video analytics is both expensive and slow (noscope, ; DBLP:conf/osdi/HsiehABVBPGM18, ), which make them unfit for resource-constrained edge computing. The most common approach to addressing the resource constraints on the edge is to train and deploy specialized and compressed DNNs (compression-4, ; compression-5, ; compression-6, ; compression-17, ; compression-18, ; compression-19, ), which consist of far fewer weights and shallower architectures. These compressed DNNs are trained to only recognize the limited objects and scenes specific to each video stream. In other words, to maintain high accuracy, they forego generality for improved compute efficiency (noscope, ; DBLP:conf/osdi/HsiehABVBPGM18, ; mullapudi2019, ).

Data drift: As specialized edge DNNs have fewer weights and shallower architectures than general DNNs, they can only memorize limited amount of object appearances, object classes, and scenes. As a result, specialized edge DNNs are particularly vulnerable to data drift (datadrift-7, ; datadrift-8, ; datadrift-a, ; datadrift-b, ), where live video data diverges significantly from the initial training data. For example, variations in the angles of objects, scene density (e.g. rush hours), and lighting (e.g., sunny vs. rainy days) over time make it difficult for traffic cameras to accurately identify the objects of interest (cars, bicycles, road signs). Cameras in modern cars observe vastly varying scenes (e.g., building colors, crowd sizes) as they move through different neighborhoods and cities. Further, the distribution of the objects change over time, which in turn, reduces the edge model’s accuracy (distribution-20, ; distribution-21, ). Owing to their ability to memorize limited amount of object variations, to maintain high accuracy, edge DNNs have to be continuously updated with the recent data and to the changing object distributions.

Continuous training: The preferred approach, that has gained significant attention, is for edge DNNs to continuously learn as they incrementally observe new samples over time (incremental-13, ; icarl-14, ; incremental-15, ). The high temporal locality of videos allows the edge DNNs to focus their learning on the most recent object appearances and object classes (DBLP:conf/cvpr/ShenHPK17, ; mullapudi2019, ). In Ekya, we use a modified version of iCaRL (icarl-14, ) though our techniques are generally applicable. Our learning algorithm on-boards new classes, as well as adapts to the changing characteristics of the existing classes. Since manual labeling is not feasible for continuous training systems on the edge, we obtain the labels using a “golden model” that is highly accurate but is far more expensive because it uses a deeper architecture with large number of weights. The golden model cannot keep up with inference on the live videos and we use it to label only a small fraction of the videos in the retraining window that we use for retraining. Our approach is essentially that of supervising a low-cost “student” model with a high-cost “teacher” model (or knowledge distillation (44873, )), and this has been broadly applied in computer vision literature (incremental-13, ; mullapudi2019, ; incremental-15, ; distribution-20, ).

2.3. Accuracy benefits of continuous learning

To show the benefits of continuous learning, we use the video stream from one example city in the Cityscapes dataset (cityscapes, ) that consists of videos from dashboard cameras in many cities. In our evaluation in §6, we use both moving dashboard cameras as well as static cameras over long time periods. We divide the video data in our example city into ten fixed retraining windows (200s in this example). Figure 2(a) shows how the distribution of object classes changes among the different windows. The initial five windows see a fair amount of persons and bicycles, but bicycles rarely show up in windows 6 and 7, while the share of persons varies considerably across windows . Even persons have different appearances (e.g., clothing and angles) over time (Figures 2(c) and 2(d)).

Figure 2(b) plots inference accuracy of an edge DNN (a compressed ResNet18 classifier) in the last five windows using different training options. Training a compressed ResNet18 with video data on all other cities of the Cityscapes dataset does not result in good performance. Unsurprisingly, we observe that training the edge DNN once using data from the first five windows of this example city improves the accuracy. Continuous retraining using the most recent data for training achieves the highest accuracy consistently. Its accuracy is higher than the other options by up to .

Interestingly, using the data from the first five windows to train the larger ResNet101 DNN (not graphed) achieves better accuracy that nearly matches the continuously retrained ResNet18. The substantially better accuracy of ResNet101 compared to ResNet18 when trained on the same data of the first five windows also shows that this training data was indeed fairly representative. But the lightweight ResNet18’s weights and architecture limits its ability to learn and is a key contributor to its lower accuracy. Nonetheless, ResNet101 is slower than the compressed ResNet18 (cnn-perf, ). This makes the efficient ResNet18 more suited for edge deployments and continuous learning enables it to maintain high accuracy even with data drift. Hence, the need for continuous training of edge DNNs is ongoing and not just during a “ramp-up” phase.

3. Scheduling retraining and inference jointly

We propose joint retraining and inference on edge servers. The joint approach utilizes resources better than statically provisioning compute for retraining on edge servers. Since retraining is periodic (distribution-20, ; mullapudi2019, ) and its compute demands are far higher than inference, static provisioning causes idling and wastage. Compared to uploading videos to the cloud for retraining, our approach has clear advantages in privacy (§2.1) as well as network costs and accuracy (quantified in §6.5).

3.1. Configuration diversity of retraining and inference

Tradeoffs in retraining configurations. The hyperparameters for retraining, or “retraining configurations”, influence the resource demands and accuracy. Retraining fewer layers of the DNN (or, “freezing” more layers) consumes lesser GPU resources, as does training on fewer data samples, but they also produce a model with lower accuracy; Figure 3(a).

Figure 3(b) illustrates the resource-accuracy trade-offs for an edge DNN (ResNet18) with various hyperparameters: number of training epochs, batch sizes, number of neurons in the last layer, number of frozen layers, and fraction of training data. We make two observations. First, there is a wide spread in the resource usage (measured in GPU seconds), by upto a factor of . Second, higher resource usage does not always yield higher accuracy. For the two configurations circled in Figure 3(b), their GPU demands vary by even though their accuracies are the same (). Thus, careful selection of the configurations considerably impacts the resource efficiency. However, with the characteristics of the videos changing over time, it is challenging to efficiently obtain the resource-accuracy profiles for retraining configurations.

Tradeoffs in inference configurations. Inference pipelines also allow for flexibility in their resource demands at the cost of accuracy through configurations to downsize and sample frames (rocket-github, ). Prior work has made dramatic advancements in developing profilers to efficiently obtain the resource-accuracy relationship for inference configurations (chameleon, ). We use these efficient inference profilers in our joint solution for retraining and inference, and also to ensure that the inference pipelines continue to keep up with analyzing the live video streams with their currently allocated resources.

3.2. Illustrative scheduling example

We use an example with GPUs and two video streams, A and B, to show the considerations in scheduling inference and retraining tasks jointly. Each retraining uses data samples accumulated since the beginning of the last retraining (referred to as the “retraining window”).222Continuous learning targets retraining windows of durations of tens of seconds to few minutes (distribution-20, ; mullapudi2019, ). We use 120 seconds in this example. Our solution is orthogonal to the duration of the retraining window and works with any given duration in its decisions. To simplify the example, we assume the scheduler has knowledge of the resource-accuracy profiles, but these are expensive to get in practice (we describe our efficient solution for profiling in §4.3). Table 1 shows the retraining configurations (Cfg1A, Cfg2A, Cgf1B, and Cgf2B), their respective accuracies after the retraining, and GPU cost. The scheduler is responsible for selecting configurations and allocating resources for inference and retraining jobs.

| Configuration | Retraining Window 1 | Retraining Window 2 | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

|

||||||||

| Video A Cfg1A | 75 | 85 | 95 | 90 | |||||||

| Video A Cfg2A (*) | 70 | 65 | 90 | 40 | |||||||

| Video B Cfg1B | 90 | 80 | 98 | 80 | |||||||

| Video B Cfg2B (*) | 85 | 50 | 90 | 70 | |||||||

Uniform scheduling: Building upon prior work in cluster schedulers (fair-1, ; fair-2, ) and video analytics systems (videostorm, ), a baseline solution for resource allocation evenly splits the GPUs between video streams, and each stream evenly partitions its allocated GPUs for retraining and inference tasks; see Figure 4(a). Just like model training systems (vizier, ; hyperband, ; pbt, ), the baseline always picks the configuration for retraining that results in the highest accuracy (Cfg1A, Cfg1B for both windows).

Figure 4(c) shows the result of the uniform scheduler on the inference accuracies of the two live streams. We see that when the retraining tasks take resources away from the inference tasks, the inference accuracy drops significantly (from for video A and for video B in Window 1). While the inference accuracy increases significantly after retraining, it leaves too little time in the window to reap the benefit of retraining. Averaged across both retraining windows, the inference accuracy across the two video streams is only because the gains due to the improved accuracy of the retrained model are undercut by the time taken for retraining (during which inference accuracy suffered).

Accuracy-optimized scheduling: Figures 4(b) and 4(d) illustrate an accuracy-optimized scheduler, which by taking a holistic view on the multi-dimensional tradeoffs, provides an an average inference accuracy of . In fact, to match the accuracies, the above uniform scheduler would require nearly twice the GPUs (i.e., GPUs instead of GPUs). 333The techniques in our scheduler apply to other optimization metrics too, like max-min of accuracy. Evaluating other metrics is left to future work.

This scheduler makes three key improvements. First, the scheduler selects the hyperparameter configurations based on their accuracy improvements relative to their GPU cost. It selects lower accuracy options (Cfg2A/Cfg2B) instead of the higher accuracy ones (Cfg1A/Cfg1B) because these configurations are substantially cheaper (Table 1). Second, the scheduler prioritizes retraining tasks that yield higher accuracy improvement, so there is more time to reap the higher benefit from retraining. For example, the scheduler prioritizes video B’s retraining in Window 1 as its inference accuracy after retraining increases by (compared to for video A). Third, the scheduler controls the accuracy drops during retraining by balancing between the retraining time and the resources taken away from the inference tasks.

4. Ekya: Solution Description

Continuous training on limited edge resources requires smartly deciding when to retrain each video stream’s model, how much resources to allocate, and what configurations to use. But making these decisions has two challenges.

First, the decision space of multi-dimensional configurations and resource allocations (§3.1) is complex. In fact, our problem is computationally more complex than two fundamentally challenging problems of multi-dimensional knapsack and multi-armed bandit (§4.1). Hence, we design a thief resource scheduler (§4.2), a heuristic that makes joint retraining-inference scheduling to be tractable in practice.

Second, the decision making requires knowing the model’s exact performance (in resource usage and inference accuracy), but that is difficult to obtain as it requires actually retraining using all the configurations. We address this challenge by designing a micro-profiling based estimator (§4.3), which profiles only a few promising configurations on a tiny fraction of the training data with early termination.

Our techniques (in §4.2 and §4.3) drastically reduce the cost of decision making and performance estimation. Figure 5 presents an overview of Ekya’s components.

4.1. Formulation of joint inference and retraining

The problem of joint inference and retraining aims to maximize overall inference accuracy for all videos streams within a given retraining window . (The duration of each retraining window is .) All inference and retraining must be done in GPUs. Thus, the total compute capability is GPU-time. Without loss of generality, let be the smallest granularity of GPU allocation. Table 2 lists the notations. As explained in §3.1, each video has a set of retraining configurations and a set of inference configurations .

Decisions. For each video in a window , we decide: (1) the retraining configuration ( means no retraining); (2) the inference configuration ; and (3) how many GPUs (in multiples of ) to allocate for retraining () and inference (). We use binary variables to denote these decisions (see Table 2 for the definition). These decisions require GPU-time and yields overall accuracy of . As we saw in §3.2, is averaged across the window , and the inference accuracy at each point in time is determined by the above decisions.

| Notation | Description |

|---|---|

| Set of video streams | |

| A video stream () | |

| A retraining window with duration | |

| Set of all retraining configurations | |

| A retraining configuration () | |

| Set of all inference configurations | |

| An inference configuration () | |

| Total number of GPUs | |

| The unit for GPU resource allocation | |

| Inference accuracy for video for | |

| given configurations and allocations | |

| Compute cost in GPU-time for video for | |

| given configurations and allocations | |

| A set of binary variables (). | |

| iff we use retraining config , | |

| inference config , GPUs for retraining, | |

| GPUs for inference for video |

Optimization Problem. The optimization problem maximizes inference accuracy averaged across all videos in a retraining window within the GPU resource limit.

| (1) | ||||

| subject to | ||||

The first constraint ensures that the GPU allocation does not exceed the available GPU-time available in the retraining window. The second constraint ensures that at any point in time the GPU allocation (in multiples of ) does not exceed the total number of available GPUs. The third constraint ensures that at most one retraining configuration and one inference configuration are picked for each video .

Complexity Analysis. Assuming all the values are known, the above optimization problem can be reduced to a multi-dimensional binary knapsack problem, a NP-hard problem (DBLP:journals/mor/MagazineC84, ). Specifically, the optimization problem is to pick binary options () to maximize overall accuracy while satisfying two capacity constraints (the first and second constraints in Eq 1). In practice, however, getting all the is infeasible because this requires training the edge DNN using all retraining configurations and running inference using all the retrained DNNs with all possible GPU allocations and inference configurations.

The uncertainty of resembles the multi-armed bandits (MAB) problem (robbins1952some, ) to maximize the expected rewards given a limited number of trials for a set of options. Our optimization problem is more challenging than MAB for two reasons. First, unlike the MAB problem, the cost of trials () varies significantly, and the optimal solution may need to choose cheaper yet less rewarding options to maximize the overall accuracy. Second, getting the reward after each trial requires ”ground truth” labels that are obtained using the large golden model, which can only be used judiciously on resource-scarce edges (§2.2).

In summary, our optimization problem is computationally more complex than two fundamentally challenging problems (multi-dimensional knapsack and multi-armed bandits).

4.2. Thief Scheduler

Our scheduling heuristic makes the scheduling problem tractable by decoupling resource allocation (i.e., and ) and configuration selection (i.e., and ) (Algorithm 1). We refer to Ekya’s scheduler as the “thief” scheduler and it iterates among all inference and retraining jobs as follows.

(1) It starts with a fair allocation for all video streams (line 2 in Algorithm 1). In each step, it iterates over all the inference and retraining jobs of each video stream (lines 5-6), and steals a tiny quantum of resources (in multiples of ; see Table 2) from each of the other jobs (lines 10-11).

(2) With the new resource allocations (temp_alloc[]), it then selects configurations for the jobs using the PickConfigs method (line 14 and Algorithm 2) that iterates over all the configurations for inference and retraining for each video stream. For inference jobs, among all the configurations whose accuracy is , PickConfigs picks the configuration with the highest accuracy that can keep up with the inference of the live video stream within the current allocation (line 3-4 in Algorithm 2).

For retraining jobs, PickConfigs picks the configuration that maximizes the accuracy over the retraining window for each video (lines 6-12 in Algorithm 2). EstimateAccuracy in Algorithm 2 (line 7) aggregates the instantaneous accuracies over the retraining window for a given pair of inference configuration (chosen above) and retraining configuration. Ekya’s micro-profiler (§4.3) provides the estimate of the accuracy and the time to retrain for a retraining configuration when of GPU is allocated, and EstimateAccuracy proportionately scales the GPU-time for the current allocation (in temp_alloc[]) and training data size. In doing so, it avoids configurations whose retraining durations exceed with the current allocation (first constraint in Eq. 1).

(3) After reassigning the configurations, Ekya uses the estimated average inference accuracy (accuracy_avg) over the retraining window (line 14 in Algorithm 1) and keeps the new allocations only if it improves up on the accuracy from prior to stealing the resources (line 15 in Algorithm 1).

The thief scheduler repeats the process till the accuracy stops increasing (lines 15-20 in Algorithm 1) and until all the jobs have played the “thief”. Algorithm 1 is invoked at the beginning of each retraining window, as well as on the completion of every training job during the window to reallocate resources to the other training and inference jobs.

Design rationale: We call out the key aspects that makes the scheduler’s decision efficient by pruning the search space.

-

Coarse allocations: The thief scheduler allocates GPU resources in quantums of . We empirically pick the value of that is coarse and yet accurate enough for scheduling decisions, while also being mindful of the granularity achievable in modern GPUs (nvidia-mps, ). We analyze the sensitivity of in §6.3. Resource stealing always ensures that the total allocation is within the limit (second constraint in Eq 1).

-

Reallocating resources only when a retraining job completes: Although one can reallocate GPU resource among jobs at finer temporal granularity (e.g., whenever a retraining job has reached a high accuracy), we empirically find that the gains from such complexity is marginal. That said, Ekya periodically checkpoints the model (§5) so that inference can get the up-to-date accuracy from retraining.

-

Pruned configuration list: Our micro-profiler (described next) speeds up the thief scheduler by giving it only the more promising configurations. Thus, the list used in Algorithm 1 is significantly smaller than the exhaustive set.

4.3. Performance estimation with micro-profiling

Ekya’s scheduling decisions in §4.2 rely on estimations of post-retraining accuracy and resource demand of the retraining configurations. Specifically, at the beginning of each retraining window , we need to profile for each video and each configuration , the accuracy after retraining using and the corresponding time taken to retrain.

Profiling in Ekya vs. hyperparameter tuning: While Ekya’s profiling may look similar to hyperparameter tuning (e.g., (DBLP:conf/nips/SnoekLA12, ; DBLP:journals/jmlr/LiJDRT17, )) at first blush, there are two key differences. First, Ekya needs the performance estimates of a broad set of candidate configurations for the thief scheduler, not just of the single best configuration, because the best configuration is jointly decided across the many retraining and inference jobs. Second, in contrast to hyperparameter tuning which runs separately of the eventual inference/training, Ekya’s profiling must share compute resource with all retraining and inference.

One strawman is to predict the performance of configurations based on their history from prior training instances, but we have found that this works poorly in practice. In fact, even when we cached and reused models from prior retraining windows with similar class distributions, the accuracy was still substantially lower due to other factors that are difficult to model like lighting, angle of objects, density of the scene, etc. (see §6.5). Thus we adopt an online approach for estimation by using the current retraining window’s data.

Opportunities: Ekya leverages three empirical observations for efficient profiling of the retraining configurations. Resource demands of the configurations are deterministic. Hence, we measure the GPU-time taken to retrain for each epoch in the current retraining window when of the GPU is allocated to the retraining. This allows us to scale the time for varying number of epochs, GPU allocations, and training data sizes in Algorithm 1. Post-retraining accuracy can be roughly estimated by training on a small subset of training data for a handful of epochs. The thief scheduler’s decisions are not impacted by small errors in the estimations.

Micro-profiling design: The above insights inspired our approach, called micro-profiling, where for each video, we test various retraining configurations, but on a small subset of the retraining data and only for a small number of epochs (well before models converge). Our micro-profiler is nearly more efficient than exhaustive profiling (of all configurations on the entire training data), while predicting accuracies with an error of , which is low enough in practice to mostly ensure that the thief scheduler makes the same decisions as it would with a fully accurate prediction. We explain the techniques that make Ekya’s micro-profiling efficient.

1) Training data sampling: Ekya’s micro-profiling works on only a small fraction (say, ) of the training data in the retraining window (which is already a subset of all the videos accumulated in the retraining window). While we considered weighted sampling techniques for the micro-profiling, we find that uniform random sampling is the most indicative of the configuration’s performance on the full training data, since it preserves all the data distributions and variations.

2) Early termination: Similar to data sampling, Ekya’s micro-profiling only tests each configuration for a small number (say, 5) of training epochs. Compared to a full fledged profiling that needs few tens of epochs to converge, such early termination greatly speeds up the micro-profiling process.

After early termination on the sampled training data, we obtain the (validation) accuracy of each configuration at each epoch it was trained. We then fit the accuracy-epoch points to the a non-linear curve model from (optimus, ) using a non-negative least squares solver (nnls, ). This model is then used to extrapolate the accuracy that would be obtained by retraining with all the data for larger number of epochs. The use of this extrapolation is consistent with similar work in this space (themis, ; optimus, ).

3) Pruning out bad configurations: Finally, Ekya’s micro-profiling also prunes out those configurations for micro-profiling (and hence, for retraining) that have historically not been useful. These are configurations that are usually significantly distant from the configurations on the Pareto curve of the resource-accuracy profile (see Figure 3(b)), and thus unlikely to be picked by the thief scheduler. Avoiding these configurations improves the efficiency of the micro-profiling.

Annotating training data: For both the micro-profiling as well as the retraining, Ekya acquires labels using a “golden model” (§2.2). This is a high-cost but high-accuracy model trained on a large dataset. As explained in §2, the golden model cannot keep up with inference on the live videos and we use it to label only a small subset of the videos for retraining.

5. Ekya Implementation

Ekya uses PyTorch (pytorch, ) for running and training ML models.

Modularization: Our implementation uses a collection of logically distributed modules for ease of scale-out to many video streams and resources. Each module acts as either the Ekya scheduler, micro-profiler, or a training/inference job, and is implemented by a long-running “actor” in Ray (ray, ). A benefit of using the actor abstraction is its highly optimized initialization cost and failure recovery.

Dynamic reallocation of resources: Ekya reallocates GPU resources between training and inference jobs at timescales that are far more dynamic than required by prior frameworks (where the GPU allocations for jobs are fixed upfront (kubernetes, ; yarn, )). While a middle layer like Nvidia MPS (nvidia-mps, ) provides resource isolation in the GPU by intercepting CUDA calls and re-scheduling them, it also requires terminating and restarting a process to change its resource allocation. Despite this drawback, Ekya uses Nvidia MPS due to its practicality, while the restarting costs are largely avoided by the actor-based implementation that keeps DNN model in GPU memory.

Placement onto GPUs: The resource allocations produced by the thief scheduler are “continuous”, i.e., it assumes that the fractional resources can be spanned across two discrete GPUs. To avoid the consequent expensive inter-GPU communication, Ekya first quantizes the allocations to inverse powers of two (e.g., 1/2, 1/4, 1/8). This makes the jobs amenable to packing. Ekya then allocates jobs to GPUs in descending order of demands to reduce fragmentation (tetris, ).

Model checkpointing and reloading: Ekya can improve inference accuracy by checkpointing the model during retraining and dynamically loading it as the inference model (tf-checkpoint, ; torch-checkpoint, ). Checkpointing can, however, disrupt both the retraining and the inference jobs, so Ekya weighs the cost of the disruption (i.e., additional delay on retraining and inference) due to checkpointing against its benefits (i.e., the more accurate model is available sooner). Implementing checkpointing in Ekya is also made easy by the actor-based programming model that allows for queuing of requests when the actor (model) is unavailable when its new weights are being loaded.

Adapting estimates during retraining: When the accuracy during the retraining varies from the expected value from micro-profiling, Ekya reactively adjusts its allocations. Every few epochs, Ekya uses the current accuracy of the model being retrained to estimate its eventual accuracy when all the epochs are complete. It updates the expected accuracy in the profile of the retraining () with the new value, and then reruns Algorithm 1 for new resource allocations (but leaves the configuration that is used currently, , to be unchanged).

6. Evaluation

We evaluate Ekya’s performance and the key findings are:

1) Compared to static retraining baselines, Ekya achieves upto 29% higher accuracy. For the baseline to match Ekya’s accuracy, it would require additional GPU resources. (§6.2)

2) Both micro-profiling and thief scheduler contribute sizably to Ekya’s gains. (§6.3) In particular, the micro-profiler estimates accuracy with low median errors of . (§6.4)

3) The thief scheduler efficiently makes its decisions in 9.4s when deciding for 10 video streams across 8 GPUs with 18 configurations per model for a 200s retraining window. (§6.3)

4) Compared to alternate designs, including retraining the models in the cloud or using pre-trained cached models, Ekya achieves a higher accuracy without the network costs. (§6.5)

6.1. Setup

Datasets: We use both on-road videos captured by dashboard cameras as well as urban videos captured by mounted cameras. The dashboard camera videos are from cars driving through cities in the US and Europe, Waymo Open (waymo, ) (1000 video segments with in total 200K frames) and Cityscapes (cityscapes, ) (5K frames captured by 27 cameras) videos. The urban videos are from stationary cameras mounted in a building (“Urban Building”) as well as from five traffic intersections (“Urban Traffic”), both collected over 24-hour durations. We use a retraining window of 200 seconds in our experiments, and split each of the videos into 200 second segments. Since the Waymo and Cityscapes dataset do not contain continuous timestamps, we create retraining windows by concatenating images from the same camera in chronological order to form a long video stream and split it into 200 second segments.

DNN models.: We use the ResNet18 object classifier model as our edge DNN. As explained in §2.2, we use an expensive golden model (ResNeXt 101 (wang2019elastic, )) to get ground truth labels for training and testing. On a subset of data that have human annotations, we confirm that the labels produced by the golden model are very similar to human-annotated labels.

Testbed and trace-driven simulator: We run Ekya’s implementation (§5) on AWS EC2 p3.2xlarge instances for 1 GPU experiments and p3.8xlarge instances for 2 GPU experiments. Each instance has Nvidia V100 GPUs with NVLink interconnects and Intel Skylake Xeon processors.

We also built a simulator to test Ekya under a wide range of resource constraints, workloads, and longer durations. The simulator takes as input the accuracy and resource usage (in GPU time) of training/inference configurations logged from our testbed. For each training job in a window, we log the training-accuracy progression over GPU-time. We also log the inference accuracy on the real videos to replay it in our simulator. This exhaustive trace allows us to mimic the jobs with high fidelity under different scheduling policies.

Retraining configurations: As listed in §3.1, our retraining configurations are obtained by combining these hyperparameters: number of epochs to train, batch size, number of neurons in the last layer, number of layers to retrain, and the fraction of data between retraining windows to use for retraining.

Baselines: Our baseline, called uniform scheduler, uses a fixed retraining configuration, and a static retraining/inference resource allocation (these are adopted by prior schedulers (fair-1, ; fair-2, ; videostorm, )). For each dataset, we test all retraining configurations on a hold-out dataset 444 The same hold-out dataset is used to customize the off-the-shelf DNN inference model. This is a common strategy in prior work (e.g., (noscope, )). (i.e., two video streams that were never used in later tests) to produce the Pareto frontier of the accuracy-resource tradeoffs (e.g., Figure 3). The uniform scheduler then picks two points on the Pareto frontier as the fixed retraining configurations to represent “high” (Config 1) and “low” (Config 2) resource usage, and uses one of them for all retraining windows in a test.

We also consider two alternative designs. (1) offloading retraining to the cloud, and (2) caching and re-using a retrained model from history. We will present their details in §6.5.

6.2. Overall improvements

We evaluate Ekya and the baselines along three dimensions—inference accuracy (% of images correctly classified), resource consumption (in GPU time), and capacity (the number of concurrently processed video streams). Note that the performance is always tested while keeping up with the original video frame rate (i.e., no indefinite frame queueing).

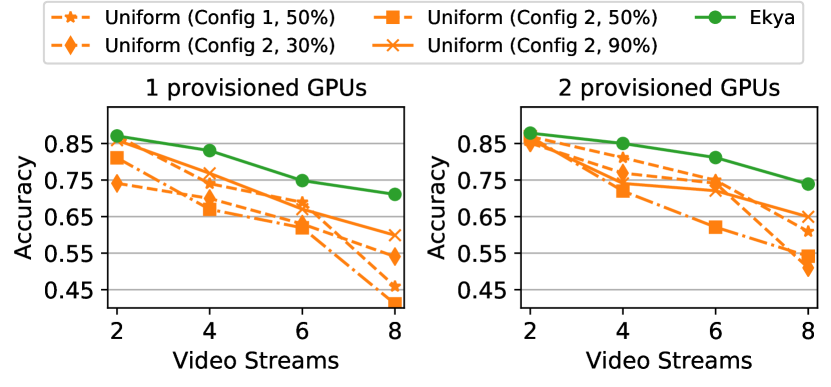

Accuracy vs. Number of concurrent video streams: Figure 6 shows that the accuracy of Ekya and the uniform baselines when analyzing a growing number of concurrent video streams under a fixed number of provisioned GPUs for Waymo and Cityscapes datasets. The uniform baselines use different combinations of pre-determined retraining configurations and resource partitionings. As the number of video streams increases, Ekya enjoys a growing advantage (upto 29% under 1 GPU and 23% under 2 GPU) in accuracy over the uniform baselines. This is because Ekya gradually shifts more resource from retraining to inference and uses cheaper retraining configurations. In contrast, increasing the number of streams forces the uniform baseline to allocate less GPU cycles to each inference job, while retraining jobs, which use fixed configurations, slow down and take the bulk of each window. This trend persists with different GPUs.

| Scheduler | Capacity | Scaling factor | |

|---|---|---|---|

| 1 GPU | 2 GPUs | ||

| Ekya | 2 | 8 | 4x |

| Uniform (Config 1, 50%) | 2 | 2 | 1x |

| Uniform (Config 2, 90%) | 2 | 4 | 2x |

| Uniform (Config 2, 50%) | 2 | 4 | 2x |

| Uniform (Config 2, 30%) | 0 | 2 | - |

Number of video streams vs. provisioned resource: We compare Ekya’s capacity (defined by the maximum number of concurrent video streams subject to an accuracy threshold) with that of uniform baseline, as more GPUs are available. Setting an accuracy threshold is common in practice, since applications usually require accuracy to be above a threshold for the inference to be usable. Table 3 uses the Cityscapes results (Figure 6) to derive the scaling factor of capacity vs. the number of provisioned GPUs and shows that with more provisioned GPUs, Ekya scales faster than uniform baselines.

Accuracy vs. provisioned resource: Finally, Figure 7 stress-tests Ekya and the uniform baselines to process 10 concurrent video streams and shows their average inference accuracy under different number of GPUs. To scale to more GPUs, we use the simulator (§6.1), which uses profiles recorded from real tests and we verified that it produced similar results as the implementation at small-scale. As we increase the number of provisioned GPUs, we see that Ekya consistently outperforms the best of the two baselines by a considerable margin and more importantly, with 4 GPUs Ekya achieves higher accuracy (marked with the dotted horizontal line) than the baselines at 16 GPUs (i.e., 4 resource saving).

Summary: The results highlight two advantages of Ekya. First, it allocates resources to retraining only when the accuracy gain from the retraining outweighs the temporary inference accuracy drop due to frame subsampling. Second, when it allocates resource to retraining, it retrains the model with a configuration that can finish in time for the inference to leverage the higher accuracy from the retrained model.

6.3. Understanding Ekya improvements

Component-wise contribution: Figure 8 understands the contributions of resource allocation and configuration selection (on 10 video streams with 4 GPUs provisioned). We construct two variants from Ekya: Ekya-FixedRes, which removes the smart resource allocation in Ekya (i.e., using the inference/training resource partition of the uniform baseline), and Ekya-FixedConfig removes the microprofiling-based configuration selection in Ekya (i.e., using the fixed configuration of the uniform baseline). Figure 8 shows that both adaptive resource allocation and configuration selection has a substantial contribution to Ekya’s gains in accuracy, especially when the system is under stress (i.e., fewer resources are provisioned).

(Inference accuracy = 0.82)

(Inference accuracy = 0.83)

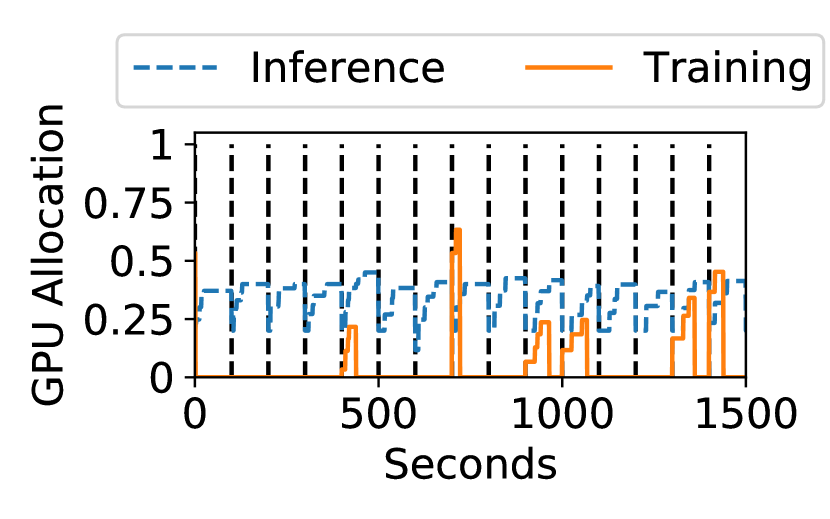

Resource allocation across streams: Figure 9 shows Ekya’s resource allocation across two example video streams over several retraining windows. In contrast to the uniform baselines that use the same retraining configuration and allocate equal resource to retraining and inference (when retraining takes place), Ekya retrains the model only when it benefits and allocates different amounts of GPUs to the retraining jobs of video streams, depending on how much accuracy gain is expected from retraining on each stream. In this case, more resource is diverted to video stream #1 (#1 can benefit more from retraining than #2) and both video streams achieve much higher accuracies (0.82 and 0.83) than the uniform baseline.

Impact of scheduling granularity: A key parameter in Ekya’s scheduling algorithm (§4.2) is the allocation quantum : it controls the runtime of the scheduling algorithm and the granularity of resource allocation. Figure 10 plots this tradeoff with the same setting as Figure 7 (10 video streams). While increasing from (coarse-grained; one full GPU) to (fine-grained; fraction of a GPU), we see the accuracy increases substantially . Though the runtime also increases to 9.5 seconds, it is still a tiny fraction () of the retraining window (s), and we use in our experiments.

6.4. Effectiveness of micro-profiling

Finally, we examine the effectiveness of the micro-profiler.

Errors of microprofiled accuracy estimates: Ekya’s micro-profiler estimates the accuracy of each configuration (§4.3) by training it on a subset of the data for a small number of epochs. To evaluate the micro-profiler’s estimates, we run it on all configurations for 5 epochs and on 10% of the retraining data from all streams of the Cityscapes dataset, and calculate the estimation error with respect to the retrained accuracies when trained on 100% of the data for 5, 15 and 30 epochs. Figure 11(a) plots the distribution of the errors in accuracy estimation and and show that the micro-profiled estimates are largely unbiased with an median absolute error of 5.8%.

| Bandwidth (Mbps) | Accuracy | More bandwidth needed | |||

| Uplink | Downlink | Uplink | Downlink | ||

| Cellular | 5.1 | 17.5 | 68.5% | 10.2 | 3.8 |

| Satellite | 8.5 | 15 | 69.2% | 5.9 | 4.4 |

| Cellular () | 10.2 | 35 | 71.2% | 5.1 | 1.9 |

| Ekya | - | - | 77.8% | - | - |

Sensitivity to microprofiling estimation errors: Finally, we test the impact of accuracy estimation errors (§4.3) on Ekya’s inference accuracy. We add a controlled Gaussian noise on top of the real retraining accuracy as the predictions when the microprofiler is queried. Figure 11(b) shows that Ekya is quite robust to accuracy estimate errors: with upto 20% errors (in which all errors in Figure 11(a) lie) in the profiler prediction, the maximum accuracy drop is merely 3%.

6.5. Comparison with alternative designs

Ekya vs. Cloud-based retraining: One may upload a sub-sampled video stream to the cloud, retrain the model in the cloud, and download the model to the edge server (khani2020real, ). While this solution compromises the privacy of the videos and is not an option for many deployments due to legal stipulations (sweden-data, ; azure-data, ), we nonetheless evaluate this option as it lets the edge servers focus on inference. We conclude that the cloud-based solution results in lower accuracy due to significant network delays on the constrained networks typical of edges (getmobile, ).

As a simple example, consider 8 video streams with a ResNet18 model and a retraining window of 400 seconds. For a HD (720p) video stream at 4Mbps and 10% data sub-sampling (typical in our experiments), this amounts to 160Mb of training data per camera per window. Uploading 160Mb for each of the 8 cameras over a 4G cellular uplink (5.1 Mbps (57-getmobile, )) and downloading the trained ResNet18 models (each of size of 398 Mb (torchvision-models, )) over the 17.5 Mbps downlink (57-getmobile, ) takes a total of 432 seconds (even excluding the model retraining time), which already exceeds the retraining window.

To test on the Cityscapes dataset, we extend our simulator (§6.1) to account for network delays during retraining, and test with 8 videos and 4 GPUs. We use the conservative assumption that retraining in the cloud is “instantaneous” (cloud GPUs are powerful than edge GPUs). Table 4 lists the accuracies with cellular 4G links (one and two subscriptions) and a satellite link, which are both indicative of edge deployments (getmobile, ). We use two cellular links so as to meet the 400s retraining window (based on the description above).

For the cloud alternatives to match Ekya’s accuracy, we will need to provision additional uplink capacity of 5-10 and downlink capacity of 2-4 (of the already expensive links). In summary, Ekya’s edge-based solution is better than a cloud based alternate for retraining in both metrics of accuracy as well as network usage (Ekya sends no data out of the edge), all while providing privacy for the videos.

Ekya vs. Re-using pretrained models: Another alternative to Ekya’s continuous retraining is to cache retrained models and reuse them, e.g., pick the model that was trained on a similar class distribution. To test this baseline, we pre-train and cache a few tens of DNNs from earlier retraining windows from the Cityscapes dataset. In each retraining window of our experiment with 8 GPUs and 10 video streams, we pick the cached DNN whose class distribution (vector of object class frequencies) of its training data has the closest Euclidean distance with the current window’s data. GPU cycles are evenly shared by the inference jobs (since there is no retraining). The resulting average inference accuracy is 0.72 is lower than the Ekya’s accuracy of 0.78 (see Figure 7(a)). This is because even though the class distributions may be similar, the models cannot be directly reused from any window as the appearances of objects may still differ considerably (Figure 2).

7. Related Work

1) ML training systems. For large scale training in the cloud, model and data parallel frameworks (DBLP:conf/nips/DeanCMCDLMRSTYN12, ; DBLP:journals/pvldb/LowGKBGH12, ; 199317, ; mxnet, ) and various resource schedulers (DBLP:journals/corr/abs-1907-01484, ; DBLP:conf/osdi/XiaoBRSKHPPZZYZ18, ; DBLP:conf/nsdi/GuCSZJQLG19, ; DBLP:conf/eurosys/PengBCWG18, ; DBLP:conf/sigcomm/GrandlAKRA14, ; DBLP:conf/cloud/ZhangSOF17, ) have been developed to schedule the cluster for ML workloads. These systems, however, target different objectives than Ekya, like maximizing parallelism, efficiency, fairness, or minimizing average job completion. Collaborative training systems (DBLP:journals/corr/abs-1902-01046, ; DBLP:conf/edge/LuSTLZCP19, ) work on decentralized data on mobile phones. Their focus is on coordinating the training between phones and the cloud, and not on training the models alongside inference.

2) Video analytics systems. Prior work has built low-cost, high-accuracy and highly scalable video analytics systems across the edge and cloud (videostorm, ; chameleon, ; noscope, ). VideoStorm (videostorm, ) investigates quality-lag requirements in video queries. NoScope exploits difference detectors and cascaded models to speedup queries (noscope, ). Focus uses low-cost models to index videos (DBLP:conf/osdi/HsiehABVBPGM18, ). Chameleon exploits correlations in camera content to amortize profiling costs (chameleon, ). All of these works optimize only the inference accuracy unlike Ekya’s focus on retraining.

3) Hyper-parameter optimization. Efficient techniques to explore the space of hyper-parameters is crucial in training systems to find the model with the best accuracy. Techniques range from simplistic grid or random search (DBLP:journals/jmlr/BergstraB12, ), to more sophisticated approaches using random forests (DBLP:conf/lion/HutterHL11, ), Bayesian optimization (DBLP:conf/nips/SnoekLA12, ; Swersky_scalablebayesian, ), probabilistic model (DBLP:conf/middleware/RasleyH0RF17, ), or non-stochastic infinite-armed bandits (DBLP:journals/jmlr/LiJDRT17, ). Unlike the focus of these techniques towards finding the hyper-parameters that train the model with the highest accuracy, our focus is on resource allocation. Further, we are focused on the inference accuracy over the retrained window, where often producing the best retrained model turns out to be sub-optimal.

4) Continuous learning. Machine learning literature on continuous learning adapts models as new data comes in. A common approach used is transfer learning (DBLP:journals/corr/RazavianASC14, ; DBLP:conf/edge/LuSTLZCP19, ; mullapudi2019, ; 44873, ). Research has also been conducted on handling catastrophic forgetting (DBLP:journals/corr/abs-1708-01547, ; datadrift-a, ), using limited amount of training data (icarl-14, ; DBLP:journals/corr/abs-1905-10887, ), and dealing with class imbalance (Belouadah_2019_ICCV, ; DBLP:journals/corr/abs-1905-13260, ). Ekya builds atop continuous learning techniques for its scheduling and implementation, to enable them in edge deployments.

5) Edge compute systems. By deploying computation close to the data sources, edge computing benefits many applications, including video analytics (edgevideo-1, ; ieee-computer, ; getmobile, ). While there has been edge-based solutions for video processing (videoedge, ), we enable joint optimization of video inference and retraining.

8. Conclusion

Continuous learning enables edge DNNs to maintain high accuracy even with data drift, but it also poses a complex and fundamental tradeoff between retraining and inference. We introduce Ekya, an efficient system that maximizes inference accuracy by balancing across multiple retraining and inference tasks. Ekya’s resource scheduler makes the problem practical and tractable by pruning the large decision space and prioritizing promising retraining tasks. Ekya’s performance estimator provides essential accuracy estimation with very little overheads. Our evaluation with a diverse set of of video streams shows that Ekya achieves higher accuracy than a baseline scheduler, and the baseline needs more GPU resources to achieve Ekya’s accuracy. We conclude Ekya is a practical system for continuous learning for video analytics on the edge, and we hope that our findings will spur further research into the tradeoff between retraining and inference.

References

- [1] MxNet: a flexible and efficient library for deep learning. https://mxnet.apache.org/.

- [2] Nvidia multi-process service. https://docs.nvidia.com/deploy/pdf/CUDA_Multi_Process_Service_Overview.pdf. (Accessed on 09/16/2020).

- [3] scipy.optimize.nnls — scipy v1.5.2 reference guide. https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.nnls.html. (Accessed on 09/17/2020).

- [4] torch.utils.checkpoint — pytorch 1.6.0 documentation. https://pytorch.org/docs/stable/checkpoint.html. (Accessed on 09/16/2020).

- [5] torchvision.models — pytorch 1.6.0 documentation. https://pytorch.org/docs/stable/torchvision/models.html. (Accessed on 09/16/2020).

- [6] A Comprehensive List of Hyperparameter Optimization & Tuning Solutions. https://medium.com/@mikkokotila/a-comprehensive-list-of-hyperparameter-optimization-tuning-solutions-88e067f19d9, 2018.

- [7] A. Ghodsi, M. Zaharia, B. Hindman, A. Konwinski, S. Shenker, and I. Stoica. Fair allocation of multiple resource types. In USENIX NSDI, 2011.

- [8] M. Abadi, P. Barham, J. Chen, Z. Chen, A. Davis, J. Dean, M. Devin, S. Ghemawat, G. Irving, M. Isard, M. Kudlur, J. Levenberg, R. Monga, S. Moore, D. G. Murray, B. Steiner, P. Tucker, V. Vasudevan, P. Warden, M. Wicke, Y. Yu, and X. Zheng. TensorFlow: A System for Large-Scale Machine Learning. In USENIX OSDI, 2016.

- [9] Achieving Compliant Data Residency and Security with Azure.

- [10] AI and Compute. https://openai.com/blog/ai-and-compute/, 2018.

- [11] G. Ananthanarayanan, V. Bahl, P. Bodík, K. Chintalapudi, M. Philipose, L. R. Sivalingam, and S. Sinha. Real-time Video Analytics – the killer app for edge computing. IEEE Computer, 2017.

- [12] AWS Outposts. https://aws.amazon.com/outposts/.

- [13] Azure Stack Edge. https://azure.microsoft.com/en-us/services/databox/edge/.

- [14] E. Belouadah and A. Popescu. IL2M: Class Incremental Learning With Dual Memory. In IEEE ICCV, 2019.

- [15] J. Bergstra and Y. Bengio. Random Search for Hyper-Parameter Optimization. J. Mach. Learn. Res., 13:281–305, 2012.

- [16] K. Bonawitz, H. Eichner, W. Grieskamp, D. Huba, A. Ingerman, V. Ivanov, C. Kiddon, J. Konecný, S. Mazzocchi, H. B. McMahan, T. V. Overveldt, D. Petrou, D. Ramage, and J. Roselander. Towards Federated Learning at Scale: System Design. In SysML, 2019.

- [17] B. Burns, B. Grant, D. Oppenheimer, E. Brewer, and J. Wilkes. Borg, omega, and kubernetes. ACM Queue, 14:70–93, 2016.

- [18] Chien-Chun Hung, Ganesh Ananthanarayanan, Peter Bodik, Leana Golubchik, Minlan Yu, Paramvir Bahl, Matthai Philipose. Videoedge: Processing camera streams using hierarchical clusters. In ACM/IEEE SEC, 2018.

- [19] CLIFFORD, M. J., PERRONS, R. K., ALI, S. H.,ANDGRICE, T. A. Extracting Innovations: Mining, Energy, and Technological Changein the Digital Age. In CRC Press, 2018.

- [20] cnn-benchmarks. https://github.com/jcjohnson/cnn-benchmarks#resnet-101, 2017.

- [21] D. Kang, J. Emmons, F. Abuzaid, P. Bailis and M. Zaharia. Noscope: Optimizing neural network queries over video at scale. In VLDB, 2017.

- [22] D Maltoni, V Lomonaco. Continuous learning in single-incremental-task scenarios. In Neural Networks, 2019.

- [23] J. Dean, G. Corrado, R. Monga, K. Chen, M. Devin, Q. V. Le, M. Z. Mao, M. Ranzato, A. W. Senior, P. A. Tucker, K. Yang, and A. Y. Ng. Large Scale Distributed Deep Networks. In NeurIPS, 2012.

- [24] Edge Computing at Chick-fil-A. https://medium.com/@cfatechblog/edge-computing-at-chick-fil-a-7d67242675e2. 2019.

- [25] Ganesh Ananthanarayanan, Victor Bahl, Yuanchao Shu, Franz Loewenherz, Daniel Lai, Darcy Akers, Peiwei Cao, Fan Xia, Jiangbo Zhang, Ashley Song. Traffic Video Analytics – Case Study Report. 2019.

- [26] GI Parisi, R Kemker, JL Part, C Kanan, S Wermter . Continual lifelong learning with neural networks: A review. In Neural Networks, 2019.

- [27] D. Golovin, B. Solnik, S. Moitra, G. Kochanski, J. Karro, and D. Sculley. Google vizier: A service for black-box optimization. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’17, page 1487–1495, New York, NY, USA, 2017. Association for Computing Machinery.

- [28] R. Grandl, G. Ananthanarayanan, S. Kandula, S. Rao, and A. Akella. Multi-resource packing for cluster schedulers. In Proceedings of the 2014 ACM Conference on SIGCOMM, SIGCOMM ’14, page 455–466, New York, NY, USA, 2014. Association for Computing Machinery.

- [29] R. Grandl, G. Ananthanarayanan, S. Kandula, S. Rao, and A. Akella. Multi-resource packing for cluster schedulers. In ACM SIGCOMM, 2014.

- [30] J. Gu, M. Chowdhury, K. G. Shin, Y. Zhu, M. Jeon, J. Qian, H. H. Liu, and C. Guo. Tiresias: A GPU cluster manager for distributed deep learning. In USENIX NSDI, 2019.

- [31] Haoyu Zhang, Ganesh Ananthanarayanan, Peter Bodík, Matthai Philipose, Victor Bahl, Michael Freedman. Live video analytics at scale with approximation and delay-tolerance. In USENIX NSDI, 2017.

- [32] G. Hinton, O. Vinyals, and J. Dean. Distilling the Knowledge in a Neural Network. In NeurIPS Deep Learning and Representation Learning Workshop, 2015.

- [33] K. Hsieh, G. Ananthanarayanan, P. Bodík, S. Venkataraman, P. Bahl, M. Philipose, P. B. Gibbons, and O. Mutlu. Focus: Querying Large Video Datasets with Low Latency and Low Cost. In USENIX OSDI, 2018.

- [34] F. Hutter, H. H. Hoos, and K. Leyton-Brown. Sequential Model-Based Optimization for General Algorithm Configuration. In Learning and Intelligent Optimization, 2011.

- [35] Joseph Redmon, Ali Farhadi . Yolo9000: Better, faster, stronger. In CVPR, 2017.

- [36] Junchen Jiang, Ganesh Ananthanarayanan, Peter Bodík, Siddhartha Sen, Ion Stoica. Chameleon: Scalable adaptation of video analytics. In ACM SIGCOMM, 2018.

- [37] Junjue Wang, Ziqiang Feng, Shilpa George, Roger Iyengar, Pillai Padmanabhan, Mahadev Satyanarayanan. Towards scalable edge-native applications. In ACM/IEEE Symposium on Edge Computing, 2019.

- [38] K He, X Zhang, S Ren, J Sun . Deep residual learning for image recognition. In CVPR, 2016.

- [39] M. Khani, P. Hamadanian, A. Nasr-Esfahany, and M. Alizadeh. Real-time video inference on edge devices via adaptive model streaming. arXiv preprint arXiv:2006.06628, 2020.

- [40] Konstantin Shmelkov, Cordelia Schmid, Karteek Alahari . Incremental learning of object detectors without catastrophic forgetting. In ICCV, 2017.

- [41] J. Lee, J. Yoon, E. Yang, and S. J. Hwang. Lifelong Learning with Dynamically Expandable Networks. In ICLR, 2018.

- [42] A. Li, O. Spyra, S. Perel, V. Dalibard, M. Jaderberg, C. Gu, D. Budden, T. Harley, and P. Gupta. A generalized framework for population based training. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’19, page 1791–1799, New York, NY, USA, 2019. Association for Computing Machinery.

- [43] L. Li, K. Jamieson, G. DeSalvo, A. Rostamizadeh, and A. Talwalkar. Hyperband: A novel bandit-based approach to hyperparameter optimization. J. Mach. Learn. Res., 18(1):6765–6816, Jan. 2017.

- [44] L. Li, K. G. Jamieson, G. DeSalvo, A. Rostamizadeh, and A. Talwalkar. Hyperband: A novel bandit-based approach to hyperparameter optimization. J. Mach. Learn. Res., 18:185:1–185:52, 2017.

- [45] Y. Low, J. Gonzalez, A. Kyrola, D. Bickson, C. Guestrin, and J. M. Hellerstein. Distributed graphlab: A framework for machine learning in the cloud. PVLDB, 5(8):716–727, 2012.

- [46] Y. Lu, Y. Shu, X. Tan, Y. Liu, M. Zhou, Q. Chen, and D. Pei. Collaborative learning between cloud and end devices: an empirical study on location prediction. In ACM/IEEE SEC, 2019.

- [47] M McCloskey, NJ Cohen. Catastrophic interference in connectionist networks: The sequential learning problem. In Psychology of learning and motivation, 1989.

- [48] M Sandler, A Howard, Menglong Zhu, Andrey Zhmoginov, Liang-Chieh Chen . Mobilenetv2: Inverted residuals and linear bottlenecks. In CVPR, 2018.

- [49] M. J. Magazine and M. Chern. A note on approximation schemes for multidimensional knapsack problems. Math. Oper. Res., 9(2), 1984.

- [50] K. Mahajan, A. Balasubramanian, A. Singhvi, S. Venkataraman, A. Akella, A. Phanishayee, and S. Chawla. Themis: Fair and efficient GPU cluster scheduling. In 17th USENIX Symposium on Networked Systems Design and Implementation (NSDI 20), pages 289–304, Santa Clara, CA, Feb. 2020. USENIX Association.

- [51] K. Mahajan, A. Singhvi, A. Balasubramanian, S. Venkataraman, A. Akella, A. Phanishayee, and S. Chawla. Themis: Fair and efficient GPU cluster scheduling for machine learning workloads. In USENIX NSDI, 2020.

- [52] Marius Cordts, Mohamed Omran, Sebastian Ramos, Timo Rehfeld, Markus Enzweiler, Rodrigo Benenson, Uwe Franke, Stefan Roth, and Bernt Schiele . The cityscapes dataset for semantic urban scene understanding. In CVPR, 2016.

- [53] Measuring Fixed Broadband - Eighth Report, FEDERAL COMMUNICATIONS COMMISSION OFFICE OF ENGINEERING AND TECHNOLOGY. https://www.fcc.gov/reports-research/reports/measuring-broadband-america/measuring-fixed-broadband-eighth-report. 2018.

- [54] Microsoft-Rocket-Video-Analytics-Platform. https://github.com/microsoft/Microsoft-Rocket-Video-Analytics-Platform.

- [55] Mingxing Tan, Bo Chen, Ruoming Pang, Vijay Vasudevan, Mark Sandler, Andrew Howard, Quoc V. Le. Mnasnet: Platform-aware neural architecture search for mobile. In CVPR, 2019.

- [56] Mingxing Tan, Quoc V. Le . Efficientnet: Rethinking model scaling for convolutional neural networks. In ICML, 2019.

- [57] P. Moritz, R. Nishihara, S. Wang, A. Tumanov, R. Liaw, E. Liang, M. Elibol, Z. Yang, W. Paul, M. I. Jordan, and I. Stoica. Ray: A distributed framework for emerging ai applications. In Proceedings of the 13th USENIX Conference on Operating Systems Design and Implementation, OSDI’18, page 561–577, USA, 2018. USENIX Association.

- [58] Ningning Ma, Xiangyu Zhang, Hai-Tao Zheng, and Jian Sun . Shufflenet v2: Practical guidelines for efficient cnn architecture design. In ECCV, 2018.

- [59] OPENSIGNAL. Mobile Network Experience Report . https://www.opensignal.com/reports/2019/01/usa/mobile-network-experience. 2019.

- [60] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Kopf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, and S. Chintala. Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems 32, pages 8024–8035. Curran Associates, Inc., 2019.

- [61] Pavlo Molchanov, Stephen Tyree, Tero Karras, Timo Aila, Jan Kautz. Pruning convolutional neural networks for resource efficient inference. In ICLR, 2017.

- [62] Pei Sun and Henrik Kretzschmar and Xerxes Dotiwalla and Aurelien Chouard and Vijaysai Patnaik and Paul Tsui and James Guo and Yin Zhou and Yuning Chai and Benjamin Caine and Vijay Vasudevan and Wei Han and Jiquan Ngiam and Hang Zhao and Aleksei Timofeev and Scott Ettinger and Maxim Krivokon and Amy Gao and Aditya Joshi and Yu Zhang and Jonathon Shlens and Zhifeng Chen and Dragomir Anguelov. Scalability in perception for autonomous driving: Waymo open dataset, 2019.

- [63] Y. Peng, Y. Bao, Y. Chen, C. Wu, and C. Guo. Optimus: an efficient dynamic resource scheduler for deep learning clusters. In ACM EuroSys, 2018.

- [64] Y. Peng, Y. Bao, Y. Chen, C. Wu, and C. Guo. Optimus: An efficient dynamic resource scheduler for deep learning clusters. In Proceedings of the Thirteenth EuroSys Conference, EuroSys ’18, New York, NY, USA, 2018. Association for Computing Machinery.

- [65] J. Rasley, Y. He, F. Yan, O. Ruwase, and R. Fonseca. HyperDrive: exploring hyperparameters with POP scheduling. In ACM/IFIP/USENIX Middleware, 2017.

- [66] Ravi Teja Mullapudi, Steven Chen, Keyi Zhang, Deva Ramanan, Kayvon Fatahalian. Online model distillation for efficient video inference. In ICCV, 2019.

- [67] S. V. Ravuri and O. Vinyals. Classification accuracy score for conditional generative models. 2019.

- [68] A. S. Razavian, H. Azizpour, J. Sullivan, and S. Carlsson. CNN features off-the-shelf: an astounding baseline for recognition. In IEEE CVPR Workshop, 2014.

- [69] Residential landline and fixed broadband services . https://www.ofcom.org.uk/__data/assets/pdf_file/0015/113640/landline-broadband.pdf. 2019.

- [70] RM French. Catastrophic forgetting in connectionist networks. In Trends in cognitive sciences, 1999.

- [71] H. Robbins. Some aspects of the sequential design of experiments. Bulletin of the American Mathematical Society, 58(5), 1952.

- [72] Ronald Kemker, Marc McClure, Angelina Abitino, Tyler L. Hayes, and Christopher Kanan. Measuring catastrophic forgetting in neural networks. In AAAI, 2018.

- [73] H. F. Scheduler. https://hadoop.apache.org/docs/r2.4.1/hadoop-yarn/hadoop-yarn-site/FairScheduler.html.

- [74] Shadi Noghabi, Landon Cox, Sharad Agarwal, Ganesh Ananthanarayanan. The emerging landscape of edge-computing. In ACM SIGMOBILE GetMobile, 2020.

- [75] H. Shen, S. Han, M. Philipose, and A. Krishnamurthy. Fast video classification via adaptive cascading of deep models. In CVPR, 2017.

- [76] Shivangi Srivastava, Maxim Berman, Matthew B. Blaschko, Devis Tuia . Adaptive compression-based lifelong learning. In BMVC, 2019.

- [77] Si Young Jang, Yoonhyung Lee, Byoungheon Shin, Dongman Lee, Dionisio Vendrell Jacinto . Application-aware iot camera virtualization for video analytics edge computing. In ACM/IEEE SEC, 2018.

- [78] J. Snoek, H. Larochelle, and R. P. Adams. Practical bayesian optimization of machine learning algorithms. In NIPS, 2012.

- [79] Song Han, Huizi Mao, William J. Dally . Accelerating very deep convolutional networks for classification and detection. In ICLR, 2017.

- [80] Sweden Data Collection & Processing.

- [81] K. Swersky, R. Kiros, N. Satish, N. Sundaram, M. M. A. Patwary, and R. P. Adams. Scalable Bayesian Optimization Using Deep Neural Networks. In ICML, 2015.

- [82] Sylvestre-Alvise Rebuffi, Alexander Kolesnikov, Georg Sperl, Christoph H. Lampert. icarl: Incremental classifier and representation learning. In CVPR, 2017.

- [83] TensorFlow Training checkpoints. https://www.tensorflow.org/guide/checkpoint.

- [84] The Future of Computing is Distributed. https://www.datanami.com/2020/02/26/the-future-of-computing-is-distributed/, 2020.

- [85] V. K. Vavilapalli, A. C. Murthy, C. Douglas, S. Agarwal, M. Konar, R. Evans, T. Graves, J. Lowe, H. Shah, S. Seth, et al. Apache hadoop yarn: Yet another resource negotiator. In Proceedings of the 4th annual Symposium on Cloud Computing, page 5. ACM, 2013.

- [86] H. Wang, A. Kembhavi, A. Farhadi, A. L. Yuille, and M. Rastegari. Elastic: Improving cnns with dynamic scaling policies. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2258–2267, 2019.

- [87] Y. Wu, Y. Chen, L. Wang, Y. Ye, Z. Liu, Y. Guo, and Y. Fu. Large scale incremental learning. In IEEE CVPR, 2019.

- [88] Xi Yin, Xiang Yu, Kihyuk Sohn, Xiaoming Liu and Manmohan Chandraker. Feature transfer learning for face recognition with under-represented data. In IEEE CVPR, 2019.

- [89] Xiangyu Zhang, Jianhua Zou, Kaiming He, and Jian Sun. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. In IEEE PAMI, 2016.

- [90] W. Xiao, R. Bhardwaj, R. Ramjee, M. Sivathanu, N. Kwatra, Z. Han, P. Patel, X. Peng, H. Zhao, Q. Zhang, F. Yang, and L. Zhou. Gandiva: Introspective Cluster Scheduling for Deep Learning. In USENIX OSDI, 2018.

- [91] Z. Li and D. Hoiem . Learning without forgetting. In ECCV, 2016.

- [92] H. Zhang, L. Stafman, A. Or, and M. J. Freedman. SLAQ: quality-driven scheduling for distributed machine learning. In SoCC, 2017.

- [93] Ziwei Liu, Zhongqi Miao, Xiaohang Zhan, Jiayun Wang, Boqing Gong, Stella X. Yu . Large-scale long-tailed recognition in an open world. In CVPR, 2019.