Efficient Variational Inference for Sparse Deep Learning with Theoretical Guarantee

Abstract

Sparse deep learning aims to address the challenge of huge storage consumption by deep neural networks, and to recover the sparse structure of target functions. Although tremendous empirical successes have been achieved, most sparse deep learning algorithms are lacking of theoretical support. On the other hand, another line of works have proposed theoretical frameworks that are computationally infeasible. In this paper, we train sparse deep neural networks with a fully Bayesian treatment under spike-and-slab priors, and develop a set of computationally efficient variational inferences via continuous relaxation of Bernoulli distribution. The variational posterior contraction rate is provided, which justifies the consistency of the proposed variational Bayes method. Notably, our empirical results demonstrate that this variational procedure provides uncertainty quantification in terms of Bayesian predictive distribution and is also capable to accomplish consistent variable selection by training a sparse multi-layer neural network.

1 Introduction

Dense neural network (DNN) may face various problems despite its huge successes in AI fields. Larger training sets and more complicated network structures improve accuracy in deep learning, but always incur huge storage and computation burdens. For example, small portable devices may have limited resources such as several megabyte memory, while a dense neural networks like ResNet-50 with 50 convolutional layers would need more than 95 megabytes of memory for storage and numerous floating number computation (Cheng et al.,, 2018). It is therefore necessary to compress deep learning models before deploying them on these hardware limited devices.

In addition, sparse neural networks may recover the potential sparsity structure of the target function, e.g., sparse teacher network in the teacher-student framework (Goldt et al.,, 2019; Tian,, 2018). Another example is from nonparametric regression with sparse target functions, i.e., only a portion of input variables are relevant to the response variable. A sparse network may serve the goal of variable selection (Feng and Simon,, 2017; Liang et al.,, 2018; Ye and Sun,, 2018), and is also known to be robust to adversarial samples against and attacks (Guo et al.,, 2018).

Bayesian neural network (BNN), which dates back to MacKay, (1992); Neal, (1992), comparing with frequentist DNN, possesses the advantages of robust prediction via model averaging and automatic uncertainty quantification (Blundell et al.,, 2015). Conceptually, BNN can easily induce sparse network selection by assigning discrete prior over all possible network structures. However, challenges remain for sparse BNN including inefficient Bayesian computing issue and the lack of theoretical justification. This work aims to resolve these two important bottlenecks simultaneously, by utilizing variational inference approach (Jordan et al.,, 1999; Blei et al.,, 2017). On the computational side, it can reduce the ultra-high dimensional sampling problem of Bayesian computing, to an optimization task which can still be solved by a back-propagation algorithm. On the theoretical side, we provide a proper prior specification, under which the variational posterior distribution converges towards the truth. To the best of our knowledge, this work is the first one that provides a complete package of both theory and computation for sparse Bayesian DNN.

Related work.

A plethora of methods on sparsifying or compressing neural networks have been proposed (Cheng et al.,, 2018; Gale et al.,, 2019). The majority of these methods are pruning-based (Han et al.,, 2016; Zhu and Gupta,, 2018; Frankle and Carbin,, 2018), which are ad-hoc on choosing the threshold of pruning and usually require additional training and fine tuning. Some other methods could achieve sparsity during training. For example, Louizos et al., (2018) introduced regularized learning and Mocanu et al., (2018) proposed sparse evolutionary training. However, the theoretical guarantee and the optimal choice of hyperparameters for these methods are unclear. As a more natural solution to enforce sparsity in DNN, Bayesian sparse neural network has been proposed by placing prior distributions on network weights: Blundell et al., (2015) and Deng et al., (2019) considered spike-and-slab priors with a Gaussian and Laplacian spike respectively; Log-uniform prior was used in Molchanov et al., (2017); Ghosh et al., (2018) chose to use the popular horseshoe shrinkage prior. These existing works actually yield posteriors over the dense DNN model space despite applying sparsity induced priors. In order to derive explicit sparse inference results, users have to additionally determine certain pruning rules on the posterior. On the other hand, theoretical works regarding sparse deep learning have been studied in Schmidt-Hieber, (2017), Polson and Rockova, (2018) and Chérief-Abdellatif, (2020), but finding an efficient implementation to close the gap between theory and practice remains a challenge for these mentioned methods.

Detailed contributions.

We impose a spike-and-slab prior on all the edges (weights and biases) of a neural network, where the spike component and slab component represent whether the corresponding edge is inactive or active, respectively. Our work distinguished itself from prior works on Bayesian sparse neural network by imposing the spike-and-slab prior with the Dirac spike function. Hence automatically, all posterior samples are from exact sparse DNN models.

More importantly, with carefully chosen hyperparameter values, especially the prior probability that each edge is active, we establish the variational posterior consistency, and the corresponding convergence rate strikes the balance of statistical estimation error, variational error and the approximation error. The theoretical results are validated by various simulations and real applications. Empirically we also demonstrate that the proposed method possesses good performance of variable selection and uncertainty quantification. While Feng and Simon, (2017); Liang et al., (2018); Ye and Sun, (2018) only considered the neural network with single hidden layer for variable selection, we observe correct support recovery for neural networks with multi-layer networks.

2 Preliminaries

Nonparametric regression.

Consider a nonparametric regression model with random covariates

| (1) |

where 111This compact support assumption is generally satisfied given the standardized data, and may be relaxed., denotes the uniform distribution, is the noise term, and is an underlying true function. For simplicity of analysis, we assume is known. Denote and as the observations. Let denote the underlying probability measure of data, and denote the corresponding density function.

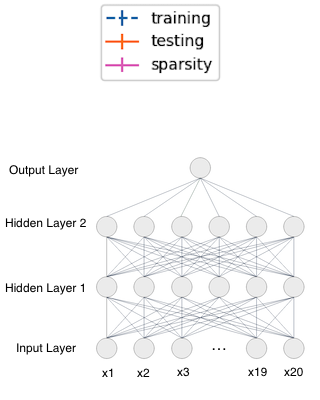

Deep neural network.

An -hidden-layer neural network is used to model the data. The number of neurons in each hidden layer is denoted by for . The weight matrix and bias parameter in each layer are denoted by and for . Let be the activation function, and for any and any , we define as for . Then, given parameters and , the output of this DNN model can be written as

| (2) |

In what follows, with slight abuse of notation, is also viewed as a vector that contains all the coefficients in ’s and ’s, , i.e., , where the length .

Variational inference.

Bayesian procedure makes statistical inferences from the posterior distribution , where is the prior distribution. Since MCMC doesn’t scale well on complex Bayesian learning tasks with large datasets, variational inference (Jordan et al.,, 1999; Blei et al.,, 2017) has become a popular alternative. Given a variational family of distributions, denoted by 222For simplicity, it is commonly assumed that is the mean-field family, i.e. ., it seeks to approximate the true posterior distribution by finding a closest member of in terms of KL divergence:

| (3) |

The optimization (3) is equivalent to minimize the negative ELBO, which is defined as

| (4) |

where the first term in (4) can be viewed as the reconstruction error (Kingma and Welling,, 2014) and the second term serves as regularization. Hence, the variational inference procedure minimizes the reconstruction error while being penalized against prior distribution in the sense of KL divergence.

When the variational family is indexed by some hyperparameter , i.e., any can be written as , then the negative ELBO is a function of as . The KL divergence term in (4) could usually be integrated analytically, while the reconstruction error requires Monte Carlo estimation. Therefore, the optimization of can utilize the stochastic gradient approach (Kingma and Welling,, 2014). To be concrete, if all distributions in can be reparameterized as 333“” means equivalence in distribution for some differentiable function and random variable , then the stochastic estimator of and its gradient are

| (5) |

where ’s are randomly sampled data points and ’s are iid copies of . Here, and are minibatch size and Monte Carlo sample size, respectively.

3 Sparse Bayesian deep learning with spike-and-slab prior

We aim to approximate in the generative model (1) by a sparse neural network. Specifically, given a network structure, i.e. the depth and the width , is approximated by DNN models with sparse parameter vector . From a Bayesian perspective, we impose a spike-and-slab prior (George and McCulloch,, 1993; Ishwaran and Rao,, 2005) on to model sparse DNN.

A spike-and-slab distribution is a mixture of two components: a Dirac spike concentrated at zero and a flat slab distribution. Denote as the Dirac at 0 and as a binary vector indicating the inclusion of each edge in the network. The prior distribution thus follows:

| (6) |

for , where and are hyperparameters representing the prior inclusion probability and the prior Gaussian variance, respectively. The choice of and play an important role in sparse Bayesian learning, and in Section 4, we will establish theoretical guarantees for the variational inference procedure under proper deterministic choices of and . Alternatively, hyperparameters may be chosen via an Empirical Bayesian (EB) procedure, but it is beyond the scope of this work. We assume is in the same family of spike-and-slab laws:

| (7) |

for , where .

Comparing to pruning approaches (e.g. Zhu and Gupta,, 2018; Frankle and Carbin,, 2018; Molchanov et al.,, 2017) that don’t pursue sparsity among bias parameter ’s, the Bayesian modeling induces posterior sparsity for both weight and bias parameters.

In the literature, Polson and Rockova, (2018); Chérief-Abdellatif, (2020) imposed sparsity specification as follows that not only posts great computational challenges, but also requires tuning for optimal sparsity level . For example, Chérief-Abdellatif, (2020) shows that given , two error terms occur in the variation DNN inference: 1) the variational error caused by the variational Bayes approximation to the true posterior distribution and 2) the approximation error between and the best bounded-weight -sparsity DNN approximation of . Both error terms and depend on (and their specific forms are given in next section). Generally speaking, as the model capacity (i.e., ) increases, will increase and will decrease. Hence the optimal choice that strikes the balance between these two is

Therefore, one needs to develop a selection criteria for such that . In contrast, our modeling directly works on the whole sparsity regime without pre-specifying , and is shown later to be capable of automatically attaining the same rate of convergence as if the optimal were known.

4 Theoretical results

In this section, we will establish the contraction rate of the variational sparse DNN procedure, without knowing . For simplicity, we only consider equal-width neural network (similar as Polson and Rockova, (2018)).

The following assumptions are imposed:

Condition 4.1.

that can depend on n, and .

Condition 4.2.

is 1-Lipschitz continuous.

Condition 4.3.

The hyperparameter is set to be some constant, and satisfies and .

Condition 4.2 is very mild, and includes ReLU, sigmoid and tanh. Note that Condition 4.3 gives a wide range choice of , even including the choice of independent of (See Theorem 4.1 below).

We first state a lemma on an upper bound for the negative ELBO. Denote the log-likelihood ratio between and as . Given some constant , we define

Recall that and denote the variational error and the approximation error.

Lemma 4.1.

Noting that is the negative ELBO up to a constant, we therefore show the optimal loss function of the proposed variational inference is bounded.

The next lemma investigates the convergence of the variational distribution under the Hellinger distance, which is defined as

In addition, let for any . An assumption on is required to strike the balance between and :

Condition 4.4.

and .

Lemma 4.2.

Remark.

The result (9) is of exactly the same form as in the existing literature (Pati et al.,, 2018), but it is not trivial in the following sense. The existing literature require the existence of a global testing function that separates and with exponentially decay rate of Type I and Type II errors. If such a testing function exists only over a subset (which is the case for our DNN modeling), then the existing result (Yang et al.,, 2020) can only characterize the VB posterior contraction behavior within , but not over the whole parameter space . Therefore our result, which characterizes the convergence behavior for the overall VB posterior, represents a significant improvement beyond those works.

The above two lemmas together imply the following guarantee for VB posterior:

Theorem 4.1.

The denotes the estimation error from the statistical estimator for . The variational Bayes convergence rate consists of estimating error, i.e., , variational error, i.e., , and approximation error, i.e., . Given that the former two errors have only logarithmic difference, our convergence rate actually strikes the balance among all three error terms. The derived convergence rate has an explicit expression in terms of the network structure based on the forms of , and , in contrast with general convergence results in Pati et al., (2018); Zhang and Gao, (2019); Yang et al., (2020).

Remark.

Theorem 4.1 provides a specific choice for , which can be relaxed to the general conditions on in Lemma 4.2. In contrast to the heuristic choices such as (BIC; Hubin and Storvik,, 2019), this theoretically justified choice incorporates knowledge of input dimension, network structure and sample size. Such an will be used in our numerical experiments in Section 6, but readers shall be aware of that its theoretical validity is only justified in an asymptotic sense.

Remark.

The convergence rate is derived under Hellinger metric, which is of less practical relevance than norm representing the common prediction error. One may obtain a convergence result under norm via a VB truncation (refer to supplementary material, Theorem A.1).

Remark.

If is an -Hölder-smooth function with fixed input dimension , then by choosing some , , combining with the approximation result (Schmidt-Hieber,, 2017, Theorem 3), our theorem ensures rate-minimax convergence up to a logarithmic term.

5 Implementation

To conduct optimization of (4) via stochastic gradient optimization, we need to find certain reparameterization for any distribution in . One solution is to use the inverse CDF sampling technique. Specifically, if , its marginal ’s are independent mixture of (7). Let be the CDF of , then holds where . This inverse CDF reparameterization, although valid, can not be conveniently implemented within the state-of-art python packages like PyTorch. Rather, a more popular way in VB is to utilize the Gumbel-softmax approximation.

We rewrite the loss function as

| (10) |

Since it is impossible to reparameterize the discrete variable by a continuous system, we apply continuous relaxation, i.e., to approximate by a continuous distribution. In particular, the Gumbel-softmax approximation (Maddison et al.,, 2017; Jang et al.,, 2017) is used here, and is approximated by , where

is called the temperature, and as it approaches 0, converges to in distribution (refer to Figure 4 in the supplementary material). In addition, one can show that , which implies . Thus, is viewed as a soft version of , and will be used in the backward pass to enable the calculation for gradients, while the hard version will be used in the forward pass to obtain a sparse network structure. In practice, is usually chosen no smaller than 0.5 for numerical stability. Besides, the normal variable is reparameterized by for .

The complete variational inference procedure with Gumbel-softmax approximation is stated below.

The Gumbel-softmax approximation introduces an additional error that may jeopardize the validity of Theorem 4.1. Our exploratory studies (refer to Section B.3 in supplementary material) demonstrates little differences between the results of using inverse-CDF reparameterization and using Gumbel-softmax approximation in some simple model. Therefore, we conjecture that Gumbel-softmax approximation doesn’t hurt the VB convergence, and thus will be implemented in our numerical studies.

6 Experiments

We evaluate the empirical performance of the proposed variational inference through simulation study and MNIST data application. For the simulation study, we consider a teacher-student framework and a nonlinear regression function, by which we justify the consistency of the proposed method and validate the proposed choice of hyperparameters. As a byproduct, the performance of uncertainty quantification and the effectiveness of variable selection will be examined as well.

For all the numerical studies, we let , the choice of follows Theorem 4.1 (denoted by ): . The remaining details of implementation (such as initialization, choices of , and learning rate) are provided in the supplementary material. We will use VB posterior mean estimator to assess the prediction accuracy, where are samples drawn from the VB posterior and . The posterior network sparsity is measured by . Input nodes who have connection with to the second layer is selected as relevant input variables, and we report the corresponding false positive rate (FPR) and false negative rate (FNR) to evaluate the variable selection performance of our method.

Our method will be compared with the dense variational BNN (VBNN) (Blundell et al.,, 2015) with independent centered normal prior and independent normal variational distribution, the AGP pruner (Zhu and Gupta,, 2018), the Lottery Ticket Hypothesis (LOT) (Frankle and Carbin,, 2018), the variational dropout (VD) (Molchanov et al.,, 2017) and the Horseshoe BNN (HS-BNN) (Ghosh et al.,, 2018). In particular, VBNN can be regarded as a baseline method without any sparsification or compression. All reported simulation results are based on 30 replications (except that we use 60 replications for interval estimation coverages). Note that the sparsity level in methods AGP and LOT are user-specified. Hence, in simulation studies, we try a grid search for AGP and LOT, and only report the ones that yield highest testing accuracy. Furthermore, note that FPR and FNR are not calculated for HS-BNN since it only sparsifies the hidden layers nodewisely.

Simulation I: Teacher-student networks setup

We consider two teacher network settings for : (A) densely connected with a structure of 20-6-6-1, , , , , and network parameter is randomly sampled from ; (B) sparsely connected as shown in Figure 1 (c), , , , and , the network parameter ’s are fixed (refer to supplementary material for details).

| RMSE | Input variable selection | ||||||

|---|---|---|---|---|---|---|---|

| Method | Train | Test | FPR(%) | FNR(%) | Coverage (%) | Sparsity(%) | |

| Dense | SVBNN | 1.01 0.02 | 1.01 0.00 | - | - | 97.5 1.71 | 6.45 0.83 |

| VBNN | 1.00 0.02 | 1.00 0.00 | - | - | 91.4 3.89 | 100 0.00 | |

| VD | 0.99 0.02 | 1.01 0.00 | - | - | 76.4 4.75 | 28.6 2.81 | |

| HS-BNN | 0.98 0.02 | 1.02 0.01 | - | - | 83.5 0.78 | 64.9 24.9 | |

| AGP | 0.99 0.02 | 1.01 0.00 | - | - | - | 30.0 0.00 | |

| LOT | 1.04 0.01 | 1.02 0.00 | - | - | - | 10.0 0.00 | |

| Sparse | SVBNN | 0.99 0.03 | 1.00 0.01 | 0.00 0.00 | 0.00 0.00 | 96.4 4.73 | 2.15 0.25 |

| VBNN | 0.92 0.05 | 1.53 0.17 | 100 0.00 | 0.00 0.00 | 90.7 8.15 | 100 0.00 | |

| VD | 0.86 0.04 | 1.07 0.03 | 72.9 6.99 | 0.00 0.00 | 75.5 7.81 | 20.8 3.08 | |

| HS-BNN | 0.90 0.04 | 1.29 0.04 | - | - | 67.0 8.54 | 32.1 20.1 | |

| AGP | 1.01 0.03 | 1.02 0.00 | 16.9 1.81 | 0.00 0.00 | - | 5.00 0.00 | |

| LOT | 0.96 0.01 | 1.04 0.01 | 19.5 2.57 | 0.00 0.00 | - | 5.00 0.00 | |

First, we examine the impact of different choices of on our VB sparse DNN modeling. A set of different values are used, and for each , we compute the training square root MSE (RMSE) and testing RMSE based on . Results for the simulation setting (B) are plotted in Figure 1 along with error bars (Refer to Section B.4 in supplementary material for the plot under the simulation setting (A)). The figure shows that as increases, the resultant network becomes denser and the training RMSE monotonically decreases, while testing RMSE curve is roughly U-shaped. In other words, an overly small leads to over-sparsified DNNs with insufficient expressive power, and an overly large leads to overfitting DNNs. The suggested successfully locates in the valley of U-shaped testing curve, which empirically justifies our theoretical choice of .

We next compare the performance of our method (with ) to the benchmark methods, and present results in Table 1. For the dense teacher network (A), our method leads to the most sparse structure with comparable prediction error; For the sparse teacher network (B), our method not only achieves the best prediction accuracy, but also always selects the correct set of relevant input variables. Besides, we also explore uncertainty quantification of our methods, by studying the coverage of 95% Bayesian predictive intervals (refer to supplementary material for details). Table 1 shows that our method obtains coverage rates slightly higher than the nominal levels while other (Bayesian) methods suffer from undercoverage problems.

Simulation II: Sparse nonlinear function

Consider the following sparse function :

| (11) |

all covariates are iid and data set contains observations. A ReLU network with and is used. Similar to the simulation I, we study the impact of , and results in Figure 2 justify that is a reasonable choice. Table 2 compares the performances of our method (under ) to the competitive methods. Our method exhibits the best prediction power with minimal connectivity, among all the methods. In addition, our method achieves smallest FPR and acceptable FNR for input variable selection. In comparison, other methods select huge number of false input variables. Figure 2 (c) shows the selected network (edges with ) in one replication that correctly identifies the input variables.

| Method | Train RMSE | Test RMSE | FPR(%) | FNR(%) | Sparsity(%) |

|---|---|---|---|---|---|

| SVBNN | 1.19 0.05 | 1.21 0.05 | 0.00 0.21 | 16.0 8.14 | 2.97 0.48 |

| VBNN | 0.96 0.06 | 1.99 0.49 | 100 0.00 | 0.00 0.00 | 100 0.00 |

| VD | 1.02 0.05 | 1.43 0.19 | 98.6 1.22 | 0.67 3.65 | 46.9 4.72 |

| HS-BNN | 1.17 0.52 | 1.66 0.43 | - | - | 41.1 36.5 |

| AGP | 1.06 0.08 | 1.58 0.11 | 82.7 3.09 | 1.33 5.07 | 30.0 0.00 |

| LOT | 1.08 0.09 | 1.44 0.14 | 83.6 2.94 | 0.00 0.00 | 30.0 0.00 |

MNIST application.

We evaluate the performance of our method on MNIST data for classification tasks, by comparing with benchmark methods. A 2-hidden layer DNN with 512 neurons in each layer is used. We compare the testing accuracy of our method (with ) to the benchmark methods at different epochs using the same batch size (refer to supplementary material for details). Figure 3 shows our method achieves best accuracy as epoch increases, and the final sparsity level for SVBNN, AGP and VD are , and .

In addition, an illustration of our method’s capability for uncertainty quantification on MNIST can be found in the supplementary material, where additional experimental results on UCI regression datasets can also be found.

7 Conclusion and discussion

We proposed a variational inference method for deep neural networks under spike-and-slab priors with theoretical guarantees. Future direction could be investigating the theory behind choosing hyperparamters via the EB estimation instead of deterministic choices.

Furthermore, extending the current results to more complicated networks (convolutional neural network, residual network, etc.) is not trivial. Conceptually, it requires the design of structured sparsity (e.g., group sparsity in Neklyudov et al., (2017)) to fulfill the goal of faster prediction. Theoretically, it requires deeper understanding of the expressive ability (i.e. approximation error) and capacity (i.e., packing or covering number) of the network model space. For illustration purpose, we include an example of Fashion-MNIST task using convolutional neural network in the supplementary material, and it demonstrates the usage of our method on more complex networks in practice.

Broader Impact

We believe the ethical aspects are not applicable to this work. For future societal consequences, deep learning has a wide range of applications such as computer version and natural language processing. Our work provides a solution to overcome the drawbacks of modern deep neural network, and also improves the understanding of deep learning.

The proposed method could improve the existing applications. Specifically, sparse learning helps apply deep neural networks to hardware limited devices, like cell phones or pads, which will broaden the horizon of deep learning application. In addition, as a Bayesian method, not only a result, but also the knowledge of confidence or certainty in that result are provided, which could benefit people in various aspects. For example, in the application of cancer diagnostic, by providing the certainty associated with each possible outcome, Bayesian learning would assist the medical professionals to make a better judgement about whether the tumor is a cancer or a benign one. Such kind of ability to quantify uncertainty would contribute to the modern deep learning.

Acknowledgments and Disclosure of Funding

We would like to thank Wei Deng for the helpful discussion and thank the reviewers for their thoughtful comments. Qifan Song’s research is partially supported by National Science Foundation grant DMS-1811812. Guang Cheng was a member of the Institute for Advanced Study in writing this paper, and he would like to thank the institute for its hospitality.

References

- Blei et al., (2017) Blei, D., Kucukelbir, A., and McAuliffe, J. (2017). Variational inference: A review for statisticians. Journal of the American Statistical Association, 112:859–877.

- Blundell et al., (2015) Blundell, C., Cornebise, J., Kavukcuoglu, K., et al. (2015). Weight uncertainty in neural networks. In Proceedings of the 32nd International Conference on International Conference on Machine Learning (ICML 15), pages 1613–1622, Lille, France.

- Boucheron et al., (2013) Boucheron, S., Lugosi, G., and Massart, P. (2013). Concentration inequalities: A nonasymptotic theory of independence. Oxford University press.

- Cheng et al., (2018) Cheng, Y., Wang, D., Zhou, P., et al. (2018). Model compression and acceleration for deep neural networks: The principles, progress, and challenges. IEEE Signal Processing Magazine, 35(1):126–136.

- Chérief-Abdellatif, (2020) Chérief-Abdellatif, B.-E. (2020). Convergence rates of variational inference in sparse deep learning. In Proceedings of the 37th International Conference on Machine Learning (ICML 2020), Vienna, Austria.

- Chérief-Abdellatif and Alquier, (2018) Chérief-Abdellatif, B.-E. and Alquier, P. (2018). Consistency of variational bayes inference for estimation and model selection in mixtures. Electronic Journal of Statistics, 12(2):2995–3035.

- Deng et al., (2019) Deng, W., Zhang, X., Liang, F., and Lin, G. (2019). An adaptive empirical Bayesian method for sparse deep learning. In 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, Canada.

- Feng and Simon, (2017) Feng, J. and Simon, N. (2017). Sparse input neural networks for high-dimensional nonparametric regression and classification. arXiv preprint arXiv:1711.07592.

- Frankle and Carbin, (2018) Frankle, J. and Carbin, M. (2018). The lottery ticket hypothesis: Finding sparse, trainable neural networks. arXiv preprint arXiv:1803.03635.

- Gale et al., (2019) Gale, T., Elsen, E., and Hooker, S. (2019). The state of sparsity in deep neural networks. arXiv preprint arXiv:1902.09574.

- George and McCulloch, (1993) George, E. and McCulloch, R. (1993). Variable selection via gibbs sampling. Journal of the American Statistical Association, 88:881–889.

- Ghosal and Van Der Vaart, (2007) Ghosal, S. and Van Der Vaart, A. (2007). Convergence rates of posterior distributions for noniid observations. The Annals of Statistics, 35(1):192–223.

- Ghosh and Doshi-Velez, (2017) Ghosh, S. and Doshi-Velez, F. (2017). Model selection in Bayesian neural networks via horseshoe priors. arXiv preprint arXiv:1705.10388.

- Ghosh et al., (2018) Ghosh, S., Yao, J., and Doshi-Velez, F. (2018). Structured variational learning of Bayesian neural networks with horseshoe priors. In Proceedings of the 35th International Conference on Machine Learning (ICML 2018), Stockholm, Sweden.

- Goldt et al., (2019) Goldt, S., Advani, M. S., Saxe, A. M., Krzakala, F., and Zdeborová, L. (2019). Dynamics of stochastic gradient descent for two-layer neural networks in the teacher-student setup. In 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, Canada.

- Guo et al., (2018) Guo, Y., Zhang, C., Zhang, C., and Chen, Y. (2018). Sparse DNNs with improved adversarial robustness. In 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), pages 240–249, Montréal, Canada.

- Han et al., (2016) Han, S., Mao, H., and Dally, W. (2016). Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. In International Conference on Learning Representations (ICLR).

- Hernández-Lobato and Adams, (2015) Hernández-Lobato, J. and Adams, R. (2015). Probabilistic backpropagation for scalable learning of bayesian neural networks. In Proceedings of the 32nd International Conference on Machine Learning (ICML 2015), Lille, France.

- Hubin and Storvik, (2019) Hubin, A. and Storvik, G. (2019). Combining model and parameter uncertainty in bayesian neural networks. arXiv:1903.07594.

- Ishwaran and Rao, (2005) Ishwaran, H. and Rao, S. (2005). Spike and slab variable selection: Frequentist and bayesian strategies. Annals of Statistics, 33(2):730–773.

- Jang et al., (2017) Jang, E., Gu, S., and Poole, B. (2017). Categorical reparameterization with gumbel-softmax. In International Conference on Learning Representations (ICLR 2017).

- Jordan et al., (1999) Jordan, M., Ghahramani, Z., Jaakkola, T., et al. (1999). An introduction to variational methods for graphical models. Machine Learning.

- Kingma and Welling, (2014) Kingma, D. and Welling, M. (2014). Auto-encoding variational Bayes. arXiv:1312.6114.

- Le Cam, (1986) Le Cam, L. (1986). Asymptotic methods in statistical decision theory. Springer Science & Business Media, New York.

- Liang et al., (2018) Liang, F., Li, Q., and Zhou, L. (2018). Bayesian neural networks for selection of drug sensitive genes. Journal of the American Statistical Association, 113:955–972.

- Louizos et al., (2018) Louizos, C., Welling, M., and Kingma, D. P. (2018). Learning sparse neural networks through l0 regularization. In ICLR 2018.

- MacKay, (1992) MacKay, D. (1992). A practical bayesian framework for backpropagation networks. Nerual Computation.

- Maddison et al., (2017) Maddison, C., Mnih, A., and Teh, Y. W. (2017). The concrete distribution: A continuous relaxation of discrete random variables. In International Conference on Learning Representations (ICLR 2017).

- Mocanu et al., (2018) Mocanu, D., Mocanu, E., Stone, P., et al. (2018). Scalable training of artificial neural networks with adaptive sparse connectivity inspired by network science. Nature Communications, 9:2383.

- Molchanov et al., (2017) Molchanov, D., Ashukha, A., and Vetrov, D. (2017). Variational dropout sparsifies deep neural networks. In Proceedings of the 34th International Conference on Machine Learning (ICML 2017), pages 2498–2507.

- Neal, (1992) Neal, R. (1992). Bayesian learning via stochastic dynamics. In Advances in Neural Information Processing Systems 5 (NIPS 1992), pages 475–482.

- Neklyudov et al., (2017) Neklyudov, K., Molchanov, D., Ashukha, A., and Vetrov, D. (2017). Structured Bayesian pruning via log-normal multiplicative noise. In 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA.

- Pati et al., (2018) Pati, D., Bhattacharya, A., and Yang, Y. (2018). On the statistical optimality of variational bayes. In Proceedings of the 21st International Conference on Artificial Intelligence and Statistics (AISTATS) 2018, Lanzarote, Spain.

- Polson and Rockova, (2018) Polson, N. and Rockova, V. (2018). Posterior concentration for sparse deep learning. In 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), pages 930–941, Montréal, Canada.

- Schmidt-Hieber, (2017) Schmidt-Hieber, J. (2017). Nonparametric regression using deep neural networks with ReLU activation function. arXiv:1708.06633v2.

- Sønderby et al., (2016) Sønderby, C., Raiko, T., Maaløe, L., Sønderby, S., and Ole, W. (2016). How to train deep variational autoencoders and probabilistic ladder networks. In Proceedings of the 33rd International Conference on International Conference on Machine Learning (ICML 16), New York, NY.

- Tian, (2018) Tian, Y. (2018). A theoretical framework for deep locally connected ReLU network. arXiv preprint arXiv:1809.10829.

- Yang et al., (2020) Yang, Y., Pati, D., and Bhattacharya, A. (2020). -variational inference with statistical guarantees. Annals of Statistics, 48(2):886–905.

- Ye and Sun, (2018) Ye, M. and Sun, Y. (2018). Variable selection via penalized neural network: a drop-out-one loss approach. In Proceedings of the 35th International Conference on International Conference on Machine Learning (ICML 18), pages 5620–5629, Stockholm, Sweden.

- Zhang and Gao, (2019) Zhang, F. and Gao, C. (2019). Convergence rates of variational posterior distributions. arXiv preprint arXiv:1712.02519.

- Zhu and Gupta, (2018) Zhu, M. and Gupta, S. (2018). To prune, or not to prune: Exploring the efficacy of pruning for model compression. In International Conference on Learning Representations (ICLR).

Supplementary Document to the Paper "Efficient Variational Inference for Sparse Deep Learning with Theoretical Guarantee"

In this document, the detailed proofs for the theoretical results are provided in the first section, along with additional numerical results presented in the second section.

Appendix A Proofs of theoretical results

A.1 Proof of Lemma 4.1

As a technical tool for the proof, we first restate the Lemma 6.1 in Chérief-Abdellatif and Alquier, (2018) as follows.

Lemma A.1.

For any , the KL divergence between any two mixture densities and is bounded as

where .

Proof of Lemma 4.1

Proof.

It suffices to construct some , such that w.h.p,

where , are some positive constants if , or any diverging sequences if .

Recall , then can be constructed as

| (12) | |||

| (13) |

We define as follows, for :

| (14) |

where .

To prove (12), denote as the set of all possible binary inclusion vectors with length , then and could be written as mixtures

and

where is the probability for vector under prior distribution . Then,

where and are some fixed constants. The first inequality is due to Lemma A.1 and the second inequality is due to Condition .

Furthermore, by Appendix G of Chérief-Abdellatif, (2020), it can be shown

Noting that

Denote

Since ,

Noting that , then

where due to Cauchy-Schwarz inequality. Therefore, , and w.h.p., , where is some positive constant if or is any diverging sequence if . Therefore,

which concludes this lemma together with (12). ∎

A.2 Proof of Lemma 4.2

Under Condition 4.1 - 4.2, we have the following lemma that shows the existence of testing functions over , where denotes the set of parameter whose norm is bounded by .

Lemma A.2.

Let for any and some large constant M. Let . Then there exists some testing function and , , such that

Proof.

Due to the well-known result (e.g., Le Cam, (1986), page 491 or Ghosal and Van Der Vaart, (2007), Lemma 2), there always exists a function , such that

for all satisfying that .

Let denote the covering number of set , i.e., there exists Hellinger-balls with radius , that completely cover . For any (W.O.L.G, we assume belongs to the th Hellinger ball centered at ), if , then we must have that and there exists a testing function , such that

Now we define . Thus we must have

Note that

| (15) |

where is some positive constant, the first inequality is due to the fact

and , the second inequality is due to Lemma 10 of Schmidt-Hieber, (2017)444Although Schmidt-Hieber, (2017) only focuses on ReLU network, its Lemma 10 could apply to any 1-Lipchitz continuous activation function., and the last inequality is due to . Therefore,

for some . On the other hand, for any , such that , say belongs to the th Hellinger ball, then we have

where . Hence we conclude the proof. ∎

Lemma A.3 restates the Donsker and Varadhan’s representation for the KL divergence, whose proof can be found in Boucheron et al., (2013).

Lemma A.3.

For any probability measure and any measurable function with ,

Proof of Lemma 4.2

Proof.

Denote as the truncated parameter space , where is defined in Lemma A.2. Noting that

| (16) |

it suffices to find upper bounds of the two components in RHS of (16).

We start with the first component. Denote to be the truncated prior on set , i.e., , then by Lemma A.2 and the same argument used in Theorem 3.1 of Pati et al., (2018), it could be shown

| (17) |

for some , where . We further denote the restricted on as , i.e., , then by Lemma A.3 and (17), w.h.p.,

| (18) |

Furthermore,

and similarly,

Combining the above two equations together, we have

| (19) |

The second component of (16) trivially satisfies that . Thus, together with (19), we have that w.h.p.,

| (20) |

The second term in the RHS of (20) is bounded by w.h.p., due to Lemma 4.1, where is either positive constant or diverging sequence depending on whether diverges.

The third term in the RHS of (20) is bounded by

Conditional on ’s, follows a normal distribution , where . Thus conditional on ’s, the third term in the RHS of (20) is bounded by

| (21) |

Noting that for any diverging sequence , (21) is further bounded, w.h.p., by

Therefore, the third term in the RHS of (20) can be bounded by w.h.p. (by choosing ).

The fourth term in the RHS of (20) is bounded by

Similarly, the fifth term in the RHS of (20) is bounded by .

Combine all the above result together, w.h.p.,

where is some constant. ∎

Lemma A.4 (Chernoff bound for Poisson tail).

Let be a Poisson random variable. For any ,

Lemma A.5.

If for any positive diverging sequence , then w.h.p., .

Proof.

By Lemma 4.1, we have that w.h.p.,

where is either a constant or any diverging sequence, depending on whether diverges. By the similar argument used in the proof of Lemma 4.1,

where is a normal distributed , where . Therefore, , and . Since , we must have w.h.p., . On the other hand,

| (22) |

Let us choose , and , then the above inequality (22) implies that . Noting that , it further implies .

Under distribution , by Bernstein inequality,

for some constant , where the last inequality holds since .

Under distribution , is stochastically smaller than . Since , then by Lemma A.4,

for some . Trivially, it implies that w.h.p, for VB posterior .

∎

A.3 Main theorem

Theorem A.1.

Under Conditions 4.1-4.2, 4.4 and set for any constant , we then have that w.h.p.,

where is some positive constant and is any diverging sequence. If , and we truncated the VB posterior on , i.e., , then, w.h.p.,

where , and is the VB posterior mass of .

Proof.

The convergence under squared Hellinger distance is directly result of Lemma 4.1 and 4.2, by simply checking the choice of satisfies required conditions. The convergence under distance relies on inequality for when both and are bounded by . Then, w.h.p,

∎

Appendix B Additional experimental results

B.1 Comparison between Bernoulli variable and the Gumbel softmax approximation

Denote and , then we have that

Fig 4 demonstrates the functional convergence of towards as goes to zero. In Fig 4(a), by fixing , we show converges to as a function of . Fig 4 (b) demonstrates that converges to as a function of when is fixed. These two figures show that as , . Formally, Maddison et al., (2017) rigorously proved that converges to in distribution as approaches 0.

B.2 Algorithm implementation details for the numerical experiments

Initialization

As mentioned by Sønderby et al., (2016) and Molchanov et al., (2017), training sparse BNN with random initialization may lead to bad performance, since many of the weights could be pruned too early. In our case, we assign each of the weights and biases a inclusion variable, which could reduce to zero quickly in the early optimization stage if we randomly initialize them. As a consequence, we deliberately initialize to be close to 1 in our experiments. This initialization strategy ensures the training starts from a fully connected neural network, which is similar to start training from a pre-trained fully connected network as mentioned in Molchanov et al., (2017). The other two parameters and are initialized randomly.

Other implementation details in simulation studies

We set and during training. For Simulation I, we choose batch size and for (A) and (B) respectively, and run 10000 epochs for both cases. For simulation II, we use and run 7000 epochs. Although it is common to set up an annealing schedule for temperature parameter , we don’t observe any significant performance improvement compared to setting as a constant, therefore we choose in all of our experiments. The optimization method used is Adam.

B.3 Toy example: linear regression

In this section, we aim to demonstrate that there is little difference between the results using inverse-CDF reparameterization and Gumbel-softmax approximation via a toy example.

Consider a linear regression model:

We simulate a dataset with 1000 observations and 200 predictors, where , and for all other .

A spike-and-slab prior is imposed on such that

for , where and . The variational distribution is chosen as

We use both Gumbel-softmax approximation and inverse-CDF reparameterization for the stochastic optimization of ELBO, and plot posterior mean (blue curve) against the true value (red curve). Figure 5 shows that inverse-CDF reparameterization exhibits only slightly higher error in estimating zero coefficients than the Gumbel-softmax approximation, which indicates the two methods has little difference on this toy example.

B.4 Teacher student networks

The network parameter for the sparse teacher network setting (B) is set as following: ; .

Figure 6 displays the simulation result for simulation I under dense teacher network (A) setting. Unlike the result under sparse teacher network (B), the testing accuracy seems monotonically increases as increases (i.e., posterior network gets denser). However, as shown, the increasing of testing performance is rather slow, which indicates that introducing sparsity has few negative impact to the testing accuracy.

Coverage rate

In this paragraph, we explain the details of how we compute the coverage rate values of Bayesian intervals reported in the main text. A fixed point is prespecified, and let be 1000 equidistant points from to . In each run, we compute the Bayesian credible intervals of response means (estimated by 600 Monte Carlo samples) for 1000 different input ’s: . It is repeated by 60 times and the average coverage rate (over all different ’s and 60 runs) is reported. Similarly, we replace (or ) by (), and compute their average coverage rate. The complete coverage rate results are shown in Table 3. Note that Table 1 in the main text shows coverage of for (A) and coverage of for (B).

| 90 % coverage (%) | 95% coverage (%) | ||||||

|---|---|---|---|---|---|---|---|

| Method | |||||||

| Dense | SVBNN | 93.8 2.84 | 93.1 4.93 | 93.1 2.96 | 97.9 1.01 | 97.9 1.69 | 97.5 1.71 |

| VBNN | 85.8 2.51 | 82.4 2.62 | 86.3 1.88 | 92.7 2.83 | 91.3 2.61 | 91.4 2.43 | |

| VD | 61.3 2.40 | 60.0 2.79 | 64.9 6.17 | 74.9 1.79 | 71.8 2.33 | 76.4 4.75 | |

| HS-BNN | 83.1 1.67 | 80.0 1.21 | 76.9 1.70 | 88.1 1.13 | 84.1 1.48 | 83.5 0.78 | |

| Sparse | SVBNN | 92.3 8.61 | 94.6 5.37 | 98.3 0.00 | 96.4 4.73 | 97.7 3.71 | 100 0.00 |

| VBNN | 86.7 10.9 | 87.0 11.3 | 93.3 0.00 | 90.7 8.15 | 91.9 9.21 | 96.7 0.00 | |

| VD | 65.2 0.08 | 63.7 6.58 | 65.9 0.83 | 75.5 7.81 | 74.6 7.79 | 76.6 0.40 | |

| HS-BNN | 59.0 8.52 | 59.4 4.38 | 56.6 2.06 | 67.0 8.54 | 68.2 3.62 | 66.5 1.86 | |

B.5 Real data regression experiment: UCI datasets

We follow the experimental protocols of Hernández-Lobato and Adams, (2015), and choose five datasets for the experiment. For the small datasets "Kin8nm", "Naval", "Power Plant" and "wine", we choose a single-hidden-layer ReLU network with 50 hidden units. We randomly select and for training and testing respectively, and this random split process is repeated for 20 times (to obtain standard deviations for our results). We choose minibatch size , and run 500 epochs for "Naval", "Power Plant" and "Wine", 800 epochs for "Kin8nm". For the large dataset "Year", we use a single-hidden-layer ReLU network with 100 hidden units, and the evaluation is conducted on a single split. We choose , and run 100 epochs. For all the five datasets, is chosen as : , which is the same as other numerical studies. We let and use grid search to find that yields the best prediction accuracy. Adam is used for all the datasets in the experiment.

We report the testing squared root MSE (RMSE) based on (defined in the main text) with , and also report the posterior network sparsity . For the purpose of comparison, we list the results by Horseshoe BNN (HS-BNN) (Ghosh and Doshi-Velez,, 2017) and probalistic backpropagation (PBP) (Hernández-Lobato and Adams,, 2015). Table 4 demonstrates that our method achieves best prediction accuracy for all the datasets with a sparse structure.

| Test RMSE | Posterior sparsity(%) | ||||

|---|---|---|---|---|---|

| Dataset | SVBNN | HS-BNN | PBP | SVBNN | |

| Kin8nm | 8192 (8) | 0.08 0.00 | 0.08 0.00 | 0.10 0.00 | 64.5 1.85 |

| Naval | 11934 (16) | 0.00 0.00 | 0.00 0.00 | 0.01 0.00 | 82.9 1.31 |

| Power Plant | 9568 (4) | 4.01 0.18 | 4.03 0.15 | 4.12 0.03 | 56.6 3.13 |

| Wine | 1599 (11) | 0.62 0.04 | 0.63 0.04 | 0.64 0.01 | 59.9 4.92 |

| Year | 515345 (90) | 8.87 NA | 9.26 NA | 8.88 NA | 20.8 NA |

B.6 Real data classification experiment: MNIST dataset

The MNIST data is normalized by mean equaling 0.1306 and standard deviation equaling 0.3081. For all methods, we choose the same minibatch size , for our method and for the others, total number of epochs is 400 and the optimization algorithm is RMSprop. AGP is pre-specified at sparsity level.

Besides the testing accuracy reported in the main text, we also examine our method’s ability of uncertainty quantification for MNIST classification task. We first create ambiguous images by overlaying two examples from the testing set as shown in Figure 7 (a). To perform uncertainty quantification using our method, for each of the overlaid images, we generate from the VB posterior for , and calculate the associated predictive probability vector where is the overlaid image input, and then use the estimated posterior mean as the Bayesian predictive probability vector. As a comparison, we also calculate the predictive probability vector for each overlaid image using AGP as a frequentist benchmark. Figure 7 (b) shows frequentist method gives almost a deterministic answer (i.e., predictive probability is almost 1 for certain digit) that is obviously unsatisfactory for this task, while our VB method is capable of providing knowledge of certainty on these out-of-domain inputs, which demonstrates the advantage of Bayesian method in uncertainty quantification on the classification task.

B.7 Illustration of CNN: Fashion-MNIST dataset

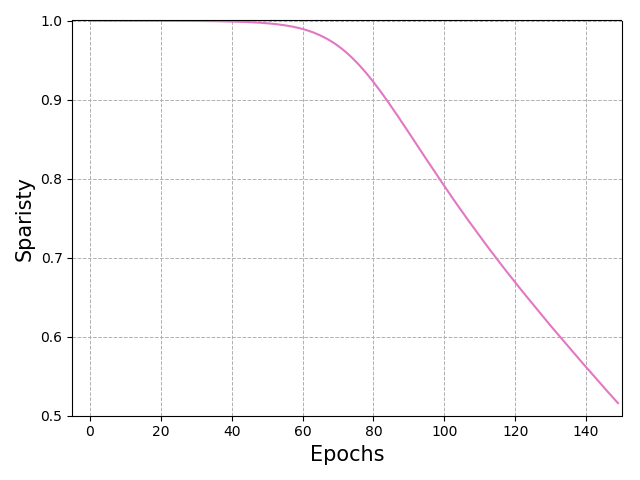

In this section, we perform an experiment on a more complex task, the Fashion-MNIST dataset. To illustrate the usage of our method beyond feedforward networks, we consider using a 2-Conv-2-FC network: The feature maps for the convolutional layers are set to be 32 and 64, and the filter size are and respectively. The paddings are 2 for both layers and the it has a max pooling for each of the layers; The fully-connected layers have neurons. The activation functions are all ReLUs. The dataset is prepocessed by random horizontal flip. The batchsize is 1024, learning rate is 0.001, and Adam is used for optimization. We run the experiment for 150 epochs.

We use both SVBNN and VBNN for this task. In particular, the VBNN, which uses normal prior and variational distributions, is the full Bayesian method without compressing, and can be regarded as the baseline for our method. Figure 8 exhibits our method attains higher accuracy as epoch increases and then decreases as the sparisty goes down. Meanwhile, the baseline method - full BNN suffers from overfitting after 80 epochs.