∎

410 Allen Hall, 175 President’s Circle, Mississippi State, MS, 39762

22email: [email protected]

Efficient exponential Runge–Kutta methods of high order: construction and implementation ††thanks: This work has been supported in part by National Science Foundation through award NSF DMS–2012022.

Abstract

Exponential Runge–Kutta methods have shown to be competitive for the time integration of stiff semilinear parabolic PDEs. The current construction of stiffly accurate exponential Runge–Kutta methods, however, relies on a convergence result that requires weakening many of the order conditions, resulting in schemes whose stages must be implemented in a sequential way. In this work, after showing a stronger convergence result, we are able to derive two new families of fourth- and fifth-order exponential Runge–Kutta methods, which, in contrast to the existing methods, have multiple stages that are independent of one another and share the same format, thereby allowing them to be implemented in parallel or simultaneously, and making the methods to behave like using with much less stages. Moreover, all of their stages involve only one linear combination of the product of -functions (using the same argument) with vectors. Overall, these features make these new methods to be much more efficient to implement when compared to the existing methods of the same orders. Numerical experiments on a one-dimensional semilinear parabolic problem, a nonlinear Schrödinger equation, and a two-dimensional Gray–Scott model are given to confirm the accuracy and efficiency of the two newly constructed methods.

Keywords:

Exponential Runge–Kutta methods Exponential integrators Stiff PDEs Efficient implementationMSC:

MSC 65L04 MSC 65M06 MSC 65N121 Introduction

In this paper, we are concerned with the construction and implementation of new efficient exponential Runge–Kutta integrators for solving stiff parabolic PDEs. These PDEs, upon their spatial discretizations, can be cast in the form of semilinear problems

| (1) |

where the linear part usually causes stiffness. The nonlinearity is assumed to satisfy a local Lipschitz condition in a strip along the exact solution.

Exponential Runge–Kutta methods are a popular class of exponential integrators HO10 , which have shown a great promise as an alternative to standard time integration solvers for stiff systems and applications in recent years, see e.g. HO05 ; LO12b ; LO14b ; LO13 ; LO14a ; Luan2014 ; LO16 ; L17 ; Michels2017 ; Ju2017 ; LD18 ; Luan18 ; Pieper2019a . The main idea behind these methods is to solve the linear portion of (1) exactly and integrate the remaining nonlinear portion explicitly based on a representation of the exact solution using the variation-of-constants formula.

A -stage explicit exponential Runge–Kutta (expRK) method HO05 applied to (1) can be reformulated (see LO12b ; LO14b ) as

| (2a) | ||||

| (2b) | ||||

where

| (3) |

Here, denote the internal stages that approximate using the time step size and nodes . By construction, the coefficients and are usually linear combinations of the entire functions

| (4) |

and their scaled versions .

A common approach that has been used to determine the unknown matrix functions and is to expand them as , (e.g. using classical Taylor series expansions) to obtain order conditions. Clearly, the boundedness of the remainder terms of these expansions (and thus the error terms) are dependent of . Due to stability reasons, such resulting methods might not be suitable for integrating stiff PDEs, which typically has a large norm or is even unbounded operator. These methods are thus usually referred as classical (non-stiffly accurate) expRK methods. Unlike this approach, in a seminal contribution HO05 , Hochbruck and Ostermann derived a new error expansion with the remainder terms that are bounded independently of the stiffness (i.e. not involving the powers of ), leading to stiff order conditions, which give rise to the construction of stiffly accurate expRK methods of orders up to four. Following this, in LO13 Luan and Ostermann developed a systematic theory of deriving stiff order conditions for expRK methods of arbitrary order, thereby allowing the construction of a fifth-order method in LO14b .

In view of the existing stiffly accurate expRK methods in the literature, we observe that they were derived based on a convergence result that requires weakening many of the stiff order conditions (in order to minimize the number of required stages and matrix functions used in each internal stages ). As a result, their structures contain internal stages that are dependent of the preceding stages, implying that such methods must be implemented by computing each of these stages sequentially. Also, the very last stages usually involve several different linear combinations of -functions (using different nodes in their arguments) acting on different sets of vectors. This would introduce additional computational effort for these stages. For more details, we refer to Sections 2 and 5.

Motivated by the observations above, in this work we show a stronger convergence result for expRK methods up to order five which requires weakening only one order condition (thereby could improve the stability and accuracy) and offers more degree of freedoms in solving order conditions. Using this result and inspired by our recent algorithm, , proposed in Luan18 (which allows one to simultaneously compute multiple linear combinations of - functions acting on a same set of vectors), we construct new methods of orders 4 and 5 which involve only one linear combination of - functions for each stage and have multiple internal stages that are independent of one another, thereby allowing them to be computed in parallel. Furthermore, one can derive these independent stages in a way that they share the same form of linear combination of - functions acting on the same set of vectors, allowing them to be implemented simultaneously (by one evaluation). While these independent states can be computed in parallel (as mentioned above) by any algorithm which approximates the action of (the linear combination of) - functions, we note that the possibility to compute them simultaneously is a new feature that can be used with our algorithm (other algorithms, e.g., that do not require the construction of Krylov subspaces, might not support computing these stages simultaneously). Overall, this makes the new methods to behave like methods using much less number of stages (even when compared to the existing methods of the same orders), meaning that they require much less number of evaluations for linear combinations of - functions, and are thus more efficient.

The paper is organized as follows. In Section 2, we describe our motivation, propose new ideas, and review the existing expRK methods in the literature with respect to these ideas. Following this, in Section 3 we prove a stronger convergence result (Theorem 3.1) for expRK methods, which requires relaxing only one order condition. This allows us to construct more efficient methods in Section 4. In particular, we are able to derive two new families of fourth- and fifth- order stiffly accurate expRK methods called (4th-order 6-stage but requires 4 independent stage evaluation only) and (5th-order 10-stage but requires 5 independent stage evaluation only), respectively. In Section 5, we present details implementation of these two new methods, as well as the existing stiffly accurate expRK schemes of the same orders (for comparison). In the last section, numerical examples including one and two-dimensional stiff PDEs are presented to demonstrate the accuracy and efficiency of the two newly constructed expRK integrators.

2 Motivation and existing methods

In this section, we start our motivation by taking a closer look at an efficient way for implementing expRK methods (2). Then, we propose some ideas to derive more efficient methods with respect to this efficient implementation along with reviewing the current methods.

2.1 An efficient way of implementation

Clearly, each stage ( or ) of (2) requires computing matrix functions of the form (), where is some vector (could be or a linear combination of these). Thanks to recent developments AH11 ; NW12 ; CKOS16 ; GaudreaultPudykiewicz16 , one can efficiently compute a linear combination of -functions acting on a set of input vectors

| (5) |

where is some square matrix. This is crucial when implementing exponential integrators. Very recently, in Luan18 , we were able to improve the implementations presented in NW12 ; GaudreaultPudykiewicz16 , resulting in the routine . The underlying method in this algorithm is the use of an adaptive time-stepping technique combined with Krylov subspace methods, which allows us to simultaneously compute multiple linear combinations of type (5) using different scaling factors of , i.e.,

| (6) | ||||

Now taking and considering () as nodes used in expRK methods immediately suggests that one can compute the following () linear linear combinations

| (7) |

simultaneously by using only one evaluation (i.e., one call to ). Note that this requires the use of a same set of vectors for all the linear combinations in (7).

Motivated by this, we see that if a -stage expRK scheme (2) is constructed in such a way that each internal stage has the form

| (8) |

which includes only one linear combination of - functions using exactly node as an argument in all functions, then the scheme will contain a total of such linear combinations ( for and 1 for as (2b) can be always written in the form of (8) with ), thereby requiring evaluations only. Furthermore, since the sets of vectors in (8) are usually different for each , (7) also suggests that the efficiency will be significantly increased if one could build such stages (or a group of) of the form (8) that share the same format (i.e., having the same set of acting vectors ) or that are independent of one another. As this allows to compute such stages simultaneously by one evaluation or to implement them in parallel similarly to our construction of parallel exponential Rosenbrock methods LO16 ), it certainly reduces the total number of required evaluations and thus speedups the computing time.

With respect to these observations, we now review the existing expRK schemes in the literature. Since our focus is on stiff problems, we will discuss only on stiffly accurate expRK methods, meaning that they satisfy the stiff order conditions (see Section 3 below).

2.2 Existing schemes and remarks

In HO05 , expRK methods of orders up to four have been derived. For later reference, we name the second-order, the third-order, and the fourth-order methods in that work as , , and , respectively. In LO14b , we have constructed an expRK method of order five called . To discuss all of these schemes in terms of the implementation, we rewrite their internal stages and as linear combinations of - functions like (8) and display them as follows (Note that since the first-order method, the exponential Euler scheme has no internal stage, we do not consider it here).

:

| (9) | ||||

(a representative with ):

| (10) | ||||

(the only existing fourth-order stiffly accurate expRK method constructed by Hochbruck and Ostermann HO05 ):

| (11) | ||||

(the only existing fifth-order stiffly accurate expRK method constructed by Luan and Ostermann LO14b ):

Remark 1

In view of the structures of these schemes, one can see that only the second- and third-oder schemes (, ) have all in the form (8). While requires one internal stage , needs two internal stages with depends on , making these stages cannot be computed simultaneously. As for , to the best of our knowledge, this 5-stage scheme is the only existing fourth-order stiffly accurate expRK method. As seen, among its internal stages the three internal stages , , and are of the form (8) but again their corresponding sets of vectors are not the same (), and because of (3), they are not independent of one another ( and depend on their preceding stages). Therefore one needs 3 sequential evaluations for computing these three stages. Also, we note that the last internal stage depends on all of its preceding stages and involves two different linear combinations of - functions with different scaling factors and , namely, and (grouped in two different brackets ), which has to be implemented by 2 separate evaluations. The final stage depends on and . As a result, this scheme must be implemented in a sequential way, which requires totally 6 evaluations for 6 different linear combinations. Similarly, to the best of our knowledge, is also the only existing fifth-order stiffly accurate expRK methods. From the construction of this scheme LO14b , one needs 8 stages. Among them, the first five internal stages are of the form (8). We note, however, that the last two internal stages and involves 2 and 3 different linear combinations (grouped in different brackets ) of - functions (with different scaling factors) acting on different sets of vectors. And each stage ( or ) depends on all the preceding stages (except for the first stage ). Thus, this scheme must be also implemented in a sequential way (it also does not have any group of internal stages that can be computed simultaneously). Clearly, it requires totally 11 evaluations (11 different linear combinations of functions).

Remark 2

The resulting structures of the expRK schemes discussed in Remark 1 can be explained by taking a closer look at their constructions presented in HO05 ; LO14b . Namely, these methods have been analyzed and derived by using a weakened convergence result, i.e., weakening of many order conditions in order to minimize the number of required stages and the number of matrix functions in each internal stage . Specifically, for fourth-order methods (e.g., ) 4 out of 9 order conditions have to be relaxed and for fifth-order methods (e.g., ) 9 out of 16 order conditions have to be relaxed. As a trade off, each stage of these methods depends on the preceding stages (thus the resulting schemes must be implemented by computing each stage sequentially) and the very last stages usually involve different linear combinations of -functions (with several different nodes as scaling factors) acting on not the same set of vectors, which then require additional sequential evaluations. For more details, see Section 4 below.

3 Stiff order conditions and convergence analysis

Inspired by the motivation and remarks in Section 2, we next present a stronger convergence result which later allows a construction of new efficient methods of high order. For this, we first recall the stiff order conditions for expRK methods up to order 5 (see LO12b ; LO14b ).

3.1 Stiff order conditions for methods up to order 5

Let denote the local error of (2), i.e., the difference between the numerical solution obtained by (2) after one step starting from the ‘initial condition’ and the corresponding exact solution of (1) at .

To simplify the notation in this section we set as done in HO05 , and additionally denote be the -th partial Fréchet derivative (with respect to ) evaluated at . Our results in LO12b (Sect. 4.2) or LO13 (Sect. 5.1) showed that

| (12) | ||||

with the remaining terms

| (13) | ||||

Here, (and from now on) we use the abbreviations , and

| (14a) | ||||

| (14b) | ||||

Requiring a local error truncation results in the stiff order conditions for methods of order up to 5, which are displayed in Table 1 below.

| No. | Stiff order condition | Order |

|---|---|---|

| 1 | 2 | |

| 2 | 3 | |

| 3 | 3 | |

| 4 | 4 | |

| 5 | 4 | |

| 6 | 4 | |

| 7 | 4 | |

| 8 | 5 | |

| 9 | 5 | |

| 10 | 5 | |

| 11 | 5 | |

| 12 | 5 | |

| 13 | 5 | |

| 14 | 5 | |

| 15 | 5 | |

| 16 | 5 |

3.2 A stronger convergence result

The convergence analysis of exponential Runge–Kutta methods is usually performed in the framework of analytic semigroups on a Banach space with the following assumptions (see e.g. HO05 ; LO14b ):

Assumption 1. The linear operator is the infinitesimal generator of an analytic semigroup on . This implies that

| (15) |

and consequently , the coefficients and of the method are bounded operators. Furthermore, the following stability bound (see (HO05, , Lemma 1))

| (16) |

holds uniformly for all and with .

Assumption 2 (for high-order methods). The solution of (1) is sufficiently smooth with derivatives in and is sufficiently often Fréchet differentiable in a strip along the exact solution. All occurring derivatives are assumed to be uniformly bounded.

Let denote the global error at time . In LO14b , we have shown that satisfies the recursion

| (17) |

where are bounded operators on .

Motivated by Remark 2, we now give a stronger convergence result (compared to those results given in HO05 ; LO14b ) in the sense that it requires relaxing only one order condition.

Theorem 3.1

(Convergence) Let the initial value problem (1) satisfy Assumptions 1–2. Consider for its numerical solution an explicit exponential Runge–Kutta method (2) that fulfills the order conditions of Table 1 up to order () in a strong form with the exception that only one condition holds in a weakened form, i.e., . Then, the method is convergent of order . In particular, the numerical solution satisfies the error bound

| (18) |

uniformly on compact time intervals with a constant that depends on , but is independent of and .

Proof

The proof can be carried out in a very similar way as done in (LO14b, , Theorem 4.2). In view of (12) and (13) and employing the assumptions of Theorem 3.1 on the order conditions, we have and thus

| (19) |

where is defined in Section 3.1 and involves the terms multiplying and higher order in (12) (clearly, ). Inserting (19) (with index in place of ) into (17) and using the fact that there exists a bounded operator such that yields

| (20) |

Using (15), (16) and an application of a discrete Gronwall lemma shows (18).∎

With the result of Theorem 3.1 in hand, we are now ready to derive more efficient methods. In particular, we will solve the system of stiff order conditions of Table 1 in the context of Theorem 3.1. It turns out that for methods of high order this will require an increase in the number of stages . However, we will have more degree of freedoms for constructing our desired methods as seen in Section 4 below. In addition, by relaxing only one order condition, we expect methods resulted from Theorem 3.1 to have better stability (and thus may be more accurate) when integrating stiff systems (see Section 6).

4 Derivation of new efficient exponential Runge–Kutta methods

In this section, we will derive methods which have the following features: (i) containing multiple internal stages that are independent of each other (henceforth called parallel stages) and share the same format (thereby allowing them to be implemented in parallel); (ii) involving less number of evaluations of the form (8) when compared to the existing methods of the same orders (thus behaving like methods that use fewer number of stages ).

We first start with methods of order . When solving order conditions for these methods (requiring at least and for second- and third-order methods, respectively), one can easily show that it is not possible to fulfill the desired feature (ii), particularly when comparing with (order 2, 2-stage) and (order 3, 3-stage) mentioned in Section 2. We omit the details. Therefore, we will focus on the derivation of new methods of higher orders, namely, orders 4 and 5.

4.1 A family of fourth-order methods with parallel stages

Deriving methods of order 4 requires solving the set of 7 stiff order conditions 1–7 in Table 1. First, we discuss on the required number of stages . It is shown in (HO05, , Sect.5.3) that is the minimal number of stages required to construct a family of fourth-order methods which satisfies conditions 1–3 in the strong sense and conditions 4–7 in the weakened form (relaxing as ). In other words, with it is not possible to fulfill the order conditions in the context of Theorem 3.1, which requires only condition 4 holds in a weakened form or equivalently . Therefore, we consider . In this case, conditions 1, 2, and the weakened condition 4 are

| (21a) | ||||

| (21b) | ||||

| (21c) | ||||

and conditions 3, 5, 7 and 6 are

| (22a) | |||

| (22b) | |||

| (22c) | |||

| (22d) | |||

We now solve these order conditions. We note from (14b) that

| (23) |

and thus (since ). Using (23), one can infer that either or must be nonzero as well (if both are zero then , which is impossible since and are linearly independent). This strongly suggests that in order to later fulfill (22) in the strong sense with arbitrary square matrices and . Next, we further observe that if one may need both (which solves , ). However, this makes the second term in (22d) to be nonzero which is then very difficult to satisfy (22d) in the strong form. Putting together, it requires that . Using this sufficient condition we can easily solve (21) to get

for any choice of distinct nodes , satisfying the condition

| (24) |

Since , we must enforce and to satisfy conditions (22a)–(22c). Using (23), this leads to the following 2 systems of two linear equations

| (25a) | ||||

| (25b) | ||||

and

| (26a) | ||||

| (26b) | ||||

To satisfy conditions (22d), we further enforce (since ), which immediately solves (25) for coefficients (with )

| (27) |

and thus we also need (since ), which gives

| (28a) | ||||

| (28b) | ||||

After fulfilling all the required order conditions in (21)–(22), we see from (26) and (28b) that either or and one of the coefficients among can be taken as free parameters. We now use them to construct parallel stages. Guided by (27) and (28a), we choose to make is independent of so that both these stages only depend on , and choose to make is independent of so that both these stages only depend on the two preceding stages (since ). From this we determine the remaining coefficients

| (29) |

Putting altogether and rearranging terms in as linear combinations of functions, we obtain the following family of 4th-order 6-stage methods (with the pairs of parallel stages and ), which will be called :

| (30a) | ||||

| (30b) | ||||

| (30c) | ||||

| (30d) | ||||

For the numerical experiments given in Section 6, we choose , which gives due to (24).

Remark 3

(A comparison with ). As seen, is resulted from weakening only condition 4 of Table 1 instead of weakening four conditions 4–7 as in the derivation of . While the 5-stage method requires 6 sequential evaluations in each step (as mentioned in Section 2), the new fourth-order 6-stage method requires only 4 sequential evaluations, making it to behave like a 4-stage method. This is due to the fact has the pairs of parallel stages and and all within these pairs have the same format, i.e., same (one) linear combination of , allowing them to be computed in parallel or simultaneously (see Section 5).

4.2 A family of fifth-order methods with parallel stages

Constructing fifth-order exponential Runge-Kutta methods needs much more effort as one has to solve 16 order conditions in Table 1. As mentioned in Section 2, the only existing method of order 5 in the literature is (see LO14b ) which requires stages. Like , this method does not have any parallel stages and must be implemented in a sequential way. It also does not satisfy the assumption on the order conditions stated in Theorem 3.1. Indeed, it was constructed by fulfilling conditions 1–7 in the strong form and weakening conditions 8–16 (9 out of 16 order conditions) with in place of . This resulted in the last two internal stages and that involve several different linear combinations of (with different scalings of ), for which additional computational efforts are required to compute those stages (as shown in Section 2).

Therefore, to derive a method based on Theorem 3.1 which later allows us to derive parallel stages schemes with involving only one linear combination of , we have to increase . To make it easier for readers to follow, we consider first and later employ the similar procedure to show that it is not possible to fulfill condition 11 of Table 1 in the strong form (and thus not satisfying Theorem 3.1) with .

a) The case : Similarly to the derivation presented in Subsection 4.1, using (23), it strongly suggests in order to solve conditions 3, 5, 9, 7, 16, 13, and 15 in their strong form. Using this, these conditions now read as

| (31a) | |||

| (31b) | |||

| (31c) | |||

| (31d) | |||

| (31e) | |||

| (31f) | |||

| (31g) | |||

respectively. And conditions 1, 2, 4, and 8 (weakened form) become

| (32a) | ||||

| (32b) | ||||

| (32c) | ||||

| (32d) | ||||

Solving (32) gives

| (33a) | ||||

| (33b) | ||||

| (33c) | ||||

where , and are distinct and positive nodes satisfying the algebraic equation

| (34) |

Clearly, so one has to enforce

| (35) |

to satisfy (31) in the strong sense with arbitrary square matrices and . Next, we consider conditions 6 and 10 taken into account that () and (35), which can be now simplified as

| (36) |

respectively. In order to satisfy the strong form of (36) one needs

| (37) |

(this is again due to (23)) and

| (38) |

With (37), we note that are independent of the internal stages . Taking into all the requirements above, one can easily see that conditions 12 and 14 are now automatically fulfilled. Therefore, the only remaining condition to satisfy is condition 11.

Before working with condition 11, we first solve (35) using (37). For this, we observe that several coefficients can be considered as free parameters. To have are independent of each other, we choose

| (39) |

The resulting systems of linear equations from (35) is then solved with the unique solution

| (40) |

(i.e., are distinct nodes).

We now use (), (35), (37), (38), and (39) to simplify condition 11 as

| (41) |

Since , coefficients in (40) () are also nonzero, and that , we must enforce

| (42) |

and require that

| (43) |

in order to satisfy (41) in the strong sense. Note, because of (42), one could not require or () in (41) or both as this does not agree with the requirement in (38) (in other words, the linear system of equations displayed in (25) represented for this requirement has no solution). This justifies the requirement (43).

Finally, we solve (43) and (38) for the remaining coefficients . When solving (43) (see (28)), we choose to have is independent of . This gives

| (44) |

When solving (38) (using (42)), we choose to have are independent of each other. This results in the following 6 coefficients:

| (45) |

(i.e., are distinct nodes).

Inserting all the obtained coefficients and into and rewriting these stages as linear combinations of functions, we obtain the following family of 5th-order 10-stage methods (with the groups of parallel stages , , and ) which will be called :

where

| (46) |

with for , and for (note that are distinct indices and that are distinct (positive) nodes).

For our numerical experiments, we choose

, , ,

, , and (satisfying (34)).

Remark 4

(A comparison with ). Although the new fifth-order method has 10 stages (compared to 8 stages of displayed in Section 2), its special structure offers much more efficient for implementation. In particular, all in this scheme involve only one linear combination of which can be computed by one evaluation for each; and more importantly, due to the same format of multiple stages within each of the three groups , , and (same linear combination with different inputs ), they can be computed simultaneously or implemented in parallel (see Section 5). This makes to behave like a 5-stage method only, thereby requiring only 5 sequential evaluations in each step. Moreover, while requires weakening 9 out of 16 order conditions of Table 1, requires only one condition (number 8) held in the weakened form. Note that by following the similar way of deriving , we can derive a scheme that satisfies all the stiff order conditions in Table 1 in the strong sense with . Such a scheme, however, still behaves like a 5-stage method. Therefore, we do not discuss further on this case.

b) The case (which does not work): Clearly, in this case we have less degree of freedoms than the case when solving the order conditions in Table 1. Nonetheless, one can still proceed in a similar way as done for . Again, it strongly suggests (which solves for from conditions 1, 2, 4) and

| (47) |

in order to satisfy conditions 1, 2, 3, 4, 5, 7, 9, 13, 15, 16 in the strong form. With this, conditions 6 and 10 now become

| (48) |

Again, due to the fact that and either or must be nonzero, one needs to enforce in (48). Using this to solve (47) for () gives a unique solution (with and are distinct) for , which then determines . Next, one can solve (47) for to obtain that are independent of , as well as are independent of each other, by requiring the three free parameters . As a result, one gets . This immediately suggests to completely fulfill (48) with arbitrary square matrix . With all of these in place, conditions 12 and 14 are automatically fulfilled, and condition 11 is now reduced to

| (49) |

Clearly, since , , and , (49) can be satisfied in the strong sense only if we have one of the following conditions: or . Unfortunately, either of these requirements is in contradiction with which is needed for conditions 6 and 10 mentioned above. For example, solving results in .

5 Details implementation of fourth- and fifth-order schemes

In this section, we present details implementation of the old and new fourth- and fifth-order expRK schemes (, , , ) mentioned above.

As mentioned in Section 2.1, we will use the MATLAB routine phipm_simul_iom (described in details in Luan18 ) to implement expRK methods. In particular, given the following inputs: an array of scaling factors with ( could be a positive scalar), an -by- matrix , and a set of column vectors (each is an -by- vector), a tolerance , an initial value for the dimension of the Krylov subspace, and an incomplete orthogonalization length of , a call to this function

| (50) |

simultaneously computes the following linear combinations

| (51) |

Note that, by setting (), (51) becomes (6). In other words, all the linear combinations in (6) ( if are given instead of ) can be then computed at the same time with one call (50) by using scaled vectors for the input .

In the following, we set

| (52) |

to simplify notations in presenting details of implementation of the fourth- and fifth-order methods mentioned above. When calling (50), we use , (default value), and (as in Luan18 ).

Implementation of (): As discussed in Remark 1, requires a sequential implementation of the following 6 different linear combinations of the form (51), corresponding to 6 calls to phipm_simul_iom:

-

(i)

Evaluate with to get .

-

(ii)

Evaluate with to get .

-

(iii)

Evaluate with to get .

-

(iv)

Evaluate with and

-

(v)

Evaluate with

to get . -

(vi)

Evaluate with to get .

Since which depends on , these are the 6 (sequential) evaluations.

Implementation of (): As discussed in Remark 3, can be implemented like a 4-stage method by evaluating the following 4 sequential evaluations, corresponding to 4 calls to phipm_simul_iom:

-

(i)

Evaluate with to get .

-

(ii)

Evaluate and simultaneously using to get both and .

-

(iii)

Evaluate and simultaneously with to get both and .

-

(iv)

Evaluate with to get .

Implementation of (): As discussed in Remark 1, requires a sequential implementation of 11 different linear combinations of the form (51), corresponding to the following 11 calls to phipm_simul_iom:

-

(i)

Evaluate with to get .

-

(ii)

Evaluate with to get .

-

(iii)

Evaluate with to get .

-

(iv)

Evaluate with to get .

-

(v)

Evaluate with to get .

-

(vi)

Evaluate with and

-

(vii)

Evaluate with to get .

-

(viii)

Evaluate with and

-

(ix)

Evaluate with and

-

(x)

Evaluate with

to get . -

(xi)

Evaluate with to get .

Implementation of (, , , , , and ): As discussed in Remark 4, can be implemented like a 5-stage method by evaluating the following 5 sequential evaluations, corresponding to 5 calls to phipm_simul_iom:

-

(i)

Evaluate with to get .

-

(ii)

Evaluate and simultaneously using to get both and .

-

(iii)

Evaluate , , and simultaneously using ,

to get , , . -

(iv)

Evaluate , , and simultaneously using ,

to get , , . -

(v)

Evaluate with to get (coefficients are given in (46)).

6 Numerical experiments

In this section, we demonstrate the efficiency of our newly derived fourth- and fifth-order expRK time integration methods (, ). Specifically, we will compare their performance against the existing methods of the same orders (, ) on several examples of stiff PDEs. All the numerical simulations are performed in MATLAB on a single workstation, using an iMac 3.6 GHz Intel Core i7, 32 GB 2400 MHz DDR4.

Example 1

(A one-dimensional semilinear parabolic problem HO05 ): We first verify the order of convergence for the new derived fourth- and fifth-order expRK schemes (, ) by considering the following PDE for , , and subject to homogeneous Dirichlet boundary conditions,

| (53) |

whose exact solution is known to be for a suitable choice of the source function .

Spatial discretization: For this example, we use standard second order finite differences with grid points. This leads to a very stiff system of the form (1) (with ).

The resulting system is then integrated on the time interval using constant step sizes, corresponding to the number of time steps . The time integration errors at the final time are measured in the maximum norm.

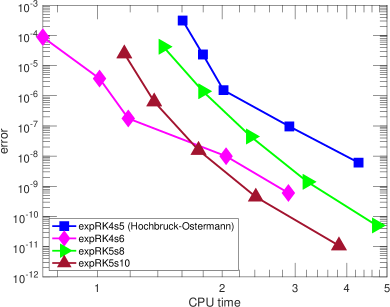

In Figure 1, we plot orders for all the employed integrators in the left diagram and the total CPU time versus the global errors in the right diagram. The left diagram clearly shows a perfect agreement with our convergence result in Theorem 3.1, meaning that the two new integrators and fully achieve orders 4 and 5, respectively. When compared to the old integrators of the same orders and , we note that, given the same number of time steps, is slightly more accurate but is much faster than (see the right diagram). In a similar manner, gives almost identical global errors but is also much faster than . Finally, we observe that, for this example, for a global error that is larger than , the new fourth-order method is the fastest one, and for more stringent errors, is the fastest integrator.

|

Example 2

(A nonlinear Schrödinger equation Cazenave1989 ; Berland2005 ): We consider the following one-dimensional nonlinear Schrödinger (NLS) equation with periodic boundary conditions

| (54) | ||||

where the potential function , the initial condition , and the constant (see Berland2005 ).

Spatial discretization: For this example, we use a discrete Fourier transform with modes, leading to a mildly stiff system of the form (1) with

| (55) | ||||

Next, we integrate this system on the time interval with constant step sizes, corresponding to the number of time steps , . Since the exact solution of (54) is unknown, a reliable reference solution is computed by the stiff solver with . Again, the time integration errors are measured in a discrete maximum norm at the final time .

As seen from the two double-logarithmic diagrams in Figure 2, we plot the accuracy of the four employed integrators (, , , and ) as functions of the number of time steps (left) and the total CPU time (right). The left digram clearly indicates that the two new integrators and achieve their corresponding expected orders 4 and 5. While is a little more accurate than , is much more accurate than for a given same number of time steps, meaning that it can take much larger time steps while achieving the same accuracy. Moreover, the right precision digram displays the efficiency plot indicating that both and are much faster than their counterparts and , respectively. More specifically, a similar story is observed: for lower accuracy requirements, say error , the new fourth-order method is the most efficient, whereas for error or tighter the new fifth-order method is the most efficient.

|

Example 3

(A 2D Gray–Scott model Gray1984 ; Berland2007 ): Consider the following two-dimensional reaction-diffusion equation–the Gray–Scott equation model, for on the square , (here, we choose ) subject to periodic boundary conditions

| (56) | ||||

where is the Laplacian operator, the diffusion coefficients , and the bifurcation parameters . The initial conditions are Gaussian pulses

Spatial discretization: For this example, we use standard second order finite differences using 150 grid points in each direction with mesh width . This gives a stiff system of the form (1).

The system is then solved on the time interval using constant step sizes. In the absence of an analytical solution of (56), a high-accuracy reference solution is computed using the method with a sufficient small time step. Errors are measured in a discrete maximum norm at the final time .

In Figure 3, using the same number of time steps , , we again display the order plots of the taken integrators. One can see that is much more accurate than and is slightly more accurate than .

In Figure 4, we display the efficiency plot for which the time step sizes were chosen for each integrator to obtain about same error thresholds (The corresponding number of time steps for each integrator are displayed in Table 2. As seen, given about the same level of accuracy, the new methods use smaller steps than the old ones of the same order, meaning that they can take larger step sizes). Again, is much faster than and it is interesting that this new fourth-order method turns out to be the most efficient (although for error thresholds tighter than the new fifth-order method seems to become the most efficient).

| Method | Error threshold vs. Number of time steps | ||||||

|---|---|---|---|---|---|---|---|

| 18 | 36 | 66 | 121 | 215 | 385 | 685 | |

| 10 | 19 | 28 | 46 | 122 | 230 | 420 | |

| 7 | 18 | 33 | 57 | 92 | 149 | 238 | |

| 8 | 17 | 30 | 51 | 82 | 130 | 208 | |

The numerical results presented on the three examples above clearly confirm the advantage of constructing parallel stages expRK methods based on Theorem 3.1, leading to more efficient and accurate methods and .

Acknowledgements.

The author would like to thank Reviewer 1 for the valuable comments and helpful suggestions. He would like also to thank the National Science Foundation, which supported this research under award NSF DMS–2012022.References

- (1) Al-Mohy, A.H., Higham, N.J.: Computing the action of the matrix exponential, with an application to exponential integrators. SIAM J. Sci. Comput. 33, 488–511 (2011)

- (2) Berland, H., Skaflestad, B.: Solving the nonlinear Schrödinger equation using exponential integrators. Tech. rep. (2005)

- (3) Berland, H., Skaflestad, B., Wright, W.M.: Expint—a matlab package for exponential integrators. ACM Transactions on Mathematical Software (TOMS) 33(1), 4–es (2007)

- (4) Caliari, M., Kandolf, P., Ostermann, A., Rainer, S.: The Leja method revisited: Backward error analysis for the matrix exponential. SIAM J. Sci. Comp. 38(3), A1639–A1661 (2016)

- (5) Cazenave, T.: An introduction to nonlinear Schrödinger equations, vol. 22. Universidade Federal do Rio de Janeiro, Centro de Ciências Matemáticas e da … (1989)

- (6) Gaudreault, S., Pudykiewicz, J.: An efficient exponential time integration method for the numerical solution of the shallow water equations on the sphere. J. Comput. Phys. 322, 827–848 (2016)

- (7) Gray, P., Scott, S.: Autocatalytic reactions in the isothermal, continuous stirred tank reactor: Oscillations and instabilities in the system . Chemical Engineering Science 39(6), 1087–1097 (1984)

- (8) Hochbruck, M., Ostermann, A.: Explicit exponential Runge–Kutta methods for semilinear parabolic problems. SIAM J. Numer. Anal. 43, 1069–1090 (2005)

- (9) Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numerica 19, 209–286 (2010)

- (10) Ju, L., Wang, Z.: Exponential time differencing Gauge method for incompressible viscous flows. Communications in Computational Physics 22(2), 517–541 (2017)

- (11) Luan, V.T.: High-order exponential integrators. Ph.D. thesis, University of Innsbruck (2014)

- (12) Luan, V.T.: Fourth-order two-stage explicit exponential integrators for time-dependent PDEs. Applied Numerical Mathematics 112, 91–103 (2017)

- (13) Luan, V.T., Michels, D.: Explicit exponential Rosenbrock methods and their application in visual computing. (Revised) (2020)

- (14) Luan, V.T., Ostermann, A.: Exponential B-series: The stiff case. SIAM J. Numer. Anal. 51, 3431–3445 (2013)

- (15) Luan, V.T., Ostermann, A.: Explicit exponential Runge–Kutta methods of high order for parabolic problems. J. Comput. Appl. Math. 256, 168–179 (2014)

- (16) Luan, V.T., Ostermann, A.: Exponential Rosenbrock methods of order five–construction, analysis and numerical comparisons. J. Comput. Appl. Math. 255, 417–431 (2014)

- (17) Luan, V.T., Ostermann, A.: Stiff order conditions for exponential Runge–Kutta methods of order five. In: H.B. et al. (ed.) Modeling, Simulation and Optimization of Complex Processes - HPSC 2012, pp. 133–143. Springer (2014)

- (18) Luan, V.T., Ostermann, A.: Parallel exponential Rosenbrock methods. Comput. Math. Appl. 71, 1137–1150 (2016)

- (19) Luan, V.T., Pudykiewicz, J.A., Reynolds, D.R.: Further development of efficient and accurate time integration schemes for meteorological models. J. Comput. Phys. 376, 817–837 (2019)

- (20) Michels, D.L., Luan, V.T., Tokman, M.: A stiffly accurate integrator for elastodynamic problems. ACM Transactions on Graphics (TOG) 36(4), 116 (2017)

- (21) Niesen, J., Wright, W.M.: Algorithm 919: A Krylov subspace algorithm for evaluating the -functions appearing in exponential integrators. ACM Trans. Math. Soft. (TOMS) 38(3), 22 (2012)

- (22) Pieper, K., Sockwell, K.C., Gunzburger, M.: Exponential time differencing for mimetic multilayer ocean models. J. Comput. Phys. 398, 817–837 (2019)