Efficient Distributed Framework for Collaborative Multi-Agent Reinforcement Learning

Abstract

Multi-agent reinforcement learning (MARL) for incomplete-information environments has attracted extensive attention from researchers. However, because sample collection is slow and sample exploration is poor, MARL still suffers from problems such as unstable model iteration and low training efficiency. Moreover, most existing distributed frameworks are proposed for single-agent reinforcement learning and are not suitable for the multi-agent setting. In this paper, we design a distributed MARL framework based on the actor-worker-learner architecture. In this framework, multiple asynchronous environment interaction modules can be deployed simultaneously, which greatly improves the sample collection speed and sample diversity. Meanwhile, to make full use of computing resources, we decouple model iteration from environment interaction, and thus accelerate policy iteration. Finally, we verify the effectiveness of the proposed framework in the MaCA military simulation environment and the SMAC 3D real-time strategy gaming environment, both of which have incomplete-information characteristics.

Index Terms:

multi-agent reinforcement learning, distributed reinforcement learning, shared memory

I Introduction

In general, reinforcement learning (RL) can be seen as a hybrid of game theory [1] and dynamic strategy modeling [2]. Compared to traditional machine learning methods, RL updates its policy based on feedback from the environment. In this way, RL can alter the policy dynamically and make more timely decisions in a changing environment. Deep reinforcement learning [3], which combines reinforcement learning and deep learning, has been shown to have both perception and decision-making abilities. In recent years, it has achieved spectacular results in stock market trading [4], autonomous driving [5], robotics [6], machine translation [7], and recommendation systems [8], and it is progressively expanding to many other applications.

However, there are still many obstacles that limit the application of RL, such as a lack of training samples, low sample utilization, and unstable training. Specifically, the training samples of RL are obtained through real-time interactions between the agent and the environment, which limits the diversity of training samples and results in insufficient training samples. In particular, when the problem is extended to the multi-agent setting, the demand for training samples becomes even more severe. The scarcity of samples leads to inefficient training and unstable iteration of the multi-agent model. What is more, the effect of model training is also affected by the diversity of experience samples, which makes this problem even worse. Therefore, increasing the amount of sample data not only improves the training efficiency but also reflects the environment more completely, thus resulting in more stable agent learning.

Researchers have lately begun to investigate distributed reinforcement learning (distributed RL). A distributed framework can be used to boost data throughput and to alleviate sample scarcity and unstable training, both of which are critical to the reinforcement learning training process. However, most current distributed RL frameworks are proposed for single-agent RL and are not suitable for solving multi-agent problems.

To address the above problems, in this paper, we propose two practical distributed training frameworks for MARL. Firstly, we propose an actor-worker based MARL training framework. The framework consists of a worker and many actors, and information is transmitted between the worker and the actors through pipelines. It is worth noting that, in this framework, the actors interact with multiple environments asynchronously, which significantly improves the efficiency of sample collection and thus reduces the cost of multi-agent training.

Moreover, we extend the actor-worker framework to an actor-worker-learner distributed framework (DMCAF), which further decouples environment interaction from the model iteration process. Unlike the A3C method, which transfers gradients, this framework merely transmits observation data from actors to workers, so that actors do not need to participate in model training. This further improves sample diversity and policy iteration efficiency, and makes the multi-agent training process more stable and faster.

II Background

II-A Related Work in Reinforcement Learning

Watkins created the table-based Q-learning approach in 1989, which laid the groundwork for today's RL. However, the required table may become very large when there are numerous states. The DeepMind team proposed the DQN (Deep Q-Network) method in 2013 to address this issue. DQN trains Atari game agents to outperform human players by combining a target network with an experience replay mechanism [9]. Following this success on Atari games, DeepMind's Go agent AlphaGo defeated the top Go masters Lee Sedol and Ke Jie [10]. The enhanced version AlphaGo Zero surpasses the human level in just a few days through self-play [11, 12]. In addition to Go, DouZero [13], a DouDizhu ("Fight the Landlord") card-game agent developed by the Kuaishou team, and Suphx [14], a mahjong agent developed by the Microsoft team, combine RL, supervised learning, self-play, and Monte Carlo search, and have made breakthroughs in different card and tile games. In real-time strategy games with larger dimensions and more complex environments, the agent AlphaStar [15] developed by DeepMind shows an excellent level of play on StarCraft II.

In the field of distributed RL, the actor-critic algorithm is a classic method; it combines policy and value networks, greatly improving the level of the agent. The A3C algorithm is an asynchronous distributed version of the actor-critic algorithm in single-agent RL [16], which maintains multiple secondary networks and a primary network. Each secondary network contains an actor and a critic, and the primary network contains an actor and a critic with the same network structure. After interacting with the environment, each secondary network computes and accumulates the gradients of its actor and critic:

| (1) |

| (2) |

The primary network receives the gradients accumulated by the secondary networks and updates the actor and critic parameters with their respective learning rates:

| (3) |

| (4) |
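For reference, the accumulation and update rules of A3C can be written as follows, in the notation of Mnih et al. [16]. The symbols are assumptions chosen for this sketch and may differ from those used in Eqs. (1)-(4): $\theta$ and $\theta_v$ denote the actor and critic parameters of the primary network, $\theta'$ and $\theta'_v$ the copies held by a secondary network, $R$ the bootstrapped return, and $\alpha$ and $\beta$ the two learning rates. A plain gradient step is shown, whereas [16] applies the accumulated gradients through a shared optimizer.

```latex
\begin{align}
d\theta   &\leftarrow d\theta + \nabla_{\theta'} \log \pi(a_i \mid s_i; \theta')\,\bigl(R - V(s_i; \theta'_v)\bigr), \\
d\theta_v &\leftarrow d\theta_v + \frac{\partial \bigl(R - V(s_i; \theta'_v)\bigr)^2}{\partial \theta'_v}, \\
\theta    &\leftarrow \theta + \alpha \, d\theta, \\
\theta_v  &\leftarrow \theta_v - \beta \, d\theta_v .
\end{align}
```

The actor step ascends the policy objective, while the critic step descends the squared error of the value estimate.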

The GA3C method proposed by Babaeizadeh et al. uses multiple actors to interact with the environment. Each actor only transmits the environment state and training samples to the learner through a queue, which reduces the number of policy models and the training resources required [17]. The IMPALA algorithm proposed by DeepMind improves A3C along another dimension. Instead of transferring gradients as in A3C, IMPALA transfers training samples to update the model and proposes the V-trace method to correct the policy-lagging issue [18]. The Ape-X algorithm proposed by Horgan et al. is a value-based distributed RL method. It uses multiple processes to interact with multiple environments, which improves the training speed over single-process DQN [19].

II-B On-policy and Off-policy

According to the policy that generates the training data, distributed MARL can be divided into on-policy and off-policy methods. As shown in Fig. 2(a), if the experience samples collected by the agent during training come from the current target policy, the method is called on-policy learning [20]. Off-policy learning [21] employs an exploratory behavior policy to interact with the environment and gather experience, and stores the experience samples in an experience replay pool [22] for sampling and iterative updating of the target policy, as shown in Fig. 2(b).

One drawback of on-policy learning is the need to guarantee that the parameters of the model network in each environment process are consistent with those of the primary model network, and that the environment interactions are synchronized. Thus, when two environments are out of sync, they must wait for each other. Allowing asynchronous sampling of the environment removes this waiting and solves the problem of low training efficiency, but it brings an additional drawback, the policy-lagging issue: the training samples received by the primary model network are obtained from a past policy rather than the current one. So during the iterative update of the policy, the primary model network must apply importance sampling to the experience samples, turning them into learnable experience samples.

| (5) | ||||

| (6) |

Importance sampling handles the problem of calculating an expectation under an original distribution that is difficult to sample from by using samples drawn from a known distribution, as shown in (5) and (6).
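As a numerical illustration of this idea, the sketch below estimates an expectation under a target distribution p using only samples from a different distribution q, reweighting each sample by p(x)/q(x). The choice of p = N(0, 1), q = N(0, 2²), the test function f(x) = x², and all function names are assumptions made for this example; they are not taken from the paper.

```python
import math
import random


def normal_pdf(x, mean, std):
    """Density of a normal distribution N(mean, std^2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def importance_sampling_mean(f, n_samples, rng):
    """Estimate E_{x~p}[f(x)] for p = N(0, 1) using samples from q = N(0, 2^2).

    Each sample drawn from q is reweighted by p(x) / q(x), so the weighted
    average is an unbiased estimate of the expectation under p.
    """
    total = 0.0
    for _ in range(n_samples):
        x = rng.gauss(0.0, 2.0)                      # sample from q
        weight = normal_pdf(x, 0.0, 1.0) / normal_pdf(x, 0.0, 2.0)
        total += weight * f(x)
    return total / n_samples


# E_{x~N(0,1)}[x^2] is the variance of p, i.e. exactly 1.
estimate = importance_sampling_mean(lambda x: x * x, 100_000, random.Random(0))
```

In the distributed setting, p plays the role of the current policy and q the lagging policy that actually generated the samples.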

In on-policy distributed RL, importance sampling is used to remedy the policy-lagging problem produced by samples from different policies. Off-policy distributed RL, by contrast, does not require as many modifications to the samples and expectation computations because of the experience replay mechanism. Each environment process can store the collected samples in the experience replay pool at any time, and the primary network takes historical sample data out of the pool to iteratively update the model.
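A minimal sketch of such an experience replay pool is given below. It assumes a bounded, first-in-first-out pool that stores whole episodes and samples them uniformly; the class name `ReplayPool` and its parameters are hypothetical and only illustrate the mechanism.

```python
import random
from collections import deque


class ReplayPool:
    """Bounded pool of episodes; the oldest episode is evicted when full."""

    def __init__(self, capacity, seed=None):
        self._episodes = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, episode):
        """Store one collected episode (called by any environment process)."""
        self._episodes.append(episode)

    def sample(self, batch_size):
        """Draw up to batch_size distinct episodes uniformly at random."""
        k = min(batch_size, len(self._episodes))
        return self._rng.sample(list(self._episodes), k)

    def __len__(self):
        return len(self._episodes)
```

Because writers only append and the reader only samples, the producer and consumer sides do not need to run in lockstep, which is what makes the off-policy variant convenient for asynchronous collection.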

II-C The Significance of Exploring Distributed MARL

With the development of MARL, RL has become able to deal with the incomplete-information and multi-agent challenges that are common in real environments [23]. However, unstable training and limited performance have become factors restricting its development. The results of distributed single-agent reinforcement learning algorithms show that distributed technology is useful for improving performance.

However, the methods in distributed RL are almost all in the single-agent domain, and it is difficult to extend them directly to the multi-agent domain. Specifically, the model computation becomes more complicated as the number of agents increases, and so does the sample requirement for multi-agent model training.

As a result, research on distributed MARL is of great significance for improving stability and performance. Therefore, we propose the DMCAF in this paper.

III Method

For the reasons stated above, we focus on proposing a distributed MARL method. To avoid the high complexity caused by policy correction techniques [24], we select a value-based MACRL method as the basic algorithmic model and propose a distributed MARL algorithmic framework. The proposed framework is divided into two parts, the latter being a deepening and extension of the former.

III-A Actor-worker Distributed Multi-agent Asynchronous Communication Framework

We first propose an actor-worker distributed algorithm training framework for multiple agents. The algorithmic training framework is shown in Fig. 3. The operation of the framework is divided into four steps.

- Step 1: The environment passes the new state through the observation-action pipeline in actor to the worker at each time step.

- Step 2: The worker sends the state into its primary network for decision-making and puts the action back into the corresponding observation-action pipeline which is passed to the corresponding actor.

- Step 3: The environment gets the action from pipeline and goes to the next state.

- Step 4: After each round of data collection, the actor sends the entire gathered trajectory to the experience replay pool via the experience pipeline. The worker randomly samples from the updated experience replay pool to update the policy until it iteratively converges.
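The four steps above can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: threads stand in for processes, `queue.Queue` objects stand in for the observation-action and experience pipelines, the toy `CountingEnv` and the fixed `policy` function are hypothetical, and the policy update from the replay pool in Step 4 is omitted.

```python
import queue
import threading


class CountingEnv:
    """Hypothetical toy environment whose state is a step counter."""

    def __init__(self, horizon):
        self.horizon = horizon
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t

    def step(self, action):
        self.t += 1
        return self.t, float(action), self.t >= self.horizon


def actor(actor_id, env, obs_q, act_q, experience_q):
    """Steps 1, 3 and 4: send states, apply returned actions, ship the trajectory."""
    trajectory = []
    state, done = env.reset(), False
    while not done:
        obs_q.put((actor_id, state))            # Step 1: state to the worker
        action = act_q.get()                    # Step 3: action from the worker
        next_state, reward, done = env.step(action)
        trajectory.append((state, action, reward, next_state, done))
        state = next_state
    experience_q.put((actor_id, trajectory))    # Step 4: whole trajectory
    obs_q.put((actor_id, None))                 # tell the worker this actor is done


def worker(policy, n_actors, obs_q, act_qs):
    """Step 2: answer every observation with an action for the right actor."""
    finished = 0
    while finished < n_actors:
        actor_id, state = obs_q.get()
        if state is None:
            finished += 1
        else:
            act_qs[actor_id].put(policy(state))


def run(n_actors=3, horizon=5):
    """Start the actors, serve them from one worker, and collect trajectories."""
    obs_q, experience_q = queue.Queue(), queue.Queue()
    act_qs = [queue.Queue() for _ in range(n_actors)]
    threads = [
        threading.Thread(
            target=actor,
            args=(i, CountingEnv(horizon), obs_q, act_qs[i], experience_q),
        )
        for i in range(n_actors)
    ]
    for t in threads:
        t.start()
    worker(lambda state: state % 2, n_actors, obs_q, act_qs)
    for t in threads:
        t.join()
    return dict(experience_q.get() for _ in range(n_actors))
```

Calling `run()` returns one trajectory per actor. Only the worker evaluates the policy, so each actor holds nothing but its environment.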

However, when employing the training framework described above, the historical information held by the agents in different environments must be explicitly separated; otherwise, the agents in different environments will misuse each other's historical information, lowering the performance of the algorithm. The hidden state unit in the distributed MARL method is shown in Fig. 4.
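One way to keep this history separate is to index the recurrent hidden states by environment, as in the sketch below. It assumes that each agent network carries a recurrent hidden vector; the class `HiddenStateStore` and its methods are hypothetical names used only for illustration.

```python
class HiddenStateStore:
    """Keeps one block of per-agent hidden states for every environment."""

    def __init__(self, n_agents, hidden_dim):
        self.n_agents = n_agents
        self.hidden_dim = hidden_dim
        self._states = {}

    def _zeros(self):
        # One zero vector per agent; a fresh list each time to avoid aliasing.
        return [[0.0] * self.hidden_dim for _ in range(self.n_agents)]

    def get(self, env_id):
        """Return the hidden states of env_id, creating them on first use."""
        if env_id not in self._states:
            self._states[env_id] = self._zeros()
        return self._states[env_id]

    def set(self, env_id, hidden):
        """Store the hidden states produced by the latest forward pass."""
        self._states[env_id] = hidden

    def reset(self, env_id):
        """Clear the history of env_id, e.g. when its episode ends."""
        self._states[env_id] = self._zeros()
```

The worker would look up the entry for the environment that sent the observation before each forward pass and write the new hidden states back afterwards, so that episodes running in parallel never share history.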

After the foregoing modifications, multiple environments can be deployed simultaneously, which increases the speed and scalability of sample collection. Moreover, the asynchronous interaction of multiple actors further lowers the correlation between samples. Meanwhile, because all policy updates are performed iteratively in the worker, each actor only needs to maintain a distinct environment instead of a model with a large number of parameters, thus lowering the computational cost of large-scale model training.

III-B DMCAF: Actor-worker-learner Distributed Multi-agent Decoupling Algorithmic Framework

The worker-learner layer is presented after proposing the actor-worker framework, and model sharing of the primary network and the secondary network is implemented in the worker-learner layer. The actor-worker structure is extended to the actor-worker-learner structure.

The A3C algorithm is the most representative asynchronous algorithm, but it has two problems. First, the primary network is updated slowly and has poor robustness. Second, passing gradients slows down model iteration. So we transfer experience samples from the secondary network to the primary network instead of transferring gradients. In this way, data collection and model updates can be decoupled. This decoupling approach connects well with the actor-worker asynchronous communication framework proposed in the previous section. Therefore, we take the actor-worker asynchronous communication framework as the underlying structure and introduce the decoupled worker-learner structure as the model sharing layer.

Specifically, under this decoupling framework, although the worker has a policy network, it is only responsible for decision-making and sample collection during training. The worker does not calculate and transmit gradients, thus avoiding the problem of gradient conflict. After multiple actors collect the experience samples of the whole game through interaction, the workers send the samples to the experience replay pool, and the learner takes experience from the experience replay pool to update the policy as needed. The samples in the experience replay pool can be updated continuously because the asynchronous interaction of multiple environments maintained by the actor greatly improves the speed of data collection. So the learner does not need to wait for the gradient to be calculated or for data to be collected.

To further optimize training, for model sharing we use shared memory to periodically synchronize the parameters of the primary network in the learner to the secondary network in the worker, instead of transmitting the parameters. Using shared memory allows the proposed method to avoid the instability of data transmission when synchronizing the model, and it reduces the communication cost while improving communication efficiency.
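The idea of publishing parameters through shared memory can be sketched with Python's standard `multiprocessing.shared_memory` module. This is an illustrative assumption about one possible realization, not the paper's implementation: the parameters are flattened into a list of floats, a version counter in the first 8 bytes lets readers detect updates, and no locking is done, so a real system would need a lock or double buffering to avoid torn reads. The class name `SharedParams` is hypothetical.

```python
import struct
from multiprocessing import shared_memory

HEADER = struct.calcsize("q")  # 8-byte version counter stored before the parameters


class SharedParams:
    """Flat parameter vector kept in a named shared-memory block."""

    def __init__(self, n_params, name=None):
        self._fmt = "%dd" % n_params
        if name is None:
            # Learner side: create the block and initialise it to zeros.
            size = HEADER + struct.calcsize(self._fmt)
            self.shm = shared_memory.SharedMemory(create=True, size=size)
            struct.pack_into("q", self.shm.buf, 0, 0)
            struct.pack_into(self._fmt, self.shm.buf, HEADER, *([0.0] * n_params))
        else:
            # Worker side: attach to the block created by the learner.
            self.shm = shared_memory.SharedMemory(name=name)

    @property
    def name(self):
        return self.shm.name

    def publish(self, params):
        """Write new parameters, then bump the version counter."""
        version = struct.unpack_from("q", self.shm.buf, 0)[0]
        struct.pack_into(self._fmt, self.shm.buf, HEADER, *params)
        struct.pack_into("q", self.shm.buf, 0, version + 1)

    def read(self):
        """Return (version, parameters) as currently stored."""
        version = struct.unpack_from("q", self.shm.buf, 0)[0]
        params = list(struct.unpack_from(self._fmt, self.shm.buf, HEADER))
        return version, params

    def close(self, unlink=False):
        """Detach from the block; the creator also unlinks it."""
        self.shm.close()
        if unlink:
            self.shm.unlink()
```

A worker would poll `read()` periodically and reload its secondary network only when the version has changed, so no parameter message has to be sent over a pipe or socket.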

In summary, we propose the actor-worker-learner distributed decoupling framework, as shown in Fig. 5. The worker layer acts as an intermediate transition layer, maintaining multiple actors and a neural network that periodically synchronizes with the learner. The worker starts training with multiple actors at the same time and communicates with each actor. Each actor maintains an independent environment, and each of them interacts with the environment under the guidance of the policy network in the worker to complete the sample collection. The algorithm of the actor is shown in Algorithm 1.

III-B1 Actor Algorithm

When the actor receives the new state of the environment, it delivers the observation into the observation-action pipeline, and the action of the worker is retrieved from the observation-action pipeline to interact with the environment. Each step of interactions between the agent and the environment is recorded by the actor, and at the end of the game, the entire game experience is transmitted to the sample queue for the learner to use.

Algorithm 1: Actor

Input: environment parameters, interaction times

Global: sample queue, observation-action pipeline

III-B2 Worker Algorithm

The decoupled workers and learners work asynchronously during training. The algorithm of the worker is shown in Algorithm 2. The worker deploys multiple actors, gets observations from the observation-action pipeline, and makes decisions using the policy network, which is periodically synchronized from the shared memory pool. Then it pipes the actions back to the actors. When the worker fails to get new observations from the observation-action pipeline, the loop ends.

Algorithm 2: Worker

Input: the number of actors, total interaction times

Global: network parameters, shared memory pool, observation-action pipeline

III-B3 Learner Algorithm

The learner maintains the sample queue, the shared memory pool, and the experience replay pool. The cyclic iterative training of the model begins when multiple workers are started. The sample queue delivers the large number of samples created by the interaction of different environments to the shared experience replay pool. While the experience pool is not empty, the learner continues to train and update the model. Meanwhile, it synchronizes the most recent model parameters to the shared memory pool, allowing workers to interact with the environment using the latest policy model to gain fresh experience samples. Training halts when the sample queue is empty, indicating that no environment is interacting any longer.

Algorithm 3: Learner

Input: the number of workers, batch size

Global: experience replay pool, shared memory pool, sample queue, network parameters
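The learner loop described in this subsection can be sketched as below. Several details are assumptions made for the example rather than facts from the paper: a `None` sentinel from each worker replaces the "sample queue is empty" stopping condition, one training step is taken per received episode, and `train_step` and `publish` are hypothetical callbacks standing in for the model update and the write to the shared memory pool.

```python
import queue
import random
from collections import deque


def learner_loop(sample_queue, train_step, publish, batch_size,
                 n_workers=1, capacity=1000, seed=0):
    """Move episodes from the sample queue to the replay pool and train on them.

    Stops once every worker has sent its None sentinel. Returns the number of
    model updates that were performed.
    """
    rng = random.Random(seed)
    replay_pool = deque(maxlen=capacity)   # oldest episodes are evicted first
    active_workers = n_workers
    updates = 0
    while active_workers > 0:
        item = sample_queue.get()          # blocks until a worker sends something
        if item is None:                   # this worker has finished interacting
            active_workers -= 1
            continue
        replay_pool.append(item)
        k = min(batch_size, len(replay_pool))
        batch = rng.sample(list(replay_pool), k)
        params = train_step(batch)         # one update of the primary network
        publish(params)                    # make the new parameters visible to workers
        updates += 1
    return updates
```

Because the loop only touches the queue and the pool, the learner never waits for an environment step or for gradients from a worker.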

| Scenario Name | Number of Actions | Number of Agents | State Dimension | Observation Dimension | Time-step limit |
|---|---|---|---|---|---|
| 3m | 9 | 3 | 48 | 30 | 60 |
| 8m | 14 | 8 | 168 | 80 | 120 |
| 2s3z | 11 | 5 | 120 | 80 | 120 |
| | 12 | 5 | 98 | 55 | 70 |

| Scenario Name | Own Unit | Enemy Unit | scenario Type |

|---|---|---|---|

| and | and | ||

This framework has strong scalability and can be configured flexibly with several actors and workers. The upper-layer decoupling framework separates the data acquisition and model training modules, allowing for higher data throughput, more efficient model iteration, and better GPU utilization. The deployment of large-scale environments substantially improves the sample collection speed, extending the benefits of distributed systems even further. The asynchronous updating of numerous models promotes policy exploration by the agents and alleviates the exploration-exploitation dilemma in RL. Meanwhile, the correlation of RL training samples is weakened and the diversity of samples is increased, thereby improving the robustness of the algorithm and making the training process more stable.

IV Experiment

IV-A Environment

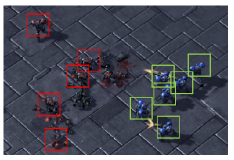

SMAC (StarCraft Multi-Agent Challenge) [25] is a MARL research platform about the game StarCraft II. It is designed to evaluate the ability of multi-agent models to solve complex tasks through collaboration. Many researchers have regarded the SMAC platform as a benchmark platform in the field of MACRL.

The difficulty on the SMAC platform can be customized, and we use the "extremely challenging" difficulty level in the experiment. On the SMAC platform, each scenario depicts a battle between two forces. Depending on whether the two forces are the same, battle scenarios can be separated into symmetric and asymmetric scenarios; based on the unit types on both sides, they can also be separated into isomorphic and heterogeneous scenarios.

The experimental environment comprises four scenarios, including 3m, 8m, and 2s3z, which are shown in Fig. 1. The isomorphic symmetric scenarios 3m and 8m and the heterogeneous symmetric scenario 2s3z are among the most commonly used experimental scenarios for MACRL, and they are used in the experiments of the original papers of the Qmix and CoMA algorithms. The necessary characteristic information for the four scenarios is listed in Table II.

We use the control variable method to uniformly set the observations, states, actions, and rewards of all scenarios to the default values of the platform. The specific settings are listed in Table I.

IV-B Experimental Display and Results Discussion

We select the MACRL algorithm that is currently optimal in multiple scenarios of the SMAC platform as the baseline algorithm. Under the same scenario settings, the distributed multi-agent collaborative algorithm proposed is verified from the aspects of the speed of sample collection and performance.

- Qmix: The original Qmix algorithm, which currently achieves optimal performance in multiple scenarios on the SMAC platform.

- AW-Qmix: Distributed Qmix algorithm based on Actor-Worker framework.

- AWL-Qmix: Distributed Qmix algorithm based on DMCAF.

We train Qmix, AW-Qmix, and AWL-Qmix in the four scenarios, set the same number of samples to collect for each method in the same scenario, record the sample collection time, and calculate the average sample collection speed. The experimental results are shown in Table III and Fig. 6.

| Scenario Name | Qmix | AW-Qmix | AWL-Qmix |
|---|---|---|---|
| | 2.12 | 3.90 | 14.0 |
| | 1.15 | 1.82 | 7.47 |
| | 1.02 | 1.80 | 7.76 |
| | 1.54 | 3.12 | 9.86 |
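To make the comparison in Table III easier to read, the collection speeds can be converted into speedups relative to Qmix. The snippet below is only arithmetic on the table values, listed in table row order; the variable and function names are illustrative.

```python
# Collection speeds from Table III, one tuple per row: (Qmix, AW-Qmix, AWL-Qmix).
collection_speeds = [
    (2.12, 3.90, 14.0),
    (1.15, 1.82, 7.47),
    (1.02, 1.80, 7.76),
    (1.54, 3.12, 9.86),
]


def speedups_over_qmix(rows):
    """Return (AW-Qmix / Qmix, AWL-Qmix / Qmix) for every row."""
    return [(aw / base, awl / base) for base, aw, awl in rows]


speedups = speedups_over_qmix(collection_speeds)
```

The ratios come out to roughly 1.6 to 2.0 times for AW-Qmix and roughly 6.4 to 7.6 times for AWL-Qmix across the four rows.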

Qmix only manages one environment at a time. By opening numerous environments and leveraging asynchronous communication, AW-Qmix allows for a minor increase in the update speed of the model. Compared to the other two methods, AWL-Qmix completely decouples model updating from environment interaction, allowing the model to be trained repeatedly on large-scale, continuously updated data, as shown in Fig. 7.

The counterfactual multi-agent policy gradient algorithm CoMA [26] is used as a horizontal comparison approach in the experiment to further validate the performance of the algorithm. In the tests, four actors are used for AW-Qmix and four workers are used for AWL-Qmix, with each worker including three actors. Depending on the difficulty, different numbers of environment interactions are set for different scenarios. To ensure a rigorous and fair comparison, the same number of interactions is used for each approach.

IV-B1 SMAC

It can be seen from Fig. 8 that, within the same number of time steps in the 3m scenario, AW-Qmix and AWL-Qmix converge to the optimal value earlier. At this point, Qmix is still fluctuating and has not reached a stable reward value. Although CoMA tends to converge earlier, the reward value it achieves is low and its performance is at a disadvantage.

In the 8m scenario, as the number of agents increases, the convergence of AW-Qmix is hindered, but AWL-Qmix still maintains better performance and stability. The possible reason is that AW-Qmix adopts a single-model decision-making network structure, and samples and updates after collecting samples in each environment. When the model encounters conflicting samples, the information brought by the samples may cause the model to converge in different directions, resulting in the model needing more exploration to find the correct convergence direction. The multi-model network and shared model technology adopted by AWL-Qmix can allow multiple decision networks to be updated in time, and the update directions of each decision network are consistent, thereby reducing the possibility of conflict between interactive samples.

AW-Qmix and AWL-Qmix are marginally better than Qmix in terms of convergence speed and final performance, and are much better than CoMA, in the heterogeneous symmetric scenario 2s3z, as shown in Fig. 8. The most demanding and complex case for the agent is the asymmetric scenario, which requires the agent to learn to defeat the enemy from a disadvantageous situation. CoMA struggles to defeat the opponent in this difficult scenario, as shown in Fig. 8, and AWL-Qmix has a significant advantage in the reward and win-rate curves over the other three approaches.

IV-B2 MaCA

We deploy distributed decoupling architectures of various scales on the experimental platform MaCA [27] to further test the generality of the proposed DMCAF across platforms.

The performance comparison of the three methods is shown in Fig. 9. It can be seen that AWL-hierq-12 and AWL-hierq-4 have a certain advantage in reward score compared with Hierq-1, and this advantage increases with the increase of the distributed scale. This result once again verifies the effectiveness of DMCAF, and also demonstrates the application of DMCAF in large-scale reinforcement learning training.

V Conclusion

In this paper, we introduced two distributed frameworks for MARL. In the actor-worker framework, the idea of distributed training from single-agent reinforcement learning is extended to MARL, and a multi-agent asynchronous communication algorithm based on actor-worker is designed to meet the urgent need for sample collection in MARL. Then, we extended the actor-worker framework to the actor-worker-learner framework. In this framework, environment interaction is decoupled from the model iteration process, which further increases the diversity of training samples. Finally, the effectiveness of our method is evaluated on the StarCraft II reinforcement learning benchmark platform and the MaCA platform. In future work, we will try to introduce a multi-agent-oriented prioritized experience replay mechanism on top of the distributed algorithm for further optimization.

References

- [1] F. Fang, S. Liu, A. Basak, Q. Zhu, C. D. Kiekintveld, and C. A. Kamhoua, “Introduction to game theory,” Game Theory and Machine Learning for Cyber Security, pp. 21–46, 2021.

- [2] S. Kikuchi, D. Tominaga, M. Arita, K. Takahashi, and M. Tomita, “Dynamic modeling of genetic networks using genetic algorithm and S-system,” Bioinformatics, vol. 19, pp. 643–650, 03 2003.

- [3] Y. Li, X. Hu, Y. Zhuang, Z. Gao, P. Zhang, and N. El-Sheimy, “Deep reinforcement learning (drl): Another perspective for unsupervised wireless localization,” IEEE Internet of Things Journal, vol. 7, no. 7, pp. 6279–6287, 2020.

- [4] Z. Zhang, S. Zohren, and S. Roberts, “Deep reinforcement learning for trading,” The Journal of Financial Data Science, vol. 2, no. 2, pp. 25–40, 2020.

- [5] D. Isele, R. Rahimi, A. Cosgun, K. Subramanian, and K. Fujimura, “Navigating occluded intersections with autonomous vehicles using deep reinforcement learning,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 2034–2039, 2018.

- [6] S. Levine, P. Pastor, A. Krizhevsky, J. Ibarz, and D. Quillen, “Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection,” The International Journal of Robotics Research, vol. 37, no. 4-5, pp. 421–436, 2018.

- [7] G. Kumar, G. Foster, C. Cherry, and M. Krikun, “Reinforcement Learning based Curriculum Optimization for Neural Machine Translation,” arXiv e-prints, p. arXiv:1903.00041, Feb. 2019.

- [8] V. Derhami, J. Paksima, and H. Khajah, “Web pages ranking algorithm based on reinforcement learning and user feedback,” Journal of AI and Data Mining, vol. 3, no. 2, pp. 157–168, 2015.

- [9] Y. Li, X. Hu, Y. Zhuang, Z. Gao, P. Zhang, and N. El-Sheimy, “Deep reinforcement learning (drl): Another perspective for unsupervised wireless localization,” IEEE Internet of Things Journal, vol. 7, no. 7, pp. 6279–6287, 2020.

- [10] D. Silver, A. Huang, C. J. Maddison, A. Guez, L. Sifre, G. Van Den Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot, et al., “Mastering the game of go with deep neural networks and tree search,” Nature, vol. 529, no. 7587, pp. 484–489, 2016.

- [11] D. Silver, J. Schrittwieser, K. Simonyan, I. Antonoglou, A. Huang, A. Guez, T. Hubert, L. Baker, M. Lai, A. Bolton, et al., “Mastering the game of go without human knowledge,” Nature, vol. 550, no. 7676, pp. 354–359, 2017.

- [12] D. Silver, T. Hubert, J. Schrittwieser, I. Antonoglou, M. Lai, A. Guez, M. Lanctot, L. Sifre, D. Kumaran, T. Graepel, et al., “A general reinforcement learning algorithm that masters chess, shogi, and go through self-play,” Science, vol. 362, no. 6419, pp. 1140–1144, 2018.

- [13] D. Zha, J. Xie, W. Ma, S. Zhang, X. Lian, X. Hu, and J. Liu, “Douzero: Mastering doudizhu with self-play deep reinforcement learning,” in Proceedings of the 38th International Conference on Machine Learning (M. Meila and T. Zhang, eds.), vol. 139 of Proceedings of Machine Learning Research, pp. 12333–12344, PMLR, 18–24 Jul 2021.

- [14] J. Li, S. Koyamada, Q. Ye, G. Liu, C. Wang, R. Yang, L. Zhao, T. Qin, T.-Y. Liu, and H.-W. Hon, “Suphx: Mastering Mahjong with Deep Reinforcement Learning,” arXiv e-prints, p. arXiv:2003.13590, Mar. 2020.

- [15] K. Arulkumaran, A. Cully, and J. Togelius, “Alphastar: An evolutionary computation perspective,” in Proceedings of the Genetic and Evolutionary Computation Conference Companion, GECCO ’19, (New York, NY, USA), p. 314–315, Association for Computing Machinery, 2019.

- [16] V. Mnih, A. P. Badia, M. Mirza, A. Graves, T. Lillicrap, T. Harley, D. Silver, and K. Kavukcuoglu, “Asynchronous methods for deep reinforcement learning,” in Proceedings of The 33rd International Conference on Machine Learning (M. F. Balcan and K. Q. Weinberger, eds.), vol. 48 of Proceedings of Machine Learning Research, (New York, New York, USA), pp. 1928–1937, PMLR, 20–22 Jun 2016.

- [17] M. Babaeizadeh, I. Frosio, S. Tyree, J. Clemons, and J. Kautz, “Reinforcement Learning through Asynchronous Advantage Actor-Critic on a GPU,” arXiv e-prints, p. arXiv:1611.06256, Nov. 2016.

- [18] L. Espeholt, H. Soyer, R. Munos, K. Simonyan, V. Mnih, T. Ward, Y. Doron, V. Firoiu, T. Harley, I. Dunning, S. Legg, and K. Kavukcuoglu, “IMPALA: Scalable distributed deep-RL with importance weighted actor-learner architectures,” in Proceedings of the 35th International Conference on Machine Learning (J. Dy and A. Krause, eds.), vol. 80 of Proceedings of Machine Learning Research, pp. 1407–1416, PMLR, 10–15 Jul 2018.

- [19] D. Horgan, J. Quan, D. Budden, G. Barth-Maron, M. Hessel, H. van Hasselt, and D. Silver, “Distributed Prioritized Experience Replay,” arXiv e-prints, p. arXiv:1803.00933, Mar. 2018.

- [20] A. Matsikaris, M. Widmann, and J. Jungclaus, “On-line and off-line data assimilation in palaeoclimatology: a case study,” Climate of the Past, vol. 11, no. 1, pp. 81–93, 2015.

- [21] T. Degris, M. White, and R. S. Sutton, “Off-Policy Actor-Critic,” arXiv e-prints, p. arXiv:1205.4839, May 2012.

- [22] T. Schaul, J. Quan, I. Antonoglou, and D. Silver, “Prioritized Experience Replay,” arXiv e-prints, p. arXiv:1511.05952, Nov. 2015.

- [23] D. Billings, A. Davidson, J. Schaeffer, and D. Szafron, “The challenge of poker,” Artificial Intelligence, vol. 134, no. 1-2, pp. 201–240, 2002.

- [24] M. Chen, A. Beutel, P. Covington, S. Jain, F. Belletti, and E. H. Chi, “Top-k off-policy correction for a reinforce recommender system,” in Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, pp. 456–464, 2019.

- [25] M. Samvelyan, T. Rashid, C. Schroeder de Witt, G. Farquhar, N. Nardelli, T. G. J. Rudner, C.-M. Hung, P. H. S. Torr, J. Foerster, and S. Whiteson, “The StarCraft Multi-Agent Challenge,” arXiv e-prints, p. arXiv:1902.04043, Feb. 2019.

- [26] J. Foerster, G. Farquhar, T. Afouras, N. Nardelli, and S. Whiteson, “Counterfactual multi-agent policy gradients,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, Apr. 2018.

- [27] F. Gao, S. Chen, M. Li, and B. Huang, “Maca: a multi-agent reinforcement learning platform for collective intelligence,” in 2019 IEEE 10th International Conference on Software Engineering and Service Science (ICSESS), pp. 108–111, 2019.