75 University Ave W, Waterloo, Ontario, Canada N2L 3C5

BCAM - Basque Center for Applied Mathematics, Bilbao, Spain

Email: {tthieu, rmelnik}@wlu.ca

Effects of noise on leaky integrate-and-fire neuron models for neuromorphic computing applications

Abstract

Artificial neural networks (ANNs) have been extensively used for the description of problems arising from biological systems and for constructing neuromorphic computing models. The third generation of ANNs, namely spiking neural networks (SNNs), inspired by biological neurons, enables a more realistic mimicry of the human brain. A large class of problems from these domains is characterized by the need to deal with combinations of neurons, spikes and synapses via integrate-and-fire neuron models. Motivated by important applications of integrate-and-fire neuron models in neuromorphic computing for bio-medical studies, the main focus of the present work is on the analysis of the effects of additive and multiplicative types of random input currents, together with a random refractory period, on a leaky integrate-and-fire (LIF) synaptic conductance neuron model. Our analysis is carried out via Langevin stochastic dynamics in a numerical setting describing a cell membrane potential. We provide the details of the model, as well as representative numerical examples, and discuss the effects of noise on the time evolution of the membrane potential as well as the spiking activities of neurons in the LIF synaptic conductance model scrutinized here. Furthermore, our numerical results demonstrate that the presence of a random refractory period in the LIF synaptic conductance system may substantially increase the irregularity of the spike trains of the output neuron.

Keywords: ANNs, SNNs, LIF, Langevin stochastic models, neuromorphic computing, random input currents, synaptic conductances, neuron spiking activities, uncertainty factors, membrane and action potentials, neuron refractory periods

1 Introduction

In recent years, modelling with artificial neural networks (ANNs) has posed many challenging questions in some of the most advanced areas of science and technology [7]. The progress in ANNs has led to improvements in various cognitive tasks and tools for vision, language, behavior and so on. Moreover, some ANN models, together with the associated numerical algorithms, achieve human-level performance. In general, biological neurons in the human brain transmit information by generating spikes. To improve the biological plausibility of the existing ANNs, spiking neural networks (SNNs) have been developed, and they are known as the third generation of ANNs. SNNs play an important role in the modelling of important systems in neuroscience since they more realistically mimic the activity of biological neurons by the combination of neurons and synapses [6]. In particular, neurons in SNNs transmit information only when the membrane potential, i.e., an intrinsic quality of the neuron related to its membrane electrical charge, reaches a specific threshold value. When the membrane potential reaches this threshold, the neuron fires and generates a signal that travels to other neurons. Hence, a neuron that fires in a membrane potential model at the moment of threshold crossing is called a spiking neuron. Many models have been proposed to describe the spiking activities of neurons in different scenarios. One of the simplest models, providing a foundation for many neuromorphic applications, is the leaky integrate-and-fire (LIF) neuron model [18, 24, 31]. The LIF model mimics the dynamics of the cell membrane in the biological system [5, 20] and provides a suitable compromise between complexity and analytical tractability when implemented for large neural networks. Recent works have demonstrated the importance of the LIF model, which has become one of the most popular neuron models in neuromorphic computing [2, 12, 10, 15, 16, 28].

However, ANNs are computationally intensive and often suffer from severe accuracy degradation if the testing data is corrupted with noise [7, 17] that may not have been seen during training. Uncertainties coming from different sources [11], e.g., inputs, devices, chemical reactions, etc., need to be accounted for. Furthermore, the presence of fluctuations can affect the transmission of a signal in nonlinear systems [3, 4]. Recent results provided in [1] have shown that multiplicative noise is beneficial for the transmission of sensory signals in simple neuron models. To get closer to the real scenarios in biological systems as well as in their computational studies, we are interested in evaluating the contribution of uncertainty factors arising in LIF systems. In particular, we investigate the effects of additive and multiplicative noise input currents, together with a random refractory period, on the dynamics of a LIF synaptic conductance system. A better understanding of random input factors in LIF synaptic conductance models would allow for a more efficient usage of smart SNN and/or ANN systems in such fields as biomedicine and other applications [7, 32].

Motivated by LIF models and their applications in SNNs and ANNs subjected to natural random factors in the description of biological systems, we develop a LIF synaptic conductance model of neuronal dynamics to study the effects of additive and multiplicative types of random external current inputs together with a random refractory period on the spiking activities of neurons in a cell membrane potential setting. Our analysis focuses on considering a Langevin stochastic equation in a numerical setting for a cell membrane potential with random inputs. We provide numerical examples and discuss the effects of random inputs on the time evolution of the membrane potential as well as the spiking activities of neurons in our model. Furthermore, the model of LIF synaptic conductances is examined on the data from dynamic clamping (see, e.g., [30, 20, 32]) in the Poissonian input spike train setting.

2 Random factors and a LIF synaptic conductance neuron model

2.1 SNN algorithm and a LIF synaptic conductance neuron model

Let us recall the SNN algorithm, presented schematically in Fig. 1 (see, e.g., [10]). At the first step, pre-synaptic neuronal drivers provide the input voltage spikes. Then, the input spikes are converted to a gently varying current signal proportional to the synaptic weights. Next, the synaptic current response is summed into the input of the LIF neuron. Then, the LIF neuron integrates the input current across a capacitor, which raises its potential. After that, the neuron resets immediately (i.e., loses stored charge) once the potential reaches or exceeds a threshold. Finally, every time the neuron reaches the threshold, a driver neuron D3 produces a spike.

In general, the biological neuronal network is related to the SNN algorithm. Moreover, the main role of SNNs is to understand and mimic human brain functionalities, since SNNs make it possible to approximate efficient learning and recognition tasks in neuro-biology. Hence, to have a better implementation of SNNs in hardware, it would be necessary to describe an efficient analog of the biological neuron. Therefore, in what follows, we are interested in the SNN algorithm starting from the third step, where the synaptic current response is summed into the input of the LIF neuron, to the last step of the SNN algorithm. In particular, at the third step of the SNN algorithm, it is assumed that the summation of synaptic current responses can be a constant, can take a deterministic form, or can even be represented by a random type of current. To get closer to the real scenarios of neuronal models, we should also account for the existence of random fluctuations in the systems. Specifically, the random inputs arise primarily through sensory fluctuations, brainstem discharges and thermal energy (random fluctuations at a microscopic level, such as Brownian motions of ions). The stochasticity can arise even from the devices which are used for medical treatments, e.g., devices for injecting currents into the neuronal systems. For simplicity, we consider a LIF synaptic conductance model with additive and multiplicative noise input currents in the presence of a random refractory period.

In biological systems such as brain networks, neurons are not physically joined. Instead, a spike in the presynaptic cell causes a chemical, or a neurotransmitter, to be released into a small space between the neurons called the synaptic cleft [14]. Therefore, in what follows, we will focus on investigating chemical synaptic transmission and study how excitation and inhibition affect the patterns in the neurons’ spiking output. In this section, we consider a model of synaptic conductance dynamics. In particular, neurons receive a myriad of excitatory and inhibitory synaptic inputs at dendrites. To better understand the mechanisms of synaptic conductance dynamics, we investigate the dynamics of the random excitatory (E) and inhibitory (I) inputs to a neuron [21].

In general, synaptic inputs are a combination of excitatory and inhibitory neurotransmitters. Excitatory neurotransmitters depolarize the cell and drive it towards the spike threshold, while inhibitory neurotransmitters hyperpolarize it and drive it away from the spike threshold. These chemical factors cause specific ion channels on the postsynaptic neuron to open. This results in a change in the neuron’s conductance. Therefore, the current will flow in or out of the cell [14].

For simplicity, we define transmitter-activated ion channels as an explicitly time-dependent conductivity $g_{\rm syn}(t)$. Such conductance transients can be generated by the following equation (see, e.g., [9, 14]):

$$\frac{dg_{\rm syn}(t)}{dt} = \bar{g}_{\rm syn} \sum_k \delta(t - t_k) - \frac{g_{\rm syn}(t)}{\tau_{\rm syn}}, \tag{1}$$

where $\bar{g}_{\rm syn}$ (synaptic weight) is the maximum conductance elicited by each incoming spike, while $\tau_{\rm syn}$ is the synaptic time constant and $\delta(\cdot)$ is the Dirac delta function. Note that the summation runs over all spikes received by the neuron at times $t_k$. Using Ohm’s law, we have the following formula for converting conductance changes to the current:

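$$I_{\rm syn}(t) = -g_{\rm syn}(t)\,\bigl(V(t) - E_{\rm syn}\bigr), \tag{2}$$

where $g_{\rm syn}(t)$ is the synaptic conductance, $V(t)$ is the membrane potential, and the synaptic reversal potential $E_{\rm syn}$ represents the direction of current flow and the excitatory or inhibitory nature of the synapse.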
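As an illustration, the conductance dynamics in Eq. (1) and the conversion to a current in Eq. (2) can be sketched with a forward Euler update. This is a minimal sketch rather than the implementation used for the results reported here: the function names, the numerical values, and the sign convention (a positive current drives the membrane potential towards the reversal potential) are assumptions made for the example.

```python
import numpy as np

def synaptic_conductance(spikes, dt, g_bar, tau_syn):
    """Forward Euler integration of dg/dt = -g/tau_syn + g_bar * sum_k delta(t - t_k).

    spikes  : 1-D array with the number of presynaptic spikes in each time step
    dt      : time step (ms)
    g_bar   : conductance jump per incoming spike (nS)
    tau_syn : synaptic time constant (ms)
    """
    g = np.zeros(len(spikes))
    for k in range(len(spikes) - 1):
        # decay over one step, then a jump of g_bar per incoming spike
        g[k + 1] = g[k] - (dt / tau_syn) * g[k] + g_bar * spikes[k + 1]
    return g

def synaptic_current(g, v, e_syn):
    """Ohm's law; assumed sign: a positive current drives v towards e_syn."""
    return -g * (v - e_syn)

# Hypothetical values, chosen only for illustration
dt = 0.1
spikes = np.zeros(1000)
spikes[[100, 400, 420]] = 1
g = synaptic_conductance(spikes, dt, g_bar=2.4, tau_syn=2.0)
i_syn = synaptic_current(g, v=-65.0, e_syn=0.0)
```

In this sketch each presynaptic spike produces an instantaneous jump in the conductance, followed by a decay with time constant `tau_syn`; closely spaced spikes (steps 400 and 420) therefore add up.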

In general, the total synaptic input current is the sum of both excitatory and inhibitory inputs. We assume that the total excitatory and inhibitory conductances received at time $t$ are $g_E(t)$ and $g_I(t)$, and their corresponding reversal potentials are $E_E$ and $E_I$, respectively. We define the total synaptic current by the following equation:

$$I_{\rm syn}(V(t), t) = -g_E(t)\,\bigl(V(t) - E_E\bigr) - g_I(t)\,\bigl(V(t) - E_I\bigr). \tag{3}$$

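Therefore, the corresponding membrane potential dynamics of the LIF neuron under synaptic current (see, e.g., [21]) can be described as follows:

$$\tau_m \frac{dV(t)}{dt} = -\bigl(V(t) - E_L\bigr) - \frac{g_E(t)}{g_L}\bigl(V(t) - E_E\bigr) - \frac{g_I(t)}{g_L}\bigl(V(t) - E_I\bigr) + \frac{I_{\rm inj}}{g_L}, \tag{4}$$

where $V(t)$ is the membrane potential, $I_{\rm inj}$ is the external input current, while $\tau_m$ is the membrane time constant. Here, $g_L$ is the leak conductance and $E_L$ is the leak reversal potential. We consider the membrane potential model where a spike takes place whenever $V(t)$ crosses $V_{\rm th}$. Here, $V_{\rm th}$ denotes the membrane potential threshold to fire an action potential. In that case, a spike is recorded and $V(t)$ resets to the value $V_{\rm reset}$. This is summarized in the reset condition $V(t) = V_{\rm reset}$ if $V(t) \geq V_{\rm th}$. We thus obtain the following LIF model with a reset condition:

$$\tau_m \frac{dV(t)}{dt} = -\bigl(V(t) - E_L\bigr) - \frac{g_E(t)}{g_L}\bigl(V(t) - E_E\bigr) - \frac{g_I(t)}{g_L}\bigl(V(t) - E_I\bigr) + \frac{I_{\rm inj}}{g_L} \quad \text{if } V(t) < V_{\rm th}, \tag{5}$$

$$V(t) = V_{\rm reset} \quad \text{otherwise}. \tag{6}$$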

In this model, we consider a random synaptic input by introducing the following random input current (additive noise) , where is the zero-mean Gaussian white noise with unit variance. For the multiplicative noise case, the applied current is set to . Here, denote the standard deviations of these random components to the inputs. When considering such random input currents, the equation (4) can be considered as the following Langevin stochastic equation (see, e.g., [25]):

| (7) |
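A discrete-time integration of such a Langevin equation can be sketched with the Euler-Maruyama scheme. The sketch below is not the code used for the results reported here. It assumes an additive current with a constant mean `mu_inj` plus Gaussian white noise of amplitude `sigma_inj`, a `sqrt(dt)` scaling of the noise increment, a fixed refractory period during which the potential is held at the reset value, and placeholder parameter values. The function name `simulate_lif` is hypothetical.

```python
import numpy as np

def simulate_lif(g_e, g_i, dt, mu_inj, sigma_inj, rng,
                 tau_m=10.0, g_l=10.0, e_l=-75.0, e_e=0.0, e_i=-80.0,
                 v_th=-55.0, v_reset=-75.0, v_init=-65.0, t_ref=2.0):
    """Euler-Maruyama integration of a conductance-based LIF neuron.

    All default parameter values are placeholders, not the paper's settings.
    g_e, g_i : excitatory / inhibitory conductance traces (nS), same length
    mu_inj   : mean injected current (pA); sigma_inj : noise amplitude
    """
    n = len(g_e)
    v = np.full(n, v_init)
    spike_steps = []
    refractory_left = 0.0  # remaining refractory time (ms)
    for k in range(n - 1):
        if refractory_left > 0.0:
            # hold the membrane at the reset value during the refractory period
            v[k + 1] = v_reset
            refractory_left -= dt
            continue
        drift = (-(v[k] - e_l)
                 - g_e[k] / g_l * (v[k] - e_e)
                 - g_i[k] / g_l * (v[k] - e_i)
                 + mu_inj / g_l) / tau_m
        # additive white noise enters through a sqrt(dt) increment (assumed scaling)
        noise = sigma_inj / (g_l * tau_m) * np.sqrt(dt) * rng.standard_normal()
        v[k + 1] = v[k] + drift * dt + noise
        if v[k + 1] >= v_th:
            # threshold crossing: record the spike, reset, start the refractory period
            spike_steps.append(k + 1)
            v[k + 1] = v_reset
            refractory_left = t_ref
    return v, np.array(spike_steps, dtype=int)

rng = np.random.default_rng(0)
n_steps = 10000                      # 1000 ms at dt = 0.1 ms
zeros = np.zeros(n_steps)            # no synaptic input in this toy run
v, spikes = simulate_lif(zeros, zeros, dt=0.1, mu_inj=300.0, sigma_inj=0.0, rng=rng)
```

With the placeholder values above and no noise, the steady-state potential `e_l + mu_inj / g_l` lies above the threshold, so the neuron fires regularly; setting `sigma_inj > 0` perturbs the spike times.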

In our model, we use the simplest input spikes, generated by a Poisson process, which provide a suitable approximation to stochastic neuronal firings [29]. These input spikes enter through the spike-train sum $\sum_k \delta(t - t_k)$ in equation (1). In particular, every input spike arrives independently of the other spikes. To design a spike train generator, let us denote the probability of firing a spike within a short interval $\Delta t$ (see, e.g., [9]) by $P(1 \text{ spike during } \Delta t) = r_j \Delta t$, where $j = E, I$, with $r_E$ and $r_I$ representing the instantaneous excitatory and inhibitory firing rates, respectively. This expression is used to generate a Poisson spike train by first subdividing time into a group of short intervals through small time steps $\Delta t$. At each time step, we define a random variable $x_{\rm rand}$ with uniform distribution over the range between 0 and 1. Then, we compare it with the probability of firing a spike, which is described as follows:

$$r_j \Delta t > x_{\rm rand}: \text{ a spike is fired}; \qquad r_j \Delta t \leq x_{\rm rand}: \text{ no spike is fired}. \tag{8}$$
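The comparison of a uniform random number with the firing probability in each time bin can be sketched as follows. This is an illustrative sketch only: the function name, the rate, the number of trains, and the time step are assumptions, and the approximation is valid only when the rate times the time step is much smaller than 1.

```python
import numpy as np

def poisson_spike_trains(rate_hz, n_trains, n_steps, dt_ms, rng):
    """Bernoulli approximation of independent Poisson spike trains.

    A spike is emitted in a bin when a uniform draw falls below rate * dt.
    """
    p_spike = rate_hz * dt_ms / 1000.0          # probability of a spike per bin
    x_rand = rng.random((n_trains, n_steps))    # uniform on [0, 1)
    return (x_rand < p_spike).astype(int)

rng = np.random.default_rng(42)
# 80 trains, 1 s of simulated time at dt = 0.1 ms (hypothetical values)
trains = poisson_spike_trains(rate_hz=10.0, n_trains=80, n_steps=10000, dt_ms=0.1, rng=rng)
mean_rate = trains.sum() / 80.0                 # average spikes per train per second
```

Summing the rows of `trains` gives the number of presynaptic spikes per time step, which is the quantity that drives the conductance jumps.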

In this work, we also investigate the effects of random refractory periods [22]. We define the random refractory periods as , where .
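One possible way to draw a random refractory period after each spike is sketched below. The Gaussian form with mean `mu_ref` and standard deviation `sigma_ref`, the truncation at zero, and the function name are assumptions made for illustration, not a statement of the definition used in this work.

```python
import numpy as np

def sample_refractory_period(mu_ref, sigma_ref, rng):
    """Draw one refractory period (ms) from a Gaussian, truncated at zero."""
    return max(0.0, mu_ref + sigma_ref * rng.standard_normal())

rng = np.random.default_rng(1)
# hypothetical mean of 2 ms and standard deviation of 1 ms
samples = np.array([sample_refractory_period(2.0, 1.0, rng) for _ in range(5000)])
```

Setting `sigma_ref` to zero recovers a fixed refractory period, which gives a direct baseline for comparison with the random case.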

2.2 Firing rate and spike time irregularity

In general, the irregularity of spike trains can provide information about stimulating activities in a neuron. The output of a LIF synaptic conductance neuron with multiple inputs can be characterized by the coefficient of variation (CV) of the inter-spike interval (ISI). In this work, we show that an increase in the standard deviation of the refractory period can lead to an increase in the irregularity of the spike trains (see also [13]).

We define the spike regularity via the coefficient of variation of the inter-spike interval (see, e.g., [8, 13]) as follows:

$$CV_{\rm ISI} = \frac{\sigma_{\rm ISI}}{\mu_{\rm ISI}},$$

where $\sigma_{\rm ISI}$ is the standard deviation and $\mu_{\rm ISI}$ is the mean of the ISI of an individual neuron.
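The coefficient of variation of the ISI can be computed from a list of spike times as in the following sketch. The function name and the two test spike trains are illustrative assumptions.

```python
import numpy as np

def isi_cv(spike_times):
    """Coefficient of variation of the inter-spike intervals; NaN if fewer than 3 spikes."""
    isi = np.diff(np.sort(np.asarray(spike_times, dtype=float)))
    if isi.size < 2 or isi.mean() == 0.0:
        return float("nan")
    return isi.std() / isi.mean()

regular = np.arange(0.0, 1000.0, 10.0)              # perfectly periodic train (ms)
rng = np.random.default_rng(0)
poisson = np.cumsum(rng.exponential(10.0, 5000))    # Poisson process: exponential ISIs
cv_regular = isi_cv(regular)
cv_poisson = isi_cv(poisson)
```

A perfectly regular train gives a value of 0, while a Poisson train gives a value close to 1, so larger values indicate more irregular spiking.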

In the next section, we consider the output firing rate as a function of Gaussian white noise mean or direct current value, known as the input-output transfer function of the neuron.

3 Numerical results for the LIF synaptic conductance model

In this section, we take a single neuron at the dendrite and study how the neuron behaves when it is bombarded with both excitatory and inhibitory spike trains (see, e.g., [20, 21]).

The simulations in this section have been carried out using a discrete-time integration based on the Euler method implemented in Python.

In the simulations, we choose the parameter set as follows: (mV), (mV), (mV), (mV), (mV), , (ms), (ms), (ms), (nS), (nS), , , spikes, spikes. Here, and represent the number of excitatory and inhibitory presynaptic spike trains, respectively. These parameters have also been used in [20, 21] for dynamic clamp experiments and we take them for our model validation. In this subsection, we use the excitatory and inhibitory conductances provided in Fig. 2 for all of our simulations.

The main numerical results of our analysis here are shown in Figs. 3-11, where we have plotted the time evolution of the membrane potential calculated based on model (4), the distribution of the ISI and the corresponding spike irregularity profile. We investigate the effects of additive and multiplicative types of random input currents in the presence of a random refractory period on a LIF neuron under synaptic conductance dynamics. Under a Poissonian spike input, the random external currents and random refractory period influence the spiking activity of a neuron in the cell membrane potential.

3.1 Additive noise

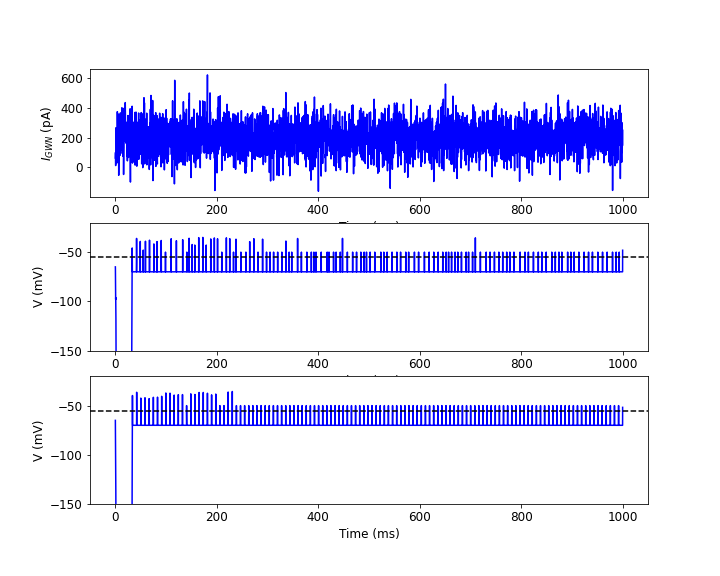

In Fig. 3, we have plotted the Gaussian white noise current profile, the time evolution of the membrane potential with Gaussian white noise input current ( (pA)) and direct input current ( (pA)). In this case, we fix the value of (ms). We observe that the time evolution of the membrane potential looks quite similar in the two cases. Note that a burst occurred when a neuron spiked more than once within 25 (ms) (see, e.g., [26]). In this case, when considering the presence of random input current and random refractory period in the system, we observe there exist bursts in the case presented in the second row of Fig. 3.

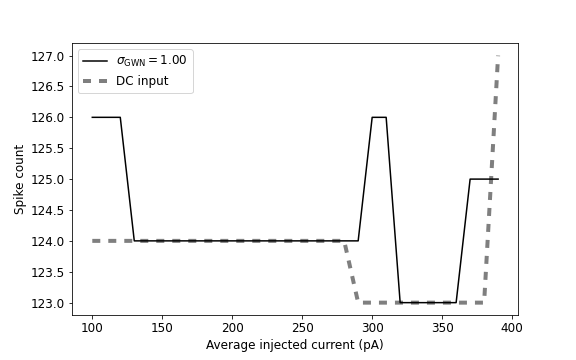

We look also at the input-output transfer function of the neuron, the output firing as a function of average injected current in Fig. 4. In particular, we see that the spike count values are slightly fluctuating when we add the random refractory period into the system (in the right panel of Fig. 4). Moreover, in the averaged injected current intervals (pA) and (pA), the spike count value is the same in both cases: the Gaussian white noise and direct currents in the right panel of Fig. 4. We have seen this phenomenon for (pA) also in the Fig. 3.

In Fig. 5, we have plotted the spike count distribution as a function of ISI. We observe that the coefficient increases when we increase the value of . The spikes are distributed almost entirely in the ISI interval from 6 to 9 (ms) in the first row, while they are distributed mostly in the interval from 0 to 21 (ms) in the second row of Fig. 5. The ISI distribution presented in the first row of Fig. 5 reflects bursting modes. To better understand this phenomenon, let us look at the spike irregularity profile in Fig. 6.

In Fig. 6, we look at the corresponding spike irregularity profile of the spike count in Figs. 5-6. In this plot, we fix the external current (pA) together with considering different values of . We observe that when we increase the value of the coefficient increases. In general, when we increase the mean of the Gaussian white noise, at some point, the effective input means are above the spike threshold and then the neuron operates in the so-called mean-driven regime. Hence, when the input is sufficiently high, the neuron is charged up to the spike threshold and then it is reset. This essentially gives an almost regular spiking. However, in our case, by considering various values of the random refractory period, we see that increases when we increase the values of . This is visible in Fig. 6, increases from 0.1 to 0.6 when increases from 1 to 6. Note that an increased ISI regularity could result in bursting [23]. Moreover, the spike trains are substantially more regular with a range , and more irregular when [27]. Therefore, in some cases, the presence of random input current with oscillations could lead to the burst discharge.

3.2 Multiplicative noise

In Fig. 7, we have plotted the Gaussian white noise current profile, the time evolution of the membrane potential with multiplicative noise input current ( (pA)) and direct input current ( (pA)). In this case, we fix the value of (ms). There are bursting modes in the membrane potential in both cases. This is due to the presence of in the input current together with the random refractory period in the system.

However, when we increase the leak conductance from (nS) to (nS), the burst discharges are dramatically reduced in the case with multiplicative noise in Fig. 8. In particular, we observe fluctuations in the membrane potential in the second row of Fig. 8. There is an increase in the time interval between two nearest neighbor spikes in both cases. In order to understand better such phenomena, let us look at the following plots. From now on, we will use the parameter (nS) for cases in Figs. 9-11.

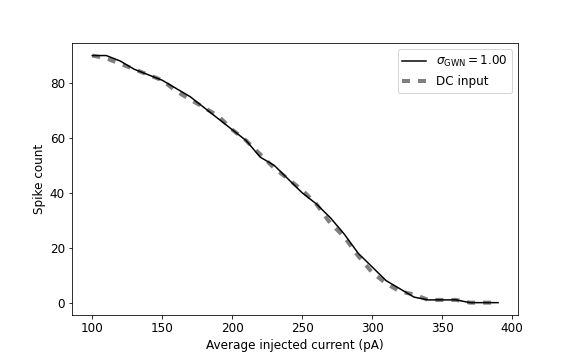

In Fig. 9, we look at the input-output transfer function of the neuron, i.e., the output firing rate as a function of the input mean. We observe that the input-output transfer function looks quite similar in both cases: direct and random refractory periods. There are slight fluctuations in the spike count profile in the case with a random refractory period. It is clear that the presence of multiplicative noise strongly affects the spiking activity in our system compared to the case with additive noise. The spiking activity of the neuron is dramatically reduced in the presence of multiplicative noise in the system.

In Fig. 10, we have plotted the spike distribution as a function of ISI. In the first row of Fig. 10, we see that the spikes are distributed almost entirely in the ISI interval [9, 18] (ms). Moreover, when we increase the value of from 0.5 to 4 the spike irregularity values increase. In addition, the spikes are distributed almost entirely in the ISI interval [1, 25] (ms) in the second row of Fig. 10. To better understand this phenomenon, we look at the spike irregularity profile of our system in the presence of multiplicative noise and a random refractory period in Fig. 11. In particular, we observe that the spike irregularity increases when we increase the values of similar to the case of additive noise. Furthermore, we see that the larger injected currents are, the higher are the values of .

Additionally, we notice that the presence of the random refractory period increases the spiking activity of the neuron. The presence of additive and multiplicative noise causes burst discharges in the system. However, when we increase the value of leak conductance, the burst discharges are strongly reduced in the case with multiplicative noise. Under suitable values of average injected current as well as the values of random input current and random refractory period, the irregularity of spike trains increases. The presence of additive noise could lead to the occurrence of bursts, while the presence of multiplicative noise with random refractory period could reduce the burst discharges in some cases. This effect may lead to an improvement in the carrying of information about stimulating activities in the neuron [1]. Moreover, the study of random factors in the LIF conductance model would potentially contribute to further progress in addressing the challenge of how the active membrane currents generating bursts of action potentials affect neural coding and computation [19]. Finally, we remark that noise may come from different sources, e.g., devices, environment, chemical reactions. Moreover, as such, noise is not always a problem for neurons, it can also bring benefits to nervous systems [11, 1].

4 Conclusion

We have proposed and described a LIF synaptic conductance model with random inputs. Using the description based on Langevin stochastic dynamics in a numerical setting, we analyzed the effects of noise in a cell membrane potential. Specifically, we provided details of the model along with representative numerical examples and discussed the effects of random inputs on the time evolution of the cell membrane potential, the corresponding spiking activities of neurons and the firing rates. Our numerical results have shown that the random inputs strongly affect the spiking activities of neurons in the LIF synaptic conductance model. Furthermore, we observed that the presence of multiplicative noise causes burst discharges in the LIF synaptic conductance dynamics. However, when the value of the leak conductance is increased, the bursting modes are reduced. When the values of the average injected current are large enough, together with an increased standard deviation of the refractory period, the irregularity of spike trains increases. With more irregular spike trains, we can potentially expect a decrease in bursts in the LIF synaptic conductance system. Random inputs in LIF neurons could reduce the response of the neuron to each stimulus in SNN and/or ANN systems. A better understanding of uncertainty factors in neural network systems could contribute to further developments of SNN algorithms for higher-level brain-inspired functionality studies and other applications.

Acknowledgment

The authors are grateful to the NSERC and the CRC Program for their support. RM also acknowledges the support of the BERC 2022-2025 program and the Spanish Ministry of Science, Innovation and Universities through the Agencia Estatal de Investigacion (AEI) BCAM Severo Ochoa excellence accreditation SEV-2017-0718 and the Basque Government fund AI in BCAM EXP. 2019/00432.

References

- [1] Bauermann, J., Lindner, B.: Multiplicative noise is beneficial for the transmission of sensory signals in simple neuron models. BioSystems 178, 25–31 (2019)

- [2] Brigner, W.H., Hu, X., Hassan, N., Jiang-Wei, L., Bennett, C.H., Garcia-Sanchez, F., Akinola, O., Pasquale, M., Marinella, M.J., Incorvia, J.A.C., Friedman, J.S.: Three artificial spintronic leaky integrate-and-fire neurons. SPIN 10(2), 2040003 (2020)

- [3] Burkitt, A.: A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biological Cybernetics 95(1), 1–19 (2006)

- [4] Burkitt, A.: A review of the integrate-and-fire neuron model: II. Inhomogeneous synaptic input and network properties. Biological Cybernetics 95(2), 97–112 (2006)

- [5] Cavallari, S., Panzeri, S., Mazzoni, A.: Comparison of the dynamics of neural interactions between current-based and conductance-based integrate-and-fire recurrent networks. Front. Neural Circuits 8(12) (2014)

- [6] Chen, X., Yajima, T., Inoue, I.H., Iizuka, T.: An ultra-compact leaky integrate-and-fire neuron with long and tunable time constant utilizing pseudo resistors for spiking neural networks. Accepted for publication in Japanese Journal of Applied Physics (2021)

- [7] Chowdhury, S.S., Lee, C., Roy, K.: Towards understanding the effect of leak in spiking neural networks. Neurocomputing 464, 83–94 (2021)

- [8] Christodoulou, C., Bugmann, G.: Coefficient of variation vs. mean interspike interval curves: What do they tell us about the brain? Neurocomputing 38-40, 1141–1149 (2001)

- [9] Dayan, P., Abbott, L.F.: Theoretical Neuroscience. The MIT Press Cambridge, Massachusetts London, England (2005)

- [10] Dutta, S., Kumar, V., Shukla, A., Mohapatra, N.R., Ganguly, U.: Leaky integrate and fire neuron by charge-discharge dynamics in floating-body MOSFET. Scientific Reports 7(8257) (2017)

- [11] Faisal, A.D., Selen, L.P.J., Wolpert, D.M.: Noise in the nervous system. Nature Reviews Neuroscience 9, 292–303 (2008)

- [12] Fardet, T., Levina, A.: Simple models including energy and spike constraints reproduce complex activity patterns and metabolic disruptions. PLoS Comput Biol 16(12), e1008503 (2020)

- [13] Gallinaro, J.V., Clopath, C.: Memories in a network with excitatory and inhibitory plasticity are encoded in the spiking irregularity. PLoS Comput Biol 17(11), e1009593 (2021)

- [14] Gerstner, W., Kistler, W.M., Naud, R., Paninski, L.: Neuronal Dynamics: From single neurons to networks and models of cognition. Cambridge University Press (2014)

- [15] Gerum, R.C., Schilling, A.: Integration of leaky-integrate-and-fire neurons in standard machine learning architectures to generate hybrid networks: A surrogate gradient approach. Neural Computation 33, 2827–2852 (2021)

- [16] Guo, T., Pan, K., Sun, B., Wei, L., Y., Zhou, Y.N., W, Y.A.: Adjustable leaky-integrate-and-fire neurons based on memristor coupled capacitors. Materials Today Advances 12(100192) (2021)

- [17] Hendrycks, D., Dietterich, T.: Benchmarking neural network robustness to common corruptions and perturbations. In: International Conference on Learning Representations (2019)

- [18] Jaras, I., Harada, T., Orchard, M.E., Maldonado, P.E., Vergara, R.C.: Extending the integrate-and-fire model to account for metabolic dependencies. Eur J Neurosci. 54(3), 5249–5260 (2021)

- [19] Kepecs, A., Lisman, J.: Information encoding and computation with spikes and bursts. Network: Computation in Neural Systems 14, 103–118 (2003)

- [20] Latimer, K.W., Rieke, F., Pillow, J.W.: Inferring synaptic inputs from spikes with a conductance-based neural encoding model. eLife 8(e47012) (2019)

- [21] Li, S., Liu, N., Yao, L., Zhang, X., Zhou, D., Cai, D.: Determination of effective synaptic conductances using somatic voltage clamp. PLoS Comput Biol 15(3), e1006871 (2019)

- [22] Mahdi, A., Sturdy, J., Ottesen, J.T., Olufsen, M.S.: Modeling the afferent dynamics of the baroreflex control system. PLoS Comput Biol 9(12), e1003384 (2013)

- [23] Maimon, G., Assad, J.A.: Beyond Poisson: Increased spike-time regularity across primate parietal cortex. Neuron 62(3), 426–440 (2009)

- [24] Nandakumar, S.R., Boybat, I., Gallo, M.L., Eleftheriou, E., Sebastian, A., Rajendran, B.: Experimental demonstration of supervised learning in spiking neural networks with phase change memory synapses. Scientific Reports 10(8080) (2020)

- [25] Roberts, J.A., Friston, K.J., Breakspear, M.: Clinical applications of stochastic dynamic models of the brain, Part I: A primer. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging 2, 216–224 (2017)

- [26] So, R.Q., Kent, A.R., Grill, W.M.: Relative contributions of local cell and passing fiber activation and silencing to changes in thalamic fidelity during deep brain stimulation and lesioning: a computational modeling study. J Comput Neurosci 32, 499–519 (2012)

- [27] Stiefel, K.M., Englitz, B., Sejnowski, T.J.: Origin of intrinsic irregular firing in cortical interneurons. PNAS 110(19), 7886–7891 (2013)

- [28] Teeter, C., Iyer, R., Menon, V., Gouwens, N., Feng, D., Berg, J., Szafer, A., Cain, N., Zeng, H., Hawrylycz, M., Koch, C., Mihalas, S.: Generalized leaky integrate-and-fire models classify multiple neuron types. Nature Communications 9(709) (2018)

- [29] Kuhn, A., Aertsen, A., Rotter, S.: Neuronal integration of synaptic input in the fluctuation-driven regime. The Journal of Neuroscience 24(10), 2345–2356 (2004)

- [30] Teka, W., Marinov, T.M., Santamaria, F.: Neuronal spike timing adaptation described with a fractional leaky integrate-and-fire model. PLoS Comput Biol 10(3) (2014)

- [31] Van Pottelbergh, T., Drion, G., Sepulchre, R.: From biophysical to integrate-and-fire modeling. Neural Computation 33(3), 563–589 (2021)

- [32] Woo, J., Kim, S.H., Han, K., M.: Characterization of dynamics and information processing of integrate-and-fire neuron models. J. Phys. A: Math. Theor. 54(445601) (2021)