Eberhard limit for photon-counting Bell tests and its utility in quantum key distribution

Abstract

Loophole-free Bell tests are essential for device-independent quantum key distribution (DI-QKD), since any open loophole could be exploited by an adversary to undermine the security of the protocol. Crucial work by Eberhard demonstrated that weakly entangled two-qubit states are far more resistant to the detection loophole than maximally entangled states, allowing the loophole to be closed with detection efficiencies greater than 2/3. Here we demonstrate that the same limit holds for photon-counting CHSH Bell tests, which can demonstrate non-locality for higher-dimensional multiphoton states such as the two-mode squeezed vacuum and generalized Holland-Burnett states. In fact, we show evidence that these tests are in some sense universal, allowing feasible detection-loophole-free tests for any multiphoton bipartite state, as long as the two modes are well correlated in photon number. Additionally, by going beyond the typical two-input, two-output Bell scenario, we show that there are photon-counting CGLMP inequalities which also match the Eberhard limit, paving the way for more exotic loophole-free Bell tests. Finally, we show that by exploiting the increased loss tolerance of non-maximally entangled states, one can increase the key rates and loss tolerances of DI-QKD protocols based on photon-counting tests.

I Introduction

Loophole-free Bell tests are the gold standard of entanglement testing [1, 2, 3, 4, 5]. They provide definitive proof that multiple parties share quantum entanglement, which can then be used for applications such as device-independent quantum key distribution (DI-QKD) [6, 7, 8, 9, 10, 11, 12, 13], quantum metrology [14, 15, 16, 17] and device-independent randomness generation [18, 19, 20]. If instead some loopholes remain open, the proof is not definitive but relies on extra assumptions. For example, the detection loophole is opened by filtering out some measurement results (usually when a photon is lost), i.e. by performing post-selection. The proof of entanglement is then not definitive but rests on an additional fair-sampling assumption [21, 22]. In particular, QKD protocols without loophole-free Bell tests are vulnerable to attacks that exploit these extra security assumptions, and commercial devices have been routinely hacked as a result [23, 24].

Closing the detection loophole is experimentally demanding and requires minimizing losses so that the detection efficiency exceeds some threshold. The crucial first steps towards experiments that closed the detection loophole were enabled by pivotal work by Eberhard, who demonstrated that using non-maximally entangled states can greatly relax the strict efficiency requirements [25]. Specifically, using a maximally entangled polarization Bell pair $(|HV\rangle + |VH\rangle)/\sqrt{2}$, the detection loophole is closed only for detector efficiencies $\eta > 2(\sqrt{2}-1) \approx 82.8\%$, whereas using a non-maximally entangled state $(|HV\rangle + r|VH\rangle)/\sqrt{1+r^2}$, one can approach the limit $\eta \to 2/3$ as $r \to 0$. Paradoxically, this is when the state approaches the separable state $|HV\rangle$, so that it becomes less tolerant to other types of noise. In practice, the optimal value of $r$ then depends on the relative importance of different sources of imperfection. The first experiments exploiting the Eberhard inequality used a non-maximally entangled state with a specific value of $r$ [26].
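The 82.8% threshold for maximally entangled states can be reproduced with a short calculation. The sketch below assumes a maximally entangled pair measured with the standard Tsirelson-optimal CHSH settings, identical detectors of efficiency $\eta$, and a local rule that maps every no-click event to the outcome $+1$, so that no results are discarded. Under these assumptions one-sided clicks contribute nothing (the marginals are unbiased), and the CHSH value is $2\sqrt{2}\,\eta^2 + 2(1-\eta)^2$. The function names are illustrative. The sketch does not attempt Eberhard's re-optimization for non-maximally entangled states, which is what lowers the threshold towards 2/3.

```python
import math

def chsh_with_efficiency(eta: float) -> float:
    """CHSH value for a maximally entangled pair with Tsirelson-optimal settings,
    detector efficiency eta on both sides, and every no-click mapped to +1."""
    both_click = eta ** 2 * 2 * math.sqrt(2)  # quantum correlations when both detect
    neither_click = (1 - eta) ** 2 * 2        # outcomes (+1, +1) in all terms: 1 + 1 + 1 - 1
    # One-sided clicks contribute zero because the marginals of a maximally
    # entangled state are unbiased.
    return both_click + neither_click

def critical_efficiency(tol: float = 1e-12) -> float:
    """Bisection for the efficiency at which the CHSH value equals the local bound 2."""
    lo, hi = 0.5, 1.0  # no violation at 0.5, violation at 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if chsh_with_efficiency(mid) > 2:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

eta_c = critical_efficiency()
print(f"{eta_c:.4f}")  # 0.8284, i.e. 2(sqrt(2) - 1)
```

Mapping no-clicks to a fixed outcome is what keeps the test free of post-selection: every round contributes to the CHSH sum.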

Nowadays loophole-free Bell tests are becoming more common [1, 2, 3, 4], and the first fully DI-QKD demonstrations have been performed [27]. However, until now all these loophole-free Bell tests have been performed with two-qubit polarization/spin singlet states using the standard CHSH test based on polarization/spin measurements [28], which precludes testing the entanglement of more exotic states, such as multiphoton/qudit states. Bell tests for multiphoton states of light based on photon counting were first theorised in the 1990s [29, 30, 31, 32] and have since been extended in a number of papers [33, 34, 35, 36], including a proposal for DI-QKD based on these tests [37]. These tests work for a variety of important states, such as the two-mode squeezed vacuum [33], generalized Holland-Burnett states [37] and hybrid-entangled Schrödinger cat states [38].

In this work we investigate whether these photon-counting tests form a universal test for entanglement in the photon-number degree of freedom. We demonstrate that any entangled state with high photon-number correlations can violate one of a number of Bell inequalities without any post-selection or filtering of results, and so can in principle close the detection loophole. This requires low losses and high-efficiency detectors; however, we demonstrate that states which asymptotically approach the vacuum state reach the maximum possible efficiency tolerance, $\eta \to 2/3$, matching that obtained by Eberhard for polarization/spin measurements. Moreover, this tolerance is not limited to the CHSH tests introduced in the original papers, but is also a property of the multiple-output CGLMP inequalities [39], opening up loophole-free Bell tests to both more exotic states and more exotic inequalities. Finally, we demonstrate that the Eberhard inequality found in this paper has utility for DI-QKD protocols, allowing one to increase both the loss tolerance and the key rates by using less than maximally entangled states.

II Photon-counting Bell tests

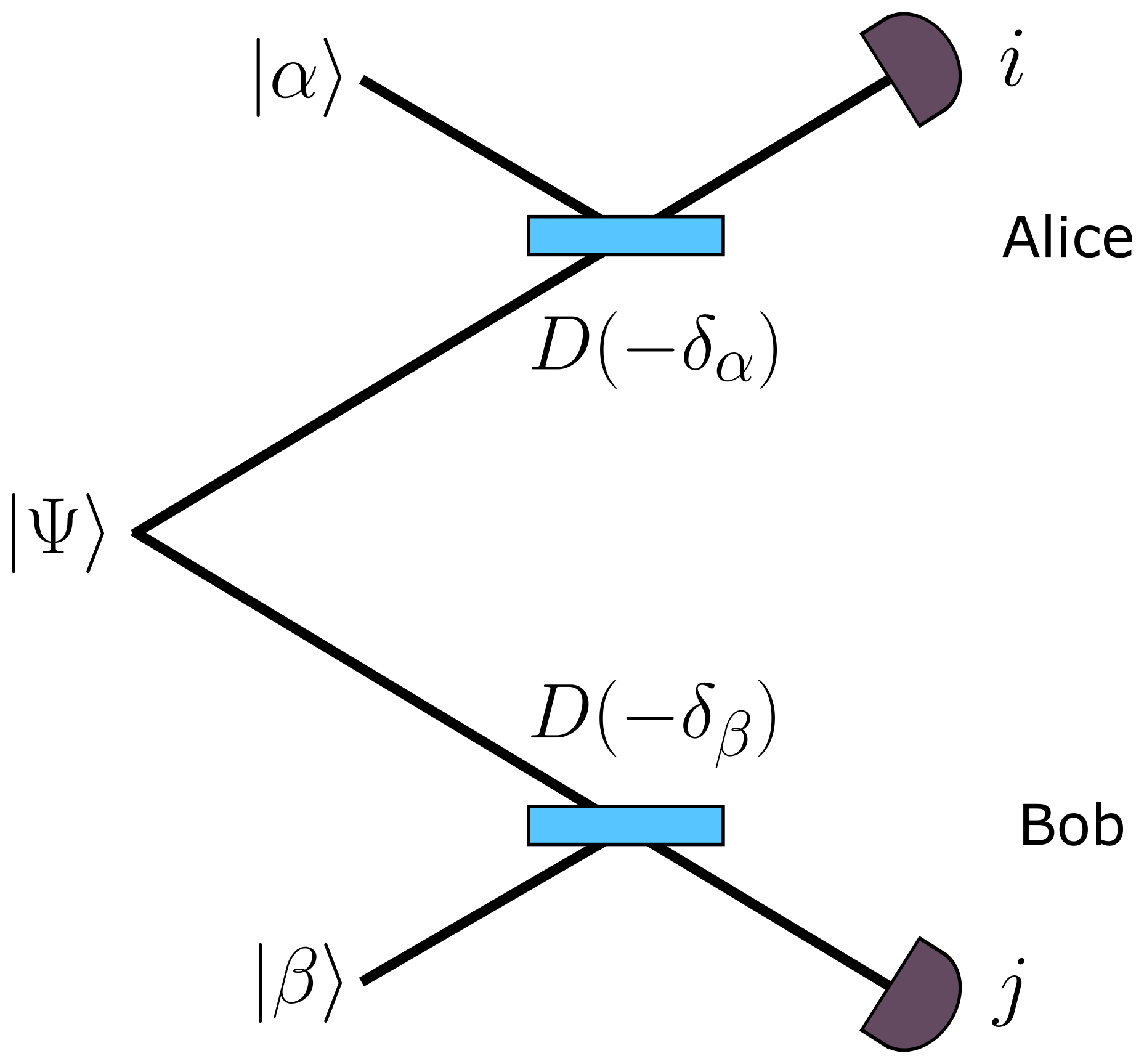

Figure 1 depicts a Bell test for a two-mode state of light $|\psi\rangle$. The two modes are spatially separated, with one mode sent to Alice and the other to Bob. Each party interferes their mode with a coherent state, $|\gamma_A\rangle$ and $|\gamma_B\rangle$ respectively, on variable beam splitters with reflectivities $R_A$ and $R_B$. Finally, they use photon-number-resolving (PNR) detectors to measure the numbers of photons $n_A$, $n_B$ in the two transmitted modes behind the beam splitters. The complex amplitude $\gamma_A$ and the reflectivity $R_A$ together form a ‘measurement setting’ for Alice, while $\gamma_B$ and $R_B$ form the corresponding setting for Bob. In the limits $R_{A,B} \to 0$ and $|\gamma_{A,B}| \to \infty$, with $\sqrt{R_A}\,\gamma_A$ and $\sqrt{R_B}\,\gamma_B$ held fixed, the beam splitter interactions are equivalent to displacement operators with complex amplitudes $\alpha$ and $\beta$, where $\alpha = \sqrt{R_A}\,\gamma_A$ (up to a convention-dependent phase) and $\beta$ is defined similarly. By collecting measurement statistics for several settings per party, Alice and Bob can prove entanglement of their shared state, either by showing violation of a Bell inequality or via linear programming methods.

Since the coherent states have a nonzero probability of containing any number of photons, $n_A$ and $n_B$ can be any non-negative integer, and the number of measurement outcomes is unbounded. To make use of known Bell inequalities it is thus necessary to reduce these to a finite number of outcomes, $d$. The simplest case, $d = 2$, has been analyzed in the previous literature [29, 30, 31, 32]. One way to reduce the output to two outcomes is for Alice (Bob) to assign the outcome $+1$ when zero photons are transmitted and the outcome $-1$ when a non-zero number of photons is transmitted. The probability of a joint measurement of zero photons is then

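$$P_{\alpha\beta}(0,0) = \left|\langle 0,0|\hat D^\dagger(\alpha)\otimes\hat D^\dagger(\beta)|\psi\rangle\right|^2 = \left|\langle\alpha,\beta|\psi\rangle\right|^2 = Q(\alpha,\beta), \qquad (1)$$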

which is simply the two-mode Q-function of the state (we neglect the usual normalization factors of $1/\pi$). Moreover, the marginal probability that Alice detects zero photons,

$$P_{\alpha}(0) = \langle\alpha|\hat\rho_A|\alpha\rangle = Q_A(\alpha), \qquad (2)$$

is just the Q-function of her reduced state, $\hat\rho_A = \mathrm{Tr}_B(|\psi\rangle\langle\psi|)$, and Bob's probability $Q_B(\beta)$ is defined similarly. Here $\mathrm{Tr}_B$ is a trace over Bob's mode. If Alice and Bob choose their settings randomly from the sets $\{\alpha, \alpha'\}$ and $\{\beta, \beta'\}$ respectively, the CHSH inequality [28] for this zero/non-zero test can then be expressed entirely in terms of Q-functions as follows

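$$|\mathcal{B}_Q| = \left|E(\alpha,\beta) + E(\alpha,\beta') + E(\alpha',\beta) - E(\alpha',\beta')\right| \le 2, \qquad (3)$$

where $E(\alpha,\beta) = 1 - 2Q_A(\alpha) - 2Q_B(\beta) + 4Q(\alpha,\beta)$ is the correlation function of the $\pm 1$ outcomes.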
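To make the zero/non-zero test concrete, here is a minimal numerical sketch that evaluates the CHSH combination for a pure two-mode state given by a truncated amplitude matrix `c[m, n]`, so that $|\psi\rangle = \sum_{mn} c_{mn}|m,n\rangle$. It assumes the coherent-state overlaps $\langle\alpha|n\rangle = e^{-|\alpha|^2/2}(\alpha^*)^n/\sqrt{n!}$ and the correlator $E = 1 - 2Q_A - 2Q_B + 4Q$ for $\pm 1$ outcomes, with factors of $1/\pi$ dropped. The helper names and the example settings are illustrative assumptions, not the optimized settings used for the figures.

```python
import numpy as np
from math import factorial, sqrt

def coherent_overlaps(alpha: complex, dim: int) -> np.ndarray:
    """Vector of <alpha|n> for n = 0..dim-1."""
    norms = np.sqrt([float(factorial(k)) for k in range(dim)])
    powers = np.conj(alpha) ** np.arange(dim)
    return np.exp(-abs(alpha) ** 2 / 2) * powers / norms

def q_functions(c: np.ndarray, alpha: complex, beta: complex):
    """Joint Q(alpha, beta) and marginals Q_A(alpha), Q_B(beta) of sum c[m,n]|m,n>."""
    v = coherent_overlaps(alpha, c.shape[0])
    w = coherent_overlaps(beta, c.shape[1])
    q_joint = abs(v @ c @ w) ** 2         # |<alpha, beta|psi>|^2
    q_a = float(np.sum(abs(v @ c) ** 2))  # <alpha|rho_A|alpha>
    q_b = float(np.sum(abs(c @ w) ** 2))  # <beta|rho_B|beta>
    return q_joint, q_a, q_b

def chsh_zero_nonzero(c, a, a2, b, b2) -> float:
    """B_Q = E(a,b) + E(a,b2) + E(a2,b) - E(a2,b2) with E = 1 - 2Q_A - 2Q_B + 4Q."""
    def corr(x, y):
        q, qa, qb = q_functions(c, x, y)
        return 1 - 2 * qa - 2 * qb + 4 * q
    return corr(a, b) + corr(a, b2) + corr(a2, b) - corr(a2, b2)

# Example state sqrt(3)/2 |0,0> + 1/2 |1,1> with illustrative (not optimized) settings.
c = np.zeros((2, 2))
c[0, 0], c[1, 1] = sqrt(0.75), 0.5
b_q = chsh_zero_nonzero(c, 0.0, 0.45, 0.0, -0.45)
print(round(b_q, 2))  # 2.34
```

Working with the amplitude matrix keeps all three Q-functions as simple vector-matrix products; for mixed states one would replace them with traces against the density matrix.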

Another simple way to obtain two outcomes is for Alice (Bob) to assign the outcome $+1$ when an even number of photons is transmitted and $-1$ when an odd number is transmitted. This even/odd test can also be related directly to a quasi-probability distribution, since the quantum correlation function is given by the two-mode Wigner function

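$$E(\alpha,\beta) = \langle\psi|\,\hat D(\alpha)(-1)^{\hat n_A}\hat D^\dagger(\alpha)\otimes\hat D(\beta)(-1)^{\hat n_B}\hat D^\dagger(\beta)\,|\psi\rangle = \frac{\pi^2}{4}\,W(\alpha,\beta). \qquad (4)$$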

The CHSH inequality for this test is then

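$$|\mathcal{B}_W| = \frac{\pi^2}{4}\left|W(\alpha,\beta) + W(\alpha,\beta') + W(\alpha',\beta) - W(\alpha',\beta')\right| \le 2, \qquad (5)$$

with the settings chosen from the same sets as before.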
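The even/odd correlator can also be evaluated directly from photon-number statistics, which gives a useful cross-check. The sketch below assumes a pure state with amplitudes `c[m, n]` truncated at a finite photon number, builds displacement operators as matrix exponentials of the truncated generator $\alpha\hat a^\dagger - \alpha^*\hat a$ (accurate only while the state and the displacements stay well below the cutoff), and sums $(-1)^{n_A+n_B}P(n_A,n_B)$ with $P(n_A,n_B) = |\langle n_A,n_B|\hat D^\dagger(\alpha)\hat D^\dagger(\beta)|\psi\rangle|^2$. For a two-mode squeezed vacuum with gain $g$, the result should agree with the Gaussian form $\exp[-2\cosh(2g)(|\alpha|^2+|\beta|^2) + 4\sinh(2g)\,\mathrm{Re}(\alpha\beta)]$, i.e. $(\pi^2/4)W(\alpha,\beta)$. The function names are illustrative.

```python
import numpy as np
from scipy.linalg import expm

def displacement(alpha: complex, dim: int) -> np.ndarray:
    """Truncated D(alpha) = exp(alpha a^dag - alpha^* a) on the Fock basis 0..dim-1."""
    a = np.diag(np.sqrt(np.arange(1, dim)), k=1)  # annihilation operator
    return expm(alpha * a.conj().T - np.conj(alpha) * a)

def parity_correlation(c: np.ndarray, alpha: complex, beta: complex) -> float:
    """E(alpha, beta) = sum (-1)^(nA+nB) P(nA, nB) for the pure state sum c[m,n]|m,n>."""
    d_a = displacement(alpha, c.shape[0])
    d_b = displacement(beta, c.shape[1])
    # (D_A^dag x D_B^dag)|psi> has amplitude matrix D_A^dag c (D_B^dag)^T = D_A^dag c conj(D_B)
    amp = d_a.conj().T @ c @ d_b.conj()
    probs = np.abs(amp) ** 2
    signs = np.outer((-1) ** np.arange(c.shape[0]), (-1) ** np.arange(c.shape[1]))
    return float(np.sum(signs * probs))

def tmsv_amplitudes(g: float, dim: int) -> np.ndarray:
    """Diagonal amplitude matrix tanh^n(g)/cosh(g) of a two-mode squeezed vacuum."""
    t = np.tanh(g)
    return np.diag(np.sqrt(1 - t ** 2) * t ** np.arange(dim))

g, alpha, beta = 0.4, 0.3 + 0.1j, -0.2 + 0.25j
numeric = parity_correlation(tmsv_amplitudes(g, 40), alpha, beta)
closed = np.exp(-2 * np.cosh(2 * g) * (abs(alpha) ** 2 + abs(beta) ** 2)
                + 4 * np.sinh(2 * g) * np.real(alpha * beta))
print(abs(numeric - closed) < 1e-8)  # True
```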

Perhaps the simplest way to generalize the above tests is to extend the number of outcomes beyond two. This requires the general probability of detecting arbitrary photon numbers $n_A$ and $n_B$, which for a general state $\hat\rho$ reads

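$$P_{\alpha\beta}(n_A,n_B) = \langle n_A,n_B|\hat D^\dagger(\alpha)\otimes\hat D^\dagger(\beta)\,\hat\rho\,\hat D(\alpha)\otimes\hat D(\beta)|n_A,n_B\rangle = \sum_{k,l,k',l'}\rho_{kl,k'l'}\,D_{n_A k}(-\alpha)\,D^*_{n_A k'}(-\alpha)\,D_{n_B l}(-\beta)\,D^*_{n_B l'}(-\beta), \qquad (6)$$

where $\rho_{kl,k'l'} = \langle k,l|\hat\rho|k',l'\rangle$ and $D_{mn}(\alpha) = \langle m|\hat D(\alpha)|n\rangle$ is the transition element of the displacement operator between two Fock states,

$$D_{mn}(\alpha) = \begin{cases} \sqrt{\dfrac{n!}{m!}}\,\alpha^{m-n}\,e^{-|\alpha|^2/2}\,L_n^{(m-n)}(|\alpha|^2), & m \ge n, \\[6pt] \sqrt{\dfrac{m!}{n!}}\,(-\alpha^*)^{n-m}\,e^{-|\alpha|^2/2}\,L_m^{(n-m)}(|\alpha|^2), & m < n, \end{cases} \qquad (7)$$

with $L_n^{(k)}$ the generalized Laguerre polynomials.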
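The Laguerre form of the transition elements can be checked numerically. The sketch below, a minimal illustration assuming SciPy is available, evaluates the standard Cahill-Glauber expression with `scipy.special.eval_genlaguerre` for both orderings of $m$ and $n$, and compares it against a truncated matrix exponential of $\alpha\hat a^\dagger - \alpha^*\hat a$, whose low-lying elements converge to the exact ones when the cutoff is large. The function names are illustrative.

```python
import numpy as np
from math import factorial
from scipy.linalg import expm
from scipy.special import eval_genlaguerre

def displacement_element(m: int, n: int, alpha: complex) -> complex:
    """<m|D(alpha)|n> via generalized Laguerre polynomials L_k^(j)(|alpha|^2)."""
    x = abs(alpha) ** 2
    damping = np.exp(-x / 2)
    if m >= n:
        return (np.sqrt(factorial(n) / factorial(m)) * alpha ** (m - n)
                * damping * eval_genlaguerre(n, m - n, x))
    return (np.sqrt(factorial(m) / factorial(n)) * (-np.conj(alpha)) ** (n - m)
            * damping * eval_genlaguerre(m, n - m, x))

def truncated_displacement(alpha: complex, dim: int) -> np.ndarray:
    """exp(alpha a^dag - alpha^* a) on a Fock space truncated at dim - 1 photons."""
    a = np.diag(np.sqrt(np.arange(1, dim)), k=1)
    return expm(alpha * a.conj().T - np.conj(alpha) * a)

alpha = 0.7 - 0.4j
reference = truncated_displacement(alpha, 60)
closed = np.array([[displacement_element(m, n, alpha) for n in range(6)] for m in range(6)])
print(np.max(np.abs(closed - reference[:6, :6])) < 1e-10)  # True
```

The closed form avoids any truncation error, which matters when these elements are summed over many Fock states.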

Using Eq. (6) we can evaluate inequalities in the scenario with two settings and $d = 3$ outcomes per party. The facet-defining inequalities here are the CGLMP inequalities [39], together with liftings of the CHSH inequality. Instead of separating the results into zero/non-zero outcomes as before, we now separate them into zero photons, a single photon, and two or more photons, which form the three outcomes per party, labelled $0$, $1$ and $2$. The CGLMP inequality for this scenario may be written as

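$$I_3 = P(A_1 = B_1) + P(B_1 = A_2 + 1) + P(A_2 = B_2) + P(B_2 = A_1) - P(A_1 = B_1 - 1) - P(B_1 = A_2) - P(A_2 = B_2 - 1) - P(B_2 = A_1 - 1) \le 2, \qquad (8)$$

where $A_1, A_2$ ($B_1, B_2$) denote Alice's (Bob's) outcomes for the settings $\alpha, \alpha'$ ($\beta, \beta'$), all equalities are taken modulo 3, and

$$P(A_a = B_b + k) = \sum_{j=0}^{2} P(A_a = j + k \bmod 3,\; B_b = j), \qquad (9)$$

with the joint outcome probabilities obtained from Eq. (6) by summing over all photon numbers $n \ge 2$ for the outcome $2$.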
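The coarse-graining into three outcomes and the CGLMP combination can be written out explicitly. The sketch below assumes a joint photon-number distribution `p_joint[nA, nB]` that is already normalized on its truncated support (so the last bin absorbs all photon numbers of two or more), and one 3×3 outcome table per setting pair, with Alice's settings indexed 1, 2 for $\alpha, \alpha'$ and Bob's 1, 2 for $\beta, \beta'$. The expression follows the standard $d = 3$ CGLMP form with all equalities taken modulo 3; the function names are illustrative.

```python
import numpy as np

def coarse_grain(p_joint: np.ndarray) -> np.ndarray:
    """Map P[nA, nB] onto outcomes 0 (no photon), 1 (one photon), 2 (two or more)."""
    bins = [slice(0, 1), slice(1, 2), slice(2, None)]
    return np.array([[p_joint[ba, bb].sum() for bb in bins] for ba in bins])

def prob_shift(p: np.ndarray, k: int) -> float:
    """P(A = B + k mod 3) for a 3x3 outcome table p[a, b]."""
    return float(sum(p[(j + k) % 3, j] for j in range(3)))

def cglmp3(p11, p12, p21, p22) -> float:
    """I_3 for d = 3; p_ab is the outcome table for Alice's setting a, Bob's setting b.
    Local models satisfy I_3 <= 2."""
    plus = (prob_shift(p11, 0)      # P(A1 = B1)
            + prob_shift(p21, 2)    # P(B1 = A2 + 1), i.e. A2 = B1 - 1
            + prob_shift(p22, 0)    # P(A2 = B2)
            + prob_shift(p12, 0))   # P(B2 = A1)
    minus = (prob_shift(p11, 2)     # P(A1 = B1 - 1)
             + prob_shift(p21, 0)   # P(B1 = A2)
             + prob_shift(p22, 2)   # P(A2 = B2 - 1)
             + prob_shift(p12, 1))  # P(B2 = A1 - 1), i.e. A1 = B2 + 1
    return plus - minus

# A deterministic local strategy (both parties always output 0) saturates the local bound.
local = np.zeros((3, 3))
local[0, 0] = 1.0
print(cglmp3(local, local, local, local))  # 2.0
```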

Notice that all of the photon-counting Bell tests described above take into account every possible experimental outcome, including cases where photons are lost, so that no post-selection or fair-sampling assumptions are required. These tests can therefore, in principle, close the detection loophole without any modification.

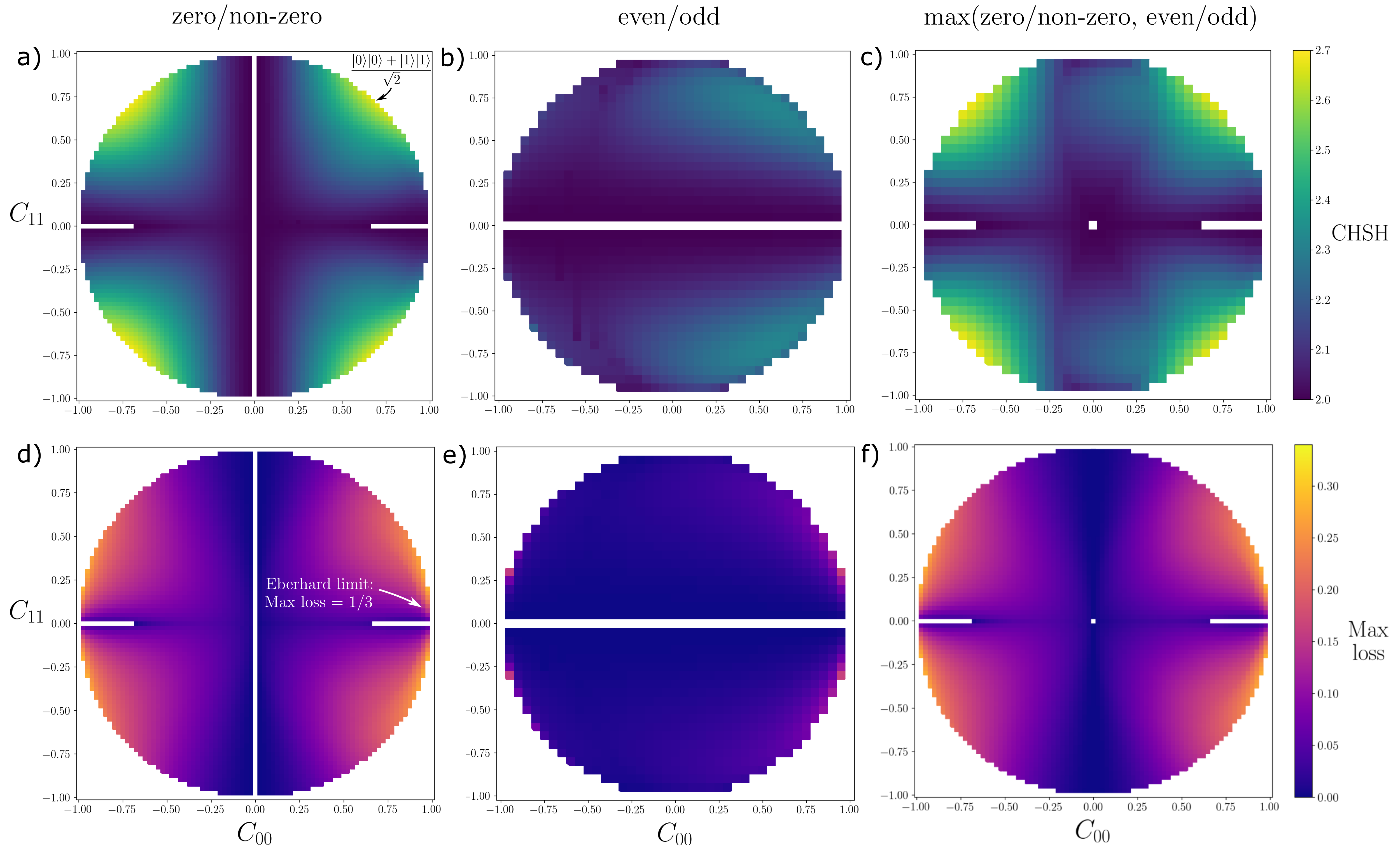

To determine whether such tests work as a universal test for entanglement verification in the photon-number regime, we consider states of the form $|\psi\rangle = \sum_{m,n} c_{mn}|m,n\rangle$. We first considered perfectly correlated states, $|\psi\rangle = \sum_n c_n|n,n\rangle$, and performed an extensive numerical search over complex values of the coefficients $c_n$. By numerically maximizing the CHSH violation over all four complex displacements, we found that the tests proved entanglement for the vast majority of states, the only exceptions being very weakly entangled states, i.e. those close to separable states. We therefore conjecture that the photon-counting Bell tests work for all perfectly correlated pure entangled states, and since these tests do not require any post-selection or filtering of results, they can in principle close the detection loophole. To illustrate this point, Fig. 2 shows the results for states of up to two photons, $|\psi\rangle = c_0|0,0\rangle + c_1|1,1\rangle + c_2|2,2\rangle$, with real coefficients. Two of the coefficients are varied while the third is fixed by the normalization condition $c_0^2 + c_1^2 + c_2^2 = 1$. The positive square root can be taken without loss of generality, since choosing the negative root is equivalent to flipping the signs of all other coefficients, which changes the state only by a global phase.

Fig. 2(a) shows the CHSH value for the zero/non-zero test. The test succeeds almost everywhere, the notable exception being states with $c_0 = 0$, i.e. states with no vacuum component. The test also fails for states close to the vacuum with no single-photon component ($c_0 \approx 1$, $c_1 = 0$). Everywhere else this test works well and can yield fairly high violations of local realism. The greatest violation, 2.69, is found for states that are maximally entangled within a two-dimensional subspace; note, however, that the basis states are not qubits but Fock states of infinite-dimensional modes. Fig. 2(b) shows the CHSH value for the even/odd test, which is much weaker than the zero/non-zero test. One advantage, however, is that, unlike the zero/non-zero test, it can succeed for states with no vacuum component ($c_0 = 0$). This test instead fails, as expected, for states with a definite photon-number parity, e.g. for $c_1 = 0$. Performing both tests then eliminates the weaknesses of each, leading to fairly large CHSH violations for the vast majority of photon-number-correlated states, as shown in Fig. 2(c). In contrast, states without perfect correlation, with $c_{mn} \neq 0$ for some $m \neq n$, cannot always be verified by these tests. In general, the stronger the entanglement and the photon-number correlations, the more likely the tests are to succeed.

III Loss Tolerance

The amount of CHSH violation does not give a complete picture, however. A crucial factor for experiments is how tolerant the Bell inequality violation is to photonic losses. These losses may be modelled by interfering the state with vacuum on a fictitious beam splitter of reflectivity $1 - \eta$ and tracing out the reflected beam, which is equivalent to each photon being transmitted with probability $\eta$. When these losses are taken to occur solely after the displacement operation, $\eta$ can be interpreted as the detection efficiency of the two detectors. Fig. 2(d) shows the loss tolerance of the zero/non-zero test for the two-photon states. Interestingly, as one approaches the vacuum state $|0,0\rangle$, the loss tolerance approaches $\eta = 2/3$, exactly the Eberhard limit. Placing the losses in the source, i.e. before the displacement operation, leads to very similar conclusions, with only a slightly stricter limit on $\eta$. From a mathematical point of view, the fact that we can reach the Eberhard limit is not too surprising, since in this limit the state can be approximated by a state of the form $(|0,0\rangle + \epsilon|1,1\rangle)/\sqrt{1+\epsilon^2}$ with $\epsilon \ll 1$, which is mathematically identical to the two-qubit state originally considered by Eberhard. However, it is interesting that this limit can be approached through a completely different measurement scheme, with routine optical devices [40, 41], and with entanglement in photon number rather than in polarization or spin. The even/odd test has a much worse loss tolerance, as seen in Fig. 2(e). Although it also displays Eberhard-level loss tolerance in the same limit, its tolerance drops rapidly away from the vicinity of the vacuum state $|0,0\rangle$, so that this region is barely visible in the figure.
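Because photon counting after a pure-loss channel is equivalent to binomially thinning the ideal photon counts, detector losses (those occurring after the displacement) can be applied directly to the measured photon-number statistics: each photon survives independently with probability $\eta$, so $P'(k) = \sum_n \binom{n}{k}\eta^k(1-\eta)^{n-k}P(n)$ on each mode. The sketch below assumes a truncated joint distribution `p_joint[nA, nB]` and identical efficiencies on both sides. It does not cover losses in the source, which act before the displacement and require the full density matrix. The names are illustrative.

```python
import numpy as np
from math import comb

def thinning_matrix(eta: float, dim: int) -> np.ndarray:
    """T[k, n] = probability that k of n photons survive a transmission eta."""
    t = np.zeros((dim, dim))
    for n in range(dim):
        for k in range(n + 1):
            t[k, n] = comb(n, k) * eta ** k * (1 - eta) ** (n - k)
    return t

def apply_detection_loss(p_joint: np.ndarray, eta: float) -> np.ndarray:
    """Joint photon-number distribution seen by detectors of efficiency eta."""
    t_a = thinning_matrix(eta, p_joint.shape[0])
    t_b = thinning_matrix(eta, p_joint.shape[1])
    return t_a @ p_joint @ t_b.T

# A photon pair |1,1>: both photons are registered with probability eta^2.
pair = np.zeros((2, 2))
pair[1, 1] = 1.0
lossy = apply_detection_loss(pair, 0.9)
print(np.round(lossy, 2))  # P(0,0) = 0.01, P(0,1) = P(1,0) = 0.09, P(1,1) = 0.81
```

Note how a lost photon turns a "non-zero" event into a "zero" event only when every photon in that mode is lost, which is one way to see why the zero/non-zero test tolerates loss better than the even/odd test.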

As a concrete example, consider the two-mode squeezed vacuum (TMSV) state $|\mathrm{TMSV}\rangle = \frac{1}{\cosh g}\sum_{n=0}^{\infty}\tanh^n g\,|n,n\rangle$ with parametric gain $g$, which is in principle an infinite-dimensional entangled state. Photon-counting Bell tests were considered for this state in the early papers [29, 30, 31, 32], although they did not find the greatest violation of the Bell inequalities and did not recognize its large loss tolerance in the low-squeezing limit $g \to 0$.

The relevant Q-functions for the TMSV state are

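$$Q(\alpha,\beta) = \frac{1}{\cosh^2 g}\exp\!\left[-|\alpha|^2 - |\beta|^2 + 2\tanh g\,\mathrm{Re}(\alpha\beta)\right], \qquad (10)$$

$$Q_A(\alpha) = \frac{1}{\cosh^2 g}\exp\!\left(-\frac{|\alpha|^2}{\cosh^2 g}\right), \qquad (11)$$

with $Q_B(\beta)$ defined analogously, while the two-mode Wigner function is

$$W(\alpha,\beta) = \frac{4}{\pi^2}\exp\!\left[-2\cosh(2g)\left(|\alpha|^2 + |\beta|^2\right) + 2\sinh(2g)\left(\alpha\beta + \alpha^*\beta^*\right)\right], \qquad (12)$$

so that the even/odd correlation function of Eq. (4) is

$$E(\alpha,\beta) = \exp\!\left[-2\cosh(2g)\left(|\alpha|^2 + |\beta|^2\right) + 4\sinh(2g)\,\mathrm{Re}(\alpha\beta)\right]. \qquad (13)$$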
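The Gaussian closed forms for the TMSV Q-functions follow from $\langle\alpha,\beta|\mathrm{TMSV}\rangle = \cosh^{-1}(g)\,e^{-(|\alpha|^2+|\beta|^2)/2}\exp(\tanh g\;\alpha^*\beta^*)$ and are easy to check against a direct sum over Fock states. The sketch below assumes the state $\cosh^{-1}(g)\sum_n \tanh^n(g)|n,n\rangle$, drops the $1/\pi$ normalization as in the text, and truncates the sums at a finite photon number. The closed forms it tests are $Q = \cosh^{-2}g\,\exp[-|\alpha|^2-|\beta|^2+2\tanh g\,\mathrm{Re}(\alpha\beta)]$ and $Q_A = \cosh^{-2}g\,\exp(-|\alpha|^2/\cosh^2 g)$; the function names are illustrative.

```python
import numpy as np
from math import factorial

def q_tmsv(g, alpha, beta):
    """Closed-form joint Q-function of the TMSV (1/pi^2 factor dropped)."""
    return np.exp(-abs(alpha) ** 2 - abs(beta) ** 2
                  + 2 * np.tanh(g) * np.real(alpha * beta)) / np.cosh(g) ** 2

def qa_tmsv(g, alpha):
    """Closed-form marginal: a thermal state with mean photon number sinh^2 g."""
    c = 1 / np.cosh(g) ** 2
    return c * np.exp(-c * abs(alpha) ** 2)

def q_tmsv_fock(g, alpha, beta, dim=80):
    """The same two quantities from truncated Fock-basis sums."""
    n = np.arange(dim)
    fact = np.array([float(factorial(k)) for k in range(dim)])
    c_n = np.tanh(g) ** n / np.cosh(g)
    # <alpha, beta|TMSV> = exp(-(|a|^2 + |b|^2)/2) * sum_n c_n (a* b*)^n / n!
    overlap = np.sum(c_n * np.conj(alpha * beta) ** n / fact)
    q_joint = np.exp(-abs(alpha) ** 2 - abs(beta) ** 2) * abs(overlap) ** 2
    # rho_A = sum_n c_n^2 |n><n| and |<alpha|n>|^2 = exp(-|a|^2) |a|^(2n) / n!
    q_a = np.sum(c_n ** 2 * np.exp(-abs(alpha) ** 2) * abs(alpha) ** (2 * n) / fact)
    return q_joint, q_a

g, alpha, beta = 0.6, 0.4 + 0.3j, 0.5 - 0.2j
q_num, qa_num = q_tmsv_fock(g, alpha, beta)
print(abs(q_num - q_tmsv(g, alpha, beta)) < 1e-12, abs(qa_num - qa_tmsv(g, alpha)) < 1e-12)  # True True
```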

The CHSH parameters for both tests, $\mathcal{B}_Q$ and $\mathcal{B}_W$, were numerically maximized using a combination of differential evolution, Nelder-Mead and simulated annealing methods, based on Eqs. (3) and (5). For the zero/non-zero test, the optimal measurement settings were found to be parametrized by two positive real numbers, which depend on the squeezing $g$, together with fixed relative phases. This choice of relative phases can be understood as maximizing two of the joint Q-functions in Eq. (3) while minimizing a third; the remaining relative phase is then already fixed, which forces the fourth joint Q-function to be minimized. For the even/odd test, the optimal settings have the same form, with similar reasoning, in this case maximizing or minimizing the various Wigner functions rather than the Q-functions.
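A compact version of this optimization for the zero/non-zero test can be sketched with SciPy's Nelder-Mead minimizer alone (the differential evolution and simulated annealing stages are omitted here). The sketch assumes the Gaussian TMSV Q-functions $Q = \cosh^{-2}g\,\exp[-|\alpha|^2-|\beta|^2+2\tanh g\,\mathrm{Re}(\alpha\beta)]$ and $Q_A = \cosh^{-2}g\,\exp(-|\alpha|^2/\cosh^2 g)$, packs the four complex settings into eight real parameters, and restarts from a few random points plus a simple hand-picked seed ($\alpha = \beta = 0$, $\alpha' = -\beta' = 0.45$, an assumption of this sketch rather than the settings found in the text). Since Nelder-Mead is a local method, the returned value is only a lower bound on the true optimum.

```python
import numpy as np
from scipy.optimize import minimize

def q_joint(g, a, b):
    return np.exp(-abs(a) ** 2 - abs(b) ** 2 + 2 * np.tanh(g) * np.real(a * b)) / np.cosh(g) ** 2

def q_marginal(g, a):
    c = 1 / np.cosh(g) ** 2
    return c * np.exp(-c * abs(a) ** 2)

def b_q(params, g):
    """Zero/non-zero CHSH value; params = (Re, Im) of alpha, alpha', beta, beta'."""
    a, a2, b, b2 = params[0::2] + 1j * params[1::2]
    def corr(x, y):
        return 1 - 2 * q_marginal(g, x) - 2 * q_marginal(g, y) + 4 * q_joint(g, x, y)
    return corr(a, b) + corr(a, b2) + corr(a2, b) - corr(a2, b2)

def maximize_b_q(g, n_random=5, seed=1):
    """Best CHSH value found by multi-start Nelder-Mead (a lower bound on the optimum)."""
    rng = np.random.default_rng(seed)
    starts = [np.array([0, 0, 0.45, 0, 0, 0, -0.45, 0])]  # hand-picked seed
    starts += [rng.normal(scale=0.5, size=8) for _ in range(n_random)]
    best = -np.inf
    for x0 in starts:
        res = minimize(lambda p: -b_q(p, g), x0, method="Nelder-Mead",
                       options={"maxiter": 4000, "xatol": 1e-8, "fatol": 1e-12})
        best = max(best, -res.fun)
    return best

g = np.arctanh(0.5)
best = maximize_b_q(g)
print(f"B_Q at tanh(g) = 0.5: {best:.3f}")
```

Restarting from several points is a cheap guard against local maxima; a global method such as differential evolution is the more systematic choice.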

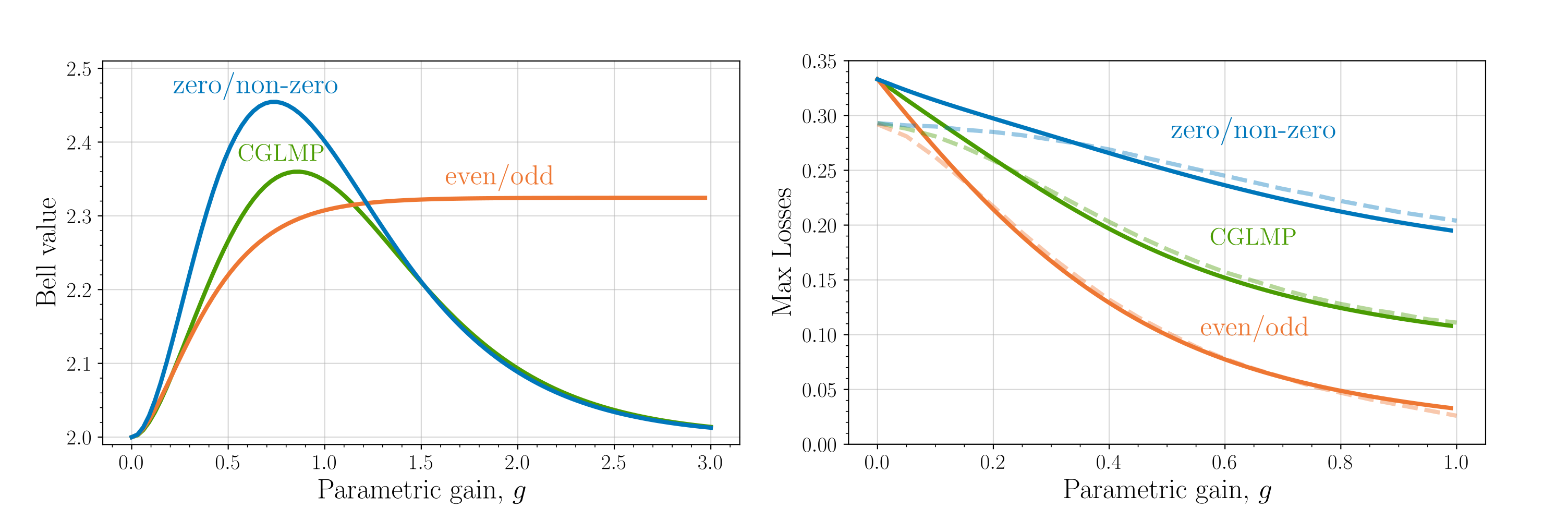

The results are shown in Fig. 3(a) as a function of the parametric gain $g$. The zero/non-zero test is optimal in the more experimentally relevant low-gain regime, where $\mathcal{B}_Q > \mathcal{B}_W$, reaching its maximum value at intermediate gain. Increasing $g$ further, the zero/non-zero test weakens because zero-photon events become increasingly unlikely. The even/odd test dominates in this regime ($\mathcal{B}_W > \mathcal{B}_Q$) and reaches its maximum value in the limit of high gain, $g \to \infty$. Since the TMSV state becomes the original EPR state in this limit, in theory the even/odd test becomes a solution to the EPR paradox as originally proposed. However, as pointed out in the original analysis [30], it is difficult both to reach this limit and to perform the relevant measurements in it. These results further highlight the general features noted above: the zero/non-zero test works better for low photon-number states, i.e. low squeezing, and the even/odd test works better for high photon-number states, i.e. high squeezing. We also consider the CGLMP inequality with three outputs, distinguishing between 0, 1, and 2 or more photons. This performs similarly to the zero/non-zero test, although the Bell inequality violation is slightly lower. Furthermore, Fig. 3(b) shows that all three tests reach the Eberhard-level loss tolerance $\eta \to 2/3$ as $g \to 0$, although the loss tolerance of the zero/non-zero test decays much less rapidly than those of the other two, making it more practical for testing the non-locality of the two-mode squeezed vacuum. The reason is that losing a photon is catastrophic for the even/odd test, since it changes an even result into an odd one and vice versa, whereas in the zero/non-zero test a non-zero result can remain non-zero. The fact that the CGLMP inequality also displays Eberhard-level loss tolerance in the low-squeezing regime opens up the possibility of loophole-free Bell tests using higher-dimensional inequalities. 
Unfortunately, its loss tolerance degrades faster with parametric gain than that of the zero/non-zero CHSH test, so it appears to have limited practical use for the two-mode squeezed vacuum in particular.
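The high-gain behaviour of the even/odd test can be illustrated with a simple closed-form ansatz, assumed here for illustration and not the optimized settings behind Fig. 3: $\alpha = \beta = 0$, $\alpha' = \sqrt{J}$, $\beta' = -\sqrt{J}$, with $J > 0$ tuned for each gain. With the TMSV parity correlation $E = \exp[-2\cosh(2g)(|\alpha|^2+|\beta|^2) + 4\sinh(2g)\,\mathrm{Re}(\alpha\beta)]$, this gives $\mathcal{B}_W = 1 + 2e^{-2J\cosh 2g} - e^{-4Je^{2g}}$. For large $g$ this tends to $1 + 2y - y^4$ with $y = e^{-2J\cosh 2g}$, whose maximum over $y$ is $1 + 3\cdot 2^{-4/3} \approx 2.19$. The grid search below is a crude illustration of that trend; the function names are illustrative.

```python
import numpy as np

def parity_corr(g, a, b):
    """Even/odd correlation (pi^2/4) W(alpha, beta) for a TMSV with gain g."""
    return np.exp(-2 * np.cosh(2 * g) * (abs(a) ** 2 + abs(b) ** 2)
                  + 4 * np.sinh(2 * g) * np.real(a * b))

def b_w_ansatz(g, j):
    """B_W for the ansatz alpha = beta = 0, alpha' = sqrt(j), beta' = -sqrt(j)."""
    s = np.sqrt(j)
    return (parity_corr(g, 0, 0) + parity_corr(g, 0, -s)
            + parity_corr(g, s, 0) - parity_corr(g, s, -s))

def best_b_w(g, grid=np.logspace(-8, 0, 4001)):
    """Best ansatz value over a logarithmic grid of J."""
    return max(b_w_ansatz(g, j) for j in grid)

for g in (0.5, 1.0, 2.0, 5.0):
    print(g, round(best_b_w(g), 4))
print("asymptote:", round(1 + 3 * 2 ** (-4 / 3), 4))  # asymptote: 2.1906
```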

The Eberhard limit is reached when we consider detector losses, i.e. losses occurring after the displacement operation. We have also considered losses in the source, i.e. losses occurring before the displacement operation; the resulting loss tolerance is shown as dashed lines in Fig. 3(b). The conclusions are largely unchanged, except that the maximum loss tolerance reached is slightly worse.

IV Utility for QKD

As an example of the utility of this Eberhard limit for photon counting, we consider a DI-QKD protocol based on these tests, similar to that considered in [37]. There, generalized Holland-Burnett states (i.e. the output of two Fock states interfering on a beam splitter) are distributed between two parties, and the zero/non-zero Bell test is used to establish a shared secret key. We now consider a non-maximally entangled version of this state, controlled by a single parameter, and demonstrate that non-maximal entanglement can yield increased key rates and loss tolerances for key distribution. At a symmetric value of the parameter, the state leads to the same Bell inequality violation and loss tolerance as the state considered in [37]. We have already seen that lowering the parameter from this symmetric value can increase the loss tolerance of the Bell tests, approaching the Eberhard limit, but it remains to be seen whether it can also increase the loss tolerance of the DI-QKD protocol as a whole, since in general the loss tolerance of DI-QKD is even stricter than that of the loophole-free Bell test itself.

We consider a simple DI-QKD scenario based on CHSH violation, as outlined in [6, 7, 8, 11]. In addition to the two measurement settings per party used in the CHSH test, $\{\alpha, \alpha'\}$ and $\{\beta, \beta'\}$, Alice now uses a third setting $\alpha''$. Each time the entangled state is distributed, Alice performs a measurement with a setting chosen randomly from her three settings, and Bob chooses randomly between his two settings. After $N$ trials, they publicly announce the settings used in each trial. The results obtained with settings from the sets $\{\alpha, \alpha'\}$ and $\{\beta, \beta'\}$ are also announced publicly and used to evaluate the CHSH inequality, while the results obtained with the combination $\alpha''$ and $\beta$ are kept secret and form the raw key shared between Alice and Bob. Violation of the CHSH inequality guarantees that Eve cannot obtain full information about the key, providing information-theoretically secure communication when the key is used in a one-time pad.
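The bookkeeping of this protocol can be sketched as a short simulation of the setting choices and the sifting step. The sketch assumes uniform, independent setting choices, uses the hypothetical label `alpha_pp` for Alice's key setting $\alpha''$, and assumes the key is formed from rounds in which Bob used $\beta$. Rounds in which Alice used $\alpha''$ and Bob used $\beta'$ contribute to neither the key nor the CHSH estimate in this simple version and are discarded. With uniform choices one expects roughly 1/6 of the rounds to be key rounds and 2/3 to be CHSH rounds.

```python
import random
from collections import Counter

ALICE_SETTINGS = ("alpha", "alpha_p", "alpha_pp")  # alpha_pp: key setting (hypothetical label)
BOB_SETTINGS = ("beta", "beta_p")
KEY_PAIR = ("alpha_pp", "beta")                    # assumed key-generating combination

def sift(n_rounds: int, seed: int = 0) -> Counter:
    """Classify rounds once the settings have been announced."""
    rng = random.Random(seed)
    labels = Counter()
    for _ in range(n_rounds):
        a, b = rng.choice(ALICE_SETTINGS), rng.choice(BOB_SETTINGS)
        if (a, b) == KEY_PAIR:
            labels["key"] += 1        # kept secret: raw key
        elif a != "alpha_pp":
            labels["chsh"] += 1       # announced: CHSH estimate
        else:
            labels["discarded"] += 1  # alpha_pp with beta_p: unused here
    return labels

counts = sift(60_000)
print({k: round(v / 60_000, 3) for k, v in counts.items()})
```

In practice the setting probabilities would be biased towards the key pair to raise the key rate, at the cost of fewer rounds for estimating the CHSH value.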

After performing classical error correction and privacy amplification on their raw key of length $n$, they obtain a final key of reduced length $Kn$, where the key rate $K$ is given by [42]

$$K = I_{AB} - I_{BE}. \qquad (14)$$

Here $I_{AB}$ is the mutual information between Alice and Bob (when using the settings $\alpha''$ and $\beta$), specifying the fraction of bits that are kept after error correction, while $I_{BE}$ is the mutual information between Bob and Eve, giving the fraction of bits that are sacrificed for privacy amplification. Assuming Eve performs optimal collective attacks against the protocol, the key rate can be lower bounded [8, 9, 10] by

$$K \ge 1 - h\!\left(\frac{1 + \sqrt{(S/2)^2 - 1}}{2}\right) - H(B|A), \qquad (15)$$

where $h(p) = -p\log_2 p - (1-p)\log_2(1-p)$ is the binary entropy function, $S > 2$ is the observed CHSH value, and $H(B|A)$ is the conditional entropy of Bob's key outcomes given Alice's. There are also methods for upgrading security against collective attacks to security against the most general coherent attacks [11]. A loophole-free Bell inequality violation is required, but not sufficient, to obtain a positive key rate $K > 0$, since the conditional entropy must also be small. For this reason, the Eberhard limit $\eta = 2/3$ cannot be reached for DI-QKD, but the loss tolerance can still be improved by using non-maximally entangled states.
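The collective-attack bound $K \ge 1 - h\big((1+\sqrt{(S/2)^2-1})/2\big) - H(B|A)$ is straightforward to evaluate once the CHSH value $S$ and the joint distribution of Alice's and Bob's key-round outcomes are known. The sketch below is a minimal implementation under those assumptions: it takes a 2×2 table `p_ab[a, b]` for the key settings, computes $H(B|A) = H(A,B) - H(A)$, and returns zero when $S \le 2$ or when the bound is negative, since no key can then be certified by this bound. The function names are illustrative.

```python
import math
import numpy as np

def binary_entropy(p: float) -> float:
    if p <= 0 or p >= 1:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def entropy(probs) -> float:
    return -sum(x * math.log2(x) for x in probs if x > 0)

def conditional_entropy_b_given_a(p_ab) -> float:
    """H(B|A) = H(A,B) - H(A) for a joint outcome table p_ab[a, b]."""
    p_ab = np.asarray(p_ab, dtype=float)
    return entropy(p_ab.ravel()) - entropy(p_ab.sum(axis=1))

def key_rate_bound(s: float, p_ab) -> float:
    """Lower bound 1 - h((1 + sqrt((S/2)^2 - 1))/2) - H(B|A), floored at zero."""
    if s <= 2:
        return 0.0  # no CHSH violation: this bound certifies no key
    eve = binary_entropy((1 + math.sqrt((s / 2) ** 2 - 1)) / 2)
    return max(0.0, 1 - eve - conditional_entropy_b_given_a(p_ab))

perfect = [[0.5, 0.0], [0.0, 0.5]]  # perfectly correlated, unbiased key bits
for s in (2.0, 2.5, 2 * math.sqrt(2)):
    print(round(s, 3), round(key_rate_bound(s, perfect), 3))
```

Losses enter this bound twice: they lower $S$ and they raise $H(B|A)$, which is why the DI-QKD threshold is stricter than the Bell-test threshold.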

By numerically maximizing Eq. (15) with respect to the three settings of Alice and the two settings of Bob, we calculated the key rate that Alice and Bob can obtain by distributing this family of states, for varying levels of photonic loss. The results for the maximally entangled state are shown as the blue line in Fig. 4: beyond 7% loss the key rate drops to zero, implying a rather strict efficiency requirement of 93%. However, this can be improved considerably by instead distributing non-maximally entangled states. For each value of loss we found the optimal value of the state parameter and calculated the corresponding key rate, shown as the orange line in Fig. 4. Compared to maximally entangled states, the loss tolerance increases from 7% to 10.5%, a 50% improvement.

V Conclusion

We have demonstrated the Eberhard limit for photon-counting Bell tests, showing that detection-loophole-free Bell tests are possible with efficiencies as low as 2/3 for photon-number entangled states, which are generally multiphoton. We have shown this for two types of CHSH test, based on discriminating between zero and non-zero photons and between even and odd numbers of photons respectively, and also for CGLMP inequalities with more than two outputs. This opens up loophole-free Bell tests, and applications such as QKD, to more exotic states and types of entanglement than the typical polarization/spin singlet states. The Eberhard limit for CGLMP inequalities could also allow detection-loophole-free tests other than CHSH for the first time. The CHSH tests considered here have been shown numerically to work for states that are perfectly correlated in photon number, although they can sometimes fail for states without perfect correlation. Generally, the more strongly correlated in photon number a state is, the more likely it is to violate these photon-counting Bell inequalities, although a complete set of criteria identifying which states violate these tests is still lacking.

To highlight the utility of Eberhard-level loss tolerance for photon-counting Bell tests, we have shown that the loss tolerances and key rates of DI-QKD protocols can be substantially improved by distributing photon-number entangled states which are not maximally entangled. DI-QKD represents the ultimate level of cryptography, immune to any eavesdropping at either the source or the detectors, and DI-QKD proposals based on photon-number entanglement are an active area of research [37], since they have been shown to yield high key rates and immunity to large transmission losses. Our work thus represents a key step toward the realization of this ambitious goal.

Acknowledgements.

T.M. and M.S. were supported by the Foundation for Polish Science “First Team” project No. POIR.04.04.00-00-220E/16-00 (originally FIRST TEAM/2016-2/17). A.M.-N. and M.S. were supported by the National Science Centre “Sonata Bis” project No. 2019/34/E/ST2/00273. M.M. and M.S. were supported by the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie project “AppQInfo” No. 956071. T.M. and M.S. were supported by the National Science Centre within the QuantERA II Programme that has received funding from the European Union’s Horizon 2020 research and innovation programme under Grant Agreement No. 101017733, project “PhoMemtor” No. 2021/03/Y/ST2/00177.

References

- Hensen et al. [2015] B. Hensen, H. Bernien, A. E. Dréau, A. Reiserer, N. Kalb, M. S. Blok, J. Ruitenberg, R. F. L. Vermeulen, R. N. Schouten, C. Abellán, W. Amaya, V. Pruneri, M. W. Mitchell, M. Markham, D. J. Twitchen, D. Elkouss, S. Wehner, T. H. Taminiau, and R. Hanson, Experimental loophole-free violation of a Bell inequality using entangled electron spins separated by 1.3 km (2015), arXiv:1508.05949 [quant-ph].

- Giustina et al. [2015a] M. Giustina, M. A. M. Versteegh, S. Wengerowsky, J. Handsteiner, A. Hochrainer, K. Phelan, F. Steinlechner, J. Kofler, J.-A. Larsson, C. Abellán, W. Amaya, V. Pruneri, M. W. Mitchell, J. Beyer, T. Gerrits, A. E. Lita, L. K. Shalm, S. W. Nam, T. Scheidl, R. Ursin, B. Wittmann, and A. Zeilinger, Significant-loophole-free test of Bell’s theorem with entangled photons, Phys. Rev. Lett. 115, 250401 (2015a).

- Giustina et al. [2015b] M. Giustina, M. A. M. Versteegh, S. Wengerowsky, J. Handsteiner, A. Hochrainer, K. Phelan, F. Steinlechner, J. Kofler, J.-A. Larsson, C. Abellán, W. Amaya, V. Pruneri, M. W. Mitchell, J. Beyer, T. Gerrits, A. E. Lita, L. K. Shalm, S. W. Nam, T. Scheidl, R. Ursin, B. Wittmann, and A. Zeilinger, Significant-loophole-free test of Bell’s theorem with entangled photons, Phys. Rev. Lett. 115, 250401 (2015b).

- Shalm et al. [2015] L. K. Shalm, E. Meyer-Scott, B. G. Christensen, P. Bierhorst, M. A. Wayne, M. J. Stevens, T. Gerrits, S. Glancy, D. R. Hamel, M. S. Allman, K. J. Coakley, S. D. Dyer, C. Hodge, A. E. Lita, V. B. Verma, C. Lambrocco, E. Tortorici, A. L. Migdall, Y. Zhang, D. R. Kumor, W. H. Farr, F. Marsili, M. D. Shaw, J. A. Stern, C. Abellán, W. Amaya, V. Pruneri, T. Jennewein, M. W. Mitchell, P. G. Kwiat, J. C. Bienfang, R. P. Mirin, E. Knill, and S. W. Nam, Strong loophole-free test of local realism, Phys. Rev. Lett. 115, 250402 (2015).

- Brunner et al. [2014] N. Brunner, D. Cavalcanti, S. Pironio, V. Scarani, and S. Wehner, Bell nonlocality, Rev. Mod. Phys. 86, 419 (2014).

- Acín et al. [2006] A. Acín, S. Massar, and S. Pironio, Efficient quantum key distribution secure against no-signalling eavesdroppers, New Journal of Physics 8, 126 (2006).

- Acín et al. [2007] A. Acín, N. Brunner, N. Gisin, S. Massar, S. Pironio, and V. Scarani, Device-independent security of quantum cryptography against collective attacks, Phys. Rev. Lett. 98, 230501 (2007).

- Pironio et al. [2009] S. Pironio, A. Acín, N. Brunner, N. Gisin, S. Massar, and V. Scarani, Device-independent quantum key distribution secure against collective attacks, New Journal of Physics 11, 045021 (2009).

- Masanes et al. [2011] L. Masanes, S. Pironio, and A. Acín, Secure device-independent quantum key distribution with causally independent measurement devices, Nature Communications 2, 10.1038/ncomms1244 (2011).

- Woodhead et al. [2021] E. Woodhead, A. Acín, and S. Pironio, Device-independent quantum key distribution with asymmetric CHSH inequalities, Quantum 5, 443 (2021).

- Vazirani and Vidick [2014] U. Vazirani and T. Vidick, Fully device-independent quantum key distribution, Phys. Rev. Lett. 113, 140501 (2014).

- Primaatmaja et al. [2022] I. W. Primaatmaja, K. T. Goh, E. Y. Z. Tan, J. T. F. Khoo, S. Ghorai, and C. C. W. Lim, Security of device-independent quantum key distribution protocols: a review (2022), arXiv:2206.04960.

- Zapatero et al. [2022] V. Zapatero, T. van Leent, R. Arnon-Friedman, W.-Z. Liu, Q. Zhang, H. Weinfurter, and M. Curty, Advances in device-independent quantum key distribution (2022), arXiv:2208.12842.

- Giovannetti et al. [2011] V. Giovannetti, S. Lloyd, and L. Maccone, Advances in quantum metrology, Nature Photonics 5, 222 (2011).

- Colombo et al. [2022] S. Colombo, E. Pedrozo-Peñafiel, A. F. Adiyatullin, Z. Li, E. Mendez, C. Shu, and V. Vuletić, Time-reversal-based quantum metrology with many-body entangled states, Nature Physics 18, 925 (2022).

- Liu et al. [2021a] J. Liu, M. Zhang, H. Chen, L. Wang, and H. Yuan, Optimal scheme for quantum metrology, Advanced Quantum Technologies 5, 2100080 (2021a).

- Thekkadath et al. [2020] G. S. Thekkadath, M. E. Mycroft, B. A. Bell, C. G. Wade, A. Eckstein, D. S. Phillips, R. B. Patel, A. Buraczewski, A. E. Lita, T. Gerrits, S. W. Nam, M. Stobińska, A. I. Lvovsky, and I. A. Walmsley, Quantum-enhanced interferometry with large heralded photon-number states, npj Quantum Information 6, 10.1038/s41534-020-00320-y (2020).

- Pironio et al. [2010] S. Pironio, A. Acín, S. Massar, A. B. de la Giroday, D. N. Matsukevich, P. Maunz, S. Olmschenk, D. Hayes, L. Luo, T. A. Manning, and C. Monroe, Random numbers certified by Bell’s theorem, Nature 464, 1021 (2010).

- Liu et al. [2021b] W.-Z. Liu, M.-H. Li, S. Ragy, S.-R. Zhao, B. Bai, Y. Liu, P. J. Brown, J. Zhang, R. Colbeck, J. Fan, Q. Zhang, and J.-W. Pan, Device-independent randomness expansion against quantum side information, Nature Physics 17, 448 (2021b).

- Avesani et al. [2021] M. Avesani, H. Tebyanian, P. Villoresi, and G. Vallone, Semi-device-independent heterodyne-based quantum random-number generator, Phys. Rev. Applied 15, 034034 (2021).

- Pearle [1970] P. M. Pearle, Hidden-variable example based upon data rejection, Phys. Rev. D 2, 1418 (1970).

- Clauser and Horne [1974] J. F. Clauser and M. A. Horne, Experimental consequences of objective local theories, Phys. Rev. D 10, 526 (1974).

- Makarov [2009] V. Makarov, Controlling passively quenched single photon detectors by bright light, New Journal of Physics 11, 065003 (2009).

- Lydersen et al. [2010] L. Lydersen, C. Wiechers, C. Wittmann, D. Elser, J. Skaar, and V. Makarov, Hacking commercial quantum cryptography systems by tailored bright illumination, Nature Photonics 4, 686 (2010).

- Eberhard [1993] P. H. Eberhard, Background level and counter efficiencies required for a loophole-free Einstein-Podolsky-Rosen experiment, Phys. Rev. A 47, R747 (1993).

- Giustina et al. [2013] M. Giustina, A. Mech, S. Ramelow, B. Wittmann, J. Kofler, J. Beyer, A. Lita, B. Calkins, T. Gerrits, S. W. Nam, R. Ursin, and A. Zeilinger, Bell violation using entangled photons without the fair-sampling assumption, Nature 497, 227 (2013).

- Zhang et al. [2022] W. Zhang, T. van Leent, K. Redeker, R. Garthoff, R. Schwonnek, F. Fertig, S. Eppelt, W. Rosenfeld, V. Scarani, C. C.-W. Lim, and H. Weinfurter, A device-independent quantum key distribution system for distant users, Nature 607, 687 (2022).

- Clauser et al. [1969] J. F. Clauser, M. A. Horne, A. Shimony, and R. A. Holt, Proposed Experiment to Test Local Hidden-Variable Theories, Phys. Rev. Lett. 23, 880 (1969).

- Banaszek and Wódkiewicz [1996] K. Banaszek and K. Wódkiewicz, Direct Probing of Quantum Phase Space by Photon Counting, Phys. Rev. Lett. 76, 4344 (1996).

- Banaszek and Wódkiewicz [1998] K. Banaszek and K. Wódkiewicz, Nonlocality of the Einstein-Podolsky-Rosen state in the Wigner representation, Phys. Rev. A 58, 4345 (1998).

- Banaszek and Wódkiewicz [1999] K. Banaszek and K. Wódkiewicz, Testing Quantum Nonlocality in Phase Space, Phys. Rev. Lett. 82, 2009 (1999).

- Banaszek et al. [2002] K. Banaszek, A. Dragan, K. Wódkiewicz, and C. Radzewicz, Direct measurement of optical quasidistribution functions: Multimode theory and homodyne tests of Bell’s inequalities, Phys. Rev. A 66, 043803 (2002).

- Jeong et al. [2003] H. Jeong, W. Son, M. S. Kim, D. Ahn, and Č. Brukner, Quantum nonlocality test for continuous-variable states with dichotomic observables, Phys. Rev. A 67, 012106 (2003).

- Lee and Jeong [2011] S.-W. Lee and H. Jeong, High-dimensional bell test for a continuous-variable state in phase space and its robustness to detection inefficiency, Phys. Rev. A 83, 022103 (2011).

- Dastidar and Sarbicki [2022] M. G. Dastidar and G. Sarbicki, Detecting entanglement between modes of light, Phys. Rev. A 105, 062459 (2022).

- Brask and Chaves [2012] J. B. Brask and R. Chaves, Robust nonlocality tests with displacement-based measurements, Phys. Rev. A 86, 010103 (2012).

- Mycroft et al. [2022] M. E. Mycroft, T. McDermott, A. Buraczewski, and M. Stobińska, Proposal for distribution of multi-photon entanglement with TF-QKD rate-distance scaling (2022), arXiv:1812.10935 [quant-ph].

- Ketterer [2016] A. Ketterer, Modular Variables in Quantum Information, Ph.D. thesis, Université Paris 7, Sorbonne Paris Cité (2016).

- Collins et al. [2002] D. Collins, N. Gisin, N. Linden, S. Massar, and S. Popescu, Bell inequalities for arbitrarily high-dimensional systems, Phys. Rev. Lett. 88, 040404 (2002).

- Banaszek et al. [1999] K. Banaszek, C. Radzewicz, K. Wódkiewicz, and J. S. Krasiński, Direct measurement of the Wigner function by photon counting, Phys. Rev. A 60, 674 (1999).

- Nehra et al. [2019] R. Nehra, A. Win, M. Eaton, R. Shahrokhshahi, N. Sridhar, T. Gerrits, A. Lita, S. W. Nam, and O. Pfister, State-independent quantum state tomography by photon-number-resolving measurements, Optica 6, 1356 (2019).

- Devetak and Winter [2005] I. Devetak and A. Winter, Distillation of secret key and entanglement from quantum states, Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 461, 207 (2005).