Earthquake Location and Magnitude Estimation

with Graph Neural Networks

Abstract

We solve the traditional problems of earthquake location and magnitude estimation through a supervised learning approach, where we train a Graph Neural Network to predict estimates directly from input pick data, and each input allows a distinct seismic network with variable number of stations and positions. We train the model using synthetic simulations from assumed travel-time and amplitude-distance attenuation models. The architecture uses one graph to represent the station set, and another to represent the model space. The input includes theoretical predictions of data, given model parameters, and the adjacency matrices of the graphs defined link spatially local elements. As we show, graph convolutions on this combined representation are highly effective at inference, data fusion, and outlier suppression. We compare our results with traditional methods and observe favorable performance.

Index Terms:

Seismic Networks, Graphs, Earthquake CharacterizationI Introduction

Earthquake location and magnitude estimation are two longstanding challenges in seismology. These parameters are estimated for millions of earthquakes every year using data from many regional monitoring networks around the world. Solutions are usually framed in well understood signal processing and Bayesian inverse theory contexts [16]. By their nature, ‘traditional’ inverse methods have certain subjective biases, such as assuming fixed parameter likelihood functions (e.g., Gaussian kernels), stacking estimates over stations, or assuming uncorrelated noise between stations (i.e., neglecting epistemic uncertainties due to physical model errors and local correlated sources of noise). These weaknesses induce errors and biases in catalogs resulting point estimates of source parameters, that are generally weakly correlated in space and time [8].

.

When applied to the same type of traditional inverse problem, Graph Neural Networks (GNN), and graph signal processing in general [13], offer a powerful new approach to arrive at stable global model estimates of parameters (i.e., location and magnitude) through processing complex, noisy data, on irregularly distributed station networks. As a graph is defined as a set of nodes, with an adjacency matrix that specifies which pairs of nodes have a (directed) edge linking them - if the nodes represent data points - the adjacency matrix gives access to an object that behaves in a similar way as a covariance matrix (with off diagonal terms included) encountered in traditional signal processing and Bayesian inverse theory. Since the adjacency matrix links a subset of nearby nodes together, and algorithms are applied to ‘collect and aggregate’ information between local neighborhoods of the graph, these tools allow utilizing local coherency and redundancy between nodes to, for example: denoise and stabilize measurement estimates, hierarchically cluster and weight data to obtain global estimates of model parameters, or suppress the effect of data outliers [4].

We use Graph Neural Networks to solve the traditional point estimation problems of location and magnitude from arrival time and amplitude data. This approach combines the strengths of graphs and the capability of deep learning to predict the target quantities directly from input data, rather than relying on globally optimizing an explicitly posed objective function [2, 11, 18, 12]. Advantages of training a ‘surrogate model’ to predict solutions from data, are many, such as (i) the estimates are available immediately without need to apply an optimizer, (ii) the estimates can be more robust in the presence of high noise and outlier data points, and (iii) the model can be trained to account for variable seismic network geometry.

Finally, as a major component of our architecture, we introduce an important type of graph: the Cartesian product graph of . This graph represents data measured over all stations, and over all points of model space, where the model space is the or . It effectively represents information about all aspects of the problem (observed data, and predicted data, given model parameters). By giving these graphs structure, through local edge schemes that connect nearby points in model space and nearby stations, we allow local interaction between both model space points and station data during iterative graph convolutions. As we show, the pairwise structure is the natural object on which observed data can be parsed, and thus convolving directly on this structure should improve inference.

II Methods

II-A Graph Message Passing

The basic computational unit of a standard GNN is the generic message passing layer. The message passing layer captures the powerful notion of graph convolution (e.g., [6, 1]), wherein the latent states of nodes on graphs are updated based on (learned) mappings from the latent states of neighboring nodes. The operator is generically defined as , and is given as

| (1) |

which maps a latent signal measured on graph (of feature dimension ) to a latent signal measured on graph (of feature dimension ), with directed edges pointing nodes from into . When , this is a bipartite mapping between two distinct graphs; when , it is regular graph convolution within a single graph ( when ), yet either case is easily represented by this operator.

In the forward call (1), on a per-node basis , the latent features of each neighboring node are collected, and transformed by the learnable Fully Connected Network (FCN) layer, , prior to being pooled over the entire neighborhood, , where the pooling is over the node axis (), and is any global pooling operator such as sum-, mean, or max-pool. The pooled aggregate message is then concatenated with the self state of node , and transformed by an additional learnable FCN, , resulting in the updated state on node . Inside the message passing layer , the optional term of represents edge data between two nodes , such as Cartesian offsets of node coordinates (if nodes represent spatially located points), and is an optional ‘global’ summary feature, that can be extracted from the entire graph at each step, using an additional learnable FCN, . By combining these facets, the overall capability of the graph convolution cell is to learn local feature transformations that work well for a wide range of nodes, while also exploiting local information transfer through the adjacency matrix.

II-B Adjacency Matrices

In our method there is one component (or graph) that specifies the station set, , and another that represents the model space, , where we consider either source region or magnitude axis . For either model space, we simply need a (regular or irregular) grid that spans the region of interest. For , we take a collection of 100’s of sparsely distributed points covering the source region of interest, and for the magnitude axis we take a fine spacing of nodes in magnitude (e.g., [-3,7] M with 0.1 or 0.25 M increment).

Rather than leave the station set and model spaces as unordered sets, we give these sets structure by interpreting them as graphs. Specifically, is the station graph, is the source graph, and is the magnitude graph, where we define edge sets, , or equivalently adjacency matrices, that specify which elements in the respective sets are linked. While many edge construction schemes are possible [3], GNN’s often work well for a wide range of adjacency matrix choices [20] because the GNN can learn how to use whichever distribution of graphs it is trained over. For point cloud data, such as represented in and , a natural choice of edge construction scheme is to use K-nearest-neighbor (K-NN) graphs or distance graphs [3], since these favor connecting nearby elements to one another. For , while it is simply a linear grid of nodes, and distance graphs effectively represent 1D convolution kernels over the axis, and are hence also a natural choice.

For our purposes we use instead of distance graphs for all of , , , since these limit the incoming degree of all nodes to , whereas distance graphs can have substantially different degrees across different nodes of the graph. While we did not optimize these choices fully, modest trial and error led to

| (2a) | |||

| (2b) | |||

| (2c) |

where represent the -nearest-neighbors for the input node. Note that, these ‘edge sets’ specify which entries in all possible pairs for a graph of nodes are linked, and that the relationship is generally not symmetric (i.e., does not necessarily imply ). Edge sets are hence equivalent to adjacency matrices, but contain the same data in a more compact form.

We still need to define one essential graph type: the Cartesian product graph of , which has as nodes all pairs of station-model (, ) space coordinates. As discussed earlier, and as highlighted in the next section, it is most naturally all pairs of stations, and model space coordinates, that we can use to parse the data. The graphs already defined can be used directly to construct the Cartesian product graph with two-edge types, given by

| (3a) | |||

| (3b) |

As can be seen in (3), these edge types capture two different types of relationships between nodes on the Cartesian product: connecting either (i) a fixed station, and two neighboring source nodes (or magnitude nodes), or (ii) a fixed source node (or fixed magnitude node), and two neighboring station nodes. Intuitively, the alternative relationships offer complementary insights into the local and global features between different nodes on the graph. While the cardinality of is high (e.g., 250 stations 200 spatial nodes 50,000), the incoming degree of each node (for either edge type) is limited by the choices of for any of the component graphs, , and hence these graphs are highly sparse.

II-C Input Features

The input features we pass to the GNN are defined for arbitrary input pick data sets, where for location, it includes pick times (with assigned phase types), and for magnitude it includes amplitude measurements (with assigned phase types). Each input can be any given station set, , and individual stations in this set can still have missing picks or false picks. For the source location problem our input tensor is defined analogously to the ‘pre-stack’ back-projection metric (e.g., [14]), which, for each spatial coordinate is the measure of how close the nearest arrival, , of phase type on station , is to the theoretical arrival time from that source coordinate. Hence, we compute

| (4) |

where is the theoretical travel time calculator, for phase types , and is a fixed kernel wide enough to capture a range of misfit times appropriate to the scale of the application (e.g., 5 s). Travel times are computed with the Fast Marching Method [15] using the regional 3D velocity model of [17].

For the magnitude input, a similar input scheme is defined. We compute

| (5) |

where predicts the theoretical amplitude on station , from source , with magnitude , for phase type . Hence, this feature measures for a given magnitude, how close the observed log-amplitude is to the theoretical log-amplitude. The Gaussian kernel width, , is application dependent, but is chosen to be large enough to capture a range of misfits in the feature space (e.g., = 0.5). For our purposes we use a locally calibrated linear magnitude scale [7], that is fit to predict magnitudes consistent with the USGS in northern California, using maximum amplitude measurements around the times of P- and S-waves from the EHZ component of NC seismic network stations.

II-D Architecture

The GNN architecture we propose is designed to be a simple, effective way to map the very high-dimensional input tensors , , which are feature vectors measured on all pairs of station-model space points, to explicit predictions of source likelihood , and magnitude likelihood , at any query points within the full continuous Euclidean model space, . Each input can also be for a different number of stations, and a different station graph.

To achieve this overall mapping, we first (i) apply repeated graph convolutions on the Cartesian product , then (ii) embed into the model space by stacking over stations (in the latent space, ). Then, (iii) we repeat graph convolutions on , and finally (iv) we make predictions of likelihood at arbitrary coordinates , by using local masked-attention (i.e., effectively graph-based interpolation [19]) of the queries with respect to the fixed nodes in . This design is straightforward, and in a natural, physically motivated way, transforms the raw data measured over arbitrary station networks to predictions over arbitrary model space domains. The architecture is given in more detail in Algorithm (1).

Inside each , the learnable FCN’s use feature dimensions between [15-30], and PReLU activation. The graph convolutions in steps [7,8,10] include edge data, , of Cartesian offsets between nodes, and in [8], a global state, is also included. The edge set points nodes in the Cartesian product into , by linking all stations for each fixed . is the graph, which for each query has edges linked to the -nearest nodes in . The total number of free parameters of the GNN is 28,000.

II-E Training Details

To train the models, we generate a diverse set of synthetic data, for the assumed physical travel time and local magnitude scale models. In either case, for a batch of 150 samples, for each sample, we (i) sample an arbitrary subset of 50 - 450 stations of the NC network, (ii) pick a random source coordinate uniformly over the domain (and random magnitude uniformly over the axis), (iii) compute theoretical arrival times and amplitude measurements, (iv) remove arrivals of source-receiver distances greater than with Uniform [10, 1000] km per event, and (0, 30 km) per event and per station, (v) add per-pick Laplacian noise of std. 15 the quantity for travel time, and 25250 the quantity for amplitude (hence, uncertainties increase proportional to the observed quantity). Finally, we (vi) randomly delete 1080 of arrivals, and randomly corrupt 1030 of the remaining arrivals to have anomalous (uniformly random) arrival time and amplitude measurements. These simple steps can produce complex input datasets (including strong noise and anomalous picks), for variable seismic networks, with data that are still physically tied to the assumed travel time and magnitude scale models.

For the labels, we represent each target solution as a continuous Gaussian, wherein the target spatial prediction is a multivariate Gaussian of fixed width (= 25 km), localized at the correct source coordinate, and similarly, the target magnitude prediction is a 1D Gaussian, localized on the true magnitude, with width (= 0.5 M). If a particular input sample has non-corrupted arrivals following the synthetic scheme above, the labels are set to all zero for that sample. Models are implemented in PyTorch Geometric [5], and we train by computing L2-norm losses of the output with respect to the target over the batch, and optimizing with the Adam optimizer [10] for 10,000 update steps with a learning rate of . In 10’s of training runs, the GNN performed similarly and converged in training at similar rates, hence indicating that training was stable.

III Results

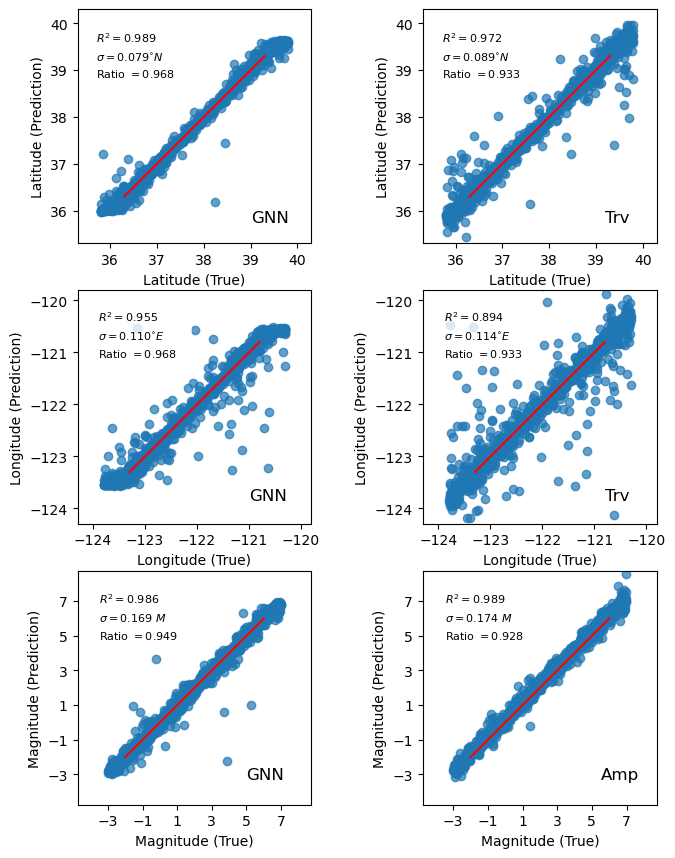

To evaluate the performance of our method, we apply the trained GNN to locate and compute magnitudes of events for 1,500 new events generated through the synthetic data construction scheme described above. This allows measuring the bulk performance of the GNN as the available station network changes, source coordinates vary, and as the pick data has highly variable levels of noise, number of picks, and anomalous pick rates. We compare the results with traditional methods. For location, we minimize the unweighted least squares residual, using the average best solution of five particle swarm global optimization runs [9]. For magnitude, we compute magnitude estimates for all picks using our calibrated magnitude scale, and take the median of the set of points within the 1090 quantile range of the distribution.

The results of the location and magnitude analysis are summarized in Fig. 1, where we observe comparable, and slightly favorable performance of the GNN compared with the traditional method in nearly all bulk statistics. Notably, the scores for the GNN are 0.95 in latitude and longitude, and improve upon the traditional methods performance by 0.015, and 0.05 units, respectively. Also, by declaring ‘matched events’ as those where the predicted event is within epicentral distance of the true location, we measure a recovery rate of 0.968 for the GNN, and 0.933 for the travel time method, highlighting the GNN’s improved tolerance to outliers. Both methods also estimate event depths, however because the station spacing is large compared to the range of seismogenic depths ( km) and all observations are from the Earth’s surface, depths are more poorly constrained by the noisy input data than horizontal coordinates, and have = 0.29 and = 0.22 for the GNN, and travel time method, respectively. For the magnitude application, we find comparable performance between both methods, with the GNN having slightly reduced residuals and rates of missed events. The magnitude residuals of the traditional method are systematically positive by 0.16 M, while the GNN residuals are well localized around a mean of zero for both magnitude, and location predictions.

IV Conclusion

By making use of the local structure in data through information passing along the adjacency matrix, graph-based methods have the potential to parse subtle, but important feature interactions in datasets. Our application demonstrates the potential of GNN’s to solve traditional seismological inverse problems, while directly incorporating the physics of the problem both through the defined input features, and the adjacency matrices. The framework we propose can be easily adapted and extended to other similar inverse problems, and the model can also be trained or applied on real data.

Acknowledgment

This work is supported by Department of Energy (Basic Energy Sciences; Award DE-SC0020445).

References

- [1] P. W. Battaglia, J. B. Hamrick, V. Bapst, A. Sanchez-Gonzalez, V. Zambaldi, M. Malinowski, A. Tacchetti, D. Raposo, A. Santoro, R. Faulkner et al., “Relational inductive biases, deep learning, and graph networks,” arXiv preprint arXiv:1806.01261, 2018.

- [2] K. J. Bergen, P. A. Johnson, V. Maarten, and G. C. Beroza, “Machine learning for data-driven discovery in solid earth geoscience,” Science, vol. 363, no. 6433, 2019.

- [3] L. Berton, A. de Andrade Lopes, and D. A. Vega-Oliveros, “A comparison of graph construction methods for semi-supervised learning,” in 2018 International Joint Conference on Neural Networks (IJCNN). IEEE, 2018, pp. 1–8.

- [4] P. Djuric and C. Richard, Cooperative and graph signal processing: principles and applications. Academic Press, 2018.

- [5] M. Fey and J. E. Lenssen, “Fast graph representation learning with pytorch geometric,” arXiv preprint arXiv:1903.02428, 2019.

- [6] W. L. Hamilton, R. Ying, and J. Leskovec, “Inductive representation learning on large graphs,” arXiv preprint arXiv:1706.02216, 2017.

- [7] L. Hutton and D. M. Boore, “The ml scale in southern california,” Bulletin of the Seismological Society of America, vol. 77, no. 6, pp. 2074–2094, 1987.

- [8] Y. Y. Kagan, “Accuracy of modern global earthquake catalogs,” Physics of the Earth and Planetary Interiors, vol. 135, no. 2-3, pp. 173–209, 2003.

- [9] J. Kennedy and R. Eberhart, “Particle swarm optimization,” in Proceedings of ICNN’95-international conference on neural networks, vol. 4. IEEE, 1995, pp. 1942–1948.

- [10] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [11] S. M. Mousavi and G. C. Beroza, “Bayesian-deep-learning estimation of earthquake location from single-station observations,” IEEE Transactions on Geoscience and Remote Sensing, vol. 58, no. 11, pp. 8211–8224, 2020.

- [12] J. Münchmeyer, D. Bindi, U. Leser, and F. Tilmann, “Earthquake magnitude and location estimation from real time seismic waveforms with a transformer network,” Geophysical Journal International, vol. 226, no. 2, pp. 1086–1104, 2021.

- [13] A. Ortega, P. Frossard, J. Kovačević, J. M. Moura, and P. Vandergheynst, “Graph signal processing: Overview, challenges, and applications,” Proceedings of the IEEE, vol. 106, no. 5, pp. 808–828, 2018.

- [14] F. Ringdal and T. Kværna, “A multi-channel processing approach to real time network detection, phase association, and threshold monitoring,” Bulletin of the Seismological Society of America, vol. 79, no. 6, pp. 1927–1940, 1989.

- [15] J. A. Sethian, “A fast marching level set method for monotonically advancing fronts,” Proceedings of the National Academy of Sciences, vol. 93, no. 4, pp. 1591–1595, 1996.

- [16] A. Tarantola and B. Valette, “Generalized nonlinear inverse problems solved using the least squares criterion,” Reviews of Geophysics, vol. 20, no. 2, pp. 219–232, 1982.

- [17] C. Thurber, H. Zhang, T. Brocher, and V. Langenheim, “Regional three-dimensional seismic velocity model of the crust and uppermost mantle of northern california,” Journal of Geophysical Research: Solid Earth, vol. 114, no. B1, 2009.

- [18] M. P. van den Ende and J.-P. Ampuero, “Automated seismic source characterization using deep graph neural networks,” Geophysical Research Letters, vol. 47, no. 17, p. e2020GL088690, 2020.

- [19] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Lio, and Y. Bengio, “Graph attention networks,” arXiv preprint arXiv:1710.10903, 2017.

- [20] J. Zhou, G. Cui, S. Hu, Z. Zhang, C. Yang, Z. Liu, L. Wang, C. Li, and M. Sun, “Graph neural networks: A review of methods and applications,” AI Open, vol. 1, pp. 57–81, 2020.