Early stopping and polynomial smoothing in regression with reproducing kernels

Abstract

In this paper, we study the problem of early stopping for iterative learning algorithms in a reproducing kernel Hilbert space (RKHS) in the nonparametric regression framework. In particular, we work with the gradient descent and (iterative) kernel ridge regression algorithms. We present a data-driven rule to perform early stopping without a validation set that is based on the so-called minimum discrepancy principle. This method enjoys only one assumption on the regression function: it belongs to a reproducing kernel Hilbert space (RKHS). The proposed rule is proved to be minimax-optimal over different types of kernel spaces, including finite-rank and Sobolev smoothness classes. The proof is derived from the fixed-point analysis of the localized Rademacher complexities, which is a standard technique for obtaining optimal rates in the nonparametric regression literature. In addition to that, we present simulation results on artificial datasets that show the comparable performance of the designed rule with respect to other stopping rules such as the one determined by fold cross-validation.

keywords:

[class=MSC]keywords:

2007.06827 \startlocaldefs \endlocaldefs

and

1 Introduction

Early stopping rule (ESR) is a form of regularization based on choosing when to stop an iterative algorithm based on some design criterion. Its main idea is lowering the computational complexity of an iterative algorithm while preserving its statistical optimality. This approach is quite old and initially was developed for Landweber iterations to solve ill-posed matrix problems in the 1970s [20, 36]. Recent papers provided some insights for the connection between early stopping and boosting methods [6, 14, 40, 43], gradient descent, and Tikhonov regularization in a reproducing kernel Hilbert space (RKHS) [7, 29, 42]. For instance, [14] established the first optimal in-sample convergence rate of -boosting with early stopping. Raskutti et al. [29] provided a result on a stopping rule that achieves the minimax-optimal rate for kernelized gradient descent and ridge regression over different smoothness classes. This work established an important connection between the localized Radamacher complexities [5, 24, 38], that characterizes the size of the explored function space, and early stopping. The main drawback of the result is that one needs to know the RKHS-norm of the regression function or its tight upper bound in order to apply this early stopping rule in practice. Besides that, this rule is design-dependent, which limits its practical application. In the subsequent work, [40] showed how to control early stopping optimality via the localized Gaussian complexities in RKHS for different boosting algorithms (-boosting, LogitBoost, and AdaBoost). Another theoretical result for a not data-driven ESR was built by [11], where the authors proved a minimax-optimal (in the out-of-sample norm) stopping rule for conjugate gradient descent in the nonparametric regression setting. [2] proposed a different approach, where the authors focused on both time/memory computational savings, combining early stopping with Nystrom subsampling technique.

Some stopping rules, that (potentially) could be applied in practice, were provided by [9, 10] and [33], and were based on the so-called minimum discrepancy principle [11, 13, 20, 23]. This principle consists of monitoring the empirical risk and determining the first time at which a given learning algorithm starts to fit the noise. In the papers mentioned, the authors considered spectral filter estimators such as gradient descent, Tikhonov (ridge) regularization, and spectral cut-off regression for the linear Gaussian sequence model, and derived several oracle-type inequalities for the proposed ESR. The main deficiency of the works [9, 10, 33] is that the authors dealt only with the linear Gaussian sequence model, and the minimax optimality result was restricted to the spectral cut-off estimator. It is worth mentioning that [33] introduced the so-called polynomial smoothing strategy to achieve the optimality of the minimum discrepancy principle ESR over Sobolev balls for the spectral cut-off estimator. More recently, [18] studied a minimum discrepancy principle stopping rule and its modified (they called it smoothed as well) version, where they provided the range of values of the regression function regularity, for which these stopping rules are optimal for different spectral filter estimators in RKHS.

Contribution. Hence, to the best of our knowledge, there is no fully data-driven stopping rule for gradient descent or ridge regression in RKHS that does not use a validation set, does not depend on the parameters of the model such as the RKHS-norm of the regression function, and explains why it is statistically optimal. In our paper, we combine techniques from [9], [29], and [33] to construct such an ESR. Our analysis is based on the bias and variance trade-off of an estimator, and we try to catch the iteration of their intersection by means of the minimum discrepancy principle [9, 13, 18] and the localized Rademacher complexities [5, 24, 27, 38]. In particular, for the kernels with infinite rank, we propose to use a special technique [13, 33] for the empirical risk in order to reduce its variance. Further, we introduce new notions of smoothed empirical Rademacher complexity and smoothed critical radius to achieve minimax optimality bounds for the functional estimator based on the proposed rule. This can be done by solving the associated fixed-point equation. It implies that the bounds in our analysis cannot be improved (up to numeric constants). It is important to note that in the present paper, we establish an important connection between a smoothed version of the statistical dimension of -dimensional kernel matrix, introduced by [41] for randomized projections in kernel ridge regression, with early stopping (see Section 4.3 for more details). We also show how to estimate the variance of the model, in particular, for the infinite-rank kernels. In the meanwhile, we provide experimental results on artificial data indicating the consistent performance of the proposed rules.

Outline of the paper. The organization of the paper is as follows. In Section 2, we introduce the background on nonparametric regression and reproducing kernel Hilbert space. There, we explain the updates of two spectral filter iterative algorithms: gradient descent and (iterative) kernel ridge regression, that will be studied. In Section 3, we clarify how to compute our first early stopping rule for finite-rank kernels and provide an oracle-type inequality (Theorem 3.1) and an upper bound for the risk error of this stopping rule with fixed covariates (Corollary 3.2). After that, we present a similar upper bound for the risk error with random covariates (Theorem 3.3) that is proved to be minimax-rate optimal. By contrast, Section 4 is devoted to the development of a new stopping rule for infinite-rank kernels based on the polynomial smoothing [13, 33] strategy. There, Theorem 4.2 shows, under a quite general assumption on the eigenvalues of the kernel operator, a high probability upper bound for the performance of this stopping rule measured in the in-sample norm. In particular, this upper bound leads to minimax optimality over Sobolev smoothness classes. In Section 5, we compare our stopping rules to other rules, such as methods using hold-out data and fold cross-validation. After that, we propose using a strategy for the estimation of the variance of the regression model. Section 6 summarizes the content of the paper and describes some perspectives. Supplementary and more technical proofs are deferred to Appendix.

2 Nonparametric regression and reproducing kernel framework

2.1 Probabilistic model and notation

The context of the present work is that of nonparametric regression, where an i.i.d. sample of cardinality is given, with and . The goal is to estimate the regression function from the model

| (1) |

where the error variables are i.i.d. zero-mean Gaussian random variables , with . In all what follows (except for Section 5, where results of empirical experiments are reported), the values of is assumed to be known as in [29] and [40].

Along the paper, calculations are mainly derived in the fixed-design context, where the are assumed to be fixed, and only the error variables are random. In this context, the performance of any estimator of the regression function is measured in terms of the so-called empirical norm, that is, the -norm defined by

where for any bounded function over , and denotes the related inner-product defined by for any functions and bounded over . In this context, and denote the probability and expectation, respectively, with respect to the .

By contrast, Section 3.1.2 discusses some extensions of the previous results to the random design context, where both the covariates and the responses are random variables. In this random design context, the performance of an estimator of is measured in terms of the -norm defined by

where denotes the probability distribution of the . In what follows, and , respectively, state for the probability and expectation with respect to the couples .

Notation.

Throughout the paper, and are the usual Euclidean norm and inner product in . We shall write whenever for some numeric constant for all . whenever for some numeric constant for all . Similarly, means and . for any . For , we denote by the largest natural number that is smaller than or equal to . We denote by the smallest natural number that is greater than or equal to . Throughout the paper, we use the notation to show that numeric constants do not depend on the parameters considered. Their values may change from line to line.

2.2 Statistical model and assumptions

2.2.1 Reproducing Kernel Hilbert Space (RKHS)

Let us start by introducing a reproducing kernel Hilbert space (RKHS) denoted by [4, 8, 22, 37]. Such a RKHS is a class of functions associated with a reproducing kernel and endowed with an inner-product denoted by , and satisfying for all . Each function within admits a representation as an element of , which justifies the slight abuse when writing (see [19] and [18, Assumption 3]).

Assuming the RKHS is separable, under suitable regularity conditions (e.g., a continuous positive-semidefinite kernel), Mercer’s theorem [31] guarantees that the kernel can be expanded as

where and are, respectively, the eigenvalues and corresponding eigenfunctions of the kernel integral operator , given by

| (2) |

It is then known that the family is an orthonormal basis of , while is an orthonormal basis of . Then, any function can be expanded as , where for all such that , the coefficients are

| (3) |

Therefore, each functions can be represented by the respective sequences such that

with the inner-product in the Hilbert space given by This leads to the following representation of as an ellipsoid

2.2.2 Main assumptions

From the initial model given by Eq. (1), we make the following assumption.

Assumption 1 (Statistical model).

Let denote a reproducing kernel as defined above, and is the induced separable RKHS. Then, there exists a constant such that the -sample satisfies the statistical model

| (4) |

where the are i.i.d. Gaussian random variables with and .

The model from Assumption 1 can be vectorized as

| (5) |

where and , which turns to be useful all along the paper.

In the present paper, we make a boundness assumption on the reproducing kernel .

Assumption 2.

Let us assume that the measurable reproducing kernel is uniformly bounded on its support, meaning that there exists a constant such that

Moreover in what follows, we assume that without loss of generality.

Assumption 2 holds for many kernels. On the one hand, it is fulfilled with an unbounded domain with a bounded kernel (e.g., Gaussian, Laplace kernels). On the other hand, it amounts to assume the domain is bounded with an unbounded kernel such as the polynomial or Sobolev kernels [31]. Let us also mention that Assumptions 1 and 2 (combined with the reproducing property) imply that is uniformly bounded since

| (6) |

Considering now the Gram matrix , the related normalized Gram matrix turns out to be symmetric and positive semidefinite. This entails the existence of the empirical eigenvalues (respectively, the eigenvectors ) such that for all . Remark that Assumption 2 implies .

For technical convenience, it turns out to be useful rephrasing the model (5) by using the SVD of the normalized Gram matrix . This leads to the new (rotated) model

| (7) |

where , and is a zero-mean Gaussian random variable with the variance .

2.3 Spectral filter algorithms

Spectral filter algorithms were first introduced for solving ill-posed inverse problems with deterministic noise [20]. Among others, one typical example of such an algorithm is the gradient descent algorithm (that is named as well as -boosting [14]). They were more recently brought to the supervised learning community, for instance, by [7, 15, 21, 42]. For estimating the vector from Eq. (5) in the fixed-design context, such a spectral filter estimator is a linear estimator, which can be expressed as

| (8) |

where is called the admissible spectral filter function [7, 21]. For example, the choice , corresponds to the kernel ridge estimator with regularization parameter (see [9, 18] for other possible choices)

From the model expressed in the empirical eigenvectors basis (7), the resulting spectral filter estimator (8) can be expressed as

| (9) |

where is a decreasing function mapping to a regularization parameter value at time , and is defined by

Under the assumption that , it can be proved that is a non-decreasing function of , , and . Moreover, implies , as it is the case for the kernels with a finite rank, that is, when almost surely.

Thanks to the remark above, we define the following convenient notations (for functions) and (for vectors), with a continuous time , by

| (10) |

where is the sampling operator and is its adjoint, i.e. and .

In what follows, we introduce an assumption on a function that will play a crucial role in our analysis.

Assumption 3.

for some positive constants and .

Let us mention two famous examples of spectral filter estimators that satisfy Assumption 3 with (see Lemma A.1 in Appendix). These examples will be further studied in the present paper.

-

•

Gradient descent (GD) with a constant step-size and as :

(11) The constant step-size can be replaced by any non-increasing sequence satisfying [29]

-

–

, for ,

-

–

as .

-

–

-

•

Kernel ridge regression (KRR) with the regularization parameter with :

(12) The linear parameterization is chosen for theoretical convenience.

The examples of above were derived for as an initialization condition without loss of generality.

2.4 Key quantities

From a set of parameters (stopping times) for an iterative learning algorithm, the present goal is to design from the data such that the functional estimator is as close as possible to the optimal one among

Numerous classical model selection procedures for choosing already exist, e.g. the (generalized) cross validation [35], AIC and BIC criteria [1, 32], the unbiased risk estimation [17], or Lepski’s balancing principle [26]. Their main drawback in the present context is that they require the practitioner to calculate all the estimators in the first step, and then choose the optimal estimator among the candidates in a second step, which can be computationally demanding.

By contrast, early stopping is a less time-consuming approach. It is based on observing one estimator at each and deciding to stop the learning process according to some criterion. Its aim is to reduce the computational cost induced by this selection procedure while preserving the statistical optimality properties of the output estimator.

The prediction error (risk) of an estimator at time is split into a bias and a variance term [29] as

with

| (13) |

The bias term is a non-increasing function of converging to zero, while the variance term is a non-decreasing function of . Assume further that , which implies that almost surely, then the empirical risk is introduced with the notation of Eq. (7).

| (14) |

An illustration of the typical behavior of the risk, empirical risk, bias, and variance is displayed by Figure 1.

Our main concern is formulating a data-driven stopping rule (a mapping from the data to a positive time ) so that the prediction errors or, equivalently, are as small as possible.

The analysis of the forthcoming early stopping rules involves the use of a model complexity measure known as the localized empirical Rademacher complexity [5, 24, 38] that we generalize to its smoothed version, for .

Definition 2.1.

For any , , consider the localized smoothed empirical Rademacher complexity of as

| (15) |

It corresponds to a rescaled sum of the empirical eigenvalues truncated at and smoothed by .

For a given RKHS and noise level , let us finally define the empirical smoothed critical radius as the smallest positive value such that

| (16) |

There is an extensive literature on the empirical critical equation and related empirical critical radius [5, 27, 29], and it is out of the scope of the present paper providing an exhaustive review on this topic. Nevertheless, Appendix G establishes that the smoothed critical radius does exist, is unique and achieves the equality in Ineq. (16). Constant in Ineq. (16) is for theoretical convenience only. If , , and .

3 Data-driven early stopping rule and minimum discrepancy principle

Let us start by recalling that the expression of the empirical risk in Eq. (14) gives that the empirical risk is a non-increasing function of (as illustrated by Fig. 1 as well). This is consistent with the intuition that the amount of available information within the residuals decreases as grows. If there exists time such that , then the empirical risk is approximately equal to (level of noise), that is,

| (17) |

By introducing the reduced empirical risk and recalling that ,

| (18) |

where is due to Eq. (17). This heuristic argument gives rise to a first deterministic stopping rule involving the reduced empirical risk and given by

| (19) |

Since is not achievable in practice, an estimator of is given by the data-driven stopping rule based on the so-called minimum discrepancy principle

| (20) |

The existing literature considering the MDP-based stopping rule usually defines by the event [9, 11, 13, 20, 23, 33]. Notice that with a full-rank kernel matrix, the reduced empirical risk is equal to the classical empirical risk , leading then to the same stopping rule. From a practical perspective, the knowledge of the rank of the Gram matrix avoids estimating the last components of the vector , which are already known to be zero (see [29, Section 4.1] for more details).

3.1 Finite-rank kernels

3.1.1 Fixed-design framework

Let us start by discussing our results with the case of RKHS of finite-rank kernels with rank , and . Examples that include these kernels are the linear kernel and the polynomial kernel of degree .

The following theorem applies to any functional estimator generated by (10) and initialized at . The main part of the proof of this result consists of properly upper bounding and follows the same trend of Proposition 3.1 in [9].

Proof of Theorem 3.1.

In this proof, we will use the following inequalities: for any , and for .

Let us first prove the subsequent oracle-type inequality for the difference between and . Consider

From the definition of (18), one notices that

From for centered and for any , and , it comes

Applying the inequalities for any and , we arrive at

∎

First of all, it is worth noting that the risk of the estimator is proved to be optimal for gradient descent and kernel ridge regression no matter the kernel we use (see Appendix C for the proof), so it remains to focus on the remainder term on the right-hand side in Ineq. (21). Theorem 3.1 applies to any reproducing kernel, but one remarks that for infinite-rank kernels, , and we achieve only the rate . This rate is suboptimal since, for instance, RKHS with polynomial eigenvalue decay kernels (will be considered in the next subsection) has the minimax-optimal rate for the risk error of the order , with . Therefore, the oracle-type inequality (21) could be useful only for finite-rank kernels due to the fast rate of the remainder term.

Notice that, in order to make artificially the term a remainder one (even for cases corresponding to infinite-rank kernels), [9, 10] introduced in the definitions of their stopping rules a restriction on the ”starting time” . However, in the mentioned work, this restriction incurred the price of possibility to miss the designed time . Besides that, [10] developed an additional procedure based on standard model selection criteria such as AIC-criterion for the spectral cut-off estimator to recover the ”missing” stopping rule and achieve optimality over Sobolev-type ellipsoids. In our work, we removed such a strong assumption.

As a corollary of Theorem 3.1, one can prove that provides a minimax estimator of over the ball of radius .

Corollary 3.2.

Proof of Corollary 3.2.

Note that the critical radius cannot be arbitrary small since it should satisfy Ineq. (16). As it will be clarified later, the squared empirical critical radius is essentially optimal.

3.1.2 Random-design framework

We would like to transfer the minimax optimality bound for the estimator from the empirical -norm to the population norm by means of the so-called localized population Rademacher complexity. This complexity measure became a standard tool in empirical processes and nonparametric regression [5, 24, 29, 38].

For any kernel function class studied in the paper, we consider the localized Rademacher complexity that can be seen as a population counterpart of the empirical Rademacher complexity (15) introduced earlier:

| (25) |

Using the localized population Rademacher complexity, we define its population critical radius to be the smallest positive solution that satisfies the inequality

| (26) |

In contrast to the empirical critical radius , this quantity is not data-dependent, since it is specified by the population eigenvalues of the kernel operator underlying the RKHS.

Theorem 3.3.

Remark.

The full proof is deferred to Section F. Regarding Ineq. (27), is proven to be the minimax-optimal rate for the norm in a RKHS (see [5, 27, 29]). As for the risk error in Ineq. (28), the (exponential) remainder term should decrease to zero faster than , and Theorem 3.3 provides a rate that matches up to a constant the minimax bound (see, e.g., [28, Theorem 2(a)] with ), when belongs to the -norm ball of a fixed radius , thus not improvable in general. A similar bound for finite-rank kernels was achieved in [29, Corollary 4].

We summarize our findings in the following corollary.

3.2 Practical behavior of with infinite-rank kernels

A typical example of RKHS that produces an infinite-rank kernel is the -order Sobolev spaces for some fixed integer with Lebesgue measure on a bounded domain. We consider Sobolev spaces that consist of functions that have -order weak derivatives being Lebesgue integrable and . It is worth mentioning that for such classes, the eigenvalues of the kernel operator , with . Another example of kernel with this decay condition for the eigenvalues is the Laplace kernel (see [31, p.402]).

Firstly, let us now illustrate the practical behavior of ESR (20) (its histogram) for gradient descent (9) with the step-size and one-dimensional Sobolev kernel that generates the reproducing space

| (30) |

We deal with the model (1) with two regression functions: a smooth piece-wise linear and nonsmooth heavisine functions. The design points are random . The number of observations is . For both functions, , and we set up a middle difficulty noise level . The number of repetitions is .

In panel (a) of Figure 2, we detect that our stopping rule has a high variance. However, if we change the signal from the smooth to nonsmooth one, the regression function does not belong anymore to defined in (30). In this case (panel (b) in Figure 2), the stopping rule performs much better than for the previous regression function. In order to get a stable early stopping rule that will be close to , we propose using a special smoothing technique for the empirical risk.

4 Polynomial smoothing

As was discussed earlier, the main issue of poor behavior of the stopping rule for infinite-rank kernels is the variability of the empirical risk around its expectation. A solution that we propose is to smooth the empirical risk by means of the eigenvalues of the normalized Gram matrix.

4.1 Polynomial smoothing and minimum discrepancy principle rule

We start by defining the squared -norm as for all and , from which we also introduce the smoothed risk, bias, and variance of a spectral filter estimator as

with

| (31) |

The smoothed empirical risk is

| (32) |

Recall that the kernel is bounded by , thus for all , then the smoothed bias and smoothed variance are smaller their non-smoothed counterparts.

Analogously to the heuristic derivation leading to the stopping rule (20), the new stopping rule is based on the discrepancy principle applied to the smoothed empirical risk, that is,

| (33) |

where is the natural counterpart of in the case of a full-rank kernel matrix and the norm.

4.2 Related work

The idea of smoothing the empirical risk (the residuals) is not new in the literature. For instance, [11, 12, 13] discussed various smoothing strategies applied to (kernelized) conjugate gradient descent, and [18] considered spectral regularization with spectral filter estimators. More closely related to the present work, [33] studied a statistical performance improvement allowed by polynomial smoothing of the residuals (as we do here) but restricted to the spectral cut-off estimator.

In [12, 13], the authors considered the following statistical inverse problem: , where is a self-adjoint operator and is Gaussian noise. In their case, for the purpose of achieving optimal rates, the usual discrepancy principle rule ( is an iteration number, is a parameter) was modified and took the form , where and is the normalized variance of Gaussian noise.

In [11], the minimum discrepancy principle was modified to the following: each iteration of conjugate gradient descent was represented by a vector , is the pseudo-inverse of the normalized Gram matrix, and the learning process was stopped if for some positive , where Thus, this method corresponds (up to a threshold) to the stopping rule (33) with

In the work [33], the authors concentrated on the inverse problem and its corresponding Gaussian vector observation model , where are the singular values of the linear bounded operator and are Gaussian noise variables. They recovered the signal by a cut-off estimator of the form . The discrepancy principle in this case was for some positive They found out that, if the smoothing parameter lies in the interval , where is the polynomial decay of the singular values , then the cut-off estimator is adaptive to Sobolev ellipsoids. Therefore, our work could be considered as an extension of [33] in order to generalize the polynomial smoothing strategy to more complex filter estimators such as gradient descent and (Tikhonov) ridge regression in the reproducing kernel framework.

4.3 Optimality result (fixed-design)

We pursue the analogy a bit further by defining the smoothed statistical dimension as

| (34) |

and if no such index exists. Combined with (15), this implies that

| (35) |

Let us emphasize that [41] already introduced the so-called statistical dimension (corresponds to in our notation). It appeared that the statistical dimension provides an upper bound on the minimax-optimal dimension of randomized projections for kernel ridge regression (see [41, Theorem 2, Corollary 1]). In our case, can be seen as a (-smooth) version of the statistical dimension.

The purpose of the following result is to give more insight into understanding of Eq. (34) regarding the minimax risk.

Theorem 4.1 (Lower bound from Theorem 1 in [41]).

For any regular kernel class, meaning that for any , , and any estimator of satisfying the nonparametric model defined in Eq. (1), we get

for some numeric constant .

Firstly, in [41], the regularity assumption was formulated as , which directly stems from the assumption in Theorem 4.1. Let us remark that the same assumption (as in Theorem 4.1) has been already made by [18, Assumption 6]. Secondly, Theorem 4.1 applies to any kernel, as long as the condition on the tail of eigenvalues is fulfilled, which is in particular true for the reproducing kernels from Section 3.2. Thus, the fastest achievable rate by an estimator of is .

A key property for the smoothing to yield optimal results is that the value of has to be large enough to control the tail sum of the smoothed eigenvalues by the corresponding cumulative sum, which is the purpose of the assumption below.

Assumption 4.

There exists , such that for all and ,

| (36) |

where denotes a numeric constant.

We enumerate several classical examples for which this assumption holds.

Example 1 (-polynomial eigenvalue decay kernels).

Let us assume that the kernel operator satisfy that there exist numeric constants such that

| (37) |

For the polynomial eigenvalue-decay kernels, Assumption 4 holds with

| (38) |

Example 2 (-exponential eigenvalue-decay kernels).

Let us assume that the eigenvalues of the kernel operator satisfy that there exist numeric constants and a constant such that

Instances of kernels within this class include the Gaussian kernel with respect to the Lebesgue measure on the real line (with ) or on a compact domain (with ) (up to factor in the exponent, see [38, Example 13.21]). Then, Assumption 4 holds with

For any regular kernel class satisfying the above assumption, the next theorem provides a high probability bound on the performance of (measured in terms of the -norm), which depends on the smoothed empirical critical radius.

Theorem 4.2 (Upper bound on empirical norm).

The complete proof of Theorem 4.2 is given in Appendix D. The main message is that the final performance of the estimator is controlled by the smoothed critical radius . From the existing literature on the empirical critical radius [28, 29, 38, 41], it is already known that the non-smooth version is the typical quantity that leads to minimax rates in the RKHS (see also Theorem 4.1). The behavior of with respect to is likely to depend on , as emphasized by the notation. Intuitively, this suggests that there could exist a range of values of , for which is of the same order as (or faster than) , leading therefore to optimal rates.

Another striking aspect of Ineq. (40) is related to the additional terms involving the exponential function in Ineq. (40). As far as (39) is a statement with ”high probability”, this term is expected to converge to 0 at a rate depending on . Therefore, the final convergence rate as well as the fact that this term is (or not) negligible will depend on .

As a consequence of Theorem 4.1, as far as there exist values of such that is at most as large as , the estimator is optimal.

4.4 Consequences for -polynomial eigenvalue-decay kernels

The leading idea in the present section is identifying values of , for which the bound (39) from Theorem 4.2 scales as .

Let us recall the definition of a polynomial decay kernel from (37):

One typical example of the reproducing kernel satisfying this condition is the Sobolev kernel on given by with [29]. The corresponding RKHS is the first-order Sobolev class, that is, the class of functions that are almost everywhere differentiable with the derivative in .

Lemma 4.3.

For any -polynomial eigenvalue decay kernel, there exist numeric constants such that for , one has

The proof of Lemma 4.3 was deferred to Lemma A.2 in Appendix A and is not reproduced here. Therefore, if , then . Let us now recall from (38) that Assumption 4 holds for . All these arguments lead us to the next result, which establishes the minimax optimality of with any kernel satisfying the -polynomial eigenvalue-decay assumption, as long as .

Corollary 4.4.

Corollary 4.4 establishes an optimality result in the fixed-design framework since as long as , the upper bound matches the lower bound up to multiplicative constants. Moreover, this property holds uniformly with respect to , provided the value of is chosen appropriately. An interesting feature of this bound is that the optimal value of only depends on the (polynomial) decay rate of the empirical eigenvalues of the normalized Gram matrix. This suggests that any effective estimator of the unknown parameter could be plugged into the above (fixed-design) result and would lead to an optimal rate. Note that [33] has emphasized a similar trade-off for the smoothing parameter (polynomial smoothing), considering the spectral cut-off estimator in the Gaussian sequence model. Regarding convergence rates, Corollary 4.4 combined with Lemma 4.3 suggests that the convergence rate of the expected risk is of the order . This is the same as the already known one in nonparametric regression in the random design framework [29, 34], which is known to be minimax-optimal as long as belongs to the RKHS .

5 Empirical comparison with existing stopping rules

The present section aims at illustrating the practical behavior of several stopping rules discussed along the paper as well as making a comparison with existing alternative stopping rules.

5.1 Stopping rules involved

The empirical comparison is carried out between the stopping rules (20) and with (33), and four alternative stopping rules that are briefly described in the what follows. For the sake of comparison, most of them correspond to early stopping rules already considered in [29].

Hold-out stopping rule

We consider a procedure based on the hold-out idea [3]. Data are split into two parts: the training sample and the test sample so that the training sample and test sample represent a half of the whole dataset. We train the learning algorithm for and estimate the risk for each by , where denotes the output of the algorithm trained at iteration on and evaluated at the point of the test sample. The final stopping rule is defined as

| (42) |

Although it does not completely use the data for training (loss of information), the hold-out strategy has been proved to output minimax-optimal estimators in various contexts (see, for instance, [15, 16] with Sobolev spaces and ).

V-fold stopping rule

The observations are randomly split into equal sized blocks. At each round (among the ones), blocks are devoted to training , and the remaining one serves for the test sample . At each iteration , the risk is estimated by , where was described for the hold-out stopping rule. The final stopping time is

| (43) |

V-fold cross validation is widely used in practice since, on the one hand, it is more computationally tractable than other splitting-based methods such as leave-one-out or leave-p-out (see the survey [3]), and on the other hand, it enjoys a better statistical performance than the hold-out (lower variability).

Raskutti-Wainwright-Yu stopping rule (from [29])

The use of this stopping rule heavily relies on the assumption that is known, which is a strong requirement in practice. It controls the bias-variance trade-off by using upper bounds on the bias and variance terms. The latter involves the localized empirical Rademacher complexity . It stops as soon as (upper bound of) the bias term becomes smaller than (upper bound on) the variance term, which leads to

| (44) |

Theoretical minimum discrepancy-based stopping rule

The fourth stopping rule is the one introduced in (19). It relies on the minimum discrepancy principle and involves the (theoretical) expected empirical risk :

This stopping time is introduced for comparison purposes only since it cannot be computed in practice. This rule is proved to be optimal (see Appendix C) for any bounded reproducing kernel, so it could serve as a reference in the present empirical comparison.

Oracle stopping rule

The ”oracle” stopping rule defines the first time the risk curve starts to increase.

| (45) |

In situations where only one global minimum does exists for the risk, this rule coincides with the global minimum location. Its formulation reflects the realistic constraint that we do not have access to the whole risk curve (unlike in the classical model selection setup).

5.2 Simulation design

Artificial data are generated according to the regression model , , where with the equidistant , and . The same experiments have been also carried out with uniform (not reported here) without any change regarding the conclusions. The sample size varies from to .

The gradient descent algorithm (9) has been used with the step-size and initialization .

The present comparison involves two regression functions with the same -norms of the signal : a piecewise linear function called ”smooth” , and a ”sinus” .

To ease the comparison, the piecewise linear regression function was set up as in [29, Figure 3].

The case of finite-rank kernels is addressed in Section 5.3.1 with the so-called polynomial kernel of degree defined by on the unit square . By contrast, Section 5.3.2 tackles the polynomial decay kernels with the first-order Sobolev kernel on the unit square .

The performance of the early stopping rules is measured in terms of the squared norm averaged over independent trials.

For our simulations, we use a variance estimation method that is described in Section 5.4. This method is asymptotically unbiased, which is sufficient for our purposes.

5.3 Results of the simulation experiments

5.3.1 Finite-rank kernels

Figure 3 displays the (averaged) -norm error of the oracle stopping rule (45), our stopping rule (20), (19), minimax-optimal stopping rule (44), and -fold cross validation stopping time (43) versus the sample size. Figure 3(a) shows the results for the piecewise linear regression function whereas Figure 3(b) corresponds to the ”sinus” regression function.

All the curves decrease as grows. From these graphs, the overall worst performance is achieved by , especially with a small sample size, which can be due to the additional randomness induced by the preliminary random splitting with . By contrast, the minimum discrepancy-based stopping rules ( and ) exhibit the best performances compared to the results of and . The averaged mean-squared error of is getting closer to the one of as the number of samples increases, which was expected from the theory and also intuitively, since has been introduced as an estimator of . From Figure 3(a), is less accurate for small sample sizes, but improves a lot as grows up to achieving a performance similar to that of . This can result from the fact that is built from upper bounds on the bias and variance terms, which are likely to be looser with a small sample size, but achieve an optimal convergence rate as increases. On Figure 3(b), the reason why exhibits (strongly) better results than owes to the main assumption on the regression function, namely that . This could be violated for the ”sinus” function.

5.3.2 Polynomial eigenvalue decay kernels

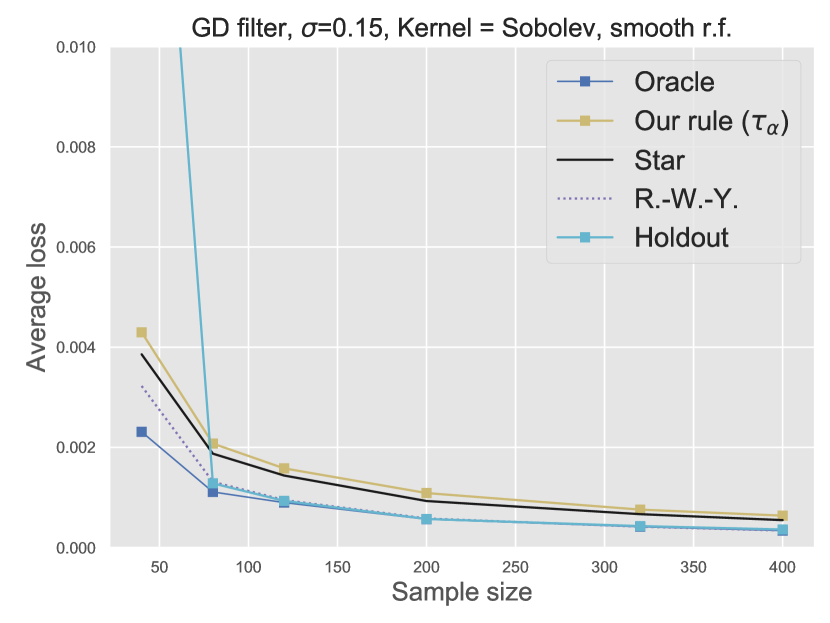

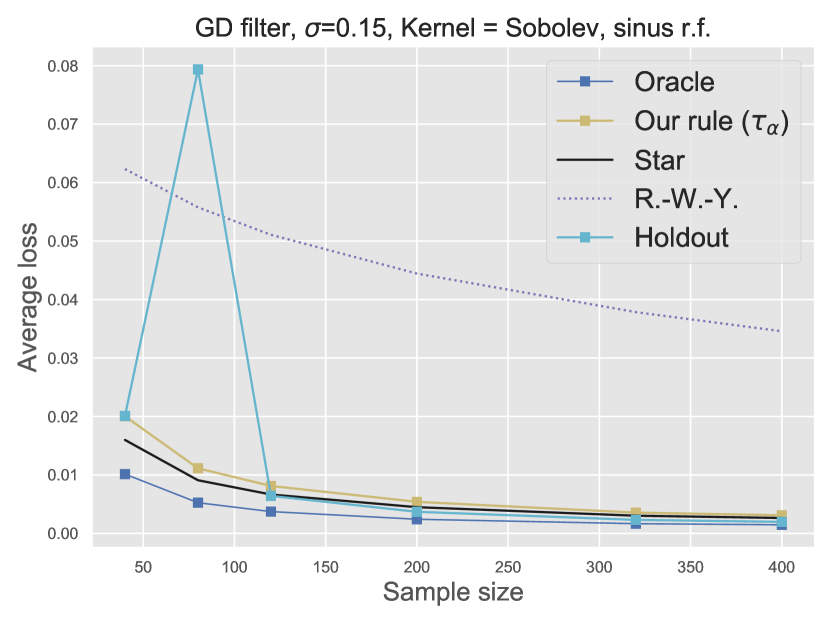

Figure 4 displays the resulting (averaged over repetitions) -error of (with ) (33), (44), (19), and (42) versus the sample size.

Figure 4(a) shows that all stopping rules seem to work equivalently well, although there is a slight advantage for and compared to and . However, as grows to , the performances of all stopping rules become very close to each other. Let us emphasize that the true value of is not known in these experiments. Therefore, the value has been estimated from the decay of the empirical eigenvalue of the normalized Gram matrix. This can explain why the performance of remains worse than that of .

The story described by Figure 4(b) is somewhat different. The first striking remark is that completely fails on this example, which still stems from the (unsatisfied) constraint on the -norm of . However, the best performance is still achieved by the Hold-out stopping rule, although and remain very close to the latter. The fact that remains close to the oracle stopping rule (without any need for smoothing) supports the idea that the minimum discrepancy is a reliable principle for designing an effective stopping rule. The deficiency of (by contrast to ) then results from the variability of the empirical risk, which does not remain close enough to its expectation. This bad behavior is then balanced by introducing the polynomial smoothing at level within the definition of , which enjoys close to optimal practical performances.

Let us also mention that exhibit some variability, in particular, with small sample sizes as illustrated by Figures 4(a) and 4(b).

The overall conclusion is that the smoothed minimum discrepancy-based stopping time leads to almost optimal performances provided , where quantifies the polynomial decay of the empirical eigenvalues .

5.4 Estimation of variance and decay rate for polynomial eigenvalue decay kernels

The purpose of the present section is to describe two strategies for estimating: the decay rate of the empirical eigenvalues of the normalized Gram matrix, and the variance parameter .

5.4.1 Polynomial decay parameter estimation

From the empirical version of the polynomial decay assumption (37), one can easily derive upper and lower bounds for as . The difference between these upper and lower bounds is equal to , which is minimized for . Then the best precision on the estimated value of is reached with , which yields the estimator .

5.4.2 Variance parameter estimation

There is a bunch of suggestions for variance estimation with linear smoothers; see, e.g., Section 5.6 in the book [39]. In our simulation experiments, two cases are distinguished: the situation where the reproducing kernel has finite rank , and the situation where . In both cases, an asymptotically unbiased estimator of is designed.

Finite-rank kernel.

With such a finite-rank kernel, the estimation of the noise is made from the coordinates corresponding to the situation, where (see Section 4.1.1 in [29]). Actually, these coordinates (which are pure noise) are exploited to build an easy-to-compute estimator of , that is,

| (46) |

Infinite-rank kernel.

If , we suggest using the following result.

Lemma 5.1.

For any regular kernel (see Theorem 4.1), any value of satisfying as yields that is an asymptotically unbiased estimator of .

A sketch of the proof of Lemma 5.1 is given in Appendix H. Based on this lemma, we suggest taking , where is the maximum number of iterations allowed to execute due to computational constraints. Notice that as long as we access closed-form expressions of the estimator, there is no need to compute all estimators for between . The final estimator of used in the experiments of Section 5.3 is given by

| (47) |

6 Conclusion

In this paper, we describe spectral filter estimators (e.g., gradient descent, kernel ridge regression) for the non-parametric regression function estimation in RKHS. Two new data-driven early stopping rules (20) and (33) for these iterative algorithms are designed. In more detail, we show that for the infinite-rank reproducing kernels, has a high variance due to the variability of the empirical risk around its expectation, and we proposed a way to reduce this variability by means of smoothing the empirical -norm (and, as a consequence, the empirical risk) by the eigenvalues of the normalized kernel matrix. We demonstrate in Corollaries 3.4 and 4.4 that our stopping times and yield minimax-optimal rates, in particular, for finite-rank kernel classes and Sobolev spaces. It is worth emphasizing that computing the stopping times requires only the estimation of the variance and computing . Theoretical results are confirmed empirically: and with the smoothing parameter , where is the polynomial decay rate of the eigenvalues of the normalized Gram matrix, perform favorably in comparison with stopping rules based on hold-out data and 4-fold cross-validation.

There are various open questions that could be tackled after our results. A deficiency of our strategy is that the construction of and is based on the assumption that the regression function belongs to a known RKHS, which restricts (mildly) the smoothness of the regression function. We would like to understand how our results extend to other loss functions besides the squared loss (for example, in the classification framework), as it was done in [40]. Another research direction could be to use early stopping with fast approximation techniques for kernels [30] to avoid calculation of all eigenvalues of the normalized Gram matrix that can be prohibited for large-scale problems.

Appendix A Useful results

In this section, we present several auxiliary lemmas that are repeatedly used in the paper.

Lemma A.1.

[29, in Lemma 8 and in Lemma 13] For any bounded kernel, with corresponding to gradient descent or kernel ridge regression, for every ,

| (48) |

The following result shows the magnitude of the smoothed critical radius for polynomial eigenvalue decay kernels.

Lemma A.2.

Assume that , for , one has

Proof of Lemma A.2.

For the next two lemmas define the positive self-adjoint trace-class covariance operator

where is the Kronecker product between two elements in such that , for every . We know that and have the same eigenvalues . Moreover, we introduce the smoothed empirical covariance operator as

| (50) |

Lemma A.3.

For each , any , , and , one has

Proof.

Let be the orthogonal projection from onto the span of the eigenfunctions . Then by the variational characterization of partial traces, one has and . One concludes that

By reproducing property and Mercer’s theorem, , and

Since , one has , and by Bernstein’s inequality, for any ,

Then, by using when , and for any , one gets

for any . ∎

Lemma A.4.

For each , any , , and , one has

Proof.

The proof of [18, Lemma 33] could be easily generalized to the smoothed version by using the proof of Lemma A.3. Let be the orthogonal projection from onto the span of the population eigenfunctions . Then by the variational characterization of partial traces, one has and . One concludes that

Hence,

Since and by using the reproducing property and Mercer’s theorem, , one has

Bernstein’s inequality yields that for any ,

Using the inequalities , when , and

one gets

for any and . ∎

Appendix B Handling the smoothed bias and variance

Here, we recall one concentration result from [29, Section 4.1.2]. For any and , one has , and

| (53) |

Appendix C Auxiliary lemma for finite-rank kernels

Let us first transfer the critical inequality (16) from to .

Definition C.1.

Set in (16), and let us define as the largest positive solution to the following fixed-point equation

| (54) |

Note that the empirical critical radius , and such a point exists since exists and is unique [27, 5, 29]. Moreover, provides the equality in Ineq. (54).

Remark that at . Thus, due to the construction of ( is the point of intersection of an upper bound on the bias and a lower bound on ) and monotonicity (in ) of all the terms involved, we get .

Lemma C.2.

Appendix D Proofs for polynomial smoothing (fixed design)

In the proofs, we will need three additional definitions below.

Definition D.1.

In Definition 15, set , then for any , the smoothed critical inequality (15) is equivalent to

| (56) |

Due to Lemma G.1, the left-hand side of (56) is non-decreasing in , and the right-hand side is non-increasing in .

Definition D.2.

For any , define the stopping rule such that

| (57) |

then Ineq. (56) becomes the equality at thanks to the monotonicity and continuity of both terms in the inequality.

Further, we define the stopping time and , a lower bound and an upper bound on , .

Definition D.3.

Define the smoothed proxy variance and the following stopping times

| (58) | ||||

Notice that at :

At :

Thus, and satisfy the smoothed critical inequality (56). Moreover, is always greater than or equal to and since is the largest value satisfying Ineq. (56). As a consequence of Lemma G.1 and continuity of (56) in , one has . We assume for simplicity that

for some positive numeric constants , that do not depend on , due to the fact that and .

The following lemma decomposes the risk error into several parts that will be further analyzed in subsequent Lemmas D.7, D.8.

Lemma D.4.

Proof of Lemma D.4.

Let us define the noise vector and, for each , two vectors that correspond to the bias and variance parts:

| (59) |

It gives the following expressions for the stochastic part of the variance and bias:

| (60) |

General expression for the -norm error at takes the form

| (61) |

Therefore, applying the inequality for any , and (60), we obtain

| (62) |

∎

D.1 Two deviation inequalities for

This is the first deviation inequality for that will be used in Lemma D.7 to control the variance term.

Lemma D.5.

Proof of Lemma D.5.

Set , then due to the monotonicity of the smoothed empirical risk, for all ,

Consider

| (63) |

Define

where .

Further, set , and recall that for . This implies

Then for the event from Corollary A.5, by standard concentration results on linear and quadratic sums of Gaussian random variables (see, e.g., [25, Lemma 1]),

| (64) | ||||

| (65) |

where .

In what follows, we simplify the bounds above.

Firstly, recall that , which implies , and , and

Secondly, we will upper bound the Euclidean norm of . Recall Corollary A.5 with and , the definition of the smoothed statistical dimension , and Ineq. (35): , which implies

Finally, using the upper bound for all and the fact that for the event from Corollary A.5, one gets

for some positive numeric that depends only on .

∎

What follows is the second deviation inequality for that will be further used in Lemma D.8 to control the bias term.

Lemma D.6.

Proof of Lemma D.6.

Set . Note that by construction.

Further, for all , due to the monotonicity of ,

Consider . At , we have , thus

Then for the event from Corollary A.5, by standard concentration results on linear and quadratic sums of Gaussian random variables (see, e.g., [25, Lemma 1]),

| (67) | ||||

where .

In what follows, we simplify the bounds above.

D.2 Bounding the stochastic part of the variance term at

Lemma D.7.

D.3 Bounding the bias term at

Lemma D.8.

Appendix E Proof of Theorem 4.2

From Lemmas D.4, D.7, and D.8, we get

| (72) |

with probability at least , where depends only on . Moreover, by taking the expectation in Ineq. (62), it yields

Let us upper bound and . First, define , thus

| (73) | ||||

Defining from Lemma D.8 and using the upper bound for any gives the following.

| (74) |

As for ,

| (75) | ||||

and due to Lemma D.7 and Cauchy-Schwarz inequality,

| (76) |

Notice that , and

| (77) |

At the same time, thanks to Lemma D.7,

Thus, by inserting the last two inequalities into (76), it gives

Finally, summing up all the terms together,

where a constant depends only on , constant is numeric.

Appendix F Proof of Theorem 3.3

We will use the definition of (20) with the threshold so that, due to the monotonicity of the ”reduced” empirical risk ,

where

| (78) |

Assume that . Remark that

| (79) |

By applying [25, Lemma 1] to , it yields

| (80) |

where . In addition, [38, Proposition 2.5] gives us

| (81) |

Define a stopping time as follows.

| (82) |

Note that serves as an upper bound on and as a lower bound on . Moreover, satisfies the critical inequality (54). Therefore, due to Lemma G.1 and continuity of (54) in , there is a positive numeric constant , that do not depend on , such that .

Since applying [29, Section 4.3], , one can bound as follows.

| (83) |

Remark that in (80) , and

As for a lower bound on ,

| (84) |

From [29, Lemma 9], with probability at least . Thus, Ineq. (84) allows to say:

It implies that with the same probability. Thus, according to [38, Theorem 14.1], for some positive numeric constants

with probability (w.r.t. ) at least and with probability (w.r.t. ) at least .

Moreover, the same arguments (with and without Assumption 4) as in the proof of Theorem 4.2, [38, Proposition 14.25] and [29, Section 4.3.1] yield

| (85) |

with probability at least . Then by the Cauchy-Schwarz inequality,

Since , where the empirical covariance operator

and , one has

and due to the definition of ,

We know that

and

Further,

Thus, subtracting the empirical term from the RKHS term, one gets

where .

Firstly, , and

Secondly,

Thirdly, since for any and , and , one gets

Fourthly,

Combining everything together and using for each ,

The last equation implies that

As a consequence of the last inequality,

Appendix G Auxiliary results

Lemma G.1.

Lemma G.2.

Thus, provides a smallest positive solution to the non-smooth version of the critical inequality.

Appendix H Proof of Lemma 5.1

Let us prove the lemma only for kernel ridge regression. W.l.o.g. assume that and notice that

| (90) | ||||

From Lemma B.1, . As for the denominator,

With the parameterization and , since , is a non-decreasing function in ,

From [41, Section 2.3], , which implies

[Acknowledgments] The authors would like to thank the anonymous referees, an Associate Editor and the Editor for their constructive comments that improved the quality of this paper.

References

- [1] {bincollection}[author] \bauthor\bsnmAkaike, \bfnmHirotogu\binitsH. (\byear1998). \btitleInformation theory and an extension of the maximum likelihood principle. In \bbooktitleSelected papers of hirotugu akaike \bpages199–213. \bpublisherSpringer. \endbibitem

- [2] {barticle}[author] \bauthor\bsnmAngles, \bfnmTomas\binitsT., \bauthor\bsnmCamoriano, \bfnmRaffaello\binitsR., \bauthor\bsnmRudi, \bfnmAlessandro\binitsA. and \bauthor\bsnmRosasco, \bfnmLorenzo\binitsL. (\byear2015). \btitleNYTRO: When Subsampling Meets Early Stopping. \bjournalarXiv e-prints \bpagesarXiv:1510.05684. \endbibitem

- [3] {barticle}[author] \bauthor\bsnmArlot, \bfnmSylvain\binitsS., \bauthor\bsnmCelisse, \bfnmAlain\binitsA. \betalet al. (\byear2010). \btitleA survey of cross-validation procedures for model selection. \bjournalStatistics surveys \bvolume4 \bpages40–79. \endbibitem

- [4] {barticle}[author] \bauthor\bsnmAronszajn, \bfnmNachman\binitsN. (\byear1950). \btitleTheory of reproducing kernels. \bjournalTransactions of the American mathematical society \bvolume68 \bpages337–404. \endbibitem

- [5] {barticle}[author] \bauthor\bsnmBartlett, \bfnmPeter L\binitsP. L., \bauthor\bsnmBousquet, \bfnmOlivier\binitsO., \bauthor\bsnmMendelson, \bfnmShahar\binitsS. \betalet al. (\byear2005). \btitleLocal rademacher complexities. \bjournalThe Annals of Statistics \bvolume33 \bpages1497–1537. \endbibitem

- [6] {barticle}[author] \bauthor\bsnmBartlett, \bfnmPeter L\binitsP. L. and \bauthor\bsnmTraskin, \bfnmMikhail\binitsM. (\byear2007). \btitleAdaboost is consistent. \bjournalJournal of Machine Learning Research \bvolume8 \bpages2347–2368. \endbibitem

- [7] {barticle}[author] \bauthor\bsnmBauer, \bfnmFrank\binitsF., \bauthor\bsnmPereverzev, \bfnmSergei\binitsS. and \bauthor\bsnmRosasco, \bfnmLorenzo\binitsL. (\byear2007). \btitleOn regularization algorithms in learning theory. \bjournalJournal of complexity \bvolume23 \bpages52–72. \endbibitem

- [8] {bbook}[author] \bauthor\bsnmBerlinet, \bfnmAlain\binitsA. and \bauthor\bsnmThomas-Agnan, \bfnmChristine\binitsC. (\byear2011). \btitleReproducing kernel Hilbert spaces in probability and statistics. \bpublisherSpringer Science & Business Media. \endbibitem

- [9] {barticle}[author] \bauthor\bsnmBlanchard, \bfnmGilles\binitsG., \bauthor\bsnmHoffmann, \bfnmMarc\binitsM. and \bauthor\bsnmReiß, \bfnmMarkus\binitsM. (\byear2018). \btitleOptimal adaptation for early stopping in statistical inverse problems. \bjournalSIAM/ASA Journal on Uncertainty Quantification \bvolume6 \bpages1043–1075. \endbibitem

- [10] {barticle}[author] \bauthor\bsnmBlanchard, \bfnmGilles\binitsG., \bauthor\bsnmHoffmann, \bfnmMarc\binitsM., \bauthor\bsnmReiß, \bfnmMarkus\binitsM. \betalet al. (\byear2018). \btitleEarly stopping for statistical inverse problems via truncated SVD estimation. \bjournalElectronic Journal of Statistics \bvolume12 \bpages3204–3231. \endbibitem

- [11] {barticle}[author] \bauthor\bsnmBlanchard, \bfnmGilles\binitsG. and \bauthor\bsnmKrämer, \bfnmNicole\binitsN. (\byear2016). \btitleConvergence rates of kernel conjugate gradient for random design regression. \bjournalAnalysis and Applications \bvolume14 \bpages763–794. \endbibitem

- [12] {barticle}[author] \bauthor\bsnmBlanchard, \bfnmGilles\binitsG. and \bauthor\bsnmMathé, \bfnmPeter\binitsP. (\byear2010). \btitleConjugate gradient regularization under general smoothness and noise assumptions. \bjournalJournal of Inverse and Ill-posed Problems \bvolume18 \bpages701–726. \endbibitem

- [13] {barticle}[author] \bauthor\bsnmBlanchard, \bfnmGilles\binitsG. and \bauthor\bsnmMathé, \bfnmPeter\binitsP. (\byear2012). \btitleDiscrepancy principle for statistical inverse problems with application to conjugate gradient iteration. \bjournalInverse problems \bvolume28 \bpages115011. \endbibitem

- [14] {barticle}[author] \bauthor\bsnmBühlmann, \bfnmPeter\binitsP. and \bauthor\bsnmYu, \bfnmBin\binitsB. (\byear2003). \btitleBoosting with the L 2 loss: regression and classification. \bjournalJournal of the American Statistical Association \bvolume98 \bpages324–339. \endbibitem

- [15] {barticle}[author] \bauthor\bsnmCaponnetto, \bfnmAndrea\binitsA. (\byear2006). \btitleOptimal Rates for Regularization Operators in Learning Theory. \endbibitem

- [16] {barticle}[author] \bauthor\bsnmCaponnetto, \bfnmAndrea\binitsA. and \bauthor\bsnmYao, \bfnmYuan\binitsY. (\byear2010). \btitleCross-validation based adaptation for regularization operators in learning theory. \bjournalAnalysis and Applications \bvolume8 \bpages161–183. \endbibitem

- [17] {barticle}[author] \bauthor\bsnmCavalier, \bfnmLaurent\binitsL., \bauthor\bsnmGolubev, \bfnmGK\binitsG., \bauthor\bsnmPicard, \bfnmDominique\binitsD., \bauthor\bsnmTsybakov, \bfnmAB\binitsA. \betalet al. (\byear2002). \btitleOracle inequalities for inverse problems. \bjournalThe Annals of Statistics \bvolume30 \bpages843–874. \endbibitem

- [18] {barticle}[author] \bauthor\bsnmCelisse, \bfnmAlain\binitsA. and \bauthor\bsnmWahl, \bfnmMartin\binitsM. (\byear2021). \btitleAnalyzing the discrepancy principle for kernelized spectral filter learning algorithms. \bjournalJournal of Machine Learning Research \bvolume22 \bpages1–59. \endbibitem

- [19] {barticle}[author] \bauthor\bsnmCucker, \bfnmFelipe\binitsF. and \bauthor\bsnmSmale, \bfnmSteve\binitsS. (\byear2002). \btitleOn the mathematical foundations of learning. \bjournalBulletin of the American mathematical society \bvolume39 \bpages1–49. \endbibitem

- [20] {bbook}[author] \bauthor\bsnmEngl, \bfnmHeinz Werner\binitsH. W., \bauthor\bsnmHanke, \bfnmMartin\binitsM. and \bauthor\bsnmNeubauer, \bfnmAndreas\binitsA. (\byear1996). \btitleRegularization of inverse problems \bvolume375. \bpublisherSpringer Science & Business Media. \endbibitem

- [21] {barticle}[author] \bauthor\bsnmGerfo, \bfnmL Lo\binitsL. L., \bauthor\bsnmRosasco, \bfnmLorenzo\binitsL., \bauthor\bsnmOdone, \bfnmFrancesca\binitsF., \bauthor\bsnmVito, \bfnmE De\binitsE. D. and \bauthor\bsnmVerri, \bfnmAlessandro\binitsA. (\byear2008). \btitleSpectral algorithms for supervised learning. \bjournalNeural Computation \bvolume20 \bpages1873–1897. \endbibitem

- [22] {bbook}[author] \bauthor\bsnmGu, \bfnmChong\binitsC. (\byear2013). \btitleSmoothing spline ANOVA models \bvolume297. \bpublisherSpringer Science & Business Media. \endbibitem

- [23] {bbook}[author] \bauthor\bsnmHansen, \bfnmPer Christian\binitsP. C. (\byear2010). \btitleDiscrete inverse problems: insight and algorithms \bvolume7. \bpublisherSiam. \endbibitem

- [24] {barticle}[author] \bauthor\bsnmKoltchinskii, \bfnmVladimir\binitsV. \betalet al. (\byear2006). \btitleLocal Rademacher complexities and oracle inequalities in risk minimization. \bjournalThe Annals of Statistics \bvolume34 \bpages2593–2656. \endbibitem

- [25] {barticle}[author] \bauthor\bsnmLaurent, \bfnmBeatrice\binitsB. and \bauthor\bsnmMassart, \bfnmPascal\binitsP. (\byear2000). \btitleAdaptive estimation of a quadratic functional by model selection. \bjournalAnnals of statistics \bpages1302–1338. \endbibitem

- [26] {barticle}[author] \bauthor\bsnmMathé, \bfnmPeter\binitsP. and \bauthor\bsnmPereverzev, \bfnmSergei V\binitsS. V. (\byear2003). \btitleGeometry of linear ill-posed problems in variable Hilbert scales. \bjournalInverse problems \bvolume19 \bpages789. \endbibitem

- [27] {binproceedings}[author] \bauthor\bsnmMendelson, \bfnmShahar\binitsS. (\byear2002). \btitleGeometric parameters of kernel machines. In \bbooktitleInternational Conference on Computational Learning Theory \bpages29–43. \bpublisherSpringer. \endbibitem

- [28] {barticle}[author] \bauthor\bsnmRaskutti, \bfnmGarvesh\binitsG., \bauthor\bsnmWainwright, \bfnmMartin J\binitsM. J. and \bauthor\bsnmYu, \bfnmBin\binitsB. (\byear2012). \btitleMinimax-optimal rates for sparse additive models over kernel classes via convex programming. \bjournalJournal of Machine Learning Research \bvolume13 \bpages389–427. \endbibitem

- [29] {barticle}[author] \bauthor\bsnmRaskutti, \bfnmGarvesh\binitsG., \bauthor\bsnmWainwright, \bfnmMartin J\binitsM. J. and \bauthor\bsnmYu, \bfnmBin\binitsB. (\byear2014). \btitleEarly stopping and non-parametric regression: an optimal data-dependent stopping rule. \bjournalJournal of Machine Learning Research \bvolume15 \bpages335–366. \endbibitem

- [30] {binproceedings}[author] \bauthor\bsnmRudi, \bfnmAlessandro\binitsA., \bauthor\bsnmCamoriano, \bfnmRaffaello\binitsR. and \bauthor\bsnmRosasco, \bfnmLorenzo\binitsL. (\byear2015). \btitleLess is more: Nyström computational regularization. In \bbooktitleAdvances in Neural Information Processing Systems \bpages1657–1665. \endbibitem

- [31] {bbook}[author] \bauthor\bsnmScholkopf, \bfnmBernhard\binitsB. and \bauthor\bsnmSmola, \bfnmAlexander J\binitsA. J. (\byear2001). \btitleLearning with kernels: support vector machines, regularization, optimization, and beyond. \bpublisherMIT press. \endbibitem

- [32] {barticle}[author] \bauthor\bsnmSchwarz, \bfnmGideon\binitsG. \betalet al. (\byear1978). \btitleEstimating the dimension of a model. \bjournalThe annals of statistics \bvolume6 \bpages461–464. \endbibitem

- [33] {bmisc}[author] \bauthor\bsnmStankewitz, \bfnmBernhard\binitsB. (\byear2019). \btitleSmoothed residual stopping for statistical inverse problems via truncated SVD estimation. \endbibitem

- [34] {barticle}[author] \bauthor\bsnmStone, \bfnmCharles J\binitsC. J. \betalet al. (\byear1985). \btitleAdditive regression and other nonparametric models. \bjournalThe annals of Statistics \bvolume13 \bpages689–705. \endbibitem

- [35] {barticle}[author] \bauthor\bsnmWahba, \bfnmGrace\binitsG. (\byear1977). \btitlePractical approximate solutions to linear operator equations when the data are noisy. \bjournalSIAM Journal on Numerical Analysis \bvolume14 \bpages651–667. \endbibitem

- [36] {bincollection}[author] \bauthor\bsnmWahba, \bfnmGrace\binitsG. (\byear1987). \btitleThree topics in ill-posed problems. In \bbooktitleInverse and ill-posed problems \bpages37–51. \bpublisherElsevier. \endbibitem

- [37] {bbook}[author] \bauthor\bsnmWahba, \bfnmGrace\binitsG. (\byear1990). \btitleSpline models for observational data \bvolume59. \bpublisherSiam. \endbibitem

- [38] {bbook}[author] \bauthor\bsnmWainwright, \bfnmMartin J\binitsM. J. (\byear2019). \btitleHigh-dimensional statistics: A non-asymptotic viewpoint \bvolume48. \bpublisherCambridge University Press. \endbibitem

- [39] {bbook}[author] \bauthor\bsnmWasserman, \bfnmLarry\binitsL. (\byear2006). \btitleAll of nonparametric statistics. \bpublisherSpringer Science & Business Media. \endbibitem

- [40] {binproceedings}[author] \bauthor\bsnmWei, \bfnmYuting\binitsY., \bauthor\bsnmYang, \bfnmFanny\binitsF. and \bauthor\bsnmWainwright, \bfnmMartin J\binitsM. J. (\byear2017). \btitleEarly stopping for kernel boosting algorithms: A general analysis with localized complexities. In \bbooktitleAdvances in Neural Information Processing Systems \bpages6067–6077. \endbibitem

- [41] {barticle}[author] \bauthor\bsnmYang, \bfnmYun\binitsY., \bauthor\bsnmPilanci, \bfnmMert\binitsM. and \bauthor\bsnmWainwright, \bfnmMartin J\binitsM. J. (\byear2017). \btitleRandomized sketches for kernels: Fast and optimal nonparametric regression. \bjournalThe Annals of Statistics \bvolume45 \bpages991–1023. \endbibitem

- [42] {barticle}[author] \bauthor\bsnmYao, \bfnmYuan\binitsY., \bauthor\bsnmRosasco, \bfnmLorenzo\binitsL. and \bauthor\bsnmCaponnetto, \bfnmAndrea\binitsA. (\byear2007). \btitleOn Early Stopping in Gradient Descent Learning. \bjournalConstructive Approximation \bvolume26 \bpages289–315. \bdoi10.1007/s00365-006-0663-2 \endbibitem

- [43] {barticle}[author] \bauthor\bsnmZhang, \bfnmTong\binitsT., \bauthor\bsnmYu, \bfnmBin\binitsB. \betalet al. (\byear2005). \btitleBoosting with early stopping: Convergence and consistency. \bjournalThe Annals of Statistics \bvolume33 \bpages1538–1579. \endbibitem