EARL: An Elliptical Distribution aided Adaptive Rotation Label Assignment for Oriented Object Detection in Remote Sensing Images

Abstract

Label assignment is a crucial process in object detection, which significantly influences the detection performance by determining positive or negative samples during training process. However, existing label assignment strategies barely consider the characteristics of targets in remote sensing images (RSIs) thoroughly, e.g., large variations in scales and aspect ratios, leading to insufficient and imbalanced sampling and introducing more low-quality samples, thereby limiting detection performance. To solve the above problems, an Elliptical Distribution aided Adaptive Rotation Label Assignment (EARL) is proposed to select high-quality positive samples adaptively in anchor-free detectors. Specifically, an adaptive scale sampling (ADS) strategy is presented to select samples adaptively among multi-level feature maps according to the scales of targets, which achieves sufficient sampling with more balanced scale-level sample distribution. In addition, a dynamic elliptical distribution aided sampling (DED) strategy is proposed to make the sample distribution more flexible to fit the shapes and orientations of targets, and filter out low-quality samples. Furthermore, a spatial distance weighting (SDW) module is introduced to integrate the adaptive distance weighting into loss function, which makes the detector more focused on the high-quality samples. Extensive experiments on several popular datasets demonstrate the effectiveness and superiority of our proposed EARL, where without bells and whistles, it can be easily applied to different detectors and achieve state-of-the-art performance. The source code will be available at: https://github.com/Justlovesmile/EARL.

Index Terms:

Label assignment, remote sensing images, adaptive scale sampling, elliptical distribution, anchor-free detector.I Introduction

Object detection in remote sensing images (RSIs), which aims to determine the locations and categories of the interested targets, has become one of the most common and potential image interpretation steps for numerous applications[1, 2, 3, 4], such as maritime rescuing[5], urban planning[6] and traffic management[7].

In the past few years, substantial methods[8, 9, 10, 11, 12] have made significant progress for generic object detection that are designed for nature images, which have greatly promoted the development of object detection in RSIs[13, 14, 15, 16] and attract wide attention from researchers. However, object detection in RSIs is still facing challenges from the characteristics of targets, such as arbitrary orientations and large variations in scales and aspect ratios. To tackle these challenges, current detectors for RSIs [17, 18, 19, 20, 21] often enhance orientation prediction by incorporating additional branches and alleviate scale variations by adopting multi-level feature maps[22, 23, 24, 25]. However, it usually faces the crucial issue of selecting training (i.e., positive) samples at reasonable pixel-wise locations (i.e., spatial assignment) on appropriate levels of feature maps (i.e., scale assignment), namely label assignment [26].

As a fundamental and crucial process in object detection, label assignment significantly influences the detection performance[27]. However, existing detectors for RSIs often adopt label assignment strategies migrated from generic detectors, such as maximum intersection over union (IoU) based strategies [18, 21, 28] and center-based strategies [15, 29, 30], which are often unsuitable for object detection in RSIs due to the large variations in scales and aspect ratios of targets, resulting in insufficient and imbalanced sampling and introducing more low-quality samples (e.g., samples located on the background or near the boundaries), thereby limiting detection performance.

Concretely, existing methods (e.g., AOPG [15] and GGHL [31]) often employ the fixed scale assignment strategy, which heuristically assigns samples on a specific level of feature maps to the target decided by the predefined fixed threshold. However, this heuristic approach often results in insufficient and imbalance sampling, especially in RSIs. As illustrated in Fig. 1, few samples on the specific level of feature map are assigned to the targets with extreme scales and large aspect ratios (i.e., tiny car, elongated bus and large airplane), whereas for samples on other levels of feature maps, despite being located on the target itself, they are not assigned to the target (i.e., treated as negative samples), due to the fixed threshold. In addition, this approach often aggravates the scale-level sampling imbalance, when the target scales are unbalanced, especially in RSIs, where small targets are far more numerous than large targets. Consequently, the selected positive samples are often clustered in the low-level of feature maps, thus overwhelming the sufficient attention to the targets with other scales and introducing the scale-level bias. In contrast, other methods (e.g., FSDet[19]) assign all samples across all levels of feature maps within the bounding box to the target, which often introduce large amounts of low-quality samples (e.g., background noise) and ambiguous samples (i.e., samples that are assigned to multiple targets simultaneously[11]), especially for targets with overlapping relationships, such as harbours and ships, that would disturb the training process. Therefore, a more flexible method is required for sample selection on multi-level feature maps.

In addition, existing detectors usually use rectangle bounding box[30, 19] as the sampling range in spatial assignment, and tend to introduce more low-quality samples from the background of rectangle bounding box, as illustrated in Fig. 2 (a). In contrast, center-based strategies [27, 29, 15] can improve the quality of selected samples by only sampling central area of targets. However, it still rarely takes into account the large variations in aspect ratios of targets in RSIs, which leads to many foreground samples being misclassified as negatives and results in insufficient sampling, as shown in Fig. 2 (b). Hence, a more reliable spatial assignment strategy is necessary for detectors to capture positive samples with higher quality.

To address the above problems, this work proposed an elliptical distribution aided adaptive rotation label assignment (EARL) strategy to select high-quality positive samples adaptively in orientation anchor-free detectors and yield better performance. Specifically, an adaptive scale assignment (ADS) strategy is proposed to assign top- samples from high to low level of feature maps adaptively according to the scales of targets, i.e., assigning more high-level samples and fewer low-level samples to the large targets, and conducting the opposite for the small targets. Therefore, it can achieve adaptive and sufficient sampling for extreme targets with more balanced scale-level sample distribution. In addition, a dynamic elliptical distribution aided sampling (DED) strategy is presented to select samples following an elliptical distribution, which can adjust sampling range dynamically according to the aspect ratios of targets, i.e., the sampling range tends to be a contracted circular distribution when the shape of target is close to a square, and an inner tangent ellipse when the shape is elongated, which can achieve more flexible sample distribution to fit the shapes and orientations of targets and filter out low-quality samples. Moreover, a spatial distance weighting (SDW) module is introduced to assign different weights to samples at different pixel-wise locations, e.g., assigning larger weights to the samples close to the center of target, and smaller weights to the samples near the boundaries, which allows the detector to focus on the high-quality samples, thereby further enhancing the detection performance. Our proposed EARL can be easily deployed to different detectors to achieve better performance, where a simple anchor-free detector, R-FCOS [11], and an advanced detector, RTMDet-R [32], with the minimal modification are employed to verify the effectiveness of our method. Extensive experiments on several popular RSIs datasets demonstrate the effectiveness and superiority of our proposed EARL.

In summary, the contributions of this work are summarized as follows:

-

1.

An ADS strategy is proposed to select training samples adaptively among multi-level feature maps, which achieves sufficient sampling with more balanced scale-level sample distribution.

-

2.

A DED strategy is proposed to make the sample distribution more flexible to fit the shapes and orientations of the targets while filtering out low-quality samples.

-

3.

An SDW module is introduced to enhance the high-quality sample selection by integrating the adaptive distance weighing into loss function, and which can further improve the detection performance.

-

4.

Extensive experiments on three challenging datasets were conducted. The results verify the effectiveness of each component of the proposed EARL, and demonstrate that our strategy can be applied to different baseline detectors and improve the detection performance.

II Related Work

II-A Oriented Object Detection in RSIs

Due to its wide range of application scenarios, oriented object detection in RSIs has developed rapidly. Similar to generic detectors, recent methods can be divided into two categories, i.e., anchor-based detectors and anchor-free detectors. To detect targets with arbitrary orientations, some anchor-based detectors[33, 34, 35], which are built on the two-stage framework, i.e., Faster R-CNN[8], densely preset multiple anchors with different scales, aspect ratios and angles for better regression while introducing heavy anchor-related computations. After that, RoI-Transformer[36] proposed an efficient region of interests (RoI) learner to transform horizontal proposals to rotated ones. Gliding Vertex[37] described oriented targets by horizontal bounding boxes (HBB), which further avoided numerous anchors with multiple angles. Then, DODet[38] was proposed to deal with the spatial misalignment between horizontal proposals and oriented targets, as well as the feature misalignment between classification and localization. Whereas R3Det[21] and S2A-Net[20] followed the one-stage schema to balance accuracy and efficiency, and alleviated the feature misalignment issue. However, these methods still take a long time during training and inference[19].

To maintain high efficiency, anchor-free detectors are designed to avoid the usage of anchors. For instance, TOSO[30] directly regressed the surrounding HBB and transformation parameters to present rotated targets. IENet[17] was built upon the classic one-stage anchor-free framework, i.e., FCOS, adding an additional branch with the interactive embranchment module to regress angles. Later, BBAVector[39], O2-DNet[40] and ACE[14] employed a simplified architecture and designed new oriented bounding box (OBB) representation methods. However, these anchor-free detectors still not perform the state-of-the-art results compared with anchor-based detectors, and most of them are more likely to improve the architecture while not considering the training strategy, especially the label assignment strategy, which has been proven to be the essential difference between these two kinds of detectors[27].

II-B Label Assignment Strategies

Label assignment is a crucial component for convolutional neural network (CNN) based detectors to learn feature distribution of targets by determining positive or negative samples. Meanwhile, as the current detectors commonly adopt multi-scale feature maps to alleviate the scale variations, label assignment needs to simultaneously assign labels to samples at different spatial locations (spatial assignment) on different levels of feature maps (scale assignment)[26]. Most anchor-based detectors[8, 10, 18] assign the labels to anchors among multi-level feature maps by comparing the preset thresholds and IoU between anchors and ground truth bounding boxes. However, this strategy often involves many hyperparameters to adjust the scales and aspect ratios of anchors based on the datasets[41]. Whereas anchor-free methods[11, 42, 43] often select samples inside the ground truth bounding box or the central area of targets for spatial assignment, and choose samples on a specific level of feature maps according to the predefined target size threshold for scale assignment. However, above strategies, which depend on the heuristic rules, may not be optimal enough.

Thus, recent works provide dynamic label assignment strategies, which allow models to learn to select samples by itself. For instance, FSAF[23] dynamically assigned targets to the suitable feature levels based on computed loss. ATSS[27] proposed an adaptive training sample selection method by adjusting the IoU threshold according to the statistics of targets, which also proposed the center sampling strategy to improve the quality of positive samples. Autoassign[26] used two weighting modules to adjust the category-specific prior distribution according to the appearances of targets. As for oriented object detection, SASM[44] was motivated by the idea of ATSS and designed a dynamic IoU threshold that incorporates shape information. RTMDet[32] proposed a dynamic soft label assignment based on SimOTA[45]. GGHL[31] used a Gaussian heatmaps to define positive samples according to the shape and direction properties of targets. AOPG[15] applied a region assignment scheme to select positive samples within the shrinked OBB area. Oriented RepPoints[46] used an adaptive quality assessment and sample assignment scheme. FSDet[19] assigned a soft weight to each sample among all levels of feature maps. However, the label assignments used by these methods are rarely consider the specific characteristics of targets in RSIs, i.e., large variations in scales and aspect ratios, resulting in insufficient and imbalanced sampling and often introducing large amounts of low-quality samples. Therefore, this study focuses on designing an adaptive label assignment strategy for orientation anchor-free detectors by taking into account the important characteristics in RSIs to address aforementioned problems.

III The Proposed EARL Method

In this section, the architecture of our simple baseline anchor-free detector is firstly presented. Then, the three components of the proposed EARL, i.e., ADS strategy, DED strategy and SDW module, are introduced in detail.

III-A Architecture of the Simple Baseline Anchor-free Detector

To demonstrate the effectiveness of the proposed label assignment strategy, in this paper, a simple but classical anchor-free architecture, namely R-FCOS, is employed as our baseline detector. Compared with FCOS[11], R-FCOS removes centerness branch to be more compact and achieves more simplicity and efficiency, which only contains classification branch and regression branch for oriented object detection.

As shown in Fig. 3, the upper gray block shows the baseline architecture, which consists of the backbone network, feature pyramid network (FPN) and prediction heads. Let and be the feature maps from backbone network and FPN, respectively, where is the layer index, represents the size of feature maps and denotes the number of feature channels. In this work, following FCOS, five levels of multi-scale feature maps are used, where and are generated from and , respectively. Whereas and are generated via up-sampling and , respectively.

After obtaining the feature maps from FPN, the prediction heads with two fully convolutional subnets are employed to predict the categories and regressions for each location on feature maps. Specifically, given a set of ground truths in the input image, where denotes the total number of ground truths and is the index, each in is represented by , as shown in Fig. 4, where is the coordinate of center point of , and represent the width and height of , and represents the counterclockwise angle between the y-axis and long side, and is the class label that the ground truth belongs to.

To generate prediction, we adopt to denote the set of anchor points, which are the pixel-wise locations on . For each anchor point , it can be mapped back to the input image via:

| (1) |

where and represent the locations of on and the input image, respectively. denotes the stride on and denotes the round-down operator. Here, we directly regress the target bounding box at each . Note that, the label assignment strategies are often employed to decide whether each is a positive or negative sample, and allow the network to select higher-quality samples for training.

Then, if is a positive sample and assigned to the ground truth, e.g., , its class label equals to and it has a 5-dimensional vector being the regression targets for the location, where and are the coordinate offsets between the center point of and , as shown in Fig. 4, which can be formulated as:

| (2) |

, and equal to the width, height and angle of , respectively.

During inference, the prediction heads will directly generate classification and regression for each anchor point and output the classification scores and locations after post-processing, hence our proposed strategy is inference cost-free.

The above represents the pipeline of the simple baseline detector, as illustrated in Fig. 3. This simple baseline detector is only used for illustration to show how our proposed EARL strategy works on the baseline detector for sample selection by simply replacing its original label assignment strategy.

Note that, the proposed EARL strategy can be also easily deployed on other baseline detector in the same way. Specifically, we also equipped it with an advanced anchor-free detector, i.e., RTMDet-R [32], for performance evaluation to demonstrate the effectiveness of our study in the experiments.

In the next subsections, the components of our proposed EARL, which are given in the lower green block of Fig. 3, will be introduced in detail.

III-B Adaptive Scale Sampling Strategy

Different from the existing scale assignment strategies, the simple but novel ADS strategy is proposed to select positive samples adaptively according to the scales of targets. Its implementation can be summarized as sampling sequentially from high to low-level feature map, e.g., from to , as illustrated in Fig. 3.

Specifically, for each in the input image, a determined function is used to indicate whether each is inside a certain region of when mapped back to the input image, which can be simply understood as selecting samples inside the mask region on each , as shown in Fig. 3. Then, if is judged to be inside the mask region by , it will be selected into the candidate samples set , where will be discussed in more detail in Section III-C.

When the candidate sample selection is applied from to , we only keep the samples that are closest to the center point of as positive samples based on L2 distance, which is denoted as top- in this paper. Here is a hyperparameter that ensures a balanced number of positive samples for each target as possible, which is suggested in [10] that unbalanced positive samples will decrease the performance, and L2 distance indicates the Euclidean Distance. In details, let denote selection set on and denote the number of selected samples on , which is formulated as:

| (3) |

where denotes the number of candidate samples on . Finally, we subtract from and add the selected candidate samples to the positive samples set , after which we repeat this operation if is greater than 0. Algorithm 1 specifies the proposed method for positive sample selection.

As a result, more high-level samples and fewer low-level samples are assigned to the large targets, and the opposite is conducted for the small targets, according to the scales of targets, while the number of ambiguous samples can be reduced adaptively. In addition, if an anchor point is still assigned to multiple ground truths, it is forced to be assigned to the one with the longest side. Besides, as shown in Fig. 5, if a ground truth escapes from any samples, we assign it with the closest unassigned anchor point, which ensures the information from each target can be used reasonably and partially alleviates the insufficient sampling problem.

In this way, the sequentially adaptive sampling operation of ADS can obtain a better distribution of positive samples when compared with the fixed scale assignment (FSA) strategy and the top- samples for each target (TKT) strategy (i.e., ADS without the sequential selection). As shown in Fig. 6, FSA strategy constrains the level distribution of positive samples. Whereas TKT strategy selects samples clustered at the bottom levels of feature maps, resulting in the imbalanced scale-level sample distribution. Therefore, above strategies will lead to the scale-level bias, especially when the target scale distribution is unbalanced, which is a common phenomenon in RSIs. In contrast, our proposed ADS can alleviate the problems mentioned above by sequentially sampling from high to low level of feature maps adaptively according to the scale variation of targets in RSIs.

III-C Dynamic Elliptical Distribution aided Sampling Strategy

In the Section III-B, the Algorithm 1 has a determined function that takes the ground truth and the anchor point as input, and returns the true or false value depending on whether is inside the certain region of or not. Here, the determined function achieves spatial assignment by constraining the sampling range. Concretely, existing methods [19, 30] often adopt the central area or the rectangle bounding box as the sampling range for spatial assignment, as shown in Fig. 7 (b) and (c), respectively. However, these methods rarely consider the characteristics of targets in RSIs, which either introduce more background noise or result in insufficient sampling.

To this end, the proposed DED strategy selects samples following an elliptical distribution as illustrated in Fig. 7 (a), which can adjust sampling range dynamically according to the aspect ratios of targets, i.e., the sampling range tends to be a contracted circular distribution when the shape of target is close to a square, and an inner tangent ellipse when the shape is elongated. Such that it can achieve more flexible sample distribution to fit the shapes and orientations of targets.

Suppose that given a ground truth and an anchor point , which is mapped back to the input image, the function can be formulated as:

| (4) |

where and are calculated by the angle of and the offsets of coordinates between and , which are formulated respectively as:

| (5) |

the ratio factor is an adaptive threshold determined by the target shape that controls the range of elliptical distribution, and to select high-quality samples with less background noise. Here, is calculated as follows:

| (6) |

In this way, by considering the shapes and orientations of the targets, our proposed DED strategy can avoid introducing too much background noise, which is more suitable for object detection in RSIs.

III-D Spatial Distance Weighting Module

To further mitigate the effect of low-quality predictions generated from the anchor points far away from the center of ground truth, instead of employing extra prediction branch such as centerness branch[11] or IoU prediction branch[19], SDW module is proposed to enhance the high-quality sample selection by assigning different weights to the samples at different pixel-wise locations.

For each positive sample , SDW module calculates weight according to the scale of that it belongs to, and the L2 distance between and center point of , which is represented as . For classification, is used to weight the probability for the corresponding category. For box regression, we first establish the regression loss and then multiply by . Here, is formulated as:

| (7) |

where , and is the set of all the distance of positive samples for . The weight is normalized as in Eq. (7) to make sure that the highest weight is given to the closest positive sample, and achieves adaptive distance weighting, i.e., assigning larger weights to the samples close to the center of the target, and smaller weights to the samples near the boundaries.

Finally, is applied to weight the classification probability and box regression loss. The total loss is constructed with two types of loss, i.e., the classification loss and box regression loss , denoted as

| (8) |

where is the penalty parameter to balance these two types of loss, denotes the number of positive samples, which is used for normalization. is the indicator function, which equals to 1 if the condition is satisfied, otherwise 0. The classification loss is formulated as:

| (9) |

where is the ground truth for classification and is the classification score from the network, and are hyperparameters of the focal loss[10]. The box regression loss is formulated as:

| (10) |

where is smooth L1 loss function as defined in [47].

With SDW module to enhance the high-quality sample selection, our proposed EARL strategy can further improve the detection performance.

IV Experiments and Results

In this section, experiments on publicly available and challenging RSIs datasets are conducted to verify the effectiveness of our proposed EARL. Details of the experiment setup, parameter selection, ablation study, comparison with existing label assignment strategies and state-of-the-art methods are presented in the following subsections.

IV-A Experiment Setup

IV-A1 Datasets

DOTA[48] is a large-scale dataset for object detection in RSIs with OBB annotations for oriented targets, which contains 2,806 aerial images collected from different sensors and platforms. The fully annotated images contain 188,282 instances with a wide variety of scales, orientations and shapes, involving 15 common target categories: plane (PL), baseball diamond (BD), bridge (BR), ground track field (GTF), small vehicle (SV), large vehicle (LV), ship (SH), tennis court (TC), basketball court (BC), storage tank (ST), soccer ball field (SBF), roundabout (RA), harbor (HA), swimming pool (SP) and helicopter (HC). In our experiments, DOTA is used following [17], where both training and validation sets are used for training, and the test set is employed for evaluating by submitting results to the evaluation server from the dataset provider. Due to the large size of images, following [21], all images from the datasets are cropped to pixels with a stride of pixels for memory efficiency, and resized to pixels during both training and inference.

DIOR-R[15] is a large-scale oriented object detection dataset containing 23,463 images and 192,518 instances. The dataset includes 20 common target categories: airplane (APL), airport (APO), baseball field (BF), BC, BR, chimney (CH), expressway service area (ESA), expressway toll station (ETS), dam (DAM), golf field (GF), GTF, HA, overpass (OP), SH, stadium (STA), ST, TC, train station (TS), vehicle (VE) and windmill (WM). In our experiments, we keep the image size at the original size of pixels during both training and inference.

HRSC2016[49] is a challenging high resolution ship detection dataset, which contains 1,061 aerial images from two scenarios including inshore and offshore ships. The training, validation and test sets include 436 images, 181 images and 444 images, respectively. The size of images ranges from to pixels. In our experiments, the images of HRSC2016 are resized to pixels during both training and inference.

IV-A2 Implementation Details

Unless specified, we adopt ResNet-50[50] pretrained on ImageNet[51] as the backbone network, and FPN[22] as the neck network in our experiments. Then, the convolution weight that composes the prediction head is initialized with normal distribution, where the standard deviation is set to be 0.01. Also, the convolution bias is enabled and initialized with 0. We use the group normalization[52] in the network, which performs better than the batch normalization[53]. and are set to be 0.25 and 2.0 respectively in focal loss[10], and in the smooth L1 loss is set as 0.01.

During training, we use stochastic gradient descent (SGD)[54] as the optimization approach unless specified, and the weight decay, momentum and gamma of SGD are set to be 0.0001, 0.9 and 0.1, respectively. In addition, to stabilize the training process and yield better performance, we adopt a learning rate scheduler with the combination of warm-up training and multi-step learning rate. Here, a linear warm-up method is applied, where the warm-up factor is set as 0.001 with 1000 iterations at the beginning of training, and random flipping is employed for data augmentation. All the experiments were implemented on a computer with four NVIDIA A100 GPUs, while the inference speed is measured on a single GeForce RTX 3090 GPU with 24GB memory.

For experiments on DOTA dataset, our detectors are trained for 40k iterations with 16 images per device, and the learning rate is initialized with 0.045 but decayed to 1/10 at the iterations of 26k and 35k. As for DIOR-R dataset, models are trained for 20k iterations, and the learning rate is also initialized as 0.045 and decayed to 1/10 at 13k and 17.5k iterations. For experiments on HRSC2016 dataset, we train the models for 90k iterations, and the learning rate is initialized with 0.02 and decays at 60k and 80k iterations with a total of 16 images per batch.

IV-A3 Evaluation Metrics

For evaluating the accuracy of detectors, the mean Average Precision (mAP) with IoU threshold 0.5 is adopted, which is the widely used metric in object detection tasks. mAP07 and mAP12 indicate the mAP under PASCAL VOC2007 and VOC2012 metrics[55]. The Average Precision (AP) of each category is employed to validate the detection accuracy of different categories. The frames per second (fps) is used to evaluate the inference speed.

IV-B Parameter Selection

The sample number in ADS strategy is empirically selected in our study. Due to its importance, we conducted experiments on two complex datasets, i.e., DOTA and DIOR-R, to quantitatively analyze the impact of the choice of on detection performance. In addition, we found that the number of samples affects the training duration to some extent, so for the sake of efficiency, we test from 12 to 18, and the results are given in Fig. 8.

| Methods | PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | mAP (%) \bigstrut |

| R-FCOS (Baseline) | 88.60 | 81.08 | 45.74 | 64.58 | 79.83 | 77.89 | 86.95 | 90.52 | 80.24 | 84.09 | 40.55 | 58.31 | 66.24 | 71.78 | 49.70 | 71.07 \bigstrut[t] |

| +EARL (w/o -E, -W) | 89.87 | 80.57 | 46.22 | 65.61 | 80.51 | 78.35 | 87.39 | 90.76 | 78.41 | 86.28 | 45.10 | 61.42 | 65.08 | 69.56 | 51.70 | 71.79 (+0.72) |

| +EARL (w/o -A, -W) | 89.12 | 77.67 | 46.98 | 65.06 | 80.27 | 78.03 | 87.68 | 90.54 | 82.65 | 85.23 | 47.67 | 60.58 | 67.08 | 70.98 | 44.64 | 71.61 (+0.54) |

| +EARL (w/o -W) | 90.05 | 81.16 | 47.01 | 65.17 | 80.39 | 79.85 | 87.10 | 90.37 | 83.11 | 86.17 | 44.32 | 65.41 | 66.82 | 71.10 | 50.56 | 72.57 (+1.50) |

| +EARL | 89.76 | 78.79 | 47.01 | 65.20 | 80.98 | 79.99 | 87.33 | 90.74 | 79.17 | 86.23 | 49.09 | 65.87 | 65.75 | 71.86 | 55.21 | 72.87 (+1.80) \bigstrut[b] |

| Methods | APL | APO | BF | BC | BR | CH | ESA | ETS | DAM | GF | GTF | HA | OP | SH | STA | ST | TC | TS | VH | WM | mAP (%) \bigstrut |

| R-FCOS (Baseline) | 56.19 | 37.61 | 76.82 | 85.71 | 26.82 | 74.57 | 74.45 | 59.26 | 20.24 | 77.66 | 77.74 | 39.60 | 46.08 | 81.08 | 69.56 | 63.34 | 85.53 | 53.91 | 39.81 | 65.71 | 60.58 \bigstrut[t] |

| +EARL (w/o -E, -W) | 55.76 | 39.89 | 74.50 | 83.94 | 30.48 | 76.29 | 74.22 | 63.76 | 24.05 | 78.56 | 76.90 | 35.44 | 49.20 | 82.00 | 70.26 | 61.98 | 84.08 | 57.32 | 43.88 | 67.17 | 61.48 (+0.90) |

| +EARL (w/o -A, -W) | 61.43 | 41.14 | 75.76 | 86.24 | 26.85 | 76.13 | 74.69 | 59.51 | 22.41 | 79.28 | 77.95 | 39.64 | 46.20 | 81.21 | 69.76 | 67.24 | 85.27 | 55.10 | 39.29 | 64.02 | 61.46 (+0.88) |

| +EARL (w/o -W) | 58.38 | 43.95 | 75.59 | 83.95 | 29.99 | 76.91 | 76.89 | 61.08 | 22.39 | 79.31 | 79.32 | 38.75 | 48.26 | 82.37 | 70.54 | 66.23 | 86.34 | 54.51 | 44.00 | 64.75 | 62.18 (+1.60) |

| +EARL | 61.13 | 44.83 | 77.74 | 84.66 | 30.42 | 78.50 | 76.73 | 62.79 | 24.67 | 79.58 | 79.19 | 39.64 | 48.18 | 82.64 | 76.59 | 64.90 | 86.87 | 57.98 | 43.82 | 64.90 | 63.29 (+2.71) \bigstrut[b] |

For the performance on DOTA observed from Fig. 8, when =12, although the quality of each selected sample is guaranteed, the number of positive samples is inadequate for the model to learn feature distribution, so the mAP has only reached 71.52%. The performance of the model gradually improves as increases, and the detector achieves the best mAP of 72.87% when =15. However, as continues to increase, more background noise are included since samples located near the boundaries of targets are selected, so the mAP score drops from 72.87% to 71.93% when =18. Whereas for the selection of on DIOR-R, it shows the similar performance trend as that on DOTA, where the best performance is obtained when is set as 14. It can be seen that various selections yield relatively stable detection performance and can be simply changed the value of to suit different datasets.

IV-C Ablation Study

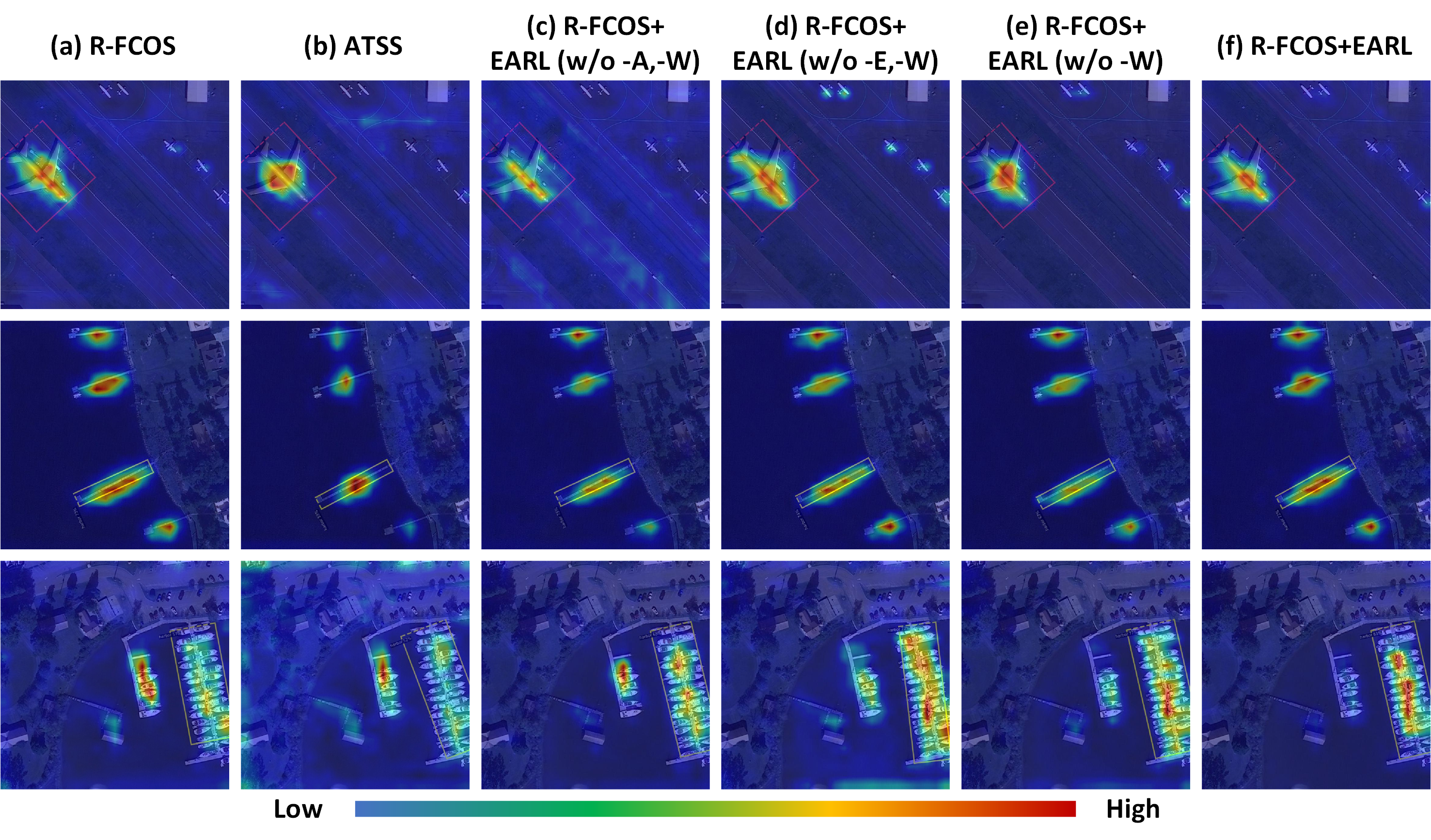

In this section, a series of ablation experiments were conducted on three popular datasets, i.e., DOTA, DIOR-R and HRSC2016, and the detailed results are listed in Tables I, II and III, respectively. In addition, we also visualized the gradient-based feature heatmaps[56] to further understand the effectiveness of each component of EARL. The visualization results are illustrated in Fig. 11. Here, the components of proposed EARL are indicated in abbreviated form, i.e., ‘-A’ indicates ADS strategy, ‘-E’ refers to DED strategy, and ‘-W’ means SDW module. In addition, ‘w/o’ is applied to indicate without specified modules.

IV-C1 Effectiveness of ADS

The proposed ADS strategy resolves the insufficient and imbalanced sampling problem for targets with extreme scales and aspect ratios. As shown in rows 1 and 2 of Tables I, II and III, the detectors trained with our ADS achieve the improvement of 0.72% and 0.90% in terms of mAP on DOTA and DIOR-R, and give a significant improvement of 2.28% in terms of mAP12 on HRSC2016, respectively, which demonstrates the effectiveness and robustness of ADS. In addition, the proposed ADS strategy boosts the AP performance of BR, SV, GTF and SBF on DOTA dataset by 0.48%, 0.68%, 1.03% and 4.55% compared with the baseline method, respectively, which indicates that our ADS is beneficial in assigning samples to targets with extreme scales and aspect ratios.

Taking PL and SV for instance, when using the baseline method, samples are restricted to be assigned on specific level of feature maps, as illustrated in Fig. 9, and yields feature insufficient for various scales of targets. By employing ADS strategy, samples are selected more balanced for both PL and SV on each feature map, hence features with more various scales can be learned. However, although ADS strategy is able to obtain a better scale-level distribution, it introduces background noise in higher-level feature maps for specific categories, e.g., BD and BC, and brings down the accuracy. In order to mitigate the noise, DED with SDW are applied and discussed in the following subsections.

| Methods | mAP07 (%) | mAP12 (%) \bigstrut |

| R-FCOS (Baseline) | 87.16 | 89.79 \bigstrut[t] |

| +EARL (w/o -E, -W) | 87.97 (+0.81) | 92.07 (+2.28) |

| +EARL (w/o -A, -W) | 87.86 (+0.70) | 90.72 (+0.93) |

| +EARL (w/o -W) | 88.75 (+1.59) | 92.78 (+2.99) |

| +EARL | 88.98 (+1.82) | 93.04 (+3.25) \bigstrut[b] |

IV-C2 Effectiveness of DED

The DED strategy is proposed to make the sample distribution more flexible to fit the shapes and orientations of targets. As shown in rows 1 and 3 of Tables I, II and III, our DED achieves 0.54% and 0.88% mAP improvement by replacing the original spatial assignment in the baseline method, i.e., R-FCOS, on DOTA and DIOR-R, respectively. Besides, it also provides 0.70% mAP07 and 0.93% mAP12 improvement on HRSC2016. The results demonstrate the effectiveness of our DED strategy. In addition, from rows 1 and 3 of Table I, the AP of the elongated targets, such as BR and HA, are improved by 1.24% and 0.84%, respectively, which further proves that our DED is more suitable for detecting targets with large aspect ratios.

Moreover, when combining DED to the proposed ADS, the mAP is improved by 1.50% and 1.60% on DOTA and DIOR-R, and the mAP07 and mAP12 are improved by 1.59% and 2.99% on HRSC2016, respectively, compared with the baseline method, as shown in rows 1 and 4 of Tables I, II and III. The results further demonstrate that our DED strategy can further unlock the potential of ADS strategy, due to its ability to filter out low-quality samples.

IV-C3 Effectiveness of SDW

SDW module is proposed to further mitigate the influence from low-quality samples by weighting the samples adaptively, and achieve high-quality sampling, which yields the mAP improvement of 0.30% and 1.11% on DOTA and DIOR-R, and gives the mAP12 improvement of 0.26% on HRSC2016, respectively, as compared rows 4 and 5 of Tables I, II and III. In addition, SDW module significantly improves AP for difficult categories, e.g., SBF, HC and DAM with 4.77%, 4.65% and 2.28% improvement respectively, by learning feature information from higher-quality samples. Moreover, to further demonstrate the effect of SDW module, the visualization comparison is provided to show the detection performance of our method with and without SDW module, as illustrated in Fig. 10, where we can see that our SDW module can further enhance the overall detection performance.

Overall, by employing ADS, DED and SDW, the proposed EARL strategy significantly enhances the detection performance in RSIs with 1.80% and 2.71% mAP improvement on DOTA and DIOR-R, and 1.82% mAP07 and 3.25% mAP12 improvement on HRSC2016, respectively, as shown in rows 1 and 5 of Tables I, II and III. The results demonstrate the effectiveness and robustness of the proposed EARL strategy by taking into account of the characteristics of targets.

IV-C4 Visualization Explanation

To further understand the effectiveness of each proposed component of EARL, the gradient-based class-discriminative saliency maps[56] are displayed to visualize the feature heatmaps of detectors with different label assignment strategies, as illustrated in Fig. 11. As illustrated in row 1 of Fig. 11 (a) and (c), EARL (w/o -A, -W) constricts the sampling range based on R-FCOS, so that less attention is paid to the background. From rows 1 and 2 of Fig. 11 (a) and (b), ATSS focuses too much on the central region resulting in its failure to learn sufficient features and can hardly reflect the orientation and shape characteristics. Row 3 of Fig. 11 (a) and (d) show that EARL (w/o -E, -W) can extract richer information of targets with extreme scales and aspect ratios. However, due to its ability to obtain training samples from multi-level feature maps, it may introduce background noise. As shown in Fig. 11 (d) and (e), using DED strategy can mitigate the impact of noise and release the potential of ADS strategy. In addition, as illustrated in Fig. 11 (e) and (f), SDW module makes the detector more focused on the high-quality samples to learn better feature representation of the targets, thereby it can accurately focus on the target regions in the images.

IV-D Comparison with Existing Label Assignment Strategies

To further justify the effectiveness and superiority of the proposed scale assignment strategy, i.e., ADS, and the spatial assignment strategy, i.e., DED, a series of experiments were conducted on DOTA and DIOR-R datasets. For fair comparison, all compared label assignment strategies are evaluated on the same baseline detector (i.e., R-FCOS), and the results are listed in Tables IV, V and VI.

IV-D1 Comparison with Existing Scale Assignments

The proposed ADS strategy is compared with four different scale assignment strategies, including the fixed scale assignment (FSA) strategy adopted in [31, 11, 15], selecting all samples among all levels of feature maps (ALS) used in [19], selecting top- samples per feature level (TKL) utilized in [27], and selecting top- samples for each target without sequential selection (TKT) used in [46]. The results are given in Table IV.

It can be observed that using the ALS strategy leads to performance degradation due to the introduction of large amounts of low-quality samples and ambiguous samples. Regarding the TKL and TKT strategies, they cannot reasonably exploit the large scale variations of targets, leading to the inferior performance than our proposed ADS. Where our proposed ADS achieves the mAP of 71.79% and 61.48% on DOTA and DIOR-R datasets, respectively, which outperform other scale assignment strategies. The results demonstrate the effectiveness of our ADS strategy by considering the large variations in scales and aspect ratios of targets in RSIs, which achieves more flexible and adaptive scale assignment, and is more suitable for object detection in RSIs.

| Sampling Range | RBB | CA | DED \bigstrut | ||||

| mAP (%) | DOTA | 71.07 | 70.61 | 71.61\bigstrut[t] | |||

| DIOR-R | 60.58 | 60.61 | 61.46\bigstrut[b] | ||||

IV-D2 Comparison with Existing Spatial Assignments

The proposed DED strategy is compared with other sampling range for spatial assignment based on the R-FCOS, i.e., the rectangle bounding box (RBB) and the central area (CA). The results are provided in Table V.

From Table V, we can see that our DED strategy improves mAP by 0.54% and 0.88% when compared with RBB and CA, respectively. The reason is that low-quality samples are often involved in RBB, as illustrated in Fig. 12 (a), and using CA often directly discards foreground samples at both ends of bounding box, resulting in insufficient sampling, as shown in Fig. 12 (b). When employing the proposed DED method, the sample distribution is more flexible to fit the shapes and orientations of targets, hence is more suitable for oriented object detection in RSIs, and achieves the mAP of 71.61% and 61.46% on DOTA and DIOR-R datasets, respectively.

In addition, the dynamic elliptical distribution in our proposed DED is compared with those with fixed ratio factor , which is introduced to control the size of sampling range. The results are given in Table VI, where the fixed elliptical distribution achieves their peak performance of 72.49% and 62.97% mAP when =0.6 on DOTA and DIOR-R datasets, respectively. Whereas our dynamic elliptical distribution achieves better performance of 72.87% and 63.29% on DOTA and DIOR-R datasets by dynamically adjusting sampling range according to the aspect ratios of targets, and avoids parameter selection.

| 0.4 | 0.6 | 0.8 | 1.0 | Ours \bigstrut | |||||

| mAP (%) | DOTA | 71.99 | 72.49 | 72.16 | 71.76 | 72.87\bigstrut[t] | |||

| DIOR-R | 62.38 | 62.97 | 62.68 | 62.36 | 63.29\bigstrut[b] | ||||

| Methods | Backbone | PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | mAP (%) | fps |

| Anchor-based | ||||||||||||||||||

| CenterMap-Net[13] | Res50 | 89.02 | 80.56 | 49.41 | 61.98 | 77.99 | 74.19 | 83.74 | 89.44 | 78.01 | 83.52 | 47.64 | 65.93 | 63.68 | 67.07 | 61.59 | 71.59 | - |

| R3Det[21] | Res50 | 89.02 | 75.47 | 43.86 | 65.84 | 75.83 | 73.44 | 86.03 | 90.57 | 81.11 | 82.84 | 55.57 | 59.10 | 56.57 | 70.31 | 50.45 | 70.40 | - |

| S2A-Net[20] | Res50 | 89.11 | 82.84 | 48.37 | 71.11 | 78.11 | 78.39 | 87.25 | 90.83 | 84.90 | 85.64 | 60.36 | 62.60 | 65.26 | 69.13 | 57.94 | 74.12 | 17.6 |

| S2A-Net[20]‡ | Res50 | 88.89 | 83.60 | 57.74 | 81.95 | 79.94 | 83.19 | 89.11 | 90.78 | 84.87 | 87.81 | 70.30 | 68.25 | 78.30 | 77.01 | 69.58 | 79.42 | 17.6 |

| RoI-Trans.[36]‡ | Res101 | 88.53 | 77.91 | 37.63 | 74.08 | 66.53 | 62.97 | 66.57 | 90.50 | 79.46 | 76.75 | 59.04 | 56.73 | 62.54 | 61.29 | 55.56 | 67.74 | 7.8 |

| SCRDet[28]‡ | Res101 | 89.98 | 80.65 | 52.09 | 68.36 | 68.36 | 60.32 | 72.41 | 90.85 | 87.94 | 86.86 | 65.02 | 66.68 | 66.25 | 68.24 | 65.21 | 72.61 | 9.5 |

| Gliding-Ver.[37]‡ | Res101 | 89.64 | 85.00 | 52.26 | 77.34 | 73.01 | 73.14 | 86.82 | 90.74 | 79.02 | 86.81 | 59.55 | 70.91 | 72.94 | 70.86 | 57.32 | 75.02 | 13.1 |

| Hou [24] | Res101 | 89.32 | 76.05 | 50.33 | 70.25 | 76.44 | 79.45 | 86.02 | 90.84 | 82.80 | 82.50 | 58.17 | 62.46 | 67.38 | 71.93 | 45.52 | 72.63 | - |

| Hou [24]‡ | Res101 | 88.69 | 79.41 | 52.26 | 65.51 | 74.72 | 80.83 | 87.42 | 90.77 | 84.31 | 83.36 | 62.64 | 58.14 | 66.95 | 72.32 | 69.34 | 74.44 | - |

| Anchor-free | ||||||||||||||||||

| ATSS[27]† | Res50 | 88.47 | 80.05 | 47.27 | 60.65 | 79.85 | 78.80 | 87.41 | 90.75 | 77.37 | 85.24 | 43.22 | 60.80 | 66.52 | 70.95 | 41.82 | 70.61 | - |

| SASM[44] | Res50 | 86.42 | 78.97 | 52.47 | 69.84 | 77.30 | 75.99 | 86.72 | 90.89 | 82.63 | 85.66 | 60.13 | 68.25 | 73.98 | 72.22 | 62.37 | 74.92 | - |

| AOPG[15] | Res50 | 89.27 | 83.49 | 52.50 | 69.97 | 73.51 | 82.31 | 87.95 | 90.89 | 87.64 | 84.71 | 60.01 | 66.12 | 74.19 | 68.30 | 57.80 | 75.24 | 12.4 |

| Oriented RepPoints[46] | Res50 | 87.02 | 83.17 | 54.13 | 71.16 | 80.18 | 78.40 | 87.28 | 90.90 | 85.97 | 86.25 | 59.90 | 70.49 | 73.53 | 72.27 | 58.97 | 75.97 | - |

| FSDet[19] | Res50 | 89.20 | 84.33 | 55.38 | 74.81 | 80.85 | 78.79 | 88.24 | 90.85 | 86.85 | 85.85 | 67.14 | 64.79 | 76.49 | 75.11 | 69.02 | 77.85 | - |

| GGHL[31] | DarkNet53 | 89.74 | 85.63 | 44.50 | 77.48 | 76.72 | 80.45 | 86.16 | 90.83 | 88.18 | 86.25 | 67.07 | 69.40 | 73.38 | 68.45 | 70.14 | 76.95 | 42.3 |

| IENet[17] | Res101 | 88.15 | 71.38 | 34.26 | 51.78 | 63.78 | 65.63 | 71.61 | 90.11 | 71.07 | 73.63 | 37.62 | 41.52 | 48.07 | 60.53 | 49.53 | 61.24 | 16.9 |

| Axis-Learning[43] | Res101 | 79.53 | 77.15 | 38.59 | 61.15 | 67.53 | 70.49 | 76.30 | 89.66 | 79.07 | 83.53 | 47.27 | 61.01 | 56.28 | 66.06 | 36.05 | 65.98 | 14.1 |

| BBAVectors[39] | Res101 | 88.35 | 79.96 | 50.69 | 62.18 | 78.43 | 78.98 | 87.94 | 90.85 | 83.58 | 84.35 | 54.13 | 60.24 | 65.22 | 64.28 | 55.70 | 72.32 | 18.4 |

| R-FCOS | Res50 | 88.60 | 81.08 | 45.74 | 64.58 | 79.83 | 77.89 | 86.95 | 90.52 | 80.24 | 84.09 | 40.55 | 58.31 | 66.24 | 71.78 | 49.70 | 71.07 | 33.5 |

| R-FCOS-EARL | Res50 | 89.76 | 78.79 | 47.01 | 65.20 | 80.98 | 79.99 | 87.33 | 90.74 | 79.17 | 86.23 | 49.09 | 65.87 | 65.75 | 71.86 | 55.21 | 72.87 | 33.5 |

| R-FCOS-EARL‡ | Res50 | 90.13 | 83.90 | 47.19 | 72.17 | 81.54 | 84.26 | 88.24 | 90.69 | 79.10 | 86.71 | 60.47 | 72.21 | 71.26 | 73.81 | 58.80 | 76.03 | 33.5 |

| R-FCOS-EARL | Res101 | 89.79 | 84.05 | 48.69 | 65.89 | 80.39 | 78.38 | 87.22 | 90.46 | 79.90 | 86.14 | 47.01 | 66.44 | 66.79 | 72.42 | 49.81 | 72.89 | 25.9 |

| R-FCOS-EARL‡ | Res101 | 89.28 | 83.80 | 49.34 | 72.27 | 80.69 | 84.01 | 88.30 | 90.77 | 82.35 | 87.39 | 59.90 | 75.59 | 69.61 | 71.93 | 53.82 | 75.94 | 25.9 |

| RTMDet-R[32]† | CSPNeXt-L | 89.44 | 77.91 | 54.97 | 74.54 | 80.05 | 83.15 | 89.06 | 90.90 | 83.75 | 86.32 | 60.06 | 68.53 | 78.03 | 79.74 | 64.78 | 77.41 | 29.3 |

| RTMDet-R-EARL | CSPNeXt-L | 88.38 | 77.93 | 52.71 | 74.55 | 80.29 | 82.88 | 88.76 | 90.58 | 83.34 | 86.85 | 62.38 | 68.39 | 75.85 | 80.54 | 69.61 | 77.54 | 29.3 |

| RTMDet-R-EARL‡ | CSPNeXt-L | 87.92 | 85.62 | 56.48 | 80.47 | 80.74 | 84.82 | 88.63 | 90.74 | 86.16 | 88.05 | 69.76 | 70.12 | 78.06 | 82.34 | 75.31 | 80.35 | 29.3 |

IV-E Comparison with State-of-the-Art

To evaluate the performance of the proposed EARL strategy, we conducted experiments compared with the state-of-the-art methods on three public RSIs datasets, i.e., DOTA, DIOR-R and HRSC2016, and the results are listed in Tables VII, VIII and IX, respectively. Note that, for the purpose of fair comparison, these results are from the corresponding papers of the comparison methods and datasets, unless specified. In addition, to fully validate the effectiveness and robustness of the proposed EARL strategy, a simple detector, i.e., R-FCOS, and an advanced detector, i.e., RTMDet-R, are selected as the baseline methods, where our EARL is deployed with them, denoted as R-FCOS-EARL and RTMDet-R-EARL, respectively.

IV-E1 Performance Evaluation on DOTA Dataset

Table VII gives the comparison of the proposed method with both anchor-based and anchor-free state-of-the-art detectors on DOTA dataset. It can be observed that anchor-based detectors can usually achieve higher performance, e.g., S2A-Net achieves 79.42% mAP. However, they often have limitations in inference speed due to complex structures and IoU calculations, which results in lower fps, e.g., 17.6 fps by S2A-Net. As a balance of trade-off of accuracy and efficiency, when using ResNet50 as the backbone network, R-FCOS-EARL achieves mAP of 72.87% and inference speed of 33.5 fps, even without any tricks.

When compared with anchor-free detectors, our R-FCOS-EARL method provides the promising performance of 72.87% mAP with ResNet50 and 72.89% mAP with ResNet101. Specifically, when applying multi-scale augmentation in experiments, our method achieves the mAP of 76.03% with ResNet50 and 75.94% with ResNet101 and obtains the best AP results for small targets, such as SV of 81.54%, which further demonstrates the superiority of our method for detecting targets with extreme scales. The visualization of detection results with our method is displayed in Fig. 13. It can be observed that the detector with our EARL strategy can accurately detect densely arranged targets and extreme targets benefited from the sufficient and balanced sampling. Furthermore, our EARL is simply a training strategy which can be easily deployed to other detectors, e.g., when deployed on RTMDet-R, it obtains the state-of-the-art performance, i.e., 80.35% mAP.

In addition, to further illustrate that our method can obtain a better scale-level distribution, as shown in Fig. 14, the proposed R-FCOS-EARL is compared with the most relevant label assignment strategy, ATSS, together with the baseline method R-FCOS on DOTA dataset, by comparing the distribution of the positive samples among multi-level feature maps statistically. It can be seen that R-FCOS and ATSS achieve scale assignment according to the predefined scale constraints, which causes imbalanced sample distribution, and leads to the scale-level bias, especially for targets with extreme scales and aspect ratios, such as GTF and SV. Whereas our method with ADS strategy ensures that targets with different scales and shapes can assign training samples from all feature maps adaptively, which alleviates the imbalanced sampling thus improves detection performance. As a result, R-FCOS-EARL achieves the mAP of 72.87%, which significantly improves mAP by 1.80% and 2.26% over R-FCOS and ATSS, respectively, as shown in Table VII. Furthermore, the AP performance in Table VII also confirms the improvement of our method for both extremely large and small targets, such as GTF, SBF, SV and LV. The above analysis proves that our EARL strategy is advantageous for object detection in RSIs.

| Methods | Backbone | APL | APO | BF | BC | BR | CH | ESA | ETS | DAM | GF | GTF | HA | OP | SH | STA | ST | TC | TS | VH | WM | mAP (%) \bigstrut |

| Anchor-based | ||||||||||||||||||||||

| Gliding-Ver.[37] | Res50 | 65.4 | 28.9 | 75.0 | 81.3 | 33.9 | 74.3 | 64.7 | 70.7 | 19.6 | 72.3 | 78.7 | 37.2 | 49.6 | 80.2 | 69.3 | 61.1 | 81.5 | 44.8 | 47.7 | 65.0 | 60.1 \bigstrut[t] |

| R3Det[21] | Res50 | 62.6 | 43.4 | 71.7 | 81.5 | 36.5 | 72.6 | 79.5 | 64.4 | 27.0 | 77.4 | 77.2 | 40.5 | 53.3 | 79.7 | 69.2 | 61.1 | 81.5 | 52.2 | 43.6 | 64.1 | 61.9 |

| S2A-Net[20] | Res50 | 65.4 | 42.0 | 75.2 | 83.9 | 36.0 | 72.6 | 75.1 | 65.1 | 28.0 | 75.6 | 80.5 | 35.9 | 52.1 | 82.3 | 65.9 | 66.1 | 84.6 | 54.1 | 48.0 | 69.7 | 62.9 |

| RoI-Trans.[36] | Res50 | 63.3 | 37.9 | 71.8 | 87.5 | 40.7 | 72.6 | 68.1 | 78.7 | 26.9 | 69.0 | 82.7 | 47.7 | 55.6 | 81.2 | 78.2 | 70.3 | 81.6 | 54.9 | 43.3 | 65.5 | 63.9 |

| DODet[38] | Res50 | 63.4 | 43.4 | 72.1 | 81.3 | 43.1 | 72.6 | 70.8 | 78.8 | 33.3 | 74.2 | 75.5 | 48.0 | 59.3 | 85.4 | 74.0 | 71.6 | 81.5 | 55.5 | 51.9 | 66.4 | 65.1 \bigstrut[b] |

| Anchor-free | ||||||||||||||||||||||

| SASM[44] | Res50 | 61.4 | 46.0 | 73.2 | 82.0 | 29.4 | 71.0 | 69.2 | 53.9 | 30.6 | 70.0 | 77.0 | 39.3 | 47.5 | 78.6 | 66.1 | 62.9 | 79.9 | 54.4 | 40.6 | 63.0 | 59.8 \bigstrut[t] |

| AOPG[15] | Res50 | 62.4 | 37.8 | 71.6 | 87.6 | 40.9 | 72.5 | 78.0 | 65.4 | 31.1 | 73.2 | 81.9 | 42.3 | 54.5 | 81.2 | 72.7 | 71.3 | 81.5 | 60.0 | 52.4 | 70.0 | 64.4 \bigstrut[b] |

| R-FCOS | Res50 | 56.2 | 37.6 | 76.8 | 85.7 | 26.8 | 74.6 | 74.5 | 59.3 | 20.2 | 77.7 | 77.7 | 39.6 | 46.1 | 81.1 | 69.6 | 63.3 | 85.5 | 53.9 | 39.8 | 65.7 | 60.6 \bigstrut[t] |

| R-FCOS-EARL | Res50 | 61.1 | 44.8 | 77.7 | 84.7 | 30.4 | 78.5 | 76.7 | 62.8 | 24.7 | 79.6 | 79.2 | 39.6 | 48.2 | 82.6 | 76.6 | 64.9 | 86.9 | 58.0 | 43.8 | 64.9 | 63.3 \bigstrut[b] |

| RTMDet-R[32]† | CSPNeXt-L | 71.1 | 49.9 | 71.8 | 86.7 | 43.3 | 80.9 | 80.6 | 70.9 | 34.5 | 76.4 | 81.6 | 49.4 | 57.6 | 89.4 | 71.5 | 77.9 | 81.6 | 57.9 | 52.6 | 65.6 | 67.5 \bigstrut[t] |

| RTMDet-R-EARL | CSPNeXt-L | 71.0 | 46.8 | 76.3 | 84.1 | 46.7 | 80.5 | 84.4 | 71.3 | 32.0 | 77.9 | 80.9 | 48.6 | 58.4 | 89.5 | 72.4 | 78.9 | 81.5 | 56.7 | 54.9 | 71.5 | 68.2 \bigstrut[b] |

| Methods | Backbone | mAP07 (%) | mAP12 (%) |

| Anchor-based | |||

| RoI-Trans.[36] | Res101 | 86.2 | - |

| Gliding-Ver.[37] | Res101 | 88.2 | - |

| DAL[58] | Res50 | 88.6 | - |

| CenterMap-Net[13] | Res50 | - | 92.8 |

| Anchor-free | |||

| IENet[17] | Res101 | 75.0 | - |

| Axis-Learning[43] | Res101 | 78.2 | - |

| TOSO[30] | Res101 | 79.3 | - |

| BBAVectors[39] | Res101 | 88.6 | - |

| DRN[59] | HG104 | - | 92.7 |

| R-FCOS | Res50 | 87.2 | 89.8 |

| R-FCOS-EARL | Res50 | 89.0 | 93.0 |

| R-FCOS-EARL | Res101 | 88.8 | 92.1 |

| RTMDet-R[32]† | CSPNeXt-L | 90.1 | 97.3 |

| RTMDet-R-EARL | CSPNeXt-L | 90.3 | 98.0 |

IV-E2 Performance Evaluation on Other Datasets

To further evaluate the robustness of the proposed EARL strategy, comparative experiments on two other commonly used RSIs datasets, i.e., DIOR-R and HRSC2016 datasets, are conducted, and the results are listed in Tables VIII and IX, respectively.

As shown in Table VIII, our EARL strategy with the simple anchor-free detector, i.e., R-FCOS-EARL, achieves outstanding performance of 63.3% mAP on DIOR-R dataset, which has surpassed several state-of-the-art anchor-based detectors, such as S2A-Net, R3Det and Gliding Vertex. In addition, RTMDet-R-EARL achieves the pretty high mAP of 68.2%, which further demonstrates the effectiveness and robustness of our EARL for oriented object detection in RSIs.

Furthermore, as shown in Table IX, when combined with the simple detector, our method (i.e., R-FCOS-EARL) achieves the promising performance of 89.0% mAP07 and 93.0% mAP12 on HRSC2016 dataset, compared with both anchor-based and anchor-free methods. The results indicate that R-FCOS-EARL is robust to detect targets with arbitrary orientations and large aspect ratios. In addition, our R-FCOS-EARL with ResNet101 achieves 88.8% mAP07 and 92.1% mAP12, which exceeds other compared methods but has a slight performance degradation over ResNet50, since ResNet101 requires more training data to fully capture the model parameters, while HRSC2016 is a small-scale dataset. Furthermore, when combined with the advanced detector, RTMDet-R-EARL achieves the higher performance, i.e., 90.3% mAP07 and 98.0% mAP12, which further illustrates the robustness and generalizability of our EARL strategy in detecting targets with large aspect ratios. The visualization of detection results on DIOR-R and HRSC2016 datasets are illustrated in Fig. 15, which intuitively demonstrates the effectiveness and robustness of the proposed EARL.

Though our EARL can yield better performance through sufficient and balanced sampling by considering large variations in scales and aspect ratios of targets in RSIs, it still suffers from failed detection for densely arranged targets, as illustrated in Fig. 16, where targets with dense distribution are miss detected. One possible solution to mitigate this phenomenon is to incorporate our EARL with a proprietary detector or loss function for object detection in crowd scenes, as our EARL is so simple and can be easily deployed on different models.

V Conclusion

In this paper, we presented a novel and effective label assignment strategy, namely EARL, for object detection in RSIs. In which, the ADS strategy was proposed to solve the problem of insufficient and imbalanced sampling for targets with large variations in scales and aspect ratios, by selecting samples from suitable and continuous multi-level feature maps. Considering the large aspect ratios of targets, the DED strategy was designed to obtain a more accurate and reasonable sampling range while filtering out low-quality samples, which can dynamically adjust sampling range according to the aspect ratios of targets. To make the detector more focused on high-quality samples, the SDW module was developed. The experimental results demonstrate the effectiveness and robustness of our proposed EARL on three publicly available and challenging RSIs datasets, including DOTA, DIOR-R and HRSC2016. Our EARL is so simple that it has the potential to be easily combined with different orientation detectors to achieve better performance.

References

- [1] Z. Deng, H. Sun, S. Zhou, J. Zhao, and H. Zou, “Toward fast and accurate vehicle detection in aerial images using coupled region-based convolutional neural networks,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 10, no. 8, pp. 3652–3664, 2017.

- [2] Y. Wang, D. Zhang, Y. Liu, B. Dai, and L. H. Lee, “Enhancing transportation systems via deep learning: A survey,” Transportation Research Part C: Emerging Technologies, vol. 99, pp. 144–163, 2019.

- [3] F. Shi, T. Zhang, and T. Zhang, “Orientation-aware vehicle detection in aerial images via an anchor-free object detection approach,” IEEE Transactions on Geoscience and Remote Sensing, vol. 59, no. 6, pp. 5221–5233, 2020.

- [4] Y. Yu, H. Guan, D. Li, T. Gu, E. Tang, and A. Li, “Orientation guided anchoring for geospatial object detection from remote sensing imagery,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 160, pp. 67–82, 2020.

- [5] L. A. Varga, B. Kiefer, M. Messmer, and A. Zell, “SeaDronesSee: A maritime benchmark for detecting humans in open water,” in Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2022, pp. 2260–2270.

- [6] L. Ma, Y. Li, J. Li, C. Wang, R. Wang, and M. A. Chapman, “Mobile laser scanned point-clouds for road object detection and extraction: A review,” Remote Sensing, vol. 10, no. 10, p. 1531, 2018.

- [7] C. Asha and A. Narasimhadhan, “Vehicle counting for traffic management system using YOLO and correlation filter,” in Proceedings of the IEEE International Conference on Electronics, Computing and Communication Technologies, 2018, pp. 1–6.

- [8] S. Ren, K. He, R. Girshick, and J. Sun, “Faster R-CNN: Towards real-time object detection with region proposal networks,” Proceedings of Advances in Neural Information Processing Systems, vol. 28, pp. 91–99, 2015.

- [9] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 779–788.

- [10] T.-Y. Lin, P. Goyal, R. Girshick, K. He, and P. Dollár, “Focal loss for dense object detection,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 2980–2988.

- [11] Z. Tian, C. Shen, H. Chen, and T. He, “FCOS: Fully convolutional one-stage object detection,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 9627–9636.

- [12] K. Duan, S. Bai, L. Xie, H. Qi, Q. Huang, and Q. Tian, “CenterNet: Keypoint triplets for object detection,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 6569–6578.

- [13] J. Wang, W. Yang, H.-C. Li, H. Zhang, and G.-S. Xia, “Learning center probability map for detecting objects in aerial images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 59, no. 5, pp. 4307–4323, 2020.

- [14] P. Dai, S. Yao, Z. Li, S. Zhang, and X. Cao, “ACE: Anchor-free corner evolution for real-time arbitrarily-oriented object detection,” IEEE Transactions on Image Processing, 2022.

- [15] G. Cheng, J. Wang, K. Li, X. Xie, C. Lang, Y. Yao, and J. Han, “Anchor-free oriented proposal generator for object detection,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–11, 2022.

- [16] G. Cheng, B. Yan, P. Shi, K. Li, X. Yao, L. Guo, and J. Han, “Prototype-cnn for few-shot object detection in remote sensing images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–10, 2021.

- [17] Y. Lin, P. Feng, J. Guan, W. Wang, and J. Chambers, “IENet: Interacting embranchment one stage anchor free detector for orientation aerial object detection,” arXiv preprint arXiv:1912.00969, 2019.

- [18] R. Qin, Q. Liu, G. Gao, D. Huang, and Y. Wang, “MRDet: A multihead network for accurate rotated object detection in aerial images,” IEEE Transactions on Geoscience and Remote Sensing, 2021.

- [19] Y. Yu, X. Yang, J. Li, and X. Gao, “Object detection for aerial images with feature enhancement and soft label assignment,” IEEE Transactions on Geoscience and Remote Sensing, 2022.

- [20] J. Han, J. Ding, J. Li, and G.-S. Xia, “Align deep features for oriented object detection,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–11, 2021.

- [21] X. Yang, Q. Liu, J. Yan, A. Li, Z. Zhang, and G. Yu, “R3Det: Refined single-stage detector with feature refinement for rotating object,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 4, no. 35, 2021, pp. 3163–3171.

- [22] T.-Y. Lin, P. Dollár, R. Girshick, K. He, B. Hariharan, and S. Belongie, “Feature pyramid networks for object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 2117–2125.

- [23] C. Zhu, Y. He, and M. Savvides, “Feature selective anchor-free module for single-shot object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 840–849.

- [24] L. Hou, K. Lu, and J. Xue, “Refined one-stage oriented object detection method for remote sensing images,” IEEE Transactions on Image Processing, vol. 31, pp. 1545–1558, 2022.

- [25] J. Wang, Y. Wang, Y. Wu, K. Zhang, and Q. Wang, “FRPNet: A feature-reflowing pyramid network for object detection of remote sensing images,” IEEE Geoscience and Remote Sensing Letters, 2020.

- [26] B. Zhu, J. Wang, Z. Jiang, F. Zong, S. Liu, Z. Li, and J. Sun, “AutoAssign: Differentiable label assignment for dense object detection,” arXiv preprint arXiv:2007.03496, 2020.

- [27] S. Zhang, C. Chi, Y. Yao, Z. Lei, and S. Z. Li, “Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 9759–9768.

- [28] X. Yang, J. Yang, J. Yan, Y. Zhang, T. Zhang, Z. Guo, X. Sun, and K. Fu, “SCRDet: Towards more robust detection for small, cluttered and rotated objects,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 8232–8241.

- [29] L. Shi, L. Kuang, X. Xu, B. Pan, and Z. Shi, “CANet: Centerness-aware network for object detection in remote sensing images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–13, 2021.

- [30] P. Feng, Y. Lin, J. Guan, G. He, H. Shi, and J. Chambers, “TOSO: Student’s-t distribution aided one-stage orientation target detection in remote sensing images,” in Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, 2020, pp. 4057–4061.

- [31] Z. Huang, W. Li, X.-G. Xia, and R. Tao, “A general Gaussian heatmap label assignment for arbitrary-oriented object detection,” IEEE Transactions on Image Processing, vol. 31, pp. 1895–1910, 2022.

- [32] C. Lyu, W. Zhang, H. Huang, Y. Zhou, Y. Wang, Y. Liu, S. Zhang, and K. Chen, “RTMDet: An empirical study of designing real-time object detectors,” arXiv preprint arXiv:2212.07784, 2022.

- [33] Z. Liu, J. Hu, L. Weng, and Y. Yang, “Rotated region based CNN for ship detection,” in Proceedings of the IEEE International Conference on Image Processing, 2017, pp. 900–904.

- [34] Z. Zhang, W. Guo, S. Zhu, and W. Yu, “Toward arbitrary-oriented ship detection with rotated region proposal and discrimination networks,” IEEE Geoscience and Remote Sensing Letters, vol. 15, no. 11, pp. 1745–1749, 2018.

- [35] X. Yang, H. Sun, K. Fu, J. Yang, X. Sun, M. Yan, and Z. Guo, “Automatic ship detection in remote sensing images from google earth of complex scenes based on multiscale rotation dense feature pyramid networks,” Remote Sensing, vol. 10, no. 1, p. 132, 2018.

- [36] J. Ding, N. Xue, Y. Long, G. Xia, and Q. Lu, “Learning RoI transformer for oriented object detection in aerial images,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 2849–2858.

- [37] Y. Xu, M. Fu, Q. Wang, Y. Wang, K. Chen, G.-S. Xia, and X. Bai, “Gliding vertex on the horizontal bounding box for multi-oriented object detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 4, pp. 1452–1459, 2020.

- [38] G. Cheng, Y. Yao, S. Li, K. Li, X. Xie, J. Wang, X. Yao, and J. Han, “Dual-aligned oriented detector,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–11, 2022.

- [39] J. Yi, P. Wu, B. Liu, Q. Huang, H. Qu, and D. Metaxas, “Oriented object detection in aerial images with box boundary-aware vectors,” in Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2021, pp. 2150–2159.

- [40] H. Wei, Y. Zhang, Z. Chang, H. Li, H. Wang, and X. Sun, “Oriented objects as pairs of middle lines,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 169, pp. 268–279, 2020.

- [41] X. Zhang, F. Wan, C. Liu, X. Ji, and Q. Ye, “Learning to match anchors for visual object detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 6, pp. 3096–3109, 2021.

- [42] T. Kong, F. Sun, H. Liu, Y. Jiang, L. Li, and J. Shi, “Foveabox: Beyound anchor-based object detection,” IEEE Transactions on Image Processing, vol. 29, pp. 7389–7398, 2020.

- [43] Z. Xiao, L. Qian, W. Shao, X. Tan, and K. Wang, “Axis learning for orientated objects detection in aerial images,” Remote Sensing, vol. 12, no. 6, p. 908, 2020.

- [44] L. Hou, K. Lu, J. Xue, and Y. Li, “Shape-adaptive selection and measurement for oriented object detection,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, no. 1, 2022, pp. 923–932.

- [45] Z. Ge, S. Liu, F. Wang, Z. Li, and J. Sun, “YOLOX: Exceeding YOLO series in 2021,” arXiv preprint arXiv:2107.08430, 2021.

- [46] W. Li, Y. Chen, K. Hu, and J. Zhu, “Oriented reppoints for aerial object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 1829–1838.

- [47] R. Girshick, “Fast R-CNN,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 1440–1448.

- [48] G.-S. Xia, X. Bai, J. Ding, Z. Zhu, S. Belongie, J. Luo, M. Datcu, M. Pelillo, and L. Zhang, “DOTA: A large-scale dataset for object detection in aerial images,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 3974–3983.

- [49] Z. Liu, L. Yuan, L. Weng, and Y. Yang, “A high resolution optical satellite image dataset for ship recognition and some new baselines,” in Proceedings of the International Conference on Pattern Recognition Applications and Methods, vol. 2, 2017, pp. 324–331.

- [50] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- [51] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “ImageNet: A large-scale hierarchical image database,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2009, pp. 248–255.

- [52] Y. Wu and K. He, “Group normalization,” in Proceedings of the European Conference on Computer Vision, 2018, pp. 3–19.

- [53] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in Proceedings of the International Conference on Machine Learning, 2015, pp. 448–456.

- [54] L. Bottou, “Large-scale machine learning with stochastic gradient descent,” in Proceedings of COMPSTAT’2010, 2010, pp. 177–186.

- [55] M. Everingham, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman, “The PASCAL visual object classes (VOC) challenge,” International Journal of Computer Vision, vol. 88, no. 2, pp. 303–338, 2010.

- [56] A. Chattopadhay, A. Sarkar, P. Howlader, and V. N. Balasubramanian, “Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks,” in Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2018, pp. 839–847.

- [57] A. Newell, K. Yang, and J. Deng, “Stacked hourglass networks for human pose estimation,” in Proceedings of the European Conference on Computer Vision, 2016, pp. 483–499.

- [58] Q. Ming, Z. Zhou, L. Miao, H. Zhang, and L. Li, “Dynamic anchor learning for arbitrary-oriented object detection,” arXiv preprint arXiv:2012.04150, 2020.

- [59] X. Pan, Y. Ren, K. Sheng, W. Dong, H. Yuan, X. Guo, C. Ma, and C. Xu, “Dynamic refinement network for oriented and densely packed object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 11 207–11 216.