DynCIM: Dynamic Curriculum for Imbalanced Multimodal Learning

Abstract

Multimodal learning integrates complementary information from diverse modalities to enhance the decision-making process. However, the potential of multimodal collaboration remains under-exploited due to disparities in data quality and modality representation capabilities. To address this, we introduce DynCIM, a novel dynamic curriculum learning framework designed to quantify the inherent imbalances from both sample and modality perspectives. DynCIM employs a sample-level curriculum to dynamically assess each sample’s difficulty according to prediction deviation, consistency, and stability, while a modality-level curriculum measures modality contributions from global and local. Furthermore, a gating-based dynamic fusion mechanism is introduced to adaptively adjust modality contributions, minimizing redundancy and optimizing fusion effectiveness. Extensive experiments on six multimodal benchmarking datasets, spanning both bimodal and trimodal scenarios, demonstrate that DynCIM consistently outperforms state-of-the-art methods. Our approach effectively mitigates modality and sample imbalances while enhancing adaptability and robustness in multimodal learning tasks. Our code is available at https://github.com/Raymond-Qiancx/DynCIM.

1 Introduction

Multimodal learning exploits and integrates heterogeneous yet complementary semantic information from various modalities to mimic human perception and decision-making [47, 38, 8, 25]. However, the inherent heterogeneity across modalities poses challenges in mining cross-modal correlations and achieving effective inter-modal collaboration [17, 14, 18]. Existing studies indicate that in tasks like multimodal sentiment analysis [20, 17, 6], action recognition [25, 21, 1], autonomous driving [35, 36, 13], and large vision-language models [37, 30, 24], incorporating additional modalities often yield slight performance improvements over the strongest individual modality, as shown in Fig 1 (a). This suggests that the potential for inter-modal cooperation remains under-exploited, multimodal models still struggle with under-optimized challenges.

To fully unlock the potential of multimodal models and address existing under-optimized challenges, we identify three key issues that hinder effective multimodal learning: ❶ Inconsistent Learning Efficiency, different modalities converge at varying rates, resulting in inconsistent learning efficiency [28, 33], as shown in Fig. 1 (b). Therefore, ignoring modality-specific characteristics and applying uniform optimization strategies leads to suboptimal performance, necessitating a tailored approach that dynamically adapts to each modality’s convergence rate and representation capability. ❷ Modality Imbalance, the limited interpretability of deep models hinders the ability to observe the fine-grained contributions of each modality. Existing studies [34, 8, 40, 16, 48] show that models tend to prioritize dominant modalities while under-utilizing weaker ones, exacerbating inter-modal imbalances and further complicating the implementation of effective optimization strategies. ❸ Sample Quality Variability, the quality of multimodal data fluctuates across instances due to environmental factors or sensor issues [45, 46, 47]. As shown in Fig 2, the informativeness and representation capabilities of the visual modality differ across samples, resulting in varying contributions to multimodal predictions [47, 32, 3, 10]. However, mainstream methods typically batch multimodal samples randomly during iterative training, disregarding the inherent imbalances in sample difficulty, which can hinder model convergence and performance. Overall, single static optimization strategies are insufficient in addressing the dynamic fluctuations of sample quality and modality contributions, ultimately limiting the potential for effective multimodal collaboration and the exploitation of complementary inter-modal strengths.

To address these challenges, we propose DynCIM, a dynamic multimodal curriculum learning framework that adaptively optimizes cross-modal collaboration from both sample and modality perspectives. Specifically, Sample-level Dynamic Curriculum leverages back-propagation byproducts without extra neural modules to assess the real-time difficulty of multimodal samples. A dynamic weighting strategy prioritizes volatile metrics, allowing the model to emphasize critical aspects of sample evaluation under varying conditions. Meanwhile, Modality-level Dynamic Curriculum quantifies each modality’s contribution from global and local perspectives, using the Geometric Mean to evaluate overall performance improvement and the Harmonic Mean to detect redundant modalities. This dual-perspective approach ensures a more balanced fusion process by optimizing both collective impact and individual contributions, ultimately enhancing multimodal learning efficiency. To mitigate inter-modal imbalances, we introduce a Modality Gating Mechanism that dynamically adjusts each modality’s contribution, suppressing redundancy while amplifying informative signals. An adaptive balance factor further refines fusion by shifting the model’s focus between overall effectiveness and individual modality optimization, enhancing inter-modal coordination.

By integrating these mechanisms, DynCIM effectively addresses both sample and modality imbalances, dynamically guiding training to emphasize representative instances while progressively incorporating more challenging ones. This adaptive learning framework ensures a balanced, efficient, and robust multimodal learning process, ultimately improving inter-modal collaboration and fully leveraging complementary strengths for more effective decision-making. To validate its effectiveness and generalizability, we evaluate DynCIM across six widely used multimodal benchmarks in both bimodal and trimodal scenarios. Our contributions are threefold:

-

•

Dynamic Curricum Learning. We propose DynCIM, a multimodal curriculum learning framework that adaptively addresses sample- and modality-level imbalances, ensuring a progressive and balanced learning process.

-

•

Adaptive Optimization Strategy. We introduce a dual-level curriculum, combining Sample-level Curriculum for difficulty-aware training and Modality-level Curriculum for optimal modality fusion, with a Modality Gating Mechanism dynamically balancing modality contributions for effective multimodal learning.

-

•

Empirical Effectiveness. Extensive experiments on six multimodal benchmarks demonstrate that DynCIM consistently outperforms state-of-the-art methods in both multimodal fusion and imbalanced multimodal learning tasks, confirming its robustness and generalizability.

2 Related Work

2.1 Imbalanced Multimodal Learning

Multimodal learning enhances decision-making by leveraging the strengths of each modality. However, modality imbalance arises when certain modalities dominate due to uneven unimodal representational capabilities and training distribution bias [5, 33, 38]. To mitigate this, methods like OGM-GE [21], PMR [8], and SMV [32] adjust modality contributions through gradient modulation, prototype clustering, and Shapley-based resampling, respectively. While effective, these approaches primarily focus on modality contributions, overlooking sample-level imbalances caused by data quality variations or latent noise. Randomly batching samples can degrade performance and slow convergence, particularly when low-quality data dominates early training stages. To address this, we propose a curriculum learning strategy that dynamically reweights samples based on their difficulty and inter-modal collaboration effectiveness, ensuring a progressive learning process from simpler to more challenging examples.

2.2 Curriculum Learning

Curriculum learning [2] facilitates a gradual transition from simple to complex tasks during model training, guided by two components: the Difficulty Measurer and the corresponding Training Scheduler. This easy-to-hard strategy helps guide and denoise the learning process, accelerating convergence and enhancing generalization capabilities [29]. CMCL [19] and I2MCL [49] assess difficulty using pre-trained models, but their reliance on such models increases inference costs and limits applicability in resource-constrained scenarios. To overcome this, we propose a dynamic curriculum learning method that leverages back-propagation byproducts to assess real-time sample difficulty without extra neural modules. By dynamically adjusting training priorities based on sample difficulty and modality collaboration effectiveness, our approach enhances learning efficiency, accelerates convergence, and improves multimodal performance.

3 Proposed DynCIM

Motivation and Overview. Multimodal Learning faces two key challenges: (1) heterogeneous data quality and convergence rates across modalities, leading to inconsistent learning dynamics, (2) modality imbalance, where dominant modalities suppress weaker ones, limiting the potential for effective cross-modal collaboration. To address these, we introduce DyCIM, a dynamic curriculum learning framework that adaptively prioritizes samples and modalities based on their real-time difficulty and contribution. DyCIM consists of three components: ❶ Dynamic curriculum that evaluates the instantaneous difficulty of each multimodal sample using metrics from both sample and modality perspectives (Section 3.2-3.3). ❷ Dynamic fusion strategy that adaptively integrates dynamically weighted samples and modality contributions, balancing between overall fusion effectiveness and individual modality fine-tuning (Section 3.4). ❸ Overall multimodal curriculum learning framework that regulates the learning process across diverse multimodal samples, prioritizing informative samples and modalities while minimizing redundancy, thus enhancing training efficiency and robustness (Section 3.5).

3.1 Model Formulation

For multimodal tasks, given the dataset , where is the size of . Each sample has modalities, and is the corresponding label, is the size of label space. To leverage information from multiple modalities and achieve effective multimodal cooperation, each modality employs a specific pre-trained unimodal encoder to extract features of respective modal data, denoted as . For modality , its encoder can be regarded as a probabilistic model that convert an observation to a Softmax-based predictive distribution .

3.2 Sample-level Dynamic Curriculum

To systematically evaluate sample difficulty from multiple perspectives relevant to real-world multimodal tasks, we propose a task-oriented sample-level curriculum that quantifies sample difficulty based on prediction deviation, consistency, and stability. This formulation enables a dynamic assessment of each sample’s learning complexity relative to the model’s evolving capacity, ensuring a more adaptive and balanced training process.

3.2.1 Prediction Deviation

Conventional methods rely solely on multimodal loss as the supervisory signal, which tends to amplify dominant modalities and exacerbate inter-modal imbalances. To address this issue, we introduce both unimodal and multimodal losses, which leverage modality uncertainty to estimate predictive perplexity and reduce biases in multimodal joint training as

| (1) |

the weight is dynamically adjusted according to the entropy of each modality’s prediction space, , indirectly capturing each unimodal encoder’s maturity and adaptively adjusting the supervisory signal accordingly.

3.2.2 Prediction Consistency

To ensure each unimodal encoder supports multimodal training effectively, it is crucial to monitor the imbalances in decision-making across modalities. By measuring consistency between unimodal predictions and the fused multimodal result, we can address inter-modal discrepancies, promoting balanced collaboration among modalities as

| (2) |

where is the fused multimodal prediction probability. The squared Euclidean distance quantifies the divergence between the -th modality’s prediction and the fused result, with larger values indicating weaker, possibly dominated contributions. This metric dynamically tracks each modality’s impact, enabling real-time adjustments to balance influences and enhance fusion effectiveness.

3.2.3 Prediction Stability

In high-noise or ambiguous-feature scenarios, even when multimodal encoders achieve consistent decisions, unpredictable environmental factors can still impact each modality to varying degrees. By quantifying the confidence of each modality’s decisions in complex contexts, we gain further insight into robustness and generalization imbalances across modalities as

| (3) |

where and are the Softmax-based predicted probabilities of the correct and incorrect classes, respectively. This metric rewards correct predictions while penalizing incorrect ones, providing insight into the model’s reliability across varied multimodal contexts by highlighting the stability of predictions under challenging conditions.

3.2.4 Adaptive Multi-metric Weighting Strategy

In real-world multimodal scenarios, unpredictable environmental factors and inconsistent data collection can unevenly impact samples, making it challenging for a single metric to capture the complex supervisory needs required. To address this, we propose an adaptive multi-metric weighting strategy that dynamically shifts the model’s focus toward critical aspects by identifying metrics with higher volatility using standardization and exponential moving average (EMA). By assigning greater weight to these volatile metrics, the model prioritizes challenging samples, optimizing learning across diverse scenarios. Specifically, all metrics are first standardized via a sigmoid transformation as

| (4) |

with for negative indicators (e.g., loss) and for positive ones (e.g., consistency, stability), ensuring that higher values indicate greater sample difficulty. To track and smooth fluctuations, we then compute the exponential moving average (EMA) of each metric’s changes as

| (5) |

where is the EMA of volatility for the -th metric at training step , and is a smoothing factor that emphasizes recent data, providing a responsive measure of the model’s current capabilities. Metric weights are then dynamically assigned based on the normalized EMA of volatility, giving higher weights to metrics with greater variability as

| (6) |

These weights integrate metrics into a composite task-oriented difficulty score, , enabling adaptive assessment of sample-level difficulty and guiding the model to prioritize the most critical aspects of the multimodal task as training progresses.

3.3 Modality-level Dynamic Curriculum

Effectively leveraging multiple modalities is key to enhancing multimodal learning, yet modeling individual modality contributions remains challenging, often leading to imbalances and inefficiencies. To address this, we introduce Geometric Mean Ratio (GMR) and Harmonic Mean Improvement Rate (HMIR), which assess modality contributions from global and local perspectives, enabling finer-grained quantification for balanced fusion.

3.3.1 Geometric Mean Ratio

The Geometric Mean Ratio (GMR) offers a global perspective on the fusion model’s performance by capturing the overall improvement achieved through multimodal integration compared to individual modalities, thus highlighting the collective impact of fusion. GMR is defined as

| (7) |

When the GMR significantly exceeds 1, it indicates a substantial overall improvement in model performance resulting from effective fusion. Conversely, GMR close to or below 1 suggests that certain modalities contribute redundantly or insignificantly, providing insight into the global efficiency of the fusion process.

3.3.2 Harmonic Mean Improvement Rate

In contrast to GMR’s global focus, the Harmonic Mean Improvement Rate (HMIR) offers a local view of each modality’s fine-grained contribution, emphasizing the minimum impactful ones. This approach allows dynamic identification and adjustment of under-performing or redundant modalities in imbalanced tasks, ensuring no single modality disproportionately detracts from performance as

| (8) |

where is a small positive constant to prevent division by zero, and indicates the performance gain brought by multimodal fusion over each individual unimodal network as

| (9) |

the weight reflects the relative importance of each modality based on its contribution during multimodal fusion and satisfies . It is initialized as

| (10) |

By focusing on each modality’s minimal contribution, HMIR enables precise, targeted adjustments, ensuring the model dynamically adapts to minimize the impact of under-performing modalities.

3.4 Dynamic Multimodal Fusion

To leverage the most informative modalities while minimizing the impact of redundant ones, we introduced a dynamic network architecture that adaptively suppresses redundant modalities and adjusts the weights of each modality throughout the training process.

3.4.1 Modality Gating Mechanism

The modality gating mechanism dynamically controls the activation or suppression of each modality based on the fusion-level dynamic curriculum, thereby determining each modality’s contribution to decision-making. The gating function for the -th modality is

| (11) |

where is the sigmoid activation function, and is a balancing factor that dynamically shifts the model’s focus between overall fusion effectiveness and individual modality contributions. This balancing factor is defined as

| (12) |

where is initialized to 0.5 to balance overall and individual contributions, while represents the average gain across all modalities, helping adjust the relative importance of each modality based on its deviation from this average.

3.4.2 Multimodal Fusion and Prediction

Following the determination of activation states, the weights of active modalities are dynamically adjusted according to the modality-level curriculum, where the updated weight for the -th modality is given by . Here, is initialized based on Equation (10), with as the learning rate controls the adjustment magnitude. To aggregate the outputs from active modalities, the dynamically adjusted weights are applied to the respective modality features as

| (13) |

where denotes the the modalities activated by the gating mechanism, and is the unimodal output of modality , ensuring that the most informative modalities contribute proportionally to the final decision and enhance overall multimodal integration effectiveness.

3.5 Multimodal Curriculum Learning

To address the inherent cross-modal heterogeneity and varying quality across multimodal samples, we propose an integrated curriculum learning strategy that combines sample-level and modality-level dynamic curricula. This approach adaptively prioritizes samples and modalities based on their estimated difficulty and information richness, ensuring a structured and progressive learning process. Our approach dynamically adjusts sample importance according to its contribution to model progression. Specifically, the optimization objective is defined as:

| (14) |

where represents the task-oriented sample difficulty derived from prediction deviation, consistency, and stability, while reflects the effectiveness of multimodal fusion for the -th sample. The reweighting factor ensures the model prioritizes samples that are both informative and challenging, directing learning focus toward key aspects of multimodal optimization. To prevent overfitting or instability in sample weighting, we introduce a regularization term , which encourages smooth weight transitions and prevents the model from over-relying on specific samples. By jointly optimizing sample-level and modality-level curriculum, this strategy enables the model to dynamically adapt to data heterogeneity, effectively balance learning across modalities, and enhance robustness and efficiency in multimodal learning.

4 Experiments

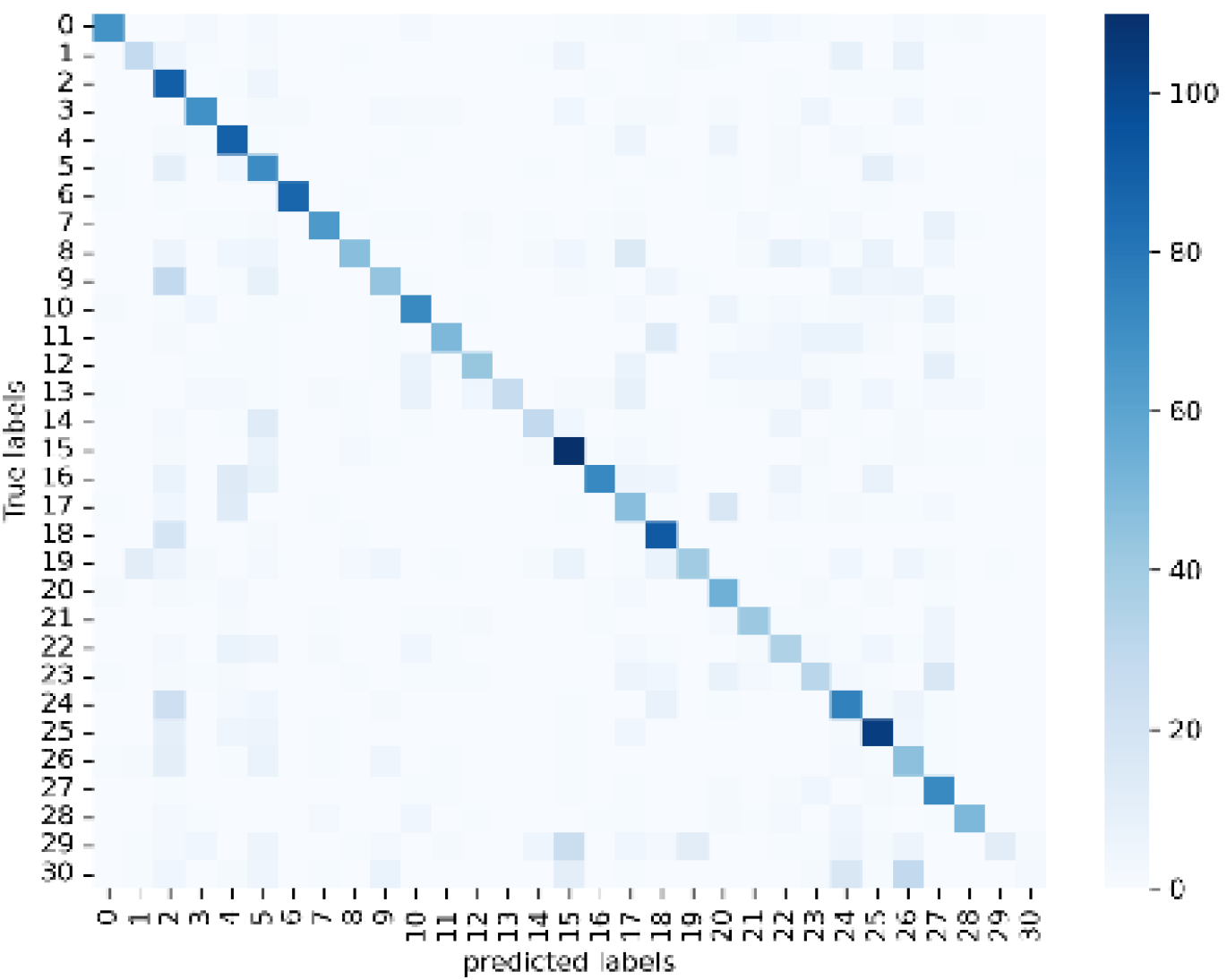

Datasets. We conduct experiments on six widely used multimodal benchmarks datasets, covering both bi-modal and tri-modal scenarios. Kinetic Sounds (KS) [1] is an audio-visual action recognition dataset across 31 human action classes from YouTube videos. CREMA-D [4] is an audio-visual dataset for speech emotion recognition, containing six emotions labeled by 2,443 crowd-sourced raters. UCF-101 [26] is a bi-modal action recognition dataset featuring both RGB and Optical Flow data across 101 human actions categories. CMU-MOSI [43], CMU-MOSEI [44] and CH-SIMS [41] are multimodal sentiment analysis datasets incorporating audio, visual, and textual modalities.

Experimental settings. All experiments are conducted on an NVIDIA A6000 Ada with 48GB memory. Specifically, for the KS, CREMA-D and UCF-101 datasets, we adopt ResNet-18 as the backbone to ensure a fair comparison, following prior works [33, 31, 12, 48]. For the MOSI, MOSEI, and CH-SIMS datasets, all datasets and experimental settings are implemented by MMSA-FET toolkit [42].

| Method | KS | CREMA-D | UCF-101 |

| (Audio+Visual) | (Audio+Visual) | (RGB+OF) | |

| Unimodal Baselines | |||

| Audio/RGB-only | 53.01 | 61.33 | 65.97 |

| Visual/OF-only | 48.75 | 55.85 | 78.33 |

| Conventional Multimodal Fusion Methods | |||

| Concatenation | 61.57 | 63.47 | 81.18 |

| Summation | 61.03 | 62.67 | 80.69 |

| FiLM [23] | 60.88 | 62.05 | 79.33 |

| BiGated [15] | 62.41 | 63.88 | 81.50 |

| Dynamic Multimodal Fusion Methods | |||

| TMC (TPAMI’22) | 65.88 | 65.57 | 82.46 |

| QMF (ICML’23) | 63.83 | 65.03 | 82.09 |

| PDF (ICML’24) | 66.18 | 66.67 | 83.67 |

| Imbalanced Multimodal Learning Methods | |||

| OGM-GE (CVPR’22) | 63.48 | 65.58 | 82.45 |

| PMR (CVPR’23) | 63.33 | 66.07 | 82.31 |

| I2MCL (MM’23) | 65.22 | 65.58 | 81.96 |

| UMT (CVPR’24) | 66.08 | 66.68 | 82.62 |

| SMV (CVPR’24) | 65.89 | 67.27 | 83.04 |

| MLA (CVPR’24) | 67.55 | 69.53 | 84.21 |

| MMPareto (ICML’24) | 67.18 | 71.08 | 84.89 |

| ReconBoost (ICML’24) | 68.22 | 71.62 | 85.41 |

| CGGM (NeurIPS’24) | 68.31 | 71.41 | 85.88 |

| LFM (NeurIPS’24) | 68.77 | 72.88 | 86.01 |

| DynCIM (Ours) | 71.22 | 75.55 | 88.76 |

| Method | CMU-MOSI | CMU-MOSEI | CH-SIMS |

| Unimodal Baselines | |||

| Audio-only | 54.69 | 52.21 | 58.17 |

| Visual-only | 57.55 | 58.04 | 62.88 |

| Text-only | 75.58 | 66.27 | 70.21 |

| Conventional Multimodal Fusion Methods | |||

| Concatenation | 76.57 | 67.78 | 73.31 |

| Summation | 76.08 | 67.35 | 73.01 |

| FiLM [23] | 75.94 | 67.01 | 72.68 |

| BiGated [15] | 76.97 | 68.01 | 73.78 |

| Dynamic Multimodal Fusion Methods | |||

| TMC (TPAMI’22) | 77.89 | 73.72 | 75.54 |

| QMF (ICML’23) | 77.06 | 73.11 | 75.12 |

| PDF (ICML’24) | 78.44 | 74.42 | 76.01 |

| Imbalanced Multimodal Learning Methods | |||

| OGM-GE (CVPR’22) | 77.08 | 71.76 | 74.03 |

| PMR (CVPR’23) | 77.42 | 72.04 | 74.24 |

| I2MCL (MM’23) | 77.21 | 72.43 | 74.67 |

| UMT (CVPR’24) | 77.64 | 72.78 | 75.35 |

| SMV (CVPR’24) | 77.88 | 72.49 | 75.07 |

| MLA (CVPR’24) | 78.53 | 74.58 | 77.42 |

| ReconBoost (ICML’24) | 78.27 | 74.02 | 77.15 |

| MMPareto (ICML’24) | 78.56 | 74.12 | 77.78 |

| CGGM (NeurIPS’24) | 78.98 | 74.42 | 77.64 |

| LFM (NeurIPS’24) | 79.41 | 74.88 | 78.13 |

| DynCIM (Ours) | 81.47 | 76.74 | 80.04 |

4.1 Comparsion with State-of-the-Arts

Conventional multimodal fusion methods. Feature-level fusion methods like Concatenation, FiLM, and BiGated outperform the decision-level Summation approach, which, despite its interpretability, serves better as a foundational component within DynCIM. While Concatenation and BiGated enhance feature integration and boost performance, they lack adaptability to dynamic modality contributions, limiting their effectiveness in addressing sample and modality imbalances.

Dynamic multimodal fusion methods. To further explore the benefits of adaptive fusion, we compare DynCIM with TMC [11], QMF [46], and PDF [3], which dynamically adjust modality weights to enhance performance. While these methods improve upon static fusion by leveraging uncertainty-based weighting, they are more susceptible to noise sensitivity due to reliance on uncertainty estimation. In contrast, DynCIM employs a gating mechanism that adaptively modulates each modality’s contribution based on real-time effectiveness, reducing redundancy and minimizing the impact of noisy modalities. Additionally, our adaptive balance factor dynamically shifts the focus between optimizing overall fusion and fine-tuning individual modality contributions, making DynCIM more robust and adaptable across diverse multimodal scenarios.

Imbalanced multimodal learning methods. To evaluate the effectiveness in addressing multimodal imbalances, we compare it againest OGM-GE [22], PMR [8], I2MCL [49], UMT [7], SMV [32], MLA [48], ReconBoost [12], MMPareto [31], CGGM [9] and LFM [39] in (Table 1 & 2). DynCIM consistently outperforms all methods in both bi-modal (KS, CREMA-D, UCF-101) and tri-modal (CMU-MOSI, CMU-MOSEI, CH-SIMS) settings. While MMPareto and ReconBoost improve modality alignment and CGGM and LFM refine fusion strategies, they rely on static optimization and struggle with adaptive adjustments. In contrast, DynCIM dynamically reweights samples and modalities through gradient-driven curriculum learning, prioritizing informative instances and balancing modality contributions in real-time. With a computational complexity of , DynCIM achieves state-of-the-art performance, offering superior efficiency, adaptability, and robustness in multimodal learning.

Modality Gap Analysis. Fig 4 visualizes the modality gap between Audio and Visual features on the KS dataset, comparing Concat Fusion and DynCIM. In Concat Fusion, the features are scattered and poorly aligned, indicating weak cross-modal consistency and ineffective fusion. In contrast, DynCIM significantly reduces the modality gap, producing a tightly aligned feature distribution. This improvement stems from MDC’s dynamic modality contribution adjustment and gating mechanism, which together enhance inter-modal alignment by suppressing redundancy and amplifying informative signals, resulting in a more cohesive multimodal representation.

| Module | KS | CREMA-D | UCF-101 | |

|---|---|---|---|---|

| SDC | MDC | (Audio+Visual) | (Audio+Visual) | (RGB+OF) |

| ✗ | ✗ | 62.19 | 65.23 | 81.69 |

| ✓ | ✗ | 66.45 | 70.22 | 84.50 |

| ✗ | ✓ | 68.19 | 72.73 | 86.29 |

| ✓ | ✓ | 71.22 | 75.55 | 88.76 |

| Measure | KS | CREMA-D | UCF-101 | ||

|---|---|---|---|---|---|

| (Audio+Visual) | (Audio+Visual) | (RGB+OF) | |||

| ✗ | ✗ | ✗ | 65.19 | 67.73 | 81.29 |

| ✓ | ✗ | ✗ | 68.70 | 71.80 | 84.94 |

| ✓ | ✓ | ✗ | 70.03 | 74.12 | 87.05 |

| ✓ | ✓ | ✓ | 71.22 | 75.55 | 88.76 |

| Measure | KS | CREMA-D | UCF-101 | |

|---|---|---|---|---|

| GMR | HMIR | (Audio+Visual) | (Audio+Visual) | (RGB+OF) |

| ✗ | ✗ | 66.45 | 69.22 | 82.50 |

| ✓ | ✗ | 69.86 | 72.81 | 85.34 |

| ✗ | ✓ | 69.37 | 73.55 | 86.07 |

| ✓ | ✓ | 71.22 | 75.55 | 88.76 |

| Methods | KS | CREMA-D | UCF-101 |

|---|---|---|---|

| Uniform Weighting | 67.09 | 71.35 | 84.18 |

| Modality Gating Mechanism | 71.22 | 75.55 | 88.76 |

4.2 Ablation Studies

To validate the effectiveness of each component in DynCIM, we conducted a series of four ablation studies on the KS, CREMA-D, and UCF-101 datasets, systematically analyzing the contributions of sample-level (SDC) and modality-level (MDC) dynamic curriculum, their individual evaluation metrics, and the modality gating mechanism.

Impact of SDC and MDC. To assess the impact of SDC and MDC, we analyze quantitative results (Table 3) across three datasets and t-SNE visualizations (Fig 5) on the KS dataset. Both components significantly enhance multimodal learning, with MDC providing greater improvement by dynamically optimizing modality contributions. The t-SNE results further support this: without SDC, feature distributions show weaker intra-class compactness, highlighting its role in refining sample representations and addressing imbalances. Without MDC, class separation is less distinct, demonstrating its importance in enhancing cross-modal integration. In contrast, the full DynCIM framework yields well-structured, compact feature distributions, confirming that SDC strengthens intra-class consistency while MDC optimizes inter-modal fusion, leading to more robust and discriminative multimodal representations.

Impact of specific mechanisms. We conduct three ablation studies to analyze DynCIM’s key mechanisms. First, evaluating individual SDC metrics (Table 4) shows that incorporating prediction deviation, consistency, and stability enhances performance, emphasizing the need for comprehensive difficulty assessment. Second, Table 5 reveals that combining Geometric Mean Ratio (GMR) and Harmonic Mean Improvement Ratio (HMIR) achieves optimal results, balancing overall modality impact and fine-grained adjustments. Lastly, comparing the Modality Gating Mechanism with Uniform Weighting (Table 6) highlights its effectiveness in dynamically adjusting modality contributions, reducing redundancy, and improving robustness.

Impact of learning strategies. To analyze the effectiveness of different learning strategies, we visualize their impact on the KS dataset in Fig 6. Concatenation-based Fusion lacks a structured training approach, leading to higher mis-classification rates. Hard-to-easy training struggles with early-stage instability, resulting in scattered predictions and poor convergence. Easy-to-hard training shows improved alignment but lacks adaptability to sample variations. In contrast, DynCIM achieves the most structured and well-aligned predictions, demonstrating that our progressive curriculum learning strategy effectively balances stability and adaptability, leading to smoother convergence and improved accuracy.

4.3 Parameter Sensitivity Analysis

The parameter in the EMA update significantly influences sample-level metric performance in model fusion, affecting overall convergence. As shown in Figure 7, we tested various values on the CREMA-D and UCF-101 datasets, with optimal accuracy achieved at . Smaller values cause current metrics to lag behind historical updates, hindering convergence. Conversely, larger values reduce the discrepancy between current and historical metrics, limiting the historical average’s ability to correct biases in the current metric weighting.

5 Conclusions

We propose DynCIM, a dynamic multimodal curriculum learning framework that integrates sample and modality-level curriculum to adjust training priorities based on real-time difficulty and modality contributions. A gating mechanism further enhances fusion effectiveness, mitigating redundancy and improving adaptability. Extensive experiments on six benchmarks demonstrate DynCIM’s superiority over state-of-the-art methods, achieving more balanced, robust, and efficient multimodal learning.

References

- Arandjelovic and Zisserman [2017] Relja Arandjelovic and Andrew Zisserman. Look, listen and learn. In Proceedings of the IEEE international conference on computer vision, pages 609–617, 2017.

- Bengio et al. [2009] Yoshua Bengio, Jérôme Louradour, Ronan Collobert, and Jason Weston. Curriculum learning. In Proceedings of the 26th annual international conference on machine learning, pages 41–48, 2009.

- Cao et al. [2024] Bing Cao, Yinan Xia, Yi Ding, Changqing Zhang, and Qinghua Hu. Predictive dynamic fusion. In International Conference on Machine Learning. PMLR, 2024.

- Cao et al. [2014] Houwei Cao, David G Cooper, Michael K Keutmann, Ruben C Gur, Ani Nenkova, and Ragini Verma. Crema-d: Crowd-sourced emotional multimodal actors dataset. IEEE transactions on affective computing, 5(4):377–390, 2014.

- Das et al. [2023] Arindam Das, Sudip Das, Ganesh Sistu, Jonathan Horgan, Ujjwal Bhattacharya, Edward Jones, Martin Glavin, and Ciarán Eising. Revisiting modality imbalance in multimodal pedestrian detection. In 2023 IEEE International Conference on Image Processing (ICIP), pages 1755–1759. IEEE, 2023.

- Das and Singh [2023] Ringki Das and Thoudam Doren Singh. Multimodal sentiment analysis: a survey of methods, trends, and challenges. ACM Computing Surveys, 55(13s):1–38, 2023.

- Du et al. [2021] Chenzhuang Du, Tingle Li, Yichen Liu, Zixin Wen, Tianyu Hua, Yue Wang, and Hang Zhao. Improving multi-modal learning with uni-modal teachers. arXiv preprint arXiv:2106.11059, 2021.

- Fan et al. [2023] Yunfeng Fan, Wenchao Xu, Haozhao Wang, Junxiao Wang, and Song Guo. Pmr: Prototypical modal rebalance for multimodal learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 20029–20038, 2023.

- Guo et al. [2024] Zirun Guo, Tao Jin, Jingyuan Chen, and Zhou Zhao. Classifier-guided gradient modulation for enhanced multimodal learning. Advances in Neural Information Processing Systems, 37:133328–133344, 2024.

- Han et al. [2022a] Zongbo Han, Fan Yang, Junzhou Huang, Changqing Zhang, and Jianhua Yao. Multimodal dynamics: Dynamical fusion for trustworthy multimodal classification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 20707–20717, 2022a.

- Han et al. [2022b] Zongbo Han, Changqing Zhang, Huazhu Fu, and Joey Tianyi Zhou. Trusted multi-view classification with dynamic evidential fusion. IEEE transactions on pattern analysis and machine intelligence, 45(2):2551–2566, 2022b.

- Hua et al. [2024] Cong Hua, Qianqian Xu, Shilong Bao, Zhiyong Yang, and Qingming Huang. Reconboost: Boosting can achieve modality reconcilement. In International Conference on Machine Learning, pages 19573–19597. PMLR, 2024.

- Hwang et al. [2024] Jyh-Jing Hwang, Runsheng Xu, Hubert Lin, Wei-Chih Hung, Jingwei Ji, Kristy Choi, Di Huang, Tong He, Paul Covington, Benjamin Sapp, et al. Emma: End-to-end multimodal model for autonomous driving. arXiv preprint arXiv:2410.23262, 2024.

- Jabeen et al. [2023] Summaira Jabeen, Xi Li, Muhammad Shoib Amin, Omar Bourahla, Songyuan Li, and Abdul Jabbar. A review on methods and applications in multimodal deep learning. ACM Transactions on Multimedia Computing, Communications and Applications, 19(2s):1–41, 2023.

- Kiela et al. [2018] Douwe Kiela, Edouard Grave, Armand Joulin, and Tomas Mikolov. Efficient large-scale multi-modal classification. In Proceedings of the AAAI conference on artificial intelligence, 2018.

- Li et al. [2023a] Bobo Li, Hao Fei, Lizi Liao, Yu Zhao, Chong Teng, Tat-Seng Chua, Donghong Ji, and Fei Li. Revisiting disentanglement and fusion on modality and context in conversational multimodal emotion recognition. In Proceedings of the 31st ACM International Conference on Multimedia, pages 5923–5934, 2023a.

- Li et al. [2023b] Yong Li, Yuanzhi Wang, and Zhen Cui. Decoupled multimodal distilling for emotion recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6631–6640, 2023b.

- Liang et al. [2024] Paul Pu Liang, Amir Zadeh, and Louis-Philippe Morency. Foundations & trends in multimodal machine learning: Principles, challenges, and open questions. ACM Computing Surveys, 56(10):1–42, 2024.

- Liu et al. [2021] Fenglin Liu, Shen Ge, and Xian Wu. Competence-based multimodal curriculum learning for medical report generation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 3001–3012, 2021.

- Meng et al. [2024] Tao Meng, Yuntao Shou, Wei Ai, Nan Yin, and Keqin Li. Deep imbalanced learning for multimodal emotion recognition in conversations. IEEE Transactions on Artificial Intelligence, 2024.

- Peng et al. [2022a] Xiaokang Peng, Yake Wei, Andong Deng, Dong Wang, and Di Hu. Balanced multimodal learning via on-the-fly gradient modulation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8238–8247, 2022a.

- Peng et al. [2022b] Xiaokang Peng, Yake Wei, Andong Deng, Dong Wang, and Di Hu. Balanced multimodal learning via on-the-fly gradient modulation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8238–8247, 2022b.

- Perez et al. [2018] Ethan Perez, Florian Strub, Harm De Vries, Vincent Dumoulin, and Aaron Courville. Film: Visual reasoning with a general conditioning layer. In Proceedings of the AAAI conference on artificial intelligence, 2018.

- Radford et al. [2021] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- Shen et al. [2023] Meng Shen, Yizheng Huang, Jianxiong Yin, Heqing Zou, Deepu Rajan, and Simon See. Towards balanced active learning for multimodal classification. In Proceedings of the 31st ACM International Conference on Multimedia, pages 3434–3445, 2023.

- Soomro [2012] K Soomro. Ucf101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv:1212.0402, 2012.

- Van der Maaten and Hinton [2008] Laurens Van der Maaten and Geoffrey Hinton. Visualizing data using t-sne. Journal of machine learning research, 9(11), 2008.

- Wang et al. [2020] Weiyao Wang, Du Tran, and Matt Feiszli. What makes training multi-modal classification networks hard? In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 12695–12705, 2020.

- Wang et al. [2021] Xin Wang, Yudong Chen, and Wenwu Zhu. A survey on curriculum learning. IEEE transactions on pattern analysis and machine intelligence, 44(9):4555–4576, 2021.

- Wang et al. [2024] Xiyao Wang, Jiuhai Chen, Zhaoyang Wang, Yuhang Zhou, Yiyang Zhou, Huaxiu Yao, Tianyi Zhou, Tom Goldstein, Parminder Bhatia, Furong Huang, et al. Enhancing visual-language modality alignment in large vision language models via self-improvement. arXiv preprint arXiv:2405.15973, 2024.

- Wei and Hu [2024] Yake Wei and Di Hu. Mmpareto: boosting multimodal learning with innocent unimodal assistance. arXiv preprint arXiv:2405.17730, 2024.

- Wei et al. [2024a] Yake Wei, Ruoxuan Feng, Zihe Wang, and Di Hu. Enhancing multimodal cooperation via sample-level modality valuation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 27338–27347, 2024a.

- Wei et al. [2024b] Yake Wei, Di Hu, Henghui Du, and Ji-Rong Wen. On-the-fly modulation for balanced multimodal learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024b.

- Wu et al. [2022] Nan Wu, Stanislaw Jastrzebski, Kyunghyun Cho, and Krzysztof J Geras. Characterizing and overcoming the greedy nature of learning in multi-modal deep neural networks. In International Conference on Machine Learning, pages 24043–24055. PMLR, 2022.

- Xing et al. [2024a] Shuo Xing, Hongyuan Hua, Xiangbo Gao, Shenzhe Zhu, Renjie Li, Kexin Tian, Xiaopeng Li, Heng Huang, Tianbao Yang, Zhangyang Wang, et al. Autotrust: Benchmarking trustworthiness in large vision language models for autonomous driving. arXiv preprint arXiv:2412.15206, 2024a.

- Xing et al. [2024b] Shuo Xing, Chengyuan Qian, Yuping Wang, Hongyuan Hua, Kexin Tian, Yang Zhou, and Zhengzhong Tu. Openemma: Open-source multimodal model for end-to-end autonomous driving. arXiv preprint arXiv:2412.15208, 2024b.

- Xing et al. [2025] Shuo Xing, Yuping Wang, Peiran Li, Ruizheng Bai, Yueqi Wang, Chengxuan Qian, Huaxiu Yao, and Zhengzhong Tu. Re-align: Aligning vision language models via retrieval-augmented direct preference optimization. arXiv preprint arXiv:2502.13146, 2025.

- Xu et al. [2023] Peng Xu, Xiatian Zhu, and David A Clifton. Multimodal learning with transformers: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- Yang et al. [2024a] Yang Yang, Fengqiang Wan, Qing-Yuan Jiang, and Yi Xu. Facilitating multimodal classification via dynamically learning modality gap. Advances in Neural Information Processing Systems, 37:62108–62122, 2024a.

- Yang et al. [2024b] Zequn Yang, Yake Wei, Ce Liang, and Di Hu. Quantifying and enhancing multi-modal robustness with modality preference. In The Twelfth International Conference on Learning Representations, 2024b.

- Yu et al. [2020] Wenmeng Yu, Hua Xu, Fanyang Meng, Yilin Zhu, Yixiao Ma, Jiele Wu, Jiyun Zou, and Kaicheng Yang. Ch-sims: A chinese multimodal sentiment analysis dataset with fine-grained annotation of modality. In Proceedings of the 58th annual meeting of the association for computational linguistics, pages 3718–3727, 2020.

- Yu et al. [2021] Wenmeng Yu, Hua Xu, Ziqi Yuan, and Jiele Wu. Learning modality-specific representations with self-supervised multi-task learning for multimodal sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 10790–10797, 2021.

- Zadeh et al. [2016] Amir Zadeh, Rowan Zellers, Eli Pincus, and Louis-Philippe Morency. Multimodal sentiment intensity analysis in videos: Facial gestures and verbal messages. IEEE Intelligent Systems, 31(6):82–88, 2016.

- Zadeh et al. [2018] AmirAli Bagher Zadeh, Paul Pu Liang, Soujanya Poria, Erik Cambria, and Louis-Philippe Morency. Multimodal language analysis in the wild: Cmu-mosei dataset and interpretable dynamic fusion graph. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2236–2246, 2018.

- Zhang et al. [2023a] Qingyang Zhang, Haitao Wu, Changqing Zhang, Qinghua Hu, Huazhu Fu, Joey Tianyi Zhou, and Xi Peng. Provable dynamic fusion for low-quality multimodal data. In International conference on machine learning, pages 41753–41769. PMLR, 2023a.

- Zhang et al. [2023b] Qingyang Zhang, Haitao Wu, Changqing Zhang, Qinghua Hu, Huazhu Fu, Joey Tianyi Zhou, and Xi Peng. Provable dynamic fusion for low-quality multimodal data. In International conference on machine learning, pages 41753–41769. PMLR, 2023b.

- Zhang et al. [2024a] Qingyang Zhang, Yake Wei, Zongbo Han, Huazhu Fu, Xi Peng, Cheng Deng, Qinghua Hu, Cai Xu, Jie Wen, Di Hu, and Zhang Changqing. Multimodal fusion on low-quality data: A comprehensive survey. arXiv preprint arXiv:2404.18947, 2024a.

- Zhang et al. [2024b] Xiaohui Zhang, Jaehong Yoon, Mohit Bansal, and Huaxiu Yao. Multimodal representation learning by alternating unimodal adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 27456–27466, 2024b.

- Zhou et al. [2023] Yuwei Zhou, Xin Wang, Hong Chen, Xuguang Duan, and Wenwu Zhu. Intra- and inter-modal curriculum for multimodal learning. In Proceedings of the 31st ACM International Conference on Multimedia, pages 3724–3735, 2023.