Dynamic Spatial-temporal Hypergraph Convolutional Network for Skeleton-based Action Recognition

Abstract

Skeleton-based action recognition relies on the extraction of spatial-temporal topological information. Hypergraphs can establish prior unnatural dependencies for the skeleton. However, the existing methods only focus on the construction of spatial topology and ignore the time-point dependence. This paper proposes a dynamic spatial-temporal hypergraph convolutional network (DST-HCN) to capture spatial-temporal information for skeleton-based action recognition. DST-HCN introduces a time-point hypergraph (TPH) to learn relationships at time points. With multiple spatial static hypergraphs and dynamic TPH, our network can learn more complete spatial-temporal features. In addition, we use the high-order information fusion module (HIF) to fuse spatial-temporal information synchronously. Extensive experiments on NTU RGB+D, NTU RGB+D 120, and NW-UCLA datasets show that our model achieves state-of-the-art, especially compared with hypergraph methods.

Index Terms— Skeleton-based action recognition, hypergraph, hypergraph neural network

1 Introduction

Action recognition is one of the important tasks in computer vision, widely used in human-robot interaction, video understanding and intelligent detection. In recent years, skeleton-based action recognition has attracted increasing attention due to its computational efficiency and robustness to background or environmental changes.

Graph convolution methods are widely used to handle the spatial-temporal topology of the human skeleton. ST-GCN [1] proposed a joint segmentation strategy to delineate the joint points. However, using the human body’s static segmentation strategy is insufficient to adapt to the numerous actions. Therefore, Chen et al. [2] proposed CTR-GCN that generates new topologies based on sample features dynamically. But the spatial topology used in these methods [1, 3, 2] hardly reflects the multiple joints of unnatural dependencies and does not consider the issue of time-point dependencies. The emergence of hypergraph convolution-based approach [4, 5, 6] considers unnatural dependencies beyond the natural topology. While these methods also ignore the temporal dependencies between actions to a certain extent. In addition, both hypergraph and graph convolution methods [3, 7, 2] use a strategy of learning spatial-temporal separately. This results in the spatial-temporal parallel information of action sequences being ignored.

To solve the above problem, we construct a dynamic spatial-temporal hypergraph convolutional network (DST-HCN) that consists of dynamic spatial-temporal hypergraph convolution (DST-HC) and high-order information fusion (HIF). We use the K-NN method in DST-HC to generate dynamic time-point hypergraph (TPH) based on sample features. For the spatial topology, we construct three hypergraph topologies using methods such as k-means. The overview is clearly shown in Fig. 1. TPH and constructed spatial hypergraph topology are passed through a convolution operation to obtain high-order spatial-temporal feature information. In HIF, action modeling is done by aggregating and concatenating the high-order information through multi-feature channel operations. As shown in Fig. 2, we introduce TPH to solve the adjacent topological similarity problem. In addition, we use spatial-temporal parallel learning to fuse features efficiently.

In summary, our contributions are mainly in the following areas:

-

•

We propose the DST-HCN that captures important spatial-temporal feature information based on the constructed multiple hypergraphs. And an effective fusion is done through parallel spatial-temporal modeling to finalize the action recognition task.

-

•

We introduce the time-point hypergraph. By combining spatial hypergraphs, we construct completable spatial-temporal dependencies.

-

•

Extensive experiments show that DST-HCN achieves state-of-the-art on the NTU RGB+D, NTU RGB+D 120, and NW-UCLA datasets.

2 RELATED WORK

2.1 Skeleton-based Action Recognition

CNNs have achieved remarkable results in processing euclidean data like images and RNNs have achieved significant advantages in processing time series. So the research began to emerge with CNN and RNN methods [8, 9, 10]. The advent of graph convolutional neural networks (GCNs) have significantly improved the processing of non-Euclidean data. Yan et al. [1] proposed the ST-GCN model, which can better handle the topological relationship of skeletons to achieve more effectiveness. Chen et al. [2] proposed the CTR-GCN method, which can dynamically learn joint information on space to achieve channel topology modeling. Song et al. [11] proposed a family of efficient GCN baselines with high accuracies and small amounts of trainable parameters. This methods use a strategy of spatial-temporal separate learning in model configuration.

2.2 Hypergraph Neural Networks

The graph structure used in traditional graph convolutional neural networks focuses on establishing a correspondence between two objects to form a set. Hyperedges can connect arbitrary objects to form hypergraphs to obtain high-order dependencies. Feng et al. [12] proposed hypergraph neural network (HGNN) to learn the hidden layer representation considering the high-order data structure. Jo et al. [13] proposed a pairwise hypergraph transformation method that makes the network focus on the feature information of the edges. Jiang et al. [14] proposed a dynamic hypergraph network (DHNN) to update the hypergraph structure dynamically, etc. In this paper, we use the generic hypergraph convolution described with HGNN [12].

3 METHOD

3.1 Preliminaries

Graph Convolutional Network. A skeleton sequence consists of T single-frame skeletal maps, which is represented by [1], where is the set of joints, and is the set of skeletal edges. The skeleton graph is denoted by , which is the adjacency matrix about the joints. A conventional graph convolution method based on skeletal data is:

| (1) |

where denotes the input features of the i-th layer of the network, is the number of channels, denotes the adjacency matrix of the normalised spatial segmentation method, denotes spatial skeletal segmentation method [1], and denotes the learnable weight features.

3.2 Dynamic Spatial-temporal Hypergraph Convolution (DST-HC)

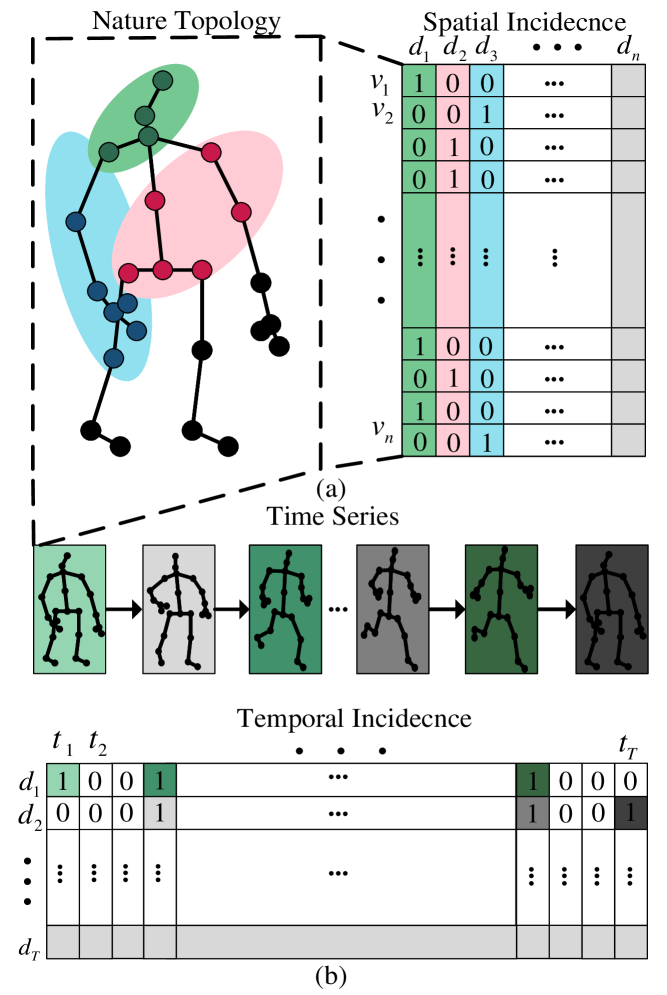

Hypergraph Construction. Unlike the graph convolution method, the hypergraph structure is represented by , where denotes the number of joints, is the set of hyperedges represented by the incidence matrix, and is represented as the set of weights of hyperedges. For the incidence matrix , is the set of joints and denotes the number of hyperedges. For each hyperedge: means the joint belongs to this hyperedges, and means there is no node in this hyperedges.

In the dynamic time-point hypergraph construction method, we use the k-NN method to construct the features after linear transformation and dimensionality reduction to reduce the computational cost. As shown in Fig. 3, the hypergraph is formulated as:

| (2) |

where denotes the number of neighbors of the each hyperedge, denotes the input features, is the linear transformation dimensionality reduction function, is the dimensionality transformation function, and denotes the k-NN function.

We use k-NN, k-means, and the combination of centrifugal and centripetal joints [1] to construct the spatial hypergraph matrix, as shown in Fig. 3. The spatial hypergraph method is formulated as:

| (3) |

where are the three methods mentioned above, and is the topological adjacency matrix.

Further, we construct two spatial-temporal dependencies based on the above hypergraph. As shown in the following equation:

| (4) |

where , and , denotes two dimensional transformation operation functions.

Hypergraph Convolution. We use transposition methods to enable the construction of hypergraph structures with additional learning to the feature of the hyperedges [13]. We use the following hypergraph convolution to capture important high-order feature information [4]:

| (5) |

where represents the learning weights, which are implemented in this paper by different convolution methods, is a hypergraph of different types of constructions, [12], are the diagonal matrices of hyperedges and node degrees respectively, is the diagonal matrix, initialized to the unit matrix, is the input feature,.

In addition, denotes the hyperedge feature is not considered:

| (6) |

Finally, we obtain the following features: , , , , , denoted by , respectively. The implementation process is shown in Fig. 3.

3.3 High-order Information Fusion (HIF)

With the multiple high-order information obtained from convolution operations, we constructed the HIF module for parallel modeling. The implementation is shown in Fig. 3. First, we use temporal features to refine spatial features to obtain high-order joint information. Then through spatial-temporal aggregation in the channel dimension and channel concatenating operations to capture the properties between features. The final output is .

Specifically, to avoid the loss of the original topology information, we use the original natural joint topology as an auxiliary learning component. It is combined with spatial hypergraph features to learn more connected joint features of the action. As indicated below:

| (7) |

where is the learning weights.

3.4 Model Architecture

We use a multi-scale convolution, TF, with an attention mechanism [15, 2] to extract information at different scales. As expressed in the following equation:

| (8) |

is the output of a block of dynamic spatial-temporal hypergraph convolutional network. The network consists of ten blocks. The difference in , , is composed of three different spatial hypergraph topologies, respectively.

4 Experiments

4.1 Datasets

Northwestern-UCLA. The dataset [16] is extracted from 1494 videos with ten types of actions. The training set comes from two Kinect cameras and the test set from remaining cameras.

NTU RGB+D. NTU RGB+D [8] is a large and widely used action recognition dataset obtained from three different views using the Kinect v2 sensor and contains 60 action classes. Evaluation benchmarks: Cross-Subject (X-Sub) and Cross-View (X-View).

NTU RGB+D 120. NTU RGB+D 120 [17] is an enhanced version of the NTU RGB+D dataset, with the number of action categories increased to 120. Evaluated using two division criteria: Cross-Subject (X-Sub) and Cross-Setup (X-Setup).

4.2 Implementation Details

Our experiments use cross entropy as the loss function and use three Tesla M40 GPUs. In addition, we use dual correlation channel data to aid fuse learning, which is obtained by subtracting two channels. The experiments use the stochastic gradient descent (SGD). The weight decay is set to 0.0004 and the Nesterov momentum is 0.9. The train contains 90 epochs, the first five epochs use a warm-up strategy, and we set cosine annealing for decay [18]. For the NTU-RGB+D [8] and NTU-RGB+D 120 datasets [17], the batch size is set to 64. For the Northwestern-UCLA dataset [16], we set the batch size to 16.

| Methods | FLOPs | Param. | Acc() | |

|---|---|---|---|---|

| DST-HCN w/o C,H,G | 3.05G | 2.71M | 89.5 | |

| DST-HCN w/o C,H | 3.50G | 2.93M | 90.1 ( 0.6) | |

| DST-HCN w/o C | 3.50G | 2.93M | 90.4 ( 0.9) | |

| DST-HCN | 3.50G | 2.93M | 90.9 ( 1.4) |

4.3 Ablation Study

Unless otherwise stated, we analyze our method on the X-sub benchmark of the NTU RGB+D dataset.

| Action | Ens Acc() | Similar Action | Ours Acc() | Acc() | Similar Action |

|---|---|---|---|---|---|

| writing | 48.90 | typing on a keyboard | 60.66 | 11.76 | typing on a keyboard |

| wield knife | 62.50 | hit other | 65.80 | 3.30 | hit other |

| blow nose | 59.65 | yawn | 64.52 | 4.87 | yawn |

| fold paper | 62.78 | ball up paper | 66.96 | 4.18 | counting money |

| yawn | 67.65 | hush (quite) | 70.26 | 2.61 | hush (quite) |

| snapping fingers | 65.51 | shake fist | 71.43 | 5.92 | make victory sign |

| sneeze/cough | 71.74 | touch head (headache) | 75.72 | 3.98 | touch head (headache) |

| apply cream on hand back | 72.30 | open bottle | 75.26 | 2.96 | rub two hands together |

| Type | Method | NTU RGB+D | NTU RGB+D 120 | NW-UCLA | ||

| X-Sub () | X-View () | X-Sub () | X-Set () | - () | ||

| GCN,CNN | ST-GCN [1] | 81.5 | 88.3 | 70.7 | 73.2 | - |

| 2s-AGCN [19] | 88.5 | 95.1 | 82.5 | 84.2 | - | |

| Shift-GCN [20] | 90.7 | 96.5 | 85.9 | 87.6 | 94.6 | |

| SGN [3] | 89.0 | 94.5 | 79.2 | 81.5 | 92.5 | |

| CTR-GCN [2] | 92.4 | 96.8 | 88.9 | 90.6 | 96.5 | |

| Ta-CNN [10] | 90.4 | 94.8 | 85.4 | 86.8 | 96.1 | |

| EfficientGCN-B4 [11] | 92.1 | 96.1 | 88.7 | 88.9 | - | |

| AGE-Ens [21] | 92.1 | 96.1 | 88.2 | 89.2 | - | |

| HypGCN | Hyper-GNN [4] | 89.5 | 95.7 | - | - | - |

| DHGCN [5] | 90.7 | 96.0 | 86.0 | 87.9 | - | |

| Selective-HCN [6] | 90.8 | 96.6 | - | - | - | |

| DST-HCN(Ours) | 92.3 | 96.8 | 88.8 | 90.7 | 96.6 | |

Visualization experiments. We demonstrate the spatial-temporal features of the two topologies of an action sample ”putting on glasses” in Fig. 4. (a), (b) are the output of the HIF module ”” in Fig. 3. (c), (d) are the output of HIF module ””. The coordinates are time and joints respectively.

In Fig. 4 (a), (b), We observe that different combinations of hypergraphs is able to learn different spatial-temporal information. In addition, (a) focuses more on information from the elbow joint and (b) focuses on information features of the hand, suggesting that these joints are more discriminatory for overall. In Fig. 4 (c), (d), the further changes in joint features occurred and the joints are differentiated in temporal aspects. It is shown that the channel learning method can effectively perform information fusion.

Ablation experiments of different components. We experimentally validate the importance of the components, where w/o G, H, and C denote the lack of the original natural topology, the original topology as a hypergraph structure, and the lack of cosine annealing, respectively. Table 1 shows that the model without cosine annealing has a performance drop of 0.5. The result drops by 0.6 without using the original graph structure, which shows that the unnatural joint connection needs to learn certain a prior knowledge of the natural topology. In addition, the result of using the original topology as the hypergraph structure by a margin of 0.3, reflecting the hypergraph structure’s advantage and is more beneficial for action recognition.

The comparison of confusing actions. The DST-HCN and the fine-grained recognition graph convolution method [21] were compared in terms of fine-grained action recognition performance. As shown in Table 2, the recognition performance of all relevant actions is improved. Especially in the writing and snapping fingers actions by a margin of 11.76 and 5.92, respectively. This indicates that our model can handle unnaturally dependent spatial-temporal variation features well.

4.4 Comparisons With the State-of-the-Art Methods

This section compares our dynamic spatial-temporal hypergraph neural network with state-of-the-art methods. For a fair comparison, we use four streams of fusion results, considering joint, bone, and motion data stream forms [2, 20, 19]. We use different fusion parameters [15] for different data to accommodate the diversity of varying stream forms.

The results are shown in Table 3. It can be seen that our model exhibits comparable or better performance than the state-of-the-art approach for the three datasets. Compared to the hypergraph methods, our proposed method has reached state-of-the-art accuracy in hypergraphs and by a margin of 1.5 and 2.8 on X-Sub, which fully illustrates the significant advantage of our method.

5 Conclusion

This paper proposes a dynamic spatial-temporal hypergraph neural network that contains dynamic spatial-temporal hypergraph convolution and high-order information fusion modules. The method presents a variety of hypergraph structures, such as a time-point hypergraph. The method simultaneously implements parallel spatial-temporal modeling. Finally, we validate the model on the NTU RGB+D, NTU RGB+D 120, and NW-UCLA datasets.

References

- [1] Sijie Yan, Yuanjun Xiong, and Dahua Lin, “Spatial temporal graph convolutional networks for skeleton-based action recognition,” in Thirty-second AAAI conference on artificial intelligence, 2018.

- [2] Yuxin Chen, Ziqi Zhang, Chunfeng Yuan, Bing Li, Ying Deng, and Weiming Hu, “Channel-wise topology refinement graph convolution for skeleton-based action recognition,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 13359–13368.

- [3] Pengfei Zhang, Cuiling Lan, Wenjun Zeng, Junliang Xing, Jianru Xue, and Nanning Zheng, “Semantics-guided neural networks for efficient skeleton-based human action recognition,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, June 13-19, 2020. 2020, pp. 1109–1118, Computer Vision Foundation / IEEE.

- [4] Xiaoke Hao, Jie Li, Yingchun Guo, Tao Jiang, and Ming Yu, “Hypergraph neural network for skeleton-based action recognition,” IEEE Trans. Image Process., vol. 30, pp. 2263–2275, 2021.

- [5] Jinfeng Wei, Yunxin Wang, Mengli Guo, Pei Lv, Xiaoshan Yang, and Mingliang Xu, “Dynamic hypergraph convolutional networks for skeleton-based action recognition,” arXiv preprint arXiv:2112.10570, 2021.

- [6] Yiran Zhu, Guangji Huang, Xing Xu, Yanli Ji, and Fumin Shen, “Selective hypergraph convolutional networks for skeleton-based action recognition,” in Proceedings of the 2022 International Conference on Multimedia Retrieval, 2022, pp. 518–526.

- [7] Shuo Chen, Ke Xu, Xinghao Jiang, and Tanfeng Sun, “Spatiotemporal-spectral graph convolutional networks for skeleton-based action recognition,” in 2021 IEEE International Conference on Multimedia & Expo Workshops, ICME Workshops, Shenzhen, China, July 5-9, 2021. 2021, pp. 1–6, IEEE.

- [8] A. Shahroudy, J. Liu, T. T. Ng, and G. Wang, “Ntu rgb+d: A large scale dataset for 3d human activity analysis,” IEEE Computer Society, pp. 1010–1019, 2016.

- [9] Han Zhang, Yonghong Song, and Yuanlin Zhang, “Graph convolutional LSTM model for skeleton-based action recognition,” in IEEE International Conference on Multimedia and Expo, ICME 2019, Shanghai, China, July 8-12, 2019. 2019, pp. 412–417, IEEE.

- [10] Kailin Xu, Fanfan Ye, Qiaoyong Zhong, and Di Xie, “Topology-aware convolutional neural network for efficient skeleton-based action recognition,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2022.

- [11] Yi-Fan Song, Zhang Zhang, Caifeng Shan, and Liang Wang, “Constructing stronger and faster baselines for skeleton-based action recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- [12] Yifan Feng, Haoxuan You, Zizhao Zhang, Rongrong Ji, and Yue Gao, “Hypergraph neural networks,” in Proceedings of the AAAI conference on artificial intelligence, 2019, vol. 33, pp. 3558–3565.

- [13] Jaehyeong Jo, Jinheon Baek, Seul Lee, Dongki Kim, Minki Kang, and Sung Ju Hwang, “Edge representation learning with hypergraphs,” Advances in Neural Information Processing Systems, vol. 34, pp. 7534–7546, 2021.

- [14] Jianwen Jiang, Yuxuan Wei, Yifan Feng, Jingxuan Cao, and Yue Gao, “Dynamic hypergraph neural networks.,” in IJCAI, 2019, pp. 2635–2641.

- [15] Shengqin Wang, Yongji Zhang, Fenglin Wei, Kai Wang, Minghao Zhao, and Yu Jiang, “Skeleton-based action recognition via temporal-channel aggregation,” arXiv preprint arXiv:2205.15936, 2022.

- [16] Jiang Wang, Xiaohan Nie, Yin Xia, Ying Wu, and Song-Chun Zhu, “Cross-view action modeling, learning and recognition,” CoRR, vol. abs/1405.2941, 2014.

- [17] J. Liu, A. Shahroudy, M. Perez, G. Wang, L. Y. Duan, and A. C. Kot, “Ntu rgb+d 120: A large-scale benchmark for 3d human activity understanding,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 10, pp. 2684–2701, 2020.

- [18] Ilya Loshchilov and Frank Hutter, “Sgdr: Stochastic gradient descent with warm restarts,” arXiv preprint arXiv:1608.03983, 2016.

- [19] Lei Shi, Yifan Zhang, Jian Cheng, and Hanqing Lu, “Two-stream adaptive graph convolutional networks for skeleton-based action recognition,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 12026–12035.

- [20] Ke Cheng, Yifan Zhang, Xiangyu He, Weihan Chen, Jian Cheng, and Hanqing Lu, “Skeleton-based action recognition with shift graph convolutional network,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 183–192.

- [21] Zhenyue Qin, Yang Liu, Pan Ji, Dongwoo Kim, Lei Wang, Bob McKay, Saeed Anwar, and Tom Gedeon, “Fusing higher-order features in graph neural networks for skeleton-based action recognition,” IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 2022.