Dynamic Interpretable Change Point Detection

Abstract

Identifying change points (CPs) in a time series is crucial to guide better decision making across various fields like finance and healthcare and facilitating timely responses to potential risks or opportunities. Existing Change Point Detection (CPD) methods have a limitation in tracking changes in the joint distribution of multidimensional features. In addition, they fail to generalize effectively within the same time series as different types of CPs may require different detection methods. As the volume of multidimensional time series continues to grow, capturing various types of complex CPs such as changes in the correlation structure of the time-series features has become essential. To overcome the limitations of existing methods, we propose TiVaCPD, an approach that uses a Time-Varying Graphical Lasso (TVGL) to identify changes in correlation patterns between multidimensional features over time, and combines that with an aggregate Kernel Maximum Mean Discrepancy (MMD) test to identify changes in the underlying statistical distributions of dynamic time windows with varying length. The MMD and TVGL scores are combined using a novel ensemble method based on similarity measures leveraging the power of both statistical tests. We evaluate the performance of TiVaCPD in identifying and characterizing various types of CPs and show that our method outperforms current state-of-the-art methods in real-world CPD datasets. We further demonstrate that TiVaCPD scores characterize the type of CPs and facilitate interpretation of change dynamics, offering insights into real-life applications.

1 Introduction

In domains such as healthcare and finance, real-world time-series data is highly influenced by change points (Truong et al., 2018). Identifying and analyzing these changes in data distribution not only enhances our comprehension of the underlying patterns but also helps mitigate risks and improve decision-making. Change point detection (CPD) methods segment a time-series into distinct intervals with varying underlying properties. Precisely inferring time points associated with such transitions is essential to decipher the behaviors of the processes being modeled (Aminikhanghahi & Cook, 2017). With a substantial increase in the volume of time-series data collected in a variety of domains such as finance (Lavielle & Teyssière, 2007) and healthcare (Yang et al., 2007), the importance of CPD methods that automatically capture changes in the signal has grown. CPD provides a way for the identification and localization of sudden changes in signals, such as patient vital signs, without the need for labeled information. For instance, in a medical setting, CPD enables the timely identification of significant variations such as changes in heart rate or declines in oxygen saturation levels, alerting doctors to potential health issues that demand immediate attention. Most existing CPD methods fail to consider the underlying variability in the properties and root causes of change points (CPs) and therefore cannot generalize effectively to time-series with complex change dynamics. CPs can be characterized as changes in the distribution of the measurements over time. Alternatively, they could also be the result of changes in the correlation structure between features. The former is more studied in the literature, but the latter is also of significance in many applications. For instance, among physiological signals, the Heart Rate Variability (HRV) measure always shows a negative correlation with the Heart Rate (HR) measurement, but in some rare situations, they might exhibit a positive correlation, indicating a concerning change in the underlying health state of an individual. At the same time, changes in the average HR can be indicative of other conditions. Methods that rely solely on detecting changes in marginal distributions will fail to identify such scenarios.

In this paper, we propose a statistical CPD scoring method called TiVaCPD that captures different types of CPs in time-series without the need for labeled instances of change. TiVaCPD offers a non-parametric solution that does not require any distributional assumption of the generative process, and can therefore generalize to various scenarios. TiVaCPD, as shown on Figure 1, assigns a CP score to each time-point that consists of two parts: 1) change in the correlation of features and 2) change in the underlying distribution of the time-series features. Each part of the score is interpretable, allowing us to characterize and classify CPs with similar underlying properties and understand the cause of the change. To identify changes in the joint distribution of features over time, we rely on the hypothesis that changes in feature interactions can be effectively captured via correlation networks constructed from adjacent windows of time. To this end, we employ a dynamic network inference method, time-varying graphical lasso (TVGL) (Hallac et al., 2017), to acquire sparse time-varying precision matrices to detect changes in feature correlation patterns. To identify changes in the probability distributions of adjacent windows, we build on recent statistical results by Schrab et al. (2021) in the theory of non-parametric two-sample MMD tests. Unlike existing CPD methods that employ a fixed-size sliding-window approach, we dynamically establish the window size, accounting for the variable length of states between CPs. Fixing window sizes introduces issues: small windows are likely to compromise the power of the statistical test, and larger windows are at risk of aggregating different distributions. By dynamically adjusting the sliding window size, we address these concerns. We propose to ensemble different components of the TiVaCPD score. Briefly, the ensemble method adaptively assigns weights to scores based on their dissimilarity, placing greater emphasis on scores that capture changes not detected by other components. We evaluate our method’s ability in identifying various categories of CPs on 4 simulated and 2 real-life time-series datasets and compare its performance against 3 state-of-the-art CPD methods; showing that our method outperforms all competitors across real-word and one complex simulated datasets. There are four main contributions of this work:

-

•

We detect and characterize different types of changes in feature dynamics and/or distribution. The categorization and visualization of the underlying CP cause enhances interpretability providing valuable insights of the observed patterns.

-

•

We present a novel use of Time-Varying Graphical Lasso for quantifying changes in feature interactions. We demonstrate its ability to detect CPs that occur due to changes in the covariance of the joint distribution of features over time.

-

•

We introduced a dynamic window selection method that effectively addresses the limitations of static windows when detecting changes in data distribution.

-

•

We propose a novel ensemble technique to best aggregate unsupervised CP scores from different statistical tests. We also introduce a post-processing procedure to smooth the score estimate while preserving local minima and maxima, using the Savitsky-Golay filter (Press & Teukolsky, 1990).

2 Related Work

There is abundant literature on CPD methods (Truong et al., 2018; Aminikhanghahi & Cook, 2017; Reeves et al., 2007). CPD methods consider a time-series to be a collection of random variables with abrupt changes in distributional properties over time. Most of these methods are parametric (Yamanishi & Takeuchi, 2002; Kawahara et al., 2007) and involve estimating the underlying probability density function of the signal, which limits detection to certain type of distributions and is usually computationally expensive. Non-parametric methods (Chang et al., 2019; Cheng et al., 2020; Matteson & James, 2014) are used where the time-series dynamics cannot be easily modeled and prior assumptions about the data distribution cannot be made. An optimal transport-based method proposed by Cheng et al. (2020), conducts two-sample Wasserstein tests between the cumulative distribution of contiguous subsequences. It uses fixed-size sliding windows to compute the test statistic. However, basing CP decisions on the local maxima of this statistic can result in a higher false positive rate. Moreover, this method projects the data onto one-dimension and uses the mean statistic, potentially leading to the loss of detection power.

Deep learning-based methods are another type of non-parametric approach that recently gained popularity due to the increasing amount of available data. For example, Time-Invariant Representation (TIRE) (De Ryck et al., 2021), an autoencoder-based CPD approach, learns a partially time-invariant representation of time series and computes CPs using a dissimilarity measure. Another deep learning technique, referred to as (Deldari et al., 2021a), utilizes contrastive learning to detect CPs, leveraging the representation of time series acquired from temporal convolutional networks. Other deep learning-based CPD methods use kernel functions (Li et al., 2015) for greater flexibility in representing the density functions of intervals of time. One such method, KLCPD (Chang et al., 2019) uses deep generative models to increase the test power of the kernel two-sample MMD test statistic (Gretton et al., 2007). It overcomes limitations of prior kernel-based CPD methods by removing the need of a fixed number of CPs or relying on prior knowledge of a reference or training set for kernel calibration. However, its performance depends on the choice of kernel and kernel bandwidths.

The lack of interpretability in deep learning-based CPD methods hinders our understanding of why and how these methods make predictions. Moreover, current methods often fail to capture changes in correlation patterns that occur due to evolving dynamics of multivariate time series. To address such CPs, Gibberd & Nelson (2015) introduced GraphTime; A Group-Fused Graphical Lasso estimator for grouped estimation of CPs in dependency structures of a time-series captured by a dynamic Gaussian Graphical Model. As the estimated graph topology is piece-wise constant, this is useful only when we are interested in detecting jump points and abrupt changes, and leads to excessive number of false positives for other gradual CPs. Another CPD method (Roy et al., 2017) looks for dependencies between spatial or temporal variables using high-dimensional Markov random-field model. This method relies on two assumptions of known covariance structure and stationary for the data. These are a strong assuption that might not hold in many real-world applications.

3 Method

Problem Formulation

Consider a multivariate time-series sample to be a sequence of random variables with indicating the number of features in . represents the total number of measurements over time. To identify change points in time steps of a data sample , a score , is estimated for each time step that measures the amount of change in the underlying generative distribution of the data.

Our CPD Algorithm - TiVaCPD

In this section, we introduce our CPD algorithm called Time Variable Change Point Detection (TiVaCPD). The score generated by TiVaCPD is composed of two components: 1) a score that measures change in correlation-CovScore (Algorithm 1), 2) a score that measures change in the distribution-DistScore (Algorithm 2). In the rest of the section we introduce each component separately, explain how each results in a unifying score that captures a variety of CP types, and demonstrate how to interpret the score to better understand the CPs.

3.1 Detecting changes in the correlation structure (CovScore)

A CP can be caused by a change in the correlation between features. This results in a change in the covariance of the joint distribution that can be identified as a state change in the feature network. The evolving dynamics of features can be modeled using graphical models, i.e. at every time point, the interactions of features can be modeled as a graph network, with nodes and links corresponding to each feature and correlation between sets of variables, respectively (Figure 2(a)). However, in a time-series setting, estimating the graphical network at every time step is computationally challenging. To estimate these networks to detect CPs, we use Time-Varying Graphical Lasso (TVGL) (Hallac et al., 2017), an efficient algorithm for estimating the inverse covariance matrix (precision matrix) of multivariate time-series with time-varying structures. TVGL infers the structure of graph networks by estimating a sparse time-varying inverse covariance matrix, ( indicating the covariance matrix at time ), of the variables for all . TVGL extends the graphical lasso problem to dynamic networks by allowing covariance to vary over time, taking into account how the relationships between signals evolve. TVGL method enforces sparsity in the covariance matrix for less computational cost and easier interpretability of the graphical network structure. A scalable message-passing algorithm called Alternating Direction Method of Multipliers (Banerjee et al., 2008) is employed to estimate the sparse inverse covariance. Additional details on the TVGL method can be found in the Supplementary Material.

Learning the inverse covariance estimates from windows of data reveals the underlying evolutionary patterns present in the time-series. We employ TVGL technique to identify points of change in feature interactions between adjacent local windows of time. To promote the identification of shifts in the covariance pattern of features, we integrated an L2-norm penalty function into the estimation of the matrix inverse. Moreover, to ensure the invertibility of , we applied feature standardization and removed highly correlated features before feeding them to TVGL. The partial correlation of two features can be estimated from the joint precision matrix entries as , which means contrasting consecutive precision matrix entries over time quantifies the change in the features’ correlation. By taking the difference between the absolute values of adjacent precision matrices, we quantify these changes in the distribution. A value close to 0 in this matrix indicates nearly identical estimations of the network and therefore no CP in that feature interaction (see Algorithm 1). A negative value indicates an increase in absolute correlation and a positive value indicates a decrease.

3.2 Detecting shift in distribution (DistScore)

Assuming in a time-series sample , each is independently generated from a joint probability distribution , a CP occurs at time if observations after are generated from a different distribution. To compare the probability distributions of adjacent windows, we employ a non-parametric two-sample testing procedure called MMD Aggregate (MMDAgg), introduced in Schrab et al. (2021). Let represent the initial size of the window of before a query point , and let this prior window be denoted by . Similarly, the window of future observations can be denoted by , where represents the length of a future window. Kernel-based MMD tests serve as a measure between two probability distributions. With the statistical test threshold , if the null hypothesis , is rejected, the time-series may be partitioned by a CP at , signifying that measurements in the windows come from a different distribution than measurements in . The performance of a single kernel-based MMD test typically depends on the choice of kernel and kernel bandwidths. Since we compare adjacent windows with restricted number of samples, any loss of data to kernel bandwidth selection can be detrimental to our method’s performance. To overcome this problem, MMDAgg aggregates multiple MMD tests using different kernel bandwidths, ensuring maximized test power over the collection of kernels used and eliminating the need for data splitting or arbitrary kernel selection. The method considers a finite collection of bandwidths where the aggregated test is defined as a test that rejects if one of the tests over a given bandwidth rejects .

We propose to dynamically establish the window size based on the presence of CPs (Algorithm 2). Let represent the size of the dynamic window of data points from the last estimated CP (), up until the current time point (). Starting with a constant and a small window, the length of the running window increases with each new observation until a new CP occurs, according to the MMD test. If a significant change in distribution isn’t detected by the MMD test, i.e. the MMD score is smaller than a pre-defined threshold , the two sub-sequences are combined and compared against the next sub-sequence in the series. This process is also explained in Figure 2(b). Our dynamic windowing method eliminates the need for repetitive fixed-window comparisons and utilizes a growing sample set for the MMD test.

For determining the final CP score, we need to meaningfully ensemble the DistScore with the CovScore, which is challenging because the covariance score is bounded while MMD is a positive unbounded score. Hence, TiVaCPD incorporates kernel normalization in the MMDAgg algorithm. we use a generalization of Cosine normalization (Ah-Pine, 2010) to normalize our kernels so as to have a similarity index. For a given kernel function, represents the normalized kernel of order . We use the generalized mean with exponent (arithmetic mean), which means , where . This normalization technique projects the objects from the feature space to a unit hypersphere and guarantees .

3.3 Ensemble Score and CP Categorization

An effective method for combining unsupervised CPD scores is poorly studied. Here, we use an ensemble method that utilizes the score differences to highlight the scores that contribute the most to representing the CPs. Algorithm 3 indicates our ensemble technique for generating a unified score by combing CovScore and DistScore. We use four score variants derived from CovScore and DistScore to generate dynamic dissimilarity weight to effectively aggregate the scores into a unified one (As shown in Figure 1). The four scores used are as follows: a) b) Normalize(CovScore) and Normalize(DistScore): standardized CP scores using z-score normalization to bring them into the same scale, c) SG(CovScore): Since CovScore is sensitive to small distributional changes that can lead to false change point detection, we mitigate the risk of detecting spurious CPs by applying Savitzky-Golay (SG) smoothing filter. SG filtering technique is a widely used method for smoothing patterns and reducing noise in time series data (Press & Teukolsky, 1990). d) SG_combined: We also apply the filter to the sum of filtered CovScore and DistScore, which effectively reduces noise and improves the performance of CPD. The ensemble approach utilizes a weighted average of aforementioned four scores. The importance weight is determined by computing the mean absolute difference between scores. By assigning greater weight to scores that are more dissimilar, we can effectively identify CPs that may have been missed by other scoring methods. The weights are calculated for each 21 time-points window, as the distribution of scores’ importance may vary over time. To locate the exact time of the CPs, we look for peaks in the ensemble scores by searching for local maxima with a threshold that is used to remove number of false positive CPs created by noise. Using a threshold for peak detection is a common practice that requires careful tuning based on circumstances.

3.4 Understanding the change points and interpreting TiVaCPD score

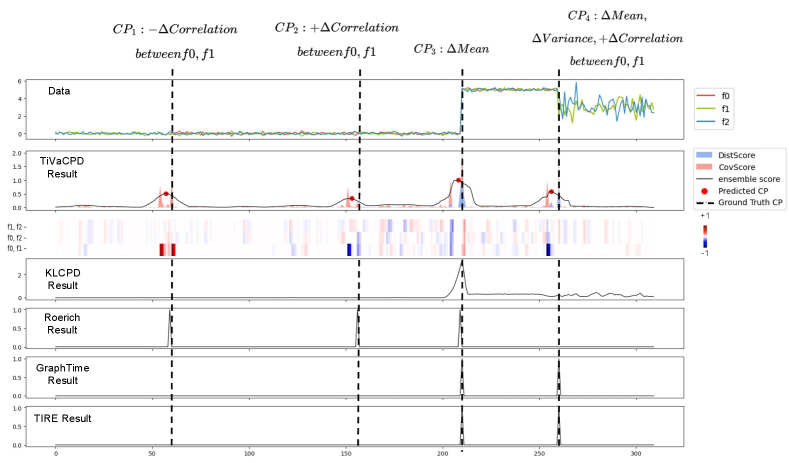

TiVaCPD offers valuable insights into the underlying nature of the observed change points. Detecting both changes in correlation and data distribution, such as an inverse correlation between heart rate variability and heart rate, is crucial in clinical practice, as it can indicate an adverse health event. The DistScore detects changes in the underlying distribution of the time-series, while the CovScore detects changes in the correlation structure of feature pairs, providing a detailed analysis of the feature dynamics at each time step. This information allows for the categorization of CPs, thereby enhancing interpretability, as shown in Figure 3. This figure presents a multivariate time series sample showcasing TiVaCPD results, featuring ConvScore, DistScore, and a weighted ensemble score. In addition, CovScore’s heatmap illustrates the feature pairs that caused a CP, and TiVaCPD identifies the direction of correlation change at each CP. It also includes a comparative analysis with other CPD methods, such as KLCPD, Roerich, GraphTime, and TIRE, providing a comprehensive evaluation of the TiVaCPD results against alternative CPD methods. The first two CPs ( and ) are caused by changes in the correlation between features 0 and 1, where corresponds to a negative change in correlation and corresponds to a positive change in correlation. CP 3 is caused by changes in the mean between features 0 and 1 as the DistScore is high. CP 4 is caused by changes in a combination of variance, correlation, and mean, which are simulated to resemble real-world data.

4 Experiments

4.1 Datasets and Hyper-parameter Settings

We demonstrate the performance of our method compared to multiple baselines on four simulated and two real-life multivariate datasets commonly used in the CPD literature. Different simulations test the functionality of CPD methods on a variety of potential CP scenarios. All hyper-parameters are determined based on random search over 10% of the datasets (more details on best parameters and sensitivity to hyperparameter change are provided in the supplementary material).

Simulated Data:

We created 4 different datasets to simulate different types of CPs. In all datasets, each time series sample consists of features, and each is sampled independently from a Gaussian distribution .

-

•

Jumping Mean: For this dataset, the variance is assumed to be constant over time and across all features and is set to . The ground-truth CPs correspond to abrupt jumps in the mean that can happen independently in any of the features.

-

•

Changing Variance: In this dataset, all three features are generated with constant mean , but their distribution variance changes over time. CPs are indicated as time points with changes in .

-

•

Changing Correlation: This data set consists of a multivariate time-series generated with constant and . To introduce correlation changes between variables, feature 2 is modified to be: , where controls the correlation between the two features that can vary over time and are randomly sampled from . Here, the ground truth CPs correspond to points in time where the correlation changes.

-

•

Arbitrary CPs:This data set consists of a multivariate time series with CPs due to varying , or , or correlations between pairs of variables, resulting in a mixture of CPs scattered over time.

Real-world Data:

-

•

Bee dance (Min Oh et al., 2008): This dataset consists of six three dimensional time-series of bees’ positions while performing three-stage waggle dances. The bees communicate through actions like left/right turn, and waggle. The transition between the states represents ground truth CP.

-

•

Human Activity Recognition (HAR) (Anguita et al., 2013): We use a subset of HAR which includes periods of 6 activities such as normal walking and standing. These activities are measured with 3-axial linear acceleration and angular velocity sensors, for a total of 6 features. The ground-truth CPs are labeled as the transitions between activities.

4.2 Baseline Methods

We compare the performance of TiVaCPD with SOTA CPD methods on various types of CPs. 111The implementation of the baselines was done using publicly released source code by the authors. The selected SOTA approaches include those that measure a change in distribution and those that focus on the graphical structure of features over time. In addition, we conducted an ablation study on various parts of the TiVaCPD score to analyse each component impact.

Kernel Change Point Detection (KLCPD) (Chang et al., 2019) is a kernel learning framework for CPD that uses a two-sample test and optimizes a lower bound of test power via an auxiliary generative model. For this method, we used window sizes for all experiments and trained the model for epochs, unless more training led to improved results. Consistent with our own post-processing steps, we performed peak detection to detect the exact time of change.

Roerich (Hushchyn & Ustyuzhanin, 2021) is a CPD method based on direct density ratio estimation to detect the change in distribution. We set all parameters to default and use window sizes for all experiments.

Group Fused Graph Lasso (GraphTime) (Gibberd & Nelson, 2015) is a time-varying graphical model based on the group fused-lasso. Similar to TiVaCPD it uses the graphical model to model the dependencies of variables in time series. GraphTime models the temporal dependencies between variables while TiVaCPD models the pairwise dependencies to identify CPs.

Time-Invariant Representation (TIRE) De Ryck et al. (2021) is an autoencoder-based CPD method, we used window sizes between for all experiments. For the parameter, we chose to include both time, and frequency domains. We used epochs to train the model.

4.3 Evaluation

| Jumping Mean | Changing Variance | |||||

|---|---|---|---|---|---|---|

| Method | Precision | Recall | F1 (M=5) | Precision | Recall | F1 (M=5) |

| TiVaCPD | 1.00 (0.00) | 1.00 (0.00) | 1.00 (0.00) | 0.86 (0.14) | 0.73 (0.18) | 0.78 (0.15) |

| TiVaCPD-CovScore | 0.00 (0.00) | 0.00 (0.00) | 0.00 (0.00) | 0.03 (0.07) | 0.03 (0.06) | 0.03 (0.06) |

| TiVaCPD-DistScore | 1.00 (0.00) | 1.00 (0.00) | 1.00 (0.00) | 0.79 (0.14) | 0.75 (0.17) | 0.76 (0.15) |

| KL-CPD | 0.76 (0.11) | 0.83 (0.09) | 0.79 (0.09) | 0.18 (0.10) | 0.30 (0.20) | 0.20 (0.15) |

| Roerich | 1.00 (0.00) | 1.00 (0.00) | 1.00 (0.00) | 1.00 (0.00) | 1.00 (0.00) | 1.00 (0.00) |

| GraphTime | 1.00 (0.00) | 1.00 (0.00) | 1.00 (0.00) | 0.05 (0.01) | 1.00 (0.00) | 0.09 (0.02) |

| TIRE | 0.90 (0.23) | 0.73 (0.29) | 0.77 (0.26) | 0.78 (0.24) | 0.60 (0.23) | 0.66 (0.22) |

| Changing Correlations | Arbitrary CPs | |||||

|---|---|---|---|---|---|---|

| Method | Precision | Recall | F1 (M=5) | Precision | Recall | F1 (M=5) |

| TiVaCPD | 0.48 (0.08) | 1.00 (0.00) | 0.64 (0.06) | 1.00 (0.00) | 1.00 (0.00) | 1.00 (0.00) |

| TiVaCPD-CovScore | 0.48 (0.07) | 1.00 (0.00) | 0.64 (0.06) | 0.39 (0.14) | 1.00 (0.00) | 0.54 (0.16) |

| TiVaCPD-DistScore | 0.00 (0.00) | 0.00 (0.00) | 0.00 (0.00) | 1.00 (0.00) | 0.96 (0.09) | 0.97 (0.06) |

| KL-CPD | 0.10 (0.10) | 0.13 (0.13) | 0.11 (0.11) | 0.72 (0.17) | 0.56 (0.09) | 0.63 (0.12) |

| Roerich | 1.00 (0.00) | 0.98 (0.06) | 0.99 (0.03) | 1.00 (0.00) | 0.43 (0.12) | 0.58 (0.12) |

| GraphTime | 0.08 (0.03) | 0.95 (0.08) | 0.15 (0.06) | 0.62 (0.17) | 1.00 (0.00) | 0.75 (0.12) |

| TIRE | 0.14 (0.13) | 0.10 (0.09) | 0.12 (0.11) | 1.00 (0.00) | 0.73 (0.21) | 0.80 (0.17) |

| Bee Dance | HAR | |||||

|---|---|---|---|---|---|---|

| Method | Precision | Recall | F1(M=5) | Precision | Recall | F1(M=5) |

| TiVaCPD | 0.36 (0.18) | 0.59 (0.22) | 0.45 (0.15) | 0.72 (0.06) | 0.48 (0.06) | 0.58 (0.06) |

| TiVaCPD-CovScore | 0.34 (0.26) | 0.36 (0.19) | 0.34 (0.21) | 0.62 (0.06) | 0.56 (0.05) | 0.57 (0.04) |

| TiVaCPD-DistScore | 0.48 (0.11) | 0.25 (0.13) | 0.32 (0.11) | 0.50 (0.06) | 0.35 (0.03) | 0.40 (0.03) |

| KL-CPD | 0.24 (0.26) | 0.10 (0.07) | 0.13 (0.11) | 0.66 (0.11) | 0.20 (0.03) | 0.30 (0.04) |

| Roerich | 0.50 (0.34) | 0.32 (0.26) | 0.40 (0.30) | 0.69 (0.15) | 0.11 (0.03) | 0.18 (0.05) |

| GraphTime | 0.13 (0.04) | 0.77 (0.13) | 0.22 (0.07) | 0.04 (0.002) | 0.96 (0.02) | 0.08 (0.01) |

| TIRE | 0.34 (0.44) | 0.14 (0.19) | 0.20 (0.26) | 0.52 (0.19) | 0.14 (0.05) | 0.22 (0.08) |

Tables 1-3 show the performance of TiVaCPD and all baselines on 4 simulated and 2 real-world data sets. Results are reported as scores, as well as precision and recall, measuring how well it detects CP locations in time. To estimate performance metrics, we define a margin of error for the exact location of CP, which is common practice in the CPD literature (van den Burg & Williams, 2020; Deldari et al., 2021b). Given a user-defined margin of error, , an estimated CP is a True Positive (TP) if the distance between the ground truth () and the estimated CP () is smaller than the margin, i.e. . As explained in Figure 4, if an estimated CP falls outside the margin, then it is considered False Positive (FP), i.e. . We show the impact of margin values on the performance of all baselines in the supplementary material.

Our method, TiVaCPD, effectively detects various types of CPs in simulated datasets by using different components of the score to capture specific CP’s types. The CovScore component excels at detecting CPs caused by changes in correlation, the DistScore component detects CPs caused by changes in distribution, and the ensemble score combines the strengths of both components for improved overall performance. Additionally, each component of the score individually outperforms baseline methods, indicating that the performance boost is not just the result of ensembling the scores, but each component of the score itself does a better job at detecting the relevant CPs compared to baseline methods. Although Roerich performs well in detecting simple CPs; however, it fails to address more complex CPs in both synthetic and real-world data. Our method is the only one that performs well in detecting complex CPs. The performance results on the real-life datasets are reported in Table 3, for all baselines with a margin value of 5. We show that our method outperforms all baselines in detecting the exact time of CP in real-world and simulation datasets. Figure 3 shows a graphical representation and comparison of the different CPD methods for a time-series sample (row 1), and demonstrates how different methods generate scores for CPs. The different components of TiVaCPD are shown in rows 2 and 3, and show what category of CPs they identify. The heatmaps of CovScore can be used to identify in which pair of features the change in correlation has occurred, and also in which direction the change was.

5 Discussion

In this paper, we introduce TiVaCPD, a novel CPD method for detecting and characterizing various types of CPs in time-series data. By capturing changes in feature distribution, dynamics, and correlation networks, TiVaCPD provides valuable insights into the underlying causes of CPs, enhancing interpretability for end users. This is particularly crucial in domains like healthcare, where the type of CP significantly influences downstream decision making. The method is currently designed for offline settings to retrospectively detect changes. For future work, we intend to extend TiVaCPD to the online setting, where real-time measurements are acquired. Moreover, we plan to incorporate techniques for imputing missing data by leveraging correlated features and temporal dynamics.

References

- Ah-Pine (2010) Ah-Pine, J. Normalized kernels as similarity indices. In Pacific-Asia Conference on Knowledge Discovery and Data Mining, pp. 362–373. Springer, 2010.

- Aminikhanghahi & Cook (2017) Aminikhanghahi, S. and Cook, D. J. A survey of methods for time series change point detection. Knowledge and Information Systems, 51:339–367, 2017.

- Anguita et al. (2013) Anguita, D., Ghio, A., Oneto, L., Parra, X., and Reyes-Ortiz, J. L. A public domain dataset for human activity recognition using smartphones. In European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, 2013.

- Banerjee et al. (2008) Banerjee, O., El Ghaoui, L., and d’Aspremont, A. Model selection through sparse maximum likelihood estimation for multivariate gaussian or binary data. Journal of Machine Learning Research, 9:485–516, 2008.

- Chang et al. (2019) Chang, W.-C., Li, C.-L., Yang, Y., and Póczos, B. Kernel change-point detection with auxiliary deep generative models. In International Conference on Learning Representations, 2019.

- Cheng et al. (2020) Cheng, K. C., Aeron, S., Hughes, M. C., Hussey, E., and Miller, E. L. Optimal transport based change point detection and time series segment clustering. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 6034–6038. IEEE, 2020.

- De Ryck et al. (2021) De Ryck, T., De Vos, M., and Bertrand, A. Change point detection in time series data using autoencoders with a time-invariant representation. IEEE Transactions on Signal Processing, 2021.

- Deldari et al. (2021a) Deldari, S., Smith, D. V., Xue, H., and Salim, F. D. Time series change point detection with self-supervised contrastive predictive coding. In Proceedings of The Web Conference 2021, WWW ’21. Association for Computing Machinery, 2021a. doi: 10.1145/3442381.3449903. URL https://doi.org/10.1145/3442381.3449903.

- Deldari et al. (2021b) Deldari, S., Smith, D. V., Xue, H., and Salim, F. D. Time series change point detection with self-supervised contrastive predictive coding. WWW ’21: Proceedings of the Web Conference, 2021b.

- Gibberd & Nelson (2015) Gibberd, A. J. and Nelson, J. D. Estimating dynamic graphical models from multivariate time-series data. In International Workshop on Advanced Analytics and Learning on Temporal Data, 2015.

- Gretton et al. (2007) Gretton, A., Borgwardt, K. M., Rasch, M., Schölkopf, B., and Smola, A. J. A kernel method for the two-sample-problem. International Conference on Neural Information Processing, 2007.

- Hallac et al. (2017) Hallac, D., Park, Y., Boyd, S., and Leskovec, J. Network inference via the time-varying graphical lasso. In 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 205–213, 2017.

- Hushchyn & Ustyuzhanin (2021) Hushchyn, M. and Ustyuzhanin, A. Generalization of change-point detection in time series data based on direct density ratio estimation. Journal of Computational Science, 53:101385, 2021.

- Kawahara et al. (2007) Kawahara, Y., Yairi, T., and Machida, K. Change-point detection in time-series data based on subspace identification. The IEEE International Conference on Data Mining (ICDM), 2007.

- Lavielle & Teyssière (2007) Lavielle, M. and Teyssière, G. Adaptive detection of multiple change-points in asset price volatility. Long Memory in Economics, 2007.

- Li et al. (2015) Li, S., Xie, Y., Dai, H., and Song, L. M-statistic for kernel change-point detection. International Conference on Neural Information Processing, 2015.

- Matteson & James (2014) Matteson, D. S. and James, N. A. A nonparametric approach for multiple change point analysis of multivariate data. Journal of the American Statistical Association, 109, 2014.

- Min Oh et al. (2008) Min Oh, S., Rehg, J. M., Balch, T., and Dellaert, F. Learning and inferring motion patterns using parametric segmental switching linear dynamic systems. In International Journal of Computer Vision (IJCV) Special Issue on Learning for Vision, pp. 103–124, 2008.

- Press & Teukolsky (1990) Press, W. H. and Teukolsky, S. A. Savitzky-golay smoothing filters. Computers in Physics 4, Volume 4, 1990.

- Reeves et al. (2007) Reeves, J., Chen, J., L. Wang, X., Lund, R., and Lu, Q. Q. A review and comparison of changepoint detection techniques for climate data. Journal of Applied Meteorology and Climatology, 46, 2007.

- Roy et al. (2017) Roy, S., Atchadé, Y., and Michailidis, G. Change point estimation in high dimensional markov random-field models. Journal of the Royal Statistical Society. Series B (Statistical Methodology), 79(4):1187–1206, 2017.

- Schrab et al. (2021) Schrab, A., Kim, I., Albert, M., Laurent, B., Guedj, B., and Gretton, A. Mmd aggregated two-sample test, 2021. URL https://arxiv.org/abs/2110.15073.

- Truong et al. (2018) Truong, C., Oudre, L., and Vayatis, N. Selective review of offline change point detection methods. Signal Processing Volume 167, 2018.

- van den Burg & Williams (2020) van den Burg, G. J. J. and Williams, C. K. I. An evaluation of change point detection algorithms. arXiv:2003.06222v2 [stat.ML], 2020.

- Yamanishi & Takeuchi (2002) Yamanishi, K. and Takeuchi, J.-i. A unifying framework for detecting outliers and change points from non-stationary time series data. Proceedings of the eighth ACM SIGKDD international conference on Knowledge discovery and data, pp. 676–681, 2002.

- Yang et al. (2007) Yang, P., Guy, D., and Ansermino, J. M. Adaptive change detection in heart rate trend monitoring in anesthetized children. IEEE Transactions on Biomedical Engineering, 2007.