Dynamic Graph-Based Anomaly Detection in the Electrical Grid

Abstract

Given sensor readings over time from a power grid, how can we accurately detect when an anomaly occurs? A key part of achieving this goal is to use the network of power grid sensors to quickly detect, in real-time, when any unusual events, whether natural faults or malicious, occur on the power grid. Existing bad-data detectors in the industry lack the sophistication to robustly detect broad types of anomalies, especially those due to emerging cyber-attacks, since they operate on a single measurement snapshot of the grid at a time. New ML methods are more widely applicable, but generally do not consider the impact of topology change on sensor measurements and thus cannot accommodate regular topology adjustments in historical data. Hence, we propose DynWatch, a domain knowledge based and topology-aware algorithm for anomaly detection using sensors placed on a dynamic grid. Our approach is accurate, outperforming existing approaches by 20 or more (F-measure) in experiments; and fast, averaging less than 1.7ms per time tick per sensor on a 60K+ branch case using a laptop computer, and scaling linearly with the size of the graph.

Index Terms:

anomaly detection, dynamic grid, graph distance, LODF, power system modelingI Introduction

Maintaining and improving the reliability of the electric power grid is a critically important goal. Estimates [1] suggest that reducing outages in the U.S. grid could save billion per year, reduce emissions by to , while improving efficiency could save an additional billion per year. Although grid operators and engineers work tirelessly to maintain reliability of the electric grids, many challenges persist. Climate change is increasing the frequency of natural disasters, resulting in higher rate of equipment failure. Adding to the climate risk is a new adversary in the form of cyber-intrusions that is capable of disrupting grid control and communication. This is evident from the recent reports of foreign hackers successfully penetrating control rooms of the U.S. power plants [2] and of cyber-attacks on the Ukrainian grid in 2015-2016 [3][4] that brought down sections of the network causing damages worth billions of dollars.

A key tool that the grid operators use to safeguard against these failures, whether naturally occurring or malicious, involves the anomaly detection capabilities that are implemented in the grid control rooms. The primary purpose of these techniques is to help grid operators isolate faulty data from the healthy ones to result in accurate situational awareness, which further allows grid operators to take rapid corrective actions. In almost real-time, these methods can analyze measurement values, dynamics and other informative features to detect abnormal events including erroneous topology or measurements, while accommodating normal grid behaviors, including regular topology changes and power configuration adjustments.

In existing power grids, anomaly detection is performed within the Energy Management Systems (EMS) [5] that are installed in the control rooms. The EMS through Supervisory Control and Data Acquisition (SCADA) system collects two primary sources of data: i) online analog measurement data from various sensors such as remote terminal units (RTUs) and phasor measurement units (PMUs) and ii) status data of switching devices and circuit breakers on various devices such as lines and transformers. Both types of data are collected every few seconds: for instance analog measurements are updated every 10s in PJM[6]; and status data are updated every 4s in ISO-NE[7]. Upon processing, separate analysis units within the EMS are run to identify anomalies in measurements and topology.

AC state-estimation (ACSE) [8] along with bad-data detection (BDD) algorithms [9] is used today for anomaly detection on measurement data from RTUs and PMUs, via hypothesis test on output residuals. These are run every 1 to 10 minutes (every 5 minutes in ERCOT and US Midwest ISO (MISO), every 3 minutes in the U.K. grid and ISO-NE, and every 1-2 minutes in PJM[10]). The most widely used problem formulation for ACSE is the weighted-least-square (WLS) form[9], minimizing mean-squared measurement error. Some other formulations are designed to achieve intrinsic robustness against bad data, including least absolute value (LAV) based [11], least median of squares based [12], as well as iteratively reweighted least-squares based approaches [13]. Unfortunately when RTUs are included, these methods generally suffer from difficult convergence due to non-linear measurement models. There have been recent attempts at convexification of the state-estimation problem [14][15][16][17]; however, several limitations persist. These include inability to detect coordinated attacks like false-data injection attack and high sensitivity to network topology errors.

On the other hand, events of topology change are detected by the network topology processor (NTP)[18][19], which transforms the input circuit breaker/switching status data into the bus-branch model in which network connectivity and meter locations are identified. However, existing operational NTP does not account for topology errors due to erroneous status data caused by communication, operator entry errors, cyber-attacks, etc. As a countermeasure, there exist research works that overcome some challenges of existing NTP. For instance, generalized topology processing [20] creates pseudo-measurements and applies hypothesis tests to detect topology anomalies. Another approach [21] applies hypothesis tests on WLS residuals from SE to detect topological errors, as an extended application of ACSE BDD. More recently, other advanced methods such as [22][23][15] have been developed where TE and ACSE are merged together to perform estimation on a node-breaker model. This enables measurement error and topology error to be effectively identified and separated. However, challenges persist here as well, mostly due to the lack of efficient and scalable methods to handle the non-linearity with conventional measurements and the inability of these methods to detect topology anomalies during coordinated cyber-attacks.

These challenges with existing TE and ACSE can be addressed by using anomaly detection based on statistical behavior. Instead of analysing the well-defined measurement models in a snapshot, one can leverage statistical behaviors to extract some patterns from historical data. This can be helpful since most anomalies, either unexpected faults or cyber-attacks, usually disrupt the statistical consistency of the data stream, despite being invisible from one single snapshot. Existing behavioral anomaly detection methods can be broadly categorized into model-based (where an expectation of observation is obtained by fitting a mathematical model)[24][25][26], representation based[27][28], graphical methods[29], and others (see Section II for related works). Generally, these families of methods are all directly applicable to the problem described in this paper. However, these methods either do not consider the impact of graph change on the observations [24][26][27], or assume a static topology across the data stream[30]. This is problematic for grid problems since topological changes can happen frequently on a real grid (see Figure 10(a) in Section V), and different configurations naturally cause differences in grid measurements. Hence, previous observations from disparate topologies and loadings may provide little value in assessing the anomalousness at a given time t. Without a selection of relevant data, methods may produce more false positives by falsely creating alarms when regular topology changes occur.

In light of these challenges, a more applicable way is to make the anomaly detection method context-aware, so that it indicates the expected patterns of behavior from data samples collected with relevant context of topology. With that viewpoint, we propose a method that processes the time series of measurements and the time series of their topology to be context-aware. Intuitively, the method works by defining graph distances based on domain knowledge and estimating a reliable distribution of measurements at time from the most relevant previous data. Then, an alarm is created if the measured value deviates greatly from the center of its distribution. The goal of the method is to directly consider the impact of topology on observations, so that regular topological changes are accommodated, while detecting any false measurement and topological errors. Furthermore, to account for large-scale grids, we develop a locally-sensitive variant, DynWatch-Local.

Figure 1 demonstrates the novelty of the proposed method. We apply a False Data Injection Attack[31] to a 14-bus network, a special type of cyber attack that modifies the measurement output of the selected sensors in the grid. We show that our proposed method is able to detect the anomalies without false positive (FP) outcomes, while the baseline methods are not. Details of this experiment are provided in Section IV-C.

II Background and Related Work

II-A Existing ML-based Anomaly Detection Approaches

Time Series Anomaly Detection

Numerous algorithms exist for anomaly detection in univariate time series [32]. For multivariate time series, LOF [27] uses a local density approach. Isolation Forests [29] partition the data using a set of trees for anomaly detection. Other approaches use neural networks [26], distance-based [33], and exemplars [34]. However, none of these consider the graph structure.

Anomaly Detection in Temporal Graphs

[35] finds anomalous changes in graphs using an egonet (i.e. neighborhood) based approach, while [36] uses a community-based approach. [37] finds connected regions with high anomalousness. [38] detects large and/or transient communities using Minimum Description Length. [39] finds change points in dynamic graphs, while other partition-based [40] and sketch-based [41] also exist for anomaly detection. However, these methods focus on detecting unusual communities or connections, while our approach has a very different goal of detecting disturbances which cause changes in sensor values.

Anomaly Detection with Domain Expert Knowledge

Domain-specific anomaly detectors based on optimal power flow[42], SE residual-based test (traditional SE BDI[9], and gross error detection[43][44]) and TE[18][20] already exist and are purely based on power system theories, yet they are typically limited to specific disturbances and attacks against the grid components. On the contrary, ML methods, as illustrated above, are more generally applicable; however, without a basic understanding of how the real-world grid operates, they are likely to perform poorly. Motivated by the pros and cons, many efforts [30][45] have combined the benefits of the two, embedding the domain-knowledge in general ML methods. Such methods with domain knowledge have been shown to have higher performance (see [30][45]) but still do not fully consider the dynamic nature of the electric grid.

To summarize, the major contribution of DynWatch when compared with existing methods are summarized in table I.

|

Time Series |

Graph-based |

GridWatch |

DynWatch |

|

|---|---|---|---|---|

| Graph Data | ✓ | ✓ | ✔ | |

| Anomalies in Sensor Data | ✓ | ✓ | ✔ | |

| Changing Graph | ✓ | ✔ | ||

| Locally Sensitive | ✔ |

II-B Handling Redundant Data

Transmission grids, in order to be observable, have a large number of RTUs and PMUs installed. For the anomaly detection algorithm developed in this paper, processing the large volume of redundant data for anomaly detection is unnecessary and computationally prohibitive. This is because each sensor predominantly captures the relative information of its neighboring sensors as well. Therefore, to create a proper input for anomaly detection, pre-processing techniques can be deployed: Principal Component Analysis (PCA)[46] creates a low-dimensional representation by extracting uncorrelated directions; projection pursuit[47] reduces the input to a low-dimensional projected time series that optimizes the kurtosis coefficient; Independent Component Analysis (ICA)[48] identifies a subset of independent variables. Alternative techniques like cross-correlation analysis[49] also help create a low-dimensional input. A detailed survey regarding dimensionality reduction can be found in[50]. Rather than transforming the redundant input, other algorithms for sensor placement [51] are also applicable, by suggesting the best several locations of sensors to be installed and providing observability. [30] has shown the selection of a small number of sensor locations with a provably near-optimal probability of detecting an anomaly.

II-C Standard graph distance/similarity measures

Many graph distance/similarity measures have been proposed in the past that relate to anomaly detection in dynamic graphs. A survey of such measures can be found in [52] [53]. Most of these measures fit in one of the following categories:

- 1.

- 2.

-

3.

Iterative methods based on the structural similarity of local neighborhoods: This type of method exchanges node/edge similarities until convergence and computes the similarity between two graphs by coupling the similarity scores of nodes and edges [52].

- 4.

While all of the above graph distance measures have unique advantages, none of them are designed for grid-specific challenges, nor do they capture the implicit physics of the power grid graph. Section III-C will provide a more detailed discussion about grid-specific challenges and develop a novel graph distance measure to meet the needs of the grid anomaly detection.

II-D Background: Line Outage Distribution Factor (LODF)

Line Outage Distribution Factor (LODF) is a sensitivity measure of how much an outage on a line affects real power flow on other lines in the system [61]. This factor can be easily and efficiently calculated by assuming a DC power flow model with lossless lines or a linearized AC power flow model around the operating point and is commonly used to estimate the linear impact of line outage. For an outage on line , LODF gives the ratio between power change on an observed line and the pre-outage real power on the outage line .

III Proposed DynWatch Algorithm

III-A Preliminaries

Table II shows the symbols used in this paper.

| Symbol | Interpretation |

|---|---|

| Input graph | |

| Subset of nodes to place sensors on | |

| Number of nodes | |

| Number of scenarios | |

| Set of edges adjacent to node | |

| Voltage at node at time | |

| Current at edge at time | |

| Power w.r.t. node and edge at time | |

| Power change: | |

| Sensor vector for scenario at time |

We are given a dynamic graph (grid) at each time tick , where denotes the set of nodes (active grid buses), and denotes the set of edges (active grid branches). Also we have a fixed set of sensors . Each sensor installed on node can obtain PMU or RTU measurements at each time .

For each sensor on node , we obtain the power flows on all lines adjacent to node , as observed in [30] that using power (rather than current) provides better anomaly detection in practice. For any PMU bus and edge , define the power w.r.t. along edge as , where ∗ is the complex conjugate.

Topology determination: At any time , the given dynamic graph can be considered a ‘reference topology’. The observed measurements should be consistent with the reference topology if there are no anomalous measurements and topology. In this work, we use the output from topology estimation (NTP) in the grid energy management system (EMS) as the reference topology. It is a valid reference because the operator believes that this is the best estimate of the grid topology at any time. Notably, this topology is not assumed to be perfect and accurate. Topological errors, along with false measurements, are to be detected by the proposed method.

III-B Motivation and Method Overview

Consider the simple power grid shown in Figure 2, which evolves over time from to to . For simplicity, assume that we have a single sensor, from which we want to detect anomalous events. How do we evaluate whether the current time point () is an anomaly? If the graph had not been changing, we could simply combine all past sensor values to learn a distribution of normal behavior (e.g. fitting a Gaussian distribution as in ), then evaluate the current time point using this Gaussian distribution (e.g. in terms of the number of standard deviations away from the mean).

In the changing graph setting, we still want to learn a model of normal behavior (), but while taking the graph changes into account. Note that and are only slightly different, while and are very different. Hence, the sensor values coming from (i.e. time to ) should be taken into account more highly when constructing , as compared to those from . Intuitively, the sensor values from are drawn from a very different distribution from the current graph, and thus should not influence our learned model . In general, the more similar a graph is to the current graph, the more we should take its sensor values into account when learning our current model. This motivates the 3-step process we use:

-

1.

Graph Distances: Measure the distance between each past graph and the current graph.

-

2.

Temporal Weighting: Weight the past sensor data, where data from graphs that are similar to the current one are given higher weight.

-

3.

Anomaly Detection: Learn a distribution of normal behavior () from the weighted sensor values, and measure the anomalousness at the current time based on its deviation from this distribution.

To further clarify the motivation and methodology, we provide an informal definition for the anomaly detection problem and a statistical definition of the anomaly pattern.

Definition III.1 (Dynamic electric grid anomaly detection problem).

Given time series data of sensor observations on a set of sensors , and a time series of topologies, find (1) the timestamps that correspond to an anomaly pattern (2) the top-k sensor locations that contribute most to the anomaly pattern.

Definition III.2 (Anomaly pattern).

At any time , given a time series of observations , a time series of topologies, a graph distance measure , and a detection threshold , we assign different (trust) weights to observations at , based on : the larger the distance, the lower the weight. This provides a statistical distribution of , parameterized by weighted median and weighted IQR(t). The instance is predicted as anomalous if is an outlier of the distribution, i.e., .

In electric grids, the target anomalies correspond to topological errors (unexpected topology changes unknown to the operator) and measurement errors. These are the types of anomalies that our method is proposed for and is likely to detect based on empirical results provided at the end of the manuscript.

In the following sections, we first introduce our domain-aware graph distance measure based on Line Outage Distribution Factors (LODF) [61]. Then, we describe our temporal weighting and anomaly detection framework, which flexibly allows for any given graph distance measure. Finally, we present an alternate distance measure that is locally sensitive, i.e., it accounts for the local neighborhood around a given sensor.

III-C Proposed Graph Distance Measure

In this section, we describe our proposed graph distance measure to calculate the distance between any pair of graphs. For ease of understanding, the rest of Section III uses the example of anomaly detection at in Figure 2 as an extended case study, but our approach can be easily extended to the general case.

Section II-C has provided a short review of existing graph distance measures, but these measures do not apply directly to the grid-specific challenge in this paper. For an anomaly detection algorithm to work well on the power grid applications, the choice of graph distance needs to consider problem-specific challenges along with desirable properties for an anomaly detection algorithm (scalability, sensitivity to change, and ‘importance-of-change awareness’). One grid-specific challenge is that the ideal graph distance should capture ‘grid physics’ rather than only graph structural changes. Specifically, the distance should be sensitive to the redistribution of power flow, not only the addition/deletion of nodes/edges, since the anomaly information is extracted from power flow measurements. Meanwhile, as the ‘importance-of-change awareness’ indicates, grid changes that cause big shifts in power flow (measurements) should result in larger graph distances, than changes that cause minor power impact. Unfortunately, none of the classical graph distances can capture the physics of the power flow and quantify the impact of graph change in terms of power. To handle this, this work proposes a novel design of graph distance by making use of the power sensitivity factor.

Intuitively, the goal is for our graph distance to represent redistribution of line power flow. Critical changes in topology result in large redistributions of power. Thus, the graph distance arising from a topology change should be large if the changed edges can potentially cause large amounts of power redistribution.

Hence, given two graphs and with different topology, we first define a transition state which takes the union of the two graphs:

Then the topology changes from to can be considered as different line deletions from their base graph . For each single line deletion, e.g. line , we define its contribution to graph distance by taking the average of its power impacts on all other lines as measured by LODF:

where denotes the cardinality of set , denotes the LODF coefficient with as outage line and as observed line.

Then graph distance is given by summing up the contributions of different line deletions from the base graph:

where and accordingly, denotes all the edge changes between the two graphs.

This definition uses LODF as a measure of the impact on power flow of the removal of line . Hence, edges with high LODF to many other edges can potentially cause greater changes in power flow, and thus our graph distance measure places greater importance on these edges. Appendix A-A demonstrates the effectiveness of the LODF-based graph distance by comparing it against traditional distance measures for anomaly detection.

III-D Proposed Temporal Weighting Framework

In this section, we assume that we are given any distance measurements between any pair of graphs and , and explain how to use them to assign weights to each previous sensor data. This procedure can take the LODF-based distance defined in the previous subsection as input, but also allows us to flexibly use any given graph distance measure. The proposed Temporal Weighting is given in Algorithm 1.

For the purpose of utilizing previous data from a series of dynamic graphs, Temporal Weighting plays an important role. The resulting weights directly determine how much information to extract from each previous record, thus requiring special care. Intuitively, the weights should satisfy the following principles:

-

•

The larger the distance , the lower the weight . This is because high indicates that time tick is drawn from a very different graph from the current one, and thus should not be given high weight when estimating the expected distribution at the current time

-

•

Positivity and Normalization:

To satisfy these conditions, we use a principled optimization approach based on bias-variance trade-off. Intuitively, the problem with using data with high is bias: it is drawn from a distribution that is very different from the current one, and that can be considered a biased sample. We treat as a measure of the amount of bias. Hence, given weights on previous data (in Figure 2 example), the total bias we incur can be defined as .

We could make the bias low simply by assigning positive weights to only time points from the most recent graph. However, this is still unsatisfactory as it results in a huge amount of variance: since very little data is used to learn , the resulting estimate has high variance. Multiplying a fixed random variable by a weight scales its variance proportionally to . Hence, given weights , the total amount of variance is proportional to , which we define as our variance term.

We thus formulate the following optimization problem as minimizing the sum of bias and variance, thereby balancing the goals of low bias (i.e. using data from similar graphs) and low variance (using sufficient data to form our estimates). We formulate the problem as:

subject to

By writing out its Lagrangian function:

and applying KKT conditions, we can see the optimal primal-dual solution must satisfy:

Since we have , by further manipulation we have:

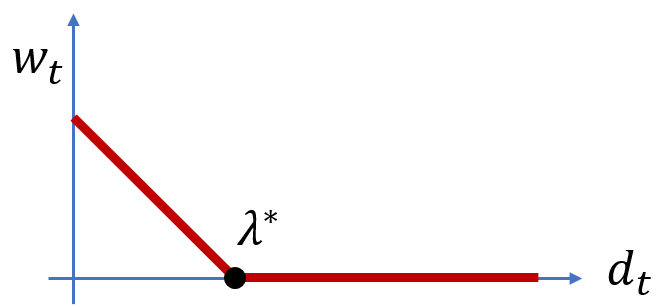

Moreover, there is a unique choice of such that the resulting weights sum up to . This against relationship is shown in Figure 4. This result is intuitive: as increases, the resulting weight we assign decreases, and if passes a certain threshold, it becomes large enough so that any reduction in variance it could provide is more than offset by its large bias, in which case we assign it a weight of .

Our Temporal Weighting algorithm is in Algorithm 2. During implementation, we adjust the relative importance of bias and variance by normalizing and scaling the graph distances (scaling factor 0.005 works well based on our empirical observation).

III-E Proposed Anomaly Detection Algorithm

Having obtained our weights , the remaining step is to compute our anomaly score, as shown in Algorithm 3.

We focus on 3 metrics from sensor data as indications of power system anomalies. These metrics were studied in [30] and found to be effective for detecting anomalies in power grid sensor data. In our setting, recall that for each sensor, we can obtain that contains changes of real and reactive power on the adjacent lines, over time. The 3 metrics are:

-

•

Edge anomaly metric: which measures the maximum line flow change among lines connected to the sensor. Let denote the set of lines connected to sensor :

-

•

Average anomaly metric: which measures the average line flow change on the lines connected to the sensor:

-

•

Diversion anomaly metric: which measures the standard deviation of line flow change over all lines connected to the sensor:

Intuitively, for each metric, we want to estimate a model of its normal behavior. To do this, we compute the weighted median and interquartile range (IQR)111IQR is the difference between 1st and 3rd quartiles of the distribution, and is commonly used as a robust measure of spread. of the detection metric, weighting the time points using our temporal weights . (Weighted) median and IQR are preferred choice of distribution parameters over the mean and variance for anomaly detection since they are robust measures of central tendency and statistical dispersion (i.e. they are less likely to be impacted by outliers)[62]. We can then estimate the anomalousness of the current time tick by computing the current value of a metric, then subtracting its weighted median and dividing by its IQR. The exact steps are given in Algorithm 3.

III-F Proposed Locally Sensitive Distance Measure

Motivation

In the previous section, we computed a single distance value between any pair of graphs. However, consider two graphs and in Figure 5 that are very different due to a small yellow localized region (e.g. in a single building that underwent heavy renovation). Hence, is large, indicating not to use data from when we analyse a time tick under . However, from the perspective of a single sensor (sensor 2) far away from the localized region, this sensor may experience little or no changes in the power system’s behavior, so that data from graph may have a similar distribution as data from graph , and so for this sensor (sensor 2) we can still use data from to improve anomaly detection performance. Hence, rather than computing a single distance , we compute a separate locally-sensitive distance specific to each sensor, which measures the amount of change between graphs and in the ‘local’ region to sensor . Clearly, the notion of ‘local regions’ must be carefully defined: we will define them based on LODF, recalling that LODF measures how much changes on one edge affect each other edge.

Intuitively, the local distance between two graphs with respect to sensor is large if the changed edges can potentially cause large power change nearby the sensor. Hence, given two graphs and with their transition state and a sensor of interest, the local graph distance contribution of line with respect to sensor can be calculated by multiplying the whole-grid-wide contribution with a weighing factor . This coefficient filters the power impact for sensor using the maximum power impact of line deletion on lines around this sensor:

where denotes the set of edges around sensor (e.g. in Figure 6, ), and denotes the LODF with as outage line and as observed line.)

Then, as before, the local graph distance with respect to sensor s is defined by summing up the local graph distance contributions of different line deletions from the graph:

III-G Statistical error analysis

This section focuses on a quantitative analysis of the performance of our method, through statistical error analysis.

Let denote the width of time window for analysis, then for sensor , the anomalousness of its observation is evaluated based on its previous data . The anomaly detection method works by assigning weights () to all the previous observations and an alarm is created if deviates by a certain threshold.

Here we investigate the properties of statistical error based on the following definitions and assumptions:

Assumption III.1 (Temporal independence).

For any sensor and time , its measured data is drawn from a Gaussian distribution independently from other time ticks, where accounts for all uncertainties caused by measurement noise, load/generation variation, weather uncertainty, etc.

Assumption III.2 (identical distribution conditioned on topology).

Given a certain topology and sensor , all data of under the same topology are drawn independently from the same distribution (i.e., for any two time ticks with the same topology , we have .)

Definition III.3 (Optimal graph distance).

For any time-series data of a sensor and its latest observation , denotes the graph distance between the graph at time and the graph at , i.e., . Then the optimal graph distance for satisfies , or equivalently, constant such that .

We first demonstrate that the statistical error can be bounded:

Theorem III.1 (Error bound).

Upon obtaining the error bound, here are some intuitive explanations of the upper bound being dependent on and :

-

•

: large value for this term indicates that the estimation method depends heavily on a particular prior data point with weight . This can lead to overfitting and as a result higher error (upper bound) due to high variance.

-

•

: large value for this term indicates that a prior data point from a very different distribution has been used for estimation, which can lead to higher error (upper bound) due to high bias.

Another question of interest to us is the properties in the limit of infinite data:

Theorem III.2 (Unbiased estimation under infinite data).

In the limit of infinite data, the statistical error limits at the lower bound:

and an unbiased estimate of the true distribution is obtainable using the previous samples, i.e.,

Detailed proofs of the two theorems are included in Appendix.A-B.

IV Experiments

We design experiments to answer the following questions:

-

•

Q1. Anomaly Detection Performance: how accurate is the anomaly detection from our method compared to other ML baselines?

-

•

Q2. Scalability: how do our algorithms scale with the graph size?

-

•

Q3. Practical Benefits: how can our algorithm enhance the standard practices (SE BDD) in today’s grid operator?

Our code and data are publicly available at https://github.com/bhooi/dynamic.git. Experiments were done on a 1.9 GHz Intel Core i7 laptop, 16 GB RAM running Microsoft Windows 10 Pro.

Case Data

We use 2 test cases: case2383 is an accurate reconstruction of part of the European high voltage network, and ACTIVSg25k is a synthetic network that mimics the Texas high-voltage grid in the U.S. The ACTIVSg25k represents a similarly sized system as the PJM (the largest independent service operator (ISO) in the U.S.) grid, which contains around 25 to 30k buses [63].

Selection of sensors and network observability: Due to the spatial impact of grid anomalies and the efficacy of anomaly metrics, full observability[64][65] and optimal sensor placement for observability[66] are not necessary for Dynwatch to perform. However, access to more sensors as inputs alongside optimal sensor selection[30] can improve the detection performance and help with localization of anomaly. To be conservative, for these experiments, we select random subsets of sensors (of varying sizes) as input. The good performance even with randomly selected sensor measurements validates the effectiveness of our method in selecting relevant time frames from historical data.

Threshold tuning: For a fair comparison of different methods, our experiment section, without using any threshold, compares the top K anomalies scored by each algorithm, where K is the number of anomalies simulated. However, in practical use, a detection threshold is required for the algorithm to identify an anomaly. A proper threshold can be either a fixed threshold from an empirical value or domain-knowledge or a learned threshold to facilitate optimal decision making. In particular, optimal threshold tuning needs to take class imbalance and asymmetric error into account. Since only a minority of instances are expected to be abnormal, there is an unbalanced nature of data. Moreover, as grid applications are safety-critical, mislabeling an anomaly as normal, i.e., false negatives (FN), could cause fatal consequences, while false positives (FP) which cause loss of ‘fidelity’ are less serious. Proper techniques for tuning a threshold offline include:

-

1.

Calculating the evaluation metric (e.g. F-measure which quantifies the balance of precision and recall) for each threshold to select the one that maximizes the metric.

-

2.

Plotting the ROC curve or precision-recall curve to select the threshold that gives the optimal balance.

-

3.

A cost approach that, when the cost of FP, FN, TP, TN are available, minimizes the average overall cost of a diagnostic test, yet domain-specific knowledge is needed for reasonable quantification of the costs.

IV-A Q1. Anomaly Detection Accuracy

In this section, we compare DynWatch against baseline anomaly detection approaches, while varying the number of sensors in the grid.

Experimental Settings

Starting with a particular test case as a base graph , we first create different topology scenarios where each of them deactivates a randomly chosen branch in the base graph. These subsequent network topologies represent the dynamic grid with topology changes due to operation and control. Then for each topology scenario, we use MatPower [63], a standard power grid simulator, to generate sets of synthetic measurements based on the load characteristics described in the following paragraph. As a result, the multivariate time series with time ticks mimics the real-world data setting where sensors receive measurements at each time tick , and the grid topology changes every time ticks. Finally, we sample random ticks out of as times when anomalies occur. Each of these anomalies is added by randomly deleting an edge on the corresponding topology.

Following [30], to generate an input time series of loads (i.e. real and reactive power at each node), we use the patterns estimated from two real datasets:

-

•

Carnegie Mellon University (CMU) campus load data recorded for days from July 29 to August 17, 2016;

-

•

Utility-provided 24 hour dataset of a real U.S. grid. See Section V-A for dataset description and statistical findings related to it.

to create synthetic time-series load based on a 5s interval, with the magnitude of daily load variation scaled to a predefined level, and with added Gaussian noise sampled from the extracted noise distribution [67]. The detailed data generation process is shown in Figure 7.

Given this input, each algorithm then returns a ranking of the anomalies. We evaluate this using standard metrics, AUC222AUC is the probability of correct ranking of a random “positive”-“negative” pair. (area under the ROC curve) and F-measure333F-measure is a trade-off between precision and recall. (), the latter computed on the top anomalies output by each algorithm.

Baselines

Dynamic graph anomaly detection approaches [35, 36, 38, 68] cannot be used as they consider graph structure only, but not sensor data. [37] allows sensor data but requires graphs with fully observed edge weights, which is inapplicable as detecting failed power lines with all sensors present reduces to checking if any edge has current equal to . Hence, instead, we compare DynWatch to GridWatch [30], an anomaly detection approach for sensors on a static graph, and the following multidimensional time-series based anomaly detection methods: Isolation Forests [29], Vector Autoregression (VAR) [24], Local Outlier Factor (LOF) [27], and Parzen Window [69]. Each uses the currents and voltages at the given sensors as features. For VAR, the norms of the residuals are used as anomaly scores; the remaining methods return anomaly scores directly. For Isolation Forests, we use trees (following the defaults in scikit-learn[70]). For VAR, following standard practice, we select the order by maximizing AIC. For LOF we use neighbors (following the default in scikit-learn), and we use neighbors for Parzen Window.

As shown in Figure 8, DynWatch clearly outperforms the baselines on both metrics, having an F-measure of higher than the best baseline.

IV-B Q2. Scalability

In this subsection, we seek to analyze the scalability of our DynWatch and DynWatch-Local. In reality, PJM, the largest ISO in the U.S., runs ACSE on a 28k bus model, performed every 1 min[10], thus any anomaly detection algorithm that takes significantly less than 1 min may provide valuable information to prevent wrong control decisions in real-time. The following results demonstrate the proposed method’s capability to achieve this.

Here, we generate test cases of different sizes by starting with the case2383 case and duplicating it times. After each duplication, edges are added to connect each node with its counterpart in the last duplication, so that the whole grid is connected. Then for each testcase, we generate 20 dynamic grids and sensor data with 1 randomly chosen sensor and 1200 time ticks, following the same settings as the previous sub-section. Finally, we measure

Figure 9 shows that our method is fast: even on a large case with 60k+ branches, both methods took less than 2s to apply anomaly detection on all 1200 time ticks of the sensor, corresponding to an average of less than 1.7ms per time tick per sensor. The ACTIVSg25k (similar to the largest real-time network in the US) has 32k+ branches, and thus run-time of anomaly detection at each time will be significantly less than 1 min. The figure also shows that our methods scale close to linearly with the grid size.

IV-C Q3. Practical Benefits

In this section, we explore how the proposed Dynwatch algorithm can improve the performance of the standard residual-based ACSE bad-data detection (BDD) method, by testing a type of grid-specific anomaly that SE BDD is known to fail against False Data Injection Attack (FDIA).

False Data Injection Attack (FDIA)[31] is a cyber-attack in which attackers manipulate the value of measurements according to the grid physical model such that the SE outputs incorrect grid estimates while ensuring that its residual does not change by much (ideally remains unchanged).

In this experiment, we construct an attack on a 14 bus network to mislead the operator into thinking that the load reduces by 20%. For any anomalous time tick , this is implemented by simulating power flow with the reduced load and generating measurements based on that.

The time-series measurement data and a comparison of anomaly scores are shown in Figure 1. Results show that the ACSE residual, which is metric for BDD reduced in value when anomalies occur (see the residual decrease in Figure 1 during anomalous operation shown by the dotted red line), implying that the standard SE BDD, along with any other residual-based method, will not be able to detect this coordinated attack.

In addition to standard ACSE BDD, we also implemented the auto-regression (VAR) method to detect grid anomalies. As the VAR algorithm does not consider dynamic graph properties of the power grid, it tends to create alarms on all abrupt measurement changes. This will easily lead to false positives since regular topological changes also result in sudden temporal change. This can be seen in the Figure 1 wherein during regular topology changes (shown in black dotted line) sudden spikes in VAR anomaly score are observed.

In comparison, our proposed method is able to detect all anomalies without False positives (FP). This indicates that the proposed algorithm is more likely to detect anomalies due to complex attack scenarios while being able to reduce the occurrences of false positives.

V Further Considerations

Some special conditions of interest are related to the application of our proposed method. They are discussed below.

V-A Realistic grid patterns

Here we explore the realistic grid pattern, using a utility-provided real-world dataset (for reasons of confidentiality, we cannot make this dataset public).

Dataset description- 24 hours worth of ACSE output data from a real utility in the Eastern Interconnect of the U.S. The dataset contains all operational data of the grid based on a 0.5-hour interval.

Here we document the statistical findings with the following notable observations:

-

•

Figure 10(a): Topology changes frequently in reality, thus our proposed method, which considers the impact of topology change, provides realistic values in handling time-series sensor data.

-

•

Figure 10(b): Real part of daily loads and generations change smoothly within a range of times their average values, with the generation following the load demand throughout the day.

-

•

Figure 11: of the time, an individual real part of load changes less than of its maximum daily value within a 30-min interval; less than within a 2-hour interval; and less than within 24 hours.

We observed similar behavior for reactive power in the system as well.

V-B Non-synchronization of measurements

In the worst possible case of measurement non-synchronization, the measurements and status data can be collected at such a different time that the measurements and reference topology are inconsistent with each other. If this happens, our method should detect it as an anomaly, since they are equivalent to topological or measurement errors.

However, the impact of this event is low. In case “uncorrected” unsynchronized measurements or topology exists, then that is an error that would disrupt the grid operator’s state estimation. In practice, the grid has some mechanisms to prevent this from happening:

-

1.

Both status data and analog measurements are sampled very frequently: status data are updated upon change of status in PJM and every 4s in ISO-NE; analog measurements are updated every 10s in PJM. Considering that the frequency of topology change is low, the high acquisition frequency is likely to guarantee all information is up to date.

-

2.

Topology estimation (TE) can check the consistency between switch status and analog measurements: e.g., if the switch is observed as ‘open’ but the current measurement on it is non-zero, then this status data is wrong and should be corrected. This processing is within the TE algorithms in the control room.

Therefore, it is likely that we can still obtain a good reference topology not affected by the non-synchronization.

V-C Abrupt changes in aggregated load or generation

Considering that: 1) the load changes slowly following a daily cycle; 2) conventional generators are re-dispatched every 15 min (less frequent than data acquisition we have sufficient time ticks before each re-dispatch) and each re-dispatch is limited by ramp rate; 3) renewable forecasts are improving and the auxiliary services like batteries are making them more stable and “firm” sources of energy, it’s still reasonable to assume that for any time , its recent previous measurements can provide meaningful information.

What if sudden load change happens? At transmission grid level the load is aggregated at high-voltage nodes because of which abrupt change in load is uncommon. However, there exist rare events like load shedding or an unusual re-dispatch where there might be a sudden redistribution of power in the grid in a very short interval. In case of these rare events, the proposed method may detect the abrupt change as an anomaly. In reality, these events may not be anomalous and will be misclassified as anomalies. During such instances, we will rely on the operator to clear these false positives in their decision-making process where additional trustworthy information is available. This is reasonable since these events are rare and the operators are well aware of the situation of active ongoing load-shedding in a region. In case that the abrupt load change is unexpected to the operator, an indicator for anomalous behavior might be a rather useful one.

VI Conclusion

In this paper, we proposed DynWatch, an online algorithm that accurately detects anomalies using sensor data on a changing graph (grid). DynWatch applies a similarity-based approach to measure how much the graph changes over time, with which we assign greater weight to previous graphs which are similar to the current graph. We use a domain-aware graph similarity measure based on Line Outage Distribution Factors (LODF), which exploit physics-based modeling of how changes in one line affect other lines in the graph.

By plugging in different graph similarity measures, our approach could be applied to other domains. Hence, future work could study how sensitive various detectors are for detecting anomalies in graph-based sensor data from different domains.

-

1.

Problem Formulation and Algorithm: we propose a novel and practical problem formulation, of anomaly detection using sensors on a changing graph. For this problem, we propose a graph-similarity based approach, and a domain-aware similarity measure based on Line Outage Distribution Factors (LODF).

-

2.

Effectiveness: Our DynWatch algorithm outperforms existing approaches in accuracy by 20 or more (F-measure) in experiments.

-

3.

Scalability: DynWatch is fast, taking 1.7ms on average per time tick per sensor on a 60k edge graph, and scaling linearly in the size of the graph.

Reproducibility: our code and data are publicly available at https://github.com/bhooi/dynamic.git.

Acknowledgment

Work in this paper is supported in part by C3.ai Inc. and Microsoft Corporation.

References

- [1] S. M. Amin, “Us grid gets less reliable [the data],” IEEE Spectrum, vol. 48, no. 1, pp. 80–80, 2011.

- [2] N. Perlroth, “Hackers are targeting nuclear facilities, homeland security dept. and fbi say,” New York Times, vol. 6, 2017.

- [3] D. U. Case, “Analysis of the cyber attack on the ukrainian power grid,” Electricity Information Sharing and Analysis Center (E-ISAC), vol. 388, 2016.

- [4] R. M. Lee, M. Assante, and T. Conway, “Crashoverride: Analysis of the threat to electric grid operations,” Dragos Inc., March, 2017.

- [5] F. Maghsoodlou, R. Masiello, and T. Ray, “Energy management systems,” IEEE Power and Energy Magazine, vol. 2, no. 5, pp. 49–57, 2004.

- [6] P. Manual, “1-control center and data exchange requirements,” 2011.

- [7] “Iso new england operating procedure 14 appendix f,” 2020. [Online]. Available: https://www.iso-ne.com/static-assets/documents/rules_proceds/operating/isone/op14/op14f_rto_final.pdf

- [8] F. C. Schweppe and J. Wildes, “Power system static-state estimation, part i: Exact model,” IEEE Transactions on Power Apparatus and systems, no. 1, pp. 120–125, 1970.

- [9] E. Handschin, F. C. Schweppe, J. Kohlas, and A. Fiechter, “Bad data analysis for power system state estimation,” IEEE Transactions on Power Apparatus and Systems, vol. 94, no. 2, pp. 329–337, 1975.

- [10] “Pjm manual 12: Balancing operations,” https://www.pjm.com/~/media/documents/manuals/m12.ashx, 2020.

- [11] M. Göl and A. Abur, “Lav based robust state estimation for systems measured by pmus,” IEEE Transactions on Smart Grid, vol. 5, no. 4, pp. 1808–1814, 2014.

- [12] L. Mili, V. Phaniraj, and P. J. Rousseeuw, “Least median of squares estimation in power systems,” IEEE Transactions on Power Systems, vol. 6, no. 2, pp. 511–523, 1991.

- [13] R. C. Pires, A. S. Costa, and L. Mili, “Iteratively reweighted least-squares state estimation through givens rotations,” IEEE Transactions on Power Systems, vol. 14, no. 4, pp. 1499–1507, 1999.

- [14] S. Li, A. Pandey, and L. Pileggi, “A wlav-based robust hybrid state estimation using circuit-theoretic approach,” arXiv preprint arXiv:2011.06021, 2020.

- [15] Y. Weng, M. D. Ilić, Q. Li, and R. Negi, “Convexification of bad data and topology error detection and identification problems in ac electric power systems,” IET Generation, Transmission & Distribution, vol. 9, no. 16, pp. 2760–2767, 2015.

- [16] A. Jovicic, M. Jereminov, L. Pileggi, and G. Hug, “An equivalent circuit formulation for power system state estimation including pmus,” in 2018 North American Power Symposium (NAPS). IEEE, 2018, pp. 1–6.

- [17] S. Li, A. Pandey, S. Kar, and L. Pileggi, “A circuit-theoretic approach to state estimation,” in 2020 IEEE PES Innovative Smart Grid Technologies Europe (ISGT-Europe), 2020, pp. 1126–1130.

- [18] A. Monticelli, State estimation in electric power systems: a generalized approach. Springer Science & Business Media, 2012.

- [19] M. Prais and A. Bose, “A topology processor that tracks network modifications,” IEEE Transactions on Power Systems, vol. 3, no. 3, pp. 992–998, 1988.

- [20] O. Alsac, N. Vempati, B. Stott, and A. Monticelli, “Generalized state estimation,” IEEE Transactions on power systems, vol. 13, no. 3, pp. 1069–1075, 1998.

- [21] F. F. Wu and W.-H. Liu, “Detection of topology errors by state estimation (power systems),” IEEE Transactions on Power Systems, vol. 4, no. 1, pp. 176–183, 1989.

- [22] E. M. Lourenço, E. P. Coelho, and B. C. Pal, “Topology error and bad data processing in generalized state estimation,” IEEE Transactions on Power Systems, vol. 30, no. 6, pp. 3190–3200, 2014.

- [23] B. Donmez, G. Scioletti, and A. Abur, “Robust state estimation using node-breaker substation models and phasor measurements,” in 2019 IEEE Milan PowerTech. IEEE, 2019, pp. 1–6.

- [24] J. D. Hamilton, Time series analysis. Princeton university press Princeton, 1994, vol. 2.

- [25] Z. Wang, J. Yang, Z. ShiZe, and C. Li, “Robust regression for anomaly detection,” in 2017 IEEE International Conference on Communications (ICC). IEEE, 2017, pp. 1–6.

- [26] S. Yi, J. Ju, M.-K. Yoon, and J. Choi, “Grouped convolutional neural networks for multivariate time series,” arXiv preprint arXiv:1703.09938, 2017.

- [27] M. M. Breunig, H.-P. Kriegel, R. T. Ng, and J. Sander, “Lof: identifying density-based local outliers,” in ACM sigmod record, vol. 29, no. 2. ACM, 2000, pp. 93–104.

- [28] M. Amer and S. Abdennadher, “Comparison of unsupervised anomaly detection techniques,” Bachelor’s Thesis, 2011.

- [29] F. T. Liu, K. M. Ting, and Z.-H. Zhou, “Isolation forest,” in ICDM. IEEE, 2008, pp. 413–422.

- [30] B. Hooi, D. Eswaran, H. A. Song, A. Pandey, M. Jereminov, L. Pileggi, and C. Faloutsos, “Gridwatch: Sensor placement and anomaly detection in the electrical grid,” in ECML-PKDD. Springer, 2018, pp. 71–86.

- [31] G. Liang, J. Zhao, F. Luo, S. R. Weller, and Z. Y. Dong, “A review of false data injection attacks against modern power systems,” IEEE Transactions on Smart Grid, vol. 8, no. 4, pp. 1630–1638, 2016.

- [32] E. Keogh, J. Lin, S.-H. Lee, and H. Van Herle, “Finding the most unusual time series subsequence: algorithms and applications,” Knowledge and Information Systems, vol. 11, no. 1, pp. 1–27, 2007.

- [33] S. Ramaswamy, R. Rastogi, and K. Shim, “Efficient algorithms for mining outliers from large data sets,” in ACM Sigmod Record, vol. 29, no. 2. ACM, 2000, pp. 427–438.

- [34] M. Jones, D. Nikovski, M. Imamura, and T. Hirata, “Anomaly detection in real-valued multidimensional time series,” in International Conference on Bigdata/Socialcom/Cybersecurity. Stanford University, ASE. Citeseer, 2014.

- [35] L. Akoglu, M. McGlohon, and C. Faloutsos, “Oddball: Spotting anomalies in weighted graphs,” in PAKDD. Springer, 2010, pp. 410–421.

- [36] Z. Chen, W. Hendrix, and N. F. Samatova, “Community-based anomaly detection in evolutionary networks,” Journal of Intelligent Information Systems, vol. 39, no. 1, pp. 59–85, 2012.

- [37] M. Mongiovi, P. Bogdanov, R. Ranca, E. E. Papalexakis, C. Faloutsos, and A. K. Singh, “Netspot: Spotting significant anomalous regions on dynamic networks,” in SDM. SIAM, 2013, pp. 28–36.

- [38] M. Araujo, S. Papadimitriou, S. Günnemann, C. Faloutsos, P. Basu, A. Swami, E. E. Papalexakis, and D. Koutra, “Com2: fast automatic discovery of temporal (‘comet’) communities,” in PAKDD. Springer, 2014, pp. 271–283.

- [39] L. Akoglu and C. Faloutsos, “Event detection in time series of mobile communication graphs,” in Army science conference, 2010, pp. 77–79.

- [40] C. C. Aggarwal, Y. Zhao, and S. Y. Philip, “Outlier detection in graph streams,” in Data Engineering (ICDE), 2011 IEEE 27th International Conference on. IEEE, 2011, pp. 399–409.

- [41] S. Ranshous, S. Harenberg, K. Sharma, and N. F. Samatova, “A scalable approach for outlier detection in edge streams using sketch-based approximations,” in SDM. SIAM, 2016, pp. 189–197.

- [42] J. Valenzuela, J. Wang, and N. Bissinger, “Real-time intrusion detection in power system operations,” IEEE Transactions on Power Systems, vol. 28, no. 2, pp. 1052–1062, 2012.

- [43] D. Falcao and S. De Assis, “Linear programming state estimation: error analysis and gross error identification,” IEEE Transactions on Power Systems, vol. 3, no. 3, pp. 809–815, 1988.

- [44] N. G. Bretas and A. S. Bretas, “A two steps procedure in state estimation gross error detection, identification, and correction,” International Journal of Electrical Power & Energy Systems, vol. 73, pp. 484–490, 2015.

- [45] R. Mitchell and R. Chen, “Behavior-rule based intrusion detection systems for safety critical smart grid applications,” IEEE Transactions on Smart Grid, vol. 4, no. 3, pp. 1254–1263, 2013.

- [46] S. Papadimitriou, J. Sun, and C. Faloutsos, “Streaming pattern discovery in multiple time-series,” 2005.

- [47] P. Galeano, D. Peña, and R. S. Tsay, “Outlier detection in multivariate time series by projection pursuit,” Journal of the American Statistical Association, vol. 101, no. 474, pp. 654–669, 2006.

- [48] R. Baragona and F. Battaglia, “Outliers detection in multivariate time series by independent component analysis,” Neural computation, vol. 19, no. 7, pp. 1962–1984, 2007.

- [49] H. Lu, Y. Liu, Z. Fei, and C. Guan, “An outlier detection algorithm based on cross-correlation analysis for time series dataset,” IEEE Access, vol. 6, pp. 53 593–53 610, 2018.

- [50] A. Blázquez-García, A. Conde, U. Mori, and J. A. Lozano, “A review on outlier/anomaly detection in time series data,” arXiv preprint arXiv:2002.04236, 2020.

- [51] D. J. Brueni and L. S. Heath, “The pmu placement problem,” SIAM Journal on Discrete Mathematics, vol. 19, no. 3, pp. 744–761, 2005.

- [52] L. A. Zager and G. C. Verghese, “Graph similarity scoring and matching,” Applied mathematics letters, vol. 21, no. 1, pp. 86–94, 2008.

- [53] L. Akoglu, H. Tong, and D. Koutra, “Graph based anomaly detection and description: a survey,” Data mining and knowledge discovery, vol. 29, no. 3, pp. 626–688, 2015.

- [54] P. Shoubridge, M. Kraetzl, W. Wallis, and H. Bunke, “Detection of abnormal change in a time series of graphs,” Journal of Interconnection Networks, vol. 3, no. 01n02, pp. 85–101, 2002.

- [55] K. M. Kapsabelis, P. J. Dickinson, and K. Dogancay, “Investigation of graph edit distance cost functions for detection of network anomalies,” Anziam Journal, vol. 48, pp. C436–C449, 2006.

- [56] M. E. Gaston, M. Kraetzl, and W. D. Wallis, “Using graph diameter for change detection in dynamic networks,” Australasian Journal of Combinatorics, vol. 35, pp. 299–311, 2006.

- [57] J. Jost and M. P. Joy, “Evolving networks with distance preferences,” Physical Review E, vol. 66, no. 3, p. 036126, 2002.

- [58] L. Zager, “Graph similarity and matching,” Ph.D. dissertation, Massachusetts Institute of Technology, 2005.

- [59] S. V. N. Vishwanathan, N. N. Schraudolph, R. Kondor, and K. M. Borgwardt, “Graph kernels,” Journal of Machine Learning Research, vol. 11, pp. 1201–1242, 2010.

- [60] H. Bunke, P. J. Dickinson, M. Kraetzl, and W. D. Wallis, A graph-theoretic approach to enterprise network dynamics. Springer Science & Business Media, 2007, vol. 24.

- [61] A. J. Wood, B. F. Wollenberg, and G. B. Sheblé, Power generation, operation, and control. John Wiley & Sons, 2013.

- [62] F. R. Hampel, “Robust statistics: A brief introduction and overview,” in Research report/Seminar für Statistik, Eidgenössische Technische Hochschule (ETH), vol. 94. Seminar für Statistik, Eidgenössische Technische Hochschule, 2001.

- [63] R. D. Zimmerman, C. E. Murillo-Sánchez, and R. J. Thomas, “Matpower: Steady-state operations, planning, and analysis tools for power systems research and education,” IEEE Transactions on power systems, vol. 26, no. 1, pp. 12–19, 2011.

- [64] E. Castillo, A. J. Conejo, R. E. Pruneda, and C. Solares, “Observability analysis in state estimation: A unified numerical approach,” IEEE Transactions on Power Systems, vol. 21, no. 2, pp. 877–886, 2006.

- [65] M. C. de Almeida, E. N. Asada, and A. V. Garcia, “Power system observability analysis based on gram matrix and minimum norm solution,” IEEE Transactions on Power Systems, vol. 23, no. 4, pp. 1611–1618, 2008.

- [66] S. Chakrabarti and E. Kyriakides, “Optimal placement of phasor measurement units for power system observability,” IEEE Transactions on power systems, vol. 23, no. 3, pp. 1433–1440, 2008.

- [67] H. A. Song, B. Hooi, M. Jereminov, A. Pandey, L. Pileggi, and C. Faloutsos, “Powercast: Mining and forecasting power grid sequences,” in ECML-PKDD. Springer, 2017, pp. 606–621.

- [68] N. Shah, D. Koutra, T. Zou, B. Gallagher, and C. Faloutsos, “Timecrunch: Interpretable dynamic graph summarization,” in KDD. ACM, 2015, pp. 1055–1064.

- [69] E. Parzen, “On estimation of a probability density function and mode,” The annals of mathematical statistics, vol. 33, no. 3, pp. 1065–1076, 1962.

- [70] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay, “Scikit-learn: Machine learning in Python,” Journal of Machine Learning Research, vol. 12, pp. 2825–2830, 2011.

Appendix A Appendix

A-A Comparison of graph distance measures

To quantitatively justify the effectiveness of our proposed graph distance, we compared the proposed distance with other traditional measures applicable to power grids:

-

•

Simple GED[54]: the distance between two graphs is equal to the number of changed edges.

-

•

Variant of GED with line admittance used as weights assigned to the changed edges. Admittance is used here because the larger the admittance, the more likely the edge has large power flows on it, meaning it is important to the grid.

-

•

Equivalent conductance-based measure: the distance between two graphs is equal to the sum of the equivalent conductance of all changed edges. Equivalent conductance is able to take more consideration of the system-wise impact of each edge.

Result in Figure 12 shows our proposed measure outperforms the baselines above. Here the time series data is generated using the pattern from the utility-provided data set, following the process described in Figure 7.

A-B Proofs of statistical error analysis

Here we will prove the theorems demonstrated in Section III-G.

See III.1

Proof.

| (1) | ||||

| (2) | ||||

| (3) | ||||

| (4) |

Based on Assumption III.1, we have , and thus

Therefore we have

| (5) | ||||

| (6) | ||||

| (7) | ||||

| (8) | ||||

| (9) | ||||

| (10) | ||||

| (11) |

Similarly based on Assumption III.1, it is easy to show that

Thus we have

| (12) | ||||

| (13) | ||||

| (14) |

Based on Assumption III.1 and for , the variance term can be upper bounded by:

Further making use of Assumption III.2, it is easy to get an upper bound for the bias2 term:

Finally, as based on the assumption that , we are able to upper bound the statistical error as:

| (15) | |||

| (16) | |||

| (17) |

Meanwhile the lower bound is also obvious:

| (18) |

∎

See III.2

Proof.

In the limit of infinite data, there exist infinite data with the same topology as , thus it is possible to find the time series data such that are drawn independently from the same distribution, s.t, and .

Also we have,

And based on Bessel’s correction, it is easy to get

∎

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/df249d3a-a666-4d3e-9cfb-25724122e557/bio_Shimiao.jpg) |

Shimiao Li received the B.E. degree in electrical engineering from Tianjin University, China, in 2018. She is currently a Ph.D. candidate in the Department of Electrical and Computer Engineering (ECE) at Carnegie Mellon University, where she is advised by Lawrence Pileggi. Her research interests include analytical methods for grid operation and optimization, as well as physics-informed machine learning for real-world grid cyber-security, reliability and optimization appliations. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/df249d3a-a666-4d3e-9cfb-25724122e557/bio_Amrit.jpg) |

Amritanshu Pandey was born in Jabalpur, India. He received the M.Sc. and Ph.D. degree in Electrical Engineering in 2012 and 2019, respectively, from Carnegie Mellon University, Pittsburgh, PA, USA. He is currently a Special Faculty in the Electrical and Computer Engineering department at Carnegie Mellon University. He has previously worked at Pearl Street Technologies, Inc. as Senior Research Scientist and as an Engineer at MPR Associates, Inc. He develops first principles-based physics driven and ML-based data driven methods and models for energy systems that are expressive (i.e., capture sufficient physical behavior accurately) and efficient in terms of speed and scalability. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/df249d3a-a666-4d3e-9cfb-25724122e557/bio_Bryan.jpg) |

Bryan Hooi received his Ph.D. in Machine Learning from Carnegie Mellon University, where he was advised by Christos Faloutsos. He received the M.S. in Computer Science and the B.S. in Mathematics from Stanford University. He is currently an Assistant Professor in the School of Computing and the Institute of Data Science in National University of Singapore. His research interests include scalable machine learning, deep learning, graph algorithms, anomaly detection, and biomedical applications of AI. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/df249d3a-a666-4d3e-9cfb-25724122e557/bio_Christos.jpg) |

Christos Faloutsos is a Professor at Carnegie Mellon University and an Amazon Scholar. He is the recipient of the Fredkin Professorship in Artificial Intelligence (2020); he has received the Presidential Young Investigator Award by the National Science Foundation (1989), the Research Contributions Award in ICDM 2006, the SIGKDD Innovations Award (2010), the PAKDD Distinguished Contributions Award (2018), 29 “best paper” awards (including 8 “test of time” awards), and four teaching awards. Eight of his advisees or co-advisees have attracted KDD or SCS dissertation awards. He is an ACM Fellow, he has served as a member of the executive committee of SIGKDD; he has published over 400 refereed articles, 17 book chapters and three monographs. He holds 8 patents (and several more are pending), and he has given over 50 tutorials and over 25 invited distinguished lectures. His research interests include large-scale data mining with emphasis on graphs and time sequences; anomaly detection, tensors, and fractals. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/df249d3a-a666-4d3e-9cfb-25724122e557/bio_larry.jpg) |

Lawrence Pileggi is the Tanoto professor and Head of electrical and computer engineering at Carnegie Mellon University, and has previously held positions at Westinghouse Research and Development and the University of Texas at Austin. He received his Ph.D. in Electrical and Computer Engineering from Carnegie Mellon University in 1989. His research interests include various aspects of digital and analog integrated circuit design, and simulation, optimization and modeling of electric power systems. He was a co-founder of Fabbrix Inc., Extreme DA, and Pearl Street Technologies. He has received various awards, including Westinghouse corporation’s highest engineering achievement award, the Semiconductor Research Corporation (SRC) Technical Excellence Awards in 1991 and 1999, the FCRP inaugural Richard A. Newton GSRC Industrial Impact Award, the SRC Aristotle award in 2008, the 2010 IEEE Circuits and Systems Society Mac Van Valkenburg Award, the ACM/IEEE A. Richard Newton Technical Impact Award in Electronic Design Automation in 2011, the Carnegie Institute of Technology B.R. Teare Teaching Award for 2013, and the 2015 Semiconductor Industry Association (SIA) University Researcher Award. He is a co-author of ”Electronic Circuit and System Simulation Methods,” McGraw-Hill, 1995 and ”IC Interconnect Analysis,” Kluwer, 2002. He has published over 400 conference and journal papers and holds 40 U.S. patents. He is a fellow of IEEE. |