Dynamic Factor Analysis with Dependent Gaussian Processes for High-Dimensional Gene Expression Trajectories

Abstract

The increasing availability of high-dimensional, longitudinal measures of gene expression can facilitate understanding of biological mechanisms, as required for precision medicine. Biological knowledge suggests that it may be best to describe complex diseases at the level of underlying pathways, which may interact with one another. We propose a Bayesian approach that allows for characterising such correlation among different pathways through Dependent Gaussian Processes (DGP) and mapping the observed high-dimensional gene expression trajectories into unobserved low-dimensional pathway expression trajectories via Bayesian Sparse Factor Analysis. Our proposal is the first attempt to relax the classical assumption of independent factors for longitudinal data and has demonstrated a superior performance in recovering the shape of pathway expression trajectories, revealing the relationships between genes and pathways, and predicting gene expressions (closer point estimates and narrower predictive intervals), as demonstrated through simulations and real data analysis. To fit the model, we propose a Monte Carlo Expectation Maximization (MCEM) scheme that can be implemented conveniently by combining a standard Markov Chain Monte Carlo sampler and an R package GPFDA (Konzen et al.,, 2021), which returns the maximum likelihood estimates of DGP hyperparameters. The modular structure of MCEM makes it generalizable to other complex models involving the DGP model component. Our R package DGP4LCF that implements the proposed approach is available on CRAN.

Keywords: Dependent Gaussian processes; High-dimensional biomarker expression trajectories; Monte Carlo Expectation Maximization; Multivariate longitudinal data; Pathways; Sparse factor analysis.

1 Introduction

The development of high-throughput technologies has enabled researchers to collect high-dimensional biomarker data repeatedly over time, such as transcriptome, metabolome and proteome, facilitating the discovery of disease mechanisms. Biological knowledge suggests that it may be best to describe complex diseases at the level of pathways, rather than the level of individual biomarkers (Richardson et al.,, 2016). A biological pathway is a series of interactions among molecules that achieves a certain biological function. Pathways are often summarized by activity scores derived from expression values of corresponding biomarker set (Temate-Tiagueu et al.,, 2016); such scores form a basis of making further comparisons between people with different clinical statuses (Kim et al.,, 2021).

This work was motivated by longitudinal measurements of gene expression in a human challenge study, where the main goal is to understand biological mechanism of influenza virus infection within the host, which provides a basis for developing novel diagnostic approaches for differentiating viral respiratory infection from respiratory disease caused by other pathogens (Zaas et al.,, 2009). In brief, healthy individuals were inoculated with influenza virus H3N2, and then blood samples were collected at regular time intervals until the individuals were discharged after a fixed period of 7 days. The blood samples were assayed by DNA microarray, to produce gene expression values for genes. Binary labels denoting clinical status (symptomatic or asymptomatic) for each individual were also recorded.

To handle such high-dimensional data, one commonly used dimension-reduction technique is factor analysis (FA). In the context of our motivating H3N2 example, FA maps the observed high-dimensional gene expression data to a latent low-dimensional pathway expression representation (Carvalho et al.,, 2008). One classical assumption of FA is the independence among latent factors, which implies expressions of pathways are uncorrelated. However, it has been shown that pathways often interact with one another to achieve complex biological functions (Hsu and Yang,, 2012), so this assumption may not hold biologically.

There have been attempts to relax this assumption of independence for cross-sectional data. Oblique rotation has been proposed as an alternative to standard orthogonal rotation under the frequentist framework (Costello and Osborne,, 2019), and Conti et al., (2014) considered correlated factors within a Bayesian framework. However, for longitudinal data, no available method can characterize the potential cross-correlation between factor trajectories. To our knowledge, this paper is the first attempt to address this gap. Specifically, we model the latent factor trajectories using dependent Gaussian Processes (DGP), a tool from the machine learning community that allows for estimating cross-correlations and borrowing strength from correlated factors. Compared to an approach that assumes independence between factor trajectories, our approach performs better at recovering the shape of latent factor trajectories, estimating the relationship between genes and pathways, and predicting future gene expression.

In addition to the modeling innovation, another contribution is the algorithm developed for estimating hyperparameters of the DGP model when it is embedded within another model. To obtain the maximum likelihood estimate (MLE) of DGP hyperparameters, we developed a Monte Carlo Expectation Maximization (MCEM) algorithm, which can be conveniently implemented by combining an existing R package, GPFDA (Konzen et al.,, 2021), with a standard Markov Chain Monte Carlo (MCMC) sampler. Our R package DGP4LCF (Dependent Gaussian Processes for Longitudinal Correlated Factors) that implements the proposed method is available on CRAN.

The remainder of the article is organized as follows. In Section 2, we review Bayesian sparse factor analysis (BSFA) and DGP, then propose our integrated model based on them. In Section 3, we introduce the inference method for the proposed model. We explore the empirical performance of our proposed approach for prediction of gene expression, estimation of gene-pathway relationships and the shape of pathway expression trajectories under several simulated factor generation mechanisms in Section 4. In Section 5, we compare our results on real data with those of a previous analysis in Chen et al., (2011). We conclude with a discussion on future research directions in Section 6.

2 Model

Our proposed model is based on BSFA and DGP. Therefore, before presenting the proposed model in Section 2.3, we first introduce BSFA in Section 2.1 and DGP in Section 2.2.

Let denote the th measured time point of the th individual, , , where and are the number of subjects and subject-specific time points, respectively. At time , is the th gene expression, , where is the number of genes. We seek to describe these data in terms of latent factors/pathways , . In practice, we expect to be much smaller than . Throughout this paper we assume that is pre-specified and fixed (see Section 2.4 for discussion on the choice of ).

2.1 Uncovering sparse factor structure via BSFA

BSFA is an established approach to uncover a sparse factor structure. Sparsity stems from the fact that each pathway (i.e., factor) only involves a small proportion of genes (i.e., observed variable). Mathematically, BSFA connects observations with the unobserved latent factors via a factor loading matrix , where each element quantifies the extent to which the th gene expression is related to the th pathway expression, with larger absolute values indicating a stronger loading of the gene expression on the pathway expression.

| (1) |

where is the intercept term for the th gene of the th individual (hereafter the “subject-gene mean”), is the residual error. We assume is constant across all time points.

We incorporate the prior belief of sparsity by imposing a sparsity-inducing prior distribution on . Specifically we adopt point-mass mixture priors (Carvalho et al.,, 2008) because we want the model to shrink insignificant parameters completely to zero, without the need to further set up a threshold for inclusion, as in continuous shrinkage priors (Bhattacharya and Dunson,, 2011). The point-mass mixture prior is introduced by first decomposing , where the binary variable indicates inclusion and the continuous variable denotes the regression coefficient. We then specify a Bernoulli-Beta prior for with , and a Normal-Inverse-Gamma prior for with , , where and are pre-specified positive constants. An a priori belief about sparsity can be represented via , which controls the proportion of genes that contributes to the th pathway. If , meaning that the th gene does not relate to the th pathway, then the corresponding loading .

We assign normal priors to the subject-gene means , where is fixed to the mean of the th gene expression across all time points of all people, and the residuals ; inverse gamma priors to the variances and , where are pre-specified positive constants.

To implement the BSFA model, the software BFRM has been developed (Carvalho et al.,, 2008). However, the current version of BFRM has two major limitations. First, it only returns point estimates of parameters, without any quantification of uncertainty. Second, it can handle only cross-sectional data; therefore it does not account for within-individual correlation when supplied with longitudinal data.

2.2 Modeling correlated, time-dependent factor trajectories via DGP

Several approaches treating factor trajectories , as functional data have been proposed, including spline functions (Ramsay et al.,, 2009), differential equations (Ramsay and Hooker,, 2019), autoregressive models (Penny and Roberts,, 2002), and Gaussian Processes (GP)(Shi and Choi,, 2011). As mentioned previously, we are interested in incorporating the cross-correlation among different factors into the model. GP are well-suited to this task because DGP can account for the inter-dependence of factors in a straight-forward manner. Indeed, the DGP model has been widely applied to model dependent multi-output time series in the machine learning community (Alvarez and Lawrence,, 2011), where it is also known as “multitask learning”. Sharing information between tasks using DGP can improve prediction compared to using independent Gaussian Processes (IGP) (Bonilla et al.,, 2007; Cheng et al.,, 2020). DGP have also been used to model correlated, multivariate spatial data in the field of geostatistics, improving prediction performance over IGP (Dey et al.,, 2020).

The main difficulty with DGP modeling is how to appropriately define cross-covariance functions that imply a positive definite covariance matrix. Liu et al., (2018) reviewed existing strategies developed to address this issue and found, through simulation studies, that no single approach outperformed all others in all scenarios. When the intent was to improve the predictions of all the outputs jointly (such is our case here), the kernel convolution framework (KCF)(Boyle and Frean, 2005a, ) was among the best performers. The KCF has also been widely employed by other researchers (Alvarez and Lawrence,, 2011; Sofro et al.,, 2017). Therefore, we adopt the KCF strategy for DGP modeling here, which assumes that the correlation between factors is the same at each time point. A detailed description of the KCF can be found in Supplementary A.2.

The distribution of induced under KCF is a multivariate normal distribution (MVN) with mean vector and covariance matrix fully determined by parameters of kernel functions and a noise parameter; we use to denote all of them hereafter. The covariance matrix contains the information of both the auto-correlation for each single process and the cross-correlation between different processes. In this paper, we focus on the cross-correlation, which correspond to interactions across different biological pathways that have been ignored in previous analysis (Chen et al.,, 2011).

The KCF can be implemented via the R package GPFDA, which outputs the MLE for DGP hyperparameters given measurements of the processes . Its availability inspired and facilitated the algorithm developed for the proposed model. GPFDA assumes all input processes are measured at common time points, which is often unrealistic in practice; thus, in Section 3, we will adapt GPFDA to subject-specific time points.

2.3 Proposed integrated model

We propose a model that combines BSFA and DGP (referred as “BSFA-DGP” hereafter), and present it in matrix notation below. To accommodate irregularly measured time points across individuals, we first introduce the vector of all unique observation times across all individuals, and denote its length as ; each is a vector of observed time points for the th individual. We assume the irregularity of measurements is sufficiently limited such that Bayesian imputation is appropriate. Let be the matrix of pathway expression of the th individual, with denoting the th factor’s expression across all observation times ; and let be the sub-matrices of , denoting pathway expression at times when gene expression of the th individual are observed and missing, respectively. Let denote the column vector obtained by stacking the columns of matrix on top of one another; similar definitions apply to and .

Let be the matrix of gene expression measurements at the observation times for the th individual, with denoting the th gene’s trajectory; and correspondingly let be the matrix of subject-gene means, with , where is a -dimensional column vector consisting of the scalar . Furthermore, let be the matrix of regression coefficients and be the matrix of inclusion indicators,

| (2) |

where denotes element-wise matrix multiplication, is the covariance matrix induced via the KCF modeling, and is the residual matrix. The prior distributions for components of , , , and have been described in Section 2.1.

2.4 Choosing the number of factors

In practice, the choice of is primarily based on previous knowledge about the data at hand. Where this is unavailable, we recommend trying several different values for and comparing results, including quantitative metrics (such as prediction performance adopted in this work) and practical interpretation. Note that it may be possible to identify when is unnecessarily large through factor loading estimates (as we will show in the simulation), since redundant factors will not have non-zero loadings.

3 Inference

In this section, we describe inference for our proposed model. We develop an MCEM framework to obtain the MLE for DGP hyperparameters in Section 3.1 and the framework is summarized in Algorithm 1. For fixed DGP hyperparameter values, we propose a Gibbs sampler for the other variables in the model, denoted by , where , , , , , (Section 3.2). This sampler serves two purposes. First, within the MCEM algorithm (called “Gibbs-within-MCEM” hereafter), it generates samples for approximating the expectation in Equation 5, which is used for updating estimates of . Second, after the final DGP estimate, denoted by , is obtained from MCEM, we proceed with implementing the sampler (called “Gibbs-after-MCEM” hereafter) to find the final posterior distribution of interest, which is , where represents observed gene expression. We discuss how we handle identifiability challenges in Section 3.3.

3.1 MCEM for estimating cross-correlation determined by DGP hyperparameters

3.1.1 Options for Estimating DGP Hyperparameters

To estimate , two strategies have been widely adopted: Fully Bayesian (FB) and Empirical Bayes (EB). The former proceeds by assigning prior distributions to to account for our uncertainty. The latter proceeds by fixing to reasonable values based on the data; for example, we can set them to the MLE. Compared to FB, EB sacrifices the quantification of uncertainty for a lower computational cost. Here, we adopt the EB approach because the key quantities of interest for inference in our model are the factor loading matrix , latent factors , and the prediction of gene expression, rather than DGP hyperparameters . Therefore, we simply want a reasonably good estimate of to proceed, without expending excessive computation time.

3.1.2 Finding the MLE for DGP Hyperparameters via an MCEM Framework

To derive , we first note, with denoting probability density function, the factorisation

| (3) | ||||

which highlights that the marginal likelihood function with respect to for our proposed model in Section 2.3 requires high-dimensional integration.

To deal with the integration, one strategy is the Expectation-Maximization (EM) algorithm (Dempster et al.,, 1977), in which we view as hidden variables and then iteratively construct a series of estimates , , that converges to (Wu,, 1983) by alternating between E-steps and M-steps. At the th iteration, the E-step requires evaluation of the “Q-function”, which is the conditional expectation of the log-likelihood of the complete data given the observed data and the previously iterated value .

| (4) |

In the M-step, this expectation is maximized to obtain the updated parameter EM keeps updating parameters in this way until the pre-specified stopping condition is met.

As for many complex models, the analytic form of the conditional expectation in Equation 4 is unavailable. To address this issue, a Monte Carlo version of EM (MCEM) has been developed (Wei and Tanner,, 1990; Levine and Casella,, 2001), which uses Monte Carlo samples to approximate the exact expectation. Casella, (2001) showed that MCEM produces consistent estimates of posterior distributions and asymptotically valid confidence sets.

Suppose that at the th iteration (), we have samples drawn from the posterior distribution using an MCMC sampler (see Section 3.2), then Equation 4 can be approximated as,

| (5) | ||||

The M-Step maximizes Equation 5 with respect to , but after applying the factorisation in Equation 3, the only term depending on is . This means the Q-function can be simplified to

| (6) | ||||

As a result, the task has now been reduced to finding that can maximize the likelihood function of , given that each follows a DGP distribution determined by . Although gene expressions are measured at irregular time points for different individuals, samples of latent factors are available at common times ; therefore enabling the use of GPFDA to estimate the MLE.

The MCEM framework described above is summarized in Algorithm 1. To provide good initial values for MCEM, we implemented a two-step approach using available software (see Supplementary B for details). Implementation challenges of MCEM are rooted in one common consideration: the computational cost of the algorithm. We discuss these challenges, including the choice of MCMC sample size and the stopping condition, in Supplementary A.4 and Supplementary A.5, respectively.

3.2 Gibbs sampler for other variables under fixed GP estimates

To acquire samples for from the posterior distribution under fixed DGP estimates , we use a Gibbs sampler since full conditionals for variables in are analytically available. We summarise the high-level approach here; details are available in Supplementary A.1.

For the key variables , , and , we use blocked Gibbs to improve mixing. To block-update the th row of the binary matrix , we compute the posterior probability under possible values of , then sample with corresponding probabilities. To update the th row of the regression coefficient matrix , , we draw from a MVN distribution. When updating , the vectorized form of the th individual’s factor scores, we factorise , where denotes the remaining parameters excluding , according to the partition . The first factor follows a MVN distribution depending on measured gene expression. The second factor also follows a MVN distribution according to standard properties of the DGP model. Our sampler samples from these two factors in turn.

3.3 Identifiability challenges

A challenge of factor analysis is identifiability, and we address non-identifiability of two types (see Supplementary A.3 for details). First, for the covariance matrix for latent factors we constrain its main diagonal elements to be (in other words, the variance of each factor at each time point is ). Second, to address sign-permutation identifability of the factor loadings and factor scores , we post-process samples using the R package “factor.switch” (Papastamoulis and Ntzoufras,, 2022).

4 Simulation

To assess the performance of our approach, we simulated gene expression observations from our model as described in Section 4.1, and fitted the proposed and comparator models as described in Section 4.2. Section 4.3 introduces the assessment metrics and Section 4.4 describes the results.

4.1 Simulation setting

To mimic the real data, we chose the sample size . The number of training and test time points was set to and , respectively, for all individuals (i.e. we split the observed data into training and test datasets to assess models’ performance in predicting gene expression). We set the true number of latent factors as .

Since recovering latent factor trajectories is one of our major interests, we considered 4 different mechanisms for generating true latent factors according to a factorial design: the first variable determined whether different factors were actually correlated (“C”) or uncorrelated (“U”), and the second variable determined whether the variability of factors was small (“S”) or large (“L”). The mean value for each factor score was fixed to be , and we generated the covariance matrix for different scenarios in the following way: (1) under scenario “C”, we set the cross-correlations based on the estimated covariance matrix from real data under ; otherwise under scenario “U” the true cross-correlations were set to . (2) under scenario “S”, we set the standard deviation for factors 1-4 to be , , , and , respectively, following the estimated results from real data under ; while under scenario “L”, the standard deviation for each was set to be . Below we use the factors’ generation mechanism to name each scenario. For example, “scenario CS” refers to the case where true factors were correlated (C) and had small (S) variability.

Each factor was assumed to regulate of all genes, and the total number of genes was . Note that we allow for the possibility that one gene may be regulated by more than one factor. If a gene was regulated by an underlying factor, then the corresponding factor loading was generated from a normal distribution ; otherwise the factor loading was set to . Each subject-gene mean was generated from , where ranged between and (to match the real data) and . Finally, observed genes were generated according to Equation 1, , where .

Note that in addition to the aforementioned setting, we also considered more simulation scenarios, to investigate the performance of our approach under different , , and proportion of overlap between factors. Supplementary C.2.2-C.2.5 details results under those scenarios, and below we present results only under the setting described above.

4.2 Analysis methods

For all scenarios, we fit the data using the proposed approach BSFA-DGP under . In addition, we investigate how the mis-specification of impacts model performance using the scenario CS as an example (Supplementary C.2.1). Hyperparameters and other tuning parameters are detailed in Supplementary B.2.

We compared our approach to a traditional approach that models each latent factor independently, specifically assuming an IGP prior for each factor trajectory (other parts of the model remain unchanged). This model specification is referred to as BSFA-IGP. We assessed convergence of the Gibbs samples of the continuous variables from three parallel chains using Rhat (Gelman and Rubin,, 1992). Convergence of the latent factor scores and predictions of gene expression were of particular interest. We used as an empirical cutoff. For factor loadings , which are the product of binary variable and continuous variable , we first summarized the corresponding : if the proportion of exceeded for all chains, then was directly summarized as ; otherwise , and we assessed convergence for these factor loadings using Rhat.

4.3 Performance metrics

We assessed performance according to four aspects: estimation of the correlation structure; prediction of gene expression; recovery of factor trajectories; and estimating factor loadings.

To assess estimation of correlation structure, we compared the final correlation estimate with the truth and additionally, we monitored the change in the correlation estimate throughout the whole iterative process, to assess the speed of convergence of the algorithm.

We used three metrics to assess the performance in predicting gene expressions: mean absolute error (), mean width of the predictive interval (), and proportion of genes within the predictive interval () (see Supplementary A.6 for details).

To assess the performance in recovering factor trajectories underlying gene expression observed in the training data, we first calculate the mean absolute error () for each scenario. In addition, we plot true and estimated factor trajectories for visual comparison. For the convenience of discussing results, we present trajectories for factor 1 of person 1 as an example. This chosen person-factor estimation result is representative of all factors of all people.

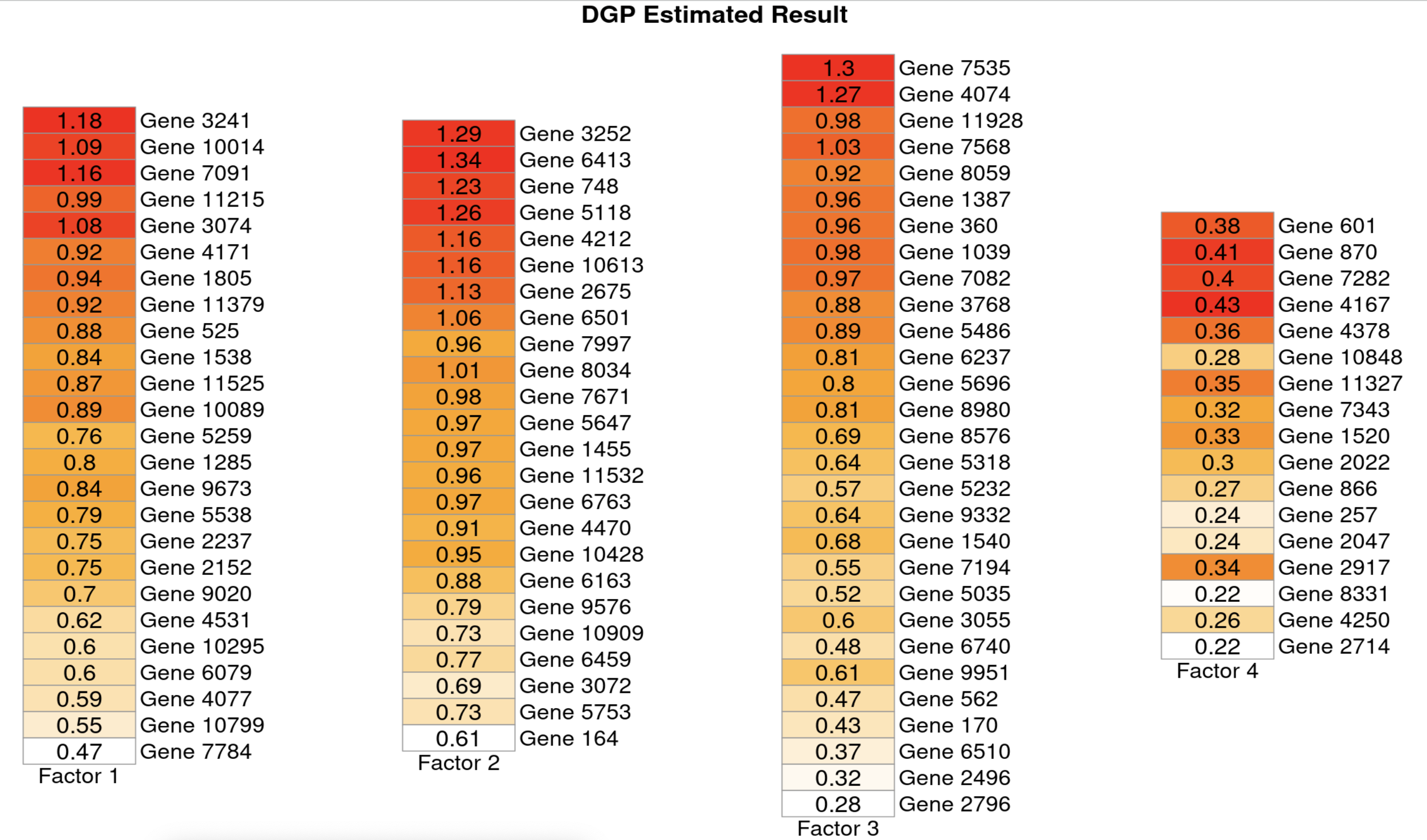

To evaluate the ability to estimate factor loadings, we consider two perspectives. First, for a specific factor, could the model identify all genes that were truly affected by this factor? Second, for a specific gene, could the model identify all factors that regulate this gene? To answer these questions, we calculated credible intervals for s and presented those s of which the interval did not contain in the heatmap, using posterior median estimates.

4.4 Results

When the number of latent factors is correctly specified, our model returns satisfactory cross-correlation estimates under all data generation mechanisms considered (Figure 1). Furthermore, despite the initial (from the two-step approach) being poor, MCEM is able to propose estimates of the cross-correlations that rapidly approximate the truth (Supplementary Figure 2 shows an example under the scenario CS).

For the inference of , , using Gibbs-after-MCEM, Supplementary Table 1 lists the largest Rhat for each variable type, and reflect no apparent concerns over non-convergence. In terms of predicting gene expression, similar is observed for both models. However, the DGP specification always leads to smaller and narrower , even when the factors are uncorrelated in truth. This indicates more accuracy and less uncertainty in prediction (Table 1).

Summary results in Supplementary Table 2 suggest that estimating factors is generally easier when the true variability of factors is larger. A closer inspection of factor trajectories delineated in Supplementary Figure 3 further confirms this: under scenarios CL and UL, both DGP and IGP models are able to recover the shape of factor trajectories very well. This could be explained by the relatively strong expression of latent factors. In contrast, in the case of CS and US, where expression is relatively weak, recovery of details of factor shapes is harder: if factors are not correlated at all (scenario US), both DGP and IGP fail to recover the details at the nd and th time point, though the overall shape is still close to the truth. However, if factors are truly correlated (scenario CS), DGP is able to recover trajectories very well, due to its ability to borrow information from other related factors.

With regard to factor loading estimation, Figure 2 shows results under the scenario CS, and Supplementary Figures 4,5 and 6 show results for the remaining scenarios. Both DGP and IGP perform well at identifying the correct genes for a given factor under all scenarios. Both models also perform well at identifying the correct factors for a given gene under scenarios CL, UL and US, but under the scenario CS we observe that DGP still performs well whereas IGP performs less well. As can be seen from Figure 2, IGP specification leads to the result that genes estimated to be significantly loaded on the first and second factor also significantly load on the fourth factor. One possible explanation for this is that, if in truth, factors have strong signals and/or are not correlated at all (as in scenarios CL, UL and US), then it is relatively easy to distinguish contributions from different factors. In contrast, under the scenario CS where factors actually have weak signals and are highly-correlated (true correlation between factor 1 and 4 is , and correlation between factor 2 and 4 is , as can be found from Figure 1), it is more difficult. DGP specification explicitly takes the correlation among factors into consideration, allowing improved estimation.

5 Data application

We ran iterations for the final Gibbs sampler, with a burn-in proportion and retained only every th iteration. We also compared our results with two alternative models, both of which assume independence between factors: the BSFA-IGP model, and the previous model in Chen et al., (2011), which adopted spline functions to model each factor trajectory independently.

5.1 Statistical results

We fitted the model with factors. We display results under after a comprehensive consideration of biological interpretation, computational cost and statistical prediction performance. Biologically, we found that biological pathways (immune response pathway and ribosome pathway; see Section 5.2 for details) were consistently identified when ranged from to while led to only pathway (immune response pathway) identified. Computationally, once was larger than , our algorithm became slower as the parameter space was very large. Statistically, among the remaining models, showed the best prediction performance (see Supplementary Table 6).

In terms of the prediction performance, similar is observed under BSFA-DGP () and BSFA-IGP (); whereas and are both smaller under BSFA-DGP (; ) than BSFA-IGP (; ). This again demonstrates the advantage of reducing prediction error and uncertainty if cross-correlations among factors are taken into consideration.

Estimated factor trajectories using BSFA-DGP are displayed in Figure 3. Among all, factor is able to distinguish symptomatic people from asymptomatic people most clearly, and we find that its shape is largely similar to the “principal factor” identified in Chen et al., (2011). Despite the similarity, it is noteworthy that the trajectory of our factor is actually more individualized and informative than their principal factor (see Supplementary D.2 for discussion in detail). Compared with BSFA-IGP, our model BSFA-DGP reduces the variability within subject-specific trajectory, and consequently it slightly increases the difference between the symptomatic and asymptomatic. Although results look similar at first glance (Supplementary Figure 17), a more careful inspection reveals that BSFA-DGP has a shrinkage effect compared to BSFA-IGP. Take factor 1 as an example (similar observations for other factors). For the blue line at the bottom: at hours, it remains around under BSFA-DGP while reaches as low as under BSFA-IGP, making it harder to differentiate this symptomatic subject from asymptomatic subjects with red lines.

5.2 Biological intepretation

To identify the biological counterpart (i.e., pathway) of the statistical factor, we used the KEGG Pathway analysis of the online bioinformatics platform DAVID. We uploaded the total genes as the “Background” and the top genes loaded on each factor as the “Gene List”. This showed that factor 1 can be interpreted as ‘immune response pathway’ and factor 3 as ‘ribosome pathway’, while other factors have no known biological meaning (see Supplementary D.4). In addition, our MCEM algorithm estimates the magnitude of the cross-correlation coefficient to be between factors 1 and 3. This statistically moderate but biologically noteworthy correlation between these two pathways is consistent with existing biological knowledge (e.g. see Bianco and Mohr,, 2019, which concludes that ribosome biogenesis restricts innate immune responses to virus infection). This interrelated relationship would be missed by an approach that assumed independence between pathways, such as BSFA-IGP.

6 Discussion

In this paper we propose a BSFA-DGP model, which is the first attempt to relax the classical assumption of independent factors for longitudinal data. By borrowing information from correlated factor trajectories, this model has demonstrated advantages in estimating factor loadings, recovering factor trajectories and predicting gene expression when comparing with other models.

We also develop an MCEM algorithm for the inference of the model; the main motivation for adopting this algorithm is that it can make full use of the existing package to estimate DGP hyperparameters. In the M-step of MCEM, GPFDA can be directly implemented to find the maximizer of the Q-function; thus we can exploit the existing optimization algorithm. In addition to its implementation convenience, the modular structure of the MCEM framework makes it generalizable to other complex models involving the DGP model component. For example, when using a DGP model for the latent continuous variable introduced to model multivariate dependent, non-continuous data (such as binary or count data), Sofro et al., (2017) used Laplace Approximation; the MCEM framework we develop would be an alternative inference approach in this case.

Computation time of our approach is largely dependent on GPFDA, which returns hyperparameter estimates for the DGP to maximize the exact likelihood of observing the inputs . The computational complexity of GPFDA at the th iteration of MCEM is , indicating the computational cost scales linearly with the sample size and the current number of MCMC samples , quadratically with the number of latent factors and the number of observed time points . MCEM scales well with , as it is not involved in the expression of complexity at all; it took around hours for the real data in Section 5 on a standard laptop (Quad-Core Intel Core i5). Potential approaches that can further improve the computational performance include a model approximation method proposed in Álvarez et al., (2010), or stochastic variational inference, as discussed in Hensman et al., (2013).

Throughout this paper, the number of latent factors was fixed and needed to be pre-specified. Though results of our model could infer redundant factors (as demonstrated in the simulation study), an automatic approach to infer this number from the data may be preferable in some contexts. A potential solution would be to introduce the Indian Buffet Process as a prior distribution over equivalence classes of infinite-dimensional binary matrices (Knowles and Ghahramani,, 2011). In addition, although our empirical evidence suggests that the constraints we adopt are sufficient to avoid non-identifiability, theoretical investigation would be helpful in future work. Extensions of our methodology to handle non-normal data, limits of detection, covariates and batch effects would also be interesting avenues for future research. Furthermore, in some settings it may be helpful to explicitly incorporate the clinical outcome into the model, which could be another direction for future research.

7 Software

The R code used for implementing the proposed BSFA-DGP model is available as an R package, DGP4LCF, on Github: https://github.com/jcai-1122/DGP4LCF. The package contains vignettes, which illustrate the usage of the functions within the package by applying them to analyze simulated dataset. The release used in this paper is available at https://doi.org/10.5281/zenodo.8108150.

Acknowledgments

This work is supported through the United Kingdom Medical Research Council programme grants MC_UU_00002/2, MC_UU_00002/20, MC_UU_00040/02 and MC_UU_00040/04. The authors are grateful to Oscar Rueda for the helpful discussion on the bioinformatics side of the project.

Conflict of Interest: None declared.

References

- Álvarez et al., (2010) Álvarez, M., Luengo, D., Titsias, M., and Lawrence, N. D. (2010). Efficient multioutput gaussian processes through variational inducing kernels. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, pages 25–32. JMLR Workshop and Conference Proceedings.

- Alvarez and Lawrence, (2011) Alvarez, M. A. and Lawrence, N. D. (2011). Computationally efficient convolved multiple output Gaussian processes. The Journal of Machine Learning Research, 12:1459–1500.

- Bekker and ten Berge, (1997) Bekker, P. A. and ten Berge, J. M. (1997). Generic global indentification in factor analysis. Linear Algebra and its Applications, 264:255–263.

- Bhattacharya and Dunson, (2011) Bhattacharya, A. and Dunson, D. B. (2011). Sparse Bayesian infinite factor models. Biometrika, 98(2):291–306.

- Bianco and Mohr, (2019) Bianco, C. and Mohr, I. (2019). Ribosome biogenesis restricts innate immune responses to virus infection and dna. Elife, 8:e49551.

- Bonilla et al., (2007) Bonilla, E. V., Chai, K., and Williams, C. (2007). Multi-task Gaussian process prediction. Advances in Neural Information Processing Systems, 20.

- Booth and Hobert, (1999) Booth, J. G. and Hobert, J. P. (1999). Maximizing generalized linear mixed model likelihoods with an automated Monte Carlo EM algorithm. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 61(1):265–285.

- (8) Boyle, P. and Frean, M. (2005a). Dependent Gaussian processes. Advances in Neural Information Processing Systems, 17:217–224.

- (9) Boyle, P. and Frean, M. (2005b). Multiple output Gaussian process regression.

- Caffo et al., (2005) Caffo, B. S., Jank, W., and Jones, G. L. (2005). Ascent-based Monte Carlo expectation–maximization. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 67(2):235–251.

- Carvalho et al., (2008) Carvalho, C. M., Chang, J., Lucas, J. E., Nevins, J. R., Wang, Q., and West, M. (2008). High-dimensional sparse factor modeling: applications in gene expression genomics. Journal of the American Statistical Association, 103(484):1438–1456.

- Casella, (2001) Casella, G. (2001). Empirical Bayes Gibbs sampling. Biostatistics, 2(4):485–500.

- Chen et al., (2011) Chen, M., Zaas, A., Woods, C., Ginsburg, G. S., Lucas, J., Dunson, D., et al. (2011). Predicting viral infection from high-dimensional biomarker trajectories. Journal of the American Statistical Association, 106(496):1259–1279.

- Cheng et al., (2020) Cheng, L.-F., Dumitrascu, B., Darnell, G., Chivers, C., Draugelis, M., Li, K., et al. (2020). Sparse multi-output gaussian processes for online medical time series prediction. BMC medical informatics and decision making, 20(1):1–23.

- Conti et al., (2014) Conti, G., Frühwirth-Schnatter, S., Heckman, J. J., and Piatek, R. (2014). Bayesian exploratory factor analysis. Journal of econometrics, 183(1):31–57.

- Costello and Osborne, (2019) Costello, A. B. and Osborne, J. (2019). Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical assessment, research, and evaluation, 10(1):7.

- Dempster et al., (1977) Dempster, A. P., Laird, N. M., and Rubin, D. B. (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39(1):1–22.

- Dey et al., (2020) Dey, D., Datta, A., and Banerjee, S. (2020). Graphical Gaussian process models for highly multivariate spatial data. arXiv preprint arXiv:2009.04837.

- Gelman and Rubin, (1992) Gelman, A. and Rubin, D. B. (1992). Inference from iterative simulation using multiple sequences. Statistical Science, 7(4):457–472.

- Guan and Haran, (2019) Guan, Y. and Haran, M. (2019). Fast expectation-maximization algorithms for spatial generalized linear mixed models. arXiv preprint arXiv:1909.05440.

- Hensman et al., (2013) Hensman, J., Fusi, N., and Lawrence, N. D. (2013). Gaussian processes for big data. arXiv preprint arXiv:1309.6835.

- Hsu and Yang, (2012) Hsu, C.-L. and Yang, U.-C. (2012). Discovering pathway cross-talks based on functional relations between pathways. BMC Genomics, 13(7):1–15.

- Jones, (2004) Jones, G. L. (2004). On the Markov chain central limit theorem. Probability Surveys, 1:299–320.

- Kim et al., (2021) Kim, S. Y., Choe, E. K., Shivakumar, M., Kim, D., and Sohn, K.-A. (2021). Multi-layered network-based pathway activity inference using directed random walks: application to predicting clinical outcomes in urologic cancer. Bioinformatics, 37(16):2405–2413.

- Knowles and Ghahramani, (2011) Knowles, D. and Ghahramani, Z. (2011). Nonparametric Bayesian sparse factor models with application to gene expression modeling. The Annals of Applied Statistics, 5(2B):1534 – 1552.

- Konzen et al., (2021) Konzen, E., Cheng, Y., and Shi, J. Q. (2021). Gaussian process for functional data analysis: The GPFDA package for R. arXiv preprint arXiv:2102.00249.

- Ledermann, (1937) Ledermann, W. (1937). On the rank of the reduced correlational matrix in multiple-factor analysis. Psychometrika, 2(2):85–93.

- Levine and Casella, (2001) Levine, R. A. and Casella, G. (2001). Implementations of the Monte Carlo EM algorithm. Journal of Computational and Graphical Statistics, 10(3):422–439.

- Levine and Fan, (2004) Levine, R. A. and Fan, J. (2004). An automated (Markov chain) Monte Carlo EM algorithm. Journal of Statistical Computation and Simulation, 74(5):349–360.

- Li et al., (2020) Li, Y., Wen, Z., Hau, K.-T., Yuan, K.-H., and Peng, Y. (2020). Effects of cross-loadings on determining the number of factors to retain. Structural Equation Modeling: A Multidisciplinary Journal, 27(6):841–863.

- Liu et al., (2018) Liu, H., Cai, J., and Ong, Y.-S. (2018). Remarks on multi-output Gaussian process regression. Knowledge-Based Systems, 144:102–121.

- Lunn et al., (2013) Lunn, D., Jackson, C., Best, N., Thomas, A., and Spiegelhalter, D. (2013). The BUGS book. A Practical Introduction to Bayesian Analysis, Chapman Hall, London.

- Neath, (2013) Neath, R. C. (2013). On convergence properties of the Monte Carlo EM algorithm. In Advances in modern statistical theory and applications: a Festschrift in Honor of Morris L. Eaton, pages 43–62. Institute of Mathematical Statistics.

- Papastamoulis and Ntzoufras, (2022) Papastamoulis, P. and Ntzoufras, I. (2022). On the identifiability of Bayesian factor analytic models. Statistics and Computing, 32(2):1–29.

- Penny and Roberts, (2002) Penny, W. and Roberts, S. (2002). Bayesian multivariate autoregressive models with structured priors. IEE Proceedings-Vision, Image and Signal Processing, 149(1):33–41.

- Ramsay and Hooker, (2019) Ramsay, J. and Hooker, G. (2019). Dynamic data analysis: modeling data with differential equations. Springer.

- Ramsay et al., (2009) Ramsay, J., Hooker, G., and Graves, S. (2009). Introduction to functional data analysis. In Functional data analysis with R and MATLAB, pages 1–19. Springer.

- Richardson et al., (2016) Richardson, S., Tseng, G. C., and Sun, W. (2016). Statistical methods in integrative genomics. Annual review of statistics and its application, 3:181.

- Shi and Choi, (2011) Shi, J. Q. and Choi, T. (2011). Gaussian process regression analysis for functional data. CRC Press.

- Sofro et al., (2017) Sofro, A., Shi, J. Q., and Cao, C. (2017). Regression analysis for multivariate dependent count data using convolved Gaussian processes. arXiv preprint arXiv:1710.01523.

- Temate-Tiagueu et al., (2016) Temate-Tiagueu, Y., Seesi, S. A., Mathew, M., Mandric, I., Rodriguez, A., Bean, K., et al. (2016). Inferring metabolic pathway activity levels from RNA-Seq data. BMC Genomics, 17(5):493–503.

- Wei and Tanner, (1990) Wei, G. C. and Tanner, M. A. (1990). A Monte Carlo implementation of the EM algorithm and the poor man’s data augmentation algorithms. Journal of the American Statistical Association, 85(411):699–704.

- Wu, (1983) Wu, C. J. (1983). On the convergence properties of the EM algorithm. The Annals of Statistics, pages 95–103.

- Ximénez et al., (2022) Ximénez, C., Revuelta, J., and Castañeda, R. (2022). What are the consequences of ignoring cross-loadings in bifactor models? a simulation study assessing parameter recovery and sensitivity of goodness-of-fit indices. Frontiers in Psychology, 13:923877.

- Zaas et al., (2009) Zaas, A. K., Chen, M., Varkey, J., Veldman, T., Hero III, A. O., Lucas, J., et al. (2009). Gene expression signatures diagnose influenza and other symptomatic respiratory viral infections in humans. Cell Host & Microbe, 6(3):207–217.

- Zhao et al., (2016) Zhao, S., Gao, C., Mukherjee, S., and Engelhardt, B. E. (2016). Bayesian group factor analysis with structured sparsity. Journal of Machine Learning Research, 17(196):1–47.

-

•

Center to obtain (as defined in Section 4.2), which is input to BFRM for .

-

•

Input to GPFDA for , and construct using and .

-

•

Initialize the starting sample size ; the counter , which counts the total number of sample size increase, and specify its upper limit as , as described in Supplementary A.5.

-

2.1

Draw samples of from the Gibbs sampler in Section 3.2, where is constructed by and .

-

2.2

Retain samples by discarding burn-in and thinning.

-

2.3

Align post-processed samples using R package “factor.switch”.

-

2.4

Input aligned samples to GPFDA to obtain .

-

2.5

Calculate the lower bound proposed by Caffo et al., (2005) using , and (see Supplementary A.4.2 for details).

| Factor Generation Mechanism | DGP, | IGP, | |||||

| Correlation | Variability | ||||||

| Correlated | Large | 1.29 | 6.52 | 0.95 | 1.42 | 7.85 | 0.95 |

| Small | 0.53 | 2.66 | 0.95 | 0.55 | 2.76 | 0.95 | |

| Uncorrelated | Large | 1.44 | 7.28 | 0.95 | 1.53 | 7.70 | 0.95 |

| Small | 0.55 | 2.78 | 0.95 | 0.56 | 2.79 | 0.95 | |

Supplementary Materials

A. Mathematical derivations

A.1 Full conditionals of the Gibbs sampler for the proposed model

Throughout the following derivation, “” denotes element-wise multiplication, “” denotes observed data and all parameters in the model other than the parameter under derivation, “” denotes the sum of squares of each element of the vector , “” denotes a diagonal matrix with the vector as its main diagonal elements, “” denotes that follows a multivariate normal distribution with mean and variance , and similar interpretations apply to other distributions. “” denotes the transpose of the vector or matrix , and “pos” is short for ‘posterior probability’.

-

•

Full conditional for the latent factors

We first sample for :

with

Then we sample for from , a MVN distribution due to the property of the DGP model (Shi and Choi,, 2011).

In the above equations:

-

–

is the covariance matrix of factor scores at full time , and is a sub-matrix of it (at subject-specific time points);

-

–

is constructed using components of the factor loading matrix : , where ;

-

–

, where represents a -dimensional row vector consisting of the scalar .

Note that when coding the algorithm, there is no need to directly create the diagonal matrix as the memory will be exhausted. To calculate the term , we can use the property of multiplication of a diagonal matrix: post-multiplying a diagonal matrix is equivalent to multiplying each column of the first matrix by corresponding elements in the diagonal matrix.

-

–

-

•

Full conditional for the binary matrix

Let denote the th row of the matrix ; then,We calculate the posterior probability under possible values of based on the above formula, then sample with corresponding probability.

-

•

Full conditional for the regression coefficient matrix

Let denote the th row of the matrix ; then,where

and .

-

•

Full conditional for the intercept

where

-

•

Full conditional for

-

•

Full conditional for

-

•

Full conditional for

-

•

Full conditional for

-

•

Full conditional for predictions of gene expression (only implemented when assessing models’ prediction performance on the test dataset)

Suppose that represent predicted gene expression and factor expression of the th individual at new time points, respectively. The posterior predictive distribution under MCEM-algorithm-returned can be expressed as

where the first term of the integrand is a MVN because of the assumed factor model, and the second term is also a MVN because of the assumed DGP model on latent factor trajectories (Shi and Choi,, 2011). Therefore, once a sample of parameters is generated, the th sample of can be generated from , then the th sample of can be sampled from .

A.2 Kernel convolution framework to model dependent Gaussian processes

A.2.1 Illustration using two processes

The KCF constructs correlated processes by introducing common “base processes”. Take two processes and as an example. KCF constructs them as,

where are residual errors from , and are processes constructed in the following way, illustrated in Supplementary Figure 1 below.

First, three independent, zero-mean base processes , and are introduced, which are all Gaussian white noise processes. The first process is shared by both and , thereby inducing dependence between them. Whereas and are specific to and , respectively; they are responsible for capturing the unique aspects of each process.

Second, Gaussian kernel functions are applied to convolve the base processes: with applied to the shared process and to the output-specific processes and ,

where the convolution operator is defined as . All kernel functions take the form , where and are positive parameters that are specific to each kernel function.

A.2.2 Specific form of covariance function

Under the kernel convolution framework, the covariance function between the time and within a single process , denoted as , can be decomposed as,

where are time indexes, , and if , otherwise .

The covariance function between the time of process and of process , denoted as , can be expressed as,

where .

Note that the original paper (Boyle and Frean, 2005b, ) provides derivation results under more generalized cases. Let denote the dimension of the input variable , and the number of shared base process . In our proposed approach, and . In Boyle and Frean, 2005b , , can be arbitrary positive integers.

A.3 Identifiability issue of the model

Similar to the classical factor analysis, we have identifiability issue caused by scaling and label switching. Differently, we do not have rotational invariance, due to the assumption of correlated factor trajectories (see Zhao et al., (2016) for a detailed description of identifiability issue caused by rotation, scaling and label switching under the classical factor analysis). Instead, we have a new source of non-identifiability, which is the covariance matrix itself. Below we explain specific issues in our model using mathematical expression and describe our corresponding solutions.

To facilitate illustrating the identifiability issue, we first re-express the proposed model in Section 2.3 as,

| (7) |

where , , and are vectorized from matrices , , and , respectively. To make the above equations hold, and are constructed using components of and , respectively. Specific forms are available in Supplementary Section A.1.

The distribution of after integrating out , is

| (8) |

where is a sub-matrix of that characterizes the covariance structure for .

First, there is identifiability issue with the covariance matrix . This issue arises from the invariance of the covariance in Equation 8. The uniqueness of has been ensured in previous research (Ledermann,, 1937; Bekker and ten Berge,, 1997; Conti et al.,, 2014; Papastamoulis and Ntzoufras,, 2022); given its identifiability, we are concerned with identifiability of . Non-identifiability is present because for any non-singular transformation matrix , the expression are equal under these two sets of estimators: the first estimator is and the second estimator is .

To address the issue, we place a constraint on that requires its main diagonal element to be . In other words, the covariance matrix of latent factors is forced to be a correlation matrix by assuming the variance of factor to be . This restriction has also been used in Conti et al., (2014), with multiple purposes: first, it ensures the uniqueness of ; in turn, it helps set the scale of and consequently helps set the scale of . In addition, note that the rotational invariance discussed in Zhao et al., (2016) does not exist here, as .

Second, even under the above constraint, there is still identifiability issue with . This is because might still equal when is a specific signed matrix (diagonal matrix with diagonal elements being or ) or permutation matrix (under this case, the identifiability problem is also known as label switching), as explained in Papastamoulis and Ntzoufras, (2022). The specific form of the transformation matrix leading to such invariance depends on the real correlation structure of the data.

To deal with this unidentifiability caused by signed permutation, a common approach is to post-process samples (Zhao et al.,, 2016). Here, we use the R package “factor.switch” developed in Papastamoulis and Ntzoufras, (2022) to align samples across Gibbs iterations. Alignment of should be completed before inputting factor scores to GPFDA for estimating DGP parameters within the MCEM algorithm, and also before the final posterior summary of using the Gibbs-After-MCEM samples. For the latter Gibbs sampler, we ran three chains in parallel; therefore alignment should be firstly carried out within each chain, then across chains.

A.4 Choosing the Gibbs sample size within the MCEM algorithm

One challenge with implementing the MCEM algorithm is the choice of the Gibbs sample size. First and foremost, the Gibbs-within-MCEM sample size determines the computational cost for both the Gibbs sampler and EM. Choosing too small, while saving computational expense for the Gibbs sampler, results in a less precise approximation of the Q-function, and thus leads to significantly slower convergence of the EM algorithm. In contrast, choosing too large, while providing an accurate approximation of the Q-function and faster EM convergence, results in the time taken for generating the required Gibbs samples becoming prohibitive. Consequently, the choice of must trade-off the accuracy of the Q-function with the computational cost of generating Gibbs-within-MCEM samples. We provided a literature review on this topic in Supplementary Section A.4.1 and adapted the approach proposed by Caffo et al., (2005) to our model in Supplementary Section A.4.2. This approach automatically determines when to increase dependent on whether the ascent property of the marginal likelihood under EM is preserved or not (Wu,, 1983).

A.4.1 Literature review

A thorough review of strategies for choosing can be found in Levine and Fan, (2004). We focus on approaches that automatically adjust the sample size to avoid tedious manual tuning; Neath, (2013) provided a review of this class of approaches. Briefly, there are primarily two methods with the main difference between them being the criterion to increase the sample size. The first approach (Booth and Hobert,, 1999; Levine and Casella,, 2001; Levine and Fan,, 2004) achieves automatic tuning by monitoring the Monte Carlo error associated with each individual parameter, which is the approximation error incurred when using Monte Carlo samples to approximate the exact expectation. Additional samples are needed if the Monte Carlo error for any of the parameters is deemed too large. However, this approach may be difficult to implement here because our experiments with GPFDA revealed that individual DGP parameters are not identifiable: for the same input data, different runs of GPFDA could return differing individual estimates yet still ensure similar estimates of the covariance matrix (therefore similar marginal likelihoods).

An alternative, proposed by Caffo et al., (2005), considers increasing the sample size dependent on whether the ascent property of the marginal likelihood under EM is preserved or not (Wu,, 1983). For exact EM, where the expectation can be calculated precisely, the likelihood function is non-decreasing as the algorithm progresses. However, under MCEM, where the E-step is estimated with Monte Carlo samples, it is possible for the likelihood to decrease, due to approximation error. The algorithm by Caffo et al., (2005) ensures that the likelihood still increases with a high probability, as the algorithm iterates, by introducing a mechanism for rejection of proposed parameter estimates. Specifically, they use the Q-function as a proxy for the marginal likelihood. An updated will be accepted only when it increases the Q-function compared to the previous ; otherwise, the algorithm will increase the sample size and will propose a new using the larger sample.

A.4.2 Adaptation of Caffo’s approach to our model

To apply the method of Caffo et al., (2005) to our model, we begin by writing out the exact Q-function after the th iteration of EM. Following Equations 3.5 and 3.8 of the main manuscript, . Thus, the change in the value of the Q-function after obtaining an updated compared to the current can be represented as,

where , and the expectation is with respect to . Under MCEM, we approximate this change using the approximate Q-function in Equation 3.7,

A generalized version of the Central Limit Theorem (CLT) (Jones,, 2004) shows that converges, in distribution, to N() as , where can be estimated using either the sample variance of when the samples are independent, or the batch means approach (Lunn et al.,, 2013; Guan and Haran,, 2019) when the samples are dependent (as is the case here, because they are obtained using an MCMC sampler). This implies that,

where is the estimate of , is the upper quantile of the standard normal distribution. is called the “Lower Bound” (LB) for (Caffo et al.,, 2005) because there is a high chance that is larger than this estimator if we choose to be small. When LB is positive, it is highly likely that is also positive. The automatic updating rule for the sample size is based on LB. In the th iteration, if LB is positive, then we accept the updated and keep the current sample size ; otherwise, we reject and continue generating additional samples under for updating again. Caffo et al., (2005) suggests a geometric rate of increase for the sample size, by drawing additional samples for some fixed .

A.5 Specifying the stopping condition

The other challenge when implementing the MCEM algorithm is to specify a stopping criterion. We propose to stop the algorithm when the total number of sample size increase exceeds a pre-specified value ; by doing so, the number of Gibbs samples and samples input to GPFDA is bounded, therefore ensuring the MCEM algorithm can return results within reasonable time.

Finally, post-processing Gibbs samples (including burn-in and thinning) before inputting them to GPFDA can help drastically reduce the computational cost of GPFDA while still preserving most information in the samples.

A.6 Metrics used for assessing the performance of models

We assessed the performance of predicting gene expressions using three metrics: mean absolute error (), mean width of the predictive interval (), and proportion of genes within the predictive interval ().

where , , , denotes the number of subjects, biomarkers, training time points and test time points, respectively; denotes the true value of , and denotes , , and quantiles of the posterior samples, respectively; and if is within the predictive interval, otherwise it is .

Similarly, we assessed the overall performance of estimating factor trajectories using mean absolute error ,

where , , denotes the number of subjects, factors, and training time points, respectively; is the true value of , and is the quantile of the posterior samples.

B. Implementation details of the proposed approach

B.1 Obtaining good initial values

To provide good initial values for MCEM, we implemented a two-step approach using available software. First, point estimates of latent factor scores were obtained using BFRM. We centered the gene expression within each individual before inputting it into BFRM: the input data consisted of the centered , . We did so because BFRM assumes independent data, therefore the intercept is specific to only a gene and not to a subject; in other words, it cannot estimate subject-gene mean . Second, initial values of GP parameters were obtained by inputting to GPFDA, with either a DGP or IGP specification.

Note that occasionally, the software BFRM may return an extremely bad initial (i.e., far from the truth), which may consequently result in a local optimum during MCEM rather than a global optimum. To ensure a good estimate of DGP hyperparameters is identified, we suggest users fit the model with multiple initials from BFRM and compare prediction performance for model selection.

B.2 Specification of hyperparameters and other tuning parameters

We specified hyperparameters and other tuning parameters as follows; these specifications were used for both the simulation study and the real-data application.

Specifically, we chose for an uninformative Inverse-Gamma prior distribution for the variance terms. We also set and to obtain a sparsity-inducing prior on , as the prior expectation of under this specification is , implying that only of genes are expected to be involved in each pathway. For the pre-specified values in the MCEM algorithm, we set the rate of sample size increase to and the maximum number of increasing the sample size to .

B.3. Summary of the Gibbs-after-MCEM algorithm

-

•

Observed gene expression and time points , for people.

-

•

Prediction time points .

-

•

.

-

•

Number of parallel chains .

-

•

Posterior samples of , including the pathway expression and gene-pathway relationship .

-

•

Posterior samples of predicted gene expression at .

-

•

Construct using , and .

-

•

Specify the Gibbs sample size .

C. Simulation study

C.1 Additional results under the setting in the manuscript

This section presents additional results under the setting described in the manuscript, where the number of subjects , the number of biomarkers , the number of training time points , and the number of latent factors .

| Scenario US | ||

| Model | DGP | IGP |

| Variable | MCMC iteration = | MCMC iteration = |

| Predicted gene expression | 1.01 | 1.01 |

| Factor loadings | 1.02 | 1.02 |

| Latent factors | 1.01 | 1.01 |

| Scenario CS | ||

| Model | DGP | IGP |

| Variable | MCMC iteration = | MCMC iteration = () |

| Predicted gene expression | 1.01 | 1.04 (1.02) |

| Factor loadings | 1.01 | 1.09 (4.37) |

| Latent factors | 1.01 | 1.04 (2.45) |

| Scenario UL | ||

| Model | DGP | IGP |

| Variable | MCMC iteration = () | MCMC iteration = () |

| Predicted gene expression | 1.04 (1.01) | 1.04 (1.01) |

| Factor loadings | 1.04 (1.48) | 1.06 (1.48) |

| Latent factors | 1.05 (1.40) | 1.06 (1.40) |

| Scenario CL | ||

| Model | DGP | IGP |

| Variable | MCMC iteration = () | MCMC iteration = () |

| Predicted gene expression | 1.04 (1.01) | 1.04 (1.01) |

| Factor loadings | 1.02 (1.29) | 1.06 (1.45) |

| Latent factors | 1.04 (1.25) | 1.06 (1.37) |

| Factor Generation Mechanism | |||

| Correlation | Variability | DGP | IGP |

| Correlated | Large | 0.15 | 0.16 |

| Small | 0.23 | 0.41 | |

| Uncorrelated | Large | 0.16 | 0.17 |

| Small | 0.23 | 0.23 | |

C.2 Further simulation settings under the scenario CS

This section discusses the performance of our model under more simulation settings. For the ease of illustration, these additional settings were considered under the scenario CS (true factors are correlated and have small variability) only.

C.2.1 Mis-specification of the number of latent factors

We investigated how the mis-specification of impacts model performance using the scenario CS with , , as an example. We tried different number of latent factors , ranging from to .

Supplementary Table 3 shows that correct specification of leads to the smallest and narrowest .

| 2 | 0.54 | 2.75 |

| 3 | 0.55 | 2.82 |

| 5 | 0.55 | 2.76 |

| 6 | 0.54 | 2.78 |

| 7 | 0.54 | 2.70 |

| 8 | 0.53 | 2.71 |

Another important metric is factor loading estimates. We have found that our results can always estimate the truth reasonably well regardless of the mis-specification. If is mis-specified larger than truth, then in practice we have found that the model is able to identify redundant factors (i.e., factors without any non-zero loadings); at the same time, all true factors can be recovered well. If is mis-specified smaller than truth, then our model can identify a subset of true factors.

When we specified (i.e. larger than the true ), the additional or redundant factors were clearly identified. Taking as an example for illustration. In the factor loading estimates displayed in Supplementary Figure 7, there is one factor with no non-zero loadings and the remaining factors are close to the truth. Results under are similar, as displayed in Supplementary Figures 8, 9, 10, respectively. These observations indicate that our model is able to identify all redundant factors via estimated factor loadings.

On the other hand, when is smaller than the truth, Supplementary Figure 11 shows that the genes loaded on the ‘missing factor’ (true factor 2) loaded on other factors (true factor 1) and the remaining factors approximately match the true loadings.

C.2.2 Impact of the number of biomarkers

We investigated the performance of our approach under a larger number of genes, with . Other settings are: sample size and number of training time points .

True factor loadings are displayed in Figure 12, and estimated results under correct specification of and incorrect specification are displayed in Figure 13 and Figure 14, respectively. Our approach is always able to recover the true factor loadings reasonably well; and when the specified number is unnecessarily large (), our model can identify redundant factors (i.e., corresponding to columns with all loadings as zero).

Note that the display of results under is different from that under , as the previous way (under ) of displaying results would lead to small texts hard to read. Therefore, we changed the display format: for the truth, we only display non-zero loadings for each factor; then we followed the referee’s suggestion to display the estimated result following the gene ordering of the ground truth. Redundant factors under mis-specifiction of are not displayed, as corresponding columns do not have non-zero loadings.

C.2.3 Impact of the sample size

We investigated the impact of the number of subjects on the result. We fix , and , while we vary .

We find that as the sample size increases, the performance of our model will improve. Supplementary Table 4 shows comparison in biomarker prediction.

| 10 | 0.54 | 2.83 |

| 17 | 0.53 | 2.66 |

| 20 | 0.50 | 2.61 |

| 40 | 0.49 | 2.46 |

C.2.4 Impact of the number of time points

We investigated the impact of the number of time points on the result. We fix , and , while we vary . We find that as increases, the performance of our model will improve. Supplementary Table 5 shows comparison in biomarker prediction.

| 4 | 0.59 | 2.89 |

| 8 | 0.53 | 2.66 |

| 16 | 0.50 | 2.45 |

| 25 | 0.45 | 2.21 |

C.2.5 Impact of the proportion of overlap between factors

We investigated the impact of greater overlap across factors on the model performance. In literature, it is called ‘cross-loading’ if an observed variable is significantly loaded on more than one latent factor (Ximénez et al.,, 2022; Li et al.,, 2020). The simulation study in the manuscript demonstrated good performance in recovering the factor loading when the proportion of genes with cross-loading is ( out of the significant genes are loaded on more than factor).

This additional simulation assesses the performance of our model under a larger cross-loading proportion ( out of the significant genes are loaded on more than factor).

Our model continued to perform well at estimating the factor loadings (Supplementary Figure 15), though a longer chain (corresponding to a more expensive computation cost) was required due to the more complex factor structure. In the original setting, only iterations were required while this number was not enough for the new setting with a greater overlap (results displayed in the third column of Supplementary Figure 15); a longer chain such as iterations were required (results displayed in the second column of Supplementary Figure 15).

It is worth highlighting that for each gene that is loaded on more than one factor, the factor with the largest loading can always be identified by our method. Take gene 98 as an example. The true loadings are non-zero on all factors (with the largest loading on factor 3). Although our model incorrectly estimates zero loading on factor 4, it is able to correctly estimate non-zero loading on factor 3.

D. Real Data

D.1 Prediction performance under different numbers of factors

| Specification of | ||

| 3 | 0.215 | 1.168 |

| 4 | 0.213 | 1.143 |

D.2 Comparison between our model and Chen et al., (2011)’s model

To facilitate comparison between our factor 1 and their ‘principal factor’, Supplementary Figure 16 displays factor trajectories of all people in the same format as Figure 4 in Chen et al., (2011). For symptomatic people, both factors display an increase after the inoculation (time ), then decrease to a level that is higher than before-inoculation; but for asymptomatic people, both factor shapes have little change.

In addition to the shape similarity, genes significantly loaded on factor are also largely similar to those loaded on Chen et al., (2011)’s principal factor. Chen et al., (2011) list the top genes sorted according to the absolute loading values (from most important to least important); we present this alongside the full list of our top genes in Supplementary Table LABEL:gene_comparison for comparison. out of the genes are the same.

| Row Index | Principal Factor by Chen et al., (2011) | Factor 1 by Our Approach |

| 1 | RSAD2 | LAMP3 (13) |

| 2 | IFI44L | RSAD2 (1) |

| 3 | IFIT1 | IFI44L (2) |

| 4 | IFI44 | SERPING1 (10) |

| 5 | HERC5 | SPATS2L (-) |

| 6 | OAS3 | SIGLEC1 (22) |

| 7 | MX1 | ISG15 (8) |

| 8 | ISG15 | IFIT1 (3) |

| 9 | IFIT3 | IFI44 (4) |

| 10 | SERPING1 | RTP4 (35) |

| 11 | IFIT2 | OAS3 (6) |

| 12 | OASL | IFI6 (17) |

| 13 | LAMP3 | CCL2 (-) |

| 14 | IFI27 | IDO1 (-) |

| 15 | OAS1 | HERC5 (5) |

| 16 | OAS2 | MS4A4A (-) |

| 17 | IFI6 | IFIT3 (9) |

| 18 | IFIT5 | OAS1 (15) |

| 19 | IFITM3 | OAS2 (16) |

| 20 | XAF1 | LY6E (24) |

| 21 | DDX58 | OASL (12) |

| 22 | SIGLEC1 | ATF3 (-) |

| 23 | DDX60 | CXCL10 (-) |

| 24 | LY6E | CCL8 (-) |

| 25 | GBP1 | XAF1 (20) |

| 26 | IFIH1 | IFI27 (14) |

| 26 | LOC26010 | SAMD4A (-) |

| 28 | ZCCHC2 | MX1 (7) |

| 29 | EIF2AK2 | LGALS3BP (-) |

| 30 | LAP3 | C1QB (-) |

| 31 | IFI35 | IFITM3 (19) |

| 32 | IRF7 | LAP3 (30) |

| 33 | PLSCR1 | IRF7 (32) |

| 34 | M97935_MA_at | ZBP1 (42) |

| 35 | RTP4 | HERC6 (37) |

| 36 | M97935_MB_at | TFEC (-) |

| 37 | HERC6 | IFI35 (31) |

| 38 | TNFAIP6 | MT2A (-) |

| 39 | PARP12 | SCO2 (41) |

| 40 | M97935_5_at | DDX58 (21) |

| 41 | SCO2 | IFIH1 (26) |

| 42 | ZBP1 | IFIT2 (11) |

| 43 | STAT1 | DHX58 (-) |

| 44 | UBE2L6 | TMEM255A (-) |

| 45 | MX2 | TNFAIP6 (38) |

| 46 | TOR1B | VAMP5 (-) |

| 47 | M97935_3_at | PARP12 (39) |

| 48 | TNFSF10 | GBP1 (25) |

| 49 | TRIM22 | TIMM10 (-) |

| 50 | APOL6 | C1QA (-) |

Despite the similarity between factor estimated by our BSFA-DGP and the principal factor estimated by Chen et al., (2011), as discussed above, it is noteworthy that the trajectory of factor is actually more individualized and informative than the principal factor. This can be seen by observing that, for symptomatic people, the shapes of the principal factor are exactly the same after the factor starts changing (Figure 4 in Chen et al., (2011)) whereas the shapes of factor still vary locally for different subjects, as can be seen from Supplementary Figure 16.

The difference in the recovered factor trajectories is caused by the different assumptions underlying the two models. Chen et al., (2011) assume that the dynamic trajectory of any factor is common to all individuals. Different factor values are observed at the same time point for different people only due to individual-dependent biological time shifts and random noise. Therefore, symptomatic and asymptomatic individuals are distinguished based on the time shift (Figure 4 in Chen et al., (2011)). In contrast, we assume that the trajectory of each factor comes from a distribution (i.e., there is no common curve for every individual), therefore allowing for different curve shapes for different people. We make direct use of the diversity of curve shapes to distinguish patients without introducing the “time shift” quantity.

An additional advantage of our model compared to that in Chen et al., (2011) is its ability to detect potential outliers, due to allowing for subject-specific trajectory. In contrast, Chen et al., assumes a universal factor trajectory and trajectories of different subjects are the result of only shifting this reference trajectory by subject-specific biological time shift along the x-axis. This assumption means extreme values of factor expression (outliers) would be hard to identify, which means additional discoveries may be missed.

D.3 Comparison between BSFA-DGP and BSFA-IGP

D.4 Biological Intepretation

We interpret factor 1 as ‘innate immune response pathway’, because all the significantly enriched pathways are related to immune response (Supplementary Figure 18); and interpret factor 3 as the ribosome pathway, because it is the only significantly enriched pathway identified (Supplementary Figure 19).