Dual Degradation-Inspired Deep Unfolding Network for Low-Light Image Enhancement

Abstract

Although low-light image enhancement has made great strides with deep enhancement models, most of them focus on enhancement performance via an elaborate black-box network and rarely explore the physical significance of enhancement models. To address this issue, we propose a Dual degrAdation-inSpired deep Unfolding network, termed DASUNet, for low-light image enhancement. Specifically, we construct a dual degradation model (DDM) to explicitly simulate the deterioration mechanism of low-light images. It learns two distinct image priors by considering the degradation specificity between luminance and chrominance spaces. To make the proposed scheme tractable, we design an alternating optimization solution to solve the proposed DDM. Further, the designed solution is unfolded into a specified deep network that imitates the iterative updating rules, forming DASUNet. Based on the different specificity of the two spaces, we design two customized Transformer blocks to model different priors. Additionally, a space aggregation module (SAM) is presented to boost the interaction of the two degradation models. Extensive experiments on multiple popular low-light image datasets validate the effectiveness of DASUNet compared to canonical state-of-the-art low-light image enhancement methods. Our source code and pretrained model will be publicly available.

1 Introduction

Owing to the lack of a suitable light source, images shot in dark surroundings exhibit poor visibility, weak contrast, and unpleasant noise. This not only impairs the visual comfort of the observer, but also degrades the performance of downstream high-level vision tasks, e.g., detection [7, 37, 43, 49], tracking [63, 62], recognition [21, 40], and segmentation [42, 16, 28]. Hence, restoring low-light images is an urgent problem.

Low-light image enhancement refers to improving the brightness of image content and rendering the radiance of the image focus. Generally, an image is considered as the product of an illumination layer and a reflectance layer based on Retinex theory [24]. Mathematically, it can be defined as $\mathbf{I} = \mathbf{L} \otimes \mathbf{R}$, where $\otimes$ denotes element-wise multiplication, and $\mathbf{L}$ and $\mathbf{R}$ are the illumination layer and the reflectance layer, which describe the extrinsic brightness and the intrinsic property of an object, respectively. Following this formulation, some traditional methods attempt to separate reflectance maps from low-light images as enhanced results via a Gaussian filter [24], a multi-scale Gaussian filter [45], or a luminance structure prior [19]. However, they can produce unnatural appearances. Alternatively, some researchers simultaneously increase the luminance component and restore the reflectance component to further adjust under-exposed images via a bright-pass filter [51] and a weighted variational model [15]. Unfortunately, this approach can under-enhance or over-enhance images with uneven brightness. Besides, several additional penalty constraints, e.g., noise prior [27], low-rank constraint [41], and structure prior [58, 3], are combined into the final optimization objective function to further improve the objective image quality. However, hand-crafted priors cannot encapsulate the variety of real low-light scenes well. In short, the above traditional methods struggle to yield impressive enhancement performance.
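The Retinex image formation described above can be illustrated with a minimal sketch. The pixel values and the helper names `compose` and `recover_reflectance` are illustrative assumptions rather than part of any cited method, and the illumination map is assumed to be known, which is precisely the quantity that practical methods must estimate.

```python
def compose(illumination, reflectance):
    """Element-wise product of an illumination map and a reflectance map."""
    return [[l * r for l, r in zip(l_row, r_row)]
            for l_row, r_row in zip(illumination, reflectance)]


def recover_reflectance(image, illumination, eps=1e-6):
    """Divide out a known illumination map; eps guards against division by zero."""
    return [[i / max(l, eps) for i, l in zip(i_row, l_row)]
            for i_row, l_row in zip(image, illumination)]


# Toy 2x2 single-channel example (hypothetical values).
reflectance = [[0.8, 0.4], [0.6, 0.2]]
illumination = [[0.1, 0.1], [0.2, 0.2]]  # dim lighting
low_light = compose(illumination, reflectance)
restored = recover_reflectance(low_light, illumination)
```

With an exact illumination map the reflectance is recovered up to floating-point error; with an estimated map, any estimation error is divided into the result, which is one reason the decomposition is ill-posed in practice.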

Fueled by convolutional neural networks, a large group of researchers have attempted to train highly efficient low-light image enhancement models from large amounts of data. Deep black-box methods directly learn a powerful mapping from low-light images to normal-light images via a denoising autoencoder [32], multi-scale frameworks [34, 61, 46, 70], normalizing flow [54], Transformers [59, 53], deep unsupervised frameworks [23, 17], and so on. However, they focus on enhancement performance at the expense of interpretability and the physical degradation principle, which could heavily hinder further performance improvements. In light of imaging theory, Retinex-based deep models have been proposed to decompose natural images into luminance layers and reflectance layers, and they can be trained via a reflectance layer consistency constraint [55, 73], illumination smoothness [50], or a semantic-aware prior [13]. However, these methods only mimic the ill-posed decomposition in form and lack accurate luminance references. Moreover, deep unfolding methods [30, 56, 36] unroll Retinex theory into a convolutional network to combine the physical principle with the powerful representation ability of deep models. As shown in Fig. 1, these unfolding methods operate directly in RGB space. Deep unfolding methods operating in other color spaces with specific advantages, such as YCbCr [47, 1, 18], remain unexplored.

To push the frontiers of deep unfolding-based image enhancement, we propose a Dual degrAdation-inSpired deep Unfolding network, termed DASUNet, for low-light image enhancement, which is shown in Fig. 2. The motivation originates from the degradation specificity of low-light images between luminance and chrominance spaces [47, 1, 18, 48]. On this basis, we formulate the task of low-light image enhancement as the optimization of a dual degradation model, which inherits the physical deterioration principle and interpretability. Further, an alternating optimization solution is designed to solve the proposed dual degradation model. Then, the iterative optimization rule is unfolded into a deep model, composing DASUNet, which enjoys the strengths of both the physical model and the deep network. Based on the differences between luminance and chrominance spaces, we customize two different prior modeling modules (PMM) to learn different prior information. In the luminance channel, we design a luminance adjustment Transformer to modulate brightness strength. In the chrominance channel, a Wavelet decomposition Transformer is proposed to model high-frequency and low-frequency information, leveraging the advantages of convolutions and Transformers [12, 31, 68, 4]. Besides, we design a space aggregation module (SAM) to yield clear images, which combines the reciprocity of the dual degradation priors. We perform extensive experiments on several datasets to analyze and validate the effectiveness and superiority of our proposed DASUNet compared to other state-of-the-art methods.

In a nutshell, the main contributions of this work are summarized as follows:

- We propose a dual degradation model based on the degradation specificity of low-light images in different spaces. It is unfolded to form a dual degradation-inspired deep unfolding network for low-light image enhancement, which can jointly learn two degradation priors from luminance space and chrominance space. More importantly, the dual degradation model empowers DASUNet with explicit physical insight, which improves the interpretability of the enhancement model.

- We design two different prior modeling modules, a luminance adjustment Transformer and a Wavelet decomposition Transformer, to obtain degradation-aware priors based on the degradation specificity of different spaces.

2 Related Work

2.1 Low-light image enhancement

Recently, deep low-light image models have greatly outperformed traditional enhancement models not only in performance but also in running time. As a pioneering work, LLNet [32] lightened low-light images using a denoising auto-encoder. After that, a large number of researchers [34, 61, 59, 53, 8, 76, 23, 75, 26] built the mapping between low-light images and normal-light images via different blocks or strategies. Alternatively, some methods [55, 73, 50] attempted to decompose low-light images into reflectance and luminance maps via a data-driven deep model built on Retinex theory. Besides, more researchers [23, 17, 30, 36] have started to construct unsupervised enhancement models to alleviate the shortage of paired images. However, the above methods generally do not consider the physical imaging principle.

2.2 Deep unfolding method

The deep unfolding model inherits both the interpretability of physical models and the powerful representation capability of deep models driven by a large number of images, and it has shown prominent performance in many vision tasks, e.g., super resolution [14, 33, 67, 11] and compressive sensing [6, 39, 46]. Concretely, a deep unfolding model optimizes the iterative solver of a physical model via convolution networks in an end-to-end manner. In the field of low-light image enhancement, URetinex [56] proposed a Retinex theory-inspired unfolding model; however, too many constraint terms hindered its performance. RUAS [30] and SCI [36] adopted a reference-free training mechanism to supervise the unfolding framework, which limits their performance. Unlike these methods, which learn only a single universal prior, our method unfolds a novel dual degradation model based on space difference into an elaborate network, which learns two complementary and effective priors to restore low-light images.

3 Methodology

In this section, we first introduce our dual degradation model (DDM) as the objective function. Then, an iterative optimization solution is designed as the solver of DDM. Finally, we elaborate on the dual degradation-inspired unfolding network (DASUNet) for low-light image enhancement, which is illustrated in Fig. 2.

3.1 Dual Degradation Model

Fundamentally, given a low-light image $\mathbf{y} \in \mathbb{R}^{h \times w \times 3}$, this paper aims to recover a normal-light image $\mathbf{x} \in \mathbb{R}^{h \times w \times 3}$ from it, where $h \times w$ denotes the spatial dimension of an image. According to Retinex theory, the degradation model of low-light images can be described as:

$$\mathbf{y} = \mathbf{D} \otimes \mathbf{x}, \tag{1}$$

where $\mathbf{D}$ is the illumination layer, which in fact is a hybrid degradation operator that may include illuminance deterioration, color distortion, and detail loss [50, 77]. Hence, we can recover $\mathbf{x}$ by minimizing the following energy function:

$$\hat{\mathbf{x}} = \arg\min_{\mathbf{x}} \frac{1}{2}\left\|\mathbf{y} - \mathbf{D} \otimes \mathbf{x}\right\|_2^2 + \lambda J(\mathbf{x}), \tag{2}$$

where $\frac{1}{2}\|\mathbf{y} - \mathbf{D} \otimes \mathbf{x}\|_2^2$ is the data fidelity term, $J(\mathbf{x})$ represents the degradation prior term, and $\lambda$ denotes the hyperparameter weighting the significance of the prior term. Due to the ill-posedness of the degradation model, many hand-crafted priors, e.g., non-local similarity [65, 66] and low-rank prior [9], have been introduced to approximate a desired solution. However, these priors can hardly depict the universal structure of natural images. Recently, the deep denoising prior [10, 69] was proposed to characterize the image-generic skeleton in a data-driven manner, and it shows more competitive performance in many low-level vision tasks. Hence, this paper also adopts a deep prior as the regularization constraint.

Traditional degradation models [9, 65] generally operate in a single image space, e.g., RGB or Y, in which the deterioration type is assumed to be similar. However, low-light images show diverse deterioration types, which makes the above degradation model less effective for their enhancement. Of note, we observe degradation specificity between the luminance and chrominance spaces of low-light images [1, 18], as demonstrated in Fig. 3. One can see an obvious degradation difference between luminance and chrominance spaces, which inspires us to design DDM to describe the different deterioration processes. DDM can be obtained by transforming Eq. (2):

$$\{\hat{\mathbf{x}}_l, \hat{\mathbf{x}}_c\} = \arg\min_{\mathbf{x}_l, \mathbf{x}_c} \frac{1}{2}\left\|\mathbf{y}_l - \mathbf{D}_l \otimes \mathbf{x}_l\right\|_2^2 + \frac{1}{2}\left\|\mathbf{y}_c - \mathbf{D}_c \otimes \mathbf{x}_c\right\|_2^2 + \lambda_l J_l(\mathbf{x}_l) + \lambda_c J_c(\mathbf{x}_c), \tag{3}$$

where $\mathbf{D}_l$ and $\mathbf{D}_c$ refer to the luminance and chrominance degradation operators, $\mathbf{x}_l$ and $\mathbf{y}_l$ denote the luminance components of the normal-light and low-light images, $\mathbf{x}_c$ and $\mathbf{y}_c$ denote the chrominance components of the normal-light and low-light images, $J_l$ and $J_c$ denote the luminance space prior and the chrominance space prior, and $\lambda_l$ and $\lambda_c$ are two weight parameters. More specifically, $\mathbf{x}_l$, $\mathbf{x}_c$, $\mathbf{y}_l$, and $\mathbf{y}_c$ can be obtained via:

$$\mathbf{x}_l = \mathcal{T}_l(\mathbf{x}), \quad \mathbf{x}_c = \mathcal{T}_c(\mathbf{x}), \quad \mathbf{y}_l = \mathcal{T}_l(\mathbf{y}), \quad \mathbf{y}_c = \mathcal{T}_c(\mathbf{y}), \tag{4}$$

where $\mathcal{T}_l$ and $\mathcal{T}_c$ signify the luminance and chrominance transforms. For compactness, we omit $\mathcal{T}_l$ and $\mathcal{T}_c$ in the following. Moreover, we can easily transform the luminance and chrominance components back to the original RGB space via the inverse transforms of $\mathcal{T}_l$ and $\mathcal{T}_c$. DDM disentangles the intricate low-light degradation process into two simpler independent degradation operators, which significantly reduces the modelling difficulty of the prior terms.
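To make the luminance and chrominance transforms and their inverses concrete, the following is a minimal per-pixel sketch. It assumes the full-range BT.601 (JPEG-style) YCbCr conversion with values in [0, 1]; the exact YCbCr variant used in this work is not stated here, and the helper names `split_spaces` and `merge_spaces` are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 conversion for values in [0, 1] (assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 0.5 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 0.5 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr, up to rounding of the coefficients."""
    r = y + 1.402 * (cr - 0.5)
    g = y - 0.344136 * (cb - 0.5) - 0.714136 * (cr - 0.5)
    b = y + 1.772 * (cb - 0.5)
    return r, g, b


def split_spaces(pixel):
    """Luminance transform keeps Y; chrominance transform keeps (Cb, Cr)."""
    y, cb, cr = rgb_to_ycbcr(*pixel)
    return y, (cb, cr)


def merge_spaces(luma, chroma):
    """Map a luminance value and a chrominance pair back to RGB."""
    return ycbcr_to_rgb(luma, *chroma)


pixel = (0.20, 0.10, 0.05)  # a dark RGB pixel (hypothetical values)
luma, chroma = split_spaces(pixel)
roundtrip = merge_spaces(luma, chroma)
```

Because the two components are obtained by an invertible linear map, each can be restored independently and the results merged back to RGB without loss beyond coefficient rounding.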

3.2 Optimization Solution

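To obtain accurate normal-light images from low-light images, we design an alternating optimization solution to solve DDM. First, we separate Eq. (3) into two independent objectives to facilitate the optimization:

$$\hat{\mathbf{x}}_l = \arg\min_{\mathbf{x}_l} \frac{1}{2}\left\|\mathbf{y}_l - \mathbf{D}_l \otimes \mathbf{x}_l\right\|_2^2 + \lambda_l J_l(\mathbf{x}_l), \tag{5}$$

$$\hat{\mathbf{x}}_c = \arg\min_{\mathbf{x}_c} \frac{1}{2}\left\|\mathbf{y}_c - \mathbf{D}_c \otimes \mathbf{x}_c\right\|_2^2 + \lambda_c J_c(\mathbf{x}_c), \tag{6}$$

where Eq. (5) represents the luminance degradation model and Eq. (6) refers to the chrominance degradation model. They can be updated in parallel in the luminance and chrominance spaces.

Taking Eq. (5) as an example, it can be solved via the proximal gradient algorithm [39, 6], which has demonstrated its effectiveness in many inverse problems. Specifically, we alternate between the following updating rules to obtain an approximate solution:

$$\hat{\mathbf{x}}_l^{k} = \mathbf{x}_l^{k-1} - \rho_l \mathbf{D}_l^{\top} \otimes \left(\mathbf{D}_l \otimes \mathbf{x}_l^{k-1} - \mathbf{y}_l\right), \tag{7a}$$

$$\mathbf{x}_l^{k} = \operatorname{prox}_{\lambda_l, J_l}\left(\hat{\mathbf{x}}_l^{k}\right), \tag{7b}$$

where $\mathbf{x}_l^{k}$ is the $k$-th updating solution, $\rho_l$ denotes the updating step size, $\mathbf{D}_l^{\top}$ is the transpose of $\mathbf{D}_l$, and $\operatorname{prox}_{\lambda_l, J_l}$ represents the proximal operator. Generally, the first step is called gradient descent and the second step is regarded as the proximal mapping.

Similarly, the chrominance degradation model is solved by:

$$\hat{\mathbf{x}}_c^{k} = \mathbf{x}_c^{k-1} - \rho_c \mathbf{D}_c^{\top} \otimes \left(\mathbf{D}_c \otimes \mathbf{x}_c^{k-1} - \mathbf{y}_c\right), \tag{8a}$$

$$\mathbf{x}_c^{k} = \operatorname{prox}_{\lambda_c, J_c}\left(\hat{\mathbf{x}}_c^{k}\right). \tag{8b}$$

Finally, we can obtain the output of the $k$-th updating process via:

$$\mathbf{x}^{k} = \mathcal{M}\left(\mathcal{T}_l^{-1}(\mathbf{x}_l^{k}), \mathcal{T}_c^{-1}(\mathbf{x}_c^{k})\right), \tag{9}$$

where $\mathcal{T}_l^{-1}$ and $\mathcal{T}_c^{-1}$ are the inverse transformations of the luminance and chrominance transforms, and $\mathcal{M}$ represents the space merging operation.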
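The alternation between a gradient descent step and a proximal mapping step can be sketched on a toy problem. The sketch below assumes an element-wise degradation operator (so its transpose is itself) and substitutes a hand-crafted L1 prior, whose proximal operator is soft-thresholding, for the learned prior; the function names and all numeric values are illustrative assumptions.

```python
def soft_threshold(v, tau):
    """Proximal operator of tau * |v| (an L1 prior, used here as a stand-in)."""
    if v > tau:
        return v - tau
    if v < -tau:
        return v + tau
    return 0.0


def proximal_gradient(y, d, lam=0.0, rho=0.5, num_iters=200):
    """Minimise 0.5 * ||y - d*x||^2 + lam * ||x||_1 for an element-wise operator d."""
    x = list(y)  # initialise with the observation
    for _ in range(num_iters):
        # Gradient descent step on the data fidelity term.
        x_hat = [xi - rho * di * (di * xi - yi) for xi, di, yi in zip(x, d, y)]
        # Proximal mapping step on the prior term.
        x = [soft_threshold(v, rho * lam) for v in x_hat]
    return x


d = [0.5, 0.25, 1.0]       # hypothetical per-pixel degradation
x_true = [0.8, 0.4, 0.6]   # hypothetical clean signal
y = [di * xi for di, xi in zip(d, x_true)]
x_est = proximal_gradient(y, d, lam=0.0, num_iters=2000)
```

With the prior weight set to zero the iteration reduces to plain gradient descent and recovers the clean signal; in an unfolded network, the fixed number of iterations becomes the number of stages and the proximal step is replaced by a learned module.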

3.3 Dual Degradation-Inspired Deep Unfolding Network

Based on the iterative optimization solution designed above, we unfold each iterative step into corresponding modules to construct our DASUNet, which is shown in Fig. 2. Specifically, our DASUNet is composed of $K$ stages, each of which contains two parts, a luminance optimization stream (LOS) and a chrominance optimization stream (COS). Furthermore, we design a space aggregation module (SAM) to combine the outputs of COS and LOS at each stage, which lets the complementary features learned from luminance and chrominance spaces interact to produce higher-quality normal-light images.

Luminance Optimization Stream. LOS is composed of gradient descent modules (GDM) and prior modeling modules (PMM), which are unfolded from Eq. (7). Taking the $k$-th stage as an example, GDM takes the low-light luminance component $\mathbf{y}_l$ and the $(k-1)$-th restored result $\mathbf{x}_l^{k-1}$ as inputs, as depicted in Fig. 4(a). A non-trivial problem is the construction of the degradation operator $\mathbf{D}_l$ and its transpose $\mathbf{D}_l^{\top}$. Inspired by [39, 6], we employ a residual convolution block to simulate each of $\mathbf{D}_l$ and $\mathbf{D}_l^{\top}$. Both adopt a Conv-PReLU-Conv structure, in which the channel number of the second Conv is set to 1. The step size $\rho_l$ is a learnable parameter that is updated during training.

Another important module is PMM, which is also regarded as a denoising network in many works [10, 69]. Previous methods usually exploit convolution blocks, e.g., U-Net and ResBlock, to learn a universal image prior from a large number of images of a specified type. However, by stacking more convolutions they extract only local image priors and cannot learn more representative long-range image structure. Recently, the Transformer has demonstrated its superiority for long-range information modeling in many vision tasks [12, 31, 68, 4]. Motivated by them, we design a novel Transformer-based PMM to model long-range structural knowledge, as shown in Fig. 4(b). Moreover, some researchers [44, 60] found that the limited information flow among adjacent stages restricts the performance of deep unfolding networks and proposed a memory-augmented mechanism to facilitate the information flow across stages. Consequently, we build a high-way feature transmission path among adjacent stages without any convolution, so that it does not compromise model efficiency, as shown in Fig. 2.

Specifically, the input is first fed into a convolution and a channel attention block (CAB) [72] to extract shallow features. Then, two convolutions are used to aggregate the shallow features and the high-way features from the previous stage. Next, two Transformer blocks are used to learn long-range prior features, each of which includes a self-attention layer and a feed-forward layer. Finally, the learned features are sent both into the high-way feature transmission path, to flow into the next stage, and into SAM, to produce the restored result of this stage. Overall, LOS can be described as:

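$$\hat{\mathbf{x}}_l^{k} = \mathrm{GDM}\left(\mathbf{x}_l^{k-1}, \mathbf{y}_l\right), \tag{10a}$$

$$\mathbf{x}_l^{k} = \mathrm{PMM}_l\left(\hat{\mathbf{x}}_l^{k}\right). \tag{10b}$$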

In PMM of LOS, we propose a novel luminance adjustment Transformer (LAT) to replace the Transformer block of PMM. LAT is shown in Fig. 4(e). Considering the local smoothness of brightness, we construct a local brightness difference via the pooling layer and pixel-wise subtraction. Then, we learn a brightness scale factor exploiting a convolution layer to adjust the brightness. Finally, we use a convolution to obtain the final luminance prior information.

Chrominance Optimization Stream. COS has the same structure as LOS except for PMM. In COS, we customize a new self-attention layer, the Wavelet decomposition Transformer, based on the characteristics of the chrominance channels of low-light images, which is shown in Fig. 4(d). The input features are split into a low-frequency part and a high-frequency part via Wavelet filters. The low-frequency part is fed into a Transformer block to extract long-range degradation knowledge. Since the high-frequency part is sparse, it is passed into a convolution block to learn a local degradation prior. This design facilitates the modeling of detail priors and reduces the computation overhead.

Similarly, COS is represented as:

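$$\hat{\mathbf{x}}_c^{k} = \mathrm{GDM}\left(\mathbf{x}_c^{k-1}, \mathbf{y}_c\right), \tag{11a}$$

$$\mathbf{x}_c^{k} = \mathrm{PMM}_c\left(\hat{\mathbf{x}}_c^{k}\right). \tag{11b}$$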
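The frequency split used in the chrominance stream can be illustrated with a single-level 2-D wavelet decomposition. The sketch below assumes the orthonormal Haar wavelet, which is only one possible choice of Wavelet filter, and operates on a small single-channel map; the function names and sample values are hypothetical.

```python
def haar_dwt2(img):
    """Single-level orthonormal 2-D Haar transform of an even-sized 2-D list."""
    h, w = len(img), len(img[0])
    ll = [[0.0] * (w // 2) for _ in range(h // 2)]
    col_diff = [[0.0] * (w // 2) for _ in range(h // 2)]
    row_diff = [[0.0] * (w // 2) for _ in range(h // 2)]
    diag_diff = [[0.0] * (w // 2) for _ in range(h // 2)]
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            ll[i // 2][j // 2] = (a + b + c + d) / 2         # local average
            col_diff[i // 2][j // 2] = (a - b + c - d) / 2   # left minus right
            row_diff[i // 2][j // 2] = (a + b - c - d) / 2   # top minus bottom
            diag_diff[i // 2][j // 2] = (a - b - c + d) / 2  # diagonal detail
    return ll, (col_diff, row_diff, diag_diff)


def haar_idwt2(ll, high):
    """Inverse of haar_dwt2: rebuild the full-resolution map from the sub-bands."""
    col_diff, row_diff, diag_diff = high
    img = [[0.0] * (len(ll[0]) * 2) for _ in range(len(ll) * 2)]
    for i in range(len(ll)):
        for j in range(len(ll[0])):
            s, p, q, r = ll[i][j], col_diff[i][j], row_diff[i][j], diag_diff[i][j]
            img[2 * i][2 * j] = (s + p + q + r) / 2
            img[2 * i][2 * j + 1] = (s - p + q - r) / 2
            img[2 * i + 1][2 * j] = (s + p - q - r) / 2
            img[2 * i + 1][2 * j + 1] = (s - p - q + r) / 2
    return img


chroma = [[0.50, 0.50, 0.52, 0.52],
          [0.50, 0.50, 0.52, 0.52],
          [0.48, 0.48, 0.55, 0.45],
          [0.48, 0.48, 0.55, 0.45]]
low, high = haar_dwt2(chroma)
# Both parts are passed through unchanged here; in the described design the
# low-frequency part would go to a Transformer block and the rest to convolutions.
restored = haar_idwt2(low, high)
```

The low-frequency sub-band has one quarter of the spatial positions of the input, so applying self-attention only to it is cheaper than attending over the full-resolution features, while the mostly zero high-frequency sub-bands are left to local operators.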

Space Aggregation Module. SAM is proposed to combine the luminance prior and the chrominance prior based on Eq. (10). It takes the outputs of LOS and COS as input to produce the enhanced normal-light image. Specifically, it first concatenates the outputs of LOS and COS. Then, a convolution is used to combine them. After that, a CAB is added to emphasize the significant features. Finally, we yield the enhanced image of this stage via a convolution. SAM is shown in Fig. 4(c) and can be written as below:

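$$\mathbf{x}^{k} = \mathrm{Conv}\left(\mathrm{CAB}\left(\mathrm{Conv}\left(\mathrm{Concat}\left(\mathbf{x}_l^{k}, \mathbf{x}_c^{k}\right)\right)\right)\right). \tag{12}$$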

3.4 Loss Function

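Given $N$ pairs of low/normal-light images $\{(\mathbf{y}_i, \mathbf{x}_i^{gt})\}_{i=1}^{N}$, we can train our proposed DASUNet via the following loss function:

$$\mathcal{L} = \sum_{i=1}^{N} \sum_{j=1}^{K} \gamma_j \, \mathcal{L}_{char}\left(\mathbf{x}_i^{j}, \mathbf{x}_i^{gt}\right), \tag{13}$$

where $K$ is the stage number, $\gamma_j$ is the weighting parameter of the $j$-th stage, $\mathcal{L}_{char}$ indicates the Charbonnier loss, and $\mathbf{x}_i^{j}$ denotes the enhanced image of the $j$-th stage of DASUNet. In our training, one stage weight is set to 1 and the other stage weights are set to 0.1.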
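The stage-wise weighted loss can be sketched as follows for a single image pair. The Charbonnier constant `eps=1e-3` is a commonly used value and an assumption here, as is the choice to give the final stage the weight of 1; images are represented as flat lists of pixel values for brevity.

```python
import math


def charbonnier(pred, target, eps=1e-3):
    """Mean Charbonnier penalty sqrt((p - t)^2 + eps^2) over flat pixel lists."""
    return sum(math.sqrt((p - t) ** 2 + eps ** 2)
               for p, t in zip(pred, target)) / len(pred)


def multi_stage_loss(stage_outputs, target, weights):
    """Weighted sum of the per-stage Charbonnier losses."""
    return sum(w * charbonnier(out, target)
               for w, out in zip(weights, stage_outputs))


target = [0.5, 0.6, 0.7]
stage_outputs = [[0.2, 0.3, 0.4],   # early stage, far from the target
                 [0.4, 0.5, 0.6],   # intermediate stage
                 [0.5, 0.6, 0.7]]   # final stage, matches the target
# Assumption: the final stage carries weight 1 and earlier stages 0.1.
weights = [0.1, 0.1, 1.0]
loss = multi_stage_loss(stage_outputs, target, weights)
```

Supervising every stage encourages each unfolded iteration to move toward the reference, while the larger weight keeps the emphasis on the output that is actually used at inference.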

4 Experiments and Results

4.1 Training Detail and Benchmark

Our DASUNet is implemented in PyTorch on an RTX 3090 GPU. Unless otherwise stated, all modules share the same channel number, and the stage number is fixed in our experiments. The Adam optimizer with default settings is used to train DASUNet. The learning rate gradually decreases from its initial value to its minimum value via the cosine annealing strategy. Moreover, we use a warm-up strategy to stabilize the training of DASUNet. Training samples are randomly cropped into patches and then rotated and flipped for data augmentation. For convenience, images are first transformed to YCbCr space for training or inference and are finally transformed back to the RGB domain for impartial evaluation.
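The learning-rate schedule described above, warm-up followed by cosine annealing, can be sketched per epoch as below. All numeric settings (`total_epochs`, `warmup_epochs`, `lr_max`, `lr_min`) and the linear shape of the warm-up are hypothetical choices made only for illustration.

```python
import math


def learning_rate(epoch, total_epochs, warmup_epochs, lr_max, lr_min):
    """Linear warm-up followed by cosine annealing from lr_max to lr_min."""
    if epoch < warmup_epochs:
        return lr_max * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * progress))


# Hypothetical settings chosen only for illustration.
schedule = [learning_rate(e, total_epochs=100, warmup_epochs=5,
                          lr_max=2e-4, lr_min=1e-6) for e in range(101)]
```

The warm-up phase avoids large updates while the learnable step sizes and prior modules are still poorly initialized, and the cosine phase lowers the rate smoothly toward its minimum.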

We evaluate the effectiveness of our DASUNet on two popular benchmarks: LOL [55] and MIT-Adobe FiveK [2]. The LOL dataset comprises paired low/normal-light images, part of which are used for training and the rest for testing. The MIT-Adobe FiveK dataset contains low-light images from various real scenes. Like other methods, we use the images retouched by expert C as reference images. The first portion of the image pairs forms the training set and the last portion forms the test set. Three full-reference metrics, PSNR, SSIM, and LPIPS [71], are selected for objective evaluation. More datasets, including LOL-v2 and some unpaired datasets, and their results are shown in the Supplementary Material.
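Of the three metrics, PSNR is simple enough to sketch directly. The version below assumes pixel values in [0, 1] stored as flat lists and is not necessarily the exact evaluation script used for the reported numbers; SSIM and LPIPS require considerably more machinery and are omitted.

```python
import math


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB for two equally sized flat pixel lists."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return float("inf")
    return 10 * math.log10(max_val ** 2 / mse)


reference = [0.2, 0.4, 0.6, 0.8]
enhanced = [0.3, 0.5, 0.7, 0.9]   # every pixel is off by 0.1
score = psnr(enhanced, reference)
```

Because PSNR is logarithmic in the mean squared error, a gain of about 3 dB corresponds to roughly halving the mean squared error.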

Table 1: Quantitative comparison on the LOL dataset.

| Methods | AGCWD | SRIE | LIME | ROPE | Zero-DCE | RetinexNet | EnlightenGAN | KinD | KinD++ |
|---|---|---|---|---|---|---|---|---|---|
| PSNR | 13.05 | 11.86 | 16.76 | 15.02 | 14.86 | 16.77 | 17.48 | 20.38 | 21.80 |
| SSIM | 0.4038 | 0.4979 | 0.5644 | 0.5092 | 0.5849 | 0.5594 | 0.6578 | 0.8045 | 0.8316 |
| LPIPS | 0.4816 | 0.3401 | 0.3945 | 0.4713 | 0.3352 | 0.4739 | 0.3223 | 0.1593 | 0.1584 |

| Methods | MIRNet | URetinex | Bread | DCCNet | LLFormer | UHDFour | RetinexFormer | LLDiffusion | Ours |
|---|---|---|---|---|---|---|---|---|---|
| PSNR | 24.14 | 21.33 | 22.96 | 22.98 | 23.65 | 23.10 | 25.16 | 24.65 | 26.60 |
| SSIM | 0.8302 | 0.7906 | 0.8121 | 0.7909 | 0.8102 | 0.8208 | 0.8434 | 0.8430 | 0.8552 |
| LPIPS | 0.1311 | 0.1210 | 0.1597 | 0.1427 | 0.1692 | 0.1466 | 0.1314 | 0.0750 | 0.1275 |

Table 2: Quantitative comparison on the MIT-Adobe FiveK dataset.

| Methods | SRIE | LIME | IAT | DSN | DSLR | SCI | CSRNet | MIRNet | Ours |
|---|---|---|---|---|---|---|---|---|---|
| PSNR | 18.44 | 13.28 | 18.08 | 19.58 | 20.26 | 20.73 | 20.93 | 23.78 | 28.34 |
| SSIM | 0.7905 | 0.7276 | 0.7888 | 0.8308 | 0.8150 | 0.7816 | 0.7875 | 0.8995 | 0.9140 |
| LPIPS | 0.1467 | 0.1573 | 0.1364 | 0.0874 | 0.1759 | 0.1066 | 0.1297 | 0.0714 | 0.0476 |

4.2 Comparison with State-of-the-Art Methods

To evaluate the performance of our proposed DASUNet, we compare our results with seventeen classical low-light image enhancement methods on the LOL dataset, including four traditional models (AGCWD [22], SRIE [15], LIME [19], and ROPE [57]), two unsupervised methods (Zero-DCE [17] and EnlightenGAN [23]), five recent deep black-box methods (MIRNet [64], Bread [18], LLFormer [53], UHDFour [26], and LLDiffusion [52]), and five deep Retinex-based methods (RetinexNet [55], KinD [73], KinD++ [74], URetinex [56], and RetinexFormer [5]). Quantitative comparison results are summarized in Table 1. One can easily observe that our method outperforms the other methods in PSNR and SSIM and achieves comparable results in LPIPS. Qualitative results are presented in Fig. 5. As can be seen, RetinexNet cannot achieve the expected enhancement and KinD produces over-smoothed restored images. URetinex shows color deviation on the ground. LLFormer and RetinexFormer appear under-enhanced and noisy. In contrast, the result enhanced by our DASUNet shows more vivid details and more natural scene light, and it removes some artifacts and noise that cannot be eliminated by other methods.

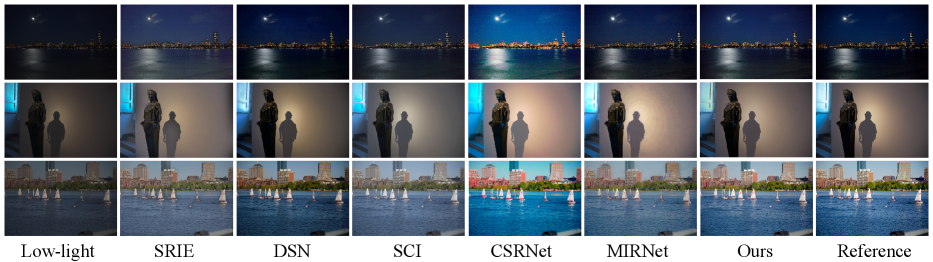

Moreover, we conduct experiments on the MIT-Adobe FiveK dataset to demonstrate the generalization capability of our proposed DASUNet. We compare our model with eight state-of-the-art methods, including SRIE [15], LIME [19], IAT [8], SCI [36], DSN [76], DSLR [29], CSRNet [20], and MIRNet [64]. As tabulated in Table 2, our method significantly surpasses the second-best method, MIRNet, in terms of PSNR, SSIM, and LPIPS. To show the effectiveness of our DASUNet, visual comparisons are presented in Fig. 6. One can observe that SRIE and DSN cannot achieve favorable enhancement. CSRNet over-enhances the low-light images. MIRNet exhibits ringing artifacts in the image in the second row. Overall, our DASUNet produces impressive enhanced images.

Table 3: Ablation of degradation models and color spaces on the LOL dataset.

| Methods | Single model | Single model | Double model | Triple model | Triple model |
|---|---|---|---|---|---|
| Space | RGB | YCbCr | Y-CbCr | R-G-B | Y-Cb-Cr |
| PSNR | 25.56 | 25.62 | 26.60 | 24.39 | 25.77 |
| SSIM | 0.8367 | 0.8383 | 0.8552 | 0.8299 | 0.8420 |
| LPIPS | 0.1475 | 0.1410 | 0.1275 | 0.1627 | 0.1386 |
| Time (s) | 0.42 | 0.42 | 0.60 | 0.79 | 0.79 |

4.3 Ablation study

Dual degradation model. Based on the degradation specificity between luminance and chrominance spaces, we propose a DDM for low-light image enhancement. To demonstrate its effectiveness, we conduct comparison experiments on various color spaces and degradation models on the LOL dataset, the results of which are presented in Table 3. Single model and triple model denote one degradation model and three degradation models on the corresponding spaces, respectively. One can see from Table 3 that the design philosophy behind DDM is effective. The single model cannot consider the degradation specificity of different spaces, and the triple model could lose the mutual benefits between homogeneous degradation spaces. A visual comparison of different degradation models is illustrated in Fig. 7(a). The single model introduces some visual artifacts and the triple model yields some blur, while our model produces a clearer result.

Stage number. It is well known that the stage number is essential for an iterative optimization solution. We explore the performance of different stage numbers, and the experimental results are shown in Fig. 8. Once the stage number exceeds a certain value, the enhancement performance converges, and the stage number in our experiments is set accordingly. A visual comparison is shown in Fig. 7(b). One can see clearer results as the stages advance.

4.4 Running Time

We report the average running time of our method and recently proposed deep learning-based methods on the LOL dataset in Fig. 9, together with the model parameters of all tested methods. Although LLFormer and MIRNet achieve noticeable performance, they are heavy in model size. URetinex and Zero-DCE have compact model designs, but their performance is poorer than ours. One can see that our method achieves the best performance with an acceptable running time and a relatively economical model size, which reflects a favorable trade-off between the effectiveness and efficiency of our proposed model.

5 Conclusion

In this paper, we have proposed a dual degradation-inspired deep unfolding method (DASUNet) for low-light image enhancement. Specifically, we design a dual degradation model (DDM) based on the degradation specificity between luminance and chrominance spaces. An alternating optimization solution is proposed to solve it and is unfolded into specified network modules to construct our DASUNet, which contains a new prior modeling module to capture long-range prior information and a space aggregation module to combine the dual degradation priors. Extensive experimental results validate the superiority of our DASUNet for low-light image enhancement. In the future, we will explore more low-level vision tasks based on DDM.

References

- Atoum et al. [2020] Yousef Atoum, Mao Ye, Liu Ren, Ying Tai, and Xiaoming Liu. Color-wise attention network for low-light image enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2130–2139, 2020.

- Bychkovsky et al. [2011] Vladimir Bychkovsky, Sylvain Paris, Eric Chan, and Frédo Durand. Learning photographic global tonal adjustment with a database of input / output image pairs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 97–104, 2011.

- Cai et al. [2017] Bolun Cai, Xianming Xu, Kailing Guo, Kui Jia, Bin Hu, and Dacheng Tao. A joint intrinsic-extrinsic prior model for retinex. In Proceedings of the IEEE International Conference on Computer Vision, pages 4020–4029, 2017.

- Cai et al. [2022] Yuanhao Cai, Jing Lin, Haoqian Wang, Xin Yuan, Henghui Ding, Yulun Zhang, Radu Timofte, and Luc Van Gool. Degradation-aware unfolding half-shuffle transformer for spectral compressive imaging. In Advances in Neural Information Processing Systems, 2022.

- Cai et al. [2023] Yuanhao Cai, Hao Bian, Jing Lin, Haoqian Wang, Radu Timofte, and Yulun Zhang. Retinexformer: One-stage retinex-based transformer for low-light image enhancement. In IEEE/CVF International Conference on Computer Vision, ICCV 2023, Paris, France, October 1-6, 2023, pages 12470–12479. IEEE, 2023.

- Chen et al. [2022] Wenjun Chen, Chunling Yang, and Xin Yang. FSOINET: feature-space optimization-inspired network for image compressive sensing. In IEEE International Conference on Acoustics, Speech and Signal Processing, pages 2460–2464, 2022.

- Cui et al. [2021] Ziteng Cui, Guo-Jun Qi, Lin Gu, Shaodi You, Zenghui Zhang, and Tatsuya Harada. Multitask AET with orthogonal tangent regularity for dark object detection. In Proceedings of the IEEE International Conference on Computer Vision, pages 2533–2542, 2021.

- Cui et al. [2022] Ziteng Cui, Kunchang Li, Lin Gu, Shenghan Su, Peng Gao, Zhengkai Jiang, Yu Qiao, and Tatsuya Harada. You only need 90k parameters to adapt light: a light weight transformer for image enhancement and exposure correction. In British Machine Vision Conference, page 238, 2022.

- Dong et al. [2013] Weisheng Dong, Guangming Shi, and Xin Li. Nonlocal image restoration with bilateral variance estimation: A low-rank approach. IEEE Trans. Image Process., 22(2):700–711, 2013.

- Dong et al. [2019] Weisheng Dong, Peiyao Wang, Wotao Yin, Guangming Shi, Fangfang Wu, and Xiaotong Lu. Denoising prior driven deep neural network for image restoration. IEEE Trans. Pattern Anal. Mach. Intell., 41(10):2305–2318, 2019.

- Dong et al. [2021] Weisheng Dong, Chen Zhou, Fangfang Wu, Jinjian Wu, Guangming Shi, and Xin Li. Model-guided deep hyperspectral image super-resolution. IEEE Trans. Image Process., 30:5754–5768, 2021.

- Dosovitskiy et al. [2021] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby. An image is worth 16x16 words: Transformers for image recognition at scale. In International Conference on Learning Representations, 2021.

- Fan et al. [2020] Minhao Fan, Wenjing Wang, Wenhan Yang, and Jiaying Liu. Integrating semantic segmentation and retinex model for low-light image enhancement. In Proceedings of the ACM International Conference on Multimedia, pages 2317–2325, 2020.

- Fu et al. [2022] Jiahong Fu, Hong Wang, Qi Xie, Qian Zhao, Deyu Meng, and Zongben Xu. Kxnet: A model-driven deep neural network for blind super-resolution. In Proceedings of European Conference Computer Vision, pages 235–253, 2022.

- Fu et al. [2016] Xueyang Fu, Delu Zeng, Yue Huang, Xiao-Ping (Steven) Zhang, and Xinghao Ding. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2782–2790, 2016.

- Gao et al. [2022] Huan Gao, Jichang Guo, Guoli Wang, and Qian Zhang. Cross-domain correlation distillation for unsupervised domain adaptation in nighttime semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 9903–9913, 2022.

- Guo et al. [2020] Chunle Guo, Chongyi Li, Jichang Guo, Chen Change Loy, Junhui Hou, Sam Kwong, and Runmin Cong. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1777–1786, 2020.

- Guo and Hu [2023] Xiaojie Guo and Qiming Hu. Low-light image enhancement via breaking down the darkness. Int. J. Comput. Vis., 131(1):48–66, 2023.

- Guo et al. [2017] Xiaojie Guo, Yu Li, and Haibin Ling. LIME: low-light image enhancement via illumination map estimation. IEEE Trans. Image Process., 26(2):982–993, 2017.

- He et al. [2020] Jingwen He, Yihao Liu, Yu Qiao, and Chao Dong. Conditional sequential modulation for efficient global image retouching. In Proceedings of European Conference Computer Vision, pages 679–695, 2020.

- Hira et al. [2021] Sanchit Hira, Ritwik Das, Abhinav Modi, and Daniil Pakhomov. Delta sampling R-BERT for limited data and low-light action recognition. In IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 853–862, 2021.

- Huang et al. [2013] Shih-Chia Huang, Fan-Chieh Cheng, and Yi-Sheng Chiu. Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans. Image Process., 22(3):1032–1041, 2013.

- Jiang et al. [2021] Yifan Jiang, Xinyu Gong, Ding Liu, Yu Cheng, Chen Fang, Xiaohui Shen, Jianchao Yang, Pan Zhou, and Zhangyang Wang. Enlightengan: Deep light enhancement without paired supervision. IEEE Trans. Image Process., 30:2340–2349, 2021.

- Jobson et al. [1997] Daniel J. Jobson, Zia-ur Rahman, and Glenn A. Woodell. Properties and performance of a center/surround retinex. IEEE Trans. Image Process., 6(3):451–462, 1997.

- Lee et al. [2013] Chulwoo Lee, Chul Lee, and Chang-Su Kim. Contrast enhancement based on layered difference representation of 2d histograms. IEEE Trans. Image Process., 22(12):5372–5384, 2013.

- Li et al. [2023] Chongyi Li, Chunle Guo, Man Zhou, Zhexin Liang, Shangchen Zhou, Ruicheng Feng, and Chen Change Loy. Embedding Fourier for ultra-high-definition low-light image enhancement. In International Conference on Learning Representations, 2023.

- Li et al. [2018] Mading Li, Jiaying Liu, Wenhan Yang, Xiaoyan Sun, and Zongming Guo. Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans. Image Process., 27(6):2828–2841, 2018.

- Liao et al. [2023] Yiyi Liao, Jun Xie, and Andreas Geiger. KITTI-360: A novel dataset and benchmarks for urban scene understanding in 2d and 3d. IEEE Trans. Pattern Anal. Mach. Intell., 45(3):3292–3310, 2023.

- Lim and Kim [2021] Seokjae Lim and Wonjun Kim. DSLR: deep stacked laplacian restorer for low-light image enhancement. IEEE Trans. Multim., 23:4272–4284, 2021.

- Liu et al. [2021a] Risheng Liu, Long Ma, Jiaao Zhang, Xin Fan, and Zhongxuan Luo. Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 10561–10570, 2021a.

- Liu et al. [2021b] Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE International Conference on Computer Vision, pages 9992–10002, 2021b.

- Lore et al. [2017] Kin Gwn Lore, Adedotun Akintayo, and Soumik Sarkar. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit., 61:650–662, 2017.

- Luo et al. [2020] Zhengxiong Luo, Yan Huang, Shang Li, Liang Wang, and Tieniu Tan. Unfolding the alternating optimization for blind super resolution. In Advances in Neural Information Processing Systems, 2020.

- Lv et al. [2021] Feifan Lv, Yu Li, and Feng Lu. Attention guided low-light image enhancement with a large scale low-light simulation dataset. Int. J. Comput. Vis., 129(7):2175–2193, 2021.

- Ma et al. [2015] Kede Ma, Kai Zeng, and Zhou Wang. Perceptual quality assessment for multi-exposure image fusion. IEEE Trans. Image Process., 24(11):3345–3356, 2015.

- Ma et al. [2022a] Long Ma, Tengyu Ma, Risheng Liu, Xin Fan, and Zhongxuan Luo. Toward fast, flexible, and robust low-light image enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5627–5636, 2022a.

- Ma et al. [2022b] Tengyu Ma, Long Ma, Xin Fan, Zhongxuan Luo, and Risheng Liu. PIA: parallel architecture with illumination allocator for joint enhancement and detection in low-light. In Proceedings of the ACM International Conference on Multimedia, pages 2070–2078. ACM, 2022b.

- Mittal et al. [2012] Anish Mittal, Anush Krishna Moorthy, and Alan Conrad Bovik. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process., 21(12):4695–4708, 2012.

- Mou et al. [2022] Chong Mou, Qian Wang, and Jian Zhang. Deep generalized unfolding networks for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 17378–17389, 2022.

- Nguyen et al. [2023] Cindy M. Nguyen, Eric R. Chan, Alexander W. Bergman, and Gordon Wetzstein. Diffusion in the dark: A diffusion model for low-light text recognition. CoRR, abs/2303.04291, 2023.

- Ren et al. [2020] Xutong Ren, Wenhan Yang, Wen-Huang Cheng, and Jiaying Liu. LR3M: robust low-light enhancement via low-rank regularized retinex model. IEEE Trans. Image Process., 29:5862–5876, 2020.

- Sakaridis et al. [2021] Christos Sakaridis, Dengxin Dai, and Luc Van Gool. ACDC: the adverse conditions dataset with correspondences for semantic driving scene understanding. In Proceedings of the IEEE International Conference on Computer Vision, pages 10745–10755, 2021.

- Sasagawa and Nagahara [2020] Yukihiro Sasagawa and Hajime Nagahara. YOLO in the dark - domain adaptation method for merging multiple models. In Proceedings of the European Conference on Computer Vision, pages 345–359, 2020.

- Song et al. [2021] Jiechong Song, Bin Chen, and Jian Zhang. Memory-augmented deep unfolding network for compressive sensing. In Proceedings of the ACM International Conference on Multimedia, pages 4249–4258, 2021.

- Daniel J. Jobson et al. [1997] Daniel J. Jobson, Zia-ur Rahman, and Glenn A. Woodell. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process., 6(7):965–976, 1997.

- Wang et al. [2023a] Huake Wang, Ziang Li, and Xingsong Hou. Versatile denoising-based approximate message passing for compressive sensing. IEEE Trans. Image Process., 32:1–15, 2023a.

- Wang et al. [2024a] Huake Wang, Xiaoyang Yan, Xingsong Hou, Junhui Li, Yujie Dun, and Kaibing Zhang. Division gets better: Learning brightness-aware and detail-sensitive representations for low-light image enhancement. Knowl. Based Syst., 299:111958, 2024a.

- Wang et al. [2024b] Huake Wang, Xiaoyang Yan, Xingsong Hou, Kaibing Zhang, and Yujie Dun. Extracting noise and darkness: Low-light image enhancement via dual prior guidance. IEEE Trans. Circuits Syst. Video Technol., pages 1–1, 2024b.

- Wang et al. [2024c] Huake Wang, Xin Zeng, Ting Zhang, Jinjiang Wei, Xingsong Hou, Hengfeng Wu, and Kaibing Zhang. Hierarchical kernel interaction network for remote sensing object counting. IEEE Trans. Geosci. Remote. Sens., 62:1–13, 2024c.

- Wang et al. [2019] Ruixing Wang, Qing Zhang, Chi-Wing Fu, Xiaoyong Shen, Wei-Shi Zheng, and Jiaya Jia. Underexposed photo enhancement using deep illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 6849–6857, 2019.

- Wang et al. [2013] Shuhang Wang, Jin Zheng, Hai-Miao Hu, and Bo Li. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process., 22(9):3538–3548, 2013.

- Wang et al. [2023b] Tao Wang, Kaihao Zhang, Ziqian Shao, Wenhan Luo, Björn Stenger, Tae-Kyun Kim, Wei Liu, and Hongdong Li. LLDiffusion: Learning degradation representations in diffusion models for low-light image enhancement. CoRR, abs/2307.14659, 2023b.

- Wang et al. [2023c] Tao Wang, Kaihao Zhang, Tianrun Shen, Wenhan Luo, Björn Stenger, and Tong Lu. Ultra-high-definition low-light image enhancement: A benchmark and transformer-based method. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 1–10, 2023c.

- Wang et al. [2022] Yufei Wang, Renjie Wan, Wenhan Yang, Haoliang Li, Lap-Pui Chau, and Alex C. Kot. Low-light image enhancement with normalizing flow. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 2604–2612, 2022.

- Wei et al. [2018] Chen Wei, Wenjing Wang, Wenhan Yang, and Jiaying Liu. Deep retinex decomposition for low-light enhancement. In British Machine Vision Conference, page 155, 2018.

- Wu et al. [2022] Wenhui Wu, Jian Weng, Pingping Zhang, Xu Wang, Wenhan Yang, and Jianmin Jiang. URetinex-Net: Retinex-based deep unfolding network for low-light image enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5891–5900, 2022.

- Wu et al. [2021] Xiaomeng Wu, Yongqing Sun, Akisato Kimura, and Kunio Kashino. Reflectance-oriented probabilistic equalization for image enhancement. In IEEE International Conference on Acoustics, Speech and Signal Processing, pages 1835–1839, 2021.

- Xu et al. [2020] Jun Xu, Yingkun Hou, Dongwei Ren, Li Liu, Fan Zhu, Mengyang Yu, Haoqian Wang, and Ling Shao. STAR: A structure and texture aware retinex model. IEEE Trans. Image Process., 29:5022–5037, 2020.

- Xu et al. [2022] Xiaogang Xu, Ruixing Wang, Chi-Wing Fu, and Jiaya Jia. SNR-aware low-light image enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 17693–17703, 2022.

- Yang et al. [2022] Gang Yang, Man Zhou, Keyu Yan, Aiping Liu, Xueyang Fu, and Fan Wang. Memory-augmented deep conditional unfolding network for pansharpening. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1778–1787, 2022.

- Yang et al. [2020] Wenhan Yang, Shiqi Wang, Yuming Fang, Yue Wang, and Jiaying Liu. From fidelity to perceptual quality: A semi-supervised approach for low-light image enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3060–3069, 2020.

- Ye et al. [2021] Junjie Ye, Changhong Fu, Guangze Zheng, Ziang Cao, and Bowen Li. Darklighter: Light up the darkness for UAV tracking. In IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 3079–3085, 2021.

- Ye et al. [2022] Junjie Ye, Changhong Fu, Guangze Zheng, Danda Pani Paudel, and Guang Chen. Unsupervised domain adaptation for nighttime aerial tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 8886–8895, 2022.

- Zamir et al. [2020] Syed Waqas Zamir, Aditya Arora, Salman H. Khan, Munawar Hayat, Fahad Shahbaz Khan, Ming-Hsuan Yang, and Ling Shao. Learning enriched features for real image restoration and enhancement. In Proceedings of the European Conference on Computer Vision, pages 492–511, 2020.

- Zhang et al. [2012] Kaibing Zhang, Xinbo Gao, Dacheng Tao, and Xuelong Li. Single image super-resolution with non-local means and steering kernel regression. IEEE Trans. Image Process., 21(11):4544–4556, 2012.

- Zhang et al. [2015] Kaibing Zhang, Dacheng Tao, Xinbo Gao, Xuelong Li, and Zenggang Xiong. Learning multiple linear mappings for efficient single image super-resolution. IEEE Trans. Image Process., 24(3):846–861, 2015.

- Zhang et al. [2020] Kai Zhang, Luc Van Gool, and Radu Timofte. Deep unfolding network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3214–3223, 2020.

- Zhang et al. [2022a] Kai Zhang, Yawei Li, Jingyun Liang, Jiezhang Cao, Yulun Zhang, Hao Tang, Radu Timofte, and Luc Van Gool. Practical blind denoising via swin-conv-unet and data synthesis. CoRR, abs/2203.13278, 2022a.

- Zhang et al. [2022b] Kai Zhang, Yawei Li, Wangmeng Zuo, Lei Zhang, Luc Van Gool, and Radu Timofte. Plug-and-play image restoration with deep denoiser prior. IEEE Trans. Pattern Anal. Mach. Intell., 44(10):6360–6376, 2022b.

- Zhang et al. [2023] Kaibing Zhang, Cheng Yuan, Jie Li, Xinbo Gao, and Minqi Li. Multi-branch and progressive network for low-light image enhancement. IEEE Trans. Image Process., 32:2295–2308, 2023.

- Zhang et al. [2018a] Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 586–595, 2018a.

- Zhang et al. [2018b] Yulun Zhang, Kunpeng Li, Kai Li, Lichen Wang, Bineng Zhong, and Yun Fu. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision, pages 294–310, 2018b.

- Zhang et al. [2019] Yonghua Zhang, Jiawan Zhang, and Xiaojie Guo. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the ACM International Conference on Multimedia, pages 1632–1640, 2019.

- Zhang et al. [2021] Yonghua Zhang, Xiaojie Guo, Jiayi Ma, Wei Liu, and Jiawan Zhang. Beyond brightening low-light images. Int. J. Comput. Vis., 129(4):1013–1037, 2021.

- Zhang et al. [2022c] Zhao Zhang, Huan Zheng, Richang Hong, Mingliang Xu, Shuicheng Yan, and Meng Wang. Deep color consistent network for low-light image enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1889–1898, 2022c.

- Zhao et al. [2021] Lin Zhao, Shao-Ping Lu, Tao Chen, Zhenglu Yang, and Ariel Shamir. Deep symmetric network for underexposed image enhancement with recurrent attentional learning. In Proceedings of the IEEE International Conference on Computer Vision, pages 12055–12064, 2021.

- Zheng et al. [2021] Chuanjun Zheng, Daming Shi, and Wentian Shi. Adaptive unfolding total variation network for low-light image enhancement. In Proceedings of the IEEE International Conference on Computer Vision, pages 4419–4428, 2021.

- Zhou et al. [2024] Kun Zhou, Xinyu Lin, Wenbo Li, Xiaogang Xu, Yuanhao Cai, Zhonghang Liu, Xiaoguang Han, and Jiangbo Lu. Unveiling advanced frequency disentanglement paradigm for low-light image enhancement. In Proceedings of the European Conference on Computer Vision, pages 204–221, 2024.

Supplementary Material

6 Supplementary materials

6.1 Dataset detail

The LOL-V2 dataset [61] contains 789 pairs of normal/low-light images, of which 689 pairs are used to train the enhancement model and 100 pairs are used as the test set. MEF [35] and DICM [25] are two unpaired datasets without reference images. They comprise 17 and 69 images, respectively, and are commonly used to evaluate the generalization ability of enhancement algorithms. For these unpaired datasets, we use the no-reference evaluator NIQE [38] to assess enhancement quality.

6.2 Ablation study

In PMM, we design a new luminance adjustment Transformer (LAT) and a Wavelet decomposition Transformer (WDT) based on the different characteristics of the two spaces. To analyze their effects, we perform a set of ablation experiments in which each is removed in turn. As listed in Table 1, adding LAT or WDT alone improves PSNR over the baseline by 1.57 dB and 1.72 dB, respectively. LAT and WDT together achieve the best performance.

| LAT | WDT | PSNR | SSIM | LPIPS |
|---|---|---|---|---|
| | | 24.05 | 0.8338 | 0.1426 |
| ✓ | | 25.62 | 0.8415 | 0.1387 |
| | ✓ | 25.77 | 0.8437 | 0.1354 |
| ✓ | ✓ | 26.60 | 0.8552 | 0.1275 |
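
The gains quoted for Table 1 can be checked with a few lines of arithmetic. The sketch below simply copies the PSNR column of the table into a dictionary (the key names are illustrative labels, not identifiers from the authors' code) and subtracts the baseline value.

```python
# PSNR values (dB) copied from Table 1; the keys are illustrative labels only.
psnr_table1 = {
    "baseline": 24.05,   # neither LAT nor WDT
    "LAT only": 25.62,
    "WDT only": 25.77,
    "LAT + WDT": 26.60,
}

baseline = psnr_table1["baseline"]

# Gain of each configuration over the baseline, rounded to two decimals
# to match the precision reported in the table.
gains = {
    name: round(value - baseline, 2)
    for name, value in psnr_table1.items()
    if name != "baseline"
}

for name, gain in gains.items():
    print(f"{name}: +{gain:.2f} dB over baseline")
```

Under these numbers, the combined configuration gains 2.55 dB, which is less than the sum of the two individual gains (3.29 dB), so the two blocks appear to be partly overlapping rather than purely additive in their effect.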

In addition, we study the effect of SAM and the high-way feature path. As shown in Table 2, PSNR drops by more than 2 dB when either SAM or the high-way feature path is removed. This demonstrates the importance of SAM and the high-way feature path for space prior combination and feature flow.

| SAM | feature path | PSNR | SSIM | LPIPS |
|---|---|---|---|---|
| ✓ | | 24.36 | 0.8332 | 0.1410 |
| | ✓ | 24.32 | 0.8463 | 0.1366 |
| ✓ | ✓ | 26.60 | 0.8552 | 0.1275 |

6.3 Comparisons

We compare our results with ten methods, namely SRIE [15], LIME [19], EnlightenGAN [23], Zero-DCE [17], URetinex [56], SNRANet [59], UHDFour [26], RetinexFormer [5], LLDiffusion [52], and ACCA [78], on the LOL-V2 dataset. As shown in Table 3, our method outperforms all compared methods in both PSNR and SSIM. Visual comparisons are shown in Fig. 1. LIME and URetinex over-enhance the low-light images, whereas Zero-DCE produces under-enhanced results. UHDFour exhibits a color cast in the first-row image. Our method yields visually pleasing results, which demonstrates the advantages of WDT and LAT.

| Methods | SRIE | LIME | En-GAN | Zero-DCE | URetinex | SNRANet | UHDFour | RetinexFormer | LLDiffusion | ACCA | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PSNR | 14.45 | 15.24 | 18.64 | 18.06 | 19.78 | 21.41 | 21.78 | 22.80 | 23.16 | 23.80 | 27.64 |
| SSIM | 0.5240 | 0.4151 | 0.6767 | 0.5795 | 0.8427 | 0.8475 | 0.8542 | 0.8400 | 0.8420 | 0.8290 | 0.8659 |
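
For readers unfamiliar with the PSNR figures in Table 3, the following is a minimal sketch of the standard PSNR definition, 10·log10(peak² / MSE). It is not the authors' evaluation code: the function name `psnr`, the flat-list image representation, and the assumption that pixel values lie in [0, 1] are all illustrative choices, and the text does not state which color space or peak value was used for the reported numbers.

```python
import math


def psnr(reference, enhanced, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized flat pixel lists.

    `peak` is the maximum possible pixel value (assumed 1.0 for normalized images).
    """
    if len(reference) != len(enhanced) or not reference:
        raise ValueError("inputs must be non-empty and have the same length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, enhanced)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Toy example with four pixels; two of them differ by 0.1.
reference = [0.2, 0.5, 0.8, 1.0]
enhanced = [0.1, 0.5, 0.9, 1.0]
print(f"PSNR = {psnr(reference, enhanced):.2f} dB")
```

Because PSNR is logarithmic in the mean squared error, the gap of roughly 3.8 dB between the best competing method and ours in Table 3 corresponds to a reduction of the MSE by a factor of about 2.4.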

In addition, we compare our results with SRIE [15], Zero-DCE [17], RetinexNet [55], KinD [73], MIRNet [64], Bread [18], and LLFormer [53] on the MEF and DICM datasets. As shown in Table 4, our method obtains the best (lowest) NIQE score on both datasets. Visual comparisons are shown in Figs. 2 and 3. RetinexNet produces unnatural-looking results, KinD yields under-enhanced results, MIRNet over-enhances the low-light images, and LLFormer produces checkerboard artifacts. In contrast, our method yields visually convincing enhanced images.

| Datasets | SRIE | Zero-DCE | RetinexNet | KinD | MIRNet | Bread | LLFormer | Ours |
|---|---|---|---|---|---|---|---|---|
| MEF | 3.2041 | 3.3088 | 4.9043 | 3.5598 | 3.1915 | 3.5677 | 3.2847 | 3.0358 |
| DICM | 3.3657 | 3.0973 | 4.3143 | 3.5135 | 3.1533 | 3.4063 | 3.5154 | 2.9591 |
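
Since NIQE is a no-reference metric for which lower scores indicate better perceptual quality, the ranking in Table 4 can be read off by sorting each row in ascending order. The short sketch below does this with the values copied from the table; the dictionary layout and variable names are illustrative only.

```python
# NIQE scores copied from Table 4 (lower is better).
niqe = {
    "MEF": {
        "SRIE": 3.2041, "Zero-DCE": 3.3088, "RetinexNet": 4.9043, "KinD": 3.5598,
        "MIRNet": 3.1915, "Bread": 3.5677, "LLFormer": 3.2847, "Ours": 3.0358,
    },
    "DICM": {
        "SRIE": 3.3657, "Zero-DCE": 3.0973, "RetinexNet": 4.3143, "KinD": 3.5135,
        "MIRNet": 3.1533, "Bread": 3.4063, "LLFormer": 3.5154, "Ours": 2.9591,
    },
}

# Rank the methods on each dataset from best (lowest NIQE) to worst.
rankings = {
    dataset: sorted(scores, key=scores.get)
    for dataset, scores in niqe.items()
}

for dataset, ranked in rankings.items():
    best, runner_up = ranked[0], ranked[1]
    margin = niqe[dataset][runner_up] - niqe[dataset][best]
    print(f"{dataset}: best = {best}, runner-up = {runner_up}, margin = {margin:.4f}")
```

On these numbers the runner-up differs between the two datasets (MIRNet on MEF, Zero-DCE on DICM), while our method ranks first on both.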