Dual-CBA: Improving Online Continual Learning

via Dual Continual Bias Adaptors

from a Bi-level Optimization Perspective

Abstract

In online continual learning (CL), models trained on changing distributions easily forget previously learned knowledge and bias toward newly received tasks. To address this issue, we present Continual Bias Adaptor (CBA), a bi-level framework that augments the classification network to adapt to catastrophic distribution shifts during training, enabling the network to achieve a stable consolidation of all seen tasks. However, the CBA module adjusts distribution shifts in a class-specific manner, exacerbating the stability gap issue and, to some extent, fails to meet the need for continual testing in online CL. To mitigate this challenge, we further propose a novel class-agnostic CBA module that separately aggregates the posterior probabilities of classes from new and old tasks, and applies a stable adjustment to the resulting posterior probabilities. We combine the two kinds of CBA modules into a unified Dual-CBA module, which thus is capable of adapting to catastrophic distribution shifts and simultaneously meets the real-time testing requirements of online CL. Besides, we propose Incremental Batch Normalization (IBN), a tailored BN module to re-estimate its population statistics for alleviating the feature bias arising from the inner loop optimization problem of our bi-level framework. To validate the effectiveness of the proposed method, we theoretically provide some insights into how it mitigates catastrophic distribution shifts, and empirically demonstrate its superiority through extensive experiments based on four rehearsal-based baselines and three public continual learning benchmarks.

Index Terms:

Online continual learning, bi-level optimization, task-recency bias, stability gap, batch normalization.I Introduction

Continual learning (CL) [1, 2] aims to develop models that can accumulate new knowledge while consolidating previously learned knowledge from streaming data. In the context of CL, the data distribution of streaming tasks is in general non-stationary and changes over time, which violates the independent and identically distributed (i.i.d) assumption commonly adopted in traditional machine learning. Therefore, continual learning suffers from catastrophic forgetting problem [3], where the model severely forgets the previously learned knowledge after being trained on a new task.

Traditional offline CL stores all training batches of the current task and the model is trained on these samples for multiple epochs to achieve relatively superior performance. However, the availability of previously learned batches might be restricted due to privacy concerns [4] or memory limitations. In this paper, we mainly focus on online CL [5], a more challenging and realistic setting. In online CL, samples from each task can be trained in only a single-pass (i.e., one epoch), and previous batches are not accessible in the future.

Unlike traditional CL settings, the training data distribution in online CL continuously changes throughout the entire training process. Consequently, online CL often leads to more severe distribution shifts, further exacerbating catastrophic forgetting. To alleviate this problem, rehearsal-based methods [6, 7, 8, 2] employed a small memory buffer to store the examples of previous tasks, aiming at approximating the entire data distribution of all seen tasks. Even though these rehearsal-based methods have achieved sound performance in online CL, most of them often suffer from task-recency bias [9], i.e., the classifiers tend to classify samples into the classes that are currently being trained. Consequently, some previous works aim to improve the original linear classifier [10, 11, 12] or replace it directly with the nearest classifier [9, 6] to mitigate the negative effects of class imbalance between currently received classes and replayed classes. Despite the promising performance, almost all of these methods implicitly view task-recency bias as a label distribution shift and tackle it from the perspective of class imbalance, which makes these methods sub-optimal in practice [13].

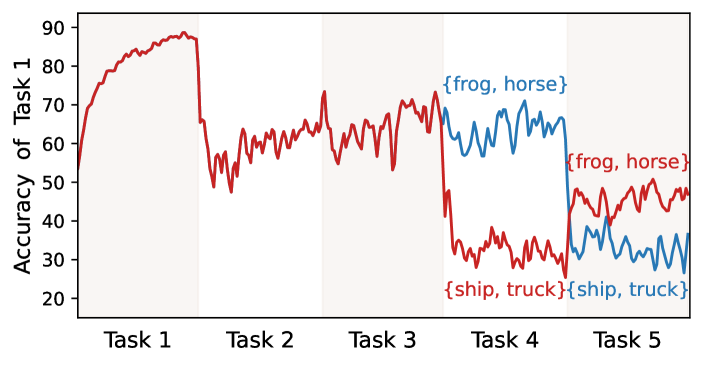

From the Bayes viewpoint, the target of online CL is to accomplish a stable consolidation of knowledge for all tasks by fitting the posterior probability , where and represent stochastic variables of the input data and the corresponding label, respectively. According to Bayes’s rule , any shift from the prior probability or the likelihood will lead to distribution change in . For example, as previous works point out [10, 11, 12, 13], incoming new tasks can change the label distribution , leading to severe forgetting. On the other hand, can also suffer from catastrophic shifts (dubbed feature distribution shift for simplicity) due to the time-varying data streams, especially in online continual learning (CL). To illustrate the feature distribution shift, we conducted a toy experiment using Experience Replay (ER) [14] on CIFAR-10 [15]. As shown in Fig. 1, we exchange the incoming classes of the 4th and 5th tasks while maintaining the label distribution unchanged, as plotted in red and blue lines, respectively. By continuously tracking the accuracy of the 1st task, we observe a significant difference in its final accuracy. This validates the existence of feature distribution shifts (i.e., changes in ) and highlights a challenging problem for online continual learning (CL): how to achieve stable consolidation of past knowledge amidst these distribution shifts.

To tackle these challenges, we introduce the Continual Bias Adaptor (CBA) module to directly adapt the posterior distribution shift online in our conference version [16]. This module aids the original classification network in aligning with the evolving posterior distribution, thereby facilitating stable knowledge consolidation across all seen tasks. To jointly optimize the classification network and the CBA module, we propose a bi-level optimization framework, in which the inner loop problem optimizes the classification network by rehearsal-based methods with the help of CBA, and the outer loop optimizes CBA to assimilate the training bias in continual learning. Specifically, the CBA module in [16] is designed as a lightweight neural network such as a multi-layer perceptron (MLP), which maps the posterior distribution predicted by the classification network to the corresponding adapted posterior probability in a class-specific manner. For clarity, we refer to the CBA module proposed in the conference version as the class-specific CBA in the subsequent sections.

Albeit fast adaptation to drastic posterior distribution change, the class-specific CBA module suffers from a serious stability gap problem [17], where the performance of the previously learned tasks significantly drops upon starting to learn a new task, followed by a fast recovery phase. To illustrate this phenomenon, we compare the accuracy curve of the baseline model ER with that of the class-specific CBA (ER-CBA) on CIFAR-100 in Fig. 2. It can be observed that ER-CBA achieves higher performance at the end of each task training, but it suffers a more substantial degradation than ER upon starting to train a new task. This indicates that the class-specific CBA cannot immediately adapt to the catastrophic posterior distribution change caused by incoming new tasks, thus compromising the need for continuous performance evaluation at any time in online CL. This paper reveals that this serious stability gap arises from the class-specific CBA’s input, specifically the posterior distribution predicted by the classification network, which changes dramatically upon starting to learn a new task or at the task transition timestamp. Thus, the class-specific CBA module may produce incorrect adjustments for the classification network, resulting in a substantial stability gap.

To mitigate the stability gap problem, we propose a novel class-agnostic CBA designed to capture the stable relationship between the new task and all previously learned tasks in the continual learning process. The proposed class-agnostic CBA aggregates the overall posterior probabilities of the new task and those of all the old tasks separately, subsequently yielding stable corrections for the resulting posterior distribution. As a robust and transferable bias adaptor, the class-agnostic CBA module can effectively adapt to sudden changes in posterior distributions and thus can mitigate the stability gap problem. To further leverage the advantages of both the class-agnostic CBA and the class-specific CBA, we integrate these two modules into a unified Dual-CBA. This integration allows the classification network to effectively fit the implicit posterior distribution while maintaining stable knowledge consolidation from all seen tasks throughout the entire continual learning process, as shown in Fig. 2.

Furthermore, we found that the bi-level framework proposed in [16] encounters a feature bias challenge. This challenge arises from the commonly used batch normalization (BN) [18], which induces feature deviations during the testing stage. Specifically, the population statistics of BN are only updated in the inner loop together with the classification network parameter according to the samples from the new task and those saved in the memory buffer. This causes the exponential moving average (EMA) of BN used for testing to be gradually dominated by the new task because most of the training data in the inner loop comes from the new tasks, leading to biased population statistics. Unfortunately, this bias is not fed back into the posterior distribution, making it challenging for the proposed Dual-CBA to adapt accordingly.

To address this problem, we propose Incremental BN (IBN), a simple yet effective method to mitigate the aforementioned feature bias. IBN stops the updating of EMA population statistics of BN in the inner loop and instead replaces them with statistics calculated from the outer loop. This strategy yields a balanced population estimation for the classification model and effectively assimilates the feature bias for the testing stage. For a simple implementation, we can only update the population statistics before the testing stage with the memory buffer data, which shows significant performance improvement for the proposed bi-level learning algorithm.

In a nutshell, building on the class-specific CBA published in our conference version [16], this paper extends it from several perspectives. The main contributions are summarized as follows,

-

•

We shed light on certain limitations of our previously designed class-specific CBA module. On this basis, we propose the class-agnostic CBA and combine it with the class-specific CBA to form a novel Dual-CBA, which can effectively assimilate the training bias and alleviate the stability gap issue in continual learning.

-

•

Based on the proposed bi-level learning framework, we propose Incremental BN to estimate more accurate BN statistics with a simple implementation, assimilating the feature bias during testing.

-

•

Theoretically, we explain how the proposed method adapts to distribution shifts and alleviates forgetting in CL from the perspective of gradient alignment. Additionally, we provide insights into the optimization process of the bi-level learning framework from a linear formulation.

-

•

We conduct extensive experiments to demonstrate that our method consistently improves upon various rehearsal baselines across multiple continual learning (CL) settings. Specifically, we extend Dual-CBA to a semi-supervised continual learning setting and further validate its generalization ability.

-

•

We highlight the strong transferability of the proposed class-agnostic CBA module to unseen tasks or datasets when pre-trained on a limited number of tasks. Concretely, we demonstrate that a pre-trained module can be directly applied to various intra-dataset and inter-dataset scenarios.

The paper is organized as follows. Sec. II discusses related works. Sec. III introduces the proposed Dual-CBA method in detail. Sec. V present extensive experiments to evaluate our method, and the conclusions are summarized in Sec. VI.

II Related Works

Continual learning settings. Based on different task construction manners, continual learning (CL) mainly falls into three categories [1, 2, 19]: Task-incremental learning (Task-IL), Domain-incremental learning (Domain-IL), and Class-incremental learning (Class-IL). Specifically, Task IL necessitates prior knowledge of the task index in both the training and testing stages. Domain-IL mainly focuses on concept drift, where the domain of each task changes while the label space remains unchanged [20, 21, 22]. This paper concentrates on the more challenging Class-IL, where the task index is unavailable during testing [7, 23, 6, 24]. Additionally, from the training perspective, CL can be divided into offline and online CL. Offline CL involves preserving all samples of the current task and training the model on them across multiple epochs [25, 7, 26, 27, 6]. As for online CL, samples of the current task arrive sequentially, which cannot be stored entirely, and each sample is typically seen once, except when stored in the memory buffer [28, 5]. In this paper, we mainly focus on online CL, which represents a more demanding and realistic setting compared to offline CL. Furthermore, since it is expensive to obtain a large amount of labeled data, many works focus on certain weakly supervised scenarios, such as few-shot [29, 30, 31], semi-supervised [32, 33, 34], and imbalance [35, 36], etc. To demonstrate the flexibility of our approach, we also extend our method to the semi-supervised continual learning setting.

Rehearsal-based methods in online CL. In online CL, the main objective is to make the model quickly acquire new knowledge from a new task while retaining previously learned knowledge from old tasks [13, 37, 38, 39, 40, 28, 41]. A commonly used baseline, Experience replay (ER), trains the incoming new samples along with old samples from the memory buffer together. The variants of ER attempt to employ different techniques in the replay strategy, such as knowledge distillation and random augmentation. For example, DER++ [7] utilized a stronger distillation method to further replay logits of the memory buffer data and Mnemonics [42] applied bi-level optimization to distillate global information of all seen examples into a few learnable ones. RAR [8] adopted random augmentation to alleviate overfitting of the memory buffer, while CLSER [25] constructed plastic and stable models to consolidate recent and structural knowledge distillation. Different from these methods, some studies emphasize maximizing the utility and benefits of the memory buffer samples [43, 44, 45, 46, 47, 42]. For example, instead of randomly sampling, GSS [43] selected the samples stored in the memory buffer according to the cosine similarity of gradients, MIR [44] chose maximally interfering samples whose prediction will be most negatively impacted by the foreseen parameters update, and OCS [45] picked the most representative data of the current task while minimizing interference with previous tasks. Unlike these methods, our method focuses on alleviating distribution shifts and can plug in most current rehearsal-based approaches.

Task-recency bias in CL. Task-recency bias [11, 2] in online CL refers to the tendency of classifiers to mistakenly classify examples belonging to previously learned classes as newly received ones. Typically, the linear classifier is susceptible to task-recency bias. To address this, iCaRL [6] proposed to replace the linear classifier with nearest class mean (NCM) classifiers. Similarly, SCR [9] and Co2L [48] employed the NCM classifier, where the feature extractor was trained using a contrastive learning paradigm. A wide range of works tackles the task-recency bias as a class imbalance problem [10, 26, 11, 12, 49]. For example, LUCIR [11] introduced weight normalization to the linear classifier, BiC [12] proposed a bias correction layer turned on a held-out validation set, and SS-IL [10] modified the softmax to mitigate the imbalanced penalization for the outputs of old classes. On the flip side, ER-ACE [13] pointed out that task-recency bias can also arise from feature interference and designed an asymmetric loss to address this problem. Different from these methods, our proposed method relaxes the assumption on label/feature distribution shift by directly modeling the posterior distribution shift. Besides, some methods addressing class imbalance may potentially be explored for tackling task-recency bias in online CL [50, 51, 52, 53]. However, many of these approaches face challenges in generalization to CL due to unstable data distribution.

Normalization Layer in CL. The normalization layer is a crucial component of deep neural networks. Batch Normalization (BN) [18] has shown a strong performance and become the most commonly used normalization strategy in single-task scenarios with fixed data distribution [54, 55]. However, BN may hinder the continual learning performance because of evolving data distributions [56, 57, 58]. To address this, Continual Normalization (CN) [56] reduced the cross-task differences by Group Normalization [59] and subsequently utilized BN to normalize the input features. BNT [60] constructed a balanced batch to update BN statistics. TBBN [57] addressed the imbalance problem in BN by employing reshape and repeat operations to construct a task-balanced batch during training. Task-Specific BN [61] focused on task-IL and applied specific BN statistics for each task. Besides, AdaB2N [58] leveraged a regularization term to optimize a modified momentum to balance BN statistics. Different from these works, our proposed IBN is designed to address the feature bias that arises from the bi-level optimization used in this paper.

III Method

III-A Preliminaries of Continual Learning

In continual learning, the model is trained on a stream of tasks with evolving data distribution. Considering sequential tasks , each task can be represented as , where is the total number of training samples in task . For the online CL setting, the classification model trains each new sample only once, and the previously seen batches are almost inaccessible. Let represent the current learning task, and denotes a small memory buffer that stores the samples of previous tasks . Here we take a widely used rehearsal-based method, Experience Replay (ER) [14, 62], as an example. ER jointly trains current task with examples sampled from the buffer . The training objective function is

| (1) |

where the training batch consists of a batch of incoming new samples and a batch of samples from the memory buffer , and denotes the cross-entropy loss. Note that the memory buffer is updated by reservoir sampling after training each batch , which is a relatively balanced set containing samples of all seen tasks.

III-B Framework Formulation

In this study, we focus on mitigating the issue of catastrophic forgetting of rehearsal-based models with an online CL scenario. As previously mentioned, existing rehearsal-based models often struggle with catastrophic distribution changes caused by dynamic data streams over time. To tackle this challenge, unlike prior methods that regard shifts in label or feature distributions, we suggest directly modeling the catastrophic distribution change for posterior probability , enabling the original classification model to learn a stable knowledge consolidation for all previous tasks.

The main methodology involves the design of a Continual Bias Adaptor (CBA) denoted as , which serves two key purposes: 1) Dynamically augmenting the classification network to produce more diverse posterior distributions by adjusting the parameters of (where can be regarded as hyper-parameters), aiming to address the catastrophic posterior change. 2) Guiding the original classification model to fit an implicit posterior that tends to achieve a stable consolidation of knowledge from previously learned tasks. In summary, during the training stage, for a given training example , its posterior probability is modified by the augmented classification network in an online CL manner, which can be formulated as

| (2) |

where is the function composition operator and is a lightweight network. The augmented classification network is firstly updated to learn new knowledge from the training data that minimizes the rehearsal-based empirical risk, i.e.,

| (3) |

where is a hyper-parameter of the optimal . Note that different rehearsal-based loss functions can be used as the training loss . Here we only take ER as an example for simplicity and more details can be found in Appendix D.

The ultimate objective of our method is to protect the original classification network from catastrophic distribution shift while achieving a stable knowledge consolidation across different tasks. To this end, we further keep tracking the performance of the classification network to prevent catastrophic forgetting, which requires that , obtained by minimizing the rehearsal-based empirical risk Eq. (3), maximizes the performance of all previously seen data. However, accessing all of this historical data is unfeasible in CL, we approximate it by the empirical risk over the memory buffer data, i.e.,

| (4) |

This objective function aims to find the optimal CBA such that the optimized classification network performs well on the memory buffer data, which acts as a stable consolidation of knowledge from the learned tasks.

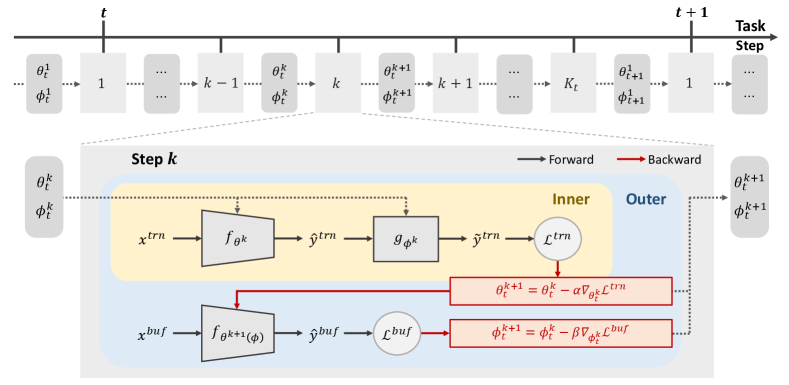

Indeed, Eq. (3) and Eq. (4) formulate a bi-level learning framework and the main flowchart is illustrated in Fig. 3. In the inner loop Eq. (3), the classification network is updated to learn new knowledge and rehearse old knowledge from with the help of the CBA module. In the outer loop Eq. (4), the CBA are updated from to consolidate the previously learned knowledge against catastrophic posterior change. In subsequent sections, we elaborate on the proposed CBA module and bi-level optimization algorithm in detail:

The Learning of CBA. For the bi-level optimization framework Eq. (3) and (4), there is no closed-form solution, especially in the deep learning field [63]. That is because the optimum of and are nested with each other. Therefore, we approximately update and using a gradient-based optimization method following [64, 65].

(1) Update . Given the CBA parameter at iteration step , we update the classification network parameter in Eq. (3) by one-step stochastic gradient descent (SGD):

| (5) |

where is the inner-loop learning rate.

(2) Update . With the one-step updated , a function of , we further optimize in Eq. (4) as follows:

| (6) |

where is the outer-loop learning rate. Note that in Eq. (6) involves a second-order derivative, which can be easily implemented by automatic differentiation systems such as Pytorch [66]. The detailed calculations are provided in Appendix A. Additionally, to alleviate the calculation burden of this second-order derivation, we assume that only depends on the last linear layer of the classification network. Consequently, we only need to unroll the second-order derivation of the linear classification layer in Eq. (6). As the linear layer typically comprises a small number of parameters compared to the entire classification network, our proposed algorithm is more efficient than other bi-level optimization algorithms [67, 68, 64, 65]. Please refer to Appendix H for a comprehensive discussion on computation and GPU memory utilization. The complete training process is detailed in Alg. 1.

III-C Design of Dual-CBA

In this subsection, we delve into the architectural design of the CBA module , which plays a vital role in adapting to catastrophic posterior changes in continual learning.

Class-specific CBA. An intuitive design for the CBA module is to element-wisely adjust the posterior distribution output by the original classification network , where denotes the set of all seen classes. This adjustment essentially defines a mapping from to a corresponding adapted posterior distribution . To achieve this, we use a multi-layer perceptron (MLP) network parameterized by as a part of the CBA module, which can be formulated as:

| (7) |

where and . Such a class-specific CBA is known as a universal approximator, capable of fitting almost any continuous function [69] and thus can adapt to various posterior distribution changes. Importantly, our conference version [16] has validated the effectiveness of the class-specific CBA on assimilating task-recency bias upon the rehearsal-based CL methods.

Class-agnostic CBA. Albeit fast adaptation to drastic posterior distribution changes, the proposed class-specific CBA module suffers from a substantial stability gap, where performance significantly drops upon starting to learn new tasks, followed by a fast recovery phase. This to some extent does not meet the need for online CL to conduct performance evaluations at any time. Thus, a natural question is: what causes the class-specific CBA to aggregate the stability gap problem, and how can this challenge be effectively addressed?

The primary reason is that the class-specific CBA cannot adapt to the abrupt change in the posterior distribution upon starting to learn a new task. To investigate this, we visualize the difference between the posterior probability predicted by the classification network before and after the task transition timestamp in Fig. 4. We can see that the classification network produces a relatively high posterior probability for the 85th class, a new class introduced in task 9. However, when task 10 comes in, the probability of the 85th class is significantly lower than before. Since the posterior probability predicted by the classification network is the input of the class-specific CBA, the corruption of these inputs for task 9 hinders the class-specific CBA from appropriately adjusting its posterior probabilities.

The second reason is that the class-specific CBA cannot immediately adjust the posterior probability of the classes in the new task. When a new task comes in, the class-specific CBA fails to accurately accommodate the posterior probability of the new task because it has not yet encountered the incoming new classes. As shown in Fig. 4, the arrival of task 10 leads to a high posterior probability for its own classes, such as the 95th class. This indicates that the class-specific CBA fails to control the increasing probability of the new task.

To address the aforementioned challenges, instead of directly adjusting the posterior probability in a class-specific manner, we resort to modeling a stable relationship between new and old tasks to adapt to the posterior distribution shift while avoiding a significant stability gap upon starting to learn new tasks. Let and denote the class sets of the new task and old tasks, respectively. Our main idea is to adjust the posterior probability of the new task and that of all the old tasks , given their relatively stable numerical relationship throughout the continual learning process as shown in Fig. 5. To achieve this, we design a novel class-agnostic CBA parameterized by as follows:

| (8) |

Note that the class-agnostic CBA treats the posterior probabilities of the classes in the new task as a whole, and does the same for the old task. Then, for a specific class, the adjustment of its posterior probability is formulated by averaging or , and it can be formulated as

| (9) |

and the final adapted posterior probabilities are given by .

The proposed class-agnostic CBA module serves as a robust and transferable bias adaptor, effectively adapting to sudden changes in posterior distributions after training on a few pairs of new and old tasks. Specifically, we can analyze its adaptation mechanisms for both the preceding old task and the incoming new task. We assume that the task transition timestamp is , and we have

-

•

For the incoming -th new task, the class-agnostic CBA module has processed pairs of old and new tasks, and its current weights can produce a valuable initialization or experience learned from previous data. Therefore, the class-agnostic CBA can quickly adapt to new tasks.

-

•

For the -th task, the class-agnostic CBA module naturally treats it as an old task at the timestamp. Similarly, CBA leverages the experience learned from previous old tasks to adjust its posterior distribution. This robust initialization makes class-agnostic CBA effectively adapt to the changed posterior distribution.

In summary, the proposed class-agnostic CBA can produce a robust initialization for both the -th new task and the -th old task, ensuring to adapt to the suddenly changed posterior distribution quickly. Consequently, the class-agnostic CBA can help the classification model learn the stable posterior distribution and mitigate the stability gap immediately upon starting to learn a new task.

Dual-CBA. For the class-agnostic CBA, we treat all classes equally. However, it is essential to model the discrepancy between classes in the CL process. To capture both the stable relationship between new and old tasks and the refined class-wise relationship, we integrate the class-agnostic CBA and the class-specific CBA into a unified module (referred to as Dual-CBA) with denoting its parameters. Specifically, we average and as the final adapted posterior distribution , that is,

| (10) |

Since the class-specific CBA may aggregate the stability gap as aforementioned, we reinitialize the class-specific CBA modules to avoid incorrect adjustments when a new task arrives. This enables our method to be inferred at any time in the learning process of online CL [28] without being disturbed by the stability gap problem, which will be validated through extensive experiments in Sec. V-F and Appendix F.

Note that the proposed Dual-CBA module is only used in the training stage. In the test stage, the test sample is predicted by , that is . This indicates that our method does not introduce any calculation overhead in the test stage.

Input: new incoming sample batch , memory buffer

Output: optimized classification network parameter and Dual-CBA parameter

Input: testing dataset , memory buffer , optimized classification network parameter

Output: predicted results

III-D Incremental Batch Normalization

In this subsection, we further address the feature bias introduced during the training stage by the commonly used BN [18] of our proposed bi-level framework. Specifically, BN calculates batch statistics of each feature map for normalization during training, while using population statistics estimated by an exponential moving average (EMA) during testing. In our proposed bi-level optimization framework in the conference version, the population statistics of BN are only updated in the inner loop together with the classification network parameters . Unfortunately, these population statistics estimated by EMA in the inner loop are seriously biased toward the current task because the training data are dominated by new tasks, leading to a biased feature extraction during testing. For simplicity, we take the population mean used for testing at task as an example. Specifically, we denote it as , and it is updated using an EMA scheme as follows:

| (11) |

where is the feature mean of the -th batch of training data at task . Then, we have

| (12) |

where is initialized as the population mean calculated at the final of the last task . It can be observed that encodes the feature mean of previous tasks and the corresponding weight is exponentially decreasing as current task training progresses. Additionally, the batch mean is dominated by the current new task data because the number of the memory buffer samples is much smaller than that of incoming new samples. Consequently, the population mean updated by EMA in the inner loop is seriously biased towards new tasks, where the population variance has the same tendency.

Note that this feature bias is not reflected in the posterior distribution during training, where the proposed Dual-CBA module cannot correct the biased feature. To achieve unbiased estimations of these population statistics, we should estimate them on the seen data from all tasks, which is unrealistic in continual learning. Therefore, from the bi-level optimization perspective, an intuitive idea is to update these population statistics by the balanced buffer data set used in the outer loop. Intrinsically, the memory buffer obtained via reservoir sampling is an excellent approximation of the entire data distribution for all seen tasks in an ideal case. Based on this principle, we propose a straightforward yet effective method to provide approximately unbiased population statistics in continual learning, termed Incremental Batch Normalization (IBN). Specifically, we stop the EMA updating of BN statistics in the inner loop optimization and estimate unbiased population statistics using the memory buffer in the outer loop optimization. For the implementation of IBN, we further refine the process by replacing the update of population statistics during the training stage with estimations based on balanced buffer data before the testing stage.

This procedure does not interfere with the optimization of our bi-level learning framework, which can effectively assimilate the feature discrepancy of the classification model during inference by estimating more accurate population statistics in BN for all seen tasks. Specifically, the population statistics estimated by IBN can be formulated as follows:

| (13) |

where is the feature mean of the -th batch of memory buffer samples at task , and is the total number of moving average. The implementation of IBN is simple as it only requires forward propagation of the small balanced buffer data without any back-propagation, and the population statistics can be updated by EMA automatically, which does not introduce too much calculation burden.

IV Theoretical Analysis

In this section, we provide a theoretical analysis of our method. Firstly, we explain how our bi-level optimization method effectively prevents forgetting from the perspective of gradient alignment. Then we illustrate the general intuition by a linear formulation of the bi-level optimization framework.

IV-A Gradient Alignment in Dual-CBA

The following theorem reveals that the proposed bi-level optimization inherently establishes gradient alignment between the loss on the training set and the memory buffer .

Theorem 1

Let and denote the gradients of the outer-loop and inner-loop losses with respect to the classification model parameter , respectively. If the outer-loop loss is gradient Lipschitz continuous, then the bi-level optimization Eq. (3) and (4) potentially guarantees an alignment between and , that is

| (14) |

where is the inner-loop learning rate and is the Lipschitz constant.

The detailed proof of Theorem 1 is shown in Appendix B. This theorem demonstrates that optimizing our proposed bi-level framework Eq. (3) and (4) encourages the angle between the gradients and to be as small as possible, which reveals two insights into our algorithm. On the one hand, this theorem indicates that our approach will push the gradients of the classification network on the training data (with the Dual-CBA module) to those on the buffer data (without the Dual-CBA module). This property potentially encourages the Dual-CBA module to mitigate the training bias present in the training data. That is why our algorithm can effectively mitigate the task-recency bias in the CL process. On the other hand, this theorem shows an alignment between the gradients of the classification network on the training data and buffer data, which regularizes the updating of the classification network on the new task and ensures it does not deviate from the previous updating direction too much. This gradient alignment can effectively alleviate forgetting in CL, which has been demonstrated in many previous gradient-alignment-based methods [70, 71]. However, these works calculate the classification network gradient on training data without accounting for task-recency bias, where the network may seriously bias to new tasks, resulting in an imprecise gradient alignment. In contrast, our proposed Dual-CBA method mitigates this bias, ensuring more accurate gradient alignment and improved performance.

IV-B Closed-form Solution of Linear Dual-CBA

We herein consider a convex model to delve into the insight of our proposed Dual-CBA model. Specifically, we reformulate Dual-CBA as a linear model where the origin and augmented classification networks are represented as:

| (15) |

where is the input data and denote outputs of the original and augmented classification networks, respectively. The parameters of the classification model are and the Dual-CBA module is represented as , where we omit the notation of for clarity. Additionally, we assume the Gaussian feature and noise of this linear model following [72] with denoting the noise vector.

We consider the mean square error (MSE) loss as the convex objective function. In this case, the proposed bi-level optimization framework can be represented as follows:

| (16) |

Note that is the weight matrix and represents a function of . Thanks to the excellent properties of convex optimization, we can get the closed-form solution of this bi-level optimization framework, which can be summarized as follows:

Theorem 2

Let and . If the parameter of CBA is not singular, then the closed-form solution of the linear bi-level optimization Eq. (16) can be represented as

| (17) |

The proof of this theorem can be found in the Appendix B. It can be observed that the optimal solution of in Eq. (17) actually measures the discrepancy between and . Intuitively, if we multiply these two terms by on the left of the two sides of this equation, we can find that this discrepancy depends on the difference between and , which are the optimal solutions of the linear model on the buffer data and that on the training data , respectively. Therefore, the proposed Dual-CBA module captures the relationship between the optimal linear solutions on training data and buffer data, which can effectively feedback to the optimization of the classification network. Specifically, the optimal regularizes the optimal solution of by modifying the optimal linear solution on the training data , ensuring the classification network pay more attention to the balanced buffer data rather than only overfit on the current training data .

V Experiments

To validate the effectiveness of the proposed method, we compare our Dual-CBA to multiple approaches on various datasets under online CL, semi-supervised CL, blurry tasks, and offline CL settings. We also conduct extensive ablation experiments to analyze different components of our approach.

V-A Experiment Settings

Experimental datasets. Following [7], we experiment with three widely-used datasets: Split CIFAR-10 [15], Split CIFAR-100 and Split Tiny-ImageNet [73]. Concretely, Split CIFAR-10 contains five binary classification tasks, which are constructed by evenly splitting ten classes of CIFAR-10. Split CIFAR-100 and Split Tiny-ImageNet both have longer task sequences with each comprising ten disjoint tasks. Specifically, Split CIFAR-100 includes ten tasks with 10 classes each, while Split Tiny-ImageNet includes ten tasks with 20 classes each (see Appendix D for details of the three datasets).

Evaluation metrics. To comprehensively evaluate all comparison methods, we consider the following metrics:

-

•

Average Accuracy (ACC ): This metric calculates the average accuracy of the model trained on all tasks, i.e. , where represents the accuracy of the task after training on the task . indicates that a higher ACC value corresponds to better performance.

-

•

Forgetting Measure (FM ): This metric averages the differences between the best accuracy and the final accuracy, i.e. , where the is the best accuracy of task in the whole training process. indicates that a lower FM value corresponds to better performance.

-

•

Area Under the Curve of Accuracy (): This metric is the area under the curve of the accuracy [28], i.e., , where represent the average accuracy when the model training at step , and is the interval training step which is 5 for faster evaluation in our experiments. indicates that a higher value corresponds to better performance.

Implementation details. We adopt the commonly used ResNet-18 [74] as our backbone [25, 7, 12], and train all methods using the Stochastic Gradient Descent (SGD) optimizer. We use Adam [75] to optimize the proposed Dual-CBA module and set the learning rate as 0.001 for Split CIFAR-10, and 0.01 for Split CIFAR-100 and Split Tiny-ImageNet. To reduce the variability in experimental results, each reported result in the online CL setting is averaged over 10 repeated runs, and each result in the offline CL is averaged over 5 runs. More details about the baselines and implementations are listed in Appendix D.

| Split CIFAR-10 | Split CIFAR-100 | Split Tiny-ImageNet | ||||||||||

| M=0.2k | M=0.5k | M=2k | M=5k | M=2k | M=5k | |||||||

| Method | ACC | FM | ACC | FM | ACC | FM | ACC | FM | ACC | FM | ACC | FM |

| iCaRL [6] | 40.99 | 26.84 | 44.5 | 24.87 | 9.13 | 7.79 | 9.13 | 8.14 | 4.03 | 4.93 | 4.03 | 5.15 |

| LUCIR [11] | 23.59 | 35.59 | 24.63 | 31.89 | 8.28 | 16.07 | 12.31 | 14.02 | 4.47 | 20.4 | 5.29 | 20.28 |

| BiC [12] | 27.71 | 66.45 | 35.47 | 47.92 | 16.32 | 36.7 | 20.89 | 32.33 | 5.43 | 40.14 | 7.5 | 38.52 |

| ER-ACE [13] | 41.49 | 20.84 | 46.35 | 18.98 | 24.95 | 7.67 | 26.54 | 7.25 | 17.89 | 7.04 | 19.04 | 6.9 |

| SS-IL [10] | 37.92 | 15.64 | 41.22 | 11.46 | 24.9 | 9.85 | 25.6 | 10.23 | 17.91 | 7.93 | 18.53 | 8.26 |

| ER | 36.36 | 49.81 | 44.16 | 38.13 | 21.66 | 33.21 | 23.38 | 33.19 | 15.03 | 31.48 | 16.99 | 30.48 |

| ER-CBA | 38.13 | 43.94 | 45.52 | 29.96 | 25.56 | 9.86 | 28.55 | 8.27 | 17.69 | 12.85 | 20.76 | 10.53 |

| ER-Dual-CBA (ours) | 41.40 | 28.24 | 49.32 | 14.33 | 29.14 | 8.90 | 31.08 | 7.29 | 20.81 | 10.55 | 23.84 | 8.13 |

| Gains | + 5.04 | -21.57 | + 5.16 | -23.80 | + 7.48 | -24.31 | + 7.70 | -25.90 | + 5.78 | -20.93 | + 6.85 | -22.35 |

| DER++ [7] | 40.62 | 42.30 | 49.78 | 31.58 | 17.83 | 43.91 | 17.36 | 44.77 | 11.50 | 40.68 | 11.72 | 40.90 |

| DER-CBA | 44.05 | 25.64 | 50.97 | 16.15 | 25.42 | 13.08 | 26.59 | 13.08 | 18.81 | 11.14 | 20.13 | 11.38 |

| DER-Dual-CBA (ours) | 44.29 | 20.59 | 50.12 | 9.72 | 27.93 | 10.24 | 29.87 | 8.31 | 20.73 | 13.36 | 22.52 | 12.65 |

| Gains | + 3.67 | -21.71 | + 0.34 | -21.86 | +10.10 | -33.67 | +12.51 | -36.46 | + 9.23 | -27.32 | +10.80 | -28.25 |

| CLSER [25] | 38.68 | 44.93 | 47.90 | 31.39 | 21.86 | 35.22 | 24.00 | 34.41 | 15.81 | 32.13 | 17.42 | 31.64 |

| CLSER-CBA | 43.32 | 29.26 | 47.68 | 24.58 | 26.32 | 9.50 | 28.38 | 9.23 | 19.32 | 11.86 | 21.16 | 10.39 |

| CLSER-Dual-CBA (ours) | 44.03 | 20.40 | 49.27 | 16.09 | 29.83 | 8.93 | 32.29 | 7.30 | 21.34 | 10.65 | 24.37 | 8.30 |

| Gains | + 5.35 | -24.53 | + 1.37 | -15.30 | + 7.97 | -26.29 | + 8.29 | -27.11 | + 5.53 | -21.48 | + 6.95 | -23.34 |

| RAR [8] | 42.52 | 39.48 | 47.66 | 33.86 | 15.53 | 45.78 | 14.79 | 46.84 | 10.88 | 39.21 | 10.44 | 40.95 |

| RAR-CBA | 44.22 | 22.62 | 47.01 | 15.95 | 22.47 | 14.27 | 22.85 | 14.24 | 17.18 | 11.62 | 17.90 | 11.97 |

| RAR-Dual-CBA (ours) | 44.88 | 13.55 | 47.68 | 11.14 | 26.20 | 9.82 | 26.38 | 9.96 | 19.72 | 12.64 | 20.83 | 12.80 |

| Gains | + 2.36 | -25.93 | + 0.02 | -22.72 | +10.67 | -35.96 | +11.59 | -36.88 | + 8.84 | -26.57 | +10.39 | -28.15 |

V-B Comparison on Disjoint Scenario

Dual-CBA can enhance current rehearsal-based methods. We first investigate the performance of the proposed method Dual-CBA across different datasets and various memory buffer sizes. Our proposed Dual-CBA can be easily plugged into multiple rehearsal continual learning baselines. To demonstrate it, we choose four commonly used rehearsal-based methods (i.e., ER, DER, RAR, and CLSER) as baselines in our experiments, and the results are summarized in Table I. Note that the suffixes ‘CBA’ and ‘Dual-CBA’ represent the class-specific CBA in conference version [16] and the proposed Dual-CBA in this paper applied to the corresponding baselines, respectively. It can be observed that: 1) Our Dual-CBA can consistently improve the ACC of all four baselines and significantly reduce their FM across various settings. This indicates that our method can generalize to multiple rehearsal baselines and help them mitigate the task-recency bias by fitting the implicit posterior distribution during CL training. 2) Dual-CBA further shows significant improvements over the previously proposed CBA in [16] across all four baselines, which highlights the effectiveness of our proposed method. For example, for ER on Split CIFAR-10 with 200 buffer samples, ER-CBA yields an improvement in the ACC of 1.77% and a reduction in FM of 5.84%. In contrast, ER-Dual-CBA improves the ACC of ER by about 5.04% and decreases the FM by about 21.57%. Additional results and analyses can be found in Appendix G.

Analysis of other baselines. LUCIR employs a weight normalization prior, and BiC designs an additional linear layer, with both methods aimed at addressing the distribution shift from the class imbalance perspective. However, these approaches oversimplify the issue of distribution shift, leading to suboptimal performance across these three benchmarks. iCaRL struggles with larger datasets such as Split CIFAR-100/Tiny-ImageNet, suggesting that the NCM classifier relies on accurate and well-separate class means, which is difficult to obtain in online CL on large datasets. A low ACC of iCaRL indicates that it may not effectively acquire available knowledge from each new task, resulting in negligible forgetting during subsequent training and consequently a low FM. As for ER-ACE and SS-IL, although they demonstrate relatively sound decent ACC performance, they excessively suppress the accuracy of new classes during training, which results in a lower FM value. In contrast, the proposed Dual-CBA module balances the ACC and FM and significantly improves the performance of the corresponding baselines.

| Split CIFAR-10 | Split CIFAR-100 | Split Tiny-ImageNet | |||||||||||

| M=0.2k | M=0.5k | M=2k | M=5k | M=2k | M=5k | ||||||||

| Label Ratio | Method | ACC | FM | ACC | FM | ACC | FM | ACC | FM | ACC | FM | ACC | FM |

| ER | 38.62 | 45.84 | 43.34 | 39.00 | 16.38 | 33.62 | 19.07 | 33.52 | 10.83 | 27.42 | 10.68 | 29.23 | |

| ER-CBA | 37.00 | 45.64 | 41.95 | 39.93 | 15.98 | 33.86 | 16.25 | 34.92 | 10.07 | 29.43 | 10.78 | 28.70 | |

| ER-Dual-CBA (ours) | 41.25 | 22.62 | 45.29 | 17.28 | 24.67 | 7.03 | 26.30 | 6.06 | 15.81 | 6.60 | 18.79 | 5.83 | |

| DER++ | 41.02 | 36.93 | 46.62 | 34.43 | 17.91 | 31.23 | 18.73 | 31.37 | 10.75 | 30.40 | 11.93 | 28.29 | |

| DER-CBA | 41.51 | 37.30 | 48.47 | 26.66 | 20.84 | 19.08 | 22.94 | 19.12 | 12.14 | 21.10 | 14.97 | 17.94 | |

| 0.2 | DER-Dual-CBA (ours) | 42.36 | 21.03 | 48.90 | 9.54 | 23.32 | 9.54 | 27.30 | 6.58 | 16.48 | 7.91 | 18.53 | 7.09 |

| ER | 35.75 | 44.93 | 39.04 | 43.58 | 15.80 | 31.64 | 18.17 | 29.51 | 11.27 | 25.84 | 11.86 | 25.22 | |

| ER-CBA | 36.21 | 45.26 | 41.56 | 40.09 | 16.83 | 30.00 | 17.41 | 29.38 | 10.09 | 25.62 | 10.56 | 26.06 | |

| ER-Dual-CBA (ours) | 39.86 | 22.95 | 46.87 | 19.84 | 22.39 | 7.30 | 23.07 | 7.05 | 15.15 | 8.10 | 17.90 | 5.82 | |

| DER++ | 40.20 | 35.56 | 44.08 | 33.18 | 16.79 | 29.77 | 20.67 | 26.46 | 11.81 | 26.50 | 12.33 | 25.35 | |

| DER-CBA | 42.09 | 31.78 | 47.20 | 27.45 | 21.17 | 15.84 | 22.96 | 14.43 | 13.73 | 15.75 | 14.57 | 16.48 | |

| 0.1 | DER-Dual-CBA (ours) | 43.11 | 18.97 | 48.78 | 15.12 | 22.11 | 8.74 | 23.99 | 5.90 | 16.00 | 7.98 | 18.21 | 5.88 |

V-C Comparison under Semi-Supervised Scenario

In semi-supervised continual learning, only a small portion of samples from each task is labeled with the proportion of these labeled data referred to as label ratio. We apply the FixMatch [76] to two rehearsal-based CL methods, namely ER and DER++. Similar to the fully supervised setting, our method Dual-CBA can also be easily applied to these baselines, and the comparison results across different datasets and various label ratios are shown in Table II. It can be observed that CBA only marginally improves the baselines in some cases, with overall performance showing modest gains. For example, when the label ratio is 0.2, ER-CBA even decreases the ACC and increases the FM of baseline ER across all three datasets. In contrast, our Dual-CBA consistently improves the performance of both baselines and surpasses CBA by a large margin, especially in terms of the FM metric. This is likely because the class-specific CBA requires more labeled data to adapt to changed posterior distribution for each new task, making it prone to overfitting in a semi-supervised CL setting with limited labeled samples. However, the strong transferability of the proposed class-agnostic CBA reduces the need for labeled data and enhances the performance of our Dual-CBA. Additionally, these results indicate that the proposed IBN is effective in a semi-supervised CL setting.

| Split CIFAR-100 | |||||

| M = 2k | M = 5k | ||||

| Blurry-K | Method | ACC | FM | ACC | FM |

| ER | 22.72 | 32.26 | 23.44 | 33.20 | |

| ER-CBA | 27.82 | 7.83 | 28.96 | 7.06 | |

| ER-Dual-CBA (ours) | 29.87 | 7.08 | 32.01 | 6.38 | |

| DER++ | 18.87 | 42.71 | 19.47 | 42.77 | |

| DER-CBA | 27.45 | 13.53 | 27.56 | 14.24 | |

| K = 10 | DER-Dual-CBA (ours) | 30.66 | 9.40 | 30.65 | 9.71 |

| ER | 26.60 | 25.37 | 27.52 | 25.46 | |

| ER-CBA | 28.23 | 7.08 | 30.07 | 6.06 | |

| ER-Dual-CBA (ours) | 31.73 | 5.60 | 32.91 | 4.53 | |

| DER++ | 23.41 | 34.03 | 23.74 | 34.14 | |

| DER-CBA | 28.04 | 11.84 | 29.09 | 10.95 | |

| K = 30 | DER-Dual-CBA (ours) | 31.52 | 8.30 | 31.98 | 9.20 |

V-D Comparison on Blurry Scenario

Following [43, 77], we adopt the Blurry- online CL setting. It simulates a practical situation where task boundaries are unclear, characterized by an overlap across all tasks. Specifically, a fraction () of the training data from one task may appear in other tasks. Here we take and as an illustration, and the comparison results on Split CIFAR-100 are summarized in Table III.

It can be observed that our Dual-CBA substantially improves the performance of the corresponding baselines and the original CBA module. For example, CBA only improves the ACC of ER by about 1.76% with 500 buffer samples under the Blurry-10 setting, while the proposed Dual-CBA can further enhance the performance of ER-CBA by about 2.91%. Additionally, our method significantly reduces the forgetting of the two baselines as shown in Table III. These results demonstrate that the proposed Dual-CBA can be flexibly applied to various rehearsal baseline models and help them adapt to the posterior distribution shift without being perturbed by unclear task boundaries, further verifying the effectiveness of the proposed improvements on CBA.

V-E Comparison under the Offline CL

To further validate the generalization ability of the proposed Dual-CBA, we extend our method Dual-CBA to offline continual learning, where each task can be trained over multiple epochs to achieve more stable convergence. As shown in Table IV, all four baselines achieve better optimum solutions compared to the online context. Obviously, the proposed Dual-CBA significantly improves the performance of corresponding baselines and consistently outperforms the CBA module. These results verify the negative impact of the training bias on CL models and the strength of our method, which can also help the baseline models to adapt to the shifting posterior distribution in the offline setting, indicating the strong generalization ability of the proposed Dual-CBA.

| Split CIFAR-100 | ||||

| M=2k | M=5k | |||

| Method | ACC | FM | ACC | FM |

| ER | 38.09 | 51.51 | 47.53 | 39.47 |

| ER-CBA | 44.71 | 38.19 | 54.95 | 24.19 |

| ER-Dual-CBA (ours) | 46.89 | 35.59 | 55.25 | 24.23 |

| DER++ | 53.01 | 29.23 | 58.34 | 22.50 |

| DER-CBA | 52.72 | 22.19 | 58.67 | 15.46 |

| DER-Dual-CBA (ours) | 54.27 | 19.73 | 60.26 | 14.19 |

| CLSER | 42.77 | 45.72 | 55.83 | 31.10 |

| CLSER-CBA | 49.09 | 33.70 | 57.23 | 24.27 |

| CLSER-Dual-CBA (ours) | 49.20 | 33.87 | 58.20 | 21.32 |

| RAR | 48.26 | 34.00 | 54.18 | 26.89 |

| RAR-CBA | 50.13 | 25.62 | 56.07 | 19.35 |

| RAR-Dual-CBA (ours) | 50.43 | 24.94 | 56.50 | 19.12 |

V-F Discussion and Ablation Study.

| Method | |||||||||||||

| ER | 17.02 | 15.86 | 27.62 | 38.30 | 82.98 | 36.36 | 33.44 | ||||||

| ER-CBA | 24.26 | 20.78 | 25.86 | 39.45 | 80.29 | 38.13 | 34.96 | ||||||

| Split CIFAR-10 (M=0.2k) | ER-Dual-CBA (ours) | 44.66 | 26.19 | 31.18 | 40.98 | 64.00 | 41.40 | 37.10 | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | |||||||||||||

| ER | 19.10 | 15.13 | 22.63 | 13.15 | 16.70 | 16.57 | 21.18 | 15.55 | 12.37 | 64.19 | 21.66 | 21.41 | |

| ER-CBA | 24.03 | 21.19 | 29.18 | 25.01 | 25.04 | 31.64 | 32.05 | 29.27 | 15.18 | 23.03 | 25.56 | 22.78 | |

| Split CIFAR-100 (M=2k) | ER-Dual-CBA (ours) | 27.58 | 20.73 | 33.22 | 25.72 | 29.37 | 36.79 | 34.65 | 29.59 | 31.04 | 22.68 | 29.14 | 26.34 |

| Method | |||||||||||||

| ER | 11.10 | 11.10 | 11.55 | 13.98 | 12.30 | 11.94 | 10.10 | 12.39 | 6.21 | 49.60 | 15.03 | 14.76 | |

| ER-CBA | 15.80 | 13.57 | 17.64 | 20.04 | 17.36 | 16.71 | 18.24 | 19.21 | 12.38 | 25.96 | 17.69 | 15.74 | |

| Split Tiny-ImageNet (M=2k) | ER-Dual-CBA (ours) | 18.35 | 14.71 | 19.09 | 24.65 | 19.39 | 20.75 | 22.67 | 22.37 | 19.46 | 26.67 | 20.81 | 18.96 |

Dual-CBA helps the model adapt to distribution shifts. To ascertain this, we exhibit the accuracy of each task after the final task training, i.e., (where ) in Table V. It can be observed that the baseline ER focuses on the new task too much and easily forgets the previously learned knowledge, that is the so-called task-recency bias. However, the proposed Dual-CBA significantly improves the accuracy of previous tasks and prevents the model from paying much more attention to the new task, indicating that our method can help the baseline model adapt to the dramatic distribution shift and absorb the training bias during the continual learning process. Additionally, the Dual-CBA module shows more powerful ability to mitigate task-recency bias than CBA across all datasets, further demonstrating the effectiveness of the proposed enhancement in Dual-CBA.

Dual-CBA meets the need for real-time evaluation in Online CL. In online continual learning, a challenge is that the model should be evaluated at any time throughout the CL process [28, 78]. To explore the effectiveness of our method for any time inference, we display the ACC of the baseline ER, ER-CBA, the proposed ER-Dual-CBA, and ER-Dual-CBA without IBN in Fig. 2 and Fig. 6. It can be observed that especially on the larger datasets Split CIFAR-100 and Tiny-ImageNet, ER-CBA (black line) requires a few iterations to adapt the incoming data distribution and then improve the performance of ER (blue line) quickly. However, ER-Dual-CBA without IBN (red line) can surpass the baseline ER and ER-CBA at the beginning of each task and keep the higher performance during the entire CL process, which demonstrates that the proposed Dual-CBA can be evaluated in real-time and mitigate the stability-gap problem. Additionally, ER-Dual-CBA equipped with IBN (yellow line) further illustrates the effectiveness of the proposed IBN, which can significantly assimilate the feature extraction bias during inference. Furthermore, we also calculate in the last column of Table V. Our method can significantly improve the of baseline ER and ER-CBA across different datasets, also indicating that our method can improve the performance of baseline in real-time. Please refer to Appendix F for more experimental results.

| Split CIFAR-100 | |||||

| M = 0.2k | M = 0.5k | ||||

| Method | Normalization | ACC | FM | ACC | FM |

| BN [18] | 21.66 | 33.21 | 23.38 | 33.19 | |

| CN [56] | 22.50 | 34.22 | 22.44 | 35.05 | |

| AdaB2N [58] | 22.40 | 31.48 | 23.71 | 31.94 | |

| ER | IBN (ours) | 24.67 | 33.16 | 25.34 | 33.96 |

| BN [18] | 25.29 | 9.92 | 28.22 | 8.77 | |

| CN [56] | 25.51 | 9.71 | 28.40 | 8.36 | |

| AdaB2N [58] | 26.37 | 9.04 | 28.10 | 9.16 | |

| ER-Dual-CBA | IBN (ours) | 29.14 | 8.90 | 31.08 | 7.29 |

| BN [18] | 17.83 | 43.91 | 17.36 | 44.77 | |

| CN [56] | 18.24 | 44.23 | 17.05 | 45.93 | |

| AdaB2N [58] | 18.62 | 41.35 | 18.46 | 41.34 | |

| DER++ | IBN (ours) | 18.71 | 42.47 | 18.78 | 42.82 |

| BN [18] | 25.10 | 11.02 | 25.99 | 10.73 | |

| CN [56] | 26.04 | 10.99 | 25.55 | 10.60 | |

| AdaB2N [58] | 26.00 | 14.30 | 27.01 | 13.35 | |

| DER-Dual-CBA | IBN (ours) | 27.93 | 10.24 | 29.87 | 8.31 |

IBN generalizes well to alleviate feature bias. To demonstrate the effectiveness of the proposed IBN, we compare it with the traditional batch normalization [18], and two recent normalization methods aiming to adjust the bias introduced by BN, i.e., CN [56] and AdaB2N [58]. Table VI summarizes the comparison results of different normalization methods based on two baselines and the proposed Dual-CBA. It can be observed that: 1) The proposed IBN can be used for both our bi-level optimization framework of Dual-CBA and the corresponding baselines, indicating that IBN is a plug-and-play module, which does not affect the original BN during training and only assimilate the feature extraction bias in the testing stage. 2) CN and AdaB2N can somewhat mitigate the feature extraction bias, while our IBN can significantly improve ACC and reduce FM of corresponding CL baselines, especially when applied to our bi-level optimization framework on Dual-CBA. For example, in the case of memory buffer size M = 0.5k, IBN improves the ACC of baseline DER++ by about 1.42 and further improves that of DER-Dual-CBA by about 3.88. These results demonstrate the effectiveness of the proposed IBN and indicate that the proposed Dual-CBA module and IBN can jointly further improve model performance in continual learning.

Comparison of predicted distributions with and without the Dual-CBA module. To investigate this discrepancy, we calculate the predicted distribution of each task after training on the final task of Split CIFAR-10 (M=0.5k) in Fig. 7 (a). It can be observed that the predicted distribution of the baseline model ER is significantly higher than those of previous tasks, and CBA slightly corrects this task-recency bias. In contrast, our method Dual-CBA can suppress the prediction probability of the new task and automatically adapt to the changing distribution. Furthermore, we also display their confusion matrix on Split CIFAR-10 as shown in Fig. 7 (b-d). Obviously, the baseline model ER suffers from a significant task-recency bias, as it achieves an excessively high ACC for new-task classes but is accompanied by extremely severe forgetting. However, CBA only marginally improves old task performances over the baseline model, and the model remains biased toward the new task. Contrastively, the proposed Dual-CBA effectively improves the baseline model to classify samples from all tasks with a global view, leading to the classification network producing a more flatness and correct prediction.

| Transfer to other tasks | |||

| Dataset | Pretrained tasks | ACC | FM |

| 1st-2nd | 40.41 | 35.36 | |

| 1st-3rd | 41.06 | 30.24 | |

| 1st-4th | 41.71 | 29.14 | |

| Split CIFAR-10 | 1st-5th (no transfer) | 42.59 | 24.49 |

| 1st-2nd | 28.40 | 18.75 | |

| 1st-4th | 28.85 | 11.88 | |

| 1st-6th | 29.08 | 9.39 | |

| 1st-8th | 29.34 | 9.69 | |

| Split CIFAR-100 | 1st-10th (no transfer) | 29.81 | 9.95 |

| 1st-2nd | 18.66 | 23.20 | |

| 1st-4th | 19.96 | 15.86 | |

| 1st-6th | 20.93 | 12.30 | |

| 1st-8th | 20.60 | 11.25 | |

| Split Tiny-ImageNet | 1st-10th (no transfer) | 20.55 | 10.18 |

| Pretrained dataset | Transfer to other datasets | |||

| Split CIFAR-10 (M=0.2k) | Split CIFAR-100 (M=2k) | Split Tiny-ImageNet (M=2k) | ||

|---|---|---|---|---|

| ACC | FM | ACC | FM | |

| 27.15 | 23.25 | 18.37 | 24.47 | |

| (no transfer) | 29.14 | 8.90 | 20.81 | 10.55 |

| Split CIFAR-100 (M=2k) | Split CIFAR-10 (M=0.2k) | Split Tiny-ImageNet (M=2k) | ||

| ACC | FM | ACC | FM | |

| 42.24 | 22.26 | 20.43 | 8.72 | |

| (no transfer) | 41.40 | 28.24 | 20.81 | 10.55 |

| Split Tiny-ImageNet (M=2k) | Split CIFAR-10 (M=0.2k) | Split CIFAR-100 (M=2k) | ||

| ACC | FM | ACC | FM | |

| 42.51 | 22.82 | 29.77 | 9.11 | |

| (no transfer) | 41.40 | 28.24 | 29.14 | 8.90 |

Transferability of the class-agnostic CBA. As aforementioned, the proposed class-agnostic CBA has strong transferability and can adapt to different new tasks. To demonstrate this, we conduct experiments to evaluate the intra-dataset and inter-dataset transferability of the class-agnostic CBA module. Concretely, to show the intra-dataset transferability of the proposed CBA, we train the classification network based on ER with the help of the class-agnostic CBA module, which is pretrained in the first few tasks and fixed in the consequent tasks. As shown in Table VII, the pretrained CBA can also assimilate the training bias of the classification network to achieve comparable performance to the on-the-fly training version (where the CBA module is trained simultaneously with the classification network, as shown in the gray cell of Table VII). On the other hand, we test the inter-dataset transferability of the proposed class-agnostic CBA. Specifically, we train the classification network based on ER with the help of a fixed class-agnostic CBA, which has been pre-trained in another dataset. In Table VIII, even for various new tasks from different datasets, the class-agnostic CBA module also largely improves the performance of the classification framework. Particularly, after the class-agnostic CBA is trained on a dataset with more tasks such as Split CIFAR-100 and Tiny-ImageNet, it can be well transferred to other datasets and even has better results for Split CIFAR-10, indicating that the class-agnostic CBA can capture the stable relationship between the new and old posterior probabilities across multiple continual learning tasks and effectively generalized to other datasets. In summary, these experimental results demonstrate the strong transferability of the proposed class-agnostic CBA module, indicating the potential to handle complicated relationships between new and old tasks.

| Optimization | Loss function | Split CIFAR-100 | |||

| M = 2k | M = 5k | ||||

| ACC | FM | ACC | FM | ||

| Single level | 24.57 | 34.41 | 25.64 | 34.05 | |

| Single level | 24.59 | 34.46 | 25.72 | 34.14 | |

| Bi-level (ours) | & | 29.14 | 8.90 | 31.08 | 7.29 |

Ablation on the bi-level optimization framework. In our algorithm, we propose a bi-level learning framework to jointly optimize the parameters of the main classification network and the Dual-CBA module. Here we compare the results of the bi-level and single-level optimization to demonstrate the effectiveness of the proposed bi-level learning framework. In Table IX, we display two single-level optimization formulations: 1) only use the rehearsal training loss (i.e., the inner objective of Eq. (3)) to optimize , together; and 2) iteratively optimize by the inner loss and optimize by the outer loss. It can be observed that these two single-level learning cannot enhance the performance of the baseline model. The learned Dual-CBA module optimized in single-level frameworks cannot help the model alleviate the distribution shift, which demonstrates that the proposed bi-level optimization is essential for our model training. Under the bi-level learning framework, Dual-CBA can effectively assimilate the training bias and help the model learn an implicit stable posterior distribution with a consolidation of the seen knowledge.

VI Conclusion

In this paper, we address the challenges of online continual learning, where the classification network commonly exhibits dramatically changing posterior distributions, which yields performance bias toward the arriving new tasks. To tackle this, we propose a novel bi-level optimization framework that enables the classification network to adapt to these catastrophic distribution shifts. Specifically, we introduce a Dual-CBA module that includes a class-specific CBA module, a class-agnostic module, and an Incremental BN module. This combined module is capable of adapting to catastrophic distribution shifts and mitigating the stability gap issue arising from continual training. This ensures the classification network meets the requirements for real-time inference in online CL. The effectiveness of our method is demonstrated through both theoretical and empirical evidence. Theoretically, we provide an explanation of how our approach addresses task-recency bias and alleviates catastrophic forgetting, and we offer insights into the bi-level optimization framework through a linear formulation. Empirically, our algorithm consistently improves performance across various rehearsal-based baselines, showcasing its ability to assimilate training bias and effectively consolidate learned knowledge in online CL. Currently, our method is limited to being a plug-in for rehearsal-based methods. In the future, we will further investigate the applicability of our approach to rehearsal-free models.

References

- [1] G. M. Van de Ven and A. S. Tolias, “Three scenarios for continual learning,” arXiv preprint arXiv:1904.07734, 2019.

- [2] D.-W. Zhou, Q.-W. Wang, Z.-H. Qi, H.-J. Ye, D.-C. Zhan, and Z. Liu, “Deep class-incremental learning: A survey,” arXiv preprint arXiv:2302.03648, 2023.

- [3] R. M. French, “Catastrophic forgetting in connectionist networks,” Trends in Cognitive Sciences, vol. 3, no. 4, pp. 128–135, 1999.

- [4] M. De Lange, R. Aljundi, M. Masana, S. Parisot, X. Jia, A. Leonardis, G. Slabaugh, and T. Tuytelaars, “A continual learning survey: Defying forgetting in classification tasks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 7, pp. 3366–3385, 2021.

- [5] V. Losing, B. Hammer, and H. Wersing, “Incremental on-line learning: A review and comparison of state of the art algorithms,” Neurocomputing, vol. 275, pp. 1261–1274, 2018.

- [6] S.-A. Rebuffi, A. Kolesnikov, G. Sperl, and C. H. Lampert, “icarl: Incremental classifier and representation learning,” in IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 2001–2010.

- [7] P. Buzzega, M. Boschini, A. Porrello, D. Abati, and S. Calderara, “Dark experience for general continual learning: a strong, simple baseline,” in Advances in Neural Information Processing Systems, vol. 33, 2020, pp. 15 920–15 930.

- [8] Y. Zhang, B. Pfahringer, E. Frank, A. Bifet, N. J. S. Lim, and A. Jia, “A simple but strong baseline for online continual learning: Repeated augmented rehearsal,” in Advances in Neural Information Processing Systems, 2022.

- [9] Z. Mai, R. Li, H. Kim, and S. Sanner, “Supervised contrastive replay: Revisiting the nearest class mean classifier in online class-incremental continual learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 3589–3599.

- [10] H. Ahn, J. Kwak, S. Lim, H. Bang, H. Kim, and T. Moon, “Ss-il: Separated softmax for incremental learning,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 844–853.

- [11] S. Hou, X. Pan, C. C. Loy, Z. Wang, and D. Lin, “Learning a unified classifier incrementally via rebalancing,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 831–839.

- [12] Y. Wu, Y. Chen, L. Wang, Y. Ye, Z. Liu, Y. Guo, and Y. Fu, “Large scale incremental learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 374–382.

- [13] L. Caccia, R. Aljundi, N. Asadi, T. Tuytelaars, J. Pineau, and E. Belilovsky, “New insights on reducing abrupt representation change in online continual learning,” arXiv preprint arXiv:2104.05025, 2021.

- [14] R. Ratcliff, “Connectionist models of recognition memory: constraints imposed by learning and forgetting functions.” Psychological review, vol. 97, no. 2, p. 285, 1990.

- [15] A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” 2009.

- [16] Q. Wang, R. Wang, Y. Wu, X. Jia, and D. Meng, “Cba: Improving online continual learning via continual bias adaptor,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 19 082–19 092.

- [17] M. De Lange, G. M. van de Ven, and T. Tuytelaars, “Continual evaluation for lifelong learning: Identifying the stability gap,” in International Conference on Learning Representations, 2023.

- [18] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in International conference on machine learning. pmlr, 2015, pp. 448–456.

- [19] L. Wang, X. Zhang, H. Su, and J. Zhu, “A comprehensive survey of continual learning: theory, method and application,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024.

- [20] J. Gama, I. Žliobaitė, A. Bifet, M. Pechenizkiy, and A. Bouchachia, “A survey on concept drift adaptation,” ACM Computing Surveys (CSUR), vol. 46, no. 4, pp. 1–37, 2014.

- [21] J. Lu, A. Liu, F. Dong, F. Gu, J. Gama, and G. Zhang, “Learning under concept drift: A review,” IEEE Transactions on Knowledge and Data Engineering, vol. 31, no. 12, pp. 2346–2363, 2018.

- [22] C. Simon, M. Faraki, Y.-H. Tsai, X. Yu, S. Schulter, Y. Suh, M. Harandi, and M. Chandraker, “On generalizing beyond domains in cross-domain continual learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 9265–9274.

- [23] M. Kang, J. Park, and B. Han, “Class-incremental learning by knowledge distillation with adaptive feature consolidation,” in Proceedings of the IEEE/CVF conference on Computer Vision and Pattern Recognition, 2022, pp. 16 071–16 080.

- [24] F. Zhu, Z. Cheng, X.-Y. Zhang, and C.-l. Liu, “Class-incremental learning via dual augmentation,” Advances in Neural Information Processing Systems, vol. 34, pp. 14 306–14 318, 2021.

- [25] E. Arani, F. Sarfraz, and B. Zonooz, “Learning fast, learning slow: A general continual learning method based on complementary learning system,” in International Conference on Learning Representations, 2022.

- [26] F. M. Castro, M. J. Marín-Jiménez, N. Guil, C. Schmid, and K. Alahari, “End-to-end incremental learning,” in Proceedings of the European Conference on Computer Vision, 2018, pp. 233–248.

- [27] A. Chaudhry, P. K. Dokania, T. Ajanthan, and P. H. Torr, “Riemannian walk for incremental learning: Understanding forgetting and intransigence,” in Proceedings of the European Conference on Computer Vision, 2018, pp. 532–547.

- [28] H. Koh, D. Kim, J.-W. Ha, and J. Choi, “Online continual learning on class incremental blurry task configuration with anytime inference,” in International Conference on Learning Representations, 2022.

- [29] X. Tao, X. Hong, X. Chang, S. Dong, X. Wei, and Y. Gong, “Few-shot class-incremental learning,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 12 183–12 192.

- [30] H.-J. Ye, H. Hu, D.-C. Zhan, and F. Sha, “Few-shot learning via embedding adaptation with set-to-set functions,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 8808–8817.

- [31] S. Dong, X. Hong, X. Tao, X. Chang, X. Wei, and Y. Gong, “Few-shot class-incremental learning via relation knowledge distillation,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 2, 2021, pp. 1255–1263.

- [32] L. Wang, K. Yang, C. Li, L. Hong, Z. Li, and J. Zhu, “Ordisco: Effective and efficient usage of incremental unlabeled data for semi-supervised continual learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 5383–5392.

- [33] J. Smith, J. Balloch, Y.-C. Hsu, and Z. Kira, “Memory-efficient semi-supervised continual learning: The world is its own replay buffer,” in 2021 International Joint Conference on Neural Networks (IJCNN). IEEE, 2021, pp. 1–8.

- [34] Z. Kang, E. Fini, M. Nabi, E. Ricci, and K. Alahari, “A soft nearest-neighbor framework for continual semi-supervised learning,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 11 868–11 877.

- [35] A. Chrysakis and M.-F. Moens, “Online continual learning from imbalanced data,” in International Conference on Machine Learning. PMLR, 2020, pp. 1952–1961.

- [36] J. He, “Gradient reweighting: Towards imbalanced class-incremental learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 16 668–16 677.

- [37] A. Chrysakis and M.-F. Moens, “Online continual learning from imbalanced data,” in International Conference on Machine Learning. PMLR, 2020, pp. 1952–1961.

- [38] X. Tao, X. Chang, X. Hong, X. Wei, and Y. Gong, “Topology-preserving class-incremental learning,” in European Conference on Computer Vision, 2020, pp. 254–270.

- [39] X. Chang, X. Tao, X. Hong, X. Wei, W. Ke, and Y. Gong, “Class-incremental learning with topological schemas of memory spaces,” in 2020 25th International Conference on Pattern Recognition (ICPR). IEEE, 2021, pp. 9719–9726.

- [40] Y.-M. Tang, Y.-X. Peng, and W.-S. Zheng, “Learning to imagine: Diversify memory for incremental learning using unlabeled data,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 9549–9558.

- [41] Q. Wang, R. Wang, Y. Li, D. Wei, K. Ma, Y. Zheng, and D. Meng, “Relational experience replay: Continual learning by adaptively tuning task-wise relationship,” arXiv preprint arXiv:2112.15402, 2021.

- [42] Y. Liu, Y. Su, A.-A. Liu, B. Schiele, and Q. Sun, “Mnemonics training: Multi-class incremental learning without forgetting,” in Proceedings of the IEEE/CVF conference on Computer Vision and Pattern Recognition, 2020, pp. 12 245–12 254.

- [43] R. Aljundi, M. Lin, B. Goujaud, and Y. Bengio, “Gradient based sample selection for online continual learning,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- [44] R. Aljundi, E. Belilovsky, T. Tuytelaars, L. Charlin, M. Caccia, M. Lin, and L. Page-Caccia, “Online continual learning with maximal interfered retrieval,” in Advances in Neural Information Processing Systems, H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, Eds. Curran Associates, Inc., 2019, pp. 11 849–11 860. [Online]. Available: http://papers.nips.cc/paper/9357-online-continual-learning-with-maximal-interfered-retrieval.pdf

- [45] J. Yoon, D. Madaan, E. Yang, and S. J. Hwang, “Online coreset selection for rehearsal-based continual learning,” in International Conference on Learning Representations, 2022.

- [46] L. Wang, X. Zhang, K. Yang, L. Yu, C. Li, H. Lanqing, S. Zhang, Z. Li, Y. Zhong, and J. Zhu, “Memory replay with data compression for continual learning,” in International Conference on Learning Representations, 2022.

- [47] Z. Luo, Y. Liu, B. Schiele, and Q. Sun, “Class-incremental exemplar compression for class-incremental learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 11 371–11 380.

- [48] H. Cha, J. Lee, and J. Shin, “Co2l: Contrastive continual learning,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 9516–9525.

- [49] B. Zhao, X. Xiao, G. Gan, B. Zhang, and S.-T. Xia, “Maintaining discrimination and fairness in class incremental learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 13 208–13 217.

- [50] M. Li, X. Zhang, C. Thrampoulidis, J. Chen, and S. Oymak, “Autobalance: Optimized loss functions for imbalanced data,” Advances in Neural Information Processing Systems, vol. 34, pp. 3163–3177, 2021.

- [51] A. K. Menon, S. Jayasumana, A. S. Rawat, H. Jain, A. Veit, and S. Kumar, “Long-tail learning via logit adjustment,” arXiv preprint arXiv:2007.07314, 2020.

- [52] R. Wang, K. Hu, Y. Zhu, J. Shu, Q. Zhao, and D. Meng, “Meta feature modulator for long-tailed recognition,” arXiv preprint arXiv:2008.03428, 2020.

- [53] R. Wang, X. Jia, Q. Wang, Y. Wu, and D. Meng, “Imbalanced semi-supervised learning with bias adaptive classifier,” in The Eleventh International Conference on Learning Representations, 2022.

- [54] N. Bjorck, C. P. Gomes, B. Selman, and K. Q. Weinberger, “Understanding batch normalization,” Advances in neural information processing systems, vol. 31, 2018.

- [55] S. Santurkar, D. Tsipras, A. Ilyas, and A. Madry, “How does batch normalization help optimization?” Advances in neural information processing systems, vol. 31, 2018.

- [56] Q. Pham, C. Liu, and S. Hoi, “Continual normalization: Rethinking batch normalization for online continual learning,” arXiv preprint arXiv:2203.16102, 2022.

- [57] S. Cha, S. Cho, D. Hwang, S. Hong, M. Lee, and T. Moon, “Rebalancing batch normalization for exemplar-based class-incremental learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 20 127–20 136.

- [58] Y. Lyu, L. Wang, X. Zhang, Z. Sun, H. Su, J. Zhu, and L. Jing, “Overcoming recency bias of normalization statistics in continual learning: Balance and adaptation,” Advances in Neural Information Processing Systems, vol. 36, 2024.

- [59] Y. Wu and K. He, “Group normalization,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 3–19.