Dual application of Chebyshev polynomial for efficiently

computing thousands of central eigenvalues in many-spin systems

Abstract

Computation of a large group of interior eigenvalues at the middle spectrum is an important problem for quantum many-body systems, where the level statistics provides characteristic signatures of quantum chaos. We propose an efficient numerical method, dual application of Chebyshev polynomial (DACP), to find thousands of central eigenvalues, which are exponentially close to each other in terms of the system size. To cope with the near-degenerate problem, we employ twice the Chebyshev polynomial to construct an exponential semicircle filter as a preconditioning step and generate a large set of proper states as the basis of the desired subspace. Numerical experiments on Ising spin chain and spin glass shards confirm the correctness and efficiency of the DACP method. As numerical results demonstrate, DACP is times faster than the state-of-the-art shift-invert method for the Ising spin chain while times faster for the spin glass shards. In contrast to shift-invert method, the computation time of DACP is only weakly influenced by the required number of eigenvalues. Moreover, the consumed memory remains a constant for spin- systems consisting of up to spins.

I introduction

Energy level statistics provides an essential characterization of quantum chaos Haake (2010); Brody et al. (1981). Integrable systems often imply level clustering and a Poisson distribution of energy level spacings Brody et al. (1981), while chaotic systems exhibit strong level repulsion and a Wigner-Dyson distribution Bohigas et al. (1984). Other useful statistical tools like the statistic Mehta (1991) and the power spectrum of the statistic also depend on the level distribution Relaño et al. (2002). Numerical observation of eigenvalues is important to exactly characterize these level statistics, because direct theoretical derivation is not available. In addition, the need to access individual eigenstates in the middle of the spectrum, which correspond to the “infinite temperature” limit, is emphasized in studying the phenomenon of many-body localization (MBL) Basko et al. (2006); Nandkishore and Huse (2015); Pietracaprina et al. (2018). Numerical simulations remain instrumental in understanding quantitatively many aspects of the MBL problem. As the many-body problems of interest often involve a huge Hilbert space dimension growing exponentially in terms of system size, it is rather challenging to conduct a full diagonalization of the Hamiltonian or to solve the time-independent Schrödinger equation. Seeking to resolve the eigenvalue problem in a small part of the spectrum is thus an unavoidable and desirable substitution.

To obtain the interior eigenstates, a hybrid strategy of matrix spectroscopy is often invoked Ericsson and Ruhe (1980); Wyatt (1995); Minehardt et al. (1997). It aims at computing eigenstates in selected regions of the spectrum and couples the ground state solvers with a spectral filter, where the filter is designed to cast the selected interior regions to the edges of the spectrum. Among these filters, the Green function is an excellent one. After applying the Green function filter, the cluster of eigenvalues near the test energy is mapped to very large positive and negative values. The level spacings near are greatly amplified, which thus improves the convergence. The Lanczos method Lanczos (1950) has been coupled with such a filter Ericsson and Ruhe (1980); Wyatt (1995), and the Chebyshev polynomial expansion of the Green function is implemented Mandelshtam and Taylor (1995). In particular, the shift-invert method Bai et al. (2000) essentially utilizes this spectral transformation and has been widely used in quantum many-spin systems Pietracaprina et al. (2018); Luitz et al. (2015); Sierant and Zakrzewski (2020); Hopjan and Heidrich-Meisner (2020); Šuntajs et al. (2020). Moreover, it was even proved to be the most efficient one for the MBL problem Pietracaprina et al. (2018). However, for large systems this method suffers rapid increases in both computation time and memory consumption, due to the factorization of . Other spectral filters have been also proposed, including the Dirac delta function filter Li et al. (2016); Fang and Saad (2012); Sierant et al. (2020); Guan and Zhang (2020) and the rectangular window function filter Pieper et al. (2016), both of which are expanded by the Chebyshev polynomial. Note that all the methods mentioned above are iterative ones, where the computation time is usually proportional to the required number of eigenvalues, i.e., more eigenvalues means more filtrations and reorthogonalizations Pietracaprina et al. (2018); Fang and Saad (2012); Sierant et al. (2020).

In this paper, we propose an exact numerical method, DACP, to calculate thousands of eigenvalues in the middle of the energy band, which is already helpful to reveal the level statistics Shklovskii et al. (1993); Georgeot and Shepelyansky (1998). For spin systems, such a region usually locates at the peak of the density of states where the energy levels are so close that they are nearly degenerate. It is extremely challenging to distinguish these near-degenerate eigenvalues without factorizing . In the DACP method, we construct an exponential of semicircle (exp-semicircle) filter to quickly damp the unwanted part of the spectrum by employing the Chebyshev polynomial for the first time. The second application of the Chebyshev polynomial is to fast search a set of states to span the specific subspace consisting of all the eigenstates corresponding to the desired eigenvalues. The DACP method transforms the original high dimensional eigenvalue problem to a low dimensional one. For practical problems in many-spin systems, the DACP method is very efficient, due to its full exploration of several excellent properties of the Chebyshev polynomial while other methods usually utilize only one of them. For a large class of many-spin systems, the DACP method exhibits a significant increase in the computation speed, up to a factor of , in comparison with the shift-invert method. The memory saving is more drastic, up to a factor of . Moreover, the DACP method distinguishes itself from those iterative filtering ones, as its convergence time varies only slightly when the required number of eigenvalues is vastly changed.

The paper is organized as follows. The detailed formalism of the DACP method, including the exp-semicircle filtration, Chebyshev evolution, and subspace diagonalization, are described in Sec. II. Each of the processes relies on a property of the Chebyshev polynomial. In Sec. III we discuss details of two many-spin systems, the Ising model and the spin glass shards, and present the computational results. We conclude in Sec. IV.

II Dual application of Chebyshev polynomial method

To access central eigenvalues of large spin systems, we are restricted by the matrix-free mode, i.e., the matrix of the Hamiltonian must not be explicitly expressed/stored. Instead, we treat the Hamiltonian as a function whose input and output are both states/vectors. Therefore, we shall only operate with the quantum states , where is a positive integer (not too large), in the original Hilbert space of dimension up to . In this paper, we set our goal to find eigenvalues in the middle of the spectrum for many-spin systems as an illustration.

The DACP idea is fairly straightforward. We first transform a randomly initialized state into a wave packet in the subspace spanned essentially by at least central eigenstates. This transformation is realized by an exp-semicircle filter implemented by the Chebyshev polynomial. The Chebyshev polynomial is used to perform an exponential decay. With this particular state in hand, we then generate a large amount of linearly independent states, as large as possible, to approximately span the subspace consisting of the required eigenstates. The Chebyshev polynomial is used again to oscillate (complex exponential) the states, producing approximately linearly independent states. Once the generating set for the desired eigenstates is known, one may explicitly calculate , the reduced representation of in this subspace. The remaining operations are restricted in the subspace of dimension around . Finally, direct diagonalization of , which is of size , gives the required eigenvalues. The detailed procedures and discussions are given in the subsections below.

II.1 Exp-semicircle filter

We utilize the exponential growth of the Chebyshev polynomial outside the interval to efficiently construct an exp-semicircle filter, as shown in Fig. 1. This filter drastically amplifies the components of a desired range of eigenstates for any randomly initialized states, resulting a new state that sharply localized in the central spectrum. We note that the Chebyshev filter explores the same property as well, except that it amplifies the lower end of the spectrum Zhou and Saad (2007); Zhou (2010). A similar idea has been also applied in the quantum algorithm for finding ground states Poulin and Wocjan (2009).

For the Hamiltonian with energy bounded in , where is the minimum energy () and the maximum energy (), the exp-semicircle filter is designed to amplify the components of the eigenstates corresponding to eigenvalues in the interval and to simultaneously dampen those in the interval and , where is a real positive parameter. Focusing on the spin systems, we have assumed and . After the filtration, a new state mainly consists of the eigenstates with eigenvalues belonging to the interval is generated. For simplicity, let us denote the subspace spanned by the eigenstates contained in as .

We now introduce the specific implementation details. Note that we want to amplify the middle of the spectrum, but the exponential growth of the Chebyshev polynomial exists only in both ends. To satisfy this goal, we first square the Hamiltonian whose spectrum ranges (suppose for simplicity). The central spectrum of is transferred to for , which lies exactly at the lower end. Next is to map the dampening part into by shift and normalization of . We thus define an operator

| (1) |

where and . One may easily affirm this map’s correctness by replacing with either or , and correspondingly one has . Note that is simply a polynomial expression of , so is .

We then explore the effect of the filtration using . As the eigenvalues inside of is mapped into of , we have for the lower end of the spectrum, where

| (2) |

Let be a random initial state, with and the random coefficients, the eigenstates inside , the eigenstates outside . The filtration by is

| (3) | ||||

where , , and are eigenvalues corresponding to and , respectively. In writing Eq. (3) we have ignored , as it does not affect the absolute value of coefficients and is a global phase at the end line. When is tiny, i.e., , one may further deduce via Taylor’s expansion of , where is a small positive number. We thus obtain the exp-semicircle filter

| (4) |

that peaked sharply at with a large , for eigenstates satisfying . In Fig. 1 the shape of Eq. (4) is presented in dashed lines, which fits pretty well with the exact form.

With the initial conditions and , the th order Chebyshev polynomial can be efficiently determined using the recurrence relation Eq. (16). In this paper, we set the cut-off order . Such a filter exponentially (the fastest rate among all polynomials) amplifies the components of eigenstates inside Rivlin (1969). After the normalization, it equivalently dampens those outside , generating the target state:

| (5) |

where and is the normalization constant. Obviously, the state localizes (in the energy representation) in the central spectrum. We input as the initial state for the next step, Chebyshev evolution.

II.2 Chebyshev evolution

After obtaining a state confined in the small subspace , we then make use of the oscillation property of the Chebyshev polynomial to efficiently generate a set of distinct states, as many as possible, serving as a complete basis to span . To achieve this goal, it is necessary to limit (corresponding to in ), within which the Chebyshev polynomial behaves as a cosine-like function. This region contrasts with the requirement of the exp-semicircle filter, thus one needs a different transformation of . Below we describe the specific details for the second application of the Chebyshev polynomial.

The original Hamiltonian needs to be shifted by and be rescaled by , where and . In a similar way to Eq. (1), we define an operator which is definitely bounded by and . Assuming again, which does not affect the conclusion, we obtain

| (6) |

At the same time, the parameter is rescaled to as well.

Let us explore the Chebyshev evolution, i.e., the evolution governed by the operator as plays the role of time. We input the state Eq. (5) generated by the filtration as an initial state for the Chebyshev evolution. Since , from Eq. (15) we have , where . In this sense, the Chebyshev evolution gives

| (7) | ||||

where . Note that both and are unitless. Certainly, this is not a physical evolution, and is not even a unitary operator. Actually, this evolution essentially represents a superposition of both forward and backward propagation. Each time the polynomial order increases by , the evolution “time” is added by as well.

With the aid of the Chebyshev evolution, we are able to construct a complete basis that spans the subspace . In detail, we collect a set of states as follows

| (8) |

where and is an integer determined by the relation , with the dimension of . In practice, one may need a relation to ensure the completeness of Eq. (8). Here serves as the time, with . Similarly to the last subsection, the th order Chebyshev polynomial can be calculated. The cut-off order (evolution time) . More details can be found in Append. B.

The duality of the Chebyshev polynomial, as being approximately the trigonometry functions and being the polynomials, plays a vital role in the DACP method. In a certain sense, the Chebyshev evolution is an efficient (possibly the most one among all the polynomials) simulation of the quantum oscillations Chen and Guo (1999).

II.3 Subspace diagonalization

Computing the basis (Eq. (8)) by combination of the exp-semicircle filter and the Chebyshev evolution represents the most challenging aspect as well as the most time-consuming part of the DACP method. Once the appropriate basis has been constructed, the task remained is straightforward, i.e., to compute the eigenpairs of the projected Hamiltonian . This is equivalent to solving the generalized eigenvalue problem

| (9) |

Here, and denote the projected Hamiltonian in and the overlap matrices, respectively,

| (10) |

is a diagonal matrix with the eigenvalues in and the matrix transforms the found basis Eq. (8) to the eigenstates of ,

| (11) |

All these matrices are of size . Because of the small size of it, can be explicitly expressed and stored in core memory. The necessary procedures are conveniently available in the LAPACK library Anderson et al. (1994).

Importantly, due to the special property of the Chebyshev polynomial, the computation of matrices and can even be achieved without an explicit computation and storage of the states . This feature gives rise to a further improvement for the DACP method, both in computation time and memory saving. Besides, for an overcomplete basis Eq. (8), the overlap matrix is generally singular. We thus employ the singular value decomposition (SVD) to solve it. After the SVD, the eigenvalues of are discarded if their absolute values sit below the cutoff condition . The number of remained eigenvalues effectively counts the linearly independent states in Eq. (8). We present both the explicit expressions (denoted by and only) of matrices and , and the solution of the generalized eigenvalue problem in Append. C.

Eigenvalues obtained by the subspace diagonalization may not own the same accuracy when compared to true eigenvalues of the system, thus it is necessary to conduct an independent check to estimate the error bounds. To this end, if the eigenstates were known, the residual norm (variance of the energy) , where and , is widely used as the parameter measuring the accuracy of the results. It has been shown that gives an upper bound on the true error (absolute error) of the computed eigenvalue Saad (2011).

III Numerical results

We apply the DACP method to the quantum spin- systems with two-body interactions. Such systems are good models for investigating a large class of important problems in quantum computing, solid state theory, and quantum statistics Nielsen and Chuang (2011); Bogolubov and N. N. Bogolubov (2009); Sachdev (2011); Dutta et al. (2015). A large amount of exact eigenvalues helps us to obtain the statistical properties, to distinguish quantum chaos from integrability, and serves as a benchmark to evaluate other approximate methods as well.

Generally speaking, the DACP method can deal with the spin systems consisting of couplings between any pair of the spins. Each of the Pauli matrix or the two coupling Pauli matrices , where , is properly represented by a specific function.

We specify the spin model for two physical systems. One is the disordered one-dimensional transverse field Ising model Fisher (1995), where the Hamiltonian is

| (12) |

with the Pauli matrices for the spin . This system is exactly solvable by Jordan-Wigner transformation Sachdev (2011), making it an ideal correctness checker for the DACP method. The nearest neighbor exchange interaction constants are random numbers that uniformly distributed in with . The local random magnetic fields are represented by , which are random numbers that uniformly distributed in the interval with .

Another system is the spin glass shards Georgeot and Shepelyansky (1998), which represents a class of global-range interacting systems that require relatively large bond dimensions to be tackled by the DMRG methods Schollwöck (2005). The Hamiltonian describing the system is

| (13) |

All symbols and parameters are the same as that of the above Ising model, except that the first summation runs over all possible spin pairs. This system is interesting because it presents two crossovers from integrability to quantum chaos and back to integrability again. In the limit , the ground state is paramagnetic with all spins in the local field direction and the system is integrable Georgeot and Shepelyansky (1998). In the opposite limit , the ground state is spin glass and the system is also integrable since there are operators () commuting with the Hamiltonian. A quantum chaos region exists between these two limits. is approximately the border from the quantum chaos to the integrable (the spin glass side) when Georgeot and Shepelyansky (1998).

By employing the upper-bound-estimator, which costs little extra computation and bounds up the largest absolute eigenvalue , one may estimate and Zhou and Li (2011). For this setting we have utilized the symmetry of the density of states (DOS), a bell-shape profile centered at zero, in the many-spin systems. Since we require central eigenvalues, we may set , corresponding to a dimension and being adequate to span the whole subspace . The overlap matrix is generally singular. The approximate distribution of DOS may be efficiently calculated through the Fourier transformation of a time evolved wave function or through a better estimation method given in Hams and De Raedt (2000). The parameter is appropriately chosen to ensure that the number of eigenstates contained in is a little less than (as illustrated in Fig. 2, the precision of some converged eigenvalues may be lower than required).

In practice, sometimes there may exist highly near-degenerate eigenvalues, with level spacings as small as while the average spacing is . It is still hard (two magnitudes longer time) for the Chebyshev evolution to discriminate such close pair of eigenvalues. To circumvent this challenge, we employ the block filter technique Zhou (2010), which means a block of states is filtered or evolved “simultaneously”, in programming of the DACP method. The idea is that two or several random states in the degenerate subspace are usually linearly independent. For each numerical test, a block of initial trial states is randomly generated and employed with the parameter being adjusted to accordingly.

By these settings, we perform numerical tests on the above two systems to show the high exactness and efficiency of DACP method. For this work, we consider only the eigenvalues computations. All the timing information reported in this manuscript is obtained from calculations on the Intel(R) Xeon(R) CPU E7-8880 v4, using sequential mode.

In Fig. 2 we present the relative error in logarithmic scale versus the system energy , for the Ising model with and the spin glass shards with . We have defined the relative error of the computed central eigenvalues as

Exact eigenvalues of both systems have been obtained by other reliable methods. For the Ising model, we make use of the famous Jordan-Wigner transformation to reduce the original matrix to a one, and restore the full spectrum of the original Hamiltonian Sachdev (2011). For the spin glass shards we simply utilize the function of MATLAB, to find eigenvalues closest to . As for our numerical tests, the parameter in Fig. 2 is and for (a) and (b), respectively. In computing the Ising model, the number of eigenvalues satisfying is not enough (less than ) by the settings mentioned above. By adjusting the block size to and the parameter to , we then collect enough eigenvalues. The number of converged eigenvalues, i.e., computed eigenvalues satisfying the condition is for (a) and (all the exact eigenvalues we have) for (b), while the total number of computed eigenvalues is and , respectively.

The spike around for both figures is due to the smallness of the denominator . The smallest absolute eigenvalue is about for (a) and for (b). Besides, there is a flat plateau in the middle of the figures, indicating that for those eigenvalues around we encounter the numerical error, i.e., the absolute error reaches the limit of the double precision representation. In Fig. 2(a) one may observe other spikes, which does not appear in (b). Each of those spikes corresponds to a cluster of eigenvalues lying extremely close to each other. The integrability of the Ising model implies the level clustering and a Poissonian distribution of energy level spacings. We speculate that the Chebyshev evolution does not function well for closely lied eigenvalues, as they give quite similar phase contributions to the final states. To significantly amplify the phase difference , it requires , where is the energy difference. An alternative solution is to increase the block size to match up with the dimension of near-degenerate subspace. Ignoring the central plateau and the spikes, the distribution of shows an inverted shape of the exp-semicircle filter.

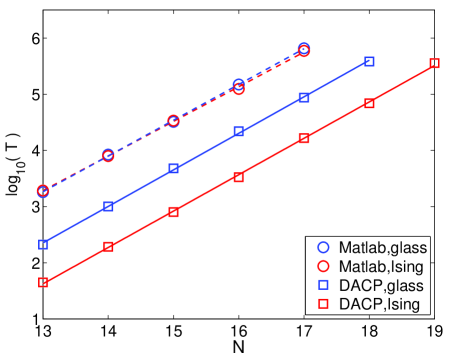

In Fig. 3 we compare the computation time (CPU time in seconds) of the DACP method with that of the shift-invert approach. The latter is widely used in computing eigenpairs at the middle of the spectrum for quantum spin systems, to name a few, like in Pietracaprina et al. (2018); Luitz et al. (2015); Sierant and Zakrzewski (2020); Hopjan and Heidrich-Meisner (2020). In our tests, it is implemented by the function of Matlab R2019b, which employs the implicitly restarted Arnoldi method (ARPACK) Lehoucq et al. (1998). Each of the numerical tests finds around eigenvalues for either of the two spin systems. For the DACP method, we have discarded the time consumed during the subspace diagonalization, as it is a constant and does not affect the scaling behavior. Specifically, the subspace diagonalization spends roughly CPU seconds in each test. Such a constant is completely negligible for systems.

As shown in Fig. 3, for spin systems with , the DACP method is about times faster than the shift-invert approach for the Ising model and times faster for the spin glass shards. Since the shift-invert method essentially finds the ground energy of , which is usually not a sparse matrix, its computation time is not affected by the sparsity of the Hamiltonian and the two dashed lines nearly coincide. On the contrary, the DACP method employs the polynomial combination of acting on the states, its computation time is heavily influenced by the number of Pauli operators of the specific Hamiltonian. For example, when the computation time for the spin glass shards is times that for the Ising model, while the number of Pauli operators is times. Considering this effect, the DACP method is still advantageous over the shift-invert approach in the worst case where the exchange interactions run over all possible spin pairs.

| DACP method | Shift-invert method | |

|---|---|---|

| Ising | 1.49 | 1.42 |

| Glass | 1.50 | 1.47 |

Furthermore, the scaling among these two methods is comparable. To compare quantitatively, we extract the scaling constants of the four lines by fitting the numerical results, where is defined by . The values of are shown in Table 1. Indeed, the four scaling constants are quite close. Suppose that remains unchanged for a bigger , then the DACP method’s advantage in time cost may keep on for rather large systems. In addition, as illustrated by the numerical tests of the shift-invert method in Pietracaprina et al. (2018), the factorization time for finding dominates other computation steps. Considering the factorization part only, the execution time in Pietracaprina et al. (2018) exhibits a scaling constant , indicating a worse scaling behavior for systems with large spins. The efficiency of the DACP method is thus confirmed.

In Fig. 4 we show the computation time versus the number of converged eigenvalues, for the two systems of size . The horizontal axis represents the number of computed eigenvalues satisfying the condition in each test. Recall that the DACP method is divided into three parts: the exp-semicircle filtration, Chebyshev evolution, and subspace diagonalization. We denote their time consumption as , , and , respectively, while solving time is the full computation time . One immediately notice that for the two systems, computation time decreases on the left side, reaching a plateau at the middle region, while quickly increases on the right side. Yet the dashed lines present a quite small increasing rate for the whole region, as the horizontal axis is in logarithmic scale. In the middle region, where the DACP method performs the best, the solving time and the evolution time roughly coincide with each other.

These features are easily understood if one considers the following four facts. (i) Notice that the DOS is usually a bell-shape profile peaked at zero in spin systems and that is typically a tiny parameter ( for ), thus one may safely take as a constant in . Consequently, the number of required eigenvalues is . (ii) Setting the action of the Hamiltonian on the state, , as a basic step, and denoting the computation time of the basic step as , we may count the filtration time as times twice the cut-off order , i.e., . (iii) Similarly, we find the Chebyshev evolution time is since . (iv) It is well known that the full diagonalization time is proportional to the cube of the matrix size, i.e., . The combination of these computation times clearly explains the behavior shown in Fig. 4. For the left side, the exp-semicircle filtration dominates, as is inversely proportional to the parameter . Whereas, when it comes to the intermediate region where the number of eigenvalues is several hundreds to thousands, the evolution time consists of the majority of the computation time while the other two are negligible. As shown in Fig. 4, the performance of DACP method is approximately the same in finding to eigenvalues. As keeps increasing, the subspace diagonalization time eventually consumes the most computation time.

We remark that the plateau in the middle region in Fig. 4 distinguishes the DACP method from those iterative filtering ones Wyatt (1995); Guan and Zhang (2020); Pieper et al. (2016); Zhou and Saad (2007); Zhou (2010); Fang and Saad (2012), although all of them take use of the Chebyshev polynomials. The iterative methods usually require a larger amount of filtration and reorthogonalization, thus a longer convergence time, in finding more eigenvalues Pietracaprina et al. (2018); Fang and Saad (2012); Sierant et al. (2020). In fact, as illustrated in Ref. Pietracaprina et al. (2018), one finds that the computation time is roughly proportional to the number of eigenvalues required for the shift-invert approach. The DACP method is thus highly desirable for large scale eigenvalues computations.

Finally, we note that the memory requirements by DACP method is rather small, as it works in a matrix-vector product mode which avoids an explicit matrix representation of the Hamiltonian. Moreover, as shown in Append. C, the whole set of states in Eq. (8) is not preserved in memory. The major memory consumption is the storage of elements and , thus the memory requirements of the DACP method relates only to the dimension of subspace . By the settings of this work, it occupies around GB of memory for appropriately calculating eigenvalues in systems. On the contrary, for the shift-invert method, the matrix size of grows rather fast as the system size increases (proportional to ), demanding a large amount of memory. For example, in our tests it consumes GB of memory for systems, which is already a factor of more than that of the DACP method.

IV Conclusion

We propose the DACP method to efficiently calculate a large scale (at least ) of central eigenvalues with high precision, by employing twice the Chebyshev polynomials. The proposed method is not a general eigensolver, as the generality gives way to the efficiency. It explores the excellent properties of Chebyshev polynomial to efficiently filter out those non-central parts of spectrum and to construct the appropriate basis states for the central spectrum. The numerical tests for the Ising model and the spin glass shards confirm the exactness and efficiency of the DACP method. Compared to the widely used shift-invert method, the DACP method gives a considerable increase in the speed of computations, for the Ising model up to a factor of while for the spin glass shards the increase in speed is less but still considerable (a factor of ). The memory requirement is drastically decreased, up to a factor of for spin systems and even more for larger systems. Moreover, as shown in Append. D, the DACP method is both more stable and more efficient than the Lanczos method applied with the exp-semicircle filter. As a powerful tool for central eigenvalues calculation, the DACP method may find potential applications in many physical problems, such as many-body localization in condensed matter physics Nandkishore and Huse (2015); Abanin et al. (2019); Luitz et al. (2015); Hopjan and Heidrich-Meisner (2020), and level statistics in quantum chaos Haake (2010); Georgeot and Shepelyansky (1998); Sierant and Zakrzewski (2020); Mondal et al. (2020).

Appendix A Chebyshev polynomial

In this paper, we limit ourselves to that only the polynomial combinations of are the allowed operations. We utilize the Chebyshev polynomial to fulfill the tasks mentioned above. It is the key to bridge the combinations of with an approximately exponential function of , either or . Due to its several remarkable properties, the Chebyshev polynomial may exhaust the potential of this type of methods.

The th order Chebyshev polynomial of the first kind is defined by

| (14) |

with initial conditions and Mason and Handscomb (2003). It is a piece-wise function containing two different kinds of expression, while being the polynomial function, it is both continuous and smooth. For simplicity, let us set () when and set when , the corresponding range of is and , respectively. In terms of the variable , Eq. (14) becomes

| (15) |

One may easily observe that is a sine or cosine-like oscillation function bounded by and inside the interval , as illustrated in Fig. 5 (a-c), while it grows extremely fast outside , as shown in Fig. 5 (d-f).

Note that . It is natural to expect an exponential growth of the Chebyshev polynomial outside the interval . In fact, it is known that among all polynomials with degree , the Chebyshev polynomial grows the fastest outside the interval under comparable conditions Rivlin (1969).

Associated with those properties is a practically useful one: can be efficiently determined by using the -term recurrence

| (16) |

All these properties of the Chebyshev polynomial render it a powerful toolbox and are of great use for the DACP method.

Appendix B Detailed deductions of Eq. (8)

Here we derive explicit expressions showing how to construct the set Eq. (8) via the Chebyshev evolution. We focus on the case that , which is fairly reasonable for large () spin systems.

| (17) | ||||

Using the fact that when is small, , we have at the third line of Eq. (17). Therefore, we have the expression

| (18) |

We then conduct a Chebyshev evolution with a cutoff order , recording both and when with . After the evolution is done, the set of states Eq. (8) is automatically generated.

Appendix C Evaluation of matrix elements and solution of the generalized eigenvalue problem

As shown in Append. B, we may rewrite the basis Eq. (8), or , using the Chebyshev polynomials:

| (19) |

where , , , , etc. For simplicity, one may further assume is an even number.

The elements , where and are directly determined by and . The correspondence is one to one. By making use of the relation

| (20) |

one may even find the matrix elements without recording any states during the Chebyshev evolution. Instead, we simply record the two numbers and at the appropriate time, i.e., when , , and .

Finally, we arrive at the explicit expressions of matrix elements

| (21) | |||

| (22) |

Also note that since is needed, where both and may reach the maximum value , the cut-off order is doubled to in this mode.

For the generalized eigenvalue problem Eq. (9), the Hermitian matrix is first diagonalized as

| (23) |

where is the eigenvector matrix for , , and is the associated eigenvalue matrix. Since is generally singular, we then contract the matrix by elimination of the columns associated with eigenvalues with absolute value below a cutoff . Denoting the number of retained eigenvalues as , the contracted eigenvector matrix is of order , and

| (24) |

The next step is to form the contracted Hamiltonian matrix . Since

| (25) |

denoting the transformation matrix , the contracted Hamiltonian matrix is

| (26) |

The Hermitian matrix of order with around to is then diagonalized directly

| (27) |

where is the eigenvector matrix of and is a diagonal matrix consisting of those desired eigenvalues of the original Hamiltonian contained in . The eigenstates of the projected Hamiltonian may be obtained through elementary matrix algebra:

| (28) |

Denoting the Eq. (19) as a matrix with being the -th column, the eigenstates of the original Hamiltonian contained in is

| (29) |

We finally get the answers and .

Appendix D Comparison with the Lanczos method

Since the Lanczos method is widely employed to compute the lowest (or highest) eigenvalues, while the exp-semicircle filter provides a means to transform the central eigenvalues to the highest ones, naturally one may combine them together to efficiently compute the central eigenvalues, a way far simpler than the DACP method. But there are several reasons to prefer complicated techniques used in the DACP method to the standard construction of the Krylov space, especially when large scale computations are required.

First, it is known that the Lanczos method is not numerically stable under practical conditions. Specifically, although the Lanczos algorithm shows perfect properties in theory, in practice it loses many of its designed features, e.g., global orthogonality and linear independence among Lanczos recursion states Chen and Guo (2003); Cullum and Willoughby (1985); Saad (2011). These defects effectively limits the number of eigenvalues which can be computed. In addition, it seems that the emergence of generalized eigenvalue problem (to deal with non-orthogonal base states) is unavoidable due to the error accumulations Parlett (1987); Wall and Neuhauser (1995). The Chebyshev recursion, on the other hand, possesses many interesting properties common in both the ideal and practical calculations Chen and Guo (2003). In particular, it is accurate and stable for , allowing the propagation in the Chebyshev order domain for tens of thousands of steps without accumulating significant errors Chen and Guo (1999).

Second, taking the reorthogonalization step into consideration, the efficiency of the Lanczos algorithm decreases. The reorthogonalization step is a necessary part in both the Lanczos and the Arnoldi method. When there is a large amount (e.g., several thousands) of eigenvalues to be computed, the total cost is actually dominated by the reorthogonalization Zhou and Saad (2007); Zhou (2010); Saad et al. (2010). Suppose the total required number of eigenvalues is and the Hilbert space dimension is , then the Lanczos-type methods scale as , as every generated Ritz vector needs to be reorthogonalized against the existing ones (see also the discussions in Refs. Zhou (2010); Lehoucq et al. (1998)). In comparison, we have shown in Sec. III that , , and , where each one of them is better than (). Although partial reorthogonalization schemes have been proposed, they result in an increased cost in computations as well as memory traffic Fang and Saad (2012); Bekas et al. (2008), and they are not guaranteed to succeed when the accuracy requirement becomes strict Bekas et al. (2008). Moreover, the DACP method and the Lanczos algorithm are different in terms of space complexity, as the former shows , while the latter requires once the reorthogonalizations are needed Lehoucq et al. (1998). Therefore, the DACP method has superiority over the Lanczos algorithm in both the time and the space complexity.

Finally, ignoring the requirement of reorthogonalizations, we find that the two methods are comparable in both the time and the space complexity. Recall that the Chebyshev evolution time, being the dominate term in the DACP method, becomes , where is the time for matrix-vector product. We then discuss the time complexity of a combined version, the Lanczos method with the exp-semicircle filter. As shown in Fig. 1 of the Supplemental Material in Ref. Sierant et al. (2020), and the discussions in Ref. Zhou and Saad (2007), the number of Lanczos steps shall be at least twice the number of requested eigenvalues , i.e., . Since iterations are needed, and every iteration requires a filter application with , the computation time reads . Therefore, the DACP method and the combination of Lanczos with the exp-semicircle filter share a same time complexity.

In summary, the DACP method is both more stable and more efficient than the Lanczos method applied with the exp-semicircle filter.

Acknowledgment

We thank X.-H. Deng for discussions. This work is supported by the NSFC Grant No. 91836101, No. U1930201, and No. 11574239. The numerical calculations in this paper have been done on the supercomputing system in the Supercomputing Center of Wuhan University.

References

- Haake (2010) F. Haake, Quantum Signatures of Chaos (Springer, Berlin Heidelberg, 2010).

- Brody et al. (1981) T. A. Brody, J. Flores, J. B. French, P. A. Mello, A. Pandey, and S. S. M. Wong, Rev. Mod. Phys. 53, 385 (1981).

- Bohigas et al. (1984) O. Bohigas, M. J. Giannoni, and C. Schmit, Phys. Rev. Lett. 52, 1 (1984).

- Mehta (1991) M. L. Mehta, Random Matrices (Academic Press, New York, 1991).

- Relaño et al. (2002) A. Relaño, J. M. G. Gómez, R. A. Molina, J. Retamosa, and E. Faleiro, Phys. Rev. Lett. 89, 244102 (2002).

- Basko et al. (2006) D. Basko, I. Aleiner, and B. Altshuler, Ann. Phys. 321, 1126 (2006).

- Nandkishore and Huse (2015) R. Nandkishore and D. A. Huse, Annu. Rev. Condens. Matter Phys. 6, 15 (2015).

- Pietracaprina et al. (2018) F. Pietracaprina, N. Macé, D. J. Luitz, and F. Alet, SciPost Phys. 5, 45 (2018).

- Ericsson and Ruhe (1980) T. Ericsson and A. Ruhe, Math. Comput. 35, 1251 (1980).

- Wyatt (1995) R. E. Wyatt, Phys. Rev. E 51, 3643 (1995).

- Minehardt et al. (1997) T. J. Minehardt, J. D. Adcock, and R. E. Wyatt, Phys. Rev. E 56, 4837 (1997).

- Lanczos (1950) C. Lanczos, J. Res. Natl. Bur. Stand. 45, 255 (1950).

- Mandelshtam and Taylor (1995) V. A. Mandelshtam and H. S. Taylor, J. Chem. Phys. 103, 2903 (1995).

- Bai et al. (2000) Z. Bai, J. Demmel, J. Dongarra, A. Ruhe, and H. van der Vorst (Eds.), Templates for the Solution of Algebraic Eigenvalue Problems: A Practical Guide. (SIAM, Philadelphia, 2000).

- Luitz et al. (2015) D. J. Luitz, N. Laflorencie, and F. Alet, Phys. Rev. B 91, 081103(R) (2015).

- Sierant and Zakrzewski (2020) P. Sierant and J. Zakrzewski, Phys. Rev. B 101, 104201 (2020).

- Hopjan and Heidrich-Meisner (2020) M. Hopjan and F. Heidrich-Meisner, Phys. Rev. A 101, 063617 (2020).

- Šuntajs et al. (2020) J. Šuntajs, J. Bonča, T. Prosen, and L. Vidmar, Phys. Rev. B 102, 064207 (2020).

- Li et al. (2016) R. Li, Y. Xi, E. Vecharynski, C. Yang, and Y. Saad, SIAM J. Sci. Comput. 38, A2512 (2016).

- Fang and Saad (2012) H. R. Fang and Y. Saad, SIAM J. Sci. Comput. 34, A2220 (2012).

- Sierant et al. (2020) P. Sierant, M. Lewenstein, and J. Zakrzewski, Phys. Rev. Lett. 125, 156601 (2020).

- Guan and Zhang (2020) H. Guan and W. Zhang, Chin. Phys. B (2020).

- Pieper et al. (2016) A. Pieper, M. Kreutzer, A. Alvermann, M. Galgon, H. Fehske, G. Hager, B. Lang, and G. Wellein, J. Comput. Phys. 325, 226 (2016).

- Shklovskii et al. (1993) B. I. Shklovskii, B. Shapiro, B. R. Sears, P. Lambrianides, and H. B. Shore, Phys. Rev. B 47, 11487 (1993).

- Georgeot and Shepelyansky (1998) B. Georgeot and D. L. Shepelyansky, Phys. Rev. Lett. 81, 5129 (1998).

- Zhou and Saad (2007) Y. Zhou and Y. Saad, SIAM J. Matrix Anal. Appl. 29, 954 (2007).

- Zhou (2010) Y. Zhou, J. Comput. Phys. 229, 9188 (2010).

- Poulin and Wocjan (2009) D. Poulin and P. Wocjan, Phys. Rev. Lett. 102, 130503 (2009).

- Rivlin (1969) T. J. Rivlin, An Introduction to the Approximation of Functions (Blaisdell Publishing Company, Waltham, Massachusetts, 1969).

- Chen and Guo (1999) R. Chen and H. Guo, Comput. Phys. Commun. 119, 19 (1999).

- Anderson et al. (1994) E. Anderson, Z. Bai, C. Bischof, J. Demmel, J. Dongarra, J. D. Croz, A. Greenbaum, S. Hammarling, A. McKenney, S. Ostrouchov, and D. Sorensen, LAPLACK User’s Guide—Release 2.0 (SIAM, Philadelphia, 1994).

- Saad (2011) Y. Saad, Numerical Methods for Large Eigenvalue Problems, 2nd ed. (SIAM, Philadelphia, 2011).

- Nielsen and Chuang (2011) M. A. Nielsen and I. L. Chuang, Quantum Computation and Quantum Information (Cambridge University Press, Cambridge, England, 2011).

- Bogolubov and N. N. Bogolubov (2009) N. N. Bogolubov and J. N. N. Bogolubov, Introduction to Quantum Statistical Mechanics (World Scientific, Singapore, 2009).

- Sachdev (2011) S. Sachdev, Quantum Phase Transitions (Cambridge University Press, Cambridge, England, 2011).

- Dutta et al. (2015) A. Dutta, G. Aeppli, B. K. Chakrabarti, U. Divakaran, T. F. Rosenbaum, and DiptimanSen, Quantum Phase Transitions in Transverse Field Spin Models: From Statistical Physics to Quantum Information (Cambridge University Press, Cambridge, England, 2015).

- Fisher (1995) D. S. Fisher, Phys. Rev. B 51, 6411 (1995).

- Schollwöck (2005) U. Schollwöck, Rev. Mod. Phys. 77, 259 (2005).

- Zhou and Li (2011) Y. Zhou and R.-C. Li, Linear Algebra Appl. 435, 480 (2011).

- Hams and De Raedt (2000) A. Hams and H. De Raedt, Phys. Rev. E 62, 4365 (2000).

- Lehoucq et al. (1998) R. B. Lehoucq, D. C. Sorensen, and C. Yang, ARPACK User’s Guide: Solution of Large Scale Eigenvalue Problems with Implicitly Restarted Arnoldi Methods (SIAM, Philadelphia, 1998).

- Abanin et al. (2019) D. A. Abanin, E. Altman, I. Bloch, and M. Serbyn, Rev. Mod. Phys. 91, 021001 (2019).

- Mondal et al. (2020) D. Mondal, S. Sinha, and S. Sinha, Phys. Rev. E 102, 020101(R) (2020).

- Mason and Handscomb (2003) J. C. Mason and D. C. Handscomb, Chebyshev Polynomials (Chapman and Hall Publisher, Boca Raton, 2003).

- Chen and Guo (2003) R. Chen and H. Guo, J. Chem. Phys. 119, 5762 (2003).

- Cullum and Willoughby (1985) J. K. Cullum and R. A. Willoughby, Lanczos algorithms for large symmetric eigenvalue computations (SIAM, Philadelphia, 1985) p. 92.

- Parlett (1987) B. N. Parlett, The Symmetric Eigenvalue Problem (SIAM, Philadelphia, 1987) p. 102.

- Wall and Neuhauser (1995) M. R. Wall and D. Neuhauser, J. Chem. Phys. 102, 8011 (1995).

- Saad et al. (2010) Y. Saad, Y. Zhou, C. Bekas, M. L. Tiago, and J. Chelikowsky, Phys. Status Solidi (B) 243, 2188 (2010).

- Bekas et al. (2008) C. Bekas, E. Kokiopoulou, and Y. Saad, SIAM J. Matrix Anal. Appl. 30, 397 (2008).