DSOR: A Scalable Statistical Filter for Removing Falling Snow from LiDAR Point Clouds in Severe Winter Weather

Abstract

For autonomous vehicles to viably replace human drivers, they must contend with inclement weather. Falling rain and snow introduce noise in LiDAR returns, resulting in both false positive and false negative object detections. In this article we introduce the Winter Adverse Driving dataSet (WADS), collected in the snow belt region of Michigan’s Upper Peninsula. WADS is the first multi-modal dataset featuring dense point-wise labeled sequential LiDAR scans collected in severe winter weather, that is, weather that would cause an experienced driver to alter their driving behavior. We have labeled and will make available over 7 GB, or 3.6 billion labeled LiDAR points, out of over 26 TB of total LiDAR and camera data collected. We also present the Dynamic Statistical Outlier Removal (DSOR) filter, a statistical PCL-based filter capable of removing snow with a higher recall than the state-of-the-art snow de-noising filter while being 28% faster. Further, the DSOR filter is shown to have a lower time complexity than the state of the art, resulting in improved scalability.

Our labeled dataset and DSOR filter will be made available at https://bitbucket.org/autonomymtu/dsor_filter.

Index Terms:

Autonomous Driving, Adverse Weather Dataset, Point Cloud De-noising Filter

I Introduction

Autonomous Vehicle (AV) perception systems often rely on Light Detection And Ranging (LiDAR) sensors for perception, mapping, and localization. Precipitation such as rain and snow can reduce the performance of these systems [1]. LiDAR sensors are particularly affected due to their inherent beam divergence and short pulse duration. For many AV-focused LiDAR sensors, snowflake detection noise is concentrated near the sensor and manifests as clutter noise, as seen in Fig. 1. Snow clutter noise can introduce false detections as well as obscure important obstacles, leading to critical false-negative detections [2]. As an example, Fig. 1 (top) shows a LiDAR point cloud captured in Hancock, MI on the 12th of February 2020 with a snow rate of 0.6 in/hr (1.5 cm/hr). Two oncoming vehicles are obscured by the snow and evade Autoware’s Euclidean clustering for object detection [3].

Table I: Overview of relevant LiDAR datasets compared with WADS.

| Dataset | LiDAR | Labeling | Inclement Weather |
|---|---|---|---|
| SemanticKITTI [4] | 64 channel | point-wise | No |
| nuScenes-lidarseg [5] | 32 channel | point-wise | No |
| ApolloScape [6] | 64 channel | point-wise | No |
| DENSE [7] | 32 & 64 channel | bounding boxes | Yes |
| CADC [8] | 32 channel | bounding boxes | Yes |
| WADS | 64 channel | point-wise | Yes |

Precipitation is often hard to predict and severe events are infrequent. Populated North American cities such as Chicago, Detroit, and Minneapolis can receive over 1 inch (2.5 cm) of snow per hour during severe winter weather events. To enable universal adoption of AVs, perception systems must be able to operate reliably in conditions like these that may arise unexpectedly or would be tolerated by an experienced human driver. Neural Networks (NN) trained on large datasets fail to perform well in winter driving conditions; lack of available winter weather datasets has been cited as a likely reason [9]. Michigan’s Keweenaw Peninsula receives on average over 200 in. (500 cm) of snow annually. Here, blowing snow can result in both intermittent and persistent white-out conditions where visibility is near zero. The frequency of such adverse weather in this area allows reliable collection of large amounts of winter driving data featuring extreme snow events [2]. In this paper, we introduce the first point-wise labeled dataset featuring moderate to severe winter driving conditions collected in suburban environments. In the context of this work, we refer to severe winter weather as conditions that would result in a change in the behavior of an experienced human driver due to loss of visibility or uncertainty in road surface conditions. Our Winter Adverse Driving dataSet (WADS), aptly named after the largest dorm on Michigan Tech’s campus, features over 7 GB of labeled LiDAR point clouds containing active falling snow as well as accumulated snow on the side of the roads from vehicle movement and snow removal. Point-wise labels enable every point in a scan to have a unique class, facilitating capture of fine details such as individual snowflakes. Such a labeled dataset dedicated to winter driving will propel development of AVs in adverse weather and pave the way for their universal adoption.

A common technique for dealing with noise in LiDAR point clouds is a filtering step prior to a detection stage. Generalized filters, however, do not account for the distribution of the snow and risk losing important keypoints in the environment. LiDAR returns are volumetrically non-uniform, resulting in a point density that decreases with increasing range. Noise introduced by falling snow shows the same non-uniformity and follows a log-normal distribution [10]. To address this limitation, we present the Dynamic Statistical Outlier Removal (DSOR) filter to remove snow from LiDAR point clouds. Our solution dynamically changes the filter threshold with range to remove most of the snow and outlier noise while preserving environmental features. Fig. 1 (bottom) shows that most of the snow has been cleared by our DSOR filter. We compare our filter to the Dynamic Radius Outlier Removal (DROR) filter [11], which we consider to be the state-of-the-art filter for snow de-noising. The proposed DSOR filter is shown to perform 28% faster than the DROR filter with a 4% increase in the filter recall.

The contributions of this paper can be summarized as follows:

- We introduce the first dense point-wise labeled Winter Adverse Driving dataSet (WADS) featuring moderate to severe snow.

- We introduce the DSOR filter to de-noise point clouds corrupted by falling snow.

- We show that the DSOR filter outperforms the state of the art in recall and has a lower time complexity resulting in improved scalability.

II Related Work

Automotive LiDARs can be adversely affected by weather related scattering and absorption effects. Rain adds noise to point clouds severely degrading performance of object detection algorithms [12]. Falling snow in winter can be detected as diffuse, solid objects, often smaller than the laser beam cross section. Rasmussen et al. [13] show that small snow particles act as Lambertian targets and create significant backscattering. To better understand the interaction of snow with automotive 3D LiDAR, Roy et al. [14] modeled the interaction between snowflakes and laser pulses. They show that snow detections are concentrated near the sensor and detection statistics are governed by the characteristics of the laser beam and rate of precipitation. Michaud et al. [10] show that the probability of detecting snow in automotive 3D LiDAR follows a log-normal distribution with distance from the sensor.

II-A Datasets

Over the past decade, several annotated datasets have been released with LiDAR scans to aid with the development of AV perception tasks [15]. Few include multi-sensor winter weather data with the extreme events necessary for training NN models. The lack of inclement weather data has been addressed in some literature by adding artificial corruption to existing datasets [16, 17]. Pfeuffer and Dietmayer [9] show that models trained on large datasets such as KITTI [18] fail to perform well when artificial rain or snow is introduced, implying that availability of adverse data takes precedence over the size of the dataset.

The KITTI [18] and nuScenes [5] datasets provide LiDAR scans annotated with bounding boxes, while the SemanticKITTI [4] and nuScenes-lidarseg datasets provide point-wise annotations. These datasets, however, provide no data in inclement weather. The ApolloScape dataset [6] includes LiDAR scans with a semantic mask to extract point-wise annotations. The DENSE dataset [7] includes rain, fog, and snow but the conditions do not rise to the level of being severe. Moreover, annotations in DENSE are limited to bounding boxes and not point-wise labels. The CADC dataset [8] includes adverse weather data collected in Canada with bounding boxes around vehicles and pedestrians. Our dataset provides point-wise annotations and has been collected in consistently severe conditions. Table I provides an overview of relevant datasets compared with ours.

II-B Filters

Noise introduced by snow particles in LiDAR scans can be filtered out in a number of ways; [1] and [14] introduce intensity-based filters. These filters assume LiDAR returns from snowflakes will have a lower intensity and remove snow noise by intensity-value thresholding. However, intensity is dependent on both the laser wavelength and the target reflectance and, as shown in [13], backscatter from snow particles near the sensor can be significant. To overcome this limitation, Park et al. [1] propose a second clustering stage based on point density to preserve objects of interest. However, in heavy snow with a high volume density, clustering techniques will classify snow as objects of interest.

Another filtering technique is to apply image processing on LiDAR point clouds. Here, point clouds are converted to a 2D representation and techniques like Gaussian filters or their derivatives are applied to remove noise. However, as shown by Charron et al. [11], 3D point clouds are sparse and such approaches not only fail to effectively remove snow but also have the negative effect of smoothing edges in keypoint features. Duan et al. [19] use Principal Component Analysis (PCA) to convert 3D point clouds to 2D and apply DBSCAN clustering to find sparse regions and remove them. However, as mentioned previously, clustering techniques fail at high snowfall rates.

Filtering can also be applied to the 3D points directly. The Point Cloud Library (PCL) [20] includes general purpose filters such as the voxel grid filter, Statistical Outlier Removal (SOR) filter and Radius Outlier Removal (ROR) filter; these are, however, not designed for snow. They fail to account for the non-uniformity of the point clouds and hence Charron et al. [11] propose the Dynamic Radius Outlier Removal (DROR) filter for removing snow corruption. Their filter dynamically changes the search radius with range to effectively remove snow. In this work, we compare our DSOR filter with the DROR filter, which we consider to be the state of the art.

III The WADS Dataset

This work introduces point labels for our winter LiDAR dataset [2]. Captured data has been split into sequences of approximately 100 scans each. Each set has sequential scans to further development of algorithms using spatial information. Multiple suburban locations have been captured, including 2-lane highways, residential areas and parked as well as moving vehicles. A total of 26 TB of multi-modal data have been captured. We apply point-wise labels to the LiDAR scans and will make available over 7 GB of data amounting to over 3.6 billion labeled points. Simultaneous Long-Wave Infra-Red (LWIR) and visible color camera imagery are also available [2] but not considered here.

III-A Labeling

Bounding boxes provide vector annotations and often include undesired background objects which can be detrimental for AV perception tasks such as semantic segmentation. We have opted for point-wise labels as they are more precise and enable fine details in the environment to be highlighted, such as individual snowflakes. To maintain compatibility with existing systems and ensure adoption of inclement weather data into existing frameworks, we adopt the popular KITTI format [21]. We leverage the point-cloud labeling tool introduced by Behley et al. [4]. This is a manual labeling tool that can load point clouds either as multiple scans for quick labeling or as single scans for fine details. Fig. 2 shows a labeled scan from our WADS dataset.
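As an illustration of what a KITTI-style layout looks like on disk, the following is a minimal sketch of a reader. It assumes the SemanticKITTI conventions used by the labeling tool of Behley et al. [4]: a `.bin` file of consecutive little-endian float32 `(x, y, z, intensity)` records and a `.label` file with one uint32 per point, whose lower 16 bits hold the semantic class and whose upper 16 bits hold an instance id. The function names and file paths are hypothetical, and the exact WADS file layout should be checked against the released data.

```python
import struct
from pathlib import Path


def read_scan(bin_path):
    """Read a KITTI-style .bin scan: consecutive float32 (x, y, z, intensity) records."""
    raw = Path(bin_path).read_bytes()
    return list(struct.iter_unpack("<4f", raw))


def read_labels(label_path):
    """Read a SemanticKITTI-style .label file: one uint32 per point.

    Assumed layout: lower 16 bits = semantic class id, upper 16 bits = instance id.
    Returns a list of (class_id, instance_id) tuples, one per point.
    """
    raw = Path(label_path).read_bytes()
    return [(value & 0xFFFF, value >> 16) for (value,) in struct.iter_unpack("<I", raw)]
```

Because points and labels are stored in the same order, `zip(read_scan(...), read_labels(...))` pairs each point with its class, which is what makes per-snowflake labels usable downstream.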

III-B Statistics

Every point in a LiDAR scan has been labeled into one of 22 classes as shown in Fig. 3. Over 7 GB or 3.6 billion points have been labeled in all. Here, classes are grouped into categories for easy viewing. It can be seen that the majority of labeled points lie in urban driving scenarios with roads, buildings and various types of vehicles representing most of our labeled data.

In addition to these classes, we introduce two new labels to represent snow. active-snow captures falling snow particles and associated clutter noise in a LiDAR return. accumulated-snow captures snow that builds up on the sides of drive-able surfaces from vehicle traffic and snow removal. Accumulated snow often changes, sometimes through a day, enough to confuse feature-based algorithms. Access to such data will be useful for AV tasks like object detection, localization and mapping, and semantic and panoptic segmentation in adverse weather.

IV Filter Implementation

PCL’s SOR filter is a general noise removal filter widely used for cleaning point clouds. It does not account for the non-uniform distribution of the point cloud and, when applied to a scan with falling snow, fails to remove it as seen in Fig. 4 (second from left).

The state of the art DROR filter applies a threshold to the mean distance of points to their neighbors in a given radius to remove sparse snowflakes. To address changing LiDAR point spacing with distance, the DROR filter changes the search radius as the distance increases from the sensor. The DROR filter achieves a high accuracy but fails to produce a clean point cloud at high snowfall rates as shown in Fig. 4 (second from right).

IV-A Proposed DSOR Filter

The DSOR filter is an extension of PCL’s SOR filter, designed to address the inherent non-uniformity in point clouds. Algorithm 1 helps visualize our approach. The point cloud is first loaded into a k-d tree, and a k-nearest neighbor search is applied to every point to find its k nearest neighbors, where k is provided by the user, and to compute the mean distance to them. The mean ($\mu$) and standard deviation ($\sigma$) of these mean distances are calculated to compute a global threshold ($T_g$) given by equation 1, where $s$ is a constant multiplicative factor for the standard deviation, as in PCL’s SOR filter.

$$T_g = \mu + (\sigma \times s) \tag{1}$$

To account for the non-uniform spacing of points, the distance ($d$) of every point from the sensor is calculated and a new dynamic threshold ($T_d$) is computed using equation 2.

$$T_d = r \times T_g \times d \tag{2}$$

Points with mean distances greater than $T_d$ are considered to be outliers and removed. Here, $r$ is a constant, a multiplicative factor for point spacing. Increasing $r$ will increase $T_d$ and will result in the filter rejecting fewer points, whereas reducing $r$ will decrease $T_d$, resulting in a more aggressive filter. The DSOR filter, when applied to a snow-corrupted point cloud, produces the cleanest point cloud of the approaches compared, as shown in Fig. 4 (rightmost).
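The steps described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' PCL implementation. It assumes the sensor sits at the origin, uses a brute-force neighbor search in place of a k-d tree, takes the global threshold as the mean plus `s` standard deviations (the PCL SOR convention, so the name `s` is an assumption here), and treats points whose mean neighbor distance exceeds the dynamic threshold as outliers. The default values of `k`, `s` and `r` are placeholders rather than tuned settings.

```python
import math
import statistics


def dsor_filter(points, k=4, s=0.01, r=0.05):
    """Split (x, y, z) points into (inliers, outliers); sensor assumed at the origin.

    k, s and r are illustrative defaults, not the authors' tuned parameters.
    """
    if len(points) <= k:
        raise ValueError("need more than k points")

    # Mean distance from each point to its k nearest neighbours.
    # Brute force O(n^2) for clarity; a k-d tree would be used in practice.
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)

    mu = statistics.fmean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    t_g = mu + sigma * s  # global threshold, equation (1)

    inliers, outliers = [], []
    for p, d_mean in zip(points, mean_dists):
        rng = math.dist(p, (0.0, 0.0, 0.0))  # range of the point from the sensor
        t_d = r * t_g * rng                  # dynamic threshold, equation (2)
        (outliers if d_mean > t_d else inliers).append(p)
    return inliers, outliers
```

On a toy cloud with a dense wall 20 m away and a few isolated points near the origin, the near points have large mean neighbor distances but a small dynamic threshold, so they are rejected, while the wall is kept because its threshold grows with range.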

V Evaluation and Results

We apply the SOR, DROR and DSOR filters on data collected during heavy snow events with severely reduced visibility (severe conditions). All of the filters have been implemented on an Intel® Core™ i7-9750H CPU with 32 GB of RAM. In this section we compare the performance of these filters using both qualitative as well as quantitative results. Qualitative results include both visual performance of the filters and distribution of filtered points as a function of range. To quantify the results, the precision and recall as well as the filtering time have been considered. All results have been averaged over 100 point clouds with falling snow.

V-A Qualitative evaluation

Fig. 4 shows a qualitative comparison of the original point cloud, corrupted by snow, passed through different filtering techniques. The SOR filter fails to remove most of the snow and removes key environmental features. The DROR filter removes most of the snow while preserving environmental features. The DSOR filter removes even more snow detections from the point cloud while preserving key points removed by the SOR filter.

V-B Quantitative evaluation

To understand the visual performance of the filters, the percentage of filtered points as a function of range is plotted in Fig. 5.

DSOR vs SOR filter: Fig. 5(a) shows that the DSOR filter removes significantly more points than the SOR filter in the first 20 meters where most of the LiDAR interaction with snow particles occurs. Beyond 20 meters, the DSOR filter removes significantly fewer points than the SOR filter thus preserving key environmental features.

DSOR vs DROR filter: Fig. 5(b) shows that in the first 20 meters the DSOR filter also removes more points than the DROR filter. Beyond 20 meters, the DSOR filter continues to remove more points than the DROR filter, potentially leading to a lower precision at range.
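A range profile of this kind can be computed by binning points by their distance from the sensor. The sketch below takes one possible reading of "percentage of filtered points as a function of range", namely the share of points in each range bin that the filter removed. The function name, the 5 m bin width and the sensor-at-origin assumption are all illustrative and not taken from the paper.

```python
import math
from collections import Counter


def percent_filtered_by_range(all_points, removed_points, bin_width=5.0):
    """Map each range bin's lower edge (m) to the percentage of its points removed."""
    def bin_of(point):
        # Range from the sensor (assumed at the origin), floored to a bin index.
        return int(math.hypot(*point) // bin_width)

    total = Counter(bin_of(p) for p in all_points)
    removed = Counter(bin_of(p) for p in removed_points)
    return {b * bin_width: 100.0 * removed[b] / total[b] for b in sorted(total)}
```

Comparing two such profiles bin by bin, one per filter, gives the kind of near-range versus far-range contrast discussed above.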

V-C Precision and Recall

In the context of this work, the aim of a filter must be to remove as much snow as possible (recall) and leave other points untouched (precision). It is possible to filter out all of the snow (recall of 100%) at the expense of environmental features (low precision) using any filter. This, however, is not the aim of this work. Both the DSOR filter and the DROR filter have been tuned to filter most of the snow and preserve as much of the environment as possible as shown in Fig. 4.

As shown in Fig. 6, the DSOR filter achieves a greater recall (95.6%) than the DROR filter (91.9%). This shows that the DSOR filter removes more of the snow corruption correctly than the DROR filter. The DROR filter, however, achieves a greater precision (71.5%) at filtering snow as compared to the DSOR filter (65.1%). This shows that the DSOR filter removes more non-snow points or environmental features than the DROR filter. From Fig. 5(b) it is evident that the loss in precision for the DSOR filter stems from removing points beyond 20 meters. At this range, we observe that features such as tree-tops, poles and overhanging power lines have been filtered out. Such features are sparse, and in the winter can return just a few points. Decrease in precision could also stem from removal of noisy measurements.
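With point-wise labels, both metrics reduce to counting. The following minimal sketch treats "snow" as the positive class, so a true positive is a snow point that the filter removed. The function name and the boolean-list inputs are assumptions made for illustration.

```python
def snow_precision_recall(is_snow, is_removed):
    """Return (precision, recall) of a snow filter.

    is_snow[i]    -- True if point i is labeled as snow (ground truth).
    is_removed[i] -- True if the filter removed point i.
    """
    pairs = list(zip(is_snow, is_removed))
    tp = sum(1 for snow, removed in pairs if snow and removed)      # snow removed
    fp = sum(1 for snow, removed in pairs if not snow and removed)  # non-snow removed
    fn = sum(1 for snow, removed in pairs if snow and not removed)  # snow left behind
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

A filter that removes every point scores a recall of 1.0 but a precision equal to the fraction of snow in the scan, which is why both numbers are reported together.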

Table II: Execution time of each filter and speedup of the DSOR filter.

| Filter | Mean execution time (ms) | Std. dev. (ms) | DSOR speedup (ms) |
|---|---|---|---|
| DSOR | 369.68 | 18.1 | - |
| SOR | 371.98 | 19.2 | 2.3 |
| DROR | 510.55 | 33.9 | 140.87 |

V-D Filter Rate

Table II compares the time taken to filter a point cloud by the DSOR, SOR and DROR filters. Results have been averaged over 100 point clouds collected by a 64 channel LiDAR with roughly 200k points each. The DSOR filter is, on average, 141 ms or 28% faster than the state of the art DROR filter.
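For reference, the quoted speedup follows directly from the mean execution times in Table II:

$$510.55\ \text{ms} - 369.68\ \text{ms} = 140.87\ \text{ms}, \qquad \frac{140.87}{510.55} \approx 0.276 \approx 28\%$$

The same subtraction against the SOR filter gives $371.98 - 369.68 = 2.30$ ms, i.e. the two statistical filters run in essentially the same time.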

V-E Scalability

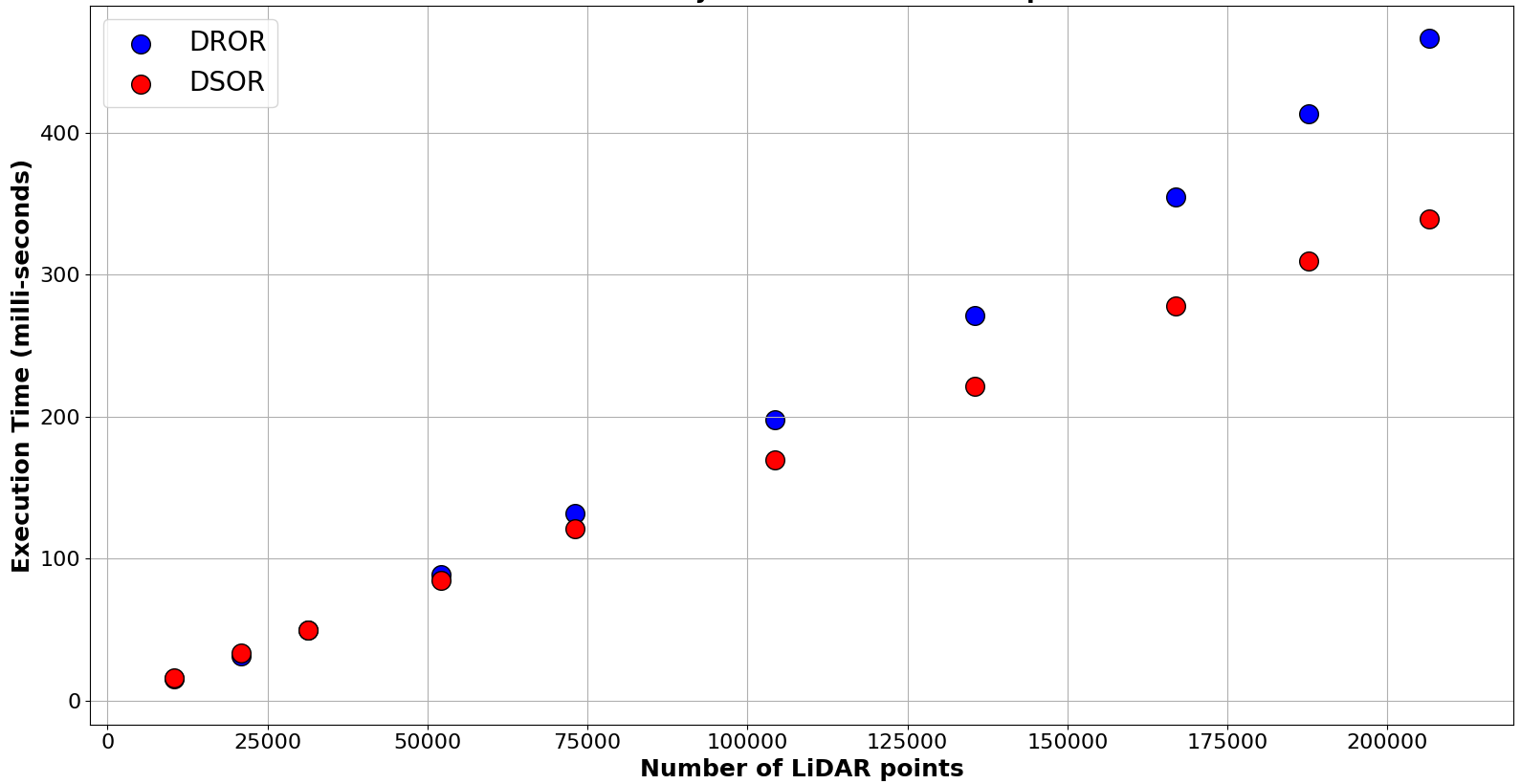

As LiDARs continue to evolve, sensors with higher point density and more lasers are expected. State-of-the-art AVs also concatenate returns from multiple LiDARs for increased visibility and dense spatial sampling. This increases the number of points being captured and processed in the pipeline. For this reason, filters need to scale with the size of point clouds to ensure adoption on AV systems. To compare the time taken for filtering by the DSOR filter and DROR filter, multiple levels of sub-sampling were applied to a sequence of point clouds from WADS. Fig. 7 shows that the DSOR filter has a lower execution time than the DROR filter as the size of a point cloud increases. The DROR filter uses a radius search and we speculate that the DROR filter has an approximate time complexity of [22]. A curve fit on Fig. 7 is consistent with this assessment. The DSOR filter uses a k-nearest neighbor search and has a lower time complexity of implying that our filter will scale better with the size of LiDAR point clouds.
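A scaling experiment of this kind can be approximated with a small timing harness. The sketch below is an assumption about how such a measurement might be set up, not the procedure used for Fig. 7: it draws uniform random sub-samples (the text does not state the sub-sampling method), runs a filter once per sub-sample, and records wall-clock time. The function name, the fractions and the seed are hypothetical.

```python
import random
import time


def time_filter_at_sizes(points, filter_fn, fractions=(0.25, 0.5, 1.0), seed=0):
    """Return [(n_points, seconds)] for filter_fn run on random sub-samples of points."""
    rng = random.Random(seed)  # fixed seed so the sub-samples are repeatable
    results = []
    for fraction in fractions:
        n = max(1, int(len(points) * fraction))
        subset = rng.sample(points, n)  # uniform sub-sampling without replacement
        start = time.perf_counter()
        filter_fn(subset)
        results.append((n, time.perf_counter() - start))
    return results
```

In practice each size would be timed over many scans and averaged, as was done for the execution times reported earlier, before fitting a curve to the resulting points.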

VI Conclusion

Adverse weather conditions negatively affect perception systems used in autonomous vehicles. In particular, LiDAR point clouds suffer from false detections (both positive and negative) introduced by falling rain and snow. Until now, a lack of datasets focused on inclement winter weather has limited the development of AVs to clear weather conditions. In this work, we introduced the Winter Adverse Driving dataSet (WADS), a dense, point-wise labeled dataset featuring severe winter weather. We also propose two class labels, for falling snow and accumulated snow, that are useful for AV tasks like object detection, localization and mapping, and semantic and panoptic segmentation in adverse weather. In the future we would like to increase the size of the labeled data and provide annotated images and possibly RADAR data to enable data fusion in winter weather.

We also present the Dynamic Statistical Outlier Removal (DSOR) filter for de-noising snow from LiDAR point clouds. We show that our filter outperforms the state of the art DROR filter by achieving a 4% higher recall and 28% lower execution time, without optimization. The DSOR filter has a lower time complexity enabling it to scale better with the size of point clouds. Further improvements in performance may be realized by using fast clustering and voxel-subsampling as shown in [23].

References

- [1] J.-I. Park, J. Park, and K.-S. Kim, “Fast and accurate desnowing algorithm for lidar point clouds,” IEEE Access, vol. 8, pp. 160202–160212, 2020.

- [2] J. P. Bos, D. Chopp, A. Kurup, and N. Spike, “Autonomy at the end of the earth: an inclement weather autonomous driving data set,” in Autonomous Systems: Sensors, Processing, and Security for Vehicles and Infrastructure 2020, vol. 11415, p. 1141507, International Society for Optics and Photonics, 2020.

- [3] S. Kato, S. Tokunaga, Y. Maruyama, S. Maeda, M. Hirabayashi, Y. Kitsukawa, A. Monrroy, T. Ando, Y. Fujii, and T. Azumi, “Autoware on board: Enabling autonomous vehicles with embedded systems,” in 2018 ACM/IEEE 9th International Conference on Cyber-Physical Systems (ICCPS), pp. 287–296, 2018.

- [4] J. Behley, M. Garbade, A. Milioto, J. Quenzel, S. Behnke, C. Stachniss, and J. Gall, “Semantickitti: A dataset for semantic scene understanding of lidar sequences,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 9297–9307, 2019.

- [5] H. Caesar, V. Bankiti, A. H. Lang, S. Vora, V. E. Liong, Q. Xu, A. Krishnan, Y. Pan, G. Baldan, and O. Beijbom, “nuscenes: A multimodal dataset for autonomous driving,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 11621–11631, 2020.

- [6] X. Huang, P. Wang, X. Cheng, D. Zhou, Q. Geng, and R. Yang, “The apolloscape open dataset for autonomous driving and its application,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, pp. 2702–2719, Oct 2020.

- [7] M. Bijelic, T. Gruber, F. Mannan, F. Kraus, W. Ritter, K. Dietmayer, and F. Heide, “Seeing through fog without seeing fog: Deep multimodal sensor fusion in unseen adverse weather,” in The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [8] M. Pitropov, D. E. Garcia, J. Rebello, M. Smart, C. Wang, K. Czarnecki, and S. Waslander, “Canadian adverse driving conditions dataset,” The International Journal of Robotics Research, vol. 40, pp. 681–690, Dec 2020.

- [9] A. Pfeuffer and K. Dietmayer, “Optimal sensor data fusion architecture for object detection in adverse weather conditions,” 2018.

- [10] S. Michaud, J.-F. Lalonde, and P. Giguere, “Towards characterizing the behavior of lidars in snowy conditions,” in 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), vol. 7, 2015.

- [11] N. Charron, S. Phillips, and S. L. Waslander, “De-noising of lidar point clouds corrupted by snowfall,” in 2018 15th Conference on Computer and Robot Vision (CRV), pp. 254–261, IEEE, 2018.

- [12] Q. Xu, Y. Zhou, W. Wang, C. R. Qi, and D. Anguelov, “Spg: Unsupervised domain adaptation for 3d object detection via semantic point generation,” 2021.

- [13] R. M. Rasmussen, J. Vivekanandan, J. Cole, B. Myers, and C. Masters, “The estimation of snowfall rate using visibility,” Journal of Applied Meteorology and Climatology, vol. 38, no. 10, pp. 1542–1563, 1999.

- [14] G. Roy, X. Cao, R. Bernier, and G. Tremblay, “Physical model of snow precipitation interaction with a 3d lidar scanner,” Appl. Opt., vol. 59, pp. 7660–7669, Sep 2020.

- [15] Y. Xie, J. Tian, and X. X. Zhu, “Linking points with labels in 3d: A review of point cloud semantic segmentation,” IEEE Geoscience and Remote Sensing Magazine, vol. 8, no. 4, pp. 38–59, 2020.

- [16] C. Sakaridis, D. Dai, and L. Van Gool, “Semantic foggy scene understanding with synthetic data,” International Journal of Computer Vision, vol. 126, pp. 973–992, Mar 2018.

- [17] R. Heinzler, F. Piewak, P. Schindler, and W. Stork, “CNN-Based Lidar Point Cloud De-Noising in Adverse Weather,” IEEE Robotics and Automation Letters, vol. 5, pp. 2514–2521, Apr. 2020.

- [18] A. Geiger, P. Lenz, C. Stiller, and R. Urtasun, “Vision meets robotics: The kitti dataset,” The International Journal of Robotics Research, vol. 32, no. 11, pp. 1231–1237, 2013.

- [19] Y. Duan, C. Yang, H. Chen, W. Yan, and H. Li, “Low-complexity point cloud filtering for lidar by pca-based dimension reduction,” 2020.

- [20] R. B. Rusu and S. Cousins, “3d is here: Point cloud library (pcl),” in 2011 IEEE International Conference on Robotics and Automation, pp. 1–4, 2011.

- [21] A. Geiger, P. Lenz, C. Stiller, and R. Urtasun, “Vision meets robotics: The kitti dataset,” International Journal of Robotics Research (IJRR), 2013.

- [22] Y. Lu, L. Cheng, T. Isenberg, C.-W. Fu, G. Chen, H. Liu, O. Deussen, and Y. Wang, “Curve complexity heuristic kd-trees for neighborhood-based exploration of 3d curves,” in Computer Graphics Forum, vol. 40, pp. 461–474, Wiley Online Library, 2021.

- [23] H. Balta, J. Velagic, W. Bosschaerts, G. De Cubber, and B. Siciliano, “Fast statistical outlier removal based method for large 3d point clouds of outdoor environments,” IFAC-PapersOnLine, vol. 51, no. 22, pp. 348–353, 2018.