DRVN (Deep Random Vortex Network): A new physics-informed machine learning method for simulating and inferring incompressible fluid flows

Abstract

We present the deep random vortex network (DRVN), a novel physics-informed framework for simulating and inferring the fluid dynamics governed by the incompressible Navier–Stokes equations. Unlike the existing physics-informed neural network (PINN), which embeds physical and geometric information through the residual of the equations and boundary data, DRVN automatically embeds this information into neural networks through neural random vortex dynamics equivalent to the Navier–Stokes equations. Specifically, the neural random vortex dynamics motivates a Monte Carlo-based loss function for training neural networks, which avoids the calculation of derivatives through auto-differentiation. Therefore, DRVN can efficiently solve Navier–Stokes equations with non-differentiable initial conditions and fractional operators. Furthermore, DRVN naturally embeds the boundary conditions into the kernel function of the neural random vortex dynamics and thus does not need additional data to obtain boundary information. We conduct experiments on forward and inverse problems with incompressible Navier–Stokes equations. The proposed method achieves accurate results for both simulation and inference. For situations that include singular initial conditions and agnostic boundary data, DRVN significantly outperforms the existing PINN method. Furthermore, compared with the conventional adjoint method for solving inverse problems, DRVN reduces the training time by two orders of magnitude while producing more precise estimates.

1 Introduction

The ubiquitous Navier–Stokes equations (NSEs) are indispensable for modeling fluids ranging from those studied in meteorology to ocean currents. [3, 40, 44, 46, 48] Consequently, since NSEs are widespread in scientific, [15, 49] industrial, [16, 30] and engineering applications, [8, 41] gaining a deep understanding of them is important. Recently, with the growth of computer technology and better availability of data, the development of deep learning techniques has led to many advances. Deep learning methods, such as physics-informed neural networks (PINNs), can help to solve partial differential equations (PDEs) accurately and efficiently, [38, 39, 17, 4, 11, 25, 32] which demonstrates the promising future of combining deep learning with scientific computing. In general, PINNs seamlessly embed information relating to the observed data and physical laws into neural networks via an automatic differentiation regime. The loss function of a PINN contains the supervised loss constructed from the observed data and the physical loss of PDEs, including the residuals of the governing equations and other additional conservation laws. Compared with conventional numerical methods, PINNs are mesh-free and can handle inverse problems efficiently due to their differentiability. [38]

Recently, significant scientific effort has been devoted to utilizing PINNs to solve scientific problems based on the NSEs. For instance, [39] developed hidden fluid mechanics to solve forward and inverse fluid mechanics problems in arbitrarily complex domains. [42] combined PINNs with the Bayesian method to reconstruct flow fields from sparse and noisy fluid data. [17] proposed Navier–Stokes flow nets (NSFnets) by considering the velocity–pressure and the vorticity–velocity formulations simultaneously to simulate both laminar and turbulent flows. Moreover, several methods have been proposed to optimize the network architectures and training dynamics of PINNs, e.g., multi-scale deep neural networks, [23] hard-constraint PINNs, [26] and the dynamic pulling method. [18]

While these approaches have made remarkable progress, using them to solve the NSEs faces the following three fundamental challenges. First, the existing frameworks of PINNs embed the physical information of fluids via the residual of the NSEs, i.e., by utilizing derivative information directly in PDEs to define the loss function. This implicitly assumes that the relevant functions are sufficiently smooth, which limits their ability to deal with non-differentiable functions, e.g., the Dirac delta function. The second challenge relates to their efficiency when calculating high-order or fractional-order derivatives. For example, fractional NSEs have been widely adopted in modeling fluid dynamics involving historical memory and long-range interactions. [53, 54, 6, 2] However, there is a high computational cost in estimating the fractional operator. [13, 21] The third challenge relates to the inefficiency of tuning the hyperparameters for PINNs. [20, 47, 34] PINNs use a weighted summation of the residual of the equation and the residual of the boundary and initial conditions, where the performance is sensitive to the weight. Training a PINN generally requires boundary data over the whole period to depict the boundary information, [39, 17] which may be unavailable or redundant. Therefore, concisely integrating machine learning and the physical information about the fluid flow is also critical.

In this work, we address these challenges by combining deep learning with a reformulation of the random vortex method (RVM), [7, 24, 27, 35] which is a mesh-free algorithm for solving the equations of fluid mechanics. Instead of solving the NSEs directly, RVM converts the velocity field to its corresponding probabilistic representation via the Feynman–Kac formula, which can be approximated by a Monte Carlo method. In the RVM formulation, the spatial derivatives in the original formulation of an NSE are replaced by sampling from a stochastic differential equation (SDE) driven by a Lévy process. In this way, the RVM can efficiently handle non-smooth and fractional equations. We therefore propose a novel physics-informed machine learning framework, namely the deep random vortex network (DRVN), which utilizes a deep neural network to represent the velocity field. The loss function is constructed according to the probabilistic representation of the velocity field in RVM. DRVN has four main advantages:

1. Broad range of applications. Compared with the existing PINN framework, DRVN can more easily handle non-smooth and fractional equations. DRVN requires only that the network function is integrable in the domain, rather than the second-order or fractional-order continuous differentiability required by PINNs. Thus, it can represent non-smooth solutions and initial conditions. Furthermore, the calculation of fractional derivatives can be replaced by efficient sampling from the Lévy process, which does not increase the algorithmic complexity.

2. Boundary data-free. Unlike existing PINNs, which require data points on the boundary during the whole period to embed geometrical information into their neural networks, DRVN utilizes the kernel function as prior knowledge to implicitly constrain the neural networks so that they satisfy the boundary conditions. Thus, DRVN does not need additional boundary data and can handle situations where boundary data are unavailable or expensive.

3. Easy to implement. Unlike the loss function of a PINN, which consists of the equation term, boundary condition term, data term, and other information, the loss function of DRVN generally has only one term, because all the physical and geometrical information is naturally embedded into the formulation of RVM. Thus, there are fewer hyperparameters in the DRVN loss function, which saves effort in fine-tuning them.

4. Efficient for the inverse problem. Compared with the classical RVM, DRVN constructs a continuous model that directly maps the spatial-temporal coordinates to the velocity. By leveraging the auto-differentiation of deep neural networks, DRVN achieves fast inference compared to the traditional inference algorithm based on RVM, i.e., the adjoint method.

We demonstrate the effectiveness of DRVN by solving forward and inverse problems for various equations, including 2-dimensional (2D) and 3-dimensional (3D) Lamb–Oseen vortices (with a singular initial condition), 2D fractional NSEs, and a 2D Taylor–Green vortex (with a periodic boundary condition). For forward problems, the relative errors are around for most equations. For situations with singular initial conditions and agnostic boundary data, DRVN significantly outperforms the existing PINN method. For inverse problems, we utilize a parametric solver to infer the viscosity term in the 2D NSE and the diffusion parameter in the fractional NSE. Compared with the traditional adjoint method, DRVN achieves 2 orders of magnitude improvement for the training time with significantly precise estimates.

This paper is organized as follows. In Section 2, we describe the notation and the problem setups. In Section 3, we introduce our methodology using the 2D NSEs as an example. In Section 4, we report the results of numerical experiments that demonstrate the effectiveness of DRVN. Finally, we summarize and discuss our method in Section 5.

2 Notation and Problem Setups

2.1 Notation

In this section, we introduce the notation used in this paper. We utilize bold letters for vectors and matrices. For a matrix , denotes its th entry. For a vector , and are its th entry and Euclidean norm, respectively. For a 2D vector , then its orthogonal complement . is an identity matrix. We use for the standard Euclidean inner product between two vectors.

Dirac’s delta function is

| (1) |

satisfies that for all smoothing test functions . We utilize to denote the greatest integer. The velocity and vorticity terms of an NSE are and , respectively. Furthermore, is the velocity in . Re and are the Reynolds number and the viscosity. and denote the domain and boundary of the equations, respectively.

2.2 Problem setups

We consider 2D incompressible NSEs defined in the domain :

| (2) | ||||

where is the velocity field, is the pressure term, and is the viscosity. The vorticity is , which evolves according to the following vorticity equation:

| (3) | ||||

where is the velocity field, is the vorticity, and is the viscosity. In this paper, the numerical method is designed based on the vorticity form of the equation.
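For orientation, the standard textbook forms of the 2D incompressible NSE and of its vorticity equation are sketched below. The symbols ($\mathbf{u}$ for velocity, $p$ for pressure, $\nu$ for viscosity, $\omega$ for vorticity) are notational assumptions made for this sketch, and the numbered equations above may additionally carry initial or boundary conditions that are not shown here.

```latex
\begin{aligned}
\partial_t \mathbf{u} + (\mathbf{u}\cdot\nabla)\mathbf{u} &= -\nabla p + \nu \Delta \mathbf{u},
  & \nabla\cdot\mathbf{u} &= 0, \\
\partial_t \omega + (\mathbf{u}\cdot\nabla)\omega &= \nu \Delta \omega,
  & \omega &= \nabla\times\mathbf{u} = \partial_{x_1} u_2 - \partial_{x_2} u_1 .
\end{aligned}
```

Taking the curl of the first line removes the pressure gradient, which is why the vorticity form contains no pressure term.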

The 2D fractional vorticity form of the NSE is given by the following equations:

| (4) | ||||

where the diffusion parameter is restricted to the interval . Notice the fractional Laplacian is on the right-hand side of Eq. (4), which is defined by directional derivatives. [22, 29]

Our aim is to simulate and infer the fluid flow based on the NSE, which are described as the forward problem and inverse problem, respectively:

- Forward problem: Given the initial velocity field and vorticity field , the general forward problem aims to simulate the velocity field in the time–space domain for a given viscosity term or simultaneously for a set of viscosity terms .

- Inverse problem: Given the initial velocity field , vorticity field , and the observable dataset generated from a system that satisfies the NSEs, the target of the inverse problem is to infer the unknown parameters of the system (e.g., the viscosity term ) from the observable data.

3 Methodology of DRVN

In this section, we introduce DRVN, which utilizes a feedforward neural network (FNN) to parameterize the velocity field of the fluid flow and simulate the dynamics by optimizing a loss function based on the random vortex dynamics. To efficiently approximate the Feynman–Kac formula in the random vortex dynamics, DRVN utilizes a two-phase Monte Carlo method to obtain an unbiased estimate. We will apply DRVN to simulate and infer an incompressible fluid governed by the NSEs.

3.1 Feedforward neural network

An FNN is a parameterized continuous function that is constructed as:

| (5) |

where denotes the input, denotes the parameter matrix at layer , and is an element-wise non-linear transformation called the activation function. There are two widely used activation functions:

| (6) | ||||

An FNN is a powerful function approximator in the continuous function class.[14] In the following, we will use an FNN to parameterize the velocity field of the fluid flow.
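As a minimal sketch of such a network, the following pure-Python forward pass applies an affine map followed by an element-wise activation at every layer except the last. The layer sizes, the bias vectors, the Xavier-style initialization bound, and the choice of ReLU and tanh as the two activations are assumptions made for illustration and are not taken from Eqs. (5) and (6).

```python
import math
import random


def relu(z):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in z]


def tanh(z):
    """Element-wise hyperbolic tangent."""
    return [math.tanh(v) for v in z]


def init_layer(n_in, n_out, rng):
    """Xavier-style uniform weights and zero biases for one dense layer."""
    bound = math.sqrt(6.0 / (n_in + n_out))
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def fnn_forward(x, layers, activation=relu):
    """Evaluate the network; the last layer is left linear."""
    h = list(x)
    for k, (weights, biases) in enumerate(layers):
        h = [sum(w * v for w, v in zip(row, h)) + b
             for row, b in zip(weights, biases)]
        if k < len(layers) - 1:
            h = activation(h)
    return h


rng = random.Random(0)
sizes = [3, 16, 16, 2]  # hypothetical: (x1, x2, t) -> (u1, u2)
layers = [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]
u = fnn_forward([0.1, -0.2, 0.5], layers)
```

The three inputs stand for two spatial coordinates and time, and the two outputs for the velocity components, mirroring how the network is used to parameterize the velocity field.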

3.2 Neural random vortex dynamics

In this section, we introduce the neural random vortex dynamics. The vorticity evolves according to the parabolic form of Eq. (3). The Feynman–Kac equation links the vorticity with the following stochastic process:

| (7) |

where represents the initial spatial coordinate in , which is sampled from the initial vorticity distribution , is the 2D Brownian motion, and is a diffusion process that satisfies Taylor’s formulation of Brownian motion. [43, 24] It has been proved that the probability density of follows . [7, 24] From the relations and in , the velocity can be represented as , where the kernel is determined by the boundary condition. Then, the velocity field has the following representation: [7, 10, 24]

| (8) |

To simulate the velocity field governed by Eqs. (2) and (3), we reformulate the stochastic process in Eq. (7) by replacing with a FNN with input and given parameter :

| (9) |

We call the above process the neural random vortex dynamics. Then, our goal is to find the optimal value of parameter such that the neural network function is equal to the velocity in Eq. (8), i.e., , where is sampled from Eq. (8) with .

Concretely, we find by solving the following optimization problem:

| (10) |

where is sampled from Eq. (8).

3.3 Algorithm with a Monte Carlo estimator

In this section, we introduce the numerical method used to find . We discuss the temporal discretization, the architecture of the neural network, the Monte Carlo method for estimating the integrals, and the gradient-based search algorithm.

We divide the time period into uniform intervals, i.e., . Given the vorticity field for all coordinate points in at , we adopt the following Euler discretization of Taylor’s formulation of Brownian motion in Eq. (7) to calculate the path of for all :

| (11) |

where and . Note that we use subnetworks , , each of which has input and parameter .
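The time stepping described above can be sketched as an Euler–Maruyama loop. The sketch assumes the common random-vortex form dX = u(X, t) dt + sqrt(2ν) dB for the diffusion process; the exact scaling used in Eq. (11) is not restated here, and `rigid_rotation` is a hypothetical stand-in for the neural subnetworks.

```python
import math
import random


def euler_maruyama_path(x0, velocity, nu, dt, n_steps, rng):
    """Simulate one 2D path of dX = u(X, t) dt + sqrt(2 nu) dB with Euler steps."""
    x = list(x0)
    path = [tuple(x)]
    sigma = math.sqrt(2.0 * nu * dt)  # standard deviation of one noise increment
    for n in range(n_steps):
        t = n * dt
        u1, u2 = velocity(x, t)  # drift is evaluated at the current position
        x[0] += u1 * dt + sigma * rng.gauss(0.0, 1.0)
        x[1] += u2 * dt + sigma * rng.gauss(0.0, 1.0)
        path.append(tuple(x))
    return path


def rigid_rotation(x, t):
    """Hypothetical stand-in velocity field u = (-x2, x1)."""
    return (-x[1], x[0])


path = euler_maruyama_path((1.0, 0.0), rigid_rotation, nu=0.1, dt=0.01,
                           n_steps=100, rng=random.Random(0))
```

In DRVN the drift at step n would come from the n-th subnetwork rather than from a fixed function, so the path depends on the network parameters.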

We evaluate the norm on the right-hand side of Eq. (10) on uniformly sampled grid points and search for by optimizing the following loss function:

| (12) |

where is the batch size per epoch and are the parameters in each subnetwork. Figure 1 illustrates the architecture of DRVN.

We utilize an unbiased Monte Carlo method to estimate the integrals and expectation in Eq. (12). We sample initial coordinate points distributed in uniformly and the corresponding vorticity field at to calculate the integral over . Furthermore, to approximate the expectation of the kernel function in Eq. (8), we utilize a Monte Carlo method to sample paths independently for each in the diffusion process Eq. (11), and denote these as for all . Then, the term is estimated using a Monte Carlo method as

| (13) |

where is the area of the domain . Notice that a two-phase Monte Carlo method is utilized in Eq. (13) to find unbiased estimates of the integration and expectation terms.
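A two-phase estimator of this kind can be sketched as an outer average over uniformly sampled initial points (the integral over the domain) and an inner average over the paths started from each point (the expectation). The whole-plane Biot–Savart kernel, the small regularization `eps`, and all function and argument names below are assumptions made for illustration; other boundary conditions would use a different kernel.

```python
import math


def biot_savart_kernel(y, eps=1e-12):
    """Whole-plane 2D kernel K(y) = y_perp / (2 pi |y|^2) with y_perp = (-y2, y1)."""
    r2 = y[0] * y[0] + y[1] * y[1] + eps  # eps avoids division by zero at y = 0
    c = 1.0 / (2.0 * math.pi * r2)
    return (-y[1] * c, y[0] * c)


def mc_velocity(x, omega0_values, endpoints, area, kernel=biot_savart_kernel):
    """Two-phase Monte Carlo estimate of the velocity at x.

    omega0_values[i]: initial vorticity at the i-th uniformly sampled point
    endpoints[i][j]:  position at time t of the j-th path started from point i
    area:             area of the sampling domain
    """
    n = len(omega0_values)
    u1 = u2 = 0.0
    for w, paths in zip(omega0_values, endpoints):
        k1 = k2 = 0.0
        for p in paths:  # inner average: expectation over paths
            a, b = kernel((x[0] - p[0], x[1] - p[1]))
            k1 += a
            k2 += b
        m = len(paths)
        u1 += w * k1 / m
        u2 += w * k2 / m
    return (area * u1 / n, area * u2 / n)  # outer average: integral over the domain


# One unit-strength sample whose single path sits at the origin.
u = mc_velocity((1.0, 0.0), [1.0], [[(0.0, 0.0)]], area=1.0)
```

With a single path fixed at the origin, the estimate reduces to the velocity induced by a point vortex, which gives a quick sanity check of the kernel sign and scale.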

We use a gradient-based optimization algorithm to find . A basic optimization algorithm widely used in deep learning is stochastic gradient descent:

| (14) |

where is the iteration index. This algorithm starts from the initialization point . Other variants, like Adam,[19] are also widely used as optimizers to minimize the loss function in machine learning.
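The update rule can be illustrated with a minimal mini-batch stochastic gradient descent loop. The toy regression problem, the learning rate, and the batch size are hypothetical choices for illustration and are unrelated to the DRVN loss.

```python
import random


def sgd(grad_fn, theta0, lr, n_iters, rng):
    """Repeat theta <- theta - lr * g, where g is a stochastic gradient estimate."""
    theta = list(theta0)
    for _ in range(n_iters):
        g = grad_fn(theta, rng)
        theta = [p - lr * gi for p, gi in zip(theta, g)]
    return theta


# Noise-free samples of y = 2 x - 1 (toy data).
data = [(0.5 * i, 2.0 * (0.5 * i) - 1.0) for i in range(-10, 11)]


def minibatch_grad(theta, rng, batch_size=4):
    """Gradient of the mean squared error of y ~ a x + b on a random mini-batch."""
    a, b = theta
    batch = rng.sample(data, batch_size)
    ga = sum(2.0 * (a * x + b - y) * x for x, y in batch) / batch_size
    gb = sum(2.0 * (a * x + b - y) for x, y in batch) / batch_size
    return [ga, gb]


theta = sgd(minibatch_grad, [0.0, 0.0], lr=0.02, n_iters=2000, rng=random.Random(0))
```

Because each iteration only sees a random subset of the data, the cost per step is independent of the dataset size, which is the property that makes the method attractive for Monte Carlo-based losses.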

3.4 Implementation details

3.4.1 Forward problems

When solving forward problems for initial vortex and a specific , DRVN approximates the velocity field via neural networks and constructs the loss function via Eq. (12). DRVN utilizes Adam, [19] a variant of stochastic gradient descent for training deep models, to optimize the parameter . Algorithm 1 illustrates the framework of DRVN for solving the 2D NSE forward problem.

Besides solving a specific NSE, we also applied DRVN to parametric solver learning, which aims to learn a generalizable model that can simultaneously output the velocity for a class of NSEs with different parameters. As the Reynolds number is directly related to the complexity of the numerical simulation and turbulence, developing a stable solver that is capable of solving NSEs with different values of is very important. For the 2D NSE, we use DRVN to obtain a neural network function that can be generalized to a range of in Eq. (2). Thus, we regard as an input to the neural network, and we sample different values of and synchronously during training. We consider the following loss function:

| (15) |

where and are the numbers of values of and sampled in each epoch, respectively. Furthermore, we also learn a parametric solver for different values of the diffusion parameter in our experiments with a 2D fractional NSE. The forward propagation, the construction of the loss function, and the optimization algorithm are all the same as when solving a forward problem.

3.4.2 Inverse problem

Given the initial vortex and the dataset generated from a system that satisfies an NSE, the aim of the inverse problem is to infer unknown parameters from the data. A naïve approach is to add the data term directly to the loss function [Eq. (12)], which is consistent with the methodology in PINN. [38] However, this approach has two drawbacks. On the one hand, we need to tune the hyperparameter carefully, as it balances the equation term and the data term. If the data are badly corrupted, the data term may unduly influence the equation term so that the model fails to learn the equation. On the other hand, we have to retrain a neural network again if we need to infer parameters from other data, which is time-consuming.

To address these problems, instead of directly adding the data term to the loss function, we devised a novel inference regime based on the following two procedures. First, we train a parametric solver network that can generalize to different , where is the unknown parameter in the parameter space . Second, we convert the inverse problem to the following optimization problem via the pretrained learning network for the parametric solver:

| (16) |

On the one hand, there is only one term in the loss function [Eq. (16)]. Thus, we do not need to tune any hyperparameters in the loss function. In addition, due to the pretrained parametric solver network, we do not need to worry about information in the NSEs being corrupted by noise in the dataset. On the other hand, equipped with our pretrained parametric solver network, we need to optimize only the above low-dimensional optimization problem in Eq. (16), which we do via the gradient descent algorithm. Thus, each inverse problem can be solved in seconds.
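The second stage of this regime can be sketched as a one-dimensional fit of the unknown parameter through a frozen solver. In the sketch below, `solver_speed` is a hypothetical stand-in for the pretrained parametric solver (it evaluates the analytical Lamb–Oseen azimuthal speed for unit circulation), a central finite difference stands in for automatic differentiation, and a backtracking step size is added for robustness; none of these choices is taken from the paper.

```python
import math


def solver_speed(r, t, nu):
    """Hypothetical stand-in for a pretrained solver: Lamb-Oseen azimuthal speed."""
    return (1.0 - math.exp(-r * r / (4.0 * nu * t))) / (2.0 * math.pi * r)


def data_loss(log_nu, data, t):
    """Mean squared misfit between solver output and observations."""
    nu = math.exp(log_nu)  # optimizing log(nu) keeps nu positive
    return sum((solver_speed(r, t, nu) - u) ** 2 for r, u in data) / len(data)


def infer_viscosity(data, t, nu_init=0.5, n_iters=100, h=1e-5):
    """Gradient descent on log(nu) with a backtracking (Armijo) step size."""
    s, step = math.log(nu_init), 1.0
    for _ in range(n_iters):
        loss = data_loss(s, data, t)
        # Central finite difference stands in for automatic differentiation.
        g = (data_loss(s + h, data, t) - data_loss(s - h, data, t)) / (2.0 * h)
        for _ in range(60):  # halve the step until the loss decreases enough
            if data_loss(s - step * g, data, t) <= loss - 0.5 * step * g * g:
                break
            step *= 0.5
        s -= step * g
        step *= 2.0  # allow the step to grow again on the next iteration
    return math.exp(s)


t_obs, nu_true = 1.0, 0.1
radii = [0.2 * k for k in range(1, 11)]
data = [(r, solver_speed(r, t_obs, nu_true)) for r in radii]  # clean synthetic data
nu_hat = infer_viscosity(data, t_obs)
```

Only a single scalar is optimized while the solver stays fixed, which is why this stage is cheap compared with retraining a network for each new dataset.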

4 Experiments

In this section, we apply DRVN to solve forward and inverse problems for incompressible NSEs. In this paper, all neural networks were initialized via the Xavier method. [9] We used the relative error to evaluate the difference between the ground truth and its prediction . It is defined as . We evaluated the performance of our method with the relative error at the terminal time and with the mean relative error over the whole time interval, which we denote as and , respectively. For each setting, we repeated the experiment five times with five different random number seeds and report the mean value and variance. We adopted PyTorch [31] and TensorFlow [1] to implement DRVN and PINN, respectively. All experiments were run on an Nvidia GeForce RTX 3080Ti 12G, Nvidia GeForce RTX 3090 24G, and Nvidia Tesla V100 16G. All run times reported in this paper were evaluated on a GeForce RTX 3080Ti 12G. When applying our method, we used a fully connected network with six hidden layers with equal hidden dimensions of 512 and used ReLU as the activation function. Tables 11 and 12 in Appendix B contain further details of the setup of the experiments.
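The two reported metrics can be sketched as follows. The sketch assumes that the relative error is the ratio of Euclidean norms taken over all evaluation points, which is a common convention but is an assumption here; the function names and the toy values are hypothetical.

```python
import math


def relative_l2_error(u_true, u_pred):
    """Relative L2 error between two lists of 2D velocity vectors."""
    num = sum((a - b) ** 2 for p, q in zip(u_true, u_pred) for a, b in zip(p, q))
    den = sum(a * a for p in u_true for a in p)
    return math.sqrt(num / den)


def terminal_and_mean_error(true_by_time, pred_by_time):
    """Return the error at the last time step and the mean error over all steps."""
    errors = [relative_l2_error(ut, up) for ut, up in zip(true_by_time, pred_by_time)]
    return errors[-1], sum(errors) / len(errors)


truth = [[(1.0, 0.0), (0.0, 1.0)], [(0.5, 0.0), (0.0, 0.5)]]  # two time steps
pred = [[(1.0, 0.0), (0.0, 1.0)], [(0.5, 0.1), (0.0, 0.5)]]
e_terminal, e_mean = terminal_and_mean_error(truth, pred)
```

Repeating this evaluation for each random seed and then taking the mean and variance of the resulting values gives the kind of summary reported in the tables.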

4.1 Equations

We conducted experiments on a Lamb–Oseen vortex, [28] the fractional NSE, and a periodic Taylor–Green vortex. [7] Furthermore, we utilized DRVN to simulate a 3D Lamb–Oseen vortex, as described in Appendix C. Table 1 gives the kernel and driven noise in the corresponding SDE for NSEs with different dimensions and boundary conditions. More details of these equations will be introduced in the following sections.

| System | Domain | Kernel function | Driven noise |
|---|---|---|---|
| Lamb–Oseen [28] | | | Brownian motion |
| Fractional [13] | | | Lévy process |
| Taylor–Green [43] | | | Brownian motion |

4.1.1 Lamb–Oseen vortex

Here, we introduce the Lamb–Oseen vortex. Consider the following 2D vorticity equation:

| (17) |

where the velocity field is given by the Biot–Savart law:

| (18) |

When the initial vorticity , where is Dirac’s delta function, we can obtain the unique analytical solution of Eq. (17) as follows:

| (19) |

where the vorticity and velocity profiles are given by:

| (20) |

We considered a computational domain of and a time horizon of . The time step was s, and the Reynolds number was fixed as 10.
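For reference, the classical Lamb–Oseen profiles can be evaluated with a few lines of Python. The sketch assumes a unit total circulation (`gamma=1.0`), corresponding to a Dirac delta initial vorticity of unit mass, and uses the standard textbook expressions; the notation and function names are assumptions rather than a restatement of Eqs. (19) and (20).

```python
import math


def lamb_oseen_vorticity(x, t, nu, gamma=1.0):
    """Gaussian vorticity profile of a diffusing point vortex."""
    r2 = x[0] ** 2 + x[1] ** 2
    return gamma / (4.0 * math.pi * nu * t) * math.exp(-r2 / (4.0 * nu * t))


def lamb_oseen_velocity(x, t, nu, gamma=1.0):
    """Cartesian velocity; azimuthal speed is gamma/(2 pi r) (1 - exp(-r^2/(4 nu t)))."""
    r2 = x[0] ** 2 + x[1] ** 2  # must be nonzero: the formula is singular at the origin
    c = gamma / (2.0 * math.pi * r2) * (1.0 - math.exp(-r2 / (4.0 * nu * t)))
    return (-x[1] * c, x[0] * c)


u = lamb_oseen_velocity((1.0, 0.0), t=0.01, nu=0.1)
```

At small times the Gaussian core is narrow, so away from the origin the velocity is close to that of an ideal point vortex, which illustrates why the initial condition is singular.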

4.1.2 Fractional NSE

We consider the 2D fractional NSEs [Eq. (4)] described in Section 2.2. Compared with the diffusion process in the RVM for the general 2D NSE, we just need to replace the Brownian motion with the Lévy process for the fractional equation. The corresponding diffusion process and probabilistic representation are given by: [52, 51]

| (21) | ||||

where is the 2D -stable Lévy process, and

We considered a flow with a non-smooth initial condition given by

The Reynolds number was fixed as 10. The computational domain was and the time horizon . To evaluate the performance of our method, we utilized the fine-grained RVM (Appendix A) as the ground truth. We divided the time period into 200 intervals and calculated the average of 1000000 independent paths generated by Lévy processes. We utilized CUDA to accelerate the RVM algorithm. It took 4.5 h for an Nvidia GeForce RTX 3080Ti to generate each ground truth. For forward problems, we uniformly divided the total time period into 40 time intervals, i.e., .
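To illustrate how the noise increments change when Brownian motion is replaced by a stable Lévy process, the sketch below draws one-dimensional symmetric α-stable increments with the Chambers–Mallows–Stuck method. This is a standard sampler chosen here for illustration, not necessarily the one used in the experiments; the 2D process used in the fractional NSE requires a different (isotropic) construction, and the normalization is such that α = 2 gives a Gaussian with variance 2 per unit time.

```python
import math
import random


def symmetric_stable(alpha, u, w):
    """Chambers-Mallows-Stuck map for a symmetric alpha-stable variate.

    u is uniform on (-pi/2, pi/2) and w is exponential with unit mean.
    """
    if alpha == 1.0:
        return math.tan(u)  # Cauchy case
    return (math.sin(alpha * u) / math.cos(u) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * u) / w) ** ((1.0 - alpha) / alpha))


def sample_increment(alpha, dt, rng):
    """Increment over a time step dt, using the self-similar scaling dt**(1/alpha)."""
    u = rng.uniform(-math.pi / 2.0, math.pi / 2.0)
    w = rng.expovariate(1.0)
    return dt ** (1.0 / alpha) * symmetric_stable(alpha, u, w)


rng = random.Random(0)
increments = [sample_increment(1.5, 0.01, rng) for _ in range(5)]
```

Sampling cost is the same for every admissible α, which is consistent with the observation that the fractional operator does not add algorithmic complexity in a sampling-based formulation.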

4.1.3 Taylor–Green vortex

A Taylor–Green vortex [7] is an exact solution to a 2D incompressible NSE with a periodic boundary condition in the domain , where its velocity and vorticity are given by:

| (22) | ||||

and the kernel function of the probabilistic representation for a periodic equation in is given by:

| (23) |

4.2 Baselines

For forward problems, we used PINNs as the baseline methods. PINNs optimize the FNN via the loss function, which includes the residual of the PDEs and the residual of the initial and boundary conditions. Given the initial data at , i.e., with , and the boundary data with , the loss function of the PINN in the vortex–velocity formulation is

| (24) |

where the input of the neural network is and the output is . Furthermore, PINNs represent the velocity field as the partial derivative of some latent function to satisfy automatically the divergence-free condition in the NSEs. [38, 12]

In this paper, we consider two types of loss function, namely PINN and PINN+. The first has no access to the boundary data (PINN), i.e., in Eq. (24), whereas the second can utilize additional boundary data to encode the geometrical information (PINN+). For a periodic PDE when no additional boundary data are provided, we embed the periodic information in the PINN as follows. First, we constrain the left (lower) boundary to be equal to the right (upper) one, i.e., we replace the third term in the loss function [Eq. (24)] with the following boundary loss:

| (25) |

where , , , and denote data points sampled from the left, right, lower, and upper boundaries of , respectively.

4.3 Results: Forward problems

In this section, we describe the results of experiments to solve three 2D forward problems: a Lamb–Oseen vortex, the fractional NSE, and a Taylor–Green vortex. Furthermore, we simulated a 3D Lamb–Oseen vortex, as described in Appendix C.

4.3.1 Lamb–Oseen Vortex

Comparison with PINNs.

First, we simulated a 2D Lamb–Oseen vortex using our proposed method and the two PINNs. For the PINNs, we adopted the network architecture in NSFnets, [17] which has seven hidden layers with 100 neurons and activation functions. We looked at the two types of PINN introduced in Section 4.2, namely PINN and PINN+. Moreover, as the initial condition approaches infinity around the origin, the sampling points for a PINN started from s to avoid extremely large values.

The relative errors of DRVN and the PINNs are given in Table 2. We can see that the DRVN simulation was accurate with an average relative error of for and for . Furthermore, both PINN and PINN+ performed poorly for the 2D Lamb–Oseen vortex. There are two main reasons why the PINNs failed. First, they failed to learn this ill-conditioned equation due to the singularity at , which was also observed in [20]. Second, the PINNs cannot embed the initial conditions on integrally and receive only truncated initial information.

| Error | DRVN | PINN | PINN+ |

|---|---|---|---|

Effects of .

To test how changing affects the performance of our model, we evaluated our method with values of ranging from to . The relative errors and training dynamics are given in Fig. 2. As we can see, with an increase in the number of sampling points, the error decreased, but the overall fluctuation of the error for was smaller than , which shows the robustness of DRVN over .

Meaningful derivative.

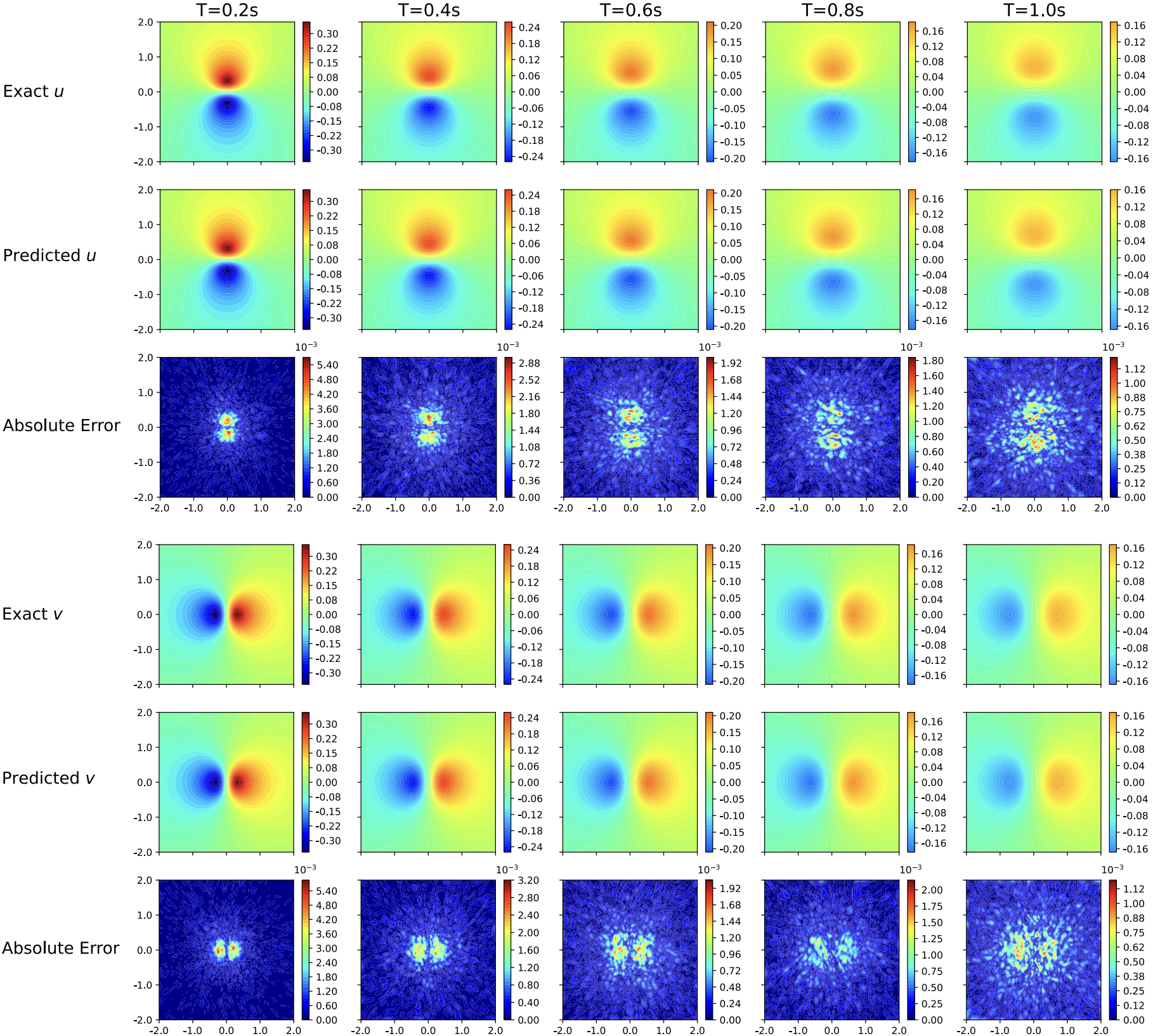

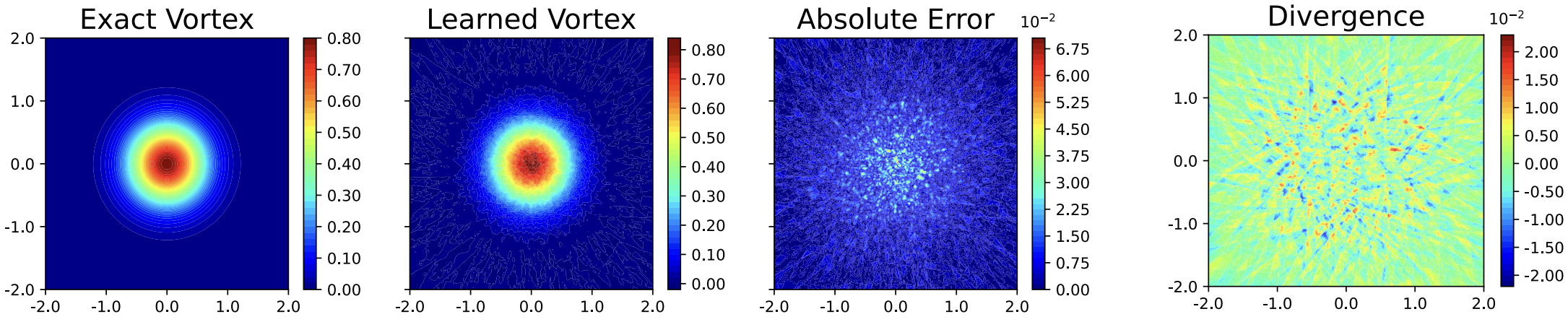

Snapshots of the learned velocity fields and corresponding absolute error for are displayed in Fig. 3. Note that we did not constrain the curl and divergence of explicitly in either Eq. (2) or (3), but the neural network surprisingly learned the meaningful curl and divergence (Fig. 4), which correspond to the vorticity–velocity formulation and the incompressibility condition in the NSEs, respectively. This experimental result indicates that the neural network learned via DRVN has good physical properties.

Parametric solver learning.

Besides learning one model for a specific , we also learned a parametric solver , which can generalize to different values of ranging from to . We changed the input dimension to 3 in the learning network for the parametric solver, and set the range of as to enhance the generalization at the boundary. To distinguish between different values of for small orders of magnitude, we fed to the neural network rather than directly when training the parametric solver. The evaluation results are listed in Table 3. The relative errors are robust over . The errors slightly increased as decreased, which is reasonable because the velocity field became sharper as increased.

| | (%) | (%) |

|---|---|---|

| 0.01 | ||

| 0.02 | ||

| 0.05 | ||

| 0.1 | ||

| 0.2 | ||

| 0.5 |

Training trick: Gradient stopping.

When training DRVN, we adopted gradient stopping as a training trick. All the neural networks participate in calculating in Eq. (11). Thus, back propagation can become extremely time- and memory-consuming due to the composition of several neural network functions. To make the training more efficient and stable, we stopped the gradient flows in the computational graphs when updating during training, which is similar to the practice in reinforcement learning [45] and contrastive learning. [5]

We ran ablation experiments to evaluate the effects of this gradient stopping trick. Table 4 compares the relative error, required time, and memory with and without gradient stopping. We set the number of samples to 1000. The other settings were the same as for the forward problem described in Section 3.2. The gradient stopping trick reduces the time by around 37% and the memory by around 36% with comparable precision compared with the original training technique.

| Parameter | With gradient stopping | Without |

|---|---|---|

| (%) | ||

| (%) | ||

| Time per epoch (s) | ||

| Memory (MB) |

4.3.2 Fractional NSE

General forward problems.

The diffusion parameter is an essential property of the fractional equation. Thus, we evaluated our simulation algorithm for values of ranging from 0.5 to 2. Table 5 shows that the relative errors increased as decreased. The errors at s were smaller than those for the whole interval.

| | (%) | (%) |

|---|---|---|

| 0.50 | ||

| 0.75 | ||

| 1.00 | ||

| 1.25 | ||

| 1.50 | ||

| 1.75 | ||

| 2.00 |

To gain a deeper understanding of the influence of , we plot the landscapes of the learned fractional equations from to s in Fig. 5. On the one hand, the surfaces of the equations became more singular as became smaller. Thus, training the neural networks became more difficult, which is consistent with the frequency principle of neural networks studied in [50, 37]. On the other hand, the landscapes became smoother over time. Thus, the error at the terminal time was relatively smaller than over the whole interval. Furthermore, we show the effects of the number of sampling paths in Fig. 6. In general, the error decreased as we increased the number of samples .

Parametric solver learning.

We also learned a parametric solver , which can generalize to values of ranging from to . The results are shown in Table 6. The relative errors increased when decreased, as they did for the forward problem.

| | (%) | (%) |

|---|---|---|

| 1.00 | ||

| 1.25 | ||

| 1.50 | ||

| 1.75 | ||

| 2.00 |

4.3.3 Taylor–Green vortex

Comparisons with the PINNs.

In this part, we simulate a 2D Taylor–Green vortex using our proposed method and the two PINNs. Like the experiments in Section 4.3.1, we use PINN and PINN+ as the baselines. We choose a fully connected neural network with seven hidden layers and with 500 neurons per layer. [17] Table 7 shows that PINN fails to simulate the velocity field because no boundary information is provided. Note that the results for DRVN are comparable with those for PINN+, although the loss function of DRVN does not include boundary data during as a term.

| Algorithm | (%) | (%) |

|---|---|---|

| DRVN | ||

| PINN | ||

| PINN+ |

Snapshots of the learned velocity fields and corresponding absolute error during are displayed in Fig. 7. As shown, DRVN can obtain accurate solutions for the Taylor–Green vortex. The errors are mainly at the domain boundary.

Architecture trick: Periodic preprocessing operator.

When solving periodic NSEs in a periodic domain , we sample data points in and then train the network. However, the paths of in Eq. (11) can extend out of due to the randomness of the Brownian motion, and the neural network was not trained out of . To reduce the resulting error, we utilized the periodic preprocessing operator to adjust the path of the SDE. We add the following periodic preprocessing operator before the forward propagation of the neural network:

| (26) |

Due to the periodicity of this equation, for all and . Thus, the periodic preprocessing operator projects the SDE output inside the domain without inducing additional errors.
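A periodic preprocessing step of this kind can be sketched as a coordinate wrap applied before each network evaluation. The domain bounds (here the square from −π to π in each direction), the function names, and the particular Taylor–Green sign convention used for the check are assumptions made for illustration and are not read off Eqs. (22) and (26).

```python
import math


def periodic_wrap(x, lo=-math.pi, hi=math.pi):
    """Map each coordinate into [lo, hi) by subtracting whole periods."""
    period = hi - lo
    return tuple(lo + (xi - lo) % period for xi in x)


def taylor_green_velocity(x, t, nu):
    """One standard form of the 2D Taylor-Green vortex (2 pi-periodic in x1 and x2)."""
    decay = math.exp(-2.0 * nu * t)
    return (-math.cos(x[0]) * math.sin(x[1]) * decay,
            math.sin(x[0]) * math.cos(x[1]) * decay)


point = (4.0, -7.5)  # a path position that has drifted outside the domain
wrapped = periodic_wrap(point)
u_outside = taylor_green_velocity(point, t=0.5, nu=0.1)
u_wrapped = taylor_green_velocity(wrapped, t=0.5, nu=0.1)
```

Because the exact solution is periodic, evaluating it at the wrapped point gives the same velocity as at the original point, so the wrap lets the network be queried only where it was trained.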

The effects of this periodic preprocessing with different sizes of the temporal lattice are listed in Table 8. This periodic preprocessing trick improves the performance of DRVN significantly with no additional computational cost for all .

| (%) | |||||

|---|---|---|---|---|---|

| (%) w/o pp | |||||

| (%) | |||||

| (%) w/o pp | |||||

| Time (s) | |||||

| Time w/o pp (s) |

4.4 Results: Inverse problems

In this section, we solve inverse problems for a Lamb–Oseen vortex and the fractional NSE. We aim to infer the viscosity term and the diffusion parameter from given data for these two equations, respectively. We consider the following three training datasets:

1. clean data points,

2. noisy data points whose labels are perturbed by additive uncorrelated Gaussian noise, , where

3. noisy data points whose labels are perturbed by additive uncorrelated Gaussian noise, , where

All data points were sampled uniformly in at s. We set and for the Lamb–Oseen vortex and the fractional NSE, respectively.
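As a rough illustration of how such datasets could be assembled, the sketch below samples points uniformly in a square and perturbs placeholder labels with independent zero-mean Gaussian noise. The domain `[-2, 2]^2`, the dataset size, the noise level `sigma`, and the rigid-rotation labels are all hypothetical stand-ins, since the corresponding values are not recoverable from the text here.

```python
import numpy as np

def add_label_noise(labels, sigma, rng):
    """Return labels perturbed by i.i.d. zero-mean Gaussian noise with std sigma."""
    return labels + sigma * rng.standard_normal(labels.shape)

rng = np.random.default_rng(0)
n_points = 100   # hypothetical dataset size
sigma = 0.05     # hypothetical noise level

# Hypothetical square sampling domain [-2, 2]^2.
x = rng.uniform(-2.0, 2.0, size=(n_points, 2))

# Placeholder velocity labels (rigid rotation), standing in for the true field.
clean = np.stack([-x[:, 1], x[:, 0]], axis=1)
noisy = add_label_noise(clean, sigma, rng)
```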

4.4.1 Lamb–Oseen vortex

In this part, we apply the learned parametric solver network to handle inverse problems for the three training datasets. The results for DRVN and ARVM are reported in Table 9 using subscripts and , respectively. The table shows that, compared with ARVM, DRVN needs only 1% of the time to solve the inverse problem, and obtains remarkably higher accuracy.

| | 1 | 2 | 5 | 10 | 20 | 50 | Time (s) |
|---|---|---|---|---|---|---|---|
| 100 clean data points | | | | | | | |
| (%) | | | | | | | |
| (%) | | | | | | | |
| 100 noisy data points with Gaussian noise | | | | | | | |
| (%) | | | | | | | |
| (%) | | | | | | | |
| 1000 noisy data points with Gaussian noise | | | | | | | |
| (%) | | | | | | | |
| (%) | | | | | | | |
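To make the structure of this inverse problem concrete, the following sketch infers a viscosity from noisy velocity samples. It is only an illustration under stated assumptions: the closed-form Lamb–Oseen azimuthal speed stands in for the learned parametric solver network, a grid search in log-viscosity stands in for gradient-based optimization, and the values of `true_nu`, `t_obs`, the noise level, and the sampling radii are hypothetical.

```python
import numpy as np

def lamb_oseen_speed(r, t, nu, gamma=1.0):
    """Azimuthal speed of the 2D Lamb-Oseen vortex with circulation gamma."""
    return gamma / (2.0 * np.pi * r) * (1.0 - np.exp(-r**2 / (4.0 * nu * t)))

def infer_viscosity(r, t, observed, log_nu_grid):
    """Pick the viscosity on a log-spaced grid that minimizes the mean squared misfit."""
    losses = [
        np.mean((lamb_oseen_speed(r, t, np.exp(s)) - observed) ** 2)
        for s in log_nu_grid
    ]
    return float(np.exp(log_nu_grid[int(np.argmin(losses))]))

rng = np.random.default_rng(0)
true_nu, t_obs = 0.5, 1.0                 # hypothetical ground truth and observation time
r = rng.uniform(0.1, 2.0, size=100)       # 100 hypothetical sample radii
observed = lamb_oseen_speed(r, t_obs, true_nu) + 0.005 * rng.standard_normal(r.shape)

# Search over log(nu) so that small viscosities are resolved as finely as large ones.
grid = np.linspace(np.log(1e-2), np.log(1e1), 2001)
nu_hat = infer_viscosity(r, t_obs, observed, grid)
rel_err = abs(nu_hat - true_nu) / true_nu
```

Once a forward map from the parameter to the velocity is cheap to evaluate, the inverse problem reduces to a one-dimensional fit, which is why a pretrained parametric solver can make this step fast.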

4.4.2 Fractional PDE

Using the same settings described in Section 4.4.1, we apply the parametric solver network learned with respect to the diffusion parameter to the three training datasets described above. The results are reported in Table 10. The relative errors range from 0.001 to 0.01 under low noise levels, which is sufficiently small. The relative errors are around 0.05 when the noise is high, indicating that more data are required to obtain a more precise estimate. Note that the total time for optimizing the inverse loss is less than 3 s in all cases.

| | 1.00 | 1.25 | 1.50 | 1.75 | 2.00 | Time (s) |
|---|---|---|---|---|---|---|
| 100 clean data points | | | | | | |
| (%) | | | | | | |
| 1000 noisy data points with Gaussian noise | | | | | | |
| (%) | | | | | | |
| 2000 noisy data points with Gaussian noise | | | | | | |
| (%) | | | | | | |

5 Conclusions

In this paper, we propose DRVN for simulating fluids and inferring unknown parameters of NSEs. DRVN utilizes the probabilistic representation in the random vortex formulation of an NSE and substitutes Monte Carlo sampling for the derivative calculation. Thus, DRVN can solve non-smooth and fractional NSEs efficiently, which expands the application of deep learning methods in fluid mechanics. The numerical experiments on various equations verify our algorithm. However, DRVN still has some limitations. First, analyzing the convergence rate of DRVN is non-trivial due to the non-convexity of neural networks and the stopping gradient technique. Second, we do not consider NSEs with an external force, which is a critical situation in control. In future work, we will investigate the convergence of DRVN and apply DRVN to NSEs with an external force.

Acknowledgments

This project was supported financially by Microsoft Research. We sincerely acknowledge Professor Xicheng Zhang from Wuhan University for helpful discussions on the RVM for the fractional NSE. We also sincerely acknowledge Professor Shihua Zhang from the Chinese Academy of Sciences for support and discussions.

Appendix A Random vortex method

In this section, we take the 2D NSE as an example to illustrate RVM. Recall the Euler discretization in Eq. (7):

| (27) |

RVM utilizes the probabilistic representation of the velocity field in Eq. (8) to calculate :

| (28) |

For other points out of the coordinate points , RVM calculates as follows:

| (29) |
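For orientation, a generic textbook-style random vortex step in 2D can be sketched as follows. This is not a transcription of Eqs. (27)–(29): the mollified Biot–Savart kernel, the smoothing length `delta`, the particle initialization, and all function names are assumptions chosen for illustration. Each particle carries a fixed share of the circulation, is advected by the velocity induced by all particles, and is diffused by a Brownian increment of variance `2 * nu * dt`.

```python
import numpy as np

def biot_savart_velocity(targets, particles, weights, delta=0.1):
    """Velocity at `targets` induced by vortex particles (mollified 2D Biot-Savart kernel)."""
    diff = targets[:, None, :] - particles[None, :, :]           # shape (M, N, 2)
    r2 = np.sum(diff**2, axis=-1) + delta**2                      # smoothed squared distance
    kernel = np.stack([-diff[..., 1], diff[..., 0]], axis=-1)     # perpendicular direction
    kernel /= (2.0 * np.pi * r2)[..., None]
    return np.einsum("mnd,n->md", kernel, weights)

def rvm_step(particles, weights, nu, dt, rng, delta=0.1):
    """One Euler-Maruyama step: advect by the induced velocity, diffuse by Brownian motion."""
    drift = biot_savart_velocity(particles, particles, weights, delta)
    noise = np.sqrt(2.0 * nu * dt) * rng.standard_normal(particles.shape)
    return particles + drift * dt + noise

rng = np.random.default_rng(0)
n = 500
particles = rng.normal(scale=0.5, size=(n, 2))   # hypothetical initial vortex blob
weights = np.full(n, 1.0 / n)                    # equal shares, total circulation 1

for _ in range(10):
    particles = rvm_step(particles, weights, nu=0.01, dt=0.01, rng=rng)

# Far from the blob the induced velocity should resemble that of a point vortex.
u_far = biot_savart_velocity(np.array([[5.0, 0.0]]), particles, weights)
```

Every operation in the step is differentiable with respect to the viscosity `nu`, which is the property the adjoint-based inverse formulation relies on.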

Since RVM is a kind of differentiable solver, we can utilize the adjoint method for the inverse problem. Given the initial vortex and the dataset generated from a system that satisfies the NSEs, ARVM is based on the following optimization problem:

| (30) |

As is differentiable, we directly utilize Adam to find the optimum . We optimize the loss function for 2000 epochs with the initial learning rate 0.01 and reduce the learning rate by a factor of 0.2 every 500 epochs. To distinguish between different values of with small orders of magnitude and obtain stable results, we feed into ARVM rather than directly.
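The learning-rate schedule described above (initial value 0.01, multiplied by 0.2 every 500 epochs, for 2000 epochs) can be written out explicitly. The helper name `step_decay_lr` is hypothetical, and the sketch covers only the schedule, not the optimizer itself.

```python
def step_decay_lr(epoch, base_lr=0.01, decay=0.2, step=500):
    """Learning rate after multiplying by `decay` once every `step` epochs."""
    return base_lr * decay ** (epoch // step)

# Learning rate used at each of the 2000 epochs.
schedule = [step_decay_lr(e) for e in range(2000)]
```

In PyTorch, for example, `torch.optim.lr_scheduler.StepLR(optimizer, step_size=500, gamma=0.2)` yields the same step-wise decay; the text does not state which framework was used for this step.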

Appendix B Experimental Details

B.1 Experimental Details for DRVN

| Equations | Problems | Learning rate | Epoch | Step size | Decay rate | Batch size | |
|---|---|---|---|---|---|---|---|
| 2D Lamb–Oseen | Forward | 0.001 | 10000 | 500 | 0.5 | 2000 | 1000 |
| | Parametric | 0.001 | 10000 | 500 | 0.5 | 20000 | 500 |
| | Inverse | 0.01 | 2000 | 500 | 0.2 | Data size | NA |
| 2D Fractional | Forward | 0.001 | 10000 | 500 | 0.5 | 2000 | 1000 |
| | Parametric | 0.001 | 10000 | 500 | 0.5 | 10000 | 1000 |
| | Inverse | 0.01 | 2000 | 500 | 0.2 | Data size | NA |
| 2D Taylor–Green | Forward | 0.0005 | 20000 | 1000 | 0.5 | 100 | 2 |
| 3D Lamb–Oseen | Forward | 0.0005 | 20000 | 1000 | 0.5 | 100 | 10 |

B.2 Experimental Details for PINNs

| 2D Lamb–Oseen | 2D Taylor–Green | |||

| Parameter | PINN | PINN+ | PINN | PINN+ |

| 0.0001 | 0.0001 | 0.0001 | 0.0001 | |

| Epoch | 10000 | 10000 | 20000 | 20000 |

| Step size | 2000 | 2000 | 10000 | 10000 |

| Decay rate | 0.1 | 0.1 | 0.1 | 0.1 |

| Batch size | ||||

| 0 | ||||

| 100 | 100 | 1 | 1 | |

| 0 | 100 | 1 | 1 | |

Appendix C 3D Lamb–Oseen Vortex

In this experiment, we aim to simulate the velocity field for a 3D Lamb–Oseen vortex, [28] which is represented by a 3D incompressible NSE with initial vorticity for . The corresponding exact solution of the velocity field is given by:

| (31) |

C.1 3D random vortex dynamics

In 3D, we utilize Einstein’s notation for simplicity. Here, is the Levi–Civita symbol. We utilize to represent the velocity in . The diffusion process for the 3D NSE is given in [36] as follows:

| (32) |

where denotes the 3D Brownian motion. Then, we can obtain its corresponding probabilistic representation as follows: [36]

| (33) |

where is a symmetric matrix. The evolution of obeys the following dynamic system:

| (34) |

where and

Compared to the 2D cases, calculating the velocity is more complex due to the existence of . DRVN was implemented in 3D as shown in Algorithm 2.
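As a reminder of how the index notation in this subsection works, the sketch below builds the Levi–Civita symbol and uses it with summation over repeated indices to form a cross product. The vectors `a` and `b` are arbitrary examples, and the snippet illustrates the notation only, not the 3D random vortex dynamics of Eqs. (32)–(34).

```python
import numpy as np

def levi_civita():
    """Return the rank-3 Levi-Civita symbol eps[i, j, k]."""
    eps = np.zeros((3, 3, 3))
    for i, j, k in [(0, 1, 2), (1, 2, 0), (2, 0, 1)]:
        eps[i, j, k] = 1.0    # even permutations of (0, 1, 2)
        eps[i, k, j] = -1.0   # odd permutations of (0, 1, 2)
    return eps

eps = levi_civita()
a = np.array([1.0, 2.0, 3.0])
b = np.array([-1.0, 0.5, 4.0])

# (a x b)_i = eps_{ijk} a_j b_k, with summation over the repeated indices j and k.
cross = np.einsum("ijk,j,k->i", eps, a, b)
```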

C.2 Problem setup

In this part, we aim to solve forward problems for a 3D Lamb–Oseen vortex. The vorticity field is initialized as with for . The Reynolds number is fixed as 2. When training the neural network, we sample data points in the domain to guarantee the neural networks can learn the information around the initial coordinates .

C.3 Results: Forward problems

Figure 8 shows snapshots of the learned velocity fields and corresponding absolute error during . Furthermore, Table 13 shows the effect of the size of the temporal lattice on the relative error. Because of the singular initialization of the 3D Lamb–Oseen vortex, the solution changes more rapidly over time. Thus, compared with the 2D Taylor–Green vortex, the relative errors are more sensitive to the number of temporal intervals.

| | (%) | (%) | Time (s) |
|---|---|---|---|
| 5 | | | |
| 10 | | | |
| 20 | | | |
| 40 | | | |
| 100 | | | |
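The percentage columns in the result tables are relative errors. Assuming they are relative L2 errors over sampled points (an assumption, since the symbols in the table headers are not recoverable here), the metric can be sketched as follows; the function name and the toy arrays are illustrative.

```python
import numpy as np

def relative_l2_error_percent(pred, exact):
    """Relative L2 error between two sampled fields, in percent."""
    return 100.0 * np.linalg.norm(pred - exact) / np.linalg.norm(exact)

# Toy velocity samples: three points with two components each.
exact = np.array([[1.0, 0.0], [0.0, 2.0], [2.0, 0.0]])
pred = exact + np.array([[0.0, 0.0], [0.0, 0.3], [0.0, 0.0]])

# The norm of the exact field is 3 and the norm of the difference is 0.3.
err = relative_l2_error_percent(pred, exact)
```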

References

- [1] Martín Abadi, Paul Barham, Jianmin Chen, Zhifeng Chen, Andy Davis, Jeffrey Dean, Matthieu Devin, Sanjay Ghemawat, Geoffrey Irving, Michael Isard, et al. Tensorflow: A system for large-scale machine learning. In 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), pages 265–283, 2016.

- [2] Basim K. Albuohimad. Analytical technique of the fractional Navier–Stokes model by Elzaki transform and homotopy perturbation method. AIP Conf. Proc., 2144(1):050002, 2019.

- [3] G. K. Batchelor. An Introduction to Fluid Dynamics. Cambridge University Press, 2000.

- [4] Shengze Cai, Zhiping Mao, Zhicheng Wang, Minglang Yin, and George Em Karniadakis. Physics-informed neural networks (PINNs) for fluid mechanics: A review. Acta Mech. Sin., pages 1–12, 2022.

- [5] Xinlei Chen and Kaiming He. Exploring simple Siamese representation learning. In Proceedings of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pages 15750–15758, 2021.

- [6] Jan W Cholewa and Tomasz Dlotko. Fractional Navier–Stokes equations. Discrete Contin. Dyn. Syst. B, 23(8):2967, 2018.

- [7] Alexandre Joel Chorin. Numerical study of slightly viscous flow. J. Fluid Mech., 57(4):785–796, 1973.

- [8] Dixia Fan, Liu Yang, Zhicheng Wang, Michael S. Triantafyllou, and George Em Karniadakis. Reinforcement learning for bluff body active flow control in experiments and simulations. PNAS, 117(42):26091–26098, 2020.

- [9] Xavier Glorot and Yoshua Bengio. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the 13th Int. Conf. on Artificial Intelligence and Statistics, pages 249–256. JMLR Workshop and Conf. Proc., 2010.

- [10] Jonathan Goodman. Convergence of the random vortex method. In Hydrodynamic Behavior and Interacting Particle Systems, pages 99–106. Springer, 1987.

- [11] Jiequn Han, Arnulf Jentzen, and Weinan E. Solving high-dimensional partial differential equations using deep learning. PNAS, 115(34):8505–8510, 2018.

- [12] Johannes N. Hendriks, Carl Jidling, Adrian G. Wills, and Thomas Bo Schön. Linearly constrained neural networks. Unpublished, 2020.

- [13] Richard Herrmann. Fractional Calculus: An Introduction for Physicists. World Scientific, 2011.

- [14] Kurt Hornik, Maxwell Stinchcombe, and Halbert White. Multilayer feedforward networks are universal approximators. Neural Networks, 2(5):359–366, 1989.

- [15] Alice Jaccod and Sergio Chibbaro. Constrained reversible system for Navier–Stokes turbulence. Phys. Rev. Lett., 127(19):194501, 2021.

- [16] Antony Jameson, Luigi Martinelli, and Niles A Pierce. Optimum aerodynamic design using the Navier–Stokes equations. Theor. Comput. Fluid Dyn., 10(1):213–237, 1998.

- [17] Xiaowei Jin, Shengze Cai, Hui Li, and George Em Karniadakis. NSFnets (Navier–Stokes flow nets): Physics-informed neural networks for the incompressible Navier–Stokes equations. J. Comput. Phys., 426:109951, 2021.

- [18] Jungeun Kim, Kookjin Lee, Dongeun Lee, Sheo Yon Jhin, and Noseong Park. DPM: A novel training method for physics-informed neural networks in extrapolation. In Proceedings of the AAAI Conf. on Artificial Intelligence, pages 8146–8154, 2021.

- [19] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. Unpublished, 2015.

- [20] Aditi Krishnapriyan, Amir Gholami, Shandian Zhe, Robert Kirby, and Michael W Mahoney. Characterizing possible failure modes in physics-informed neural networks. Adv. Neural Inf. Process. Syst., 34:26548–26560, 2021.

- [21] Jinxing Lai, Sheng Mao, Junling Qiu, Haobo Fan, Qian Zhang, Zhinan Hu, and Jianxun Chen. Investigation progresses and applications of fractional derivative model in geotechnical engineering. Math. Prob. Eng., 2016:9183296, 2016.

- [22] Anna Lischke, Guofei Pang, Mamikon Gulian, Fangying Song, Christian Glusa, Xiaoning Zheng, Zhiping Mao, Wei Cai, Mark M Meerschaert, Mark Ainsworth, et al. What is the fractional Laplacian? A comparative review with new results. J. Comput. Phys., 404:109009, 2020.

- [23] Ziqi Liu, Wei Cai, and Zhi-Qin John Xu. Multi-scale deep neural network (MscaleDNN) for solving Poisson-Boltzmann equation in complex domains. Commun. Comput. Phys., 28(5):1970–2001, 2020.

- [24] Ding-Gwo Long. Convergence of the random vortex method in two dimensions. J. Am. Math. Soc., 1(4):779–804, 1988.

- [25] Zichao Long, Yiping Lu, Xianzhong Ma, and Bin Dong. PDE-Net: Learning PDEs from data. In 35th Int. Conf. on Machine Learning, volume 80, pages 3208–3216. PMLR, 2018.

- [26] Lu Lu, Raphael Pestourie, Wenjie Yao, Zhicheng Wang, Francesc Verdugo, and Steven G Johnson. Physics-informed neural networks with hard constraints for inverse design. SIAM J. Sci. Comput., 43(6):B1105–B1132, 2021.

- [27] Andrew J. Majda and Andrea L. Bertozzi. Vorticity and Incompressible Flow. Cambridge Texts in Applied Mathematics. Cambridge University Press, Cambridge, 2001.

- [28] C. W. Oseen. Über Wirbelbewegung in einer reibenden Flüssigkeit. Ark. för Mat. Astron. och Fys., 1911.

- [29] Guofei Pang, Lu Lu, and George Em Karniadakis. fPINNs: Fractional physics-informed neural networks. SIAM J. Sci. Comput., 41(4):A2603–A2626, 2019.

- [30] Demetrios T. Papageorgiou. Film flows in the presence of electric fields. Ann. Rev. Fluid Mech., 51(1):155–187, 2019.

- [31] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst., 32, 2019.

- [32] Suraj Pawar, Omer San, Burak Aksoylu, Adil Rasheed, and Trond Kvamsdal. Physics guided machine learning using simplified theories. Phys. Fluids, 33(1):011701, 2021.

- [33] Lev Semenovich Pontryagin. Mathematical Theory of Optimal Processes. CRC Press, 1987.

- [34] Apostolos F Psaros, Kenji Kawaguchi, and George Em Karniadakis. Meta-learning PINN loss functions. J. Comput. Phys., 458:111121, 2022.

- [35] Zhongmin Qian, Youchun Qiu, and Yihuang Zhang. Tracking the vortex motion by using Brownian fluid particles. Phys. Fluids, 33(10):105113, 2021.

- [36] Zhongmin Qian, Endre Süli, and Yihuang Zhang. Random vortex dynamics via functional stochastic differential equations. Unpublished, 2022.

- [37] Nasim Rahaman, Aristide Baratin, Devansh Arpit, Felix Draxler, Min Lin, Fred Hamprecht, Yoshua Bengio, and Aaron Courville. On the spectral bias of neural networks. In Proceedings of the 36th Int. Conf. on Machine Learning, volume 97, pages 5301–5310. PMLR, 2019.

- [38] Maziar Raissi, Paris Perdikaris, and George E Karniadakis. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys., 378:686–707, 2019.

- [39] Maziar Raissi, Alireza Yazdani, and George Em Karniadakis. Hidden fluid mechanics: Learning velocity and pressure fields from flow visualizations. Science, 367(6481):1026–1030, 2020.

- [40] Alexander J Smits. A Physical Introduction to Fluid Mechanics. John Wiley & Sons Incorporated, 2000.

- [41] V. Sriram and Q.W. Ma. Review on the local weak form-based meshless method (MLPG): Developments and applications in ocean engineering. Appl. Ocean Res., 116:102883, 2021.

- [42] Luning Sun and Jian-Xun Wang. Physics-constrained Bayesian neural network for fluid flow reconstruction with sparse and noisy data. Theor. Appl. Mech. Lett., 10(3):161–169, 2020.

- [43] Geoffrey I Taylor. Diffusion by continuous movements. Proc. London Math. Soc., 2(1):196–212, 1922.

- [44] Roger Temam. Navier-Stokes Equations: Theory and Numerical Analysis, volume 343. American Mathematical Society, 2001.

- [45] Hado Van Hasselt, Arthur Guez, and David Silver. Deep reinforcement learning with double Q-learning. In Proceedings of the 30th AAAI Conf. on Artificial Intelligence, pages 2094–2100. AAAI Press, 2016.

- [46] Shisheng Wang. Extensions to the Navier–Stokes equations. Phys. Fluids, 34(5):053106, 2022.

- [47] Sifan Wang, Yujun Teng, and Paris Perdikaris. Understanding and mitigating gradient flow pathologies in physics-informed neural networks. SIAM J. Sci. Comput., 43(5):A3055–A3081, 2021.

- [48] Zhicheng Wang, Dixia Fan, Michael S. Triantafyllou, and George Em Karniadakis. A large-eddy simulation study on the similarity between free vibrations of a flexible cylinder and forced vibrations of a rigid cylinder. J. Fluids Struct., 101:103223, 2021.

- [49] Brian D. Wood, Xiaoliang He, and Sourabh V. Apte. Modeling turbulent flows in porous media. Ann. Rev. Fluid Mech., 52(1):171–203, 2020.

- [50] Zhi-Qin John Xu, Yaoyu Zhang, Tao Luo, Yanyang Xiao, and Zheng Ma. Frequency principle: Fourier analysis sheds light on deep neural networks. Unpublished, 2019.

- [51] Xicheng Zhang. Stochastic functional differential equations driven by Lévy processes and quasi-linear partial integro-differential equations. Ann. Appl. Probab., 22(6):2505–2538, 2012.

- [52] Xicheng Zhang. Stochastic Lagrangian particle approach to fractal Navier–Stokes equations. Commun. Math. Phys., 311(1):133–155, 2012.

- [53] Yong Zhou and Li Peng. On the time-fractional Navier–Stokes equations. Comput. Math. Appl., 73(6):874–891, 2017.

- [54] Yong Zhou and Li Peng. Weak solutions of the time-fractional Navier–Stokes equations and optimal control. Comput. Math. Appl., 73(6):1016–1027, 2017.