DPar2: Fast and Scalable PARAFAC2 Decomposition for Irregular Dense Tensors

Abstract

Given an irregular dense tensor, how can we efficiently analyze it? An irregular tensor is a collection of matrices whose columns have the same size and rows have different sizes from each other. PARAFAC2 decomposition is a fundamental tool to deal with an irregular tensor in applications including phenotype discovery and trend analysis. Although several PARAFAC2 decomposition methods exist, their efficiency is limited for irregular dense tensors due to the expensive computations involved with the tensor.

In this paper, we propose DPar2, a fast and scalable PARAFAC2 decomposition method for irregular dense tensors. DPar2 achieves high efficiency by effectively compressing each slice matrix of a given irregular tensor, careful reordering of computations with the compression results, and exploiting the irregularity of the tensor. Extensive experiments show that DPar2 is up to faster than competitors on real-world irregular tensors while achieving comparable accuracy. In addition, DPar2 is scalable with respect to the tensor size and target rank.

Index Terms:

irregular dense tensor, PARAFAC2 decomposition, efficiencyI Introduction

How can we efficiently analyze an irregular dense tensor? Many real-world multi-dimensional arrays are represented as irregular dense tensors; an irregular tensor is a collection of matrices with different row lengths. For example, stock data can be represented as an irregular dense tensor; the listing period is different for each stock (irregularity), and almost all of the entries of the tensor are observable during the listing period (high density). The irregular tensor of stock data is the collection of the stock matrices whose row and column dimension corresponds to time and features (e.g., the opening price, the closing price, the trade volume, etc.), respectively. In addition to stock data, many real-world data including music song data and sound data are also represented as irregular dense tensors. Each song can be represented as a slice matrix (e.g., time-by-frequency matrix) whose rows correspond to the time dimension. Then, the collection of songs is represented as an irregular tensor consisting of slice matrices of songs each of whose time length is different. Sound data are represented similarly.

Tensor decomposition has attracted much attention from the data mining community to analyze tensors [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]. Specifically, PARAFAC2 decomposition has been widely used for modeling irregular tensors in various applications including phenotype discovery [11, 12], trend analysis [13], and fault detection [14]. However, existing PARAFAC2 decomposition methods are not fast and scalable enough for irregular dense tensors. Perros et al. [11] improve the efficiency for handling irregular sparse tensors, by exploiting the sparsity patterns of a given irregular tensor. Many recent works [12, 15, 16, 17] adopt their idea to handle irregular sparse tensors. However, they are not applicable to irregular dense tensors that have no sparsity pattern. Although Cheng and Haardt [18] improve the efficiency of PARAFAC2 decomposition by preprocessing a given tensor, there is plenty of room for improvement in terms of computational costs. Moreover, there remains a need for fully employing multicore parallelism. The main challenge to successfully design a fast and scalable PARAFAC2 decomposition method is how to minimize the computational costs involved with an irregular dense tensor and the intermediate data generated in updating factor matrices.

In this paper, we propose DPar2 (Dense PARAFAC2 decomposition), a fast and scalable PARAFAC2 decomposition method for irregular dense tensors. Based on the characteristics of real-world data, DPar2 compresses each slice matrix of a given irregular tensor using randomized Singular Value Decomposition (SVD). The small compressed results and our careful ordering of computations considerably reduce the computational costs and the intermediate data. In addition, DPar2 maximizes multi-core parallelism by considering the difference in sizes between slices. With these ideas, DPar2 achieves higher efficiency and scalability than existing PARAFAC2 decomposition methods on irregular dense tensors. Extensive experiments show that DPar2 outperforms the existing methods in terms of speed, space, and scalability, while achieving a comparable fitness, where the fitness indicates how a method approximates a given data well (see Section IV-A).

The contributions of this paper are as follows. {itemize*}

Algorithm. We propose DPar2, a fast and scalable PARAFAC2 decomposition method for decomposing irregular dense tensors.

Analysis. We provide analysis for the time and the space complexities of our proposed method DPar2.

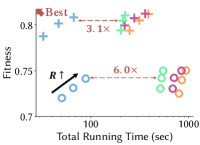

Experiment. DPar2 achieves up to faster running time than previous PARAFAC2 decomposition methods based on ALS while achieving a similar fitness (see Fig. 1).

II Preliminaries

In this section, we describe tensor notations, tensor operations, Singular Value Decomposition (SVD), and PARAFAC2 decomposition. We use the symbols listed in Table I.

| Symbol | Description |

| irregular tensor of slices for | |

| slice matrix () | |

| -th row of a matrix | |

| -th column of a matrix | |

| -th element of a matrix | |

| mode- matricization of a tensor | |

| , | factor matrices of the th slice |

| , | factor matrices of an irregular tensor |

| , , | SVD results of the th slice |

| , , | SVD results of the second stage |

| th vertical block matrix of | |

| , , | SVD results of |

| target rank | |

| Kronecker product | |

| Khatri-Rao product | |

| element-wise product | |

| horizontal concatenation | |

| vectorization of a matrix |

II-A Tensor Notation and Operation

We use boldface lowercases (e.g. ) and boldface capitals (e.g. ) for vectors and matrices, respectively. In this paper, indices start at . An irregular tensor is a 3-order tensor whose -frontal slice is . We denote irregular tensors by instead of where is the number of -frontal slices of the tensor. An example is described in Fig. 2. We refer the reader to [19] for the definitions of tensor operations including Frobenius norm, matricization, Kronecker product, and Khatri-Rao product.

II-B Singular Value Decomposition (SVD)

Singular Value Decomposition (SVD) decomposes to . is the left singular vector matrix of ; is a column orthogonal matrix where is the rank of and , , are the eigenvectors of . is an diagonal matrix whose diagonal entries are singular values. The -th singular value is in where . is the right singular vector matrix of ; is a column orthogonal matrix where , , are the eigenvectors of .

Randomized SVD. Many works [21, 20, 22] have introduced efficient SVD methods to decompose a matrix by applying randomized algorithms. We introduce a popular randomized SVD in Algorithm 1. Randomized SVD finds a column orthogonal matrix of using random matrix , constructs a smaller matrix , and finally obtains the SVD result , , of by computing SVD for , i.e., . Given a matrix , the time complexity of randomized SVD is where is the target rank.

II-C PARAFAC2 decomposition

PARAFAC2 decomposition proposed by Harshman [23] successfully deals with irregular tensors. The definition of PARAFAC2 decomposition is as follows:

Definition 1 (PARAFAC2 Decomposition).

Given a target rank and a 3-order tensor whose -frontal slice is for , PARAFAC2 decomposition approximates each -th frontal slice by . is a matrix of the size , is a diagonal matrix of the size , and is a matrix of the size which are common for all the slices.

The objective function of PARAFAC2 decomposition [23] is given as follows.

| (1) |

For uniqueness, Harshman [23] imposed the constraint (i.e., for all ), and replace with where is a column orthogonal matrix and is a common matrix for all the slices. Then, Equation (1) is reformulated with :

| (2) |

Fig. 2 shows an example of PARAFAC2 decomposition for a given irregular tensor. A common approach to solve the above problem is ALS (Alternating Least Square) which iteratively updates a target factor matrix while fixing all factor matrices except for the target. Algorithm 2 describes PARAFAC2-ALS. First, we update each while fixing , , for (lines 4 and 5). By computing SVD of as , we update as , which minimizes Equation (3) over [11, 24, 25]. After updating , the remaining factor matrices , , is updated by minimizing the following objective function:

| (3) |

Minimizing this function is to update , , using CP decomposition of a tensor whose -th frontal slice is (lines 8 and 10). We run a single iteration of CP decomposition for updating them [24] (lines 11 to 16). , , , and are alternatively updated until convergence.

Iterative computations with an irregular dense tensor require high computational costs and large intermediate data. RD-ALS [18] reduces the costs by preprocessing a given tensor and performing PARAFAC2 decomposition using the preprocessed result, but the improvement of RD-ALS is limited. Also, recent works successfully have dealt with sparse irregular tensors by exploiting sparsity. However, the efficiency of their models depends on the sparsity patterns of a given irregular tensor, and thus there is little improvement on irregular dense tensors. Specifically, computations with large dense slices for each iteration are burdensome as the number of iterations increases. We focus on improving the efficiency and scalability in irregular dense tensors.

III Proposed Method

In this section, we propose DPar2, a fast and scalable PARAFAC2 decomposition method for irregular dense tensors.

III-A Overview

Before describing main ideas of our method, we present main challenges that need to be tackled.

-

C1.

Dealing with large irregular tensors. PARAFAC2 decomposition (Algorithm 2) iteratively updates factor matrices (i.e., , , and ) using an input tensor. Dealing with a large input tensor is burdensome to update the factor matrices as the number of iterations increases.

-

C2.

Minimizing numerical computations and intermediate data. How can we minimize the intermediate data and overall computations?

-

C3.

Maximizing multi-core parallelism. How can we parallelize the computations for PARAFAC2 decomposition?

The main ideas that address the challenges mentioned above are as follows:

As shown in Fig. 3, DPar2 first compresses each slice of an irregular tensor using randomized SVD (Section III-B). The compression is performed once before iterations, and only the compression results are used at iterations. It significantly reduces the time and space costs in updating factor matrices. After compression, DPar2 updates factor matrices at each iteration, by exploiting the compression results (Sections III-C to III-E). Careful reordering of computations is required to achieve high efficiency. Also, by carefully allocating input slices to threads, DPar2 accelerates the overall process (Section III-F).

III-B Compressing an irregular input tensor

DPar2 (see Algorithm 3) is a fast and scalable PARAFAC2 decomposition method based on ALS described in Algorithm 2. The main challenge that needs to be tackled is to minimize the number of heavy computations involved with a given irregular tensor consisting of slices for (in lines 4 and 8 of Algorithm 2). As the number of iterations increases (lines 2 to 17 in Algorithm 2), the heavy computations make PARAFAC2-ALS slow. For efficiency, we preprocess a given irregular tensor into small matrices, and then update factor matrices by carefully using the small ones.

Our approach to address the above challenges is to compress a given irregular tensor before starting iterations. As shown in Fig. 4, our main idea is two-stage lossy compression with randomized SVD for the given tensor: 1) DPar2 performs randomized SVD for each slice for at target rank , and 2) DPar2 performs randomized SVD for a matrix, the horizontal concatenation of singular value matrices and right singular vector matrices of slices . Randomized SVD allows us to compress slice matrices with low computational costs and low errors.

First Stage. In the first stage, DPar2 compresses a given irregular tensor by performing randomized SVD for each slice at target rank (line 3 in Algorithm 3).

| (4) |

where is a matrix consisting of left singular vectors, is a diagonal matrix whose elements are singular values, and is a matrix consisting of right singular vectors.

Second Stage. Although small compressed data are generated in the first step, there is a room to further compress the intermediate data from the first stage. In the second stage, we compress a matrix which is the horizontal concatenation of for . Compressing the matrix maximizes the efficiency of updating factor matrices , , and (see Equation (3)) at later iterations. We construct a matrix by horizontally concatenating for (line 5 in Algorithm 3). Then, DPar2 performs randomized SVD for (line 6 in Algorithm 3):

| (5) |

where is a matrix consisting of left singular vectors, is a diagonal matrix whose elements are singular values, and is a matrix consisting of right singular vectors.

With the two stages, we obtain the compressed results , , , and for . Before describing how to update factor matrices, we re-express the -th slice by using the compressed results:

| (6) |

where is the th vertical block matrix of :

| (7) |

Since is the th horizontal block of and is the th horizontal block of , corresponds to . Therefore, we obtain Equation (6) by replacing with from Equation (4).

In updating factor matrices, we use instead of . The two-stage compression lays the groundwork for efficient updates.

III-C Overview of update rule

Our goal is to efficiently update factor matrices, , , and and for , using the compressed results . The main challenge of updating factor matrices is to minimize numerical computations and intermediate data by exploiting the compressed results obtained in Section III-B. A naive approach would reconstruct from the compressed results, and then update the factor matrices. However, this approach fails to improve the efficiency of updating factor matrices. We propose an efficient update rule using the compressed results to 1) find and (lines 5 and 8 in Algorithm 2), and 2) compute a single iteration of CP-ALS (lines 11 to 13 in Algorithm 2).

There are two differences between our update rule and PARAFAC2-ALS (Algorithm 2). First, we avoid explicit computations of and . Instead, we find small factorized matrices of and , respectively, and then exploit the small ones to update , , and . The small matrices are computed efficiently by exploiting the compressed results instead of . The second difference is that DPar2 obtains , , and using the small factorized matrices of . Careful ordering of computations with them considerably reduces time and space costs at each iteration. We describe how to find the factorized matrices of and in Section III-D, and how to update factor matrices in Section III-E.

III-D Finding the factorized matrices of and

The first goal of updating factor matrices is to find the factorized matrices of and for , respectively. In Algorithm 2, finding and is expensive due to the computations involved with (lines 4 and 8 in Algorithm 2). To reduce the costs for and , our main idea is to exploit the compressed results , , , and , instead of . Additionally, we exploit the column orthogonal property of , i.e., , where is the identity matrix.

We first re-express using the compressed results obtained in Section III-B. DPar2 reduces the time and space costs for by exploiting the column orthogonal property of . First, we express as by replacing with . Next, we need to obtain left and right singular vectors of . A naive approach is to compute SVD of , but there is a more efficient way than this approach. Thanks to the column orthogonal property of , DPar2 performs SVD of , not , at target rank (line 9 in Algorithm 3):

| (8) |

where is a diagonal matrix whose entries are the singular values of , the column vectors of and are the left singular vectors and the right singular vectors of , respectively. Then, we obtain the factorized matrices of as follows:

| (9) |

where and are the left and the right singular vectors of , respectively. We avoid the explicit construction of , and use instead of . Since is already column-orthogonal, we avoid performing SVD of , which are much larger than .

Next, we find the factorized matrices of . DPar2 re-expresses (line 8 in Algorithm 2) as using Equation (6). Instead of directly computing , we replace with . Then, we represent as the following expression (line 12 in Algorithm 3):

Note that we use the property , where is the identity matrix of size , for the last equality. By exploiting the factorized matrices of , we compute without involving in the process.

III-E Updating , , and

The next goal is to efficiently update the matrices , , and using the small factorized matrices of . Naively, we would compute and run a single iteration of CP-ALS with to update , , and (lines 11 to 13 in Algorithm 2). However, multiplying a matricized tensor and a Khatri-Rao product (e.g., ) is burdensome, and thus we exploit the structure of the decomposed results of to reduce memory requirements and computational costs. In other word, we do not compute , and use only in updating , , and . Note that the -th frontal slice of , , is .

Updating . In , we focus on efficiently computing based on Lemma 1. A naive computation for requires a high computational cost due to the explicit reconstruction of . Therefore, we compute that term without the reconstruction by carefully determining the order of computations and exploiting the factorized matrices of , , , , , and for . With Lemma 1, we reduce the computational cost of to .

Lemma 1.

Let us denote with . is equal to .

Proof.

is represented as follows:

where is the identity matrix of size . Then, is expressed as follows:

The mixed-product property (i.e., ) is used in the above equation. Therefore, is equal to . We represent it as using block matrix multiplication since the -th vertical block vector of is . ∎

As shown in Fig. 5, we compute column by column. In computing , we compute , sum up for all , and then perform matrix multiplication between the two preceding results (line 14 in Algorithm 3). After computing , we update by computing where denotes the Moore-Penrose pseudoinverse (line 15 in Algorithm 3). Note that the pseudoinverse operation requires a lower computational cost compared to computing since the size of is small.

Updating . In computing , we need to efficiently compute based on Lemma 2. As in updating , a naive computation for requires a high computational cost . We efficiently compute with the cost , by carefully determining the order of computations and exploiting the factorized matrices of .

Lemma 2.

Let us denote with . is equal to .

Proof.

is represented as follows:

Then, is expressed as follows:

is equal to according to the above equation. ∎

As shown in Fig. 6, we compute column by column. After computing , we update by computing (lines 16 and 17 in Algorithm 3).

Updating . In computing , we efficiently compute based on Lemma 3. As in updating and , a naive computation for requires a high computational cost . We compute with the cost based on Lemma 3. Exploiting the factorized matrices of and carefully determining the order of computations improves the efficiency.

Lemma 3.

Let us denote with . is equal to where denotes the vectorization of a matrix.

Proof.

is represented as follows:

where is the identity matrix of size . The property of the vectorization (i.e., ) is used. Then, is expressed as follows:

is according to the above equation. ∎

We compute row by row. Fig. 7 shows how we compute . In computing , we first compute , and then obtain for all (line 18 in Algorithm 3). After computing , we update by computing where denotes the Moore-Penrose pseudoinverse (line 19 in Algorithm 3). We obtain whose diagonal elements correspond to the th row vector of (line 21 in Algorithm 3).

Convergence Criterion. At the end of each iteration, we determine whether to stop or not (line 23 in Algorithm 3) based on the variation of where is the th reconstructed slice. However, measuring reconstruction errors is inefficient since it requires high time and space costs proportional to input slices . To efficiently verify the convergence, our idea is to exploit instead of , since the objective of our update process is to minimize the difference between and . With this idea, we improve the efficiency by computing , not the reconstruction errors. Our computation requires the time and space costs which are much lower than the costs and of naively computing , respectively. From , we derive . Since the Frobenius norm is unitarily invariant, we modify the computation as follows:

where and are equal to since and are orthonormal matrices. Note that the size of and is which is much smaller than the size of input slices . This modification completes the efficiency of our update rule.

III-F Careful distribution of work

The last challenge for an efficient and scalable PARAFAC2 decomposition method is how to parallelize the computations described in Sections III-B to III-E. Although a previous work [11] introduces the parallelization with respect to the slices, there is still a room for maximizing parallelism. Our main idea is to carefully allocate input slices to threads by considering the irregularity of a given tensor.

The most expensive operation is to compute randomized SVD of input slices for all ; thus we first focus on how well we parallelize this computation (i.e., lines 2 to 4 in Algorithm 3). A naive approach is to randomly allocate input slices to threads, and let each thread compute randomized SVD of the allocated slices. However, the completion time of each thread can vary since the computational cost of computing randomized SVD is proportional to the number of rows of slices; the number of rows of input slices is different from each other as shown in Fig. 8. Therefore, we need to distribute fairly across each thread considering their numbers of rows.

For , consider that an th thread performs randomized SVD for slices in a set where is the number of threads. To reduce the completion time, the sums of rows of slices in the sets should be nearly equal to each other. To achieve it, we exploit a greedy number partitioning technique that repeatedly adds a slice into a set with the smallest sum of rows. Algorithm 4 describes how to construct the sets for compressing input slices in parallel. Let be a list containing the number of rows of for (line 3 in Algorithm 4). We first obtain lists and , sorted values and those corresponding indices, by sorting in descending order (line 4 in Algorithm 4). We repeatedly add a slice to a set that has the smallest sum. For each , we find the index of the minimum in whose th element corresponds to the sum of row sizes of slices in the th set (line 6 in Algorithm 4). Then, we add a slice to the set where is equal to , and update the list by (lines 7 to 9 in Algorithm 4). Note that , , and denote the th element of , , and , respectively. After obtaining the sets for , th thread performs randomized SVD for slices in the set .

After decomposing for all , we do not need to consider the irregularity for parallelism since there is no computation with which involves the irregularity. Therefore, we uniformly allocate computations across threads for all slices. In each iteration (lines 8 to 22 in Algorithm 3), we easily parallelize computations. First, we parallelize the iteration (lines 8 to 10) for all slices. To update , , and , we need to compute , , and in parallel. In Lemmas 1 and 2, DPar2 parallelly computes and for , respectively. In Lemma 3, DPar2 parallelly computes for .

III-G Complexities

We analyze the time complexity of DPar2.

Lemma 4.

Compressing input slices takes time.

Proof.

The SVD in the first stage takes times since computing randomized SVD of takes time. Then, the SVD in the second stage takes due to randomized SVD of . Therefore, the time complexity of the SVD in the two stages is . ∎

Lemma 5.

At each iteration, computing and updating , , and takes time.

Proof.

For , computing and performing SVD of it for all take . Updating each of , , and takes time. Therefore, the complexity for , , , and is . ∎

Theorem 1.

The time complexity of DPar2 is where is the number of iterations.

Proof.

Theorem 2.

The size of preprocessed data of DPar2 is .

Proof.

The size of preprocessed data of DPar2 is proportional to the size of , , , and for . The size of and is and , respectively. For each , the size of and is and , respectively. Therefore, the size of preprocessed data of DPar2 is . ∎

IV Experiments

In this section, we experimentally evaluate the performance of DPar2. We answer the following questions: {itemize*}

Performance (Section IV-B). How quickly and accurately does DPar2 perform PARAFAC2 decomposition compared to other methods?

Data Scalability (Section IV-C). How well does DPar2 scale up with respect to tensor size and target rank?

Multi-core Scalability (Section IV-D). How much does the number of threads affect the running time of DPar2?

Discovery (Section IV-E). What can we discover from real-world tensors using DPar2?

IV-A Experimental Settings

We describe experimental settings for the datasets, competitors, parameters, and environments.

Machine. We use a workstation with CPUs (Intel Xeon E5-2630 v4 @ 2.2GHz), each of which has cores, and 512GB memory for the experiments.

Real-world Data. We evaluate the performance of DPar2 and competitors on real-world datasets summarized in Table II. FMA dataset111https://github.com/mdeff/fma [26] is the collection of songs. Urban Sound dataset222https://urbansounddataset.weebly.com/urbansound8k.html [27] is the collection of urban sounds such as drilling, siren, and street music. For the two datasets, we convert each time series into an image of a log-power spectrogram so that their forms are (time, frequency, song; value) and (time, frequency, sound; value), respectively. US Stock dataset333https://datalab.snu.ac.kr/dpar2 is the collection of stocks on the US stock market. Korea Stock dataset444https://github.com/jungijang/KoreaStockData [3] is the collection of stocks on the South Korea stock market. Each stock is represented as a matrix of (date, feature) where the feature dimension includes 5 basic features and 83 technical indicators. The basic features collected daily are the opening, the closing, the highest, and the lowest prices and trading volume, and technical indicators are calculated based on the basic features. The two stock datasets have the form of (time, feature, stock; value). Activity data555https://github.com/titu1994/MLSTM-FCN and Action data5 are the collection of features for motion videos. The two datasets have the form of (frame, feature, video; value). We refer the reader to [28] for their feature extraction. Traffic data666https://github.com/florinsch/BigTrafficData is the collection of traffic volume around Melbourne, and its form is (sensor, frequency, time; measurement). PEMS-SF data777http://www.timeseriesclassification.com/ contain the occupancy rate of different car lanes of San Francisco bay area freeways: (station, timestamp, day; measurement). Traffic data and PEMS-SF data are 3-order regular tensors, but we can analyze them using PARAFAC2 decomposition approaches.

Synthetic Data. We evaluate the scalability of DPar2 and competitors on synthetic tensors. Given the number of slices, and the slice sizes and , we generate a synthetic tensor using tenrand(I, J, K) function in Tensor Toolbox [31], which randomly generates a tensor . We construct a tensor where is equal to for .

Competitors. We compare DPar2 with PARAFAC2 decomposition methods based on ALS. All the methods including DPar2 are implemented in MATLAB (R2020b).

-

•

DPar2: the proposed PARAFAC2 decomposition model which preprocesses a given irregular dense tensor and updates factor matrices using the preprocessing result.

- •

- •

-

•

SPARTan [11]: fast and scalable PARAFAC2 decomposition for irregular sparse tensors. Although it targets on sparse irregular tensors, it can be adapted to irregular dense tensors. We use the code implemented by authors888https://github.com/kperros/SPARTan.

Parameters. We use the following parameters.

-

•

Number of threads: we use threads except in Section IV-D.

-

•

Max number of iterations: the maximum number of iterations is set to .

- •

To compare running time, we run each method times, and report the average.

Fitness. We evaluate the fitness defined as follows:

where is the -th input slice and is the -th reconstructed slice of PARAFAC2 decomposition. Fitness close to indicates that a model approximates a given input tensor well.

IV-B Performance (Q1)

We evaluate the fitness and the running time of DPar2, RD-ALS, SPARTan, and PARAFAC2-ALS.

Trade-off. Fig. 1 shows that DPar2 provides the best trade-off of running time and fitness on real-world irregular tensors for the three target ranks: , , and . DPar2 achieves faster running time than the competitors for FMA dataset while having a comparable fitness. In addition, DPar2 provides at least faster running times than the competitors for the other datasets. The performance gap is large for FMA and Urban datasets whose sizes are larger than those of the other datasets. It implies that DPar2 is more scalable than the competitors in terms of tensor sizes.

Preprocessing time. We compare DPar2 with RD-ALS and exclude SPARTan and PARAFAC2-ALS since only RD-ALS has a preprocessing step. As shown in Fig. 9(a), DPar2 is up to faster than RD-ALS. There is a large performance gap on FMA and Urban datasets since RD-ALS cannot avoid the overheads for the large tensors. RD-ALS performs SVD of the concatenated slice matrices , which leads to its slow preprocessing time.

Iteration time. Fig. 9(b) shows that DPar2 outperforms competitors for running time at each iteration. Compared to SPARTan and PARAFAC2-ALS, DPar2 significantly reduces the running time per iteration due to the small size of the preprocessed results. Although RD-ALS reduces the computational cost at each iteration by preprocessing a given tensor, DPar2 is up to faster than RD-ALS. Compared to RD-ALS that computes the variation of for the convergence criterion, DPar2 efficiently verifies the convergence by computing the variation of , which affects the running time at each iteration. In summary, DPar2 obtains , , and in a reasonable running time even if the number of iterations increases.

Size of preprocessed data. We measure the size of preprocessed data on real-world datasets. For PARAFAC2-ALS and SPARTan, we report the size of input irregular tensor since they have no preprocessing step. Compared to an input irregular tensor, DPar2 generates much smaller preprocessed data by up to times as shown in Fig. 10. Given input slices of size , the compression ratio increases as the number of columns increases; the compression ratio is larger on FMA, Urban, Activity, and Action datasets than on US Stock, KR Stock, Traffic, and PEMS-SF. This is because the compression ratio is proportional to assuming ; is the dominant term which is much larger than and .

IV-C Data Scalability (Q2)

We evaluate the data scalability of DPar2 by measuring the running time on several synthetic datasets. We first compare the performance of DPar2 and the competitors by increasing the size of an irregular tensor. Then, we measure the running time by changing a target rank.

Tensor Size. To evaluate the scalability with respect to the tensor size, we generate synthetic tensors of the following sizes : . For simplicity, we set . Fig. 11(a) shows that DPar2 is up to faster than competitors on all synthetic tensors; in addition, the slope of DPar2 is lower than that of competitors. Also note that only DPar2 obtains factor matrices of PARAFAC2 decomposition within a minute for all the datasets.

Rank. To evaluate the scalability with respect to rank, we generate the following synthetic data: , , and . Given the synthetic tensors, we measure the running time for target ranks: , , , , and . DPar2 is up to faster than the second-fastest method with respect to rank in Fig. 11(b). For higher ranks, the performance gap slightly decreases since DPar2 depends on the performance of randomized SVD which is designed for a low target rank. Still, DPar2 is up to faster than competitors with respect to the highest rank used in our experiment.

IV-D Multi-core Scalability (Q3)

We generate the following synthetic data: , , and , and evaluate the multi-core scalability of DPar2 with respect to the number of threads: and . indicates the running time when using the number of threads. As shown in Fig. 11(c), DPar2 gives near-linear scalability, and accelerates when the number of threads increases from to .

IV-E Discoveries (Q4)

We discover various patterns using DPar2 on real-world datasets.

IV-E1 Feature Similarity on Stock Dataset

We measure the similarities between features on US Stock and Korea Stock datasets, and compare the results. We compute Pearson Correlation Coefficient (PCC) between , which represents a latent vector of the th feature. For effective visualization, we select 4 price features (the opening, the closing, the highest, and the lowest prices), and 4 representative technical indicators described as follows:

-

•

OBV (On Balance Volume): a technical indicator for cumulative trading volume. If today’s closing price is higher than yesterday’s price, OBV increases by the amount of today’s volume. If not, OBV decreases by the amount of today’s volume.

-

•

ATR (Average True Range): a technical indicator for volatility developed by J. Welles Wilder, Jr. It increases in high volatility while decreasing in low volatility.

-

•

MACD (Moving Average Convergence and Divergence): a technical indicator for trend developed by Gerald Appel. It indicates the difference between long-term and short-term exponential moving averages (EMA).

-

•

STOCH (Stochastic Oscillator): a technical indicator for momentum developed by George Lane. It indicates the position of the current closing price compared to the highest and the lowest prices in a duration.

Fig. 12(a) and 12(b) show correlation heatmaps for US Stock data and Korea Stock data, respectively. We analyze correlation patterns between price features and technical indicators. On both datasets, STOCH has a negative correlation and MACD has a weak correlation with the price features. On the other hand, OBV and ATR indicators have different patterns on the two datasets. On the US stock dataset, ATR and OBV have a positive correlation with the price features. On the Korea stock dataset, OBV has little correlation with the price features. Also, ATR has little correlation with the price features except for the closing price. These different patterns are due to the difference of the two markets in terms of market size, market stability, tax, investment behavior, etc.

IV-E2 Finding Similar Stocks

On US Stock dataset, which stock is similar to a target stock in a time range that a user is curious about? In this section, we provide analysis by setting the target stock to Microsoft (Ticker: MSFT), and the range a duration when the COVID-19 was very active (Jan. 2, 2020 - Apr. 15, 2021). We efficiently answer the question by 1) constructing the tensor included in the range, 2) obtaining factor matrices with DPar2, and 3) post-processing the factor matrices of DPar2. Since represents temporal latent vectors of the th stock, the similarity between stocks and is computed as follows:

| (10) |

where is the exponential function. We set to in this section. Note that we use only the stocks that have the same target range since is defined only when the two matrices are of the same size.

Based on , we find similar stocks to using two different techniques: 1) -nearest neighbors, and 2) Random Walks with Restarts (RWR). The first approach simply finds stocks similar to the target stock, while the second one finds similar stocks by considering the multi-faceted relationship between stocks.

-nearest neighbors. We compute for where is the number of stocks to be compared, and find top- similar stocks to , Microsoft (Ticker: MSFT). In Table IV(a), the Microsoft stock is similar to stocks of the Technology sector or with a large capitalization (e.g., Amazon.com, Apple, and Alphabet) during the COVID-19. Moody’s is also similar to the target stock.

| Rank | Stock Name | Sector |

| 1 | Adobe | Technology |

| 2 | Amazon.com | Consumer Cyclical |

| 3 | Apple | Technology |

| 4 | Moody’s | Financial Services |

| 5 | Intuit | Technology |

| 6 | ANSYS | Technology |

| 7 | Synopsys | Technology |

| 8 | Alphabet | Communication Services |

| 9 | ServiceNow | Technology |

| 10 | EPAM Systems | Technology |

| Rank | Stock Name | Sector |

| 1 | Synopsys | Technology |

| 2 | ANSYS | Technology |

| 3 | Adobe | Technology |

| 4 | Amazon.com | Consumer Cyclical |

| 5 | Netflix | Communication Services |

| 6 | Autodesk | Technology |

| 7 | Apple | Technology |

| 8 | Moody’s | Financial Services |

| 9 | NVIDIA | Technology |

| 10 | S&P Global | Financial Services |

Random Walks with Restarts (RWR). We find similar stocks using another approach, Random Walks with Restarts (RWR) [32, 33, 34, 35]. To exploit RWR, we first a similarity graph based on the similarities between stocks. The elements of the adjacency matrix of the graph is defined as follows:

| (11) |

We ignore self-loops by setting to for .

After constructing the graph, we find similar stocks using RWR. The scores is computed by using the power iteration [36] as described in [37]:

| (12) |

where is the row-normalized adjacency matrix, is the score vector at the th iteration, is a restart probability, and is a query vector. We set to , the maximum iteration to , and to the one-hot vector where the element corresponding to Microsoft is 1, and the others are 0.

As shown in Table III, the common pattern of the two approaches is that many stocks among the top-10 belong to the technology sector. There is also a difference. In Table III, the blue color indicates the stocks that appear only in one of the two approaches among the top-10. In Table IV(a), the -nearest neighbor approach simply finds the top- stocks which are closest to Microsoft based on distances. On the other hand, the RWR approach finds the top- stocks by considering more complicated relationships. There are stocks appearing only in Table IV(b). S&P Global is included since it is very close to Moody’s which is ranked th in Table IV(a). Netflix, Autodesk, and NVIDIA are relatively far from the target stock compared to stocks such as Intuit and Alphabet, but they are included in the top- since they are very close to Amazon.com, Adobe, ANSYS, and Synopsys. This difference comes from the fact that the -nearest neighbors approach considers only distances from the target stock while the RWR approach considers distances between other stocks in addition to the target stock.

DPar2 allows us to efficiently obtain factor matrices, and find interesting patterns in data.

V Related Works

We review related works on tensor decomposition methods for regular and irregular tensors.

Tensor decomposition on regular dense tensors. There are efficient tensor decomposition methods on regular dense tensors. Pioneering works [38, 39, 40, 41, 42, 43] efficiently decompose a regular tensor by exploiting techniques that reduce time and space costs. Also, a lot of works [44, 45, 46, 47] proposed scalable tensor decomposition methods with parallelization to handle large-scale tensors. However, the aforementioned methods fail to deal with the irregularity of dense tensors since they are designed for regular tensors.

PARAFAC2 decomposition on irregular tensors. Cheng and Haardt [18] proposed RD-ALS which preprocesses a given tensor and performs PARAFAC2 decomposition using the preprocessed result. However, RD-ALS requires high computational costs to preprocess a given tensor. Also, RD-ALS is less efficient in updating factor matrices since it computes reconstruction errors for the convergence criterion at each iteration. Recent works [11, 12, 15] attempted to analyze irregular sparse tensors. SPARTan [11] is a scalable PARAFAC2-ALS method for large electronic health records (EHR) data. COPA [12] improves the performance of PARAFAC2 decomposition by applying various constraints (e.g., smoothness). REPAIR [15] strengthens the robustness of PARAFAC2 decomposition by applying low-rank regularization. We do not compare DPar2 with COPA and REPAIR since they concentrate on imposing practical constraints to handle irregular sparse tensors, especially EHR data. However, we do compare DPar2 with SPARTan which the efficiency of COPA and REPAIR is based on. TASTE [16] is a joint PARAFAC2 decomposition method for large temporal and static tensors. Although the above methods are efficient in PARAFAC2 decomposition for irregular tensors, they concentrate only on irregular sparse tensors, especially EHR data. LogPar [17], a logistic PARAFAC2 decomposition method, analyzes temporal binary data represented as an irregular binary tensor. SPADE [48] efficiently deals with irregular tensors in a streaming setting. TedPar [49] improves the performance of PARAFAC2 decomposition by explicitly modeling the temporal dependency. Although the above methods effectively deal with irregular sparse tensors, especially EHR data, none of them focus on devising an efficient PARAFAC2 decomposition method on irregular dense tensors. On the other hand, DPar2 is a fast and scalable PARAFAC2 decomposition method for irregular dense tensors.

VI Conclusion

In this paper, we propose DPar2, a fast and scalable PARAFAC2 decomposition method for irregular dense tensors. By compressing an irregular input tensor, careful reordering of the operations with the compressed results in each iteration, and careful partitioning of input slices, DPar2 successfully achieves high efficiency to perform PARAFAC2 decomposition for irregular dense tensors. Experimental results show that DPar2 is up to faster than existing PARAFAC2 decomposition methods while achieving comparable accuracy, and it is scalable with respect to the tensor size and target rank. With DPar2, we discover interesting patterns in real-world irregular tensors. Future work includes devising an efficient PARAFAC2 decomposition method in a streaming setting.

Acknowledgment

This work was partly supported by the National Research Foundation of Korea(NRF) funded by MSIT(2022R1A2C3007921), and Institute of Information & communications Technology Planning & Evaluation(IITP) grant funded by MSIT [No.2021-0-01343, Artificial Intelligence Graduate School Program (Seoul National University)] and [NO.2021-0-02068, Artificial Intelligence Innovation Hub (Artificial Intelligence Institute, Seoul National University)]. The Institute of Engineering Research and ICT at Seoul National University provided research facilities for this work. U Kang is the corresponding author.

References

- [1] Y.-R. Lin, J. Sun, P. Castro, R. Konuru, H. Sundaram, and A. Kelliher, “Metafac: community discovery via relational hypergraph factorization,” in KDD, 2009, pp. 527–536.

- [2] S. Spiegel, J. Clausen, S. Albayrak, and J. Kunegis, “Link prediction on evolving data using tensor factorization,” in PAKDD. Springer, 2011, pp. 100–110.

- [3] J.-G. Jang and U. Kang, “Fast and memory-efficient tucker decomposition for answering diverse time range queries,” in Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 2021, pp. 725–735.

- [4] S. Oh, N. Park, J.-G. Jang, L. Sael, and U. Kang, “High-performance tucker factorization on heterogeneous platforms,” IEEE Transactions on Parallel and Distributed Systems, vol. 30, no. 10, pp. 2237–2248, 2019.

- [5] T. Kwon, I. Park, D. Lee, and K. Shin, “Slicenstitch: Continuous cp decomposition of sparse tensor streams,” in ICDE. IEEE, 2021, pp. 816–827.

- [6] D. Ahn, S. Kim, and U. Kang, “Accurate online tensor factorization for temporal tensor streams with missing values,” in CIKM ’21: The 30th ACM International Conference on Information and Knowledge Management, Virtual Event, Queensland, Australia, November 1 - 5, 2021, G. Demartini, G. Zuccon, J. S. Culpepper, Z. Huang, and H. Tong, Eds. ACM, 2021, pp. 2822–2826.

- [7] D. Ahn, J.-G. Jang, and U. Kang, “Time-aware tensor decomposition for sparse tensors,” Machine Learning, Sep 2021.

- [8] D. Ahn, S. Son, and U. Kang, “Gtensor: Fast and accurate tensor analysis system using gpus,” in CIKM ’20: The 29th ACM International Conference on Information and Knowledge Management, Virtual Event, Ireland, October 19-23, 2020, M. d’Aquin, S. Dietze, C. Hauff, E. Curry, and P. Cudré-Mauroux, Eds. ACM, 2020, pp. 3361–3364.

- [9] D. Choi, J.-G. Jang, and U. Kang, “S3cmtf: Fast, accurate, and scalable method for incomplete coupled matrix-tensor factorization,” PLOS ONE, vol. 14, no. 6, pp. 1–20, 06 2019.

- [10] N. Park, S. Oh, and U. Kang, “Fast and scalable method for distributed boolean tensor factorization,” The VLDB Journal, Mar 2019.

- [11] I. Perros, E. E. Papalexakis, F. Wang, R. W. Vuduc, E. Searles, M. Thompson, and J. Sun, “Spartan: Scalable PARAFAC2 for large & sparse data,” in SIGKDD. ACM, 2017, pp. 375–384.

- [12] A. Afshar, I. Perros, E. E. Papalexakis, E. Searles, J. C. Ho, and J. Sun, “COPA: constrained PARAFAC2 for sparse & large datasets,” in CIKM. ACM, 2018, pp. 793–802.

- [13] N. E. Helwig, “Estimating latent trends in multivariate longitudinal data via parafac2 with functional and structural constraints,” Biometrical Journal, vol. 59, no. 4, pp. 783–803, 2017.

- [14] B. M. Wise, N. B. Gallagher, and E. B. Martin, “Application of parafac2 to fault detection and diagnosis in semiconductor etch,” Journal of Chemometrics: A Journal of the Chemometrics Society, vol. 15, no. 4, pp. 285–298, 2001.

- [15] Y. Ren, J. Lou, L. Xiong, and J. C. Ho, “Robust irregular tensor factorization and completion for temporal health data analysis,” in CIKM. ACM, 2020, pp. 1295–1304.

- [16] A. Afshar, I. Perros, H. Park, C. Defilippi, X. Yan, W. Stewart, J. Ho, and J. Sun, “Taste: Temporal and static tensor factorization for phenotyping electronic health records,” in Proceedings of the ACM Conference on Health, Inference, and Learning, 2020, pp. 193–203.

- [17] K. Yin, A. Afshar, J. C. Ho, W. K. Cheung, C. Zhang, and J. Sun, “Logpar: Logistic parafac2 factorization for temporal binary data with missing values,” in SIGKDD, 2020, pp. 1625–1635.

- [18] Y. Cheng and M. Haardt, “Efficient computation of the PARAFAC2 decomposition,” in ACSCC. IEEE, 2019, pp. 1626–1630.

- [19] T. G. Kolda and B. W. Bader, “Tensor decompositions and applications,” SIAM Review, vol. 51, no. 3, pp. 455–500, 2009.

- [20] N. Halko, P. Martinsson, and J. A. Tropp, “Finding structure with randomness: Probabilistic algorithms for constructing approximate matrix decompositions,” SIAM Review, vol. 53, no. 2, pp. 217–288, 2011.

- [21] F. Woolfe, E. Liberty, V. Rokhlin, and M. Tygert, “A fast randomized algorithm for the approximation of matrices,” Applied and Computational Harmonic Analysis, vol. 25, no. 3, pp. 335–366, 2008.

- [22] K. L. Clarkson and D. P. Woodruff, “Low-rank approximation and regression in input sparsity time,” JACM, vol. 63, no. 6, p. 54, 2017.

- [23] R. A. Harshman, “Parafac2: Mathematical and technical notes,” UCLA working papers in phonetics, vol. 22, no. 3044, p. 122215, 1972.

- [24] H. A. Kiers, J. M. Ten Berge, and R. Bro, “Parafac2—part i. a direct fitting algorithm for the parafac2 model,” Journal of Chemometrics: A Journal of the Chemometrics Society, vol. 13, no. 3-4, pp. 275–294, 1999.

- [25] G. H. Golub and C. F. Van Loan, Matrix computations. JHU press, 2013, vol. 3.

- [26] M. Defferrard, K. Benzi, P. Vandergheynst, and X. Bresson, “FMA: A dataset for music analysis,” in ISMIR, 2017. [Online]. Available: https://arxiv.org/abs/1612.01840

- [27] J. Salamon, C. Jacoby, and J. P. Bello, “A dataset and taxonomy for urban sound research,” in Proceedings of the ACM International Conference on Multimedia, MM ’14, Orlando, FL, USA, November 03 - 07, 2014. ACM, 2014, pp. 1041–1044.

- [28] J. Wang, Z. Liu, Y. Wu, and J. Yuan, “Mining actionlet ensemble for action recognition with depth cameras,” in 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, June 16-21, 2012. IEEE Computer Society, 2012, pp. 1290–1297.

- [29] F. Karim, S. Majumdar, H. Darabi, and S. Harford, “Multivariate lstm-fcns for time series classification,” 2018.

- [30] F. Schimbinschi, X. V. Nguyen, J. Bailey, C. Leckie, H. Vu, and R. Kotagiri, “Traffic forecasting in complex urban networks: Leveraging big data and machine learning,” in Big Data (Big Data), 2015 IEEE International Conference on. IEEE, 2015, pp. 1019–1024.

- [31] B. W. Bader, T. G. Kolda et al., “Matlab tensor toolbox version 3.0-dev,” Available online, Oct. 2017. [Online]. Available: https://www.tensortoolbox.org

- [32] J. Jung, J. Yoo, and U. Kang, “Signed random walk diffusion for effective representation learning in signed graphs,” PLOS ONE, vol. 17, no. 3, pp. 1–19, 03 2022.

- [33] J. Jung, W. Jin, H. Park, and U. Kang, “Accurate relational reasoning in edge-labeled graphs by multi-labeled random walk with restart,” World Wide Web, vol. 24, no. 4, pp. 1369–1393, 2021.

- [34] J. Jung, W. Jin, and U. Kang, “Random walk-based ranking in signed social networks: model and algorithms,” Knowl. Inf. Syst., vol. 62, no. 2, pp. 571–610, 2020.

- [35] W. Jin, J. Jung, and U. Kang, “Supervised and extended restart in random walks for ranking and link prediction in networks,” PLOS ONE, vol. 14, no. 3, pp. 1–23, 03 2019.

- [36] L. Page, S. Brin, R. Motwani, and T. Winograd, “The pagerank citation ranking: Bringing order to the web.” Stanford InfoLab, Tech. Rep., 1999.

- [37] J. Jung, N. Park, S. Lee, and U. Kang, “Bepi: Fast and memory-efficient method for billion-scale random walk with restart,” in Proceedings of the 2017 ACM International Conference on Management of Data, 2017, pp. 789–804.

- [38] J. Jang and U. Kang, “D-tucker: Fast and memory-efficient tucker decomposition for dense tensors,” in ICDE. IEEE, 2020, pp. 1850–1853.

- [39] O. A. Malik and S. Becker, “Low-rank tucker decomposition of large tensors using tensorsketch,” in NeurIPS, 2018, pp. 10 117–10 127.

- [40] Y. Wang, H. F. Tung, A. J. Smola, and A. Anandkumar, “Fast and guaranteed tensor decomposition via sketching,” in NeurIPS, 2015, pp. 991–999.

- [41] C. E. Tsourakakis, “MACH: fast randomized tensor decompositions,” in SDM, 2010, pp. 689–700.

- [42] C. Battaglino, G. Ballard, and T. G. Kolda, “A practical randomized CP tensor decomposition,” SIAM J. Matrix Anal. Appl., vol. 39, no. 2, pp. 876–901, 2018.

- [43] A. Gittens, K. S. Aggour, and B. Yener, “Adaptive sketching for fast and convergent canonical polyadic decomposition,” in ICML, ser. Proceedings of Machine Learning Research, vol. 119. PMLR, 2020, pp. 3566–3575.

- [44] A. H. Phan and A. Cichocki, “PARAFAC algorithms for large-scale problems,” Neurocomputing, vol. 74, no. 11, pp. 1970–1984, 2011.

- [45] X. Li, S. Huang, K. S. Candan, and M. L. Sapino, “2pcp: Two-phase CP decomposition for billion-scale dense tensors,” in 32nd IEEE International Conference on Data Engineering, ICDE 2016, Helsinki, Finland, May 16-20, 2016, 2016, pp. 835–846.

- [46] W. Austin, G. Ballard, and T. G. Kolda, “Parallel tensor compression for large-scale scientific data,” in IPDPS, 2016, pp. 912–922.

- [47] D. Chen, Y. Hu, L. Wang, A. Y. Zomaya, and X. Li, “H-PARAFAC: hierarchical parallel factor analysis of multidimensional big data,” IEEE Trans. Parallel Distrib. Syst., vol. 28, no. 4, pp. 1091–1104, 2017.

- [48] E. Gujral, G. Theocharous, and E. E. Papalexakis, “Spade: S treaming pa rafac2 de composition for large datasets,” in SDM. SIAM, 2020, pp. 577–585.

- [49] K. Yin, W. K. Cheung, B. C. Fung, and J. Poon, “Tedpar: Temporally dependent parafac2 factorization for phenotype-based disease progression modeling,” in SDM. SIAM, 2021, pp. 594–602.