Domain Adaptation with Conditional Distribution Matching and Generalized Label Shift

Abstract

Adversarial learning has demonstrated good performance in the unsupervised domain adaptation setting, by learning domain-invariant representations. However, recent work has shown limitations of this approach when label distributions differ between the source and target domains. In this paper, we propose a new assumption, generalized label shift (), to improve robustness against mismatched label distributions. states that, conditioned on the label, there exists a representation of the input that is invariant between the source and target domains. Under , we provide theoretical guarantees on the transfer performance of any classifier. We also devise necessary and sufficient conditions for to hold, by using an estimation of the relative class weights between domains and an appropriate reweighting of samples. Our weight estimation method could be straightforwardly and generically applied in existing domain adaptation (DA) algorithms that learn domain-invariant representations, with small computational overhead. In particular, we modify three DA algorithms, JAN, DANN and CDAN, and evaluate their performance on standard and artificial DA tasks. Our algorithms outperform the base versions, with vast improvements for large label distribution mismatches. Our code is available at https://tinyurl.com/y585xt6j.

1 Introduction

In spite of impressive successes, most deep learning models [24] rely on huge amounts of labelled data and their features have proven brittle to distribution shifts [59, 43]. Building more robust models, that learn from fewer samples and/or generalize better out-of-distribution is the focus of many recent works [5, 2, 57]. The research direction of interest to this paper is that of domain adaptation, which aims at learning features that transfer well between domains. We focus in particular on unsupervised domain adaptation (UDA), where the algorithm has access to labelled samples from a source domain and unlabelled data from a target domain. Its objective is to train a model that generalizes well to the target domain. Building on advances in adversarial learning [25], adversarial domain adaptation (ADA) leverages the use of a discriminator to learn an intermediate representation that is invariant between the source and target domains. Simultaneously, the representation is paired with a classifier, trained to perform well on the source domain [22, 53, 64, 36]. ADA is rather successful on a variety of tasks, however, recent work has proven an upper bound on the performance of existing algorithms when source and target domains have mismatched label distributions [66]. Label shift is a property of two domains for which the marginal label distributions differ, but the conditional distributions of input given label stay the same across domains [52, 62].

In this paper, we study domain adaptation under mismatched label distributions and design methods that are robust in that setting. Our contributions are the following. First, we extend the upper bound by Zhao et al. [66] to -class classification and to conditional domain adversarial networks, a recently introduced domain adaptation algorithm [41]. Second, we introduce generalized label shift (), a broader version of the standard label shift where conditional invariance between source and target domains is placed in representation rather than input space. Third, we derive performance guarantees for algorithms that seek to enforce via learnt feature transformations, in the form of upper bounds on the error gap and the joint error of the classifier on the source and target domains. Those guarantees suggest principled modifications to ADA to improve its robustness to mismatched label distributions. The modifications rely on estimating the class ratios between source and target domains and use those as importance weights in the adversarial and classification objectives. The importance weights estimation is performed using a method of moment by solving a quadratic program, inspired from Lipton et al. [35]. Following these theoretical insights, we devise three new algorithms based on learning importance-weighted representations, DANNs [22], JANs [40] and CDANs [41]. We apply our variants to artificial UDA tasks with large divergences between label distributions, and demonstrate significant performance gains compared to the algorithms’ base versions. Finally, we evaluate them on standard domain adaptation tasks and also show improved performance.

2 Preliminaries

Notation We focus on the general -class classification problem. and denote the input and output space, respectively. stands for the representation space induced from by a feature transformation . Accordingly, we use to denote random variables which take values in . Domain corresponds to a joint distribution on the input space and output space , and we use (resp. ) to denote the source (resp. target) domain. Noticeably, this corresponds to a stochastic setting, which is stronger than the deterministic one previously studied [6, 7, 66]. A hypothesis is a function . The error of a hypothesis under distribution is defined as: , i.e., the probability that disagrees with under .

Domain Adaptation via Invariant Representations For source () and target () domains, we use , , and to denote the marginal data and label distributions. In UDA, the algorithm has access to labeled points and unlabeled points sampled i.i.d. from the source and target domains. Inspired by Ben-David et al. [7], a common approach is to learn representations invariant to the domain shift. With a feature transformation and a hypothesis on the feature space, a domain invariant representation [22, 53, 63] is a function that induces similar distributions on and . is also required to preserve rich information about the target task so that is small. The above process results in the following Markov chain (assumed to hold throughout the paper):

| (1) |

where . We let , , and denote the pushforwards (induced distributions) of and by and . Invariance in feature space is defined as minimizing a distance or divergence between and .

Adversarial Domain Adaptation Invariance is often attained by training a discriminator to predict if is from the source or target. is trained both to maximize the discriminator loss and minimize the classification loss of on the source domain ( is also trained with the latter objective). This leads to domain-adversarial neural networks [22, DANN], where , and are parameterized with neural networks: , and (see Algo. 1 and App. B.5). Building on DANN, conditional domain adversarial networks [41, CDAN] use the same adversarial paradigm. However, the discriminator now takes as input the outer product, for a given , between the predictions of the network and its representation . In other words, acts on the outer product: rather than on . denotes the -th element of vector . We now highlight a limitation of DANNs and CDANs.

An Information-Theoretic Lower Bound We let denote the Jensen-Shanon divergence between two distributions (App. A.1), and correspond to (for DANN) or to (for CDAN). The following theorem lower bounds the joint error of the classifier on the source and target domains:

Theorem 2.1.

Assuming that , then:

Remark The lower bound is algorithm-independent. It is also a population-level result and holds asymptotically with increasing data. Zhao et al. [66] prove the theorem for and . We extend it to CDAN and arbitrary (it actually holds for any s.t. for some , see App. A.3). Assuming that label distributions differ between source and target domains, the lower bound shows that: the better the alignment of feature distributions, the worse the joint error. For an invariant representation () with no source error, the target error will be larger than . Hence algorithms learning invariant representations and minimizing the source error are fundamentally flawed when label distributions differ between source and target domains.

| Covariate Shift | Label Shift |

|---|---|

Common Assumptions to Tackle Domain Adaptation Two common assumptions about the data made in DA are covariate shift and label shift. They correspond to different ways of decomposing the joint distribution over , as detailed in Table 1. From a representation learning perspective, covariate shift is not robust to feature transformation and can lead to an effect called negative transfer [66]. At the same time, label shift clearly fails in most practical applications, e.g. transferring knowledge from synthetic to real images [55]. In that case, the input distributions are actually disjoint.

3 Main Results

In light of the limitations of existing assumptions, (e.g. covariate shift and label shift), we propose generalized label shift (), a relaxation of label shift that substantially improves its applicability. We first discuss some of its properties and explain why the assumption is favorable in domain adaptation based on representation learning. Motivated by , we then present a novel error decomposition theorem that directly suggests a bound minimization framework for domain adaptation. The framework is naturally compatible with -integral probability metrics [44, -IPM] and generates a family of domain adaptation algorithms by choosing various function classes . In a nutshell, the proposed framework applies a method of moments [35] to estimate the importance weight of the marginal label distributions by solving a quadratic program (QP), and then uses to align the weighted source feature distribution with the target feature distribution.

3.1 Generalized Label Shift

Definition 3.1 (Generalized Label Shift, ).

A representation satisfies if

| (2) |

First, when is the identity map, the above definition of reduces to the original label shift assumption. Next, is always achievable for any distribution pair : any constant function satisfies the above definition. The most important property is arguably that, unlike label shift, is compatible with a perfect classifier (in the noiseless case). Precisely, if there exists a ground-truth labeling function such that , then satisfies . As a comparison, without conditioning on , does not satisfy if the marginal label distributions are different across domains. This observation is consistent with the lower bound in Theorem 2.1, which holds for arbitrary marginal label distributions.

imposes label shift in the feature space instead of the original input space . Conceptually, although samples from the same classes in the source and target domain can be dramatically different, the hope is to find an intermediate representation for both domains in which samples from a given class look similar to one another. Taking digit classification as an example and assuming the feature variable corresponds to the contour of a digit, it is possible that by using different contour extractors for e.g. MNIST and USPS, those contours look roughly the same in both domains. Technically, can be facilitated by having separate representation extractors and for source and target [9, 53].

3.2 An Error Decomposition Theorem based on

We now provide performance guarantees for models that satisfy , in the form of upper bounds on the error gap and on the joint error between source and target domains. It requires two concepts:

Definition 3.2 (Balanced Error Rate).

The balanced error rate (BER) of predictor on is:

| (3) |

Definition 3.3 (Conditional Error Gap).

Given a joint distribution , the conditional error gap of a classifier is .

When holds, is equal to . We now give an upper bound on the error gap between source and target, which can also be used to obtain a generalization upper bound on the target risk.

Theorem 3.1.

(Error Decomposition Theorem) For any classifier ,

where is the distance between and .

Remark The upper bound in Theorem 3.1 provides a way to decompose the error gap between source and target domains. It also immediately gives a generalization bound on the target risk . The bound contains two terms. The first contains , which measures the distance between the marginal label distributions across domains and is a constant that only depends on the adaptation problem itself, and , a reweighted classification performance on the source domain. The second is measures the distance between the family of conditional distributions . In other words, the bound is oblivious to the optimal labeling functions in feature space. This is in sharp contrast with upper bounds from previous work [7, Theorem 2], [66, Theorem 4.1], which essentially decompose the error gap in terms of the distance between the marginal feature distributions (, ) and the optimal labeling functions (, ). Because the optimal labeling function in feature space depends on and is unknown in practice, such decomposition is not very informative. As a comparison, Theorem 3.1 provides a decomposition orthogonal to previous results and does not require knowledge about unknown optimal labeling functions in feature space.

Notably, the balanced error rate, , only depends on samples from the source domain and can be minimized. Furthermore, using a data-processing argument, the conditional error gap can be minimized by aligning the conditional feature distributions across domains. Putting everything together, the result suggests that, to minimize the error gap, it suffices to align the conditional distributions while simultaneously minimizing the balanced error rate. In fact, under the assumption that the conditional distributions are perfectly aligned (i.e., under ), we can prove a stronger result, guaranteeing that the joint error is small:

Theorem 3.2.

If satisfies , then for any and letting be the predictor, we have .

3.3 Conditions for Generalized Label Shift

The main difficulty in applying a bound minimization algorithm inspired by Theorem 3.1 is that we do not have labels from the target domain in UDA, so we cannot directly align the conditional label distributions. By using relative class weights between domains, we can provide a necessary condition for that bypasses an explicit alignment of the conditional feature distributions.

Definition 3.4.

Assuming , we let denote the importance weights of the target and source label distributions:

| (4) |

Lemma 3.1.

(Necessary condition for ) If satisfies , then where verifies either or .

Compared to previous work that attempts to align with (using adversarial discriminators [22] or maximum mean discrepancy (MMD) [38]) or with [41], Lemma 3.1 suggests to instead align with the reweighted marginal distribution . Reciprocally, the following two theorems give sufficient conditions to know when perfectly aligned target feature distribution and reweighted source feature distribution imply :

Theorem 3.3.

(Clustering structure implies sufficiency) Let such that . Assume . If there exists a partition of such that , , then satisfies .

Remark

Theorem 3.3 shows that if there exists a partition of the feature space such that instances with the same label are within the same component, then aligning the target feature distribution with the reweighted source feature distribution implies . While this clustering assumption may seem strong, it is consistent with the goal of reducing classification error: if such a clustering exists, then there also exists a perfect predictor based on the feature , i.e., the cluster index.

Theorem 3.4.

Let , and . For or , we have:

Theorem 3.4 confirms that matching with is the proper objective in the context of mismatched label distributions. It shows that, for matched feature distributions and a source error equal to zero, successful domain adaptation (i.e. a target error equal to zero) implies that holds. Combined with Theorem 3.2, we even get equivalence between the two.

3.4 Estimating the Importance Weights

Inspired by the moment matching technique to estimate under label shift from Lipton et al. [35], we propose a method to get under by solving a quadratic program (QP).

Definition 3.5.

We let denote the confusion matrix of the classifier on the source domain and the distribution of predictions on the target one, :

Lemma 3.2.

If is verified, and if the confusion matrix C is invertible, then .

The key insight from Lemma 3.2 is that, to estimate the importance vector under , we do not need access to labels from the target domain. However, matrix inversion is notoriously numerically unstable, especially with finite sample estimates and of C and . We propose to solve instead the following QP (written as ), whose solution will be consistent if and :

| (5) |

The above program (5) can be efficiently solved in time , with small and constant; and by construction, its solution is element-wise non-negative, even with limited amounts of data to estimate C and .

Lemma 3.3.

If the source error is zero and the source and target marginals verify , then the estimated weight vector is equal to .

3.5 -IPM for Distributional Alignment

To align the target feature distribution and the reweighted source feature distribution as suggested by Lemma 3.1, we now provide a general framework using the integral probability metric [44, IPM].

Definition 3.6.

With a set of real-valued functions, the -IPM between distributions and is

| (6) |

By approximating any function class using parametrized models, e.g., neural networks, we obtain a general framework for domain adaptation by aligning reweighted source feature distribution and target feature distribution, i.e. by minimizing . Below, by instantiating to be the set of bounded norm functions in a RKHS [27], we obtain maximum mean discrepancy methods, leading to IWJAN (cf. Section 4.1), a variant of JAN [40] for UDA.

4 Practical Implementation

4.1 Algorithms

The sections above suggest simple algorithms based on representation learning: (i) estimate on the fly during training, (ii) align the feature distributions of the target domain with the reweighted feature distribution of the source domain and, (iii) minimize the balanced error rate. Overall, we present the pseudocode of our algorithm in Alg. 1.

To compute , we build estimators and of C and by averaging during each epoch the predictions of the classifier on the source (per true class) and target (overall). This corresponds to the inner-most loop of Algorithm 1 (lines 8-9). At epoch end, is updated (line 10), and the estimators reset to 0. We have found empirically that using an exponential moving average of performs better. Our results all use a factor . We also note that Alg. 1 implies a minimal computational overhead (see App. B.1 for details): in practice our algorithms run as fast as their base versions.

Using , we can define our first algorithm, Importance-Weighted Domain Adversarial Network (IWDAN), that aligns and ) using a discriminator. To that end, we modify the DANN losses and as follows. For batches and of size , the weighted DA loss is:

| (7) |

We verify in App. A.1, that the standard ADA framework applied to indeed minimizes . Our second algorithm, Importance-Weighted Joint Adaptation Networks (IWJAN) is based on JAN [40] and follows the reweighting principle described in Section 3.5 with a learnt RKHS (the exact JAN and IWJAN losses are specified in App. B.5). Finally, our third algorithm is Importance-Weighted Conditional Domain Adversarial Network (IWCDAN). It matches with by replacing the standard adversarial loss in CDAN with Eq. 7, where takes as input instead of . The classifier loss for our three variants is:

| (8) |

This reweighting is suggested by our theoretical analysis from Section 3, where we seek to minimize the balanced error rate . We also define oracle versions, IWDAN-O, IWJAN-O and IWCDAN-O, where the weights are the true weights. It gives an idealistic version of the reweighting method, and allows to assess the soundness of . IWDAN, IWJAN and IWCDAN are Alg. 1 with their respective loss functions, the oracle versions use the true weights instead of .

4.2 Experiments

We apply our three base algorithms, their importance weighted versions, and the oracles to 4 standard DA datasets generating 21 tasks: Digits (MNIST USPS [32, 19]), Visda [55], Office-31 [49] and Office-Home [54]. All values are averages over 5 runs of the best test accuracy throughout training (evaluated at the end of each epoch). We used that value for fairness with respect to the baselines (as shown in the left panel of Figure 2, the performance of DANN decreases as training progresses, due to the inappropriate matching of representations showcased in Theorem 2.1). For full details, see App. B.2 and B.7.

Performance vs We artificially generate 100 tasks from MNIST and USPS by considering various random subsets of the classes in either the source or target domain (see Appendix B.6 for details). These 100 DA tasks have a varying between and . Applying IWDAN and IWCDAN results in Fig. 1. We see a clear correlation between the improvements provided by our algorithms and , which is well aligned with Theorem 2.1. Moreover, IWDAN outperfoms DANN on the tasks and IWCDAN bests CDAN on . Even on small divergences, our algorithms do not suffer compared to their base versions.

Original Datasets The average results on each dataset are shown in Table 2 (see App.B.3 for the per-task breakdown). IWDAN outperforms the basic algorithm DANN by , , and on the Digits, Visda, Office-31 and Office-Home tasks respectively. Gains for IWCDAN are more limited, but still present: , , and respectively. This might be explained by the fact that CDAN enforces a weak form of (App. B.5.2). Gains for JAN are , and . We also show the fraction of times (over all seeds and tasks) our variants outperform the original algorithms. Even for small gains, the variants provide consistent improvements. Additionally, the oracle versions show larger improvements, which strongly supports enforcing .

| Method | Digits | Digits | Visda | Visda | O-31 | O-31 | O-H | O-H |

|---|---|---|---|---|---|---|---|---|

| No DA | 77.17 | 75.67 | 48.39 | 49.02 | 77.81 | 75.72 | 56.39 | 51.34 |

| DANN | 93.15 | 83.24 | 61.88 | 52.85 | 82.74 | 76.17 | 59.62 | 51.83 |

| IWDAN | 94.90100% | 92.54100% | 63.52100% | 60.18100% | 83.9087% | 82.60100% | 62.2797% | 57.61100% |

| IWDAN-O | 95.27100% | 94.46100% | 64.19100% | 62.10100% | 85.3397% | 84.41100% | 64.68100% | 60.87100% |

| CDAN | 95.72 | 88.23 | 65.60 | 60.19 | 87.23 | 81.62 | 64.59 | 56.25 |

| IWCDAN | 95.9080% | 93.22100% | 66.4960% | 65.83100% | 87.3073% | 83.88100% | 65.6670% | 61.24100% |

| IWCDAN-O | 95.8590% | 94.81100% | 68.15100% | 66.85100% | 88.1490% | 85.47100% | 67.6498% | 63.73100% |

| JAN | N/A | N/A | 56.98 | 50.64 | 85.13 | 78.21 | 59.59 | 53.94 |

| IWJAN | N/A | N/A | 57.56100% | 57.12100% | 85.3260% | 82.6197% | 59.7863% | 55.89100% |

| IWJAN-O | N/A | N/A | 61.48100% | 61.30100% | 87.14100% | 86.24100% | 60.7392% | 57.36100% |

Subsampled datasets The original datasets have fairly balanced classes, making the JSD between source and target label distributions rather small (Tables 11a, 12a and 13a in App. B.4). To evaluate our algorithms on larger divergences, we arbitrarily modify the source domains above by considering only of the samples coming from the first half of their classes. This results in larger divergences (Tables 11b, 12b and 13b). Performance is shown in Table 2 (datasets prefixed by s). For IWDAN, we see gains of , , and on the digits, Visda, Office-31 and Office-Home datasets respectively. For IWCDAN, improvements are , , and , and IWJAN shows gains of , and . Moreover, on all seeds and tasks but one, our variants outperform their base versions.

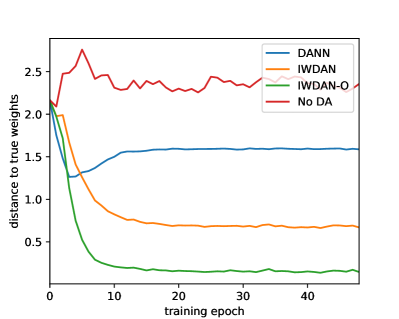

Importance weights While our method demonstrates gains empirically, Lemma 3.2 does not guarantee convergence of to the true weights. In Fig. 2, we show the test accuracy and distance between estimated and true weights during training on sDigits. We see that DANN’s performance gets worse after a few epoch, as predicted by Theorem 2.1. The representation matching objective collapses classes that are over-represented in the target domain on the under-represented ones (see App. B.9). This phenomenon does not occur for IWDAN and IWDAN-O. Both monotonously improve in accuracy and estimation (see Lemma 3.3 and App. B.8 for more details). We also observe that IWDAN’s weights do not converge perfectly. This suggests that fine-tuning (we used in all our experiments for simplicity) or updating more or less often could lead to better performance.

Ablation Study Our algorithms have two components, a weighted adversarial loss and a weighted classification loss . In Table 3, we augment DANN and CDAN using those losses separately (with the true weights). We observe that DANN benefits essentially from the reweighting of its adversarial loss , the classification loss has little effect. For CDAN, gains are essentially seen on the subsampled datasets. Both losses help, with a extra gain for .

| Method | Digits | sDigits | Method | Digits | sDigits |

|---|---|---|---|---|---|

| DANN | 93.15 | 83.24 | CDAN | 95.72 | 88.23 |

| DANN + | 93.27 | 84.52 | CDAN + | 95.65 | 91.01 |

| DANN + | 95.31 | 94.41 | CDAN + | 95.42 | 93.18 |

| IWDAN-O | 95.27 | 94.46 | IWCDAN-O | 95.85 | 94.81 |

5 Related Work

Covariate shift has been studied and used in many adaptation algorithms [30, 26, 3, 1, 53, 65, 48]. While less known, label shift has also been tackled from various angles over the years: applying EM to learn [14], placing a prior on the label distribution [52], using kernel mean matching [61, 20, 46], etc. Schölkopf et al. [50] cast the problem in a causal/anti-causal perspective corresponding to covariate/label shift. That perspective was then further developed [61, 23, 35, 4]. Numerous domain adaptation methods rely on learning invariant representations, and minimize various metrics on the marginal feature distributions: total variation or equivalently [22, 53, 64, 36], maximum mean discrepancy [27, 37, 38, 39, 40], Wasserstein distance [17, 16, 51, 33, 15], etc. Other noteworthy DA methods use reconstruction losses and cycle-consistency to learn transferable classifiers [67, 29, 56]. Recently, Liu et al. [36] have introduced Transferable Adversarial Training (TAT), where transferable examples are generated to fill the gap in feature space between source and target domains, the datasets is then augmented with those samples. Applying our method to TAT is a future research direction.

Other relevant settings include partial ADA, i.e. UDA when target labels are a strict subset of the source labels / some components of are [11, 12, 13]. Multi-domain adaptation, where multiple source or target domains are given, is also very studied [42, 18, 45, 63, 28, 47]. Recently, Binkowski et al. [8] study sample reweighting in the domain transfer to handle mass shifts between distributions.

Prior work on combining importance weight in domain-invariant representation learning also exists in the setting of partial DA [60]. However, the importance ratio in these works is defined over the features , rather than the class label . Compared to our method, this is both statistically inefficient and computationally expensive, since the feature space is often a high-dimensional continuous space, whereas the label space only contains a finite number () of distinct labels. In a separate work, Yan et al. [58] proposed a weighted MMD distance to handle target shift in UDA. However, their weights are estimated based on pseudo-labels obtained from the learned classifier, hence it is not clear whether the pseudo-labels provide accurate estimation of the importance weights even in simple settings. As a comparison, under , we show that our weight estimation by solving a quadratic program converges asymptotically.

6 Conclusion and Future Work

We have introduced the generalized label shift assumption, , and theoretically-grounded variations of existing algorithms to handle mismatched label distributions. On tasks from classic benchmarks as well as artificial ones, our algorithms consistently outperform their base versions. The gains, as expected theoretically, correlate well with the JSD between label distributions across domains. In real-world applications, the JSD is unknown, and might be larger than in ML datasets where classes are often purposely balanced. Being simple to implement and adding barely any computational cost, the robustness of our method to mismatched label distributions makes it very relevant to such applications.

Extensions The framework we define in this paper relies on appropriately reweighting the domain adversarial losses. It can be straightforwardly applied to settings where multiple source and/or target domains are used, by simply maintaining one importance weights vector for each source/target pair [63, 47]. In particular, label shift could explain the observation from Zhao et al. [63] that too many source domains hurt performance, and our framework might alleviate the issue. One can also think of settings (e.g. semi-supervised domain adaptation) where estimations of can be obtained via other means. A more challenging but also more interesting future direction is to extend our framework to domain generalization, where the learner has access to multiple labeled source domains but no access to (even unlabelled) data from the target domain.

Acknowledgements

The authors thank Romain Laroche and Alessandro Sordoni for useful feedback and helpful discussions. HZ and GG would like to acknowledge support from the DARPA XAI project, contract #FA87501720152 and a Nvidia GPU grant. YW would like acknowledge partial support from NSF Award #2029626, a start-up grant from UCSB Department of Computer Science, as well as generous gifts from Amazon, Adobe, Google and NEC Labs.

Broader Impact

Our work focuses on domain adaptation and attempts to properly handle mismatches in the label distributions between the source and target domains. Domain Adaptation as a whole aims at transferring knowledge gained from a certain domain (or data distribution) to another one. It can potentially be used in a variety of decision making systems, such as spam filters, machine translation, etc.. One can also potentially think of much more sensitive applications such as recidivism prediction, or loan approvals.

While it is unclear to us to what extent DA is currently applied, or how it will be applied in the future, the bias formalized in Th. 2.1 and verified in Table 17 demonstrates that imbalances between classes will result in poor transfer performance of standard ADA methods on a subset of them, which is without a doubt a source of potential inequalities. Our method is actually aimed at counter-balancing the effect of such imbalances. As shown in our empirical results (for instance Table 18) it is rather successful at it, especially on significant shifts. This makes us rather confident in the algorithm’s ability to mitigate potential effects of biases in the datasets. On the downside, failure in the weight estimation of some classes might result in poor performance on those. However, we have not observed, in any of our experiments, our method performing significantly worse than its base version. Finally, our method is a variation over existing deep learning algorithms. As such, it carries with it the uncertainties associated to deep learning models, in particular a lack of interpretability and of formal convergence guarantees.

References

- Adel et al. [2017] Tameem Adel, Han Zhao, and Alexander Wong. Unsupervised domain adaptation with a relaxed covariate shift assumption. In Thirty-First AAAI Conference on Artificial Intelligence, 2017.

- Arjovsky et al. [2019] Martin Arjovsky, Léon Bottou, Ishaan Gulrajani, and David Lopez-Paz. Invariant risk minimization, 2019. URL http://arxiv.org/abs/1907.02893. cite arxiv:1907.02893.

- Ash et al. [2016] Jordan T Ash, Robert E Schapire, and Barbara E Engelhardt. Unsupervised domain adaptation using approximate label matching. arXiv preprint arXiv:1602.04889, 2016.

- Azizzadenesheli et al. [2019] Kamyar Azizzadenesheli, Anqi Liu, Fanny Yang, and Animashree Anandkumar. Regularized learning for domain adaptation under label shifts. In ICLR (Poster). OpenReview.net, 2019. URL http://dblp.uni-trier.de/db/conf/iclr/iclr2019.html#Azizzadenesheli19.

- Bachman et al. [2019] Philip Bachman, R. Devon Hjelm, and William Buchwalter. Learning representations by maximizing mutual information across views. CoRR, abs/1906.00910, 2019. URL http://dblp.uni-trier.de/db/journals/corr/corr1906.html#abs-1906-00910.

- Ben-David et al. [2007] Shai Ben-David, John Blitzer, Koby Crammer, Fernando Pereira, et al. Analysis of representations for domain adaptation. Advances in neural information processing systems, 19:137, 2007.

- Ben-David et al. [2010] Shai Ben-David, John Blitzer, Koby Crammer, Alex Kulesza, Fernando Pereira, and Jennifer Wortman Vaughan. A theory of learning from different domains. Machine learning, 79(1-2):151–175, 2010.

- Binkowski et al. [2019] Mikolaj Binkowski, R. Devon Hjelm, and Aaron C. Courville. Batch weight for domain adaptation with mass shift. CoRR, abs/1905.12760, 2019. URL http://dblp.uni-trier.de/db/journals/corr/corr1905.html#abs-1905-12760.

- Bousmalis et al. [2016] Konstantinos Bousmalis, George Trigeorgis, Nathan Silberman, Dilip Krishnan, and Dumitru Erhan. Domain separation networks. In Advances in Neural Information Processing Systems, pages 343–351, 2016.

- Briët and Harremoës [2009] Jop Briët and Peter Harremoës. Properties of classical and quantum jensen-shannon divergence. Phys. Rev. A, 79:052311, May 2009. doi: 10.1103/PhysRevA.79.052311. URL https://link.aps.org/doi/10.1103/PhysRevA.79.052311.

- Cao et al. [2018a] Zhangjie Cao, Mingsheng Long, Jianmin Wang, and Michael I. Jordan. Partial transfer learning with selective adversarial networks. In CVPR, pages 2724–2732. IEEE Computer Society, 2018a. URL http://dblp.uni-trier.de/db/conf/cvpr/cvpr2018.html#CaoL0J18.

- Cao et al. [2018b] Zhangjie Cao, Lijia Ma, Mingsheng Long, and Jianmin Wang. Partial adversarial domain adaptation. In Vittorio Ferrari, Martial Hebert, Cristian Sminchisescu, and Yair Weiss, editors, ECCV (8), volume 11212 of Lecture Notes in Computer Science, pages 139–155. Springer, 2018b. ISBN 978-3-030-01237-3. URL http://dblp.uni-trier.de/db/conf/eccv/eccv2018-8.html#CaoMLW18.

- Cao et al. [2019] Zhangjie Cao, Kaichao You, Mingsheng Long, Jianmin Wang, and Qiang Yang. Learning to transfer examples for partial domain adaptation. In CVPR, pages 2985–2994. Computer Vision Foundation / IEEE, 2019. URL http://dblp.uni-trier.de/db/conf/cvpr/cvpr2019.html#CaoYLW019.

- Chan and Ng [2005] Yee Seng Chan and Hwee Tou Ng. Word sense disambiguation with distribution estimation. In Leslie Pack Kaelbling and Alessandro Saffiotti, editors, IJCAI, pages 1010–1015. Professional Book Center, 2005. ISBN 0938075934. URL http://dblp.uni-trier.de/db/conf/ijcai/ijcai2005.html#ChanN05.

- Chen et al. [2018] Qingchao Chen, Yang Liu, Zhaowen Wang, Ian Wassell, and Kevin Chetty. Re-weighted adversarial adaptation network for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7976–7985, 2018.

- Courty et al. [2017a] Nicolas Courty, Rémi Flamary, Amaury Habrard, and Alain Rakotomamonjy. Joint distribution optimal transportation for domain adaptation. In Advances in Neural Information Processing Systems, pages 3730–3739, 2017a.

- Courty et al. [2017b] Nicolas Courty, Rémi Flamary, Devis Tuia, and Alain Rakotomamonjy. Optimal transport for domain adaptation. IEEE transactions on pattern analysis and machine intelligence, 39(9):1853–1865, 2017b.

- Daumé III [2009] Hal Daumé III. Frustratingly easy domain adaptation. arXiv preprint arXiv:0907.1815, 2009.

- Dheeru and Karra [2017] Dua Dheeru and Efi Karra. UCI machine learning repository, 2017. URL http://archive.ics.uci.edu/ml.

- du Plessis and Sugiyama [2014] Marthinus Christoffel du Plessis and Masashi Sugiyama. Semi-supervised learning of class balance under class-prior change by distribution matching. Neural Networks, 50:110–119, 2014. URL http://dblp.uni-trier.de/db/journals/nn/nn50.html#PlessisS14.

- Endres and Schindelin [2003] Dominik Maria Endres and Johannes E Schindelin. A new metric for probability distributions. IEEE Transactions on Information theory, 2003.

- Ganin et al. [2016] Yaroslav Ganin, Evgeniya Ustinova, Hana Ajakan, Pascal Germain, Hugo Larochelle, François Laviolette, Mario Marchand, and Victor Lempitsky. Domain-adversarial training of neural networks. Journal of Machine Learning Research, 17(59):1–35, 2016.

- Gong et al. [2016] Mingming Gong, Kun Zhang, Tongliang Liu, Dacheng Tao, Clark Glymour, and Bernhard Schölkopf. Domain adaptation with conditional transferable components. In International conference on machine learning, pages 2839–2848, 2016.

- Goodfellow et al. [2017] Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep learning. 2017. ISBN 9780262035613 0262035618. URL https://www.worldcat.org/title/deep-learning/oclc/985397543&referer=brief_results.

- Goodfellow et al. [2014] Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial networks, 2014. URL http://arxiv.org/abs/1406.2661. cite arxiv:1406.2661.

- Gretton et al. [2009] Arthur Gretton, Alex Smola, Jiayuan Huang, Marcel Schmittfull, Karsten Borgwardt, and Bernhard Schölkopf. Covariate shift by kernel mean matching. Dataset shift in machine learning, 3(4):5, 2009.

- Gretton et al. [2012] Arthur Gretton, Karsten M Borgwardt, Malte J Rasch, Bernhard Schölkopf, and Alexander Smola. A kernel two-sample test. Journal of Machine Learning Research, 13(Mar):723–773, 2012.

- Guo et al. [2018] Jiang Guo, Darsh J Shah, and Regina Barzilay. Multi-source domain adaptation with mixture of experts. arXiv preprint arXiv:1809.02256, 2018.

- Hoffman et al. [2017] Judy Hoffman, Eric Tzeng, Taesung Park, Jun-Yan Zhu, Phillip Isola, Kate Saenko, Alexei A Efros, and Trevor Darrell. Cycada: Cycle-consistent adversarial domain adaptation. arXiv preprint arXiv:1711.03213, 2017.

- Huang et al. [2006] Jiayuan Huang, Arthur Gretton, Karsten M Borgwardt, Bernhard Schölkopf, and Alex J Smola. Correcting sample selection bias by unlabeled data. In Advances in neural information processing systems, pages 601–608, 2006.

- LeCun et al. [1998] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998. ISSN 0018-9219. doi: 10.1109/5.726791.

- LeCun and Cortes [2010] Yann LeCun and Corinna Cortes. MNIST handwritten digit database. http://yann.lecun.com/exdb/mnist/, 2010. URL http://yann.lecun.com/exdb/mnist/.

- Lee and Raginsky [2018] Jaeho Lee and Maxim Raginsky. Minimax statistical learning with wasserstein distances. In Advances in Neural Information Processing Systems, pages 2692–2701, 2018.

- Lin [1991] Jianhua Lin. Divergence measures based on the Shannon entropy. IEEE Transactions on Information Theory, 37(1):145–151, 1991.

- Lipton et al. [2018] Zachary Lipton, Yu-Xiang Wang, and Alexander Smola. Detecting and correcting for label shift with black box predictors. In International Conference on Machine Learning, pages 3128–3136, 2018.

- Liu et al. [2019] Hong Liu, Mingsheng Long, Jianmin Wang, and Michael I. Jordan. Transferable adversarial training: A general approach to adapting deep classifiers. In Kamalika Chaudhuri and Ruslan Salakhutdinov, editors, ICML, volume 97 of Proceedings of Machine Learning Research, pages 4013–4022. PMLR, 2019. URL http://dblp.uni-trier.de/db/conf/icml/icml2019.html#LiuLWJ19.

- Long et al. [2014] Mingsheng Long, Jianmin Wang, Guiguang Ding, Jiaguang Sun, and Philip S Yu. Transfer joint matching for unsupervised domain adaptation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1410–1417, 2014.

- Long et al. [2015] Mingsheng Long, Yue Cao, Jianmin Wang, and Michael Jordan. Learning transferable features with deep adaptation networks. In International Conference on Machine Learning, pages 97–105, 2015.

- Long et al. [2016] Mingsheng Long, Han Zhu, Jianmin Wang, and Michael I Jordan. Unsupervised domain adaptation with residual transfer networks. In Advances in Neural Information Processing Systems, pages 136–144, 2016.

- Long et al. [2017] Mingsheng Long, Han Zhu, Jianmin Wang, and Michael I Jordan. Deep transfer learning with joint adaptation networks. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pages 2208–2217. JMLR, 2017.

- Long et al. [2018] Mingsheng Long, Zhangjie Cao, Jianmin Wang, and Michael I. Jordan. Conditional adversarial domain adaptation. In Samy Bengio, Hanna M. Wallach, Hugo Larochelle, Kristen Grauman, Nicolò Cesa-Bianchi, and Roman Garnett, editors, NeurIPS, pages 1647–1657, 2018. URL http://dblp.uni-trier.de/db/conf/nips/nips2018.html#LongC0J18.

- Mansour et al. [2009] Yishay Mansour, Mehryar Mohri, and Afshin Rostamizadeh. Domain adaptation with multiple sources. In Advances in neural information processing systems, pages 1041–1048, 2009.

- McCoy et al. [2019] R. Thomas McCoy, Ellie Pavlick, and Tal Linzen. Right for the wrong reasons: Diagnosing syntactic heuristics in natural language inference. Proceedings of the ACL, 2019.

- Müller [1997] Alfred Müller. Integral probability metrics and their generating classes of functions. Advances in Applied Probability, 29(2):429–443, 1997.

- Nam and Han [2016] Hyeonseob Nam and Bohyung Han. Learning multi-domain convolutional neural networks for visual tracking. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- Nguyen et al. [2015] Tuan Duong Nguyen, Marthinus Christoffel du Plessis, and Masashi Sugiyama. Continuous target shift adaptation in supervised learning. In ACML, volume 45 of JMLR Workshop and Conference Proceedings, pages 285–300. JMLR.org, 2015. URL http://dblp.uni-trier.de/db/conf/acml/acml2015.html#NguyenPS15.

- Peng et al. [2019] Xingchao Peng, Zijun Huang, Ximeng Sun, and Kate Saenko. Domain agnostic learning with disentangled representations. In Kamalika Chaudhuri and Ruslan Salakhutdinov, editors, ICML, volume 97 of Proceedings of Machine Learning Research, pages 5102–5112. PMLR, 2019. URL http://dblp.uni-trier.de/db/conf/icml/icml2019.html#PengHSS19.

- Redko et al. [2019] Ievgen Redko, Nicolas Courty, Rémi Flamary, and Devis Tuia. Optimal transport for multi-source domain adaptation under target shift. In 22nd International Conference on Artificial Intelligence and Statistics (AISTATS) 2019, volume 89, 2019.

- Saenko et al. [2010] Kate Saenko, Brian Kulis, Mario Fritz, and Trevor Darrell. Adapting visual category models to new domains. In Kostas Daniilidis, Petros Maragos, and Nikos Paragios, editors, ECCV (4), volume 6314 of Lecture Notes in Computer Science, pages 213–226. Springer, 2010. ISBN 978-3-642-15560-4. URL http://dblp.uni-trier.de/db/conf/eccv/eccv2010-4.html#SaenkoKFD10.

- Schölkopf et al. [2012] Bernhard Schölkopf, Dominik Janzing, Jonas Peters, Eleni Sgouritsa, Kun Zhang, and Joris M. Mooij. On causal and anticausal learning. In ICML. icml.cc / Omnipress, 2012. URL http://dblp.uni-trier.de/db/conf/icml/icml2012.html#ScholkopfJPSZM12.

- Shen et al. [2018] Jian Shen, Yanru Qu, Weinan Zhang, and Yong Yu. Wasserstein distance guided representation learning for domain adaptation. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- Storkey [2009] Amos Storkey. When training and test sets are different: Characterising learning transfer. Dataset shift in machine learning., 2009.

- Tzeng et al. [2017] Eric Tzeng, Judy Hoffman, Kate Saenko, and Trevor Darrell. Adversarial discriminative domain adaptation. arXiv preprint arXiv:1702.05464, 2017.

- Venkateswara et al. [2017] Hemanth Venkateswara, Jose Eusebio, Shayok Chakraborty, and Sethuraman Panchanathan. Deep hashing network for unsupervised domain adaptation. In (IEEE) Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

- Visda [2017] Visda. Visual domain adaptation challenge, 2017. URL http://ai.bu.edu/visda-2017/.

- Xie et al. [2018] Shaoan Xie, Zibin Zheng, Liang Chen, and Chuan Chen. Learning semantic representations for unsupervised domain adaptation. In Jennifer G. Dy and Andreas Krause, editors, ICML, volume 80 of Proceedings of Machine Learning Research, pages 5419–5428. PMLR, 2018. URL http://dblp.uni-trier.de/db/conf/icml/icml2018.html#XieZCC18.

- Yaghoobzadeh et al. [2019] Yadollah Yaghoobzadeh, Remi Tachet des Combes, Timothy J. Hazen, and Alessandro Sordoni. Robust natural language inference models with example forgetting. CoRR, abs/1911.03861, 2019. URL http://dblp.uni-trier.de/db/journals/corr/corr1911.html#abs-1911-03861.

- Yan et al. [2017] Hongliang Yan, Yukang Ding, Peihua Li, Qilong Wang, Yong Xu, and Wangmeng Zuo. Mind the class weight bias: Weighted maximum mean discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2272–2281, 2017.

- Yosinski et al. [2014] Jason Yosinski, Jeff Clune, Yoshua Bengio, and Hod Lipson. How transferable are features in deep neural networks? In Advances in neural information processing systems, pages 3320–3328, 2014.

- Zhang et al. [2018] Jing Zhang, Zewei Ding, Wanqing Li, and Philip Ogunbona. Importance weighted adversarial nets for partial domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 8156–8164, 2018.

- Zhang et al. [2013] Kun Zhang, Bernhard Schölkopf, Krikamol Muandet, and Zhikun Wang. Domain adaptation under target and conditional shift. In International Conference on Machine Learning, pages 819–827, 2013.

- Zhang et al. [2015] Xu Zhang, Felix X. Yu, Shih-Fu Chang, and Shengjin Wang. Deep transfer network: Unsupervised domain adaptation. CoRR, abs/1503.00591, 2015. URL http://dblp.uni-trier.de/db/journals/corr/corr1503.html#ZhangYCW15.

- Zhao et al. [2018a] Han Zhao, Shanghang Zhang, Guanhang Wu, Geoffrey J Gordon, et al. Multiple source domain adaptation with adversarial learning. In International Conference on Learning Representations, 2018a.

- Zhao et al. [2018b] Han Zhao, Shanghang Zhang, Guanhang Wu, José MF Moura, Joao P Costeira, and Geoffrey J Gordon. Adversarial multiple source domain adaptation. In Advances in Neural Information Processing Systems, pages 8568–8579, 2018b.

- Zhao et al. [2019a] Han Zhao, Junjie Hu, Zhenyao Zhu, Adam Coates, and Geoff Gordon. Deep generative and discriminative domain adaptation. In Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, pages 2315–2317. International Foundation for Autonomous Agents and Multiagent Systems, 2019a.

- Zhao et al. [2019b] Han Zhao, Remi Tachet des Combes, Kun Zhang, and Geoffrey J. Gordon. On learning invariant representations for domain adaptation. In Kamalika Chaudhuri and Ruslan Salakhutdinov, editors, ICML, volume 97 of Proceedings of Machine Learning Research, pages 7523–7532. PMLR, 2019b. URL http://dblp.uni-trier.de/db/conf/icml/icml2019.html#0002CZG19.

- Zhu et al. [2017] Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros. Unpaired image-to-image translation using cycle-consistent adversarial networks. In ICCV, pages 2242–2251. IEEE Computer Society, 2017. ISBN 978-1-5386-1032-9. URL http://dblp.uni-trier.de/db/conf/iccv/iccv2017.html#ZhuPIE17.

Appendix A Omitted Proofs

In this section, we provide the theoretical material that completes the main text.

A.1 Definition

Definition A.1.

Let us recall that for two distributions and , the Jensen-Shannon (JSD) divergence is defined as:

where is the Kullback–Leibler (KL) divergence and .

A.2 Consistency of the Weighted Domain Adaptation Loss (7)

For the sake of conciseness, we verify here that the domain adaptation training objective does lead to minimizing the Jensen-Shannon divergence between the weighted feature distribution of the source domain and the feature distribution of the target domain.

Lemma A.1.

Let and be two density distributions, and be a positive function such that . Let denote the -reweighted marginal distribution of under . The minimum value of

is , and is attained for .

Proof.

We see that:

| (9) | ||||

| (10) | ||||

| (11) |

From the last line, we follow the exact method from Goodfellow et al. [25] to see that point-wise in the minimum is attained for and that . ∎

Applying Lemma A.1 to and proves that the domain adaptation objective leads to minimizing .

A.3 -class information-theoretic lower bound

In this section, we prove Theorem 2.1 that extends previous result to the general -class classification problem. See 2.1

Proof.

We essentially follow the proof from Zhao et al. [66], except for Lemmas 4.6 that needs to be adapted to the CDAN framework and Lemma 4.7 to -class classification.

Lemma 4.6 from Zhao et al. [66] states that , which covers the case .

When , let us first recall that we assume or equivalently to be a one-hot prediction of the class. We have the following Markov chain:

where and returns the index of the non-zero block in . There is only one such block since is a one-hot, and its index corresponds to the class predicted by . Given the definition of , we clearly see that is independent of knowing . We can now apply the same proof than in Zhao et al. [66] to conclude that:

| (12) |

It essentially boils down to a data-processing argument: the discrimination distance between two distributions cannot increase after the same (possibly stochastic) channel (kernel) is applied to both. Here, the channel corresponds to the (potentially randomized) function .

Remark

Additionally, we note that the above inequality holds for any such that for a (potentially randomized) function l. This covers any and all potential combinations of representations at various layers of the deep net, including the last layer (which corresponds to its predictions ).

Let us move to the second part of the proof. We wish to show that , where can be either or :

| [34] | |||||

| (13) | |||||

We can now apply the triangular inequality to , which is a distance metric [21], called the Jensen-Shannon distance. This gives us:

where we used Equation (12) for the second inequality and (13) for the third.

Finally, assuming that , we get:

which concludes the proof. ∎

A.4 Proof of Theorem 3.1

To simplify the notation, we define the error gap as follows:

Also, in this case we use to mean the source and target distributions respectively. Before we give the proof of Theorem 3.1, we first prove the following two lemmas that will be used in the proof.

Lemma A.2.

Define , then such that , and , the following upper bound holds:

Proof.

To make the derivation uncluttered, define to be the mixture conditional probability of given , where the mixture weight is given by and . Then in order to prove the upper bound in the lemma, it suffices if we give the desired upper bound for the following term

following which we will have:

To proceed, let us first simplify . By definition of , we know that:

Similarly, for the second term , we can show that:

Plugging these two identities into the above, we can continue the analysis with

The first inequality holds by the triangle inequality and the second by the definition of the conditional error gap. Combining all the inequalities above completes the proof. ∎

We are now ready to prove the theorem: See 3.1

Proof of Theorem 3.1.

First, by the law of total probability, it is easy to verify that following identity holds for :

Using this identity, to bound the error gap, we have:

Invoking Lemma A.2 to bound the above terms, and since , , we get:

| Note that the above holds such that . By choosing and , we have: | ||||

where the last line is due to Holder’s inequality, completing the proof. ∎

A.5 Proof of Theorem 3.2

See 3.2

Proof.

First, by the law of total probability, we have:

|

Now, since , is a function of . Given the generalized label shift assumption, this guarantees that: |

||||||

A.6 Proof of Lemma 3.1

See 3.1

Proof.

From , we know that . Applying any function to will maintain that equality (in particular ). Using that fact and Eq. (4) on the second line gives:

| (14) |

A.7 Proof of Theorem 3.3

See 3.3

Proof.

Follow the condition that , by definition of , we have:

Note that the above equation holds for all measurable subsets of . Now by the assumption that is a partition of , consider :

Due to the assumption , we know that , . This shows that both the supports of and are contained in . Now consider an arbitrary measurable set , since is a partition of , we know that

Plug into the following identity:

where the last line holds because . Realize that the choice of is arbitrary, this shows that , which completes the proof. ∎

A.8 Sufficient Conditions for

See 3.4

Proof.

To prove the above upper bound, let us first fix a and fix a classifier for some . Now consider any measurable subset , we would like to upper bound the following quantity:

Hence it suffices if we can upper bound . To do so, consider the following decomposition:

We bound the above three terms in turn. First, consider :

where the last inequality is due to the fact that the definition of error rate corresponds to the sum of all the off-diagonal elements in the confusion matrix while the sum here only corresponds to the sum of all the elements in two slices. Similarly, we can bound the third term as follows:

Now we bound the last term. Recall the definition of total variation, we have:

Combining the above three parts yields

Now realizing that the choice of and the measurable subset on the LHS is arbitrary, this leads to

From Briët and Harremoës [10], we have:

(the total variation and Jensen-Shannon distance are equivalent), which gives the results for . Finally, noticing that is a bijection ( sums to 1), we have:

which completes the proof. ∎

Furthermore, since the above upper bound holds for any classifier , we even have:

A.9 Proof of Lemma 3.2

See 3.2

Proof.

Given (2), and with the joint hypothesis over both source and target domains, it is straightforward to see that the induced conditional distributions over predicted labels match between the source and target domains, i.e.:

| (15) |

This allows us to compute as

where we used (15) for the second line. We thus have which concludes the proof. ∎

A.10 -IPM for Distributional Alignment

In Table 4, we list different instances of IPM with different choices of the function class in the above definition, including the total variation distance, Wasserstein-1 distance and the Maximum mean discrepancy [27].

| Total Variation | |

| Wasserstein-1 distance | |

| Maximum mean discrepancy |

Appendix B Experimentation Details

B.1 Computational Complexity

Our algorithms imply negligible time and memory overhead compared to their base versions. They are, in practice, indistinguishable from the underlying baseline:

-

•

Weight estimation requires storing the confusion matrix and the predictions . This has a memory cost of , small compared to the size of a neural network that performs well on k classes.

-

•

The extra computational cost comes from solving the quadratic program 5, which only depends on the number of classes and is solved once per epoch (not per gradient step). For Office-Home, it is a QP, solved times. Its runtime is negligible compared to tens of thousands of gradient steps.

B.2 Description of the domain adaptation tasks

Digits We follow a widely used evaluation protocol [29, 41]. For the digits datasets MNIST (M, LeCun and Cortes [32]) and USPS (U, Dheeru and Karra [19]), we consider the DA tasks: M U and U M. Performance is evaluated on the 10,000/2,007 examples of the MNIST/USPS test sets.

Visda [55] is a sim-to-real domain adaptation task. The synthetic domain contains 2D rendering of 3D models captured at different angles and lighting conditions. The real domain is made of natural images. Overall, the training, validation and test domains contain 152,397, 55,388 and 5,534 images, from 12 different classes.

Office-31 [49] is one of the most popular dataset for domain adaptation . It contains 4,652 images from 31 classes. The samples come from three domains: Amazon (A), DSLR (D) and Webcam (W), which generate six possible transfer tasks, A D, A W, D A, D W, W A and W D, which we all evaluate.

Office-Home [54] is a more complex dataset than Office-31. It consists of 15,500 images from 65 classes depicting objects in office and home environments. The images form four different domains: Artistic (A), Clipart (C), Product (P), and Real-World images (R). We evaluate the 12 possible domain adaptation tasks.

B.3 Full results on the domain adaptation tasks

Tables 5, 6, 7, 8, 9 and 10 show the detailed results of all the algorithms on each task of the domains described above. The performance we report is the best test accuracy obtained during training over a fixed number of epochs. We used that value for fairness with respect to the baselines (as shown in Figure 2 Left, the performance of DANN decreases as training progresses, due to the inappropriate matching of representations showcased in Theorem 2.1).

The subscript denotes the fraction of seeds for which our variant outperforms the base algorithm. More precisely, by outperform, we mean that for a given seed (which fixes the network initialization as well as the data being fed to the model) the variant has a larger accuracy on the test set than its base version. Doing so allows to assess specifically the effect of the algorithm, all else kept constant.

| Method | M U | U M | Avg. | sM U | sU M | Avg. |

|---|---|---|---|---|---|---|

| No Ad. | 79.04 | 75.30 | 77.17 | 76.02 | 75.32 | 75.67 |

| DANN | 90.65 | 95.66 | 93.15 | 79.03 | 87.46 | 83.24 |

| IWDAN | 93.28100% | 96.52100% | 94.90100% | 91.77100% | 93.32100% | 92.54100% |

| IWDAN-O | 93.73100% | 96.81100% | 95.27100% | 92.50100% | 96.42100% | 94.46100% |

| CDAN | 94.16 | 97.29 | 95.72 | 84.91 | 91.55 | 88.23 |

| IWCDAN | 94.3660% | 97.45100% | 95.9080% | 93.42100% | 93.03100% | 93.22100% |

| IWCDAN-O | 94.3480% | 97.35100% | 95.8590% | 93.37100% | 96.26100% | 94.81100% |

| Method | Visda | sVisda |

| No Ad. | 48.39 | 49.02 |

| DANN | 61.88 | 52.85 |

| IWDAN | 63.52100% | 60.18100% |

| IWDAN-O | 64.19100% | 62.10100% |

| CDAN | 65.60 | 60.19 |

| IWCDAN | 66.4960% | 65.83100% |

| IWCDAN-O | 68.15100% | 66.85100% |

| JAN | 56.98100% | 50.64100% |

| IWJAN | 57.56100% | 57.12100% |

| IWJAN-O | 61.48100% | 61.30100% |

| Method | A D | A W | D A | D W | W A | W D | Avg. |

|---|---|---|---|---|---|---|---|

| No DA | 79.60 | 73.18 | 59.33 | 96.30 | 58.75 | 99.68 | 77.81 |

| DANN | 84.06 | 85.41 | 64.67 | 96.08 | 66.77 | 99.44 | 82.74 |

| IWDAN | 84.3060% | 86.42100% | 68.38100% | 97.13100% | 67.1660% | 100.0100% | 83.9087% |

| IWDAN-O | 87.23100% | 88.88100% | 69.92100% | 98.09100% | 67.9680% | 99.92100% | 85.3397% |

| CDAN | 89.56 | 93.01 | 71.25 | 99.24 | 70.32 | 100.0 | 87.23 |

| IWCDAN | 88.9160% | 93.2360% | 71.9080% | 99.3080% | 70.4360% | 100.0100% | 87.3073% |

| IWCDAN-O | 90.0860% | 94.52100% | 73.11100% | 99.3080% | 71.83100% | 100.0100% | 88.1490% |

| JAN | 85.94 | 85.66 | 70.50 | 97.48 | 71.5 | 99.72 | 85.13 |

| IWJAN | 87.68100% | 84.860% | 70.3660% | 98.98100% | 70.060% | 100.0100% | 85.3260% |

| IWJAN-O | 89.68100% | 89.18100% | 71.96100% | 99.02100% | 73.0100% | 100.0100% | 87.14100% |

| Method | sA D | sA W | sD A | sD W | sW A | sW D | Avg. |

|---|---|---|---|---|---|---|---|

| No DA | 75.82 | 70.69 | 56.82 | 95.32 | 58.35 | 97.31 | 75.72 |

| DANN | 75.46 | 77.66 | 56.58 | 93.76 | 57.51 | 96.02 | 76.17 |

| IWDAN | 81.61100% | 88.43100% | 65.00100% | 96.98100% | 64.86100% | 98.72100% | 82.60100% |

| IWDAN-O | 84.94100% | 91.17100% | 68.44100% | 97.74100% | 64.57100% | 99.60100% | 84.41100% |

| CDAN | 82.45 | 84.60 | 62.54 | 96.83 | 65.01 | 98.31 | 81.62 |

| IWCDAN | 86.59100% | 87.30100% | 66.45100% | 97.69100% | 66.34100% | 98.92100% | 83.88100% |

| IWCDAN-O | 87.39100% | 91.47100% | 69.69100% | 97.91100% | 67.50100% | 98.88100% | 85.47100% |

| JAN | 77.74 | 77.64 | 64.48 | 91.68 | 92.60 | 65.10 | 78.21 |

| IWJAN | 84.62100% | 83.28100% | 65.3080% | 96.30100% | 98.80100% | 67.38100% | 82.6197% |

| IWJAN-O | 88.42100% | 89.44100% | 72.06100% | 97.26100% | 98.96100% | 71.30100% | 86.24100% |

| Method | A C | A P | A R | C A | C P | C R | |

|---|---|---|---|---|---|---|---|

| No DA | 41.02 | 62.97 | 71.26 | 48.66 | 58.86 | 60.91 | |

| DANN | 46.03 | 62.23 | 70.57 | 49.06 | 63.05 | 64.14 | |

| IWDAN | 48.65100% | 69.19100% | 73.60100% | 53.59100% | 66.25100% | 66.09100% | |

| IWDAN-O | 50.19100% | 70.53100% | 75.44100% | 56.69100% | 67.40100% | 67.98100% | |

| CDAN | 49.00 | 69.23 | 74.55 | 54.46 | 68.23 | 68.9 | |

| IWCDAN | 49.81100% | 73.41100% | 77.56100% | 56.5100% | 69.6480% | 70.33100% | |

| IWCDAN-O | 52.31100% | 74.54100% | 78.46100% | 60.33100% | 70.78100% | 71.47100% | |

| JAN | 41.64 | 67.20 | 73.12 | 51.02 | 62.52 | 64.46 | |

| IWJAN | 41.120% | 67.5680% | 73.1460% | 51.70100% | 63.42100% | 65.22100% | |

| IWJAN-O | 41.8880% | 68.72100% | 73.62100% | 53.04100% | 63.88100% | 66.48100% | |

| Method | P A | P C | P R | R A | R C | R P | Avg. |

| No DA | 47.1 | 35.94 | 68.27 | 61.79 | 44.42 | 75.5 | 56.39 |

| DANN | 48.29 | 44.06 | 72.62 | 63.81 | 53.93 | 77.64 | 59.62 |

| IWDAN | 52.81100% | 46.2480% | 73.97100% | 64.90100% | 54.0280% | 77.96100% | 62.2797% |

| IWDAN-O | 59.33100% | 48.28100% | 76.37100% | 69.42100% | 56.09100% | 78.45100% | 64.68100% |

| CDAN | 56.77 | 48.8 | 76.83 | 71.27 | 55.72 | 81.27 | 64.59 |

| IWCDAN | 58.99100% | 48.410% | 77.94100% | 69.480% | 54.730% | 81.0760% | 65.6670% |

| IWCDAN-O | 62.60100% | 50.73100% | 78.88100% | 72.44100% | 57.79100% | 81.3180% | 67.6498% |

| JAN | 54.5 | 40.36 | 73.10 | 64.54 | 45.98 | 76.58 | 59.59 |

| IWJAN | 55.2680% | 40.3860% | 73.0880% | 64.4060% | 45.680% | 76.3640% | 59.7863% |

| IWJAN-O | 57.78100% | 41.32100% | 73.66100% | 65.40100% | 46.68100% | 76.3620% | 60.7392% |

| Method | A C | A P | A R | C A | C P | C R | |

|---|---|---|---|---|---|---|---|

| No DA | 35.70 | 54.72 | 62.61 | 43.71 | 52.54 | 56.62 | |

| DANN | 36.14 | 54.16 | 61.72 | 44.33 | 52.56 | 56.37 | |

| IWDAN | 39.81100% | 63.01100% | 68.67100% | 47.39100% | 61.05100% | 60.44100% | |

| IWDAN-O | 42.79100% | 66.22100% | 71.40100% | 53.39100% | 61.47100% | 64.97100% | |

| CDAN | 38.90 | 56.80 | 64.77 | 48.02 | 60.07 | 61.17 | |

| IWCDAN | 42.96100% | 65.01100% | 71.34100% | 52.89100% | 64.65100% | 66.48100% | |

| IWCDAN-O | 45.76100% | 68.61100% | 73.18100% | 56.88100% | 66.61100% | 68.48100% | |

| JAN | 34.52 | 56.86 | 64.54 | 46.18 | 56.84 | 59.06 | |

| IWJAN | 36.24100% | 61.00100% | 66.34100% | 48.66100% | 59.92100% | 61.88100% | |

| IWJAN-O | 37.46100% | 62.68100% | 66.88100% | 49.82100% | 60.22100% | 62.54100% | |

| Method | P A | P C | P R | R A | R C | R P | Avg. |

| No DA | 44.29 | 33.05 | 65.20 | 57.12 | 40.46 | 70.0 | |

| DANN | 44.58 | 37.14 | 65.21 | 56.70 | 43.16 | 69.86 | 51.83 |

| IWDAN | 50.44100% | 41.63100% | 72.46100% | 61.00100% | 49.40100% | 76.07100% | 57.61100% |

| IWDAN-O | 56.05100% | 43.39100% | 74.87100% | 66.73100% | 51.72100% | 77.46100% | 60.87100% |

| CDAN | 49.65 | 41.36 | 70.24 | 62.35 | 46.98 | 74.69 | 56.25 |

| IWCDAN | 54.87100% | 44.80100% | 75.91100% | 67.02100% | 50.45100% | 78.55100% | 61.24100% |

| IWCDAN-O | 59.63100% | 46.98100% | 77.54100% | 69.24100% | 53.77100% | 78.11100% | 63.73100% |

| JAN | 50.64 | 37.24 | 69.98 | 58.72 | 40.64 | 72.00 | 53.94 |

| IWJAN | 52.92100% | 37.68100% | 70.88100% | 60.32100% | 41.54100% | 73.26100% | 55.89100% |

| IWJAN-O | 56.54100% | 39.66100% | 71.78100% | 62.36100% | 44.56100% | 73.76100% | 57.36100% |

B.4 Jensen-Shannon divergence of the original and subsampled domain adaptation datasets

Tables 11, 12 and 13 show for our four datasets and their subsampled versions, rows correspond to the source domain, and columns to the target one. We recall that subsampling simply consists in taking of the first half of the classes in the source domain (which explains why is not symmetric for the subsampled datasets).

| MNIST | USPS | Real | |

| MNIST | 0 | - | |

| USPS | 0 | - | |

| Synth. | - | - |

| MNIST | USPS | Real | |

| MNIST | 0 | - | |

| USPS | 0 | - | |

| Synth. | - | - |

| Amazon | DSLR | Webcam | |

|---|---|---|---|

| Amazon | 0 | ||

| DSLR | 0 | ||

| Webcam | 0 |

| Amazon | DSLR | Webcam | |

|---|---|---|---|

| Amazon | 0 | ||

| DSLR | 0 | ||

| Webcam | 0 |

| Art | Clipart | Product | Real World | |

|---|---|---|---|---|

| Art | 0 | |||

| Clipart | 0 | |||

| Product | 0 | |||

| Real World | 0 |

| Art | Clipart | Product | Real World | |

|---|---|---|---|---|

| Art | 0 | |||

| Clipart | 0 | |||

| Product | 0 | |||

| Real World | 0 |

B.5 Losses

B.5.1 DANN

For batches of data and of size , the DANN losses are:

| (16) | ||||

| (17) |

B.5.2 CDAN

Similarly, the CDAN losses are:

| (18) | ||||

| (19) | ||||

| (20) |

where and is the -th element of vector .

CDAN is particularly well-suited for conditional alignment. As described in Section 2, the CDAN discriminator seeks to match with . This objective is very aligned with : let us first assume for argument’s sake that is a perfect classifier on both domains. For any sample , is thus a matrix of s except on the -th row, which contains . When label distributions match, the effect of fooling the discriminator will result in representations such that the matrices are equal on the source and target domains. In other words, the model is such that match: it verifies (see Th. 3.4 below with ). On the other hand, if the label distributions differ, fooling the discriminator actually requires mislabelling certain samples (a fact quantified in Th. 2.1).

B.5.3 JAN

The JAN losses [40] are :

| (21) | ||||

| (22) |

where corresponds to the kernel of the RKHS used to measure the discrepancy between distributions. Exactly as in Long et al. [40], it is the product of kernels on various layers of the network . Each individual kernel is computed as the dot-product between two transformations of the representation: (in this case, outputs vectors in a high-dimensional space). See Section B.7 for more details.

The IWJAN losses are:

| (23) | ||||

| (24) |

B.6 Generation of domain adaptation tasks with varying

We consider the MNIST USPS task and generate a set of vectors in . Each vector corresponds to the fraction of each class to be trained on, either in the source or the target domain (to assess the impact of both). The left bound is chosen as to ensure that classes all contain some samples.

This methodology creates domain adaptation tasks, for subsampled-MNIST USPS and for MNIST subsampled-USPS, with Jensen-Shannon divergences varying from to 111We manually rejected some samples to guarantee a rather uniform set of divergences.. They are then used to evaluate our algorithms, see Section 4 and Figures 1 and 3. They show the performance of the 6 algorithms we consider. We see the sharp decrease in performance of the base versions DANN and CDAN. Comparatively, our importance-weighted algorithms maintain good performance even for large divergences between the marginal label distributions.

B.7 Implementation details

All the values reported below are the default ones in the implementations of DANN, CDAN and JAN released with the respective papers (see links to the github repos in the footnotes). We did not perform any search on them, assuming they had already been optimized by the authors of those papers. To ensure a fair comparison and showcase the simplicity of our approach, we simply plugged the weight estimation on top of those baselines and used their original hyperparameters.

For MNIST and USPS, the architecture is akin to LeNet [31], with two convolutional layers, ReLU and MaxPooling, followed by two fully connected layers. The representation is also taken as the last hidden layer, and has 500 neurons. The optimizer for those tasks is SGD with a learning rate of , annealed by every five training epochs for M U and for U M. The weight decay is also and the momentum .

For the Office and Visda experiments with IWDAN and IWCDAN, we train a ResNet-50, optimized using SGD with momentum. The weight decay is also and the momentum . The learning rate is for the Office-31 tasks A D and D W, otherwise (default learning rates from the CDAN implementation222https://github.com/thuml/CDAN/tree/master/pytorch).

For the IWJAN experiments, we use the default implementation of Xlearn codebase333https://github.com/thuml/Xlearn/tree/master/pytorch and simply add the weigths estimation and reweighted objectives to it, as described in Section B.5. Parameters, configuration and networks remain the same.

Finally, for the Office experiments, we update the importance weights every 15 passes on the dataset (in order to improve their estimation on small datasets). On Digits and Visda, the importance weights are updated every pass on the source dataset. Here too, fine-tuning that value might lead to a better estimation of and help bridge the gap with the oracle versions of the algorithms.

We use the cvxopt package444http://cvxopt.org/ to solve the quadratic programm 5.

We trained our models on single-GPU machines (P40s and P100s). The runtime of our algorithms is undistinguishable from the the runtime of their base versions.

B.8 Weight Estimation

We estimate importance weights using Lemma 3.2, which relies on the assumption. However, there is no guarantee that is verified at any point during training, so the exact dynamics of are unclear. Below we discuss those dynamics and provide some intuition about them.

In Fig. 4b, we plot the Euclidian distance between the moving average of weights estimated using the equation and the true weights (note that this can be done for any algorithm). As can be seen in the figure, the distance between the estimated and true weights is highly correlated with the performance of the algorithm (see Fig.4). In particular, we see that the estimations for IWDAN is more accurate than for DANN. The estimation for DANN exhibits an interesting shape, improving at first, and then getting worse. At the same time, the estimation for IWDAN improves monotonously. The weights for IWDAN-O get very close to the true weights which is in line with our theoretical results: IWDAN-O gets close to zero error on the target error, Th. 3.4 thus guarantees that is verified, which in turns implies that the weight estimation is accurate (Lemma 3.2). Finally, without domain adaptation, the estimation is very poor. The following two lemmas shed some light on the phenomena observed for DANN and IWDAN:

See 3.3

Proof.

If , then the confusion matrix C is diagonal and its -th line is . Additionally, if , then from a straightforward extension of Eq. 12, we have . In other words, the distribution of predictions on the source and target domains match, i.e. (where the last equality comes from ). Finally, we get that . ∎

In particular, applying this lemma to DANN (i.e. with ) suggests that at convergence, the estimated weights should tend to 1. Empirically, Fig. 4b shows that as the marginals get matched, the estimation for DANN does get closer to 1 (1 corresponds to a distance of )555It does not reach it as the learning rate is decayed to .. We now attempt to provide some intuition on the behavior of IWDAN, with the following lemma:

Lemma B.1.

If and if for a given :

| (25) |

then, letting be the estimated weight:

Applying this lemma to , and assuming that (25) holds for all the classes (we discuss what the assumption implies below), we get that:

| (26) |

or in other words, the estimation improves monotonously. Combining this with Lemma B.1 suggests an explanation for the shape of the IWDAN estimated weights on Fig. 4b: the monotonous improvement of the estimation is counter-balanced by the matching of weighted marginals which, when reached, makes constant (Lemma 3.3 applied to ). However, we wish to emphasize that the exact dynamics of are complex, and we do not claim understand them fully. In all likelihood, they are the by-product of regularity in the data, properties of deep neural networks and their interaction with stochastic gradient descent. Additionally, the dynamics are also inherently linked to the success of domain adaptation, which to this day remains an open problem.

As a matter of fact, assumption (25) itself relates to successful domain adaptation. Setting aside , which simply corresponds to a class reweighting of the source domain, (25) states that predictions on the target domain fall between a successful prediction (corresponding to ) and the prediction of a model with matched marginals (corresponding to ). In other words, we assume that the model is naturally in between successful domain adaptation and successful marginal matching. Empirically, we observed that it holds true for most classes (with for IWDAN and with for DANN), but not all early in training666In particular at initialization, one class usually dominates the others..

To conclude this section, we prove Lemma B.1.

Proof.

From , we know that C is diagonal and that its -th line is . This gives us: . Hence:

which conludes the proof. ∎

B.9 Per-class predictions and estimated weights

In this section, we display the per-class predictions of various algorithms on the sU M task. In Table 16, we see that without domain adaptation, performance on classes is rather random, the digit for instance is very poorly predicted and confused with and .

Table 17 shows an interesting pattern for DANN. In line with the limitations described by Theorem 2.1, the model performs very poorly on the subsampled classes (we recall that the subsampling is done in the source domain): the neural network tries to match the unweighted marginals. To do so, it projects representations of classes that are over-represented in the target domain (digits to ) on representations of the under-represented classes (digits to ). In doing so, it heavily degrades its performance on those classes (it is worth noting that digit has an importance weight close to which probably explains why DANN still performs well on it, see Table 14).

As far as IWDAN is concerned, Table 18 shows that the model perfoms rather well on all classes, at the exception of the digit confused with . IWDAN-O is shown in Table 19 and as expected outperforms the other algorithms on all classes.

Finally, Table 14 shows the estimated weights of all the algorithms, at the training epoch displayed in Tables 16, 17, 18 and 19. We see a rather strong correlation between errors on the estimated weight for a given class, and errors in the predictions for that class (see for instance digit for DANN or digit for IWDAN).

| Class | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Distance | |

| TRUE | 1.19 | 1.61 | 1.96 | 2.24 | 2.16 | 0.70 | 0.64 | 0.70 | 0.78 | 0.66 | 0 |

| DANN | 1.06 | 1.15 | 1.66 | 1.33 | 1.95 | 0.86 | 0.72 | 0.70 | 1.02 | 0.92 | 1.15 |

| IWDAN | 1.19 | 1.61 | 1.92 | 1.96 | 2.31 | 0.70 | 0.63 | 0.55 | 0.78 | 0.78 | 0.38 |

| IWDAN-O | 1.19 | 1.60 | 2.01 | 2.14 | 2.1 | 0.73 | 0.64 | 0.65 | 0.78 | 0.66 | 0.12 |

| No DA | 1.14 | 1.4 | 2.42 | 1.49 | 4.21 | 0.94 | 0.38 | 0.82 | 0.62 | 0.29 | 2.31 |

| Method | Digits | sDigits | Method | Digits | sDigits |

|---|---|---|---|---|---|

| DANN | 93.15 | 83.24 | CDAN | 95.72 | 88.23 |

| DANN + | 93.18 | 84.20 | CDAN + | 95.30 | 91.14 |

| DANN + | 94.35 | 92.48 | CDAN + | 95.42 | 92.35 |

| IWDAN | 94.90 | 92.54 | IWCDAN | 95.90 | 93.22 |

| \collectcell 9 2.89\endcollectcell | \collectcell 0 .13\endcollectcell | \collectcell 3 .24\endcollectcell | \collectcell 0 .00\endcollectcell | \collectcell 2 .20\endcollectcell | \collectcell 0 .01\endcollectcell | \collectcell 0 .45\endcollectcell | \collectcell 0 .88\endcollectcell | \collectcell 0 .18\endcollectcell | \collectcell 0 .02\endcollectcell |

| \collectcell 0 .00\endcollectcell | \collectcell 7 2.54\endcollectcell | \collectcell 1 2.38\endcollectcell | \collectcell 0 .00\endcollectcell | \collectcell 3 .40\endcollectcell | \collectcell 0 .37\endcollectcell | \collectcell 7 .54\endcollectcell | \collectcell 1 .50\endcollectcell | \collectcell 2 .28\endcollectcell | \collectcell 0 .00\endcollectcell |

| \collectcell 0 .31\endcollectcell | \collectcell 0 .23\endcollectcell | \collectcell 9 3.28\endcollectcell | \collectcell 0 .09\endcollectcell | \collectcell 0 .72\endcollectcell | \collectcell 0 .03\endcollectcell | \collectcell 0 .34\endcollectcell | \collectcell 4 .78\endcollectcell | \collectcell 0 .17\endcollectcell | \collectcell 0 .05\endcollectcell |