Do Not Escape From the Manifold: Discovering the Local Coordinates on the Latent Space of GANs

Abstract

The discovery of the disentanglement properties of the latent space in GANs motivated a lot of research to find the semantically meaningful directions on it. In this paper, we suggest that the disentanglement property is closely related to the geometry of the latent space. In this regard, we propose an unsupervised method for finding the semantic-factorizing directions on the intermediate latent space of GANs based on the local geometry. Intuitively, our proposed method, called Local Basis, finds the principal variation of the latent space in the neighborhood of the base latent variable. Experimental results show that the local principal variation corresponds to the semantic factorization and traversing along it provides strong robustness to image traversal. Moreover, we suggest an explanation for the limited success in finding the global traversal directions in the latent space, especially the $\mathcal{W}$-space of StyleGAN2. We show that the $\mathcal{W}$-space is warped globally by comparing the local geometry, discovered from Local Basis, through the metric on Grassmannian Manifold. The global warpage implies that the latent space is not well-aligned globally and therefore the global traversal directions are bound to show limited success on it.

1 Introduction

Generative Adversarial Networks (GANs, Goodfellow et al. (2014)), such as ProGAN (Karras et al., 2018), BigGAN (Brock et al., 2018), and StyleGANs (Karras et al., 2019; 2020b; 2020a), have shown tremendous performance in generating high-resolution photo-realistic images that are often indistinguishable from natural images. However, despite several recent efforts (Goetschalckx et al., 2019; Jahanian et al., 2019; Plumerault et al., 2020; Shen et al., 2020) to investigate the disentanglement properties (Bengio et al., 2013) of the latent space in GANs, it is still challenging to find meaningful traversal directions in the latent space corresponding to the semantic variation of an image.

The previous approaches to find the semantic-factorizing directions are categorized into local and global methods. The local methods (e.g. Ramesh et al. (2018), Latent Mapper in StyleCLIP (Patashnik et al., 2021), and attribute-conditioned normalizing flow in StyleFlow (Abdal et al., 2021)) suggest a sample-wise traversal direction. By contrast, the global methods, such as GANSpace (Härkönen et al., 2020) and SeFa (Shen & Zhou, 2021), propose a global direction for the particular semantics (e.g. glasses, age, and gender) that works on the entire latent space. Throughout this paper, we refer to these global methods as the global basis. These global methods showed promising results. However, these methods are successful on the limited area, and the image quality is sensitive to the perturbation intensity. In fact, if a latent space does not satisfy the global disentanglement property itself, all global methods are bound to show a limited performance on it. Nevertheless, to the best of our knowledge, the global disentanglement property of a latent space has not been investigated except for the empirical observation of generated samples. In this regard, we need a local method that describes the local disentanglement property and an evaluation scheme for the global disentanglement property from the collected local information.

In this paper, we suggest that the semantic property of the latent space in GANs (i.e. disentanglement of semantics and image collapse) is closely related to its geometry, because of the sample-wise optimization nature of GANs. In this respect, we propose an unsupervised method to find a traversal direction based on the local structure of the intermediate latent space $\mathcal{W}$, called Local Basis (Fig 1(a)). We approximate $\mathcal{W}$ with its submanifold representing its local principal variation, discovered in terms of the tangent space $T_w\mathcal{W}$. Local Basis is defined as an ordered basis of $T_w\mathcal{W}$ corresponding to the approximating submanifold. Moreover, we show that Local Basis is obtained from a simple closed-form algorithm, namely the singular vectors of the Jacobian matrix of the subnetwork. The geometric interpretation of Local Basis provides an evaluation scheme for the global disentanglement property through the global warpage of the latent manifold. Our contributions are as follows:

1. We propose Local Basis, a set of traversal directions that can reliably traverse without escaping from the latent space to prevent image collapse. The latent traversal along Local Basis corresponds to the local coordinate mesh of the local-geometry-describing submanifold.

2. We show that Local Basis leads to stable variation and better semantic factorization than global approaches. This result verifies our hypothesis on the close relationship between the semantic and geometric properties of the latent space in GANs.

3. We propose the Iterative Curve-Traversal method, which is a way to trace the latent space along a curved trajectory. The trajectory of the images with this method shows a more stable variation compared to the linear traversal.

4. We introduce the metrics on the Grassmannian manifold to analyze the global geometry of the latent space through Local Basis. Quantitative analysis demonstrates that the $\mathcal{W}$-space of StyleGAN2 is still globally warped. This result provides an explanation for the limited success of the global basis and proves the importance of local approaches.

2 Related Work

Style-based Generators.

In recent years, GANs equipped with style-based generators (Karras et al., 2019; 2020b) have shown state-of-the-art performance in high-fidelity image synthesis. The style-based generator consists of two parts: a mapping network and a synthesis network. The mapping network encodes the isotropic Gaussian noise $z \in \mathcal{Z}$ to an intermediate latent vector $w \in \mathcal{W}$. The synthesis network takes $w$ and generates an image while controlling the style of the image through $w$. Here, the $\mathcal{W}$-space is well known for providing a better disentanglement property compared to $\mathcal{Z}$ (Karras et al., 2019). However, there is still a lack of understanding about the effect of latent perturbation in a specific direction on the output image.

Latent Traversal for Image Manipulation.

The impressive success of GANs in producing high-quality images has led to various attempts to understand their latent space. Early approaches (Radford et al., 2016; Upchurch et al., 2017) show that vector arithmetic on the latent space for the semantics holds, and StyleGAN (Karras et al., 2019) shows that mixing two latent codes can achieve style transfer. Some studies have investigated the supervised learning of latent directions while assuming access to the semantic attributes of images (Goetschalckx et al., 2019; Jahanian et al., 2019; Shen et al., 2020; Yang et al., 2021; Abdal et al., 2021). In contrast to these supervised methods, some recent studies have suggested novel approaches that do not use the prior knowledge of training dataset, such as the labels of human facial attributes. In Voynov & Babenko (2020), an unsupervised optimization method is proposed to jointly learn a candidate matrix and a corresponding reconstructor, which identifies the semantic direction in the matrix. GANSpace (Härkönen et al., 2020) finds a global basis for $\mathcal{W}$ in StyleGAN using a PCA, enabling a fast image manipulation. SeFa (Shen & Zhou, 2021) focuses on the first weight parameter right after the latent code, suggesting that it contains essential knowledge of an image variation. SeFa proposes singular vectors of the first weight parameter as meaningful global latent directions. StyleCLIP (Patashnik et al., 2021) achieves a state-of-the-art performance in the text-driven image manipulation of StyleGAN. StyleCLIP introduces an additional training to minimize the CLIP loss (Radford et al., 2021).

Jacobian Decomposition.

Some works use the Jacobian matrix to analyze the latent space of GAN (Zhu et al., 2021; Wang & Ponce, 2021; Chiu et al., 2020; Ramesh et al., 2018). However, these methods focus on the Jacobian of the entire model, from the input noise $z$ to the output image. Ramesh et al. (2018) suggested the right singular vectors of the Jacobian as local disentangled directions in the $\mathcal{Z}$ space. Zhu et al. (2021) proposed a latent perturbation vector that can change only a particular area of the image. The perturbation vector is discovered by taking the principal vector of the Jacobian to the target area and projecting it into the null space of the Jacobian to the complementary region. On the other hand, our Local Basis utilizes the Jacobian matrix of the partial network, from the input noise $z$ to the intermediate latent code $w$, and investigates the black-box intermediate latent space from it. The top-$k$ Local Basis corresponds to the best-local-geometry-describing submanifolds. This intuition leads to exploiting Local Basis to assess the global geometry of the intermediate latent space.

3 Traversing a curved latent space

In this section, we introduce a method for finding a local-geometry-aware traversal direction in the intermediate latent space $\mathcal{W}$. The traversal direction is referred to as the Local Basis at $w \in \mathcal{W}$. In addition, we evaluate the proposed Local Basis by observing how the generated image changes as we traverse the intermediate latent variable. Throughout this paper, we assess Local Basis of the $\mathcal{W}$-space in StyleGAN2 (Karras et al., 2020b). However, our methodology is not limited to StyleGAN2. See appendix for the results on StyleGAN (Karras et al., 2019) and BigGAN (Brock et al., 2018).

3.1 Finding a Local Basis

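Given a pretrained GAN model $M: \mathcal{Z} \to \mathcal{X}$, from the input noise space $\mathcal{Z}$ to the image space $\mathcal{X}$, we choose the intermediate layer $\tilde{\mathcal{W}}$ to discover Local Basis. We refer to the former part of the GAN model as the mapping network $f: \mathcal{Z} \to \tilde{\mathcal{W}}$. The image of the mapping network is denoted as $\mathcal{W} = f(\mathcal{Z}) \subset \tilde{\mathcal{W}}$. The latter part, a non-linear mapping from $\mathcal{W}$ to the image space $\mathcal{X}$, is denoted by $G: \mathcal{W} \to \mathcal{X}$. Local Basis at $w \in \mathcal{W}$ is defined as the basis of the tangent space $T_w\mathcal{W}$. This basis can be interpreted as a local-geometry-aware linear traversal direction starting from $w$.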

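To define the tangent space of the intermediate latent space $\mathcal{W}$ properly, we assume that $\mathcal{W}$ is a differentiable manifold. Note that the support of the isotropic Gaussian prior $\mathcal{Z} = \mathbb{R}^{d_Z}$ and the ambient space $\tilde{\mathcal{W}} = \mathbb{R}^{d_W}$ are already differentiable manifolds. The tangent space at $w$, denoted by $T_w\mathcal{W}$, is a vector space consisting of tangent vectors of curves passing through point $w$. Explicitly,

$$T_w\mathcal{W} = \{\dot{\gamma}(0) \mid \gamma: (-\epsilon, \epsilon) \to \mathcal{W},\ \gamma(0) = w,\ \text{for some } \epsilon > 0\} \tag{1}$$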

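Then, the differentiable mapping network $f$ gives a linear map $df_z$ between the two tangent spaces $T_z\mathcal{Z}$ and $T_w\mathcal{W}$ where $w = f(z)$.

$$df_z: T_z\mathcal{Z} \to T_w\mathcal{W}, \quad \dot{\gamma}(0) \mapsto (f \circ \gamma)'(0) \tag{2}$$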

We utilize the linear map $df_z$, called the differential of $f$ at $z$, to find the basis of $T_w\mathcal{W}$. Based on the manifold hypothesis in representation learning, we posit that the latent space of the image space $\mathcal{X}$ is a lower-dimensional manifold embedded in the ambient space $\tilde{\mathcal{W}}$. In this approach, we estimate the latent manifold as a lower-dimensional approximation of $\mathcal{W}$ describing its principal variations. The approximation manifold can be obtained by solving the low-rank approximation problem of $df_z$. The manifold hypothesis is supported by the empirical distribution of singular values $\sigma_i^z$. The analysis is provided in Fig 9 in the appendix.

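The low-rank approximation problem has an analytic solution defined by Singular Value Decomposition (SVD). Because the matrix representation of $df_z$ is a Jacobian matrix $(\nabla_z f)(z) \in \mathbb{R}^{d_W \times d_Z}$, Local Basis is obtained as the following: For the $i$-th right singular vector $u_i^z \in \mathbb{R}^{d_Z}$, $i$-th left singular vector $v_i^w \in \mathbb{R}^{d_W}$, and $i$-th singular value $\sigma_i^z \in \mathbb{R}$ of $df_z$ with $\sigma_1^z \geq \cdots \geq \sigma_n^z$, where $n = \min(d_Z, d_W)$,

$$df_z(u_i^z) = \sigma_i^z \cdot v_i^w \quad \text{for all } i, \tag{3}$$

$$\text{Local Basis}(w = f(z)) = \{v_i^w\}_{1 \leq i \leq n}. \tag{4}$$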

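Then, the $k$-dimensional approximation $\mathcal{W}_z^k$ of $\mathcal{W}$ around $w$ is described by Eq 5, provided that $\sigma_k^z > 0$. Note that $\mathcal{W}_z^k$ is a submanifold of $\mathcal{W}$ (see Footnote 1) corresponding to the top $k$ components of Local Basis, i.e. $T_w\mathcal{W}_z^k = \mathrm{span}\{v_i^w : 1 \leq i \leq k\}$.

$$\mathcal{W}_z^k = \Big\{ f\Big(z + \sum_{i=1}^{k} t_i \cdot u_i^z\Big) \ \Big|\ t_i \in (-\epsilon_i, \epsilon_i),\ 1 \leq i \leq k \Big\} \tag{5}$$

Footnote 1: Strictly speaking, $\mathcal{W}_z^k$ may not satisfy the conditions of the submanifold. The injectivity of $f$ on the domain $\{z + \sum_{i=1}^{k} t_i \cdot u_i^z\}$ is a sufficient condition for the submanifold. As described below, this sufficient condition is satisfied under the locally affine mapping network and $\sigma_k^z > 0$.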
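The construction in Eq 3 and Eq 4 can be sketched numerically. The snippet below is only an illustration under stated assumptions: `make_mlp` builds a small random leaky-ReLU network as a hypothetical stand-in for the mapping network $f$ (it is not the StyleGAN2 mapping network), and `local_basis` is a hypothetical helper name. It computes the Jacobian analytically and reads Local Basis off its SVD.

```python
import numpy as np

def make_mlp(d_z=8, d_w=8, hidden=16, seed=0):
    """Toy leaky-ReLU mapping network f: R^{d_z} -> R^{d_w} (hypothetical stand-in)."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(size=(hidden, d_z)) / np.sqrt(d_z)
    b1 = 0.1 * rng.normal(size=hidden)
    W2 = rng.normal(size=(d_w, hidden)) / np.sqrt(hidden)
    b2 = 0.1 * rng.normal(size=d_w)

    def f(z):
        h = W1 @ z + b1
        return W2 @ np.where(h > 0, h, 0.2 * h) + b2

    def jacobian(z):
        h = W1 @ z + b1
        slope = np.where(h > 0, 1.0, 0.2)   # derivative of leaky ReLU
        return W2 @ (slope[:, None] * W1)   # chain rule, shape (d_w, d_z)

    return f, jacobian

def local_basis(jacobian, z):
    """Return (U, S, V) with J = V diag(S) U^T.

    Columns of U are the right singular vectors u_i (in Z), S holds the
    singular values in descending order, and columns of V are the left
    singular vectors v_i (in W), i.e. Local Basis at w = f(z).
    """
    J = jacobian(z)
    V, S, Ut = np.linalg.svd(J, full_matrices=False)
    return Ut.T, S, V
```

For a real generator the Jacobian would typically come from automatic differentiation rather than a hand-written chain rule; the SVD step stays the same.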

Locally affine mapping network

In this paragraph, we focus on the locally affine mapping network $f$, which is one of the most widely adopted GAN structures, such as MLP or CNN layers with ReLU or leaky-ReLU activation functions. This type of mapping network has several well-suited properties for Local Basis.

$$f(z) = \sum_{p \in \Omega} \mathbb{1}_{z \in p} \, (A_p z + b_p) \tag{6}$$

where $\Omega$ denotes a partition of $\mathcal{Z}$, and $A_p$ and $b_p$ are the parameters of the local affine map. With this type of mapping network $f$, it is clear that the intermediate latent space $\mathcal{W}$ satisfies a differentiable manifold property at least locally on the interior of each $p \in \Omega$. The region where the property may not hold, the intersection of several closures of $p$'s in $\Omega$, has measure zero in $\mathcal{Z}$.

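Moreover, the Jacobian matrix $(\nabla_z f)(z)$ becomes a locally constant matrix. Then, the approximating manifold $\mathcal{W}_z^k$ (Eq 5) satisfies the submanifold condition, and is consistent locally for each $p \in \Omega$, avoiding being defined for each $w$. In addition, the linear traversal of the latent variable $w$ along $v_i^w$ can be described as the curve on $\mathcal{W}_z^k$ (Eq 7). Most importantly, these curves on $\mathcal{W}_z^k$ (Eq 7), starting from $w$ in the direction of Local Basis, correspond to the local coordinate mesh of $\mathcal{W}_z^k$.

$$\mathrm{Traversal}(w, v_i^w): \quad t \mapsto w + t \cdot v_i^w = f\Big(z + \frac{t}{\sigma_i^z} u_i^z\Big) \quad \text{for sufficiently small } |t| \tag{7}$$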
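The identity behind Eq 7 can be checked on a toy locally affine network. This is a minimal sketch, assuming a random two-layer leaky-ReLU network (all weights, sizes, and the helper names `pattern`, `local_affine_params`, and `traverse` are hypothetical): within one affine piece, a step of $t/\sigma_i$ along $u_i$ in $\mathcal{Z}$ moves $w$ by exactly $t \cdot v_i$.

```python
import numpy as np

rng = np.random.default_rng(0)
d_z, d_w, hidden = 8, 8, 16
W1 = rng.normal(size=(hidden, d_z)) / np.sqrt(d_z)
b1 = 0.1 * rng.normal(size=hidden)
W2 = rng.normal(size=(d_w, hidden)) / np.sqrt(hidden)
b2 = 0.1 * rng.normal(size=d_w)

def pattern(z):
    """Activation pattern; it identifies the affine piece p that contains z."""
    return (W1 @ z + b1) > 0

def f(z):
    h = W1 @ z + b1
    return W2 @ np.where(h > 0, h, 0.2 * h) + b2

def local_affine_params(z):
    """Parameters (A_p, b_p) of the affine piece containing z, as in Eq 6."""
    slope = np.where(pattern(z), 1.0, 0.2)
    A = W2 @ (slope[:, None] * W1)
    b = W2 @ (slope * b1) + b2
    return A, b

def traverse(z, i, t):
    """Return the stepped input z' and the linear prediction w + t * v_i."""
    A, _ = local_affine_params(z)
    V, S, Ut = np.linalg.svd(A, full_matrices=False)
    z_new = z + (t / S[i]) * Ut[i]
    return z_new, f(z) + t * V[:, i]
```

The equality only holds while `pattern(z)` is unchanged along the step; once the path crosses into another piece, Local Basis has to be recomputed, which is the motivation for the iterative traversal in Sec 3.2.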

Equivalence to Local PCA

To provide additional intuition about Local Basis, we prove the following proposition. The proposition shows that Local Basis is equivalent to applying a PCA on the samples on $\mathcal{W}$ around $w$.

Proposition 1 (Equivalence to Local PCA).

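Consider the Local PCA problem around the base latent variable $w = f(z)$ on $\mathcal{W}$, i.e. PCA of the latent variable samples around $w$.

$$\{\hat{f}_z(z + \epsilon_j)\}_{j}, \quad \epsilon_j \sim \mathcal{N}(0, \tau^2 I) \ \text{i.i.d.} \tag{8}$$

where $\hat{f}_z(z + \epsilon) = f(z) + df_z(\epsilon)$ is the linear approximation of $f$ around $z$. Then, the principal components discovered in the Local PCA problem are equivalent to Local Basis at $w$.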
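A quick numerical illustration of the proposition, under assumptions that are ours rather than the paper's: the Jacobian is replaced by a hypothetical $4 \times 4$ matrix `A` with a known spectrum, and the samples are drawn with isotropic Gaussian perturbations in $\mathcal{Z}$. The sample covariance is then approximately $\tau^2 A A^\top$, so its eigenvectors should align with the left singular vectors of `A`.

```python
import numpy as np

def local_pca(A, w, n_samples=20000, scale=0.1, seed=0):
    """PCA of samples w_j = w + A eps_j with eps_j ~ N(0, scale^2 I).

    Returns principal components (columns) and variances, both sorted
    by descending variance.
    """
    rng = np.random.default_rng(seed)
    eps = scale * rng.normal(size=(n_samples, A.shape[1]))
    samples = w + eps @ A.T
    centered = samples - samples.mean(axis=0)
    cov = centered.T @ centered / (n_samples - 1)
    evals, evecs = np.linalg.eigh(cov)      # eigh returns ascending order
    return evecs[:, ::-1], evals[::-1]

rng = np.random.default_rng(1)
Q1, _ = np.linalg.qr(rng.normal(size=(4, 4)))
Q2, _ = np.linalg.qr(rng.normal(size=(4, 4)))
# Hypothetical Jacobian with well-separated singular values 5, 3, 1, 0.5.
A = Q1 @ np.diag([5.0, 3.0, 1.0, 0.5]) @ Q2.T
w = rng.normal(size=4)

V, S, _ = np.linalg.svd(A)                  # columns of V play the role of Local Basis
pcs, variances = local_pca(A, w)
# |cos| between the i-th Local Basis vector and the i-th principal component.
alignment = np.abs(np.sum(V * pcs, axis=0))
```

Principal components are only defined up to sign, which is why the comparison uses the absolute cosine. With nearly equal singular values the ordering of components becomes unstable, for PCA and for the SVD alike.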

3.2 Iterative Curve-Traversal

We suggest a natural curve-traversal that can keep track of the $\mathcal{W}$-manifold and an iterative method to implement it. We divide the long curved trajectory into small pieces and approximate each piece by the local curves using Local Basis. We call this curve-traversal Iterative Curve-Traversal method. Consistent with the linear traversal method, we consider Iterative Curve-Traversal departing in the direction of a Local Basis. Explicitly, for a sufficiently large $c > 0$,

$$\gamma: (-c, c) \to \mathcal{W}, \quad \gamma(0) = w, \quad \dot{\gamma}(0) = v_k^w \tag{9}$$

where $w = f(z)$ and $v_k^w$ is the $k$-th Local Basis vector at $w$. We split the curve-traversal $\gamma$ into $N$ pieces and denote each $n$-th iterate in $\mathcal{Z}$ and $\mathcal{W}$ as $z_n$ and $w_n$ for $1 \leq n \leq N$. The starting point of the traversal is denoted as the $0$-th iterate $z_0 = z$, $w_0 = w$, and $\gamma(0) = w_0$. (Fig 2) Note that to find Local Basis at $w_n$, we need a corresponding $z_n$ such that $w_n = f(z_n)$.

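Below, we describe the positive part of Iterative Curve-Traversal. For the negative part, we repeat the same procedure using the reversed tangent vector $-v_k^w$. The first step of Iterative Curve-Traversal method with perturbation intensity $I$ is as follows:

$$\gamma_1: [0, I/N] \to \mathcal{W}, \quad t \mapsto f\Big(z_0 + \frac{t}{\sigma_k^{z_0}} u_k^{z_0}\Big) \tag{10}$$

$$z_1 = z_0 + \frac{I}{N \cdot \sigma_k^{z_0}} u_k^{z_0}, \quad w_1 = \gamma_1(I/N) \tag{11}$$

Note that $w_1$ is the endpoint of the curve $\gamma_1$ and $w_1 = f(z_1)$. We scale the step size in $\mathcal{Z}$ by $1/\sigma_k^{z_0}$ to ensure each piece of curve has a similar length of $I/N$. To preserve the variation in semantics during the traversal, the departure direction of $\gamma_{n+1}$ is determined by comparing the similarity between the previous departure direction $\dot{\gamma}_n(0)$ and Local Basis at $w_n$. The above process is repeated $N$-times. (The algorithm for Iterative Curve-Traversal can be found in the appendix.)

$$\dot{\gamma}_{n+1}(0) = v_j^{w_n}, \quad j = \arg\max_{i} \big|\langle v_i^{w_n}, \dot{\gamma}_n(0) \rangle\big| \tag{12}$$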
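The procedure described above can be sketched as a short loop. This is a hedged sketch, not the authors' algorithm from the appendix: `f` and `jacobian` are assumed to be callables supplied by the user, the function name is hypothetical, and the sign handling (flipping the chosen singular vector so that it keeps pointing along the previous direction) is our assumption for dealing with the sign ambiguity of the SVD.

```python
import numpy as np

def iterative_curve_traversal(f, jacobian, z0, k, intensity, n_steps):
    """Trace a curve on W starting from f(z0) along the k-th Local Basis.

    Each of the n_steps pieces moves z by (intensity / n_steps) / sigma_j
    along u_j, so that the piece has length of about intensity / n_steps
    in W. Returns the final z and the visited points in W, stacked.
    """
    z = np.asarray(z0, dtype=float)
    path = [f(z)]
    prev_dir = None
    for _ in range(n_steps):
        V, S, Ut = np.linalg.svd(jacobian(z), full_matrices=False)
        if prev_dir is None:
            j, sign = k, 1.0                     # first piece: the chosen direction
        else:
            sims = V.T @ prev_dir                # cosine similarities (unit vectors)
            j = int(np.argmax(np.abs(sims)))     # most similar Local Basis vector
            sign = 1.0 if sims[j] >= 0 else -1.0 # assumption: keep the orientation
        z = z + sign * (intensity / n_steps) / S[j] * Ut[j]
        prev_dir = sign * V[:, j]
        path.append(f(z))
    return z, np.stack(path)
```

For the negative part of the traversal, the same loop can be run with a negative `intensity`. The step divides by `S[j]`, so directions with near-zero singular values should be excluded in practice.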

3.3 Results of Local Basis traversal

We evaluate Local Basis by observing how the generated image changes as we traverse the $\mathcal{W}$-space in StyleGAN2 and by measuring FID score for each perturbation intensity. The evaluation is based on two criteria: Robustness and Semantic Factorization.

Robustness

Fig 3 and 4 present the Robustness Test results (see Footnote 2). In Fig 3, the traversal image of Local Basis is compared with those of the global methods (GANSpace (Härkönen et al., 2020) and SeFa (Shen & Zhou, 2021)) under the strong perturbation intensity of 12 along the 1st and 2nd direction of each method. The perturbation intensity is defined as the traversal path length in $\mathcal{W}$. The two global methods show severe degradation of the image compared to Local Basis. Moreover, we perform a quantitative assessment of robustness. We measure the FID score for 10,000 traversed images for each perturbation intensity. In Fig 4, the global methods show a relatively small FID under the small perturbation. However, as we impose the stronger perturbation, the FID scores of the global methods increase sharply, reflecting the image collapse in Fig 3. By contrast, Local Basis achieves much smaller FID scores with and without Iterative Curve-Traversal.

Footnote 2: Ramesh et al. (2018) is not compared because it took hours to get a traversal direction of an image. See appendix for the Qualitative Robustness Test results of Ramesh et al. (2018).

We interpret the degradation of image as due to the deviation of trajectory from $\mathcal{W}$. The theoretical interpretation shows that the linear traversal along Local Basis corresponds to a local coordinate axis on $\mathcal{W}$, at least locally. Therefore, the traversal along Local Basis is guaranteed to stay close to $\mathcal{W}$ even under the longer traversal. However, we cannot expect the same property on the global basis because it is based on the global geometry. Iterative Curve-Traversal shows more stable traversal because of its stronger tracing to the latent manifold. This further supports our interpretation.

Semantic Factorization

Local Basis is discovered in terms of singular vectors of $df_z$. The disentangled correspondence, between Local Basis and the corresponding singular vectors in the prior space, induces a semantic-factorization in Local Basis. Fig 5 and 6 present the semantics of the image discovered by Local Basis. In Fig 5, we compare the semantic factorizations of Local Basis and GANSpace (Härkönen et al., 2020) for the particular semantics discovered by GANSpace. For each interpretable traversal direction of GANSpace provided by the authors, the corresponding Local Basis is chosen as the one with the highest cosine similarity. For a fair comparison, each traversal is applied to the specific subset of layers in the synthesis network (Karras et al., 2020b) provided by the authors of GANSpace with the same perturbation intensity. In particular, as we impose the stronger perturbation (from left to right), GANSpace shows the image collapse in Fig 5(a) and entanglement of semantics (Glasses + Head Raising) in Fig 5(d). However, Local Basis does not show any of those problems. Fig 6 provides additional examples of semantic factorization where the latent traversal is applied to a subset of layers predefined in StyleGAN. The subset of the layers is selected as one of four, i.e. coarse, middle, fine, or all styles. Local Basis shows decent factorization of semantics such as Body Length of car and Age of cat in LSUN (Yu et al., 2015) in Fig 6.
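The matching step used for Fig 5, picking the Local Basis vector most similar to a given global direction, can be sketched as follows. The function name and the sign-agnostic matching (maximizing the absolute cosine similarity and then flipping the vector to agree with the global direction) are assumptions made for illustration, motivated by the sign ambiguity of singular vectors.

```python
import numpy as np

def match_local_basis(local_basis, global_dir):
    """Pick the Local Basis vector closest to a global direction.

    local_basis: array of shape (d, n) whose columns are Local Basis vectors.
    global_dir:  array of shape (d,), e.g. a GANSpace direction.
    Returns (index, oriented vector, signed cosine similarity).
    """
    g = global_dir / np.linalg.norm(global_dir)
    cos = (local_basis.T @ g) / np.linalg.norm(local_basis, axis=0)
    idx = int(np.argmax(np.abs(cos)))          # assumption: ignore the sign
    sign = 1.0 if cos[idx] >= 0 else -1.0
    return idx, sign * local_basis[:, idx], float(cos[idx])
```

A low maximal similarity signals that the global direction is poorly represented by any single Local Basis vector at that point.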

3.4 Exploration inside Abstract Semantics

Abstract semantics of image often consists of several lower-level semantics. For instance, Old can be represented as the correlated distribution of Hair color, Wrinkle, Face length, etc. In this section, we show that the adaptation of Iterative Curve-Traversal can explore the variation of abstract semantics, which is represented by the cone-shaped region of the generative factors (Träuble et al., 2021).

Because of its text-driven nature, we utilize the global basis $v_{global}$ (see Footnote 3) from StyleCLIP (Patashnik et al., 2021) corresponding to the abstract semantics of Old. Then, we consider the modification of Iterative Curve-Traversal following the given global basis $v_{global}$. To be more specific, the departure direction of each piece of curve in Eq 12 is chosen by the similarity to $v_{global}$, not by the similarity to the previous departure direction. The results for Old are provided in Fig 7. (See the appendix for other examples.) Step size denotes the length of each piece of curve, i.e. $I/N$ in Sec 3.2. For a fair comparison, the overall perturbation intensity $I$ is held fixed by adjusting the number of steps $N$. The linear traversal along the global basis adds only wrinkles to the image and the image collapses shortly. On the contrary, both Iterative Curve-Traversal methods obtain diverse and high-fidelity image manipulation for the target semantics Old. In particular, the diversity is greatly increased as we add stochasticity to the step size. We interpret this diversity as a result of the increased exploration area from the stochastic step size while exploiting the high fidelity of Iterative Curve-Traversal.

Footnote 3: We use the global basis defined on $\mathcal{W}^+$ (Tov et al., 2021). See the appendix for detail.

4 Evaluating warpage of $\mathcal{W}$-Manifold

In this section, we provide an explanation for the limited success of the global basis in the $\mathcal{W}$-space of StyleGAN2. In Sec 3, we showed that Local Basis corresponds to the generative factors of data. Hence, the linear subspace spanned by Local Basis, which is the tangent space $T_w\mathcal{W}_z^k$ in Eq 5, describes the local principal variation of image. In this regard, we assess the global disentanglement property by evaluating the consistency of the tangent space at each $w \in \mathcal{W}$. We refer to the inconsistency of the tangent space as the warpage of the latent manifold. Our evaluation proves that the $\mathcal{W}$-manifold is warped globally. In this section, we present the quantitative evaluation of the global warpage by introducing the Grassmannian Metric. The qualitative evaluation by observing the subspace traversal is provided in the appendix. The subspace traversal denotes a simultaneous traversal in multiple directions.

Grassmannian Manifold

Let $V$ be the vector space. The Grassmannian manifold $\mathrm{Gr}(k, V)$ (Boothby, 1986) is defined as the set of all $k$-dimensional linear subspaces of $V$. We evaluate the global warpage of the $\mathcal{W}$-manifold by measuring the Grassmannian distance between the linear subspaces spanned by top-$k$ Local Basis of each $w \in \mathcal{W}$. The reason for measuring the distance for top-$k$ Local Basis is the manifold hypothesis. The linear subspace spanned by top-$k$ Local Basis corresponds to the tangent space of the $k$-dimensional approximation of $\mathcal{W}$ (Eq 5). From this perspective, a large Grassmannian distance means that the $k$-dimensional local approximation of $\mathcal{W}$ severely changes. Likewise, we consider the subspace spanned by the top-$k$ components for the global basis. In this study, two types of metrics (i.e. Projection metric and Geodesic metric) are adopted as metrics of the Grassmannian manifold.

Grassmannian Metric

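First, for two subspaces $W, W' \in \mathrm{Gr}(k, V)$, let the projection into each subspace be $P_W$ and $P_{W'}$, respectively. Then the Projection Metric (Karrasch, 2017) on $\mathrm{Gr}(k, V)$ is defined as follows.

$$d_{proj}(W, W') = \| P_W - P_{W'} \| \tag{13}$$

where $\| \cdot \|$ denotes the operator norm.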

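Second, let $M_W, M_{W'}$ be the column-wise orthonormal matrices of which columns span $W, W' \in \mathrm{Gr}(k, V)$, respectively. Then, the Geodesic Metric (Ye & Lim, 2016) on $\mathrm{Gr}(k, V)$, which is induced by canonical Riemannian structure, is formulated as follows.

$$d_{geo}(W, W') = \Big( \sum_{i=1}^{k} \theta_i^2 \Big)^{1/2} \tag{14}$$

where $\theta_i = \cos^{-1}\big(\sigma_i(M_W^\top M_{W'})\big)$ denotes the $i$-th principal angle between $W$ and $W'$.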
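Both metrics are straightforward to compute from orthonormal bases. The following is a minimal sketch with hypothetical helper names: subspaces are represented by matrices with orthonormal columns, projections are formed as $M M^\top$, and principal angles come from the singular values of $M_W^\top M_{W'}$.

```python
import numpy as np

def orthonormalize(B):
    """Orthonormal basis (columns) of the column space of B, via QR."""
    Q, _ = np.linalg.qr(B)
    return Q

def projection_metric(M1, M2):
    """Operator norm of the difference of the two orthogonal projections."""
    P1, P2 = M1 @ M1.T, M2 @ M2.T
    return float(np.linalg.norm(P1 - P2, ord=2))   # ord=2 is the spectral norm

def principal_angles(M1, M2):
    """Principal angles between the column spaces of M1 and M2."""
    s = np.linalg.svd(M1.T @ M2, compute_uv=False)
    return np.arccos(np.clip(s, -1.0, 1.0))        # clip guards against round-off

def geodesic_metric(M1, M2):
    """Square root of the sum of squared principal angles."""
    return float(np.sqrt(np.sum(principal_angles(M1, M2) ** 2)))
```

For two lines in the plane separated by an angle $a$, the projection metric equals $\sin a$ and the geodesic metric equals $a$, which makes a convenient sanity check. Both metrics depend only on the subspace, not on the particular basis chosen for it.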

Evaluation

We evaluate the global warpage of the $\mathcal{W}$-manifold by comparing the five distances as we vary the subspace dimension.

1. Random $O(d)$: Between two random bases of $\mathbb{R}^{d_W}$ uniformly sampled from $O(d_W)$

2. Random $w$: Between two Local Basis from two random $w \in \mathcal{W}$

3. Close $w$: Between two Local Basis from two close $w, w' \in \mathcal{W}$ (Eq 15; see appendix for the Grassmannian metric with various degrees of closeness.)

4. To global GANSpace: Between Local Basis and the global basis from GANSpace

5. To global SeFa: Between Local Basis and the global basis from SeFa

Fig 8 shows the above five Grassmannian metrics. We report the metric results from 100 samples for the Random $O(d)$ and 1,000 samples for the others. The Projection metric increases in order of Close $w$, To global GANSpace, Random $w$, To global SeFa, and Random $O(d)$. For the Geodesic metric, the order is reversed for Random $w$ and To global SeFa. Most importantly, the Random $w$ metric is much larger than Close $w$. This shows that there is a large variation of Local Basis on $\mathcal{W}$, which proves that the $\mathcal{W}$-space is globally warped. In addition, the Close $w$ metric is always significantly smaller than the others, which implies the local consistency of Local Basis on $\mathcal{W}$. Finally, the metric results prove the existence of limited global disentanglement on $\mathcal{W}$. Random $w$ is smaller than Random $O(d)$. This order shows that Local Basis on $\mathcal{W}$ is not completely random, which implies the existence of a global alignment. In this regard, both To global results prove that the global basis finds the global alignment to a certain degree. To global GANSpace lies in between Close $w$ and Random $w$. To global SeFa does so on the Geodesic metric and is similar to Random $w$ on the Projection metric. However, the large gap between Close $w$ and both To global results implies that the discovered global alignment is limited.
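To make the comparison protocol concrete, the sketch below reproduces only its shape on synthetic data, not the paper's measurements. The Random $O(d)$ baseline samples orthogonal matrices from the Haar measure via QR with a sign correction. The "close" baseline is a hypothetical stand-in that perturbs a basis with small Gaussian noise; in the paper, the close pair would instead be Local Bases at two nearby latent variables. All function names and parameters are assumptions.

```python
import numpy as np

def haar_orthogonal(d, rng):
    """Sample a d x d orthogonal matrix uniformly (Haar measure)."""
    Q, R = np.linalg.qr(rng.normal(size=(d, d)))
    return Q * np.sign(np.diag(R))      # sign fix makes the distribution uniform

def geodesic_metric(M1, M2):
    s = np.linalg.svd(M1.T @ M2, compute_uv=False)
    theta = np.arccos(np.clip(s, -1.0, 1.0))
    return float(np.sqrt(np.sum(theta ** 2)))

def random_baseline(d, k, n_pairs, seed=0):
    """Mean geodesic distance between top-k columns of random orthogonal bases."""
    rng = np.random.default_rng(seed)
    dists = [geodesic_metric(haar_orthogonal(d, rng)[:, :k],
                             haar_orthogonal(d, rng)[:, :k])
             for _ in range(n_pairs)]
    return float(np.mean(dists))

def close_baseline(d, k, n_pairs, noise=0.01, seed=0):
    """Mean geodesic distance between a subspace and a slightly perturbed copy.

    Hypothetical stand-in for Local Bases at two nearby latent variables.
    """
    rng = np.random.default_rng(seed)
    dists = []
    for _ in range(n_pairs):
        B = haar_orthogonal(d, rng)[:, :k]
        B_near, _ = np.linalg.qr(B + noise * rng.normal(size=(d, k)))
        dists.append(geodesic_metric(B, B_near))
    return float(np.mean(dists))
```

In an actual evaluation, the two bases passed to `geodesic_metric` would be the top-$k$ Local Basis vectors at sampled latent variables, or the top-$k$ components of a global basis.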

5 Conclusion

In this work, we proposed a method for finding a meaningful traversal direction based on the local geometry of the intermediate latent space of GANs, called Local Basis. Motivated by the theoretical explanation of Local Basis, we suggest experiments to evaluate the global geometry of the latent space and an iterative traversal method that can trace the latent space. The experimental results demonstrate that Local Basis factorizes the semantics of images and provides a more stable transformation of images with and without the proposed iterative traversal. Moreover, the suggested evaluation of the $\mathcal{W}$-space in StyleGAN2 proves that the $\mathcal{W}$-space is globally distorted. Therefore, the global methods can find only a limited global consistency in the $\mathcal{W}$-space.

Acknowledgement

This work was supported by the NRF grant [2021R1A2C3010887], the ICT R&D program of MSIT/IITP [2021-0-00077] and MOTIE [P0014715].

Ethics Statement

The limitations and the potential negative societal impacts of our work are that Local Basis would reflect the bias of data. The GANs learn the probability distribution of data through samples from it. Thus, unlike the likelihood-based method such as Variational Autoencoder (Kingma & Welling, 2014) and Flow-based models (Kingma & Dhariwal, 2018), the GANs are more likely to amplify the dependence between the semantics of data, even the bias of it. Because Local Basis finds a meaningful traversal direction based on the local-geometry of latent space, Local Basis would show the bias of data as it is. Moreover, if Local Basis is applied to real-world problems like editing images, Local Basis may amplify the bias of society. However, in order to fix a problem, we have to find a method to analyze it. In this respect, Local Basis can serve as a tool to analyze the bias.

Reproducibility Statement

To ensure the reproducibility of this study, we attached the entire source code in the supplementary material. Every figure can be reproduced by running the jupyter notebooks in notebooks/*. In addition, the proof of Proposition 1 is included in the appendix.

References

- Abdal et al. (2019) Rameen Abdal, Yipeng Qin, and Peter Wonka. Image2stylegan: How to embed images into the stylegan latent space? In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 4432–4441, 2019.

- Abdal et al. (2021) Rameen Abdal, Peihao Zhu, Niloy J Mitra, and Peter Wonka. Styleflow: Attribute-conditioned exploration of stylegan-generated images using conditional continuous normalizing flows. ACM Transactions on Graphics (TOG), 40(3):1–21, 2021.

- Bengio et al. (2013) Yoshua Bengio, Aaron Courville, and Pascal Vincent. Representation learning: A review and new perspectives. IEEE transactions on pattern analysis and machine intelligence, 35(8):1798–1828, 2013.

- Boothby (1986) William M Boothby. An introduction to differentiable manifolds and Riemannian geometry. Academic press, 1986.

- Brock et al. (2018) Andrew Brock, Jeff Donahue, and Karen Simonyan. Large scale gan training for high fidelity natural image synthesis. In International Conference on Learning Representations, 2018.

- Chiu et al. (2020) Chia-Hsing Chiu, Yuki Koyama, Yu-Chi Lai, Takeo Igarashi, and Yonghao Yue. Human-in-the-loop differential subspace search in high-dimensional latent space. ACM Transactions on Graphics (TOG), 39(4):85–1, 2020.

- Goetschalckx et al. (2019) Lore Goetschalckx, Alex Andonian, Aude Oliva, and Phillip Isola. Ganalyze: Toward visual definitions of cognitive image properties. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 5744–5753, 2019.

- Goodfellow et al. (2014) Ian J Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron C Courville, and Yoshua Bengio. Generative adversarial nets. In NIPS, 2014.

- Härkönen et al. (2020) Erik Härkönen, Aaron Hertzmann, Jaakko Lehtinen, and Sylvain Paris. Ganspace: Discovering interpretable gan controls. Advances in Neural Information Processing Systems, 33, 2020.

- Heusel et al. (2017) Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. Gans trained by a two time-scale update rule converge to a local nash equilibrium. Advances in neural information processing systems, 30, 2017.

- Jahanian et al. (2019) Ali Jahanian, Lucy Chai, and Phillip Isola. On the "steerability" of generative adversarial networks. In International Conference on Learning Representations, 2019.

- Karras et al. (2018) Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. Progressive growing of gans for improved quality, stability, and variation. In International Conference on Learning Representations, 2018.

- Karras et al. (2019) Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4401–4410, 2019.

- Karras et al. (2020a) Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, and Timo Aila. Training generative adversarial networks with limited data. arXiv preprint arXiv:2006.06676, 2020a.

- Karras et al. (2020b) Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8110–8119, 2020b.

- Karrasch (2017) Daniel Karrasch. An introduction to grassmann manifolds and their matrix representation. 2017.

- Kingma & Dhariwal (2018) Diederik P Kingma and Prafulla Dhariwal. Glow: generative flow with invertible 1×1 convolutions. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, pp. 10236–10245, 2018.

- Kingma & Welling (2014) Diederik P Kingma and Max Welling. Auto-encoding variational bayes. In Proceedings of the International Conference on Learning Representations (ICLR), 2014.

- Patashnik et al. (2021) Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. Styleclip: Text-driven manipulation of stylegan imagery. arXiv preprint arXiv:2103.17249, 2021.

- Pfau et al. (2020) David Pfau, Irina Higgins, Aleksandar Botev, and Sébastien Racanière. Disentangling by subspace diffusion. arXiv preprint arXiv:2006.12982, 2020.

- Plumerault et al. (2020) Antoine Plumerault, Hervé Le Borgne, and Céline Hudelot. Controlling generative models with continuous factors of variations. In International Conference on Learning Representations, 2020. URL https://openreview.net/forum?id=H1laeJrKDB.

- Radford et al. (2016) Alec Radford, Luke Metz, and Soumith Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks. In Proceedings of the International Conference on Learning Representations (ICLR), 2016.

- Radford et al. (2021) Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. Image, 2:T2, 2021.

- Ramesh et al. (2018) Aditya Ramesh, Youngduck Choi, and Yann LeCun. A spectral regularizer for unsupervised disentanglement. arXiv preprint arXiv:1812.01161, 2018.

- Shen & Zhou (2021) Yujun Shen and Bolei Zhou. Closed-form factorization of latent semantics in gans. In CVPR, 2021.

- Shen et al. (2020) Yujun Shen, Jinjin Gu, Xiaoou Tang, and Bolei Zhou. Interpreting the latent space of gans for semantic face editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9243–9252, 2020.

- Tov et al. (2021) Omer Tov, Yuval Alaluf, Yotam Nitzan, Or Patashnik, and Daniel Cohen-Or. Designing an encoder for stylegan image manipulation. ACM Transactions on Graphics (TOG), 40(4):1–14, 2021.

- Träuble et al. (2021) Frederik Träuble, Elliot Creager, Niki Kilbertus, Francesco Locatello, Andrea Dittadi, Anirudh Goyal, Bernhard Schölkopf, and Stefan Bauer. On disentangled representations learned from correlated data. In International Conference on Machine Learning, pp. 10401–10412. PMLR, 2021.

- Upchurch et al. (2017) Paul Upchurch, Jacob Gardner, Geoff Pleiss, Robert Pless, Noah Snavely, Kavita Bala, and Kilian Weinberger. Deep feature interpolation for image content changes. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 7064–7073, 2017.

- Voynov & Babenko (2020) Andrey Voynov and Artem Babenko. Unsupervised discovery of interpretable directions in the gan latent space. In International Conference on Machine Learning, pp. 9786–9796. PMLR, 2020.

- Wang & Ponce (2021) Binxu Wang and Carlos R Ponce. The geometry of deep generative image models and its applications. arXiv preprint arXiv:2101.06006, 2021.

- Yang et al. (2021) Ceyuan Yang, Yujun Shen, and Bolei Zhou. Semantic hierarchy emerges in deep generative representations for scene synthesis. International Journal of Computer Vision, pp. 1–16, 2021.

- Ye & Lim (2016) Ke Ye and Lek-Heng Lim. Schubert varieties and distances between subspaces of different dimensions. SIAM Journal on Matrix Analysis and Applications, 37(3):1176–1197, 2016.

- Yu et al. (2015) Fisher Yu, Yinda Zhang, Shuran Song, Ari Seff, and Jianxiong Xiao. Lsun: Construction of a large-scale image dataset using deep learning with humans in the loop. arXiv preprint arXiv:1506.03365, 2015.

- Zhu et al. (2021) Jiapeng Zhu, Ruili Feng, Yujun Shen, Deli Zhao, Zhengjun Zha, Jingren Zhou, and Qifeng Chen. Low-rank subspaces in gans. arXiv preprint arXiv:2106.04488, 2021.

Appendix A Proposition proof

Denote by . Then, from ,

| (16) |

The first principal component is the vector such that has the maximum variance.

| (17) |

Therefore,

| (18) |

Clearly, corresponds to the first right singular vector of , i.e. the first left singular vector of , from the linear operator norm maximizing property of singular vectors. Inductively, -th principal component is the vector such that

| (19) |

Thus, becomes the -th left singular vector of . Therefore, the principal components from the Local PCA problem are equivalent to Local Basis at .
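The equivalence can also be checked numerically. The sketch below is not the authors' code: it assumes a random matrix `A` as a hypothetical stand-in for the Jacobian whose left singular vectors the proof refers to, and standard normal perturbations `eps` as in the Local PCA setting. Since the covariance of `A @ eps` is `A @ A.T`, its eigenvectors should match the left singular vectors of `A`, and its eigenvalues the squared singular values.

```python
import numpy as np


def pca_components(samples):
    """Return principal components (as columns) and variances, sorted by decreasing variance."""
    centered = samples - samples.mean(axis=0)
    cov = centered.T @ centered / (len(samples) - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    return eigvecs[:, order], eigvals[order]


rng = np.random.default_rng(0)

# Hypothetical stand-in for the Jacobian, built with well-separated singular values
# so that each singular vector is uniquely defined up to sign.
U, _ = np.linalg.qr(rng.standard_normal((4, 4)))
V, _ = np.linalg.qr(rng.standard_normal((6, 6)))
S = np.array([4.0, 2.0, 1.0, 0.5])
A = U @ np.diag(S) @ V[:, :4].T  # shape (4, 6)

# Linearized local samples: each row is A @ eps_i with eps_i ~ N(0, I).
eps = rng.standard_normal((200_000, 6))
samples = eps @ A.T

pcs, variances = pca_components(samples)
left_sv, sing_vals, _ = np.linalg.svd(A, full_matrices=False)

# Absolute cosine between the k-th principal component and the k-th left singular vector.
alignment = np.abs(np.sum(pcs * left_sv, axis=0))
print(alignment)
print(variances, sing_vals ** 2)
```

The absolute value in `alignment` accounts for the sign ambiguity of both eigenvectors and singular vectors.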

Appendix B Algorithm

Appendix C Model and Computation Resource Details

Model

Computation Resource

We generated the latent traversal results on a machine with a TITAN RTX GPU and an Intel(R) Xeon(R) Gold 5220 CPU @ 2.20GHz. Computing a Local Basis itself, however, has a low computational cost. For example, on a machine with a GTX 1660 GPU and a Ryzen 5 2600 CPU, computing a Local Basis takes about 0.05 seconds.

Appendix D Code License

The files models/wrappers.py, notebooks/ganspace_utils.py, and notebooks/notebook_utils.py are derived from GANSpace and are provided under the Apache 2.0 license. The directory netdissect is derived from the GAN Dissection project and is provided under the MIT license. The directories models/biggan and models/stylegan2 are provided under the MIT license.

Appendix E Distribution of Singular Values of Jacobian

Appendix F Grassmannian Metric

Appendix G More Latent Traversal Examples

Appendix H Implementation Details for Sec 3.4

In Sec 3.4, we utilize the global basis from StyleCLIP (Patashnik et al., 2021) defined on $\mathcal{W}^{+}$, the layer-wise extension of $\mathcal{W}$ introduced in (Abdal et al., 2019; Patashnik et al., 2021). More specifically, since the synthesis network in StyleGAN has 18 layers, we obtain an extended latent code by concatenating 18 latent codes of dimension 512, one for each layer, which can be described as follows:

| (20) |

Note that our Iterative Curve-Traversal, originally defined on $\mathcal{W}$, has a canonical extension to $\mathcal{W}^{+}$ without additional changes in structure or methodology.
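As a rough illustration of the shapes involved, the sketch below builds an extended code from a single 512-dimensional code and flattens it into one concatenated vector. The function names are hypothetical, and initializing every layer with the same code is a common convention assumed here, not something stated in the text.

```python
import numpy as np

NUM_LAYERS = 18   # number of synthesis layers, as stated in the text
LATENT_DIM = 512  # dimension of each per-layer latent code


def extend_to_w_plus(w):
    """Stack one copy of a single latent code per layer, giving shape (18, 512)."""
    w = np.asarray(w, dtype=float)
    if w.shape != (LATENT_DIM,):
        raise ValueError(f"expected shape ({LATENT_DIM},), got {w.shape}")
    return np.tile(w, (NUM_LAYERS, 1))


def flatten_w_plus(w_plus):
    """View an extended code as one concatenated vector of length 18 * 512 = 9216."""
    return np.asarray(w_plus).reshape(-1)


rng = np.random.default_rng(0)
w = rng.standard_normal(LATENT_DIM)
w_plus = extend_to_w_plus(w)
w_plus_flat = flatten_w_plus(w_plus)
print(w_plus.shape, w_plus_flat.shape)
```

Under this reading, any procedure that operates on a latent vector can be applied unchanged to the flattened extended code, which is one way to interpret the canonical extension mentioned above.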

To implement the stochastic Iterative Curve-Traversal introduced in Sec 3.4, we first find a global basis on $\mathcal{W}^{+}$ using StyleCLIP (Patashnik et al., 2021) that corresponds to a semantic attribute given in the form of text (e.g., 'old'). This global basis can be represented as follows:

| (21) |

Then we perform the (extended) Iterative Curve-Traversal following the global basis, with a stochastic movement at each step. In practice, we consider two options for choosing the traversal direction at each step. First, follow the direction most similar to the previously selected basis (as in Algorithm 2), except at the first iteration. In this case, the similarity between the local basis and the global basis is computed only once, when choosing the first traversal direction. Second, follow the direction most similar to the given global basis at every step. This differs slightly from Algorithm 2; however, we empirically verify that setting the exploration in this way leads to a more desirable image change.
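The two selection rules can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, similarity is assumed to be absolute cosine similarity, the selected vector is sign-flipped to agree with the reference (basis vectors from an SVD are only defined up to sign), and the stochastic movement is omitted.

```python
import numpy as np


def most_similar_index(basis, reference):
    """Return (index, sign) of the basis column with the largest |cosine| to `reference`."""
    basis = basis / np.linalg.norm(basis, axis=0, keepdims=True)
    reference = reference / np.linalg.norm(reference)
    cos = basis.T @ reference
    idx = int(np.argmax(np.abs(cos)))
    sign = 1.0 if cos[idx] >= 0 else -1.0
    return idx, sign


def choose_direction(local_basis, global_direction, previous_direction=None, option="global"):
    """Pick a traversal direction from the columns of `local_basis`.

    option="previous": compare with the previously selected direction; the global
                       direction is used only when no previous direction exists.
    option="global":   compare with the global direction at every step.
    """
    if option == "previous" and previous_direction is not None:
        reference = previous_direction
    else:
        reference = global_direction
    idx, sign = most_similar_index(local_basis, reference)
    return sign * local_basis[:, idx]


# Toy example with a 3-dimensional latent space and an orthonormal local basis.
local_basis = np.eye(3)
global_direction = np.array([0.1, -0.9, 0.2])
first_step = choose_direction(local_basis, global_direction, option="previous")
later_previous = choose_direction(
    local_basis, global_direction, previous_direction=np.array([1.0, 0.1, 0.0]), option="previous"
)
later_global = choose_direction(
    local_basis, global_direction, previous_direction=np.array([1.0, 0.1, 0.0]), option="global"
)
```

In the toy example, the first option can drift away from the global direction after the first step, while the second keeps re-anchoring to it at every step.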

Fig 14 shows that the first method still preserves the image quality well, but it does not guarantee the desired direction of image change, namely 'old'. We speculate that this occurs because most of the information contained in the meaningful global basis is lost after the first step (the only direct comparison with the global basis), although our methodology guarantees that the latent code does not escape from the manifold and thus achieves a high image quality. Nevertheless, Fig 15 shows that the second method for the stochastic Iterative Curve-Traversal can change a given facial image in various ways while maintaining very high quality.

Appendix I Subspace Traversal

In Section 4, we showed that the $\mathcal{W}$-space in StyleGAN2 is warped globally. Specifically, the subspace of traversal directions generating the principal variation in the image changes severely as we vary the starting latent variable. To verify the claim further, we visualize the subspace traversal on the latent space $\mathcal{W}$. The subspace traversal denotes a simultaneous traversal in multiple directions. In this paper, we visualize the two-dimensional traversal,

| (22) |

where and denotes a subnetwork of the given GAN model from to the image space . Since disentanglement into linear subspaces implies the commutativity of the transformations (Pfau et al., 2020), the subspace traversal is a more challenging version of the linear traversal experiments.
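A two-dimensional traversal of this kind can be sketched as a grid of latent codes around a base point. The names `w`, `v1`, `v2`, `step`, and `n_steps` are hypothetical, and the sketch assumes the same step size in both directions; each grid entry would then be passed through the generator subnetwork to obtain an image.

```python
import numpy as np


def subspace_traversal_grid(w, v1, v2, step, n_steps):
    """Return latent codes w + a * v1 + b * v2 on a (2n+1) x (2n+1) grid centered at w.

    grid[i, j] moves offsets[j] along v1 (horizontal) and offsets[i] along v2 (vertical).
    """
    offsets = step * np.arange(-n_steps, n_steps + 1)
    grid = (
        w[None, None, :]
        + offsets[None, :, None] * v1[None, None, :]
        + offsets[:, None, None] * v2[None, None, :]
    )
    return grid


# Toy example in a 4-dimensional latent space.
w = np.zeros(4)
v1 = np.array([1.0, 0.0, 0.0, 0.0])
v2 = np.array([0.0, 1.0, 0.0, 0.0])
grid = subspace_traversal_grid(w, v1, v2, step=0.5, n_steps=2)
print(grid.shape)
```

The center row and center column of the grid are the two one-dimensional linear traversals, and the corners combine the largest perturbations in both directions.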

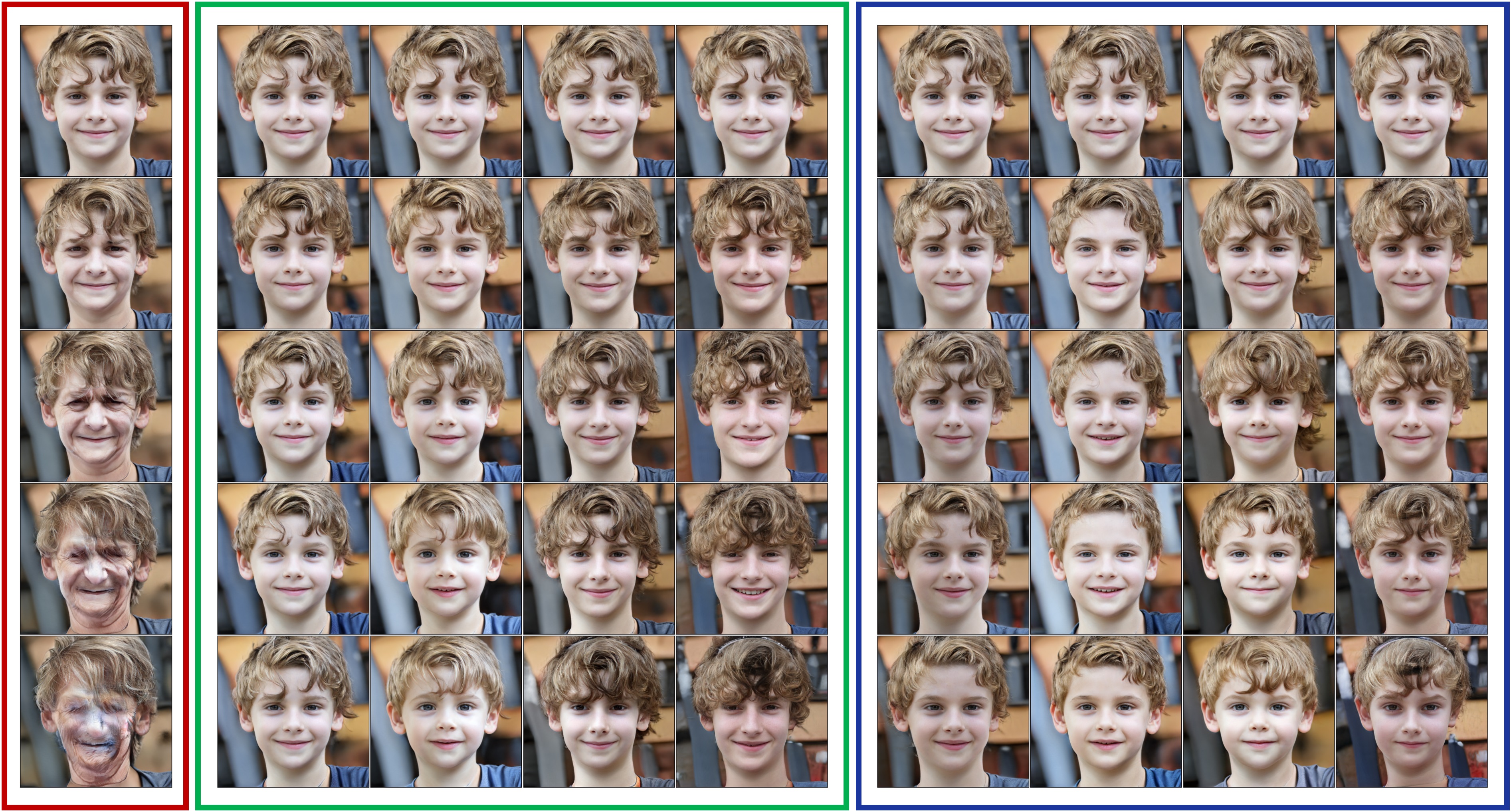

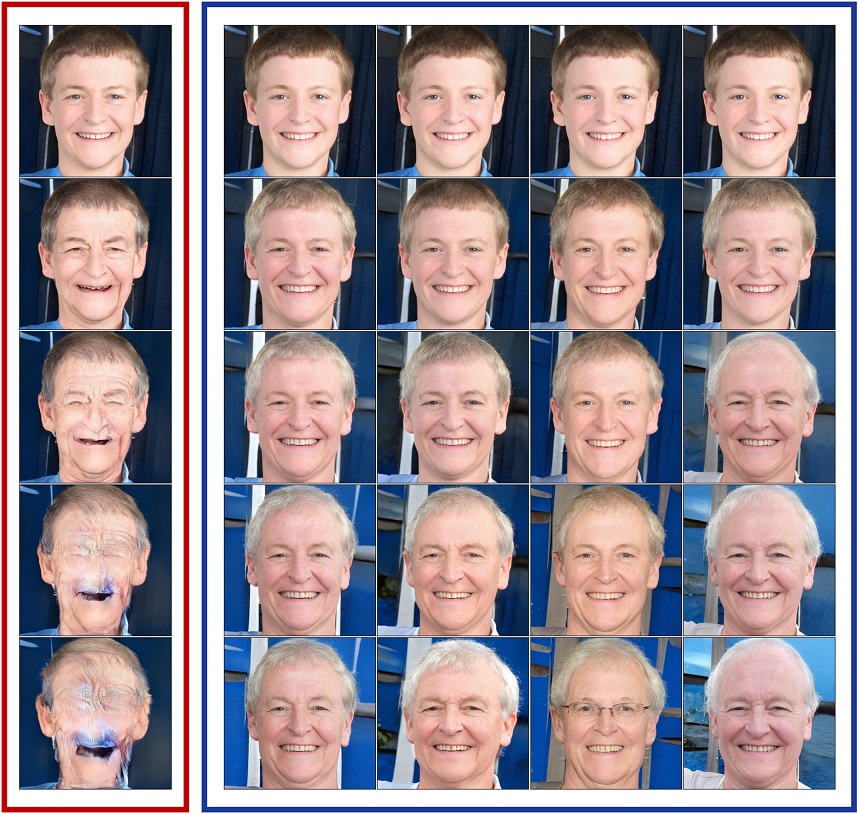

Fig 16 and Fig 17 show the results of the subspace traversal for the global basis and Local Basis. Starting from the center, the horizontal and vertical traversals correspond to the 1st and 2nd directions of each method. The same perturbation intensity per step is applied in both directions. When restricted to the linear traversal (red and green boxes), GANSpace shows relatively stable traversals. However, the traversal image deteriorates at the corners of the subspace traversal. By contrast, Local Basis shows a stable variation throughout the entire subspace traversal. This result shows that the global basis is not well-aligned with the local geometry of the manifold.

Appendix J Local Basis on Other models