DNN2LR: Automatic Feature Crossing for Credit Scoring

Abstract.

Credit scoring is a major application of machine learning for financial institutions to decide whether to approve or reject a credit loan. For sake of reliability, it is necessary for credit scoring models to be both accurate and globally interpretable. Simple classifiers, e.g., Logistic Regression (LR), are white-box models, but not powerful enough to model complex nonlinear interactions among features. Fortunately, automatic feature crossing is a promising way to find cross features to make simple classifiers to be more accurate without heavy handcrafted feature engineering. However, credit scoring is usually based on different aspects of users, and the data usually contains hundreds of feature fields. This makes existing automatic feature crossing methods not efficient for credit scoring. In this work, we find local piece-wise interpretations in Deep Neural Networks (DNNs) of a specific feature are usually inconsistent in different samples, which is caused by feature interactions in the hidden layers. Accordingly, we can design an automatic feature crossing method to find feature interactions in DNN, and use them as cross features in LR. We give definition of the interpretation inconsistency in DNN, based on which a novel feature crossing method for credit scoring prediction called DNN2LR is proposed. Apparently, the final model, i.e., a LR model empowered with cross features, generated by DNN2LR is a white-box model. Extensive experiments have been conducted on both public and business datasets from real-world credit scoring applications. Experimental shows that, DNN2LR can outperform the DNN model, as well as several feature crossing methods. Moreover, comparing with the state-of-the-art feature crossing methods, i.e., AutoCross, DNN2LR can accelerate the speed for feature crossing by about to times on datasets with large numbers of feature fields.

1. Introduction

Financial institutions, e.g., banks and online lending companies, evaluate the credit of users based on their profiles, loan history, repayment history and other behavior records. They aim to predict the default probability of each user, so that they can decide whether to approve or reject a credit loan. This is an important task due to the huge amount of loan applications (Babaev et al., 2019; Hu et al., 2020; West, 2000; Bastos, 2007). For reliability, besides the accurate prediction of default, interpretability, which is usually a regulatory requirement, is also necessary (Babaev et al., 2019).

Some white-box models, e.g., Logistic Regression (LR), are simple and globally interpretable (Hall, 2019). However, LR usually has relatively poor performances, and it is hard for LR to model complex nonlinear interactions among features. To improve the performances of LR, heavy handcrafted feature engineering is usually required. Meanwhile, black-box models such as Deep Neural Networks (DNNs) (Zhang et al., 2016; West, 2000) and tree ensemble models (Chen and Guestrin, 2016; Ke et al., 2017; Bastos, 2007) are able to take use of complex nonlinear feature interactions. Recently, these complex models show the possibility of being interpreted locally, which means assigning a piece of local interpretation weights for each sample (Li et al., 2015; Ribeiro et al., 2016; Chu et al., 2018; Lundberg et al., 2018; Mase et al., 2019; Aas et al., 2019; Guan et al., 2019). However, as pointed in (Guidotti et al., 2018; Hall, 2019), different from LR, DNNs and tree ensemble models are still not globally interpretable, or can only be globally interpreted with an approximate linear importance vector. In (Guidotti et al., 2018), the global interpretability is formally defined as, we are able to understand the whole logic of a model and follow the entire reasoning leading to all the different possible outcomes. With only local interpretability for each sample, the credit scoring models can only assist the manual evaluation of loans, or analyze reasons after approval or rejection. On the other hand, with global interpretability of credit scoring models, we can perform credit evaluation automatically, and understand the reasons of decisions in advance. In a word, directly applying conventional classifiers is not optimal for credit scoring.

Fortunately, automatic feature crossing is a promising way to capture nonlinear interactions among features (Chapelle et al., 2015; Cheng et al., 2016; Luo et al., 2019). As a practical direction in automated machine learning (Yao et al., 2018), it calculates second- or higher-order cross-product of categorical features. For example, the cross feature representing a female teacher can be denoted as (“gender=female” “occupation=teacher”). Meanwhile, feature discretization has been proven useful to improve the capability of numerical features (Liu et al., 2002; Chapelle et al., 2015; Liu et al., 2020a). Thus, we usually conduct feature discretization on numerical feature fields to generate corresponding categorical feature fields for automatic feature crossing (Luo et al., 2019). An example of second-order feature crossing can be found in Fig. 1, in which we can obtain the cross feature field (income, debt) to measure a user’s repayment ability. As pointed in (Luo et al., 2019), cross features, instead of latent embeddings or latent representations in DNN, are highly interpretable. Via automatic generation of cross features, we can make the simple and globally interpretable LR model more accurate without heavy handcrafted feature engineering. According to the analysis on Generalized Additive Model (GAM) (Lou et al., 2012) and GA2M (Lou et al., 2013), the LR model empowered with cross features is a GAM with both second- and higher-order feature interactions, which is fortunately a white-box model.

Previous works on automatic feature crossing mostly try to explicitly search in the set of possible cross feature fields (Lou et al., 2013; Chapelle et al., 2015; Katz et al., 2016; Khurana et al., 2018; Liu et al., 2020b), including the state-of-the-art method AutoCross (Luo et al., 2019). In the application of credit scoring, we usually need to evaluate the user credit from different aspects. This results in hundreds of feature fields in the data for credit scoring. For example, there are to feature fields in several typical public credit scoring datasets, as well as in our real applications in credit scoring111Details are illustrated in Sec. 5.1. Thus, the candidate set of possible cross feature fields is inevitably large, which leads to the low efficiency of existing searching methods. Suppose we have feature fields, there will be , and second-, third- and forth-order cross feature fields respectively in the candidate set. Meanwhile, for the task of Click-Through-Rate (CTR) prediction, various feature crossing modules are proposed based on deep learning (Qu et al., 2016; Cheng et al., 2016; Wang et al., 2017; Guo et al., 2017; Lian et al., 2018; Song et al., 2018). Unfortunately, inherit from deep learning technologies, these deep learning-based methods are not globally interpretable, and hard to explicitly generate all the cross features captured in the models.

As mentioned above, DNN can be locally interpreted, via gradient backpropagation (Li et al., 2015; Selvaraju et al., 2017) or feature perturbation (Fong and Vedaldi, 2017; Guan et al., 2019). These methods assign a piece of local interpretation for each sample, and interpret DNN as a combination of numbers of linear classifiers (Montufar et al., 2014; Ribeiro et al., 2016; Chu et al., 2018). In this work, we observe that, local interpretations of a specific feature are usually inconsistent in different samples. We show and prove that, such inconsistency is caused by feature interactions occurred in the hidden layers of DNN. Then, we give definition of the interpretation inconsistency in DNN, so that we can take advantage of the strong expressive ability of DNN to find cross features. This can extremely reduce the size of candidate set, and improve the efficiency for finding cross features on credit scoring datasets with large numbers of feature fields.

Accordingly, we propose a novel DNN2LR method for automatic feature crossing for credit scoring. Specifically, we first calculate local interpretations in DNN via the gradient backpropagation from the prediction layer to the input features (Selvaraju et al., 2017; Alvarez-Melis and Jaakkola, 2018; Li et al., 2015; Smilkov et al., 2017; Yuan et al., 2019). Then, we define and calculate the interpretation inconsistency of each feature in each sample, based which most frequently occurring cross feature fields are extracted as candidate set. Finally, we train a LR model with both original feature fields and candidate feature fields, and select final useful cross feature fields according to their contribution on the validation set. With DNN2LR, we can obtain a LR model empowered with the final set of cross feature fields, which is a white-box model with high accuracy and global interpretability simultaneously. We conduct experiments on both public and business datasets from real applications in credit scoring, with to original feature fields. Experiments demonstrates that, our proposed DNN2LR method can outperform several conventional classifiers and several automatic feature crossing methods. Comparing with the state-of-the-art feature crossing method, i.e., AutoCross (Luo et al., 2019), DNN2LR reduces the running time for feature crossing by about to . In a word, DNN2LR helps us constructing accurate white-box models for the task of credit scoring in an efficient way. The main contributions of this paper are summarized as follows:

-

•

We give definition of the interpretation inconsistency in DNN. Then, we show that the interpretation inconsistency is caused by feature interactions in DNN, and can help us to find cross features.

-

•

we propose a novel DNN2LR method for automatic feature crossing for credit scoring. DNN2LR can generate an accurate and compact candidate set of cross feature fields, so that the searching can be efficiently done on credit scoring datasets with hundreds of original feature fields.

-

•

Experimental results on several real-world credit scoring datasets show that, DNN2LR outperforms existing methods in both effectiveness and efficiency. DNN2LR can produce accurate white-box models for the task of credit scoring in an efficient way.

2. Related Works

In this section, we review some work related to the task of automatic feature crossing for credit scoring. First, we review some methods that explicitly search for cross features. Then, we review some deep learning-based methods for CTR prediction.

2.1. Cross Feature Searching Methods

It is a direct way to explicitly search for useful cross feature fields in a candidate set. However, the candidate set for searching is usually inevitably large, which leads to low efficiency of learning feature crossing, especially on credit scoring datasets with hundreds of input feature fields. Most of these works focus on generating second-order cross features (Lou et al., 2013; Chapelle et al., 2015; Katz et al., 2016; Nargesian et al., 2017; Kaul et al., 2017). GA2M (Lou et al., 2013) extends GAM (Lou et al., 2012) with second-order cross features, which is hard to optimize when the candidate set is large. In (Chapelle et al., 2015), the authors try to generate and select second-order cross feature fields according to Conditional Mutual Information (CMI). However, once the mutual information of an original feature field is high, the generated cross feature fields containing it will also have high conditional mutual information. AutoLearn (Kaul et al., 2017) selects cross feature fields by using regularized regression models, where it is hard to learn a regression model for all the cross feature fields on a wide dataset. Some works (Katz et al., 2016; Nargesian et al., 2017) takes meta-learning into consideration for feature generation. However, the effectiveness of meta-learning in these methods remains a question and requires extremely large amount of data. There are also some methods incorporating genetic algorithm (Tran et al., 2016) and reinforcement learning (Khurana et al., 2018; Chen et al., 2019) for finding feature combinations. However, with genetic algorithm or reinforcement learning, we still have a large space to explore. Moreover, as introduced in (Khurana et al., 2018), it also requires large amount of data for the training of reinforcement learning. To tackle with above problems, AutoCross (Luo et al., 2019) presents an approximate framework to search in the large candidate set of both second- and higher-order cross feature fields more efficiently. AutoCross uniformly divides the whole dataset into at least batches, where is the size of candidate set in AutoCross. Then AutoCross iteratively trains a field-wise LR model on part of the data to validate the contribution of a cross feature field. Though AutoCross achieves state-of-the-art performances, it still faces low searching efficiency on some wide datasets. Moreover, when the candidate set is large, data for training the field-wise LR model of each candidate cross feature field will be too little to produce reliable results. Therefore, AutoCross may face problems in both effectiveness and efficiency on credit scoring datasets with hundreds of feature fields.

Besides above methods explicitly searching for cross features, some works (He et al., 2014; Shi et al., 2020) utilize tree ensemble models (Chen and Guestrin, 2016; Ke et al., 2017) for generating cross features. In (He et al., 2014), each tree in GBDT (Gradient Boosting Decision Tree) (Ke et al., 2017) corresponds to a cross feature field, and each leaf node in a tree corresponds to a cross feature. As trees in GBDT may be deep, the generated cross features may be with too high orders, so that they are hard for human to understand. And cross features in the same cross feature field usually share different meanings. Besides, Factorization Machine (FM) (Rendle, 2010) has been a successful way to explicitly capture second-order feature interactions. To overcome the problem that conventional FM can only model second-order feature interactions Higher-Order FM (HOFM) (Blondel et al., 2016) and Interaction Machine (IM) (Yu et al., 2020) propose to model higher-order feature interactions in the FM architecture in linear time. The calculation of feature interactions in FM is somehow product-based similarity. However, it is hard for these factorization-based methods to capture all kinds of feature interactions in various real-world application scenarios. Another drawback of FM is that, it models all feature interactions of specific-order, most of which are not useful for making predictions. To deal with this problem, Automatic Feature Interaction Selection (AutoFIS) (Liu et al., 2020b) directly learns the weight for each cross feature field, for both second-order and higher-order, and thus an AutoFM model is obtained. It promotes the performances of FM, but faces problems in high computational cost and optimization difficulty, when it is performed on credit scoring datasets with hundreds of feature fields and the desired interaction order is high.

2.2. Deep Learning-based CTR Prediction Methods

Nowadays, some works try to design various deep learning-based crossing modules for the task of CTR prediction. For better performances, these crossing modules are usually applied along with multi-layer fully-connected DNNs. The Wide & Deep model (Cheng et al., 2016) directly learns parameters of manually designed cross features in the wide component. The Product-based Neural Network (PNN) (Qu et al., 2016) applies inner-product or outer-product to capture second-order features. In Deep & Cross Network (DCN) (Wang et al., 2017), the authors design an incremental crossing module, named CrossNet, to capture second-order as well as higher-order features. Factorization-based methods have also been extended with deep architectures (Guo et al., 2017; Yu et al., 2020; Liu et al., 2020b). In xDeepFM (Lian et al., 2018), Compressed Interaction Network (CIN) is proposed as a crossing module based on outer-product calculation of features. AutoINT (Song et al., 2018) is a combination of residual connections (He et al., 2016) and a crossing module based on Self-Attention (Vaswani et al., 2017). With the residual connections, the fully-connected DNN is performed with the input of original features in AutoINT. Convolutional neural networks are also incorporated for modeling local interactions among nearby features (Liu et al., 2015, 2019). Moreover, feature interactions are modeled as graphs, and then graph neural networks are performed (Xie et al., 2020; Su et al., 2021). Neural architecture search approaches have also been incorporated for finding feature interaction architectures in deep learning-based CTR prediction models (Song et al., 2020; Khawar et al., 2020).

Inherit from deep learning technologies, above feature crossing modules are mostly associated with hidden projection, in each layer or between adjacent layers. This makes deep learning-based methods still black-box models and hard to be globally interpreted, which does not meet our requirements for credit scoring.

3. Learning from Interpretations in DNN

In this section, we present that we can learn feature crossing from interpretations in DNN for credit scoring.

3.1. Local Interpretations

DNN has been a powerful black-box model (Guidotti et al., 2018). Recently, extensive works have been done to study local piece-wise interpretability of DNN, which means assigning a piece of local interpretation weights for each sample, a DNN model can be regarded as a combination of numbers of linear classifiers (Montufar et al., 2014; Ribeiro et al., 2016; Lundberg and Lee, 2017; Chu et al., 2018). Some works investigate the gradients from the final predictions to the input features in deep models, which can be applied in the visualization of deep vision models (Zhou et al., 2016; Selvaraju et al., 2017; Smilkov et al., 2017; Alvarez-Melis and Jaakkola, 2018), as well as the interpretation of language models (Li et al., 2015; Yuan et al., 2019). Perturbation on input features is also utilized to find local interpretations of both vision models (Fong and Vedaldi, 2017) and language models (Guan et al., 2019). In this work, we adopt gradient backpropagation for the calculation of local interpretations. Therefore, we can first define the local interpretations in DNN. As introduced, we usually conduct feature discretization for numerical features. Thus, we focus on analyzing DNN with embeddings of categorical features as input.

Definition 0.

(Local Interpretation) Given a specific feature associated with the feature field in a specific sample , the corresponding local interpretation is

| (1) |

where denotes the corresponding feature embeddings of in the DNN model, and is the local weights computed via gradient backpropagation

| (2) |

where denotes the prediction made by the DNN model for the sample .

3.2. Interpretation Inconsistency

Local interpretation refers to the contribution of the corresponding feature to the final prediction in the corresponding sample. Sometimes, local interpretations of a specific feature are inconsistent in different samples. When local interpretations are consistent in different samples, it means the corresponding feature contributes to the final prediction on its own. When local interpretations are inconsistent, it means the contribution of the corresponding feature is affected by other features. The inconsistency of local interpretations in DNN is caused by nonlinear feature interactions in the hidden layers of DNN.

Lemma 0.

(Inconsistent Local Interpretations) If a feature field nonlinearly interacts with other feature fields in the hidden layers of DNN, features associated with the feature field will have inconsistent local interpretations among different samples.

Suppose feature fields are nonlinearly interacted in DNN, i.e., , where is a nonlinear interaction function. For example, . Then, we can have the following local interpretation

| (3) |

In the example of , the local interpretations are and . Obviously, the local interpretation of is affected by the features associated with other feature fields. As the values of change among different samples, the local interpretations of are inconsistent. In contrast, if there is no nonlinear interaction among , we have an addition form, i.e., , where is an arbitrary function. Then, we can have the following local interpretation

| (4) |

Obviously, with the same feature , there will be consistent local interpretations among different samples.

To measure the degree of inconsistency among local interpretations of a specific feature in different samples, we first need to calculate its global interpretation, and then calculate the interpretation inconsistency between the local interpretation and the global interpretation.

Definition 0.

(Global Interpretation) Given the feature , the corresponding global interpretation is

| (5) |

where is the average local weights of the feature in all samples, named as global weights and formulated as

| (6) |

where is the set of samples.

Definition 0.

(Interpretation Inconsistency) Given a specific feature in a specific sample , the corresponding interpretation inconsistency is

| (7) |

According to Lemma 3.2, the interpretation inconsistency in DNN is able to lead us to generate a compact and accurate candidate set of cross feature fields. And larger the values of interpretation inconsistency of a specific feature, more the corresponding feature field can work for feature crossing. To verify the validity of interpretation inconsistency for feature crossing, we conduct empirical experiments in Sec. 5.6.

4. DNN2LR

In this section, we formally propose the DNN2LR approach for credit scoring. DNN2LR consists of two steps: (1) generating a compact and accurate candidate set of cross feature fields; (2) searching in the candidate set for the final cross feature fields. Fig. 2 provides an overview of the proposed DNN2LR approach.

4.1. Candidate Set Generation

For generating the compact and accurate candidate set of cross features, we need to obtain the local piece-wise interpretations in DNN. Thus, we need first to train a DNN model. Specifically, in this work, we train a multi-layer fully-connected DNN. As the original features are sparse categorical features, we use an embedding layer (Zhang et al., 2016) to transform the input features into low dimensional dense representations. Then, the dense representations are passed through some linear transformation and nonlinear activation to obtain the predictions of samples, where we use ReLu as the activation for hidden layers, and sigmoid for the output layer to support binary classification tasks. To be noted, though we focus on learning feature crossing from multi-layer fully-connected DNN in this work, our proposed approach can also works with other types of DNN, such as AutoINT (Song et al., 2018) and xDeepFM (Lian et al., 2018).

Based on the trained DNN model, in each validation sample , we compute the interpretation inconsistency of the feature , as defined in Def. 3.4. Therefore, we obtain an interpretation inconsistency matrix , where is the interpretation inconsistency value of the -th feature field in the -th sample. Then, we conduct an element-wise filtering on matrix with a threshold , which can be formulated as

| (8) |

where is a binary feasible feature matrix, and indicates whether the -th feature in the -th sample has interacted with other features in the hidden layers. denotes the quantile of the matrix , which means keeping the top elements () in with largest values of interpretation inconsistency. Then, for each feasible feature , the corresponding feature field can be used to generate candidate cross feature fields.

Finally, we greedily generate the candidate set of cross features fields. Considering that extremely high-order cross features are rarely useful, as in (Luo et al., 2019), we construct our candidate set with only second-order, third-order and forth-order cross feature fields. To construct a compact and accurate candidate set, we need to find cross feature fields which are most frequently interacted in DNN. According to the feasible feature matrix , we count the occurrences of possible cross feature fields. We then rank the cross feature fields in a descending order according to the corresponding occurrence frequency, and select the top cross feature fields as our candidate set. According to our experiments in Sec. 5.5, we can use as the optimal hyper-parameter, where is the size of the original feature fields. Accordingly, a compact candidate set of cross feature fields is generated, and searching for useful cross feature fields can be efficiently conducted.

4.2. Searching for Final Cross Feature Fields

After obtaining the candidate set of cross feature fields , we can search for final useful cross feature fields. The searching strategy in AutoCross (Luo et al., 2019) has been proven effective. Facing a large candidate set, AutoCross trains a LR model for each candidate cross feature field, and select final cross feature fields based on their contribution measured on the validation set. Obviously, there are too many LR models to train, and the efficiency is low. In contrast, we have a compact and accurate candidate set, and are able to feed all candidate cross feature fields into a single LR model. Thus, we do not need to involve the complex searching structure in (Luo et al., 2019), and can simply select useful cross feature fields during validation.

To search for useful cross feature fields, we need to train a sparse LR model consisting of both original feature fields and candidate cross feature fields . For a specific sample , the prediction can be made by sparse LR as

| (9) |

where is the cross feature associated with cross feature field in the sample , is a lookup function for the weight in sparse LR of the corresponding feature, is the sigmoid function, and is a bias term. Specifically, we first train the sparse LR model with only original features on the training set . Then, we fix the weights of original features, and train the model with candidate cross features on the training set . According to our experiments in Sec. 5.5, we can use as the optimal hyper-parameter. Therefore, the time cost of training the above model is in the same order of magnitude with training with original features.

Based on the trained LR model, we conduct searching procedure for useful cross feature fields according to their contribution measured on the validation set according to the AUC (Area Under the ROC Curve) metric. Pseudocode of the searching procedure is presented in Alg. 1. Moreover, the steps 7-12 in Alg. 1 are paralleled with multi-threading implementation, where the measuring of each candidate cross feature field is conducted on one thread. The main idea in Alg. 1 is that, based on parameters in the trained LR model, we measure the contribution in AUC of each candidate cross feature field on the validation set, and iteratively select those have positive contribution as the final set of cross feature fields. Moreover, we can further incorporate beam search (Cohen and Beck, 2019) in Alg. 1. And this will be further investigated in our experiments in Sec. 5.3.

4.3. Deploying to Online Production

After obtaining the final set of cross feature fields and a white-box model, we need to deploy the final model to online production. As the final model is a LR model, and LR is widely-used for credit scoring or default prediction in banks or online lending companies, the final white-box model is easily deployed to the online system. We only need to link the final LR model to the existing online LR serving system, and generate cross features based on data flows.

5. Experiments

In this section, we empirically evaluate our proposed DNN2LR approach. We first describe settings of the experiments, then report and analyze the experimental results.

| dataset | #samples | #feature fields | |||

|---|---|---|---|---|---|

| training | validation | testing | #Num. | #Cate. | |

| BNP | 73,165 | 18,291 | 22,865 | 109 | 23 |

| PPD | 36,000 | 9,000 | 15,000 | 218 | 109 |

| Bussiness1 | 186,498 | 46,625 | 58,282 | 178 | 15 |

| Bussiness2 | 120,989 | 30,247 | 52,169 | 302 | 56 |

5.1. Datasets

We conduct automatic feature crossing experiments on datasets: BNP, PPD, Bussiness1 and Bussiness2. BNP222https://www.kaggle.com/c/bnp-paribas-cardif-claims-management/data is a public credit scoring dataset from a bank, and PPD333https://www.kesci.com/home/competition/56cd5f02b89b5bd026cb39c9/content/1 is a public default prediction dataset from an online lending company. Bussiness1 and Bussiness2 are business datasets from our real-world applications in credit scoring of banks, after anonymization and sanitization. For BNP and PPD, we randomly use and samples for training and testing respectively. For all the four datasets, we randomly use samples in the training set for validation. Details of these datasets can be found in Tab. 1. There are about to feature fields in these datasets, characterizing users in profiles, loan history, repayment history and other behavior records.

5.2. Settings

In our experiments, we are going to verify whether we can obtain a white-box model with high accuracy and global interpretability simultaneously. Specifically, we are going to verify whether DNN2LR can empower the simple LR model to achieve better performances comparing with conventional classifiers, as well as other competitive feature crossing methods. First, we compared DNN2LR with several conventional common-used classifiers: LR, DNN and GBDT. Then, some competitive feature crossing methods are included: FM (Rendle, 2010), HOFM (Blondel et al., 2016), AutoFM (Liu et al., 2020b), GA2M (Lou et al., 2013), GBDT+LR (He et al., 2014) and AutoCross+LR (Luo et al., 2019). Meanwhile, we further include several deep-learning based feature crossing modules: CrossNet (Wang et al., 2017), CIN (Lian et al., 2018) and Self-Attention (Song et al., 2018; Vaswani et al., 2017). They are the feature crossing modules used in CTR prediction models DCN (Wang et al., 2017), xDeepFM (Lian et al., 2018) and AutoINT (Song et al., 2018) respectively. To be noted, as discussed in Sec. 2.2, these deep learning-based methods are still black-box models, and hard to be globally interpreted. Moreover, we run our proposed DNN2LR method times with different parameter initialization, where average results and significance test are reported. The detailed settings of above compared methods are introduced as following.

| compared | BNP | PPD | Bussiness1 | Bussiness2 | average | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| methods | AUC | KS | AUC | KS | AUC | KS | AUC | KS | AUC | KS |

| LR | 73.32 | 34.19 | 74.56 | 37.56 | 72.36 | 34.21 | 78.16 | 44.73 | 74.60 | 37.67 |

| DNN | 74.08 | 36.28 | 75.81 | 39.65 | 74.60 | 37.11 | 79.68 | 49.33 | 76.04 | 40.59 |

| GBDT | 74.46 | 37.24 | 75.37 | 38.79 | 74.89 | 37.16 | 79.54 | 48.54 | 76.07 | 40.43 |

| CrossNet | 73.43 | 34.47 | 75.17 | 38.36 | 73.94 | 36.76 | 79.07 | 47.74 | 75.40 | 39.33 |

| CIN | 73.64 | 35.03 | 75.59 | 39.49 | 74.06 | 36.59 | 79.38 | 48.55 | 75.67 | 39.92 |

| Self-Attention | 73.97 | 35.89 | 76.12 | 40.72 | 74.39 | 37.06 | 79.51 | 48.71 | 76.00 | 40.60 |

| FM | 73.39 | 34.39 | 74.69 | 37.26 | 72.54 | 34.32 | 78.92 | 46.39 | 74.89 | 38.09 |

| HOFM | 73.46 | 34.42 | 74.82 | 37.59 | 72.78 | 34.78 | 79.21 | 48.29 | 75.07 | 38.77 |

| AutoFM | 73.28 | 33.41 | 74.88 | 37.61 | 72.69 | 35.02 | 78.84 | 46.05 | 74.92 | 38.02 |

| GA2M | 73.19 | 32.38 | 73.57 | 37.18 | 72.49 | 34.51 | 78.33 | 45.67 | 74.40 | 37.44 |

| GBDT+LR | 74.23 | 36.66 | 75.56 | 39.11 | 74.67 | 36.92 | 79.47 | 48.69 | 75.98 | 40.35 |

| AutoCross+LR | 74.73 | 37.64 | 76.06 | 40.81 | 74.81 | 37.01 | 79.39 | 48.75 | 76.25 | 41.05 |

| DNN2LR | 75.48∗ | 38.78∗ | 76.68∗ | 41.96∗ | 75.39∗ | 38.19∗ | 79.91∗ | 50.24∗ | 76.87∗ | 42.29∗ |

For LR, GA2M (Lou et al., 2013) and LR models used in GBDT+LR (He et al., 2014), AutoCross+LR (Luo et al., 2019) and DNN2LR, we tune the learning rate in the range of , and the l2 regularization in the range of .

For DNN and the DNN model used in DNN2LR, we set the dimensionality of feature embeddings as , the learning rate as , and the l2 regularization as . We use Adam for optimization, use the commonly-applied ReLu as activation function, and tune batch size in the range of . The DNN model is a multi-layer fully-connected DNN, whose hidden components in deep layers are set to .

For FM-based methods, i.e., FM (Rendle, 2010), HOFM (Blondel et al., 2016) and AutoFM (Liu et al., 2020b), we set the dimensionality of feature embeddings as , the learning rate as , the l2 regularization as , and tune batch size in the range of . We generate up to forth-order cross features with HOFM. The efficiency of AutoFM is low when there are too many input feature fields and desired interaction order is high. Thus, for efficiency, with AutoFM, we generate up to second-order cross features on default prediction datasets with hundreds of feature fields used in our experiments.

For deep learning-based crossing methods, i.e., CrossNet (Wang et al., 2017), CIN (Lian et al., 2018) and Self-Attention (Song et al., 2018; Vaswani et al., 2017), we set the dimensionality of feature embeddings as , the learning rate as , and the l2 regularization as . We use Adam for optimization, use the commonly-applied ReLu as activation function, and tune batch size in the range of . For these three methods, we have layers of feature crossing, which means conducting up to forth-order crossing. For Self-Attention, the number of hidden units and the number of attention heads are set to and respectively.

For GBDT and GBDT+LR (He et al., 2014), we set the number of trees to , with -round early-stopping. The max depth in the tree is tuned in the range of .

For AutoCross (Luo et al., 2019), we divide the dataset into batches of data for evaluating the contribution of each candidate cross feature field, where is the size of candidate set in AutoCross.

For our proposed DNN2LR approach, the quantile threshold for filtering feasible features in the interpretation inconsistency matrix is tuned in the range of , and the size of candidate set is tuned in the range of , where is the size of the original feature fields.

Moreover, our experiments are conducted on a machine with Intel(R) Xeon(R) CPU (E5-26p30 v4 @ 2.20GHz, 24 cores) and 128G memory. For feature preprocessing, we apply multi-granularity discretization (Luo et al., 2019) on numerical features. The granularities in this method are set as , and we only keep the best granularity for each numerical feature field. We evaluate the performances in terms of two commonly-used metrics for credit scoring: the AUC (Area Under the ROC Curve) metric444https://en.wikipedia.org/wiki/Area_under_the_curve_(pharmacokinetics) and the KS (Kolmogorov-Smirnov) metric555https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test. AUC measures the overall ranking performance. KS measures the largest difference between true positive rate and false positive rate on the ROC curve, which can be a reference of the threshold for loan approval.

5.3. Performance Comparison

Tab. 2 shows the performances comparison among different methods. We can observe that, in most cases, different feature crossing methods can achieve somehow improvements on the performances of LR. Among deep learning-based cross methods, Self-Attention has good performances, and can slightly outperform DNN and GBDT. FM-based methods, i.e., FM, HOFM and AutoFM, does not achieve good performances. This may indicate that, product-based similarity calculation in FM-based methods is not suitable for interactions in credit evaluation tasks. GA2M performs poor, for it is hard to optimize models with too many candidate cross feature fields on credit scoring datasets with hundreds of input feature fields. GBDT+LR has relatively good performances. Obviously, AutoCross+LR is the best one among baseline methods. However, DNN2LR performs even beeter than AutoCross+LR. This is because, on credit scoring datasets with hundreds of feature fields, data for training the field-wise LR model of each candidate cross feature field will be too little to produce reliable results in AutoCross. These experimental results show DNN2LR outperforms conventional classifiers, as well as competitive feature crossing methods on the task of credit scoring. Moreover, the improvements achieved by DNN2LR are similar between public datasets and business datasets.

| beam search | BNP | PPD | Bussiness1 | Bussiness2 |

|---|---|---|---|---|

| w/ | 75.51 | 76.72 | 75.39 | 79.96 |

| w/o | 75.48 | 76.68 | 75.39 | 79.91 |

As discussed in Sec. 4.2, we can incorporate beam search (Cohen and Beck, 2019) in Alg. 1. To investigate this, we show performance comparison between DNN2LR with and without beam search in Tab. 3. To be noted, this experiment is done when the DNN is trained and fixed. With beam search, we keep the best sets of cross feature fields during each step in Alg. 1, and use the set with best overall performance as the final set. It is clear that, with or without beam search, the performances are close. Accordingly, it is not necessary to incorporate beam search in the searching procedure of DNN2LR.

Furthermore, as shown in Fig. 3, we illustrate the count of both second- and higher-order cross feature fields generated by DNN2LR on each dataset. Roughly speaking, more original feature fields in the dataset, more cross feature fields we need to generate.

5.4. Running Time Comparison

For the efficiency comparison, we involve the best baseline method AutoCross, according to Tab. 2. Fig. 4 shows the running time comparison between AutoCross and DNN2LR. DNN2LR accelerates AutoCross by about , , and on BNP, PPD, Business1 and Business2 respectively. According to Tab. 1, there are to feature fields in these datasets. It is clear that, the speedup ratios are significant, and get larger when we have more input feature fields in the dataset. These results strongly illustrate the high efficiency of DNN2LR for feature crossing on credit scoring datasets with hundreds of feature fields.

5.5. Hyper-parameter Study

As shown in Fig. 5, we illustrate the sensitivity of DNN2LR to hyper-parameters. First, we investigate the performances of DNN2LR with varying quantile thresholds for filtering feasible features in the interpretation inconsistency matrix. According to the curves in Fig. 5, the performances of DNN2LR are relatively stable, especially in the range of . Then, we investigate the performances of DNN2LR with varying sizes of candidate set. As discussed in Sec. 5.3, we need to generate more cross feature fields when we have more original feature fields. Thus, we make the size of candidate set related to the number of original feature fields. We can observe that, the curves are stable when . And when we have larger , the performances of DNN2LR slightly increase. According to our observations, we set and as the optimal hyper-parameters in our experiments. This makes the candidate size extremely small, comparing to the set of possible cross feature fields on credit scoring datasets with hundreds of feature fields.

| formulation | |||

|---|---|---|---|

| 0.0159 | 0.0162 | 0.0034 | |

| 0.0231 | 0.0149 | 0.0056 | |

| 0.0137 | 0.0193 | 0.0039 | |

| 0.0529 | 0.0527 | 0.0084 | |

| 1.2300 | 0.7514 | 0.2281 | |

| 2.7073 | 1.3112 | 0.4556 | |

| 0.0888 | 0.0743 | 0.0113 | |

| 0.0495 | 0.0523 | 0.0027 | |

| 0.0591 | 0.0568 | 0.0081 | |

| 0.0802 | 0.1255 | 0.0156 | |

| 0.0131 | 0.0141 | 0.0046 | |

| 0.5625 | 0.6897 | 0.1782 | |

| 0.5151 | 0.4948 | 0.0667 | |

| 0.2828 | 0.2931 | 0.0726 |

5.6. Interpretation Inconsistency

To investigate the validity of interpretation inconsistency for feature crossing, we conduct some empirical experiments. We conduct experiments on some mathematic formulations, which are shown in Tab. 4. In each formulation, there are three feature fields: , and , where and are interacted, while has no interaction with the other two fields. The interactions between and cover different forms, and the calculation of also varies from simple to complex. For each formulation, we randomly sample features in , and keep two decimal places. We perform DNN on each formulation, and calculate the average interpretation inconsistency values of the three feature fields according to Eq. (7), as shown in Tab. 4. To be noted, we do not perform feature discretization in this experiment. It is clear that, in each formulation, the average interpretation inconsistency values of and are much larger than that of . This observation clearly confirms to the formulations.

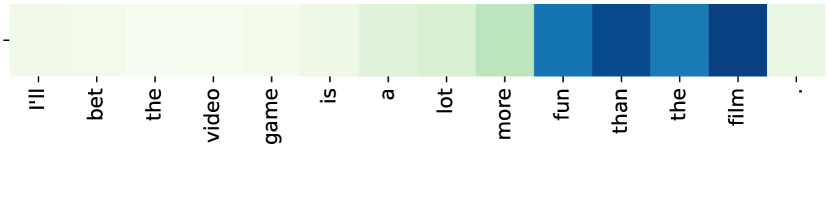

Furthermore, we conduct experiments on sentence classification. We train BiLSTM on the MR dataset666http://www.cs.cornell.edu/people/pabo/movie-review-data/. There are and positive and negative movie reviews respectively. Values of interpretation inconsistency in several example sentences are shown in Fig. 6. We achieve two observations about words with large values of interpretation inconsistency. First, words that form phrases have large values of interpretation inconsistency, e.g., “in other words,” “can’t … get anywhere near,” “meets those standards,” “an hour and a half to blow” and “it goes nowhere.” Second, words with specific intensities or orientations have large values of interpretation inconsistency, e.g., “a lot more fun than the film,” “a lot better” and “a little more than this.” These observations confirm to the feature interactions among words in sentences. These experiments suggest that it is valid to learn feature crossing from interpretation inconsistency in DNN.

| BNP | PPD |

|---|---|

| (v22,v24,v40,v79) | (ThirdParty_Info_Period3_1,UserInfo_16) |

| (v50,v110) | (ListingInfo,Education_Info1) |

| (v30,v50) | (UserInfo_14,UserInfo_16) |

| (v22,v50,v113) | (ThirdParty_Info_Period2_8,UserInfo_16) |

| (v24,v50) | (ThirdParty_Info_Period3_2,UserInfo_16) |

| (v22,v24,v79,v113) | (UserInfo_16,ListingInfo) |

| (v47,v50) | (WeblogInfo_2,UserInfo_14) |

| (v56,v66) | (ThirdParty_Info_Period1_10,UserInfo_16) |

| (v47,v50,v110) | (ThirdParty_Info_Period3_15,UserInfo_14) |

| (v10,v22,v40,v113) | (ListingInfo,UserInfo_7,UserInfo_19) |

| (v21,v50) | (UserInfo_16,UserInfo_3,Education_Info1) |

| (v50,v56,v113) | (WeblogInfo_20,ThirdParty_Info_Period1_10) |

| (v40,v50) | (ListingInfo,WeblogInfo_5) |

| (v24,v66) | (UserInfo_14,ThirdParty_Info_Period2_15) |

| (v10,v50) | (ThirdParty_Info_Period4_6,ThirdParty_Info_Period2_6,ListingInfo) |

| (v22,v30,v113) | (WeblogInfo_15,WeblogInfo_20) |

| (v47,v50,v56) | (ThirdParty_Info_Period3_6,UserInfo_15) |

| (v50,v113) | (UserInfo_16,WeblogInfo_20) |

| (v50,v79) | (WeblogInfo_15,SocialNetwork_13) |

| (v40,v56,v110,v113) | (WeblogInfo_2,ThirdParty_Info_Period4_15) |

5.7. Important Cross Feature Fields

In Tab. 5, we illustrate top- cross feature fields generated by DNN2LR on public datasets, i.e., BNP and PPD. Once the meanings of original feature fields are known, we can obtain the meanings of cross feature fields. The final model generated by DNN2LR is a LR model empowered with cross features, which is a white-box model with high accuracy and global interpretability simultaneously.

6. Conclusion

In this paper, we focus on the automatic feature crossing task for credit scoring, in which we usually face hundreds of feature fields. We observe that the local interpretations of a specific feature are usually inconsistent in different samples, and accordingly define interpretation inconsistency in DNN. We show that, the interpretation inconsistency in DNN can help us to find cross features. Then, we propose a novel automatic feature crossing method called DNN2LR for credit scoring. DNN2LR constructs an accurate and compact candidate set of cross feature fields based on interpretation inconsistency, and search for the final set of cross feature fields to train a final LR model. Thus, with DNN2LR, we can obtain a white-box model, i.e., a LR model empowered with cross features. Extensive experiments have been conducted on real-world credit scoring datasets with large numbers of feature fields. Experimental results demonstrates that, DNN2LR outperforms several conventional classifiers, as well as several automatic feature crossing methods. Moreover, comparing with the state-of-the-art method AutoCross, DNN2LR can significantly accelerate the speed by about to . In a word, DNN2LR is able to efficiently produce accurate white-box models for the task of credit scoring.

References

- (1)

- Aas et al. (2019) Kjersti Aas, Martin Jullum, and Anders Løland. 2019. Explaining individual predictions when features are dependent: More accurate approximations to Shapley values. arXiv preprint arXiv:1903.10464 (2019).

- Alvarez-Melis and Jaakkola (2018) David Alvarez-Melis and Tommi S Jaakkola. 2018. Towards robust interpretability with self-explaining neural networks. In NeurIPS. 7786–7795.

- Babaev et al. (2019) Dmitrii Babaev, Maxim Savchenko, Alexander Tuzhilin, and Dmitrii Umerenkov. 2019. Et-rnn: Applying deep learning to credit loan applications. In KDD. 2183–2190.

- Bastos (2007) Joao Bastos. 2007. Credit scoring with boosted decision trees. (2007).

- Blondel et al. (2016) Mathieu Blondel, Akinori Fujino, Naonori Ueda, and Masakazu Ishihata. 2016. Higher-order factorization machines. In NeurIPS. 3351–3359.

- Chapelle et al. (2015) Olivier Chapelle, Eren Manavoglu, and Romer Rosales. 2015. Simple and scalable response prediction for display advertising. ACM Transactions on Intelligent Systems and Technology (TIST) 5, 4 (2015), 61.

- Chen and Guestrin (2016) Tianqi Chen and Carlos Guestrin. 2016. Xgboost: A scalable tree boosting system. In KDD. 785–794.

- Chen et al. (2019) Xiangning Chen, Qingwei Lin, Chuan Luo, Xudong Li, Hongyu Zhang, Yong Xu, Yingnong Dang, Kaixin Sui, Xu Zhang, Bo Qiao, et al. 2019. Neural Feature Search: A Neural Architecture for Automated Feature Engineering. In ICDM. 71–80.

- Cheng et al. (2016) Heng-Tze Cheng, Levent Koc, Jeremiah Harmsen, Tal Shaked, Tushar Chandra, Hrishi Aradhye, Glen Anderson, Greg Corrado, Wei Chai, Mustafa Ispir, et al. 2016. Wide & deep learning for recommender systems. In Proceedings of the 1st workshop on deep learning for recommender systems. 7–10.

- Chu et al. (2018) Lingyang Chu, Xia Hu, Juhua Hu, Lanjun Wang, and Jian Pei. 2018. Exact and consistent interpretation for piecewise linear neural networks: A closed form solution. In KDD. 1244–1253.

- Cohen and Beck (2019) Eldan Cohen and Christopher Beck. 2019. Empirical analysis of beam search performance degradation in neural sequence models. In ICML. 1290–1299.

- Fong and Vedaldi (2017) Ruth C Fong and Andrea Vedaldi. 2017. Interpretable explanations of black boxes by meaningful perturbation. In ICCV. 3429–3437.

- Guan et al. (2019) Chaoyu Guan, Xiting Wang, Quanshi Zhang, Runjin Chen, Di He, and Xing Xie. 2019. Towards a deep and unified understanding of deep neural models in nlp. In ICML. 2454–2463.

- Guidotti et al. (2018) Riccardo Guidotti, Anna Monreale, Salvatore Ruggieri, Franco Turini, Fosca Giannotti, and Dino Pedreschi. 2018. A survey of methods for explaining black box models. ACM computing surveys (CSUR) 51, 5 (2018), 1–42.

- Guo et al. (2017) Huifeng Guo, Ruiming Tang, Yunming Ye, Zhenguo Li, and Xiuqiang He. 2017. DeepFM: a factorization-machine based neural network for CTR prediction. In IJCAI. 1725–1731.

- Hall (2019) Patrick Hall. 2019. An introduction to machine learning interpretability. O’Reilly Media, Incorporated.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In CVPR. 770–778.

- He et al. (2014) Xinran He, Junfeng Pan, Ou Jin, Tianbing Xu, Bo Liu, Tao Xu, Yanxin Shi, Antoine Atallah, Ralf Herbrich, Stuart Bowers, et al. 2014. Practical lessons from predicting clicks on ads at facebook. In Proceedings of the Eighth International Workshop on Data Mining for Online Advertising. 1–9.

- Hu et al. (2020) Binbin Hu, Zhiqiang Zhang, Jun Zhou, Jingli Fang, Quanhui Jia, Yanming Fang, Quan Yu, and Yuan Qi. 2020. Loan Default Analysis with Multiplex Graph Learning. In CIKM. 2525–2532.

- Katz et al. (2016) Gilad Katz, Eui Chul Richard Shin, and Dawn Song. 2016. Explorekit: Automatic feature generation and selection. In ICDM. 979–984.

- Kaul et al. (2017) Ambika Kaul, Saket Maheshwary, and Vikram Pudi. 2017. Autolearn: Automated feature generation and selection. In ICDM. 217–226.

- Ke et al. (2017) Guolin Ke, Qi Meng, Thomas Finley, Taifeng Wang, Wei Chen, Weidong Ma, Qiwei Ye, and Tie-Yan Liu. 2017. Lightgbm: A highly efficient gradient boosting decision tree. In NeurIPS. 3146–3154.

- Khawar et al. (2020) Farhan Khawar, Xu Hang, Ruiming Tang, Bin Liu, Zhenguo Li, and Xiuqiang He. 2020. AutoFeature: Searching for Feature Interactions and Their Architectures for Click-through Rate Prediction. In CIKM. 625–634.

- Khurana et al. (2018) Udayan Khurana, Horst Samulowitz, and Deepak Turaga. 2018. Feature engineering for predictive modeling using reinforcement learning. In AAAI.

- Li et al. (2015) Jiwei Li, Xinlei Chen, Eduard Hovy, and Dan Jurafsky. 2015. Visualizing and understanding neural models in nlp. arXiv preprint arXiv:1506.01066 (2015).

- Lian et al. (2018) Jianxun Lian, Xiaohuan Zhou, Fuzheng Zhang, Zhongxia Chen, Xing Xie, and Guangzhong Sun. 2018. xdeepfm: Combining explicit and implicit feature interactions for recommender systems. In KDD. 1754–1763.

- Liu et al. (2019) Bin Liu, Ruiming Tang, Yingzhi Chen, Jinkai Yu, Huifeng Guo, and Yuzhou Zhang. 2019. Feature Generation by Convolutional Neural Network for Click-Through Rate Prediction. In WWW. 1119–1129.

- Liu et al. (2020b) Bin Liu, Chenxu Zhu, Guilin Li, Weinan Zhang, Jincai Lai, Ruiming Tang, Xiuqiang He, Zhenguo Li, and Yong Yu. 2020b. AutoFIS: Automatic Feature Interaction Selection in Factorization Models for Click-Through Rate Prediction. In KDD.

- Liu et al. (2002) Huan Liu, Farhad Hussain, Chew Lim Tan, and Manoranjan Dash. 2002. Discretization: An enabling technique. Data Mining and Knowledge Discovery 6, 4 (2002), 393–423.

- Liu et al. (2020a) Qiang Liu, Zhaocheng Liu, and Haoli Zhang. 2020a. An Empirical Study on Feature Discretization. arXiv preprint arXiv:2004.12602 (2020).

- Liu et al. (2015) Qiang Liu, Feng Yu, Shu Wu, and Liang Wang. 2015. A convolutional click prediction model. In CIKM. 1743–1746.

- Lou et al. (2012) Yin Lou, Rich Caruana, and Johannes Gehrke. 2012. Intelligible models for classification and regression. In KDD. 150–158.

- Lou et al. (2013) Yin Lou, Rich Caruana, Johannes Gehrke, and Giles Hooker. 2013. Accurate intelligible models with pairwise interactions. In KDD. 623–631.

- Lundberg et al. (2018) Scott M Lundberg, Gabriel G Erion, and Su-In Lee. 2018. Consistent individualized feature attribution for tree ensembles. arXiv preprint arXiv:1802.03888 (2018).

- Lundberg and Lee (2017) Scott M Lundberg and Su-In Lee. 2017. A unified approach to interpreting model predictions. In NeurIPS. 4765–4774.

- Luo et al. (2019) Yuanfei Luo, Mengshuo Wang, Hao Zhou, Quanming Yao, WeiWei Tu, Yuqiang Chen, Qiang Yang, and Wenyuan Dai. 2019. AutoCross: Automatic Feature Crossing for Tabular Data in Real-World Applications. In KDD. 1936–1945.

- Mase et al. (2019) Masayoshi Mase, Art B Owen, and Benjamin Seiler. 2019. Explaining black box decisions by shapley cohort refinement. arXiv preprint arXiv:1911.00467 (2019).

- Montufar et al. (2014) Guido F Montufar, Razvan Pascanu, Kyunghyun Cho, and Yoshua Bengio. 2014. On the number of linear regions of deep neural networks. In NeurIPS. 2924–2932.

- Nargesian et al. (2017) Fatemeh Nargesian, Horst Samulowitz, Udayan Khurana, Elias B Khalil, and Deepak S Turaga. 2017. Learning feature engineering for classification. In IJCAI.

- Qu et al. (2016) Yanru Qu, Han Cai, Kan Ren, Weinan Zhang, Yong Yu, Ying Wen, and Jun Wang. 2016. Product-based neural networks for user response prediction. In ICDM. 1149–1154.

- Rendle (2010) Steffen Rendle. 2010. Factorization machines. In ICDM. 995–1000.

- Ribeiro et al. (2016) Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. 2016. ” Why should i trust you?” Explaining the predictions of any classifier. In KDD. 1135–1144.

- Selvaraju et al. (2017) Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. 2017. Grad-cam: Visual explanations from deep networks via gradient-based localization. In ICCV. 618–626.

- Shi et al. (2020) Qitao Shi, Ya-Lin Zhang, Longfei Li, Xinxing Yang, Meng Li, and Jun Zhou. 2020. SAFE: Scalable Automatic Feature Engineering Framework for Industrial Tasks. In ICDE. 1645–1656.

- Smilkov et al. (2017) Daniel Smilkov, Nikhil Thorat, Been Kim, Fernanda Viegas, and Martin Wattenberg. 2017. Smoothgrad: removing noise by adding noise. In ICML.

- Song et al. (2020) Qingquan Song, Dehua Cheng, Hanning Zhou, Jiyan Yang, Yuandong Tian, and Xia Hu. 2020. Towards automated neural interaction discovery for click-through rate prediction. In KDD. 945–955.

- Song et al. (2018) Weiping Song, Chence Shi, Zhiping Xiao, Zhijian Duan, Yewen Xu, Ming Zhang, and Jian Tang. 2018. AutoInt: Automatic Feature Interaction Learning via Self-Attentive Neural Networks. In CIKM.

- Su et al. (2021) Yixin Su, Rui Zhang, Sarah Erfani, and Zhenghua Xu. 2021. Detecting Beneficial Feature Interactions for Recommender Systems. In AAAI.

- Tran et al. (2016) Binh Tran, Bing Xue, and Mengjie Zhang. 2016. Genetic programming for feature construction and selection in classification on high-dimensional data. Memetic Computing 8, 1 (2016), 3–15.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In NeurIPS. 5998–6008.

- Wang et al. (2017) Ruoxi Wang, Bin Fu, Gang Fu, and Mingliang Wang. 2017. Deep & cross network for ad click predictions. In Proceedings of the ADKDD’17.

- West (2000) David West. 2000. Neural network credit scoring models. Computers & Operations Research 27, 11-12 (2000), 1131–1152.

- Xie et al. (2020) Yuexiang Xie, Zhen Wang, Yaliang Li, Bolin Ding, Nezihe Merve Gürel, Ce Zhang, Minlie Huang, Wei Lin, and Jingren Zhou. 2020. Interactive Feature Generation via Learning Adjacency Tensor of Feature Graph. arXiv preprint arXiv:2007.14573 (2020).

- Yao et al. (2018) Quanming Yao, Mengshuo Wang, Jair Escalante Hugo, Guyon Isabelle, Yi-Qi Hu, Yu-Feng Li, Wei-Wei Tu, Qiang Yang, and Yang Yu. 2018. Taking human out of learning applications: A survey on automated machine learning. arXiv preprint arXiv:1810.13306 (2018).

- Yu et al. (2020) Feng Yu, Zhaocheng Liu, Qiang Liu, Haoli Zhang, Shu Wu, and Liang Wang. 2020. Deep Interaction Machine: A Simple but Effective Model for High-order Feature Interactions. In CIKM.

- Yuan et al. (2019) Hao Yuan, Yongjun Chen, Xia Hu, and Shuiwang Ji. 2019. Interpreting deep models for text analysis via optimization and regularization methods. In AAAI.

- Zhang et al. (2016) Weinan Zhang, Tianming Du, and Jun Wang. 2016. Deep learning over multi-field categorical data. In ECIR. 45–57.

- Zhou et al. (2016) Bolei Zhou, Aditya Khosla, Agata Lapedriza, Aude Oliva, and Antonio Torralba. 2016. Learning deep features for discriminative localization. In CVPR. 2921–2929.