DNG: Taxonomy Expansion by Exploring the Intrinsic

Directed Structure on Non-gaussian Space

Abstract

Taxonomy expansion is the process of incorporating a large number of additional nodes (i.e., “queries”) into an existing taxonomy (i.e., “seed”), with the most important step being the selection of appropriate positions for each query. Enormous efforts have been made by exploring the seed’s structure. However, existing approaches are deficient in their mining of structural information in two ways: poor modeling of the hierarchical semantics and failure to capture directionality of the is-a relation. This paper seeks to address these issues by explicitly denoting each node as the combination of inherited feature (i.e., structural part) and incremental feature (i.e., supplementary part). Specifically, the inherited feature originates from “parent” nodes and is weighted by an inheritance factor. With this node representation, the hierarchy of semantics in taxonomies (i.e., the inheritance and accumulation of features from “parent” to “child”) could be embodied. Additionally, based on this representation, the directionality of the is-a relation could be easily translated into the irreversible inheritance of features. Inspired by the Darmois-Skitovich Theorem, we implement this irreversibility by a non-Gaussian constraint on the supplementary feature. A log-likelihood learning objective is further utilized to optimize the proposed model (dubbed DNG), whereby the required non-Gaussianity is also theoretically ensured. Extensive experimental results on two real-world datasets verify the superiority of DNG relative to several strong baselines.

Introduction

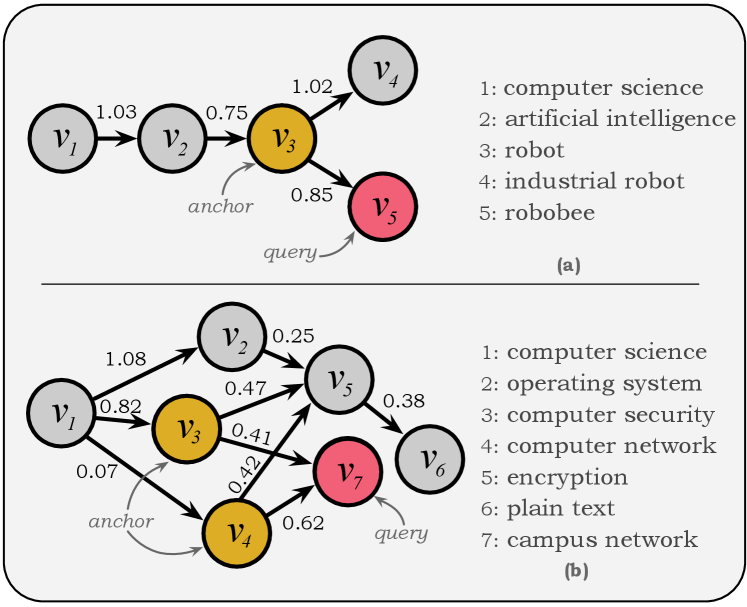

Taxonomies provide the hierarchical information for various downstream applications, such as Query Understanding (Wang et al. 2015; Hua et al. 2017), Web Search (Wu et al. 2012; Liu et al. 2019a), and Personalized Recommender System (Wang et al. 2017; Hu et al. 2021), where the is-a relation connects the nodes (Hearst 1992; Bai et al. 2021). However, manually curating a taxonomy is labor-intensive, time-consuming, and difficult to scale, making the automatic taxonomy expansion draw considerable attention (Shen et al. 2020; Song et al. 2021; Jiang et al. 2022). As depicted in Figure 1, this task aims to insert new nodes (i.e., “query nodes”) into an existing taxonomy (i.e., “seed taxonomy”) by finding appropriate anchors without modifying the seed’s original structure for best preserving and utilizing the seed’s design. In this paper, we will also follow this research line to improve taxonomy expansion.

Recent works primarily contribute to this task from two directions: capturing the seed’s structural information via egonets (Shen et al. 2020), mini-paths (Yu et al. 2020), or vertical-horizontal views of a taxonomy (Jiang et al. 2022); and enhancing node embeddings by implicit edge semantics for a heterogeneous taxonomy (Manzoor et al. 2020), or hyperbolic space (Aly et al. 2019; Ma et al. 2021). It has been suggested that exploring the seed’s structural information should be more important for this task (Wang et al. 2021). However, existing works naively incorporate this information by either GNNs (Yao et al. 2022) or the manually-designed natural language utterance (e.g., “ is a type of ” in Figure 1), leaving two aspects of the seed’s structure under-explored, Type-I: the hierarchical semantics among “parent” and “child” nodes; and Type-II: the directionality of the is-a relation. We focus on these two issues in this paper and demonstrate how these two taxonomic structures can be applied to enhance the taxonomy expansion task.

For further analyzing these two issues, taking the is-a pair (“animal”, “cat”) as an example, the hypernym “animal” is semantically more general than its hyponym “cat” (Santus et al. 2014), implying two-fold information about these two nodes. (a) The hyponym “cat” contains all features of its hypernym “animal”, indicating that a part of the feature for “cat” could be inherited from “animal”. (b) The hypernym “animal” does NOT contain all features of its hyponym “cat”, showing that “cat” owns extra features that could not be inherited from “animal”. This two-fold information indicates the inclusion relation of semantics between the hypernym and hyponym (i.e., “animal” is a proper subset of “cat” in semantics), reflecting the vertex-level character of a taxonomy. The relationship also shows the inheritance and accumulation of information from “parent” to “child”. This variation in information reflects the hierarchical structure of taxonomies (i.e., Type-I) and explains why different levels hold different information.

Besides the hierarchical characteristic, the directionality of the is-a relation (i.e., “cat” is-a “animal”, but “animal” is-not-a “cat”) is another meaningful structural information in a taxonomy (i.e., Type-II). This means that the feature inheritance is directed (e.g., “cat” could inherit features from “animal”, but not vice versa), which exactly matches the underlying DAG (Directed Acyclic Graph) structure of a taxonomy. To capture this edge-level character, we seek to derive an asymmetric transformation between hypernym and hyponym features since each relation in a graph could be treated as a mathematical mapping of features. However, existing approaches (Rimell 2014; Fu et al. 2014) focus heavily on manipulating hypernym and hyponym features (e.g., resorting to subtraction), which cannot theoretically guarantee the directionality of the is-a relation.

In this paper, we propose the DNG model to address the two types of issues with two coherent techniques, and Figure 2 also illustrates the difference of these two issues. On one hand, each node is explicitly represented by a combination of the structural feature (inherited from “parent”) and the supplementary feature (owned by “itself” and cannot be inherited from its “parent”), where the structural feature is weighted by an inheritance factor to measure the inheritability of the node. Under this representation, the hierarchical semantics is embodied in the feature transmission by inheritance strategy (i.e., the feature is passed from one layer to the next) and the feature increment via supplementary mechanism (i.e., some extra features will be supplemented in each layer). On the other hand, benefiting from the improved node representation, the directionality of the is-a relation could be easily converted to the irreversible inheritance of the supplementary feature. Motivated by the Darmois-Skitovich Theorem, we propose a non-Gaussian constraint on the supplementary feature to implement this irreversibility, therefore theoretically guarantee the relation’s directionality.

To optimize DNG, we adopt a log-likelihood learning objective and theoretically prove its effectiveness on modeling structural information. We conduct extensive experiments on two large real-world datasets from different domains to verify the efficacy of the DNG model. The experimental results demonstrate that DNG achieves state-of-the-art performance on the taxonomy expansion task. Additionally, for a better illustration, we will also give a detailed case study about the DNG’s ability in modelling taxonomic structure. To summarize, our major contributions include:

-

•

The node representation is improved by combining the structural and supplementary features; thereby, the hierarchical semantics in taxonomies could be explicitly reflected in the modelling process.

-

•

A non-Gaussian constraint on the supplementary feature is adopted to theoretically guarantee the directionality of the is-a relation.

-

•

A log-likelihood learning objective is employed to optimize the DNG model, ensuring that the required constraint is theoretically guaranteed.

-

•

We conduct extensive experiments on two large datasets from different domains to validate the performance of DNG and the efficacy of modeling taxonomic structure.

Preliminaries

Task Definition

Taxonomy: A given taxonomy can be treated as a directed acyclic graph composed of a vertex set () and an edge set () (Shen et al. 2020; Jiang et al. 2022). An edge between nodes and represents the is-a relation between them (e.g., is-a ), where and are the hypernym (“parent”) and the hyponym (“child”), respectively.

Taxonomy Expansion: It refers to the process that appends a set of new nodes (query set ) into an existing taxonomy (seed taxonomy ), whereby a larger taxonomy could be produced. Hence, this task requires: (1) a seed taxonomy with ; and (2) the query set . As illustrated in Figure 1, the crucial step is to find appropriate anchors in seed taxonomy for each query .

Shannon Entropy

Shannon entropy is the basic concept for measuring the degree of uncertainty of a random variable. It is defined for a discrete-valued random variable as:

| (1) |

Methodology

Node Representation

In order to incorporate the taxonomic structure, each node (e.g., ) is explicitly represented by the combination of structural feature (inherited from its parents) and supplementary feature (could not be inherited from its parents):

| (3) |

where and PAi are the -dimensional representation and the parent set of node , respectively. denotes the feature inherited from the parent node , where the inherited feature is weighted by an inheritance factor . is the supplementary feature of node which cannot be obtained from its parents. indicates the feature matrix of seed’s node set (), and is the inheritance vector of on .

Figure 3 illustrates the node representation in a taxonomy fragment with two is-a pairs (i.e., is-a , and is-a ). For the node , its feature only contains the supplementary part (i.e., ) since it is the root and does not inherit any feature from any node. Node has the parent , making its representation the combination of and (i.e., ). It is observed that is independent of since neither nor contains any information about the other. Similarly, can be represented by the inherited feature and its supplementary feature (i.e., ), from which the independence among , , and can be also inferred. Hence, based on Eq. 3, the hierarchical semantics in a taxonomy could be embodied: the inheritance and accumulation of features from one layer to the next layer. This example also shows that these supplementary features are independent of each other.

Additionally, the directionality is another crucial attribute of the is-a relation: “ is-a , but is-not-a ”. How to capture this structural information is an issue to be resolved. In this paper, we attempt to translate this directionality into the irreversible inheritance of features: “ could inherit feature from , but could not inherit feature from ”. Under this intuition, this attribute could be realized by the irreversible transformation between and in Eq. 3 (i.e., under ). Inspired by the Darmois-Skitovich Theorem, ensuring the non-Gaussianity of the supplementary feature is a feasible implementation, as proven in the next section.

Directionality of is-a on Node Representation

Supposing the representation admits the inverse version under non-Gaussian , there will exist the following transformation:

| (4) | ||||

As such, the simultaneous equation will be obtained:

| (5) |

Due to the non-Gaussian , it can be drawn from Eq.5 based on the corollary of Darmois-Skitovich Theorem111See appendix in the arXiv version. that and will be dependent (i.e., ). However, the fundamental condition of the inverse transformation is . This contradiction between the assumption and the deduction verifies that the non-Gaussian supplementary feature will make the reversal node representation given in Eq. 3 false, indicating that the directionality of is-a is captured. In order to ensure this required constraint, we employ a log-likelihood learning target, which will be elaborated in the next section.

Learning Target

Based on the recursive node representation in Eq. 3, the node set can be represented as 222Derivation of Eq. 6: :

| (6) |

where is dubbed the transition matrix. and are an identity matrix and the inheritance factor matrix, respectively, and both of them have the size . denotes the supplementary feature matrix with the shape of ( is the dimension of this feature).

From Eq. 6, we can observe that the essential step is to estimate the supplementary feature set and the inheritance factors given the observational feature . For this inheritance factor matrix, the entry with position () reflects the probability of node being the “parent” of node .

In real-word datasets, the feature could be easily obtained from the well-designed pre-trained models (Liu et al. 2019b; Devlin et al. 2019) by fusing sufficient context information333DNG focuses on parsing and under the given which can be extracted by other structural approaches with the structural measure, e.g., Wu&P (K., Shet, and Acharya 2012).. However, how to learn and from remains to be determined. To fulfill this goal, we will first analyze the supplementary features as:

| (7) |

where is the probability density of , implemented by (Hyvärinen, Karhunen, and Oja 2001). Then, in order to build the relation between and , the joint probability density of could be derived from by their probability distribution functions (PDFs) 444 First, get the the relation between PDFs: . Then, compute the first-order derivation of PDFs: :

| (8) |

where indicates how to analyze the supplementary features from the observational representations.

With the definition of in hand, the given taxonomy could be treated as the joint distribution of the node set ; hence, the learning target can be defined as maximizing the logarithm of the joint probability:

| (9) | ||||

where denotes the loss to be minimized, and is the parameters. For the DNG model, it is essential to guarantee that the non-Gaussian constraint is satisfied under this optimization, which will be proven in the next section.

The Non-Gaussian

To demonstrate that the supplementary feature is a non-Gaussian distribution under the learning objective, let us first derive the expectation of Eq. 9:

| (10) | ||||

Additionally, in information theory, the entropy measures the uncertainty of a random variable; therefore, a variable with more randomness will have higher entropy. For all types of random variables with the same variance, the Gaussian variable has the highest entropy, leading us to borrow the Negentropy (Hyvärinen, Karhunen, and Oja 2001) as the measure of a variable’s non-Gaussianity. With the help of Eq. 10, the non-Gaussianity of feature can be derived as:

| (11) | ||||

where NG denotes the non-Gaussianity of a variable. is the feature with Gaussian distribution, and could be treated as a constant (Tong, Inouye, and Liu 1993; Hyvärinen, Karhunen, and Oja 2001). Additionally, the term could be also considered as a constant since 555 is pre-processed to unit variance (Hyvärinen and Oja 2000). under the unit-variance and . In this transformation, it can draw that the term does not depend on , which implies is a constant (Hyvärinen and Oja 2000). Consequently, based on Eq. 11, it can be also drawn that maximizing the joint probability of is also equivalent to maximizing the non-Gaussianity of . This corollary exactly accords with the non-Gaussian condition of the proposed DNG model.

DNG Algorithm

In the DNG model, estimating the matrix is the crucial step for the expansion task.To launch the learning procedure, the taxonomy with its vertex set, the feature matrix and the edge set are taken as the inputs. Then, the proposed model DNG is used to analyze the supplementary features and estimate the inheritance factor matrix. Specially, the gradients of updating the parameters are:

| (12) |

where , and is the learning rate. During the inference stage, the inheritance vector for the query is directly computed666For a more precise estimation, a new iteration is requisite on the whole node set. based on the query feature , the learned supplementary feature matrix and the inheritance matrix. With in hand, its appropriate positions could be easily found777Code is available at https://github.com/SonglinZhai/DNG..

| Datasets | #Depth | ||

|---|---|---|---|

| MAG-CS | 24,754 | 42,329 | 6 |

| WordNet-Verb | 13,936 | 13,408 | 13 |

Experiments

Experimental Setup

Datasets

We evaluate DNG and compare models on two large public real-world taxonomies:

-

•

Microsoft Academic Graph (MAG) is a public Field-of-Study (FoS) taxonomy (Sinha et al. 2015). This taxonomy contains over 660,000 scientific nodes and more than 700,000 is-a relations. Following (Shen et al. 2020; Zhang et al. 2021; Jiang et al. 2022), we re-construct a sub-taxonomy from MAG by picking the nodes of the computer science domain, named MAG-CS. Additionally, the node embeddings extracted via word2vec (Mikolov et al. 2013) based on the related paper abstracts corpus are used as the initial embeddings.

-

•

WordNet is another large taxonomy, containing various concepts (Jurgens and Pilehvar 2016). Following (Zhang et al. 2021; Jiang et al. 2022), the concepts of the verb sub-taxonomy are selected based on WordNet 3.0, referred as WordNet-Verb since this sub-field is the part that has been fully-developed in WordNet. Analogously, the fasttext embeddings888The wiki-news-300d-1M-subword.vec.zip on official website is used. are also generated as the initial feature vectors (Shen et al. 2020; Zhang et al. 2021). In addition, a pseudo root named “verb” is inserted as the root node to generate a complete taxonomy because there are isolated components in the constructed taxonomy.

Following the dataset splits used in (Zhang et al. 2021; Jiang et al. 2022), 1,000 nodes are randomly chosen to test our model in each dataset. The statistical information for these two datasets is listed in Table 1.

| Models | MAG-CS | |||

| Recall | Precision | MR | MRR | |

| TaxoExpan | 0.100 | 0.163 | 197.776 | 0.562 |

| ARBORIST | 0.008 | 0.037 | 1142.335 | 0.133 |

| TMN | 0.174 | 0.283 | 118.963 | 0.689 |

| GenTaxo | 0.149 | 0.294 | 140.262 | 0.634 |

| TaxoEnrich | 0.182 | 0.304 | 67.947 | 0.721 |

| DNG | 0.268 | 0.317 | 59.354 | 0.754 |

| Models | WordNet-Verb | |||

| Recall | Precision | MR | MRR | |

| TaxoExpan | 0.085 | 0.095 | 665.409 | 0.406 |

| ARBORIST | 0.016 | 0.067 | 608.668 | 0.380 |

| TMN | 0.110 | 0.124 | 615.021 | 0.423 |

| GenTaxo | 0.094 | 0.141 | 6046.363 | 0.155 |

| TaxoEnrich | 0.162 | 0.294 | 217.842 | 0.481 |

| DNG | 0.199 | 0.201 | 43.723 | 0.513 |

Compared Models

Five recent methods are adopted to verify the performance of DNG model:

- (1)

-

(2)

ARBORIST (Manzoor et al. 2020) aims to resolve the issues in heterogeneous taxonomies via a large margin ranking loss and a dynamic margin function.

-

(3)

TMN (Zhang et al. 2021) performs the taxonomy completion task by computing the matching score of the triple (query, hypernym, hyponym). We employ the model for the expansion task in this paper.

-

(4)

GenTaxo (Zeng et al. 2021) resorts to the sentence-based and subgraph-based node encodings to perform the matching of each query node. Following (Jiang et al. 2022), the GenTaxo++ is adopted in this paper since the original framework contains a name generation module which is not the focus of this paper.

-

(5)

TaxoEnrich (Jiang et al. 2022) achieves state-of-the-art performance on the taxonomy expansion task. This approach leverages semantic and structural information to better represent nodes, which is a strong baseline in this paper.

Evaluation Metrics

A ranked list of nodes is provided to choose appropriate anchors for each query. Following (Shen et al. 2020; Zhang et al. 2021; Jiang et al. 2022), four rank-based metrics are used to evaluate the performance of DNG and the compared methods:

-

•

Precision@k is defined as

where is 1 if the ground-truth node is in the top- predictions, otherwise it is 0. The results reported in Table 2 are the mean value of all test cases on Precision@1.

-

•

Recall@k is computed by:

where is the number of ground truth labels for one case. The results reported in Table 2 are also the mean value of all test cases on Recall@1.

- •

- •

Performance Evaluation

Similar to (Shen et al. 2020; Zhang et al. 2021; Jiang et al. 2022), we also split the original taxonomy into separate sub-taxonomies, with each sub-taxonomy containing all paths from a source node (e.g., the root node) to a destination node (e.g., one leaf node). During inference, candidate anchors are first initially produced from these distinct sub-taxonomies. Then these candidate anchors are recombined by combining the anchors from the nearest sub-taxonomies to generate better anchors. After several iterations, the ultimate predictions could be generated.

Table 2 summarizes the performance of all compared models on Precision, Recall, MR and MRR evaluation metrics. Generally, the DNG model outperforms all baseline approaches on most metrics and especially gains a large margin improvement in Recall across all datasets, indicating the actual anchors being ranked at high positions. Additionally, it has been shown that not all models are capable of producing comparatively superior results for all evolution metrics. Despite the findings demonstrating the excellent performance of TaxoEnrich, the proposed DNG model still surpasses this strong baseline on the majority of criteria. The advantage of the DNG model on MR and MRR reveals its superior ranking performance, validating the effectiveness of the designed node representation and the non-Gaussian constraint presented in this paper.

Capturing Hierarchical Semantics

Section 4 presents a re-designed node representation to capture the hierarchical semantics reflected by the inheritance factor. By estimating , appropriate anchors could be determined by locating the nodes with high factors, which will be illustrated in this section.

Figure 4 gives two real cases of the MAG-CS dataset for validating DNG’s ability to capture taxonomic structure. In the top sub-figure, there are five nodes, each with a single parent in the taxonomy, where and are the candidate nodes and the query node, respectively. Hence, the DNG model needs to estimate the inheritance vector on these candidate nodes. After carrying out the inference, the estimated is , indicating is its only “parent” with the inheritance factor and it does not inherit any feature from any other nodes. Since the inheritance factor of is the largest and others are , the appropriate anchor of query is . Figure 4 (b) depicts a more complicated example in which the convoluted relationships could more convincingly demonstrate DNG’s ability. The matching process for the query node is to estimate on other nodes, and the result is , indicating inherits features from both of and . Similarly, appropriate anchors are and since all other factors are equal to zero. Another notable finding is that (“campus network”) inherits more features from (“computer network”) than from (“computer security”), despite the fact that both and are the “parents”. This is consistent with their ground-truth relations in the MAG-CS dataset, which further validates the DNG’s superiority in identifying appropriate anchors for queries in taxonomic structures.

Capturing Directionality and Taxonomic Structure

One fundamental contribution of this paper is capturing the directionality of the is-a relation under the non-Gaussian constraint; thereby, the structure of a taxonomy could be illustrated. Theoretically, to validate this, we need to solve the exact backward equation (i.e., ) under the non-Gaussian constraint to see if it obeys the reversed form of Eq. 3 (i.e., ). However, despite the fact that the forward equation (see Eq. 3) has been theoretically proven correct, it is still difficult to determine the exact backward equation due to the lack of its prior knowledge. Consequently, we devise an alternative synthetic experiment to serve a similar purpose. Specifically, on the one hand, the distance between the actual and predicted features is also a measure of model’s reliability, with a lower value indicating better performance. On the other hand, supposing the backward equation obeys the reversed Eq. 3, the difference between the distances computed by the forward and backward equations could reflect the “deviation degree” of the backward one. It is anticipated that this “deviation degree” will have a larger value for nodes with non-Gaussian supplementary features compared with that for Gaussian ones. We use a uniform distribution as a non-Gaussian distribution. Consequently, these distance differences of a uniformly-distributed and a Gaussianly-distributed supplementary features are computed to evaluate the reasonableness of the hypothetical backward equation in this section.

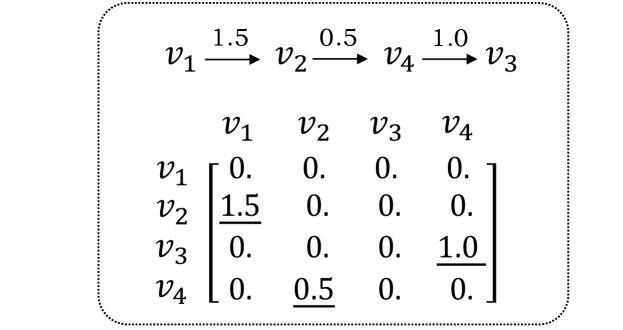

To conduct the experiment described above, two virtual taxonomies with four nodes and an identical structure (see Figure 5) are constructed using a uniform and a Gaussian distribution, respectively, where the edge set is with inheritance factors . The DNG model is then employed to estimate the matrix and the supplementary feature under these two distributions. For the uniform distribution, the distance between the actual and the predicted features with forward equation is: , while the distance between the actual and the backward-predicted features is . An analogous procedure is followed to obtain the distances for the Gaussian distribution: (forward) and (backward). As a result, the mean ratios of the “deviation degree” for the uniform and Gaussian distributions are 107.19% and 45.56%, respectively. The result implies that the hypothetical backward equation incurs a higher loss for the uniform distribution, demonstrating the irreversibility of Eq. 3 under the non-Gaussian constraint.

Apart from demonstrating DNG’s ability in capturing the directionality, we also conduct experiments to validate its ability in capturing the entire taxonomic structure. Figure 6 presents the estimated inheritance matrices of the taxonomy (see Figure 5) under different distributions. As illustrated in the upper matrix, compared to the estimated with Gaussian distribution, the one with non-Gaussian constraint (the top figure) more precisely capture the taxonomic structure, since the ground-truth “parents” have the larger inheritance factor, validating DNG’s ability in modeling taxonomic structure.

Related Work

To improve the taxonomy expansion task, enormous efforts have been made to incorporate the structural information of the seed. Manzoor et al. (2020) learns node embeddings with multiple linear maps to more effectively integrate the structural information of implicit edge semantics for heterogeneous taxonomies. For the specific modelling structural information, Shen et al. (2020) employs the egonet with a position-enhanced graph neural network, and a training scheme based on the InfoNCE loss is also employed to enhance the noise-robustness. However, Yu et al. (2020) converts the expansion task into the matching between query nodes and mini-paths with anchors to capture the locally structural information. For optimizing the taxonomic structure, Song et al. (2021) is the only one that considers the relationship among the different queries to ensure that the generated taxonomy has the optimal structure. Zhang et al. (2021) adds a new node by simultaneously considering the hypernym-hyponym pair, thereby overcoming the limitations of hyponym-only mode. Additionally, Wang et al. (2021) aims to maximize the coherence of the expanded taxonomy in order to take advantage of the hierarchical structure of the taxonomy. Jiang et al. (2022) combines the semantic features (extracted by surface names) and structural information (generated by the pseudo sentences of both vertical and horizontal views) to better represent each node, achieving state-of-the-art performance on this task.

Conclusion

In this paper, we sought to improve the taxonomy expansion task by investigating the taxonomic structure from hierarchical semantics and the directionality of the is-a relation, by which the DNG model was proposed. On the one hand, to model hierarchical semantics, each node is represented by the combination of the structure and the supplementary features, with the structure part weighted by an inheritance factor. On the other hand, based on the node representation, the is-a ’s directionality is easily translated into the irreversible inheritance of features, implemented by the non-Gaussian distribution of the supplementary features. In order to optimize DNG, we employed the log-likelihood learning objective and demonstrated the equivalency between this learning target and the required constraint. Finally, extensive experiments were conducted on two large real-world datasets, and the results not only verified the DNG’s superiority over other compared models on the expansion task but also confirmed its ability to capture the taxonomic structure.

Appendix A DNG Optimization

To optimize the DNG model, the derivation of Eq. 9 with regard to is required based on SGD (Bottou91stochasticgradient):

| (13) | ||||

where is the algebraic complement of . The distribution of supplementary feature is implemented by in this paper, making . Additionally, could be also computed as:

| (14) | ||||

where is the column of . Then, the derivation can be converted to:

| (15) | ||||

Let , the derivation could be converted to:

| (16) |

Since is the adjoint matrix of , the entry of in the row and column is the algebraic complement of (). Due to , the derivation could be translated into:

| (17) | ||||

Finally, the gradients of updating parameters are:

| (18) |

Appendix B The Darmois-Skitovich Theorem

According to the Darmois-Skitovich Theorem (Darmois-Skitovich1; Darmois-Skitovich2), let be independent, non-degenerate random variables: if there exist coefficients and that are all non-zero, the following two linear combinations

| (19) | ||||

are independent, then each random variable will be normally distributed. The contrapositive of the special case of this theorem for two random variables () will be used in the paper (i.e., the following corollary).

Corollary: If either of the independent random variables and are non-Gaussian, the following two linear combinations

| (20) |

will not be independent (i.e., and must be dependent).

Appendix C The Independence in

The joint probability density shown in Eq. 7 is based on the independence of supplementary features. Analogously, to prove the equivalency between DNG optimization and the required independence, we should firstly review the mutual information:

| (21) |

where is a vector contains a set of variables .

According to Eq. 21, the mutual information of could be computed as:

| (22) |

Based on Eq. 23 and Eq. 10, the relation between the mutual information and the learning target could be denoted as:

| (24) |

where const denotes a constant. From Eq. 24, it can be drawn that maximizing the joint probability of is equivalent to minimizing the mutual information of . This conclusion accords with the prerequisite of Eq. 7.

Acknowledgements

This material is based on research sponsored by Defense Advanced Research Projects Agency (DARPA) under agreement number HR0011-22-2-0047. The U.S. Government is authorised to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of DARPA or the U.S. Government.

References

- Aly et al. (2019) Aly, R.; Acharya, S.; Ossa, A.; Köhn, A.; Biemann, C.; and Panchenko, A. 2019. Every Child Should Have Parents: A Taxonomy Refinement Algorithm Based on Hyperbolic Term Embeddings. In Proceedings of the 57th Conference of the Association for Computational Linguistics (ACL) Volume 1: Long Papers, 4811–4817. Association for Computational Linguistics.

- Bai et al. (2021) Bai, Y.; Zhang, R.; Kong, F.; Chen, J.; and Mao, Y. 2021. Hypernym Discovery via a Recurrent Mapping Model. In Findings of the Association for Computational Linguistics: ACL/IJCNLP, volume ACL/IJCNLP 2021 of Findings of ACL, 2912–2921. Association for Computational Linguistics.

- Devlin et al. (2019) Devlin, J.; Chang, M.; Lee, K.; and Toutanova, K. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT Volume 1 (Long and Short Papers), 4171–4186. Association for Computational Linguistics.

- Fu et al. (2014) Fu, R.; Guo, J.; Qin, B.; Che, W.; Wang, H.; and Liu, T. 2014. Learning Semantic Hierarchies via Word Embeddings. In ACL Volume 1: Long Papers, 1199–1209. Association for Computer Linguistics.

- Hearst (1992) Hearst, M. A. 1992. Automatic Acquisition of Hyponyms from Large Text Corpora. In 14th International Conference on Computational Linguistics (COLING), 539–545.

- Hu et al. (2021) Hu, X.; Xu, J.; Wang, W.; Li, Z.; and Liu, A. 2021. A Graph Embedding Based Model for Fine-grained POI Recommendation. Neurocomputing, 428: 376–384.

- Hua et al. (2017) Hua, W.; Wang, Z.; Wang, H.; Zheng, K.; and Zhou, X. 2017. Understand Short Texts by Harvesting and Analyzing Semantic Knowledge. IEEE Trans. Knowl. Data Eng., 29(3): 499–512.

- Hyvärinen, Karhunen, and Oja (2001) Hyvärinen, A.; Karhunen, J.; and Oja, E. 2001. Independent Component Analysis. Wiley. ISBN 0-471-40540-X.

- Hyvärinen and Oja (2000) Hyvärinen, A.; and Oja, E. 2000. Independent component analysis: algorithms and applications. Neural Networks, 13(4-5): 411–430.

- Jiang et al. (2022) Jiang, M.; Song, X.; Zhang, J.; and Han, J. 2022. TaxoEnrich: Self-Supervised Taxonomy Completion via Structure-Semantic Representations. In WWW’22: The ACM Web Conference 2022, 925–934. Association for Computing Machinery.

- Jurgens and Pilehvar (2016) Jurgens, D.; and Pilehvar, M. T. 2016. SemEval-2016 Task 14: Semantic Taxonomy Enrichment. In Proceedings of the 10th International Workshop on Semantic Evaluation, SemEval@NAACL-HLT, 1092–1102. The Association for Computer Linguistics.

- K., Shet, and Acharya (2012) K., M. S.; Shet, K. C.; and Acharya, U. D. 2012. A New Similarity Measure for Taxonomy Based on Edge Counting. CoRR, arxiv.org/abs/1211.4709.

- Liu et al. (2019a) Liu, B.; Guo, W.; Niu, D.; Wang, C.; Xu, S.; Lin, J.; Lai, K.; and Xu, Y. 2019a. A User-Centered Concept Mining System for Query and Document Understanding at Tencent. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 1831–1841. Association for Computing Machinery.

- Liu et al. (2019b) Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; and Stoyanov, V. 2019b. RoBERTa: A Robustly Optimized BERT Pretraining Approach. CoRR, arxiv.org/abs/1907.11692.

- Ma et al. (2021) Ma, M. D.; Chen, M.; Wu, T.; and Peng, N. 2021. HyperExpan: Taxonomy Expansion with Hyperbolic Representation Learning. In Findings of the Association for Computational Linguistics: EMNLP. Association for Computational Linguistics.

- Manzoor et al. (2020) Manzoor, E. A.; Li, R.; Shrouty, D.; and Leskovec, J. 2020. Expanding Taxonomies with Implicit Edge Semantics. In Proceedings of The Web Conference 2020, 2044–2054. Association for Computing Machinery / IW3C2.

- Mikolov et al. (2013) Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G. S.; and Dean, J. 2013. Distributed Representations of Words and Phrases and their Compositionality. In Advances in Neural Information Processing Systems 26: 27th Annual Conference on Neural Information Processing Systems 2013 (NIPS), 3111–3119.

- Rimell (2014) Rimell, L. 2014. Distributional Lexical Entailment by Topic Coherence. In Proceedings of the 14th Conference of the European Chapter of the Association for Computational Linguistics (EACL), 511–519. The Association for Computer Linguistics.

- Santus et al. (2014) Santus, E.; Lenci, A.; Lu, Q.; and im Walde, S. S. 2014. Chasing Hypernyms in Vector Spaces with Entropy. In Proceedings of the 14th Conference of the European Chapter of the Association for Computational Linguistics, EACL, 38–42. The Association for Computer Linguistics.

- Shen et al. (2020) Shen, J.; Shen, Z.; Xiong, C.; Wang, C.; Wang, K.; and Han, J. 2020. TaxoExpan: Self-supervised Taxonomy Expansion with Position-Enhanced Graph Neural Network. In WWW’20: The Web Conference 2020, 486–497. Association for Computing Machinery / IW3C2.

- Sinha et al. (2015) Sinha, A.; Shen, Z.; Song, Y.; Ma, H.; Eide, D.; Hsu, B. P.; and Wang, K. 2015. An Overview of Microsoft Academic Service (MAS) and Applications. In Proceedings of the 24th International Conference on World Wide Web Companion, WWW, 243–246. Association for Computing Machinery.

- Song et al. (2021) Song, X.; Shen, J.; Zhang, J.; and Han, J. 2021. Who Should Go First? A Self-Supervised Concept Sorting Model for Improving Taxonomy Expansion. In Proceedings of the ACM Web Conference 2022, WWW’22. Association for Computing Machinery / IW3C2.

- Tong, Inouye, and Liu (1993) Tong, L.; Inouye, Y.; and Liu, R.-w. 1993. Waveform-Preserving Blind Estimation of Multiple Independent Sources. IEEE Transactions on Signal Processing, 41(7): 2461–2470.

- van den Oord, Li, and Vinyals (2018) van den Oord, A.; Li, Y.; and Vinyals, O. 2018. Representation Learning with Contrastive Predictive Coding. CoRR, arxiv.org/abs/1807.03748.

- Wang et al. (2021) Wang, S.; Zhao, R.; Chen, X.; Zheng, Y.; and Liu, B. 2021. Enquire One’s Parent and Child Before Decision: Fully Exploit Hierarchical Structure for Self-Supervised Taxonomy Expansion. In Proceedings of the Web Conference 2021, WWW’21, 3291–3304. Association for Computing Machinery / IW3C2.

- Wang et al. (2017) Wang, W.; Yin, H.; Chen, L.; Sun, Y.; Sadiq, S.; and Zhou, X. 2017. ST-SAGE: A Spatial-Temporal Sparse Additive Generative Model for Spatial Item Recommendation. ACM Transactions on Intelligent Systems and Technology, 8(3).

- Wang et al. (2015) Wang, Z.; Zhao, K.; Wang, H.; Meng, X.; and Wen, J. 2015. Query Understanding through Knowledge-Based Conceptualization. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence (IJCAI), 3264–3270. AAAI Press.

- Wu et al. (2012) Wu, W.; Li, H.; Wang, H.; and Zhu, K. Q. 2012. Probase: a probabilistic taxonomy for text understanding. In Proceedings of the ACM SIGMOD International Conference on Management of Data, 481–492. Association for Computing Machinery.

- Yao et al. (2022) Yao, K.; Liang, J.; Liang, J.; Li, M.; and Cao, F. 2022. Multi-view Graph Convolutional Networks with Attention Mechanism. Artif. Intell., 307(C): 103708.

- Yu et al. (2020) Yu, Y.; Li, Y.; Shen, J.; Feng, H.; Sun, J.; and Zhang, C. 2020. STEAM: Self-Supervised Taxonomy Expansion with Mini-Paths. In The 26th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 1026–1035. Association for Computing Machinery.

- Zeng et al. (2021) Zeng, Q.; Lin, J.; Yu, W.; Cleland-Huang, J.; and Jiang, M. 2021. Enhancing Taxonomy Completion with Concept Generation via Fusing Relational Representations. In KDD’21: The 27th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2104–2113. Association for Computing Machinery.

- Zhang et al. (2021) Zhang, J.; Song, X.; Zeng, Y.; Chen, J.; Shen, J.; Mao, Y.; and Li, L. 2021. Taxonomy Completion via Triplet Matching Network. In Proceeding of the Thirty-Fifth AAAI Conference on Artificial Intelligence, 4662–4670. AAAI Press.