Diversity-enhancing Generative Network for Few-shot Hypothesis Adaptation

Abstract

Generating unlabeled data has been recently shown to help address the few-shot hypothesis adaptation (FHA) problem, where we aim to train a classifier for the target domain with a few labeled target-domain data and a well-trained source-domain classifier (i.e., a source hypothesis), for the additional information of the highly-compatible unlabeled data. However, the generated data of the existing methods are extremely similar or even the same. The strong dependency among the generated data will lead the learning to fail. In this paper, we propose a diversity-enhancing generative network (DEG-Net) for the FHA problem, which can generate diverse unlabeled data with the help of a kernel independence measure: the Hilbert-Schmidt independence criterion (HSIC). Specifically, DEG-Net will generate data via minimizing the HSIC value (i.e., maximizing the independence) among the semantic features of the generated data. By DEG-Net, the generated unlabeled data are more diverse and more effective for addressing the FHA problem. Experimental results show that the DEG-Net outperforms existing FHA baselines and further verifies that generating diverse data plays a vital role in addressing the FHA problem.

1 Introduction

Data and expert knowledge are always scarce in newly-emerging fields, thus it is very important and challenging to study how to leverage knowledge from other similar fields to help complete tasks in the new fields. To cope with this challenge, transfer learning methods were proposed to leverage the knowledge of source domains (e.g., data in source domains or models trained with data in source domains) to help complete the tasks in other similar domains (a.k.a. the target domains) (Zamir et al., 2018; Sun et al., 2019; Liu et al., 2019; Wang et al., 2019; Zhang et al., 2020; Teshima et al., 2020; Jing et al., 2020; Liu et al., 2021a; Zhong et al., 2021; Zhou et al., 2020; Shui et al., 2022; Guo et al., 2022; Fang et al., 2022; Chi et al., 2022; Wang et al., 2023). Among many transfer learning methods, hypothesis transfer learning (HTL) methods have received a lot of attention since it does not require access to the data in source domains, which prevents the data leakage issue and protects the data privacy (Du et al., 2017; Yang et al., 2021a, b). Recently, the few-shot hypothesis adaptation (FHA) problem has been formulated to make HTL more realistic, which is suitable to solve many problems (Liu et al., 2021b, c; Snell et al., 2017; Wang et al., 2020; Yang et al., 2020). In FHA, only a well-trained source classifier (i.e., a source hypothesis) and a few labeled target data are available (Chi et al., 2021a).

Similar to HTL, FHA also aims to obtain a good target-domain classifier with the help of a source hypothesis and a few target-domain data (Chi et al., 2021a; Motiian et al., 2017). Recently, generating unlabeled data has been shown to be an effective strategy to address in the FHA problem (Chi et al., 2021a). The target-oriented hypothesis adaptation network (TOHAN), a one-step solution to the FHA problem, constructed an intermediate domain to enrich the training data. The data in intermediate domain are highly compatible with data in source domain and target domain (Balcan & Blum, 2010). By the generated unlabeled data in the intermediate domain, TOHAN partially overcame the problems caused by data scarcity in the target domain.

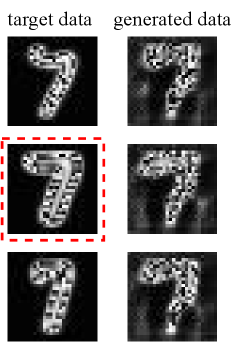

However, the existing methods ignore the diversity of the generated data or the independence among the generated data, so that the generated data are extremely similar or even the same. Lack of diversity leads to less effective data for addressing the FHA problem. Taking the FHA task of digits datasets as an example, we find that the data generated by TOHAN has an issue that the generator tends to generate similar data as the target data and even copy target data (Figure 1(a)). To show how diversity matters in the FHA problem intuitionally, we conduct the experiments in the digits datasets. We use a few labeled target-domain data and the increasing unlabeled data to train the target model. The result is shown in Figure 1(b). For the source data and target data, it is clear that the accuracy of the trained model is higher as the number of data increases. For the generated data, the growth of data volume only helps to improve the accuracy of the model when it is small (e.g., less than 45 in Figure 1(b)). However, the accuracy of the model fluctuates around 33% regardless of the increase in the unlabeled data, when the number of data exceeds 35. This result shows that the model trained by generated data converges faster than those trained by the source data and target data since the generated data have less diversity.

In this paper, to show how the diversity of unlabeled data (i.e., the independence among unlabeled data) affects the FHA methods, we theoretically analyze the effect of the sample complexity regarding the FHA problem (Theorem 1). In this analysis, we adopt the log-coefficient score (Dagan et al., 2019) to measure the dependency among unlabeled data. Our results show that we can still count on the unlabeled data to help address the FHA problem as long as the unlabeled data are weakly dependent (). Nevertheless, once , the results in Theorem 1 may not hold, resulting in failure theoretically. In addition, we find that high dependency among unlabeled data usually means that we need more unlabeled data to obtain a good target-domain classifier. From the above analysis and Figure 1, we argue that diversity matters in addressing the FHA problem.

To this end, we propose the diversity-enhancing generative network (DEG-Net) for the FHA problem, which is a weight-shared conditional generative method equipped with a kernel independence measure: the Hilbert-Schmidt independence criterion (HSIC) (Gretton et al., 2005; Ma et al., 2020; Pogodin & Latham, 2020), which is used in various situations, e.g., clustering (Song et al., 2007; Blaschko & Gretton, 2008), independence testing (Gretton et al., 2007), self-supervised classification (Li et al., 2021), and model-inversion attack (Peng et al., 2022). Although the log-coefficient score is used to analyze the effect of the sample complexity regarding the FHA problem, its calculation requires knowing the target-domain distribution, which is unknown in practice. Yet, HSIC can be estimated easily by the data sample. Thus, we adopt the HSIC to calculate the dependency among generated unlabeled data.

The overview of DEG-Net is in Figure 2, showing that there are two modules in DEG-Net: the generation module and the adaptation module. In the generation module, we train the conditional generator with a well-trained source classifier and a few-target domain data. To train the generator with both the source-domain and target-domain knowledge and improve the diversity of generated data simultaneously, the generative loss of DEG-Net consists of 3 parts: classification loss, similarity loss, and diversity loss. More specifically, DEG-Net generates data via minimizing the HSIC value (i.e., maximizing the independence) between the semantic features of the target data and generated data, where the semantic features are the hidden-layer outputs of the well-trained source hypothesis. To use the generalization knowledge in the semantic features of data shared by different classes (Chen et al., 2020; Chi et al., 2021b; Yao et al., 2021), the generator is a weight-shared network. As for the adaptation module, the classifier is trained using the generated data and a few labeled target-domain data. The adaptation module consists of a classifier and a group discriminator (Chi et al., 2021a; Motiian et al., 2017). With the help of the group discriminator which tries to confuse the classifier to distinguish data from different domains, the classifier is trained to classify the data over the target domain using the generated data and a few label data drawn from the target domain.

We verify the effectiveness of DEG-Net on 8 FHA benchmark tasks (Chi et al., 2021a), including the digit datasets and object datasets. Experimental results show that DEG-Net outperforms existing FHA methods and achieves state-of-the-art performance. Besides, due to the weight-shared generator, DEG-Net is much faster than previous generative FHA methods in the training. We also conduct an ablation study to verify that each component in the DEG-Net is useful, which shows that diverse generated data can help improve the performance when addressing the FHA problem, which lights up a novel road for the FHA problem.

2 Preliminary and Related Works

Problem Setting. In this section, we formalize the FHA problem mathematically. Denoting by the input space and by the output space, where is the number of classes. The source domain (target domain) can be defined as a joint probability distribution on ( on ). Besides, we assume that there is a well-trained model . The is trained with data drawn independently and identically distributed (i.i.d) from with the aim of minimizing , where is a loss function to measure if two elements in are close, and is an element in a hypothesis set . Thus, can be defined as

| (1) |

is also called the source hypothesis in our paper. Hence, the FHA problem is defined as follows:

Problem 1.

Hypothesis Transfer Learning Methods. Hypothesis transfer learning (HTL) aims to train a classifier with only a well-trained classifier and a few labeled data or abundant unlabeled data over the target domain (Kuzborskij & Orabona, 2013; Tommasi et al., 2010; Liang et al., 2020). In Kuzborskij & Orabona (2013), they used the leave-one-out error to find the optimal transfer parameters based on the regularized least squares with biased regularization. SHOT (Liang et al., 2020) froze the source hypothesis and trained a domain-specific encoding module using the abundant unlabeled data. Later, neighborhood reciprocity clustering (Yang et al., 2021a) was proposed to address HTL by encouraging reciprocal neighbors to concord in their label prediction. HTL problem does not have a limitation on the amount of labeled data drawn from the target domain for the transfer training, which is different from the FHA problem.

Target-oriented Hypothesis Adaptation Network. TOHAN (Chi et al., 2021a) is a one-step solution for the FHA problem. It has a good performance due to using the generated unlabeled data in the adaptation process. Motivated by the learnability in semi-supervised learning (SSL), TOHAN found that unlabeled data in the intermediate domain, which is compatible with both the source classifier and target classifier, can address the FHA problem for providing the additional information in the training. Guided by this principle, the key module of TOHAN is to generate the unlabeled data drawn from the probability distribution :

| (2) |

where measures how compatible (resp. ) is with data distribution (Balcan & Blum, 2010).

3 Theoretical Analysis Regarding the Data Diversity in FHA

In previous works, researchers have shown that generated high-compatible data can help address the FHA problem. However, as discussed in Section 1, the diversity of the generated data matters in addressing the FHA. Besides, the previous studies assume that the generated data is independent of their theory, and is inconsistent with their methods. In this section, we will show how the dependency among the generated data affects the performance of FHA methods. Similar to Chi et al. (2021a), our theory is also based on the theory regarding SSL (Webb & Sammut, 2010).

Dependency Measure. Following Dagan et al. (2019), we use the log-coefficient to theoretically analyze the data diversity. log-coefficient measures the dependency among observations from a random variable .

Definition 1 (Log-influence and log-coefficient (Dagan et al., 2019)).

Let be a random variable over and let denote either its probability distribution if discrete or its density if continuous. Assume that on all . For any , define the log-influence between and as

| (3) | |||

Then the log-coefficient of is defined as .

From Definition 1, it is clear that will be zero if and are independent (for any ).

Sample Complexity Analysis for FHA. Since the generated data are unlabeled, we follow the theory regarding SSL to analyze how the generated unlabeled data can help address the FHA problem. More importantly, we will analyze how the dependency on the generated data affects the performance of FHA methods. For simplicity, we consider a binary SSL problem (i.e., ).

Let be the optimal target classifier. Let be the true error rate of a hypothesis over a marginal distribution . In FHA, its learnability mainly depends on the compatibility measuring how “compatible” is to one unlabeled data . In the following, we use to represent the expectation of the compatibility of data from on a classifier , and let be an observation of a random variable , where the distribution regarding is , . The following theorem shows that, under some conditions, we can learn a good even when the dependency among unlabeled target data exists.

Theorem 1.

Let be the empirical compatibility over and . If , , and , then unlabeled data and labeled data are sufficient to learn to error with probability , for

| (4) | |||

and

| (5) |

where is assumed to have a finite VC dimension, , and is the expected number of splits of data drawn from using hypotheses in of compatibility more than . In particular, with probability at least , we have , where .

The proof of Theorem 1 is presented in Appendix A, which mainly follows the recent result in the problem of learning from dependent data (Dagan et al., 2019).

Theorem 1 shows that when the dependency among the unlabeled data is weak (i.e., ), we can obtain a similar result compared to the classical result in the SSL theory (Balcan & Blum, 2010). Namely, if we can generate data that are highly compatible with , which means that is very small and thus is small, thus we do not need a lot of labeled data drawn from the target domain to learn a good (Chi et al., 2021a).

Diversity Matters in FHA. Theorem 1 also shows that the diversity of unlabeled data matters in the FHA problem. There are two reasons. The first reason is that Theorem 1 might not be true if there is strong dependency among the unlabeled data (e.g., ). This will directly make the previous work lose the theoretical foundation to address the FHA problem. The second reason is that we need more unlabeled data to reach the same error if the dependency among the unlabeled data increases. Specifically, if is very close to , then could be a very large number. The above reasons motivate us to take care of the dependency on the generated data. To weaken such dependency, we propose our method, diversity-enhancing generative network (DEG-Net) for the FHA problem.

4 Diversity-enhancing Generative Network for FHA Problem

In this section, we propose the diversity-enhancing generative network (DEG-Net) for the FHA problem. DEG-Net has two modules: (1) the generation module to generate diverse data from the intermediate domain ; (2) the adaptation module to train the classifier for the target domain using the generated data and labeled target data.

4.1 Diversity-enhancing Generation

In order to overcome the shortcomings of the current generative method for the FHA problem, we come up with solutions for both the generative architecture and the loss function. As for the generative architecture, we propose the weight-shared conditional generative network for generating the data with the specific category. We also design a novel loss function to constrain the similarities and diversity of the semantic information of the generated data.

Weight-shared Conditional Generative Network. As discussed before, for using generalized features which are shared among different classes to improve the quality of generated data and reduce the time of training, the weight-shared conditional generative network is promising. Following Chi et al. (2021a), the generator aims to generate data of specific categories from Gaussian random noise. The encoder outputs the semantic feature and the class probability distribution of the generated data. To achieve the aim of the generative method, we design the two-part loss functions: the classification loss function and the similarity of the semantic feature loss function.

We assume that is the generated data with a specific category , where the inputs of generator are the Gaussian random noise and the specific categorical information . Specifically, we use the one-hot encoded label as the categorical information. The generated data inputs to the well-trained source-domain classifier , where the output of is the group discriminator feature, which will be used in the adaptation module and the output of is probability feature , where is the probability of the generated data belonging to the class respectively. The semantic feature used in the similarity loss and diversity loss is the hidden-layer output of (details of the hidden-layer selection can be found in Appendix C.). We aim to update the parameters of the generator to force the generated data with the categorical information belonging to the class, i.e., making close to 1. Specifically, we minimize the following loss to generate the data of a specific category :

| (6) |

where is the batch size of the generator.

To make the generated data closer to data in the target domain, we need to define the loss function to measure the difference between data of two different domains. Motivated by Zheng & Zhou (2021), DEG-Net uses semantic features to calculate the similarities. To avoid the copy issue, we decided to use the distance , where , since it encourages larger gradients for feature dimensions with higher residual errors. Compared to the -norm, it is better to measure the similarity of the semantic features between the generated images and the target images, since distance is more robust to outliers (Oneto et al., 2020). Thus, the similarity loss is defined as follows:

| (7) |

where ( is compact and is continuous), is the number of labeled data drawn from the target domain, and are the semantic features of target data and generated data, respectively. Combining Eq. (6) and Eq. (7), we obtain the loss to train the weight-shared conditional generative network:

| (8) |

where is a hyper-parameter between two losses. Note that optimizing Eq. (8) is corresponding to generative method’s principle Eq. (2) for the FHA problem , where Eq. (6) (resp. Eq. (7)) is corresponding to (resp. ). To ensure that the conditional generator can generate the image with the correct class label, we pretrain the generator for some epochs.

Generative Function with Diversity. As discussed above, the weak dependence among unlabeled data is an important condition for using generated unlabeled data to address the FHA problem. To ensure that the unlabeled data are weakly dependent among unlabeled data (i.e., to generate more diverse unlabeled data), it is necessary to use diversity regularization to train the generator. Unfortunately, the log-coefficient score, a dependence measure used to analyze the sample complexity, is hard to calculate, since its calculation requires the unknown distribution regarding the target-domain data. HSIC, a kernel independence measure can also measure the dependency of the generated data. Different from the log-coefficient score, HSIC can be easily estimated (Gretton et al., 2005; Song et al., 2012):

| (9) |

where () is the kernel matrix ( is the kernel function) and is the centering matrix. We minimize the HSIC measure of the generated data’s semantic features to obtain weakly dependent data. Specifically, we use the Gaussian kernel as the kernel function ,and minimize the following loss to generate more diverse data:

| (10) | ||||

where is the kernel matrice of the semantic features of the generated data with a specific class. Hence, we obtain the total loss to train the generator with diversity enhancement:

| (11) |

where is a hyper-parameter between the generative loss and diversity regularization. Note that the diversity regularization and the similarity loss restrict themselves.

| Tasks | WA | FHA Methods | Number of Target Data per Class | ||||||

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | |||

| 69.7 | FT | 74.40.7 | 76.71.9 | 76.92.2 | 77.31.1 | 77.61.4 | 78.32.1 | 78.31.6 | |

| SHOT | 87.20.2 | 87.90.3 | 87.80.4 | 88.00.4 | 87.90.5 | 88.00.3 | 88.40.3 | ||

| S+FADA | 83.70.9 | 86.00.4 | 86.11.1 | 86.50.8 | 86.81.4 | 87.00.6 | 87.20.8 | ||

| T+FADA | 84.20.1 | 84.20.3 | 85.20.9 | 85.20.6 | 86.01.5 | 86.81.5 | 87.20.5 | ||

| TOHAN | 87.70.7 | 88.30.5 | 88.51.2 | 89.30.9 | 89.40.8 | 90.01.0 | 90.41.2 | ||

| DEG-Net | 83.10.9 | 86.20.8 | 86.50.6 | 88.70.9 | 89.60.5 | 91.50.6 | 92.10.6 | ||

| 24.1 | FT | 26.71.0 | 26.82.1 | 26.81.6 | 27.00.7 | 27.31.2 | 27.50.8 | 28.31.5 | |

| SHOT | 25.72.2 | 26.91.2 | 27.92.6 | 29.10.4 | 29.11.4 | 29.61.7 | 29.81.5 | ||

| S+FADA | 25.61.3 | 27.70.5 | 27.80.7 | 28.21.3 | 28.41.4 | 29.01.0 | 29.61.9 | ||

| T+FADA | 25.31.0 | 26.30.8 | 28.91.0 | 29.11.3 | 29.21.3 | 31.90.4 | 32.41.8 | ||

| TOHAN | 26.70.1 | 28.61.1 | 29.51.4 | 29.60.4 | 30.51.2 | 32.10.2 | 33.20.8 | ||

| DEG-Net | 27.20.3 | 28.51.3 | 29.70.9 | 30.70.8 | 32.91.5 | 33.71.8 | 34.91.6 | ||

| 64.3 | FT | 64.91.1 | 66.51.5 | 66.71.7 | 67.31.1 | 68.12.3 | 68.30.5 | 69.71.4 | |

| SHOT | 74.70.3 | 75.51.4 | 75.61.0 | 75.80.7 | 77.12.1 | 77.81.6 | 79.60.6 | ||

| S+FADA | 72.21.4 | 73.61.4 | 74.71.4 | 76.21.3 | 77.21.7 | 77.83.0 | 79.71.9 | ||

| T+FADA | 71.70.6 | 74.31.9 | 74.50.8 | 75.92.1 | 77.71.5 | 76.81.8 | 79.71.9 | ||

| TOHAN | 75.80.9 | 76.81.2 | 79.40.9 | 80.20.6 | 80.51.4 | 81.11.1 | 82.61.9 | ||

| DEG-Net | 75.20.3 | 76.91.5 | 78.21.2 | 80.71.5 | 81.71.7 | 83.11.7 | 84.32.2 | ||

| 70.2 | FT | 70.20.0 | 70.60.3 | 70.70.1 | 70.80.3 | 70.90.2 | 71.10.3 | 71.10.4 | |

| SHOT | 72.61.9 | 73.62.0 | 74.10.6 | 74.61.2 | 74.90.7 | 75.40.3 | 76.11.5 | ||

| S+FADA | 74.41.5 | 83.10.7 | 83.31.1 | 85.90.5 | 86.01.2 | 87.62.6 | 89.11.0 | ||

| T+FADA | 74.21.8 | 81.64.0 | 83.40.8 | 82.02.3 | 86.20.7 | 87.20.8 | 88.20.6 | ||

| TOHAN | 76.01.9 | 83.30.3 | 84.20.4 | 86.51.1 | 87.11.3 | 88.00.5 | 89.70.5 | ||

| DEG-Net | 76.21.3 | 78.21.3 | 85.70.6 | 85.90.8 | 88.61.6 | 89.51.2 | 90.20.7 | ||

| 82.9 | FT | 83.50.4 | 84.32.4 | 84.50.7 | 85.51.3 | 86.61.0 | 87.20.7 | 88.12.7 | |

| SHOT | 83.10.5 | 85.50.3 | 85.80.6 | 86.00.2 | 86.60.2 | 86.70.2 | 87.00.1 | ||

| S+FADA | 83.20.2 | 84.00.3 | 85.01.2 | 85.60.5 | 85.70.6 | 86.20.6 | 87.21.1 | ||

| T+FADA | 82.90.7 | 83.90.2 | 84.70.8 | 85.40.6 | 85.60.7 | 86.30.9 | 86.60.7 | ||

| TOHAN | 84.00.5 | 85.20.3 | 85.60.7 | 86.50.5 | 87.30.6 | 88.20.7 | 89.20.5 | ||

| DEG-Net | 82.20.7 | 85.90.6 | 86.51.5 | 87.80.9 | 88.90.9 | 90.30.5 | 91.61.2 | ||

| 17.3 | FT | 23.41.8 | 23.62.7 | 23.81.6 | 24.61.4 | 24.61.2 | 24.80.7 | 25.51.8 | |

| SHOT | 30.31.2 | 31.60.4 | 29.80.5 | 29.40.3 | 29.70.5 | 29.80.8 | 30.10.9 | ||

| S+FADA | 28.11.2 | 28.71.3 | 29.01.2 | 30.11.1 | 30.31.3 | 30.71.0 | 30.91.5 | ||

| T+FADA | 27.51.4 | 27.90.9 | 28.41.3 | 29.41.8 | 29.50.7 | 30.21.0 | 30.41.7 | ||

| TOHAN | 29.91.2 | 30.51.2 | 31.41.1 | 32.80.9 | 33.11.0 | 34.01.0 | 35.11.8 | ||

| DEG-Net | 29.11.3 | 30.71.1 | 31.80.7 | 33.01.6 | 33.51.4 | 35.11.3 | 36.21.2 | ||

4.2 Adaptation Module Using Generated Data

Following Chi et al. (2021a), we create paired data using the labeled data in the target domain and the generated data and assign the group labels to the paired groups under the following rules: pairs the generated data with the same class label; pairs the generated data and the data in the target domain with the same class label; pairs the generated data but with different class label; pairs the generated data and the data in the target domain and also with different class label. By using adversarial learning, we train a discriminator which could distinguish between the data in different domains while maintaining high classification accuracy on generated data. The discriminator is a four-class classifier with the inputs of the above paired group data. Different from classical adversarial domain adaptation (Ganin et al., 2016; Jiang et al., 2020), the group discriminator decides which of the four groups a given data pair belongs to. By freezing the encoder, we train with the cross-entropy loss:

| (12) |

where represents the empirical mean value, is the group label of the group and is the output of the encoder with the paired data input.

Next, we will train the classifier while freezing the group discriminator, which is initialized with the same weight as that in the source classifier . Motivated by non-saturating games (Goodfellow, 2016), we minimize the following loss to update :

| (13) |

where is a hyper-parameter, is the cross-entropy loss, and is the optimal target model. As demonstrated in Theorem 1, it is only necessary to use generated unlabeled data for addressing the FHA problem. Thus, we only use labeled target data for target supervised loss in Eq. (4.2).

| Tasks | FHA Methods | ||||||

| WA | FT | SHOT | S+FADA | T+FADA | TOHAN | DEG-Net | |

| 70.6 | 71.51.0 | 71.90.4 | 72.10.4 | 71.30.5 | 72.80.1 | 74.30.3 | |

| 51.8 | 54.30.5 | 53.90.2 | 56.90.5 | 55.80.8 | 56.60.3 | 57.20.5 | |

| FHA Methods | Number of Targe Data per Class | ||

| 8 | 9 | 10 | |

| SHOT | 62.07 | 62.28 | 62.36 |

| TOHAN | 64.75 | 64.98 | 64.83 |

| DFG-Net | 65.36 | 65.55 | 66.36 |

| FHA Methods | Number of Target Data per Class | ||||||

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | |

| TOHAN | 76.01.9 | 83.30.3 | 84.20.4 | 86.51.1 | 87.11.3 | 88.00.5 | 89.70.5 |

| separate generative DEG-Net | 75.70.7 | 84.70.5 | 85.01.2 | 85.90.9 | 87.40.8 | 89.11.0 | 90.4 1.2 |

| DEG-Net w/o diversity | 87.21.9 | 89.50.3 | 89.20.4 | 90.21.1 | 90.31.3 | 91.10.5 | 91.20.5 |

| DEG-Net | 87.30.9 | 89.20.8 | 90.10.6 | 90.80.9 | 90.60.5 | 91.50.6 | 92.10.6 |

5 Experiments

We compare DEG-Net with previous FHA methods on digits datasets (i.e. MNIST (), USPS (), and SVHN ()) and objects datasets (i.e. CIFAR-10 () , STL-10 () and VisDA-C), following (Chi et al., 2021a). Following the standard domain-adaptation protocols (Shu et al., 2018), we compare DEG-Net with 4 baselines: (1) Without adaptation (WA); (2) Fine tuning (FT); (3) SHOT (Liang et al., 2020); (4) S+FADA (Chi et al., 2021a); (5) T+FADA (Chi et al., 2021a); and (6) TOHAN (Chi et al., 2021a). Details regarding datasets are in Appendixe B and details regarding baselines and implementation are in and Appendixe C.

Digits Datasets. Following Chi et al. (2021a) and Motiian et al. (2017), We conduct 6 tasks of the adaptation among the 3 digital datasets and choose the number of target data from 1 to 7 per class. The classifier accuracy on the target domain of our method over 6 tasks is shown in Table 1. The results show that the performance of DEG-Net is the best on almost all the tasks. It is clear that the accuracy of DEG-Net is lower than TOHAN when the amount of target data is too small. The diversity regularization and the similarity loss restrict each other, to avoid the copy issue. However, when the amount of target data is too small, the target domain information is a few, so the generator is less likely to generate similar data with the target domain. Diversity loss enhances this adversarial effect, resulting DEG-Net degrading to TOHAN and SHOT. Another improvement of DEG-Net over TOHAN is the faster training process of the generator. We need 0.93s to complete the training within each epoch in DEG-Net while needing 1.35s in TOHAN.

Objects Datasets. Following Chi et al. (2021a), we examine the performance of DEG-Net on 2 object tasks and choose the number of target data as 10 per class. The classification accuracies on object tasks are shown in Table 2.Our methods outperform baselines. In , we achieve a 1.5% improvement over TOHAN. In , we achieve a performance accuracy of 57.2%, 0.3% improvement over S+FADA. The effect of DEG-Net is not obvious in objective tasks. The possible reason may be that the simple structure of generative networks is difficult to generate diverse and correct data since the complexity of datasets

For estimating our method on larger datasets, we conduct the experiment on the VisDA-C (Peng et al., 2017), a commonly used large-scale domain adaptation benchmark. We choose synthesis data as the source domain data and real data as the target domain data and choose the number of target data from 8 to 10 per class. The classifier accuracy on the target domain of our method is shown in Table 3. DEG-Net achieves the highest accuracy over the baselines. We find that the accuracy of the generative-based methods (DEG-Net and TOHAN) is higher than SHOT. The reason may be that the generative-based methods can provide a similar amount of valid generated data per class for adaptation learning. Our method performs well than TOHAN, which can demonstrate that diversity matters in the generative-based methods for addressing the FHA problem. In addition, it should be noted that TOHAN trains different generators per class, leading to consuming more memory resources.

DEG-Net Generates More Diverse Data Than TOHAN. In this part, we analyze the diversity of the generated data by DEG-Net and TOHAN to see if our generation process can produce more diverse data than TOHAN’s. We choose the square root of the HSIC to measure the diversity of the generated data in the task , and calculate the HSIC value among the target-domain data as a reference value that is . After the calculation, the average diversity measure of DEG-Net is , and the average diversity measure of TOHAN is . DEG-Net can generate more diverse data than TOHAN. The detailed diversity analysis can be found in Appendix D.

Ablation Study. To show the advantage of the weight-shared architecture and the diversity loss, we conduct two experiments: (1) The architecture of weight-shared is the same as the DEG-Net but uses Eq. (8) to train the generator (DEG-Net without diversity). (2) The separate generative method, which is similar to TOHAN, has generators and uses the semantic features to calculate the similarity loss for training each generator:

| (14) |

As shown in Table 4, DEG-Net works better than both methods introduced above, and the weight-shared architecture works better than the separate generative method, which reveals that both the weight-shared architecture and the diversity loss can improve the quality of generated data and thus achieve the higher accuracy. Specifically, compared to the modified DEG-Net without the diversity loss, the separate generative method ignores the generalization knowledge in the semantic features of data which is shared with all the classes. Modified DEG-Net discards the diversity loss, and thus generates low diverse data and results in worse performance. However, the HSIC diversity loss does not work for all situations. DEG-Net achieves similar accuracy with modified DEG-Net without diversity or even worse if the amount of the labeled data is very few (i.e., ). This phenomenon may be caused by worse data generated by the diversity method. While estimating HSIC, we need enough data to ensure the value of HSIC can be estimated well. Since the diversity loss restricting to the similarity loss, the generator is less likely to generate similar data over target domain while HSIC loss is not estimated well (i.e., the distribution of generated data is far from the target domain). In addition, we conduct the ablation study for comparing our method to TOHAN with the basic geometric data augmentation for the FHA problem over the digit tasks. The results show that the performance of the augmentation techniques is worse than our method in general and details of these experiments can be found in Appendix D.

6 Conclusion

In this paper, we focus on generating more diverse unlabeled data for addressing the few-shot hypothesis adaptation (FHA) problem. We experimentally and theoretically prove that the diversity of generated data (i.e., the independence among the generated data) matters in addressing the FHA problem. For addressing the FHA problem, we propose a diversity-enhancing generative network (DEG-Net), which consists of the generation module and the adaptation module. With weight-shared conditional generative method equipped with a kernel independence measure: Hilbert-Schmidt independence criterion, DEG-Net can generate more diverse unlabeled data and achieve better performance. Experiments show that the generated data of DEG-Net are more diverse. Since the high diversity of the generated data, DEG-Net achieves state-of-the-art performance when addressing the FHA problem, which lights up a novel and theoretical-guaranteed road to the FHA problem in the future.

Acknowledgements

RJD and BH were supported by NSFC Young Scientists Fund No. 62006202 and Guangdong Basic and Applied Basic Research Foundation No. 2022A1515011652, and HKBU CSD Departmental Incentive Grant. BH was also supported by RIKEN Collaborative Research Fund. MS was supported by JST CREST Grant Number JPMJCR18A2.

References

- Balcan & Blum (2010) Balcan, M. and Blum, A. A discriminative model for semi-supervised learning. Journal of ACM, 57(3):19:1–19:46, 2010.

- Blaschko & Gretton (2008) Blaschko, M. and Gretton, A. Learning taxonomies by dependence maximization. In NeurIPS, 2008.

- Chen et al. (2020) Chen, X., Wang, Z., Tang, S., and Muandet, K. Mate: plugging in model awareness to task embedding for meta learning. In NeurIPS, 2020.

- Chi et al. (2021a) Chi, H., Liu, F., Yang, W., Lan, L., Liu, T., Han, B., Cheung, W., and Kwok, J. Tohan: A one-step approach towards few-shot hypothesis adaptation. In NeurIPS, 2021a.

- Chi et al. (2021b) Chi, H., Liu, F., Yang, W., Lan, L., Liu, T., Han, B., Niu, G., Zhou, M., and Sugiyama, M. Demystifying assumptions in learning to discover novel classes. In ICLR, 2021b.

- Chi et al. (2022) Chi, H., Liu, F., Yang, W., Lan, L., Liu, T., Han, B., Niu, G., Zhou, M., and Sugiyama, M. Meta discovery: Learning to discover novel classes given very limited data. In ICLR, 2022.

- Coates et al. (2011) Coates, A., Ng, A., and Lee, H. An analysis of single-layer networks in unsupervised feature learning. In AISTATS, 2011.

- Dagan et al. (2019) Dagan, Y., Daskalakis, C., Dikkala, N., and Jayanti, S. Learning from weakly dependent data under dobrushin’s condition. In COLT, 2019.

- Du et al. (2017) Du, S. S., Koushik, J., Singh, A., and Póczos, B. Hypothesis transfer learning via transformation functions. In NeurIPS, 2017.

- Fang et al. (2022) Fang, Z., Lu, J., Liu, F., and Zhang, G. Semi-supervised heterogeneous domain adaptation: Theory and algorithms. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(1):1087–1105, 2022.

- Ganin et al. (2016) Ganin, Y., Ustinova, E., Ajakan, H., Germain, P., Larochelle, H., Laviolette, F., Marchand, M., and Lempitsky, V. Domain-adversarial training of neural networks. The Journal of Machine Learning Research, 17(1):2096–2030, 2016.

- Goodfellow (2016) Goodfellow, I. NIPS 2016 tutorial: Generative adversarial networks. arXiv preprint arXiv:1701.00160, 2016.

- Gretton et al. (2005) Gretton, A., Bousquet, O., Smola, A., and Schölkopf, B. Measuring statistical dependence with Hilbert-Schmidt norms. In ALT, 2005.

- Gretton et al. (2007) Gretton, A., Fukumizu, K., Teo, C., Song, L., Schölkopf, B., and Smola, A. A kernel statistical test of independence. In NeurIPS, 2007.

- Guo et al. (2022) Guo, X., Lin, F., Yi, C., Song, J., Sun, D., Lin, L., Zhong, Z., Wu, Z., Wang, X., Zhang, Y., et al. Deep transfer learning enables lesion tracing of circulating tumor cells. Nature Communications, 13(1):7687, 2022.

- Hull (1994) Hull, J. J. A database for handwritten text recognition research. IEEE Transactions on Pattern Analysis and Machine Intelligence, 16(5):550–554, 1994.

- Jiang et al. (2020) Jiang, P., Wu, A., Han, Y., Shao, Y., Qi, M., and Li, B. Bidirectional adversarial training for semi-supervised domain adaptation. In IJCAI, 2020.

- Jing et al. (2020) Jing, Y., Liu, X., Ding, Y., Wang, X., Ding, E., Song, M., and Wen, S. Dynamic instance normalization for arbitrary style transfer. In AAAI, 2020.

- Krizhevsky et al. (2009) Krizhevsky, A., Hinton, G., et al. Learning multiple layers of features from tiny images. 2009.

- Kuzborskij & Orabona (2013) Kuzborskij, I. and Orabona, F. Stability and hypothesis transfer learning. In ICML, 2013.

- LeCun et al. (1998) LeCun, Y., Bottou, L., Bengio, Y., and Haffner, P. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- Li et al. (2021) Li, Y., Pogodin, R., Sutherland, D. J., and Gretton, A. Self-supervised learning with kernel dependence maximization. In NeurIPS, 2021.

- Liang et al. (2020) Liang, J., Hu, D., and Feng, J. Do we really need to access the source data? source hypothesis transfer for unsupervised domain adaptation. In ICML, 2020.

- Liu et al. (2019) Liu, F., Lu, J., Han, B., Niu, G., Zhang, G., and Sugiyama, M. Butterfly: one-step approach towards wildly unsupervised domain adaptation. In NeurIPS LTS Workshop, 2019.

- Liu et al. (2021a) Liu, F., Xu, W., Lu, J., and Sutherland, D. J. Meta two-sample testing: Learning kernels for testing with limited data. In NeurIPS, 2021a.

- Liu et al. (2021b) Liu, L., Hamilton, W., Long, G., Jiang, J., and Larochelle, H. A universal representation transformer layer for few-shot image classification. In ICLR, 2021b.

- Liu et al. (2021c) Liu, L., Zhou, T., Long, G., Jiang, J., Dong, X., and Zhang, C. Isometric propagation network for generalized zero-shot learning. In ICLR, 2021c.

- Long et al. (2018) Long, M., Cao, Z., Wang, J., and Jordan, M. I. Conditional adversarial domain adaptation. In NeurIPS, 2018.

- Ma et al. (2020) Ma, W.-D. K., Lewis, J., and Kleijn, W. B. The HSIC bottleneck: Deep learning without back-propagation. In AAAI, 2020.

- Motiian et al. (2017) Motiian, S., Jones, Q., Iranmanesh, S., and Doretto, G. Few-shot adversarial domain adaptation. In NeurIPS, 2017.

- Netzer et al. (2011) Netzer, Y., Wang, T., Coates, A., Bissacco, A., Wu, B., and Ng, A. Y. Reading digits in natural images with unsupervised feature learning. In NeurIPS, 2011.

- Oneto et al. (2020) Oneto, L., Donini, M., Luise, G., Ciliberto, C., Maurer, A., and Pontil, M. Exploiting mmd and sinkhorn divergences for fair and transferable representation learning. In NeurIPS, 2020.

- Park et al. (2019) Park, S., Mello, S. D., Molchanov, P., Iqbal, U., Hilliges, O., and Kautz, J. Few-shot adaptive gaze estimation. In ICCV, 2019.

- Peng et al. (2017) Peng, X., Usman, B., Kaushik, N., Hoffman, J., Wang, D., and Saenko, K. Visda: The visual domain adaptation challenge. arXiv preprint arXiv:1710.06924, 2017.

- Peng et al. (2022) Peng, X., Liu, F., Zhang, J., Lan, L., Ye, J., Liu, T., and Han, B. Bilateral dependency optimization: Defending against model-inversion attacks. In ACM KDD, 2022.

- Pogodin & Latham (2020) Pogodin, R. and Latham, P. Kernelized information bottleneck leads to biologically plausible 3-factor hebbian learning in deep networks. In NeurIPS, 2020.

- Radford et al. (2015) Radford, A., Metz, L., and Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434, 2015.

- Shorten & Khoshgoftaar (2019) Shorten, C. and Khoshgoftaar, T. M. A survey on image data augmentation for deep learning. Journal of Big Data, 2019.

- Shu et al. (2018) Shu, R., Bui, H. H., Narui, H., and Ermon, S. A dirt-t approach to unsupervised domain adaptation. In ICLR, 2018.

- Shui et al. (2022) Shui, C., Wang, B., and Gagné, C. On the benefits of representation regularization in invariance based domain generalization. Machine Learning, 111(3):895–915, 2022.

- Snell et al. (2017) Snell, J., Swersky, K., and Zemel, R. Prototypical networks for few-shot learning. In NeurIPS, 2017.

- Song et al. (2007) Song, L., Smola, A., Gretton, A., and Borgwardt, K. M. A dependence maximization view of clustering. In ICML, 2007.

- Song et al. (2012) Song, L., Smola, A., Gretton, A., Bedo, J., and Borgwardt, K. Feature selection via dependence maximization. Journal of Machine Learning Research, 13(5), 2012.

- Sun et al. (2019) Sun, Q., Liu, Y., Chua, T.-S., and Schiele, B. Meta-transfer learning for few-shot learning. In CVPR, 2019.

- Teshima et al. (2020) Teshima, T., Sato, I., and Sugiyama, M. Few-shot domain adaptation by causal mechanism transfer. In NeurIPS, 2020.

- Tommasi et al. (2010) Tommasi, T., Orabona, F., and Caputo, B. Safety in numbers: Learning categories from few examples with multi model knowledge transfer. In CVPR, 2010.

- Wang et al. (2019) Wang, B., Mendez, J., Cai, M., and Eaton, E. Transfer learning via minimizing the performance gap between domains. In NeurIPS, 2019.

- Wang et al. (2023) Wang, B., Mendez, J. A., Shui, C., Zhou, F., Wu, D., Xu, G., Gagn, C., and Eaton, E. Gap minimization for knowledge sharing and transfer. Journal of Machine Learning Research, 24(33):1–57, 2023.

- Wang et al. (2020) Wang, Y., Yao, Q., Kwok, J. T., and Ni, L. M. Generalizing from a few examples: A survey on few-shot learning. ACM Computing Surveys, 53(3):1–34, 2020.

- Webb & Sammut (2010) Webb, G. I. and Sammut, C. Encyclopedia of Machine Learning. 2010.

- Yang et al. (2020) Yang, S., Liu, L., and Xu, M. Free lunch for few-shot learning: Distribution calibration. In ICLR, 2020.

- Yang et al. (2021a) Yang, S., van de Weijer, J., Herranz, L., Jui, S., et al. Exploiting the intrinsic neighborhood structure for source-free domain adaptation. In NeurIPS, 2021a.

- Yang et al. (2021b) Yang, S., Wang, Y., van de Weijer, J., Herranz, L., and Jui, S. Generalized source-free domain adaptation. In ICCV, 2021b.

- Yao et al. (2021) Yao, H., Huang, L.-K., Zhang, L., Wei, Y., Tian, L., Zou, J., Huang, J., et al. Improving generalization in meta-learning via task augmentation. In ICML, 2021.

- Zamir et al. (2018) Zamir, A. R., Sax, A., Shen, W., Guibas, L. J., Malik, J., and Savarese, S. Taskonomy: Disentangling task transfer learning. In CVPR, 2018.

- Zhang et al. (2020) Zhang, Y., Liu, F., Fang, Z., Yuan, B., Zhang, G., and Lu, J. Clarinet: A one-step approach towards budget-friendly unsupervised domain adaptation. In IJCAI, 2020.

- Zheng & Zhou (2021) Zheng, H. and Zhou, M. Exploiting chain rule and bayes’ theorem to compare probability distributions. In NeurIPS, 2021.

- Zhong et al. (2021) Zhong, L., Fang, Z., Liu, F., Lu, J., Yuan, B., and Zhang, G. How does the combined risk affect the performance of unsupervised domain adaptation approaches? In AAAI, 2021.

- Zhou et al. (2020) Zhou, F., Jiang, Z., Shui, C., Wang, B., and Chaib-draa, B. Domain generalization with optimal transport and metric learning. arXiv preprint arXiv: 2007.10573, 2020.

Appendix A Proof of Theorem 1

Before proving the Theorem 1, we first introduce a McDiarmid-like inequality under the log-coefficient of a random vector .

Lemma 1 (McDiarmid-like Inequality under the Log-coefficient of ).

Let be a distribution defined over , and be a random vector, and with the following bounded differences property with parameters :

| (15) |

where . If , then, for all ,

| (16) |

Proof.

Then, we introduce a recent result regarding bounding the expected suprema of a empirical process using the corresponding Gaussian complexity.

Theorem 2 ((Dagan et al., 2019)).

Let be a random vector over some domain and let be a class of functions from to . If , then

| (18) |

where is a universal constant, and is a sample of .

Note that, the above result is very general, it does not assume that the marginals of the distribution of are identical. Based on the above theorem and lemma, we can prove the following lemma.

Lemma 2.

Let be a random vector over some domain and let be a class of functions from to . If , and there exists such that for any and , , then, for any ,

| (19) |

for some universal constants .

Proof.

We first consider proving the non-absolute-value version. Let

| (20) |

For any and , we have that . According to Lemma 1, we have

| (21) |

for some universal constant . Then, combining (based on Theorem 2), we have

| (22) |

For the opposite inequality, part, following (Dagan et al., 2019), we can apply the same arguments on . Note that , which concludes the bound. ∎

The above lemma is a slightly general version of Theorem 6.7 in (Dagan et al., 2019) by considering the influence of . Based on Lemma 2, we can prove the Theorem 1 below.

See 1

Proof.

Let be the set of unlabeled data. Based on the relation between VC dimension and the Gaussian complexity, Lemma 2 gives that, with probability at least , we have

where denotes the uniform distribution over . Since , this implies that we have

Therefore, the set of hypotheses with is contained in .

The bound on the number of labeled data now follows directly from known concentration results using the expected number of partitions instead of the maximum in the standard VC-dimension bounds. This bound ensures that with probability , none of the functions with have .

The above two arguments together imply that with probability , all with and have , and furthermore has . This in turn implies that with probability at least , we have , where ∎

Appendix B Datasets

Digits.

Following TOHAN (Chi et al., 2021a), we conduct 6 adaptation experiments on digits datasets: , , ,, and . MNIST () (LeCun et al., 1998) is the handwritten digits dataset, which has been size-normalized and centered in pixels. SVHN () (Netzer et al., 2011) is the real-world image digits dataset, of which images are pixels with 3 channels. USPS () (Hull, 1994) data are 16×16 grayscale pixels. The SVHN and USPS images are resized to grayscale pixels in the adaptation task (Chi et al., 2021a).

Objects.

Following Sun et al. (2019), we compared DEG-Net and benchmark on CIFAR-10 and STL-10. The CIFAR-10 (Krizhevsky et al., 2009) dataset contains 60, 000 color images in 10 categories, while the STL-10 (Coates et al., 2011) dataset is inspired by the CIFAR-10 dataset with some modifications. However, these two datasets only contain nine overlapping classes. We removed the non-overlapping classes (“frog” and “monkey”) (Shu et al., 2018). We also compared DEG-Net and benchmark on VisDA-C (Peng et al., 2017), which is a challenging large-scale datasets that mainly focuses on the 12-class synthesisto-real classification task.

Appendix C Details regarding Experiments

Baselines. We follow the standard domain-adaptation protocols (Shu et al., 2018) and compare DEG-Net with 4 baselines: (1) Without adaptation (WA): to classify the target domain with the well-trained source domain classifiers. (2) Fine tuning (FT): to train the last connected layer of the classifier with few accessible labeled data. (3) SHOT: an HTL method, where we modify it to use both the labeled target data and unlabeled target data (Liang et al., 2020). (4) S+FADA:to generate unlabeled data using the loss with the well-trained source clasifier and apply them into DANN (Ganin et al., 2016). (5) T+FADA:to generate unlabeled data using the loss with the few labeled target data and apply them into DANN. (6) TOHAN: a novel FHA method, which generates the specific category unlabeled data separately (Chi et al., 2021a).

| Task | Target Data | TOHAN | DEG-Net |

| 0.0013 | 0.0027 | 0.0019 | |

| 0.0025 | 0.0021 | ||

| 0.0004 | 0.0016 | 0.0013 | |

| 0.0014 | 0.0008 | ||

| 0.0002 | 0.0012 | 0.001 | |

| 0.0009 | 0.0005 |

| Method | Tasks | Number of Target Data per Class | ||||||

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | ||

| TOHAN w/ aug | 77.10.4 | 83.50.6 | 84.00.7 | 86.71.1 | 87.50.6 | 88.11.4 | 89.41.1 | |

| 26.71.0 | 27.81.6 | 29.71.3 | 29.40.7 | 30.31.2 | 32.40.8 | 33.51.5 | ||

| 76.40.5 | 78.60.3 | 82.70.1 | 86.50.3 | 87.90.2 | 88.20.3 | 89.60.4 | ||

| 82.10.7 | 84.91.3 | 85.30.6 | 86.71.5 | 87.40.8 | 87.90.7 | 89.80.4 | ||

| Avg. | 65.60.7 | 68.71.0 | 70.40.7 | 72.40.9 | 73.30.7 | 74.10.8 | 75.60.8 | |

| TOHAN | 76.01.9 | 83.30.3 | 84.20.4 | 86.51.1 | 87.11.3 | 88.00.5 | 89.70.5 | |

| 26.70.1 | 28.61.1 | 29.51.4 | 29.60.4 | 30.51.2 | 32.10.2 | 33.20.8 | ||

| 76.01.9 | 83.30.3 | 84.20.4 | 86.51.1 | 87.11.3 | 88.00.5 | 89.70.5 | ||

| 84.00.5 | 85.20.3 | 85.60.7 | 86.50.5 | 87.30.6 | 88.20.7 | 89.20.5 | ||

| Avg. | 65.71.1 | 70.10.5 | 70.90.7 | 72.20.8 | 73.01.1 | 74.00.5 | 75.50.6 | |

| DEG-Net | 83.10.9 | 86.20.8 | 86.50.6 | 88.70.9 | 89.60.5 | 91.50.6 | 92.10.6 | |

| 27.20.3 | 28.51.3 | 29.70.9 | 30.70.8 | 32.91.5 | 33.71.8 | 34.91.6 | ||

| 76.21.3 | 78.21.3 | 85.70.6 | 85.90.8 | 88.61.6 | 89.51.2 | 90.20.7 | ||

| 82.20.7 | 85.90.6 | 86.51.5 | 87.80.9 | 88.90.9 | 90.30.5 | 91.61.2 | ||

| Avg. | 67.10.8 | 69.71.0 | 72.10.9 | 73.30.9 | 75.01.1 | 76.31.0 | 77.21.0 | |

Implementation Details. We implement all methods by PyTorch 1.7.1 and Python 3.7.6, and conduct all the experiments on NVIDIA RTX 2080Ti GPUs. Due to the limitation of accessible computing resources, we can not choose more complex networks as the backbone of the generator.

Our conditional generator uses the standard DCGAN network (Radford et al., 2015). We adopt the backbone network of LeNet-5 with batch normalization and dropout to extract the group discriminator feature in the digits tasks and adopt the backbone networt of ResNet50 in the object task. We employ connected layers with the softmax function as the classifier to obtain the probability. The semantic feature in digital tasks is the output of the first fully connected layer. We adopt 3 connected layers with softmax function as the group discriminator . While estimating the HSIC measure, we add to the kernel function for ensuring the kernel matrix be positive.

Hyper-parameter Settings. Following the common protocol of domain adaptation (Shu et al., 2018), we set fixed hyper-parameters for the different datasets. We pretrain the conditional generator for 300 epochs and pretrain the group discriminator for 100 epochs. The training step of the classifier (i.e. the adaptation module) is set to 50. As for the generator and the group discriminator, the learning rate of adam optimizer is set to . As for the classifier, the learning rate of adam optimizer is set to . The tradeoff parameter in Eq. (8) is set to and the tradeoff parameter in Eq. (11) is set to . Following Long et al. (2018) the radeoff parameter in Eq. (4.2) is set to .

| Task | Method | Number of Generated Data per Class | Number of Target Data per Class | ||||||

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | |||

| TOHAN | 0 | 76.0 | 83.3 | 84.2 | 86.5 | 87.1 | 88.0 | 89.7 | |

| 5 | 75.8 | 83.7 | 84.3 | 84.3 | 85.0 | 87.5 | 88.1 | ||

| 20 | 76.0 | 83.3 | 84.3 | 84.0 | 85.1 | 87.2 | 89.5 | ||

| DEG-Net | 0 | 83.1 | 86.2 | 86.5 | 88.7 | 89.6 | 91.5 | 92.1 | |

| 5 | 82.6 | 86.0 | 85.9 | 88.2 | 88.7 | 91.9 | 92.3 | ||

| 20 | 81.3 | 84.3 | 86.2 | 88.6 | 90.3 | 92.3 | 93.4 | ||

| TOHAN | 0 | 26.7 | 28.6 | 29.5 | 29.6 | 30.5 | 32.1 | 33.2 | |

| 5 | 26.2 | 28.4 | 28.9 | 29.1 | 30.2 | 31.4 | 32.5 | ||

| 20 | 25.8 | 26.9 | 29.8 | 27.4 | 29.8 | 32.7 | 32.8 | ||

| DEG-Net | 0 | 27.2 | 28.5 | 29.7 | 30.7 | 32.9 | 33.7 | 34.9 | |

| 5 | 27.3 | 28.2 | 29.6 | 29.4 | 32.8 | 33.8 | 35.4 | ||

| 20 | 26.4 | 27.3 | 30.2 | 28.9 | 33.5 | 35.0 | 36.4 | ||

Appendix D Additional Analysis

Augmentation Techniques on the FHA Problem

In this section, we compare the accuracy of the target classifier trained by TOHAN and that of TOHAN with the basic geometric data augmentation for the FHA problem over the digit tasks. The geometric data augmentation technique has been widely explored to diversify the image data (Shorten & Khoshgoftaar, 2019). In our experiment, we randomly choose one or more augmentation techniques: resizing, shifting, cropping, and slight rotations (1 and 20 and -1 to -20) for the generated data in TOHAN. The classifier accuracy on the target domain of our method over 4 experiments and the average accuracy is shown in Table 6.

It is clear that the performance of the augmentation techniques is worse than our method in general. It may be caused by the fact that the generated images are similar and even the same as the few target data. The diversity of generated data is still low with data augmentation. The accuracy of the augmentation is basically the same as TOHAN’s. The improvement brought by the augmentation is more obvious while the number of the target data is increasing.

Diversity Analysis of DEG-Net

In this section, we compare the diversity of generated data of DEG-Net with that of TOHAN and target data. Because of the difficulty of calculating log-influence, we use the HSIC to measure the diversity of data. Considering that the generated batch in the training process is 32, we calculate the HSIC measure with the 32 sample data. Table 5 shows the diversity of the different data. It is clear that the diversity loss in DEG-Net works well to make the generated data more diverse.

Data Efficiency Analysis of DEG-Net

In this section, we conduct the experiments in the tasks and to analyze the efficiency of the generated data. Following the architecture of the DEG-Net, we use the Eq. (11) to train the conditional generator and obtain the following loss to update classifier :

| (23) |

where is the generated data and is the label of the generated data. We use the different numbers of the generated data by TOHAN (Chi et al., 2021a) and DEG-Net to train the classifier and the classification accuracy is shown in Table 7. It is clear that the performance of using data generated by TOHAN is almost the same as just using labeled data. In addition, the data generated by DEG-Net can not improve the performance of the model while the number of target data per class is small. It may be caused by that the generated data is similar to the label target data, so that add the almost same data for the training will bring little improvement. However, it is worth noting that the improvement will be large if the number of data generated by DEG-Net is more than 5 per class. This phenomenon indicates that the data generated by DEG-Net is more independent of the existing target data and could be treated as the new ones to some degree.