Disturbance Rejection-Guarded Learning for Vibration Suppression of Two-Inertia Systems

Abstract

Model uncertainty presents significant challenges in vibration suppression of multi-inertia systems, as these systems often rely on inaccurate nominal mathematical models due to system identification errors or unmodeled dynamics. An observer, such as an extended state observer (ESO), can estimate the discrepancy between the inaccurate nominal model and the true model, thus improving control performance via disturbance rejection. The conventional observer design is memoryless in the sense that once its estimated disturbance is obtained and sent to the controller, the datum is discarded. In this research, we propose a seamless integration of ESO and machine learning. On one hand, the machine learning model attempts to model the disturbance. With the assistance of prior information about the disturbance, the observer is expected to achieve faster convergence in disturbance estimation. On the other hand, machine learning benefits from an additional assurance layer provided by the ESO, as any imperfections in the machine learning model can be compensated for by the ESO. We validate the effectiveness of this learning-for-control paradigm through simulation and physical tests on two-inertia motion control systems used for vibration studies.

Index Terms:

Machine Learning, Disturbance Rejection, Extended State Observer, Model Uncertainty

I Introduction

Vibration suppression of multi-inertia systems is critical in many engineering applications, including automotive suspensions, series elastic actuators (SEA), and various other motion control systems [1]. These systems often involve multiple inertia components connected by flexible couplings, with a two-inertia subsystem serving as a fundamental block, which leads to inherent resonance issues. This resonance can cause dynamic stresses, energy waste, and performance degradation, therefore posing significant challenges to the systems’ efficiency and stability [2, 3]. Given the fundamental challenge of system identification and the necessity for real-time performance, it is common practice to employ a simplified or inaccurate nominal dynamic model. Consequently, disturbances are inevitable and must be rejected to achieve robust control. The disturbance includes an internal part (i.e., unknown or unmodelled parts of the plant dynamics) and an external part (i.e., perturbations from the outside affecting the dynamics) [4, 5].

The observer-based method has emerged as a promising approach to estimating the disturbance for the subsequent design of a disturbance rejection controller. Among the array of existing disturbance observers, the extended state observer (ESO) [6] is gaining popularity due to its simplicity in implementation. For the formulation of an ESO, the system is modeled as a simple chained integrator with a total disturbance term (also called the lumped disturbance) that includes both internal and external disturbances. The total disturbance is treated as an extended state to be estimated together with other states. The estimated disturbance can be mitigated through various means, including a simple state feedback controller or more advanced control strategies such as sliding mode control [7] and model predictive control [8].

It is worth noting that the traditional ESO operates in a memoryless fashion, i.e., once it estimates a disturbance and transmits it to the controller, the datum used for estimation is then discarded. However, as a control system operates, we can improve our understanding of the disturbance through collected operational data. Prior works [9, 10] show that a model-based ESO (MB-ESO), which utilizes prior model information about the disturbance (such as a detailed dynamic model obtained through system identification), tends to exhibit reduced sensitivity to noise when compared to a model-free ESO (MF-ESO) that assumes a simple chained integrator as a nominal model. In order to circumvent the need for extensive system identification and maximize the utilization of disturbance information, we propose to leverage machine learning (ML), which has powerful capacities for nonlinear optimization, to memorize and generalize the past estimations from the ESO as a feedforward estimation of the disturbance. The learning component is expected to capture the internal dynamics as well as patterns of external disturbances.

The works in [11, 12, 13] combine ESO with iterative learning control (ILC) for repetitive control tasks. Our approach focuses on general control tasks rather than only repetitive ones. In addition, we assume that the system dynamics, as well as the disturbances, are unknown and not necessarily repetitive. In [14], a neural network is utilized to tune the parameters of the ESO rather than to explicitly learn the disturbance. Other learning-for-control approaches such as [15] employ neural networks to capture discrepancies between a nominal model and the true model. Since the state of the true model is unknown, the measured next state is used to update the error model represented by the neural network. However, these methods assume that full-state information is available. In addition, when the learning performance falls short of expectations, it may result in suboptimal performance for subsequent model-based controllers. In contrast, our approach represents a novel paradigm that aims at learning the total disturbance with the help of output measurements instead of true values for states. Furthermore, our paradigm includes a correction mechanism for cases where the learning component fails to accurately capture the disturbances. The residual total disturbance, i.e., the remainder excluding the disturbance already estimated by the learning component, is estimated by a conventional ESO in a feedback correction manner. Through this seamless integration, even when the learning-based estimation struggles to converge, we can leverage the ESO for feedback correction, thereby adding an extra layer of robustness and assurance to the system.

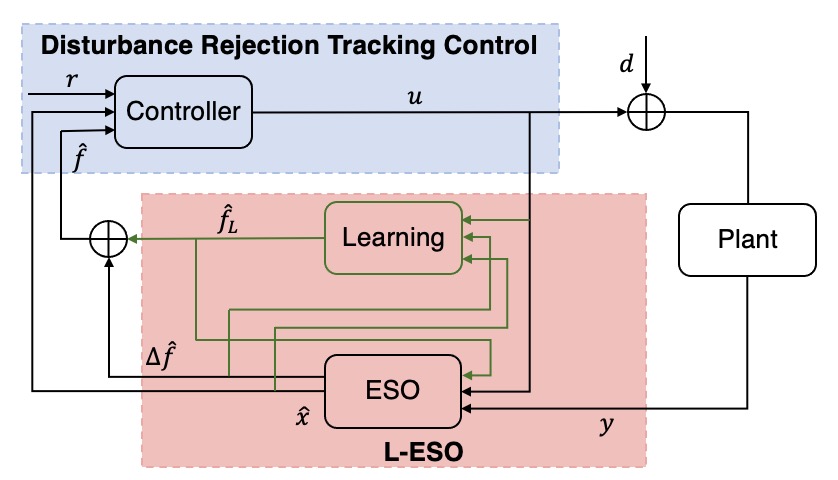

In our new framework, as visualized in Fig. 1, we refer to the learning-enabled extended state observer as L-ESO. The estimation of the true total disturbance consists of and , which are from the learning component and the ESO, respectively. First, ESO uses the information of control and observation to estimate the system’s states and the residual disturbance . Second, ESO’s estimation, including and are fed as input to the learning component for learning a regression model. The learning component carries out the feedforward estimation , after which an online optimization iteratively minimizes the difference between and , allowing the learning component to approximate the total disturbance accurately. In situations where imperfect learning introduces errors, the ESO serves as an additional layer to rectify.

The contributions of our work are summarized as follows:

- We propose a novel framework that combines ML and ESO for feedforward estimation and feedback correction for a general disturbance rejection tracking control task. Compared with existing learning-for-control frameworks, we estimate states and disturbances in a unique way. We also have an extra error correction mechanism for the learning component.

- The learning component serves as an add-on to existing ESO-based control architecture. As shown in Fig. 1, only a learning component and a few connections (in green) are introduced. The advantage of our modular design is two-fold: 1) no need to change the existing framework; 2) users can customize the learning components by choosing any appropriate machine learning model.

- Our learning and estimation are real-time and online. We showcase the efficacy of our framework through simulations and a real-world two-inertia testbed as a fundamental block for a multi-inertia system.

The remainder of this paper is structured as follows. We first go through the preliminaries in Sec. II. Then, we construct our framework in Sec. III. Simulation results of the two-mass-spring benchmark system are presented in Sec. IV, followed by the hardware experiments of a torsional plant in Sec. V. Finally, we conclude our work and discuss possible future research directions in Sec. VI.

II Preliminary

The multi-inertia system can be represented as the sum of a nominal part and a nonlinear time-varying part:

| (1) |

where is the state vector, is a control input, is a measured output, and is an unknown function representing the time-varying uncertainty, which contains external disturbance , unmodeled dynamics, and parameter uncertainty. Terms , , and are real and known matrices with appropriate dimensions. For the particular case of a two-inertia system with , meaning two states per inertia (position/angle and velocity/angular velocity), see the example in Sec. IV. The justification for classifying (1) as a nonlinear time-varying system can be found in [16, 17].

Traditionally, an ESO is established for a system in a chained integrator form [6]. However, in our most recent work [18], we expanded the applicability of the ESO and rigorously proved that for a general system (1), given that Assumption 1 and Assumption 2 are satisfied, an ESO can be established to estimate without requiring the chained integrator form.

Assumption 1.

is observable.

Assumption 2.

has no invariant zeros.

| (3) |

form the following new system

| (4) |

The readers are referred to [18] for more details on the matrix transformation. The new system (4) has an observable canonical form such that an ESO can be established for estimating .

According to whether or not the system dynamics are available, we have the following two variants of ESO:

II-A MB-ESO

If the model information, i.e., , in matrix and is available, we have

| (5) |

The total disturbance can be represented as:

| (6) |

where is the external disturbance, is the true control gain.

II-B MF-ESO

If the model information, i.e., , in matrix and , is not available, we have

| (7) |

where is the internal disturbance (unknown/unmodelled dynamics), is the nominal control gain, and is the external disturbance. In such a case, the total disturbance becomes:

| (8) |

ESO treats the total disturbance as an extended state, such that a Luenberger observer can be designed to estimate both the original system state and the total disturbance . The augmented dynamic system is as follows:

| (9) |

where , , , .

The Luenberger observer has the following form:

| (10) |

where and are estimations of and , is the observer gain. We have the following estimation error dynamics:

| (11) |

where .

Theorem 1.

All eigenvalues can be placed at , which is called the observer bandwidth of ESO [19].
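As an illustration of the bandwidth parameterization in [19], the following minimal sketch computes observer gains for the special case of a chained-integrator plant, assuming the repeated observer pole sits at minus the observer bandwidth. The names `eso_gains` and `augmented_matrices` and the values `n = 4` and `omega_o = 10.0` are illustrative assumptions; for the general system (4) the augmented matrices would differ.

```python
import numpy as np
from math import comb


def eso_gains(n, omega_o):
    """Gains that place all n+1 poles of a chained-integrator ESO at -omega_o.

    Assumption: the plant is an n-th order chained integrator, so the
    observer characteristic polynomial is (s + omega_o)^(n+1).
    """
    return np.array(
        [comb(n + 1, i) * omega_o**i for i in range(1, n + 2)], dtype=float
    )


def augmented_matrices(n):
    """Augmented (n+1)-state chained integrator with the first state measured."""
    A_e = np.diag(np.ones(n), k=1)      # ones on the superdiagonal
    C_e = np.zeros((1, n + 1))
    C_e[0, 0] = 1.0
    return A_e, C_e


n, omega_o = 4, 10.0                     # illustrative values
gains = eso_gains(n, omega_o)
A_e, C_e = augmented_matrices(n)

# Eigenvalues of the estimation error dynamics A_e - L C_e.
# A repeated pole is numerically sensitive, so expect small deviations.
poles = np.linalg.eigvals(A_e - gains.reshape(-1, 1) @ C_e)
print("observer gains:", gains)
print("error-dynamics poles:", poles)
```

A single tuning parameter then fixes every observer gain, which is what makes the bandwidth a convenient knob when comparing observers under equal settings.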

III Learning-Enabled ESO

Remark 2.

By incorporating model information, MF-ESO becomes equivalent to MB-ESO.

Remark 3.

The learning component is motivated by the observation that model information is learnable, so learning offers a way to incorporate it into the observer.

Remark 4.

The learning component can even learn the external disturbance together with the internal disturbance, so that both are incorporated.

Since the learning component has a feedforward estimation for the total disturbance, ESO can serve as a feedback correction to estimate the residual total disturbance as . The combination of the feedforward estimation and the feedback correction is realized as follows:

| (13) |

Since the learning component is expected to capture the unknown dynamics, we employ a model-free ESO, see Fig. 1. The learning block in Fig. 1 is a function parameterized by . To learn the total disturbance (see (8)), we establish a mapping from the input ( estimated by ESO and control input ) to the output , where . The total disturbance estimation consists of two parts: 1) the feedforward estimation from the learning component ; 2) feedback correction for the residual disturbance by an MF-ESO. To optimize the parameters of the machine learning model, a general regression problem is formulated using the following cost function:

| (14) |

where is the size of the training data. The details are in Alg. 1. When the batch is not yet filled, only the MF-ESO runs and the learning component does not return optimized parameters (see Lines 7-14).

Our framework is highly modular. The ESO follows the conventional model-free design, and its estimates are all that is needed to drive the training of the learning component. First, the learning component can serve as an add-on to existing ESO-based control architecture by just adding a few connections. Second, the learning component is flexible: users can customize it by choosing an appropriate machine learning model, e.g., linear, non-linear, parametric, non-parametric, etc.

IV Simulation Results

IV-A Two-Mass-Spring Problem Formulation

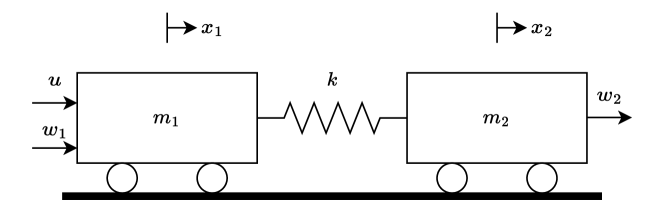

Fig. 2 depicts a schematic of a two-mass-spring system, which is from a well-known benchmark control problem [20]. The system includes two masses: and , which can slide freely over a horizontal surface without friction. Note that the frictionless setting has been shown to be more challenging for controller design [9]. The masses are connected by a light horizontal spring with a spring constant . The system is subject to two external disturbance forces and , which act on masses and , respectively. The control signal is the force applied to mass . The positions of both mass and mass are measured, and either one can be used as the output to be controlled.

The states of the two-mass-spring system are defined as the displacements and velocities of the two masses. Specifically, the displacement and velocity of mass are and , respectively, while the displacement and velocity of mass are and , respectively. The dynamics of the system can be represented in the following state-space form:

| (15) |
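For readers who want to experiment, the benchmark dynamics of [20] can be sketched as follows. This is a minimal sketch under stated assumptions: the state ordering `[p1, v1, p2, v2]`, the control force acting on mass 1, a single disturbance force on mass 2, and the benchmark's nominal values `m1 = m2 = 1` and `k = 1` are assumptions made here for illustration, and the function names are hypothetical.

```python
import numpy as np


def two_mass_spring_matrices(m1=1.0, m2=1.0, k=1.0):
    """State-space matrices with assumed state ordering [p1, v1, p2, v2]."""
    A = np.array(
        [
            [0.0, 1.0, 0.0, 0.0],
            [-k / m1, 0.0, k / m1, 0.0],
            [0.0, 0.0, 0.0, 1.0],
            [k / m2, 0.0, -k / m2, 0.0],
        ]
    )
    B = np.array([0.0, 1.0 / m1, 0.0, 0.0])   # control force on mass 1 (assumed)
    E = np.array([0.0, 0.0, 0.0, 1.0 / m2])   # disturbance force on mass 2 (assumed)
    return A, B, E


def step(x, u, w2, A, B, E, dt):
    """One fourth-order Runge-Kutta step with u and w2 held constant over dt."""
    def f(s):
        return A @ s + B * u + E * w2

    k1 = f(x)
    k2 = f(x + 0.5 * dt * k1)
    k3 = f(x + 0.5 * dt * k2)
    k4 = f(x + dt * k3)
    return x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


A, B, E = two_mass_spring_matrices()
# The undamped resonance is the largest imaginary part among the eigenvalues.
resonance = np.max(np.abs(np.linalg.eigvals(A).imag))
print("resonance frequency [rad/s]:", resonance)
```

With no friction the resonant mode is undamped, so any oscillation excited by the input or the disturbance persists unless the controller removes it.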

A time-varying unknown external disturbance acts on the mass , and control is applied on so that can track any desired trajectory. For the output , i.e., , a chained integrator system is derived by taking the derivatives of the output four times. The input and disturbance are in the last channel of this fourth-order system with :

| (16) |
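The step of differentiating the output four times can be worked out explicitly. The derivation below is a sketch under assumptions that are not confirmed by the text: the positions are written as $p_1$ and $p_2$, the control force $u$ and disturbance $w_1$ act on mass $m_1$, the disturbance $w_2$ acts on mass $m_2$, and the output is the position of mass $m_2$.

```latex
\begin{aligned}
m_1 \ddot{p}_1 &= -k\,(p_1 - p_2) + u + w_1, \qquad
m_2 \ddot{p}_2 = k\,(p_1 - p_2) + w_2, \qquad y = p_2, \\
\ddot{y} &= \tfrac{k}{m_2}\,(p_1 - p_2) + \tfrac{1}{m_2}\, w_2, \\
y^{(4)} &= \tfrac{k}{m_2}\,(\ddot{p}_1 - \ddot{p}_2) + \tfrac{1}{m_2}\, \ddot{w}_2 \\
        &= -\left(\tfrac{k}{m_1} + \tfrac{k}{m_2}\right) \ddot{y}
           + \tfrac{k}{m_1 m_2}\, u
           + \tfrac{k}{m_1 m_2}\,(w_1 + w_2)
           + \tfrac{1}{m_2}\, \ddot{w}_2 .
\end{aligned}
```

Under these assumptions the input enters only the fourth derivative of the output, with gain $k/(m_1 m_2)$, and the remaining terms are linear in the output's second derivative and in the disturbances, which is the kind of structure a model-free design lumps into the total disturbance.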

IV-B ESO design

The states in the system are:

| (17) |

The state-space description of the system is

| (18) |

IV-B1 Model-free ESO

The state-space model is:

, , , . As we can see, the model-free design assumes unknown dynamics, such that the total disturbance can be represented as:

| (19) |

where is the model parameter information, is the nominal control gain. We have

| (20) |

where everything besides is considered as total disturbance (see (16)). It can be validated that such a system satisfies Assumptions 1, 2, and 3. Therefore, an ESO can be designed for the estimation of , see (10).

The observer gain is chosen where all the eigenvalues of are placed at [19], i.e., .

IV-B2 Model-based ESO

The model-based design has the following state-space representation:

, , , . In contrast to the above-mentioned model-free design, such a system tries to leverage the prior knowledge of the dynamic model, by assuming is known (see (16)). In this case, the total disturbance becomes:

| (21) |

such that

The observer gain is chosen where all eigenvalues of are placed at [19]. Let , the coefficients of are listed in Table I.

| Parameters | Values |

|---|---|

IV-B3 L-ESO

As shown in (19), the internal disturbance has a linearly structured mapping between the input (state and control) and the output (disturbance). Therefore, a linear regression model is a reasonable choice for the learning component, with . Note that as we mentioned before, the learning model is flexible to be linear, nonlinear, parametric, non-parametric, etc. Our contribution is not about the complexity of the learning model but the novel design to seamlessly combine machine learning models with an ESO. A batch gradient descent method is used for optimizing the cost function. In our experiments, we initialize with all zeros.
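The linear learning component and its batch gradient descent update can be sketched as follows. This is a minimal sketch, not the authors' Alg. 1: the mean-squared-error cost, the class name `LinearDisturbanceLearner`, the learning rate, the batch size, and the synthetic data are all assumptions. The ESO is replaced here by an idealized stand-in that returns the exact residual, so the regression target is the feedforward estimate plus that residual.

```python
import numpy as np


class LinearDisturbanceLearner:
    """Linear model f_L = theta^T z, where z stacks state estimates and control."""

    def __init__(self, n_features, lr=0.1):
        self.theta = np.zeros(n_features)   # zero initialization, as in the text
        self.lr = lr

    def predict(self, Z):
        """Feedforward disturbance estimate for one or many feature vectors."""
        return np.asarray(Z) @ self.theta

    def fit_batch(self, Z, targets):
        """One batch gradient descent step on an assumed mean-squared-error cost."""
        Z = np.asarray(Z, dtype=float)
        targets = np.asarray(targets, dtype=float)
        err = Z @ self.theta - targets
        self.theta -= self.lr * (Z.T @ err) / len(targets)
        return self.theta


rng = np.random.default_rng(0)
theta_true = np.array([-2.0, 0.3, 0.5])      # hypothetical disturbance mapping
learner = LinearDisturbanceLearner(n_features=3, lr=0.1)

for _ in range(300):                         # 300 batches of 50 samples each
    Z = rng.standard_normal((50, 3))         # stand-in for [x_hat, u] features
    f_ff = learner.predict(Z)                # feedforward estimate from learning
    residual = Z @ theta_true - f_ff         # idealized ESO residual estimate
    learner.fit_batch(Z, f_ff + residual)    # target: total disturbance estimate

print("learned parameters:", learner.theta)
```

As the parameters converge, the residual left for the ESO shrinks; if learning is imperfect, the residual simply stays nonzero and is still compensated by the observer.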

IV-C Controller Design

The control law for the system (20) can be designed as:

| (22) |

such that

| (23) |

It can be controlled by a state feedback controller

| (24) |

with a control gain , where is the closed-loop natural frequency [19].
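A disturbance rejection control law of this kind can be sketched as below. The sketch assumes the standard form from [19]: the disturbance estimate is cancelled, the plant is reduced to a chained integrator, and state feedback places all closed-loop poles at minus the controller bandwidth. The names `feedback_gains` and `control_law` and the numeric values are illustrative assumptions, and the exact expressions in (22) to (24) may differ.

```python
import numpy as np
from math import comb


def feedback_gains(n, omega_c):
    """Gains [k_1, ..., k_n] so that s^n + k_n s^(n-1) + ... + k_1 = (s + omega_c)^n."""
    return np.array(
        [comb(n, i) * omega_c ** (n - i) for i in range(n)], dtype=float
    )


def control_law(r, x_hat, f_hat, K, b0):
    """Cancel the estimated total disturbance, then apply state feedback.

    r     : reference and its derivatives, same length as x_hat
    x_hat : estimated states from the observer
    f_hat : estimated total disturbance (for L-ESO, the learned feedforward
            estimate plus the ESO residual estimate)
    b0    : nominal control gain
    """
    u0 = K @ (np.asarray(r, dtype=float) - np.asarray(x_hat, dtype=float))
    return (u0 - f_hat) / b0


K = feedback_gains(n=4, omega_c=2.0)         # illustrative bandwidth
u = control_law(
    r=[1.0, 0.0, 0.0, 0.0],
    x_hat=[0.0, 0.0, 0.0, 0.0],
    f_hat=0.5,
    K=K,
    b0=1.0,
)
print("gains:", K, "control:", u)
```

Because the controller only sees the sum of the disturbance estimates, the learning component can be added or removed without changing the control law itself.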

IV-D Simulation Results

The system parameters are taken from the benchmark problem [20], i.e., kg, N/m, , . Tracking a desired trajectory for the position of mass is the control objective. A sinusoidal wave with a frequency of 1 rad/s and amplitude 1 is applied in the training phase for L-ESO. After 110 seconds, a step reference is given to all three approaches. A band-limited white noise with noise power is added at the system output side. A sinusoidal external disturbance with frequency rad/s is applied on as starting at 150 s. The learning algorithm runs online. The learning phase is designed to emulate the typical operational scenarios of the machine under general conditions, whereas the step response is employed to assess and compare the tracking performance.

The controller bandwidth and the observer bandwidth are set to 1 rad/s and 10 rad/s, respectively. The control gain is set to 1. All three approaches share these settings for a fair comparison.

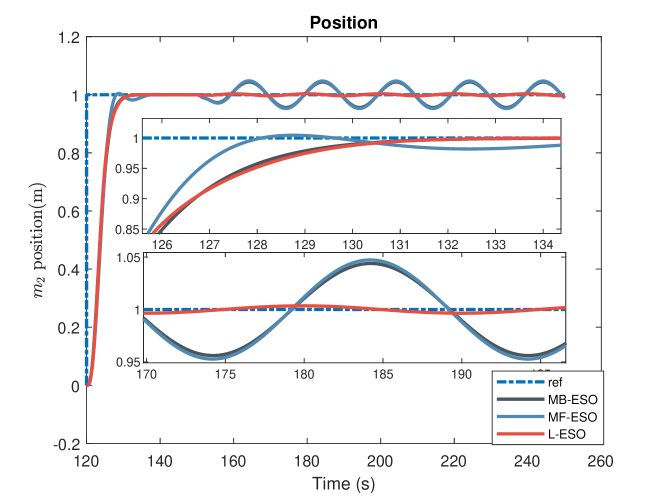

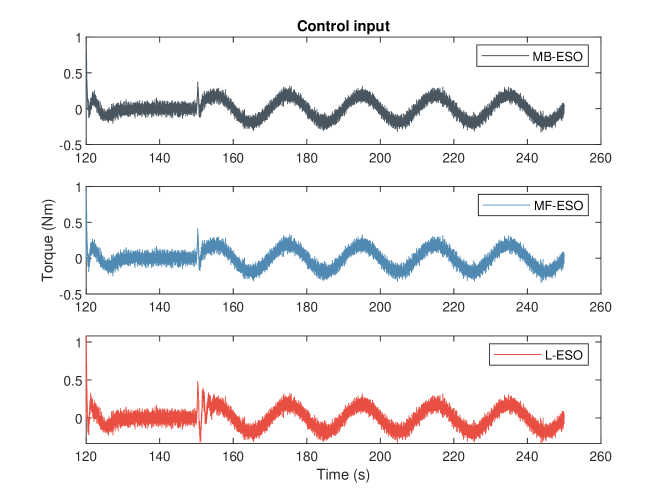

The tracking performance and the control input are shown in Fig. 3 and Fig. 4, respectively.

- 1.

- 2. For external disturbance rejection (see the zoom-in plot from 170 s to 195 s in Fig. 3), L-ESO performs best. Revisiting (8), if the external disturbance has a linear component, a linear regression component can still capture it, e.g., the rising and falling trends of a sinusoidal external disturbance.

- 3. Adding external disturbance information to the observer can help reduce the required bandwidth. In our experiments, we found that MF-ESO and MB-ESO need three times more bandwidth to achieve the same performance as the L-ESO.

- 4. The control input of the L-ESO fluctuates more than that of MF-ESO and MB-ESO, as shown in Fig. 4. This is caused by the noise signal and by the batch gradient descent method we chose to minimize the cost function. In this example, it can be smoothed by increasing the batch size.

V Hardware Experiment Results

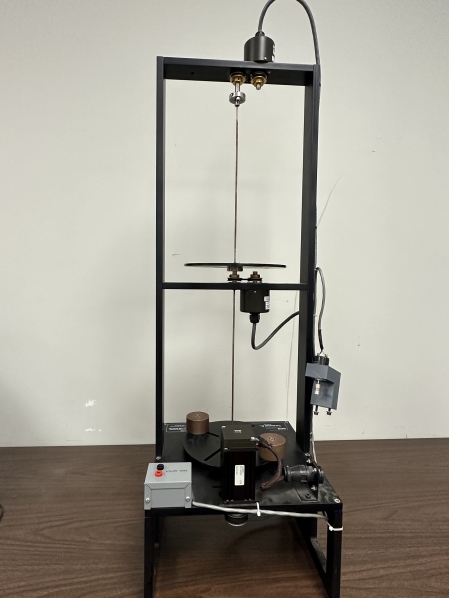

We conduct physical experiments on our ECP Model 205 torsional testbed [21], see Fig. 5. It is a mechanical system that consists of a flexible vertical shaft connecting two disks: a lower disk and an upper disk. Each disk is equipped with an encoder for position measurement. A DC servo motor drives the lower disk through a belt and pulley system, which provides a 3:1 speed reduction ratio. The system can be used to study the vibration of a torsional two-mass-spring system.

A personal computer with MATLAB® Simulink Desktop Real-Time™ installed is used for computation. The computer is also equipped with a four-channel quadrature encoder input card (NI-PCI6601) and a multi-function analog and digital I/O card (NI-PCI6221). These cards interface with the torsional plant Model 205 for real-time data acquisition and control. The quadrature encoder input card enables the computer to receive position and velocity data from the encoders on the disks of the plant. The multi-function analog and digital I/O card allows the computer to send control signals to the DC servo motor that drives the lower disk.

V-A System Model

Since the MB-ESO, as a baseline approach, needs the dynamics information, we first use the MATLAB® System Identification Toolbox to obtain the transfer function: .

V-B ESO and Controller Design

As this testbed is again a fourth-order dynamic system, the same ESO design pipeline shown before can be applied.

V-C Experiment Results

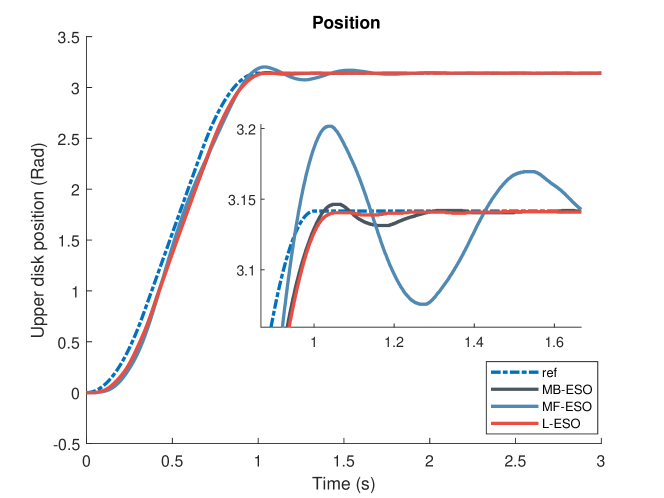

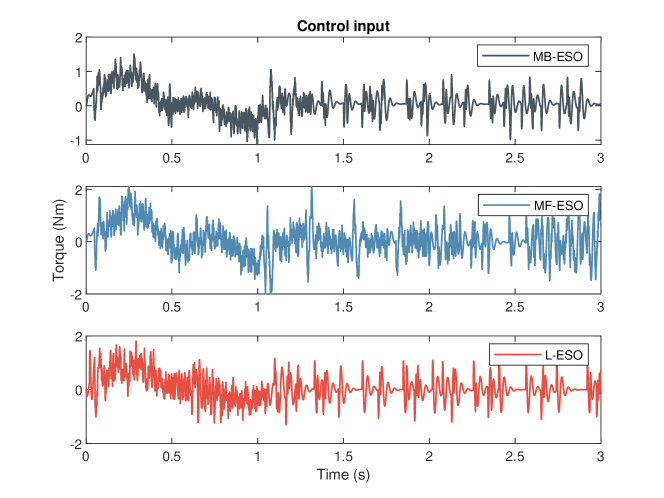

Tracking a desired trajectory for the upper disk is the control objective. A sinusoidal wave with a frequency of rad/s and an amplitude is applied in the training phase of L-ESO. and are set to 90 rad/s and 40 rad/s, respectively. The control gain is . A trapezoidal profile reference with the final value is used.

From the results illustrated in Fig. 6 and Fig. 7, we have the following observations: 1) L-ESO has the best performance among all the methods after the training phase in terms of overshoot percentage and settling time. L-ESO may outperform MB-ESO because the system identification is imperfect or because our approach can learn internal as well as external disturbances. 2) The fluctuation of the control input of L-ESO lies between that of MF-ESO and MB-ESO, as shown in Fig. 7, which differs from the simulation result. This is because the learning rate is chosen conservatively due to the large noise in the hardware. Also, the trapezoidal profile reference is smoother than the step reference, which is beneficial for learning.

VI Conclusions

A novel learning-enabled extended state observer (L-ESO) with the capacity to memorize and generalize from past estimated disturbances is proposed in this paper. The machine learning model is seamlessly integrated into existing disturbance rejection control architecture as a flexible add-on for boosting robustness against unknown and time-varying disturbances. Compared with existing learning-for-control frameworks, our new paradigm does not rely on access to full states. In addition, the learning is guarded by disturbance rejection, which provides an extra assurance layer to compensate for the imperfections of the machine learning model. The efficacy of the proposed approach has been supported by simulation and hardware experiments. In the future, we will further validate the approach on real robotic testbeds.

References

- [1] Y. Hori, H. Iseki, and K. Sugiura, “Basic consideration of vibration suppression and disturbance rejection control of multi-inertia system using SFLAC (state feedback and load acceleration control),” IEEE Transactions on Industry Applications, vol. 30, no. 4, pp. 889–896, 1994.

- [2] S. Zhao and Z. Gao, “An active disturbance rejection based approach to vibration suppression in two-inertia systems,” Asian Journal of control, vol. 15, no. 2, pp. 350–362, 2013.

- [3] Y. Wang, L. Dong, Z. Chen, M. Sun, and X. Long, “Integrated skyhook vibration reduction control with active disturbance rejection decoupling for automotive semi-active suspension systems,” Nonlinear Dynamics, pp. 1–16, 2024.

- [4] J. Chen, Y. Hu, and Z. Gao, “On practical solutions of series elastic actuator control in the context of active disturbance rejection,” Advanced Control for Applications: Engineering and Industrial Systems, vol. 3, no. 2, p. e69, 2021.

- [5] Q. Zheng, Z. Ping, S. Soares, Y. Hu, and Z. Gao, “An active disturbance rejection control approach to fan control in servers,” in 2018 IEEE Conference on Control Technology and Applications (CCTA). IEEE, 2018, pp. 294–299.

- [6] J. Han, “From PID to active disturbance rejection control,” IEEE Transactions on Industrial Electronics, vol. 56, no. 3, pp. 900–906, 2009.

- [7] R. Cui, L. Chen, C. Yang, and M. Chen, “Extended state observer-based integral sliding mode control for an underwater robot with unknown disturbances and uncertain nonlinearities,” IEEE Transactions on Industrial Electronics, vol. 64, no. 8, pp. 6785–6795, 2017.

- [8] H. Zhang, Y. Li, Z. Li, C. Zhao, F. Gao, F. Xu, and P. Wang, “Extended-state-observer based model predictive control of a hybrid modular DC transformer,” IEEE Transactions on Industrial Electronics, vol. 69, no. 2, pp. 1561–1572, 2021.

- [9] H. Zhang, S. Zhao, and Z. Gao, “An active disturbance rejection control solution for the two-mass-spring benchmark problem,” in 2016 American Control Conference (ACC). IEEE, 2016, pp. 1566–1571.

- [10] C. Fu and W. Tan, “Tuning of linear ADRC with known plant information,” ISA transactions, vol. 65, pp. 384–393, 2016.

- [11] Y. Hui, R. Chi, B. Huang, and Z. Hou, “Extended state observer-based data-driven iterative learning control for permanent magnet linear motor with initial shifts and disturbances,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 51, no. 3, pp. 1881–1891, 2021.

- [12] J. Wang, D. Huang, S. Fang, Y. Wang, and W. Xu, “Model predictive control for ARC motors using extended state observer and iterative learning methods,” IEEE Transactions on Energy Conversion, vol. 37, no. 3, pp. 2217–2226, 2022.

- [13] J. Zhang and D. Meng, “Improving tracking accuracy for repetitive learning systems by high-order extended state observers,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [14] P. Kicki, K. Łakomy, and K. M. B. Lee, “Tuning of extended state observer with neural network-based control performance assessment,” European Journal of Control, vol. 64, p. 100609, 2022.

- [15] G. Shi, X. Shi, M. O’Connell, R. Yu, K. Azizzadenesheli, A. Anandkumar, Y. Yue, and S.-J. Chung, “Neural lander: Stable drone landing control using learned dynamics,” in 2019 International Conference on Robotics and Automation (ICRA), 2019, pp. 9784–9790.

- [16] B. Guo and Z. Zhao, “On the convergence of an extended state observer for nonlinear systems with uncertainty,” Systems & Control Letters, vol. 60, no. 6, pp. 420–430, 2011.

- [17] W. Bai, S. Chen, Y. Huang, B. Guo, and Z. Wu, “Observers and observability for uncertain nonlinear systems: A necessary and sufficient condition,” International Journal of Robust and Nonlinear Control, vol. 29, no. 10, pp. 2960–2977, 2019.

- [18] J. Chen, Z. Gao, Y. Hu, and S. Shao, “A general model-based extended state observer with built-in zero dynamics,” arXiv preprint arXiv:2208.12314, 2023.

- [19] Z. Gao, “Scaling and bandwidth-parameterization based controller tuning,” in Proceedings of the 2003 American Control Conference, 2003. IEEE, 2003, pp. 4989–4996.

- [20] B. Wie and D. S. Bernstein, “Benchmark problems for robust control design,” Journal of Guidance, Control, and Dynamics, vol. 15, no. 5, pp. 1057–1059, 1992.

- [21] ECP Systems, “Model 205 torsional plant,” http://www.ecpsystems.com/controls_torplant.htm [Accessed: 3-23-2024].