Distributionally Robust Probabilistic Prediction

for Stochastic Dynamical Systems

Abstract

Probabilistic prediction of stochastic dynamical systems (SDSs) aims to accurately predict the conditional probability distributions of future states. However, accurate probabilistic predictions tightly hinge on accurate distributional information from a nominal model, which is hardly available in practice. To address this issue, we propose a novel functional-maximin-based distributionally robust probabilistic prediction (DRPP) framework. In this framework, one can design probabilistic predictors that have worst-case performance guarantees over a pre-defined ambiguity set of SDSs. Nevertheless, DRPP requires optimizing over the function space of probability distributions, which is generally intractable. We develop a methodology that equivalently transforms the original maximin in function spaces into convex-nonconcave minimax in Euclidean spaces. Although it remains intractable to seek a global optimal solution, two suboptimal solutions are derived. By relaxing the inner constraints, we obtain a suboptimal predictor called Noise-DRPP. Relaxing the outer constraints yields another suboptimal predictor, Eig-DRPP. More importantly, we establish an explicit upper bound on the optimality gap between Eig-DRPP and the global optimal predictor. Utilizing the theoretical results obtained from solving the two suboptimal predictors, we synthesize practical rules to judge whether the pre-defined ambiguity set is too large or small. Finally, we conduct elaborate numerical simulations to compare the performance of different predictors under different SDSs.

Index Terms:

Stochastic Dynamical System, Distributionally Robust Optimization, Uncertainty Quantification, Probabilistic Prediction.I Introduction

I-A Background

A probabilistic prediction is a forecast that assigns probabilities to different possible outcomes, enabling better uncertainty quantification, risk assessment, and decision-making. Due to the ever-increasing demand for both accurate and reliable predictions against uncertainty [1], it has wide applications in fields such as epidemiology [2], climatology [3], and robotics [4], etc. The performance of a probabilistic prediction is evaluated by both sharpness and calibration of the predictive probability density function (pdf). The sharpness means how concentrated the predictive pdf is, and calibration concerns the statistical compatibility between the predictive pdf and the realized outcomes [5]. To simultaneously assess both sharpness and calibration, one usually assigns a numerical score to the predictive pdf and the realized value of the prediction target. If one has a further requirement that the expected scoring rule is maximized only when the predictive pdf equals the target’s real pdf almost everywhere, a strictly proper scoring rule (see in [6]) is needed.

The probabilistic prediction of a stochastic dynamical system (SDS) is to predict the conditional pdfs of future states based on some prior information about the system. One’s prior information is usually in the form of a nominal model, which is a simplified or idealized representation of the underlying system. While probabilistic prediction for a linear system with Gaussian noises is straightforward, it becomes computationally expensive once the nominal SDS is nonlinear or the noises are non-Gaussian. Therefore, a lot of research contributes to approximating the future pdf to balance the requirement of prediction precision and computation complexity [7].

I-B Motivation

Despite the significant achievements in the current prediction algorithms for SDSs, they are not guaranteed to perform well if the distributional information provided by a nominal model is inaccurate. It is ubiquitous for a nominal SDS to possess both inaccurate state evolution functions and incomplete distributional information of noises. For example, the nominal state evolution function may be a local linearization from a nonlinear model [8]. Additionally, one’s nominal information of the distributions of system noises is usually partial. Most of the time, only estimated mean and covariance are available in the nominal model, which is far from uniquely determining a pdf [9]. A nominal predictor can lead to misleading predictive pdfs even though the uncertainties on SDS are not prominent (as demonstrated by experiments in Sec. VIII). Addressing this issue is crucial for the widespread application of probabilistic prediction in model-uncertain scenarios. In this paper, the aim is to develop a framework to design probabilistic predictors for SDSs with worst-case performance guarantees.

To design probabilistic predictors against distributional uncertainties of model, we inherit the key notions from the distributionally robust optimization (DRO) [10]. DRO is a minimax-based mathematical framework for decision-making under uncertainty. It aims to find the decision that performs well across a range of possible pdfs in an ambiguity set. Nevertheless, what we need is to find the optimal probabilistic predictor that performs well over a set of SDSs. In DRPP, we generalize the idea of ambiguity sets to jointly describe one’s uncertainties of noises’ pdfs and state evolution functions.

I-C Contributions

Motivated by the aforementioned considerations, we propose a novel functional-maximin-based distributionally robust probabilistic prediction (DRPP) framework. In DRPP, the objective is the expected score under the worst-case SDS within an ambiguity set, and the predictor optimizes the objective over the function space of all pdfs on the state space. Compared to DRO, the proposed DRPP is significantly different in three perspectives. i) (Functional optimization) One needs to find the optimal pdfs over function spaces rather than optimizing decisions over Euclidean spaces. ii) (Conditional prediction) The predictor for an SDS is a map from the state space to the pdf space, rather than a single decision variable without conditioning on some context. iii) (Trajectory expectation) The objective is an expectation concerning all possible trajectories and control inputs, rather than an expectation over a single random variable. The main contributions of this paper are summarized as follows:

-

•

To the best of our knowledge, this is the first work on probabilistic prediction of SDSs that optimizes the worst-case performance over an ambiguity set. We have generalized the classical conic moment-based ambiguity set such that both the uncertainties of state evolution functions and system noises are quantified.

-

•

We develop a methodology to greatly reduce the complexity of solving DRPP. First, we use the principle of dynamic programming to prove that solving DRPP only needs to concern the one-step DRPP. Second, we exploit a necessary optimality condition to equivalently transform the maximin problem in function spaces into a convex-nonconvex minimax problem in Euclidean spaces.

-

•

We design two suboptimal DRPP predictors by relaxing constraints. Noise-DRPP is the optimal predictor when the nominal state evolution function is accurate, and Eig-DRPP is the optimal predictor when the predictor is constrained by an eigenvector restriction. Moreover, an explicit upper bound of the optimality gap between Eig-DRPP and the global optimal predictor is obtained.

-

•

We provide upper and lower bounds for the optimal worst-case value function of the DRPP. Utilizing these theoretical bounds, we synthesize practical rules to judge whether a pre-defined ambiguity set for SDS is too large or too small. Based on these rules, one can adjust the ambiguity set in real-time for better performance.

I-D Organization

The rest of this article is organized as follows. Section II provides a brief review of the related work and Section III introduces preliminaries on probabilistic prediction and DRO. In Section IV, we formulate the proposed DRPP problem. In Section V, we introduce the main methodology to address the main challenges in the DRPP. Then, we relax the constraints to solve two suboptimal DRPPs with upper and lower bounds in Section VI and Section VII. Next, we conduct elaborate experiments and provide an application in Section VIII, followed by a conclusion in Section IX.

II Related Work

The study of DRPP for SDSs integrates ideas from fields including probabilistic prediction, control theory, minimax optimization, and DRO. In this section, we briefly summarize the most relevant work as follows.

Probabilistic prediction of SDS

According to how the predictive pdfs are approximated, existing literature in the control community can be classified into the following groups. First, approximation by the first several central moments. Particularly, there are many methods using the mean and covariance to describe the predictive distribution. Classical local approximation methods include the Taylor polynomial in the extended Kalman filter [11, 12], the Stirling’s interpolation [13] and the unscented transformation [14] as representatives for derivative-free estimation methods [15].

Second, approximation by a weighted sum of basis functions. The Gaussian mixture model (GMM) [16] approximates a pdf by a sum of weighted Gaussian distributions, and it can achieve any desired approximation accuracy if there are sufficiently large number of Gaussians [17]. Using GMM, the probabilistic prediction is equivalent to propagating the first two moments and weights of each basis [18]. The polynomial chaos expansion (PCE) [19] approximates a state evolution function by a sum of weighted polynomial basis functions, and these basis functions are orthogonal regarding the input pdf. In this way, the mean and covariance of the output can be conveniently approximated by the weights of PCE [20].

Third, approximation by a finite number of sampling points. The numerical solution using Monte Carlo (MC) samplings [21] can approximate any statistics of the output distribution if the sampling number is sufficiently high. For a further review of the modern MC methods in approximated probabilistic prediction, we recommend the review [22].

Nevertheless, assuming the accuracy of a nominal model is restrictive, especially in the control engineering practice. As reviewed in [7], an important application of probabilistic prediction for SDSs is to formulate chance constraints [23] in stochastic model predictive control (SMPC) [24, 25]. In these situations, a misleading predictive pdf can lead to unsafe control policies. To take the distributional uncertainties into account, Coulson et al [26] have proposed a novel distributionally robust data-enabled predictive control algorithm. While the end-to-end algorithm in [26] aims to robustify the control performance, the problem of distributionally robust probabilistic prediction remains open.

Probabilistic prediction in machine learning

Taking a historical view of the development of PP in the statistics and machine learning communities, we can find the research in the early phase also focuses on approximating the posterior pdf, especially from a Bayesian perspective. The advent of the scoring rule has provided a convenient scalar way to measure the performance of probabilistic predictions [5]. Then, the research focus is shifting toward training probabilistic predictors to optimize the empirical scores. Classical statistical methods include Bayesian statistical models [27], approximate Bayesian forecasting [28], quantile regression [29], etc. Recent machine learning algorithms include distributional regression [30], boosting methods such as NGBoost [31] and autoregressive recurrent networks like DeepAR [32], etc. We recommend [33] for a more detailed review of machine learning algorithms for probabilistic prediction.

Distributionally robust optimization

To guarantee the prediction performance for SDSs when the nominal model is not accurate, the primary task is to describe the ambiguity set for SDSs, which is the key concept in the DRO community. DRO is a mathematical framework for decision-making under uncertainty. Moment-based ambiguity sets are among the most widely studied in DRO, leveraging partial statistical information (e.g., mean, covariance, or higher moments) to define a set of possible distributions. In the seminal work [34], the Chebyshev ambiguity set is defined, where only mean and covariance are known. Using this ambiguity set, several extensions are further studied in [35]. Next, Delage and Ye [10] generalize the Chebyshev ambiguity set to the conic moment-based ambiguity set, which allows the mean and covariance matrix to be also unknown. Overall, viewing from the outer optimization, DRO still optimizes in an Euclidean space, while DRPP optimizes in a functional space of all pdfs. Since classical tools in DRO cannot be directly applied to solve DRPP, new methods are needed.

III Preliminaries

In this paper, we denote random variables in bold fonts to distinguish them from constant variables. Given a random variable taking values in , we denote its pdf as . Given a map , the expectation of is denoted as or . We also denote a sequence by , and is defined as an empty set. We denote the set of positive semi-definite matrices with dimension as . Given , we use to indicate .

III-A Probabilistic Prediction

Suppose a random variable takes value on with distribution , the output of a probabilistic predictor is a predictive distribution , where denotes the set of all probability distributions over . After the value of is materialized as , a scoring rule,

assigns a numerical score to measure the quality of the predictive distribution on the realized value . The expected scoring rule of , usually sharing the same operator but different operands, is

A scoring rule is proper with respect to the distribution space if holds for all . It is strictly proper if the equality holds only when equals almost everywhere. For example, the logarithm score,

is one of the most celebrated scoring rules for being essentially the only local proper scoring rule up to equivalence [36].

III-B Distributionally Robust Optimization

Traditional stochastic optimization approaches assume a known probability distribution and solve problems

where represents the decision variable, is sampled from the distribution , is the objective function. However, in many real-world problems, the true probability distribution of the uncertain parameters is unknown or partially known. DRO addresses this issue by considering a family of distributions, known as an ambiguity set, rather than a single distribution. The goal is to find a solution that performs well for the worst-case distribution within this set. A general DRO problem is formulated as:

where is the ambiguity set, a set of probability distributions that are close to the nominal distribution in some sense.

The ambiguity set can be defined in various ways. One of the most popular ones is the moment-based sets, where distributions are constrained by their moments (e.g., mean, variance). For example, the celebrated conic moment-based ambiguity set is used in the seminal work [10] such that

| (1) | ||||

where is the nominal support, and are the nominal mean and covariance, quantifies the uncertainties on the mean and covariance. Other popular ambiguity sets include structure-based sets, distance-based sets, etc. A thorough review of popular ambiguity sets is provided in [37, Sec. 3].

IV Problem Formulation

A probabilistic prediction for a finite-horizon controlled SDS is formulated by specifying the following elements:

where the system model includes a state space , a control input space , a time-variant controlled SDS , a control policy , and a time horizon . The prediction model includes a scoring rule and a probabilistic predictor .

To begin with, we define the system model and the probabilistic prediction model respectively. Next, we generalize the classical conic moment-based ambiguity set (1) for SDSs such that one’s uncertainties of both state evolution functions and system noises can be jointly described. Finally, we formulate the DRPP problem as a functional maximin optimization.

IV-A System and Prediction Model

System model . Consider a discrete-time time-variant SDS with horizon , denoted as . The dynamics of at time step is

| (2) |

where is the system state taking values in , the initial state is given as , is the state evolution function. is the control input taking values in , which is generated from a state-feedback control policy , i.e., . is the system noise taking values in . Since the pair determines , it is convenient to denote the pair as . Without raising confusion, we also denote the state-control pair as .

Assumption 1 (SDS).

At each time step ,

-

•

has finite operator norms, i.e., is bounded,

-

•

the noises have finite second moments, and they are not necessarily independent or identically distributed.

Prediction model . In this paper, we use the popular logarithm score to measure the performance of probabilistic predictions, i.e., . Starting from the system’s initial state , a probabilistic predictor keeps observing the states and the control inputs, aiming to predict the conditional pdfs of the next state. The probabilistic prediction of the SDS state at time step is a map from the previous state and control input to a predictive conditional pdf for the next state, i.e.,

| (3) |

The prediction performance of at time step is measured as . Next, we measure the prediction performance of a probabilistic predictor along an SDS state trajectory by the sum of one-step scores.

Because the logarithm scoring rule is strictly proper, the expected logarithm score can only attain its maximum when identifies with almost everywhere. Therefore, a predictor needs full information on both the state evolution function and the noises’ distributions to maximize the expected scores, which is often impossible in practice. We detail this lack of information in the following assumption on the predictor’s prior information during the trajectory prediction.

Assumption 2 (Predictor’s prior information).

At each time step , the probabilistic predictor has only access to the following information:

-

•

the state trajectory , control input sequence ,

-

•

a nominal state evolution function ,

-

•

a nominal noise mean , and a nominal noise covariance .

The predictor’s prior information usually does not identify with the ground truth. To further quantify the uncertainty between the nominal model and the real model, we need to define an ambiguity set of SDSs.

IV-B Ambiguity Set

The crucial spirit of an ambiguity set is, that although a nominal model rarely identifies with the ground truth, one can still have additional information or belief that the real model locates around the nominal model. For example, an upper bound for the operator norm may be available when the nominal function is derived from a system identification procedure. Additionally, estimations and confidence regions (CRs) of the mean and covariance of system noise may be statistically inferred from historical data. Even if no supportive evidence exists to construct a reasonable ambiguity set, one can still subjectively select an ambiguity set.

Jointly considering the uncertainties of both state evolution functions and noises, we define the conic moment-based ambiguity set for SDSs.

Definition 1 (Conic moment-based ambiguity set for SDSs).

Conditioned on a state-control pair at time step , the conic moment-based ambiguity set for is defined by four constraints as follows

| (4) | ||||

where are defined in Assumption 2, is the support set of , and we fix it to be in this paper. Then, one’s uncertainties of the nominal model are quantified by , , and .

When there is no confusion about the parameters that determine an ambiguity set, we often use for simplification.

Remark 1.

Theoretically, one can also let and be dependent on , and our results will not be affected. Nevertheless, a practical consideration is that one’s uncertainty quantification for the noises is usually less informative than the state evolution function. In other words, being conditioned on different usually does not change the quantifications.

IV-C Distributionally Robust Probabilistic Prediction (DRPP)

To begin with, we need to formulate the expected cumulative scores that a predictor can achieve from a given state-control pair. In reinforcement learning, the value function is a mathematical tool to estimate the expected cumulative reward for an agent following certain policy [38]. This concept can also be applied to estimate the performance of a probabilistic predictor. Starting from a time step with a state-control pair , the expected performance of a predictor is denoted by the value function ,

| (5) |

where the expectation is over the state-control trajectory. For convenience, we also use the notation

for the expected performance of along the whole time horizon starting from initial state-control pair .

Next, given an initial state-control pair and a sequence of ambiguity sets of the underlying SDS , we are interested in designing probabilistic predictors that can perform well in the worst-case scenarios. To this end, a DRPP problem for SDS is formulated as a multistep functional maximin optimization:

| s.t. |

where the inner minimization yields the worst-case performance for a given predictor over the ambiguity sets, the outer maximization essentially finds out the optimal predictive pdfs to maximize the worst-case performance. Please see Fig. 1 for the overall structure of this paper.

V Main Methodology

To begin with, we use the principle of dynamic programming to reduce a DRPP problem into solving one-step DRPPs. A discussion on when dynamic programming fails is provided. Then, we canonicalize the ambiguity set of SDS into consistent constraints, such that the problem is transformed into an infinite dimensional conic linear program. Next, we take the dual form of inner minimization with a strong duality guarantee. Based on the dual analysis, we develop a necessary optimality condition to handle the essential hardness of functional optimization. Exploiting this optimality condition, we equivalently transform the one-step DRPP in function spaces into a convex-nonconcave minimax problem in Euclidean spaces. Finally, we provide a discussion on the computation complexity.

V-A Dynamic Programming and One-step DRPP

Given a state-control pair at time step , we denote the optimal worst-case value function as

| (6) |

Leveraging the principle of dynamic programming, one can derive the following recursive equations for ,

| (7) | ||||

Remark 2.

The equality may not hold when the system dynamics is assumed to be strictly time-invariant, because the worst-case SDSs that minimize the first term are not guaranteed to minimize the second term . In this case, the problem of DRPP for SDS will not possess an optimal substructure [39], thus the principle of dynamic programming fails to apply.

Thanks to the principle of dynamic programming, one has that the optimal predictor at time step is

Conditioned on the previous state-control pair , optimizing over the probabilistic predictor is equivalent to optimizing over the predictive pdf. Therefore, conditioned on at time step , we call the maximin optimization over the predictive pdf space and the ambiguity set as a one-step DRPP:

where is an abbreviation for the predictive pdf of conditioned on . In the rest of this paper, we focus on solving the one-step DRPP .

V-B Ambiguity Set Constraints Canonicalization

Towards solving the one-step DRPP , the very first step is to canonicalize the conic moment-based ambiguity set such that all the optimization variables appeared in the objective and constraints are consistent.

First, to canonicalize the first ambiguity constraint on , given a state-control pair at time step , we define the real one-step state evolution as , and the nominal one-step state evolution as . Then, this ambiguity constraint of is equivalent to describing the distance between and , i.e.,

Second, to canonicalize the remaining ambiguity constraints on , we need to keep them consistent with the pdf in the optimization objective. For ease of notation, since the one-step DRPP problem is always conditioned on a given state-control pair , we abbreviate the notations by denoting and without raising confusion. It follows that , and we shall align one with another. Here we align with and then optimize over . Finally, the inner minimization of the one-step DRPP is canonicalized as an infinite dimensional conic linear program:

| (8) | ||||

Remark 3.

In fact, the alignment order shall not be reversed, a discussion on why aligning with and then optimizing over leads to a dead end is provided in Appendix A.

V-C Dual Analysis

Directly solving the worst-case pdf in the function space is difficult. As a first step towards dealing with this challenge, we leverage the dual analysis for problem . If a strong duality holds, one can optimize the Lagrange multipliers in the dual form. For the inner optimization (8), a classical conclusion is that is a sufficient condition for strong duality to hold [10, p. 5]. Let for be the Lagrange multipliers, the dual form of (8) is

where . Please see Appendix B for a detailed derivation.

Unfortunately, we have to deal with an infinite number of constraints indexed by in problem . Although the dual problem cannot lead to a direct solution, it helps to develop an optimality condition for the probabilistic predictors in the following theorem.

Theorem 1 (Optimality condition).

A necessary optimality condition for the one-step DRPP is that is subject to a Gaussian distribution almost everywhere, i.e., and such that

| (9) |

Proof.

Please see Appendix C. ∎

Theorem 1 reduces the optimization complexity from the original function space to the Gaussian distribution family, more specifically, the parameter spaces of mean and covariance. Therefore, can be transformed into a finite-dimensional optimization problem as follows

| (10) | ||||

Please see Appendix D for a detailed derivation. However, this problem is now a nonconvex semidefinite optimization with respect to , solving a globally optimal solution is NP-hard in general. An alternative is to use numerical iterative algorithms to seek a locally optimal solution of (10). Nevertheless, the local optimality of the dual problem cannot guarantee the worst-case performance of . To deal with this hardness, we avoid directly solving the dual problem by applying the optimality condition in Theorem 1 to .

V-D Primal Analysis Under Gaussian Parameterization

Substituting the parameterized into the primal one-step DRPP , we have the objective being as

Next, notice that and , one has equals to . The functional minimax is then equivalent to a minimax optimization in Euclidean spaces as follows

Lemma 1.

Given any , the one-step DRPP has explicit solutions for and as

i) .

ii) .

Proof.

Please see Appendix E. ∎

Substituting the expressions of and into , it is equivalent to a convex-nonconcave minimax optimization:

| (11) | ||||

where . Notice that the inner maximization is an indefinite quadratic constrained quadratic programming (QCQP) problem, which is still short of efficient algorithms to solve this kind of minimax optimization.

Given any and , one can first solve by concerning the following quadratic constrained linear programming (QCLP) problem:

| (12) |

Lemma 2.

The solution of the QCLP problem (12) is

Proof.

Using the KKT condition, there are

The first condition reveals that and , thus the second condition leads to . Then,

and the proof is completed. ∎

Substituting the optimal into the problem, the inner optimization of (11) can be further reformulated as a convex maximization problem:

| (13) |

where , , and .

V-E Complexity Analysis

As we have shown, solving the one-step DRPP is equivalent to finding a global minimax point (Stackelberg equilibrium) for a convex-nonconcave minimax problem where the outer minimization is a semidefinite programming and the inner problem is to maximize a non-smooth convex function subject to quadratic constraint. It is known that convex maximization is NP-hard in very simple cases such as quadratic maximization over a hypercube, and even verifying local optimality is NP-hard [40]. Thus finding a global minimax point of a one-step DRPP problem is also NP-hard.

Even worse, finding a local minimax point of a general constrained minimax optimization is of fundamental hardness. Not only the local minimax point may not exist [41], but any gradient-based algorithm needs exponentially many queries in the dimension and to compute an -approximate first-order local stationary point [42]. Finally, the local stationary point is not always guaranteed to be a local minimax point [43, 44].

In summary, globally finding a minimax point of a one-step DRPP is computationally intractable, and gradient-based algorithms aiming for local minimax points are limited by both convergence speed and performance guarantee. Therefore, a reasonable goal for the one-step DRPP is to derive upper or lower bounds of the optimal worst-case value function by relaxing the constraints imposed on the predictors or SDSs.

VI Value Function Upper Bound

To get an upper bound of , one can either enlarge the feasible region of the outer maximization or shrink the feasible region of the inner minimization. Since the outer feasible region has already contained all pdfs, we shall weaken some constraints of the ambiguity set . If the first constraint is substituted by , one is constrained to a smaller ambiguity subset of . If a global minimax point can be solved for the relaxed one-step DRPP, one will get a suboptimal predictor and a performance upper bound.

VI-A Problem Reformulation

If we shrink the ambiguity set of one-step DRPP by assuming that the nominal one-step state evolution is equal with the nominal one-step state evolution , there is which means that the value of is precisely known after been observed.

Remark 4.

Now that there is no uncertainty of the state evolution , intuition is that predicting the state’s conditional pdf is equivalent to predicting the noise’s conditional pdf . To delineate this intuition in rigor, we construct an auxiliary predictive pdf as

Then, the objective of the one-step DRPP , which is originally an expectation over conditioned on , now equals an expectation over conditioned on , i.e.,

As a result, the outer optimization of that maximizes over can be recast to maximize over .

To summarize, the essence of probabilistic prediction subject to no uncertainty of one-step state evolution is to predict the noise’s conditional distribution.

Conditioned on , we let be the conditional expectation of and abbreviate the conditional pdf as . Different from the general condition as discussed in Remark 3 where one should not formulate the inner minimization over , now the inner minimization can be structured as a canonical moment problem on and :

| (14) | ||||

With the outer maximization imposed on , we can get a reformulated one-step DRPP whose optimal worst-case value function is an upper bound of .

VI-B Noise-DRPP

Taking the dual form of the inner moment problem and joining it with the outer maximization, we transform the primal maximin problem into the following maximization problem:

where . Please see Appendix F for a detailed derivation for the dual problem.

Theorem 2 (Noise-DRPP).

Given at time step , if the probabilistic predictor has no ambiguity of the one-step state evolution, i.e., , the solution to the one-step DRPP is:

i) The optimal predictive pdf is

ii) The worst-case SDS belongs to the set

iii) The objective value at is

Proof.

Please see Appendix G. ∎

First, an immediate application of Theorem 2 is to utilize the explicit solution of to develop a probabilistic prediction algorithm. Because this predictor solely focuses on the distributional robustness against the ambiguity of system noises, we name it Noise-DRPP. The pseudo-code is in Algorithm 1.

Second, notice that the optimal predictive pdf is unique, but the worst-case SDS is not. It contains any SDS that has a proper one-step state evolution and a noise whose first two moments satisfy an equation.

Finally, which is also our original goal, the objective value at is an upper bound of . Since the objective value does not depend on , we can recursively use the dynamic programming (7) to get an upper bound of .

Theorem 3 (Upper bound of ).

Given an initial state-control pair , an upper bound of the optimal worst-case value function is

Remark 5.

We highlight that this upper bound can be computed offline because it only depends on the ambiguity sets . It can be used to verify whether the current ambiguity set is too conservative by comparing it with the real-time performance of a Noise-DRPP predictor in practice: if the scores always exceed this upper bound, one can suspect that the ambiguity set is too large.

VII Value function Lower Bound

To get a lower bound of , one can either shrink the feasible region of the outer maximization or enlarge the feasible region of the inner minimization. Because enlarging the ambiguity set does not essentially change the structure to alleviate the difficulty of a one-step DRPP , we choose to restrict to a smaller pdf family by forcing the eigenvectors of the predictive covariance to be the same as the nominal covariance . In this section, we elaborate on how the eigenvector restriction contributes to a well-performed algorithm and a lower bound.

VII-A Problem Reformulation

After relaxing the constraints of (11), one can still get a suboptimal prediction performance guarantee in the worst case, which is also a performance lower bound to the original one-step DRPP. Nevertheless, the choice of relaxation methods is delicate, which can greatly affect both the optimality gap and the computation efficiency.

Suppose the spectral decomposition for is , where is an orthogonal matrix whose columns are eigenvectors of and . Similarly, we let the spectral decomposition for be , where is an orthogonal matrix whose columns are eigenvectors of and . Then, the eigenvector restriction for (11) is to impose the following constraint to the predictor:

| (15) |

To explain why we chose the eigenvector restriction, some supporting lemmas need to be derived.

Lemma 3.

The optimal to problem (13) is an eigenvector of with its -norm being .

Proof.

There are necessary optimality conditions for (13):

where the first one comes from the KKT condition and the second one holds because the objective increases monotonously with . It follows that the optimal should be an eigenvector of with its -norm being . ∎

If the eigenvectors of have explicit expressions, one may have explicit expressions for . Then, substituting these expressions to (11) can transform the original minimax optimization problem into a semidefinite-constrained maximization, where tractable optimization solvers may be available. However, it is always difficult to explicitly derive the eigenvectors when does not identify with .

Lemma 4.

Proof.

VII-B Eig-DRPP

Notice that optimization is convex for any fixed . Therefore, can be solved by taking the minimum of different convex optimization problems, which is numerically efficient. Let be the solution of , we summarize the solution of a one-step DRPP under eigenvector restriction as follows.

Theorem 4 (Eig-DRPP).

Given at time step , if the probabilistic predictor is constrained by the eigenvector restriction (15), the solution to the one-step DRPP is:

i) The optimal predictive pdf is

ii) The worst-case SDS belongs to the set

iii) The objective value at is

Proof.

First, an immediate application of Theorem 4 is to utilize the explicit solution of to develop a probabilistic prediction algorithm, named Eig-DRPP. The pseudo-code is presented in Algorithm 2. Second, although the optimal predictive pdf is unique, the worst-case SDS is not. Compared to Theorem 2, there are relatively more restrictions on the set of worst-case SDSs. Third, when , i.e., the predictor has no ambiguity of , the solution of is and can be any feasible index. In this case, both the optimal predictive pdf and objective value in Theorem (4) coincide with those in Theorem (2). However, the sets of worst-case SDSs are not the same, due to the extra constraint for the expectation in Theorem (4).

Finally, the objective value at is a lower bound of . Since the objective value depends on through , one cannot directly use the dynamic programming (7) recursively to get a lower bound of .

Theorem 5 (Lower bound of and optimality gap of ).

Given an initial state-control pair and an upper bound of such that for each , there is

i) A lower bound of the optimal worst-case function is

where is the solution to with all being replaced by .

ii) The optimality gap between Eig-DRPP and the global optimal predictor is upper bounded as follows,

| (16) | ||||

Proof.

i) Notice that as increases, the ambiguity set enlarges, thus the optimal value of one-step DRPP under eigenvector constraint should monotonously decrease. Since the upper bound of , i.e., , is independent of , one can still use (7) to derive a lower bound.

ii) Subtracting this lower bound from the upper bound in Theorem 3, one immediately gets an upper bound of the optimality gap for Eig-DRPP. As illustrated in (16), comes from the upper bound, which is determined by and the determinant of . While comes from the lower bound, which is jointly determined by and the eigenvalues of by solving . ∎

Remark 6.

Similar to the upper bound, this lower bound can also be computed offline because it only depends on the ambiguity sets . It can be used to verify whether the current ambiguity set is too optimistic by comparing it with the real-time performance of an Eig-DRPP predictor in practice: if the scores always stay lower than this lower bound, one can suspect that the ambiguity set is too small.

VIII Experiments

In this section, we study a two-dimensional SDS with two dimensional control inputs. Though it may not be linear or time-invariant, we suppose the nominal model available to the predictor is a linear time-invariant (LTI) SDS. A series of experiments are conducted to explore four questions. i) Given an SDS subject to an ambiguity set, what are the performance advantages of Noise-DRPP and Eig-DRPP compared to a nominal predictor? ii) What can be exploited from the upper bound in Theorem 3 and the lower bound in Theorem 5 to adjust ambiguity set? iii) How much will the prediction performances be influenced by different control strategies? iv) How well do DRPP predictors perform in providing high probability confidence regions of the future states?

VIII-A Experiment Setup

Ambiguity set

Conditioned on a state-control pair at time step , the nominal state evolution function is given as

and the uncertainty between and the true is quantified by . The nominal support, nominal mean and covariance of the noise conditioned on are

and the uncertainty between and the real are quantified by and , respectively. Given the ambiguity set specified above, we simulate the real SDS by randomly choosing one from . Although the nominal model is an LTI model, the real model does not have to be LTI. This is because contains linear time-variant (LTV) and even nonlinear time-variant (NTV) models.

Simulation mechanism

In this section, three different simulation mechanisms are conducted, corresponding to LTI, LTV, and adversarial NTV models, respectively.

i) (LTI) Let be two independent random variables subject to the uniform distribution on , and let be another independent random variable subject to the uniform distribution on . We randomly generates for , then we simulate a LTI SDS as

where .

ii) (LTV) Similar to the LTI simulation, for each , let be random variables that are independent and identically distributed to . We randomly generates for each and , then we simulate a LTV SDS as

where .

iii) (Adversarial) In this case, the underlying SDS is no longer linear or pre-generated before prediction. It is allowed to adversarially choose an SDS in the ambiguity set at each step such that the prediction performance is minimized. Specifically, at each step, after a predictive pdf has been output by the predictor, the adversarial SDS uses the predictive covariance to choose a worst-case SDS as described in Theorem 4.

Predictors comparison

We study two different control strategies, the zero input and the linear quadratic regulation (LQR). Specifically, the zero input strategy means no control input is imposed, and the LQR control strategy is defined as a standard linear quadratic regulator based on the nominal linear model with and all set as identity matrices. Under each control strategy, we randomly generate 1,000 trajectories starting from the same initial state for each of the generation mechanisms. For the LTI and LTV mechanisms, since the ground-truth dynamics are known before prediction, we have an optimal predictor as the least upper bound of prediction performance. At each time step , let the nominal predictor predicts , and the optimal predictor predicts . Then, we compare the prediction performance among the nominal predictor, Noise-DRPP, Eig-DRPP, and the optimal predictor. For the adversarial mechanism, there is no explicit predefined optimal predictor because the the real SDS depends on the predictor. Therefore, we should compare the prediction performance among the nominal predictor, Noise-DRPP, and Eig-DRPP. In fact, as guaranteed by Theorem 4, when predictors are constrained by the eigenvector restriction, Eig-DRPP is the optimal predictor under this adversarial scenario.

VIII-B Results and Discussions

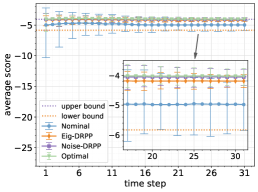

In Fig. 2, the prediction performances of different probabilistic predictors on different SDSs under different control strategies are visualized and compared with each other. (a-c) are performances under zero control strategy, and (d-f) are performances under LQR control strategy; the SDSs in (a) and (d) are the same, generated from the LTI mechanism. The SDSs in (b) and (e) are the same, generated from the LTV mechanism; the SDSs in (c) and (f) are the same, generated from the adversarial mechanism. For each SDS, we evaluate the temporal average scores of each predictor at each time step on 1,000 randomly sampled trajectories. At each step, the mean of 1,000 average scores is plotted as a dot, which is an approximation to the expected average score. The 5% and 95% percentiles of these average scores are plotted as an error bar, reflecting how concentrated the average scores are.

Performance advantages

Under each setting of SDS and control strategy, both Noise-DRPP and Eig-DRPP possess larger expected average scores at each time step compared to the nominal predictor. Since the ambiguity set for our simulation does not have too large an uncertainty, the scores of both DRPP predictors are close to the optimal predictor. Additionally, both DRPP predictors have more concentrated average scores at each time step. As the uncertainty of the real underlying SDS increases from LTI to adversarial, the performance gap between the DRPP predictors and the nominal predictor increases.

Upper and lower bounds

When the ambiguity set is not assured to be accurate, we can use the theoretical upper and lower bounds to adjust the ambiguity set, and then improve the performance of DRPP predictors. We provide two simple but efficient rules to adjust the ambiguity set in practice: i) If the expected score of any DRPP predictor is always larger than the upper bound, it means that the true SDS is less adversarial than the worst-case SDS in , thus the predictor can use a smaller ambiguity set for better scores. ii) If the expected score of an Eig-DRPP predictor is always smaller than the lower bound, it means that the true SDS is more adversarial than the worst-case SDS in , thus the Eig-DRPP predictor can use a larger ambiguity set for better scores.

Influence of control strategies

As we have discussed in Remark 4, when a predictor has no ambiguity of the system’s state evolution function, predicting the states is equivalent to predicting the noises. In this case, the choice of control strategies does not affect any probabilistic predictor. However, when a predictor does have ambiguity of the system’s state evolution function, different control strategies can greatly influence the performance of a probabilistic predictor. Since the ambiguity set at each step directly depends on the state-input pair, different control strategies will lead to quite different state-input trajectories, thus leading to quite different prediction performances. We can observe this phenomenon by comparing (a-c) with (d-f) in Fig. 2 respectively. An intuitive explanation is that the LQR control strategy regulates the system states towards the neighborhoods of , where the ambiguity of one-step state evolution is smaller than other states, thus the DRPP predictors perform better.

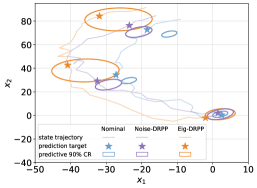

VIII-C Application: Predict Robust Confidence Regions

An immediate application of probabilistic prediction is to predict high-probability confidence regions for future states. Since the predictive pdfs of both Noise-DRPP and Eig-DRPP are Gaussian, one can easily derive an elliptical -confidence region for any . In Fig. 3, we have visualized the predictive 90% confidence regions for each predictor at certain time steps of a randomly generated trajectory.

Visualizing the behaviors of different probabilistic predictors on a trajectory, one can intuitively explain why DRPP predictors outperform the nominal predictor. The nominal predictor is overly confident, where the predictive pdfs are always too sharp to enclose the future states in their 90% CRs. In contrast, DRPP predictors have taken the ambiguity of noises into account, whose CRs are more robust to contain the prediction targets most of the time. If we further compare Noise-DRPP with Eig-DRPP, it seems that Eig-DRPP is more intelligent in adaptively changing the ellipses’ shapes. This is because Eig-DRPP has also considered the ambiguity of one-step state evolution, and the eigenvalues of predictive covariances are attained based on minimax optimization.

IX Conclusion

This paper presented a distributionally robust probabilistic prediction (DRPP) framework to address the challenges of predicting future states in stochastic dynamical systems (SDSs) with incomplete and imprecise distributional information. The proposed DRPP framework ensures robust performance by designing predictors that account for the worst-case scenarios over a predefined ambiguity set of SDSs. To overcome the inherent intractability of optimizing over function spaces, we reformulated the original functional-maximin problem into an equivalent convex-nonconcave minimax problem in Euclidean spaces.

While achieving the global optimal solution remains computationally prohibitive, we developed two practical suboptimal predictors: Noise-DRPP, obtained by relaxing the inner constraints, and Eig-DRPP, derived from relaxing the outer constraints. An explicit upper bound on the optimality gap between Eig-DRPP and the global optimal predictor was also established, providing theoretical guarantees on the quality of the suboptimality. Furthermore, leveraging the theoretical results, we proposed practical guidelines to assess and refine the size of the ambiguity set, balancing robustness and conservatism. Numerical simulations demonstrated the effectiveness and practicality of the proposed predictors across various SDSs, highlighting their ability to deliver distributionally robust probabilistic predictions under different settings.

The DRPP framework developed in this work is promising for future exploration of computationally efficient predictors under different types of scoring rules and ambiguity sets.

References

- [1] F. Sévellec and S. S. Drijfhout, “A novel probabilistic forecast system predicting anomalously warm 2018-2022 reinforcing the long-term global warming trend,” Nature Communications, vol. 9, no. 1, p. 3024, Aug. 2018.

- [2] E. Y. Cramer, E. L. Ray, V. K. Lopez, J. Bracher, A. Brennen, A. J. Castro Rivadeneira, A. Gerding, T. Gneiting, K. H. House, Y. Huang et al., “Evaluation of individual and ensemble probabilistic forecasts of covid-19 mortality in the united states,” Proceedings of the National Academy of Sciences, vol. 119, no. 15, 2022.

- [3] R. Buizza, “The value of probabilistic prediction,” Atmospheric Science Letters, vol. 9, no. 2, pp. 36–42, 2008.

- [4] J. Fisac, A. Bajcsy, S. Herbert, D. Fridovich-Keil, S. Wang, C. Tomlin, and A. Dragan, “Probabilistically safe robot planning with confidence-based human predictions,” Robotics: Science and Systems XIV, 2018.

- [5] T. Gneiting and M. Katzfuss, “Probabilistic forecasting,” Annual Review of Statistics and Its Application, vol. 1, no. 1, pp. 125–151, 2014.

- [6] T. Gneiting and A. E. Raftery, “Strictly proper scoring rules, prediction, and estimation,” Journal of the American Statistical Association, vol. 102, no. 477, pp. 359–378, Mar. 2007.

- [7] D. Landgraf, A. Völz, F. Berkel, K. Schmidt, T. Specker, and K. Graichen, “Probabilistic prediction methods for nonlinear systems with application to stochastic model predictive control,” Annual Reviews in Control, vol. 56, Jan. 2023.

- [8] D. Hinrichsen and A. J. Pritchard, “Uncertain systems,” in Mathematical Systems Theory I: Modelling, State Space Analysis, Stability and Robustness, D. Hinrichsen and A. J. Pritchard, Eds. Berlin, Heidelberg: Springer, 2005, pp. 517–713.

- [9] K. Schmüdgen, The Moment Problem, ser. Graduate Texts in Mathematics. Cham: Springer International Publishing, 2017, vol. 277.

- [10] E. Delage and Y. Ye, “Distributionally robust optimization under moment uncertainty with application to data-driven problems,” Operations Research, Jan. 2010.

- [11] P. S. Maybeck, Stochastic Models, Estimation, and Control. Academic press, 1982.

- [12] M. Roth and F. Gustafsson, “An efficient implementation of the second order extended kalman filter,” in 14th International Conference on Information Fusion, Jul. 2011, pp. 1–6.

- [13] M. Nørgaard, N. K. Poulsen, and O. Ravn, “New developments in state estimation for nonlinear systems,” Automatica, vol. 36, no. 11, pp. 1627–1638, Nov. 2000.

- [14] H. M. T. Menegaz, J. Y. Ishihara, G. A. Borges, and A. N. Vargas, “A systematization of the unscented kalman filter theory,” IEEE Transactions on Automatic Control, vol. 60, no. 10, pp. 2583–2598, Oct. 2015.

- [15] M. Šimandl and J. Duník, “Derivative-free estimation methods: New results and performance analysis,” Automatica, vol. 45, no. 7, pp. 1749–1757, Jul. 2009.

- [16] H. W. Sorenson and D. L. Alspach, “Recursive bayesian estimation using gaussian sums,” Automatica, vol. 7, no. 4, pp. 465–479, Jul. 1971.

- [17] D. Alspach and H. Sorenson, “Nonlinear bayesian estimation using gaussian sum approximations,” IEEE Transactions on Automatic Control, vol. 17, no. 4, pp. 439–448, Aug. 1972.

- [18] R. Chen and J. S. Liu, “Mixture kalman filters,” Journal of the Royal Statistical Society Series B: Statistical Methodology, vol. 62, no. 3, pp. 493–508, Sep. 2000.

- [19] N. Wiener, “The homogeneous chaos,” American Journal of Mathematics, vol. 60, no. 4, pp. 897–936, 1938.

- [20] J. A. Paulson and A. Mesbah, “An efficient method for stochastic optimal control with joint chance constraints for nonlinear systems,” International Journal of Robust and Nonlinear Control, vol. 29, no. 15, pp. 5017–5037, 2019.

- [21] S. Särkkä and L. Svensson, Bayesian Filtering and Smoothing, 2nd ed., ser. Institute of Mathematical Statistics Textbooks. Cambridge: Cambridge University Press, 2023.

- [22] J. Zhang, “Modern monte carlo methods for efficient uncertainty quantification and propagation: A survey,” WIREs Computational Statistics, vol. 13, no. 5, p. e1539, 2021.

- [23] M. Farina, L. Giulioni, and R. Scattolini, “Stochastic linear model predictive control with chance constraints – a review,” Journal of Process Control, vol. 44, pp. 53–67, Aug. 2016.

- [24] A. Mesbah, “Stochastic model predictive control: An overview and perspectives for future research,” IEEE Control Systems Magazine, vol. 36, no. 6, pp. 30–44, Dec. 2016.

- [25] R. D. McAllister and J. B. Rawlings, “Nonlinear stochastic model predictive control: Existence, measurability, and stochastic asymptotic stability,” IEEE Transactions on Automatic Control, vol. 68, no. 3, pp. 1524–1536, Mar. 2023.

- [26] J. Coulson, J. Lygeros, and F. Dörfler, “Distributionally robust chance constrained data-enabled predictive control,” IEEE Transactions on Automatic Control, vol. 67, no. 7, pp. 3289–3304, Jul. 2022.

- [27] C. P. Robert, The Bayesian Choice, ser. Springer Texts in Statistics. New York, NY: Springer, 2007.

- [28] D. T. Frazier, W. Maneesoonthorn, G. M. Martin, and B. P. M. McCabe, “Approximate bayesian forecasting,” International Journal of Forecasting, vol. 35, no. 2, pp. 521–539, Apr. 2019.

- [29] R. Koenker, “Quantile regression: 40 years on,” Annual Review of Economics, vol. 9, no. Volume 9, 2017, pp. 155–176, Aug. 2017.

- [30] L. Schlosser, T. Hothorn, R. Stauffer, and A. Zeileis, “Distributional regression forests for probabilistic precipitation forecasting in complex terrain,” The Annals of Applied Statistics, vol. 13, no. 3, pp. 1564–1589, Sep. 2019.

- [31] T. Duan, A. Anand, D. Y. Ding, K. K. Thai, S. Basu, A. Ng, and A. Schuler, “NGBoost: Natural gradient boosting for probabilistic prediction,” in Proceedings of the 37th International Conference on Machine Learning. PMLR, Nov. 2020, pp. 2690–2700.

- [32] D. Salinas, V. Flunkert, J. Gasthaus, and T. Januschowski, “DeepAR: Probabilistic forecasting with autoregressive recurrent networks,” International Journal of Forecasting, vol. 36, no. 3, pp. 1181–1191, Jul. 2020.

- [33] H. Tyralis and G. Papacharalampous, “A review of predictive uncertainty estimation with machine learning,” Artificial Intelligence Review, vol. 57, no. 4, p. 94, Mar. 2024.

- [34] H. E. Scarf, K. J. Arrow, and S. Karlin, A Min-Max Solution of an Inventory Problem. Rand Corporation Santa Monica, 1957.

- [35] G. Gallego and I. Moon, “The distribution free newsboy problem: Review and extensions,” Journal of the Operational Research Society, vol. 44, no. 8, pp. 825–834, Aug. 1993.

- [36] M. Parry, A. P. Dawid, and S. Lauritzen, “Proper local scoring rules,” The Annals of Statistics, vol. 40, no. 1, Feb. 2012.

- [37] F. Lin, X. Fang, and Z. Gao, “Distributionally robust optimization: A review on theory and applications,” Numerical Algebra, Control and Optimization, vol. 12, no. 1, pp. 159–212, 2022.

- [38] R. S. Sutton and A. G. Barto, Reinforcement learning: An introduction. MIT press, 2018.

- [39] R. Bellman, “Dynamic programming,” Science, vol. 153, no. 3731, pp. 34–37, Jul. 1966.

- [40] P. M. Pardalos and G. Schnitger, “Checking local optimality in constrained quadratic programming is np-hard,” Operations Research Letters, vol. 7, no. 1, pp. 33–35, Feb. 1988.

- [41] C. Jin, P. Netrapalli, and M. Jordan, “What is local optimality in nonconvex-nonconcave minimax optimization?” in Proceedings of the 37th International Conference on Machine Learning. PMLR, Nov. 2020, pp. 4880–4889.

- [42] C. Daskalakis, S. Skoulakis, and M. Zampetakis, “The complexity of constrained min-max optimization,” in Proceedings of the 53rd Annual ACM SIGACT Symposium on Theory of Computing, ser. STOC 2021. New York, NY, USA: Association for Computing Machinery, Jun. 2021, pp. 1466–1478.

- [43] B. Grimmer, H. Lu, P. Worah, and V. Mirrokni, “Limiting behaviors of nonconvex-nonconcave minimax optimization via continuous-time systems,” in Proceedings of The 33rd International Conference on Algorithmic Learning Theory. PMLR, Mar. 2022, pp. 465–487.

- [44] T. Fiez, B. Chasnov, and L. Ratliff, “Implicit learning dynamics in stackelberg games: Equilibria characterization, convergence analysis, and empirical study,” in Proceedings of the 37th International Conference on Machine Learning. PMLR, Nov. 2020, pp. 3133–3144.

Appendix A Discussion on Canonicalization

If we align with and optimize over , i.e., , the Lagrange function would have been

However, minimizing the Lagrange function over and cannot lead to further useful analysis. On the one hand, if is minimized before , it will lead to constraints . Then the minimization over is constrained by these constraints, which makes it impossible to derive explicit expression for an optimal . On the other hand, minimizing before also fails to derive an explicit expression for the optimal because the form of remains undetermined. Therefore, we should not formulate the inner minimization over .

Appendix B Duality of Problem (8)

Proof.

The Lagrange function for (8) is defined as

where the Lagrange multipliers satisfy and for . Let be the set of positive measures on . Minimizing over , , and we have

Equation holds when , otherwise there is equals . Equation holds because

Combining those conditions and the previous multipliers’ constraints, we have the dual form completed. ∎

Appendix C Proof of Theorem 1

Proof.

Step I. We leverage the separable structure of to reformulate the problem. For ease of notation, we use the to summarize all the Lagrange multipliers except for , i.e.,

First, the objective function can be separated as where . Second, only the second constraint concerns . Therefore, given any group of feasible satisfying the last three constraints in , the optimizing over and is equivalent to solving the following problem:

| (17) | ||||

Step II. We exploit the last constraint in (17) to analyze the lower bound of . Notice that

| (18) | ||||

one immediately has that is semi-definite positive. Because and are both semi-definite, we have is symmetric and there exists an orthogonal matrix and an diagonal matrix such that . Then we have

where the second equation follows by letting . If there is an index such that , then , which contradicts (18). It follows that can be lower bounded by , because

| (19) | ||||

Step III. We use the condition that makes (19) equal to obtain the optimality condition. Notice that the equality of (19) holds only when there is for almost everywhere. Therefore, given any feasible , the objective function in problem (17) can only attain its maximum when is a Gaussian distribution almost everywhere.

Finally, considering that the objective of can be reformulated as , and a necessary optimal condition for the inner optimization is that is subject to Gaussian almost everywhere, we have the proof completed. ∎

Appendix D Derivation of (10)

Proof.

Facilitated by Theorem 1, we can first parameterize by and such that

Substituting it into the constraints of , there is

Then we have , , and . Substituting these equations and the parameterized into the objective of problem , we get

Substituting the above equations into the problem , we have the problem (10) derived. ∎

Appendix E Proof of Lemma 1

Proof.

Since the objective function is linear with respect to and the second constraint provides an upper bound for it, one immediately has the worst-case covariance should satisfy that

Let , problem can be further equivalent to

| (20) | ||||

| s.t. |

Suppose , we have can also attain the maximum. Then we have

where the last inequality is strict when . Therefore, we have , which means that .

∎

Appendix F Duality of the moment problem (14)

Proof.

The Lagrange function for (14) is defined as

where the Lagrange multipliers satisfy and . Let be the set of positive measures on . Minimizing over and , one has

where the second equality holds when and hold simultaneously, otherwise there is . Combining these two conditions and the previous multipliers’ constraints, we have attained the dual problem . ∎

Appendix G Proof of Theorem 2

Proof.

The proof is organized in three parts: first, we use Theorem 1 to parameterize by Gaussian distribution and reformulate the dual problem to a finite-dimensional optimization; second, we solve the optimal predictive pdf ; third, we substitute into the original problem to obtain the worst-case SDS by solving the inner minimization.

Step I. Using the necessary optimality condition for , we can parameterize by and such that

Moreover, from the constraint , we get

which indicates that , , and . Next, from the constraint one can get . Substituting the parameterized and the above equations into problem , there is

As a result, can be transformed into a finite-dimensional optimization problem as follows:

| (21) | ||||

Step II. Notice that the constraint and the relationship reveals that and are one-on-one, one can optimize on either of them. In this proof, we choose to maximize over and substitute in the objective by . Next, notice that the constraints for only depend on , and it can be separated from the constraints for , we can first maximize over and , then over , finally over . In other words, the objective of problem (21) can be reformulated as

| (22) | ||||

The solution of the first maximization is because

and .

The solution of the second maximization is . Notice that is equivalent to , therefore and . Again, and . Additionally, indicates that .

The solution of the third maximization is . This is because where . Notice that and monotonously decrease on . Therefore, is concave, which can be maximized at where , i.e., . Finally, we have equals the inverse of , which is . Therefore, the optimal predictive pdf is

| (23) |

Step III. Substituting the optimal into the primal maximin problem , we have the inner minimization problem as

| (24) | ||||

The objective is minimized when equals , which means the solution for is the set

and the objective value at is

∎

| Tao Xu (S’22) received a B.S. degree in the School of Mathematical Sciences from Shanghai Jiao Tong University (SJTU), Shanghai, China. He is currently working toward the Ph.D. degree with the Department of Automation, SJTU. He is a member of Intelligent of Wireless Networking and Cooperative Control Group. His research interests include probabilistic prediction, distributionally robust optimization, dynamic games, and robotics. |

| Jianping He (SM’19) is currently an associate professor in the Department of Automation at Shanghai Jiao Tong University. He received the Ph.D. degree in control science and engineering from Zhejiang University, Hangzhou, China, in 2013, and had been a research fellow in the Department of Electrical and Computer Engineering at University of Victoria, Canada, from Dec. 2013 to Mar. 2017. His research interests mainly include the distributed learning, control and optimization, security and privacy in network systems. Dr. He serves as an Associate Editor for IEEE Trans. Control of Network Systems, IEEE Trans. on Vehicular Technology, IEEE Open Journal of Vehicular Technology, and KSII Trans. Internet and Information Systems. He was also a Guest Editor of IEEE TAC, International Journal of Robust and Nonlinear Control, etc. He was the winner of Outstanding Thesis Award, Chinese Association of Automation, 2015. He received the best paper award from IEEE WCSP’17, the best conference paper award from IEEE PESGM’17, and was a finalist for the best student paper award from IEEE ICCA’17, and the finalist best conference paper award from IEEE VTC20-FALL. |