Distributed Node-Specific Block-Diagonal LCMV Beamforming in Wireless Acoustic Sensor Networks

Abstract

This paper derives the analytical solution of a novel distributed node-specific block-diagonal linearly constrained minimum variance beamformer from the centralized linearly constrained minimum variance (LCMV) beamformer when considering that the noise covariance matrix is block-diagonal. To further reduce the computational complexity of the proposed beamformer, the Sherman-Morrison-Woodbury formula is introduced to compute the inversion of noise sample covariance matrix. By doing so, the exchanged signals can be computed with lower dimensions between nodes, where the optimal LCMV beamformer is still available at each node as if each node is to transmit its all raw sensor signal observations. The proposed beamformer is fully distributable without imposing restrictions on the underlying network topology or scaling computational complexity, i.e., there is no increase in the per-node complexity when new nodes are added to the networks. Compared with state-of-the-art distributed node-specific algorithms that are often time-recursive, the proposed beamformer exactly solves the LCMV beamformer optimally frame by frame, which has much lower computational complexity and is more robust to acoustic transfer function estimation error and voice activity detector error. Numerous experimental results are presented to validate the effectiveness of the proposed beamformer.

keywords:

distributed beamforming, node-specific, speech enhancement , wireless acoustic sensor networks.1 Introduction

Wireless acoustic sensor networks (WASNs) generally consist of several nodes, where each node has one or many sensors, a processing unit, and a wireless communication module allowing them to exchange data. Compared with the traditional and single sensor array [1], WASNs can physically cover a wider area, which have more opportunity to select a subset of nodes close to some target sources, and thus higher signal-to-noise ratio (SNR) and direct-to-reverberant ratio (DRR) can be expected [2, 3]. As the next-generation technology for audio acquisition and processing, WASNs have many potential applications, such as binaural hearing aids [4, 5, 6], (hands-free) speech communication systems [7, 8, 9], and acoustic monitoring systems [10, 11, 12, 13].

In principle, all the sensor signal observations from different nodes can be transmitted to a fusion center, and then an optimal beamformer can be computed, where this approach is known as the centralized estimation [14, 15, 16]. The centralized estimation requires a large communication bandwidth, a large transmission power consumption at the individual nodes, and a nonnegligible computational complexity at the fusion center. However, both the power and the communication bandwidth resources in WASNs are often limited. Furthermore, in many WASNs applications, the fusion center may be undesirable due to privacy considerations [17]. A trivial solution is obtained by only utilizing the local sensor signal observations at a single node without any communication link with other nodes. Whereas, this solution cannot utilize the entire information from the WASNs and hence is only sub-optimal. A promising solution is to develop a suitable distributed approach, which often has three stages [18]. At the first stage, each node processes its own sensor signal observations to obtain some compressed signals. At the second stage, only these compressed signals are transmitted to reduce the communication bandwidth. At the last stage, a target signal is obtained by merging all these compressed signals properly.

Distributed speech enhancement algorithms can be roughly divided into two main categories: node-specific and non-node-specific. For the node-specific estimation algorithms, each node in the WASNs can estimate a different target signal, that is to say, a target source for one node may be an interfering source for another node, and vice versa. The node-specific estimation problem is intrinsic in a blind beamforming framework where the acoustic transfer functions (ATFs) between the target sound sources and the sensors are generally unknown. For these blind beamformers, some subspace estimation algorithms can be used to estimate the subspace of the ATFs [19, 20], and then the target signal can be estimated as it is observed at a reference sensor. If each node in the WASNs chooses its own local sensor as reference, the spatial information of the target source can be preserved. Therefore, the node-specific estimation algorithms are preferred for many practical applications [21].

Several non-node-specific speech enhancement algorithms have been presented in [17, 18], and [22], where different nodes shared a common reference sensor. In [17], each node was assumed to have one sensor and a distributed delay and sum (DDS) beamformer in a randomly connected network was proposed. The DDS with the randomized gossip algorithm [23] is an iterative algorithm for solving averaging consensus problems in a distributed way, where all nodes’ outputs are expected to converge to the same optimal average value. The DDS typically needs many iterations to converge to the optimal solution, as well as multiple (re)-broadcasts of the intermediary variables. The DDS is more suitable for estimating the fixed or slowly varying parameters [24]. In [18], a time-recursive distributed generalized sidelobe canceler (DGSC) was proposed for a fully connected network. The DGSC has two components including constraints subspace and its corresponding null-space, and updates the filter coefficients during speech-absent segments. The DGSC needs to transmit -dimensional raw signal observations, with the number of target sources, in addition to the compressed signals to construct the constraints subspace component. The DGSC requires a larger communication bandwidth than those distributed algorithms transmitting only the compressed signals when one aims to get the estimation of the target source signals separately. In [22], the proposed block-diagonal LCMV (BD-LCMV) beamformer utilizes a set of linearly equality constraints to reduce the full-element noise sample covariance matrix to a block-diagonal form, and the imposed block-diagonal structure of the estimated sample covariance matrix results in a naturally separable objective function. Then the distributed optimal problem can be solved by the primal-dual method of multipliers (PDMM) [25]. The BD-LCMV requires lots of iterations to achieve high performance, and therefore we need to make a trade-off between per-frame optimality and communication overhead in practice.

Several node-specific estimation algorithms have been proposed in [4, 5, 14, 24, 26, 27], and [28], where two main criteria including the minimum mean square error (MMSE) and the minimum variance distortionless response (MVDR) are used. The mean square error (MSE) between the output signal and the desired signal comprises two components, namely the desired signal distortion and the residual noise [18]. The MVDR, first proposed by Capon [29], minimizes the noise power at the output signal while maintaining a distortionless response towards the desired direction. Er and Cantoni [30] generalized the single distortionless response to a set of linear constraints, and denoted this beamformer as LCMV.

In [4], a distributed node-specific speech enhancement algorithm was proposed using the MMSE criterion in a 2-node network for binaural hearing aids applications. The node-specific estimation is required to preserve the auditory cues at the two ears. This method relies on the speech-distortion-weighted multichannel Wiener filter (SDW-MWF), and was referred to as the distributed MWF (DB-MWF). In [5], an iterative distributed MVDR (DB-MVDR) beamformer was introduced for a similar binaural hearing aids setting. Both methods assume a single target source to obtain convergence and optimality, and are equivalent when the trade-off factor between noise reduction and distortion is zero in the SDW-MWF. A more general case was presented in [24], [26], and [27], where multiple target sources and nodes are considered in a so-called distributed adaptive node-specific signal estimation (DANSE) scheme. The scheme considers each node in the WASNs as a data sink, gathering the compressed signals from other nodes, and then estimates the optimal filter coefficients in an iterative fashion. In [26] and [27], the algorithms were proposed for a fully connected network and a network with a tree topology (T-DANSE), respectively. In [24], the algorithm is topology-independent (TI-DANSE). The TI-DANSE algorithm has a slower convergence rate compared to [26] and [27], and requires a larger number of frames to obtain near optimal performance [22]. In [14], a distributed LCMV beamformer referred to as LC-DANSE was proposed by combining the DANSE scheme with the LCMV beamformer. For the DANSE algorithms in [24], [26], and [27], they attempt to align the signal components from the same source in different microphone signals. However, the alignment of the signal components is only possible when the filter length is at least twice the maximum time difference of arrival (TDOA) between all the sensors. This means that in general, the noise reduction performance degrades with increasing TDOA and a fixed filter length [6]. For the LC-DANSE algorithm, the raw signal observations at one node and the compressed signals from other nodes are concatenated into a new vector. In fact, the new vector still has very high dimensions, especially when the number of target sources is large, and requires a high complexity to compute the inverse of its covariance matrix. Besides, both the DANSE and the LC-DANSE algorithms are time-recursive and require multiple frames to reach optimality, which incurs a slow tracking performance [22]. In [28], each node was assumed to have more than one sensor. The recursive estimation of the inverse noise or noisy sample covariance matrix is structured as a consensus problem and is realized in a distributed manner via the randomized gossip algorithm for arbitrary topologies, similar to [17]. In each iteration, each node needs to transmit -dimensional, with the total number of sensors in the WASNs, signals to obtain the product of the inverse sample covariance matrix and the -dimensional sensor signal observations. The communication cost may be higher than that of the centralized algorithm, and the convergence error accumulates across time when the aforementioned product is not accurately estimated.

In this paper, we propose a distributed node-specific block-diagonal linearly constrained minimum variance (DNBD-LCMV) beamformer. The DNBD-LCMV utilizes a set of linear equality constraints to reduce the full-element noise sample covariance matrix to a block-diagonal form and then its analytical solution can be derived from the centralized LCMV beamformer directly. The inverse noise sample covariance matrix at each node is proposed to update by the Sherman-Morrison-Woodbury formula [31] and is used to compute the exchanged signals. The proposed DNBD-LCMV can significantly reduce the number of signals exchanged between nodes, yet obtains the optimal LCMV beamformer at each node as if each node can transmit its all raw sensor signal observations. The DNBD-LCMV is fully distributable for any network topology and is completely scalable, i.e., there is no increase in the per-node computational complexity when new nodes are added to the networks. Compared with the state-of-the-art distributed node-specific algorithms, the DNBD-LCMV exactly solves the LCMV beamformer optimally in each frame, which has much lower computational complexity and is more robust to ATF estimation error and voice activity detector (VAD) error.

The remainder of this paper is organized as follows. In Section 2, the signal model is introduced, and the centralized LCMV is also presented in this section. In Section 3, the DNBD-LCMV and extension of DNBD-LCMV are shown, and its computational complexity and communication bandwidth are analyzed in Section 4. The experimental results are presented in Section 5 and some conclusions are given in Section 6.

2 Preliminaries

2.1 Signal Model

We consider the WASNs with sensor nodes, where the set of nodes is denoted as . Each node is equipped with microphones and thus the total number of microphones is . The distributed speech enhancement problem is often formulated in the short-time Fourier transform (STFT) domain and the vector is given by

| (1) |

where denotes the frequency index, and denotes the time-frame index. is an vector consisting of locally received microphone signals at the th node, and the superscript denotes the transpose operator. can be modeled as

| (2) |

where is a signal vector containing speech sources, is a noise vector, and

| (3) |

is a full-rank ATF matrix. In particularly, is the ATF matrix between the speech sources and the microphones at the th node. In the following, can be approximated as time-invariant in each frame and all derivations refer to a single frequency bin. The frame index and the frequency index will be omitted when no confusion arises.

2.2 Centralized LCMV Beamforming

For the centralized LCMV beamformer, node applies a -dimensional estimator to the -dimensional microphone signals to obtain the node-specific output , where the superscript denotes the conjugate transpose operator. can be obtained from the following general optimization problem [32][33]

| (4) |

where is the noise covariance matrix and denotes the expected value operator. is an desired response vector for the speech sources. Its entries usually consist of ones and zeros to preserve the target sources and eliminate other interfering sources simultaneously. The solution of (4) can be given by

| (5) |

The node-specific output can be expressed as

| (6) |

where and are the th entries of and , respectively. The superscript “” marker denotes the conjugate operator.

Equations (5) and (6) require each node to have access to all microphone signals to estimate and then obtain . Therefore, all the locally received signals at the th node need to be transmitted, which results in a large communication bandwidth and a large transmission power in the WASNs. Besides, the computational power grows dramatically with the increase of when computing the inversion of .

3 Method

In the previous section, it is assumed that each node transmits all its microphone signals to every other node in the WASNs such that each node can compute (5) and (6). We now look, instead, to the case where each node only transmits a linearly compressed version of its microphone signals by means of the distributed node-specific block-diagonal LCMV (DNBD-LCMV) beamformer. For the sake of an easy exposition, we first assume that the ATF matrix is known, and describe the DNBD-LCMV for a fully connected network, where each node is able to directly communicate with every other node in the WASNs. Then, all derivations are extended to a blind beamforming framework and any topology, similar to [22].

3.1 DNBD-LCMV with Known ATF Matrix

If the reverberation time of a room is moderate or large enough and/or the noise is far away from the microphones, i.e., the distance between the noise and the microphones is larger than the reverberation radius, its reverberant sound field is diffuse, homogenous, and isotropic [22] and [34]. In this case, the normalized correlation between two microphones and with distance at a frequency can be given by

| (7) |

where is the sound speed. The correlation can be roughly divided into two frequency regions: one highly correlated at low frequencies and the other much less correlated at high frequencies. The boundary between the two regions occurs at the first zero-crossing frequency . When the distance is large, the frequency is small. For example, equals 171.5 Hz for m.

For the WASNs, the microphones within a node are often nearby, whereas the microphones from different nodes are further away. The noise can be assumed to be uncorrelated across the different nodes [22], and then has the following block-diagonal form approximately

| (8) |

where is the noise covariance matrix at the th node, and is an null matrix.

Note that the noise covariance matrix used in the existing node-specific algorithms, such as [14, 24, 26, 27], and [28], is full-element. In the non-node-specific algorithm BD-LCMV [22], the block-diagonal is adopted and the weight vector associated with the th node is given by

| (9) |

where is a Lagrange multiplier shared by all nodes. Then the dual optimization problem is introduced to compute the optimal and is solved by PDMM. Finally, the signal is exchanged between nodes and the output is obtained by summing all .

In this paper, the proposed beamformer has a completely new scheme. From (5) and (8), the weight vector corresponding to the th-frame microphone signals can be expressed by

| (10) |

The -dimensional compressed signals and the matrix related to the th node are defined as

| (11) |

From (6) and (LABEL:BDWeight), the node-specific output can be rewritten as

| (12) |

where is the product of and ,

| (13) |

In particularly, and are obtained by summing all and all separately,

| (14) |

The above derivations assume that the noise covariance matrix at the th node is perfectly known. However, for practical applications, is unknown and needs to be estimated from the noisy observations. This often requires a hard or soft VAD to determine whether the speakers are present or not. It is noted that the design of a VAD mechanism is a hot research topic on its own, and is out of the scope of this paper [18, 24, 35]. In the following, two methods including non-recursive (moving average) smoothing and first-order recursive smoothing are considered to estimate .

3.1.1 Non-Recursive Smoothing Method

For the non-recursive smoothing method, the set of microphone signals frames and the set of noise-only frames for the current block of microphone signals are denoted by and , respectively. The block has frames microphone signals and denotes the cardinality of a set. The noise covariance matrix at the th node can be estimated using the set of noise-only frames,

| (15) |

Rigorously, all the estimated values need to use “” to distinguish them from their true values. Analogously to in (15), we omit “” in the following for the sake of brevity when no confusion arises. For the current block of microphone signals, only needs to be estimated once. From (11), also needs to be estimated once.

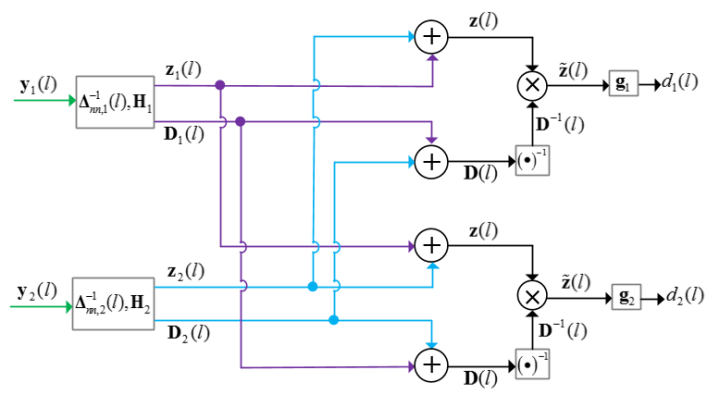

We assume that each block has frames microphone signals, i.e., . The scheme of the DNBD-LCMV with the

non-recursive smoothing method is shown in Fig. 1 and it

consists of the following steps:

1) Initialize: .

2) Each node performs the following operation cycle:

Collect the current block of microphone signals .

For the current block of microphone signals, is estimated with (15).

is applied to -dimensional microphone signals to obtain the -dimensional compressed signals with (11). Besides, can also be obtained.

and are transmitted. In particularly, only needs to be transmitted once for the current block of microphone signals.

Each node can have access to all and , and computes and with (14). Then, the inverse matrix is multiplied with to obtain . Finally, is applied to to obtain .

3) .

4) Return to step 2).

From Fig. 1, for each block of microphone signals, only needs to be transmitted once. Since is a Hermitian matrix and its main diagonal entries are real numbers, the transmission of results in a total of transmitted real numbers for each frequency bin per block. The transmission cost is slight and can be neglected.

3.1.2 First-Order Recursive Smoothing Method

For the first-order recursive smoothing method, the noise sample covariance matrix at the th node is updated by [36]

| (16) |

where when speech component is detected in the th-frame microphone signals ; and , otherwise. When the binary VAD decision is replaced by a soft speech presence probability (SPP) [37], can vary from 0 to 1, which will not be further considered here. is a forgetting factor ranging from 0 to 1.

When , is not updated, i.e., . Similar to Section 3.1.1, only the transmission of is required. While, for , i.e., , the current noise-only frame is included to update by the following equation

| (17) |

A naive solution is obtained by transmitting and for each noise-only frame, where a total of transmitted real numbers and the inversion operation of are required. The communication cost and computational load are huge and need to be further reduced.

With the help of the Sherman-Morrison-Woodbury formula [31], the inversion of in (17) can be expressed by

| (18) |

We further define , and as follows:

| (19) |

By substituting (18) and (19) into (11), we get

| (20) |

and

| (21) |

By substituting into , can be further written as

| (22) |

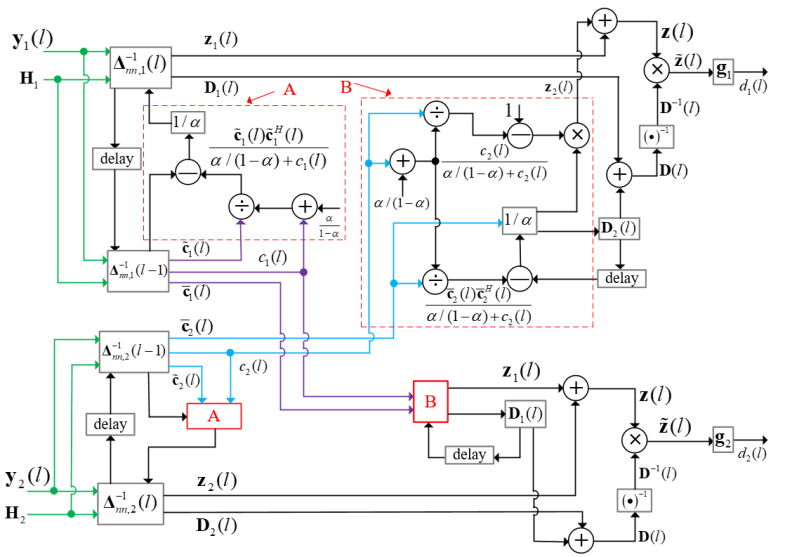

For the noise-only frame, i.e., , from (20), (21), and (22), the scheme

of the DNBD-LCMV with the first-order recursive smoothing method is shown in Fig. 2, and it consists of the following steps:

1) Each node performs the following operation cycle:

The inverse matrix is applied to -dimensional microphone signals to obtain the -dimensional vector and the real number with (19), where the -dimensional vector can also be obtained by using . Then, is estimated with (22).

and are transmitted.

Each node can have access to and , and reconstructs and with (20) and (21), respectively. Then, and are computed with (14). The inverse matrix is multiplied with to obtain . Finally, is applied to to obtain .

2) .

3) Return to step 1).

From Fig. 2, for each noise-only frame, the -dimensional vector and the real number are transmitted to reconstruct the matrix and the -dimensional vector at other nodes instead of the transmission of and . Besides, the inversion operation of is only required at the beginning. The communication cost and computational load are greatly reduced compared to the naive solution mentioned above.

3.2 DNBD-LCMV with Unknown ATF Matrix

In general, the ATF matrix is unknown and needs to be estimated online. Some subspace estimation algorithms can be used to estimate the column space of [19, 20]. Define to be any basis that spans the column space of ,

| (23) |

where is an matrix comprised of the projection coefficients of the original ATFs on the basis vectors.

When the speakers only change their positions slowly with respect to their initial positions, such as teleconferencing, we can estimate the column space at the initial stage (e.g., in a centralized way) [22]. This may cause some estimation error of if the speakers have some slight movements and therefore robust beamformer is preferred.

The goal for node is to estimate the target signal from the signal vector as observed by one of node ’s microphones, referred to as the reference microphone. Without loss of generality, the first microphone of each node is chosen as the reference microphone. For node , the index of its reference microphone is equal to . Accordingly, the weight vector in (5) can be rewritten as

| (24) |

where is the entry in the th row and th column of . and are the th entry of and that of , respectively. The node-specific output in (6) is modified by the following equation [14]

| (25) |

where is the entry in the th row and th column of .

It is obvious that different nodes have different desired response vectors due to different reference microphones, i.e., with . Therefore, each node can extract a node-specific target signal based on node-specific reference microphone to preserve the spatial information of the target source, such as time difference cues, which are very important in target source localization.

3.3 Extension of DNBD-LCMV

When the noise covariance matrix in (8) is replaced by the noisy covariance matrix , that is to say,

| (26) |

one can obtain the distributed node-specific block-diagonal linearly constrained minimum power (DNBD-LCMP) beamformer, which can be given by

| (27) |

where this beamformer does not require an estimate of the noise covariance matrix.

Particularly, when is an identity matrix , the DNBD-LCMV becomes the distributed node-specific delay and sum (DNDS) beamformer

| (28) |

where this beamformer only needs to be calculated once at the beginning. However, it cannot control the sound sources that are not included in .

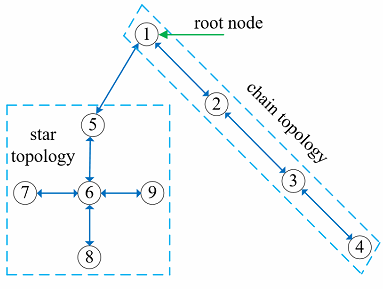

Similar to [22], the proposed beamformers including DNBD-LCMV/DNBD-LCMP, and DNDS can be implemented in the WASNs with arbitrary topologies. This can be achieved by a slight modification to the data transmission process of each node, where each node aggregates its transmitted data including and with the transmitted data from its neighbors. This will allow for the transmitted data to disperse through the WASNs by means of an in-network summation, and is demonstrated for the tree topology, as shown in Fig. 3.

We denote as the set of neighbors of node in the topology with node excluded. After the tree is formed with any tree formation algorithm [38, 39], one arbitrary node is assigned as the root node and the nodes only communicate with their neighbors (for example, and node 1 is the root node in Fig. 3). The following data-driven signal flow is executed for each block of microphone signals (non-recursive smoothing method is considered below):

1) Any leaf node, i.e., a non-root node having only a single neighbor, can immediately fire and transmits and to its single neighbor (toward the root node). Any non-root node with more than a single neighbor waits until it has received the transmitted data from all its neighbors except for a single neighbor that has yet to fire, say node , and then computes the following sum

| (29) |

Next, and are transmitted to node (toward the root node). This process is repeated at every non-root node in the tree until the root node is reached.

2) Once the data-driven signal flow has reached the root node, say node , the vector is obtained by

| (30) |

The vector is now flooded through the WASNs (away from the root node) so that it reaches every node, where the nodes simple act as relays to pass further through the tree. Finally, the output is obtained.

Based on the data-driven signal flow above, we see that any leaf node will transmit only a single block of compressed signals . Any non-leaf node will transmit a maximum of two blocks of signals including and , first toward the root node and then away from the root node. It is worth noting that and only need to be transmitted once for each block of microphone signals and can be ignored.

4 Analysis of Complexity and Bandwidth

This section analyzes the complexity and the bandwith of the proposed beamformer, and those of state-of-the-art beamformers are also presented. For the centralized LCMV/LCMP beamformer, each node needs to have access to the microphone signals from other nodes and therefore all microphone signals in the WASNs are transmitted. In general, we hope to get the estimation of the speech sources separately, where the constraint vector should be modified to an matrix with only one non-zero entry per column. Without loss of generality, we assume that each node has microphones. We need to note that the cost discussed below does not include the overhead associated with those algorithms exploiting a VAD.

The total number of transmissions related to the communication bandwidth is dependent not only on the choice of the beamformer but also on the WASNs topology. As such, it is difficult to analytically bound this transmission cost for any network topology. The comparison of the communication bandwidth and the computational complexity of different beamformers is performed in a fully connected network, where the centralized LCMV/LCMP, LC-DANSE [14], BD-LCMV/BD-LCMP [22], and the beamformers proposed in this paper have the same update rate.

We denote the transmission of one real number as one transmission. For the DNBD-LCMV/DNBD-LCMP with the first-order recursive smoothing method, each node needs to transmit a -dimensional complex vector and a real number , where the total number of transmissions is . Besides, an inversion of an matrix in (13) is performed, yielding a complexity of . For the DNDS, each node needs to transmit a -dimensional compressed signals and the total number of transmissions is . The computational complexity and the communication bandwidth of different beamformers are shown in Table 1.

| Beamformer | Complexity | Bandwidth |

|

||||

| Complexity | Bandwidth | ||||||

| Non-recursive smoothing method | LCMV/LCMP | 48 | |||||

| LC-DANSE | 16 | ||||||

|

16 | ||||||

|

16 | ||||||

| DNDS | (once)2 | (once)2 | 16 | ||||

| First-order recursive smoothing method | LCMV/LCMP | 48 | |||||

|

48 | ||||||

|

20 | ||||||

-

1

is the maximum number of iterations for the BD-LCMV/BD-LCMP.

-

2

the weight vector in (28) only needs to be calculated once at the beginning.

From Table 1, first, for the beamformers with the first-order recursive smoothing method, the DNBD-LCMV/DNBD-LCMP has the lowest complexity and bandwidth. For the beamformers with the non-recursive smoothing method, the DNBD-LCMV/DNBD-LCMP has the lowest complexity, and has the same bandwidth as the LC-DANSE and the BD-LCMV/BD-LCMP. Second, the bandwidth of the DNDS is not higher than other beamformers. In particular, its complexity is negligible because in (28) only needs to be calculated once at the beginning. Finally, the complexity of the proposed beamformers and the BD-LCMV/BD-LCMP is independent of the number of nodes and they are completely scalable, where there is no increase in the per-node complexity when new nodes are added to the networks.

5 Experimental Results

This section evaluates the performance of the proposed beamformers including DNBD-LCMV/DNBD-LCMP and DNDS and compares them with three state-of-the-art beamformers including centralized LCMV/LCMP and LC-DANSE [14]) by using three objective measures, which are SNR, short-time objective intelligibility (STOI) [40], and average TDOA error (ATE) of the speaker in a simulated room when the column space and VAD have errors for reverberation times and . The room impulse responses (RIRs) are generated by the image method [41, 42].

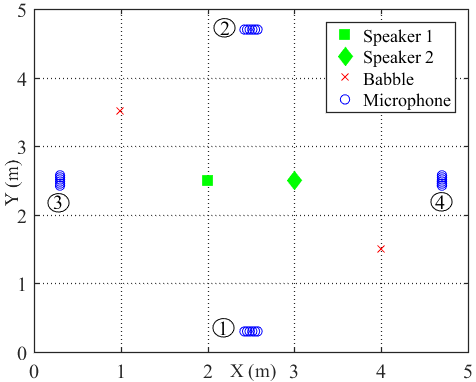

5.1 Experimental Setup

The dimensions of the simulated room is with reverberation times and . The WASNs consist of nodes, each having microphones forming a uniform linear array with an inter-microphone distance of 3 cm. Four point sound sources including speech sources and two babble noise sources are presented. The configuration of the nodes and sound sources are depicted in Fig. 4. The two speakers that produce speech sentences taken from the NOIZEUS corpus [43] have the same power. The two babble directional noise sources are mutually uncorrelated with power that is of the power of any speaker, i.e., 10 dB SNR. Besides the two babble directional noise sources, all microphone signals have an uncorrelated white Gaussian noise component with 30 dB SNR with respect to the superimposed speech signals in the first microphone of node 1. The sampling frequency is 8 kHz and the sound speed is .

In order to approximate the real acoustic scenario, these positions of the speakers, where the column space was estimated, were uniformly distributed over a sphere centered around the true source positions depicted in Fig. 4. These positions were referred to as training positions. For Speaker 1 and Speaker 2, its erroneous training positions had radii and ranging from 0 to 10 cm, respectively. Therefore, the column space estimation error can be modeled as a function of positional error between the training positions and the true source positions [22]. For every value of the positional error, the average performance of 100 different setups were measured. Each setup used the same source signals at the true source positions. However, a different set of training positions mentioned previously and different realizations of the microphone-self noise were used in each setup.

In the experiment, the non-recursive smoothing method was adopted to estimate and , and the estimation was performed on the entire length of signal [24, 44],

| (31) |

where is the set of frames of the entire time horizon, and is the set of frames of the noisy signals used to estimate . The set is used to simulate the VAD error, where the noisy frames containing speech component are erroneously detected as noise-only frames. The error can be measured by the following scalar

| (32) |

When is an empty set, i.e., and , an ideal VAD is considered.

The SNR is the ratio between the powers of the desired speech component and the noise, and can be defined as

| (33) |

where and denote the time domain beamformer output and the time domain noise component (also containing the residual competing speech component [18]) at the th node, respectively.

The TDOA error between the th node and the 1st node for the th speaker can expressed by

| (34) |

where is the location of the 1st microphone at the th node, is the location of the th speaker, and denotes the two-norm of a vector. is the theoretical TDOA and is the estimated TDOA based on the output signals of different nodes using the generalized cross-correlation phase transform (GCC-PHAT) [45]. More details about TDOA can be found in [46]. The ATE is defined by

| (35) |

For the following performance comparison, the first microphone of each node is chosen as the reference microphone and the experimental results for Speaker 1 are presented. In particularly, LC-DANSE [14] is time-recursive and converges to the solution of its centralized algorithm. Therefore, LC-DANSE is replaced by the centralized LCMV [22].

5.2 Robustness to Column Space Estimation Error

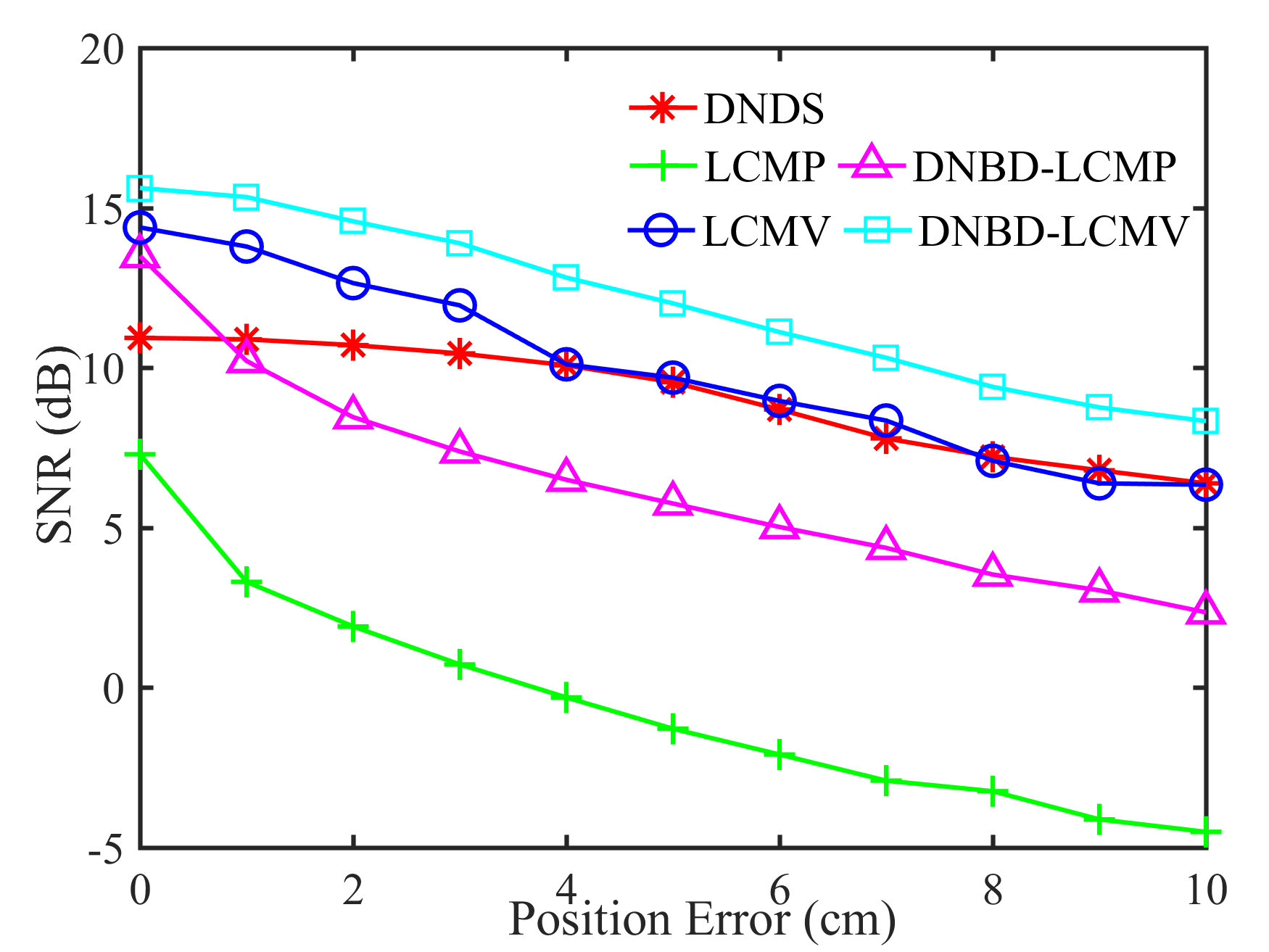

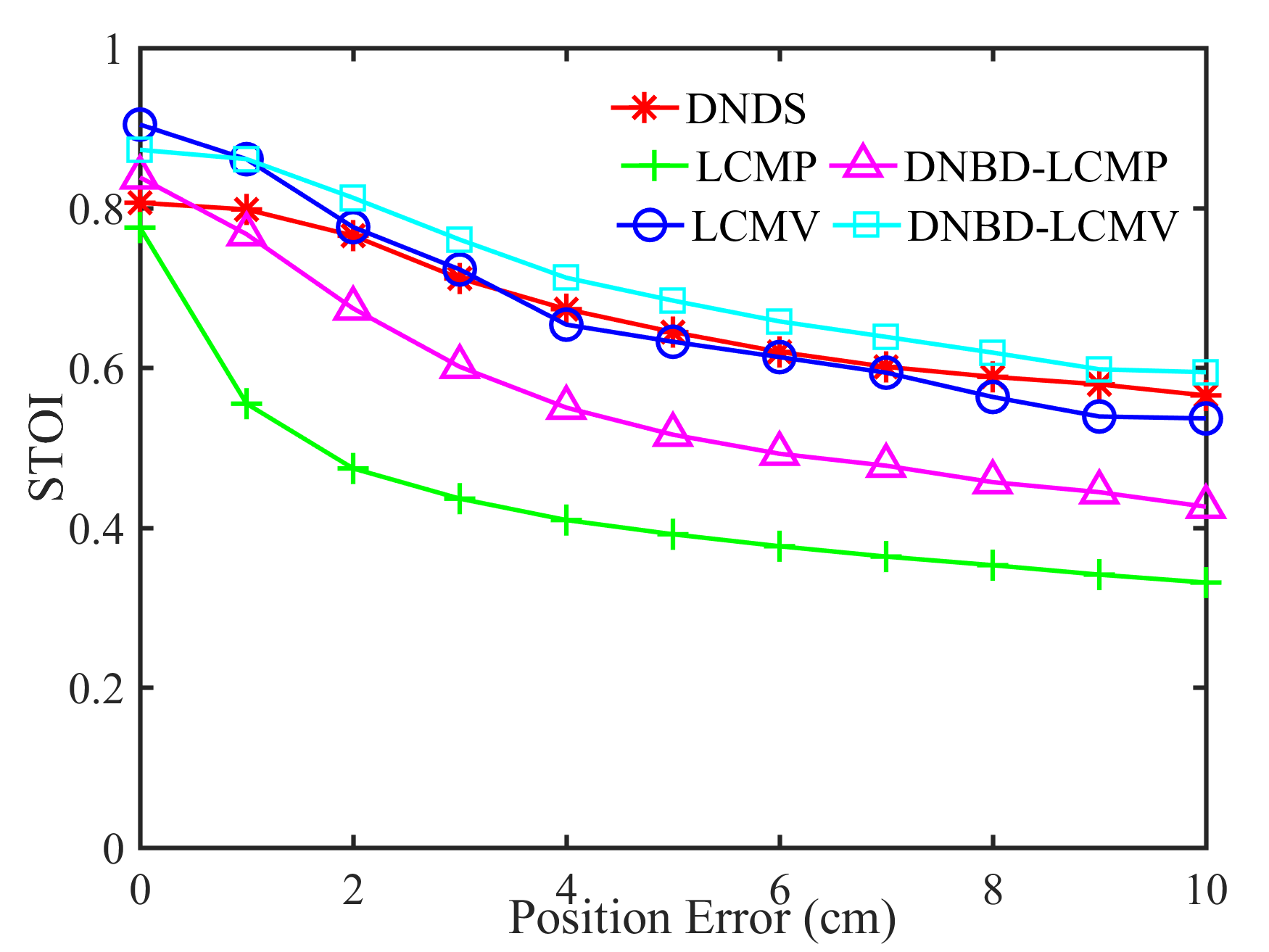

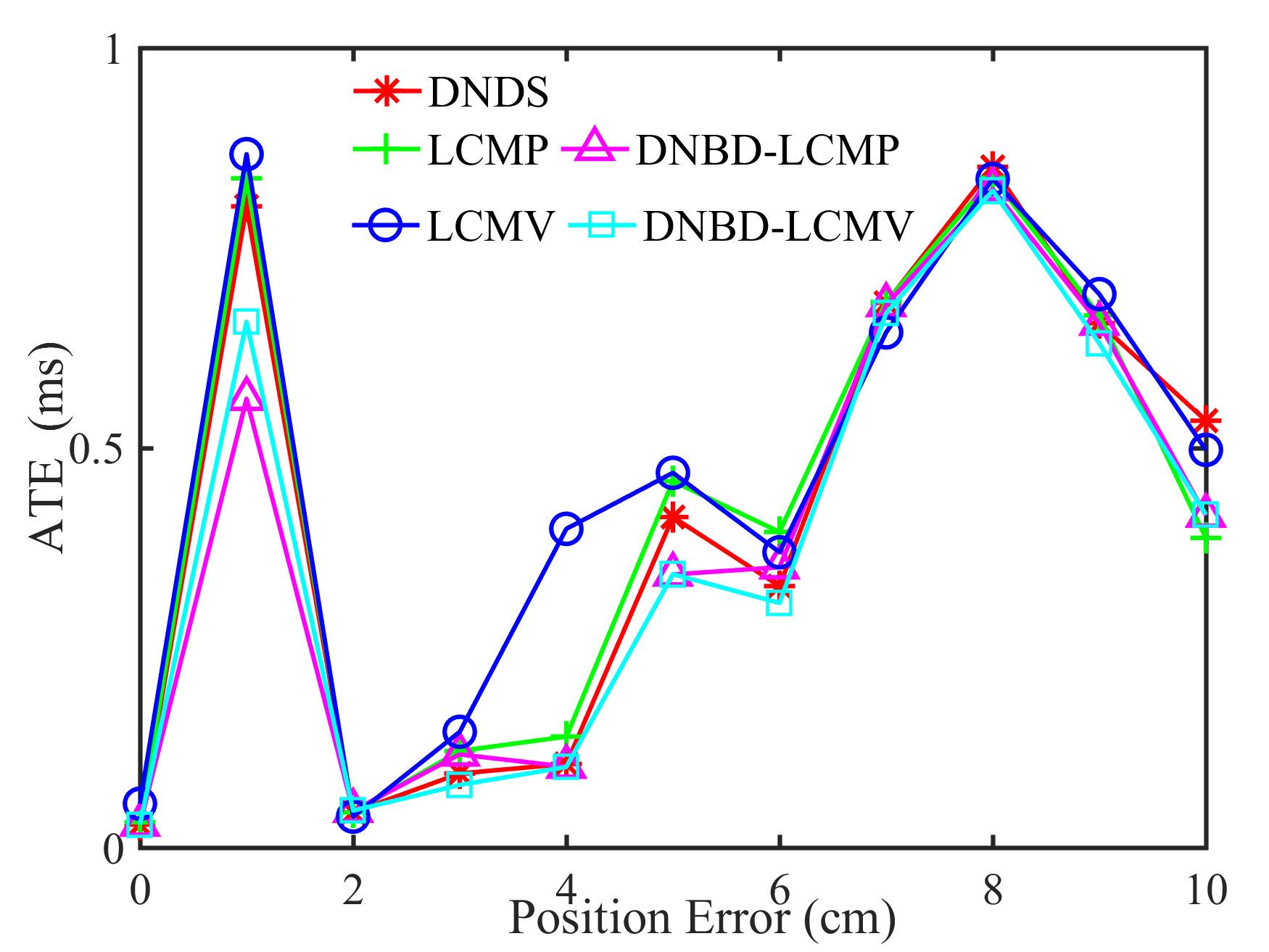

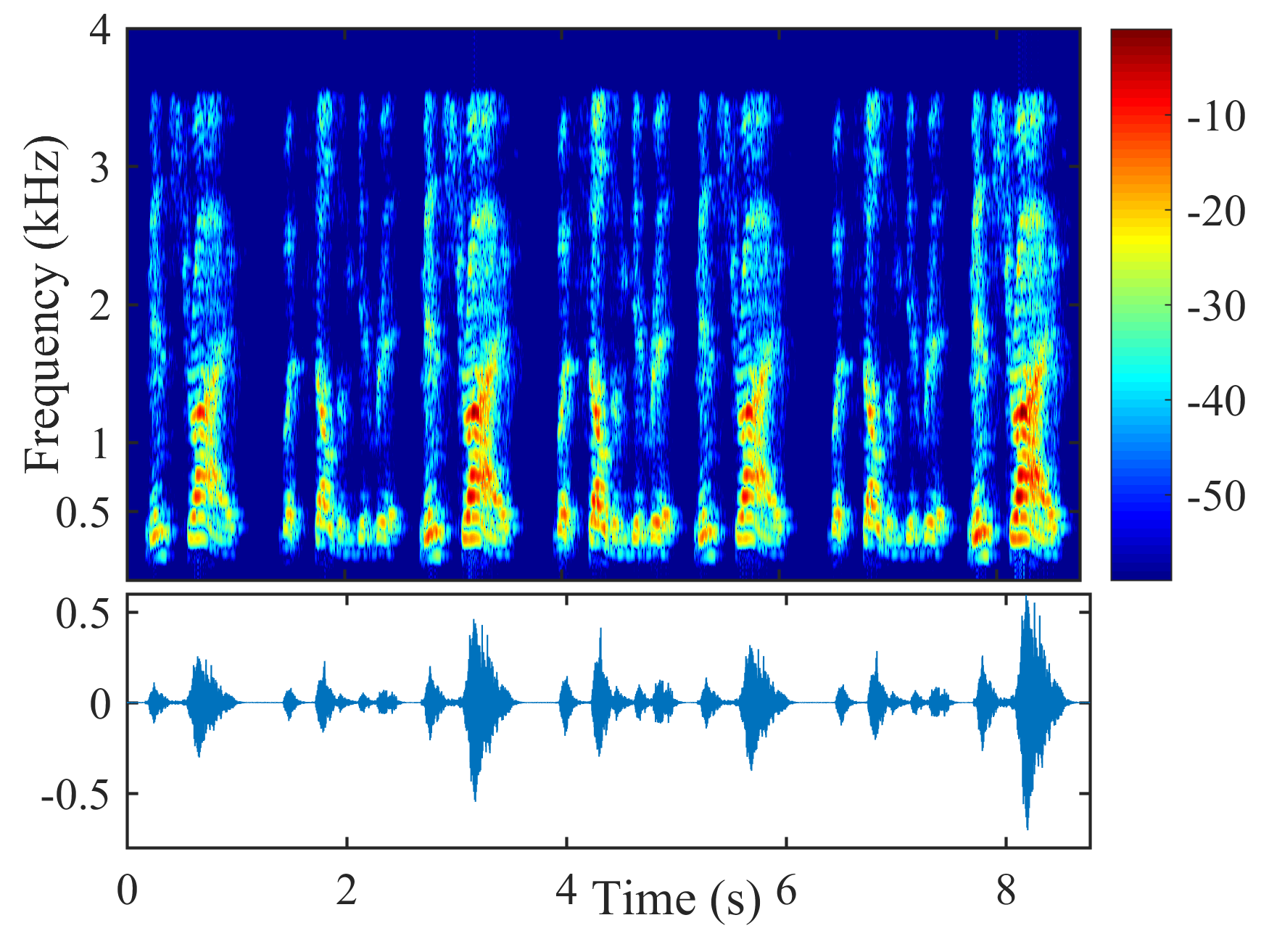

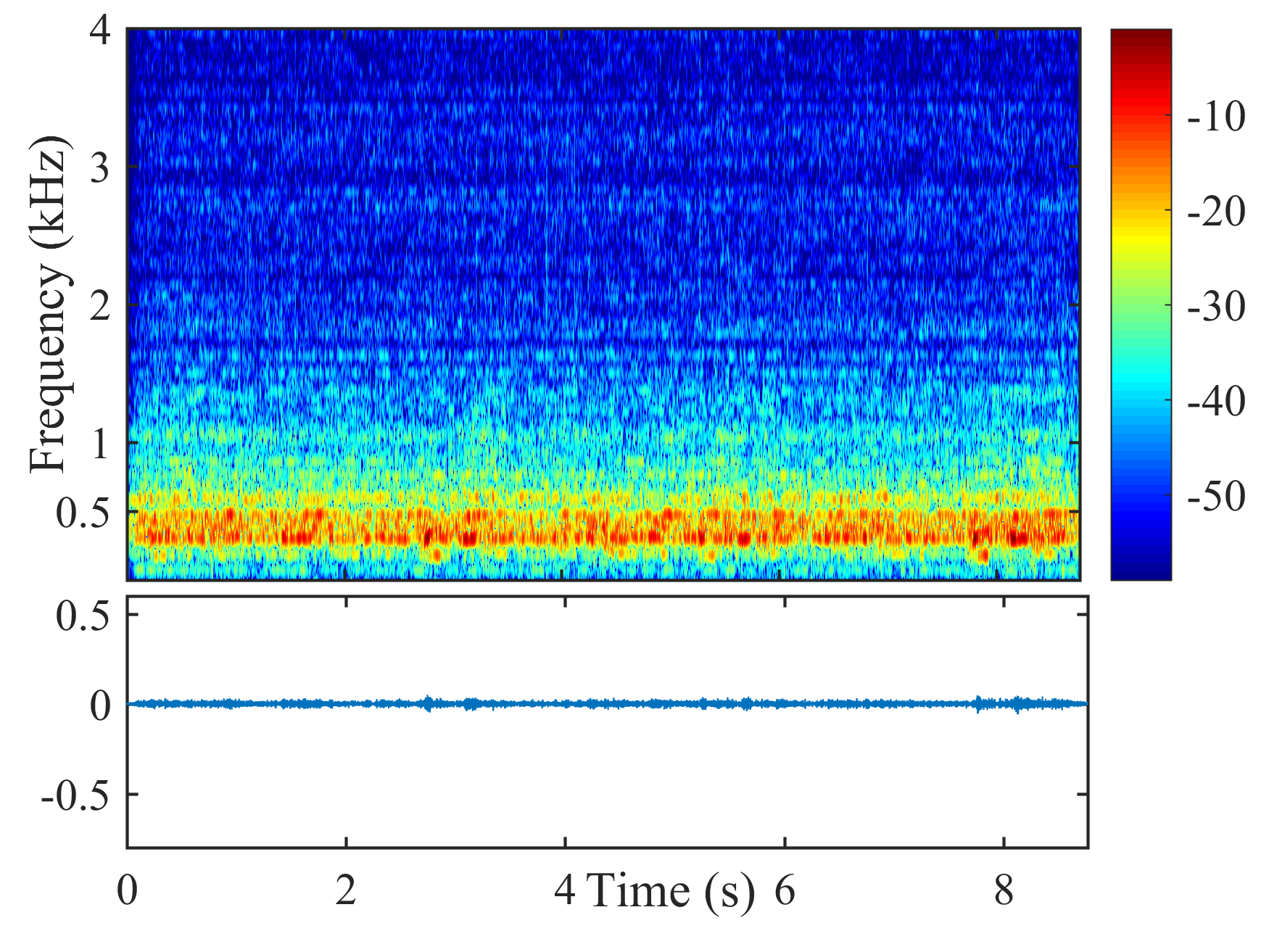

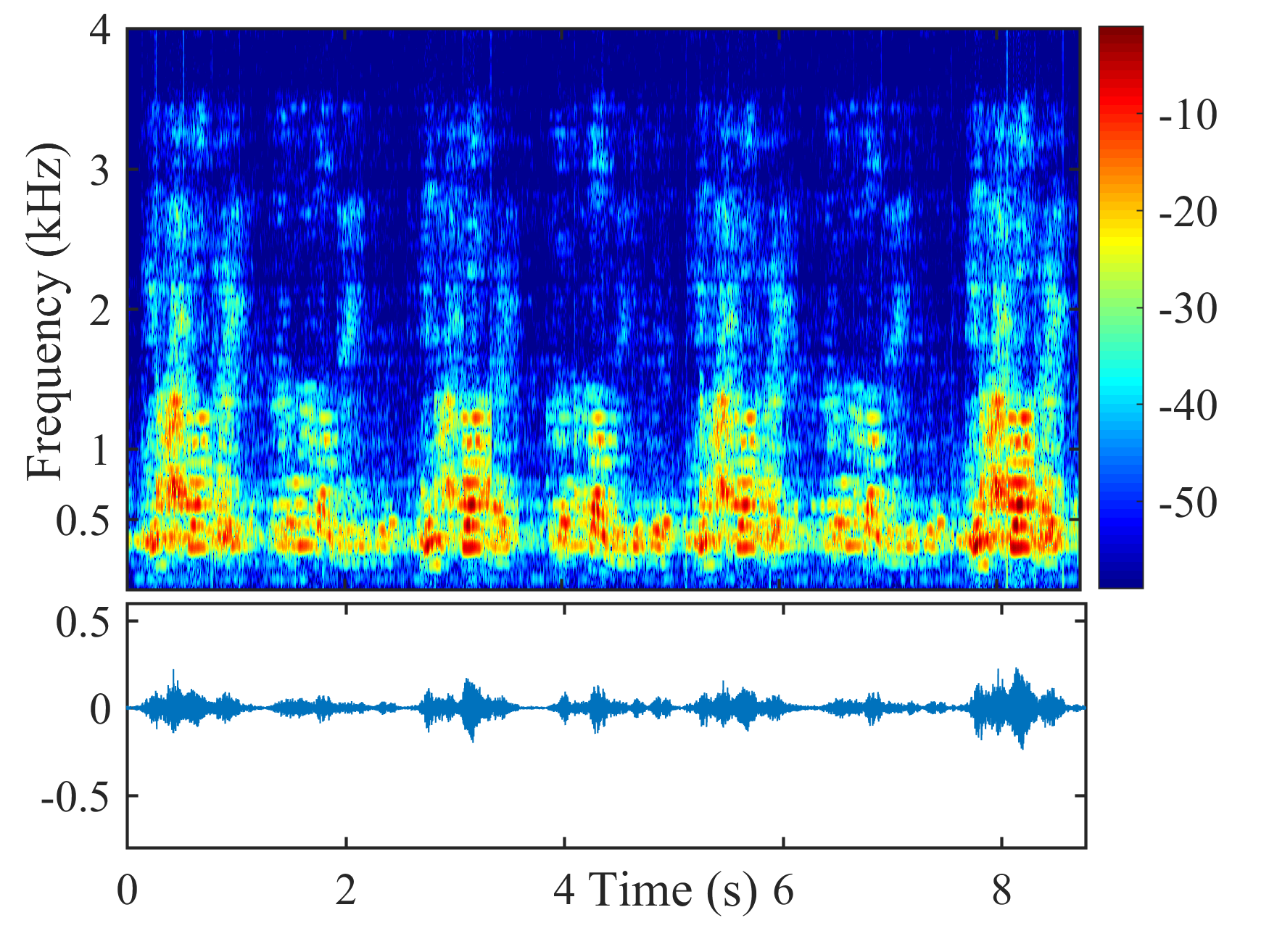

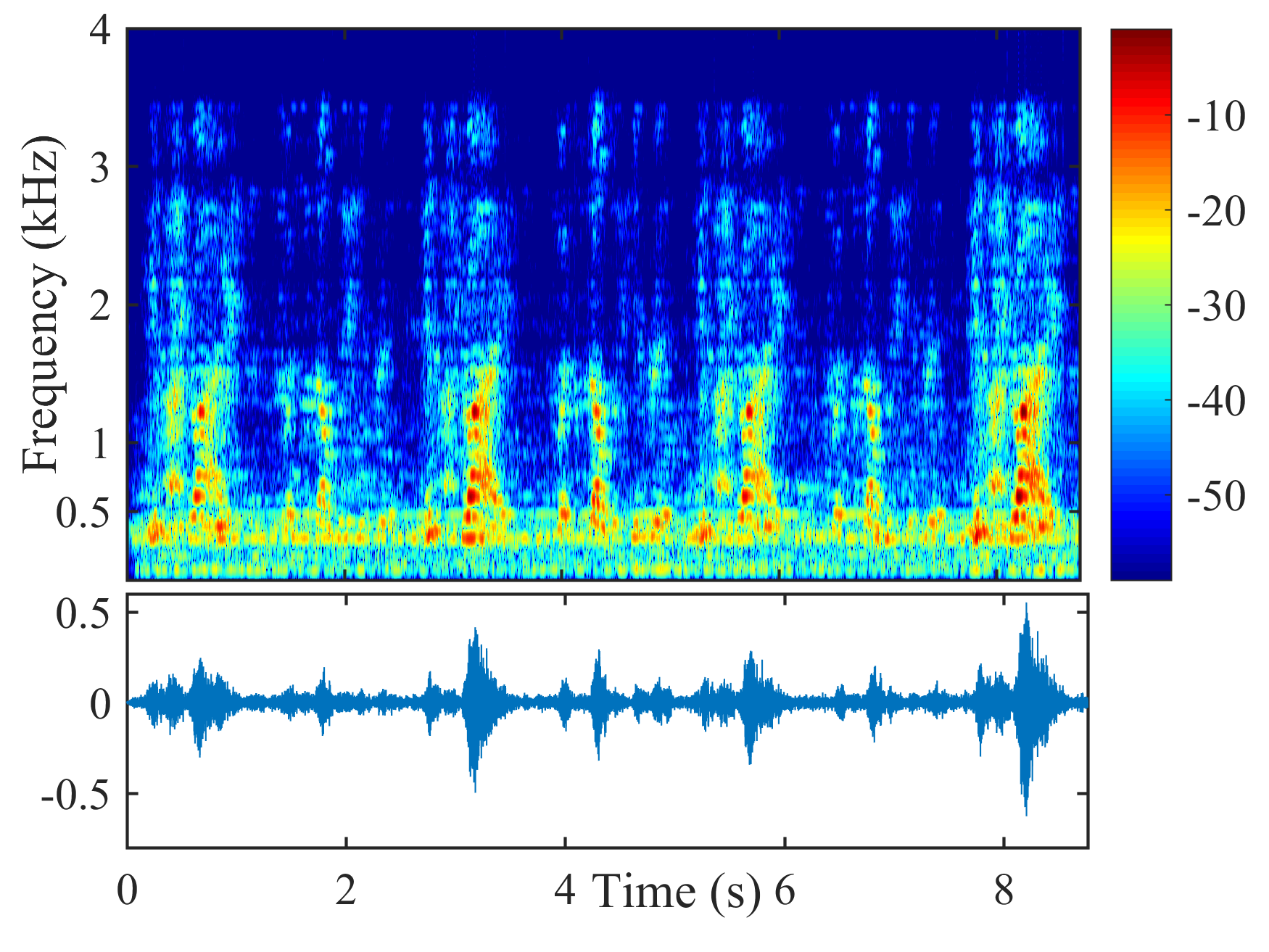

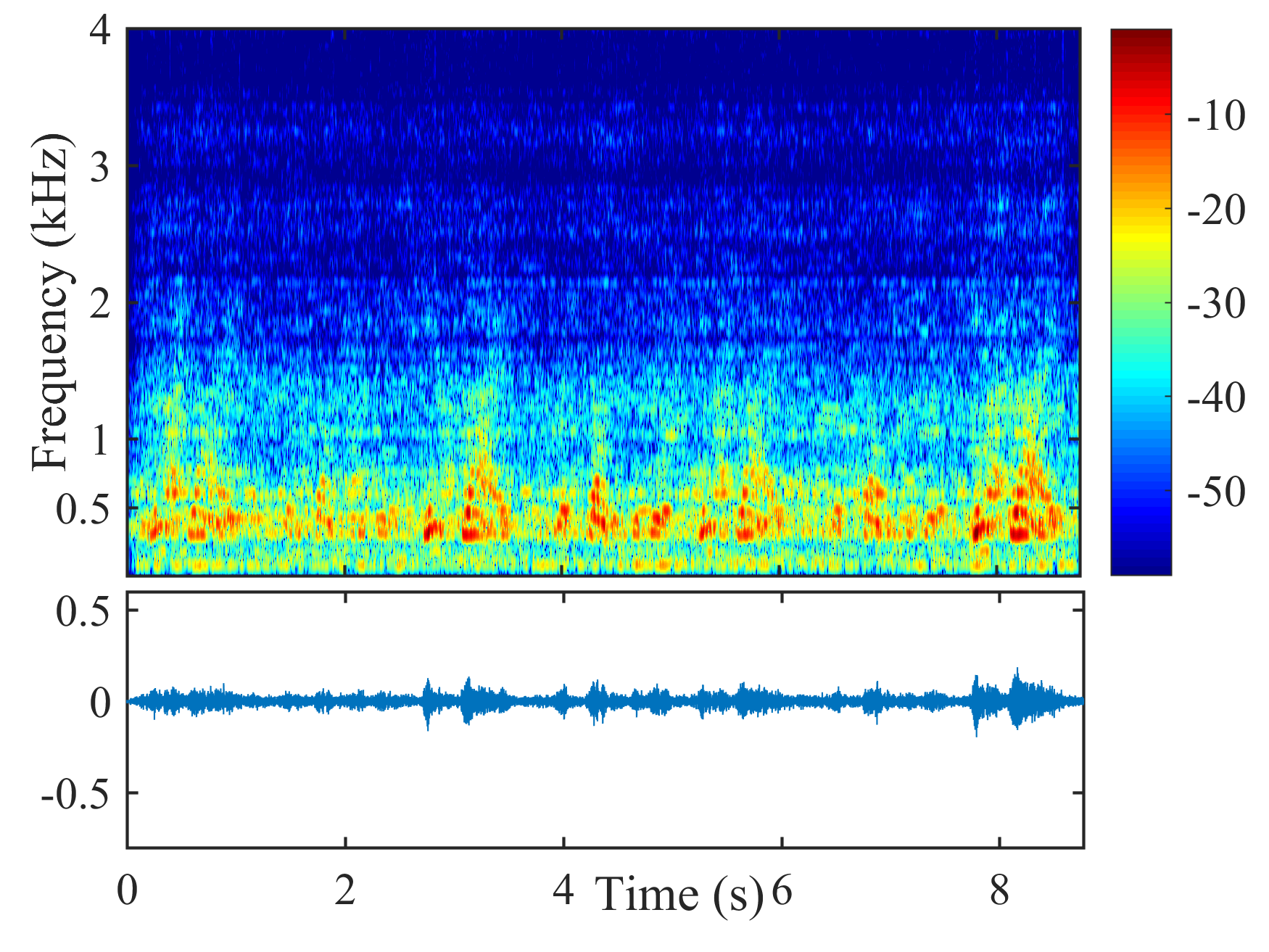

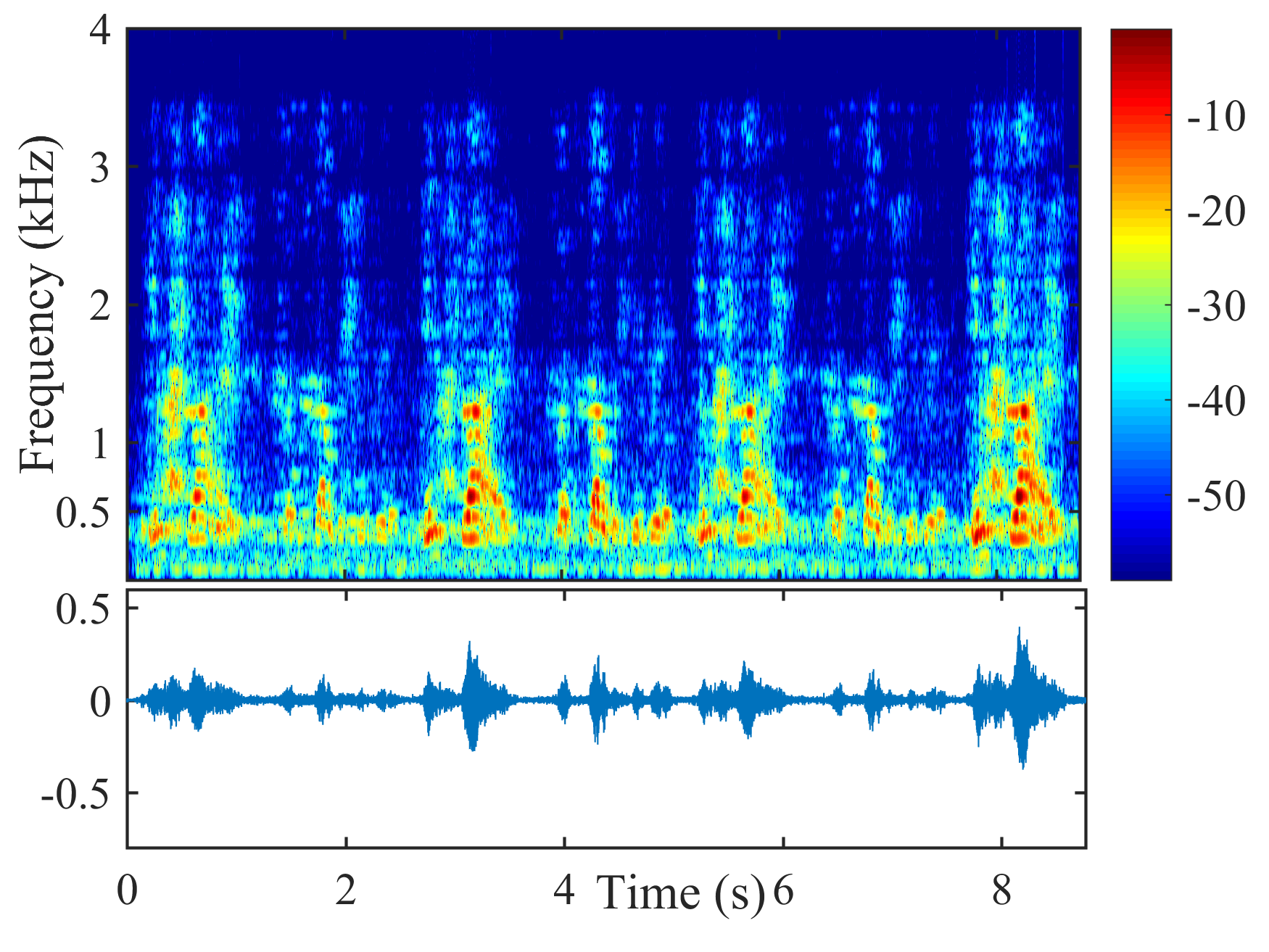

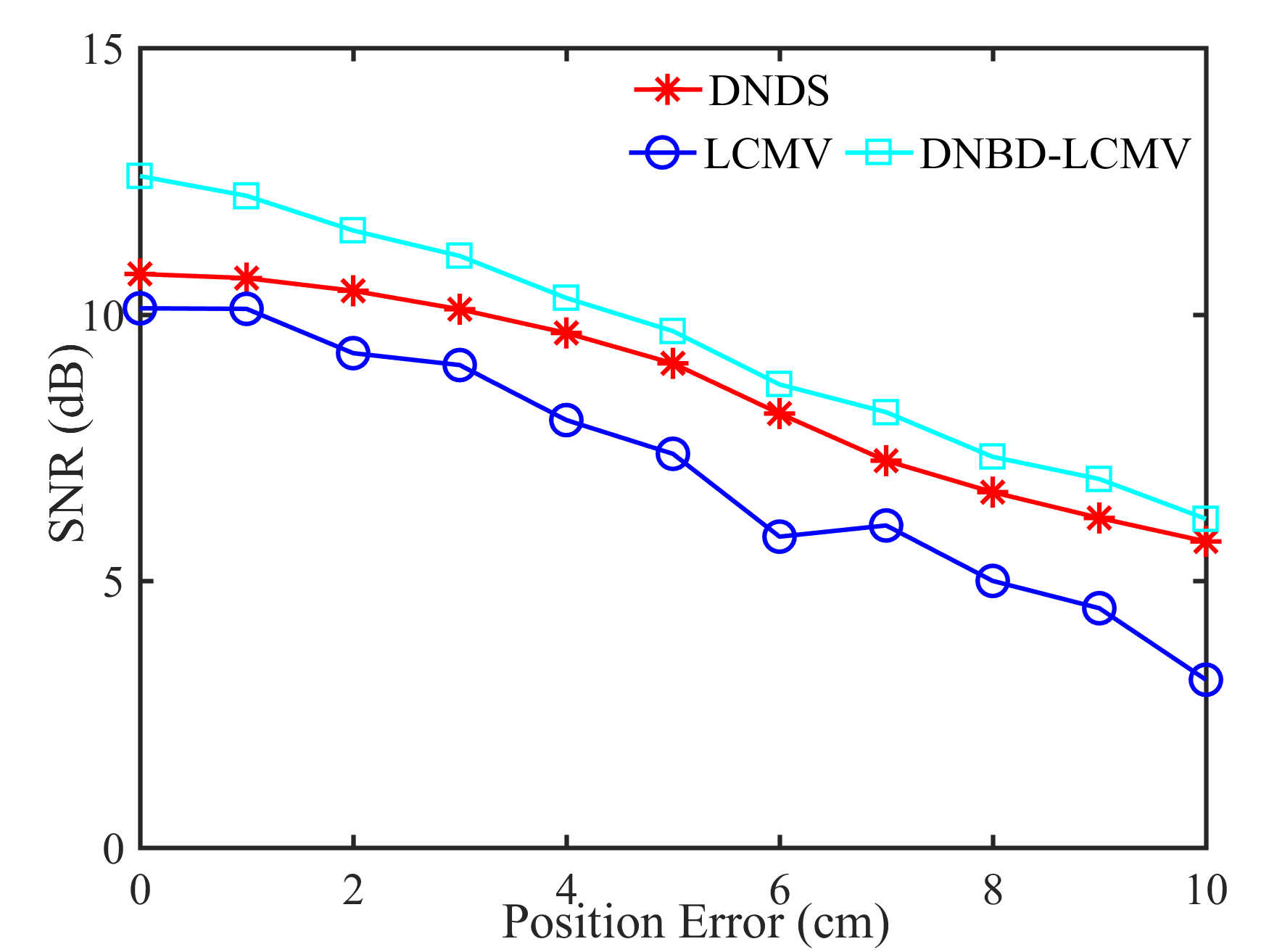

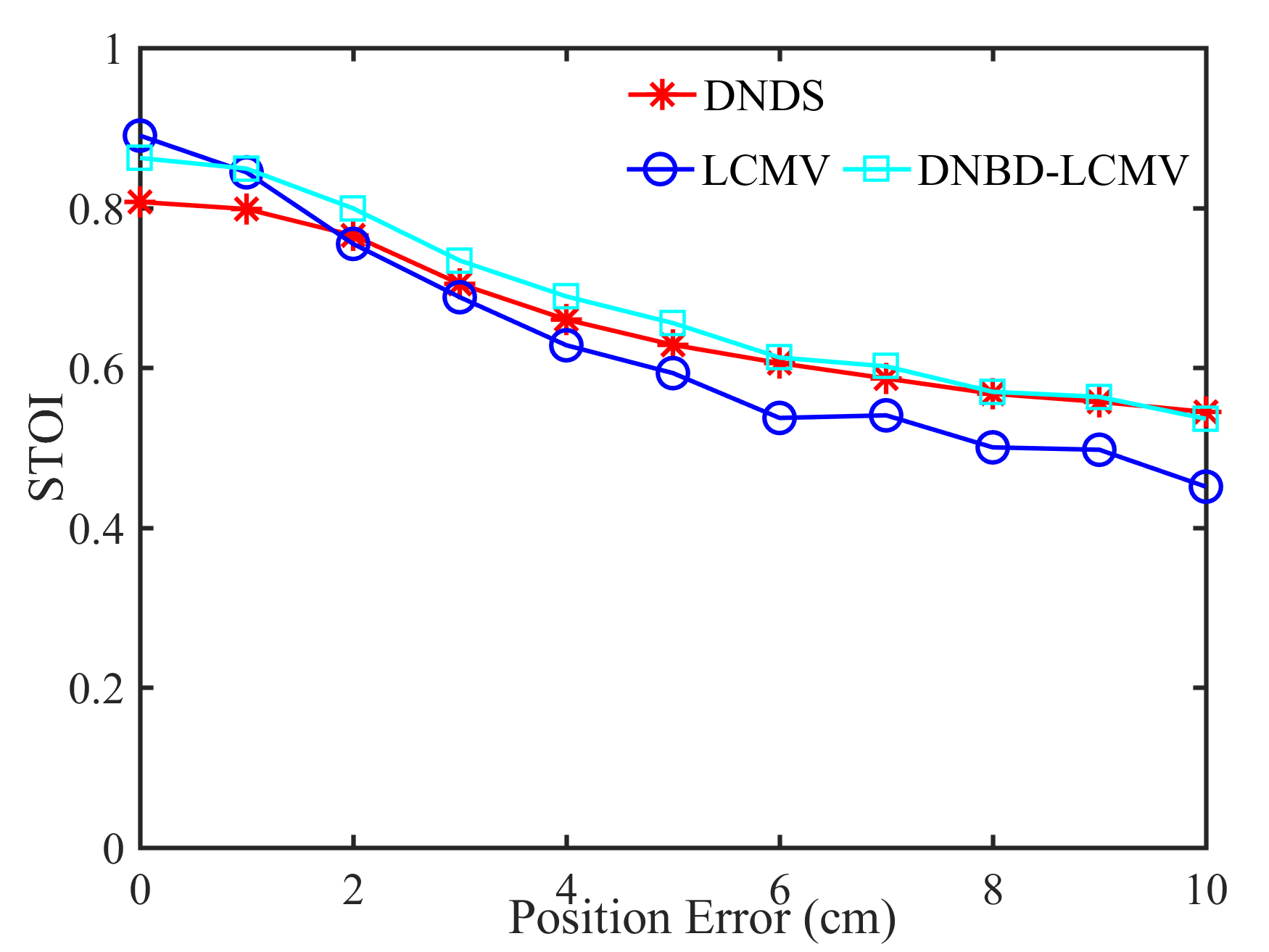

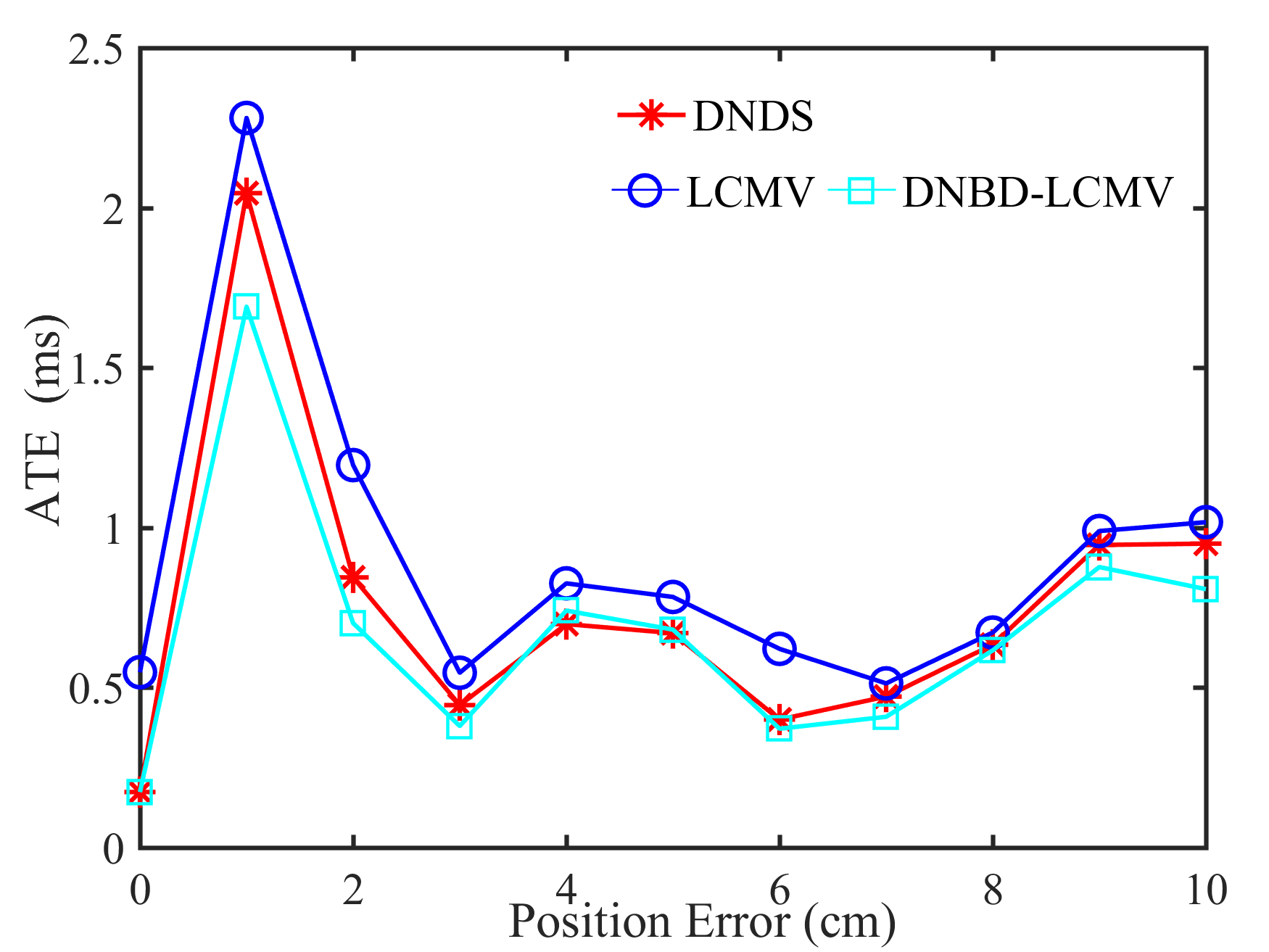

Fig. 5 shows the performance of different beamformers under different positional errors in terms of reverberation time s and VAD error . The spectrograms of the desired signal received by the first microphone of node 1 and the output signals of node 1 of different beamformers were depicted in Fig. 6 for s, , and cm.

From Fig. 5, first, one can get that the performance of the five beamformers decreases with increasing positional error. When there is no positional error, i.e., cm, the SNR and the STOI of DNBD-LCMP are higher than LCMP and DNDS, and are slightly lower than LCMV and DNBD-LCMV. When there is positional error, the SNR and the STOI of DNBD-LCMP are significantly reduced, and are much lower than DNDS, LCMV, and DNBD-LCMV. For different positional error values, the SNR and the STOI of LCMP are the lowest. LCMP and DNBD-LCMP had lower robustness to the positional error than DNDS, LCMV, and DNBD-LCMV. Second, for DNDS, LCMV, and DNBD-LCMV, the SNR and the STOI of DNDS are the lowest when the positional error is zero and then approach LCMV and DNBD-LCMV with increasing positional error (even higher than LCMV). This is related to the fact that DNDS cannot well suppress the directional noise sources that are not included in the desired response vector but has more robust performance to the positional error [47]. DNBD-LCMV has higher SNR and STOI than LCMV for non-zero positional error and is less sensitive to the positional error, where has lower dimensions than the full-element noise sample covariance matrix used in the LCMV and is numerically more favorable. Third, the ATEs of different beamformers do not appear to be a significant difference.

From Fig. 6, the speech component in the output signal of LCMP is almost completely removed, where the column space estimation error results in removal of the actual speech component and preservation in the direction of the wrongly estimated column space. NBD-LCMP has the similar problem to LCMP. However, the performance degradation was not that great as with LCMP, this is because DNBD-LCMP has lower degrees of freedom to satisfy the wrong distortionless response and suppresses less speech component than LCMP [22]. Note that LCMP and DNBD-LCMP will not be further considered in the following experiments due to their poor performance in signal model mismatch cases. LCMV causes more speech distortion than DNBD-LCMV. DNDS preserved the speech component like DNBD-LCMV. However, it also preserves more noise component around 0.5 kHz.

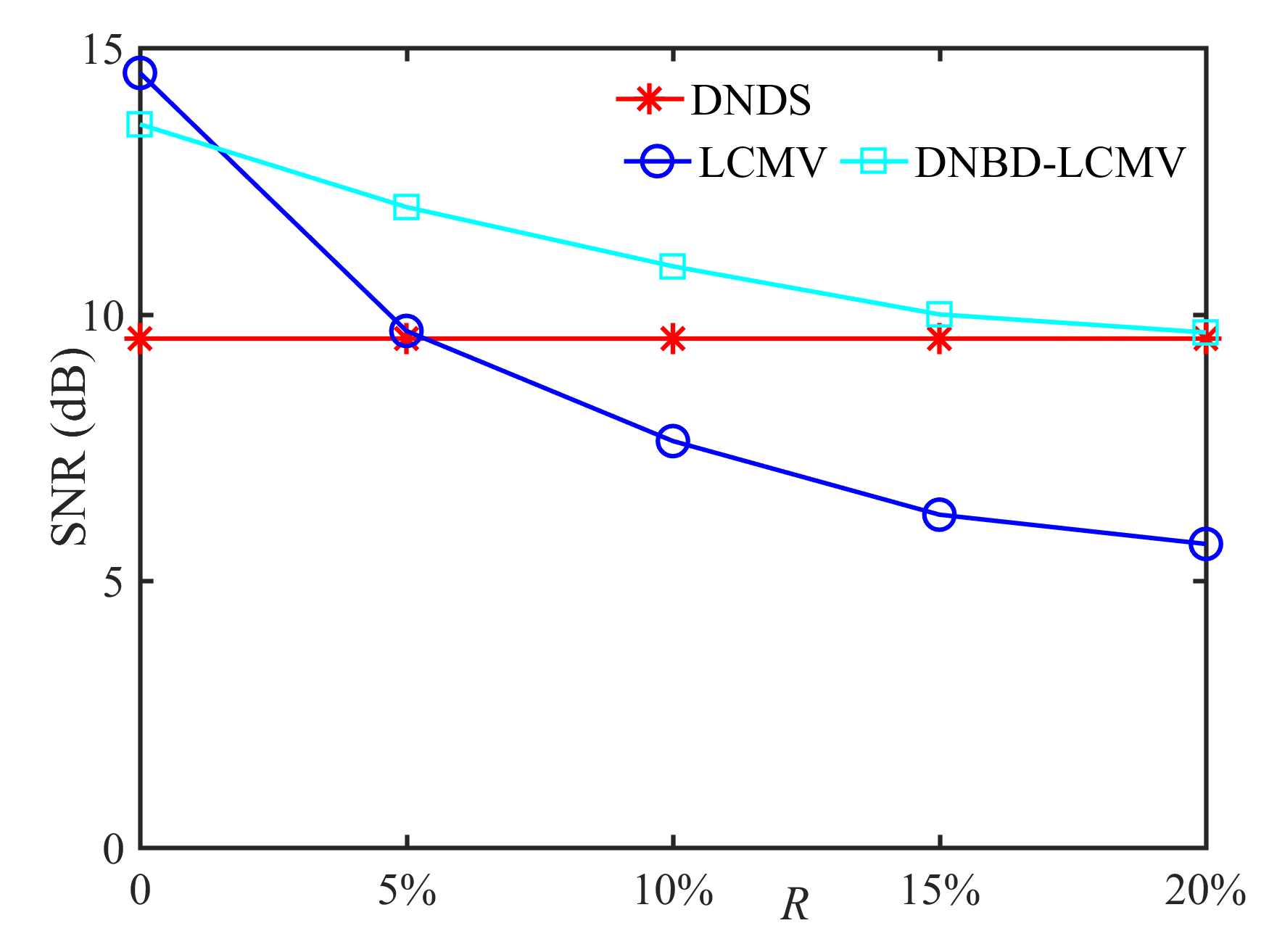

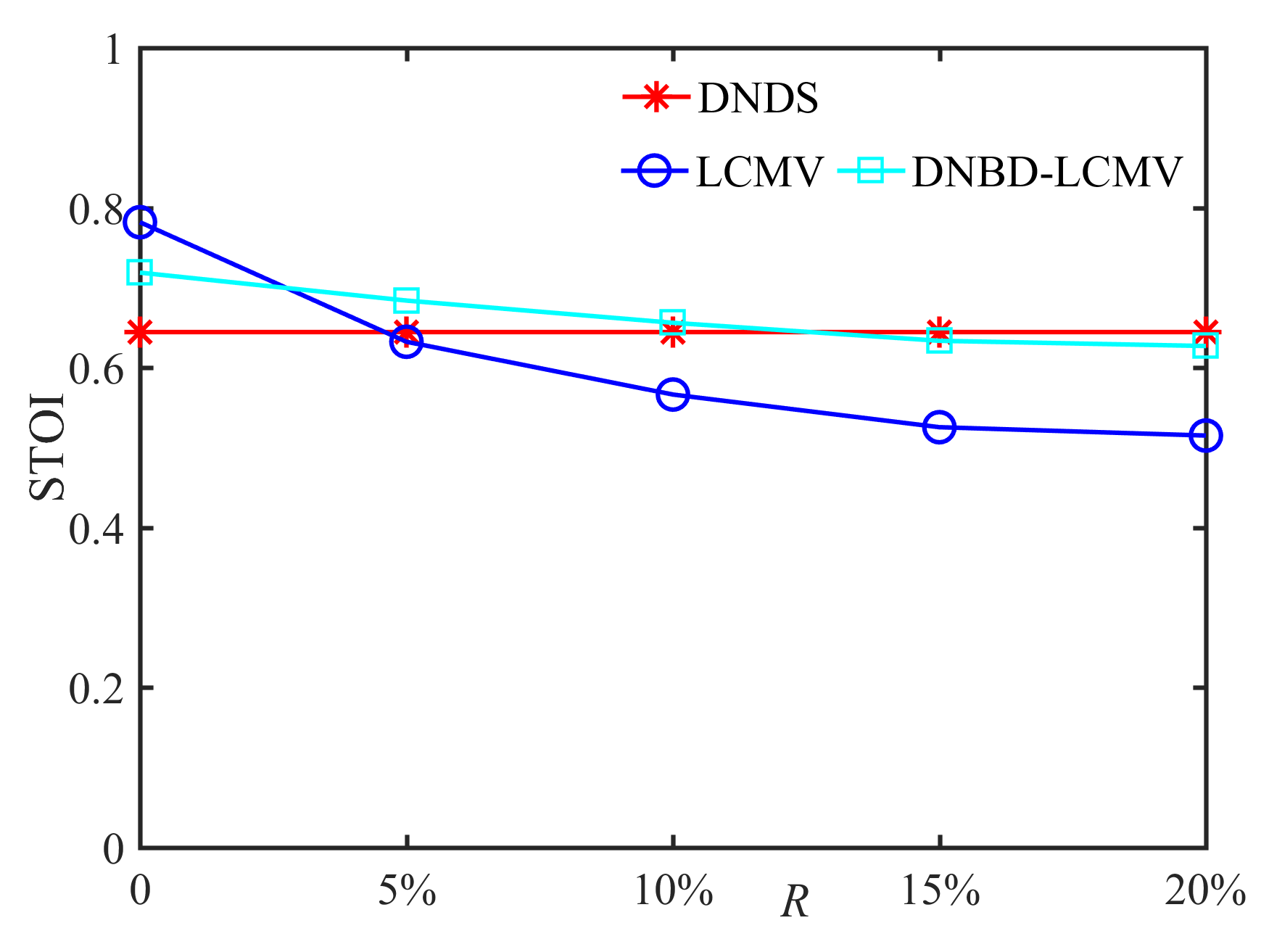

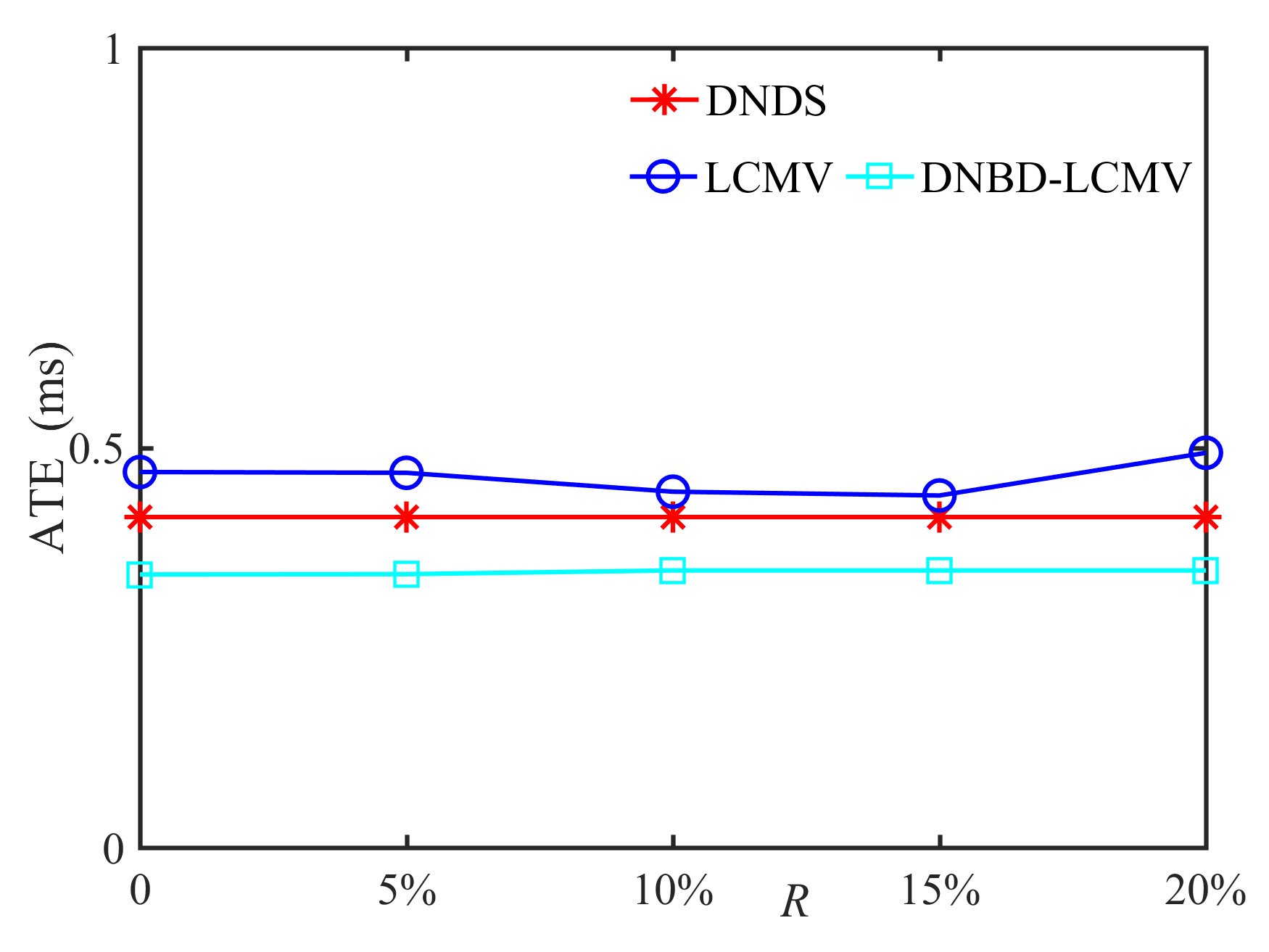

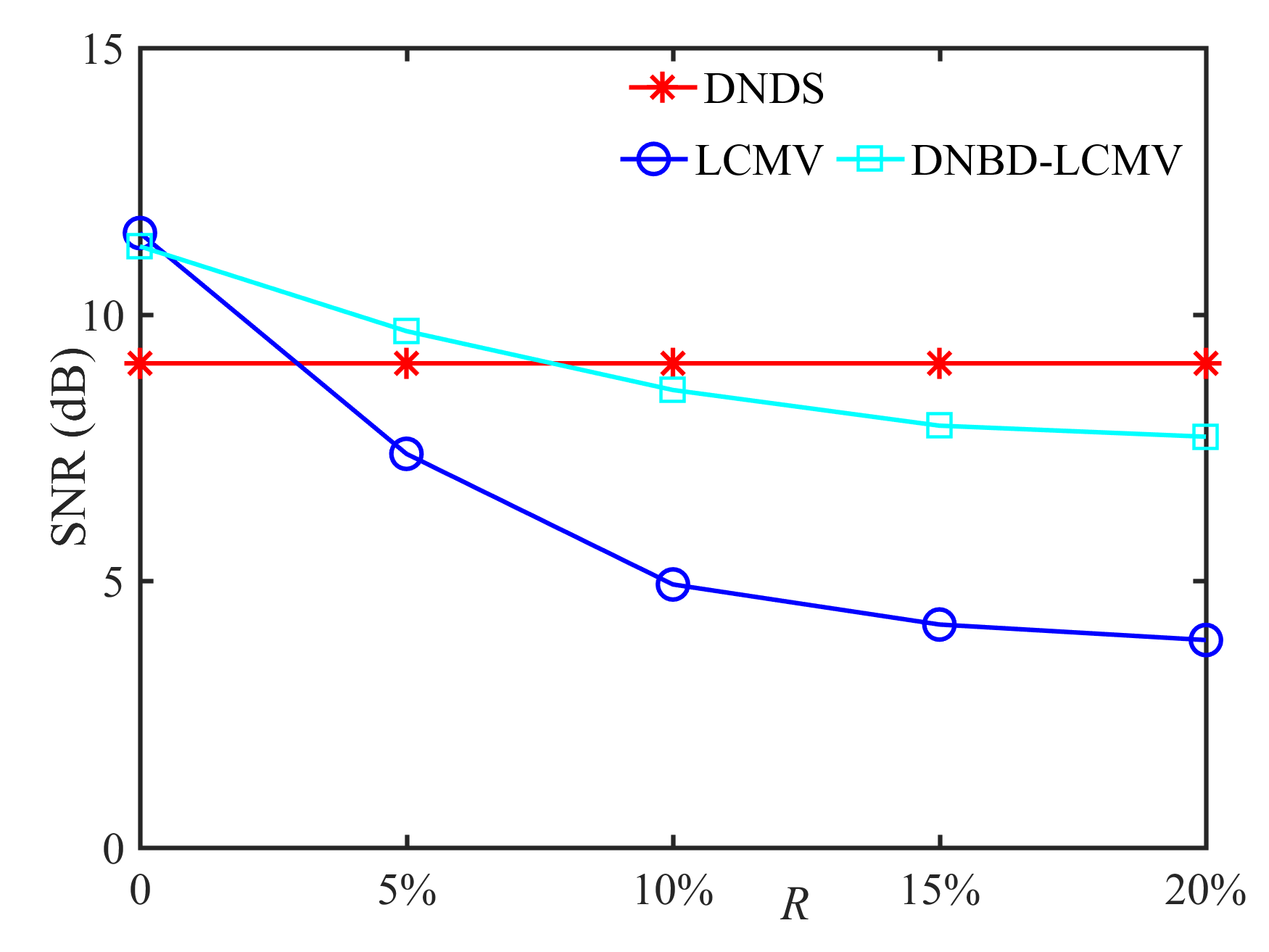

5.3 Robustness to VAD Error

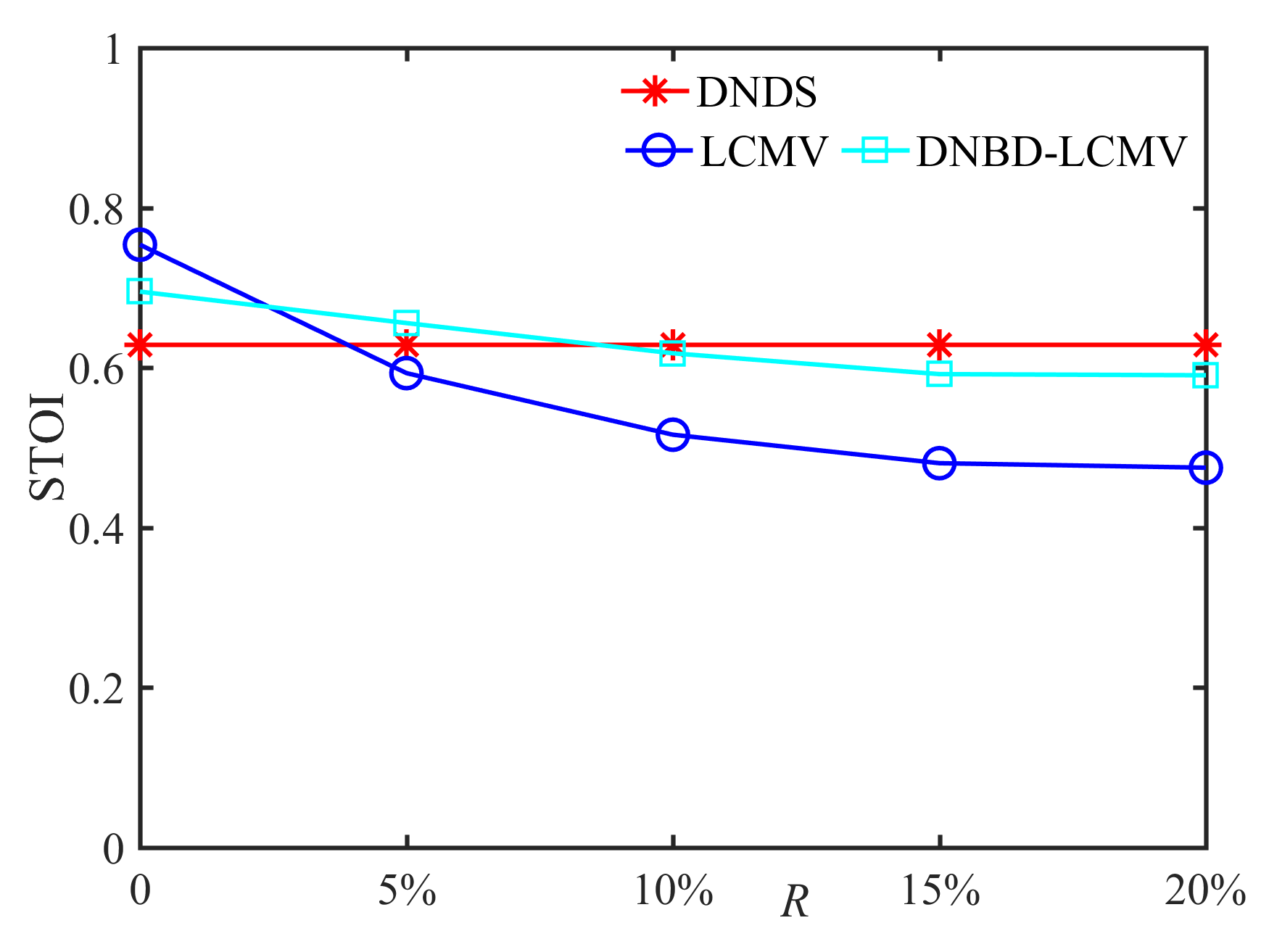

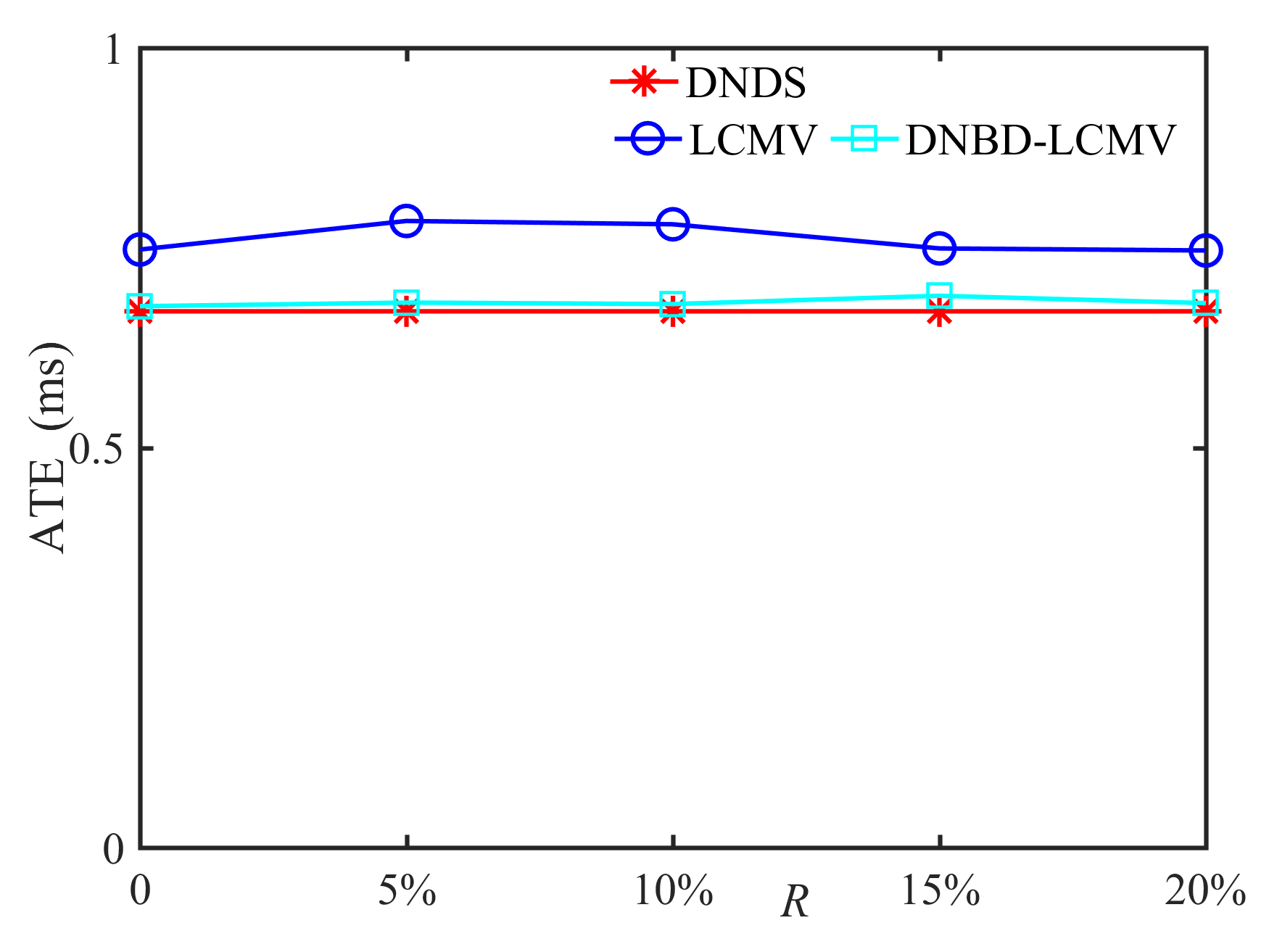

In this subsection, we compare the performance of DNDS, LCMV, and DNBD-LCMV under different VAD errors with the reverberation time s and the positional error cm, as shown in Fig. 7. First, for an ideal VAD, i.e., , the LCMV has the highest SNR and STOI. However, VAD error is often inevitable for practical applications. The SNR and the STOI of LCMV deteriorate significantly and become the lowest with increasing VAD error, which indicates that LCMV is the most sensitive to the VAD error. Second, when the VAD error becomes larger, the SNR and the STOI of DNBD-LCMV are reduced and gradually approach the DNDS, which is independent of the VAD error. Third, for different beamformers, their ATEs are almost identical and do not become larger with increasing VAD error.

5.4 Robustness to Reverberation Time

In this subsection, we compare the performance of DNDS, LCMV, and DNBD-LCMV when the reverberation time increases to s, as shown in Fig. 8. First, one can observe that the SNR and the STOI of LCMV are the lowest for non-zero positional error and the VAD error. The SNR and the STOI of DNBD-LCMV are reduced, and gradually approach or even lower than DNDS with increasing positional error or the VAD error. Second, no significant difference can be observed in the ATEs of different beamformers, which is similar to Fig. 5 (c) and Fig. 7 (c).

6 Conclusion

In this paper, we propose a distributed node-specific block-diagonal linearly constrained minimum variance (DNBD-LCMV) beamformer, where the block-diagonal noise covariance matrix is considered to derive its analytical solution from the centralized LCMV beamformer. By updating the inversion of the noise sample covariance matrix using the Sherman-Morrison-Woodbury formula, the exchanged signals can be computed in a more efficient way. The proposed DNBD-LCMV significantly reduces the number of signals exchanged between nodes and exactly solves the LCMV beamformer optimally in each frame. Analysis and experimental results confirm that the proposed DNBD-LCMV has much lower computational complexity, and is also more robust to column space estimation error and the VAD error than other state-of-the-art distributed node-specific algorithms.

Reference

References

- [1] M. Brandstein, D. Ward, Microphone Arrays: Signal Processing Techniques and Applications, Springer-Verlag, Berlin, Germany, 2001.

- [2] A. Bertrand, Applications and trends in wireless acoustic sensor networks: A signal processing perspective, in: Proc. IEEE Symp. Commun. Veh. Technol. (SCVT), Ghent, Belgium, 2011, pp. 1–6.

- [3] D. Culler, D. Estrin, M. Srivastava, Overview of sensor networks, IEEE Computer. 37 (8) (2004) 41–49.

- [4] S. Doclo, M. Moonen, T. Van den Bogaert, J. Wouters, Reduced-bandwidth and distributed MWF-based noise reduction algorithms for binaural hearing aids, IEEE Trans. Audio, Speech, Lang. Process. 17 (1) (2009) 38–51.

- [5] S. Markovich Golan, S. Gannot, I. Cohen, A reduced bandwidth binaural MVDR beamformer, in: Proc. Int. Workshop Acoust. Echo Noise Contr. (IWAENC), Tel-Aviv, Israel, 2010.

- [6] A. Bertrand, M. Moonen, Robust distributed noise reduction in hearing aids with external acoustic sensor nodes, EURASIP J. Adv. Signal Process. 2009 (1) (2009) 14.

- [7] Y. Jia, Y. Luo, Y. Lin, I. Kozintsev, Distributed microphone arrays for digital home and office, in: Proc. IEEE Int. Conf. Acoust., Speech, Signal Process. (ICASSP), Toulouse, France, 2006, pp. 1065–1068.

- [8] Z. C. Liu, Z. Y. Zhang, L. W. He, P. Chou, Energy-based sound source localization and gain normalization for ad hoc microphone arrays, in: Proc. IEEE Int. Conf. Acoust., Speech, Signal Process. (ICASSP), Honolulu, HI, USA, 2007, pp. 761–764.

- [9] M. H. Chen, Z. C. Liu, L. W. He, P. Chou, Z. Y. Zhang, Energy-based position estimation of microphones and speakers for ad hoc microphone arrays, in: Proc. IEEE Workshop. Applications. Signal Process. Audio. Acoust. (WASPAA), New Paltz, NY, USA, 2007, pp. 22–25.

- [10] A. Griffin, A. Alexandridis, D. Pavlidi, Y. Mastorakis, A. Mouchtaris, Localizing multiple audio sources in a wireless acoustic sensor network, Signal Process. 107 (2015) 54–67.

- [11] A. Alexandridis, A. Mouchtaris, Multiple sound source location estimation in wireless acoustic sensor networks using DOA estimates: the data-association problem, IEEE/ACM Trans. Audio, Speech, Lang. Process. 26 (2) (2018) 342–356.

- [12] X. W. Guo, Z. F. Chen, X. Q. Hu, X. D. Li, Multi-source localization using time of arrival self-clustering method in wireless sensor networks, IEEE Access. 7 (2019) 82110–82121.

- [13] M. Cobos, J. J. Perez-Solano, S. Felici-Castell, J. Segura, J. M. Navarro, Cumulative-Sum-Based localization of sound events in low-cost wireless acoustic sensor networks, IEEE/ACM Trans. Audio, Speech, Lang. Process. 22 (12) (2014) 1792–1802.

- [14] A. Bertrand, M. Moonen, Distributed node-specific LCMV beamforming in wireless sensor networks, IEEE Trans. Signal Process. 60 (1) (2012) 233–246.

- [15] A. Bertrand, M. Moonen, Distributed adaptive estimation of covariance matrix eigenvectors in wireless sensor networks with application to distributed PCA, Signal Process. 104 (2014) 120–135.

- [16] J. Szurley, A. Bertrand, M. Moonen, Distributed adaptive node-specific signal estimation in heterogeneous and mixed-topology wireless sensor networks, Signal Process. 117 (2015) 44–60.

- [17] Y. Zeng, R. C. Hendriks, Distributed delay and sum beamformer for speech enhancement via randomized gossip, IEEE Trans. Audio, Speech, Lang. Process. 22 (1) (2014) 260–273.

- [18] S. Markovich Golan, S. Gannot, I. Cohen, Distributed multiple constraints generalized sidelobe canceler for fully connected wireless acoustic sensor networks, IEEE Trans. Audio, Speech, Lang. Process. 21 (2) (2013) 343–356.

- [19] S. Markovich Golan, S. Gannot, I. Cohen, Multichannel eigenspace beamforming in a reverberant noisy environment with multiple interfering speech signals, IEEE Trans. Audio, Speech, Lang. Process. 17 (6) (2009) 1071–1086.

- [20] S. Markovich Golan, S. Gannot, I. Cohen, Subspace tracking of multiple sources and its application to speakers extraction, in: Proc. IEEE Int. Conf. Acoust., Speech, Signal Process. (ICASSP), Dallas, TX, USA, 2010, pp. 201–204.

- [21] T. Van den Bogaert, J. Wouters, S. Doclo, M. Moonen, Binaural cue preservation for hearing aids using an interaural transfer function multichannel Wiener filter, in: Proc. IEEE Int. Conf. Acoust., Speech, Signal Process. (ICASSP), Honolulu, HI, USA, 2007, pp. 565–568.

- [22] A. I. Koutrouvelis, T. W. Sherson, R. Heusdens, R. C. Hendriks, A low-cost robust distributed linearly constrained beamformer for wireless acoustic sensor networks with arbitrary topology, IEEE/ACM Trans. Audio, Speech, Lang. Process. 26 (8) (2018) 1434–1448.

- [23] S. Boyd, A. Ghosh, B. Prabhakar, D. Shah, Randomized gossip algorithms, IEEE Trans. Inf. Theory. 52 (6) (2006) 2508–2530.

- [24] J. Szurley, A. Bertrand, M. Moonen, Topology-independent distributed adaptive node-specific signal estimation in wireless sensor networks, IEEE Trans. Signal Info. Process. Over Netw. 3 (1) (2017) 130–144.

- [25] G. Q. Zhang, R. Heusdens, Distributed optimization using the primal-dual method of multipliers, IEEE Trans. Signal Info. Process. Over Netw. 4 (1) (2018) 173–187.

- [26] A. Bertrand, M. Moonen, Distributed adaptive node-specific signal estimation in fully connected sensor networks-part I: Sequential node updating, IEEE Trans. Signal Process. 58 (10) (2010) 5277–5291.

- [27] A. Bertrand, M. Moonen, Distributed adaptive estimation of node-specific signals in wireless sensor networks with a tree topology, IEEE Trans. Signal Process. 59 (5) (2011) 2196–2210.

- [28] Y. Zeng, R. C. Hendriks, Distributed estimation of the inverse of the correlation matrix for privacy preserving beamforming, Signal Process. 107 (2015) 109–122.

- [29] J. Capon, High-resolution frequency-wavenumber spectrum analysis, Proc. IEEE. 57 (8) (1969) 1408–1418.

- [30] M. Er, A. Cantoni, Derivative constraints for broad-band element space antenna array processors, IEEE Trans. Acoust., Speech, Signal Process. ASSP-31 (6) (1983) 1378–1393.

- [31] J. Sherman, W. J. Morrison, Adjustment of an inverse matrix corresponding to a change in one element of a given matrix, Ann. Math. Stat. 21 (1) (1950) 124–127.

- [32] B. D. Van Veen, K. M. Buckley, Beamforming: A versatile approach to spatial filtering, IEEE ASSP Mag. 5 (2) (1988) 4–24.

- [33] O. L. Frost, III, An algorithm for linearly constrained adaptive array processing, Proc. IEEE. 60 (8) (1972) 926–935.

- [34] S. Gannot, E. Vincent, S. Markovich Golan, A. Ozerov, A consolidated perspective on multimicrophone speech enhancement and source separation, IEEE/ACM Trans. Audio, Speech, Lang. Process. 25 (4) (2017) 692–730.

- [35] S. Markovich Golan, A. Bertrand, M. Moonen, S. Gannot, Optimal distributed minimum-variance beamforming approaches for speech enhancement in wireless acoustic sensor networks, Signal Process. 107 (2015) 4–20.

- [36] C. S. Zheng, A. Deleforge, X. D. Li, W. Kellermann, Statistical analysis of the multichannel Wiener filter using a bivariate normal distribution for sample covariance matrices, IEEE/ACM Trans. Audio, Speech, Lang. Process. 26 (5) (2018) 951–966.

- [37] T. Gerkmann, R. C. Hendriks, Unbiased MMSE-based noise power estimation with low complexity and low tracking delay, IEEE Trans. Audio, Speech, Lang. Process. 20 (4) (2012) 1383–1393.

- [38] H. Chen, A. Campbell, B. Thomas, A. Tamir, Minimax flow tree problems, Networks. 54 (3) (2009) 117–129.

- [39] Y. Choi, M. Khan, V. S. Anil Kumar, G. Pandurangan, Energy-optimal distributed algorithms for minimum spanning trees, IEEE J. Sel. Areas Commun. 27 (7) (2009) 1297–1304.

- [40] C. H. Taal, R. C. Hendriks, R. Heusdens, J. Jensen, An algorithm for intelligibility prediction of time-frequency weighted noisy speech, IEEE Trans. Audio, Speech, Lang. Process. 19 (7) (2011) 212–2136.

- [41] J. B. Allen, D. A. Berkley, Image method for efficiently simulating small-room acoustics, J. Acoust. Soc. Amer. 65 (4) (1979) 943–950.

- [42] E. A. Lehmann, A. M. Johansson, Prediction of energy decay in room impulse responses simulated with an imgae-source model, J. Acoust. Soc. Amer. 124 (1) (2008) 269–277.

- [43] Y. Hu, P. C. Loizou, Subjective comparison and evaluation of speech enhancement algorithms, Speech Communication. 49 (7) (2007) 588–601.

- [44] A. Spriet, M. Moonen, J. Wouters, The impact of speech detection errors on the noise reduction performance of multi-channel Wiener filtering and generalized sidelobe cancellation, Signal Process. 85 (2005) 1073–1088.

- [45] C. H. Knapp, G. C. Carter, The generalized correlation method for estimation of time delay, IEEE Trans. Acoust., Speech, Signal Process. ASSP-24 (4) (1976) 320–327.

- [46] Y. T. Chan, K. C. Ho, A simple and efficient estimator for hyperbolic location, IEEE Trans. Signal Process. 42 (8) (1994) 1905–1915.

- [47] H. Cox, Resolving power and sensitivity to mismatch of optimum array processors, J. Acoust. Soc. Amer. 54 (3) (1973) 771–785.