Distributed Multi-Robot Multi-Target Tracking Using Heterogeneous Limited-Range Sensors

Abstract

This paper presents a cooperative multi-robot multi-target tracking framework aimed at enhancing the efficiency of the heterogeneous sensor network and, consequently, improving overall target tracking accuracy. The concept of normalized unused sensing capacity is introduced to quantify the information a sensor is currently gathering relative to its theoretical maximum. This measurement can be computed using entirely local information and is applicable to various sensor models, distinguishing it from previous literature on the subject. It is then utilized to develop a distributed coverage control strategy for a heterogeneous sensor network, adaptively balancing the workload based on each sensor’s current unused capacity. The algorithm is validated through a series of ROS and MATLAB simulations, demonstrating superior results compared to standard approaches that do not account for heterogeneity or current usage rates.

Index Terms:

Multi-Robot Systems, Reactive and Sensor-Based Planning, Distributed Robot Systems, Networked Robots.I Introduction

Multiple target tracking (MTT) is a fundamental research problem where one needs to continuously estimate the states of multiple moving targets of interest within an assigned space. Due to its importance in application areas, ranging from environmental monitoring, e.g., comprehending collective behaviours of social animals [1, 2, 3, 4] or pedestrians [5], to intelligent autonomous systems, e.g., autonomous driving [6], it has drawn increasing attention in the signal processing, computer vision, and robotics communities.

Standard MTT algorithms, such as global nearest neighbor (GNN) [7], joint probabilistic data association (JPDA) [8], multiple hypothesis tracking (MHT) [9], and particle filters [10], solve the problem by repeatedly identifying targets and estimating their states using sensor data and Bayesian inferences, and matching the estimates to existing target tracking information, the process widely known as data association. On the other hand, solutions that are based on random finite set (RFS) theory, such as the family of probability hypothesis density (PHD) filters [11, 12] and multi-target multi-Bernoulli (MeMBer) filters [13, 14], do not require an explicit data association process. Hence, those approaches are best suited for the applications where the identities of targets are not important.

I-A Distributed MR-MTT

In recent years, exploring robotic networks’ ability to adaptively adjust their sensor coverage, multi-robot multi-target tracking (MR-MTT) problems have been widely investigated. In this paper, we discuss distributed algorithms to find scalable and fault-tolerant approaches to the problems. Distributed MR-MTT mainly consists of cooperative multi-target state estimation and multi-robot active motion planning. The former can be implemented using either Bayesian methods [15, 16, 17, zhu2020fully, 19], which require each robot to recursively estimate the statistical distribution about target states over the entire task space, or consensus algorithms [20, 22, 23], which enable robots to continuously exchange information until they reach an agreement. The latter is frequently formulated as an optimal control problem aiming to maximize the tracking performance, which is defined as the degree of information acquisition [24, 25, 26], the distance between a robot and a target [27], or some general measure of the target observability [28, 19]. However, the above-mentioned schemes could be susceptible to local extrema, where multiple robots track the same target while others are left unobserved, and provide no guarantee to cover the entire task space with the robots’ limited-range sensors. As a result, none of them is able to jointly detect, localize, and track an unknown and time varying number of multiple targets.

I-B Coverage Control

Perhaps the most suitable MR-MTT solution to pair space exploration with active target state estimation is through coverage control framework. Coverage control is a popular way to optimally gather target information while guaranteeing complete space coverage. It aims to maximize a performance measure such as the robots’ distances to targets. The most widely used distributed coverage control strategies are Voronoi-based methods [29, 30, 31, 32] that leverage Lloyd’s algorithm [33]. The basic idea is to partition an assigned task space using the Voronoi tessellation and then drive each robot towards the weighted centroid of its assigned Voronoi partition. This process will enable the network to reach a local optimum in its coverage performance.

In coverage control, the mission space is typically convex, and robots are only required to communicate with their neighbors and are assumed to have perfect knowledge of their locations. A number of recent works have provided various solutions to accommodate uncertainty in sensor localization [34, 35, 36, 37] and to allow for non-convex spaces [38, 39, 40, 41]. In particular, leveraging the coverage control framework, the work of Dames [42] proposes to couple a distributed multi-target state estimation scheme and a distributed planning algorithm in order for each robot to estimate the density of targets and move to the centroid of the estimated target density. Consequently, networked robots with a limited-range isotropic sensor are able to actively move to maximize the total detection probability of all targets despite measurement errors and false alarms, while maintaining coverage of the entire task space.

I-C Sensor Heterogeneity

Nevertheless, the above-mentioned work exploits MR-MTT with coverage control framework using fixed types of sensor. Yet, cooperative heterogeneous sensor networks composed of arbitrary types of sensors expand the use of robotic networks to complex scenarios where a variety of different types of robots and sensors are needed to complete the task. A variety of approaches have been proposed to incorporate measurements from multiple heterogeneous resources to improve the target tracking quality [43, 44, 45] or to enhance the robustness and resilience of a robotic network [46, 47]. However, none of these methods aims to attain the globally optimal tracking performance by taking advantage of heterogeneous sensing capability while maintaining coverage of the task space.

Weighted Voronoi diagrams, which include power diagrams, have recently been used to account for heterogeneity in the radii of circular sensor footprints [41, 48, 49, 50] and energy levels [51]. Voronoi-based methods have also been used for wedge-shaped sensor field of views (FoV) [52]. Stergiopoulos and Tzes [53, 54, 55] used novel partitioning and distribution algorithms for sensing networks where all sensor FoVs share the same arbitrary shape but may be of a different size. Hexsel et al. [56] introduced a gradient ascent-based coverage control algorithm to maximize the joint probability of detection in anisotropic sensing networks. Nevertheless, as these approaches use gradient ascent, robots can prematurely converge to local extrema of an objective function they aim to maximize.

I-D Contribution

Building on our previous conference version [57], this paper proposes a novel multi-robot multi-target joint state estimation and planning approach for heterogeneous limited-FoV robotic networks. The proposed method allows a team of robots to search for targets over a task space and actively maintain coverage of a majority of detected targets in a distributed manner. We summarize our main contributions as follows.

-

1.

We propose a new measure called the normalized unused sensing capacity that quantifies the difference between the current information that a sensor gathers and the theoretical maximum. This can be computed using entirely local information and does not require any assumptions about the type of sensor or the shape of the sensor FoV.

-

2.

Leveraging this measure, we first replace the standard Voronoi diagram in Lloyd’s algorithm with the power diagram, with the goal of balancing the task load across the team. In particular, we assign robots that have more accurate sensors, larger sensor FoVs, and/or are not currently tracking any objects to cover larger areas.

-

3.

While power diagram implementation provides fast and near optimal space allocation for heterogeneous robotic teams, it does not yield the best tracking accuracy. Therefore, we propose CCVD, a closed-form optimal space partitioning algorithm to further improve the tracking performance and to evaluate the power diagram method by comparing it with theoretical optimum.

-

4.

We demonstrate the efficacy of our approach through a series of experiments in simulated environments to show that the approach yields higher quality and more reliable tracking than the standard Voronoi diagram-based approach and the zigzag coverage path, especially when the robots are highly heterogeneous. To the best of our knowledge, our work presents the first distributed algorithm that allows heterogeneous sensors to jointly detect, localize and track unknown and time-varying number of targets. Therefore, our results are only comparable to baseline algorithms [42][torres2016coverage].

Distinct from our conference paper [57], this paper includes the following novel contributions.

-

1.

Whereas only the power diagram was used in [57], in this paper, we introduce a new space partitioning algorithm based on CCVD. The area of the task space allocated to each robot by CCVD is strictly proportional to the sensing capacity of that robot, which will further optimize the space partitioning by leveraging the heterogeneity of the robots, but at the cost of greater computing resource requirements.

-

2.

We analyze the computational complexity of the proposed algorithms, and discuss the communication cost incurred by the algorithms.

-

3.

We present ROS simulations with visualization in Gazebo and RViz. We conduct additional Matlab simulations for quantitative analysis of the new methods.

-

4.

We compare our methods with a coverage path planning approach quantitatively. Meanwhile, we conduct an ablation study to demonstrate the usage of both the centroid of detection and the partitioning algorithms.

The rest of this paper is organized as follows. In Section II, we formulate the MR-MTT problem and introduce the prerequisites of our proposed methods. Section III presents our proposed space partitioning algorithms using both the power diagram and CCVD along with a distributed control algorithm based on these partitions. In Section IV we analyze the performance of our algorithms in terms of computational complexity and communication loac and introduce the quantitative metrics used to validate our algorithms. Finally, we show simulation results in Section V and draw conclusions in Section VI.

II Problem Formulation

This paper considers the multi-robot, multi-target tracking (MR-MTT) problem, defined below.

Problem 1 (MR-MTT)

Consider a network of mobile sensors (i.e., robots) denoted by with two-dimensional positions and orientations . Each robot has the following dynamical model:

| (1) | ||||

where and are the two-dimensional control inputs for position and orientation of the th robot, respectively. The robots move in a convex environment with known bounds. There is a set of targets in the environments, , where the state of each target is its two-dimensional position . Targets may be moving in an unknown arbitrary pattern or be stationary, and the robot’s onboard sensors have a certain probability of detecting them. The robots are tasked to search for and track the targets, with the goal to identify the number of targets and the state of each target.

Robots communicate with each other bidirectionally. A neighbor set of a robot is defined as all robots that are within the communication range of robot excluding itself. For the purpose of analysis, we assume that the communication range of each robot is large enough such that is non-empty at all time, which can be ensured by using, for instance, connectivity control algorithms [58] to sustain the connectivity.111Existing methods such as [38, 39, 40, 41] can be used to extend our proposed algorithms to non-convex, cluttered environments.

II-A Lloyd’s Algorithm

Given a density function defined over the 2-dimensional Euclidean space, the objective of Lloyd’s algorithm is to compute and minimizing the following functional:

| (2) |

where is the dominance region of robot (i.e., the region that is assigned to robot for coverage), is the Euclidean distance, , and is a monotonically increasing function. The function defines how the cost of robots’ sensing depends on its distance to each sensing location . The dominance regions partition , meaning the regions have disjoint interiors (i.e., ) and the union of all regions is (i.e., ) [29].

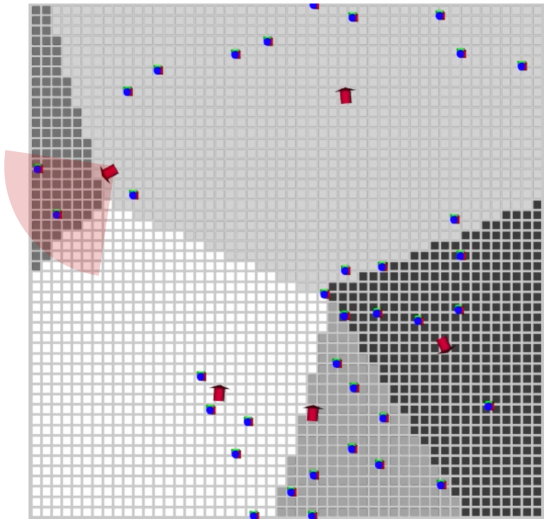

When , each of is given by [29]. In other words, is the collection of all points that are the nearest neighbors of . This is widely known as the Voronoi tessellation, which is illustrated in Figure 1. We refer to as a Voronoi partition, which is convex by construction, and as the generation point of . When each is the weighted centroid of the -th Voronoi partition given by

| (3) |

the robot positions minimize [29].

By applying Lloyd’s algorithm, coverage control sets the control input for robot to

| (4) |

where is a positive gain. In this paper, we use the control law in a discrete time manner, following this direction at the maximum speed of the robot. The angular velocity uses a bang-bang strategy, maximizing the angular velocity until the robot is facing directly towards the goal . By following this control law, the robots asymptotically converge to the weighted centroids of their associated Voronoi partitions. This still holds even when varies with time.

II-B PHD Filter for MTT

In an MTT setting, a natural choice for is to capture the target density at each location . This time-varying density function can be estimated using any standard MTT algorithms. In this paper, we use the PHD filter, as it does not require any explicit data association.222We note that the overall framework presented in this paper can be easily adapted to any other choice of Bayesian MTT tracker.

The Probability Hypothesis Density (PHD), denoted by , is the first order moment of a random finite set (RFS), i.e., a set with a random number of random elements such as a time-varying set of moving targets, and takes the form of a density function over the state space. The integral of the PHD over a region is equal to the expected number of targets in the region at a given time. By assuming that the RFSs are Poisson, i.e., the number of targets follows a Poisson distribution and the spatial distribution of targets is i.i.d., the PHD filter recursively propagates the PHD in order to track the distribution over target sets [11]. Similar to any other Bayesian state estimators, the PHD filter recursively predicts the target state using state transition probability (i.e., target motion), and updates the prediction using the probabilistic models of the sensor measurements.

The PHD filter uses three models to describe the motion of targets: 1) The motion model, , describes the likelihood of an individual target transitioning from an initial state to a new state . 2) The survival probability model, , describes the likelihood that a target with state will continue to exist from one time step to the next. 3) The birth PHD, , encodes both the number and locations of the new targets that may appear in the environment.

The PHD filter uses another three models to describe the ability of robots to detect targets: 1) The detection model, , gives the probability of a robot with state successfully detecting a target with state . For instance, we can set the detection probability to zero for all outside the sensor FoV. 2) The measurement model, , gives the likelihood of a robot with state receiving a measurement from a target with state . 3) The false positive (or clutter) PHD, , describes both the number and locations of the clutter measurements.

Using these target and sensor models, the PHD filter prediction (5a) and update (5b) equations are as follows:

| (5a) | ||||

| (5b) | ||||

| (5c) | ||||

| (5d) | ||||

where is the probability of a sensor at receiving measurement from a target with state and (5c) is used within the prediction step (5b). The subscript refers to time. Note that is not a probability density function (PDF), but can converted into one by normalizing it with the expected number of targets in a sub-space of ,

| (6) |

Dames [42] developed a distributed PHD filter in which each robot maintains the PHD within a unique subset, , of the environment while ensuring the distributed filtering scheme yields the same target estimation performance as its centralized counterpart. Three algorithms then account for motion of the robots (to update the subsets ), motion of the targets (in (5a)), and measurement updates (in (5b)). In this paper, we adopt the same strategy for each robot to locally maintain its portion of the PHD.

III Optimized Space Partition

We propose the novel normalized unused sensing capacity in Section III-A, which will be used to quantify the sensor heterogeneity in Section IV-C2. After that, two space partitioning algorithms are developed based on power diagram (Section III-B) and CCVD (Section III-C). Lastly, a control policy is proposed in Section III-D to optimize robot poses given the space partitioning schemes.

III-A Normalized Unused Sensing Capacity

We assume that each robot has a finite field of view (FoV) , which could be different across the sensors . Examples of include a wedge shape for a camera (e.g., Figure 2) or a circle for a lidar. Let denote the probability of robot at position and with orientation detecting a target with state , which is the same model as in the PHD filter. The robot cannot detect targets outside its FoV , i.e., . We assume that is time-invariant in the robot’s local coordinate frame, but we make no other assumptions about the shape of or the functional form of . In practice, can be estimated using data-driven approaches [59] and/or prior knowledge of the sensor model.

We define the total detecting capability of robot as

| (7) |

which depends on the size of and the sensor’s ability to detect information within .

In this paper, we consider sensor heterogeneity in terms of not only the sensing capability, such as FoV and detection accuracy, but also its current usage to track targets. When a sensor detects a target and starts to track it, the sensor’s sensing capability will be reduced. We assume that we are tracking targets of finite size that cannot overlap, and that the target size is much smaller than the sensor’s FoV. Therefore, for a robot , there exists some area such that only 1 object can be in that area, i.e., . Then

| (8) |

where the last approximate equality holds for small , such that is approximately constant over . We can then write robot ’s FoV as the union of disjoint regions, , with the above property, so that and . Letting , we then define robot ’s maximum sensing capacity as

| (9) |

where comes from (7) and is a tuning parameter associated with the maximum target density in the task space. For instance, as the expected maximum distance between the targets becomes larger, a smaller is selected to discount the maximum sensing capacity of a sensor. Remark 1 discusses the selection of in more detail.

The expected number of target detections at is given by

| (10) |

where is defined in (6). Thus, the total expected target detection probability is given by

| (11) |

Definition 1 (Normalized Unused Sensing Capacity)

The relative normalized unused sensing capacity with respect to the maximum target density for robot , denoted , is given by

| (12) |

Note that (12) can be easily modified by replacing with a density function propagated via a different Bayesian filter. The normalized unused sensing capacity quantifies current capacity for a sensor to track targets. The larger the sensing capability of a robot, the higher the number of targets it can track. However, as the robot tracks an increasing number of targets, its remaining sensing capability begins to decay.

Remark 1 (Choice of )

The choice of is a free parameter. Setting it to a large value will make large relative to , resulting in all robots having similar unused capacities . Picking will result in robots only using the expected number of detections with no acknowledgement of total capacity. When the target density is unknown, we may choose to account for the maximum possible number of target detection. Otherwise, can be set as the ratio of approximate and the number of .

III-B Power Diagram Implementation

To maximize the total detection probability of targets, we control the robots, considering their heterogeneity in spatial deployment. To this end, we optimize both space partitioning and each sensor’s location within its assigned partition. Unlike the Voronoi diagram, which is suitable for the sensors with a homogeneous and isotropic sensing model, power diagrams [60] are often used to compute the optimal dominance regions when the team has heterogeneous sensing models.

The power diagram is a variant of the standard Voronoi diagram that uses the power distance,

| (13) |

where is the weight or power radius of , and is the generation point. Figure 1 illustrates an example of the power diagram. Existing power diagram-based approaches utilized sensor positions as the generation points, , and the radii of the sensor FoVs as the weights, , to account for sensor heterogeneity [50, 41]. However, these approaches are limited to isotropic sensors. On the other hand, our approach extends to heterogeneous anisotropic sensors.

We utilize the normalized unused sensing capacity to set the power radius in (13). This is a novel strategy to account for the heterogeneity in computing the dominance regions. To achieve this, we proceed by expressing the optimization functional (2) as

| (14) |

where is a mapping from the unused sensing capacity to the power radius. Since the normalized unused sensing capacity has units of area, we choose such that the resulting power radius, , is equal to the radius of a perfect (i.e., ) isotropic sensor with the same total sensing capability, , i.e., . Therefore we have

| (15) |

Existing methods based on the power diagram, such as [50, 41, 51], assume that the sensor’s detection probability at in its assigned power partition is a non-increasing function of the distance from to the sensor. In other words, the location of a sensor is the location that maximizes its detection probability of targets in its power partition. Thus, it makes sense that they use the sensor location as the generation point, i.e., let . However, this no longer holds true for anisotropic sensors.

Instead, we find the weighted centroid of the detection probability as

| (16) |

which we call the centroid of detection (COD). We use COD as the generation points for our power diagram, i.e., .333Note that for an isotropic sensor the COD will be the same as the sensor position. Thus, the power partition of each robot becomes

| (17) |

Remark 2

For given and of a robot , we can use (7) to find an equivalent isotropic set satisfying . This will map an arbitrary sensor model, characterized by and , to a perfect isotropic sensor, characterized by a circular with . By choosing the appropriate mapping of the normalized unused sensing capacity , the weighted center of detection and the total sensing capacity are preserved as those of the original sensor model. Hence, unused sensing capabilities of different sensors can be directly compared by their power radii, and so does the task spaces they should be assigned.

III-C Capacity-Constraint Voronoi Diagram Implementation

The capacity-constraint Voronoi diagram (CCVD) [61], visualized in Figure 1, computes the optimal task space assignment with the weight of each generation point as a hard constraint and yields closed-form optimal space partition in a discrete space. This is done by two steps: initial cell assignment and cell swapping. 1) Firstly, the task space is segmented into a finite set of regular grid cells , where each cell is identified by its center . The th cell is indexed by . Then, we conduct an initial cell assignment satisfying a capacity constraint which specifies the maximum number of cells that can be assigned to each generation point. 2) Secondly, we iteratively revise the cell assignment to minimize the total cost defined by

| (18) |

where is the generation point assigned to , is a monotonically increasing function as introduced in (2), and denotes the weight of a generation point as introduced in (13). The right-most term in (18) is a constant for all assignments, and can therefore be omitted. The cell assignment minimizing (18) results in a discrete power diagram where the number of cells in each power partition is equivalent to the capacity of its generation point.

III-C1 Initial Cell Assignment

To implement the CCVD in the robot task assignment, is segmented into cells such that the size of each cell fits the maximum size of an individual target. To take the sensor heterogeneity into consideration, we associate the capacity of each robot , i.e., the number of cells assigned to , denoted by , with its normalized unused sensing capacity . Therefore, we normalize to by selecting a constant

| (19) |

at each discrete time step such that and round to an integer. Similar to Section III-B, we use , i.e., COD of each robot, as the CCVD generation points instead of robot’s locations. The initialization step requires all robots to synchronize in order to find through (19) in a distributed manner. To achieve that, a distributed consensus protocol [62] is applied as outlined in Algorithm 1. Initially, an equal number of cells is assigned to each robot to compute a temporary constant . Then the robots reach a consensus on via Equation 20 to determine using (19) distributedly.

| (20) |

III-C2 Recursive Cell Assignment

The original CCVD is constructed by iteratively swapping the cell assignment to all generation points, which is computationally expensive since each of the cells needs to be examined for the optimal assignment. In contrast, the enhanced approach by [63] reduces the computational complexity without compromising the quality of the point distribution, by allowing a more efficient assignment strategy called median site swap, leading to faster convergence and lower time complexity. To illustrate, the median site swap method focuses on finding an optimal cell to as a distance reference to re-assign cells between the two robots , and , without the need to examine every cell.

We propose a distributed cell assignment algorithm, outlined in Algorithm 2, based upon [63, Algorithm 3]. Initially, an indicator is set to false for a robot , indicating that the robot has not been assigned the best set of cells that minimizes (18). Then the robot compares its ID with those of other robots from its neighbor set. It undertakes the computation for the neighbors with greater IDs by updating the assignment as outlined in Lines 4-18, while requesting updates to its capacity from neighbors with smaller IDs, as outlined in Lines 19-22.

As an example, consider two robots with . A serving robot requests the capacity and the position from its served neighbor to conduct cell assignment, demonstrated as follows. For this pair of neighboring robots, we aim to minimize the cost given by

| (21) |

In Lines 7-9, we calculate the cost defined in (21) for robot to cover a cell and store it as a key in an array . Then, we need to find the optimal cells out of the total to assign for robot in order to minimize the cost, while assigning the rest to robot . This is done in Line 10 by finding a cell which only allows cells to have . Physically, the cell is the median cell, i.e., the cell with the median value, of all cells assigned to both robots and . After finding , any cell that costs less than the cell is assigned to robot ; otherwise it is assigned to robot as outlined in Lines 11-15. Lines 16 and 17 is to keep track of the convergence of the assignment, which occurs when remains unchanged from the previous iteration. On the other hand, the served robot sends its capacity and position to its serving neighbor to request its assignment , outlined in Lines 21-22.

One may notice that Algorithm 2 yields uneven computational workload at each robot. In particular, robots with smaller IDs take on more computation tasks for the cell assignment than those with larger IDs. In practice, one can assign smaller IDs to robots with higher computational resources.

III-D Optimized poses

Once the optimized partition is retrieved, each robot must move to its optimized pose to improve the detection probability. By adopting the same analytical approach presented in [29], we can compute the partial derivative of with respect to as

| (22) |

where and are the mass and center of mass associated with the region , respectively, defined as

| (23) | ||||

Thus, as is recursively driven to , the partial derivative approaches to and thus sensing capability of in is optimized. The control inputs in (1) for are then given by

| (24) | ||||

where denotes the angle of a position vector in global frame.

Algorithm 3 outlines the distributed control algorithm for each robot. At each time step, the robot first finds the optimized partition using one of the approaches explained in Sections III-B and III-C, and maintains the PHD within its partition using the same strategy as in [42], which requires the exchange of local PHD with its neighbors. Then, the robot computes its control using (24).

IV Performance Analysis

IV-A Computational Complexity

Our distributed algorithms for the space partitioning using a power diagram, as described in Sections III-B and III-C, are similar to distributed computation methods for constructing a Voronoi diagram. Resorting to the computational complexity analysis reported in [64], the complexity of such methods is and, hence, so is ours, where is the number of neighbors of each robot .

In the CCVD implementation described in Section III-C, Algorithm 2 requires each of the robots to find the optimal assignment of the total cells. For the worst-case complexity analysis, we assume that each robot is a neighbor of all the other robots, resulting in execution of the loop in Lines 3-21. The most time-consuming part of the algorithm is in finding the cell , i.e., the median point in the unordered array . In our implementation, we employ Hoare’s quick-select algorithm [65] to find the median value by choosing a pivot randomly and dividing the array into two parts, i.e., one with values less than or equal to the pivot and the other with the values greater than the pivot. The median value is either included in the former part if this array has elements or more, where is the number of elements in the array, or the latter part otherwise. This divide-and-conquer algorithm allows the algorithm to run in linear time on average, with the time complexity of [63]. Hence, the total time complexity for each single neighbor iteration is . Considering that the robot has neighbors by assumption, the complexity for a single robot iteration is .

Therefore, the distributed construction of power diagrams has lower computational complexity compared to the construction of CCVDs. Additionally, the complexity of constructing power diagrams depends only on the number of neighbors, making it a better choice when the computational power of the robots is limited, despite the fact that it provides only a near-optimal solution for space partitioning. One may choose to use the most suitable algorithm depending on the available computational resources and trade-off between computational time and tracking accuracy.

IV-B Communication Load

We assume that the communication between each robot and its neighbors is lossless and delay-free. The bandwidth requirement for the robot network is mainly determined by the number of neighbors each robot has and both the size and the frequency of data exchange. On the one hand, given a fixed data size and communication frequency, the worst-case bandwidth requirement occur when all robots are neighbors of one another , in which case non-overlapping communication links are required, where is the number of robots. In fact, this worst case assumption is unlikely to happen as the robots are expected to spread out over a large task space.

On the other hand, given a fixed number of communication channels, each robot only needs to exchange information about its space assignment (either power partition vertices or CCVD cell sets), location, and local PHD to its neighbors. The Voronoi methods, at least with constant weighting function, typically result in each robot having 6 neighbors in a hexagonal packing structure [66]. Hence, the bandwidth requirement is low, and will not increase as the environmental dimension (number of robots and targets, size of the task space, etc) increases.

To analyze the communication load of the space partitioning algorithms, we consider the data size of the information exchanged between robots. For the power diagram, the data size is determined by the number of vertices in the power partition, which is proportional to the number of cells in the task space. Each vertex is represented by a 2D position vector, which requires 8 bytes per vertex for a double-precision floating point number. For the CCVD, each robot shares its current cell assignments, capacity, and local information about detected targets. The data size is proportional to the number of cells in the task space and the number of detected targets. Each cell is represented by a 2D position vector, which requires 8 bytes per cell. Each detected target is represented by a 2D position vector and a detection probability, which requires 12 bytes per target. Hence, for an average of 6 neighbors, 10 targets per robot, and a frequency of 1 Hz, the bandwidth requirement for the power diagram is bytes per second, and for the CCVD is bytes per second.

Currently, robots share all information with their neighbors in every iteration. However, the communication frequency and bandwidth requirements can be further reduced through selective sharing approaches, where re-partitioning only happens when robot positions change significantly.

IV-C Performance Metrics

IV-C1 Tracking Accuracy

To assess the tracking performance, we use the first order Optimal SubPattern Assignment (OSPA) metric [67], which is a widely-adopted metric to evaluate the performance of MTT approaches. Given two sets and (representing the true and estimated target locations), the tracking error is defined as444Without loss of generality, we assume that holds. In other words, represents either the true or estimated target set, whichever is smaller.

| (25) |

The constant is a cutoff distance, , and is the collection of all permutations of the set . The larger the value of is, the more the outliers are penalized. Eq. (25) computes the average matching error between true and estimated target locations considering all possible assignments between elements and that are within distance . This can be efficiently computed in polynomial time using the Hungarian algorithm [68]. Note that the lower the OSPA value, the more accurate the tracking of the targets.

IV-C2 Heterogeneity Level

We define a measure of the heterogeneity in a team as follows.

Definition 2 (Heterogeneity Level)

The heterogeneity level of a sensing network , denoted by , is given by the standard deviation of the power radius of each sensor in , i.e.,

| (26) |

where , where the function is given in (15) and is the maximum sensing capacity of robot .

Definition 3 (Total Sensing Capacity)

The total sensing capacity, of a sensing network is given by

| (27) |

V Simulation Results

We conduct simulations using both ROS and MATLAB to validate our proposed multi-robot multi-target tracking framework. For concise presentation, all simulations adopt sensors with wedge-shaped FoVs whose shape is parameterised by a viewing angle in the forward direction, which is the same as the orientation of a robot, and a radius . Cameras and lidars have such model. Two examples are visualized in Figure 2. The probability of target detection for these FoVs is defined by

| (28) |

where , and is the probability density function that assigns the target detection probability given the distance between the target and robot . Specific implementations of used in simulations are provided in Table I.

Unlike the most existing work such as [41, 50, 51, 52, 53, 31], in this paper, we estimate the target distribution online using the PHD filter. Hence, our problem is significantly more challenging since the robots need to perceive and track targets simultaneously. We will compare our new approach against the standard Voronoi based method and the power diagram based algorithm proposed in our previous conference paper [57] to provide a baseline for performance comparison.

| (deg) | (m) | |||

|---|---|---|---|---|

| 1 | 270 | 3 | 1.675 | |

| 2 | 360 | 3 | 2.422 | |

| 3 | 90 | 3 | 0.99 | 0.700 |

| 4 | 90 | 2.5 | 0.404 | |

| 5 | 360 | 2 | 0.99 | 1.257 |

V-A Qualitative Results

First, we apply CCVD implementation and show the result from a single run using 5 TurtleBot3 Burger differential drive robots, namely , tracking 40 holonomic moving targets in a obstacle-free square task space as visualized in Figure 3.

The simulation was implemented using Ubuntu 18.04 with ROS Melodic. The robots move at a maximum linear velocity of and a maximum angular velocity of , and they are able to localize themselves using position data retrieved from ROS topic /odom , i.e., the ground truth state from Gazebo. Our MR-MTT algorithm recursively generates next waypoints for the robots, and they navigate through the waypoints using the move_base ROS package which uses the dynamic window approach (DWA) as a local planner and Dijkstra’s algorithm as a global planner [69].

All robots are equipped with heterogeneous range-bearing sensors with specifications described in Table I. The standard deviation of range and bearing measurements for all sensors is 0.04 m and , respectively. Since target density is low relative to the size of and the FoV of robots, we select in (9) for all robots. The task space is discretized into cell for both PHD representation and task space assignment. We assume that only one target can occupy each cell.

As explained in Section I, an important application of the MR-MTT is in tracking targets to study collective behaviours of animal groups and crowd dynamics of pedestrians. Motivated by related works in modeling their collective motion [70], we apply Boids algorithm, which has been used to study the emergent flocking behaviors arising in social animal groups from combinations of separation, alignment, and cohesion behaviors, to simulate the moving targets.

In our simulations, a target may exit the task space in which case another target will immediately enter into the space to maintain a constant number of targets. Targets move at a maximum speed of .

The robots begin with a uniform PHD where we set the expected number of targets equal to over the entire task space, meaning that they have no prior knowledge of the target density distribution. At the beginning of the simulations, the robots are located alongside the lower edge of the task space where they cannot detect the majority of the targets. Four animation clips excerpted from a 12 minute 30 second long target tracking simulation are presented in Figure 4. Each column corresponds to one of the clips illustrating the following three behaviors exhibited by the robot network.

Target Coverage

Our algorithm guides the robot to explore the task space when no targets are detected and to maintain coverage of the majority of detected targets when the robot detects multiple targets moving in different directions. The first clip (i.e., the first row in Figure 4) shows the initial exploration process where 5 robots start from their initial locations and move across the task space as the control input (24) drives the COD of each robot to the centroid of its CCVD.

The area that each robot covers for target detection is continuously updated to respond to the targets’ movement, and the sensor FoVs cover most of the areas with high target density, as observed in Figures 4d,4e,4f, Figures 4g,4h,4i, and Figures 4j,4k,4l. In the second clip, we can see that three robots in the bottom tend to gather close to each other as the targets densely aggregate in the lower-right corner of the task space while maintaining certain distance from one another to cover a larger area. Across all figures, we can observe that a sensor with a higher sensing capability is assigned with a larger task region optimized by our task assignment method.

Target Following

Target Tracking Reallocation

When a robot detects multiple targets moving in different directions, it chooses to follow the targets that are within its assigned cells and reallocates the task of tracking other targets to its neighboring robots as the targets move into their cells. This is illustrated in Figures 4g,4h,4i where the right-most robot follows the multiple targets clustered at its top-left region as the weighted centroid of PHD over its assigned region shifts toward the target cluster. As these targets move outside the robot’s assigned region, they no longer dominate the weighted centroid, causing the robot to follow the other three targets on its right to maintain coverage of the bottom-right corner of the task space, shown in the fourth clip. Such responsive switching behavior in the target coverage and following allows the robots to effectively gather information on the target’s locations.

Robot trajectories over the entire simulation time are plotted in Figure 5. Each robot first spreads out to deploy across the task space, indicated by the relatively straight part of trajectories. Then each robot moves twistingly as it searches for or tracks targets within a relatively smaller area of the task space. The trajectories reveal that robots with greater sensing capabilities, e.g., robot 1 and 2, move within a smaller range after initial deployment, while those with weaker sensing capabilities, e.g., robot 3, 4, and 5, move within a wider range. This is due to the fact that the former robots often detect more targets moving in various directions and perform target coverage behavior within a relatively fixed region, while the latter ones more frequently detect fewer targets and perform target following across a wider sub-region. Figure 6 plots the OSPA error with and in (25), which indicates that targets are effectively tracked after the initial deployment of the robotic network as the OSPA error significantly decreases comparing to the initial state.

Figure 7 plots the changes in the robots’ normalized unused sensing capacity as the numbers of detected targets changes over time. Overall, the normalized unused sensing capacity tends to decrease as the number of detected targets increases for each robot, reflecting the online adaptability of task assignment to the workload. However, such increase and decrease do not strictly correspond to each other due to the presence of sensor false alarms (i.e., false negative or false positive detections).

Figure 8 visualizes the variation in the number of cells assigned to the top-left robot as the number of detected targets changes. As the robot detects more targets,its normalized unused sensing capacity is reduced and, hence, so does its assigned task region. Consequently, a large coverage region is assigned to its neighbors , whose normalized unused sensing capacities are unchanged.

V-B Quantitative Results

| (deg) | (m) | |||

|---|---|---|---|---|

| A | 45 | 8 | 0.99 | 24.88 |

| B | 45 | 8 | 0.7 | 17.59 |

| C | 240 | 8 | 0.99 | 134.04 |

| D | 270 | 11.3 | 0.99 | 300.86 |

| E | 270 | 16 | 0.99 | 603.17 |

| A | B | C | D | E | |||

| 6 | 18 | 12 | - | - | 2074.4 | 3.7 | |

| 8 | 24 | 16 | - | - | 2765.8 | 3.7 | |

| 10 | 30 | 20 | - | - | 3457.3 | 3.7 | |

| 16 | - | - | - | 2 | 1604.4 | 6.1 | |

| 16 | - | - | 4 | - | 1604.4 | 4.9 | |

| 16 | - | 9 | - | - | 1604.4 | 3.1 |

| Team | 36 Robots (S1) | 48 Robots (S2) | 60 Robots (S3) | ||||||

| 30 Targets | 40 Targets | 50 Targets | 30 Targets | 40 Targets | 50 Targets | 30 Targets | 40 Targets | 50 Targets | |

| VC-PC | 0.8468 | 0.0783 | 0.1128 | 0.2499 | 0.2477 | 0.0001 | 0.0155 | 0.0585 | 0.0731 |

| PC-CC | 0.9209 | 0.0605 | 0.5158 | 0.0225 | 0.0194 | 0.0998 | 0.8474 | 0.0283 | 0.0137 |

| VC-CC | 0.7695 | 0.0010 | 0.0433 | 0.0021 | 0.0007 | 0.0000 | 0.0162 | 0.0020 | 0.0007 |

To quantitatively compare the efficacy of our power diagram (Section III-B) and CCVD (Section III-C) based method with baseline algorithms, we run batches of simulation trials in MATLAB using a point robot model with dynamic model given as (1). In order to demonstrate the effectiveness of two novel algorithms and to perform ablation experiments, we apply five methods in the following tests:

-

1.

Zigzag (Z) Method: This method utilizes a complete coverage path planning framework, a standard approach for target search. Firstly, a centroidal Voronoi diagram of Voronoi sites is computed using Lloyd’s algorithm. Each robot is assigned a Voronoi cell of the diagram. Then, a zigzag coverage path is planned [torres2016coverage] with the spacing of two adjacent parallel paths equaling to 1 m for each robot to exploit its cell. An example of paths planned for 60 robots is shown in Figure 9. Each robot tracks targets using PHD filter while traversing through the pre-planned coverage path.

-

2.

Voronoi (V) Method: Partitioning the space using Voronoi diagram with robot locations as generator points, similar to Dames’ method [42].

-

3.

Voronoi-COD (VC) Method: Similar to Voronoi method besides using robot CODs as generator points.

-

4.

Power-COD (PC) Method: Our first proposed method, i.e., partitioning the space using power diagram with robot CODs as generator points.

-

5.

CCVD-COD (CC) Method: Our second proposed method, i.e., partitioning the space using CCVD with robot CODs as generator points.

In these simulations, the size of the environment is . Robots move at a maximum linear velocity of and a maximum angular velocity of . Each robot carries one of the five sensor types, as outlined in Table II. Assuming that the target density within the FoVs of sensors are completely unknown, we select in (9) for all robots in all trials. Based on these type definitions, we define six different network compositions, shown in Table III in which columns A-E list the number of robots with each sensor type. Distinguished from Section V-A, in this series of simulations, target motion has a higher degree of randomness. Targets move at a maximum velocity of with their heading directions randomly changing over time, and may enter or leave the environment by crossing over the boundary. This means that the number of targets varies over time, and the true number of targets is not known to the sensors.

V-B1 Team Size

Initially, there are 30, 40, or 50 targets within the environment. We compare three different network compositions, , , and , each of which is composed of the same sensor types in equal proportions, as shown in Table III. By varying the number of targets and the network compositions, we create 9 different simulation trials. Each trial is repeated for 10 times using the three algorithms, each lasting for . The robots and targets are randomly located in the task space at the beginning of each trial.

Figure 10 shows the results of the nine trials. We plot the median and the 25th and 75th percentiles of average OSPA via boxplots using the data collected during the last of each trial to present the steady state tracking performance. Note that larger teams performs better in the target tracking. We also report P-values for comparison between methods VC and PC, PC and CC, VC and CC, respectively, and bold the data that are significant at the 10% level of significance. Consistently, the CCVD methods (CC) outperform the power diagram (PC) and Voronoi diagram methods (V and VC) across different team sizes and target numbers. This confirms the improved efficacy of our proposed CCVD methods, especially for heterogeneous sensing networks. The CCVD and Power diagrams assign larger regions to the sensors with higher unused sensing capacity and smaller regions to those who have smaller unused sensing capacity. The CCVD places a hard constraint, i.e., the capacity constraint, on the size of the partition depending on each robot’s workload. Hence it demonstrates a further improvement in the tracking performance over the power diagram, which instead utilizes an approximation scheme which associates the unused sensing capacity of a robot with a power radius in space partition. As a result, sensing networks can take advantage of their heterogeneous sensing capabilities and avoid overloading sensors with target tracking tasks.

Figures 11 visualize a random moment in a simulation trial with 36 robots, which is utilized as a case study to demonstrate the improvement in space partition optimality. For each robot, we compute the value of area of its assigned space divided by its normalized unused sensing capacity, which reveals the among of a robot’s workload comparing to its sensing capability, and plot it on Figure 11b. It is shown that the area-to-capacity ratio of both Voronoi method and power method varies substantially among robots, indicating that robots are more or less assigned a task area size that does not match their capabilities. PC method improves the ratio to a standard deviation of 5.5, comparing with that of VC method, which is 6.2. However, CC method yields a constant area-to-capacity ratio over the team at all time to further optimize the space partition.

Moreover, it can be seen that VC method consistently yields to significant lower OSPA error than V method, and that PC method significantly ourperforms VC method in most of the simulation trials. This reveals that both replacing robot locations to CODs as generator points and applying the capacity-constraint space partitioning strategies contribute to the tracking accuracy, leading to the out-performance of PC and CC methods. We also find that PC and CC methods significantly improve the tracking accuracy comparing with Z method, indicating that our proposed algorithms produce more effective multi-robot path planning results for heterogeneous teams to track multiple unknown moving targets.

In addition to decreasing the average tracking error, our proposed algorithms, especially CCVD, also decreases the range of OSPA, i.e., tracking errors in a majority of cases, revealed from Figure 10 and Figure 12 introduced in Section V-B2. In Table V, we list the standard deviation of OSPA over all trials in Figure 10. In other words, sensing networks that use the CCVD or power diagram often perform more reliable behavior. This is due to the fact that the optimized assignment of dominance region reduces the probability of targets being lost during tracking.

Remark 3 (Comparison of Results)

The three partitioning methods (Voronoi diagrams, power diagrams, and the CCVD) each use a different function in the functional defined in Equation (2). We see in Figure 1 that the three methods can lead to quite different partitions, with Voronoi and power diagrams tending to look more similar to one another. Given this, it is an interesting result that power and CCVD both yield comparable results in terms of tracking accuracy. We believe that these results emphasize the utility of considering an equitable distribution of space based on each robot’s capability and operational conditions, regardless of the specific partitioning method employed.

| Target | 30 Targets | 40 Targets | 50 Targets | |||

| Method | CC | V | CC | V | CC | V |

| 36 Robots | 0.3167 | 0.4761 | 0.4212 | 0.4353 | 0.4097 | 0.7183 |

| 48 Robots | 0.2488 | 0.6019 | 0.2166 | 0.5192 | 0.2643 | 0.4075 |

| 60 Robots | 0.3378 | 0.4593 | 0.3774 | 0.8131 | 0.1951 | 0.5976 |

V-B2 Heterogeneity Levels

Lastly we run a series of tests to validate the following observation. For a team with a fixed total sensing capacity, , our novel CCVD and power diagram formula will provide increasing improvements in target tracking accuracy over a standard Voronoi diagram as the heterogeneity, , increases.

We use compositions 4, 5, and 6 from Table III, all of which have the identical total sensing capacity of 1604.4 determined by (27). There are 30 targets in all simulation trials. The heterogeneity levels (26) for the three network are given as , , and , respectively.

Figure 12 shows the average OSPA errors over 10 runs, using data from the last 400 s of each trial. We observed significant improvement in the tracking performance of the power diagram and CCVD method compared to that of the standard Voronoi diagram when the network is highly heterogeneous (). On the other hand, as the heterogeneity level decreases, so does the performance improvement as we can observe from and . These results support our aforementioned observation. If all sensors are the same, the power distance in Equation (13) will only depend on sensor’s location so that the locational optimization functional (2) will converge to the one using Voronoi diagram. Meanwhile, since the capacity of the cells tends to be close to each other, the CCVD will get close to the Voronoi diagram as well. We also find that the improvement in tracking accuracy by , and increases with the number of robots in the network, which affects the mobility for a network.

Remark 4 (Trade-off between algorithms)

The MATLAB simulations were conducted on a Windows 11 laptop with 13th Gen Intel(R) Core(TM) i7-1360P 2.20GHz processor and 32GB RAM storage memory. Taking the simulations of 60 robots as an example, the average running time of Algorithm 3 using PC method is approximately 0.1 s per iteration, while running time of using CC method is around 9.0 s per iteration. At the expense of running time, CC method reduces OSPA error by around 20% comparing with PC method. Practitioners should make a trade-off between the two algorithms according to the availability of computing resources and the requirement of tracking accuracy.

VI Conclusions

We propose a distributed coverage control scheme for heterogeneous mobile robots with onboard sensors to track an unknown and time-varying number of targets. This novel strategy allows sensors to have arbitrary sensing models (with limited fields of view) and dynamically optimizes the workload for each individual. To do this, we introduce the normalized unused sensing capacity to quantify the instant detection capability of each sensor. We then use this to construct either a power diagram or a capacity constraint Voronoi diagram to recursively find optimized sensor locations as measurements are updated. The centroid of detection for each sensor is utilized as the generation point to create the partitions, allowing each sensor to center its field of view on the area with the highest information density. Simulation results show the convergence of our proposed method in target tracking scenarios and indicates that our method yields better and more reliable tracking performance compared to baseline algorithms that does not account for heterogeneity. We also observed that the tracking performance of our approach increases over the Voronoi approach as the heterogeneity in the team’s sensing capabilities increases.

References

- [1] H. E. MacGregor, J. E. Herbert-Read, and C. C. Ioannou, “Information can explain the dynamics of group order in animal collective behaviour,” Nature communications, vol. 11, no. 1, p. 2737, 2020.

- [2] P. DeLellis, G. Polverino, G. Ustuner, N. Abaid, S. Macrì, E. M. Bollt, and M. Porfiri, “Collective behaviour across animal species,” Scientific reports, vol. 4, no. 1, p. 3723, 2014.

- [3] L. Gómez-Nava, R. Bon, and F. Peruani, “Intermittent collective motion in sheep results from alternating the role of leader and follower,” Nature Physics, pp. 1–8, 2022.

- [4] M.-C. Chuang, J.-N. Hwang, J.-H. Ye, S.-C. Huang, and K. Williams, “Underwater fish tracking for moving cameras based on deformable multiple kernels,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 47, no. 9, pp. 2467–2477, 2016.

- [5] P. Scovanner and M. F. Tappen, “Learning pedestrian dynamics from the real world,” in 2009 IEEE 12th International Conference on Computer Vision. IEEE, 2009, pp. 381–388.

- [6] M. Adnan, G. Slavic, D. M. Gomez, L. Marcenaro, and C. Regazzoni, “Systematic and comprehensive review of clustering and multi-target tracking techniques for LiDAR point clouds in autonomous driving applications,” Sensors, vol. 23, no. 13, p. 6119, Jul. 2023. [Online]. Available: https://doi.org/10.3390/s23136119

- [7] P. Konstantinova, A. Udvarev, and T. Semerdjiev, “A study of a target tracking algorithm using global nearest neighbor approach,” in Proceedings of the International Conference on Computer Systems and Technologies (CompSysTech’03), 2003, pp. 290–295.

- [8] S. Hamid Rezatofighi, A. Milan, Z. Zhang, Q. Shi, A. Dick, and I. Reid, “Joint probabilistic data association revisited,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 3047–3055.

- [9] S. S. Blackman, “Multiple hypothesis tracking for multiple target tracking,” IEEE Aerospace and Electronic Systems Magazine, vol. 19, no. 1, pp. 5–18, 2004.

- [10] A. Doucet, B.-N. Vo, C. Andrieu, and M. Davy, “Particle filtering for multi-target tracking and sensor management,” in Proceedings of the Fifth International Conference on Information Fusion, vol. 1. IEEE, 2002, pp. 474–481.

- [11] R. P. Mahler, “Multitarget bayes filtering via first-order multitarget moments,” IEEE Transactions on Aerospace and Electronic systems, vol. 39, no. 4, pp. 1152–1178, 2003.

- [12] R. Mahler, “Phd filters of higher order in target number,” IEEE Transactions on Aerospace and Electronic systems, vol. 43, no. 4, pp. 1523–1543, 2007.

- [13] R. P. Mahler, “Statistical multisource-multitarget information fusion,” information fusion, vol. 685, 2007.

- [14] B.-T. Vo, B.-N. Vo, and A. Cantoni, “The cardinality balanced multi-target multi-bernoulli filter and its implementations,” IEEE Transactions on Signal Processing, vol. 57, no. 2, pp. 409–423, 2009.

- [15] R. Olfati-Saber, “Distributed kalman filtering for sensor networks,” in 2007 46th IEEE Conference on Decision and Control. IEEE, 2007, pp. 5492–5498.

- [16] M. E. Campbell and N. R. Ahmed, “Distributed data fusion: Neighbors, rumors, and the art of collective knowledge,” IEEE Control Systems Magazine, vol. 36, no. 4, pp. 83–109, 2016.

- [17] G. A. Hollinger, S. Yerramalli, S. Singh, U. Mitra, and G. S. Sukhatme, “Distributed data fusion for multirobot search,” IEEE Transactions on Robotics, vol. 31, no. 1, pp. 55–66, 2014.

- [18] P. Zhu and W. Ren, “Multi-robot joint localization and target tracking with local sensing and communication,” in 2019 American Control Conference (ACC). IEEE, 2019, pp. 3261–3266.

- [19] R. Khodayi-mehr, Y. Kantaros, and M. M. Zavlanos, “Distributed state estimation using intermittently connected robot networks,” IEEE transactions on robotics, vol. 35, no. 3, pp. 709–724, 2019.

- [20] X. Ge, Q.-L. Han, X.-M. Zhang, L. Ding, and F. Yang, “Distributed event-triggered estimation over sensor networks: A survey,” IEEE transactions on cybernetics, vol. 50, no. 3, pp. 1306–1320, 2019.

- [21] S. Zhu, C. Chen, W. Li, B. Yang, and X. Guan, “Distributed optimal consensus filter for target tracking in heterogeneous sensor networks,” IEEE transactions on cybernetics, vol. 43, no. 6, pp. 1963–1976, 2013.

- [22] R. Olfati-Saber and J. S. Shamma, “Consensus filters for sensor networks and distributed sensor fusion,” in Proceedings of the 44th IEEE Conference on Decision and Control. IEEE, 2005, pp. 6698–6703.

- [23] A. Shirsat, S. Mishra, W. Zhang, and S. Berman, “Probabilistic consensus on feature distribution for multi-robot systems with markovian exploration dynamics,” IEEE Robotics and Automation Letters, vol. 7, no. 3, pp. 6407–6414, 2022.

- [24] M. Corah and N. Michael, “Scalable distributed planning for multi-robot, multi-target tracking,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2021, pp. 437–444.

- [25] B. Schlotfeldt, V. Tzoumas, and G. J. Pappas, “Resilient active information acquisition with teams of robots,” IEEE Transactions on Robotics, vol. 38, no. 1, pp. 244–261, 2021.

- [26] B. J. Julian, M. Angermann, M. Schwager, and D. Rus, “Distributed robotic sensor networks: An information-theoretic approach,” The International Journal of Robotics Research, vol. 31, no. 10, pp. 1134–1154, 2012.

- [27] Y. Sung, A. K. Budhiraja, R. K. Williams, and P. Tokekar, “Distributed assignment with limited communication for multi-robot multi-target tracking,” Autonomous Robots, vol. 44, no. 1, pp. 57–73, 2020.

- [28] L. Zhou and P. Tokekar, “Sensor assignment algorithms to improve observability while tracking targets,” IEEE Transactions on Robotics, vol. 35, no. 5, pp. 1206–1219, 2019.

- [29] J. Cortes, S. Martinez, T. Karatas, and F. Bullo, “Coverage control for mobile sensing networks,” IEEE Transactions on robotics and Automation, vol. 20, no. 2, pp. 243–255, 2004.

- [30] M. Schwager, D. Rus, and J.-J. Slotine, “Decentralized, adaptive coverage control for networked robots,” The International Journal of Robotics Research, vol. 28, no. 3, pp. 357–375, 2009.

- [31] Y. Kantaros and M. M. Zavlanos, “Distributed communication-aware coverage control by mobile sensor networks,” Automatica, vol. 63, pp. 209–220, 2016.

- [32] A. Pierson and D. Rus, “Distributed target tracking in cluttered environments with guaranteed collision avoidance,” in 2017 International Symposium on Multi-Robot and Multi-Agent Systems (MRS). IEEE, 2017, pp. 83–89.

- [33] S. Lloyd, “Least squares quantization in PCM,” IEEE Transactions on Information Theory, vol. 28, no. 2, pp. 129–137, 1982.

- [34] S. Papatheodorou, A. Tzes, K. Giannousakis, and Y. Stergiopoulos, “Distributed area coverage control with imprecise robot localization: Simulation and experimental studies,” International Journal of Advanced Robotic Systems, vol. 15, no. 5, p. 1729881418797494, 2018.

- [35] H. Zhu and J. Alonso-Mora, “B-UAVC: Buffered uncertainty-aware Voronoi cells for probabilistic multi-robot collision avoidance,” in 2019 International Symposium on Multi-Robot and Multi-Agent Systems (MRS). IEEE, 2019, pp. 162–168.

- [36] M. Wang and M. Schwager, “Distributed collision avoidance of multiple robots with probabilistic buffered voronoi cells,” in 2019 International Symposium on Multi-Robot and Multi-Agent Systems (MRS). IEEE, 2019, pp. 169–175.

- [37] J. Chen and P. Dames, “Distributed and collision-free coverage control of a team of mobile sensors using the convex uncertain voronoi diagram,” in 2020 American Control Conference (ACC). IEEE, 2020, pp. 5307–5313.

- [38] J. M. Palacios-Gasós, Z. Talebpour, E. Montijano, C. Sagüés, and A. Martinoli, “Optimal path planning and coverage control for multi-robot persistent coverage in environments with obstacles,” in 2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2017, pp. 1321–1327.

- [39] S. Bhattacharya, R. Ghrist, and V. Kumar, “Multi-robot coverage and exploration on Riemannian manifolds with boundaries,” The International Journal of Robotics Research, vol. 33, no. 1, pp. 113–137, 2014.

- [40] A. Breitenmoser, M. Schwager, J.-C. Metzger, R. Siegwart, and D. Rus, “Voronoi coverage of non-convex environments with a group of networked robots,” in 2010 IEEE International Conference on Robotics and Automation. IEEE, 2010, pp. 4982–4989.

- [41] L. C. Pimenta, V. Kumar, R. C. Mesquita, and G. A. Pereira, “Sensing and coverage for a network of heterogeneous robots,” in 2008 47th IEEE Conference on Decision and Control. IEEE, 2008, pp. 3947–3952.

- [42] P. M. Dames, “Distributed multi-target search and tracking using the phd filter,” Autonomous robots, vol. 44, no. 3-4, pp. 673–689, 2020.

- [43] M. Kushwaha, I. Amundson, P. Volgyesi, P. Ahammad, G. Simon, X. Koutsoukos, A. Ledeczi, and S. Sastry, “Multi-modal target tracking using heterogeneous sensor networks,” in 2008 Proceedings of 17th International Conference on Computer Communications and Networks. IEEE, 2008, pp. 1–8.

- [44] Ø. K. Helgesen, K. Vasstein, E. F. Brekke, and A. Stahl, “Heterogeneous multi-sensor tracking for an autonomous surface vehicle in a littoral environment,” Ocean Engineering, vol. 252, p. 111168, 2022.

- [45] H. Zhou, M. Taj, and A. Cavallaro, “Target detection and tracking with heterogeneous sensors,” IEEE Journal of Selected Topics in Signal Processing, vol. 2, no. 4, pp. 503–513, 2008.

- [46] S. Mayya, R. K. Ramachandran, L. Zhou, V. Senthil, D. Thakur, G. S. Sukhatme, and V. Kumar, “Adaptive and risk-aware target tracking for robot teams with heterogeneous sensors,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 5615–5622, 2022.

- [47] R. K. Ramachandran, J. A. Preiss, and G. S. Sukhatme, “Resilience by reconfiguration: Exploiting heterogeneity in robot teams,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2019, pp. 6518–6525.

- [48] Y. Kantaros, M. Thanou, and A. Tzes, “Distributed coverage control for concave areas by a heterogeneous robot–swarm with visibility sensing constraints,” Automatica, vol. 53, pp. 195–207, 2015.

- [49] N. Bartolini, T. Calamoneri, T. F. La Porta, and S. Silvestri, “Autonomous deployment of heterogeneous mobile sensors,” IEEE Transactions on Mobile Computing, vol. 10, no. 6, pp. 753–766, 2010.

- [50] O. Arslan and D. E. Koditschek, “Voronoi-based coverage control of heterogeneous disk-shaped robots,” in 2016 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2016, pp. 4259–4266.

- [51] A. Kwok and S. Martinez, “Deployment algorithms for a power-constrained mobile sensor network,” International Journal of Robust and Nonlinear Control: IFAC-Affiliated Journal, vol. 20, no. 7, pp. 745–763, 2010.

- [52] K. Laventall and J. Cortés, “Coverage control by robotic networks with limited-range anisotropic sensory,” in 2008 American Control Conference. IEEE, 2008, pp. 2666–2671.

- [53] Y. Stergiopoulos and A. Tzes, “Autonomous deployment of heterogeneous mobile agents with arbitrarily anisotropic sensing patterns,” in 2012 20th Mediterranean Conference on Control & Automation (MED). IEEE, 2012, pp. 1585–1590.

- [54] ——, “Spatially distributed area coverage optimisation in mobile robotic networks with arbitrary convex anisotropic patterns,” Automatica, vol. 49, no. 1, pp. 232–237, 2013.

- [55] ——, “Cooperative positioning/orientation control of mobile heterogeneous anisotropic sensor networks for area coverage,” in 2014 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2014, pp. 1106–1111.

- [56] B. Hexsel, N. Chakraborty, and K. Sycara, “Coverage control for mobile anisotropic sensor networks,” in 2011 IEEE International Conference on Robotics and Automation. IEEE, 2011, pp. 2878–2885.

- [57] J. Chen and P. Dames, “Distributed multi-target tracking for heterogeneous mobile sensing networks with limited field of views,” in 2021 ieee international conference on robotics and automation (icra). IEEE, 2021, pp. 9058–9064.

- [58] W. Luo and K. Sycara, “Voronoi-based coverage control with connectivity maintenance for robotic sensor networks,” in 2019 International Symposium on Multi-Robot and Multi-Agent Systems (MRS). IEEE, 2019, pp. 148–154.

- [59] P. Dames and V. Kumar, “Experimental characterization of a bearing-only sensor for use with the phd filter,” arXiv preprint arXiv:1502.04661, 2015.

- [60] F. Aurenhammer, “Power diagrams: properties, algorithms and applications,” SIAM Journal on Computing, vol. 16, no. 1, pp. 78–96, 1987.

- [61] B. Michael and H. Daniel, “Capacity-constrained voronoi diagrams in finite spaces,” in Int’l Symp. on Voronoi Diagrams in Science and Engineering, vol. 33, 2008, p. 13.

- [62] R. Olfati-Saber, J. A. Fax, and R. M. Murray, “Consensus and cooperation in networked multi-agent systems,” Proceedings of the IEEE, vol. 95, no. 1, pp. 215–233, 2007.

- [63] H. Li, D. Nehab, L.-Y. Wei, P. V. Sander, and C.-W. Fu, “Fast capacity constrained voronoi tessellation,” in Proceedings of the 2010 ACM SIGGRAPH symposium on Interactive 3D Graphics and Games, 2010, pp. 1–1.

- [64] M. Cao and C. Hadjicostis, “Distributed algorithms for voronoi diagrams and application in ad-hoc networks,” UIUC Coordinated Science Laboratory, Tech. Rep. UILU-ENG-03-2222, DC-210, 2003.

- [65] C. A. R. Hoare, “Algorithm 65: Find,” Commun. ACM, vol. 4, no. 7, p. 321–322, jul 1961. [Online]. Available: https://doi.org/10.1145/366622.366647

- [66] D.-M. Yan, K. Wang, B. Lévy, and L. Alonso, “Computing 2d periodic centroidal voronoi tessellation,” in 2011 eighth international symposium on Voronoi diagrams in science and engineering. IEEE, 2011, pp. 177–184.

- [67] D. Schuhmacher, B.-T. Vo, and B.-N. Vo, “A consistent metric for performance evaluation of multi-object filters,” IEEE Transactions on Signal Processing, vol. 56, no. 8, pp. 3447–3457, 2008.

- [68] H. W. Kuhn, “The Hungarian method for the assignment problem,” Naval Research Logistics Quarterly, vol. 2, no. 1-2, pp. 83–97, 1955.

- [69] K. Zheng, “Ros navigation tuning guide,” Robot Operating System (ROS) The Complete Reference (Volume 6), pp. 197–226, 2021.

- [70] C. W. Reynolds, “Flocks, herds and schools: A distributed behavioral model,” in Proceedings of the 14th annual conference on Computer graphics and interactive techniques, 1987, pp. 25–34.