Distributed Filtering Design with Enhanced Resilience to Coordinated Byzantine Attacks

Abstract

This paper proposes a Byzantine-resilient consensus-based distributed filter (BR-CDF) wherein network agents employ partial sharing of state parameters. We characterize the performance and convergence of the BR-CDF and study the impact of a coordinated data falsification attack. Our analysis shows that sharing merely a fraction of the states improves robustness against coordinated Byzantine attacks. In addition, we model the optimal attack strategy as an optimization problem where Byzantine agents design their attack covariance or the sequence of shared fractions to maximize the network-wide mean squared error (MSE). Numerical results demonstrate the accuracy of the proposed BR-CDF and its robustness against Byzantine attacks. Furthermore, the simulation results show that the influence of the covariance design is more pronounced when agents exchange larger portions of their states with neighbors. In contrast, the performance is more sensitive to the sequence of shared fractions when smaller portions are exchanged.

Index Terms:

Cyber-physical systems, distributed learning, consensus-based filtering, data falsification attacks, multiagent systems, Byzantine agent.

I Introduction

In recent years, the development of the internet of things (IoT) and machine learning techniques has led to the wide use of cyber-physical systems (CPSs) in various infrastructures, such as smart grids, environment monitoring, and signal processing [1, 2, 3, 4]. However, the high reliance of CPSs on sensor cooperation makes them vulnerable to various security threats. As a result of malicious attacks, false information spreads throughout the network and threatens the integrity of the entire system [5]. Therefore, the study of security threats in CPSs has gained considerable attention from academia and industry in the past few years [6, 7, 8, 9, 10, 11]. In CPSs, distributed filtering and secure estimation are becoming more prevalent due to their resilience to node failures and scalability [12, 13].

Attack strategies influence the performance of CPSs and create complications in developing protection methods against malicious behavior [14, 15]. In CPSs, attacks can be divided into two groups: denial-of-service (DoS) attacks and integrity attacks. DoS attacks occur when the communication links between agents are blocked, and agents cannot exchange information [16]. In contrast, integrity attacks occur when adversaries or malicious agents inject false information into the network [17, 18]. A stealthy attack is also categorized as an integrity attack in which an adversary injects false information into a network without being detected. Various studies have examined the impact of stealthy attacks on state estimation scenarios and investigated situations where adversaries design attacks to degrade the performance of the network [19, 20, 21].

Designing the optimal attack from the attacker's perspective can aid in developing protection methods that operate in worst-case scenarios. To this end, [21] designs a false data-injection strategy that maximizes the trace of error covariance in a remote state estimation scenario. An event-based stealthy attack strategy that degrades estimation accuracy is proposed in [22], while [23] develops a stealthy attack strategy and its optimal defense mechanism by exploiting power grid vulnerabilities. Furthermore, [24] proposes a linear stealthy attack strategy and its feasibility constraints. Moreover, [4] proposes an optimal attack strategy and sufficient conditions to limit the resulting estimation error. In [25], the authors investigate the impact of reset attacks on cyber-physical systems, while [26] designs a stealthy attack that maximizes the network-wide estimation error by jointly selecting the optimal subset of Byzantine agents and perturbation covariance matrices. In [27], a false data-injection attack on a distributed CPS is proposed that forces the local state estimates to remain within a pre-specified range.

It is also vital to analyze how countermeasures taken by agents can reduce the impact of the attack. One approach is to detect adversaries and then implement corrective measures [28, 29, 30]. In [31], for example, attack detection is achieved through trusted agents that raise a flag when an adversary is detected. Alternatively, agents can safeguard information and guarantee system performance by running attack-resilient algorithms [32, 33, 34, 31, 35, 36, 37]. In [32, 33], attack-resilient remote state estimators are studied, where the former proposes a stochastic detector with a random threshold to determine whether to fuse the received data, and the latter detects malicious agents using the statistical correlation between trusted agents. Using a probabilistic protector for each sensor, [34] proposes a robust distributed state estimator that decides whether to use data from neighbors based on their innovation signals. Moreover, a Byzantine-resilient distributed state estimation algorithm is proposed in [38] that employs an internal iteration loop within the local aggregation process to compute the trimmed-mean among neighbors.

Providing an extra procedure to protect the system from adversaries [28, 29, 30, 31] can increase the computational load of the agent and make the algorithm undesirable for resource-constrained scenarios. To resolve this issue, works in [35, 39] reduce the weight assigned to measurements whose norm exceeds a certain threshold, limiting the impact of falsified observations, to provide resilience against measurement attacks. Furthermore, the secure state estimation problem in [40] is solved by a local observer that achieves robustness against malicious agents by employing the median of its local estimates. Our primary motivation for conducting this study was to develop an algorithm that provides robustness against malicious adversaries without imposing an extra computation burden on agents.

Even though multi-agent distributed systems are robust to dynamic changes in the network, they are reliant on local interactions that consume power and bandwidth [41]. Partial-sharing-based approaches, originally proposed in [42, 43], reduce local communication overhead by sharing only a fraction of information during each inter-agent interaction. The simplicity of implementation and efficiency of computation make partial-sharing strategies prevalent in distributed processing scenarios [44, 45]. To the best of our knowledge, partial-sharing-based approaches have not been investigated in an adversarial environment. Additionally, the lack of computationally light distributed algorithms that are robust to coordinated attacks inspired us to conduct this research.

This paper proposes a Byzantine-resilient consensus-based distributed filter (BR-CDF) where agents exchange a fraction of their state estimates at each instant. We study the convergence of the BR-CDF and characterize its performance under a coordinated data falsification attack. In addition, we design the optimal attack by solving an optimization problem where Byzantine agents cooperate on designing the covariance of their falsification data or the order of the information fractions they share. The Byzantine agent is a legitimate network agent that shares false information with neighbors to degrade the overall performance of the network. The numerical results validate the theoretical findings and illustrate the robustness of the BR-CDF algorithm.

The remainder of the article is organized as follows. Section II investigates the system model and the attack strategy, while Section III proposes the Byzantine-resilient consensus-based distributed filter. Section IV analyzes the stability and performance of the BR-CDF algorithm and derives its convergence conditions. The performance of the BR-CDF algorithm is investigated under data falsification attacks in Section V, and Section VI develops an optimal coordinated attack strategy. Simulation results are presented in Section VII, and Section VIII concludes the article.

Mathematical Notations: Scalars are denoted by lowercase letters, column vectors by bold lowercase, and matrices by bold uppercase. Transpose and inverse operators are denoted by and , respectively. The trace operator is denoted by , whereas indicates the Kronecker product and is the Hadamard product. The th element of the matrix is denoted by . The symbol represents the column vector with all entries equal to one and is the identity matrix. Matrices and denote diagonal and block-diagonal matrices whose respective diagonals are the elements of vector and matrices . The set of all real numbers is denoted as and is the statistical expectation operator. A positive semidefinite matrix is denoted by and indicates that is a positive semidefinite matrix. The inequality denotes an element-wise inequality for corresponding elements in matrices and . The maximum and minimum eigenvalues of square matrix are denoted by and , respectively. Vector denotes a column vector consisting of elements of the matrix and denotes the inverse of operator.

II System Model and Byzantine Attack Strategy

Consider a multi-agent network consisting of agents attempting to estimate a dynamic system state through their local observations. The network is modeled as an undirected graph , where is the set of all agents and pairs in set represent communication links between agents. The network adjacency matrix is denoted by and is a diagonal matrix whose diagonal entries are the degrees of corresponding agents. The neighbor set contains agents connected to agent within a single hop, excluding the agent itself.
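As a concrete illustration of the graph quantities introduced above, the following minimal sketch builds the adjacency matrix, the degree matrix, and the graph Laplacian (degree minus adjacency, which appears later in the stability analysis) from an edge list. The function names and the four-agent topology are hypothetical and chosen only for illustration.

```python
import numpy as np

def graph_matrices(num_agents, edges):
    """Return adjacency, degree, and Laplacian matrices of an undirected graph."""
    A = np.zeros((num_agents, num_agents))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0           # undirected communication link
    D = np.diag(A.sum(axis=1))            # agent degrees on the diagonal
    return A, D, D - A                    # Laplacian = degree - adjacency

def neighbors(A, i):
    """Single-hop neighbors of agent i, excluding agent i itself."""
    return [int(j) for j in np.flatnonzero(A[i]) if j != i]

# Hypothetical 4-agent topology, used only as an example.
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
A, D, L = graph_matrices(4, edges)
print(neighbors(A, 0))
```

Each row of the Laplacian sums to zero, which is the property that makes consensus terms vanish once all agents agree.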

The state-space model, characterizing the dynamics of the state vector and observation sequences at each agent and time instant , is given by

| (1) | ||||

where is the state, is the local observation, is the state matrix, and is the observation matrix. The state noise and observation noise are mutually independent zero-mean Gaussian processes with covariance matrices and , respectively. Network agents can employ a consensus-based distributed Kalman filter (CDF) to estimate in a collaborative manner [46]. Accordingly, the state estimate at agent is given by

| (2) | ||||

where denotes the consensus gain, is the Kalman gain, and is the received state estimates from neighboring agent .
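The structure of such an update can be sketched as follows. This is only an illustrative form, assuming the common Kalman-consensus structure (local prior, Kalman innovation term, and a consensus term weighted by a scalar gain); the exact expression of (2) in [46] may differ, and all names (`cdf_update`, `eps`, `neighbor_estimates`) are assumptions introduced here.

```python
import numpy as np

def cdf_update(x_prior, P_prior, y, H, R, neighbor_estimates, eps):
    """One illustrative measurement-plus-consensus update at a single agent.

    Assumed form: x = x_prior + K (y - H x_prior) + eps * sum_j (x_j - x_prior).
    """
    S = H @ P_prior @ H.T + R                     # innovation covariance
    K = P_prior @ H.T @ np.linalg.inv(S)          # standard Kalman gain
    innovation = y - H @ x_prior
    consensus = sum((xj - x_prior for xj in neighbor_estimates),
                    np.zeros_like(x_prior))       # disagreement with neighbors
    return x_prior + K @ innovation + eps * consensus, K

# Scalar toy example with hypothetical numbers.
x_new, K = cdf_update(np.array([0.0]), np.array([[1.0]]), np.array([2.0]),
                      np.array([[1.0]]), np.array([[1.0]]),
                      [np.array([3.0])], eps=0.1)
print(x_new, K)
```

With an empty neighbor list or a zero consensus gain, the sketch reduces to the ordinary Kalman measurement update.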

To analyze the impact of data falsification attacks on network performance, the attack model needs to be specified. For this purpose, we assume a subset of agents to be Byzantines, i.e., , that intend to disrupt the performance of the entire network [17]. Fig. 1 shows the dynamics of the information exchange in a network with Byzantine agents. As seen in Fig. 1, a regular agent shares the actual value of the state estimate with its neighbors. In contrast, a Byzantine agent shares a perturbed version of its state estimate with neighbors; in particular, the shared information at each agent is denoted by

| (3) |

where denotes the perturbation sequence. To maximize the attack stealthiness, i.e., the ability to evade detection, the perturbation sequence is drawn from a zero-mean Gaussian distribution with covariance [47, 48]. Moreover, to further degrade the network performance, Byzantines can cooperate in designing the attack strategy. The network-wide coordinated attack covariance is denoted by where is the network-wide attack sequence with if .
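The sharing rule described above can be sketched in a few lines. This is a minimal illustration, assuming only what the text states: regular agents broadcast their estimate unchanged, and Byzantine agents add a zero-mean Gaussian perturbation with a chosen covariance. The function name, the covariance value, and the seed are hypothetical.

```python
import numpy as np

def shared_estimate(x_hat, is_byzantine, Sigma, rng):
    """Return the vector an agent broadcasts to its neighbors."""
    if not is_byzantine:
        return x_hat.copy()                       # regular agent: true estimate
    # Byzantine agent: add a zero-mean Gaussian perturbation with covariance Sigma
    delta = rng.multivariate_normal(np.zeros(x_hat.size), Sigma)
    return x_hat + delta

rng = np.random.default_rng(0)                    # fixed seed for repeatability
x_hat = np.array([1.0, -2.0, 0.5])
Sigma = 0.5 * np.eye(3)                           # hypothetical attack covariance
regular_msg = shared_estimate(x_hat, False, Sigma, rng)
byzantine_msg = shared_estimate(x_hat, True, Sigma, rng)
```

Because the perturbation has zero mean, the falsified message is unbiased, which is what makes the attack hard to detect from a single received vector.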

III Byzantine-Resilient Consensus-Based Distributed Kalman Filter

By applying partial sharing of information to state estimates in (2), we reduce the information flow between agents at a given instant while maintaining the advantages of cooperation [42, 43]. In particular, each agent only shares a fraction of its state estimate with neighbors rather than the entire vector (i.e., entries of , with ). Although partial-sharing was originally introduced to reduce inter-agent communication overhead, we show that adopting this idea in the current setting improves robustness to Byzantine attacks.

The state estimate entry selection process is performed at each agent using a selection matrix of size , whose main diagonal contains ones and zeros. The ones on the main diagonal of specify the entries of the state estimate to be shared with neighbors. Selecting entries from can either be done stochastically or sequentially, as in [42] and [43]. In this paper, we use uncoordinated partial-sharing, which is a special case of stochastic partial-sharing [42]. In uncoordinated partial-sharing, each agent is initialized with random selection matrices. The selection matrix at the current time instant, i.e., , can be obtained by performing right-circular shift operations on the main diagonal of the selection matrix used in the previous time instant. In other words, if contains the main diagonal elements of at the current instant, then . Then the selection matrix at the current instant can be constructed as . This allows each agent to share only the initial selection matrix with its neighbors, and maintain a record of the indices of parameters shared without needing any additional mechanisms. As a result, the frequency of each entry of the state estimate being shared is equal to .
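The circular-shift construction of the selection matrices can be sketched as below. The number of shift positions per instant is not recoverable from the text here, so the sketch assumes a shift of one position by default; the names `initial_selection`, `next_selection`, `m` (state length), and `l` (number of shared entries) are introduced for illustration only.

```python
import numpy as np

def initial_selection(m, l, rng):
    """Random 0/1 diagonal with exactly l ones (entries to be shared)."""
    d = np.zeros(m, dtype=int)
    d[rng.choice(m, size=l, replace=False)] = 1
    return d

def next_selection(d, shift=1):
    """Right-circular shift of the diagonal; shift=1 is an assumption."""
    return np.roll(d, shift)

rng = np.random.default_rng(1)
m, l = 8, 2
d = initial_selection(m, l, rng)
counts = np.zeros(m, dtype=int)
for _ in range(m):                 # one full cycle of m instants
    counts += d                    # record which entries are shared now
    S = np.diag(d)                 # selection matrix at this instant
    d = next_selection(d)
print(counts)                      # every entry is shared l times per cycle
```

Over one full cycle of `m` instants with a one-position shift, every entry is shared exactly `l` times, which matches the stated sharing frequency of each entry.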

Due to partial sharing, every agent receives a fraction of the perturbed state estimate vectors from its neighbors, i.e., . Thus, unlike (3), the received information here must be compensated to fill in the missing elements. At each agent , the missing values from neighbors are replaced by . Subsequently, the state update at agent , as in (2), is modified as follows.

| (4) | ||||
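The compensation step can be illustrated as follows. The exact replacement expression is missing from the text here, so this sketch assumes the rule commonly used in partial-sharing schemes: entries that a neighbor did not send are filled with the receiving agent's own corresponding entries. The function name and the numbers are hypothetical.

```python
import numpy as np

def fill_missing(received, mask, own_estimate):
    """Combine a partially received neighbor vector with the agent's own entries.

    mask[k] == 1 means entry k was received from the neighbor;
    mask[k] == 0 means entry k is missing and is replaced by own_estimate[k]
    (an assumed substitution rule).
    """
    return mask * received + (1 - mask) * own_estimate

mask = np.array([1, 0, 1, 0])                       # diagonal of the neighbor's selection matrix
neighbor_msg = np.array([10.0, 20.0, 30.0, 40.0])   # only masked entries are actually sent
own = np.array([1.0, 2.0, 3.0, 4.0])
print(fill_missing(neighbor_msg, mask, own))
```

Under this rule the consensus disagreement is zero on the missing entries, so a perturbation can only enter through the entries that were actually shared.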

At each agent , the Kalman gain is obtained by minimizing the trace of the estimation error covariance with the estimation error evolving as

| (5) | ||||

where

| (6) |

Accordingly, using (5), we can obtain the evolution of the estimation error covariance at agent as follows,

| (7) | ||||

where and

Similarly, the cross-terms of the error covariance, i.e., , evolve as

with

Differentiating the trace of (7) with respect to gives

with .

The distributed Kalman filter based on partial sharing is summarized by (4)–(7). We see that the local covariance update in (7) requires access to cross-term covariance matrices of neighbors, resulting in considerable communication overhead. To reduce the communication overhead, for sufficiently small gain values, i.e., , we can ignore the term in (7) and the last term of in (6) [46], i.e., we have

| (8) | ||||

with . Accordingly, the optimal Kalman gain reduces to

| (9) |

With the above approximations, we obtain a distributed consensus-based Kalman filter, albeit suboptimal [46, 49], that only requires local variables in the error covariance update at each agent. It is worth noting that the last term in (8) is only used to characterize the impact of the perturbation covariances, and since the attack is stealthy from the perspective of an agent, it is excluded from the filtering algorithm. As a result, in addition to the initial selection matrix , each agent shares a fraction of the perturbed state estimate, i.e., , with its neighbors at each instant. The proposed BR-CDF algorithm with reduced communication is summarized in Algorithm 1. We shall see that the BR-CDF in Algorithm 1 performs close to the solution that shares all necessary variables.

| (10) | ||||

| (11) |

IV Stability and Performance Analysis

This section provides a detailed stability analysis of the BR-CDF algorithm. For this purpose, we make the following assumption:

Assumption 1:

The selection matrix for all is independent of any other data, and the selection matrices and for all and are independent.

Our main result on the stability of the proposed BR-CDF algorithm is summarized by the following theorem.

Theorem 1.

Consider the BR-CDF in Algorithm 1 with consensus gain , where . Then, for a sufficiently small , the error dynamics of the BR-CDF are globally asymptotically stable and all local estimators asymptotically reach a consensus on state estimates, i.e., .

Proof.

The proof begins by analyzing the dynamics of the estimation error in the absence of noise [46]. Given the consensus-based Kalman approach in (10), the estimation error dynamics, without noise, at each agent , can be written as

| (12) |

where . Our goal is to determine such that the estimation error dynamics in (12) are stable. Following the approach in [46], we use

| (13) |

as a candidate Lyapunov function for (12) where the network-wide stacked error is . We can then express as

| (14) |

By substituting (9) into (11) and by employing the matrix inversion lemma, we have

| (15) |

where . Subsequently, replacing (15), without noise, and (12) into (14) yields

| (16) |

Furthermore, by substituting (9) into and employing the matrix inversion lemma, we obtain . Consequently, by replacing into (16) and after some algebraic manipulations, we obtain

| (17) | ||||

With an appropriate choice of consensus gain, i.e., , and a proper selection of , all terms of (17) become negative semidefinite. Subsequently, we have

| (18) | ||||

By defining , (18) becomes

| (19) | ||||

where is the network Laplacian and

For an appropriate choice of , we have , implying that . Consequently, is asymptotically stable. Furthermore, since for all , all estimators asymptotically reach a consensus on state estimates as .

In steady-state, i.e., , we have , . By applying statistical expectation with respect to , we will have the following condition for the stability of the algorithm:

| (20) |

The expectation term can be simplified as

| (21) | ||||

Following the approach in [42, Appendix B] and [45], we can show that with , and we have

Subsequently, to satisfy (20), the bound for is determined as

| (22) |

Thus, if is chosen as in (22), we can ensure that all agents reach a consensus on state estimates asymptotically, which completes the proof. ∎

V Resilience of the BR-CDF to Byzantine Attacks

This section investigates the robustness of the BR-CDF in Algorithm 1 to data falsification attacks. We assume that Byzantine agents start perturbing the information once the network reaches steady-state, i.e., . We further assume that the attack remains stealthy from the perspective of agents; thus, the consensus gain remains fixed for .

In steady-state, the error covariance matrix in (8) satisfies

| (23) | ||||

where . Defining , , , , and , the network-wide version of (23) is given by

| (24) | |||

where is the network-wide coordinated attack covariance. Under Assumption 1, we have

| (25) | |||

and using the result of (21), we finally have

| (26) | ||||

The last term in (26) describes the impact of the coordinated Byzantine attack on the error covariance matrix that is scaled by . Thus, defining the steady-state network-wide MSE (NMSE) as

| (27) |

we see that partial sharing, i.e., , results in lower steady-state NMSE compared to the case when the full state is shared, i.e., , which gives enhanced robustness against coordinated Byzantine attacks.
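The attenuation effect of partial sharing can be checked numerically on a simplified quantity. The sketch below does not reproduce (26); it only illustrates, under the assumption of a one-position circular shift of the selection diagonal, that the perturbation energy passing through a selection matrix with `l` ones out of `m` entries averages to the fraction `l/m` of the total perturbation energy. All names and values are hypothetical.

```python
import numpy as np

def mean_passed_energy(Sigma, d0):
    """Average of tr(S Sigma S) over one full cycle of circular shifts of d0."""
    m = d0.size
    total = 0.0
    for k in range(m):
        S = np.diag(np.roll(d0, k))        # selection matrix after k shifts
        total += np.trace(S @ Sigma @ S)   # perturbation energy that is shared
    return total / m

rng = np.random.default_rng(2)
B = rng.standard_normal((6, 6))
Sigma = B @ B.T                            # random positive semidefinite covariance
d0 = np.array([1, 1, 0, 0, 0, 0])          # l = 2 shared entries out of m = 6
ratio = mean_passed_energy(Sigma, d0) / np.trace(Sigma)
print(ratio)                               # equals l / m up to rounding
```

In the filter itself this shared energy is further shaped by the consensus gain and the error dynamics, so the sketch should be read as intuition for the scaling rather than as the NMSE expression.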

VI Coordinated Byzantine Attack Design

To analyze the worst-case performance of the BR-CDF algorithm, we consider a scenario where Byzantine agents design a coordinated attack to maximize the NMSE. Based on the attack model in Section II and the error covariance of the BR-CDF algorithm in (7), Byzantine agents have the following two levers to design their coordinated attack:

- The design of perturbation covariance matrix , modeled as the covariance of zero-mean Gaussian sequences.

- The choice of selection matrices that impacts the sequence of information fractions that Byzantine agents share at the beginning of the attack, i.e., for .

We ensure that the attack remains stealthy from the perspective of regular agents by setting an upper bound on the energy of the perturbation sequences, i.e., . Assuming Byzantines start perturbing information once agents reach steady-state, i.e., , we derive an expression for the NMSE pertaining to the estimator in (4). The network-wide evolution of the estimation error of the BR-CDF algorithm, whose per-agent form is given in (5), can be written as

| (28) |

where ,

As a result, the network-wide error covariance matrix , including cross-terms of the error covariance, is given by

| (29) |

where . In (29), the last term is due to the injected noise and is given by

| (30) |

which, compared to the Byzantine-free case, degrades the NMSE. Considering the NMSE in (27), we define two optimization problems to find the optimal coordinated Byzantine attacks by designing the partial-sharing selection matrices at and attack covariance matrices of Byzantine agents.

The last term of the estimation error covariance , as in (30), is the only term of (29) that depends on the attack; thus, maximizing the trace of the estimation error covariance is equivalent to maximizing the trace of its last term [50]. The last term of in (29) also depends on the selection matrix and given the attack covariance , we can show that

| (31) |

where . Thus, the optimization problem that maximizes the steady-state NMSE can be stated as

| (32) | ||||

| s. t. | ||||

where the resulting solution for determines the and the first two constraints restrict the selection matrix to be diagonal with or elements on the main diagonal. The last constraint enforces that only elements of the state vector are shared with neighbors at each given instant. We relax the non-convex Boolean constraint on the elements of and rewrite the optimization problem as

| (33) | ||||

| s. t. | ||||

The objective function in (33) can be further simplified as

| (34) | ||||

which still contains non-convex quadratic terms. To overcome this problem, we employ the block coordinate descent (BCD) algorithm where each Byzantine agent , given the selection matrix of other Byzantines, optimizes its own selection matrix. The BCD algorithm is iterated for iterations and at each iteration , agent employs the selection matrix of other Byzantine agents from the previous iteration, i.e. .

Hence, the optimization problem in (33) can be solved by employing the BCD method, where at each agent and BCD iteration , the optimization problem is modeled as

| (35) | ||||||

| s. t. | ||||||

with the objective function

| (36) | ||||

and as the selection matrix of Byzantine agent at the former BCD iteration.
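The block-wise update pattern described above can be sketched generically. This is not the paper's Algorithm 2: the objective is passed in as a hypothetical callable, and each per-agent subproblem is solved by exhaustive search over Boolean selection vectors instead of the relaxed problem, which is only practical for small state dimensions. As in the text, every agent uses the other agents' selections from the previous iteration.

```python
from itertools import combinations
import numpy as np

def bcd_selection(objective, num_byz, m, l, iters=5):
    """Block coordinate ascent over 0/1 selection vectors with l ones each.

    objective(list_of_vectors) -> float is supplied by the caller (hypothetical).
    """
    # Start with the first l entries selected at every Byzantine agent.
    S = [np.array([1] * l + [0] * (m - l)) for _ in range(num_byz)]
    # All feasible Boolean diagonals (brute force; small m only).
    candidates = [np.isin(np.arange(m), c).astype(int)
                  for c in combinations(range(m), l)]
    for _ in range(iters):
        S_prev = list(S)                       # selections from the former iteration
        for b in range(num_byz):
            # Optimize agent b's block with all other blocks held fixed.
            S[b] = max(candidates,
                       key=lambda d: objective(S_prev[:b] + [d] + S_prev[b + 1:]))
    return S

# Toy separable objective with hypothetical weights, used only as an example.
w = np.array([[1.0, 5.0, 2.0, 4.0], [3.0, 0.0, 9.0, 1.0]])
toy = lambda S: sum(float(w[b] @ S[b]) for b in range(len(S)))
best = bcd_selection(toy, num_byz=2, m=4, l=2)
print(best)
```

For a separable toy objective the search converges after one pass; with coupled objectives several iterations may be needed, and only a local optimum is guaranteed.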

Algorithm 2 summarizes the BCD algorithm used to solve the optimization problem in (35). Next, we investigate how optimizing the perturbation covariance matrix impacts the NMSE.

Given the selection matrices at the beginning of the attack, i.e., for , Byzantine agents can maximize the steady-state NMSE by cooperatively designing their attack covariances in the following optimization problem

| (37) | ||||

| s. t. | ||||

where and is a vector designed to preserve the structure of the perturbation covariance. We introduce the Boolean vector where if and otherwise. By employing , the block matrices of the that correspond to regular agents are all set to zero. The first constraint in (37) guarantees that the designed attack covariance is positive semidefinite and the last constraint is related to stealthiness. The energy of the Byzantine noise sequences is assumed to be limited as to maintain the attack stealthiness.

Remark 1.

The optimization problem in (37) is a semidefinite programming (SDP) problem that can be efficiently solved by interior-point methods.
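For intuition, a simplified special case of such a problem has a closed-form solution. The sketch below assumes a linear objective tr(M Σ) with a symmetric weight matrix `M` (a hypothetical stand-in for how the attack covariance enters the NMSE), a positive semidefinite constraint, and a trace budget `eta`; it ignores the structural constraints that zero out the blocks of regular agents. Under these assumptions the optimum places the whole energy budget on the dominant eigenvector of `M`.

```python
import numpy as np

def max_trace_attack(M, eta):
    """Maximize tr(M @ Sigma) subject to Sigma PSD and tr(Sigma) <= eta."""
    M = 0.5 * (M + M.T)                   # symmetrize for a well-defined eigenproblem
    w, V = np.linalg.eigh(M)              # eigenvalues in ascending order
    if w[-1] <= 0:
        return np.zeros_like(M)           # no direction increases the objective
    v = V[:, -1]                          # eigenvector of the largest eigenvalue
    return eta * np.outer(v, v)           # rank-one covariance using the full budget

M = np.diag([1.0, 3.0, 2.0])              # hypothetical weight matrix
Sigma_opt = max_trace_attack(M, eta=2.0)
print(np.trace(M @ Sigma_opt))            # eta times the largest eigenvalue of M
```

The general problem with block-structure constraints does not reduce to this eigenvector solution and would require an SDP solver.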

VII Simulation Results

In this section, we demonstrate the robustness of the BR-CDF algorithm to Byzantine attacks. For this purpose, we consider a target tracking problem with the state vector length of , described by a linear model

We considered a randomly generated undirected connected network with agents, as shown in Fig. 2. At each agent , the state noise covariance is and the local observation is given by

In addition, at each agent , we considered the observation noise covariance as , where . The average NMSE of agents is considered as a performance metric, i.e.,

| (38) |

with being the steady-state error covariance matrix of agent in (23). The simulation results presented in the following are obtained by averaging over 100 independent experiments.
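The performance metric can be computed in two equivalent ways, sketched below. The exact expression of (38) is not recoverable from the text here, so the sketch assumes the usual definition: the average over agents of the trace of each agent's error covariance, or, empirically, the average squared estimation-error norm over agents and independent runs. Function names and array shapes are assumptions.

```python
import numpy as np

def network_mse(error_covs):
    """Average over agents of the trace of each agent's error covariance."""
    return float(np.mean([np.trace(P) for P in error_covs]))

def monte_carlo_mse(errors):
    """Empirical MSE at one time instant.

    errors: array of shape (runs, agents, m) holding estimation error vectors.
    """
    return float(np.mean(np.sum(errors ** 2, axis=2)))

# Toy check with hypothetical values.
print(network_mse([np.eye(2), 2.0 * np.eye(2)]))
print(monte_carlo_mse(np.ones((100, 3, 2))))
```

The first function suits theoretical covariances such as those in (23), while the second matches how learning curves are produced from repeated experiments.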

We simulated the proposed BR-CDF algorithm for different values of , e.g., 2, 4, 6, and 8 (i.e., , , and information sharing). Fig. 3 shows the corresponding learning curves, i.e., MSE versus time instant , when no attacks occur in the network. We see that the MSE increases as the amount of shared information decreases; however, the difference is negligible in this experiment.

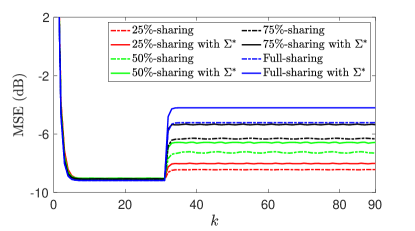

Next, we examined the robustness of the BR-CDF algorithm to Byzantine attacks. After the network has reached convergence, the Byzantine agents launch an attack at . The Byzantine agents are chosen as the nodes with the highest degree in the network graph and the energy of the attack sequences is restricted with parameter . We then compared the accuracy of the proposed suboptimal BR-CDF in Algorithm 1 to the solution of the BR-CDF that shares all necessary variables. Fig. 4 illustrates their corresponding learning curves for different values of . We observe that the suboptimal solution performs close to the solution that shares all necessary variables. Furthermore, the proposed algorithms provide robustness against Byzantine attacks since sharing less information results in lower MSE.

In Fig. 5, in order to observe the fluctuation caused by the selection matrices, we plot the MSE in (38) and with in (8). (Footnote 1: The difference between and MSE is that the does not include the statistical expectation with respect to the selection matrices.) Thus, we can examine the accuracy of our theoretical finding to compute the expected value of the error covariance with respect to the selection matrices in (23). The comparable performance of the MSE and in Fig. 5 demonstrates that simulation results match theoretical findings.

To solve the optimization problem in (35), we performed the simulation by the BCD algorithm with iterations and designed the selection matrices at . We can see from Fig. 6 that the designed selection matrix increases the network MSE. Also, it can be seen that designing the selection matrices has a higher impact on the network performance when a smaller fraction is shared.

By solving the optimization problem in (37), we examine the impact of optimizing the attack covariance compared to a random attack covariance. To this end, we fixed the constraint on the energy of the perturbation sequences, i.e., . Fig. 7 shows that optimizing the perturbation covariance increases the MSE, while using partial sharing of information enhances robustness to Byzantine attacks by restricting the growth in MSE. In other words, as we share more information with neighbors, the impact of optimizing the perturbation covariance matrix increases.

For different values of , Fig. 8 plots the versus time index for optimized and random selection of the attack covariance. It can be seen that when less information is shared, the sensitivity to perturbation sequences with optimized covariance increases, resulting in high levels of fluctuation in the . In addition, Figs. 6 and 7 show that the optimized selection matrices have a greater impact when less information is shared, e.g., and -sharing, while optimal attack covariance has a higher impact when larger fractions of information are shared, e.g., and full-sharing.

In order to analyze the robustness of the proposed BR-CDF algorithm to the number of Byzantine agents, Fig. 9 plots the MSE versus the percentage of Byzantine agents in the network. As expected, we see that as the percentage of Byzantine agents increases, the MSE grows; however, partial sharing of information can significantly improve the resilience to Byzantine attacks, as illustrated by obtaining the lower MSE. In addition, Fig. 10 illustrates the MSE versus the trace of the attack covariance in order to assess the robustness of the BR-CDF algorithm to perturbation sequences. It can be seen that partial sharing of information improves robustness to injected noise by obtaining lower MSE.

VIII Conclusion

This paper proposed a Byzantine-resilient consensus-based distributed filter (BR-CDF) that allows agents to exchange a fraction of their information at each time instant. We characterized the performance and convergence of the BR-CDF and investigated the impact of coordinated data falsification attacks. We showed that partial sharing of information provides robustness against Byzantine attacks and also reduces the communication load among agents by sharing a smaller fraction of the states at each time instant. Furthermore, we analyzed the worst-case scenario of a data falsification attack where Byzantine agents cooperate on designing the covariance of their falsification data or the sequence of their shared fractions. Finally, the numerical results verified the robustness of the proposed BR-CDF against Byzantine attacks and corroborated the theoretical findings.

References

- [1] A. Humayed, J. Lin, F. Li, and B. Luo, “Cyber-physical systems security—a survey,” IEEE Internet Things J., vol. 4, no. 6, pp. 1802–1831, Dec. 2017.

- [2] D. Ding, Q.-L. Han, X. Ge, and J. Wang, “Secure state estimation and control of cyber-physical systems: A survey,” IEEE Trans. Syst., Man, Cybern. Syst., vol. 51, no. 1, pp. 176–190, Jan. 2021.

- [3] A. Farraj, E. Hammad, and D. Kundur, “On the impact of cyber attacks on data integrity in storage-based transient stability control,” IEEE Trans. Ind. Informat., vol. 13, no. 6, pp. 3322–3333, Dec. 2017.

- [4] L. Hu, Z. Wang, Q.-L. Han, and X. Liu, “State estimation under false data injection attacks: Security analysis and system protection,” Automatica, vol. 87, pp. 176–183, Jan. 2018.

- [5] L. J. Rodriguez, N. H. Tran, T. Q. Duong, T. Le-Ngoc, M. Elkashlan, and S. Shetty, “Physical layer security in wireless cooperative relay networks: State of the art and beyond,” IEEE Commun. Mag., vol. 53, no. 12, pp. 32–39, Dec. 2015.

- [6] N. Forti, G. Battistelli, L. Chisci, S. Li, B. Wang, and B. Sinopoli, “Distributed joint attack detection and secure state estimation,” IEEE Trans. Signal Inf. Process. Netw., vol. 4, no. 1, pp. 96–110, Mar. 2018.

- [7] M. S. Rahman, M. A. Mahmud, A. M. T. Oo, and H. R. Pota, “Multi-agent approach for enhancing security of protection schemes in cyber-physical energy systems,” IEEE Trans. Ind. Informat., vol. 13, no. 2, pp. 436–447, Apr. 2017.

- [8] H. Fawzi, P. Tabuada, and S. Diggavi, “Secure estimation and control for cyber-physical systems under adversarial attacks,” IEEE Trans. Autom. Control, vol. 59, no. 6, pp. 1454–1467, Jun. 2014.

- [9] A. Moradi, N. K. D. Venkategowda, S. P. Talebi, and S. Werner, “Privacy-preserving distributed Kalman filtering,” IEEE Trans. Signal Process., pp. 1–16, 2022.

- [10] A. Moradi, N. K. Venkategowda, S. P. Talebi, and S. Werner, “Distributed Kalman filtering with privacy against honest-but-curious adversaries,” in Proc. 55th IEEE Asilomar Conf. Signals, Syst., Comput., 2021, pp. 790–794.

- [11] A. Moradi, N. K. D. Venkategowda, S. P. Talebi, and S. Werner, “Securing the distributed Kalman filter against curious agents,” in Proc. 24th IEEE Int. Conf. Inf. Fusion, 2021, pp. 1–7.

- [12] S. Liang, J. Lam, and H. Lin, “Secure estimation with privacy protection,” IEEE Trans. Cybern., pp. 1–15, 2022.

- [13] D. Ding, Q.-L. Han, Z. Wang, and X. Ge, “A survey on model-based distributed control and filtering for industrial cyber-physical systems,” IEEE Trans. Ind. Informat., vol. 15, no. 5, pp. 2483–2499, May 2019.

- [14] C. Zhao, J. He, and J. Chen, “Resilient consensus with mobile detectors against malicious attacks,” IEEE Trans. Signal Inf. Process. Netw., vol. 4, no. 1, pp. 60–69, Mar. 2018.

- [15] Y. Guan and X. Ge, “Distributed attack detection and secure estimation of networked cyber-physical systems against false data injection attacks and jamming attacks,” IEEE Trans. Signal Inf. Process. Netw., vol. 4, no. 1, pp. 48–59, Mar. 2018.

- [16] R. K. Chang, “Defending against flooding-based distributed denial-of-service attacks: A tutorial,” IEEE Commun. Mag., vol. 40, no. 10, pp. 42–51, Oct. 2002.

- [17] A. Vempaty, L. Tong, and P. K. Varshney, “Distributed inference with Byzantine data: State-of-the-art review on data falsification attacks,” IEEE Signal Process. Mag., vol. 30, no. 5, pp. 65–75, Aug. 2013.

- [18] A. Moradi, N. K. Venkategowda, and S. Werner, “Total variation-based distributed Kalman filtering for resiliency against Byzantines,” IEEE Sensors J., vol. 23, no. 4, pp. 4228–4238, Feb. 2023.

- [19] Y. Mo and B. Sinopoli, “On the performance degradation of cyber-physical systems under stealthy integrity attacks,” IEEE Trans. Autom. Control, vol. 61, no. 9, pp. 2618–2624, Sept. 2016.

- [20] R. Deng, G. Xiao, R. Lu, H. Liang, and A. V. Vasilakos, “False data injection on state estimation in power systems—attacks, impacts, and defense: A survey,” IEEE Trans. Ind. Informat., vol. 13, no. 2, pp. 411–423, Apr. 2017.

- [21] F. Li and Y. Tang, “False data injection attack for cyber-physical systems with resource constraint,” IEEE Trans. Cybern., vol. 50, no. 2, pp. 729–738, Feb. 2020.

- [22] P. Cheng, Z. Yang, J. Chen, Y. Qi, and L. Shi, “An event-based stealthy attack on remote state estimation,” IEEE Trans. Autom. Control, vol. 65, no. 10, pp. 4348–4355, Oct. 2020.

- [23] P. Srikantha, J. Liu, and J. Samarabandu, “A novel distributed and stealthy attack on active distribution networks and a mitigation strategy,” IEEE Trans. Ind. Informat., vol. 16, no. 2, pp. 823–831, Feb. 2020.

- [24] Z. Guo, D. Shi, K. H. Johansson, and L. Shi, “Optimal linear cyber-attack on remote state estimation,” IEEE Trans. Control Netw. Syst., vol. 4, no. 1, pp. 4–13, Mar. 2017.

- [25] Y. Ni, Z. Guo, Y. Mo, and L. Shi, “On the performance analysis of reset attack in cyber-physical systems,” IEEE Trans. Autom. Control, vol. 65, no. 1, pp. 419–425, Jan. 2020.

- [26] A. Moradi, N. K. Venkategowda, and S. Werner, “Coordinated data-falsification attacks in consensus-based distributed Kalman filtering,” in Proc. 8th IEEE Int. Workshop Comput. Advances Multi-Sensor Adaptive Process., 2019, pp. 495–499.

- [27] M. Choraria, A. Chattopadhyay, U. Mitra, and E. G. Ström, “Design of false data injection attack on distributed process estimation,” IEEE Trans. Inf. Forensics Security, vol. 17, pp. 670–683, Jan. 2022.

- [28] M. N. Kurt, Y. Yılmaz, and X. Wang, “Distributed quickest detection of cyber-attacks in smart grid,” IEEE Trans. Inf. Forensics Security, vol. 13, no. 8, pp. 2015–2030, Aug. 2018.

- [29] M. N. Kurt, Y. Yılmaz, and X. Wang, “Real-time detection of hybrid and stealthy cyber-attacks in smart grid,” IEEE Trans. Inf. Forensics Security, vol. 14, no. 2, pp. 498–513, Feb. 2019.

- [30] M. Aktukmak, Y. Yilmaz, and I. Uysal, “Sequential attack detection in recommender systems,” IEEE Trans. Inf. Forensics Security, vol. 16, pp. 3285–3298, Apr. 2021.

- [31] Y. Chen, S. Kar, and J. M. F. Moura, “Resilient distributed estimation through adversary detection,” IEEE Trans. Signal Process., vol. 66, no. 9, pp. 2455–2469, May 2018.

- [32] Y. Li and T. Chen, “Stochastic detector against linear deception attacks on remote state estimation,” in Proc. 55th IEEE Conf. Decis. Control, 2016, pp. 6291–6296.

- [33] Y. Li, L. Shi, and T. Chen, “Detection against linear deception attacks on multi-sensor remote state estimation,” IEEE Trans. Control Netw. Syst., vol. 5, no. 3, pp. 846–856, Sept. 2018.

- [34] W. Yang, Y. Zhang, G. Chen, C. Yang, and L. Shi, “Distributed filtering under false data injection attacks,” Elsevier Automatica, vol. 102, pp. 34–44, 2019.

- [35] Y. Chen, S. Kar, and J. M. F. Moura, “Resilient distributed estimation: Sensor attacks,” IEEE Trans. Autom. Control, vol. 64, no. 9, pp. 3772–3779, Sept. 2019.

- [36] A. Barboni, H. Rezaee, F. Boem, and T. Parisini, “Detection of covert cyber-attacks in interconnected systems: A distributed model-based approach,” IEEE Trans. Autom. Control, vol. 65, no. 9, pp. 3728–3741, Sept. 2020.

- [37] J. Shang, M. Chen, and T. Chen, “Optimal linear encryption against stealthy attacks on remote state estimation,” IEEE Trans. Autom. Control, vol. 66, no. 8, pp. 3592–3607, Aug. 2021.

- [38] L. Su and S. Shahrampour, “Finite-time guarantees for Byzantine-resilient distributed state estimation with noisy measurements,” IEEE Trans. Autom. Control, vol. 65, no. 9, pp. 3758–3771, Sept. 2020.

- [39] Y. Chen, S. Kar, and J. M. F. Moura, “Resilient distributed parameter estimation with heterogeneous data,” IEEE Trans. Signal Process., vol. 67, no. 19, pp. 4918–4933, Oct. 2019.

- [40] J. G. Lee, J. Kim, and H. Shim, “Fully distributed resilient state estimation based on distributed median solver,” IEEE Trans. Autom. Control, vol. 65, no. 9, pp. 3935–3942, Sept. 2020.

- [41] D. Feng, C. Jiang, G. Lim, L. J. Cimini, G. Feng, and G. Y. Li, “A survey of energy-efficient wireless communications,” IEEE Commun. Surveys Tuts., vol. 15, no. 1, pp. 167–178, First Quarter 2013.

- [42] R. Arablouei, S. Werner, Y.-F. Huang, and K. Doğançay, “Distributed least mean-square estimation with partial diffusion,” IEEE Trans. Signal Process., vol. 62, no. 2, pp. 472–484, Jan. 2014.

- [43] R. Arablouei, K. Doğançay, S. Werner, and Y.-F. Huang, “Adaptive distributed estimation based on recursive least-squares and partial diffusion,” IEEE Trans. Signal Process., vol. 62, no. 14, pp. 3510–3522, Jul. 2014.

- [44] V. C. Gogineni, A. Moradi, N. K. Venkategowda, and S. Werner, “Communication-efficient and privacy-aware distributed LMS algorithm,” in Proc. 25th IEEE Int. Conf. Inf. Fusion, 2022, pp. 1–6.

- [45] V. C. Gogineni, S. Werner, Y.-F. Huang, and A. Kuh, “Communication-efficient online federated learning strategies for kernel regression,” IEEE Internet Things J., pp. 1–1, 2022.

- [46] R. Olfati-Saber, “Kalman-consensus filter: Optimality, stability, and performance,” in Proc. 48th IEEE Conf. Decis. Control, 2009, pp. 7036–7042.

- [47] Y. Chen, S. Kar, and J. M. F. Moura, “Optimal attack strategies subject to detection constraints against cyber-physical systems,” IEEE Trans. Control Netw. Syst., vol. 5, no. 3, pp. 1157–1168, Sept. 2018.

- [48] C.-Z. Bai, V. Gupta, and F. Pasqualetti, “On Kalman filtering with compromised sensors: Attack stealthiness and performance bounds,” IEEE Trans. Autom. Control, vol. 62, no. 12, pp. 6641–6648, Dec. 2017.

- [49] R. Olfati-Saber, “Distributed Kalman filtering for sensor networks,” in Proc. 46th IEEE Conf. Decis. Control, 2007, pp. 5492–5498.

- [50] Z. Guo, D. Shi, K. H. Johansson, and L. Shi, “Worst-case stealthy innovation-based linear attack on remote state estimation,” Elsevier Automatica, vol. 89, pp. 117–124, Mar. 2018.