Distributed Estimation, Control and Coordination of Quadcopter Swarm Robots

Introduction

In this thesis we are interested in applying distributed estimation, control and optimization techniques to enable a group of quadcopters to fly through openings. The quadcopters are assumed to be equipped with a simulated bearing and distance sensor for localization. Some quadcopters are designated as leaders who carry global position sensors. We assume quadcopters can communicate information with each other. Under these assumptions, the goal of project was achieved by completing the following tasks:

-

1.

Estimate global positions from distance and bearing measurements.

-

2.

Form and maintain desired shapes.

-

3.

Change the scale of the formation.

-

4.

Plan collision free trajectories to pass through openings.

The thesis is organized in the following way to address above challenges: Chapter 1 presented preliminary graph theory that is frequently referenced throughout the thesis. Chapter 2 gave an overview of the system set-up and explains the principle of bearing and distance sensor. Chapter 3 introduced the distributed observer for swarm localization from the bearing and distance sensor measurements. Chapter 4 discussed the distributed control for formation maintaining and chapter 5 proposed a formation scale estimation method for time varying formation scale. Finally Chapter 6 proposed two scalable trajectory optimization algorithms to plan collision-free trajectories through the openings and gave an overview of the constraints.

Chapter 1 Graph Theory

The concepts presented in this chapter are mainly summarized from the book [2].

1.1 Graphs

Undirected graph

An undirected graph is a tuple , where is a node list and is a set of edges of unordered pairs of nodes. An unordered edge is a set of two nodes , and . If , then are called neighbors. Let denote the set of neighbors of .

Directed graph

A directed graph (diagraph) is a tuple , where is a node list and is a set of edges of ordered pairs of nodes. An ordered pair denotes an edge from to . is called in-neighbor of and is called out-neighbor of . Let denote the set of out-neighbor of .

Path

A path of undirected graph is an ordered sequence of nodes such that any pair of consecutive nodes is an edge of the graph.

Directed path

A directed path of a diagraph is an ordered sequence of nodes such that any pair of consecutive nodes is an edge of the diagraph.

Connectivity

An undirected graph is connected if between any pair of nodes there exist a path. A diagraph is strongly connected if there exists a directed path between any pair of nodes.

Weighted diagraph

A weighted diagraph is a triplet , where is a diagraph and is a strictly positive scalar of an edge . A weighted diagraph is undirected if for any edge , there exist an edge and .

1.2 Adjacency Matrix

Given a weighted diagraph , the adjacency matrix is defined as follows:

| (1.1) |

The weighted out-degree matrix are defined by:

| (1.2) |

1.3 Laplacian Matrix

The Laplacian matrix of the weighted diagraph is defined as:

| (1.3) |

and

| (1.4) |

If is undirected,

| (1.5) |

and

| (1.6) |

For an undirected graph , is symmetric. is a simple eigenvalue of and all other eigenvalues of are real and positive:

| (1.7) |

1.4 Incidence Matrix

Given an undirected weighted graph , number the edges of with a unique and assign an arbitrary direction to each edge. The direction of an edge is from to . is called the source node of and is the sink node. Then the incidence matrix B is defined element-wise as

| (1.8) |

The incidence matrix is used to compute the difference between the values of two nodes connected by an edge oriented from to

| (1.9) |

Thus , where . Recall that

| (1.10) |

Then the Laplacian matrix is closely related to the incidence matrix through:

| (1.11) |

The incidence matrix operates on to compute the difference between and of an edge . The difference is then weighted by a diagonal element of the diagonal matrix . Finally picks and sums the weighted differences of all edges connecting the node . The picking and summation properties of helps us to find an appropriate observer gain matrix in a later discuss about distributed observer design. Note that is symmetric because the weighted graph is undirected, i.e., .

Chapter 2 System Setup

2.1 Overview

An overview of the system is shown in Fig. 2.1. The testbed for this project is a nano quadcopter called Crazyflie 2.0. There are five main modules developed on PC in this project. A tracking module tracks the global position of Crazyflie . Then the tracked positions are used for simulating the local distance and bearing sensor measurement of Crazyflie measured by Crazyflie . A preprocessing step follows to transform the simulated local measurement to global measurement from which the distributed localization module can estimate the global position of the Crazyflie. Based on the estimated positions, Crazyflies can apply distributed control to maintain a formation [10] or plan trajectories to accomplish certain tasks [1]. In this chapter we discuss the hardware of the system and three modules: (a) Crazyflies tracking, (b) bearing and distance sensor simulation and (c) the preprocessing of the sensor measurements.

2.2 Hardware

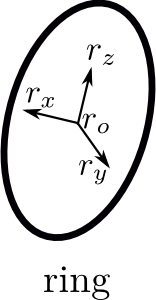

We have in total 4 Crazyflies and 1 Crazyradio for the communication between PC and Crazyflies. Since Crazyflies cannot directly communicate with each other, all communications were completed through PC. We used vicon system to track Crazyflies. On top of each Crazyflie, a infra-red led board is attached to strengthen the signal being observed by the vicon system. We used a hula loop to resemble a ring or opening. Three vicon markers were attached to the ring for tracking its position and orientation. The hardware used in this project are shown in Fig. 2.2.

2.3 Crazyflies Tracking

The vicon system outputs a set of unordered position measurements set of which each element is the position measurement of a Crazyflie. Since the set is unordered, the correspondences of these measurements to the Crazyflies are unknown. In order to select the correct position measurement from the unordered measurements and be more robust to the missing measurements, we applied a kalman filter to track each Crazyflie. The Crazyflie is modelled as a random walk with unknown normally distributed acceleration . Assume the state vector of the Crazyflie is:

| (2.1) |

Then the random walk model of the Crazyflie is:

| (2.2) | ||||

| (2.3) |

where ms is the time interval between two consecutive measurements of vicon system. We applied the standard kalman filter to estimate the state . Let and denote the prior updates of position and velocity of Crazyflie and and denote the measurement updates of Crazyflie . Then the prediction step at time for Crazyflie is:

| (2.4) | ||||

| (2.5) |

Since only the positions are measured, the measurement model is:

| (2.6) | ||||

| (2.7) | ||||

| (2.8) |

Before performing the measurement update step, the position measurement of Crazyflie should be correctly picked from the unordered measurement set . It is selected by searching a measurement that has the smallest euclidean distance to the predicted position :

| (2.9) |

and the measurement update step at time is:

| (2.10) | ||||

| (2.11) |

where and are estimator gains. Thus we are able to track each Crazyflie from the unordered set of positions measurements. The tracked positions will be used for simulating the global position sensors and the bearing and distance sensor on Crazyflies.

2.4 Bearing and Distance Sensor

2.4.1 Sensor Simulation

As shown in Fig. 2.3 the bearing and distance sensor attached on the Crazyflie measures the distance and angle of Crazyflie in its local coordinate .

The local bearing and distance measurement is basically a Spherical coordinate representation. To simulate the bearing and distance sensor from vicon measurements, we first compute the global relative position measurement from the tracked positions and .

| (2.12) |

and we can obtain the local representation of in the local coordinate frame through the attitude matrix that is estimated by Crazyflie on-board attitude estimator:

| (2.13) |

Let , then the local bearing and distance measurement can be found as:

| (2.14) | ||||

| (2.15) | ||||

| (2.16) |

2.4.2 Preprocessing of Sensor Measurements

To estimate the global position from the local bearing and distance of neighbor , a preprocessing step of transforming the Spherical representation to the global Cartesian representations is necessary. This transformation is illustrated in Fig. 2.4. For a distance and bearing measurement measured in Crazyflie ’s coordinate , we first transform it to the local Cartesian representation .

| (2.17) |

Furthermore, the local Cartesian representation can also be transformed to the global Cartesian representation through the attitude matrix .

| (2.18) |

With the global representation of relative position , the distributed observer is able to estimate the global positions of Crazyflies. Note that the attitude matrix plays a crucial role here for the simulation and the preprocessing of the distance and bearing measurements.

2.5 Parameters

The parameters we used for tracking Crazyflie , when the discretization time step ms are:

| (2.19) | |||

| (2.20) |

Chapter 3 Distributed Observer

3.1 Observer Design

We assume that the underlying graph of the measurements is undirected and connected, that is, both Crazyflie and have the relative position measurement , after the preprocessing step. Let the edge of be numbered with a unique and consider all Crazyflies are modeled as double-integrators in -dimensional space (n=3):

| (3.1) |

The system dynamics in state space is:

| (3.2) |

As discussed in the previous chapter, the relative measurements are represented in global Cartesian coordinate . From now on, the prescript and are dropped from for more concise notation.

We are able to express the relative measurements and the global measurements through the incidence matrix and the selection matrix , where the selection matrix is defined as

| (3.3) |

and is a vector of all global measurements:

| (3.4) |

where is the node list of Crazyflies that carry global position sensors. is the th column of the identity matrix . Let and . Then we decompose all measurements into relative and absolute measurements as follows:

| (3.5) | ||||

| (3.6) |

The kronecker product generalizes system to n-dimentional space and is the vector of measurement noise. A typical state observer can be designed as:

| (3.7) | ||||

| (3.8) | ||||

| (3.9) |

Usually a steady state optimal kalman filter will be used to design the gain :

| (3.10) |

However the optimal kalman gain is a dense matrix that will fuse all measurements available in the network to update each Crazyflie ’s state [7]. This is not possible since the measurements and communications are only local and the local state observer of agent updates states only from the neighbors’ relative measurements and from its own global position measurements:

| (3.11) |

where and control the weights of the measurements available to and if Crazyflie does not have global position measurement. and were used to fine-tune the gains for updating positions and velocities. Note that only sums local weighted relative measurements error . As shown in Fig. 3.1(c), can be viewed as a global position measurement of and its difference with the prediction of global position is the measurement error:

| (3.12) |

Let , then is a Laplacian matrix and

| (3.13) |

Let , then

| (3.14) |

Therefore the distributed observer can be written in a compact form as:

| (3.15) |

and the observer gain is:

| (3.16) |

3.2 Stability

To study the stability of above observer, let . Then

| (3.17) |

and

| (3.18) | ||||

| (3.19) | ||||

| (3.20) |

To ensure the observer estimates states properly, the error dynamics matrix needs to be asymptotically stable. The kronecker product only add multiplicities of each eigenvalues and do not affect stability. We then find eigenvalues of to study the stability of by solving .

| (3.21) | ||||

| (3.22) | ||||

| (3.23) | ||||

| (3.24) |

Let be th eigenvalue of , then

| (3.25) |

Then we can solve above equation to find

| (3.26) |

The Routh-Hurwitz stability criterion tells us that if the coefficients of second order polynomial are all positive then the roots are in the left half plane. Therefore if , then is Hurwitz. It is easy to verify that is positive definite and thus :

| (3.27) | ||||

| (3.28) | ||||

| (3.29) |

Since if and only if , and ,

| (3.30) |

Thus and is Hurwitz. The estimation error will asymptotically converge to zero. Thus all the agents are able to estimate positions and velocities, and we are ready to apply distributed control law to control the swarm.

3.3 Parameters

The observer gains to update position and velocity are:

| (3.31) | |||

| (3.32) |

(a) If i measures global position, the observer gains used in this project are:

| (3.33) | |||

| (3.34) |

(b) If i has no global position sensor , then:

| (3.35) | |||

| (3.36) | |||

| (3.37) | |||

| (3.38) |

The parameters selected may not be optimal and need to be further tuned by trial and error in practice.

Chapter 4 Distributed Control

4.1 Controller Design

Let and be the desired global positions and velocities of all Crazyflies. Then the desired relative positions and velocities between and are and . Among the Crazyflies, leaders are able to control the global positions and velocities , whereas followers can only control the relative positions and velocities . To maintain the formation, we propose implementing following formation control law on each Crazyflie [10]:

| (4.1) | ||||

| (4.2) | ||||

| (4.3) | ||||

| (4.4) | ||||

| (4.5) |

where controls relative positions to achieve the desired formations, controls relative velocities for the flocking behavior of the swarm, controls the global position of the swarm, and controls global velocity of the swarm. In addition, , are the control gains for relative positions and velocities to neighbors. , are control gains for the global positions and velocities. Only when Crazyflie is a leader, , .

We assume the underlying graph to control positions and velocities be and respectively, which are both undirected and connected. Let and denote the Laplacian matrices for these two graphs. Let and be defined element-wise as:

| (4.6) | |||

| (4.7) |

and assume the ratio of control gains of position to velocity is constant , :

| (4.8) | ||||

| (4.9) |

Then the distributed control law in a compact form is:

| (4.10) | ||||

| (4.11) | ||||

| (4.12) |

and we obtain the closed loop dynamics:

| (4.13) | ||||

| (4.14) | ||||

| (4.15) | ||||

| (4.16) | ||||

| (4.17) | ||||

| (4.18) |

Let , and , we then obtain the following error dynamics

| (4.19) | ||||

| (4.20) | ||||

| (4.21) |

One must make sure is Hurwitz such that and are bounded given is bounded. is the desired feed-forward input which is unknown.

4.2 Stability

Similar to the observer, we compute the eigenvalues of to test its stability:

| (4.22) | ||||

| (4.23) | ||||

| (4.24) | ||||

| (4.25) | ||||

| (4.26) |

Let be the th eigenvalue of , then

| (4.27) |

Again if then .

| (4.28) | ||||

| (4.29) | ||||

| (4.30) |

where since is a Laplacian matrix which has non-negative eigenvalues and if and only if , . because is a diagonal matrix with non-negative diagonal. Since when ,

| (4.31) |

Thus and the eigenvalue of has negative real part. The error dynamics matrix is asymptotically stable.

4.3 Parameters

If is a leader , the control gains for position and velocity are:

| (4.32) | |||

| (4.33) | |||

| (4.34) | |||

| (4.35) |

If is a follower ,

| (4.36) | |||

| (4.37) | |||

| (4.38) | |||

| (4.39) |

Basically the above parameters mean that the relative position and velocity error to the neighbors are averaged to generate the final control output. This is simple and but may not be optimal. In practice they should be fine-tuned by trial and error.

4.4 Discussion

The distributed control in this project is only suitable for maintaining formations in free space. The collision avoidance was not considered in designing the control law. In literature, Lyapunov functions with infinite potential energy were often proposed to achieve collision avoidance [11]. This is not realistic as physical systems have limited amount of actuation. This motivates us to apply optimization based trajectory generation to deal with complex environments and constraints as will be discussed in chapter 6.

Chapter 5 Formation Scale Estimation

5.1 Estimator Design

The distributed control maintains a constant shape of the formation by controlling the relative positions and velocities. Crazyflies need to know the scale factor of the relative positions and velocities to change the formation scale. In this chapter we discuss how to estimate the scale of the formation.

Assume the center of the formation is and the desired relative position and velocities are with zero mean, i.e., and . Then we are able to write the desired trajectories of the system as:

| (5.1) |

and

| (5.2) |

Sometimes the desired scale of the formation change over time, for example, to avoid collisions. Then the desired relative positions and velocities are not constant anymore and the desired trajectories can be rewritten as:

| (5.3) | ||||

| (5.4) |

where is the time varying scale and is the relative positions when . Since we only allow leaders to have the information of the desired scale , the followers must communicate with neighbors to obtain this scale. One approach is that the scale can be transmitted by leaders to their neighbors who in turn transmit to their neighbors [4]. However each follower needs to know among which of its neighbors there is a path to the leaders. This may not be robust in case the roles of leaders and followers may change and the communication may be lost. A better solution is again using distributed law to fuse all neighbors’ information to estimate the scale regardless of the path to leaders [4]. Assume the underlying communication graph is undirected and connected with weights and is its Laplacian matrix. Let be the list of leaders who know the desired scale and be the scale estimate vector of all Crazyflies. The Crazyflie updates through:

| (5.5) |

and

| (5.6) |

Let be a diagonal matrix and

| (5.7) |

Then we can write the estimation dynamics in compact form:

| (5.8) |

5.2 Stability

Let . If is varying slowly and is bounded the estimation error is bounded from the bounded input bounded state theory:

| (5.9) | ||||

| (5.10) | ||||

| (5.11) |

As before, and if and only if , and . Therefore is exponentially stable.

Note that and . Then

| (5.12) | ||||

| (5.13) |

Substitute and with the estimated scale and , we obtain

| (5.14) | ||||

| (5.15) |

Then we are able to write the approximated desired trajectories as:

| (5.16) |

Thus the approximated desired relative positions and velocities are obtained from the estimated scale factor . Crazyflies then can apply the formation control law to maintain the estimated time varying desired relative positions and velocities.

5.3 Parameters

We again average neighbors’ estimates , to update :

(a) If is a leader, , then

| (5.17) |

(b) If is a follower, , then

| (5.18) | |||

| (5.19) |

Chapter 6 Distributed Trajectory Optimization

6.1 Centralized Trajectory Optimization

Federico proposed a discrete time trajectory optimization method to compute collision-free trajectories in a centralized manner [1]. The optimization problem is:

| (6.3) |

where is the stacked acceleration vectors of all quadcopters. Let and denote the trajectory duration and discretization time step, then . The equality constraint represents initial and final positions and velocities of the quadcopters and the inequality constraint contains the convexified collision avoidance constraints and other physical constraints, e.g., actuator constraints. Since the jerks of quadcopter is related to the magnitude of body rates, we seek for a minimum jerk solution to reduce the aggressiveness of attitude changing during the flight [9]:

| (6.6) |

where is to compute forward difference of to approximate the derivative of acceleration . The above centralized approach scales poorly with number of Crazyflies. There are collision avoidance constraints and optimization variables. In the following sections we discuss how to make the optimization more scalable.

6.2 Initial Solution

To obtain the convexified collision avoidance constraints and the ring constraint , we need either an initial guess or a previous solution. Here we discuss one possible solution as the initial guess.

We first solve the optimization problem 6.6 without to find a straight line solution for each Crazyflie without considering the collision avoidance and ring constraints, which is shown in figure 6.1

The straight line solution is used for finding a proper crossing time to impose the ring constraint. Then a velocity constraint at the position of the crossing time is imposed and the initial solution is refined such that it passes through the center of ring perpendicularly with a reasonable speed.

6.3 Collision Avoidance Constraints

There are two types of collisions considered to solve the optimization problem 6.6:

6.3.1 Collisions between Crazyflie and ring

The ring resembles an opening that only its interior is allowed to pass through as illustrated in Fig. 6.3. Therefore, Crazyflie should avoid hitting on or bypassing the ring. The ring object can be modelled as a convex circle constraint if we know the time to impose it when the Crazyflie crosses the ring. However it is unknown before solving the optimization problem. The following steps estimate a crossing time and convexify the ring constraint using an initial or previous th solution :

-

1.

find

-

2.

let

-

3.

let

-

4.

let

We used a tube and two cones to approximate the ring constraint such that Crazyflie will avoid collisions before and after passing through it. The reason for imposing only two cone constraints is that it makes the constraints only be local near the ring and will not affect the optimization of trajectory far from the ring. This on one hand limits the number of constraints and on the other hand it also reduces the chances of infeasibility when the initial or final position that cannot be optimized are not within the cone, which results in an infeasible problem.

tube

| (6.7) | |||

| (6.8) |

leftCone

| (6.9) | |||

| (6.10) |

rightCone

| (6.11) | |||

| (6.12) |

6.3.2 Collisions between Crazyflies

To avoid collisions between Crazyflies themselves at each , a safe distance margin m between Crazyflies’ centers is enforced. This margin is large enough to tolerate certain control error when Crazyflies are tracking the trajectories. The collision avoidance constraint between Crazyflies and at time is a non-convex constraint:

| (6.13) |

To convexify this constraint, again we need an initial guess or previous solution . Assume the new solutions are and , then the convexified constraint is [1]:

| (6.14) |

Note that this convexified constraint assumes the optimization variables are . We can also optimize only for Crazyflie and for Crazyflie independently [3].

| (6.15) | |||

| (6.16) |

As shown in Fig. 6.5, the convexified constraints Eqn. 6.14 and Eqn. 6.15 are different. Eqn. 6.14 is a relative constraint that the infeasible region can move along the direction of , whereas Eqn. 6.14 is an absolute constraint that the infeasible region is static. Clearly the collision constraints convexified with Eqn. 6.14 have larger feasible regions.

6.4 Distributed Constrained Convex Optimization

After discussing constructing initial solution and convexfication of collision constraints, we begin discussing the method to make the optimization problem more scalable. Recently there is an increasing interest in enabling agents to cooperatively solve the following distributed constrained convex optimization:

| (6.19) |

where each is a local objective function and is a local feasible set. The important observation here is that both the objective function and constraint set are decomposed as individual local objective functions and feasible sets. The distributed projected subgradient algorithm to solve the above problem is [6]:

| (6.20) |

where is the local estimate of at time m, is an entry of adjacency matrix of the underlying communication graph , is the step size of subgradient algorithm at time , and is a subgradient of local objective function at . is the projection of onto , i.e. . The distributed projected subgradient algorithm converges as :

| (6.21) |

under the following assumptions [6]:

-

1.

is nonempty and has nonempty interior.

-

2.

The graph is fixed and strongly connected.

-

3.

, .

-

4.

is doubly stochastic.

-

5.

is compact.

-

6.

and

Remark 1.

The is a local estimate of at time m. The termination condition of the algorithm is that all the local estimates converge sufficiently close to each other, i.e., , and we may assume at most iterations the algorithm can converge.

Remark 2.

There are three operations involved in this algorithm. (a) is a standard distributed averaging step such that and reach consensus as . (b) is a subgradient step to reduce the local objective function value. (c) projects the averaged and subgradient subtracted solution to the local feasible set . The combined three steps allows the local solution to reach consensus with neighbors asymptotically as and cooperatively reduce the value of objective function while satisfying their own local constraint after the projection .

where computes the jerk of Crazyflie , is the initial to final state condition of all Crazyflies, and includes the convexified collision avoidance constraints of Crazyflie with the remaining Crazyflies and other constraints local to , e.g., .

Remark 3.

The assumption 1 that has nonempty interior does not strictly hold for problem 6.24 as the equality constraint is present. However experiments show that the algorithm still converges. One reason may be that all the Crazyflies share the same equality constraint , which does not affect the convergence of algorithm that assumes only inequality constraints exist. The assumption 3 and 4 can be guaranteed by setting , which also simplifies the algorithm design. The assumption 5 is satisfied because of the actuator constraint is compact. Finally in assumption 6, is the step size of subgradient used for decreasing the objective function value. Experiments show that the algorithm converges faster when is set close to 0. This is because the algorithm is solely trying to find a feasible point of without too much perturbation from the operation of subtracting subgradients. Since we are more interested in quickly finding a feasible solution rather than its optimality, we set for fastest consensus rate. Although setting violates assumption 6, experiments demonstrate that the algorithm always converges.

Assuming and , the distributed projected subgradient algorithm 6.20 becomes:

| (6.25) |

Fig. 6.6 illustrates the process of two agents running the algorithm Eqn. 6.25. The solutions and asymptotically converge to the intersection and reach consensus as .

Remark 4.

The significance of distributed projected algorithm Eqn. 6.25 is that (a) the constraint set is decomposed to and distributed to each Crazyflie. Thus the number of constraints for each Crazyflie is small. (b) the algorithm runs in parallel. Compared to the centralized problem 6.6 where the number of constraints of is of order , the number of constraints of of the problem 6.25 is equal to (because there are other Crazyflies for collision avoidance). The optimization may need to be solved times until convergence. As the solving time for convex problem increases quadratically with the number of inequalities, the runtime of problem 6.6 and algorithm 6.25 are of order and .

Remark 5.

Although of Crazyflie contains convexified collision avoidance constraints with the remaining Crazyflies, it does not mean it has to have established communication channels with the rest Crazyflies. Fig. 6.7 illustrates an example that 4 Crazyflies are running the distributed algorithm in parallel. There are 3 convexified collision constraints in any Crazyflie ’s local feasible set . However each Crazyflie is communicating with only 2 Crazyflies. For example, Crazyflie 1 will only receive and at each time m.

Remark 6.

The collision avoidance constraint for any pair of Crazyflies is included in both Crazyflies’ constraint sets. For example in Fig. 6.7, the constraint sets of both Crazyflie 1 and 4 include the collision constraint pair (1,4). This is redundant for the algorithm to converge. It is sufficient to let it be included in one of feasible sets. Nonetheless, this requires a protocol for constraints assignment. For simplicity, the collision constraint for a pair of Crazyflies is included in both feasible sets.

Remark 7.

In order to convexifiy the collision avoidance constraint, before running distributed optimization, the initial solutions must be shared in the network. For the example in Fig. 6.7, Crazyflie 1 needs to know the initial solution of Crazyflie 4 to convexify the collision avoidance constraint between 1 and 4. This is accomplished through the communication path 4-2-1 or 4-3-1. This may cause significant delay when the network is large.

6.5 Distributed Trajectory Optimization

6.5.1 Observations

The distributed projected algorithm 6.25 successfully reduces the problem size from to . However, even the problem size is , it will be quickly become not scalable as grows because:

-

1.

To linearize collision avoidance constraint, the initial solutions of all Crazyflies must be shared in the network, which may be time-consuming when the network is large.

-

2.

The dimension of is equal to . Therefore, the communication time of after each optimization may approximately linearly grow with .

-

3.

The number of collision avoidance constraints of each Crazyflie is equal to . As becomes large, the optimization will inevitably be intractable.

To address above problems, we first make three key observations:

-

1.

For the distributed projected algorithm 6.25, each Crazyflie optimizes the trajectories of the remaining Crazyflies. Let , where is Crazyflie ’s solution of the trajectory of Crazyflie . We may limit Crazyflie to optimize only , to accept other Crazyflies’ optimized trajectories directly without the averaging step, and to treat of other Crazyflies as static obstacles. Because the trajectories of other Crazyflies will not be optimized, Eqn.(6.15)-(6.16) are used for the convexification and the initial-to-final state constraints of other Crazyflies are also excluded from . As a result, the distributed projected algorithm becomes:

(6.26) (6.27) (6.28) where is the initial-to-final state constraint of Crazyflie and has the collision constraints convexified using Eqn. 6.15)- 6.16 and other constraints local to . Note that both and have the same number of constraints but with different dimensionality. Eqn. 6.27 means that Crazyflie ’s solution of neighbor is updated with the solution Crazyflie has optimized itself. Eqn. 6.28 means that to update non-neighbors’ solution , Crazyflie selects the most updated one among the neighbors’ solutions.

-

2.

Due to the presence of collision avoidance constraints, along the resulting optimized trajectories, Crazyflies are separated by a significant amount of space from their neighbors. For those Crazyflies who are not neighbors, they are separated by other Crazyflies in between. Therefore the collision avoidance constraints for non-neighbor pairs are actually not active after all. We then could exclude these inactive constraints pairs to reduce the optimization time. This is achieved by defining an collision active region with radius of such that only those Crazyflies who are within this region are neighbors with active collision avoidance constraints. As shown in Fig. 6.8, there are no neighbors detected at time , whereas at time Crazyflie detected neighbors among themselves because they are sufficiently close to each other.

-

3.

If we are able to exclude inactive constraints for non-neighbors, then constraints involving are excluded from . Crazyflie then only need to accept neighbors’ solution . The algorithm becomes:

(6.29) (6.30)

Remark 8.

Without collision constraints with non-neighbors, the optimization time is significantly reduced and Crazyflie does not need to communicate to Crazyflie any more. Therefore the communication costs are also lowered.

6.5.2 The Algorithm

We summarize above observations in Alg. 1. Fig. 6.8 is an example that illustrates the process of running Alg. 1. At the beginning the flight, each Crazyflie computes an initial trajectory passing through the center of ring as explained in section 6.2 and start tracking the initial trajectory. At each time instant it is detecting neighbors who are within a sphere of radius around it and add their trajectories to its obstacle set. The obstacle sets are used for convexifications of both collision avoidance constraints (Eqn. 6.15- 6.16) and the ring constraints (Eqn. 6.8- 6.12). If there will be collisions between its nominal trajectory and neighbors’ trajectories in future states, will be re-optimized with Eqn. 6.29 such that future collisions are avoided and the new trajectory is shared to their neighbors (Eqn. 6.30). In Fig. 6.8(b) Crazyflie re-optimize and share their trajectories to their neighbors at , whereas Crazyflie does not optimize its trajectory since there are no future collision detected with . Crazyflie only updates the obstacle set from to after having received the re-optimized trajectory . Note that because Crazyflie does not re-optimize its trajectory, the obstacle set of Crazyflie keeps unchanged at .

Alg. 1 allows each Crazyflie to do trajectory optimization in parallel while they are flying. Their neighbors’ trajectories are dynamically added to or removed from the obstacle sets depending on the closeness to their neighbors. Thus the number of constraints and the solving time of each optimization for each Crazyflie is limited as much as possible. Unlike model predictive control, Alg.1 solves optimization only when collisions are detected in the nominal trajectories, but otherwise Crazyflies will only follow the nominal trajectories without recomputing new trajectories. In a word, the trajectory optimization of the swarm was solved in parallel by each Crazyflie with minimal number of collision constraints at only several time instants when collisions were detected.

6.5.3 Convergence

As shown in Fig. 6.9(a), since Crazyflie optimize trajectories independently with respect to , the resulting re-optimized trajectories are only guaranteed to be collision-free with respect to but may not be collision-free between themselves. Crazyflie need another optimization if there exist collisions between and and repeat the optimization until the trajectories are collision-free (assume at most repetitions). Strictly speaking the convergence is not guaranteed [8]. Nonetheless, Alg. 1 reduces the possibility of convergence failure by solving a projection problem in line 12, where the objective of projection problem penalizes the deviation of optimized solution to previous solution. Therefore positions that are already collision-free in previous solutions will be preserved to be collision-free as much as possible. This is illustrated in Fig. 6.9(b). Note that in Fig. 6.9(c) collision constraint convexified from collision violated positions will guarantee the re-optimized positions are collision-free. Although solving projection problem also does not guarantee convergence, experiments show that convergence failures seldomly happen. An example of optimized trajectories for 20 Crazyflies are illustrated in Fig. 6.11 and the inter-Crazyflie distances of the initial solutions as well as optimized solutions are shown in Fig. 6.11. The radius of the ring is set to m. The duration of flight is . The solver we used is ECOS, which is efficient and open-source [5].

6.5.4 Result Comparison

In this section we compare the performance of Alg. 1 with a decentralized approach that does not define a collision active region for dynamically adding or removing constraints. The decentralized approach will solve the optimization before the flight and each Crazyflie/node includes all collision avoidance constraints with others. As shown in Fig. 6.12(b), the collision set of each node contains all the trajectories of other nodes.

We solved both trajectory optimizations for 20 times and averaged the results. We compared the performance of these two approaches according to:

-

1.

The average number of collision constraints for each Crazyflie

-

2.

The average solving time for each Crazyflie

Fig. 6.13 demonstrates that the average number of constraints approaches trajectories for Alg. 1 whereas for the decentralized approach it grows linearly. In addition, the average solving time of Alg. 1 is much less than the decentralized approach, which is explained in remark 9 and 10, and the runtime of Alg. 1 whenever a collision detected is of order .

Remark 9.

Alg. 1 solves optimization whenever collisions are detected during the flight. Let be the number of such optimizations, and the average solving time for Alg. 1 is defined as , whereas the decentralized approach is . Because of the division of , the average solving time of Alg. 1 is much less the decentralized one. The reason for dividing is that for real time application, Crazyflie will track the optimized trajectory as soon as each optimization completes. Thus the solving time for each optimization is more interesting than for the total time for all optimizations each Crazyflie has ever solved.

Remark 10.

The decentralized approach in Fig. 6.12(b) often fail to find feasible solutions during optimization and need multiple times of re-convexification and re-optimization before finding the feasible solution. This is one main reason why it takes much longer time than the Alg. 1. The frequent optimization failure may be due to too many convexified non-neighbor constraints were included and the feasible region became too small. Note that the re-convexification is done by the convexification of the infeasible solution returned by the solver.

6.6 Future Improvement

Alg. 1 is fast because the re-optimized trajectory will be directed accepted by neighbors. However Alg. 1 convexifies the collision avoidance constraints using Eqn. 6.15-Eqn. 6.16 (2ndConvexification), which significantly limits the feasible region of the optimization problem. Besides, the convergence is not guaranteed using Eqn. 6.15-Eqn. 6.16, even though the projection operation may reduce this possibility. Here we propose an improvement on the Alg. 1 that uses the convexification Eqn. 6.14 (1stConvexification) so that convergence is not an issue any more at the expense of more frequent communications and optimizations. The idea is that Crazyflie optimize both its own and neighbors’ trajectories when collisions are detected. The algorithm is shown in Alg. 2. Similar to Alg. 6.25, Crazyflies that are running Alg. 2 average the neighbors’ and their own solutions to reach consensus, and repeatedly project the averaged solution to local feasible sets. We assume by at most communications the consensus can be reached. The difference is that for Alg. 2, Crazyflies will not optimize non-neighbors solutions. Since the average number of neighbors approaches a limit, the number of neighbors’ trajectories to optimize is also limited. Therefore Alg. 2 is also scalable with the number of Crazyflies. Given communication time of the trajectories and runtime of projection are sufficiently small, Alg. 2 is superior to Alg. 1 because the convergence issue due to convexification does not exist any more and the convexified constraints have larger feasible regions. The runtime of Alg. 2 is of order .

Conclusion

This master thesis presented a distributed system to enable a swarm of quadcopters to fly through the openings. The distributed estimation, control and optimization techniques were discussed in details to achieve the goal of the project: the quadcopter swarm is able to fly through an opening subject to local communication and measurement constraints.

We demonstrated that the bearing and distance sensors can be used for localization given one Crazyflie has global position measurement, and that the coupled linear and rotational dynamics of quadcopters that allows Crazyflies to estimate their attitude is crucial to the localization. Since the majority of existing work about distributed control and estimation assumed point mass model without exploring the real dynamics of agent, our work could motivate people to take advantage of the dynamics of agents in future.

We also drew a conclusion that the distributed control is only suitable in an environment of free space. For complex environment, trajectory optimization is necessary to accomplish the challenging tasks that are often difficult for distributed control such as collision avoidance. We had presented the procedures of adapting a distributed optimization method to two trajectory optimization algorithms, and discussed the performance of the first algorithm Alg. 1. The thesis was finalized with the future work where the second scalable trajectory optimization algorithm Alg. 2 was proposed and comparisons to Alg. 1 were highlighted.

Acknowledgement

Here I would like to express my sincere gratitude to Michael Hamer for giving me an opportunity to carry out the master project in Professor D’Andrea’s group. I also thank for his support, patience, continuous guidance and insightful suggestions in the past 6 months. He has been always playing a key role of encouraging me and keeping me on the right track during the course of this project.

Bibliography

- [1] Federico Augugliaro, Angela P Schoellig, and Raffaello D’Andrea. Generation of collision-free trajectories for a quadrocopter fleet: A sequential convex programming approach. In Intelligent Robots and Systems (IROS), 2012 IEEE/RSJ International Conference on, pages 1917–1922. IEEE, 2012.

- [2] Francesco Bullo, Jorge Cortés, and Sonia Martinez. Distributed control of robotic networks: a mathematical approach to motion coordination algorithms. Princeton University Press, 2009.

- [3] Yufan Chen, Mark Cutler, and Jonathan P How. Decoupled multiagent path planning via incremental sequential convex programming. In Robotics and Automation (ICRA), 2015 IEEE International Conference on, pages 5954–5961. IEEE, 2015.

- [4] Samuel Coogan, Murat Arcak, and Magnus Egerstedt. Scaling the size of a multiagent formation via distributed feedback. In Decision and Control and European Control Conference (CDC-ECC), 2011 50th IEEE Conference on, pages 994–999. IEEE, 2011.

- [5] A. Domahidi, E. Chu, and S. Boyd. ECOS: An SOCP solver for embedded systems. In European Control Conference (ECC), pages 3071–3076, 2013.

- [6] Peng Lin, Wei Ren, and Yongduan Song. Distributed multi-agent optimization subject to nonidentical constraints and communication delays. Automatica, 65:120–131, 2016.

- [7] Shiyuan Lu, Che Lin, Zhiyun Lin, Ronghao Zheng, and Gangfeng Yan. Distributed kalman filter for relative sensing networks. In Control Conference (CCC), 2015 34th Chinese, pages 7541–7546. IEEE, 2015.

- [8] Daniel Morgan, Soon-Jo Chung, and Fred Y Hadaegh. Model predictive control of swarms of spacecraft using sequential convex programming. Journal of Guidance, Control, and Dynamics, 37(6):1725–1740, 2014.

- [9] Mark W Mueller, Markus Hehn, and Raffaello D’Andrea. A computationally efficient motion primitive for quadrocopter trajectory generation. IEEE Transactions on Robotics, 31(6):1294–1310, 2015.

- [10] Kwang-Kyo Oh, Myoung-Chul Park, and Hyo-Sung Ahn. A survey of multi-agent formation control. Automatica, 53:424–440, 2015.

- [11] Dimitra Panagou, Dušan M Stipanović, and Petros G Voulgaris. Distributed coordination control for multi-robot networks using lyapunov-like barrier functions. IEEE Transactions on Automatic Control, 61(3):617–632, 2016.

Institute for Dynamic Systems and Control

Prof. Dr. R. D’Andrea, Prof. Dr. L. Guzzella

Title of work:

Thesis type and date:

Master Thesis,

Supervision:

Michael Hamer

Prof. Dr. Raffaello D’Andrea

Student:

| Name: | Zheng Jia |

| E-mail: | [email protected] |

| Legi-Nr.: | 13-947-254 |

| Semester: | 6 |

Statement regarding plagiarism:

By signing this statement, I affirm that I have read and signed the Declaration of Originality,

independently produced this paper, and adhered to the general practice of source

citation in this subject-area.

Declaration of Originality:

http://www.ethz.ch/faculty/exams/plagiarism/confirmation_en.pdf

Zurich, 18. 2. 2025: