Distributed Energy Trading and Scheduling among Microgrids via Multiagent Reinforcement Learning

Abstract

The development of renewable energy generation empowers microgrids to generate electricity to supply itself and to trade the surplus on energy markets. To minimize the overall cost, a microgrid must determine how to schedule its energy resources and electrical loads and how to trade with others. The control decisions are influenced by various factors, such as energy storage, renewable energy yield, electrical load, and competition from other microgrids. Making the optimal control decision is challenging, due to the complexity of the interconnected microgrids, the uncertainty of renewable energy generation and consumption, and the interplay among microgrids. The previous works mainly adopted the modeling-based approaches for deriving the control decision, yet they relied on the precise information of future system dynamics, which can be hard to obtain in a complex environment. This work provides a new perspective of obtaining the optimal control policy for distributed energy trading and scheduling by directly interacting with the environment, and proposes a multiagent deep reinforcement learning approach for learning the optimal control policy. Each microgrid is modeled as an agent, and different agents learn collaboratively for maximizing their rewards. The agent of each microgrid can make the local scheduling decision without knowing others’ information, which can well maintain the autonomy of each microgrid. We evaluate the performances of our proposed method using real-world datasets. The experimental results show that our method can significantly reduce the cost of the microgrids compared with the baseline methods.

Index Terms:

Energy trading, microgrid, distributed energy scheduling, multiagent, reinforcement learning.I Introduction

Distributed energy generation enables the microgrids to generate electricity from renewable sources, such as solar radiation, winds, etc. This can alleviate the environmental pollution caused by fossil fuel-based generation and reduce the energy loss caused by long-distance transmission [1]. The microgrids can save the cost by utilizing renewable energy and obtain revenue by selling the surplus in energy markets. Therefore, it has been witnessed that more and more renewable energy generators are deployed in the microgrids.

This paradigm has significantly changed the ways of the trading and scheduling of energy markets [2]. Each microgrid has become an autonomous entity, which can determine the trading price and quantity with other microgrids based on its energy demands and yields. On the other hand, it also requires each microgrid to make appropriate control decisions on how to schedule its electrical load and energy storage. For example, a microgrid may choose to charge the battery when the market price is low, and discharge the battery for utilization when the energy market price is high. Therefore, the microgrids must have some control mechanism for making optimal trading and scheduling decision to maximize their interests.

Making the trading and scheduling decisions for a microgrid is challenging from the following aspects. First, the trading decision of a microgrid is not only affected by itself but also by other microgrids. In a bidding market, if a microgrid’s selling price is too high or buying price is too low, no deal can be made, which will, therefore, affect the cost and the revenue of the microgrid. Second, the trading and the scheduling decisions of a microgrid are coupled. Making the decisions for trading and scheduling should consider every aspect of the whole system, including the electrical loads, energy storage and yield, and market price. Third, the intermittency of renewable energy sources (e.g., solar irradiation and wind speed) increases the uncertainties of energy generation, which makes the control problem more complex.

Some existing works separately studied the energy trading and scheduling problem for microgrids. Several previous works (e.g., [3, 4, 5, 6, 7]) adopted different approaches to design the trading strategies. However, they did not take into account the scheduling of the local energy storage and the electrical load in each microgrid. Some other works (e.g., [8, 9, 10]) considered the energy storage and load scheduling problems for microgrids. Yet, they considered the problem either from the perspective of an individual microgrid or from the perspective of a centralized controller, which assumes that all microgrids work as an entire entity without autonomy. Meanwhile, these works did not consider energy trading among the microgrids. These two lines of works either only considered the energy scheduling problem for microgrids, or only considered the trading problems for microgrids. Thus, they lack a comprehensive and all-sided analysis for the trading and scheduling problem for microgrids.

The works in [11, 12, 13, 14] jointly considered the energy trading and scheduling problem among microgrids for maximizing the interest of each microgrid. These works mainly adopted the game-theoretic models for modeling the trading and scheduling for microgrids [15]. The models rely on the precise information of future energy yields and electrical loads as inputs for deriving the optimal scheduling solution. However, different microgrid environments may be full of various uncertainties regarding renewable energy generation and energy consumption, which affects the performances of the scheduling. For instance, a microgrid may plan to sell a certain quantity of renewable energy, however, the contract may not be fulfilled because of bad weather conditions. The complexity of the microgrid system, the uncertainty of the environments, and the interplay among different microgrids posit many challenges on precisely modeling the problem and deriving the optimal control decision.

The multiagent deep reinforcement learning approach provides a new perspective for learning the optimal control policy by directly interacting with the environment. In this paper, we propose a multiagent deep reinforcement learning approach [16] for distributed energy trading and scheduling for microgrids. Each microgrid is modeled as an agent, and different agents may collaborate or compete with each other for maximizing its reward. We adopt the Multi-Agent Deep Deterministic Policy Gradient (MADDPG) approach [17] to design the algorithm for learning the optimal control policy for each microgrid. After training, the agent of each microgrid can independently make the control decision without requiring the information from other microgrids. The experimental results from extensive performance evaluations show that our method can significantly reduce the cost of each microgrid.

The main contributions are summarized as follows.

-

•

Propose a multiagent deep reinforcement learning approach for modeling the distributed energy trading and scheduling problem for microgrids and learning the optimal control policy for each microgrid.

-

•

Design a bidding-based incentive mechanism for energy trading among microgrids. The mechanism is suitable for multiagent reinforcement learning based energy trading.

-

•

Design the network models for the actor and the critic based on the characteristics of the control actions and the role of energy trading of each microgrid.

-

•

Implement an energy trading and scheduling simulation environment and evaluate the performances of the proposed method using real-world datasets.

The rest of this paper is organized as follows. Section II introduces the related works, Section III presents the overview of the energy trading and scheduling system, Section IV gives the system models and problem formulation, Section V designs the neural networks and the learning algorithm, Section VI evaluates performances, and Section VII concludes the paper.

II Related Work

In this section, we review the existing works on energy trading and scheduling among microgrids.

One line of research adopted different methods to model the energy trading for microgrids. Gregoratti et al. [3] and Matamoros et al. [4] considered the problem of energy trading among islanded microgrids and adopted the distributed convex optimization framework for minimizing cost. Wang et al. [5] and Lee et al. [6] modeled the energy trading among microgrids using a game-theoretic model and formulated the energy trading as a non-cooperative game. Wang et al. [7] proposed an energy trading framework based on the repeated game for maximizing the revenue of each microgrid and adopted reinforcement learning to learn the policy. These works did not consider the local electrical loads in a microgrid when studying the energy trading problem, yet the scheduling of local electrical loads also affects the trading decision.

Another line of research studied the energy scheduling problem for microgrids. Huang et al. [9] developed a control framework for microgrid energy management by categorizing energy usage. Fathi et al. [8] proposed an adaptive energy consumption scheduling method for microgrids under demand uncertainty to achieve low power generation cost and low peak-to-average ration. Wang et al. [18] proposed a framework for planning the renewable energy generation in microgrids. Fathi et al. [10] considered the power dispatching in microgrids for minimizing the overall power generation and transmission cost. Weng et al. [19] studied the distributed cooperative control for frequency and voltage stability and power sharing in microgrids. Ahn et al. [20] proposed the distributed coordination laws for energy generation and distribution in energy network. These works only considered the energy scheduling problem, and they did not consider the influence of energy trading among microgrids.

The works in [11, 12, 13, 14] jointly considered the energy trading and scheduling for microgrids. Wang et al. [11, 21] proposed a Nash bargaining-based energy trading method. The proposed method considers each microgrid as an autonomous entity, which aims to minimize its cost through energy bargaining. Paudel et al. [12] proposed a game-theoretic model for P2P energy trading among the prosumers in a community. Kim et al. [13] considered direct energy trading among microgrids under the network’s operational constraints. Li et al. [14] proposed a bilateral energy trading framework to increase the economics benefits of each individual. Different from these works, we propose a multiagent deep reinforcement learning approach which can directly learn the optimal control policy for each microgrid by interacting with the environment.

III System Overview

In this section, we introduce the system overview for distributed energy trading and scheduling among microgrids.

III-A Reference System

We illustrate the reference system of interconnected microgrids for energy trading and scheduling in Fig. 1. Several microgrids are interconnected with each other through power delivery system. Each microgrid consists of the following components: electrical loads, batteries, and renewable energy generators. The electrical loads are equipments which consume electricity, the batteries are utilized to store surplus electricity. The renewable energy generator can generate electricity from renewable energy sources, such as solar energy or wind energy. A microgrid can purchase electricity from the main grid on the wholesale market or from other microgrids on the hour-ahead energy market. It can also its sell its surplus energy to other microgrids on the hour-ahead energy market.

III-B Energy Trading Flow

We consider two types of energy markets, namely, the wholesale market and the hour-ahead bidding market. We illustrate the trading flows for the microgrids on the hour-ahead market in Fig. 2. At the beginning of each time slot, a microgrid needs to determine how to schedule the local electrical loads, energy storage, and the bidding price and quantity on the hour-ahead market. The electricity from the power station is sold on the wholesale market and the price is known in advanced. A microgrid can trade with other microgrids on the hour-ahead bidding market. Each microgrid determines its bidding (buying or selling) price and quantity. The bidding price and quantity of each microgrid will be submitted to the bidding system without disclosing to others. When the bidding period elapses, the bidding system will calculate the final market clearing price and the trading quantity of each microgrid based on the adopted double-auction trading algorithm. The payment for each period will be made based on the real electricity usage of each microgrid.

| the number of microgrids | |

| the i-th microgrid, | |

| discrete time slot, | |

| generation power of microgrid during time slot | |

| solar radiation in microgrid during time slot | |

| the overall area of the solar panels in microgrid | |

| conversion efficiency of the solar panels in microgrid | |

| maximum battery storage capacity of microgrid | |

| battery level of microgrid at beginning of time slot | |

| electrical load of microgrid during time slot | |

| battery charging/discharging efficiency of microgrid | |

| battery charging amount of during time slot | |

| battery discharging amount of during time slot | |

| a buyer’s bid price at time slot | |

| a seller’s sell price at time slot | |

| the market clearing price at time slot | |

| the bid quantity of microgrid at time slot | |

| the payment of to the hour-ahead market | |

| revenue for microgrid during time slot | |

| the supplied energy from microgrid during time slot | |

| penalty charged to microgrid at time slot | |

| selling quantity of microgrid during time slot | |

| the weight of penalty for energy under-supply to the grid | |

| consumed energy from wholesale market by microgrid | |

| energy cost of microgrid for wholesale market | |

| the electricity price of the wholesale market | |

| the state of microgrid at time slot | |

| the control action of microgrid for time slot | |

| the reward for microgrid during time slot | |

| submitted selling quantity of microgrid at time slot | |

| submitted buying quantity of microgrid at time slot | |

| the optimal control policy of agent | |

| the discount factor for the reward of agent | |

| the actor network of agent | |

| the critic network of agent | |

| the exploration noise for training | |

| the target Q-value of transition for agent |

IV System Model and Problem Formulation

In this section, we introduce the system models and problem formulation. The main notations are summarized in Table I.

IV-A Microgrid Components

We consider interconnected microgrids. The -th microgrid is denoted as , where . We adopt a discrete time system in which the time is denoted as . The duration of a time slot is one hour. Each microgrid consists of the following components.

Renewable Energy Generation

Renewable energy can be generated in each microgrid from natural energy sources. In this work, we consider the renewable energy generation with Solar Photovoltaics (PV). For simplicity, we assume that the power of the generated electricity in a microgrid is mainly determined by the solar irradiation, the overall area and the conversion efficiency of the solar panels in a microgrid, which follows the following equation,

| (1) |

where is the average power of electricity generation in microgrid during time slot , is the average solar radiation in microgrid during time slot , is the overall area of the solar panels in microgrid , and is the conversion efficiency of the solar panels in microgrid .

Battery

We denote the maximum battery capacity of microgrid as . The battery level of microgrid at the beginning of time slot is denoted as . The battery level changes due to charging and discharging.

Electrical Load

We consider the non-dispatchable electrical loads in the microgrids. The demand of the electrical loads in microgrid during time slot is denoted as .

IV-B Battery Charging and Discharging

During a time slot, a microgrid may charge or discharge the battery for energy storage or utilization. The changes of the battery level of the batteries in a microgrid due to the charging and discharging operations can be modeled as

| (2) |

where and are the charging and discharging efficiency, is the amount of energy charged to the batteries in microgrid during time slot , and is the amount of energy discharged from the batteries in microgrid during time slot . We assume that either charging or discharging can be performed to the batteries of a microgrid during a time slot.

IV-C Energy Trading among Microgrids

At the beginning of a time slot, each microgrid determines whether it should participate in the energy trading of the next time slot on the hour-ahead market to sell or to buy electricity. Each microgrid determines the trading price and the trading quantity that will be submitted to the bidding system. The final market clearing price and trading quantity will be determined based on the double auction mechanism.

Double Auction Mechanism

We design a double auction mechanism suitable for multiagent reinforcement learning-based trading according to the method proposed in [5]. As illustrated in Fig. 3, the buyers’ bid price at time slot will be sorted in decreasing order, denoted as . The sellers’ ask prices at time slot will be sorted in increasing order, denoted as . The aggregate supply curve and demand curve will intersect at a point where satisfies the following inequality,

| (3) |

The market clearing price can be any value within . In this work, we adopt as the market clearing price at time slot . If the total demands of the buyers and the total supply of the sellers are mismatching, the buyers with a higher bid price or the sellers with a lower ask price will be satisfied first. If the market needs to guarantee the truthfulness of double auction, it can be modified by excluding seller and buyer [5].

Monetary Cost for Buying Energy on the Hour-ahead Market

If a microgrid successfully bid a certain quantity of energy on the hour-ahead market, it needs to make the payment to the market. The monetary cost is determined by the market clearing price and trading quantity, which are determined by double auction. Suppose microgrid bids successfully, the monetary cost for microgrid during time slot is

| (4) |

where is the bid quantity of microgrid from the hour-ahead market at time slot and is the clearing price.

Revenue for Selling Energy on the Hour-ahead Market

If a microgrid successfully sell energy on the hour-ahead market, it can get a revenue, which is determined by the market clearing price and the trading quantity by double auction. Note that the final energy supply from a microgrid to the energy delivery system may be less than the pre-determined trading quantity due to device outrage, bad weather condition or yield prediction error. Thus, the revenue will be calculated based on the real energy supply from a microgrid. The revenue for microgrid on the hour-ahead market during time slot is

| (5) |

where is the supplied energy from microgrid during time slot . Note that should be no more than the trading quantity determined by double auction for microgrid .

Penalty for Unfulfilling the Contract

After the trading is completed, each microgrid needs to fulfill the contract. For the sellers, they must supply the gird with the amount of energy that is equal to the pre-determined trading quantity by double auction. If a seller’s energy supply to the grid is less than the pre-determined trading amount for any reasons, the seller will be charged some penalty fees for violating the contract. The penalty for microgrid is calculated as

| (6) |

where is the penalty charged to microgrid at the end of time slot , is the pre-determined selling quantity of microgrid by double auction at the beginning of time slot , is the real energy supply of microgrid to the grid during time slot , and is the weight of penalty.

In this paper, we assume is the gap between the wholesale market price and the market clearing price at time slot . The practical meaning is that some buyers will utilize units of energy during time slot at the market clearing price by trading; if microgrid only supply units, the balance will be made up by the wholesale market, and microgrid will be penalized based on the difference between the market clearing price and the wholesale market price at time slot .

Monetary Cost for Buying Energy from the Wholesale Market

If a microgrid consumes more energy from the grid than its buy quantity from the hour-ahead bidding market, the exceeding part of the energy consumption will be charged based on the price of the wholesale market. The overall supply of energy to a microgrid and its overall consumption of energy should be balanced during a time slot. Based on this balance, we can calculate the consumed energy from the wholesale energy market by microgrid during time slot as

| (7) |

Note that only one type of operation can be conducted for a microgrid during a time slot for charging and discharging, selling and buying, at least one of the two variables should be zero. The monetary cost for the consumed energy from the wholesale market by microgrid during time slot is

| (8) |

where is the electricity price of the wholesale market.

IV-D Problem Formulation

We formulate the energy trading and scheduling among microgrids as a Markov Game with continuous action spaces. Each microgrid is an agent, which aim is to learn the optimal policy to maximize its overall rewards.

State

The state of each microgrid for each time slot consists of two parts, namely, the local state and the public state. The local state includes the current battery level, the historical energy generation power and the historical electrical load of a microgrid. The public state includes the price of the wholesale market and the historical market clearing price in the hour-ahead market. Because the energy generation power and the electrical load for time slot are unknown, we adopt the information of last time slot to represent the states. We denote the state of microgrid at time slot as

| (9) |

where is the battery level at the beginning of time slot , is the renewable energy generation power during time slot , is the load during time slot , is the wholesale market price, and is the market clearing price of the hour-ahead market at time slot .

Action

The control action for each microgrid during each time slot includes the selling/buying price, the selling/buying quantity, and the charging/discharging quantity. We denote the control action for microgrid at time slot as

| (10) |

where operator represents choosing one action out of the two, are the submitted price of microgrid for selling/buying energy at time slot on the hour-ahead market, are the submitted selling/buying quantity of microgrid at time slot , are the charging/discharging quantity of microgrid during time slot . Note that a microgrid can be either a seller or a buyer during a time slot, and it can either charge battery or discharge battery. In the implementation, we can use the different ranges of an output of a neural network to represent different operations. For instance, the positive numbers of an output represent charging and the negative numbers represent discharging. Similarly, the positive numbers of an output represent selling quantity and the negative numbers represent buying quantity.

Reward

The reward for a microgrid during a time slot is the summation of the revenue and cost, which consist of four parts: 1) the revenue for selling energy on the hour-ahead market , 2) the cost for buying energy from the wholesale market , 3) the cost for buying energy from the hour-ahead market , 4) the penalty for energy under-supply to the grid . We define the reward function of microgrid for time slot as follow,

| (11) |

The reward reflects the quality of the control policy and each microgrid aims to maximize its reward.

Optimization Objective

The problem of energy trading and scheduling among microgrids can be formulated as a Markov Game [22] with agents and continuous action spaces. We denote a Markov Game as a tuple, , where is the set of states describing the environments of all agents, is the number of agents, is the set of actions of agent , is the state transition function of the environment, is the set of rewards of agent . At each time slot , the agents observe their local states and take control actions according their control policies . At the end of time slot , the agents will receive their rewards . The reward for an agent is determined by the state of the whole environment and the actions of all agents. The states of the agents will evolve to new states according to the state transition probability function. Our aim is to derive the optimal control policy for each agent to maximize its overall discounted future rewards, which can be presented as

| (12) |

where is the optimal control policy for microgrid , is the discount factor for the reward of agent , and is the reward for agent at time slot . The state transition probability function is hard to obtain for a complex environment, we will introduce the multiagent deep reinforcement learning approach to learn the optimal policy for each agent by directly interacting with the environment.

V Learning Algorithm for Distributed Energy Trading and Scheduling

In this section, we introduce the algorithm for learning the optimal control policy for each microgrid.

V-A Choice of Algorithm

We first briefly introduce why we choose MADDPG for learning the optimal control policy for distributed energy trading and scheduling among microgrids.

Mixed Cooperative-Competitive

Each autonomous microgrid can be seen an agent, which makes the decision for maximizing its rewards. The relationships among the interconnected microgrids are mixed cooperative-competitive. One microgrid may compete with other microgrids for energy selling on the hour-ahead market to maximize revenue; On the other hand, it could also buy energy from other microgrids at a lower price compared with the wholesale market to reduce cost. MADDPG can learn the optimal policy for each agent in a mixed cooperative-competitive environment.

Decentralized Control

MADDPG adopts the framework of centralized training and decentralized execution. During the training, some extra information of other agents (e.g., action, rewards, and training episodes) will be used for training the policy of an agent for learning collaboratively. However, the private information of other microgrids will not be used during execution, and only the local information of a microgrid and the public information are used for local decision making. Thus, MADDPG is suitable for decentralized control to maintain the autonomy of each microgrid.

Continuous Control

Most control actions in a microgrid are continuous, e.g., charging/discharging quantity, trading price and trading quantity, etc. MADDPG is a multiagent extension of Deep Deterministic Policy Gradient (DDPG), which is a deep reinforcement learning algorithm for solving continuous control problem. Therefore, MADDPG is naturally suitable for addressing the continuous control problem.

V-B Design of Actor and Critic Networks

We illustrate the network architecture of MADDPG in Fig. 4. Each agent consists of an actor and a critic. The actor maps the state to the control action. The critic evaluates the advantage of the actions under the given states compared to the average actions. We denote the actor network of agent as , where is the state of microgrid and is the corresponding control action for microgrid . The actor network of an agent inputs the local state and outputs a control action. We denote the critic network of agent as . The critic network inputs the states and the actions of all agents and outputs a real-valued evaluation.

Each agent has an individual critic network, which allows the agents to have different reward structures, and a microgrid can learn its optimal policy based on its objectives in a cooperative, competitive, or mixed environment. We only use the critic networks for training the actor network of each agent. After training, the critic networks are no longer required, and we only use the actor network of an agent for making control action for the corresponding microgrid. Thus, it will not relay on the states and actions of other microgrids.

We illustrate the implementation of the actor network and the critic network in Fig. 5. The outputs of an actor network include the selling price, the bid price, the trading quantity, and the charging quantity. Because an agent can only be either a seller or a buyer within one time slot, we use the value of the output of trading quantity to determine its role. Specifically, if the output value of trading quantity is larger than zero, the agent will be a buyer, and the bid price and the trading quantity will be submitted to the hour-ahead market for biding. If the output value of the trading quantity is less than zero, the agent will be a seller, and the selling price and trading quantity will be submitted for bidding on the hour-ahead market.

In the critic network of an agent, the states of all agents will be input into a Fully-Connected (FC) layer. The output vector of the FC layer will be concentrated with the control actions of all actors, and then input the second FC layer. Note that the main grid price and the last clearing price are public information, which are same for each agent. Therefore, they will be input into the critic network of an agent only once to avoid duplication and reduce the input dimension of the critic networks. The activation function of the actor network is Tanh function, and the range of each output is from to , and we will convert them to the actual range of each control action. The activation function of the critic network is a linear function and the output is a real value.

V-C Learning Algorithm

We now describe how to train the actor network and the critic network of each agent. The learning process can be conducted in a simulation environment. The details of the training algorithm are illustrated in Algorithm 1.

At the beginning of each time slot , each agent first observes the state of microgrid . State will be input into the actor network of agent and output a control action. For the exploration of the state space, we will add an exploration noise to the control action, and the final control action is

| (13) |

where is the exploration noise. We adopt an Ornstein-Uhlenbeck process [23] to generate the exploration noise.

After obtaining the control action for each agent , the control actions will be applied in the corresponding microgrids. The simulation environment will simulate the energy scheduling process and the energy trading process according to the specified control action for each microgrid. At the end of a time slot, each agent can calculate its reward during the time slot by Eq. (11) and observe new state . The state, action, reward, and new state information of each agent during a time slot will be stored as a transition in the replay buffer as , where , , , and .

At the end of each time slot, the actor network and the critic network of each agent will be trained with the transitions sampled from the replay buffer. To stabilize training, a copy of the actor networks and the critic networks will be created as target networks for slowly tracking the learned networks. We denote the target actor network and the target critic network of agent as and , respectively. To train the actor network and the critic network of an agent, we randomly select transitions from the replay buffer. For transition , we denote it as . The target Q-value of this transition for agent will be calculated as follow,

| (14) |

where is the discounting factor and is the -th item in (i.e., the next state of agent ). The critic network of agent will be updated by minimizing the following loss,

| (15) |

where denotes the network parameters of agent . The actor network of agent will be updated using the policy gradient (see details in [17]). The target network parameters of each agent will be updated using the following equation.

| (16) |

where represents the network parameters of the target network of agent and is the learning rate.

We denote the actor network of agent after training is . The control action for microgrid can be directly obtained by observing the state of microgrid and inputing into the actor network, mathematically denoted as . Thus, the computational complexity for obtaining a control action is only determined by the complexity of the actor network, which include the number of hidden layers and the number of neurons in each hidden layer. In this work, the actor network of an agent consists of two hidden layers. We suppose that each hidden layer of the actor has neurons, the complexity for making a control action for an agent is .

VI Experiment

In this section, we introduce the experiment settings and evaluate the performances of our proposed method.

VI-A Experiment Setting and Datasets

We simulate the energy trading and scheduling among four microgrids with different load characteristics and renewable energy generation capacities. The electrical load and the renewable energy generation of each microgrid during each time slot are scaled from real-world datasets. We illustrate parts (500 hours) of the load profiles and the renewable energy generation profiles of each microgrid in Fig. 6 and Fig. 7, respectively. Microgrid 1 and 2 have more renewable energy generations and surplus energy to sell. Microgrid 3 and 4 have heavier loads and less renewable energy generations.

The electricity price on the wholesale market is 22.79 cents/kWh in Singapore. The minimum bidding price on the hour-ahead market is 15.0 cents/kWh, and the maximum bidding price is 22.79 cents/kWh, which is equal to the wholesale market. We use the solar radiation data of one year at four locations of Singapore, which are obtained from Solcast [24], to simulate the solar radiation in each microgrid. We use the scaled historical electricity system demand data for every half-hour period in Singapore to simulate the electrical loads of the microgrids [25]. The maximum battery capacities of Microgrid 1-4 are 100MWh, 100MWh, 20MWh, 10MWh, respectively. The maximum bid quantity for each microgrid on the hour-ahead market is 7.5MWh.

The initial exploration noise scale is 1.0, and the exploration noise linearly decreases over 1000 training episodes till zero. The models are trained over 1500 episodes, and the performances are evaluated over 1000 episodes. Each episode consists of time steps, and each time step in the simulation is one hour. The batch size is 1024. The learning rate is 0.01. The discount factor of the reward is 0.8. Each critic network has three hidden layers, the first layer has 1024 neurons, the second layer has 512 neurons, and the third layer has 256 neurons. Each actor network has two hidden layers, the first layer has 512 neurons, and the second layer has 128 neurons. The activation function is ReLU function.

VI-B Convergence Analysis

To evaluate the convergences of different agents in MADDPG, we train the models five times under the same settings with different random seeds. We illustrate the rewards of different agents at different training episodes in Fig. 8, respectively. The shadow areas in the figures represent the standard deviations of the rewards of the five training times at the different episodes. We can observe from these figures that the rewards of different agents all converge.

The converged rewards of Microgrid 1 and 2 are larger than zero. This is because Microgrid 1 and 2 have larger capacities for renewable energy generation than their own consumptions. Therefore, they can sell their surplus energy on the hour-ahead market and gain revenues. Their revenues are larger than their cost, therefore, their converged rewards are larger than zero. The rewards of Microgrid 3 and 4 also increase during the training, because they can save more cost by improving the trading policy on the hour-ahead market. By trading on the hour-ahead market, they can save more cost compared with buying energy from the wholesale market.

The results illustrated in Fig. 8 verify the feasibility of adopting the multiagent approach for learning the optimal control policy for each microgrid. The four microgrids in the studied case are mixed cooperative-competitive. Microgrid 1 and 2 are competitors for energy selling, and Microgrid 3 and 4 are competitors for energy buying. Microgrid 1 and 2 also collaborate with Microgrid 3 and 4 for energy trading for mutual benefit. The convergences of the agents verify that the agents can find the equilibrium in the mixed cooperative-competitive environment for maximizing their rewards.

VI-C Performance Analysis

We analyze each agent’s performances by illustrating the characteristics of the system states and the control actions of each microgrid. We collect the control actions and the system states during each time slot over 1000 testing episodes. The distributions of the system states and the control actions are estimated with Kernel Density Estimation (KDE) and Histogram (Hist) to analyze the characteristics of each microgrid.

We illustrate the distribution of the bid price and the trading quantity of different microgrids on the hour-ahead market in Fig. 9 and 10, respectively. In our definition of the control action of each agent, if the price of an agent during one time step is larger than zero, the agent is a seller during the time slot. If the price is less than zero, the agent is a buyer during the time slot. In Fig. 9 and 10, we can observe that Microgrid 1 and 2 are mainly as the sellers on the hour-ahead market, because they have more surplus energy, which incentives them to sell to obtain revenues. Microgrid 3 and 4 are mainly as buyers on the hour-ahead market due to lower capacities of energy generation. The distributions of bid price and trading quantity are in line with the characteristics of each microgrid’s energy consumption and generation capacity.

The distributions of the selling price of Microgrid 1 and 2 are sharp, while the buying price of Microgrid 3 and 4 are flatter, because Microgrid 1 and 2 are more competitive on energy selling, and the renewable energy may be wasted if batteries have been fully charged and the generation exceeds the consumption. On the contrary, Microgrid 3 and 4 may change buying price based on their varying demands.

The distribution of the market clearing price on the hour-ahead market is illustrated in Fig. 12. The market clearing price mostly lies within the range (15, 20), which is much lower than the wholesale market price. Therefore, Microgrid 3 and 4 have the incentives of buying from the hour-ahead market to reduce cost. In some cases, there is no trade made on the hour-ahead market because the selling price is too high or the buying price is too low.

We illustrate the distribution of the overall trading quantity during a time slot on the hour-ahead market in Fig. 13. The microgrids prefer to submit the trading quantity at the maximum allowed quantity, because Microgrid 3 and 4 can always save cost by buying more from the hour-ahead market if they can consume. On the contrary, Microgrid 1 and 2 can get more revenues by selling more, if the trading quantity does not exceed the surplus. Therefore, the trading quantity of a microgrid has a large probability of being on the threshold of the maximum allowed trading quantity.

We illustrate the distribution of the battery levels of different microgrids in Fig. 11. The battery levels of Microgrid 3 and 4 are low during most of the time slots, because they have low capacity of energy generations. For Microgrid 1 and 2, their batteries may be fully charged, if they have too much surplus energy and cannot sell on the energy market, which incentives them to sell on the hour-ahead market. Therefore, the battery capacity is more important for the microgrids with larger capacity of renewable generation.

VI-D Performance Comparison

We compare the performances of our proposed method with the following baseline methods.

1) Isolated: the microgrids operate in an isolated mode, in which each microgrid can only supply the generated renewable energy to itself or charge the surplus energy to its battery. A microgrid can only buy energy on the wholesale market to meet its demand when local generation is deficient.

2) DDPG: the microgrids can trade with each other, yet the agent of each microgrid is trained independently with DDPG, which is a single-agent reinforcement learning approach. The agents will not share information with each other.

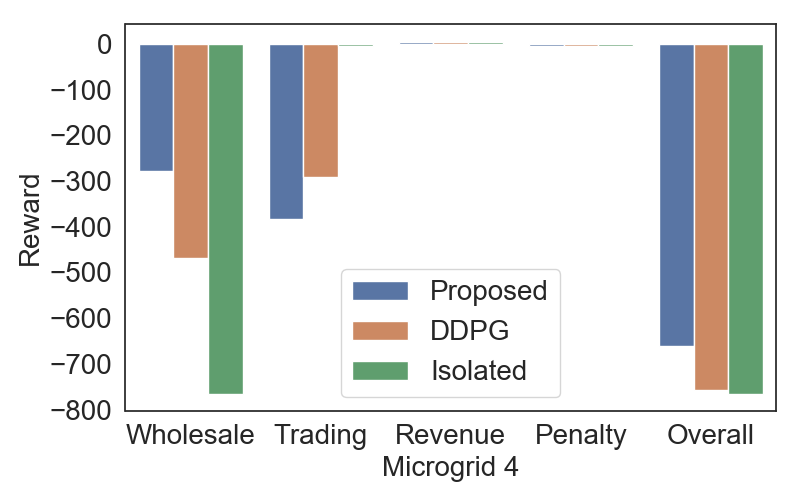

We illustrate the comparisons of the cost, revenue, and the overall reward per time slot for Microgrid 1-4 in Fig. 14, 15, 16, 17, respectively. In the X-axises of these figures, Wholesale represents the cost for buying from the wholesale market, Buying represents the cost for buying on the hour-ahead market, Selling represents the revenue for selling on the hour-ahead market, Penalty represents the penalty for violating the selling contract, and Overall represents the overall reward of the sum of the costs and revenue.

As illustrated in Fig. 14-17, the four microgrids can obtain a higher overall reward under our proposed method compared with the baselines. The results show that the multiagent deep reinforcement learning approach can effectively improve the reward of each agent by collaboratively learning the policy for each agent compared with the baselines.

As illustrated in Fig. 14 and 15, Microgrid 1 and 2 can obtain revenues by energy selling on the hour-ahead market. Thus, the overall rewards under our method and DDPG are higher than Isolated. With Isolated, the cost, revenue, and the overall reward are all near to zero, because Microgrid 1 and 2 have enough renewable energy generation to meet its demands, but cannot gain revenues without energy trading.

With our method, Microgrid 1 and 2 can gain more revenue compared with DDPG. As illustrated in Fig. 18, this is partly due to that the successful trading ratio and the average trading quantity per time slot with our proposed method are higher than that of DDPG. Therefore, Microgrid 1 and 2 can obtain more revenues on the hour-ahead market, and Microgrid 3 and 4 can save more cost via energy trading.

Fig. 16 and 17 verify that our proposed method can reduce more cost for Microgrid 3 and 4 compared with the baseline methods. This is because Microgrid 3 and 4 can successfully buy more energy from the hour-ahead market compared with DDPG, and the price on the hour-ahead market is much lower than the wholesale market, thus it can save more cost compared with DDPG. On the contrary, with the Isolated method, all energy must be brought from the wholesale market with a higher price, it will incur more cost.

VII Conclusion

In this paper, we propose a multiagent deep reinforcement learning approach for learning the optimal policy for distributed energy trading and scheduling among the microgrids. Each microgrid is model as an agent, which can cooperate and compete with other agents on the hour-ahead energy market for energy trading and make the local scheduling decision. We model the problem as a Markov game which aim to maximize the reward for each microgrid. We adopt MADDPG to design the algorithm to learn the optimal policy for each agent. To evaluate the performance, we conduct the experiments using the real-world datasets. The experimental results show that each agent can find the equilibrium in the mixed cooperative-competitive environment and converge to the optimal policy. Our method can reduce the cost and improve the overall reward for each microgrid compared with the baseline methods.

References

- [1] R. H. Lasseter, “Smart distribution: Coupled microgrids,” Proceedings of the IEEE, vol. 99, no. 6, pp. 1074–1082, 2011.

- [2] E. Mengelkamp, J. Gärttner, K. Rock, S. Kessler, L. Orsini, and C. Weinhardt, “Designing microgrid energy markets - a case study: The Brooklyn microgrid,” Applied Energy, vol. 210, pp. 870–880, 2018.

- [3] D. Gregoratti and J. Matamoros, “Distributed energy trading: The multiple-microgrid case,” iEEE Transactions on industrial Electronics, vol. 62, no. 4, pp. 2551–2559, 2014.

- [4] J. Matamoros, D. Gregoratti, and M. Dohler, “Microgrids energy trading in islanding mode,” in 2012 IEEE Third International Conference on Smart Grid Communications (SmartGridComm). IEEE, 2012, pp. 49–54.

- [5] Y. Wang, W. Saad, Z. Han, H. V. Poor, and T. Başar, “A game-theoretic approach to energy trading in the smart grid,” IEEE Transactions on Smart Grid, vol. 5, no. 3, pp. 1439–1450, 2014.

- [6] J. Lee, J. Guo, J. K. Choi, and M. Zukerman, “Distributed energy trading in microgrids: A game-theoretic model and its equilibrium analysis,” IEEE Transactions on Industrial Electronics, vol. 62, no. 6, pp. 3524–3533, 2015.

- [7] H. Wang, T. Huang, X. Liao, H. Abu-Rub, and G. Chen, “Reinforcement learning in energy trading game among smart microgrids,” IEEE Transactions on Industrial Electronics, vol. 63, no. 8, pp. 5109–5119, 2016.

- [8] M. Fathi and H. Bevrani, “Adaptive energy consumption scheduling for connected microgrids under demand uncertainty,” IEEE Transactions on Power Delivery, vol. 28, no. 3, pp. 1576–1583, 2013.

- [9] Y. Huang, S. Mao, and R. M. Nelms, “Adaptive electricity scheduling in microgrids,” IEEE Transactions on Smart Grid, vol. 5, no. 1, pp. 270–281, 2014.

- [10] M. Fathi and H. Bevrani, “Statistical cooperative power dispatching in interconnected microgrids,” IEEE Transactions on Sustainable Energy, vol. 4, no. 3, pp. 586–593, 2013.

- [11] H. Wang and J. Huang, “Bargaining-based energy trading market for interconnected microgrids,” in 2015 IEEE International Conference on Communications (ICC). IEEE, 2015, pp. 776–781.

- [12] A. Paudel, K. Chaudhari, C. Long, and H. B. Gooi, “Peer-to-Peer energy trading in a prosumer-based community microgrid: A game-theoretic model,” IEEE Transactions on Industrial Electronics, vol. 66, no. 8, pp. 6087–6097, 2018.

- [13] H. Kim, J. Lee, S. Bahrami, and V. Wong, “Direct energy trading of microgrids in distribution energy market,” IEEE Transactions on Power Systems, 2019.

- [14] J. Li, C. Zhang, Z. Xu, J. Wang, J. Zhao, and Y.-J. A. Zhang, “Distributed transactive energy trading framework in distribution networks,” IEEE Transactions on Power Systems, vol. 33, no. 6, pp. 7215–7227, 2018.

- [15] W. Tushar, T. K. Saha, C. Yuen, D. Smith, and H. V. Poor, “Peer-to-peer trading in electricity networks: an overview,” IEEE Transactions on Smart Grid, 2020.

- [16] R. S. Sutton and A. G. Barto, Reinforcement learning: An introduction. MIT press, 2018.

- [17] R. Lowe, Y. Wu, A. Tamar, J. Harb, O. P. Abbeel, and I. Mordatch, “Multi-agent actor-critic for mixed cooperative-competitive environments,” in Advances in Neural Information Processing Systems, 2017, pp. 6379–6390.

- [18] H. Wang and J. Huang, “Cooperative planning of renewable generations for interconnected microgrids,” IEEE Transactions on Smart Grid, vol. 7, no. 5, pp. 2486–2496, 2016.

- [19] S. Weng, D. Yue, C. Dou, J. Shi, and C. Huang, “Distributed event-triggered cooperative control for frequency and voltage stability and power sharing in isolated inverter-based microgrid,” IEEE transactions on cybernetics, no. 99, pp. 1–13, 2018.

- [20] H.-S. Ahn, B.-Y. Kim, Y.-H. Lim, B.-H. Lee, and K.-K. Oh, “Distributed coordination for optimal energy generation and distribution in cyber-physical energy networks,” IEEE transactions on cybernetics, vol. 48, no. 3, pp. 941–954, 2017.

- [21] H. Wang and J. Huang, “Incentivizing energy trading for interconnected microgrids,” IEEE Transactions on Smart Grid, vol. 9, no. 4, pp. 2647–2657, 2016.

- [22] M. L. Littman, “Markov games as a framework for multi-agent reinforcement learning,” in Machine learning proceedings 1994. Elsevier, 1994, pp. 157–163.

- [23] G. E. Uhlenbeck and L. S. Ornstein, “On the theory of the brownian motion,” Physical review, vol. 36, no. 5, p. 823, 1930.

- [24] “Solcast,” https://toolkit.solcast.com.au/, accessed: May 2020.

- [25] “Half Hourly System Demand,” https://data.gov.sg/dataset/half-hourly-system-demand, accessed: May 2020.