Distortion-Tolerant Monocular Depth Estimation On Omnidirectional Images Using Dual-cubemap

Abstract

Estimating the depth of omnidirectional images is more challenging than that of normal field-of-view (NFoV) images because the varying distortion can significantly twist an object’s shape. The existing methods suffer from troublesome distortion while estimating the depth of omnidirectional images, leading to inferior performance. To reduce the negative impact of the distortion influence, we propose a distortion-tolerant omnidirectional depth estimation algorithm using a dual-cubemap. It comprises two modules: Dual-Cubemap Depth Estimation (DCDE) module and Boundary Revision (BR) module. In DCDE module, we present a rotation-based dual-cubemap model to estimate the accurate NFoV depth, reducing the distortion at the cost of boundary discontinuity on omnidirectional depths. Then a boundary revision module is designed to smooth the discontinuous boundaries, which contributes to the precise and visually continuous omnidirectional depths. Extensive experiments demonstrate the superiority of our method over other state-of-the-art solutions.

Index Terms— Monocular, 360°, Depth Map, Distortion

1 Introduction

Compared with NFoV images, omnidirectional images contain 360° FoV environmental information, representing a 3D scene as a 2D equirectangular map. Benefiting from the advantage of wide FoV, the 360° camera gains increasing applications in indoor robotic systems, autonomous driving, and virtual reality. Consequently, estimating the depth directly on an omnidirectional image is appealing and demanding for other subsequent computer vision tasks.

The existing monocular depth estimations have achieved encouraging improvements on NFoV images due to the advance of deep learning and availability of large-scale 3D training data. But these excellent methods cannot be directly transferred to estimate the depth of an omnidirectional image. Even FRCN [1], the state-of-the-art monocular depth estimation method, exhibits poor performance in omnidirectional images because of the geometric distortion. In general, omnidirectional images are constructed by extending a sphere to a 360°180° equirectangular map, with increasing distortion from the equator to poles. The distortion twists the shapes and structures of objects to a varying degree and affects the depth estimation performance.

The existing omnidirectional depth estimation methods have to suffer from the effects of distortion while estimating the depth. Some researches [2, 3, 4, 5] propose to estimate the omnidirectional depth directly from a single equirectangular projection map using convolutional neural networks (CNNs). With the powerful feature extraction capability of CNNs, the depth around the equator can be predicted accurately, but the performance drops sharply in the areas with large distortions due to the limited receptive of the network. Considering the regularity of distortion distribution in an omnidirectional image, Su [6] design convolutional kernels of different sizes to deal with the distortion from different regions. Bi-Projection Fuse (BiFuse) [7] is proposed to estimate omnidirectional depth by combining an equirectangular map with a cubemap, further reducing the effects of distortion.

To eliminate the effects of distortions as much as possible, we propose a distortion-tolerant omnidirectional depth estimation method. In particular, we estimate the depth on the cubemap instead of the distorted equirectangular map. However, when the equirectangular map is converted into the cubemap, there is a 25 loss of pixels. In addition, the estimated depth of each face in this cubemap is not continuous. To tackle these two problems, we adopt another 45° rotating cubemap to reduce the pixel loss and smooth the depth difference simultaneously. Based on the dual-cubemap, our solution has more tolerance to distortions than the existing schemes.

Our framework comprises two main modules: cube depth estimation and boundary revision. In the cube depth estimation module, we estimate the depth from dual-cubemap, where the two cubemaps are obtained by rotating 45° from the same sphere. Also, a boundary-aware block is designed for information interaction between the two branches of the dual-cubemap, focusing on the cubemap boundary and mutually promoting depth estimation from the other branch. Then, the depth of cubemap is converted back to the equirectangular format, and an encoder-decoder network revises the coarse omnidirectional depth in the boundary revision module.

In experiments, we test our method on two real indoor scene omnidirectional datasets, Matterport3D [8] and Stanford2D3D [9]. The results show that the proposed framework outperforms the current state-of-the-art methods.

Our contributions are summarized as follows:

-

•

We design an end-to-end framework consisting of a DCDE module and a BR module, aiming to eliminate the effects of distortions and revise the discontinuous boundaries, respectively.

-

•

Different from the existing methods, our method estimates the omnidirectional depth from an interactive dual-cubemap, contributing to distortion-tolerant depth estimated results.

-

•

Experiments demonstrate that the proposed method exceeds the current state-of-the-art methods.

2 Related Work

We briefly discuss the existing methods related to our work in this section.

2.1 Monocular Depth Estimation

Monocular depth estimation, a process of mapping from low to high dimensions, can be very challenging. Early researchers solve the mapping function in a hand-crafted features manner. Nowadays, many recent approaches extract features using deep learning techniques. Eigen [10] propose the first CNNs based network. They adopted a coarse-to-fine strategy by designing a multi-scale module to improve predicted results. Inspired by [11], Laina [12] merged pre-trained ResNet-50 [13] and up-sampling blocks into FRCN [12] to estimate the depth. Considering the auxiliary role of other visual tasks, Jiao [14] introduced semantic information and designed an attention-driven loss as a bond. Different from treating depth estimation as a regression task, the approach of Fu [15] adopted an ordinal regression strategy to transform depth estimation into a classification task by discretizing depth value. However, all the methods mentioned above are designed for NFoV images, and can not be directly applied to our task.

2.2 Omnidirection Depth Estimation

Solving distortion is the key to applying traditional CNNs based approaches. Previous researches attempted to make it by designing various distortion-aware structures. Noticing that the distortion changes from the equator to the poles, Su [6] designed convolution kernels of different sizes to deal with the distortion from different regions. However, the relationship between the convolution kernels’ size and the distortion regions is not a simple linear mapping. Zioulis [16] proposed OmniDepth to adopt the spherical layer in [6] as the pre-processing module. In the omnidirectional target detection task, Benjamin [5] proposed SphereNet to correct distortion by introducing distortion invariance and spherical sampling position mode. DACF [17] designed a distortion-aware deformable convolution filter to directly perform depth regression on panoramas, getting a more accurate result.

On the other hand, some estimate omnidirectional depth using cubemap. This representation suffers from less distortion but brings discontinuity on boundaries for each face. To overcome this issue, Cheng [18] propose cube padding (cp) to utilize the connectivity between faces of the cube for image padding. Nevertheless, they ignore the characteristic of perspective projection. Spherical padding (sp) is presented in [7] to reduce the boundary discontinuity and combined with an equirectangular map to estimate depth. Unfortunately, the equirectangular format introduces distortion again, which inevitably leads to the instability of their training.

3 Our method

This section describes the proposed method in detail, and the architecture is illustrated in Fig. 3. Firstly, we introduce our motivation in Section 3.1. The proposed dual-cube depth estimation module and the boundary revision module are discussed in Section 3.2 and Section 3.3. Finally, we formulate the loss functions in Section 3.4.

3.1 Motivation

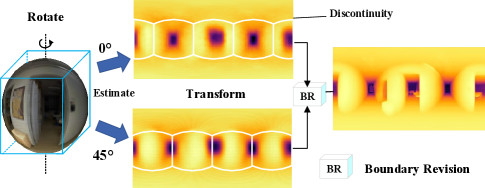

Compared with the equirectangular map, the cubemap contains far less distortion, significantly decreasing depth estimation difficulty. However, when converting to an equirectangular map, estimating each face of the cubemap can easily lead to depth discontinuities and an inability to recover missing pixels accurately, as shown in Fig.2.

To solve this problem, another reference depth map is used to smooth the discontinuous depth. The original cube and the rotated one completely revise the discontinuity because they have complementary boundary discontinuity areas and a consistent context (illustrated in Fig. 1, center). Besides, we observe that when is equal to 45°, the revision effect comes to the best.

We define a rotation operation representing the corresponding horizontal rotation. Besides, equirectangular-to-cube and cube-to-equirectangular transformations are donated as and , respectively. Given an equirectangular representation input , the whole process can be expressed as follows:

| (1) | |||||

| (2) |

where represents the output of DCDE module.

That is feasible but results in a substantial computational overhead. To optimize the algorithm, we quickly implement the rotation-based process by moving the map blocks (illustrated in Fig. 4) according to the conversion relationship between the sample points and spherical coordinates.

3.2 Dual-Cubemap Depth Estimation Module

The dual-cubemap depth module is established using two independent and parallel branches, and each branch shares the same architecture. In each branch, we adopt the pre-trained ResNet-50 [13] to extract features and up-projection [7] to generate the depth from features.

However, the discontinuous depth will be caused due to the discontinuous features in each cube face. To eliminate depth discontinuity at the feature-level, we design a boundary-aware block to promote the interaction between the two branches. Specifically, we convert the cube feature maps that output from layer into equirectangular format to expose the discontinuity and fuse the two branches’ features. The input of layer of the two branches can be respectively expressed as:

| (3) | |||||

| (4) |

where subscript 1, 2 represent the first and second branch, respectively, and is Hadamard product. We get two estimated depth maps in cubemap representation from this module.

3.3 Boundary Revision Module

The depth estimated from the previous module is merely a coarse result with obvious boundary and depth discontinuity, another BR module is designed to revise the boundary at pixel-level further. We adopt an encoder-decoder architecture to implement this module. The network contains 10 convolution blocks, as shown in Fig. 3(right), and each of which comprises two different convolution layers. A maxpooling or deconvolution is adopted every two layers. Besides, skip connections are applied to connect the low-level and high-level features with the same resolution by a 3×3 convolutional layer to prevent the gradient vanishing problem and information imbalance in training [19].

3.4 Objective function

Depth mutations often appear at the boundary of the object. Therefore, to make the depth estimation at that area more accurate, we introduce gradient loss [20]. Besides, the reverse Huber [21] or Berhu loss [12] is also added in our objective function:

| (5) |

| (6) |

And our loss function can be written as:

| (7) |

where is the prediction produced by the first branch; is the ground truth; and P represents all the valid value in the ground truth map.

| (8) |

where is the prediction produced by the second branch.

| (9) |

where is the final prediction.

| Dataset | Method | ||||||

|---|---|---|---|---|---|---|---|

| FRCN [12] | 0.4008 | 0.6704 | 0.1244 | 0.7703 | 0.9174 | 0.9617 | |

| OmniDepth [16] | 0.4838 | 0.7643 | 0.1450 | 0.6830 | 0.8794 | 0.9429 | |

| Matterport3D | BiFuse(cp) [18] | 0.3929 | 0.6628 | - | - | - | - |

| BiFuse(sp) [7] | 0.3470 | 0.6295 | 0.1281 | 0.8452 | 0.9319 | 0.9632 | |

| Ours | 0.2552 | 0.5381 | 0.1075 | 0.8896 | 0.9722 | 0.9893 | |

| FRCN [12] | 0.3428 | 0.5774 | 0.1100 | 0.7230 | 0.9207 | 0.9731 | |

| OmniDepth [16] | 0.3743 | 0.6152 | 0.1212 | 0.6877 | 0.8891 | 0.9578 | |

| Stanford2D3D | BiFuse(cp) [18] | 0.2588 | 0.4407 | - | - | - | - |

| BiFuse(sp) [7] | 0.2343 | 0.4142 | 0.0787 | 0.8660 | 0.9580 | 0.9860 | |

| Ours | 0.1876 | 0.4573 | 0.0894 | 0.9039 | 0.9809 | 0.9886 |

| (10) |

where , represent horizontal gradient and vertical gradient, respectively; and n is the total number of valid values from the ground truth. The total objective function is:

| (11) |

where are the weight of each part. While training our model, the weights are set to and .

4 Experiment

To evaluate our framework, extensive experiments are conducted to compare the proposed method with other state-of-the-art approaches and validate the effectiveness of each module.

4.1 Basic Settings

We conduct our experiments on an Nvidia RTX 2080 MQ GPU. Adam optimizer is chosen, and the batch size is set as 4. The learning rate is for the first 20 epochs and then adjusted to for the next 20 epochs. In addition, a model dynamic storage mechanism is set up to pick the best performance model when the training is stable.

4.2 Metrics and Datasets

We adopt the popular evaluation metrics used by previous works [22, 16] for performance analysis, including MAE, RMSE, RMSE(log),and , .

The experiments are carried out on Matterport3D and Stanford2D3D, two real indoor datasets. The depth values of the two datasets are collected by 3D cameras or sensors, which means there is noise or missing values in certain areas. We follow the official guidance to filter the unavailable images and limit the data range to filter out invalid values. This process can avoid the noise interfering in the experiment (According to the official description, the depth collection values range in 0 m to 10 m). Finally, we get 6319 images for training, 865 for validating, and 1632 for testing from Matterport3D; and 898 for training, 81 for validating, 365 for testing from Stanford2D3D.

| BR | GL | ||||

|---|---|---|---|---|---|

| ✓ | ✗ | ✗ | ✗ | 0.5091 | 0.5264 |

| ✓ | ✓ | ✗ | ✗ | 0.2414 | 0.8519 |

| ✓ | ✓ | ✓ | ✗ | 0.2103 | 0.8894 |

| ✓ | ✓ | ✓ | ✓ | 0.1876 | 0.9039 |

4.3 Quantitative and Qualitative Analysis

We exhibit quantitative comparisons on the two datasets in Table 1, where we mark the 1st best solution in red and the 2nd best solution in blue. The results show that our method outperforms all the other methods in every metric on Matterport3D. On Stanford2D3D, we perform 1st in MAE, , , and 2nd in the remaining two metrics. Since there is more noise in Stanford2D3D, MAE can better measure the performance of the model than RMSE. Overall, our predictions are on average 4 more accurate in than that reported by BiFuse [7]. The comparisons experimental validates the effectiveness of our dual-cubemap approach. The two cubemaps not only focus on the distortion-free regions but also retain the information from the large FoV of the panorama as much as possible, which both contribute to improving estimation accuracy.

Fig. 5 demonstrates the qualitative comparisons of the two datasets. Note that since BiFuse [7] does not release the training code, we can only use their model trained on 360D [16] for qualitative analysis. In general, our results are visually more clear and sharper around boundaries, which can be attributed to the complementary information from the dual-cubemap and gradient loss constraint on edges.

4.4 Ablation Experiment

In this section, ablation studies are performed on the following components: 1) : the 0° rotated cubemap branch; 2) : the 45° rotated cubemap branch; 3) BR: boundary revision module; 4) GL: gradient loss. Especially, 1) and 2) are chosen simultaneously to represent the dual-cubemap depth module.

From Table 2, we can observe that one branch with a cubemap representation image as input performs much worse than others because a single cubemap has a smaller FoV than an equirectangular map. Furthermore, the supplement information from the other cubemap has dramatically improved the performance. Since GL is only used to sharpen edges [20] without eliminating the boundary discontinuity, the BR module is proposed. It finally revises the discontinuity of boundaries and outperforms 13 in terms of MAE.

As shown in Fig. 6, the depth estimated from our dual-cubemap is much better than that from a single cubemap, but there is still visible discontinuity. Moreover, the BR module revises the discontinuity effectively, and gradient loss makes the results more accurate on edges.

5 Conclusion

In this paper, we propose an end-to-end trainable omnidirectional depth estimation network consisting of two modules for depth estimation and boundary revision, respectively. Since the cubemap representation reduces the influence of distortion, we entirely rely on this representation for depth estimation. To extend the FoV of the cubemap presentation and revise the boundary, we adopt a rotation-based two-cubemap strategy. We reconstruct the large FoV depth map using the two cubemaps with consistent context but different discontinuous boundary regions. Experimental results demonstrate that our approach outperforms the current state-of-the-art methods.

Acknowledgement. This work was supported by National Natural Science Foundation of China (No.61772066, No.61972028), and the Fundamental

Research Funds for the Central Universities (No.2018JBZ001).

References

- [1] Iro Laina, Christian Rupprecht, Vasileios Belagiannis, Federico Tombari, and Nassir Navab, “Deeper depth prediction with fully convolutional residual networks,” 2016.

- [2] Yu Chuan Su and Kristen Grauman, “Kernel transformer networks for compact spherical convolution,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- [3] Carlos Esteves, Christine Allen-Blanchette, Ameesh Makadia, and Kostas Daniilidis, “Learning so(3) equivariant representations with spherical cnns,” International Journal of Computer Vision, vol. 128, no. 3, pp. 588–600, 2020.

- [4] Taco S Cohen, Mario Geiger, Jonas Koehler, and Max Welling, “Spherical cnns,” 2018.

- [5] Benjamin Coors, Alexandru Paul Condurache, and Andreas Geiger, “Spherenet: Learning spherical representations for detection and classification in omnidirectional images,” in European Conference on Computer Vision, 2018.

- [6] Yu Chuan Su and Kristen Grauman, “Flat2sphere: Learning spherical convolution for fast features from 360° imagery,” 2017.

- [7] Fu En Wang, Yu Hsuan Yeh, Min Sun, Wei Chen Chiu, and Yi Hsuan Tsai, “Bifuse: Monocular 360 depth estimation via bi-projection fusion,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- [8] Angel Chang, Angela Dai, Thomas Funkhouser, Maciej Halber, Matthias Niebner, Manolis Savva, Shuran Song, Andy Zeng, and Yinda Zhang, “Matterport3d: Learning from rgb-d data in indoor environments,” in 2017 International Conference on 3D Vision (3DV), 2018.

- [9] Iro Armeni, Sasha Sax, Amir Roshan Zamir, and Silvio Savarese, “Joint 2d-3d-semantic data for indoor scene understanding,” CoRR, vol. abs/1702.01105, 2017.

- [10] David Eigen, Christian Puhrsch, and Rob Fergus, “Depth map prediction from a single image using a multi-scale deep network,” CoRR, vol. abs/1406.2283, 2014.

- [11] Weiwei Sun and Ruisheng Wang, “Fully convolutional networks for semantic segmentation of very high resolution remotely sensed images combined with dsm,” IEEE Geoence and Remote Sensing Letters, pp. 1–5, 2018.

- [12] Iro Laina, Christian Rupprecht, Vasileios Belagiannis, Federico Tombari, and Nassir Navab, “Deeper depth prediction with fully convolutional residual networks,” 2016.

- [13] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun, “Deep residual learning for image recognition,” in IEEE Conference on Computer Vision and Pattern Recognition, 2016.

- [14] Jianbo Jiao, Ying Cao, Yibing Song, and Rynson W. H. Lau, “Look deeper into depth: Monocular depth estimation with semantic booster and attention-driven loss,” in Computer Vision - ECCV 2018 - 15th European Conference, Munich, Germany, September 8-14, 2018, Proceedings, Part XV, Vittorio Ferrari, Martial Hebert, Cristian Sminchisescu, and Yair Weiss, Eds. 2018, vol. 11219 of Lecture Notes in Computer Science, pp. 55–71, Springer.

- [15] Huan Fu, Mingming Gong, Chaohui Wang, Kayhan Batmanghelich, and Dacheng Tao, “Deep ordinal regression network for monocular depth estimation,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018.

- [16] Nikolaos Zioulis, Antonis Karakottas, Dimitrios Zarpalas, and Petros Daras, “Omnidepth: Dense depth estimation for indoors spherical panoramas: 15th european conference, munich, germany, september 8–14, 2018, proceedings, part vi,” 2018.

- [17] Keisuke Tateno, Nassir Navab, and Federico Tombari, “Distortion-aware convolutional filters for dense prediction in panoramic images,” in Proceedings of the European Conference on Computer Vision (ECCV), September 2018.

- [18] Hsien Tzu Cheng, Chun Hung Chao, Jin Dong Dong, Hao Kai Wen, Tyng Luh Liu, and Min Sun, “Cube padding for weakly-supervised saliency prediction in 360° videos,” 2018.

- [19] Zongwei Zhou, Md Mahfuzur Rahman Siddiquee, Nima Tajbakhsh, and Jianming Liang, “Unet++: A nested u-net architecture for medical image segmentation,” 2018.

- [20] Sudeep Pillai, Rares Ambrus, and Adrien Gaidon, “Superdepth: Self-supervised, super-resolved monocular depth estimation,” CoRR, vol. abs/1810.01849, 2018.

- [21] Jacopo Cavazza and Vittorio Murino, “Active regression with adaptive huber loss,” 2016.

- [22] Xinjing Cheng, Peng Wang, Yanqi Zhou, Chenye Guan, and Ruigang Yang, “Ode-cnn: Omnidirectional depth extension networks,” 2020.