Dissipative Gradient Descent Ascent Method: A Control Theory Inspired Algorithm for Min-max Optimization

Abstract

Gradient Descent Ascent (GDA) methods for min-max optimization problems typically produce oscillatory behavior that can lead to instability, e.g., in bilinear settings. To address this problem, we introduce a dissipation term into the GDA updates to dampen these oscillations. The proposed Dissipative GDA (DGDA) method can be seen as performing standard GDA on a state-augmented and regularized saddle function that does not strictly introduce additional convexity/concavity. We theoretically show the linear convergence of DGDA in the bilinear and strongly convex-strongly concave settings and assess its performance by comparing DGDA with other methods such as GDA, Extra-Gradient (EG), and Optimistic GDA. Our findings demonstrate that DGDA surpasses these methods, achieving superior convergence rates. We support our claims with two numerical examples that showcase DGDA’s effectiveness in solving saddle point problems.

I Introduction

In recent years, there has been a significant focus on solving saddle point problems, namely min-max optimization problems [1, 2, 3, 4, 5, 6]. These problems have garnered considerable attention, particularly in fields such as Generative Adversarial Networks (GANs) [7, 6, 8], Reinforcement Learning (RL) [9], and Constrained RL (C-RL) [10, 11]. However, a major challenge that persists in these approaches is the instability of the training process. That is, solving the min-max optimization problem via running the standard Gradient Descent Ascent (GDA) algorithm often leads to unstable oscillatory behavior rather than convergence to the optimal solution. This is particularly illustrated in bilinear min-max problems, such as the training of Wasserstein GANs [12] or solving C-RL problems in the occupancy measure space [13], for which the standard GDA fails to converge [1, 2].

In order to understand the instability of the GDA method and further tackle its limitation, we draw inspiration from the control-theoretic notions of dissipativity [14], which enables the design of stabilizing controllers using dynamic (state-augmented) components that seek to dissipate the energy generated by the unstable process. This aligns with recent work that leverages control theory tools in the analysis and design of optimization algorithms [15, 16, 17, 18, 19]. From a dynamical system point of view, dissipativity theory characterizes the manner in which energy dissipates within the system and drives it towards equilibrium. It provides a direct way to construct a Lyapunov function, which further relates the rate of decrease of this internal energy to the rate of convergence of the algorithm.

We motivate our developments by looking first at a simple scalar bilinear problem wherein, the energy of the system, expressed as the square 2-norm distance to the saddle, strictly increases on every iteration, leading to oscillations of increasing amplitude. To tackle this unstable oscillating behavior, we propose the Dissipative GDA method, which, as the name suggests, incorporates a simple friction term to GDA updates to dissipate the internal energy and stabilize the system. Our algorithm can be seen as a discrete-time version of [20], which has been applied to solve the C-RL problems [10]. In this work, we build on this literature, making the following contributions:

1. Novel control theory inspired algorithm: We illustrate how to use control theoretic concepts of dissipativity theory to design an algorithm that can stabilize the unstable behavior of GDA. Particularly, we show that by introducing a friction term, the proposed DGDA algorithm dissipates the stored internal energy and converges toward equilibrium.

2. Theoretical analysis with better rates: We establish the linear convergence of the DGDA method for bilinear and strongly convex-strongly concave saddle point problems. In both settings, we show that the DGDA method outperforms other state-of-the-art first-order explicit methods, surpassing the standard known linear convergence rate (see Table I and II).

3. Numerical Validation: We corroborate our theoretical results with numerical experiments by evaluating the performance of the DGDA method with GDA, EG, and OGDA methods. When applied to solve bilinear and strongly convex-strongly concave saddle point problems, the DGDA method systematically outperforms other methods in terms of convergence rate.

Outline: The rest of the paper is organized as follows. In Section II, we provide some preliminary definitions and background. In Section III, we leverage tools from dissipativity theory and propose the Dissipative GDA (DGDA) algorithm to tackle the unstable oscillatory behavior of GDA methods. In Section IV, we establish its linear convergence rate for bilinear and strongly convex-strongly concave problems, which outperforms state-of-the-art first-order explicit algorithms, including GDA, EG, and OGDA methods. In Section V, we support our claims with two numerical examples. We close the paper with concluding remarks and future research directions in Section VI.

| Bil. | Mokhtari, 2020 | Azizian, 2020 | This Work |

|---|---|---|---|

| EG | - | ||

| OG | - | ||

| DG | - | - |

II Problem Formulation

In this paper, we study the problem of finding saddle points in the min-max optimization problem:

| (1) |

where the function is a convex-concave function. Precisely, is convex for all and is concave for all . We seek to develop a novel optimization algorithm that converges to some saddle point of Problem 1.

Definition 1 (Saddle Point)

A point is a saddle point of convex-concave function (1) if and only if it satisfies for all .

Throughout this paper, we consider two specific instances of Problem 1 commonly studied in related literature: strongly convex-strongly concave and bilinear functions. Herein, we briefly present some definitions and properties.

Definition 2 (Strongly Convex)

A differentiable function is said to be -strongly convex if .

Notice that if , then we recover the definition of convexity for a continuously differentiable function and is -strongly concave if is -strongly convex. Another important property commonly used in the convergence analysis of optimization algorithms is the Lipschitz-ness of the gradient .

Definition 3 (-Lipschitz)

A function is L-Lipschitz if , we have .

Combining the above two properties leads to the first important class of problem that has been extensively studied [21, 22, 2, 1].

Assumption 1

(Strongly strongly convex-concave functions with L-Lipschitz Gradient) The function is continuously differentiable, strongly convex in , and strongly concave in . Further, the gradient vector is -Lipschitz.

It is also crucial to consider situations where the objective function is bilinear. Such bilinear min-max problems often appear when solving constrained reinforcement learning problems [10, 23], and training of WGANs [12].

Assumption 2 (Bilinear function)

The function is a bilinear function if it can be written in the form . For simplicity, we further assume that the matrix is full rank, with .

As seen in Table I and II as well as in Section IV, the linear convergence rates of existing algorithms are frequently characterized by the condition number . Specifically, when the objective function is bilinear, the condition number is defined as , where and denote the largest singular value and smallest singular of a matrix respectively. When the objective function is strongly convex-strongly concave with the -Lipschitz gradient, the condition number of the problem is defined as .

III Dissipative Gradient Descent Ascent Algorithm

| S.C | Zhang, 2021 | Mokhtari, 2020 | Azizian, 2020 | This Work |

|---|---|---|---|---|

| GD | - | - | - | |

| EG | - | - | ||

| OG | - | - | ||

| DG | - | - | - | |

This section introduces the proposed first-order method for solving the min-max optimization problem 1. The algorithm can be seen as a discretization of the algorithm proposed by [20], where the authors introduce a regularization framework for continuous saddle flow dynamics that guarantees asymptotic convergence to a saddle point under mild assumptions. However, the continuous-time analysis presented in [20] does generally extend to discrete time. In this work, we will show the linear convergence of the discrete-time version of this algorithm. Moreover, we illustrate a novel control-theoretic design methodology for optimization algorithms that can broadly use dissipativity tools to study the convergence of the resulting algorithm.

Our results build on gaining an intuitive understanding of the problems that one encounters when applying the vanilla Gradient Descent Ascent method to solve saddle point problems (1):

Gradient Descent Ascent (GDA)

| (2) | ||||

When (1) is strongly convex-strongly concave, and has L-Lipschitz gradients, the GDA method provides linear convergence, with step size and a know rate estimate of [24]. However, when the problem is bilinear, the standard GDA method fails to converge.

III-A Control Theory-Based Motivation

Before we dive into our algorithm, we would like to illustrate our key insight from control theory about how to characterize the energy stored in the system and how to introduce friction to dissipate energy and drive the system toward equilibrium. To get started, consider the following motivating example with a simple bilinear objective function:

| (3) |

We seek to analyze the behavior of (a possibly controlled) vanilla GDA algorithm applied to the above simple bilinear objective function, that is:

| (4) |

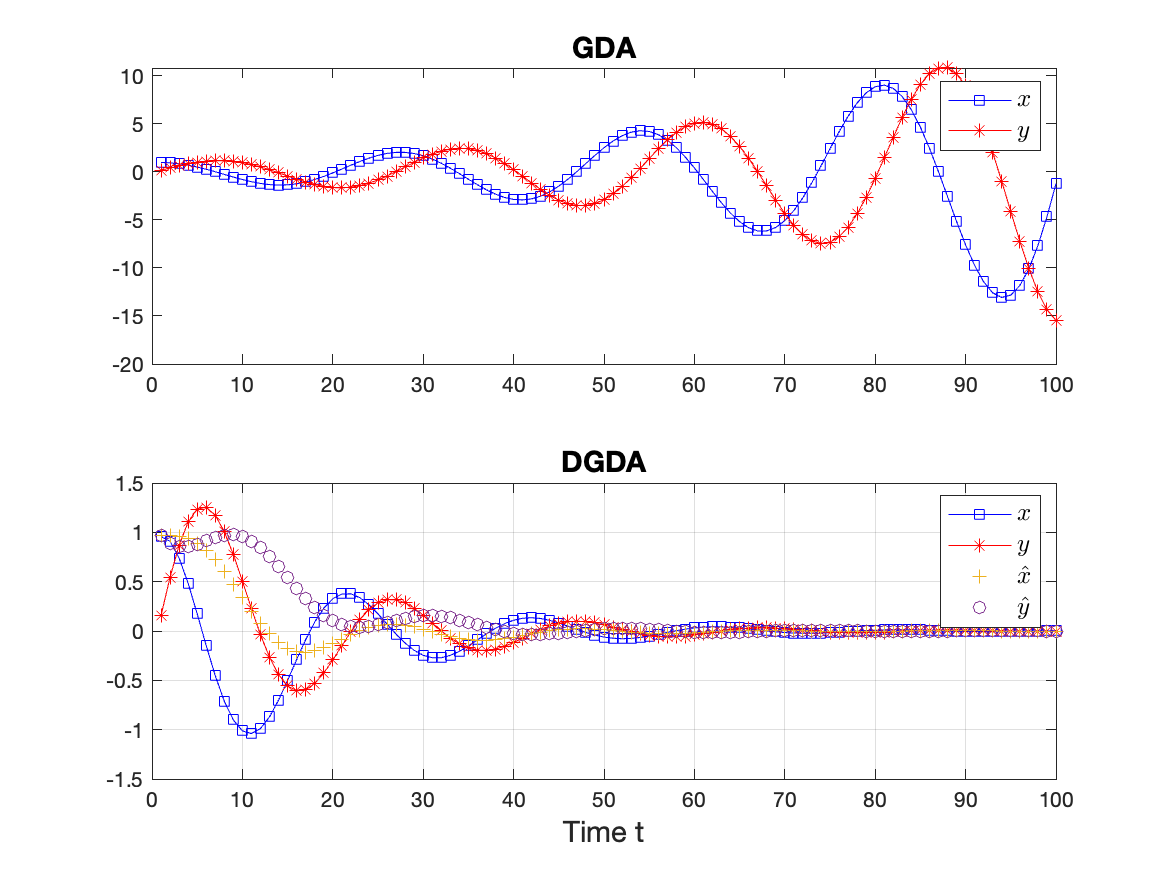

where represent the system state, a controlled input, and . In the absence of control (, ), the algorithm diverges as illustrated by the upper subplot of Figure 1. To understand this unstable process, we keep track of the system’s energy, expressed as the square 2-norm distance to the unique saddle point , . A simple algebraic manipulation shows that

| (5) |

Notably, (5) shows how, without control, the energy grows exponentially with each iteration.

We to choose so that the right-hand side of (5) is upper-bounded by , with . However, it is usually difficult to do without knowledge of the equilibrium.

The proposed approach uses state augmentation to incorporate a dissipation component that receives some external input and filters its fluctuations, i.e.,

| (6) |

The dissipation interpretation of (6) can be readily seen, again, by looking at the evolution of its energy

| (7) |

which has the natural tendency to dissipate energy when and , .

The key innovation of the proposed approach is to combine (4) and (6) with a proper choice of and that satisfies:

-

•

The combined energy decreases, i.e., there is such that:

(8) -

•

The iterates , so that the equilibrium of (4), that is, the saddle point does not change.

To achieve these goals we select

| (9) |

thus leading to the combined algorithm

| (10) | ||||

| (11) |

The choice of ensures that acts as a filtering process of . Further, since for constant , , the choice of ensures that the equilibrium of (4) remains unchanged. We will show in Section IV that one can choose and so that (8) holds. We refer to the bottom of Figure 1 for an illustration of its effectiveness.

III-B Dissipative GDA Algorithm

In the previous section, we showed how to design a dissipative component (6) to stabilize the GDA updates on a simple bilinear objective. Since the design approach is independent of the particular saddle function , it can be readily applied in more general settings. The algorithm presented below seeks to incorporate a similar friction component into a standard GDA update. This modification addresses the inherent limitation of the GDA update when applied to general bilinear problems.

Dissipative gradient descent ascent (DGDA):

| (12) |

Particularly, for as in (1), in (12) we introduce two new sets of variables and and a damping parameter . One important observation is that, once the system reaches equilibrium, i.e., , one necessarily has and , which ensures that the fixed point is necessarily a critical point of the saddle function.

In the remaining part of this section, we will first provide several key observations and properties of the proposed DGDA updates. Then, in the next section, we will formally prove that the proposed DGDA algorithm provides a linear convergence guarantee for both bilinear and strongly convex-strongly concave functions. Mainly, we will show that by simply adding a friction or damping term, the DGDA updates succeed in dissipating the energy of the system even in cases where GDA does not.

III-C Key Properties and Related Algorithms

The first important observation is that the above DGDA update could be considered as applying a vanilla GDA update to the following regularized surrogate for :

| (13) |

Note that this is different from the Proximal Point Method [21, 25] or introducing a regularization [26, 27].

Differences with regularization A commonly used method to ensure convergence is to introduce a regularization term in and [26, 27]:

| (14) |

Although the augmented objective function becomes strongly convex-strongly concave and the vanilla GDA updates will converge, this regularization changes the saddle points. While our algorithm also introduces two regularizing terms, the following Lemma verifies the fixed positions of saddle points between and with virtual variables aligned with original variables.

Lemma 1 (Saddle Point Invariance)

More interestingly, the regularization terms, and , do not introduce extra strong convexity-stong concavity to the original problem. Precisely, the augmented problem is neither strongly convex on () nor strongly concave on . Indeed, on the hyperplane of and , the augmented problem recovers the original problem . Additionally, the introduced regularization terms of the DGDA method are separable and local, thus preserving the distributed structure that original systems may have. Consequently, it can be seamlessly integrated into a fully distributed approach.

Differences with Proximal Point Method The Proximal Point Method for saddle point problems [21] shares a similar structure with DGDA algorithm. In the proximal method, the next iterates is the unique solution to the saddle point problem

| (15) | ||||

Using the optimality conditions of (15), the update of the Proximal Point method can be written as:

| (16) | ||||

This expression shows that the Proximal Point method is an implicit method. Although Implicit methods are known to be more stable and to benefit from better convergence properties [25, 6], implementing the above updates requires computing the operators and , and therefore may be computationally intractable. In contrast, the DGDA algorithm is an explicit algorithm, which applies a vanilla GDA update to the augmented objective function . Thus, as shown in Table I and II, and in the next section, we choose to compare the convergence of DGDA only with other explicit algorithms for saddle point problems, such as the GDA, Extra-Gradient (EG), and Optimistic GDA (OGDA) methods which have comparable per-iteration computational requirements; although the DGDA algorithm has twice as many state variables, it only requires a single gradient computation per update. Moreover, there is no need for retaining and reemploying the extrapolated gradient, which also sets it apart from the OGDA method.

We finalize this section comparing DGDA with recent efforts leveraging Moreau-Yosida smoothing techniques to solve nonconvex-concave [28, 29, 30], nonconvex-nonconcave [31] min-max optimization problems.

Smoothed-GDA method The Smoothed-GDA consists of the following updates

| (17) | ||||

where Smoothed-GDA was independently introduced by [32]. It was originally motivated as an ADMM algorithm to solve the linearly constrained nonconvex differentiable minimization problem [32]. The algorithm has been further extended to consider nonconvex-concave min-max optimization problems [28]. Despite its similarities with DGDA, the iterates of smoothed-GDA are sequential, requiring first updates in and subsequently in , whereas DGDA updates are fully parallel or synchronized. Unfortunately, the setting where such algorithm has been studied is different from the one considered in this paper, which difficult the comparison with the present work. A thorough comparison of DGDA with (LABEL:eq:_SGDA) is subject of current research.

IV Convergence Analysis

In this section, we provide a theoretical analysis of the proposed algorithm. For the purpose of our analysis, we consider a quadratic Lyapunov function to track the energy dissipation of the DGDA updates

which denotes the square 2-norm distance to the saddle point at the -th iteration. The goal is, therefore, to find some such that , where denotes the linear convergence rate.

IV-A Convergence Analysis for Bilinear Functions

When applied to the bilinear min-max optimization problem , the DGDA update (12) is equivalent to a linear dynamical system. Specifically, denote yields:

| (18) |

where As a result, the linear convergence of DGDA, as well as its convergence rate can be derived from the analysis of the spectrum of the associated matrix that defines the DGDA update in (18). This yields the following theorem.

Theorem 2

We remark that linear convergence requires . This is not surprising since GDA, which is known to diverge for bilinear functions, can be interpreted as DGDA method when . More importantly, by choosing the optimal step size , DGDA method achieves a better linear convergence rate than the EG and OGDA methods (see Table I).

IV-B Convergence Analysis for Strongly Convex Stronly Concave Functions

We now consider the case of strongly convex-strongly concave min-max problems. Let . The DGDA updates can be written as follows:

| (20) |

Because of the existence of the nonlinear term , we cannot analyze the spectrum as in the previous bilinear case. This is indeed a common challenge in analyzing most optimization algorithms beyond a neighborhood of the fixed point. We circumvent this problem by leveraging recent results on the analysis of variational mappings as via integral quadratic constraint [18, 16, 17]. More details can be found in the Appendix where we prove the following theorem.

Theorem 3

Similarly, as in the bilinear case, we remark on the importance of the dissipation component. When , a similar analysis as in the proof of the theorem recovers the lower bound of the convergence rate of GDA as shown in [19, 3.1]. Thus, our DGDA method provides a better convergence rate estimate than GDA, since clearly , and therefore .

We remark that while the rate obtained in Theorem 3 is clearly better than those of the EG and OGDA methods for large condition numbers (see Table II), the theorem fails to quantify the comparative performance of DGDA for small values of . The following corollary shows that indeed, the rate of DGDA is provably better for all .

V Numerical Experiments

In this section, we compare the performance of the proposed Dissipative gradient descent (DGDA) method with the Extra-gradient (EG), Gradient descent ascent (GDA), and Optimistic gradient descent ascent (OGDA) methods.

V-A Bilinear problem

We first consider the following bilinear min-max optimization problem: , where is full-rank. The simulation results are illustrated in Figure 2 and Figure 3. In this experiment, we set the dimension of the problem to and the iterates are initialized at , which are randomly drawn from the uniform distribution on the open interval .

We plot the errors (distance to saddle points) of DGDA, EG, and OGDA versus the number of gradient evaluations for this problem in Figure 2. The solid line and grey-shaded error bars represent the average trajectories and standard deviations of 20 trials, where in each trial the randomly generated matrix has a fixed condition number, i.e., . The key motivation is that all three algorithms’ convergence rates critically depend on , and by fixing the condition number, we provide an explicit comparison of their convergence speed.

We pick the step size for different methods according to theoretical findings. That is, we select and for DGDA (Theorem 2), for EG and OGDA (Theorem 6&7 [2] and Theorem 4&7 [1]). In Figure 2, we do not show the error of GDA since it diverges for this bilinear saddle point problem. All other three algorithms converge linearly, with the DGDA method providing the best performance.

Finally, to provide a qualitative demonstration of how DGDA fares with other existing algorithms, we further plot the sample trajectories of GDA, EG, OGDA, and EGDA on a simple 2D bilinear min-max problem, with . In Figure 3, we observe that while GDA diverges, the trajectories of all other three algorithms converge linearly to the saddle point . Interestingly, our proposed algorithm (DGDA) despite taking larger steps, exhibits faster linear convergence.

V-B Strongly convex-strongly concave problem

In the second numerical example, we focus on a strongly convex-strongly strongly-concave quadratic problem of the following form:

| (22) |

where the matrices satisfy , , . As a result, the problem (22) satisfy Assumption 1. In this experiment, we set the dimension of the problem to , and the iterates are initialized at , which are randomly drawn from the uniform distribution on the open interval . We plot the errors (distance to saddle points) of GDA, DGDA, EG, and OGDA versus the number of gradient evaluations for this problem in Figure 4. Again, the solid line and grey-shaded error bars represent the average trajectories and standard deviations of 20 trials, where in each trial the randomly generated matrix is chosen such that the condition number of (22) remains constant, i.e., . Similarly as in the bilinear problem in Section V-A, we pick the step size for the DGDA method according to our theoretical finding in Theorem 3. The step size of the GDA method is selected as (Theorem 5 [33]). The step sizes for EG and OGDA methods are selected as (Theorem 6&7 [2] and Theorem 4&7 [1]). According to the plots, all algorithms converge linearly, and the DGDA method has the best performance.

VI Conclusion and Future Work

In this work, we present the Dissipative GDA (DGDA) algorithm, a novel method for solving min-max optimization problems. Drawing inspiration from dissipativity theory and control theory, we address the challenge of diverging oscillations in bilinear min-max optimization problems when using the Gradient Descent Ascent (GDA) method. Particularly, we introduce a friction term into the GDA updates aiming to dissipate the internal energy and drive the system towards equilibrium. By incorporating a state-augmented regularization, our proposed DGDA method can be seen as performing standard GDA on an extended saddle function without introducing additional convexity. We further establish the superiority of the convergence rate of the proposed DGDA method when compared with other established methods including GDA, Extra-Gradient (EG), and Optimistic GDA. The analysis is further supported by two numerical examples, demonstrating its effectiveness in solving saddle point problems. Our future work includes studying the DGDA method in a stochastic setting and its application in solving Constrained Reinforcement learning problems in the policy space.

References

- [1] A. Mokhtari, A. Ozdaglar, and S. Pattathil, “A unified analysis of extra-gradient and optimistic gradient methods for saddle point problems: Proximal point approach,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2020, pp. 1497–1507.

- [2] W. Azizian, I. Mitliagkas, S. Lacoste-Julien, and G. Gidel, “A tight and unified analysis of gradient-based methods for a whole spectrum of differentiable games,” in International conference on artificial intelligence and statistics. PMLR, 2020, pp. 2863–2873.

- [3] E. Gorbunov, H. Berard, G. Gidel, and N. Loizou, “Stochastic extragradient: General analysis and improved rates,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2022, pp. 7865–7901.

- [4] N. Loizou, H. Berard, G. Gidel, I. Mitliagkas, and S. Lacoste-Julien, “Stochastic gradient descent-ascent and consensus optimization for smooth games: Convergence analysis under expected co-coercivity,” Advances in Neural Information Processing Systems, vol. 34, pp. 19 095–19 108, 2021.

- [5] A. Beznosikov, E. Gorbunov, H. Berard, and N. Loizou, “Stochastic gradient descent-ascent: Unified theory and new efficient methods,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2023, pp. 172–235.

- [6] G. Gidel, H. Berard, G. Vignoud, P. Vincent, and S. Lacoste-Julien, “A variational inequality perspective on generative adversarial networks,” arXiv preprint arXiv:1802.10551, 2018.

- [7] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” Advances in neural information processing systems, vol. 27, 2014.

- [8] C. Daskalakis, A. Ilyas, V. Syrgkanis, and H. Zeng, “Training gans with optimism,” arXiv preprint arXiv:1711.00141, 2017.

- [9] D. Pfau and O. Vinyals, “Connecting generative adversarial networks and actor-critic methods,” arXiv preprint arXiv:1610.01945, 2016.

- [10] T. Zheng, P. You, and E. Mallada, “Constrained reinforcement learning via dissipative saddle flow dynamics,” in 2022 56th Asilomar Conference on Signals, Systems, and Computers. IEEE, 2022, pp. 1362–1366.

- [11] D. Ding, C.-Y. Wei, K. Zhang, and A. Ribeiro, “Last-iterate convergent policy gradient primal-dual methods for constrained mdps,” arXiv preprint arXiv:2306.11700, 2023.

- [12] J. Adler and S. Lunz, “Banach wasserstein gan,” Advances in neural information processing systems, vol. 31, 2018.

- [13] E. Altman, Constrained Markov decision processes: stochastic modeling. Routledge, 1999.

- [14] S. Sastry, Nonlinear systems: analysis, stability, and control. Springer Science & Business Media, 2013, vol. 10.

- [15] Z. E. Nelson and E. Mallada, “An integral quadratic constraint framework for real-time steady-state optimization of linear time-invariant systems,” in 2018 annual American control conference (ACC). IEEE, 2018, pp. 597–603.

- [16] B. Hu and L. Lessard, “Dissipativity theory for nesterov’s accelerated method,” in International Conference on Machine Learning. PMLR, 2017, pp. 1549–1557.

- [17] M. Fazlyab, A. Ribeiro, M. Morari, and V. M. Preciado, “Analysis of optimization algorithms via integral quadratic constraints: Nonstrongly convex problems,” SIAM Journal on Optimization, vol. 28, no. 3, pp. 2654–2689, 2018.

- [18] L. Lessard, B. Recht, and A. Packard, “Analysis and design of optimization algorithms via integral quadratic constraints,” SIAM Journal on Optimization, vol. 26, no. 1, pp. 57–95, 2016.

- [19] G. Zhang, X. Bao, L. Lessard, and R. Grosse, “A unified analysis of first-order methods for smooth games via integral quadratic constraints,” The Journal of Machine Learning Research, vol. 22, no. 1, pp. 4648–4686, 2021.

- [20] P. You and E. Mallada, “Saddle flow dynamics: Observable certificates and separable regularization,” in 2021 American Control Conference (ACC). IEEE, 2021, pp. 4817–4823.

- [21] R. T. Rockafellar, “Monotone operators and the proximal point algorithm,” SIAM journal on control and optimization, vol. 14, no. 5, pp. 877–898, 1976.

- [22] P. Tseng, “On linear convergence of iterative methods for the variational inequality problem,” Journal of Computational and Applied Mathematics, vol. 60, no. 1-2, pp. 237–252, 1995.

- [23] M. Wang, “Randomized linear programming solves the markov decision problem in nearly linear (sometimes sublinear) time,” Mathematics of Operations Research, vol. 45, no. 2, pp. 517–546, 2020.

- [24] B. Grimmer, H. Lu, P. Worah, and V. Mirrokni, “The landscape of the proximal point method for nonconvex–nonconcave minimax optimization,” Mathematical Programming, vol. 201, no. 1-2, pp. 373–407, 2023.

- [25] N. Parikh, S. Boyd et al., “Proximal algorithms,” Foundations and trends® in Optimization, vol. 1, no. 3, pp. 127–239, 2014.

- [26] A. Krogh and J. Hertz, “A simple weight decay can improve generalization,” Advances in neural information processing systems, vol. 4, 1991.

- [27] T. Hastie, R. Tibshirani, J. H. Friedman, and J. H. Friedman, The elements of statistical learning: data mining, inference, and prediction. Springer, 2009, vol. 2.

- [28] J. Zhang, P. Xiao, R. Sun, and Z. Luo, “A single-loop smoothed gradient descent-ascent algorithm for nonconvex-concave min-max problems,” Advances in neural information processing systems, vol. 33, pp. 7377–7389, 2020.

- [29] Z. Xu, H. Zhang, Y. Xu, and G. Lan, “A unified single-loop alternating gradient projection algorithm for nonconvex–concave and convex–nonconcave minimax problems,” Mathematical Programming, pp. 1–72, 2023.

- [30] J. Yang, A. Orvieto, A. Lucchi, and N. He, “Faster single-loop algorithms for minimax optimization without strong concavity,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2022, pp. 5485–5517.

- [31] T. Zheng, L. Zhu, A. M.-C. So, J. Blanchet, and J. Li, “Universal gradient descent ascent method for nonconvex-nonconcave minimax optimization,” Advances in Neural Information Processing Systems, vol. 36, 2024.

- [32] J. Zhang and Z.-Q. Luo, “A proximal alternating direction method of multiplier for linearly constrained nonconvex minimization,” SIAM Journal on Optimization, vol. 30, no. 3, pp. 2272–2302, 2020.

- [33] A. Beznosikov, B. Polyak, E. Gorbunov, D. Kovalev, and A. Gasnikov, “Smooth monotone stochastic variational inequalities and saddle point problems: A survey,” European Mathematical Society Magazine, no. 127, pp. 15–28, 2023.

- [34] G. M. Korpelevich, “The extragradient method for finding saddle points and other problems,” Matecon, vol. 12, pp. 747–756, 1976.

- [35] R. T. Rockafellar, “Augmented lagrangians and applications of the proximal point algorithm in convex programming,” Mathematics of operations research, vol. 1, no. 2, pp. 97–116, 1976.

- [36] G. Zhang and Y. Yu, “Convergence of gradient methods on bilinear zero-sum games,” in International Conference on Learning Representations, 2019.

- [37] J. P. Hespanha, Linear systems theory. Princeton university press, 2018.

-A First Order Algorithms for Saddle Point Problems

In this section, we introduce several popular first-order methods for solving the min-max problem 1 in the machine learning community. Precisely, we focus on Gradient Descent-Ascent (GDA), Extra-gradient (EG), Optimistic Gradient Descent-Ascent (OGDA), Proximal Point and Smoothed-GDA methods.

Gradient descent ascent (GDA)

| (23) | ||||

When the problem is strongly convex-strongly concave and L-Lipschitz, the GDA method provides linear convergence, with step size and a know rate estimate of [24, 19]. However, when the problem is bilinear, the standard GDA method fails to converge. Therefore, variants of the gradient method such as Extra-Gradient and Optimistic Gradient Descent-Ascent methods have attracted much attention in recent literature because of their superior empirical performance in solving min-max optimization problems such as training GANs and solving C-RL problems.

Extra-gradient (EG)

| (24) | ||||

Extra-gradient is a classical method introduced in [34], where its linear rate of convergence for bilinear functions and smooth strongly convex-strongly concave functions have been established in many recent works (see Table I and II). The Extra-gradient method first computes an extrapolated point () by performing a GDA update. Then the gradients evaluated at the extrapolated point are used to compute the new iterates .

The linear convergence rate of EG for strongly convex-strongly concave is established, with a standard known rate of ; see e.g.[2, 1]. One issue with the Extra-gradient method is that, as the name suggests, each update requires evaluation of extra gradients at the extrapolated point (), which doubles the computational complexity of EG method compared to vanilla GDA method.

Optimistic gradient descent ascent (OGDA)

| (25) | ||||

The Optimistic gradient descent ascent (OGDA) method adds a ”negative-momentum” term to each of the updates, which differentiates the OGDA method from the vanilla GDA method. Meanwhile, the OGDA method stores and re-uses the extrapolated gradient for the extrapolation, which only requires a single gradient computation per update.

Proximal Point (PP)

The proximal point method for convex minimization has been extensively studied [35, 25] and extended to solve saddle point problems in [21]. Define the iterates as the unique solution to the saddle point problem

| (26) | ||||

Using the optimality conditions, the update of the Proximal Point method can be written as:

| (27) | ||||

This expression shows that in contrast to explicit methods such as GDA, EG, and OGDA methods, the Proximal Point method is an implicit method. Although Implicit methods are known to be more stable and to benefit from better convergence properties [25, 6], implementing the above updates requires computing the operators and , and therefore may be computationally intractable. Notably, in [1], the authors show the EG and OGDA methods can be interpreted as an approximation of the PP method, and therefore exhibits similar convergence performance.

Smoothed-GDA method

We finalize this section comparing DGDA with recent efforts leveraging Moreau-Yosida smoothing techniques to solve nonconvex-concave [28, 29, 30], nonconvex-nonconcave [31] min-max optimization problems.

| (28) | ||||

where .

The Smoothed-GDA was independently introduced by Jiawei et al. in [32] and later [28]. It was originally motivated by ADMM to solve the linearly constrained nonconvex differentiable minimization problem [32], where they introduce an extra quadratic proximal term for the equality constraints and an extra sequence . They claim this smoothing or exponential averaging scheme is necessary for the convergence of the proximal ADMM when the objective is nonconvex. Later on, this scheme is further extended to solve the nonconvex-concave min-max optimization problem [28].

-B Proof of Theorem 2

We consider, for ease of presentation, the case when is a square matrix. The extension for non-square matrices is straightforward and has been covered in the literature [36, Appendix G]. Applying the updates 12 to and denoting yields:

| (29) | ||||

where

According to [2, Lemma 7]

We will use and to denote the largest singular value and smallest singular of matrix , respectively. And according to Assumption 2, we have . Since is a normal matrix and diagonalizable, we can compute the eigenvalues of the linear system (29) using the following similarity transformation

| (30) | ||||

where , , , with . In order to show linear convergence, we want to show that , where are the eigenvalues of the above matrix (30) (i.e., the spectral radius of matrix (30)). As straight forward computation leads to

| (31) |

Since, for complex number , , the magnitude of eigenvalues are given by,

Suppose that for all , we choose and , which implies . From (-B), we have,

According to classical linear system theory, e.g. [37, Theorem 8.3], the above spectral radius analysis of the linear system (29) results in the following linear convergence rate estimate:

where .

Furthermore, we want to select the optimal step size . The immediate step is to substitute the optimal , which yields the following inequality:

| (32) |

The spectral radius is therefore given by choosing above, i.e.,

where the last inequality comes from selecting optimal of a quadratic polynomial of . Using the fact that , we have

| (33) |

Again, this results in the following linear convergence rate estimate:

| (34) |

Remark 1

We could also choose such that . And we could construct a similar linear convergence rate by repeating the above process. However, in practice, we found that the step sizes always perform better in numerical experiments. Therefore, we choose this pair of step sizes by default.

Remark 2

Since GDA method could be interpreted as a special case of DGDA method when selecting , the above step proves that when , the GDA method diverges for a bilinear objective function. Specifically, when , we have and

| (35) |

-C Proof of Theorem 3

The proof relies on the application of dissipativity theory to construct Lyapunov functions and establish linear convergence. For more detailed information, refer to [16].

According to [16], a linear dynamical system of the form:

| (36) |

Here, is the state, is the input, is the state transition matrix and is the input matrix. Suppose that there exist a (Lyapunov) function , satisfying , some and a supply rate function such that

| (37) |

This dissipation inequality (37) implies that , and the state will approach a minimum value ate equilibrium no slower than the linear rate . The flowing theorem states how to construct the dissipation inequality (37) by solving a semidefinite programming problem.

Theorem 5

A major benefit of the proposed constructive dissipation approach is that it replaces the trouble some component of a dynamical system (e.g. the gradient term ) by a quadratic constraint on its inputs and outputs that is always satisfied, namely the supply rate constraint . This leads to a two-step novel approach to the convergence analysis of optimization algorithms.

-

1.

Choose a proper quadratic supply rate function such that , that depends on the specific nonlinear term.

-

2.

Solve the Linear Matrix Inequality (39) to obtain a storage function and finding the linear convergence rate .

We will apply this methodology to analyze the DGDA update (12). Let and , and rewrite (12) as in the form of (36):

| (40) | ||||

where .

According to the previous discussion, the first step would be to choose a proper quadratic supply rate function such that , where that depends on the specific nonlinear term . According to the equations (7) in the work by Hu et al. (2017) [16] and Lemma 6 from the research by Lessard et al. (2016) [18], the following applies to the nonlinear operator that meets the conditions specified in Assumption 1:

| (41) |

The conditions in Assumption 1 are also commonly referred to as being L-smooth and m-strongly monotone, as can be found in related literature on variational inequality problems, such as the works by [19, 6]]. Therefore, we could easily extend the above LMI into the following supply rate function for DGDA updates, by augmenting the states :

| (42) | ||||

as a proper quadratic supply rate function , whenever .

Finally, according to Theorem 5 and the above discussion, proving linear convergence reduces to finding a positive definite a matrix , such that (39) is satisfied, where the problem parameters are given by

| (43) | ||||

where is the Kronecker product. Due to the Kronecker structure of this problem, this is equivalent to solving an LMI problem of dimension 3 by 3, with design parameters , with , , and . Because this Linear Matrix Inequality is simple (3 by 3), it can be solved using analytical methods. This, in turn, results in a feasible solution for the LMI, denoted as follows:

| (44) | ||||

After substituting the definition for condition number , the convergence rate simplifies to:

| (45) |