Dissecting the impact of different loss functions with gradient surgery

Abstract

Pair-wise loss is an approach to metric learning that learns a semantic embedding by optimizing a loss function that encourages images from the same semantic class to be mapped closer than images from different classes. The literature reports a large and growing set of variations of the pair-wise loss strategies. Here we decompose the gradient of these loss functions into components that relate to how they push the relative feature positions of the anchor-positive and anchor-negative pairs. This decomposition allows the unification of a large collection of current pair-wise loss functions. Additionally, explicitly constructing pair-wise gradient updates to separate out these effects gives insights into which have the biggest impact, and leads to a simple algorithm that beats the state of the art for image retrieval on the CAR, CUB and Stanford Online products datasets.

1 Introduction

Deep Metric Learning trains networks to map semantically related images to similar locations in an embedding space. Metric learning is useful in extreme classification settings where there are so many classes, and so limited an embedding size, that standard approaches fail; when there is a need to compare features extracted from images of unseen classes; or when incomplete labels allow a system to know that images come from the same or different classes without knowing what those classes are.

In this domain, one popular pair-wise loss to train a network is Triplet Loss. Triplets are three images comprising an anchor image, a positive image from the same class, and a negative image from a different class. The network is trained with a loss function that penalizes situations where the anchor-negative pair is closer than the anchor-positive pair. Many variations of this basic approach explore ways to choose which triplets should be included in the optimization or how much they should be weighted, whether the optimization should consider distances or angles between the embedded vectors, and what specific loss function should drive the scoring of a particular triplet.

One recent work gave a large-scale analysis of many of these variations [11] and found that a substantial fraction of the reported performance variation disappears with careful matching of experimental conditions. In this work, we propose a further unifying analysis of these approaches that explicitly considers how the pair-wise loss function attempts to affect the embedding locations of the anchor, positive, and negative examples. Different pair-wise loss functions have gradients that affect the desired location of each embedded example in different ways. Those gradients differ in the direction in which the anchor, positive, and negative examples are pushed, in the overall importance or weight given to different triplets, and in the relative importance or weight given to the anchor-positive vs. the anchor-negative pairs.

In addition to the analysis, we exploit the fact that PyTorch [12] allows for the programmatic specification of gradients, allowing us to explicitly control the above gradient components, and then supports back-propagation to encourage the low-level features to move in this way. This flexibility allows us to explore the relative contributions of these components of the gradient and better understand what is and is not important in the optimization. Finally, we demonstrate the potential to directly modify the gradient components to train models for deep metric learning tasks instead of loss function modification.

The four main contributions of this work are [footnote 1: Rejected by CVPR 2021, ICCV 2021, and CVPR 2022]:

- a direct gradient framework to create a unified analysis of many recent triplet and pair-wise loss functions in terms of their gradients,

- an experimental analysis showing how different choices for components of the gradient affect model performance,

- a deeper understanding of the practical effects of defining a loss based on the Euclidean metric compared with the cosine similarity metric, and

- an integration of the best choice of each component to create a new gradient rule that outperforms the current state-of-the-art result for image retrieval by a clear margin across multiple datasets.

2 Background

Many loss functions have been proposed to solve the deep metric learning problem. Pair-wise loss functions such as contrastive loss [2], binomial deviance loss [24], lifted structure loss [17], and multi-similarity loss [19] penalize pairs of same-label instances if their distance is large and pairs of different-label instances if their distance is small. The triplet loss function [5, 15] and its variants such as circle loss [18] form a triplet that contains anchor, positive, and negative instances, where the anchor and positive instances share the same label, and the anchor and negative instances have different labels. These loss functions encourage the anchor-positive distance to be smaller than the anchor-negative distance. Other variants of triplet loss integrate more negative instances into a triplet, such as N-Pair loss [16]. Proxy loss [10] defines for each class a learnable anchor as a proxy. During training, each instance is directly pulled toward its proxy and pushed away from the proxies of other classes.

Because of the rapid growth in the number of new loss functions, [11] raised concerns about the fairness of comparisons among them. That paper re-implements many methods published before 2019, fixes settings such as the network architecture, optimizer, and image preprocessing, and compares the methods on an apples-to-apples basis. This gives a relatively clear comparison of many loss functions but does not explore why some methods are superior to others.

Recent works such as Multi-Similarity Loss and Circle Loss [19, 18, 22] start with standard triplet loss formulations and adjust the gradient of the loss function to give clear improvements with very simple code modifications. These works all find an explicit loss function whose gradient has the desired form. In some cases, like the current state-of-the-art approach across many datasets [19], the updated loss function for one triplet includes the relative similarity between the anchor-positive pair and the pairs formed by the anchor and other examples of the anchor's class. This more complicated loss function and more complicated gradient may cause subtle challenges in the optimization process.

Other strategies start with a desired gradient weighting function and integrate the desired gradients to solve for a loss function whose gradient has the appropriate properties. This is often limited to simple weighting strategies, such as the simple linear form in [18] and simple gradient removal for positive pairs when triplets contain hard negative in [22], because it may be hard to find the loss function whose gradient is consistent with complex weighting strategies.

Explicitly updating the direction of the gradient was introduced in [9]. The authors encourage the anchor-positive and anchor-negative directional updates to be orthogonal (so they don't cancel each other), but include this as a "direction regularization" term, which does not enforce orthogonality.

The most closely related work is P2Sgrad [27], whose authors analyze the gradient of the family of margin-based softmax losses and directly modify the gradient with the cosine similarity for better optimization. Compared to P2Sgrad, our work focuses on triplet and pair-wise loss functions.

The framework in this paper directly explores the space of desired gradient updates, as shown in Figure 1. By not limiting ourselves to designing a loss function with appropriate gradients, we can be more explicit in experimentally dissecting the effects of different parts of the gradient. Furthermore, we can recombine the gradient terms that are experimentally most useful in a form of gradient surgery [25] that very slightly alters existing algorithms to give improved performance.

3 The Role of the Gradient in Metric Learning

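We define a collection of terms for how a batch of images affects a network. Let $X$ be a batch of input images, $f$ be the normalized feature vectors of the images extracted by the network, $L$ be the loss value for the batch, $\theta$ be the parameters of the network, $\eta$ be the learning rate, $F$ be the mapping function of the network, and $G$ be the loss function. In the forward training step, the expression is:

$$f = F(X; \theta), \qquad L = G(f). \tag{1}$$

The network weights are updated as:

$$\theta \leftarrow \theta - \eta \frac{\partial L}{\partial \theta} = \theta - \eta \frac{\partial L}{\partial f} \frac{\partial f}{\partial \theta}. \tag{2}$$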

This equation highlights that the gradient of the loss function (rather than the loss function itself) directly affects how the model updates its parameters. Therefore, explicitly exploring the gradient is a useful path to exploring network learning behavior.

We decompose the gradient into two terms, $\partial L / \partial f$ and $\partial f / \partial \theta$. The first term represents how changing the embedded feature location affects the loss, and this is the term explored in most detail in this work. The second term represents how changes to the model parameters (network weights) affect the feature embedding. In a modern deep network with multiple layers, the chain rule expands the second term into a product of terms, one for each layer.

In the following discussion, we focus on the particular forms of the first term in many triplet and pair-wise loss functions and then propose to directly set and design the first term for model training. In Section 3.1, as an example, two commonly used triplet losses are decomposed into components, and those components are categorized into three parts. Sections 3.2, 3.3, and 3.4 then extend the analysis to more existing loss functions.

3.1 Gradient of Triplet Losses

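Given a triplet of normalized features $(f_a, f_p, f_n)$ for the anchor, positive, and negative examples, there are two commonly used triplet losses in the literature. The first is a triplet loss based on Euclidean distance:

$$L_{euc} = \max\left(D_{ap}^2 - D_{an}^2 + m,\ 0\right) \tag{3}$$

where $D_{ap} = \lVert f_a - f_p \rVert$ and $D_{an} = \lVert f_a - f_n \rVert$ are the distances between the anchor-positive and the anchor-negative pairs, and $m$ is a distance margin. A second common triplet loss is the triplet loss based on cosine similarity with NCA [1]:

$$L_{cos} = -\log \frac{\exp(\lambda S_{ap})}{\exp(\lambda S_{ap}) + \exp(\lambda S_{an})} \tag{4}$$

where $S_{ap} = f_a^\top f_p$ is the cosine similarity computed as the dot product of the normalized anchor feature and the normalized feature from the positive example, the anchor-negative similarity $S_{an} = f_a^\top f_n$ is computed in the same way, and $\lambda$ is the scaling parameter.

When comparing these two loss functions, their substantial differences make it challenging to determine how the loss affects performance. One loss is based on the Euclidean distance combined with a hinge function, while the other uses cosine similarity along with a negative log softmax function to combine the anchor-positive and anchor-negative pairs. Looking at the gradients of these loss functions makes the difference clearer. For the triplet loss based on Euclidean distance, if the loss is greater than 0, its gradient can be derived from Equation 3 as:

$$\frac{\partial L_{euc}}{\partial f_p} = -2 D_{ap}\, \hat{e}_{ap}, \qquad \frac{\partial L_{euc}}{\partial f_n} = 2 D_{an}\, \hat{e}_{an}, \qquad \frac{\partial L_{euc}}{\partial f_a} = 2 D_{ap}\, \hat{e}_{ap} - 2 D_{an}\, \hat{e}_{an} \tag{5}$$

Being explicit about this gradient allows us to name the direction in which the positive example is pulled toward the anchor example as $\hat{e}_{ap}$. This is a unit vector defined as $\hat{e}_{ap} = (f_a - f_p) / \lVert f_a - f_p \rVert$, with the corresponding direction for the negative example, $\hat{e}_{an} = (f_a - f_n) / \lVert f_a - f_n \rVert$.

The gradient of the triplet loss function based on cosine similarity can also be derived from Equation 4 to give a unit direction and magnitude:

$$\frac{\partial L_{cos}}{\partial f_p} = -\lambda\, T\, f_a, \qquad \frac{\partial L_{cos}}{\partial f_n} = \lambda\, T\, f_a, \qquad \frac{\partial L_{cos}}{\partial f_a} = \lambda\, T\, (f_n - f_p), \qquad T = \frac{\exp(\lambda S_{an})}{\exp(\lambda S_{ap}) + \exp(\lambda S_{an})} \tag{6}$$

where $f_a$, $f_p$, and $f_n$ are the unit gradient directions.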

Though the gradients of the two losses contain different components, those components can be categorized into two major parts: a unit gradient direction for moving the feature and a scalar weight that affects the length of the gradient in that direction. The weight itself can be divided into two sub-parts: the weight related to all three features in a triplet, $f_a$, $f_p$, and $f_n$ (Triplet Weight), and the weight related to the positive pair $f_a$ and $f_p$ or the negative pair $f_a$ and $f_n$ in a triplet (Pair Weight).
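The decomposition into direction, pair weight, and triplet weight can be checked numerically. The following is a minimal sketch, assuming the squared-distance form of the Euclidean triplet loss and the NCA-style cosine loss. The helper names, the margin of 0.1, and the scale of 8.0 are illustrative choices, not values taken from the paper. It writes the gradient with respect to the positive feature as a product of the three components and compares it with a finite-difference estimate.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def unit(u):
    n = norm(u)
    return [a / n for a in u]

def euclidean_loss(fa, fp, fn, margin=0.1):
    # Hinge on squared distances (assumed form of the Euclidean triplet loss).
    d_ap = norm([a - p for a, p in zip(fa, fp)])
    d_an = norm([a - n for a, n in zip(fa, fn)])
    return max(d_ap ** 2 - d_an ** 2 + margin, 0.0)

def cosine_loss(fa, fp, fn, scale=8.0):
    # Negative log softmax over the two similarities, written in a stable form.
    s_ap, s_an = dot(fa, fp), dot(fa, fn)
    return math.log(1.0 + math.exp(scale * (s_an - s_ap)))

def euclidean_grad_positive(fa, fp):
    # Valid while the hinge is active (loss > 0).
    direction = unit([p - a for a, p in zip(fa, fp)])    # gradient points anchor -> positive
    pair_weight = norm([a - p for a, p in zip(fa, fp)])  # anchor-positive distance
    triplet_weight = 2.0                                 # same constant for every triplet
    return [triplet_weight * pair_weight * d for d in direction]

def cosine_grad_positive(fa, fp, fn, scale=8.0):
    s_ap, s_an = dot(fa, fp), dot(fa, fn)
    direction = [-a for a in fa]                         # a descent step moves f_p along f_a
    pair_weight = scale                                  # constant scaling parameter
    triplet_weight = 1.0 / (1.0 + math.exp(scale * (s_ap - s_an)))
    return [triplet_weight * pair_weight * d for d in direction]

def numeric_grad(loss_of_fp, fp, eps=1e-6):
    # Central finite differences with respect to each coordinate of f_p.
    grad = []
    for i in range(len(fp)):
        hi = list(fp)
        lo = list(fp)
        hi[i] += eps
        lo[i] -= eps
        grad.append((loss_of_fp(hi) - loss_of_fp(lo)) / (2 * eps))
    return grad

# Hypothetical unit-length features for one triplet.
fa = unit([1.0, 0.2, 0.0])
fp = unit([0.6, 0.8, 0.0])
fn = unit([0.9, 0.3, 0.1])
```

In this sketch the two losses differ in all three components: the Euclidean loss has a distance-dependent pair weight and a constant triplet weight, while the cosine loss has a constant pair weight and a triplet weight that depends on both similarities.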

With this categorization of the gradient components, it becomes easy to compare the effects of each component. Before the comparison, we first show in Sections 3.2, 3.3, and 3.4 how recently proposed loss functions can be characterized by computing the direction and weights of the different gradient terms. We then compare the isolated effects of each gradient component in Sections 5.1, 5.2, and 5.3.

3.2 Unit Gradient Direction

The first gradient component is the unit vector in the direction of the gradient, derived from how the loss function moves the relative configuration of the anchor, positive, and negative features. We refer to the unit gradient directions of the two most common metrics, Euclidean distance and cosine similarity, as the Euclidean direction and the cosine direction. Recent work [9] also suggests two other directions, the Euclidean orthogonal direction and the cosine orthogonal direction.

Euclidean Direction:

In Equation 5, the geometric interpretation of the Euclidean direction is to move the positive feature directly towards the anchor and move the negative feature directly away from the anchor, as shown in Figure 2. The direction for the anchor (not shown in the figure) is a combination of these directions.

Cosine Direction:

In Equation 6, the geometric interpretation of the cosine direction for the positive pair is to move the positive feature in the direction of the anchor feature and move the anchor in the direction of the positive feature. For the negative pair, it is to move the negative feature opposite to the anchor feature direction and move the anchor opposite to the negative feature direction, as shown in Figure 2.

Orthogonal Direction:

The direct gradient modification in Equation 7 can be applied to both the Euclidean and cosine directions. It requires the negative pair to move in a direction orthogonal to the direction in which the positive pair is moving. This is constrained as:

| (7) |

This gradient was realized in recent work [9], which implicitly encourages the negative examples to move orthogonally to the anchor-positive direction by adding a regularizer to the loss function. Our approach of directly specifying the gradient direction for each example highlights the impact of this loss function.
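One way to enforce such an orthogonality constraint directly is a projection step. The sketch below is an assumption about how this could be done, not a reproduction of Equation 7: it removes from the negative-pair direction its component along the positive-pair direction and renormalizes the result. The function name `orthogonalize` is hypothetical.

```python
import math

def orthogonalize(d_neg, d_pos):
    """Return a unit vector: d_neg with its component along d_pos removed."""
    pos_norm_sq = sum(p * p for p in d_pos)
    coeff = sum(n * p for n, p in zip(d_neg, d_pos)) / pos_norm_sq
    residual = [n - coeff * p for n, p in zip(d_neg, d_pos)]
    length = math.sqrt(sum(r * r for r in residual))
    if length < 1e-12:
        # The two directions are parallel, so no orthogonal direction is defined.
        raise ValueError("d_neg is parallel to d_pos")
    return [r / length for r in residual]

# Hypothetical directions for the positive and negative pairs.
d_pos = [1.0, 0.0, 0.0]
d_neg = [0.6, 0.8, 0.0]
d_neg_orth = orthogonalize(d_neg, d_pos)
```

Unlike a regularizer added to the loss, a projection of this kind guarantees a zero dot product between the two update directions at every step.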

3.3 Pair Weight

We define one pair weight for the anchor-positive pair and one for the anchor-negative pair. The pair weight of the cosine-similarity-based triplet loss is a constant scaling parameter, which is useful as a baseline for comparison. In this case, both pair weights are set to the same constant:

| (8) |

In Euclidean distance based triplet loss, the pair weight is different for the anchor-positive and anchor-negative pairs:

| (9) |

This indicates that the pair weight is proportional to the distance between the anchor and the other element of the pair.

Recent works [18, 19, 22] argue that the weight for an anchor-negative pair should be large when the two features are close to each other. Otherwise, as mentioned in [22], the optimization will quickly converge to bad local minima. The solution in Circle loss [18] is to apply a linear pair weight: for negative pairs, the weight is large if the similarity is large and small if the similarity is small; for positive pairs, the weight is large if the similarity is small and small if the similarity is large:

| (10) |

The earlier binomial deviance loss [24] uses a similar pair weight but with a nonlinear sigmoid form:

| (11) |

This sigmoid form has three hyper-parameters.
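The qualitative behavior of the linear and sigmoid pair weights can be sketched as follows. The exact forms are assumptions: the linear version follows the clipped linear weighting published for Circle loss, and the sigmoid version uses one slope for positives, one slope for negatives, and a shared threshold, which is one plausible reading of the three hyper-parameters. All parameter names and default values are hypothetical.

```python
import math

def linear_pair_weights(s_ap, s_an, margin=0.0):
    # Positive weight shrinks as the positive pair gets more similar;
    # negative weight grows as the negative pair gets more similar.
    pos_weight = max(1.0 + margin - s_ap, 0.0)
    neg_weight = max(s_an + margin, 0.0)
    return pos_weight, neg_weight

def sigmoid_pair_weights(s_ap, s_an, alpha=2.0, beta=50.0, threshold=0.5):
    # Same monotonic behavior as the linear form, but saturating:
    # alpha and beta control the slopes, threshold the midpoint.
    pos_weight = 1.0 / (1.0 + math.exp(alpha * (s_ap - threshold)))
    neg_weight = 1.0 / (1.0 + math.exp(-beta * (s_an - threshold)))
    return pos_weight, neg_weight
```

Both functions give a large weight to a dissimilar positive pair and to a similar negative pair, which is the property the cited works argue for.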

Multi-similarity (MS) loss [19] combines ideas from the lifted structure loss [17] and binomial deviance loss [24], and includes not only the self-similarity of a selected pair but also the relative similarity from other pairs.

The MS paper [19] tries to find a loss function whose derivative fits the proposed pair weight. Because the relative similarity term involves additional examples (outside the triplet), this creates additional gradients relative to those examples, even though the stated purpose is to weigh the selected pair. Therefore, it’s difficult to understand if the performance gain is coming from the proposed pair weight or from the gradients affecting the feature location of these other examples. By casting their work within our framework, we can decouple the pair-weighting and explore the impact of this term in isolation.

We follow the MS paper to cast their weighting function in our framework. Given a triplet, the self-similarity of the selected positive pair and negative pair are and . The similarity of other positives and negatives to the anchor is considered as relative-similarity, noted as and . In addition, [19] also defines and be the sets of selected and , where

| (12) |

| (13) |

where

When the pair weights simplify back to the sigmoid form in equation 11.

In practice, training with MS loss requires tuning four hyper-parameters to fit different datasets, which makes training inconvenient and inefficient. Based on the analysis of the relative-similarity terms in the appendix, we define a clearer, parameter-free version of the pair weight, called the linear MS pair weight, which behaves similarly to the original MS weight:

| (14) |

where

3.4 Triplet Weight

The triplet weight depends on the similarity of both the positive and negative pairs of a triplet, and measures whether a triplet is well separated or not. In the Euclidean-distance-based triplet loss, the triplet weight is a constant, indicating that every triplet is treated equally. For a fair comparison with other triplet weights, we use a constant triplet weight:

| (15) |

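In the cosine-similarity-based triplet loss, the triplet weight is:

$$T_{cos} = \frac{1}{1 + \exp\left(\lambda (S_{ap} - S_{an})\right)} \tag{16}$$

where $S_{ap}$ and $S_{an}$ are the anchor-positive and anchor-negative cosine similarities and $\lambda$ is the scaling parameter of Equation 4. $T_{cos}$ relies on the difference between $S_{ap}$ and $S_{an}$. When a triplet is in a correct configuration, $S_{ap} > S_{an}$, the triplet weight is small. Otherwise, the triplet weight is large.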
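A small numeric sketch makes this behavior concrete. It assumes the triplet weight of the NCA-style cosine loss is the softmax probability assigned to the negative pair, which follows from differentiating that loss. The function name and the scale value of 8.0 are illustrative.

```python
import math

def cosine_triplet_weight(s_ap, s_an, scale=8.0):
    # Depends only on the similarity difference s_ap - s_an.
    return 1.0 / (1.0 + math.exp(scale * (s_ap - s_an)))

well_separated = cosine_triplet_weight(0.9, 0.1)   # correct configuration, small weight
violating = cosine_triplet_weight(0.1, 0.9)        # negative closer than positive, large weight
both_similar = cosine_triplet_weight(0.9, 0.7)     # both pairs similar
both_dissimilar = cosine_triplet_weight(0.3, 0.1)  # both pairs dissimilar, same difference
```

Because only the difference enters the formula, `both_similar` and `both_dissimilar` receive essentially the same weight even though the two triplets sit in very different parts of the similarity space.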

In Circle loss [18], the triplet weight is:

| (17) |

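Because the cosine triplet weight only considers the difference between the anchor-positive and anchor-negative similarities, some corner cases, such as triplets in which both similarities are large or both are small, are not treated well. The idea of the Circle loss triplet weight is to introduce a non-linear mapping of the two similarities in the exponential term in order to put more weight on these corner cases.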

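Figure 3 shows the triplet weight diagram, a triplet visualization tool from [22], for the cosine and Circle loss triplet weights. The equal-weight contours of the cosine triplet weight are straight lines along which the similarity difference is constant, and the equal-weight contours of the Circle loss triplet weight are circular arcs.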

Selectively Contrastive Triplet (SCT) loss [22] selects triplets with hard negatives (the negative example in a triplet is closer to the anchor than the positive example) and applies only a contrastive loss to the hard negative pairs during batch training. At the gradient level, this approach removes the gradients from the anchor-positive pairs for triplets with hard negatives. We treat the selection as a masking operator on the positive pair weight:

| (18) |

Because the decision boundary of this triplet selection is a first-order straight line, we refer to this masking operator as the first-order mask. We further extend the selection idea with Circle loss: triplets in the corner cases can also be selected so that only their negative pairs are separated. The decision boundary of the selection operator then becomes a second-order circular line, and we refer to it as the second-order mask:

| (19) |

Figure 3 (right) shows the different decision boundaries of the first-order and second-order masks.
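The first-order mask can be sketched in a few lines. This is a minimal illustration under the assumption that a hard negative is defined by the anchor-negative similarity exceeding the anchor-positive similarity. The function names are hypothetical, and the second-order (circular) boundary is not reproduced here.

```python
def first_order_mask(s_ap, s_an):
    # 0.0 for a triplet with a hard negative (negative more similar to the
    # anchor than the positive), 1.0 otherwise.
    return 0.0 if s_an > s_ap else 1.0

def masked_pair_weights(s_ap, s_an, pos_weight, neg_weight):
    # Only the positive pair weight is masked; the negative pair is always pushed.
    return first_order_mask(s_ap, s_an) * pos_weight, neg_weight

easy = masked_pair_weights(0.8, 0.3, pos_weight=1.0, neg_weight=1.0)
hard = masked_pair_weights(0.3, 0.8, pos_weight=1.0, neg_weight=1.0)
```

For the hard triplet the anchor-positive gradient vanishes, so the update only separates the negative pair.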

3.5 Metric Learning Gradient Summary

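Table 1

| Method | Direction | PairWeight | TripletWeight |
|---|---|---|---|
| Triplet (Euclidean) [15] | | | |
| Triplet (cosine) [23] | | | |
| Circle loss [18] | | | |
| Binomial deviance [24] | | | |
| MS loss [19] | | | |
| DR-MS loss [9] | | | |
| SC triplet loss [22] | | | |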

In this section, we have derived ways to represent many previous loss functions in terms of their gradients. We have explicitly defined the gradients in terms of how the anchor, positive, and negative are moved, expressed as a unit vector in the direction of motion, a weight for the anchor-positive term and for the anchor-negative term, and an overall weight for the triplet. Table 1 shows how to map different combinations of gradient components into currently proposed loss functions. Section 5 gives explicit experiments to understand the isolated effects of these three gradient components.

4 Experiment Settings

We run a set of experiments on the CUB200 (CUB) [20], CAR196 (CAR) [7], Stanford Online Products (SOP) [17], and In-shop Clothes (In-shop) [8] datasets. All experiments are run in PyTorch [12] on an Nvidia Tesla V100 GPU, using ResNet [4] architectures pre-trained on ILSVRC 2012-CLS data [14]. Training images are augmented using a standard scheme (random horizontal flip and random crops padded by 10 pixels on each side) and normalized using the channel means and standard deviations. The network is trained with stochastic gradient descent (SGD) with momentum , step and milestone at of the total epochs. We follow the Easy Positive with Hard Negative mining protocol [23] to sample a batch with classes and images per class. On the CUB, CAR, SOP, and In-shop datasets, we sample 8, 16, 4, and 4 images per class in a mini-batch, respectively.

Small embedding size compared to the number of training classes: We follow the early goal of deep metric learning works [17, 16, 10, 3], which set the embedding size to be smaller than the number of training classes. On the CUB, CAR, SOP, and In-shop datasets, the embedding sizes are 64, 64, 512, and 512, respectively.

Comparison of Gradient Components: To compare each component of the gradient, we train ResNet18 on the CAR and In-shop datasets for 60 epochs with a batch size of 128. For a given test setting, we run the test 5 times to remove the effect of the randomness that comes from the random sampling of batches and the random initialization of the final FC embedding layer, which reduces the global average pooling (GAP) feature to a target dimension (e.g. 64 or 512). The mean and standard deviation of Recall@1 are then calculated.

Comparison with the State-of-the-Art: To compare with recent state-of-the-art results, we select ResNet50 as the backbone and train for 80 epochs. Training is run with batch sizes of 128, 256, 384, and 512. Each test is run 3 times, and the mean Recall@K is calculated as the measure of retrieval quality.
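The evaluation protocol above can be sketched as follows, assuming the common retrieval definition of Recall@K: a query counts as a hit if at least one of its K nearest neighbors shares its label. The function names and the toy data are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say which estimator is used.

```python
import statistics

def recall_at_k(query_labels, ranked_neighbor_labels, k):
    # ranked_neighbor_labels[i] lists the labels of query i's neighbors,
    # nearest first, with the query itself excluded.
    hits = sum(
        1
        for label, neighbors in zip(query_labels, ranked_neighbor_labels)
        if label in neighbors[:k]
    )
    return hits / len(query_labels)

def summarize_runs(recalls):
    # Mean and sample standard deviation over repeated training runs.
    return statistics.mean(recalls), statistics.stdev(recalls)

# Toy example: three queries with two ranked neighbors each.
query_labels = [0, 1, 2]
neighbors = [[0, 1], [2, 1], [0, 1]]
r1 = recall_at_k(query_labels, neighbors, k=1)
r2 = recall_at_k(query_labels, neighbors, k=2)
mean_r1, std_r1 = summarize_runs([75.0, 76.0, 77.0])
```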

PyTorch Implementation: In PyTorch, we use torch.autograd.Function to customize both the forward and backward functions of a loss module. The backward function generates our customized gradient for the optimizer. During training, back-propagation starts directly from this backward function, whose output replaces the gradient that AutoGrad would derive from the forward function.
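A minimal sketch of this mechanism is shown below. It is not the authors' implementation: the class name `DirectGradient` and the choice to pass a precomputed gradient tensor as a second input are assumptions made for illustration. The forward pass returns a placeholder scalar, and the backward pass returns the supplied gradient for the features instead of anything derived from the forward computation.

```python
import torch

class DirectGradient(torch.autograd.Function):
    """Inject a hand-designed gradient with respect to the features."""

    @staticmethod
    def forward(ctx, features, custom_grad):
        # Keep the designed gradient for the backward pass.
        ctx.save_for_backward(custom_grad)
        # Placeholder scalar; its value plays no role in the update.
        return features.new_zeros(())

    @staticmethod
    def backward(ctx, grad_output):
        (custom_grad,) = ctx.saved_tensors
        # Gradient for `features` is the designed one; `custom_grad` gets none.
        return grad_output * custom_grad, None
```

A typical use under these assumptions would compute `custom_grad` from the batch features without tracking gradients (for example, as a sum of direction, pair weight, and triplet weight products over the mined triplets), call `DirectGradient.apply(features, custom_grad).backward()`, and then step the optimizer as usual, so that the rest of the network is updated through ordinary back-propagation.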

5 Comparison Experiments

In this section, we give explicit experiment results to demonstrate the isolated effects contributed by unit gradient direction, pair weight and triplet weight. More raw results are shown in Appendix.

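Table 2 (Recall@1, mean ± standard deviation)

| Direction | CAR | In-shop |
|---|---|---|
| Euclidean | 69.5 ± 0.7 | 83.7 ± 0.1 |
| Cosine | 75.5 ± 0.2 | 85.2 ± 0.3 |
| Euclidean orthogonal | 66.9 ± 0.5 | 84.1 ± 0.1 |
| Cosine orthogonal | 77.0 ± 0.7 | 86.6 ± 0.2 |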

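Table 3 (Recall@1, mean ± standard deviation)

| Dataset | PairWeight | Euclidean direction | Cosine direction |
|---|---|---|---|
| CAR | constant (baseline) | 69.5 ± 0.7 | 75.5 ± 0.2 |
| CAR | | 75.2 ± 0.4 | 77.0 ± 0.4 |
| CAR | linear | 76.7 ± 0.5 | 77.8 ± 0.9 |
| CAR | | 78.2 ± 0.4 | 78.8 ± 0.9 |
| CAR | | 71.9 ± 0.4 | 74.3 ± 0.2 |
| CAR | | 74.6 ± 0.8 | 76.2 ± 0.5 |
| In-shop | constant (baseline) | 83.7 ± 0.1 | 85.2 ± 0.3 |
| In-shop | | 85.2 ± 0.2 | 84.8 ± 0.2 |
| In-shop | linear | 87.4 ± 0.1 | 87.3 ± 0.2 |
| In-shop | | 87.5 ± 0.2 | 87.3 ± 0.1 |
| In-shop | | 86.2 ± 0.1 | 87.8 ± 0.2 |
| In-shop | | 84.9 ± 0.4 | 86.4 ± 0.2 |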

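Table 4 (Recall@1, mean ± standard deviation)

| Dataset | TripletWeight | Constant pair weight | Linear pair weight |
|---|---|---|---|
| CAR | constant (baseline) | 75.5 ± 0.2 | 77.8 ± 0.9 |
| CAR | | 75.8 ± 0.2 | 77.5 ± 0.5 |
| CAR | & | 77.0 ± 0.2 | 78.8 ± 0.6 |
| CAR | & | 77.2 ± 0.5 | 78.4 ± 0.5 |
| CAR | | 75.5 ± 0.3 | 78.3 ± 0.2 |
| CAR | & | 77.0 ± 0.4 | 78.1 ± 0.6 |
| CAR | & | 76.6 ± 0.8 | 78.8 ± 0.3 |
| In-shop | constant (baseline) | 85.2 ± 0.1 | 87.3 ± 0.2 |
| In-shop | | 86.0 ± 0.3 | 87.9 ± 0.4 |
| In-shop | & | 85.1 ± 0.3 | 87.3 ± 0.3 |
| In-shop | & | 84.5 ± 0.2 | 86.9 ± 0.2 |
| In-shop | | 86.0 ± 0.1 | 87.7 ± 0.2 |
| In-shop | & | 84.9 ± 0.2 | 87.2 ± 0.1 |
| In-shop | & | 84.2 ± 0.1 | 86.9 ± 0.2 |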

5.1 Unit Gradient Direction

To understand the behavior of the unit gradient directions in Section 3.2, we hold the pair and triplet weights constant and vary the choice among the Euclidean, cosine, Euclidean-orthogonal, and cosine-orthogonal directions.

In Table 2, we find the following trends. First, the cosine and cosine-orthogonal directions have better Recall@1 accuracy than the other directions. Second, the cosine-orthogonal gradient direction gives an improvement on both datasets compared to the cosine direction. Further analysis is given in Section 5.4.

5.2 Pair Weight

To understand the behavior of the pair weights, we use constant triplet weights and report two sets of results, one with the Euclidean gradient direction and one with the cosine gradient direction. For the baseline results, the pair weights are set to the constant form.

In Table 3, all pair weights provide a clear performance gain over their baseline results. Also, the performance gap between the Euclidean and cosine gradient directions is greatly reduced after applying the pair weight.

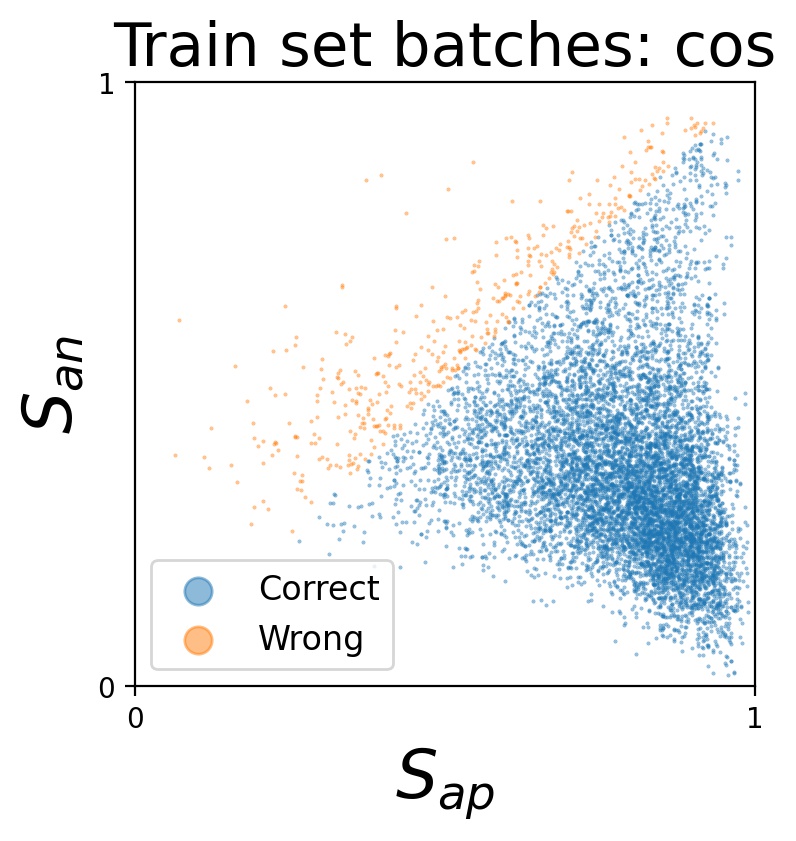

Both relative-similarity pair weights (MS and linear MS) perform better than the pair weights with only self-similarity on the CAR dataset across different learning rates. Because the In-shop dataset is well separated, as shown in Figure 4, the relative-similarity term is less likely to be active during training, since few positive and negative examples fall into the selected sets defined in Equation 12. is performance almost as same as , but shows some computation instability effect. We give a further analysis of this effect in the appendix.

In summary, Table 3 shows several features related to the pair weight. First, the pair weight causes a substantial improvement in Recall@1 accuracy. Second, in most cases, the linear and sigmoid pair weights outperform the default Euclidean pair weight. Third, the linear version of the multi-similarity pair weight is much more robust to different learning rates than the sigmoid version (see the appendix) and gives better Recall@1 accuracy.

5.3 Triplet Weight

In Table 4, we show two groups of experiments to compare the seven triplet weights. One group sets the pair weight to be constant. The other group uses the linear pair weight. All experiments use the cosine gradient direction.

Compared to the baseline method with a constant triplet weight, the cosine and Circle loss triplet weights give only a slight boost in performance, while the first-order and second-order masks have a bigger impact on the CAR dataset than on the In-shop dataset. This is due to the properties of these two datasets, as shown in Figure 4. The CAR dataset has low inter-class variance (images from different classes may look similar), while the In-shop dataset has high inter-class variance (images from different classes do not look similar). The major challenge of the CAR dataset is to distinguish similar images with different labels, and this is the purpose of the masking operators, because they concentrate on separating triplets with hard negatives in training. In the In-shop dataset, it is relatively easy to separate images with different labels, so the goal is to continue separating the images better, which is the effect of the cosine and Circle loss triplet weights.

Therefore, we can conclude that the performance gain in Circle loss comes largely from the pair weight, not from the triplet weight. The Selectively Contrastive operator benefits training tasks that need to separate triplets with hard negatives and is not helpful for training tasks in which triplets are easily separated.

5.4 Euclidean or Cosine Direction?

Table 5

| Method | CUB (dim=64) R@1 | R@2 | R@4 | CAR (dim=64) R@1 | R@2 | R@4 | SOP (dim=512) R@1 | R@10 | R@100 | In-shop (dim=512) R@1 | R@10 | R@20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LiftedStruct [17] | 43.6 | 56.6 | 68.6 | 53.0 | 65.7 | 76.0 | 62.5 | 80.8 | 91.9 | - | - | - |
| ProxyNCA [10] | 49.2 | 61.9 | 67.9 | 73.2 | 82.4 | 86.4 | 73.7 | - | - | - | - | - |
| SoftTriple [13] | 60.1 | 71.9 | 81.2 | 78.6 | 86.6 | 91.8 | 78.3 | 90.3 | 95.9 | - | - | - |
| EasyPositive [23] | 57.3 | 68.9 | 79.3 | 75.5 | 84.2 | 90.3 | 78.3 | 90.7 | 96.3 | 87.8 | 95.7 | 96.8 |
| MS [19] | 57.4 | 69.8 | 80.0 | 77.3 | 85.3 | 90.5 | 78.2 | 90.5 | 96.0 | 89.7 | 97.9 | 98.5 |
| SCT [22] | 57.7 | 69.8 | 79.6 | 73.4 | 82.0 | 88.0 | 81.9 | 92.6 | 96.8 | 90.9 | 97.5 | 98.2 |
| DR-MS [9] | 59.1 | 71.0 | 80.3 | 79.3 | 86.7 | 91.4 | - | - | - | 91.7 | 98.1 | 98.7 |
| Proxy-anchor [6] | 61.7 | 73.0 | 81.8 | 78.8 | 87.0 | 92.2 | 79.1 | 90.8 | 96.2 | 91.5 | 98.1 | 98.8 |
| MS* (B128) | 59.8 | 71.7 | 81.0 | 79.0 | 86.6 | 91.5 | 78.7 | 90.4 | 96.0 | 89.4 | 96.6 | 97.4 |
| DR-MS* (B128) | 60.7 | 71.9 | 81.3 | 79.9 | 87.0 | 91.7 | 78.8 | 90.4 | 96.1 | 89.6 | 96.4 | 97.4 |
| Ours (B128) | 63.5 | 74.8 | 83.6 | 82.5 | 89.1 | 93.3 | 79.9 | 90.5 | 95.5 | 91.4 | 97.7 | 98.4 |
| Ours (B256) | 63.8 | 74.8 | 83.7 | 85.5 | 91.0 | 94.6 | 82.0 | 92.3 | 96.8 | 92.2 | 97.8 | 98.4 |
| Ours (B384) | 63.8 | 75.0 | 84.2 | 86.5 | 91.6 | 94.8 | 82.2 | 92.5 | 96.8 | 92.0 | 97.8 | 98.3 |
| Ours (B512) | 63.1 | 74.6 | 83.2 | 85.7 | 91.2 | 94.7 | 82.3 | 92.5 | 96.9 | 90.8 | 97.2 | 97.9 |

Section 3.2 and Figure 2 showed the different gradient behaviors of the Euclidean and cosine directions. Here we give additional discussion to explain the performance difference shown in Sections 5.1 and 5.2.

We first decompose the unit gradient that moves the positive and negative features into two components: one along the feature itself and one orthogonal to the feature. As shown in Figure 2, only the component orthogonal to the feature effectively contributes to the angle change of the anchor-positive and anchor-negative pairs, which directly affects the similarity score. The effective gradient projection strengths for the Euclidean and cosine directions are:

| (20) |

Here the projection strength is a function of the similarity of a positive or negative pair. The derivation of the projection length is given in the appendix.
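The effect can be reproduced with a short numeric sketch. It assumes that the effective component is the part of each unit direction that is tangent to the unit sphere at the feature being moved, and it works in two dimensions without loss of generality. The function names are hypothetical, and the closed forms in the comments are derived under these assumptions rather than copied from Equation 20.

```python
import math

def tangent_length(direction, feature):
    # Length of the part of `direction` that is orthogonal to `feature`.
    radial = sum(d * f for d, f in zip(direction, feature))
    tangent = [d - radial * f for d, f in zip(direction, feature)]
    return math.sqrt(sum(t * t for t in tangent))

def effective_strengths(similarity):
    """Return (euclidean, cosine) effective strengths for -1 < similarity < 1."""
    s = similarity
    f_p = (1.0, 0.0)                        # feature being moved
    f_a = (s, math.sqrt(1.0 - s * s))       # anchor at cosine similarity s
    diff = (f_a[0] - f_p[0], f_a[1] - f_p[1])
    length = math.hypot(diff[0], diff[1])
    euclidean_dir = (diff[0] / length, diff[1] / length)
    cosine_dir = f_a
    # Under these assumptions: sqrt((1 + s) / 2) and sqrt(1 - s^2).
    return tangent_length(euclidean_dir, f_p), tangent_length(cosine_dir, f_p)

close_pair = effective_strengths(0.9)
far_pair = effective_strengths(0.0)
```

In this sketch the Euclidean direction keeps a strength near 1 as the similarity approaches 1, while the cosine direction's strength falls toward 0, and the two curves cross at a similarity of 0.5.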

Figure 2 (right) shows how the effective gradient strength for the Euclidean and cosine directions varies as a function of pair similarity. Because most pairs during training have positive similarity, we focus on the projection length when the similarity is positive.

The Euclidean gradient exerts a stronger force than the cosine direction to pull positives close and push negatives away when two features are close to each other. Therefore, unlike the cosine gradient, the Euclidean gradient continues to force features together even when they are already relatively close. In the left column of Figure 5, we show the triplet diagram of triplets extracted from the last 5 epochs of training (epochs 55-60) on the CAR dataset. The Euclidean direction clusters features with the same label more tightly than the cosine direction, because there are more triplets along the right edge of the triplet diagram compared to the scatter of the cosine direction.

However, the tight clustering behavior in training causes even the triplets formed by the nearest positive and the nearest negative to be compact. In the middle and right columns of Figure 5, we plot just these triplets for the whole training set (middle) and the testing set (right). The Euclidean gradient has more triplets very close to the top right corner, indicating that these points have very similar same-class and different-class neighbors, while the cosine gradient creates triplets that are more spread out. The spread-out effect indicates that the feature learned by the deep model is distinguishable [26, 21, 23]. Because the Euclidean features are more compressed (for both the anchor-positive and anchor-negative pairs), it is harder for the network to learn distinguishable features than if it were using the cosine gradient.

One more piece of evidence to support the analysis above is the pair weight result in Section 5.2. When the Euclidean pair weight is applied to the Euclidean direction and the cosine direction, the performance gap between these two methods almost disappears. This is because the Euclidean pair weight de-emphasizes positive pairs when they are already close, and therefore avoids the tight clustering behavior, making the Euclidean direction behave similarly to the cosine direction.

6 Best combination of gradients

In the previous sections, we separately considered the gradient terms that relate to the gradient directions, the pair weights applied to the gradients from the anchor-negative and anchor-positive pairs, and the overall weight of the triplets. In terms of the gradient direction, the cosine-orthogonal direction gives the best performance and is relatively stable with respect to the learning rate. In terms of the pair-weighting, is consistently a top performer across datasets. Similarly, shows stable improvement to both CAR and In-shop datasets. We combine these gradient components empirically to form the final gradient, and train a network by imposing this gradient combination. We compare the performance of the network trained this way with many of the latest state-of-the-art results.

To ensure a fair comparison, we also re-implement the current related state-of-the-art approaches, MS and DR-MS (noted as MS* and DR-MS*), with our gradient method to create a comparison with the same network backbone, pre-processing, and training settings. The implementation differences are described in the appendix. The results are reported in Table 5. In addition, we vary the batch size over 128, 256, 384, and 512 in all tests on the four datasets, which further improves the Recall performance.

7 Limitations

We point out the following limitations of the paper:

- We do not exhaustively compute all possible combinations of the three gradient components, and instead focus on the isolated effect of single gradient components. There may be additional improvements from explicitly considering the interactions between the different gradient components.

- Our experiments do not fully explore training optimizations. We have fixed hyper-parameters in our sampling approach, we keep a constant step size, and we fix the hyper-parameters in gradient components such as for most experiments. Our results are based on hyper-parameter selections from earlier papers, but the gradient-based approach to learning embedding functions may be improved with additional search over the hyper-parameter space.

8 Conclusion

We provide a new framework for training deep metric learning networks with direct gradient modification. In our framework, we disentangle the gradients of many loss functions into common components and analyze the effect of each component. We find that the Euclidean gradient direction and the cosine gradient direction behave quite differently. In its default form, the Euclidean gradient creates embedding spaces that are very tightly clustered, whereas the cosine gradient direction gives a consistently large improvement across a wide range of experimental conditions.

Second, recently popular works define new loss functions that, in terms of their gradient, primarily change the pair weight term; this is consistent with our finding that the pair weight term is very important. In contrast, we find that the triplet weight term has limited impact, and that this impact is not consistent across datasets.

Finally, this study of the importance of different weighting functions and components of the gradient led to a simple approach that directly defines the desired gradients and gives improvements to state-of-the-art performance relative to recent work.

References

- [1] Jacob Goldberger, Geoffrey E Hinton, Sam T. Roweis, and Ruslan R Salakhutdinov. Neighbourhood components analysis. In L. K. Saul, Y. Weiss, and L. Bottou, editors, Advances in Neural Information Processing Systems 17, pages 513–520. MIT Press, 2005.

- [2] Raia Hadsell, Sumit Chopra, and Yann LeCun. Dimensionality reduction by learning an invariant mapping. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), volume 2, pages 1735–1742. IEEE, 2006.

- [3] Ben Harwood, BG Kumar, Gustavo Carneiro, Ian Reid, Tom Drummond, et al. Smart mining for deep metric learning. In Proceedings of the IEEE International Conference on Computer Vision, pages 2821–2829, 2017.

- [4] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- [5] Elad Hoffer and Nir Ailon. Deep metric learning using triplet network. In International Workshop on Similarity-Based Pattern Recognition, pages 84–92. Springer, 2015.

- [6] Sungyeon Kim, Dongwon Kim, Minsu Cho, and Suha Kwak. Proxy anchor loss for deep metric learning. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [7] Jonathan Krause, Michael Stark, Jia Deng, and Li Fei-Fei. 3D object representations for fine-grained categorization. In 4th International IEEE Workshop on 3D Representation and Recognition (3dRR-13), Sydney, Australia, 2013.

- [8] Ziwei Liu, Ping Luo, Shi Qiu, Xiaogang Wang, and Xiaoou Tang. DeepFashion: Powering robust clothes recognition and retrieval with rich annotations. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- [9] Deen Dayal Mohan, Nishant Sankaran, Dennis Fedorishin, Srirangaraj Setlur, and Venu Govindaraju. Moving in the right direction: A regularization for deep metric learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [10] Yair Movshovitz-Attias, Alexander Toshev, Thomas K. Leung, Sergey Ioffe, and Saurabh Singh. No fuss distance metric learning using proxies. In Proc. International Conference on Computer Vision (ICCV), Oct 2017.

- [11] Kevin Musgrave, Serge Belongie, and Ser-Nam Lim. A metric learning reality check. In European Conference on Computer Vision, pages 681–699. Springer, 2020.

- [12] Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. Automatic differentiation in PyTorch. In NIPS-W, 2017.

- [13] Qi Qian, Lei Shang, Baigui Sun, Juhua Hu, Hao Li, and Rong Jin. SoftTriple loss: Deep metric learning without triplet sampling. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019.

- [14] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision (IJCV), 115(3):211–252, 2015.

- [15] Florian Schroff, Dmitry Kalenichenko, and James Philbin. FaceNet: A unified embedding for face recognition and clustering. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015.

- [16] Kihyuk Sohn. Improved deep metric learning with multi-class n-pair loss objective. In Advances in Neural Information Processing Systems, pages 1857–1865, 2016.

- [17] Hyun Oh Song, Yu Xiang, Stefanie Jegelka, and Silvio Savarese. Deep metric learning via lifted structured feature embedding. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

- [18] Yifan Sun, Changmao Cheng, Yuhan Zhang, Chi Zhang, Liang Zheng, Zhongdao Wang, and Yichen Wei. Circle loss: A unified perspective of pair similarity optimization. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [19] Xun Wang, Xintong Han, Weilin Huang, Dengke Dong, and Matthew R Scott. Multi-similarity loss with general pair weighting for deep metric learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5022–5030, 2019.

- [20] P. Welinder, S. Branson, T. Mita, C. Wah, F. Schroff, S. Belongie, and P. Perona. Caltech-UCSD Birds 200. Technical Report CNS-TR-2010-001, California Institute of Technology, 2010.

- [21] Zhirong Wu, Yuanjun Xiong, Stella X. Yu, and Dahua Lin. Unsupervised feature learning via non-parametric instance discrimination. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

- [22] Hong Xuan, Abby Stylianou, Xiaotong Liu, and Robert Pless. Hard negative examples are hard, but useful. In The European Conference on Computer Vision (ECCV), September 2020.

- [23] Hong Xuan, Abby Stylianou, and Robert Pless. Improved embeddings with easy positive triplet mining. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), March 2020.

- [24] Dong Yi, Zhen Lei, Shengcai Liao, and Stan Z Li. Deep metric learning for person re-identification. In 2014 22nd International Conference on Pattern Recognition, pages 34–39. IEEE, 2014.

- [25] Tianhe Yu, Saurabh Kumar, Abhishek Gupta, Sergey Levine, Karol Hausman, and Chelsea Finn. Gradient surgery for multi-task learning. arXiv preprint arXiv:2001.06782, 2020.

- [26] Xu Zhang, Felix X. Yu, Sanjiv Kumar, and Shih-Fu Chang. Learning spread-out local feature descriptors. In The IEEE International Conference on Computer Vision (ICCV), Oct 2017.

- [27] Xiao Zhang, Rui Zhao, Junjie Yan, Mengya Gao, Yu Qiao, Xiaogang Wang, and Hongsheng Li. P2SGrad: Refined gradients for optimizing deep face models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9906–9914, 2019.