Disentangling Drift- and Control- Vector Fields for Interpretable Inference of Control-affine Systems

Abstract

Many engineered as well as naturally occurring dynamical systems do not have an accurate mathematical model to describe their dynamic behavior. However, in many applications, it is possible to probe the system with external inputs and measure the process variables, resulting in abundant data repositories. Using the time-series data to infer a mathematical model that describes the underlying dynamical process is an important and challenging problem. In this work, we propose a model reconstruction procedure for inferring the dynamics of a class of nonlinear systems governed by an input affine structure. In particular, we propose a data generation and learning strategy to decouple the reconstruction problem associated with the drift- and control- vector fields, and enable quantification of their respective contributions to the dynamics of the system. This learning procedure leads to an interpretable and reliable model inference approach. We present several numerical examples to demonstrate the efficacy and flexibility of the proposed method.

I Introduction

Engineered systems encountered in many scientific domains are increasingly complex and highly nonlinear. In recent times, the processes and mechanisms previously confined to biology, social science, etc., are viewed through the prism of systems theory, and, as a consequence, many high-dimensional complex systems are emerging ([1, 2]). A common challenge in both the engineered and natural systems is the lack of accurate mathematical models describing their dynamic behavior. The ability to model such complex systems is essential for understanding the underlying dynamical processes, and, thereby, enabling safe and efficient utilization of such systems in safety-critical application domains such as medical diagnosis and prognosis.

From the early transfer-function models to the widely used state-space representations [3], an enormous body of systems representation and modeling approaches using first principles has been developed and reported [4]. However, in many emerging applications precise models that describe the dynamics of the system are not available. Due to the advances in actuator and sensing technologies, in many of these applications, it is possible to externally perturb the system and record the evolution of the system states [1, 5, 6]. Therefore, it is desired to reconstruct an interpretable and accurate model for these systems by using the time-series data.

Inferring the system models has been an active research topic in the past several decades [7, 8, 9, 4]. Plenitude of results have been reported on system identification and model learning for linear dynamical systems [4, 6] and nonlinear dynamical systems [10, 11] with or without external controls. For instance, in the traditional systems theory, inferring a dynamical system model that encodes and reproduces the relationship in a given input-output dataset has been studied as a realization problem [12, 13]. Moreover, methods such as dynamic mode-decomposition (DMD), DMDc [6], SINDy [11] and adaptive identifiers [7, 14, 8] have been proposed to infer the dynamical equations using simulated or measurement time-series data, and several successful applications of these approaches have been reported (see for example [15, 10]).

In general, existing methods for model reconstruction aim to find a set of mathematical equations that best fits the given data samples. However, in many applications, the data-samples obtained need not be rich enough to fully recover the dynamics of the system, and the experimental costs to generate data can impede this process. In this context, generating data-samples efficiently is an important task. For instance, in adaptive control, the concepts of sufficient richness and persistency of excitation are used to describe the type of perturbation signals and regression functions that are desired for parameter convergence in a model estimation and learning problem [14, 8]. Similarly, in canonical reinforcement learning algorithms, this idea is encapsulated by exploration [16]. Furthermore, learning a model for the controlled system is typically done by combining the unknown parameters of the drift and control vector fields, resulting in an abstract dynamic model that explains the given input-output data. In this setting, the contributions of external perturbations and the natural drift of the system to its dynamic behavior cannot be disambiguated, which is critical in many applications.

In this work, we consider the problem of delineating the contribution of the drift and external perturbations to the dynamic evolution for a class of nonlinear systems. In particular, we consider systems with input-affine dynamics and propose a perturbation strategy to infer the contribution of the control vector field without any influence of the drift dynamics. We show that the resulting learning problem yields a regression function that is an explicit function of the perturbation inputs and therefore directly allows to design experiments to ensure that the regression matrix is well-conditioned. As a result, the proposed perturbation and learning approach, subject to certain requirements on the experimental protocol, can be extremely effective in decoupling the contributions of the external control and the natural drift of a system to its dynamics.

The rest of this paper is organized as follows. In Section II, we introduce the class of systems considered in this study, and provide a brief background on the model learning problem. We present our approach for data generation and model-learning for recovering the control vector fields independent of the drift vector field in Section III. We provide several examples and simulation analysis in Section IV to demonstrate the effectiveness of the proposed approach.

II System and Data: Model-learning

Consider a nonlinear dynamical system with the external control input linearly entering the dynamic equation as described by

| (1) |

where , for , , for each and , , for , are the drift- and the control- vector field, respectively. We assume that the state evolving on the -dimensional manifold is accessible for measurement and are continuous functions. We will denote

| (2) |

In the rest of this section, we will present a brief overview of the model-learning problem.

II-A Model-learning: A brief overview

The primary objective in a system identification or model-learning framework is to reconstruct the dynamics of the system from the given time-series data. For example, given the trajectories and the goal is to infer the unknown functions in (1). This problem has been an active research topic and a recent survey on this topic can be found in [10].

In this section, we will confine our overview to the traditional system identification approaches and some popular learning approaches such as the dynamic mode decomposition [6]. As detailed in [10], the existing framework to build dynamical models using the measured input-output data are composed of three major steps: the data collection, the selection of a set of candidate models, and the learning/estimation algorithm to identify the model that best fits the given data. In the following, we illustrate these ideas, and motivate the problem of decoupling control coefficients from the drift dynamics in a model learning framework and point-out the shortcomings of the existing approaches in dealing with this problem.

Data-generation

To infer the dynamics of the system (1), i.e., unknown functions and from data, first, experiments are performed to assimilate input-output data and . In particular, several samples of data are generated by applying various perturbation inputs, for example, pulse, multi-sine, random noise, etc. [10], to the system. The data generated through experiments are fundamental source of information regarding the underlying system dynamics, and diverse data trajectories are desired for learning a reliable model. In practice, the type of excitation signals applied as inputs during data-generation, the sampling rate of the sensors and measurement noise have a major impact on the ‘quality’ of the data obtained, and there is no standard excitation signal that would work for every application [10].

Candidate models- Linear-in-parameter (LIP) form

The data generated is then used to obtain an approximation of the dynamics from candidate models. A common approach to do this task is to derive an LIP representation of the unknown dynamics, i.e., the system dynamics are re-written in a parametric form as

| (3) |

where constitutes the unknown coefficients corresponding to the regression function , each with appropriate dimensions. The input-output data () are then used in (3) to estimate the coefficients in a supervised learning framework. The choice of regression function in (3) is a design choice and is typically composed of basis or kernel functions or time-delayed states and control in the discrete-time setting [10].

Linear dynamical system

Consider a linear dynamical system with the governing equation given by

| (4) |

where , is a linear time-invariant matix, and for is a constant control-coefficient vector. We denote with and .

For the linear models of the form (4), one can expect the trajectories to lie on a hyper-plane. Therefore, a state-space structure of dimensions can be chosen in a parametric form as in (3), which can then be updated to fit the data samples. For instance, the matrices and are the approximates of and , respectively, and the regression matrices correspond to the data samples and , respectively. The slope of the states is approximated as , where is the sampling time, and a linear regression problem is solved to determine the entities of the matrix . For close to and sufficiently large data set, the unknown parameters in are estimated through solving an associated linear regression problem (LRP).

However, the process of choosing the regression function in (3) to approximate a nonlinear dynamical system can be quite tedious as illustrated by the following example.

Example 1: A neuron can be modeled using a bio-physically meaningful Hodgkin-Huxley (HH) model, which is a -dimensional model describing the evolution of transmembrane potential in the neuron and it is given by

| (5) |

and the internal states are governed by

where are the state variables, is the control input (extrinsic current, e.g., optogenetic stimulation [17]) and are nonlinear functions [18]. In many applications of brain medicine, the parameters that model the ionic channel conductances , the reversal potentials and the membrane capacitance are required to be known accurately for the purpose of diagnosis and for synthesis of extrinsic stimulation to evoke specific patterns such as synchronization/desynchronization, etc. [18].

In practice, only can be measured, and in this case, the voltage dynamics for the HH model can be rewritten in a parametric form as in (3) with the regression function

| (6) |

and the unknown parameters

| (7) |

In this example, the selection of candidate models can be very challenging since the gating variables cannot be measured, and even for a fixed set of gating variables , the excitation signal should be designed such that the resulting regression function (6) is well-conditioned, which is a challenge due to the leak channel and the rich dynamic behavior of a neuron (periodic spiking, bursting, slow-adaptation and fast spiking, etc.) [5, 18].

Nonlinear paradigm

For a nonlinear system, the regression function in (3) is typically composed of orthonormal basis functions of the underlying function space. For instance, if there is a prior knowledge that the system exhibits oscillatory behavior, the Fourier basis can be used to approximate the nonlinear functions. Using a truncated Fourier basis expansion, the unknown dynamics is still represented in the LIP form and the data is used in a supervised learning framework to infer the coefficients of these Fourier terms. Alternatively, polynomials such as Legendre and Chebyshev polynomials are also common choices of candidate regression functions [10].

To enhance the tractability in model selection, it is critical to specify the intended use of the model before experimental design to collect data. For instance, to obtain a predictive model for the purpose of control synthesis [10], interpretability of the approximation may not be essential. In this case, abstract nonlinear models such as artificial neural networks or wavelets [8, 4] can be used to approximate the system dynamics in contrast to the LIP-based approximators as in (3). On the other hand, to obtain a simulation model with properties of interpretability, it is essential to carefully select candidate models and design experiments to collect necessary data.

Estimation and learning

Finally, with the parameterization as in (3), the unknown parameters are updated to fit the data. In particular, this leads to a linear regression problem. The solution to this problem relies on the choice of regression function , the complexity of the dynamics of the system and the given data samples. Many variations to this generic model learning framework have been proposed in the literature and a detailed review of these approaches can be found in [10].

Due to the LIP representation of the model as in (3) and the regression functions and , which are typically state-dependent, the contributions of the natural drift of the system and the external input scaled by the control coefficient cannot be tractably decoupled. In the following, we provide some examples to point out the importance of decoupling the drift- and control- vector fields in a model learning problem.

Example 2

Many control systems describing periodic activity or oscillatory behavior, for example, spiking activity of neurons and thermostatically coupled loads [18, 19, 20], can be represented using the phase-reduction model of the form,

| (8) |

where is the phase of the oscillations, is the frequency of oscillation, models the (Gaussian) noise and describes the phase-response curve (PRC). In order to efficiently design control strategies for steering a higher dimensional oscillatory system using its first-order phase model, it is essential to precisely infer the PRC in the model that describes the infinitesimal effect of external weak forcing on the oscillatory behavior. In practice, the time-varying drift and the noise can affect the computation of the PRC, and it is essential to delineate the contribution of the time-varying frequency from the PRC in order to design precise control signals.

Furthermore, in many practical applications, actuators contribute non-trivially to the dynamics of the system. For instance, for the HH model (II-A) in Example 1, the perturbation is typically applied using external apparatus such as optogenetic stimulation [21, 17], which introduces nonlinearity and the control vector field is no longer a constant determined by the membrane capacitance () as in (II-A). Therefore, it is imperative to disambiguate the contribution of the input channel from the drift (ionic channels) to the overall dynamics of a neuron in order to enable interpretable inference of the underlying dynamical process.

In view of the above practical challenges arising in emerging applications, we consider the problem of disambiguating the drift and control vector fields in a model inference problem. In particular, we propose a methodology to exploit the structure of input-affine nonlinear system to derive a regression problem for inferring the control vector field, which is independent of the drift vector field. To the best of our knowledge, such a problem is not considered in the existing model learning/system identification algorithms.

In the next section, we will consider the nonlinear input-affine system and present our formulation for learning the control vector field independent of the drift vector field.

III Nonlinear dynamical systems

We propose a data generation and learning procedure to recover the control coefficient . In particular, we propose to perform multiple experiments under different experimental settings to generate data samples over a short time horizon and formulate a learning problem for reconstruction of such that the learning problem does not depend on . Consider the input-affine nonlinear system given by (1), we assume that is accessible for measurements and the system can be perturbed using the external control input .

Perturbation strategy

Let the perturbation input to the experiment be denoted by for and . Following this perturbation, the resulting system dynamics are described by

| (9) |

Let the difference between the applied control in the (or the reference) and the experiment be defined as , and let the difference in the resulting state dynamics be denoted as , where in every experiment. By setting the same initial condition in each experiment (i.e., and for ), we have

| (10) |

where is defined in (2). The equation in (10) reveals a system of linear equations, which can be exploited to determine the nonlinear function at .

To approximate in its domain , we repeat this experimental procedure at different sample points in . In particular, let be distinct points in . We apply for and at each of the initial states resulting in a total of experiments. The dynamics of the system under the proposed experimental setting can be represented using (III) as

| (11) |

Without loss of generality, set and define to denote the derivative at time with the initial condition , and denote the -dimensional control at as . This leads to a system of linear equations of the form

| (12) | ||||

where is defined in (2). Using the data generated by the proposed strategy, we can reconstruct or learn an approximation of the function from (12) using truncated orthonormal bases, such as the Legendre polynomials or the Fourier basis, i.e., , , , where are scalar coefficients, are orthonormal basis functions and are the number of expansion terms in the truncated series for and . Such an approximation is possible as any continuous function can be approximated arbitrarily well on a compact support using orthonormal bases by the Stone-Weierstrass theorem [22]. This leads to

| (13) |

Remark 1

The linear regression problems (LRPs) resulting from (III) can be solved (in parallel) for the unknown coefficients to obtain an approximation for . Note here that the solvability of these LRPs is directly related to the variation in the perturbation inputs applied during each experiment, choice of basis functions and the short-term evolution of the system, and it is independent of the natural drift of the system.

III-A Revisiting the case of linear dynamical systems

Here, we will revisit the case when the dynamics of the system are linear as in (4). Suppose that the matrix and the vectors are unknown and that the state is accessible for measurement.

To recover the control-coefficient , we need to perform experiments wherein we apply different perturbation inputs for , and record the resulting states. We can then analyze the dynamical equation (4) for different control signals. In particular, the system dynamics driven by control inputs in the experiment is given by

| (14) |

We also know the difference between the applied control in the reference () experiment and the experiment, , which results in , for . Unlike the recovery of nonlinear in (III), for the linear time-invariant control coefficients, we have

| (15) | ||||

Without loss of generality and for ease of exposition, we set , and define and . Using these definitions in (15) leads to LRPs, given by

| (16) |

for at . In particular, for , the perturbation inputs can be explicitly designed to ensure that regression matrix in (16) is always full-rank, which will yield an exact solution to the associated LRPs. For numerical computation, the derivatives in (16) can be computed by using the forward difference, with being the sampling time, which inherits numerical errors on the order of .

Remark 2

Once the control vector field is inferred using the proposed method, the regression problem for inferring the drift-vector field can be formulated in a supervised learning framework of the form , where the output , is composed of the basis functions evaluated at the sample points, and constitutes the unknown coefficients. Note that in the presence of unmodeled fluctuations or noise in the dynamics, the linear equations in (15) (or in (12)) will be of the form , where models the fluctuations. Under the assumption that these fluctuations are modeled by white noise, the least-square solution to the regression problem will be the maximum-likelihood estimate and as the number of experiments increase, the solution will converge in probability.

In the next section, we apply the proposed method to recover the control vector fields for linear, bilinear, and nonlinear dynamical systems.

IV Simulation Results

In this section, we demonstrate the effectiveness of the proposed approach using three numerical examples.

IV-A Linear dynamical systems

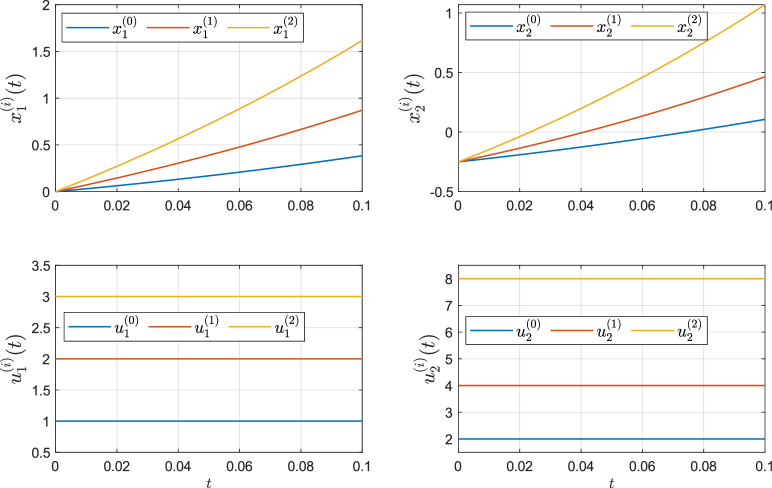

Here, we consider a second-order linear dynamical system to analyze the proposed approach and to illustrate its advantages. In particular, we study one taking the form in (4) with the matrices and . We fixed the initial state at and performed experiments with respective controls for and .

This resulted in the following equations,

and the variation equations

| (17) |

with and

IV-B Bilinear systems

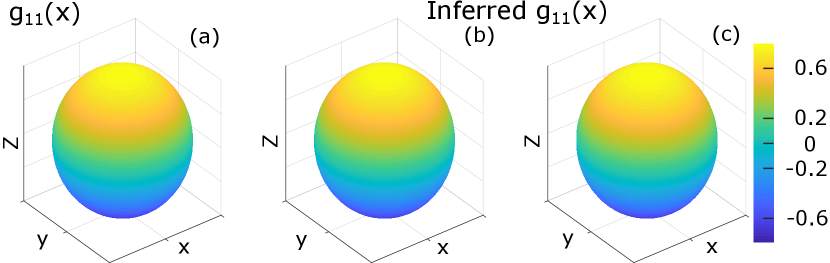

A Bloch system described by a third-order model has its trajectories evolving on a unit sphere with the dynamics given in (1) with ,

where and . The unforced trajectories of this system will only contain limited information regarding the underlying system structure, which cannot be used to infer a reliable model for the system. Therefore, it is essential to design efficient excitation signals to extract information of the system structure.

We consider two cases. First, we define the regression function using the first and the second order polynomials of the states, i.e., . In the second case, we define the regression function using the Fourier basis with for in (III). We randomly selected initial states and applied controls of the form for to recover and . The coefficients estimated using the proposed approach resulted in the replicates of the function as shown in the representative Fig. 2, where the function is recorded, and its approximation using the polynomial basis and the Fourier basis are also recorded.

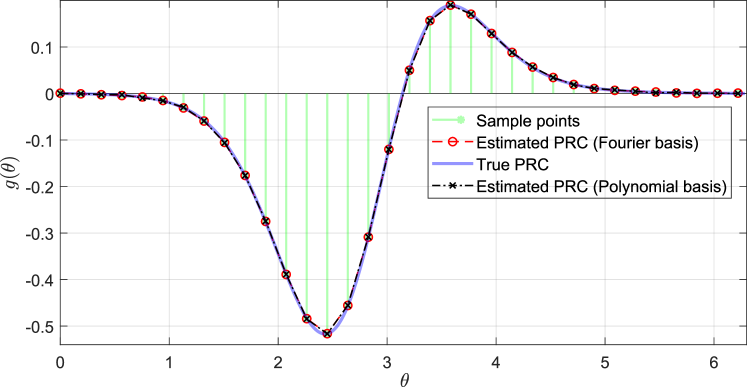

IV-C Nonlinear Oscillators

Here, we revisit the phase model given in Example 3 and apply the proposed approach for recovering the PRC. We consider the dynamics as in (8) with the PRC given as , and .

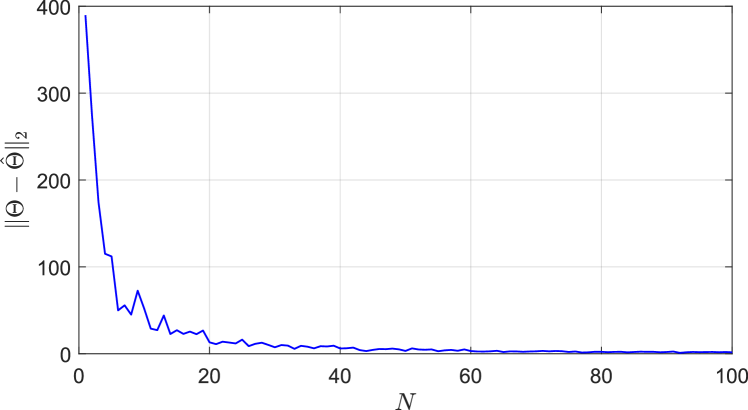

We use the truncated Fourier basis expansion to approximate the PRC upto order . The actual and recovered PRCs and the sample points (total sample points ) are recorded in Fig. 3. Additionally, we considered the case when the dynamics includes an unmodeled white noise as in (8) (). In this case, as the number of perturbation experiments increased, the parameter estimation error, which was computed for the purpose of illustration using the difference between the Fourier coefficients obtained in the noise free case and the Fourier coefficients estimated in the presence of noise, converged in to zero as shown in Fig. 4.

V Conclusions

In this work, we have proposed a data generation protocol and learning framework for recovering the control vector fields, wherein the learning problem of recovering the control vector fields does not depend on the natural drift of the system. Using the input-affine structure, we proposed a perturbation strategy and demonstrated that the control and drift vector fields can be decoupled in a data-driven modeling framework, which under certain conditions pertaining to availability of access to actuation of the system at arbitrary initial conditions, can yield excellent performance when enacted on unknown nonlinear dynamical systems. We demonstrated the efficacy of our approach by implementing the proposed methods in several numerical examples.

References

- [1] S. Ronquist, G. Patterson, L. A. Muir, S. Lindsly, H. Chen, M. Brown, M. S. Wicha, A. Bloch, R. Brockett, and I. Rajapakse, “Algorithm for cellular reprogramming,” Proceedings of the National Academy of Sciences, vol. 114, no. 45, pp. 11 832–11 837, 2017.

- [2] C. Altafini and F. Ceragioli, “Signed bounded confidence models for opinion dynamics,” Automatica, vol. 93, pp. 114–125, 2018.

- [3] R. E. Kalman, “Mathematical description of linear dynamical systems,” Journal of the Society for Industrial and Applied Mathematics, Series A: Control, vol. 1, no. 2, pp. 152–192, 1963.

- [4] L. Ljung, “System identification,” Wiley Encyclopedia of Electrical and Electronics Engineering, pp. 1–19, 1999.

- [5] V. Narayanan, J.-S. Li, and S. Ching, “Biophysically interpretable inference of single neuron dynamics,” Journal of computational neuroscience, vol. 47, no. 1, pp. 61–76, 2019.

- [6] J. L. Proctor, S. L. Brunton, and J. N. Kutz, “Dynamic mode decomposition with control,” SIAM Journal on Applied Dynamical Systems, vol. 15, no. 1, pp. 142–161, 2016.

- [7] P. Ioannou and P. Kokotovic, “An asymptotic error analysis of identifiers and adaptive observers in the presence of parasitics,” IEEE Transactions on Automatic Control, vol. 27, no. 4, pp. 921–927, 1982.

- [8] K. S. Narendra and K. Parthasarathy, “Identification and control of dynamical systems using neural networks,” IEEE Trans. on Neural Networks, vol. 1, no. 1, pp. 4–27, 1990.

- [9] G. Kreisselmeier, “Stabilized least-squares type adaptive identifiers,” IEEE Transactions on Automatic Control, vol. 35, no. 3, pp. 306–310, 1990.

- [10] J. Schoukens and L. Ljung, “Nonlinear system identification: A user-oriented road map,” IEEE Control Systems Magazine, vol. 39, no. 6, pp. 28–99, 2019.

- [11] S. L. Brunton, J. L. Proctor, and J. N. Kutz, “Discovering governing equations from data by sparse identification of nonlinear dynamical systems,” Proceedings of the national academy of sciences, vol. 113, no. 15, pp. 3932–3937, 2016.

- [12] A. Isidori, “Nonlinear system control,” New York: Springer Verlag, vol. 61, pp. 225–236, 1995.

- [13] R. W. Brockett, “Volterra series and geometric control theory,” Automatica, vol. 12, no. 2, pp. 167–176, 1976.

- [14] S. Sastry and M. Bodson, Adaptive control: stability, convergence and robustness. Courier Corporation, 2011.

- [15] P. J. Schmid, “Application of the dynamic mode decomposition to experimental data,” Experiments in fluids, vol. 50, no. 4, pp. 1123–1130, 2011.

- [16] R. S. Sutton and A. G. Barto, Introduction to reinforcement learning. MIT press Cambridge, 1998, vol. 135.

- [17] J. C. Williams and E. Entcheva, “Optogenetic versus electrical stimulation of human cardiomyocytes: modeling insights,” Biophysical journal, vol. 108, no. 8, pp. 1934–1945, 2015.

- [18] E. M. Izhikevich, Dynamical systems in neuroscience. MIT press, 2007.

- [19] W. Bomela, A. Zlotnik, and J.-S. Li, “A phase model approach for thermostatically controlled load demand response,” Applied energy, vol. 228, pp. 667–680, 2018.

- [20] K. Kuritz, S. Zeng, and F. Allgöwer, “Ensemble controllability of cellular oscillators,” IEEE Control Systems Letters, vol. 3, no. 2, pp. 296–301, 2018.

- [21] N. Grossman, K. Nikolic, C. Toumazou, and P. Degenaar, “Modeling study of the light stimulation of a neuron cell with channelrhodopsin-2 mutants,” IEEE Transactions on Biomedical Engineering, vol. 58, no. 6, pp. 1742–1751, 2011.

- [22] W. Rudin, Principles of Mathematical Analysis, ser. International series in pure and applied mathematics. McGraw-Hill, 1976.