Discriminator Contrastive Divergence: Semi-Amortized Generative Modeling by Exploring Energy of the Discriminator

Abstract

Generative Adversarial Networks (GANs) have shown great promise in modeling high dimensional data. The learning objective of GANs usually minimizes some measure discrepancy, e.g., -divergence (-GANs [28]) or Integral Probability Metric (Wasserstein GANs [2]). With -divergence as the objective function, the discriminator essentially estimates the density ratio [37], and the estimated ratio proves useful in further improving the sample quality of the generator [3, 36]. However, how to leverage the information contained in the discriminator of Wasserstein GANs (WGAN) [2] is less explored. In this paper, we introduce the Discriminator Contrastive Divergence, which is well motivated by the property of WGAN’s discriminator and the relationship between WGAN and energy-based model. Compared to standard GANs, where the generator is directly utilized to obtain new samples, our method proposes a semi-amortized generation procedure where the samples are produced with the generator’s output as an initial state. Then several steps of Langevin dynamics are conducted using the gradient of the discriminator. We demonstrate the benefits of significant improved generation on both synthetic data and several real-world image generation benchmarks.111Code is available at https://github.com/MinkaiXu/Discriminator-Contrastive-Divergence.

1 Introduction

Generative Adversarial Networks (GANs) [10] proposes a widely popular way to learn likelihood-free generative models, which have shown promising results on various challenging tasks. Specifically, GANs are learned by finding the equilibrium of a min-max game between a generator and a discriminator, or a critic under the context of WGANs. Assuming the optimal discriminator can be obtained, the generator substantially minimizes some discrepancy between the generated distribution and the target distribution.

Improving training GANs by exploring the discrepancy measure with the excellent property has stimulated fruitful lines of research works and is still an active area. Two well-known discrepancy measures for training GANs are -divergence and Integral Probability Metric (IPM) [26]. -divergence is severe for directly minimization due to the intractable integral, -GANs provide minimization instead of a variational approximation of -divergence between the generated distribution and the target distribution . The discriminator in -GANs serves as a density ratio estimator [37]. The other families of GANs are based on the minimization of an Integral Probability Metric (IPM). According to the definition of IPM, the critic needs to be constrained into a specific function class. When the critic is restricted to be 1-Lipschitz function, the corresponding IPM turns to the Wasserstein-1 distance, which inspires the approaches of Wasserstein GANs (WGANs) [25, 2, 13].

No matter what kind of discrepancy is evaluated and minimized, the discriminator is usually discarded at the end of the training, and only the generator is kept to generate samples. A natural question to ask is whether, and how we can leverage the remaining information in the discriminator to construct a more superior distribution than simply sampling from a generator.

Recent work [3, 36] has shown that a density ratio can be obtained through the output of discriminator, and a more superior distribution can be acquired by conducting rejection sampling or Metropolis-Hastings sampling with the estimated density ratio based on the original GAN [10].

However, the critical limitation of previous methods lies in that they can not be adapted to WGANs, which enjoy superior empirical performance over other variants. How to leverage the information of a WGAN’s critic model to improve image generation remains an open problem. In this paper, we do the following to address this:

-

•

We provide a generalized view to unify different families of GANs by investigating the informativeness of the discriminators.

-

•

We propose a semi-amortized generative modeling procedure so-called discriminator contrastive divergence (DCD), which achieves an intermediate between implicit and explicit generation and hence allows a trade-off between generation quality and speed.

Extensive experiments are conducted to demonstrate the efficacy of our proposed method on both synthetic setting and real-world generation scenarios, which achieves state-of-the-art performance on several standard evaluation benchmarks of image generation.

2 Related Works

Both empirical [2] and theoretical [15] evidence has demonstrated that learning a discriminative model with neural networks is relatively easy, and the neural generative model(sampler) is prone to reach its bottleneck during the optimization. Hence, there is strong motivation to further improve the generated distribution by exploring the remaining information. Two recent advancements are discriminator rejection sampling(DRS) [3] and MH-GANs [36]. DRS conducts rejection sampling on the output of the generator. The vital limitation that lies in the upper bound of is needed to be estimated for computing the rejection probability. MH-GAN sidesteps the above problem by introducing a Metropolis-Hastings sampling procedure with generator acting as the independent proposal; the state transition is estimated with a well-calibrated discriminator. However, the theoretical justification of both the above two methods is based on the fact that the output of discriminator needs to be viewed as an estimation of density ratio . As pointed out by previous work [41], the output of a discriminator in WGAN [2] suffers from the free offset and can not provide the density ratio, which prevents the application of the above methods in WGAN.

Our work is inspired by recent theoretical studies on the property of discriminator in WGANs [13, 41]. [33] proposes discriminator optimal transport (DOT) to leverage the optimal transport plan implied by WGANs’ discriminator, which is orthogonal to our method. Moreover, turning the discriminator of WGAN into an energy function is closely related to the amortized generation methods in the context of the energy-based model (EBM) [20, 40, 23] where a separate network is proposed to learn to sample from the partition function in [9]. Recent progress [32, 8] in the area of EBM has shown the feasibility of generating high dimensional data with Langevin dynamics. From the perspective of EBM, our proposed method can be seen as an intermediary between an amortized generation model and an implicit generation model, i.e., a semi-amortized generation method, which allows a trade-off between speed and flexibility of generation. With a similar spirit, [11] also illustrates the potential connection between neural classifier and energy-based model in supervised and semi-supervised scenarios.

3 Preliminaries

3.1 Generative Adversarial Networks

Generative Adversarial Networks (GANs) [10] is an implicit generative model that aims to fit an empirical data distribution over sample space . The generative distribution is implied by a generated function , which maps latent variable to sample , i.e., . Typically, the latent variable is distributed on a fixed prior distribution . With i.i.d samples available from and , the GAN typically learns the generative model through a min-max game between a discriminator and a generator :

| (1) |

With and as the function and the is constrained as 1-Lipschitz function, the Eq. 1 yields the WGANs objective which essentially minimizes the Wasserstein distance between and . With and as the Fenchel conjugate[16] of a convex and lower-semicontinuous function, the objective in Eq. 1 approximately minimize a variational estimation of -divergence[28] between and .

3.2 Energy Based Model and MCMC basics

The energy-based model tends to learn an unnormalized probability model implied by an energy function to prescribe the ground truth data distribution . The corresponding normalized density function is:

| (2) |

where is so-called normalization constant. The objective of training an energy-based model with maximum likelihood estimation is as:

| (3) |

The estimated gradient with respect to the MLE objective is as follows:

| (4) | ||||

The above method for gradient estimation in Equation 4 is called contrastive divergence (CD). Furthermore, we define the score of distribution with density function as . We can immediately conclude that , which does not depend on the intractable .

Markov chain Monte Carlo is a powerful framework for drawing samples from a given distribution. An MCMC is specified by a transition kernel which corresponds to a unique stationary distribution , i.e.,

More specifically, MCMC can be viewed as drawing from the initial distribution and iteratively get sample at the -th iteration by applied the transition kernel on the previous step, i.e., . Following [24], we formalized the distribution of as obtained by a fixed point update of form , and :

As indicated by the standard theory of MCMC, the following monotonic property is satisfied:

| (5) |

And converges to the stationary distribution as .

4 Methodology

4.1 Informativeness of Discriminator

In this section, we seek to investigate the following questions:

-

•

What kind of information is contained in the discriminator of different kinds of GANs?

-

•

Why and how can the information be utilized to further improved the quality of generated distribution?

We discuss the discriminator of -GANs, and WGANs, respectively, in the following.

4.1.1 -GAN Discriminator

-GAN [27] is based on the variational estimation of -divergence [1] with only samples from two distributions available:

Theorem 1.

[27] With Fenchel Duality, the variational estimation of -divergence can be illustrated as follows:

| (6) | ||||

where the is the arbitrary class of function and denotes the Fenchel conjugate of . And the supremum is achieved only when , i.e. .

In -GAN [28], the discriminator is actually the function parameterized with neural networks. Theorem. 1 indicates the density ratio estimation view of -GAN’s discriminator, as illustrated in [37]. More specifically, the discriminator in -GAN is optimized to estimate a statistic related to the density ratio between and , i.e. , and the can be acquired easily with . For example, in the original GANs [10], the corresponding in -GAN literature is . Assuming the discriminator is trained to be optimal, the output is , and we can get the density ratio . However, it should be noticed that the discriminator is hard to reach the optimality. In practice, without loss of generality, the density ratio implied by a sub-optimal discriminator can be seen as the density ratio between an implicitly defined distribution and the generated distribution . It has been studied both theoretically and empirically in the context of GANs [2, 15, 17], with the same inductive bias, that learning a discriminative model is more accessible than a generative model. Based on the above fact, the rejection-sampling based methods are proposed to use the estimated density ratio, e.g., in original GANs, to conduct rejection sampling[3] or Metropolis-Hastings sampling[36] based on generated distribution . These methods radically modify the generated distribution to , the improvement in empirical performance as shown in [3, 36] demonstrates that we can construct a superior distribution to prescribe the empirical distribution by involving the remaining information in discriminator.

4.1.2 WGAN Discriminator

Different from -GANs, the objective of WGANs is derived from the Integral Probability Metric, and the discriminator can not naturally be derived as an estimated density ratio. Before leveraging the remaining information in the discriminator, the property of the discriminator in WGANs needs to be investigated first. We introduce the primal problem implied by WGANs objective as follows:

Let denote the joint probability for transportation between and , which satisfies the marginality conditions,

| (7) |

The primal form first-order Wasserstein distance is defined as:

the objective function of the discriminator in Wasserstein GANs is the Kantorovich-Rubinstein duality of Eq. 7, and the optimal discriminator has the following property[13]:

Theorem 2.

Let as the optimal transport plan in Eq. 7 and with . With the optimal discriminator as a differentiable function and for all , then it holds that:

Theorem. 2 states that for each sample in the generated distribution , the gradient on the directly points to a sample in the , where the pairs are consistent with the optimal transport plan . All the linear interpolations between and satisfy that . It should also be noted that similar results can also be drawn in some variants of WGANs, whose loss functions may have a slight difference with standard WGAN [41]. For example, the SNGAN uses the hinge loss during the optimization of the discriminator, i.e., and in Eq. 1 is selected as for stabilizing the training procedure. We provide a detailed discussion on several surrogate objectives in Appendix. E.

The above property of discriminator in WGANs can be interpreted as that given a sample from generated distribution we can obtain a corresponding in data distribution by directly conducting gradient decent with the optimal discriminator :

| (8) |

It seems to be a simple and appealing solution to improve with the guidance of discriminator . However, the following issues exist:

1) there is no theoretical indication on how to set for each sample in generated distribution. We noticed that a concurrent work [33] introduce a search process called Discriminator Optimal Transport(DOT) by finding the corresponding through the following:

| (9) |

However, it should be noticed that Eq. 9 has a non-unique solution. As indicated by Theorem 2, all points on the connection between and are valid solutions. We further extend the fact into the following theorem:

Theorem 3.

Theorem 3 provides a theoretical justification for the poor empirical performance of conducting DOT in the sample space, as shown in their paper.

2) Another problem lies in that samples distributed outside the generated distribution () are never explored during training, which results in much adversarial noise during the gradient-based search process, especially when the sample space is high dimensional such as real-world images.

To fix the issues mentioned above in leveraging the information of discriminator in Wasserstein GANs, we propose viewing the discriminator as an energy function. With the discriminator as an energy function, the stationary distribution is unique, and Langevin dynamics can approximately conduct sampling from the stationary distribution. Due to the monotonic property of MCMC, there will not be issues like setting in Eq. 8. Besides, the second issue can also be easily solved by fine-tuning the energy spaces with contrastive divergence. In addition to the benefits illustrated above, if the discriminator is an energy function, the samples from the corresponding energy-based model can be obtained through Langevin dynamics by using the gradients of the discriminator which takes advantage of the property of discriminator as shown in Theorem 2. With all the facts as mentioned above, there is strong motivation to explore further and bridge the gap between discriminator in WGAN and the energy-based model.

4.2 Semi-Amortized Generation with Langevin Dynamics

We first introduce the Fenchel dual of the intractable partition function in Eq. 2:

Theorem 4.

[39] With , the Fenchel dual of log-partition is as follows:

| (10) |

where denotes the space of distributions, and .

We put the Fenchel dual of back into the MLE objective in Eq. 3, we achieve the following min-max game formalization for training energy-based model based on MLE:

| (11) |

The Fenchel dual view of MLE training in the energy-based model explicitly illustrates the gap and connection between the WGAN and Energy based model. If we consider the dual distribution as the generated distribution , and the as the energy function . The duality form for training energy-based models is essentially the WGAN’s objective with the entropy of the generator is regularized.

Hence to turn the discriminator in WGAN into an energy function, we may conduct several fine-tuning steps, as illustrated in Eq. 11. Note that maximizing the entropy of the is indeed a challenging task, which needs to either use a tractable density generator, e.g., normalizing Flows [7], or maximize the mutual information between the latent variable and the corresponding when the is a deterministic mapping. However, instead of maximizing the entropy of the generated distribution directly, we derive our method based on the following fact:

Proposition 1.

To avoid the computation of , motivated by the monotonic property of MCMC, as illustrated in Eq. 5, we propose Discriminator Contrastive Divergence (DCD), which replaces the gradient-based optimization on () in Eq. 11 with several steps of MCMC for finetuning the critic in WGAN into an energy function. To be more specific, we use Langevin dynamics[34] which leverages the gradient of the discriminator to conduct sampling:

| (12) |

Where refers to the step size. The whole finetuning procedure is illustrated in Algorithm 1. The GAN-based approaches are implicitly constrained by the dimension of the latent noise, which is based on a widely applied assumption that the high dimensional data, e.g., images, actually distribute on a relatively low-dimensional manifold. Apart from searching the reasonable point in the data space, we could also find the lower energy part of the latent manifold by conducting Langevin dynamics in the latent space which are more stable in practice, i.e.:

| (13) |

Ideally, the proposal should be accepted or rejected according to the Metropolis–Hastings algorithm:

| (14) |

where refers to the proposal which is defined as:

| (15) |

In practice, we find the rejection steps described in Eq. 14 do not boost performance. For simplicity, following [32, 8], we apply Eq. 12 in experiments as an approximate version.

After fine-tuning, the discriminator function can be approximated seen as an unnormalized probability function, which implies a unique distribution . And similar to the implied in the rejection sampling-based method, it is reasonable to assume that is a superior distribution of . Sampling from can be implemented through the Langevin dynamics, as illustrated in Eq. 12 with serves as the initial distribution.

5 Experiments

In this section, we conduct extensive experiments on both synthetic data and real-world images to demonstrate the effectiveness of our proposed method. The results show that taking the optionally fine-tuned Discriminator as the energy function and sampling from the corresponding yield stable improvement over the WGAN implementations.

5.1 Synthetic Density Modeling

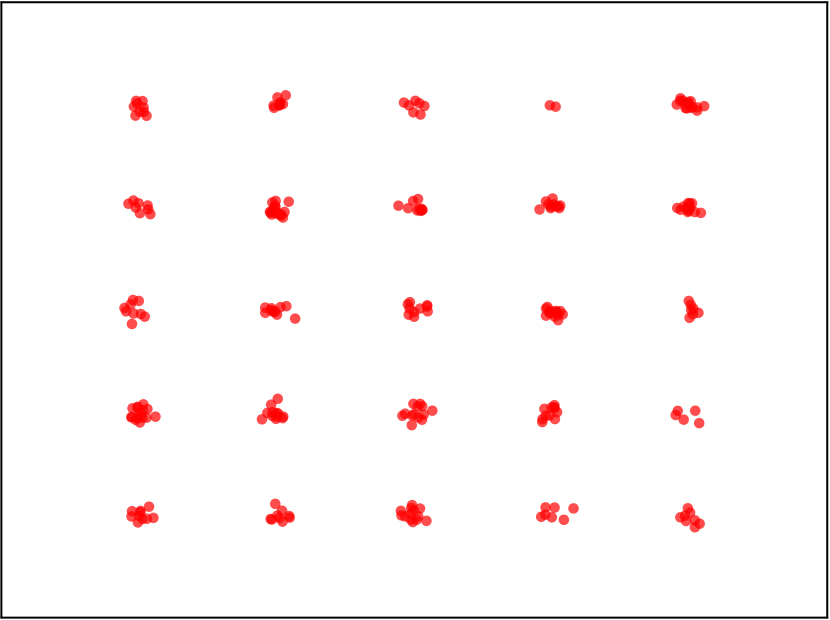

Displaying the level sets is a meaningful way to study learned critic. Following the [3, 13], we investigate the impacts of our method on two challenging low-dimensional synthetic settings: twenty-five isotropic Gaussian distributions arranged in a grid and eight Gaussian distributions arranged in a ring (Fig. 2(a)). For all different settings, both the generator and the discriminator of the WGAN model are implemented as neural networks with four fully connected layers and Relu activations. The Lipschitz constraint is restricted through spectral normalization [25], while the prior is a two-dimensional multivariate Gaussian with a mean of and a standard deviation of .

To investigate whether the proposed Discriminator Contrastive Divergence is capable of tuning the distribution induced by the discriminator as desired energy function, i.e. , we visualize both the value surface of the critic and the samples obtained from with Langevin dynamics. The results are shown in Figure. 2. As can be observed, the original WGAN (Fig. 2(b)) is strong enough to cover most modes, but there are still some spurious links between two different modes. The enhanced distribution (Fig. 2(c)), however, has the ability to reduce spurious links and recovers the modes with underestimated density. More precisely, after the MCMC fine-tuning procedure (Fig. 2(c)), the gradients of the value surface become more meaningful so that all the regions with high density in data distribution are assigned with high value, i.e., lower energy(). By contrast, in the original discriminator (Fig. 2(b)), the lower energy regions in are not necessarily consistent with the high-density region of .

5.2 Real-World Image Generation

To quantitatively and empirically study the proposed DCD approach, in this section, we conduct experiments on unsupervised real-world image generation with DCD and its related counterparts. On several commonly used image datasets, experiments demonstrate that our proposed DCD algorithm can always achieve better performance on different benchmarks with a significant margin.

5.2.1 Experimental setup

Baselines. We evaluated the following models as our baselines: we take PixelCNN [38], PixelIQN [29], and MoLM [30] as representatives of other types of generative models. For the energy-based model, we compared the proposed method with EBM [8] and NCSN [32]. For GAN models, we take WGAN-GP [13], Spectral Normalization GAN (SNGAN) [25], and Progressiv eGAN [19] for comparison. We also take the aforementioned DRS [3], DOT [33] and MH-GAN [36] into consideration. The choices of EBM and GANs are due to their close relation to our proposed method, as analyzed in Section 4. We omit other previous GAN methods since as a representative of a state-of-the-art GAN model, SNGAN and Progressive GAN has been shown to rival or outperform several former methods such as the original GAN [10], the energy-based generative adversarial network [40], and the original WGAN with weight clipping [2].

Evaluation Metrics. For evaluation, we concentrate on comparing the quality of generated images since it is well known that GAN models cannot perform reliable likelihood estimations [35]. We choose to compare the Inception Scores [31] and Frechet Inception Distances (FID) [15] reached during training iterations, both computed from 50K samples. A high image quality corresponds to high Inception and low FID scores. Specifically, the intuition of IS is that high-quality images should lead to high confidence in classification, while FID aims to measure the computer-vision-specific similarity of generated images to real ones through Frechet distance.

Data. We use CIFAR-10 [22] and STL-10 [5], which are all standard datasets widely used in generative literature. STL-10 consists of unlabeled real-world color images, while CIFAR-10 is provided with class labels, which enables us to conduct conditional generation tasks. For STL-10, we also shrink the images into as in previous works. The pixel values of all images are rescaled into .

Network Architecture. For all experiment settings, we follow Spectral Normalization GAN (SNGAN) [25] and adopt the same Residual Network (ResNet) [14] structures and hyperparameters, which presently is the state-of-the-art implementation of WGAN. Details can be found in Appendix. D. We take their open-source code and pre-trained model as the base model for the experiments on CIFAR-10. For STL-10, since there is no pre-trained model available to reproduce the results, we train the SNGAN from scratch and take it as the base model.

Model Inception FID CIFAR-10 Unconditional PixelCNN [38] PixelIQN [29] EBM [8] WGAN-GP [13] MoLM [30] SNGAN [25] ProgressiveGAN [19] - NCSN [32] DCGAN w/ DRS(cal) [3] - DCGAN w/ MH-GAN(cal) [36] - ResNet-SAGAN w/ DOT [33] SNGAN-DCD (Pixel) SNGAN-DCD (Latent) CIFAR-10 Conditional EBM [8] SNGAN [25] SNGAN-DCD (Pixel) SNGAN-DCD (Latent) BigGAN [4]

5.2.2 Results

Model Inception FID SNGAN [25] SNGAN-DCD (Pixel) SNGAN-DCD (Latent)

For quantitative evaluation, we report the inception score [31] and FID [15] scores on CIFAR-10 in Tab. 1 and STL-10 in Tab. 2. As shown in the Tab. 1, in pixel space, by introducing the proposed DCD algorithm, we achieve a significant improvement of inception score over the SNGAN. The reported inception score is even higher than most values achieved by class-conditional generative models. Our FID score of on CIFAR-10 is competitive with other top generative models. When the DCD is conducted in the latent space, we further achieve a inception score and a FID, which is a new state-of-the-art performance of IS. When combined with label information to perform conditional generation, we further improve the FID to , which is comparable with current state-of-the-art large-scale trained models [4]. Some visualization of generated examples can be found in Fig 3, which demonstrates that the Markov chain is able to generate more realistic samples, suggesting that the MCMC process is meaningful and effective. Tab. 2 shows the performance on STL-10, which demonstrates that as a generalized method, DCD is not over-fitted to the specific data CIFAR-10. More experiment details and the generated samples of STL-10 can be found in Appendix. F.

6 Discussion and Future Work

Based on the density ratio estimation perspective, the discriminator in -GANs could be adapted to a wide range of application scenarios, such as mutual information estimation [18] and bias correction of generative models [12]. However, as another important branch in GANs’ research, the available information in WGANs discriminator is less explored. In this paper, we narrow down the scope of discussion and focus on the problem of how to leverage the discriminator of WGANs to further improve the sample quality in image generation. We conduct a comprehensive theoretical study on the informativeness of discriminator in different kinds of GANs. Motivated by the theoretical progress in the literature of WGANs, we investigate the possibility of turning the discriminator of WGANs into an energy function and propose a fine-tuning procedure of WGANs named as "discriminator contrastive divergence". The final image generation process is semi-amortized, where the generator acts as an initial state, and then several steps of Langevin dynamics are conducted. We demonstrate the effectiveness of the proposed method on several tasks, including both synthetic and real-world image generation benchmarks.

It should be noted that the semi-amortized generation allows a trade-off between the generation quality and sampling speed, which holds a slower sampling speed than a direct generation with a generator. Hence the proposed method is suitable to the application scenario where the generation quality is given vital importance. Another interesting observation during the experiments is the discriminator contrastive divergence surprisingly reduces the occurrence of adversarial samples during training, so it should be a promising future direction to investigate the relationship between our method and bayesian adversarial learning.

We hope our work helps shed some light on a generalized view to a method of connecting different GANs and energy-based models, which will stimulate more exploration into the potential of current deep generative models.

References

- Ali and Silvey [1966] Syed Mumtaz Ali and Samuel D Silvey. A general class of coefficients of divergence of one distribution from another. Journal of the Royal Statistical Society: Series B (Methodological), 28(1):131–142, 1966.

- Arjovsky et al. [2017] Martin Arjovsky, Soumith Chintala, and Léon Bottou. Wasserstein gan. arXiv preprint arXiv:1701.07875, 2017.

- Azadi et al. [2018] Samaneh Azadi, Catherine Olsson, Trevor Darrell, Ian Goodfellow, and Augustus Odena. Discriminator rejection sampling. arXiv preprint arXiv:1810.06758, 2018.

- Brock et al. [2018] Andrew Brock, Jeff Donahue, and Karen Simonyan. Large scale gan training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096, 2018.

- Coates et al. [2011] Adam Coates, Andrew Ng, and Honglak Lee. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the fourteenth international conference on artificial intelligence and statistics, pages 215–223, 2011.

- Cover and Thomas [2012] Thomas M Cover and Joy A Thomas. Elements of information theory. John Wiley & Sons, 2012.

- Dinh et al. [2016] Laurent Dinh, Jascha Sohl-Dickstein, and Samy Bengio. Density estimation using real nvp. arXiv preprint arXiv:1605.08803, 2016.

- Du and Mordatch [2019] Yilun Du and Igor Mordatch. Implicit generation and generalization in energy-based models. arXiv preprint arXiv:1903.08689, 2019.

- Finn et al. [2016] Chelsea Finn, Paul Christiano, Pieter Abbeel, and Sergey Levine. A connection between generative adversarial networks, inverse reinforcement learning, and energy-based models. arXiv preprint arXiv:1611.03852, 2016.

- Goodfellow et al. [2014] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Advances in neural information processing systems, pages 2672–2680, 2014.

- Grathwohl et al. [2019] Will Grathwohl, Kuan-Chieh Wang, Jörn-Henrik Jacobsen, David Duvenaud, Mohammad Norouzi, and Kevin Swersky. Your classifier is secretly an energy based model and you should treat it like one. arXiv preprint arXiv:1912.03263, 2019.

- Grover et al. [2019] Aditya Grover, Jiaming Song, Alekh Agarwal, Kenneth Tran, Ashish Kapoor, Eric Horvitz, and Stefano Ermon. Bias correction of learned generative models using likelihood-free importance weighting. arXiv preprint arXiv:1906.09531, 2019.

- Gulrajani et al. [2017] Ishaan Gulrajani, Faruk Ahmed, Martin Arjovsky, Vincent Dumoulin, and Aaron C Courville. Improved training of wasserstein gans. In Advances in neural information processing systems, pages 5767–5777, 2017.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- Heusel et al. [2017] Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. Gans trained by a two time-scale update rule converge to a local nash equilibrium. In Advances in Neural Information Processing Systems, pages 6626–6637, 2017.

- Hiriart-Urruty and Lemaréchal [2012] Jean-Baptiste Hiriart-Urruty and Claude Lemaréchal. Fundamentals of convex analysis. Springer Science & Business Media, 2012.

- Hjelm et al. [2017] R Devon Hjelm, Athul Paul Jacob, Tong Che, Adam Trischler, Kyunghyun Cho, and Yoshua Bengio. Boundary-seeking generative adversarial networks. arXiv preprint arXiv:1702.08431, 2017.

- Hjelm et al. [2018] R Devon Hjelm, Alex Fedorov, Samuel Lavoie-Marchildon, Karan Grewal, Phil Bachman, Adam Trischler, and Yoshua Bengio. Learning deep representations by mutual information estimation and maximization. arXiv preprint arXiv:1808.06670, 2018.

- Karras et al. [2017] Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196, 2017.

- Kim and Bengio [2016] Taesup Kim and Yoshua Bengio. Deep directed generative models with energy-based probability estimation. arXiv preprint arXiv:1606.03439, 2016.

- Kingma and Ba [2014] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Krizhevsky et al. [2009] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. Technical report, Citeseer, 2009.

- Kumar et al. [2019] Rithesh Kumar, Anirudh Goyal, Aaron Courville, and Yoshua Bengio. Maximum entropy generators for energy-based models. arXiv preprint arXiv:1901.08508, 2019.

- Li et al. [2017] Yingzhen Li, Richard E Turner, and Qiang Liu. Approximate inference with amortised mcmc. arXiv preprint arXiv:1702.08343, 2017.

- Miyato et al. [2018] Takeru Miyato, Toshiki Kataoka, Masanori Koyama, and Yuichi Yoshida. Spectral normalization for generative adversarial networks. arXiv preprint arXiv:1802.05957, 2018.

- Müller [1997] Alfred Müller. Integral probability metrics and their generating classes of functions. Advances in Applied Probability, 29(2):429–443, 1997.

- Nguyen et al. [2010] XuanLong Nguyen, Martin J Wainwright, and Michael I Jordan. Estimating divergence functionals and the likelihood ratio by convex risk minimization. IEEE Transactions on Information Theory, 56(11):5847–5861, 2010.

- Nowozin et al. [2016] Sebastian Nowozin, Botond Cseke, and Ryota Tomioka. f-gan: Training generative neural samplers using variational divergence minimization. In Advances in neural information processing systems, pages 271–279, 2016.

- Ostrovski et al. [2018] Georg Ostrovski, Will Dabney, and Rémi Munos. Autoregressive quantile networks for generative modeling. arXiv preprint arXiv:1806.05575, 2018.

- Ravuri et al. [2018] Suman Ravuri, Shakir Mohamed, Mihaela Rosca, and Oriol Vinyals. Learning implicit generative models with the method of learned moments. arXiv preprint arXiv:1806.11006, 2018.

- Salimans et al. [2016] Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, and Xi Chen. Improved techniques for training gans. In Advances in neural information processing systems, pages 2234–2242, 2016.

- Song and Ermon [2019] Yang Song and Stefano Ermon. Generative modeling by estimating gradients of the data distribution. In Advances in Neural Information Processing Systems, pages 11895–11907, 2019.

- Tanaka [2019] Akinori Tanaka. Discriminator optimal transport. arXiv preprint arXiv:1910.06832, 2019.

- Teh et al. [2003] Yee Whye Teh, Max Welling, Simon Osindero, and Geoffrey E Hinton. Energy-based models for sparse overcomplete representations. Journal of Machine Learning Research, 4(Dec):1235–1260, 2003.

- Theis et al. [2015] Lucas Theis, Aäron van den Oord, and Matthias Bethge. A note on the evaluation of generative models. arXiv preprint arXiv:1511.01844, 2015.

- Turner et al. [2018] Ryan Turner, Jane Hung, Yunus Saatci, and Jason Yosinski. Metropolis-hastings generative adversarial networks. arXiv preprint arXiv:1811.11357, 2018.

- Uehara et al. [2016] Masatoshi Uehara, Issei Sato, Masahiro Suzuki, Kotaro Nakayama, and Yutaka Matsuo. Generative adversarial nets from a density ratio estimation perspective. arXiv preprint arXiv:1610.02920, 2016.

- Van den Oord et al. [2016] Aaron Van den Oord, Nal Kalchbrenner, Lasse Espeholt, Oriol Vinyals, Alex Graves, et al. Conditional image generation with pixelcnn decoders. In Advances in neural information processing systems, pages 4790–4798, 2016.

- Wainwright et al. [2008] Martin J Wainwright, Michael I Jordan, et al. Graphical models, exponential families, and variational inference. Foundations and Trends® in Machine Learning, 1(1–2):1–305, 2008.

- Zhao et al. [2016] Junbo Zhao, Michael Mathieu, and Yann LeCun. Energy-based generative adversarial network. arXiv preprint arXiv:1609.03126, 2016.

- Zhou et al. [2019] Zhiming Zhou, Jiadong Liang, Yuxuan Song, Lantao Yu, Hongwei Wang, Weinan Zhang, Yong Yu, and Zhihua Zhang. Lipschitz generative adversarial nets. arXiv preprint arXiv:1902.05687, 2019.

Appendix A Proof of Theorem 2

It should be noticed that Theorem. 2 can be generalized to that Lipschitz continuity with -norm (Euclidean Distance) can guarantee that the gradient is directly pointing towards some sample[41]. We introduce the following lemmas, and Theorem. 2 is a special case.

Let be such that , and we define with .

Lemma 1.

If is -Lipschitz with respect to and , then .

Proof.

As we know is -Lipschitz, with the property of norms, we have

| (16) |

implies all the inequalities is equalities. Therefore, . ∎

Lemma 2.

Let be the unit vector . If , then equals to .

Proof.

Then we derive the formal proof of Theorem 2.

Proof.

Assume , if is -Lipschitz with respect to and is differentiable at , then . Let be the unit vector . We have

| (17) |

Because the equality holds only when , we have that . ∎

Appendix B Proof of Theorem 3

Theorem. 3 states that following the following procedure as introduced in [33], there is non-unique stationary distribution. The complete procedure is to find the following for :

| (18) |

To find the corresponding , the following gradient based update is conducted:

| (19) |

For all the points in the linear interpolation of and target as defined in the proof of Theorem 2,

| (20) |

which indicates all points in the linear interpolation satisfy the stationary condition.

Appendix C Proof of Proposition 1

Proposition. 1 is the direct result of the following Lemma. 3. Following [24], we provide the complete proof as following.

Lemma 3.

[6] Let and be two distributions for . Let and be the corresponded distributions of state at time , induced by the transition kernel . Then for all .

Proof.

∎

Appendix D Network architectures

ResNet architectures for CIFAR-10 and STL-10 datasets. We use similar architectures to the ones used in [13].

| dense, |

| ResBlock up 256 |

| ResBlock up 256 |

| ResBlock up 256 |

| BN, ReLU, 33 conv, 3 Tanh |

| RGB image |

|---|

| ResBlock down 128 |

| ResBlock down 128 |

| ResBlock 128 |

| ResBlock 128 |

| ReLU |

| Global sum pooling |

| dense 1 |

Appendix E Discussions on Objective Functions

Optimization of the standard objective of WGAN, i.e. with in Eq. 1, are found to be unstable due to the numerical issues and free offset [41, 25]. Instead, several surrogate losses are actually used in practice. For example, the logistic loss() and hinge loss() are two widely applied objectives. Such surrogate losses are valid due to that they are actually the lower bounds of the Wasserstain distance between the two distributions of interest. The statement can be easily derived by the fact that and . A more detailed discussion could also be found in [33].

Appendix F More Experiment Details

F.1 CIFAR-10

For the meta-parameters in DCD Algorithm 1, when the MCMC process is conducted in the pixel space, we choose as the number of MCMC steps , and set the step size as and the standard deviation of the Gaussian noise as , while for the latent space we set as , as and the deviation as . Adam optimizer [21] is set with learning rate with . We use critic updates per generator update, and a batch size of .

F.2 STL-10

We show generated samples of DCD during Langevin dynamics in Fig. 4. We run 150 steps of MCMC steps and plot generated sample for every 10 iterations. The step size is set as and the noise is set as .