Discovering Robust Convolutional Architecture at Targeted Capacity:

A Multi-Shot Approach

Abstract

Convolutional neural networks (CNNs) are vulnerable to adversarial examples, and studies show that increasing the model capacity of an architecture topology (e.g., width expansion) can bring consistent robustness improvements. This reveals a clear robustness-efficiency trade-off that should be considered in architecture design. In this paper, considering scenarios with capacity budget, we aim to discover adversarially robust architecture at targeted capacities. Recent studies employed one-shot neural architecture search (NAS) to discover robust architectures. However, since the capacities of different topologies cannot be aligned in the search process, one-shot NAS methods favor topologies with larger capacities in the supernet. And the discovered topology might be suboptimal when augmented to the targeted capacity. We propose a novel multi-shot NAS method to address this issue and explicitly search for robust architectures at targeted capacities. At the targeted FLOPs of 2000M, the discovered MSRobNet-2000 outperforms the recent NAS-discovered architecture RobNet-large under various criteria by a large margin of 4%-7%. And at the targeted FLOPs of 1560M, MSRobNet-1560 surpasses another NAS-discovered architecture RobNet-free by 2.3% and 1.3% in the clean and PGD7 accuracies, respectively. All codes are available at https://github.com/walkerning/aw_nas.

1Department of Electronic Engineering, Tsinghua University

2Wechat group, Tencent

1 Introduction

Convolutional neural networks (CNNs) are known to be vulnerable to adversarial examples (i.e., adversarially crafted imperceptible perturbations) [33]. For defending against adversarial examples, extensive efforts are devoted to designing training or regularization techniques [6, 18, 34] and introducing special modules (e.g., randomness injection [9], generative model [30, 31]). Currently, only a few studies [10, 5, 7] have explored the robustness characteristics from the architectural aspect.

Previous studies [18] have observed that increasing the capacity of topology by width expansion brings consistent robustness improvements. Therefore, one can simply expand the architecture width for higher robustness at the cost of increasing model capacity. Nevertheless, in actual deployment, there usually exists a capacity budget requirement on the architecture. To discover superior architectures when augmented to the capacity budget by width expansion, for the first time, we propose to employ neural architecture search (NAS) to search for adversarially robust architectures at targeted capacities.

Currently, parameter-sharing techniques are widely used in NAS methods to boost the search efficiency [27, 16]. In parameter-sharing NAS, candidate architectures are evaluated directly using the weights in an over-parametrized super network (i.e., supernet). After a topology is found, model augmentation along the width or depth dimension is usually applied to construct a larger final architecture. One-shot NAS is a specific type of parameter-sharing NAS, in which the search process is decoupled into two phases: 1) Train a supernet; 2) Search while using the supernet weights to evaluate candidate architectures.

Some studies [5, 7] that apply parameter-sharing NAS for robustness ignore the robustness-efficiency trade-off. Guo et. al. [10] consider the capacity issue in their one-shot NAS flow, but the search is not targeting certain capacities. And there exists a problem in one-shot NAS that hinders its effectiveness in targeting certain capacities: the model capacity of different topologies cannot be aligned in the supernet, and one-shot NAS favors larger topologies which can be suboptimal at the targeted capacities. As illustrated in Fig. 1, Topology 2 is discovered by one-shot NAS since it has a high one-shot reward. However, Topology 1 with a lower reward and capacity in the supernet might outperform Topology 2 when they are aligned to the same capacity.

In this paper, we propose a novel multi-shot NAS method to search for adversarially robust architectures at targeted capacities, while taking full advantage of the parameter sharing technique. The core of the multi-shot NAS method is to inter- or extra-polate the performances evaluated by multiple “one-shot” supernets of different sizes to estimate the “multi-shot” reward at the targeted capacity. The contributions of this paper are as follows:

-

•

We propose a novel multi-shot NAS method to search for adversarially robust architectures at targeted capacity. We verify that the multi-shot evaluation strategy can bridge the correlation gap brought by the capacity misalignment issue in one-shot supernets.

-

•

To improve the efficiency of the NAS for robustness process, we use the FGSM reward as a reliable and efficient proxy of more accurate robustness criteria, and conduct a ranking correlation analysis of various robustness criteria to verify its rationality.

-

•

Experimental results show that at targeted capacities, our discovered MSRobNet architectures outperform manually-designed ones and recent NAS-discovered ones significantly.

2 Related Work

2.1 Adversarial Attacks and Defenses

Adversarial Attacks Current studies usually run adversarial attacks to evaluate the robustness of models. Commonly used white-box attacking methods can be classified into local approximation based ones and optimization based ones. Local approximation based methods craft adversarial inputs by following update rules derived with certain local approximations, including the fast gradient sign method (FGSM) and its variants [8, 14], saliency-based [25] methods, local decision boundary method [20]. On the other hand, optimization-based attacking methods formulate the attack as one or multiple optimization problems and solve the optimization problems approximately, of which the most popular ones are the C&W attack and its variants [4, 3].

Adversarial Defenses Existing adversarial defenses can be classified into several categories: 1) adversarial example detection [19, 3]; 2) input mapping or processing [31, 9]; 3) regularization techniques [26, 6]; 4) adversarial training [18, 34]. Among the extensive literature, many defenses are proved to be not useful to stronger attacks [3, 1], and adversarial training is acknowledged as the most effective defense technique.

Robust CNN Architecture Design In contrast to the aforementioned defenses, we aim to improve the adversarial robustness of CNNs by designing more robust neural architectures. The two most related studies to our work are 1) Guo et al. [10] employ one-shot NAS to investigate the architecture patterns that are beneficial to adversarial robustness, and find that densely connected pattern is beneficial. They analyze architectures in three coarsely-partitioned capacity range (i.e., small, medium, large), by randomly sampling 100 architecture for each range. In contrast, we develop the multi-shot evaluation strategy to estimate the reward at targeted capacities, and conduct a guided search using the estimated reward. 2) Chen et al. [5] employ an anti-bandit algorithm to improve the search efficiency. To accelerate the search process, they employ FGSM adversarial training in the search process, while the final model robustness is evaluated with PGD attacks. However, we find that using FGSM adversarial training during the search process will result in uncorrelated evaluation (See Sec. 5.3.4).

2.2 Neural Architecture Search

Problem Definition Neural architecture search has been recently employed to automatically discover neural architectures for various tasks [35, 27, 16, 29]. The basic formalization of the NAS problem is in Eq. 1, and many studies focus on approximately solving this problem efficiently.

| (1) | ||||

where denotes the architecture search space; denote the data sampled from the training and validation dataset, respectively; denotes the reward used to instruct the search process, and denotes the loss function for backpropagation to train the weights .

The vanilla NAS algorithm [35] is extremely slow, since thousands of architectures need to be evaluated, and the evaluation of each architecture involves a separate training process to get .

Parameter-sharing Techniques and One-shot NAS A widely-used technique to accelerate the evaluation of each individual architecture is to use parameter-sharing techniques [27, 16]. They construct a super network such that all architectures in the search space are sub-architectures of the supernet. Then all architectures can be evaluated using a subset of the weights in the supernet. In this way, the training costs of architectures are amortized to only train an over-parametrized supernet. One-shot NAS [2] is a specific type of parameter-sharing based NAS method, in which the supernet training process and the architecture search process are decoupled.

Predictor-based Controller To reduce the number of architectures needed to be evaluated (i.e., improve the sample efficiency of the search process), predictor-based controllers [17, 12, 22] predict the architectures’ performances, and only sample promising architectures. In this way, fewer architectures need to go through the relatively expensive evaluation procedure, which includes a separate inference process on the validation data split and sometimes a separate training process on the training data split.

3 Preliminary: One-shot NAS Workflow

A typical one-shot NAS workflow goes as follows: 1) Train a supernet; 2) Conduct architecture search while using the supernet weights to evaluate candidate architectures. 3) In cell-based search spaces [27, 16], the model augmentation technique (increasing the channel/layer number) is usually applied on the discovered topology to construct a large final architecture. 4) Finally, the augmented architecture is trained on the dataset.

Previous work [18] has shown that expanding the model width brings consistent robustness improvements at the cost of increasing capacity. Then, a natural question is that can we search for a topology that is superior at certain capacities with one-shot NAS? Unfortunately, since the parameters of different architectures are shared, their capacity cannot be easily aligned. Specifically, the FLOPs (number of floating-point operations) of different topologies can vary in a large range in the supernet (e.g., for our search space, 304M-1344M with 44 init channels). Topologies with larger capacity in the supernet tend to have better one-shot performances. However, these topologies might no longer outperform those with smaller capacity when augmented to a targeted capacity. A motivating illustration is shown in Fig. 1.

4 Problem Definition

The problem of searching for an adversarially robust architecture at the targeted capacity can be formalized as

| (2) | ||||

where model augmentation in the channel (i.e., width) dimension is explicitly included to align architecture capacities to the target : denotes the augmented architecture of topology with init channel number ; refers to the capacity of the augmented architecture; is an positive integer found by minimizing the difference of the augmented architecture capacity and the targeted capacity . denotes the -ball around under norm, and both the reward and the loss are calculated in a min-max fashion. Compared with Eq. 1, the search reward changes from to , where the two most notable changes are 1) The min-max formulation of adversarial robustness [18]; 2) Model augmentation applied for each candidate to get an augmented architecture with a capacity .

In this paper, we propose a multi-shot strategy that can take advantage of the parameter sharing technique to accelerate the evaluation in this “NAS for targeted capacity” problem. This work adopts FLOPs to measure model capacity. Nevertheless, the multi-shot evaluation method can be extended to other capacity measures.

5 Multi-shot NAS for Adversarial Robustness

The overall workflow is illustrated in Fig. 2. The multi-shot NAS workflow consists of three steps: 1) Supernet training: Adversarially train supernet with init channels , and the corresponding shared weights are . A 7-step projected gradient descent attack (PGD7) [18] is used for the adversarial training as an approximation of the min-max optimization problem of . 2) Function family selection: Select a extrapolation function family (Sec. 5.2). 3) Architecture search: Explore the search space (Sec. 5.3), and for each candidate topology, the multi-shot evaluation strategy is used to estimate its reward at the targeted capacity (Sec. 5.1).

5.1 Multi-shot Evaluation Strategy

To estimate the reward of a topology at the targeted capacity , is firstly evaluated in all supernets to get its one-shot rewards . Then, these one-shot rewards together with the corresponding one-shot capacity are used to fit the extrapolation function family , where is the parameters. After getting with nonlinear least square fitting, the fitted function is used to estimate the reward at the targeted capacity .

| (3) |

As we know, one-shot evaluation is the approximated evaluation strategy for the vanilla NAS problem (Eq. 1): Instead of finding for each topology , the shared weights in the supernet are used to get the approximate one-shot reward. Correspondingly, multi-shot evaluation is the approximated evaluation strategy for the “NAS at targeted capacity” problem (Eq. 2): Instead of actually constructing the augmented network at the targeted capacity and training its weights to get , we estimate the targeted reward by inter- or extra-polating multiple one-shot rewards.

Note that our method can capture the increasing tendency of each architecture’s performance as the capacity increases. In this way, the search process takes the influence brought by model augmentation into consideration, instead of only treating the model augmentation as a post-processing step [11, 16, 27].

One-shot Evaluation and BatchNorm Calibration During each one-shot evaluation, we run PGD7 or FGSM attacks on the validation dataset split and average the clean and adversarial accuracy as the reward . Unlike previous studies [10] that train each sub-architecture separately for several epochs, we use the shared weights for sub-architecture evaluation. However, there exist problems with batch normalization (BatchNorm): During the supernet training process, the accumulation of BatchNorm statistics is incorrect for each architecture. Thus, we calibrate BatchNorm statistics using the first 10 batches in the validation split.

5.2 Extrapolation Function Family Selection

Empirical observation [18] shows that as the model capacity gets larger, the increase of the model robustness saturates. Due to this observation, we choose 7 parametric saturating function families and list them in the appendix. After training supernets, we select the appropriate extrapolation function family from by calculating the average leave-one-out (LOO) ranking correlation, as also illustrated in Fig. 2. Specifically, to assess the function family , the leave--out ranking correlation for each supernet is calculated as follows. For each topology , we leave its score in out and fit to get , and . Then we can get the estimated reward of in : . Denoting and for topologies as , the Spearman ranking correlation (SpearmanR) between the estimated and actual one-shot rewards of the topologies in is

| (4) |

where randomly sampled topologies are used in our experiments. After calculating , we choose the function family with the highest average LOO SpearmanR as the extrapolation family:

| (5) |

5.3 Search with Multi-shot Evaluation Strategy

After supernet training and function family selection, we explore the search space using the rewards evaluated by the multi-shot evaluation strategy (Sec. 5.1). Two different search strategies (controllers) are used: 1) Evolutionary search; 2) Predictor-based search.

Note that since the supernet training phase and the architecture search phase is decoupled, after the supernets are trained only once, they can be easily reused in various architecture search processes with different capacity measures (e.g., #Params, latency) and targeting different capacities.

5.3.1 Search Space

Fig. 3 summarizes the cell-level search space design and the cell layout. Our cell-level search space design is identical to [10]: There are four internal nodes, and each internal node can choose an arbitrary number of previous nodes as its inputs to enable discovering densely connected patterns. Four primitives are included: none, skip connection, 3x3 separable convolution, residual 3x3 separable convolution.

We experiment with two types of cell layout: cell-wise and stage-wise. In the cell-wise layout, each of the eight cells has a distinct topology ( architectures in the search space). In the stage-wise layout, the cells in each stage share the same topology, and the search space size is enormously reduced to .

5.3.2 Evolutionary Search

We use the tournament-based evolutionary search strategy [29] (population size 100, tournament size 10) to explore the cell-wise search space.

In the previous NAS for robustness study [10], each candidate architecture is finetuned for epochs during the evaluation, which brings large computational costs. In contrast, we eliminate the separate training costs during the search process by using the BatchNorm calibration technique (Sec. 5.1). By analyzing the search process (see the appendix for concrete analyses), we can see that as more and more architectures are explored, the relative speedup of multi-shot NAS to the NAS method with separate training phases increases and approaches .

5.3.3 Predictor-based Search

Predictor-based search strategy (controller): The multi-shot evaluation strategy utilizes the parameter sharing technique and the extrapolation scheme to avoid separate augmentation and training costs for each architecture. To further reduce the costs of separate inferences on the validation data, we propose to train an efficient architecture performance predictor and utilize this predictor to “pick out” promising architectures. In this way, only these promising architectures need to be evaluated on the validation data.

Specifically, we use a graph-based encoder [22] to encode the topology of each cell into a continuous embedding, and then concatenate the embeddings of the four stage-wise cell topologies (S0, S1, S2, R) as the architecture embedding. Then the architecture embedding is fed into an MLP to get a predicted score.

The proposed predictor-based flow goes as follows. We randomly sample 200 initial architectures and evaluate them with the multi-shot evaluator. Then, the architecture-performance pairs are used to train the initial predictor. After that, we run a predictor-based search for stages. In each stage, architectures are fed into the predictor, and promising ones are picked out to be evaluated with the multi-shot evaluator. The evaluation results are then used to tune the predictor. The predictor training settings and how we pick out promising architectures (i.e., the inner search process) are elaborated in the appendix.

In our predictor-based search flow, architectures are assessed by the predictor, and only 3% of these topologies () are picked and assessed with the multi-shot evaluation strategy.

Stage-wise search space: With limited architectural information available for learning, predictors can provide better predictions in a smaller search space. And during the experiment, we find that the cellwise search space is indeed too large for the initial predictor to make meaningful predictions. Therefore, we only conduct the predictor-based search flow in the much smaller stage-wise search space, in which the predictor can be effectively trained. On the other hand, a smaller search space is easier to explore, which also facilitates a sufficient exploration in a limited search budget. It is worthy to note that since the stage-wise search space is a sub-search space of the cell-wise one, the supernets could be directly reused and no extra training is needed.

5.3.4 FGSM Reward as the Evaluation Proxy

It is slow to evaluate the robustness of a model accurately, and this brings a large computational burden to the NAS process where thousands of architectures need to be evaluated. For example, using PGD7 attack to measure the model robustness is almost as costly as clean model tests. Fortunately, NAS does not necessarily require the reward to be absolutely accurate, as long as the reward can reveal the relative ranking of architectures [22]. Thus, to alleviate the computational burden, we propose to use the FGSM attack as an efficient evaluation proxy of the PGD7 attack to measure the adversarial accuracy. Moreover, we only calculate the reward on the first half of the validation split.

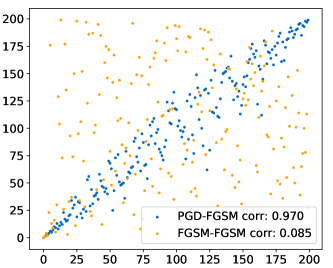

We verify the rationality of using FGSM as the proxy reward in Fig. 4. The notation “ATTACK1-ATTACK2” means that “ATTACK1” is used in supernet adversarial training, and “ATTACK2” is used in the parameter-sharing evaluation. We can see that as long as a stronger attack (PGD7) is used in adversarial training to avoid gradient masking, the FGSM rewards are highly correlated with the PGD rewards on 200 randomly sampled topologies (SpearmanR=0.97). The correlation analyses of more robustness criteria are discussed in Appendix D.

Note that a previous study on NAS for robustness [5] proposes to use FGSM attack during the whole search phase for efficiency. However, Fig. 4 illustrates that using a much weaker attack FGSM for adversarial training causes the supernet evaluation to be completely uncorrelated (SpearmanR=0.085).

By virtue of using FGSM proxy reward and fewer validation images, we accelerate each multi-shot evaluation by roughly 8: From 25 to 3 minutes to evaluate each topology on a 2080Ti GPU. That is to say, while providing better reward estimation at targeted capacities (see Fig. 5), each multi-shot evaluation could be finished in the same amount of time as a vanilla one-shot evaluation.

6 Experiments

We conduct multi-shot NAS on CIFAR-10, and evaluate the discovered architectures on the CIFAR-10, CIFAR-100, SVHN and Tiny-ImageNet [13, 21] datasets.

| Architecture | #Param (M) | #FLOPs (M) | Accuracies (%) | Distances (median ) | |||||

|---|---|---|---|---|---|---|---|---|---|

| Clean | FGSM | PGD7 | PGD20 | PGD100 | DeepFool | BIM | |||

| MobileNet-V2 | 2.30 | 182 | 77.0 | 53.0 | 50.1 | 48.0 | 47.8 | 3.07 | 3.03 |

| VGG-16 | 14.73 | 626 | 79.9 | 53.7 | 50.4 | 48.1 | 47.9 | 3.25 | 3.08 |

| ResNet-18 | 11.17 | 1110 | 83.9 | 57.9 | 54.5 | 51.9 | 51.5 | 3.98 | 3.41 |

| MSRobNet-1000 | 3.16 | 1018 | 84.50.4 | 59.60.2 | 55.70.2 | 52.70.3 | 52.30.4 | 4.080.05 | 3.510.03 |

| RobNet-free [10] | 5.49 | 1560 | 82.8 | 58.4 | 55.1 | 52.7 | 52.6% | 3.67 | 3.47 |

| MSRobNet-1560 | 5.30 | 1588 | 85.10.2 | 60.40.3 | 56.40.4 | 53.40.3 | 53.10.3 | 4.340.05 | 3.600.02 |

| MSRobNet-1560-P | 4.88 | 1565 | 85.00.2 | 59.50.3 | 55.60.3 | 52.70.7 | 52.30.7 | 4.210.09 | 3.520.04 |

| RobNet-large [10] | 6.89 | 2060 | 78.6 | 55.0 | - | 49.4 | 49.2 | - | - |

| RobNet-large-v2 [10] | 33.42 | 10189 | 85.7 | 57.2 | - | 50.5 | 50.3 | - | - |

| MSRobNet-2000 | 6.46 | 2009 | 85.70.3 | 60.60.3 | 56.60.3 | 53.60.4 | 53.20.5 | 4.330.10 | 3.600.02 |

| MSRobNet-2000-P | 5.74 | 2034 | 85.70.3 | 60.80.4 | 56.50.3 | 53.50.3 | 53.10.3 | 4.490.06 | 3.600.03 |

| Target/Substitute | VGG-16 | ResNet-18 | MobileNet-V2 | MSRN-1560 | MSRN-2000 | MSRN-1560-P | MSRN-2000-P |

| VGG-16 | 56.7% | 58.6% | 60.0% | 59.7% | 59.9% | 59.8% | 60.2% |

| ResNet-18 | 62.5% | 59.3% | 64.7% | 61.1% | 61.3% | 61.3% | 61.5% |

| MobileNet-V2 | 57.8% | 58.0% | 54.7% | 58.8% | 59.0% | 58.7% | 59.0% |

| MSRN-1560 | 66.2% | 63.4% | 67.3% | 61.5% | 62.2% | 62.4% | 61.6% |

| MSRN-2000 | 66.6% | 64.1% | 68.0% | 62.8% | 61.6% | 62.9% | 62.7% |

| MSRN-1560-P | 65.6% | 62.6% | 67.0% | 62.2% | 61.8% | 61.8% | 62.0% |

| MSRN-2000-P | 67.3% | 64.7% | 68.6% | 63.1% | 63.2% | 63.3% | 62.0% |

6.1 Experimental Setup

6.1.1 Search Settings

During the search process, the original training dataset of CIFAR-10 is divided into two parts: training split (40000 images) and validation split (10000 images). The supernets are trained on the training split, and architecture rewards are evaluated on the validation split. PGD7 under norm with = and step size = is used for adversarial training. We train supernets with init channel number for 400 epochs. We use a batch size of 64, a weight decay of 1e-4, and an SGD optimizer with a momentum of 0.9. And the learning rate is set to 0.05 initially and decayed down to 0 following a cosine schedule.

After training supernets, we select the extrapolation function family with the described method in Sec. 5.2. Then, we use in the search for robust architectures at various targeted FLOPs: 1000M, 1560M, 2000M.

6.1.2 Adversarial Training and Testing

For the final comparison on CIFAR-10, CIFAR-100 and SVHN, we adversarially train the architectures for 110 epochs using PGD7 attacks with and step size , and other settings are also kept the same. Detailed settings are elaborated in the appendix.

To evaluate the adversarial robustness of the trained models, we apply the Fast Gradient Sign Method (FGSM) [8] with (4/255 for Tiny-ImageNet) and PGD [18] with different step numbers. On CIFAR-10, we also apply another two attacks that report the successful attacking distances using the Foolbox toolbox V2.4.0 [28] and its default settings, and report the median attacking distance on the test dataset: 1) DeepFool [20]; 2) Basic Iterative Method (BIM) [15].

6.2 Results on CIFAR-10

Tab. 1 compares the performances of the architectures under various adversarial attacks. The architectures discovered by our method are referred to as MSRobNet-, in which is the targeted FLOPs in multi-shot search. We train MSRobNets for 4 times with different seeds, and report the mean and standard deviation of their performances. As the baseline architectures, we choose several manually designed architectures, and we also compare with recent NAS-discovered ones [10] in a search space that is similar to ours. We can see that with similar FLOPs, the architectures discovered by multi-shot NAS significantly outperform the baseline architectures. For example, at the targeted FLOPs of 2000M, our discovered architectures, MSRobNet-2000 and MSRobNet-2000-P, surpass RobNet-large by a large margin of 4%-7% with fewer FLOPs and much fewer parameters. Also, at the targeted FLOPs of 1560M, MSRobNet-1560 (clean 85.1%, PGD7 56.4%) outperforms the recent NAS-discovered architecture RobNet-free (clean 82.8%, PGD7 55.1%) significantly.

In addition, we also run two baseline one-shot search methods. Denoting , the two baseline one-shot search methods are:

1) One-shot-rA: Search with reward ; 2) One-shot-div: Search with a scalarized reward that takes both the performance and capacity into consideration. The comparison of MSRobNets with the architectures discovered by baseline one-shot methods are presented in the appendix.

| Topology | Search Reward | One-shot Rewards / FLOPs (M) | Multi-shot Rewards | ||||

|---|---|---|---|---|---|---|---|

| (24c) | (44c) | (54c) | (64c) | ||||

| one-shot-rA-24c-P | (24c) | 0.594 / 329 | 0.623 / 1037 | 0.630 / 1539 | 0.636 / 2140 | 0.631 | 0.634 |

| one-shot-rA-44c-P | (44c) | 0.580 / 340 | 0.624 / 1072 | 0.639 / 1592 | 0.642 / 2213 | 0.635 | 0.642 |

| MSRobNet-1560-P | 0.569 / 253 | 0.619 / 805 | 0.638 / 1196 | 0.645 / 1666 | 0.645 | 0.652 | |

| MSRobNet-2000-P | 0.572 / 261 | 0.620 / 826 | 0.646 / 1228 | 0.648 / 1708 | 0.649 | 0.660 | |

| Architecture |

|

Clean | PGD20 | PGD100 | |

|---|---|---|---|---|---|

| VGG-16 | 626 | 51.5% | 25.8% | 25.8% | |

| ResNet-18 | 1100 | 59.2% | 29.9% | 29.7% | |

| MobileNet-V2 | 182 | 48.2% | 26.3% | 26.2% | |

| RobNet-free [10] | 1560 | 57.7% | 31.1% | 30.8% | |

| MSRobNet-1000 | 1019 | 60.1% | 31.2% | 31.1% | |

| MSRobNet-1560 | 1588 | 60.8% | 31.7% | 31.5% | |

| MSRobNet-2000 | 2009 | 61.6% | 31.6% | 31.5% |

Black-box Attacks

Tab. 2 shows the results of transfer-based black-box evaluation [24].

Specifically, we test the target model with PGD100 adversarial examples crafted on the substitute model and report the accuracy. To evaluate the adversarial transferability between models with the same architecture, we train another model of this architecture as the substitute model with the same training settings. We can see that compared to transferring between the baseline architectures, it is harder to transfer the attacks from baseline architectures to MSRobNets.

6.3 Transfer Results to Other Datasets

We train the architectures on CIFAR-100, SVHN, and Tiny-ImageNet. Tab. 4 and SVHN/Tiny-ImageNet results in the appendix show that MSRobNets outperform the baselines significantly with comparable FLOPs.

6.4 More Efficient Predictor-based Search

We conduct the predictor-based search using the multi-shot rewards targeting two FLOPs (1560M, 2000M), and the discovered topologies are named MSRobNet-1560-P and MSRobNet-2000-P, respectively. Their final performances on CIFAR-10 are reported in Tab. 1. The whole predictor-based search process only takes 1.3 GPU days.

We also conduct the predictor-based search using two baseline one-shot-rA rewards: , which are the one-shot rewards in the supernets with 24 and 44 init channels, respectively. Tab. 3 shows the one-shot rewards / FLOPs and multi-shot rewards of topologies discovered with different search rewards. We can see that as indicated by the motivating illustration in Fig. 1, one-shot search prefers topologies with larger one-shot capacity, while these topologies are no longer superior when augmented to a different capacity. For example, the topology one-shot-rA-24c-P discovered using reward has the highest and a high FLOPs of M with 24 init channels. However, in the 64-init-channel supernet, it only achieves with 2140M FLOPs, while MSRobNet-2000-P achieves with a smaller FLOPs of 1708M. That is to say, although one-shot-rA-24c-p is superior to MSRobNet-2000-P when their init channel number is 24, MSRobNet-2000-P surpasses one-shot-rA-24c-p when they are augmented to use 64 init channels (FLOPs around 2000M).

6.5 Function Family Selection

We choose “log power” as the extrapolation function family, and show the LOO ranking correlations of the 7 candidate function families in the appendix. Fig. 5 shows that for each leave-out supernet , the LOO ranking correlation of the multi-shot estimated rewards (blue bar) is higher than the correlations between and the one-shot rewards in other differently-sized supernets (triangles). This indicates that the multi-shot strategy effectively captures the effect brought by width expansion, and bridges the correlation gap brought by width difference.

7 Conclusion

This paper proposes a multi-shot neural architecture search (multi-shot NAS) framework to discover adversarially robust architectures at targeted capacities. Instead of using one supernet in one-shot NAS, multiple supernets with different capacities are constructed. By evaluating each topology with the inter- or extra-polation of multiple one-shot rewards, our method can explicitly search for superior architectures at targeted capacities. Experimental results demonstrate the effectiveness of the proposed method.

This work applies multi-shot NAS in the context of adversarial robustness, since the trade-off of capacity and adversarial robustness is very significant. Interesting directions for future work include: 1) Extending the multi-shot method to other application scenarios with a targeted capacity. 2) Sharing parameters between differently-sized supernets to reduce the supernet training cost.

References

- [1] Anish Athalye, Nicholas Carlini, and David Wagner. Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples. arXiv preprint arXiv:1802.00420, 2018.

- [2] Gabriel Bender, P. Kindermans, Barret Zoph, V. Vasudevan, and Quoc V. Le. Understanding and simplifying one-shot architecture search. In ICML, 2018.

- [3] Nicholas Carlini and David Wagner. Adversarial examples are not easily detected: Bypassing ten detection methods. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, pages 3–14, 2017.

- [4] Nicholas Carlini and David Wagner. Towards evaluating the robustness of neural networks. In 2017 ieee symposium on security and privacy (sp), pages 39–57. IEEE, 2017.

- [5] Hanlin Chen, B. Zhang, Song Xue, Xuan Gong, Hong-Cheu Liu, Rongrong Ji, and D. Doermann. Anti-bandit neural architecture search for model defense. ArXiv, abs/2008.00698, 2020.

- [6] Moustapha Cisse, Piotr Bojanowski, Edouard Grave, Yann Dauphin, and Nicolas Usunier. Parseval networks: Improving robustness to adversarial examples. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pages 854–863. JMLR. org, 2017.

- [7] Minjing Dong, Yanxi Li, Yunhe Wang, and Chang Xu. Adversarially robust neural architectures. arXiv preprint arXiv:2009.00902, 2020.

- [8] Ian J. Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. CoRR, abs/1412.6572, 2015.

- [9] Chuan Guo, Mayank Rana, Moustapha Cisse, and Laurens van der Maaten. Countering adversarial images using input transformations. In International Conference on Learning Representations, 2018.

- [10] Minghao Guo, Yuzhe Yang, Rui Xu, and Ziwei Liu. When nas meets robustness: In search of robust architectures against adversarial attacks. arXiv preprint arXiv:1911.10695, 2019.

- [11] Yibo Hu, Xiang Wu, and Ran He. Tf-nas: Rethinking three search freedoms of latency-constrained differentiable neural architecture search. arXiv preprint arXiv:2008.05314, 2020.

- [12] Kirthevasan Kandasamy, Willie Neiswanger, Jeff Schneider, Barnabas Poczos, and Eric P Xing. Neural architecture search with bayesian optimisation and optimal transport. In Advances in Neural Information Processing Systems, pages 2016–2025, 2018.

- [13] A. Krizhevsky. Learning multiple layers of features from tiny images. 2009.

- [14] Alexey Kurakin, Ian Goodfellow, and Samy Bengio. Adversarial machine learning at scale. arXiv preprint arXiv:1611.01236, 2016.

- [15] A. Kurakin, Ian J. Goodfellow, and S. Bengio. Adversarial examples in the physical world. ArXiv, abs/1607.02533, 2017.

- [16] Hanxiao Liu, Karen Simonyan, and Yiming Yang. Darts: Differentiable architecture search. arXiv preprint arXiv:1806.09055, 2018.

- [17] Renqian Luo, Fei Tian, Tao Qin, Enhong Chen, and Tie-Yan Liu. Neural architecture optimization. In Advances in Neural Information Processing Systems 31, pages 7816–7827. 2018.

- [18] Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. Towards deep learning models resistant to adversarial attacks. In International Conference on Learning Representations, 2018.

- [19] Jan Hendrik Metzen, Tim Genewein, Volker Fischer, and Bastian Bischoff. On detecting adversarial perturbations. arXiv preprint arXiv:1702.04267, 2017.

- [20] Seyed-Mohsen Moosavi-Dezfooli, Alhussein Fawzi, and Pascal Frossard. Deepfool: a simple and accurate method to fool deep neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2574–2582, 2016.

- [21] Yuval Netzer, T. Wang, A. Coates, Alessandro Bissacco, B. Wu, and A. Ng. Reading digits in natural images with unsupervised feature learning. 2011.

- [22] Xuefei Ning, Yin Zheng, Tianchen Zhao, Yu Wang, and Huazhong Yang. A generic graph-based neural architecture encoding scheme for predictor-based nas. In The European Conference on Computer Vision (ECCV), 2020.

- [23] Tianyu Pang, Xiao Yang, Yinpeng Dong, Hang Su, and Jun Zhu. Bag of tricks for adversarial training. arXiv preprint arXiv:2010.00467, 2020.

- [24] Nicolas Papernot, Patrick McDaniel, and Ian Goodfellow. Transferability in machine learning: from phenomena to black-box attacks using adversarial samples. arXiv preprint arXiv:1605.07277, 2016.

- [25] Nicolas Papernot, Patrick McDaniel, Somesh Jha, Matt Fredrikson, Z Berkay Celik, and Ananthram Swami. The limitations of deep learning in adversarial settings. In 2016 IEEE European symposium on security and privacy (EuroS&P), pages 372–387. IEEE, 2016.

- [26] Nicolas Papernot, Patrick McDaniel, Xi Wu, Somesh Jha, and Ananthram Swami. Distillation as a defense to adversarial perturbations against deep neural networks. In 2016 IEEE Symposium on Security and Privacy (SP), pages 582–597. IEEE, 2016.

- [27] Hieu Pham, Melody Y Guan, Barret Zoph, Quoc V Le, and Jeff Dean. Efficient neural architecture search via parameter sharing. arXiv preprint arXiv:1802.03268, 2018.

- [28] Jonas Rauber, Wieland Brendel, and Matthias Bethge. Foolbox: A python toolbox to benchmark the robustness of machine learning models. In Reliable Machine Learning in the Wild Workshop, 34th International Conference on Machine Learning, 2017.

- [29] Esteban Real, Alok Aggarwal, Yanping Huang, and Quoc V Le. Regularized evolution for image classifier architecture search. In Proceedings of the aaai conference on artificial intelligence, volume 33, pages 4780–4789, 2019.

- [30] L Schott, J Rauber, M Bethge, and W Brendel. Towards the first adversarially robust neural network model on mnist. In Seventh International Conference on Learning Representations (ICLR 2019), pages 1–16, 2019.

- [31] Yang Song, Taesup Kim, Sebastian Nowozin, Stefano Ermon, and Nate Kushman. Pixeldefend: Leveraging generative models to understand and defend against adversarial examples. In International Conference on Learning Representations, 2018.

- [32] Christian Szegedy, V. Vanhoucke, S. Ioffe, Jon Shlens, and Z. Wojna. Rethinking the inception architecture for computer vision. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 2818–2826, 2016.

- [33] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199, 2013.

- [34] Hongyang Zhang, Yaodong Yu, Jiantao Jiao, Eric Xing, Laurent El Ghaoui, and Michael Jordan. Theoretically principled trade-off between robustness and accuracy. In International Conference on Machine Learning, pages 7472–7482, 2019.

- [35] Barret Zoph and Quoc V. Le. Neural architecture search with reinforcement learning. In ICLR, 2017.

Appendix A Extrapolation Function Family Selection

The 7 parametric saturating function families are

-

1.

Janoschek:

-

2.

vapor pressure:

-

3.

log log linear:

-

4.

ilog2:

-

5.

log power:

-

6.

MMF:

-

7.

log power rep:

The leave-one-out function selection scheme is summarized in Alg. 2. In practice, we find that all the candidate function families have a weak correlation between estimated and actual one-shot rewards in (supernet with init channel number 12). In addition, the capacity of is so small that FLOPs of almost all the topologies in it are far from the targeted capacity. Thus, we regard the estimation results in as outliers, and do not include when calculating .

The leave-one-out correlations of 7 function families are shown in Fig. A1. We choose “log power” as the extrapolation function family, since it has the highest average leave-one-out correlation (0.921).

Tab. 3 in the main text gives some evidence on our motivation. To give a more intuitive evidence on the motivating example in the main text, we show the extrapolation curves of two topologies (among the 200 randomly sampled topologies in our experiment) in Fig. A2. We can see that when aligned to the same capacity (FLOPs), topology 2 is more robust in the low-capacity region, while topology 1 is more robust in the high-capacity region. The two curves are fitted using the data points at the left of the dashed line. And we can see that the fitted curve can capture the tendency, thus manage to predict the relative ranking correctly at a larger capacity without actual training a large supernet (the leftmost triangular markers).

-

•

Evolutionary: search steps , population size , tournament size , PGD attack steps

-

•

Predictor-based: search stages , topologies sampled per stage , inner evolutionary search steps , PGD attack steps (FGSM, no random init)

Appendix B Experimental Setup

B.1 Search Strategy Settings

Evolutionary Search The search strategy is a tournament-based evolutionary method [29], with population size 100 and tournament size 10. In each step, the worst topology is removed from the population.

| Architecture | #Param (M) | #FLOPs (M) | Clean | FGSM | PGD7 | PGD20 | PGD100 |

|---|---|---|---|---|---|---|---|

| VGG-16 | 14.73 | 626 | 92.3% | 66.6% | 55.0% | 47.4% | 45.1% |

| ResNet-18 | 11.17 | 1100 | 92.3% | 73.5% | 57.4% | 51.2% | 48.8% |

| MobileNet-V2 | 2.30 | 182 | 93.9% | 73.0% | 61.9% | 55.7% | 53.9% |

| RobNet-free [10] | 5.49 | 1560 | 94.2% | 84.0% | 66.1% | 59.7% | 56.9% |

| MSRobNet-1000 | 3.16 | 1019 | 94.5% | 85.5% | 67.8% | 61.6% | 58.6% |

| MSRobNet-1560 | 5.30 | 1588 | 95.0% | 77.5% | 64.0% | 57.0% | 54.2% |

| MSRobNet-2000 | 6.56 | 2009 | 94.9% | 84.8% | 65.3% | 58.8% | 55.1% |

| Architecture | #Param (M) | #FLOPs (M) | Clean | FGSM | PGD5 | PGD20 | PGD100 |

|---|---|---|---|---|---|---|---|

| ResNet-18 | 11.28 | 1126 | 50.3% | 27.1% | 28.8% | 24.4% | 24.3% |

| ResNet-50 | 23.92 | 2612 | 52.2% | 27.8% | 29.4% | 25.2% | 25.0% |

| MSRobNet-1000 | 3.33 | 1056 | 52.3% | 28.7% | 30.6% | 26.5% | 26.4% |

| MSRobNet-1560 | 5.51 | 1633 | 52.9% | 28.1% | 29.7% | 25.9% | 25.6% |

Predictor-based Search The predictor-based search process is summarized in Alg. 1. We first randomly sample 200 stage-wise architectures, evaluate them, and train the initial predictor (Line 21). Then, the predictor-based search is run for stages. In each stage, architectures are sampled by the predictor-based controller (Line 23) and evaluated using the multi-shot strategy (Line 24). And then, the multi-shot evaluation results are used to tune the predictor (Line 25).

In the inner search process (Line 23), we run a tournament-based evolutionary search with population size 20 and tournament size 5. We conduct evolutionary search steps, and in each step, a mutated topology is assessed by the predictor, which is very efficient. And in every 50 steps, a topology with the highest predicted score in the current population is decided for the actual multi-shot evaluation. In each step, the topology with the lowest predicted score is removed from the population.

As for the predictor construction, we adapt a recent graph-based encoder [22] to our search space. Specifically, we encode the topology of each cell into a continuous vector, and then concatenate the embeddings of four cell topologies (S0, S1, S2, R) as the architecture embedding. Then the architecture embedding is fed into an MLP to get a predicted score. The operation embedding dimension, node embedding dimension, and hidden dimension are all 48. And the output dimension of each cell topology is set to 32. Thus, the dimension of one full topology is 128. Then this 128-dim vector is fed into a 3-layer MLP with 256 hidden units and output a final score. In each predictor training process, the predictor is trained for 100 epochs with batch size 50 and an Adam optimizer (1e-3 learning rate). We use hinge pair-wise ranking loss with margin to train the predictor following [22]. A dropout of 0.1 before the MLP is used.

B.2 Adversarial Training and Testing of Architectures

For the final comparison of all the architectures, we adversarially train them for 110 epochs on CIFAR-10/CIFAR-100 and 50 epochs on SVHN. As for the adversarial attack, we adopt the PGD7 attack with and step size . The batch size is set to 48, and we use an SGD optimizer with momentum 0.9, gradient clipping 5.0, and weight decay 5e-4. The learning rate is set to 0.05 initially. On CIFAR-10 and CIFAR-100, the learning rate is decayed by 10 at epoch 100 and epoch 105. And on SVHN111To successfully train a VGG-16 model on SVHN, we first conduct a normal training process for 50 epochs, and finetune the normally-trained model with adversarial training., it is decayed by 10 at epoch 20, epoch 40 and epoch 45. In addition, following a recent study [23], we employ the label smoothing regularization [32] with weight 0.2. No cutout and dropout is applied. All models are trained with the same settings on a single 2080Ti GPU.

On Tiny-ImageNet, the image size is 6464 instead of 3232, and we change the stem from a 3x3 convolution to a 7x7 convolution with stride 2. Due to this modification, the overall FLOPs is still close to . For all the architectures, we first conduct a normal training process for 110 epochs with batch size 256. The learning rate is set to 0.1 initially and decayed by 10 at epoch 30, 60 and 100. Using the normally-trained model as the pre-trained model, we adversarially train the model for 70 epochs with PGD5 attack (, step size ). The learning rate is linearly warmed up from 0 to 0.05 in 10 epochs, and decayed by 10 at epoch 45 and 60. In both the normal and adversarial training processes, an SGD optimizer with momentum 0.9, gradient clipping 5.0, and weight decay 3e-4 is used.

The settings of the distance attacks on CIFAR-10: 1) DeepFool: 100 steps; 2) Basic Iterative Method (BIM): 20 binary search steps.

Appendix C Additional Experimental Results

C.1 BatchNorm Calibration

Fig. A3 shows that without BatchNorm calibration (No CalibBN, green points), the evaluation results are unmeaningful. And after BN calibration with several batches, the accuracies and rankings of 200 randomly sampled sub-architectures become meaningful. Note that we calculate the ranking correlation with the CalibBN-15 results (calibration using 15 batches), since we fail to tune the architectures to the same level of accuracies with only 3 epochs of training as in [10].

| Architecture | #Param (M) | #FLOPs (M) | Clean | FGSM | PGD7 | PGD20 | PGD100 |

|---|---|---|---|---|---|---|---|

| One-shot-rA-1560 | 5.65 | 1571 | 84.9% | 60.0% | 56.0% | 53.1% | 52.6% |

| One-shot-div-1560 | 5.00 | 1552 | 84.1% | 59.1% | 55.0% | 52.0% | 51.6% |

| MSRobNet-1560 | 5.30 | 1588 | 85.1% | 60.4% | 56.4% | 53.4% | 53.1% |

| One-shot-rA-2000 | 7.25 | 2013 | 85.3% | 59.9% | 55.9% | 53.0% | 52.7% |

| One-shot-div-2000 | 6.46 | 2005 | 85.1% | 59.8% | 55.9% | 52.8% | 52.4% |

| MSRobNet-2000 | 6.46 | 2009 | 85.7% | 60.6% | 56.6% | 53.6% | 53.2% |

C.2 Transfer Results to SVHN and Tiny-ImageNet

We train MSRobNets and all baseline architectures with identical settings that have been described before, and show the comparison in Tab. A1 and Tab. A2. We can see MSRobNets outperform the baseline architectures, with comparable FLOPs and fewer parameters.

An interesting observation is that the architectures demonstrate slightly different relative robustness on these two datasets. For example, MSRobNet-1000 outperforms MSRobNet-1560 in adversarial accuracies on SVHN/Tiny-ImageNet, and RobNet-free outperforms MSRobNet-1560 on SVHN. This may arise from that larger dataset difference degrades the architecture transferability. Thus, the MSRobNet-1560 architecture discovered on CIFAR-10 is suboptimal on SVHN/Tiny-ImageNet for the targeted FLOPs of 1560M.

C.3 Comparison with Baseline One-shot Workflows

We compare with the architectures discovered by baseline one-shot workflows at targeted FLOPs 1560M and 2000M in Tab. A3. All the one-shot searches are conducted on the supernet with init channel number 44. After the search, the discovered topology is augmented to the targeted capacity . We can see that MSRobNets indeed outperform those architectures discovered by the one-shot methods consistently.

Appendix D Discussion

D.1 Adversarial Robustness Evaluation in the Search Phase: PGD V.S. FGSM

Compared with normal training and evaluation, adversarial training and adversarial robustness evaluation are much more time-consuming. Since NAS itself faces the computational challenge, how to evaluate the adversarial robustness efficiently in the search phase is an important problem. We wonder whether we can use a weaker and faster attack in the search phase while still managing to discover a robust architecture under the stronger attack. For example, while the final comparison is conducted using PGD7 (PGD attack with 7 steps), a recent study [5] tried to use the weaker FGSM attack during the whole search phase for efficiency.

As described in the main text, the search phase includes the supernet training phase and the parameter-sharing search phase. We conduct the following experiments to verify whether it is suitable to use FGSM as a substitute in the search phase: 1) FGSM-FGSM: Train another supernet with FGSM adversarial training, and use FGSM to evaluate the 200 randomly sampled topologies. 2) PGD7-FGSM: Reuse the supernet trained with PGD7 adversarial training, but use FGSM to evaluate the 200 randomly sampled topologies. The notation “ATTACK1-ATTACK2” means that “ATTACK1” is used in supernet adversarial training and “ATTACK2” is used in the parameter-sharing evaluation, and the step size of the FGSM attack is set to 8/255. Fig. A4 shows the rankings of these evaluations and the Spearman coefficient with the PGD7-PGD7 evaluations. The results show that using a much weaker attack FGSM for adversarial training causes the supernet evaluation to be uncorrelated. Thus, one can use FGSM for acceleration during the parameter-sharing search phase, while should keep using a stronger attack during the supernet training phase.

| Supernet | Kendall Tau | Spearman | P@5 | P@10 | P@20 | P@50 | P@100 |

|---|---|---|---|---|---|---|---|

| 0.904 | 0.987 | 0.60 | 0.80 | 0.90 | 0.88 | 0.94 | |

| 0.862 | 0.972 | 0.80 | 0.90 | 0.75 | 0.90 | 0.92 | |

| 0.859 | 0.973 | 0.60 | 0.60 | 0.80 | 0.90 | 0.94 | |

| 0.832 | 0.960 | 0.80 | 0.70 | 0.90 | 0.82 | 0.94 | |

| 0.867 | 0.973 | 0.60 | 0.90 | 0.85 | 0.90 | 0.93 | |

| 0.858 | 0.969 | 0.60 | 0.90 | 0.75 | 0.88 | 0.91 | |

| 0.835 | 0.959 | 0.80 | 0.90 | 0.70 | 0.82 | 0.93 | |

| 0.830 | 0.958 | 0.80 | 0.80 | 0.75 | 0.84 | 0.90 |

In the stage-wise search space, we also calculate the Kendall Tau, Spearman correlation, and P@K [22] of using PGD7-FGSM as a proxy reward for PGD7-PGD7. The P@K criterion reports the proportion of topologies with top-K rewards evaluated by PGD7-FGSM in the top-K topologies evaluated by PGD7-PGD7, and is a criterion that focus more on well-performing topologies. Results in Tab. A4 show that most of the high-score topologies evaluated by PGD7-FGSM also perform well under PGD7-PGD7 evaluation, which demonstrates that FGSM is indeed a suitable proxy attack for PGD during the search phase.

D.2 Adversarial Robustness Evaluation in the Search Phase: Distance-based Criterion

In our paper, we use a reward formulation that is a weighted sum of clean and adversarial accuracies.

| (6) |

where is a hyperparameter making a trade-off between the clean and adversarial accuracies. During our search, is set to 0.5. In other words, we use the average of clean and adversarial accuracies as the reward to guide the search.

Another type of robustness criterion is the distance-based criteria. The Foolbox toolbox [28] implements various kinds of distance attacks that attempt to find a minimum successful perturbation. Examples that have been misclassified without any adversarial perturbation are assigned zero attack distance. In this way, no averaging coefficient is needed to trade off the clean and adversarial accuracies, since the clean accuracy is handled implicitly.

We try to explore how these different robustness criteria correlate with each other. We evaluate the 200 randomly sampled architectures on the validation dataset in (init channel number 24) under five kinds of distance attacks. The five attacks are Basic Iterative Method (BIM) / [15], DeepFool / [20] and C&W [4], where or denotes the type of the distance measure in the input space. Due to the low efficiency of C&W attack, we only run it on 500 images () in the validation set. We use the median distance as one architecture’s performance criterion under the preformed attack, since it is insensitive to outliers (e.g., when the attack fails to find a proper adversarial perturbation within limited attack steps). For comparison, we also run PGD7 and FGSM attacks and calculate their rewards as in Eq. 6 with .

The Kendall Tau correlation coefficients between different criteria are shown in Fig. A5. We can see that

-

1.

Different robustness criteria are indeed correlated.

-

2.

Compared with the relatively high correlation between FGSM and PGD adversarial accuracies and rewards (), their correlations with distance criteria are relatively weaker. The clean accuracy is better correlated with FGSM/PGD rewards (0.718, 0.669) than the distance-based criteria (0.366-0.465). This is intuitive since the clean accuracy is added into FGSM/PGD rewards explicitly, while only handled implicitly (attack distance or ) in distance-based attacks. On the other hand, they are all higher than the correlations of clean accuracy and FGSM/PGD adversarial accuracies (0.324, 0.282), which verifies that an implicit or explicit trade-off between clean and adversarial accuracies must be considered in the robustness criterion.

-

3.

Intuitively, compared with attack distances, attack distances are better correlated with other attack distances, and vice versa. For example, . There are indeed differences brought by input space measure, and this motivates us to use criteria with different input space measure to assess model robustness.

-

4.

FGSM/PGD attacks are conducted with input space measure, thus the correlation of their rewards with attack distances is higher than attack distances.

D.3 Efficiency

As mentioned in the main text, the FGSM proxy reward and fewer validation images accelerate the evaluation process by roughly 8 (from 25 min to 3 min for evaluating each topology on a single 2080Ti GPU).

After been trained only once, the supernets can be reused to discover architectures targeting different capacities (e.g., 1000M, 1560M, 2000M), or in search space with different macro layouts (e.g., cell-wise, stage-wise). Thus, for each targeted capacity, denoting the epoch-wise training and evaluation time of one architecture as and , the search time can be estimated as . By using the BatchNorm calibration technique, we obviate the need of separate architecture training phases during the search. If each architecture needs to be finetuned for epochs (e.g., in [10]), the search time becomes . We can estimate the coefficients of in the multi-shot method and a NAS method with separate training phases as and , respectively.222In our experiments, we find that is approximately times of the train-valid data portion ratio . This means that, compared with the NAS methods that have separate training phases, the relative efficiency of multi-shot NAS emerges as the number of explored architectures increases and the speedup would approaches 25/8 asymptotically.

In the predictor-based search flow, topologies in total are assesed using the multi-shot evaluation strategy, and the whole search process can be finished in 1.3 GPU days.

Appendix E Discovered Architectures

The discovered cell-wise architectures, MSRobNet-1000, MSRobNet-1560 and MSRobNet-2000, are shown in Fig. A6, Fig A7, and Fig. A8, respectively. The discovered stage-wise architectures, MSRobNet-1560-P and MSRobNet-2000-P, are shown in Fig. A9 and Fig. A10, respectively.