Department of Mathematics & Statistics, Utah State University, Logan, UT, USA [email protected]. (J. Zhao)

Discovering Phase Field Models from Image Data with the Pseudo-spectral Physics Informed Neural Networks

Abstract

In this paper, we introduce a new deep learning framework for discovering the phase field models from existing image data. The new framework embraces the approximation power of physics informed neural networks (PINN), and the computational efficiency of the pseudo-spectral methods, which we named pseudo-spectral PINN or SPINN. Unlike the baseline PINN, the pseudo-spectral PINN has several advantages. First of all, it requires less training data. A minimum of two snapshots with uniform spatial resolution would be adequate. Secondly, it is computationally efficient, as the pseudo-spectral method is used for spatial discretization. Thirdly, it requires less trainable parameters compared with the baseline PINN. Thus, it significantly simplifies the training process and assures less local minima or saddle points. We illustrate the effectiveness of pseudo-spectral PINN through several numerical examples. The newly proposed pseudo-spectral PINN is rather general, and it can be readily applied to discover other PDE-based models from image data.

keywords:

Phase Field; Linear Scheme; Cahn-Hilliard Equation; Physics Informed Neural Network1 Introduction

The phase field models have been widely appreciated for investigating moving interface or multiphase problems. Among them, the well-known models are the Allen-Cahn equation (AC) and the Cahn-Hilliard (CH) equation [3]. In general, most existing phase field models are thermodynamically consistent, i.e., they satisfy the first and second thermodynamic laws. In particular, for most scenarios, the temperature is assumed constant, such that the Helmholtz free energy is non-increasing in time. Therefore, Denoting the state variable as and the free energy as , the phase field models can usually be written in a generic gradient flow form

| (1.1) |

with proper initial values and boundary conditions. Here is a semi negative-definite operator known as the mobility operator. In general, the free energy contains a conformation entropy and a bulk potential part. In this paper, we consider the free energy

| (1.2) |

with a model parameter. We use to denote the bulk chemical potential. For the Allen-Cahn equation, the mobility is usually chosen as , with a constant. Then, the Allen-Cahn equation reads

| (1.3) |

Similarly, for the Cahn-Hilliard equation, the mobility is usually chosen as , with a constant. Then the Cahn-Hilliard equation reads as

| (1.4) |

Here the choice of the bulk potential will depend on the material properties, and the double-well potential and Flory-Huggins free energy are the common choices.

Though there is a considerable amount of work on solving the phase field models [19, 18, 8, 6, 22, 7], there is less achievement on solving the inverse problem, i.e., discovering the phase field models from data. In the classical approach, the PDE models are usually derived based on empirical observation. The PDE models with free parameters are introduced first, and the free parameters will be fitted with data afterward. In this paper, we introduce a novel method using the physics informed neural network approach, where the model would be directly learned from data. In particular, we assume the bulk potential is unknown, and we will discover it via learning from the existing data using the deep neural network.

The deep neural network has been widely used to investigate problems in various fields. Mathematically, the feed-forward neural network could be defined as compositions of nonlinear functions. Given an input , and denote the output of the -th layer as , which is the input for -th layer. In general, we can define the neural network as [10]

| (1.5) |

where and denote the weights and biases at layer respectively, denotes the activation function. Many interesting work have been published in terms of solving or discovery of partial differential equations [16, 13, 15, 14, 2, 20, 9, 5, 1, 11, 23]. Here we briefly discuss some relevant work of PDE discovery with deep neural network or machine learning method. In [16], the authors assume the PDE model is a linear combination of a few known terms, and try to fit the coefficient from data. Based on the format of known data, they propose two approaches for calculating the loss function. In [17], the authors assume the PDE model is a linear combination of terms in a predefined dictionary (with many terms), and use the sparse regularization to coefficients are zero. Thus, after fitting with the data, only a few non-zero terms will be left, and the model is discovered. In [12], the authors introduce a feed-forward deep neural network to predict the PDE dynamics and uncover the underlying PDE model (in a black box form) simultaneously.

However, in most of the existing work, there is strong assumption on the availability of data. For instance, most existing PDE discovery strategies request a well sampled data across time and spatial domain, and they usually assume the data collected at different time slots are from a single time sequence. In reality, especially from lab experiments, the data are usually sampled as snapshots (images) from various time slots, and the images might have limited spatial resolution, i.e. the solution values are only known at certain locations. The major goal of this paper is to address the problem of PDE discovery with image snapshots. Therefore, we add to the efforts by introducing the pseudo-spectral PINN, which provides a handy approach to deal with data that are provided as image snapshots. And in particular, we focus on the phase field models alone.

The rest of of this paper is organized as follows. In Section 2, we introduce some notations, and set up the PDE discovery problem. In Section 3, we introduce the pseudo-spectral physics informed neural networks by introducing the network stricture and the various definitions of the loss function. Afterward, several examples are shown to demonstrate the approximation power of the pseudo-spectral PINN in Section 4. A brief conclusion is drawn in the last section.

2 Problem Setup

Recall from (1.1), we use to denote the state variables, where are the spatial coordinates, and denotes the time. The baseline PINN [16] would require a rather amount of data pairs properly sampled from the domain . Also, it requires all the data are from a single time sequence, given a deep neural network is needed to approximate as an aiding neural network for the discovery the PDE. However, this is usually not the case. In practice, the data are usually sampled as image snapshots at various time slots. For instance, for certain experiments, a picture is taken at , and after , another picture is taken at .

To clearly describe the problem, we need to introduce some notations. Let be two positive even integers. The spatial domain is uniformly partitioned with mesh size and

In order to derive the algorithm conveniently, we denote the discrete gradient operator and the discrete Laplace operator

following our previous work [4].

Let be the space of grid functions on . For such cases, the data are collected in the form as

| (2.1) |

where and are two snapshots with a time lag between the two states, and is the total number of snapshot pairs. And the goal is to discover the bulk function in the phase field models, with the data collected in the form of (2.1).

3 Pseudo-spectral Physics Informed Neural Networks

3.1 Neural Network Structure

For simplicity of notations, we introduce our idea by applying it on a generic example. Given the PDE problem

| (3.1) |

with periodic boundary condition, where operator is known, but operator is unknown. If we are provided with data pairs as (2.1), the major goal of this paper is to identify the operator/functional , thus discover the PDE model in (3.1).

The generic way to solve it is by introducing a deep neural network

| (3.2) |

to approximate , where represents the free parameters. In this paper, we assume is only a function of for simplicity. Notice the idea introduced applies easily to cases where is a function of and its derivatives, saying .

Since the data pairs (2.1) are uniformly sampled and the periodic boundary condition is considered for the PDE problem (3.1), it is advisable to simply the problem (3.1) by discretizing in space using pseudo-spectral method. Afterwards, the PDE problem in (3.1) can be written as

| (3.3) |

where is the spatially discretized mobility operator. Here we use to denote the discrete function values of on . We emphasis the periodic boundary condition is discussed in this paper. If other type of boundary conditions is considered, finite-difference or finite-element method for spatial discretization might be more proper.

Remark 3.1.

Notice, in the baseline PINN, an aiding neural network is introduced to approximate , which is computationally expensive and is applicable only to a single time sequence. It will be apparent that applying the physics informed neural networks on the semi-discrete problem (3.3) will have several advantages.

There are mainly two components (ingredients) in the deep neural network method. First of all, we shall define what the neural network meant to approximate (along with its structures, input, output, and hidden layers); define the loss function (which is enforced with known physics). Hence, for the problem above, we introduce a neural network to approximate . Next, we need to define the loss function.

3.2 Loss function

We split in (3.3) as

where is the linear operator and is the rest, i.e.

| (3.4) |

To solve it numerically in the time interval , we can propose the stabilized scheme

| (3.5) |

where is a stabilizing operator, which could be chosen as

| (3.6) |

with ’s are constants. Then, we have

| (3.7) |

Notice the scheme (3.7) is first-order accurate in time. When is small enough, the expression for is accurate. Inspired by this, we can introduce our linear SPINN, to discover (3.1) from the data (2.1).

Definition 3.2 (Linear SPINN loss function).

Denote the neural network where are the free parameters. Given , we can approximate via , which is defined by

| (3.8) |

Hence, the loss function is defined as

| (3.9) |

When the time step is small, by minimizing , we can identify , which in turn discover the PDE problem in (3.1). For certain cases, might be large. A single step marching scheme might be inaccurate. We thus introduce a loss function that is based on a more general recursive Linear SPINN.

Definition 3.3 (Recursive Linear SPINN loss function).

Denote the neural network . Given , we can approximate via a mapping , where is defined recursively as

| (3.10) |

with and ’s hyper-parameters. And the loss function is traced as

| (3.11) |

Remark 3.4.

Similarly, we can design the Neural network inspired by a second-order or higher-order numerical scheme. For instance, consider the following predictor-corrector second-order scheme. To solve it numerically in the time interval , we introduce the stabilized second-order scheme in two step:

-

•

First of all, we can obtain via

(3.12) -

•

Next, we can obtain via solving

(3.13)

Therefore, if we definite the neural network , once given , we can approximate via the mapping defined as

| (3.14) |

where is defined by

| (3.15) |

Then the loss function could be defined similarly. Some other ideas, such as exponential time integration can also be utilized for designing neural network structure. For brevity, these ideas will not be pursued in this paper. Interested readers are encouraged to explore these interesting topics.

As an analogy, we can mimic the Runge-Kutta method for solving (3.1) to design neural networks.Inspired by the idea of four-stage explicit Runge-Kutta method for the time discretization, we can introduce the following loss function.

Definition 3.5 (Four-stage explicit Runge-Kutta SPINN loss function).

Denote the neural network . Given , we can approximate by the mapping defined by the four stage method

| (3.16) |

where

| (3.17) |

Then the loss function can be defined as

| (3.18) |

Similarly, when the time step is large, we can define the loss function via the explicit RK SPINN recursively as below.

Definition 3.6 (Recursive four-stage Runge-Kutta SPINN loss function).

Denote the neural network . Given the data , we can approximate via the -step recursive mapping denoted as , where

| (3.19) |

And the loss function is defined by

| (3.20) |

Remark 3.7.

When the time step is even larger, one can use the implicit Runge-Kutta method for time discretization. However, given the intermediate stages are unknown, we need to introduce an extra neural network to approximate it:

| (3.21) |

where is to approximate . By following the idea in [16], we can define the following loss function

| (3.22) |

For such a case, it requires expensive computational costs, and we will not investigate it in the current paper.

4 Numerical examples

Next, we investigate the proposed SPINN with several examples. To identify the phase field models from data snapshots.

Recall that we assume is unknown, and the goal is to identify it via learning the existing data in the form of (2.1). As explained, we define a neural network. to approximate and use the loss functions as defined in the previous section. In the rest of this paper, we assume a feed-forward neural network, with -hidden layers, with each hidden layer having neurons. The activation function is applied in both hidden layers. During the training process, the method with default learning rate is used for 10,000 training iterations, followed by an L-BFGS-B optimization training process. The algorithms are implemented with Tensorflow.

For simplicity of discussion, in the all the examples below, generates random numbers between we chosen the domain , and choose in , i.e. the collected data are matrices in . And we solve the PDE first with high-order-accurate scheme with uniform time steps and uniform spatial discretization. The numerical solutions are randomly sampled at different time slots as training data to inversely discover the bulk function . Given the free energy in (1.2), we get , and . And we will chose for AC equation, and for CH equation.

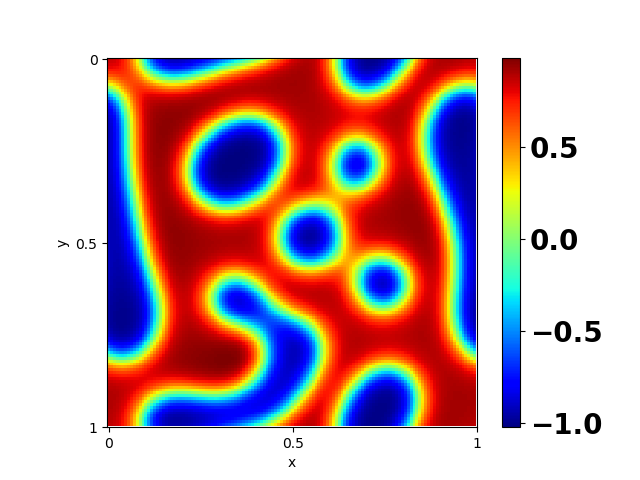

Example 1. In the first example, we generate data by solving the Allen-Cahn equation in (1.3) with , which means . And the parameters used are , , with the initial condition . Some snapshots of at various time slots are shown in Figure 4.1.

First of all, we test out the Runge-Kutta SPINN approach. We choose , i.e. use only a single data pair to train the neural network. We randomly choose , and study how the size of would affect the learned result. One experiment result with chosen at and are summarized in Figure 4.2. We observe that when the two snapshots are close, i.e. is small, the Runge-Kutta SPINN approach can accurately learn the bulk function . However, when the time step is large, its accuracy drops.

To overcome the inaccuracy when is large, we utilize the recursive Runge-Kutta SPINN approach. Here we fix the time step , and test the effect by using different recursive stage . The results are summarized in Figure 4.3. We observe that, by increasing the recursive stages, the accuracy improves, and the bulk function can be learned accurately when sufficient stage is used.

Example 2. However, when the time step is large enough, the recursive Runge-Kutta SPINN approach will not provide accurate approximate to . For instance, with the time step , the recursive Runge-Kutta SPINN approach fails. Meanwhile, the linear SPINN approach shows superior accuracy. As an example, we use a single data pair with a fixed time step . We vary the recursive stage for the recursive linear SPINN, and the results of the learned function are summarized in Figure 4.4. We observe that, even when the time step is large, the recursive linear SPINN approach still provides accurate approximate to , so long as the recursive stage is large enough.

We remark that the training strategy and the quality of training data might also be factors for the approximation accuracy, which we will not pursue in detail. Interested readers are strongly encouraged to explore.

Example 3. In the next example, we increase the problem complexity to identify a highly nonlinear bulk function. In details, we get the data by solving an Allen-Cahn equation with the Flory-Huggins free energy , which means the bulk function . The parameters used are , , along with the initial condition . We randomly sample two snapshots with , and train the neural network. A example of using two snapshots at are shown in Figure 4.5, where the two snapshots are shown in Figure 4.5(a), and the predicted function is shown in Figure 4.5(b). We observe the linear SPINN approach can learn the bulk function from only two images accurately.

Example 4. In the last example, we use the linear SPINN approach to discover the bulk function from the solution snapshots of the Cahn-Hilliard equation in (1.4). Here the data is obtained by solving the Cahn-Hilliard equation with , i.e. , , and initial condition . For the loss function, we add an extra term , to enforce the uniqueness of . Some temporal snapshots for are summarized in Figure 4.6. We randomly choose data , with data points with fixed time step . The learned result is summarized in Figure 4.7. We obverse the predicted bulk function has improved accuracy with more data used to train the neural network.

5 Conclusion

In this paper, we introduce a pseudo-spectral physics informed neural network to discover the bulk function in the phase field models. This newly proposed method well fits the common data collection strategy, i.e., taking snapshots/images at various time slots. The effectiveness of the proposed pseudo-spectral PINN, or SPINN, has been verified through identifying the bulk function of several phase field models. The idea of pseudo-spectral PINN is rather general, and it can be readily applied to discover other PDE models from image data, which will be investigated in our later research projects.

Acknowledgment

Jia Zhao would like to acknowledge the support from NSF DMS-1816783.

Conflict of Interest Statement

On behalf of all authors, the corresponding author states that there is no conflict of interest.

References

- [1] J. Berg and K. Nystrom. A unified deep artificial neural network approach to partial differential equations in complex geometries. Neurocomputing, 317:28–41, 2018.

- [2] S. L. Brunton, J. L. Proctor, and J. N. Kutz. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proceedings of the National Academy of Sciences, 113(15):3932–3937, 2016.

- [3] J. W. Cahn and J. E. Hilliard. Free energy of a nonuniform system. I. interfacial free energy. Journal of Chemical Physics, 28:258–267, 1958.

- [4] L. Chen, J. Zhao, and Y. Gong. A novel second-order scheme for the molecular beam epitaxy model with slope selection. Commun. Comput. Phys, x(x):1–21, 2019.

- [5] W. E and B. Yu. The deep ritz method: A deep learning-based numerical algorithm for solving variational problems. Communications in Mathematics and Statistics, 6:1–12, 2018.

- [6] F. Guillen-Gonzalez and G. Tierra. Second order schemes and time-step adaptivity for Allen-Cahn and Cahn-Hilliard models. Computers Mathematics with Applications, 68(8):821–846, 2014.

- [7] Y. Gong, and J. Zhao. Energy-stable Runge–Kutta schemes for gradient flow models using the energy quadratization approach. Applied Mathematics Letter, 94, 224-231, 2019.

- [8] D. Han and X. Wang. A second order in time uniquely solvable unconditionally stable numerical schemes for Cahn-Hilliard-Navier-Stokes equation. Journal of Computational Physics, 290(1):139–156, 2015.

- [9] J. Han, A. Jentzen, and W. E. Solving high-dimensional partial differential equations using deep learning. Proceedings of the National Academy of Sciences, 115(34):8505–8510, 2018.

- [10] C. Higham and D. Higham. Deep learning: An introduction for applied mathematicians. arXiv, page 1801.05894, 2018.

- [11] B. Li, S. Tang, and H. Yu. Better approximations of high dimensional smooth functions by deep neural networks with rectified power units. Communications in Computational Physics, 27:379–411, 2020.

- [12] Z. Long, Y. Lu, X. Ma, and B. Dong. Pde-net: Learning pdes from data. Proceedings of the 35th International Conference on Machine Learning, 80:3208–3216, 2018.

- [13] L. Lu, X. Meng, Z. Mao, and G. E. Karniadakis. Deepxde: A deep learning library for solving differential equations. arXiv, page 1907.04502, 2019.

- [14] T. Qin, K. Wu, and D. Xiu. Data driven governing equations approximation using deep neural networks. Journal of Computational Physics, 395:620–635, 2019.

- [15] M. Raissi. Deep hidden physics models: Deep learning of nonlinear partial differential equations. The Journal of Machine Learning Research, 19:1–24, 2018.

- [16] M. Raissi, P. Perdikaris, and G. E. Karniadakis. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378:686–707, 2019.

- [17] S. Rudy, S. L. Brunton, J. L. Proctor, and J. N. Kutz. Data-driven discovery of partial differential equations. Science Advances, 3(4):e1602614, 2017.

- [18] J. Shen and X. Yang. Numerical approximations of Allen-Cahn and Cahn-Hilliard equations. Disc. Conti. Dyn. Sys.-A, 28:1669–1691, 2010.

- [19] C. Wang, X. Wang, and S. Wise. Unconditionally stable schemes for equations of thin film epitaxy. Discrete and Continuous Dynamic Systems, 28(1):405–423, 2010.

- [20] Y. Wang and C. Lin. Runge-kutta neural network for identification of dynamical systems in high accuracy. IEEE Transactions on Neural Networks, 9(2):294–307, 1998.

- [21] K. Xu and D. Xiu. Data-driven deep learning of partial differential equations in modal space. Journal of Computational Physics, 408:109307, 2020.

- [22] X. Yang, J. Zhao, and Q. Wang. Numerical approximations for the molecular beam epitaxial growth model based on the invariant energy quadratization method. Journal of Computational Physics, 333:102–127, 2017.

- [23] H. Zhao, B. Storey, R. Braatz, and M. Bazant. Learning the physics of pattern formation from images. Physical Review Letters, 124, 060201, 2020.

- [24] J. Zhao, X. Yang, Y. Gong, X. Zhao, X.G. Yang, J. Li, and Q. Wang A general strategy for numerical approximations of non-equilibrium models–Part I thermodynamical systems. International Journal of Numerical Analysis & Modeling, 15 (6), 884-918, 2018.