Dirichlet Energy Constrained Learning for Deep Graph Neural Networks

Abstract

Graph neural networks (GNNs) integrate deep architectures and topological structure modeling in an effective way. However, the performance of existing GNNs would decrease significantly when they stack many layers, because of the over-smoothing issue. Node embeddings tend to converge to similar vectors when GNNs keep recursively aggregating the representations of neighbors. To enable deep GNNs, several methods have been explored recently. But they are developed from either techniques in convolutional neural networks or heuristic strategies. There is no generalizable and theoretical principle to guide the design of deep GNNs. To this end, we analyze the bottleneck of deep GNNs by leveraging the Dirichlet energy of node embeddings, and propose a generalizable principle to guide the training of deep GNNs. Based on it, a novel deep GNN framework – EGNN is designed. It could provide lower and upper constraints in terms of Dirichlet energy at each layer to avoid over-smoothing. Experimental results demonstrate that EGNN achieves state-of-the-art performance by using deep layers.

1 Introduction

Graph neural networks (GNNs) [1] are promising deep learning tools to analyze networked data, such as social networks [2, 3, 4], academic networks [5, 6, 7], and molecular graphs [8, 9, 10]. Based on spatial graph convolutions, GNNs apply a recursive aggregation mechanism to update the representation of each node by incorporating representations of itself and its neighbors [11]. A variety of GNN variations have been explored for different real-world networks and applications [12, 13].

A key limitation of GNNs is that when we stack many layers, the performance would decrease significantly. Experiments show that GNNs often achieve the best performance with less than layers [14, 12]. As the layer number increases, the node representations will converge to indistinguishable vectors due to the recursive neighborhood aggregation and non-linear activation [15, 16]. Such phenomenon is recognized as over-smoothing issue [17, 18, 19, 20, 21]. It prevents the stacking of many layers and modeling the dependencies to high-order neighbors.

A number of algorithms have been proposed to alleviate the over-smoothing issue and construct deep GNNs, including embedding normalization [22, 23, 24], residual connection [25, 26, 27] and random data augmentation [28, 29, 30]. However, some of them are motivated directly by techniques in convolutional neural networks (CNNs) [31], such as the embedding normalization and residual connection. Others are based on heuristic strategies, such as random embedding propagation [29] and dropping edge [28]. Most of them only achieve comparable or even worse performance compared to their shallow models. Recently, a metric of Dirichlet energy has been applied to quantify the over-smoothing [32], which is based on measuring node pair distances. With the increasing of layers, the Dirichlet energy converges to zero since node embeddings become close to each other. But there is a lack of methods to leverage this metric to overcome the over-smoothing issue.

Therefore, it remains a non-trivial task to train a deep GNN architecture due to three challenges. First, the existing efforts are developed from diverse perspectives, without a generalizable principle and analysis. The abundance of these components also makes the design of deep GNNs challenging, i.e., how should we choose a suitable one or combinations for real-world scenarios? Second, even if an effective indicator of over-smoothing is given, it is hard to theoretically analyze the bottleneck and propose a generalizable principle to guide the training of deep GNNs. Third, even if theoretical guidance is given, it may be difficult to be utilized and implemented to train GNNs empirically.

To this end, in this paper, we target to develop a generalizable framework with a theoretical basis, to handle the over-smoothing issue and enable effective deep GNN architectures. In particular, we will investigate two research questions. 1) Is there a theoretical and generalizable principle to guide the architecture design and training of deep GNNs? 2) How can we develop an effective architecture to achieve state-of-the-art performance by stacking a large number of layers? Following these questions, we make three major contributions as follows.

-

•

We propose a generalizable principle – Dirichlet energy constrained learning, to guide the training of deep GNNs by regularizing Dirichlet energy. Without proper training, the Dirichlet energy would be either too small due to the over-smoothing issue, or too large when the node embeddings are over-separating. Our principle carefully defines an appropriate range of Dirichlet energy at each layer. Being regularized within this range, a deep GNN model could be trained by jointly optimizing the task loss and energy value.

-

•

We design a novel deep architecture – Energetic Graph Neural Networks (EGNN). It follows the proposed principle and could efficiently learn an optimal Dirichlet energy. It consists of three components, i.e., orthogonal weight controlling, lower-bounded residual connection, and shifted ReLU (SReLU) activation. The trainable weights at graph convolutional layers are orthogonally initialized as diagonal matrices, whose diagonal values are regularized to meet the upper energy limit during training. The residual connection strength is determined by the lower energy limit to avoid the over-smoothing. While the widely-used ReLU activation causes the extra loss of Dirichlet energy, the linear mapping worsens the learning ability of GNN. We apply SReLU with a trainable shift to provide a trade-off between the non-linear and linear mappings.

-

•

We show that the proposed principle and EGNN can well explain most of the existing techniques for deep GNNs. Empirical results demonstrate that EGNN could be easily trained to reach layers and achieves surprisingly competitive performance on benchmarks.

2 Problem Statement

Notations.

Given an undirected graph consisting of nodes, it is represented as , where denotes the adjacency matrix and denotes the feature matrix. Let and be the adjacency and degree matrix of the graph augmented with self-loops. The augmented normalized Laplacian is then given by , where is an augmented normalized adjacency matrix used for the neighborhood aggregation in GNN models.

Node classification task.

GNNs have been adopted in many applications [6, 33, 34]. Without loss of generality, we take node classification as an example. Given a graph and a set of its nodes with labels for training, the goal is to predict the labels of nodes in a test set.

We now use the graph convolutional network (GCN) [14] as a typical example, to illustrate how traditional GNNs perform the network analysis task. Formally, the layer-wise forward-propagation operation in GCN at the -th layer is defined as:

| (1) |

and are node embedding matrices at layers and , respectively; denotes trainable weights used for feature transformation; denotes an activation function such as ReLU; at the initial layer of GCN. The embeddings at the final layer are optimized with a node classification loss function, e.g., cross-entropy loss. The recursive neighborhood aggregation in Eq. (1) will make node embeddings similar to each other as the number of layer increases. This property, i.e., over-smoothing, prevents traditional GNNs from exploring neighbors many hops away. In practice, dependencies to high-order neighbors are important to the node classification. The traditional shallow GNNs may have sub-optimal performances in the downstream tasks [15, 27].

3 Dirichlet Energy Constrained Learning

In this paper, we aim to develop an effective principle to alleviate the over-smoothing issue and enable deep GNNs to leverage the high-order neighbors. We first theoretically analyze the over-smoothing issue, and then provide a principle to explain the key constraint in training deep GNNs.

Node pair distance has been widely adopted to quantify the over-smoothing based on embedding similarities [18, 22]. Among the series of distance metrics, Dirichlet energy is simple and expressive for the over-smoothing analysis [32]. Thus, we adopt Dirichlet energy and formally define it as below.

Definition 1.

Given node embedding matrix learned from GCN at the -th layer, the Dirichlet energy is defined as follows:

| (2) |

where denotes trace of a matrix; is edge weight given by the -th element in matrix ; is node degree given by the -th diagonal element in matrix . Dirichlet energy reveals the embedding smoothness with the weighted node pair distance. While a smaller value of is highly related to the over-smoothing, a larger one indicates that the node embeddings are over-separating even for those nodes with the same label. Considering the node classification task, one would prefer to have an appropriate Dirichlet energy at each layer to separate the nodes of different classes while keeping those of the same class close. However, under some conditions, the upper bound of Dirichlet energy is theoretically proved to converge to in the limit of infinite layers [32]. In other words, all nodes converge to a trivial fixed point in the embedding space.

Based on the previous analysis, we derive the corresponding lower bound and revisit the over-smoothing/separating problem from the model design and training perspectives. To simplify the derivation process, we remove the non-linear activation , and re-express GCN as: . The impact of non-linear function will be considered in the model design.

Lemma 1.

The Dirichlet energy at the -th layer is bounded as follows:

| (3) |

The detailed proof is provided in the Appendix. and are the non-zero eigenvalues of matrix that are most close to values and , respectively. and are the squares of minimum and maximum singular values of weight , respectively. Note that the eigenvalues of vary with the real-world graphs, and locate within range . We relax the above bounds as blow.

Lemma 2.

The lower and upper bounds of Dirichlet energy at the -th layer could be relaxed as:

| (4) |

Besides the uncontrollable eigenvalues determined by the underlying graph, it is shown that the Dirichlet energy can be either too small or too large without proper design and training on weight . On one hand, based on the common Glorot initialization [35] and L2 regularization, we empirically find that some of the weight matrices approximate to zero in a deep GCN. The corresponding square singular values are hence close to zero in these intermediate layers. That means the Dirichlet energy will become zero at the higher layers of GCN and causes the over-smoothing issue. On the other hand, without the proper weight initialization and regularization, a large may lead to the energy explosion and the over-separating.

The Dirichlet energy plays a key role in training a deep GNN model. However, the optimal value of Dirichlet energy varies in the different layers and applications. It is hard to be specified ahead and then enforces the node representation learning. Therefore, we propose a principle – Dirichlet energy constrained learning, defined in Proposition 1. It provides appropriate lower and upper limits of Dirichlet energy. Regularized by such a given range, a deep GNN model could be trained by jointly optimizing the node classification loss and Dirichlet energy at each layer.

Proposition 1.

Dirichlet energy constrained learning defines the lower & upper limits at layer as:

| (5) |

We apply the transformed initial feature through trainable function : . Both and are positive hyperparameters. From interval , hyperparameter is selected by satisfying constraint of . In such a way, the over-smoothing is overcome since the Dirichlet energies of all the layers are larger than appropriate limits related to . Compared with the initial transformed feature , the intermediate node embeddings of the same class are expected to be merged closely to have a smaller Dirichlet energy and facilitate the downstream applications. Therefore, we exploit the upper limit to avoid over-separating, where is usually selected from . In the experiment part, we show that the optimal energy value accompanied with the minimized classification loss locates within the above range at each layer. Furthermore, hyperparameters and could be easily selected from large appropriate scopes, which do not affect the model performance.

Given both the low and upper limits, an intuitive solution to search the optimal energy is to train GNN by optimizing the following constrained problem:

| (6) |

denotes the cross-entropy loss of node classification task; is layer number of GNN; denotes Frobenius norm of a matrix; and is loss hyperparameter.

4 Energetic Graph Neural Networks - EGNN

It is non-trivial to optimize Problem (6) due to the expensive computation of . Furthermore, the numerous constraints make the problem a very complex optimization hyper-planes, at which the raw task objective tends to fall into local optimums. Instead of directly optimizing Problem (6), we propose an efficient model EGNN to satisfy the constrained learning from three perspectives: weight controlling, residual connection and activation function. We introduce them one by one as follows.

4.1 Orthogonal Weight Controlling

According to Lemma , without regularizing the maximum square singular value of matrix , the upper bound of Dirichlet energy can be larger than the upper limit, i.e., . That means the Dirichlet energy of a layer may break the upper limit of constrained learning, and makes Problem (6) infeasible. In this section, we show how to satisfy such limit by controlling the singular values during weight initialization and model regularization.

Orthogonal initialization.

Since the widely-used initialization methods (e.g., Glorot initialization) fail to restrict the scopes of singular values, we adopt the orthogonal approach that initializes trainable weight as a diagonal matrix with explicit singular values [36]. To restrict and meet the constrained learning, we apply an equality constraint of at each layer. Based on this condition, we derive Proposition to initialize those weights and their square singular values for all the layers of EGNN, and give Lemma to show how we can satisfy the upper limit of constrained learning. The detailed derivation and proof are listed in Appendix.

Proposition 2.

At the first layer, weight is initialized as a diagonal matrix , where is identity matrix with dimension and the square singular values are . At the higher layer , weight is initialized with an identity matrix , where the square singular values are .

Lemma 3.

Based on the above orthogonal initialization, at the starting point of training, the Dirichlet energy of EGNN satisfies the upper limit at each layer : .

Orthogonal regularization.

However, without proper regularization, the initialized weights cannot guarantee they will still satisfy the constrained learning during model training. Therefore, we propose a training loss that penalizes the distances between the trainable weights and initialized weights or . To be specific, we modify the optimization problem (6) as follows:

| (7) |

Comparing with the original problem (6), we instead use the weight penalization to meet the upper limit of constrained learning, and make the model training efficient. While a larger highly regularizes the trainable weights around the initialized ones to satisfy the constrained learning, a smaller assigns the model more freedom to adapt to task data and optimize the node classification loss.

4.2 Lower-bounded Residual Connection

Although the square singular values are initialized and regularized properly, we may still fail to guarantee the lower limit of constrained learning in some specific graphs. According to Lemma , the lower bound of Dirichlet energy is . In the real-world applications, eigenvalue may exactly equal to and relaxes the lower bound as zero as shown in Lemma . For example, in Erdős–Rényi graph with dense connections [37], the eigenvalues of matrix converge to with high probability [16]. Even though , the Dirichlet energy can be smaller than the lower limit and leads to the over-smoothing. To tackle this problem, we adopt residual connections to the initial layer and the previous layer . To be specific, we define the residual graph convolutions as:

| (8) |

and are residual connection strengths determined by the lower limit of constrained learning, i.e., . We are aware that the residual technique has been used before to set up deep GNNs [25, 38, 27]. However, they either apply the whole residual components, or combine an arbitrary fraction without theoretical insight. Instead, we use an appropriate residual connection according to the lower limit of Dirichlet energy. In the experiment part, we show that while a strong residual connection overwhelms information in the higher layers and reduces the classification performance, a weak one will lead to the over-smoothing. In the following, we justify that both the lower and upper limits in the constrained learning can be satisfied with the proposed lower-bounded residual connection. The detailed proofs are provided in Appendix.

Lemma 4.

Suppose that . Based upon the orthogonal controlling and residual connection, the Dirichlet energy of initialized EGNN is larger than the lower limit at each layer , i.e., .

Lemma 5.

Suppose that . Being augmented with the orthogonal controlling and residual connection, the Dirichlet energy of initialized EGNN is smaller than the upper limit at each layer , i.e., .

4.3 SReLU Activation

Note that the previous theoretical analysis and model design are conducted by ignoring the activation function, which is usually given by ReLU in GNN. In this section, we first theoretically discuss the impact of ReLU on the Dirichlet energy, and then demonstrate the appropriate choice of activation.

Lemma 6.

We have if activation function is ReLU or Leaky-ReLU [32].

It is shown that the application of ReLU further reduces the Dirichlet energy, since the negative embeddings are non-linearly mapped to zero. Although the trainable weights and residual connections are properly designed, the declining Dirichlet energy may violate the lower limit. On the other hand, a simplified GNN with linear identity activation will have limited model learning ability although it does not change the energy value. For example, simple graph convolution (SGC) model achieves comparable performance with the traditional GCN only with careful hyperparameter tuning [39]. We propose to apply SReLU to achieve a good trade-off between the non-linear and linear activations [40, 41]. SReLU is defined element-wisely as:

| (9) |

where is a trainable shift shared for each feature dimension of . SReLU interpolates between the non-linearity and linearity depending on shift . While the linear identity activation is approximated if is close to , the non-linear mapping is activated if node embedding is smaller than the specific . In our experiments, we initialize with a negative value to provide an initial trade-off, and adapt it to the given task by back-propagating the training loss.

4.4 Connections to Previous Work

Recently, various techniques have been explored to enable deep GNNs [15, 23, 29]. Some of them are designed heuristically from diverse perspectives, and others are analogous to CNN components without theoretical insight tailored to graph analytics. In the following, we show how our principle and EGNN explain the existing algorithms, and expect to provide reliable theoretical guidance to the future design of deep GNNs.

Embedding normalization.

The general normalization layers, such as pair [22], batch [24] and group [23] normalizations, have been used to set up deep GNNs. The pair normalization (PairNorm) aims to keep the node pair distances as a constant in the different layers, and hence relieves the over-smoothing. Motivated from CNNs, the batch and group normalizations re-scale the node embeddings of a batch and a group, respectively. Similar to the operation in PairNorm, they learn to maintain the node pair distance in the node batch or group. The adopted Dirichlet energy is also a variant of the node pair distance. The existing normalization methods can be regarded as training GNN model with a constant energy constraint. However, this will prevent GNN from optimizing the energy as analyzed in Section 3. We instead regularize it within the lower and upper energy limits, and let model discover the optimum.

Dropping edge.

As a data augmentation method, dropping edge (DropEdge) randomly masks a fraction of edges at each epoch [28]. It makes graph connections sparse and relieves the over-smoothing by reducing information propagation. Specially, the contribution of DropEdge could be explained from the perspective of Dirichlet energy. In Erdős–Rényi graph, eigenvalue converges to if the graph connections are more and more dense [16]. DropEdge reduces the value of , and helps improve the upper bound of Dirichlet energy to slow down the energy decreasing speed. In the extreme case where all the edges are dropped in any a graph, Laplacian becomes a zero matrix. As a result, we have eigenvalue of zero and maximize the upper bound. In practice, the dropping rate has to be determined carefully depending on various tasks. Instead, our principle assigns model freedom to optimize the Dirichlet energy within a large and appropriate range.

Residual connection.

Motivated from CNNs, residual connection has been applied to preserve the previous node embeddings and relieve the over-smoothing. Especially, the embedding from the last layer is reused and combined completely in related work [25, 42, 43]. A fraction of the initial embedding is preserved in model GCNII [27] and APPNP [44]. Networks JKNet [26] and DAGNN [45] aggregate all the previous embeddings at the final layers. The existing work uses the residua connection empirically. In this work, we derive and explain the residual connection to guarantee the lower limit of Dirichlet energy. By modifying hyperparameter , our EGNN can easily evolve to the existing deep residual GNNs, such as GCNII and APPNP.

Model simplification.

Model SGC [39] removes all the activation and trainable weights to avoid over-fitting issue, and simplifies the training of deep GNNs. It is equivalent to EGNN with and , where weights and shifts are remained as constants. Such simplification will reduce the model learning ability. As shown in Eq. (7), we adopt loss hyperparameter to learn the trade-off between maintaining the orthogonal weights or updating them to model data characteristics.

5 Experiments

In this section, we empirically evaluate the effectiveness of EGNN on real-world datasets. We aim to answer the following questions. Q1: How does our EGNN compare with the state-of-the-art deep GNN models? Q2: Whether or not the Dirichlet energy at each layer of EGNN satisfies the constrained learning? Q3: How does each component of EGNN affect the model performance? Q4: How do the model hyperparameters impact the performance of EGNN?

5.1 Experiment Setup

Datasets.

Baselines.

Implementation.

We implement all the baselines using Pytorch Geometric [49] based on their official implementations. The model hyperparameters are reused according to the public papers or are fine-tuned by ourselves if the classification accuracy could be further improved. Specially, we apply max-pooling to obtain the final node representation at the last layer of JKNet. In Ogbn-arxiv, we additionally include batch normalization between the successive layers in all the considered GNN models except PairNorm. Although more tricks (e.g., label reusing and linear transformation as listed in leader board) could be applied to improve node classification in Ogbn-arxiv, we focus on comparing the original GNN models in enabling deep layer stacking. The training hyperparameters are carefully set by following the previous common setting and are listed in Appendix.

We implement our EGNN upon GCN, except for the components of weight initialization and regularization, lower-bounded residual connection and SReLU. We choose hyperparameters , , and based on the validation set. For the weight initialization, we set to be for all the datasets; that is, the trainable weights are initialized as identity matrices at all the graph convolutional layers. The loss hyperparameter is in Cora, Pubmed and Coauthor-Physics to strictly regularize towards the orthogonal matrix; and it is in Ogbn-arxiv to improve the model’s learning ability. For the lower-bounded residual connection, we choose residual strength from range and list the details in Appendix. The trainable shift is initialized with in Cora and Pubmed; it is initialized to and in Coauthor-Physics and Ogbn-arxiv, respectively. We also study these hyperparameters in the following experiments. All the experiment results are the averages of runs.

| Datasets | Cora | Pubmed | Coauthors-Physics | Ogbn-arxiv | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Layer Num | ||||||||||||

| GCN | ||||||||||||

| PairNorm | ||||||||||||

| DropEdge | ||||||||||||

| SGC | ||||||||||||

| JKNet | ||||||||||||

| APPNP | ||||||||||||

| GCNII | ||||||||||||

| EGNN | ||||||||||||

5.2 Experiment Results

Node classification results.

To answer research question Q1, Table 1 summarizes the test classification accuracies. Each accuracy is averaged over random trials. We report the results with layers for Cora and Pubmed, and layers for Coauthor-Physics and Ogbn-arxiv.

We observe that our EGNN generally outperforms all the baselines across the four datasets, especially in the deep cases (). Notably, the node classification accuracy is consistently improved with the layer stacking in EGNN until or , which demonstrates the benefits of deep graph neural architecture to leverage neighbors multiple hops away. While the state-of-the-art models PairNorm, DropEdge, SGC, JKNet, and APPNP alleviate the over-smoothing issue to some extend, their performances still drop with the increasing of layers. Most of their /-layer models are even worse than their corresponding shallow versions. As the most competitive deep architecture in literature, GCNII augments the transformation matrix as , where is a hyperparameter to preserve the identity mapping and enhance the minimum singular value of the augmented weight. Instead of explicitly defining the strength of identity mapping, we propose the orthogonal weight initialization based on the upper limit of Dirichlet energy and apply the orthogonal weight regularization. Based on Eq. (7), EGNN automatically learns the optimal trade-off between identity mapping and task adaption. Furthermore, we use SReLU activation and the residual connection to theoretically control the lower limit of Dirichlet energy. The experimental results show that EGNN not only outperforms GCNII in the small graphs Cora, Pubmed and Coauthor-Physics, but also delivers significantly superior performance in the large graph Obgn-arxiv, achieving improvement over GCNII with 32 layers.

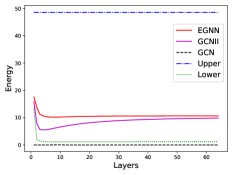

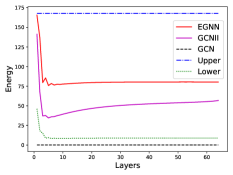

Dirichelet energy visualization.

To answer research question Q2, we show the Dirichlet energy at each layer of a -layer EGNN in Cora and Pubmed datasets in Figure 1. We only plot GCN and GCNII for better visualization purposes. For other methods, the Dirichlet energy is either close to zero or overly large due to the over-smoothing issue or over-separating issue of node embeddings, respectively.

It is shown that the Dirichlet energies of EGNN are strictly constrained within the range determined by the lower and upper limits of the constrained learning. Due to the over-smoothing issue in GCN, all the node embeddings converge to zero vectors. GCNII has comparable or smaller Dirichlet energy by carefully and explicitly designing both the initial connection and identity mapping strengths. In contrast, our EGNN only gives the appropriate limits of Dirichlet energy, and let the model learn the optimal energy at each layer for a specific task. The following hyperparameter studies will show that the values of and could be easily selected from a large appropriate range.

| Component | Type | Cora | Pubmed | Coauthors-Physics | Ogbn-arxiv | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Weight | Glorot | ||||||||||||

| initialization | Orthogonal | ||||||||||||

| Lower | |||||||||||||

| limit setting | |||||||||||||

| Activation | Linear | ||||||||||||

| SReLU | |||||||||||||

| ReLU | |||||||||||||

Ablation studies of EGNN components.

To demonstrate how each component affects the training of graph neural architecture and answer research question Q3, we perform the ablation experiments with EGNN on all the datasets. For the component of orthogonal weight initialization and regularization, we compare and replace them with the traditional Glorot initialization and Frobenius norm regularization as shown in Eq. (6). Considering the component of lower-bounded residual connection, we vary the lower limit hyperparameter from , and . Within the range of , the adoption of specific values is specified for each dataset in Appendix. The component of the activation function is studied from candidates of linear identity activation, SReLU, and ReLU. Table 2 reports the results of the above ablation studies.

The orthogonal weight initialization and regularization are crucial to train the deep graph neural architecture. In Cora, Pubmed, and Coauthor-Physics, Glorot initialization and Frobenius norm regularization fail to control the singular values of trainable weights, which may lead to overly large or small Dirichlet energy and affect the node classification performance. In Ogbn-arxiv, the input node features are described by dense word embeddings of a paper [50], where the trainable weights in GNN are required to capture data statistics and optimize the classification task. EGNN applies a small loss hyperparameter of to let the model adapt to the given task, which is equivalent to the traditional regularization. Therefore, the two approaches have comparable performances.

An appropriate lower limit could enable the deep EGNN. While the Dirichlet energy may approach zero without the residual connection, the overwhelming residual information with prevents the higher layer from learning the new neighborhood information. Within the large and appropriate range of , could be easily selected to achieve superior performance.

Activation SReLU performs slightly better than the linear identity activation and ReLU. This is because SReLU could automatically learn the trade-off between linear and non-linear activations, which prevents the significant dropping of Dirichlet energy and ensures the model learning ability.

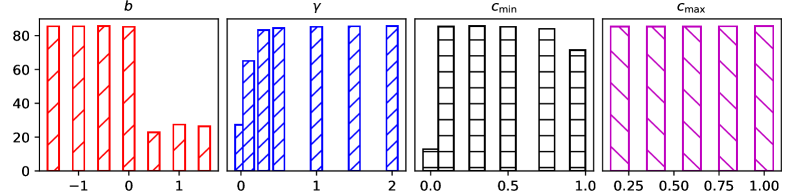

Hyperparameter analysis.

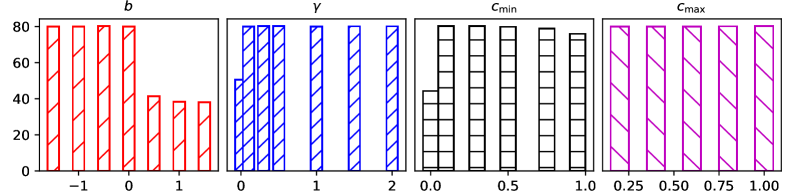

To understand the hyperparameter impacts on a -layer EGNN and answer research question Q4, we conduct experiments with different values of initial shift , loss factor , lower limit factor and upper one . We present the hyperparameter study in Figure 2 for Cora, and show the others with similar tendencies in Appendix.

We observe that our method is not sensitive to the choices of , , and in a wide range. The initial shift value should be , in order to avoid the overly nonlinear mapping and Dirichlet energy damage. The loss factor within the range is applied to regularize the trainable weight around the orthogonal matrix to avoid the explosion or vanishing of Dirichlet energy. within the appropriate range allows the model to expand neighborhood size and preserve residual information to avoid the over-smoothing. As shown in Figure 1, since energy at the hidden layer is much smaller than from the input layer, we could easily satisfy the upper limit with in a large range . Given these large hyperparameter ranges, EGNN could be easily trained with deep layers.

6 Conclusions

In this paper, we propose a Dirichlet energy constrained learning principle to show the importance of regularizing the Dirichlet energy at each layer within reasonable lower and upper limits. Such energy constraint is theoretically proved to help avoid the over-smoothing and over-separating issues. We then design EGNN based on our theoretical results and empirically demonstrate that the constrained learning plays a key role in guiding the design and training of deep graph neural architecture. The detailed analysis is presented to illustrate how our principle connects and combines the previous deep methods. The experiments on benchmarks show that EGNN could be easily trained to achieve superior node classification performances with deep layer stacking. We believe that the constrained learning principle will help discover deeper and more powerful GNNs in the future.

References

- [1] Yujia Li, Daniel Tarlow, Marc Brockschmidt, and Richard Zemel. Gated graph sequence neural networks. arXiv preprint arXiv:1511.05493, 2015.

- [2] Rex Ying, Ruining He, Kaifeng Chen, Pong Eksombatchai, William L Hamilton, and Jure Leskovec. Graph convolutional neural networks for web-scale recommender systems. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 974–983, 2018.

- [3] Xiao Huang, Qingquan Song, Yuening Li, and Xia Hu. Graph recurrent networks with attributed random walks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 732–740, 2019.

- [4] Wenqi Fan, Yao Ma, Qing Li, Yuan He, Eric Zhao, Jiliang Tang, and Dawei Yin. Graph neural networks for social recommendation. In The World Wide Web Conference, pages 417–426, 2019.

- [5] Hongyang Gao, Zhengyang Wang, and Shuiwang Ji. Large-scale learnable graph convolutional networks. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 1416–1424, 2018.

- [6] Hongyang Gao and Shuiwang Ji. Graph u-nets. In international conference on machine learning, pages 2083–2092. PMLR, 2019.

- [7] Kaixiong Zhou, Qingquan Song, Xiao Huang, and Xia Hu. Auto-gnn: Neural architecture search of graph neural networks. arXiv preprint arXiv:1909.03184, 2019.

- [8] Justin Gilmer, Samuel S Schoenholz, Patrick F Riley, Oriol Vinyals, and George E Dahl. Neural message passing for quantum chemistry. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pages 1263–1272. JMLR. org, 2017.

- [9] Marinka Zitnik and Jure Leskovec. Predicting multicellular function through multi-layer tissue networks. Bioinformatics, 33(14):i190–i198, 2017.

- [10] Federico Monti, Michael M Bronstein, and Xavier Bresson. Geometric matrix completion with recurrent multi-graph neural networks. arXiv preprint arXiv:1704.06803, 2017.

- [11] Will Hamilton, Zhitao Ying, and Jure Leskovec. Inductive representation learning on large graphs. In NeuIPS, pages 1024–1034, 2017.

- [12] Petar Velickovic, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. Graph attention networks. arXiv, 1(2), 2017.

- [13] Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. How powerful are graph neural networks? arXiv preprint arXiv:1810.00826, 2018.

- [14] Thomas N Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. ICLR, 2017.

- [15] Qimai Li, Zhichao Han, and Xiao-Ming Wu. Deeper insights into graph convolutional networks for semi-supervised learning. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- [16] Kenta Oono and Taiji Suzuki. Graph neural networks exponentially lose expressive power for node classification. In International Conference on Learning Representations, 2020.

- [17] Hoang NT and Takanori Maehara. Revisiting graph neural networks: All we have is low-pass filters. arXiv preprint arXiv:1905.09550, 2019.

- [18] Deli Chen, Yankai Lin, Wei Li, Peng Li, Jie Zhou, and Xu Sun. Measuring and relieving the over-smoothing problem for graph neural networks from the topological view. arXiv preprint arXiv:1909.03211, 2019.

- [19] Uri Alon and Eran Yahav. On the bottleneck of graph neural networks and its practical implications. arXiv preprint arXiv:2006.05205, 2020.

- [20] Eli Chien, Jianhao Peng, Pan Li, and Olgica Milenkovic. Adaptive universal generalized pagerank graph neural network. In International Conference on Learning Representations. https://openreview. net/forum, 2021.

- [21] Wenbing Huang, Yu Rong, Tingyang Xu, Fuchun Sun, and Junzhou Huang. Tackling over-smoothing for general graph convolutional networks. arXiv e-prints, pages arXiv–2008, 2020.

- [22] Lingxiao Zhao and Leman Akoglu. Pairnorm: Tackling oversmoothing in gnns. arXiv preprint arXiv:1909.12223, 2019.

- [23] Kaixiong Zhou, Xiao Huang, Yuening Li, Daochen Zha, Rui Chen, and Xia Hu. Towards deeper graph neural networks with differentiable group normalization. Advances in Neural Information Processing Systems, 33, 2020.

- [24] Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. ICML, 2015.

- [25] Guohao Li, Matthias Muller, Ali Thabet, and Bernard Ghanem. Deepgcns: Can gcns go as deep as cnns? In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 9267–9276, 2019.

- [26] Keyulu Xu, Chengtao Li, Yonglong Tian, Tomohiro Sonobe, Ken-ichi Kawarabayashi, and Stefanie Jegelka. Representation learning on graphs with jumping knowledge networks. In International Conference on Machine Learning, pages 5453–5462. PMLR, 2018.

- [27] Ming Chen, Zhewei Wei, Zengfeng Huang, Bolin Ding, and Yaliang Li. Simple and deep graph convolutional networks. arXiv preprint arXiv:2007.02133, 2020.

- [28] Yu Rong, Wenbing Huang, Tingyang Xu, and Junzhou Huang. Dropedge: Towards deep graph convolutional networks on node classification. In International Conference on Learning Representations. https://openreview. net/forum, 2020.

- [29] Wenzheng Feng, Jie Zhang, Yuxiao Dong, Yu Han, Huanbo Luan, Qian Xu, Qiang Yang, Evgeny Kharlamov, and Jie Tang. Graph random neural networks for semi-supervised learning on graphs. Advances in Neural Information Processing Systems, 33, 2020.

- [30] Arman Hasanzadeh, Ehsan Hajiramezanali, Shahin Boluki, Mingyuan Zhou, Nick Duffield, Krishna Narayanan, and Xiaoning Qian. Bayesian graph neural networks with adaptive connection sampling. In International Conference on Machine Learning, pages 4094–4104. PMLR, 2020.

- [31] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, pages 770–778, 2016.

- [32] Chen Cai and Yusu Wang. A note on over-smoothing for graph neural networks. arXiv preprint arXiv:2006.13318, 2020.

- [33] Kaixiong Zhou, Qingquan Song, Xiao Huang, Daochen Zha, Na Zou, and Xia Hu. Multi-channel graph neural networks. arXiv preprint arXiv:1912.08306, 2019.

- [34] Muhan Zhang and Yixin Chen. Link prediction based on graph neural networks. arXiv preprint arXiv:1802.09691, 2018.

- [35] Xavier Glorot and Yoshua Bengio. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the thirteenth international conference on artificial intelligence and statistics, pages 249–256, 2010.

- [36] Quoc V Le, Navdeep Jaitly, and Geoffrey E Hinton. A simple way to initialize recurrent networks of rectified linear units. arXiv preprint arXiv:1504.00941, 2015.

- [37] Paul Erdős and Alfréd Rényi. On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci, 5(1):17–60, 1960.

- [38] Guohao Li, Chenxin Xiong, Ali Thabet, and Bernard Ghanem. Deepergcn: All you need to train deeper gcns. arXiv preprint arXiv:2006.07739, 2020.

- [39] Felix Wu, Tianyi Zhang, Amauri Holanda de Souza Jr, Christopher Fifty, Tao Yu, and Kilian Q Weinberger. Simplifying graph convolutional networks. arXiv preprint arXiv:1902.07153, 2019.

- [40] Sitao Xiang and Hao Li. On the effects of batch and weight normalization in generative adversarial networks. arXiv preprint arXiv:1704.03971, 2017.

- [41] Haozhi Qi, Chong You, Xiaolong Wang, Yi Ma, and Jitendra Malik. Deep isometric learning for visual recognition. In International Conference on Machine Learning, pages 7824–7835. PMLR, 2020.

- [42] Lei Chen, Le Wu, Richang Hong, Kun Zhang, and Meng Wang. Revisiting graph based collaborative filtering: A linear residual graph convolutional network approach. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pages 27–34, 2020.

- [43] Kuangqi Zhou, Yanfei Dong, Kaixin Wang, Wee Sun Lee, Bryan Hooi, Huan Xu, and Jiashi Feng. Understanding and resolving performance degradation in graph convolutional networks, 2020.

- [44] Johannes Klicpera, Aleksandar Bojchevski, and Stephan Günnemann. Predict then propagate: Graph neural networks meet personalized pagerank. arXiv preprint arXiv:1810.05997, 2018.

- [45] Meng Liu, Hongyang Gao, and Shuiwang Ji. Towards deeper graph neural networks. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 338–348, 2020.

- [46] Zhilin Yang, William W Cohen, and Ruslan Salakhutdinov. Revisiting semi-supervised learning with graph embeddings. arXiv preprint arXiv:1603.08861, 2016.

- [47] Oleksandr Shchur, Maximilian Mumme, Aleksandar Bojchevski, and Stephan Günnemann. Pitfalls of graph neural network evaluation. arXiv preprint arXiv:1811.05868, 2018.

- [48] Weihua Hu, Matthias Fey, Marinka Zitnik, Yuxiao Dong, Hongyu Ren, Bowen Liu, Michele Catasta, and Jure Leskovec. Open graph benchmark: Datasets for machine learning on graphs. arXiv preprint arXiv:2005.00687, 2020.

- [49] Matthias Fey and Jan E. Lenssen. Fast graph representation learning with PyTorch Geometric. In ICLR Workshop, 2019.

- [50] Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg Corrado, and Jeffrey Dean. Distributed representations of words and phrases and their compositionality. arXiv preprint arXiv:1310.4546, 2013.

Appendix A Appendix

A.1 Dataset Statistics

We conduct experiments on four benchmark graph datasets, including Cora, Pubmed, Coauthor-Physics and Ogbn-arxiv. They are widely used to study the over-smoothing issue and test the performance of deep GNNs. We use the public train/validation/test split in Cora and Pubmed, and randomly split Coauthor-Physics by following the previous practice. Their data statistics are summarized in Table 3.

| Datasets | # Nodes | # Edges | # Classes | # Features | # Train/Validation/Test nodes | Setting |

|---|---|---|---|---|---|---|

| Cora | 2,708 | 5,429 | 7 | 1,433 | 140/500/1,000 | Transductive (one graph) |

| Pubmed | 19,717 | 44,338 | 3 | 500 | 60/500/1,000 | Transductive (one graph) |

| Coauthor-Physics | 34,493 | 247,962 | 5 | 8,415 | 100/150/34,243 | Transductive (one graph) |

| Ogbn-arxiv | 169,343 | 1,166,243 | 40 | 128 | 90,941/29,799/48,603 | Transductive (one graph) |

A.2 Baselines

To validate the effectiveness of the Dirichlet energy constrained learning principle and our EGNN on the node classification problem, we consider baseline GCN and other state-of-the-art deep GNNs based upon GCN. They are summarized as follows:

- •

-

•

PairNorm [22]. Based upon GCN, PairNorm is applied between the successive graph convolutional layers to normalize node embeddings and to alleviate the over-smoothing issue.

-

•

DropEdge [28]. It randomly removes a certain number of edges from the input graph at each training epoch, which reduces the convergence speed of over-smoothing.

-

•

SGC [39]. It simplies the vanilla GCN by removing all the hidden weights and activation functions, which could avoid the over-fitting issue in GCN.

-

•

Jumping knowledge network (JKNet) [26]. Based upon GCN, all the hidden node embeddings are combined at the last layer to adapt the effective neighborhood size for each node. Herein we apply max-pooling to combine the the series of node embeddings from the hidden layers.

-

•

Approximate personalized propagation of neural predictions (APPNP) [44]. It applies personalized PageRank to improve the message propagation scheme in vanilla GCN. Furthermore, APPNP simplifies model by removing the hidden weight and activation function and preserving a small fraction of initial embedding at each layer.

-

•

Graph convolutional network via initial residual and identity mapping (GCNII) [27]. It is an extension of the vanilla GCN model with two simple techniques at each layer: an initial connection to the input feature and an identity mapping added to the trainable weight.

A.3 Implementation Details

For each experiment, we train with a maximum of epochs using the Adam optimizer and early stopping. Following the previous common settings in the considered benchmarks, we list the key training hyperparameters for each of them in Table 4. All the experiment results are reported by the averages of independent runs.

| Dataset | Dropout rate | Weight decay (L2) | Learning rate | # Training epoch |

|---|---|---|---|---|

| Cora | ||||

| Pubmed | ||||

| Coauthor-Physics | ||||

| Ogbn-arxiv |

A.4 Lower Limit Setting

We carefully choose the lower limit hyperparameter from range for each dataset based on the classification performance and Dirichlet energy on the validation set. Note that we have the residual connection strengths and which satisfy constraint: . Specially, we use of (layer number ) and () in Cora, where in all the layer cases. We use of () and () in Pubmed, where and . We apply of in Coauthor-Physics, where and . We apply and of and when , respectively; we make use of and of and if , respectively. In these two cases, the residual connection strength to the last layer is .

A.5 Proof for Lemma 1

Lemma 1.

The Dirichlet energy at the -th layer is bounded as follows:

Proof.

By ignoring the activation function, we obtain the upper bound as below.

denotes the maximum eigenvalue of a matrix, and . Since , where is a feature matrix, we can obtain the inequality relationship: . In a similar way, we can also get the upper bound of .

Similarly, we derive the lower bound as below.

A.6 Derivation of Proposition 2

All the trainable weights at the graph convolutional layers of EGNN are initialized as the orthogonal diagonal matrices. At the first layer, the upper bound of Dirichlet energy is given by . Given constraint , we can obtain . The square singular values are then restricted as: . For layer , we further relax the upper bound as: . Note that at the first layer. Given constraint , we can obtain weight . The square singular values are restricted as: , and .

A.7 Proof for Lemma 3

Lemma 3.

Based on the above orthogonal initialization, at the starting point of training, the Dirichlet energy of EGNN satisfies the upper limit at each layer : .

Proof.

According to Lemma 2, the Dirichlet energy at layer is limited as:

A.8 Proof for Lemma 4

Lemma 4.

Suppose that . Based upon the orthogonal controlling and residual connection, the Dirichlet energy of initialized EGNN is larger than the lower limit at each layer , i.e., .

Proof.

To obtain the Dirichlet energy relationship between and , we first expand node embedding as the series summation in terms of the initial node embedding . We then re-express the graph convolution at layer , which is simplified to depend only on node embedding . As a result, we can easily derive Lemma 4. The detailed proofs are provided in the following.

According to Eq. (8), by ignoring the activation function , we obtain the residual graph convolution at layer as:

where is an identity matrix with dimension , and . We define , and then simply the above graph convolution as:

| (10) |

To facilitate the proof, we further expand the above graph convolution as the series summation in terms of the initial node embedding as:

where the weight matrix product is defined as: , and . Notably, in our EGNN, the trainable weight at the first layer is orthogonally initialized as diagonal matrix of , while at layer is initialized as identity matrix . Therefore, the series expansion of could be simplified as:

at the case . Note that is invertible if all the eigenvalues of matrix are not equal to zero, which could be achieved by selecting an appropriate depending on the downstream task. Let . We then represent the initial node embedding as: . Similarly, at layer . Therefore, we can re-express the graph convolution at layer in Eq. (10) as:

According to Lemma 1, the lower bound of Dirichlet energy at layer is given by:

denotes the minimum square eigenvalue of a matrix. To get the minimum square eigenvalue, we represent the eigenvalue decomposition of matrix as: , where is the eigenvector matrix and is the diagonal eigenvalue matrix. We then decompose as:

Let denote the eigenvalue of matrix . Recalling and . Since the eigenvalues of are within , we have . To ensure that is invertible, we could apply a larger value of to have . The square eigenvalue of matrix is:

It could be easily validated that . That means the square eigenvalue increases with the layer . Considering the extreme case of , we obtain . Since at layer , we thus obtain when . In practice, since approximates to one with the increasing of layer , the Dirichlet energy will be maintained as a constant at the higher layers of EGNN, which is empirically validated in Figure 1.

The minimum square eigenvalue is achieved when , i.e., , where and is close to . In this case, we obtain . At layer , we have . Since , to make sure at the first layer, we only need to satisfy the following condition:

Note that the square eigenvalue is increasing with , and for layer . At the higher layer , we have . Therefore, once the condition of is satisfied, we can obtain and for all the layers in EGNN.

A.9 Proof for Lemma 5

Lemma 5.

Suppose that . Being augmented with the orthogonal controlling and residual connection, the Dirichlet energy of initialized EGNN is smaller than the upper limit at each layer , i.e., .

Proof.

According to the proof of Lemma 4, we have . Based on Lemma 1, the upper bound of Dirichlet energy at layer is given by:

where is the maximum square eigenvalue of a matrix. According to the definition of and the eigenvalue decomposition of in the proof of Lemma 4, we decompose as:

Therefore, the square eigenvalue of is given by:

where denotes the eigenvalue of matrix . Recalling and . The maximum square eigenvalue is achieved when takes the largest value, i.e., , where is the non-zero eigenvalue of matrix that is most close to value . Therefore, we have . To ensure that for all the layers, we have to satisfy the condition of . Since , we simplify this condition in the followings:

Note that and . The above condition can be satisfied if . Note that . Therefore, if , we obtain for all the layers in EGNN. Such condition can be easily satisfied by adopting .

A.10 Proof for Lemma 6

Lemma 6.

We have if activation function is ReLU or Leaky-ReLU [32].

Proof.

Herein we directly adopt the proof from [32] to support the self-containing in this paper. Let , , and let , . We have the following relationships:

The first inequality holds for activation function whose Lipschitz constant is smaller than , including ReLU and Leaky-ReLU. The second equality holds because , and . Recalling the Dirichlet energy definition in Eq. (2): . By extending to the vector space and replacing , , , and with , , , and , respectively, we can obtain .

A.11 Hyperparameter Analysis

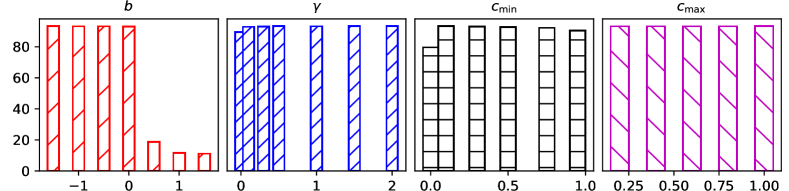

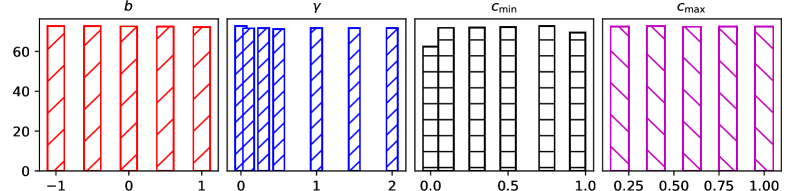

To further understand the hyperparameter impacts on EGNN and answer research question Q4, we conduct more experiments and show in Figures 3, 4 and 5 for Pubmed, Coauthor-Physics and Ogbn-arxiv, respectively.

Similar to the hyperparameter study on Cora, we observe that our method is consistently not sensitive to the choices of , , and within wide value ranges for all the datasets. The appropriate ranges of , , and are , , and , respectively. Specially, in the large graph of Ogbn-arxiv, our model could even has a large initialization range for . Given these wide hyperparameter ranges, EGNN could be easily constructed and trained with deep layers.