Directional Primitives for Uncertainty-Aware Motion Estimation in Urban Environments

Abstract

We can use driving data collected over long periods of time to extract rich information about how vehicles behave in different areas of a road network. In this paper, we introduce the concept of directional primitives, a representation of prior information about road networks. Specifically, we represent the uncertainty of directions using a mixture of von Mises distributions and the associated speeds using gamma distributions. These location-dependent primitives can be combined with motion information of surrounding vehicles to predict their future behavior in the form of probability distributions. Experiments conducted on highways, intersections, and roundabouts in the Carla simulator, as well as on real-world urban driving datasets, indicate that primitives lead to better uncertainty-aware motion estimation.

I Introduction

Autonomous vehicles will not only have to interact with one another but will also have to coexist with vehicles operated by humans. Hence, it is important for autonomous cars to learn the behavior of both human and robotic agents to maneuver safely in busy urban environments. Like a human driver, we expect autonomous cars to learn from experience. For instance, when we, as human drivers, approach an intersection, we can anticipate how surrounding vehicles would plausibly act. This prediction depends not only on their current behavior but also heavily on our previous experience of observing vehicle interactions at intersections.

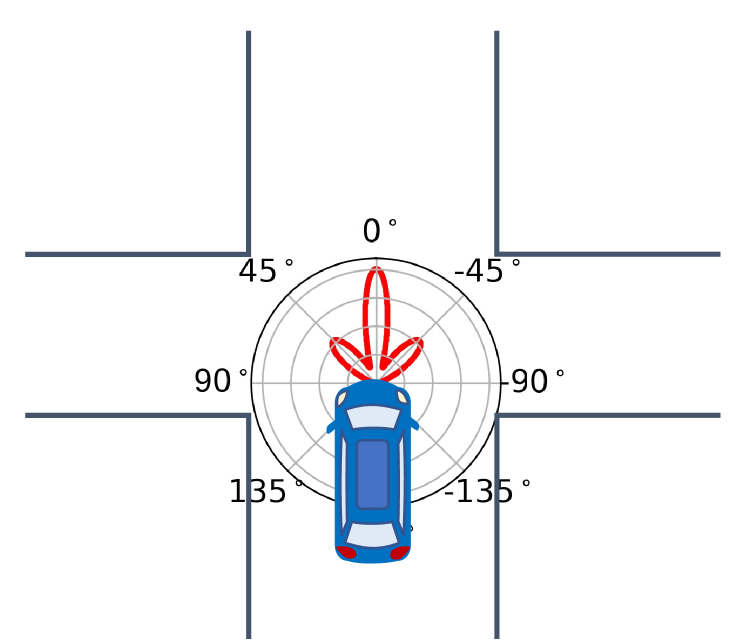

Modern vehicles can be used to collect large amounts of data that give insight into how drivers typically behave in various parts of a road network. Going beyond the capacities of human drivers, driverless cars can build lifelong models and learn from one another. Advances in vehicle-to-network (V2N) and vehicle-to-everything (V2X) technologies would support the communication this requires. The behavior of vehicles, both autonomous and human-driven, is highly variable and can be difficult to predict. Therefore, to build robust models of road networks and driver behavior, it is important to consider multimodal probabilistic formulations. For instance, as shown in Figure 1, when a car approaches a four-way intersection, there are three possible directions it could take. Our models should capture the uncertainty [1] in these future directions. If an autonomous car observes a vehicle ahead, it should be able to infer which directions the vehicle could turn at each position along its possible future trajectories. Such an understanding of the environment can be used to support safe decision-making.

In this paper, we propose directional primitives, a representation of prior multimodal directional uncertainties that are local to different segments of the road networks. Unlike previous approaches, our focus is to:

1. model the uncertainty in speed as well as directions,
2. associate prior knowledge with current knowledge to make accurate predictions, and
3. use prior information to generate possible trajectories.

II Background

II-A Primitives in robotics

The idea of incorporating prior information into likelihood models is widely discussed in Bayesian inference, which has various applications in robotics, including simultaneous localization and mapping, Bayesian filtering [2], and Bayesian networks for human-machine interaction [3]. Prior information about motion plays an important role in motor control in animals [4, 5]. Low-level motor skills known as movement primitives [6] and motion primitives have also been used successfully in robotics applications such as flight control [7] and motion planning [8, 9]. In an imitation learning setting for human-robot interaction, Campbell et al. [10] learn interactive motor skills using “interaction primitives.” The directional primitives we propose in this paper encode prior information about the possible directions of motion local to a given geographic area. A set of these low-level directional primitives can be used for high-level motion prediction and trajectory generation.

II-B Motion estimation

Various methods have been used to model the motion of vehicles and pedestrians [11, 12], predict occupancy levels [13, 14, 15], and predict trajectories [12, 16]. Much of this prior research frames these problems as supervised learning problems and typically does not take into account prior knowledge about location-specific agent behavior.

In contrast with the objective of motion prediction research, our focus is on incorporating high-level information about the behavior of observed vehicles into decision-making. For instance, if a vehicle in front of us is signaling to turn right at the intersection shown in Figure 1, we can express this signal as a directional probability distribution. This distribution can then be combined with the prior distributions, which specify the probabilities of the directions and speeds of vehicles observed in the past, to infer the possible future locations of the vehicle.

Estimating the current direction of moving objects has been studied by Senanayake and Ramos [17]. This work has been extended to the temporal domain using LSTMs [18]. While our proposed model could plausibly be extended to the temporal domain [19, 20], this paper focuses only on the spatial domain. None of these previous methods consider variation in speed, which is also crucial for accurate motion prediction.

III Directional primitives

III-A Location-dependent direction-speed priors

We model the uncertainty of directions at every location in a road network. The uncertainty of speeds associated with each mode of direction is also modeled.

III-A1 Uncertainty of directions

In order to model uncertainty effectively in real-world robotic applications such as autonomous driving, the stochasticity of directions must be modeled. Unlike most other physical quantities, directions pose several challenges when modeled with a probability density function: a density over directions must have bounded support whose two limits, $0$ and $2\pi$, represent the same direction.
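The wrap-around problem shows up even in a simple average: two headings just on either side of $0$ average to a direction pointing the opposite way under ordinary arithmetic, whereas averaging their unit vectors gives the expected result. The sketch below illustrates this in Python with NumPy; the function name `circular_mean` is our own and not part of the paper.

```python
import numpy as np

def circular_mean(angles):
    """Mean direction of angles in radians, wrapped into [0, 2*pi) up to rounding."""
    angles = np.asarray(angles, dtype=float)
    # Average the unit vectors (cos, sin) and take the angle of the resultant.
    mean_angle = np.arctan2(np.sin(angles).mean(), np.cos(angles).mean())
    return float(np.mod(mean_angle, 2 * np.pi))

headings = [0.1, 2 * np.pi - 0.1]    # two headings just either side of 0 rad
naive = float(np.mean(headings))     # about pi: points the opposite way
circular = circular_mean(headings)   # about 0 (numerically it may land at ~2*pi)
print(naive, circular)
```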

Circular distributions such as Bingham distributions have been used in filtering problems [21, 22]. Such distributions, characterized by rotation matrices, are well suited to modeling orientations and joint angles in higher-dimensional spaces. In this paper, we consider the von Mises distribution [23, 24] used for directional grid maps (DGM) [17]. Intuitively, a von Mises distribution can be thought of as a Gaussian distribution wrapped around a circle.

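In order to understand in which directions vehicles typically move in different segments of the road network, we begin by discretizing the environment into a grid of $N$ cells. A mixture of von Mises distributions is assigned to each cell; the mixture handles multiple possible directions [25, 17]. This mixture,

$$p(\theta \mid \mathbf{x}) = \sum_{k=1}^{K} \pi_k \, \mathcal{VM}(\theta \mid \mu_k, \kappa_k), \tag{1}$$

models the probability of possible directions $\theta \in [0, 2\pi)$ anywhere in the longitude-latitude space $\mathbf{x} \in \mathbb{R}^2$. With weights $\pi_k \geq 0$, $\sum_{k=1}^{K} \pi_k = 1$, the distribution is composed of $K$ von Mises distributions,

$$\mathcal{VM}(\theta \mid \mu_k, \kappa_k) = \frac{\exp\left(\kappa_k \cos(\theta - \mu_k)\right)}{2\pi I_0(\kappa_k)}, \tag{2}$$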

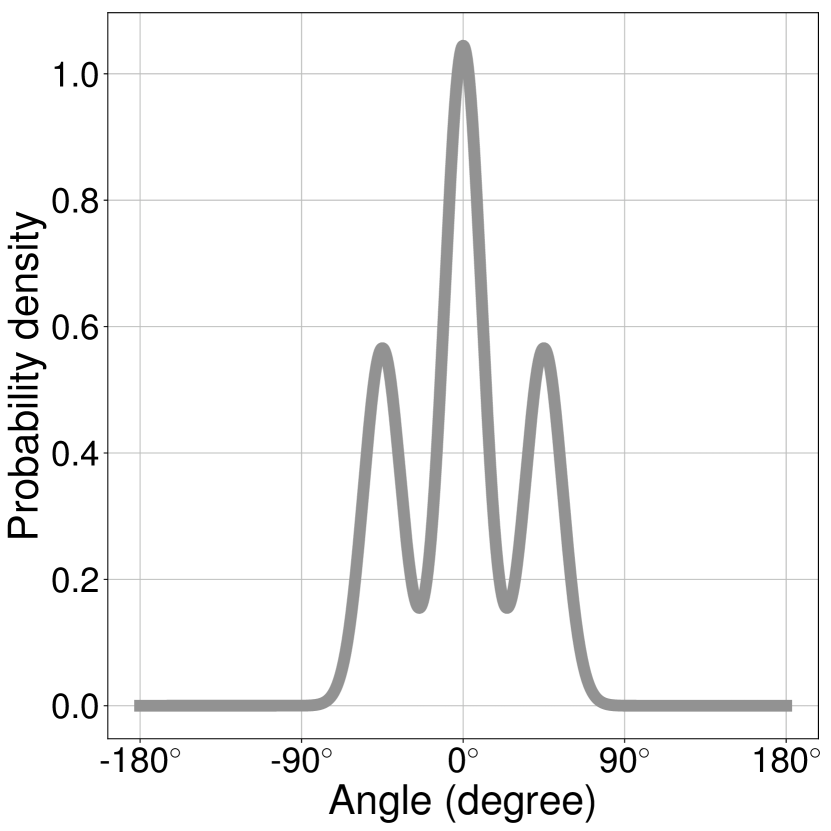

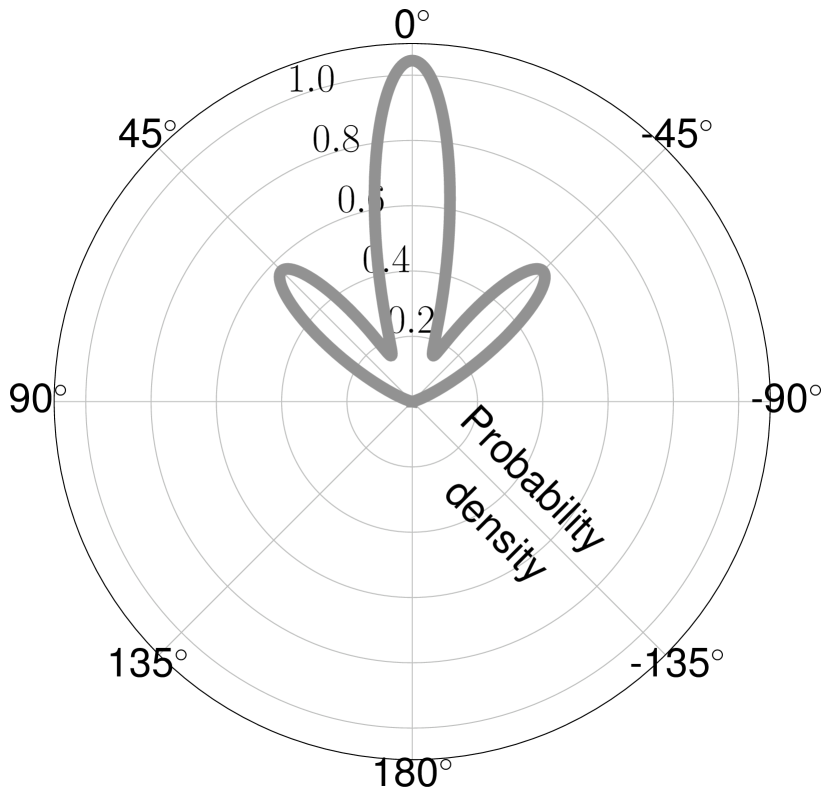

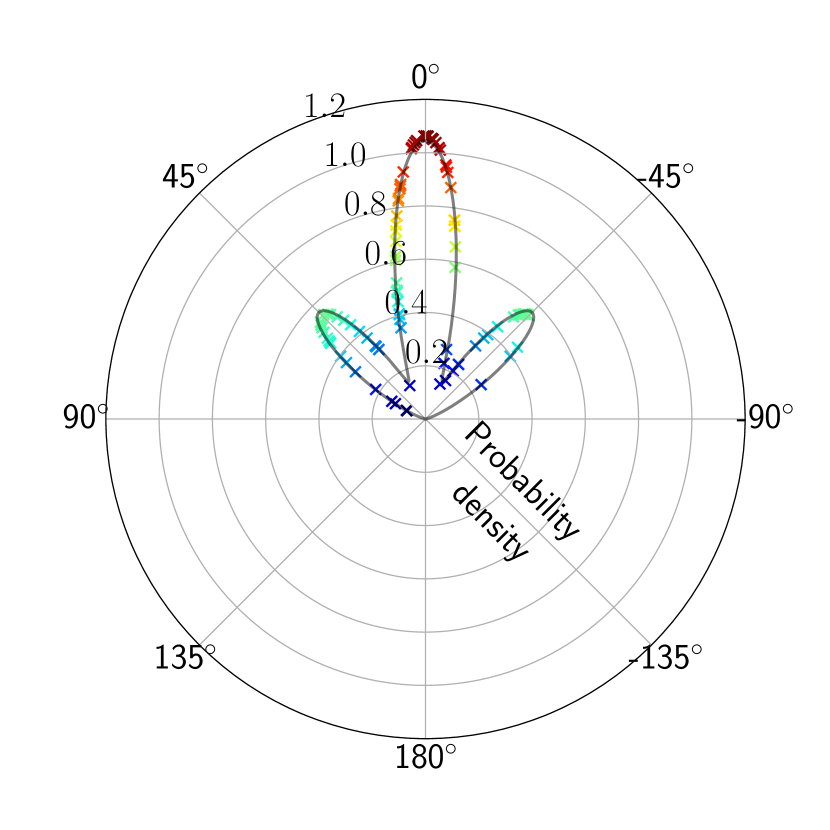

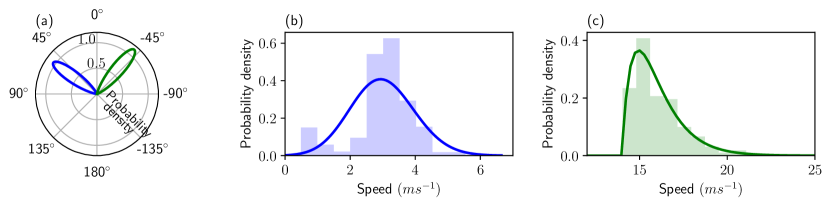

where $\mu_k$ is the mean direction and $\kappa_k \geq 0$ is the concentration. (Analogous to a Gaussian distribution, these can be thought of as the mean and inverse-variance parameters.) The higher the concentration parameter, the lower the dispersion of the angles. $I_0(\cdot)$ is the modified Bessel function of the first kind of order zero. The mean and circular variance of the $k$th von Mises distribution are $\mu_k$ and $1 - I_1(\kappa_k)/I_0(\kappa_k)$, respectively. Figure 2 shows a mixture of von Mises distributions with three components, also known as modes.
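Eqs. (1) and (2) translate directly into code. The following is a minimal sketch in Python, assuming NumPy and SciPy are available; the function names and the three-mode example (straight, left, and right at an intersection) are illustrative, not values from the paper. It uses SciPy's exponentially scaled Bessel function `i0e`, because `i0` overflows for large concentrations.

```python
import numpy as np
from scipy.special import i0e

def von_mises_pdf(theta, mu, kappa):
    """Eq. (2). Uses i0e(k) = exp(-k) * I0(k), so the exp(kappa) factors cancel safely."""
    return np.exp(kappa * (np.cos(theta - mu) - 1.0)) / (2 * np.pi * i0e(kappa))

def mixture_pdf(theta, weights, mus, kappas):
    """Eq. (1): weighted sum of the K von Mises components of a single cell."""
    theta = np.asarray(theta, dtype=float)[..., None]  # add a component axis
    comps = von_mises_pdf(theta, np.asarray(mus), np.asarray(kappas))
    return (comps * np.asarray(weights)).sum(axis=-1)

# Hypothetical intersection cell: straight (0), left (pi/2) and right (3*pi/2) modes.
weights, mus, kappas = [0.6, 0.2, 0.2], [0.0, np.pi / 2, 3 * np.pi / 2], [8.0, 4.0, 4.0]
grid = np.linspace(0, 2 * np.pi, 4000, endpoint=False)
density = mixture_pdf(grid, weights, mus, kappas)
print(density.mean() * 2 * np.pi)  # Riemann sum over the circle, close to 1
```

Setting a concentration to zero recovers the uniform density $1/(2\pi)$, which is a quick sanity check of the normalization.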

For the entire road network with $N$ cells and $K_n$ mixture components in the $n$th cell, the set of parameters is $\Theta = \{\{\pi_{n,k}, \mu_{n,k}, \kappa_{n,k}\}_{k=1}^{K_n}\}_{n=1}^{N}$. With angles extracted from vehicle trajectory data, these parameters can be learned by running a separate expectation-maximization (EM) routine for each cell [26, 17]. $K_n$ is determined for each cell using a density-based clustering algorithm [26].
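The per-cell EM routine can be sketched as follows. This is an illustrative Python implementation under our own assumptions, not the authors' code: the number of components $K$ is given (in the paper it comes from density-based clustering), the means are initialized evenly around the circle, and the M-step for $\kappa_k$ uses the widely used closed-form approximation $\kappa \approx \bar{R}(2 - \bar{R}^2)/(1 - \bar{R}^2)$ to the inverse of $A(\kappa) = I_1(\kappa)/I_0(\kappa)$ instead of solving that equation exactly.

```python
import numpy as np
from scipy.special import i0e, logsumexp

def vm_logpdf(theta, mu, kappa):
    # log of Eq. (2); i0e(k) = exp(-k) * I0(k) keeps this finite for large kappa
    return kappa * (np.cos(theta - mu) - 1.0) - np.log(2 * np.pi * i0e(kappa))

def fit_vm_mixture(theta, K, n_iter=200, kappa_max=500.0):
    """EM for a K-component von Mises mixture on the angles (radians) of one cell."""
    theta = np.asarray(theta, dtype=float)
    weights = np.full(K, 1.0 / K)
    mus = np.linspace(0.0, 2 * np.pi, K, endpoint=False)  # assumption: even initialization
    kappas = np.ones(K)
    for _ in range(n_iter):
        # E-step: responsibilities r[n, k] proportional to pi_k * VM(theta_n | mu_k, kappa_k)
        log_r = np.log(weights) + vm_logpdf(theta[:, None], mus, kappas)
        r = np.exp(log_r - logsumexp(log_r, axis=1, keepdims=True))
        # M-step: mixture weights, mean directions and concentrations
        nk = np.maximum(r.sum(axis=0), 1e-12)
        weights = nk / nk.sum()
        s, c = r.T @ np.sin(theta), r.T @ np.cos(theta)
        mus = np.arctan2(s, c)
        rbar = np.clip(np.hypot(s, c) / nk, 1e-6, 1 - 1e-6)  # mean resultant length
        kappas = np.minimum(rbar * (2 - rbar**2) / (1 - rbar**2), kappa_max)
    return weights, np.mod(mus, 2 * np.pi), kappas

# Synthetic cell with three direction modes (illustrative data, not from the paper).
rng = np.random.default_rng(0)
true_mus = np.array([0.3, 2.2, 4.0])
data = np.concatenate([rng.vonmises(m, 12.0, size=400) for m in true_mus])
w, mu_hat, kappa_hat = fit_vm_mixture(data, K=3)
```

Working in the log domain with `logsumexp` avoids underflow in the responsibilities when some concentrations become large.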

III-A2 Uncertainty of speeds

In Section III-A1, we obtained a multimodal distribution of directions. In addition to the directions, we also want to model the distribution of speed for each directional mode. Such speed priors are important because speeds differ across segments of the road network. For instance, vehicles move relatively slowly near intersections, crosswalks, and roundabouts compared to on a highway. Since speed is a non-negative quantity, it is modeled using a gamma distribution given by,

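$$\mathcal{G}(v \mid \alpha_k, \beta_k) = \frac{\beta_k^{\alpha_k}}{\Gamma(\alpha_k)} v^{\alpha_k - 1} e^{-\beta_k v}, \quad v > 0, \tag{3}$$

where $\alpha_k$ and $\beta_k$ are the shape and rate parameters, respectively, and $\Gamma(\cdot)$ is the gamma function. The mean and variance of a gamma distribution are $\alpha_k/\beta_k$ and $\alpha_k/\beta_k^2$, respectively. The gamma distributions are estimated by maximizing the likelihood [27] using the data that lie within two standard deviations of each von Mises component.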
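A minimal sketch of the speed model, assuming SciPy is available: `scipy.stats.gamma.fit` with the location fixed at zero returns the maximum-likelihood shape and scale, where SciPy's scale is $1/\beta$. The filtering helper is our own reading of the “within two standard deviations” rule and uses the circular standard deviation $\sqrt{-2\ln\left(I_1(\kappa)/I_0(\kappa)\right)}$; both that choice and the function names are assumptions for illustration.

```python
import numpy as np
from scipy import stats
from scipy.special import i0e, i1e

def component_speeds(headings, speeds, mu, kappa):
    """Speeds whose heading lies within two circular std. devs. of mu (assumed rule)."""
    # i1e/i0e equals I1/I0 because the exp(-kappa) scalings cancel.
    circ_std = np.sqrt(-2.0 * np.log(i1e(kappa) / i0e(kappa)))
    dist = np.abs(np.angle(np.exp(1j * (np.asarray(headings) - mu))))  # wrapped distance
    return np.asarray(speeds)[dist <= 2.0 * circ_std]

def fit_speed_gamma(speeds):
    """Maximum-likelihood gamma fit with loc fixed at 0; returns (shape alpha, rate beta)."""
    alpha, _, scale = stats.gamma.fit(np.asarray(speeds, dtype=float), floc=0)
    return alpha, 1.0 / scale

rng = np.random.default_rng(0)
headings = rng.vonmises(0.0, 12.0, size=5000)
speeds = rng.gamma(shape=9.0, scale=1.0 / 1.5, size=5000)  # synthetic speeds, mean 6 m/s
alpha, beta = fit_speed_gamma(component_speeds(headings, speeds, 0.0, 12.0))
print(alpha, beta, alpha / beta, alpha / beta**2)  # shape, rate, mean, variance
```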

III-B Hallucinating future locations

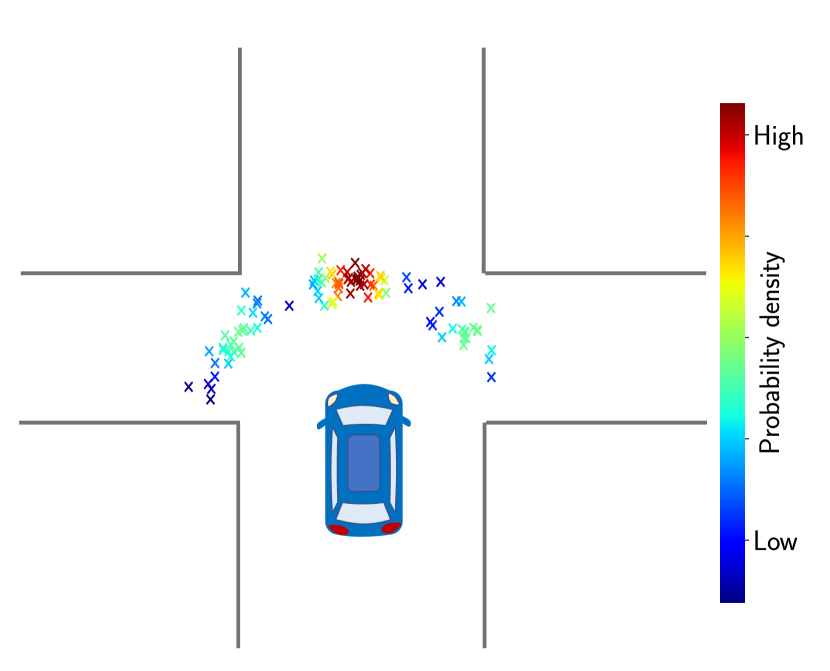

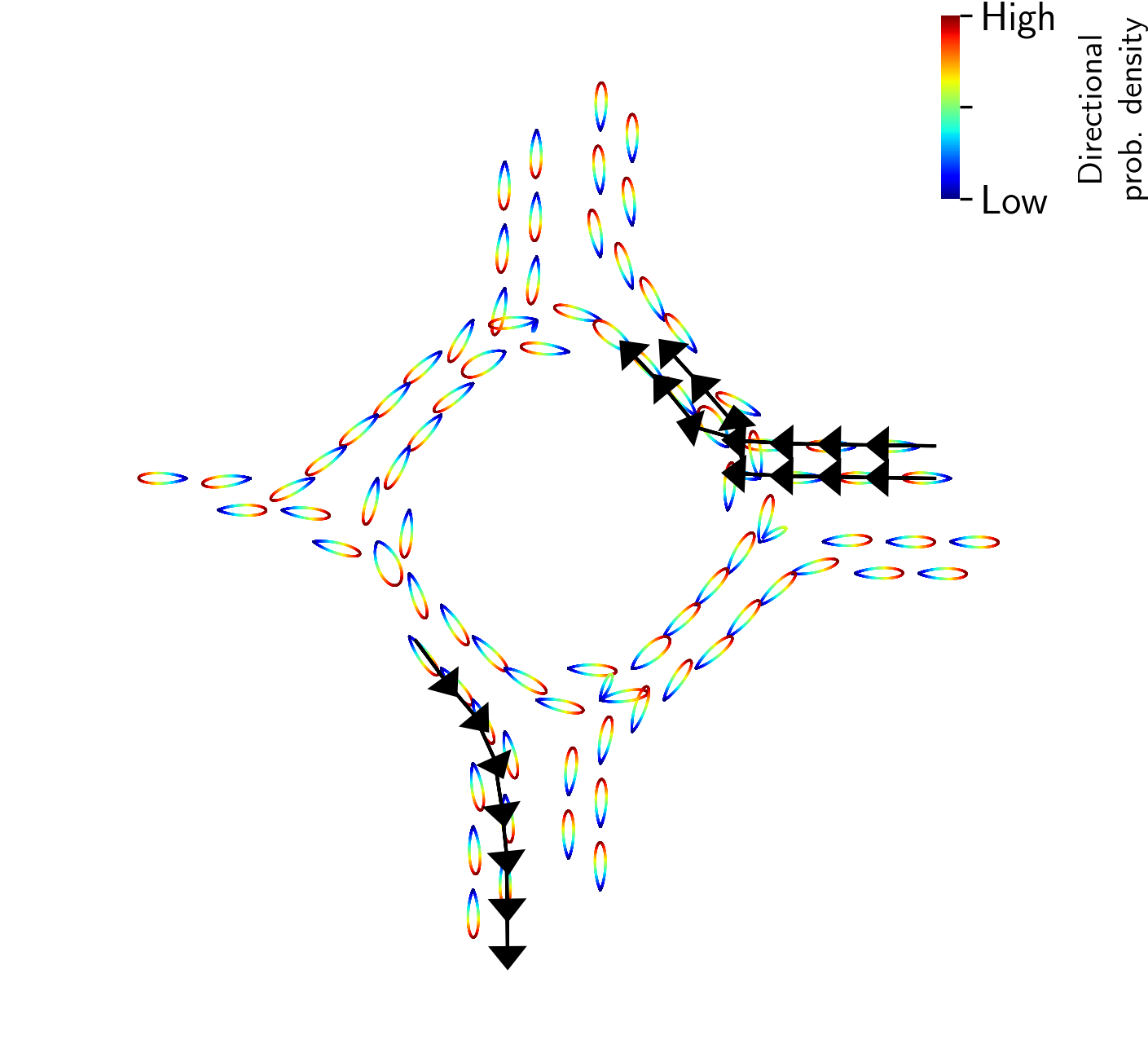

If an autonomous car observes another vehicle at a particular location, the cell that the vehicle belongs to can be used to predict where the observed vehicle could move. As depicted in Figure 1, finitely many such hallucinations can be obtained by sampling possible directions from the mixture of von Mises distributions and projecting the vehicle towards the sampled directions of motion.

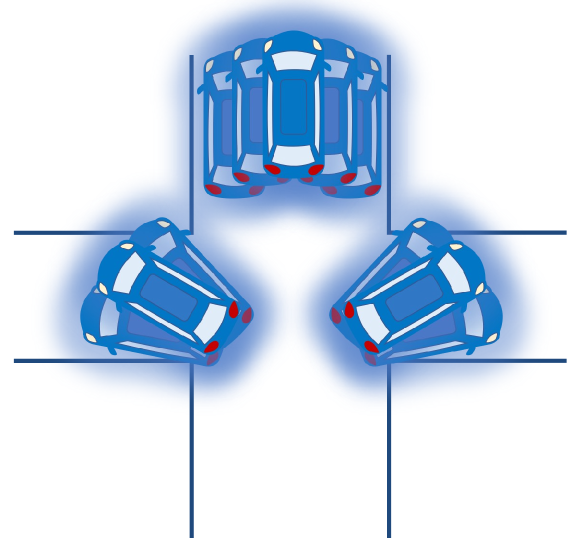

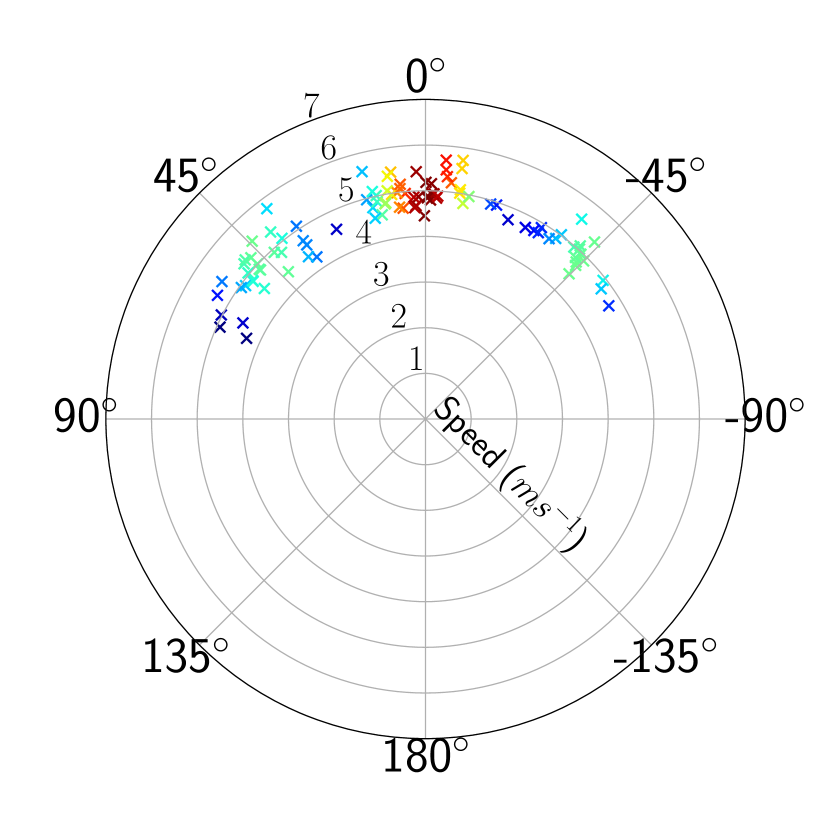

To sample from the mixture, we first sample a component index $k$ from the categorical distribution $\mathrm{Cat}(\pi_1, \ldots, \pi_K)$. Then, we sample an angle $\theta_s$ from the $k$th von Mises mixture component [28, 29]. This process can be repeated to obtain $S$ samples. If a sample $\theta_s$ has speed $v$, then in unit time the vehicle will be projected to the position $(v\cos\theta_s, v\sin\theta_s)$ in the vehicle's coordinate system. If the distribution of speeds at that location is also known, it can be incorporated by replacing $v$ with a speed $v_s \sim \mathcal{G}(\alpha_k, \beta_k)$ sampled from the gamma distribution introduced in Section III-A2. As illustrated in Figure 3, uncertainty in directions and speeds projects the vehicle not only into various angles but also into different positions.
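The sampling procedure above can be sketched with NumPy's random generator, which provides categorical (`choice`), von Mises, and gamma samplers. The function name and its arguments are hypothetical; speeds are drawn per mode from the gamma priors of Section III-A2, and positions are expressed in a frame whose $x$-axis corresponds to direction $0$.

```python
import numpy as np

def hallucinate(weights, mus, kappas, alphas, betas, n_samples=100, dt=1.0, seed=0):
    """Sample one-step-ahead positions from a cell's direction-speed primitives."""
    rng = np.random.default_rng(seed)
    mus, kappas = np.asarray(mus, dtype=float), np.asarray(kappas, dtype=float)
    alphas, betas = np.asarray(alphas, dtype=float), np.asarray(betas, dtype=float)
    k = rng.choice(len(weights), size=n_samples, p=weights)  # mode k ~ Cat(pi_1..pi_K)
    theta = rng.vonmises(mus[k], kappas[k])                  # direction from the k-th mode
    v = rng.gamma(alphas[k], 1.0 / betas[k])                 # speed; NumPy uses scale = 1/rate
    return np.column_stack([v * dt * np.cos(theta), v * dt * np.sin(theta)])

# Hypothetical cell: mostly straight (0 rad), sometimes turning left (pi/2).
points = hallucinate([0.7, 0.3], [0.0, np.pi / 2], [20.0, 20.0], [9.0, 9.0], [1.5, 1.5],
                     n_samples=2000)
```

Each row of `points` is one hallucinated position; plotting them reproduces the kind of spread in both angle and distance that Figure 3 describes.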

III-C Incorporating current knowledge

Note that the estimation in Section III-B is based purely on previous experience of observing the behavior of thousands of vehicles. However, past information alone is not sufficient for accurate decision-making. Within our framework, we can also use high-level information about the environment to bias the predictions and thereby make informed decisions.

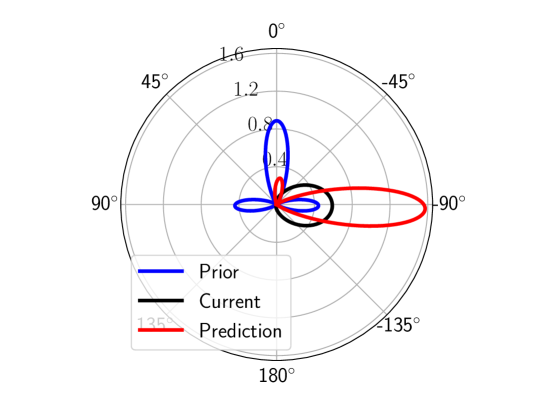

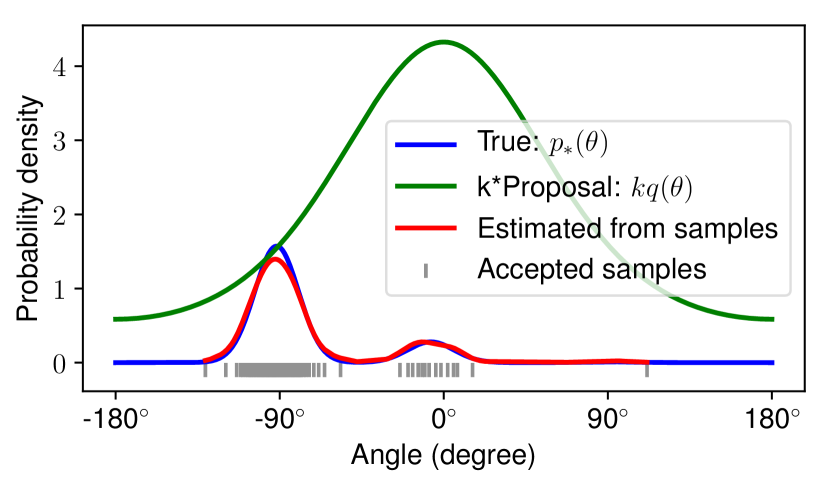

If we have some indication of how an observed car will behave, we can combine this current high-level information with the prior information to make better predictions. For instance, consider the distributions in Figure 4. According to the prior information $p(\theta \mid \mathbf{x})$, shown in blue, the vehicle is very likely to continue straight and less likely to turn either left or right. However, if we observe that the vehicle leans towards the right lane or activates its right turn signal, we obtain a current probability distribution $q(\theta)$ whose mean direction points to the right and whose very low concentration indicates high uncertainty.

Based on the prior and current information, we can compute the new directional distribution as

$$\tilde{p}(\theta) = \frac{p(\theta \mid \mathbf{x})\, q(\theta)}{\int_{0}^{2\pi} p(\theta' \mid \mathbf{x})\, q(\theta')\, d\theta'} \propto p(\theta \mid \mathbf{x})\, q(\theta). \tag{4}$$

Because of the current information, the new distribution $\tilde{p}(\theta)$, shown in red in Figure 4, assigns a higher probability to turning right, a negligible probability to continuing straight, and almost zero probability to turning left.
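Eq. (4) can be checked numerically by evaluating the prior mixture and the current distribution on a dense grid of angles and normalizing their product with a Riemann sum. The sketch below assumes a hypothetical prior with straight, left, and right modes and a low-concentration current distribution pointing right; angles are measured counter-clockwise, so “right” is $3\pi/2$. The parameter values are illustrative and are not those behind Figure 4.

```python
import numpy as np
from scipy.special import i0e

def vm_pdf(theta, mu, kappa):
    """Von Mises density, Eq. (2), written with i0e to avoid overflow."""
    return np.exp(kappa * (np.cos(theta - mu) - 1.0)) / (2 * np.pi * i0e(kappa))

def prior(theta):
    # Hypothetical prior p(theta | x): straight (0), left (pi/2), right (3*pi/2).
    modes = np.array([0.0, np.pi / 2, 3 * np.pi / 2])
    weights = np.array([0.6, 0.2, 0.2])
    return vm_pdf(theta[:, None], modes, 10.0) @ weights

def current(theta):
    # Hypothetical current belief q(theta): a right turn, with low concentration.
    return vm_pdf(theta, 3 * np.pi / 2, 2.0)

def combine_on_grid(prior_fn, current_fn, n_grid=3600):
    """Normalized product of Eq. (4) evaluated on a uniform grid over [0, 2*pi)."""
    theta = np.linspace(0.0, 2 * np.pi, n_grid, endpoint=False)
    unnormalized = prior_fn(theta) * current_fn(theta)
    return theta, unnormalized / (unnormalized.sum() * 2 * np.pi / n_grid)

theta, posterior = combine_on_grid(prior, current)
```

With these values the posterior peaks at the right-turn direction, while the left-turn mode almost vanishes because both factors disagree there.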

To obtain samples from the “product of mixtures” $\tilde{p}(\theta)$ in Eq. (4), a Monte Carlo technique [31] can be used. In this paper, as illustrated in Figure 5, we propose a rejection sampling scheme [32]. First, we select a unimodal von Mises distribution with a small concentration as the proposal distribution $g(\theta)$, together with a sufficiently large constant $M$ such that $M g(\theta) \geq \tilde{p}(\theta)$ for all $\theta$; that is, the scaled proposal must be broad and high enough to enclose the target distribution. We then draw a sample $\theta'$ from $g$ and evaluate $\tilde{p}(\theta')$ and $M g(\theta')$. Independently, another sample $u$ is drawn from the uniform distribution $\mathcal{U}(0, 1)$. The sample $\theta'$ is accepted as a sample from $\tilde{p}$ if $u < \tilde{p}(\theta')/(M g(\theta'))$. The process is repeated until a sufficient number of samples has been accepted [33], and all accepted samples are kept as an approximation to $\tilde{p}$. To further improve sampling efficiency, similar to sampling from a product of Gaussian mixtures, Gibbs sampling can be performed using a KD-tree [34].
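A minimal sketch of this accept-reject step, assuming the target $\tilde{p}$ is available as a vectorized function known only up to a constant. Choosing $M$ by evaluating the ratio $\tilde{p}/g$ on a dense grid with a 10% margin is our own simplification (it assumes the grid resolves the target's peaks); the example target is a hypothetical prior-times-current product with straight, left, and right modes.

```python
import numpy as np
from scipy.special import i0e

def vm_pdf(theta, mu, kappa):
    """Von Mises density, Eq. (2), written with i0e to avoid overflow."""
    return np.exp(kappa * (np.cos(theta - mu) - 1.0)) / (2 * np.pi * i0e(kappa))

def rejection_sample(target, n_samples, prop_mu=0.0, prop_kappa=0.1, seed=0):
    """Accept-reject sampling from an unnormalized circular density `target`."""
    rng = np.random.default_rng(seed)
    # Assumed bound: M * g(theta) >= target(theta), estimated on a grid with a 10% margin.
    grid = np.linspace(-np.pi, np.pi, 10_000, endpoint=False)
    M = 1.1 * np.max(target(grid) / vm_pdf(grid, prop_mu, prop_kappa))
    batches, n_accepted = [], 0
    while n_accepted < n_samples:
        theta = rng.vonmises(prop_mu, prop_kappa, size=n_samples)  # theta' ~ g
        u = rng.uniform(0.0, 1.0, size=n_samples)                 # u ~ U(0, 1)
        keep = theta[u < target(theta) / (M * vm_pdf(theta, prop_mu, prop_kappa))]
        batches.append(keep)
        n_accepted += keep.size
    return np.mod(np.concatenate(batches)[:n_samples], 2 * np.pi)

def target(theta):
    # Hypothetical unnormalized prior x current product (right turn = 3*pi/2).
    modes, weights = np.array([0.0, np.pi / 2, 3 * np.pi / 2]), np.array([0.6, 0.2, 0.2])
    prior = vm_pdf(theta[:, None], modes, 10.0) @ weights
    return prior * vm_pdf(theta, 3 * np.pi / 2, 2.0)

samples = rejection_sample(target, 5000)
```

A nearly uniform proposal keeps the bound easy to satisfy, at the cost of a lower acceptance rate when the target is sharply peaked.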

III-D Generating multi-modal trajectories

If a vehicle is observed in cell $n$ at time $t$, we can sample an angle (and a speed) from that cell's primitives and move the vehicle in that direction for one time step. We then sample from the directional (and speed) distributions of the new cell, and repeat this for $T$ time steps. This process is summarized in Algorithm 1.
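The rollout described above can be sketched as follows. The grid layout is a hypothetical assumption rather than the paper's data structure: square cells of side `cell_size` indexed by integer pairs, with each cell's primitives stored in a dictionary as `(weights, mus, kappas, alphas, betas)`. Directions are measured in the world frame, and the rollout stops early if the vehicle leaves the mapped cells.

```python
import numpy as np

def rollout(start_xy, cells, cell_size, T, dt=1.0, seed=0):
    """Sample one trajectory of at most T steps from per-cell direction-speed primitives."""
    rng = np.random.default_rng(seed)
    xy = np.asarray(start_xy, dtype=float)
    path = [xy.copy()]
    for _ in range(T):
        cell = tuple(int(c) for c in np.floor(xy / cell_size))
        if cell not in cells:  # left the mapped part of the road network
            break
        weights, mus, kappas, alphas, betas = cells[cell]
        k = rng.choice(len(weights), p=weights)    # pick a directional mode
        theta = rng.vonmises(mus[k], kappas[k])    # sample a direction
        v = rng.gamma(alphas[k], 1.0 / betas[k])   # sample a speed (scale = 1 / rate)
        xy = xy + v * dt * np.array([np.cos(theta), np.sin(theta)])
        path.append(xy.copy())
    return np.array(path)

# Hypothetical straight road heading east (0 rad) at about 1 m/s, with 2 m cells.
east = ([1.0], [0.0], [50.0], [100.0], [100.0])
road = {(i, j): east for i in range(20) for j in range(-5, 5)}
trajectory = rollout([0.5, 1.0], road, cell_size=2.0, T=10)
```

Calling `rollout` repeatedly with different seeds yields a set of sampled trajectories whose spread reflects the multimodality of the cells they pass through.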

IV Experiments

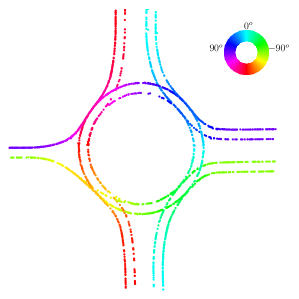

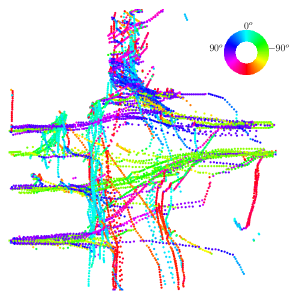

The Carla simulator [35] was used to collect simulation data. Town 3 of the simulator was used, with 20 cars running in autopilot mode for around 2 hours. To increase diversity, the system was restarted by spawning new cars every 10,000 time steps. Spurious trajectories, such as rare collisions caused by limitations of the simulator's driver model, were removed based on visual inspection. To demonstrate the idea of directional primitives, we considered two important areas of the environment: a roundabout and an intersection (Figure 6). The Stanford Drone dataset [36] and the Lankershim Boulevard segment of the NGSIM dataset [37] were used as real-world datasets. The former is an aerial dataset that contains trajectories of pedestrians, cyclists, and other road users, whereas the latter contains vehicle trajectories recorded on an urban arterial road in Los Angeles.

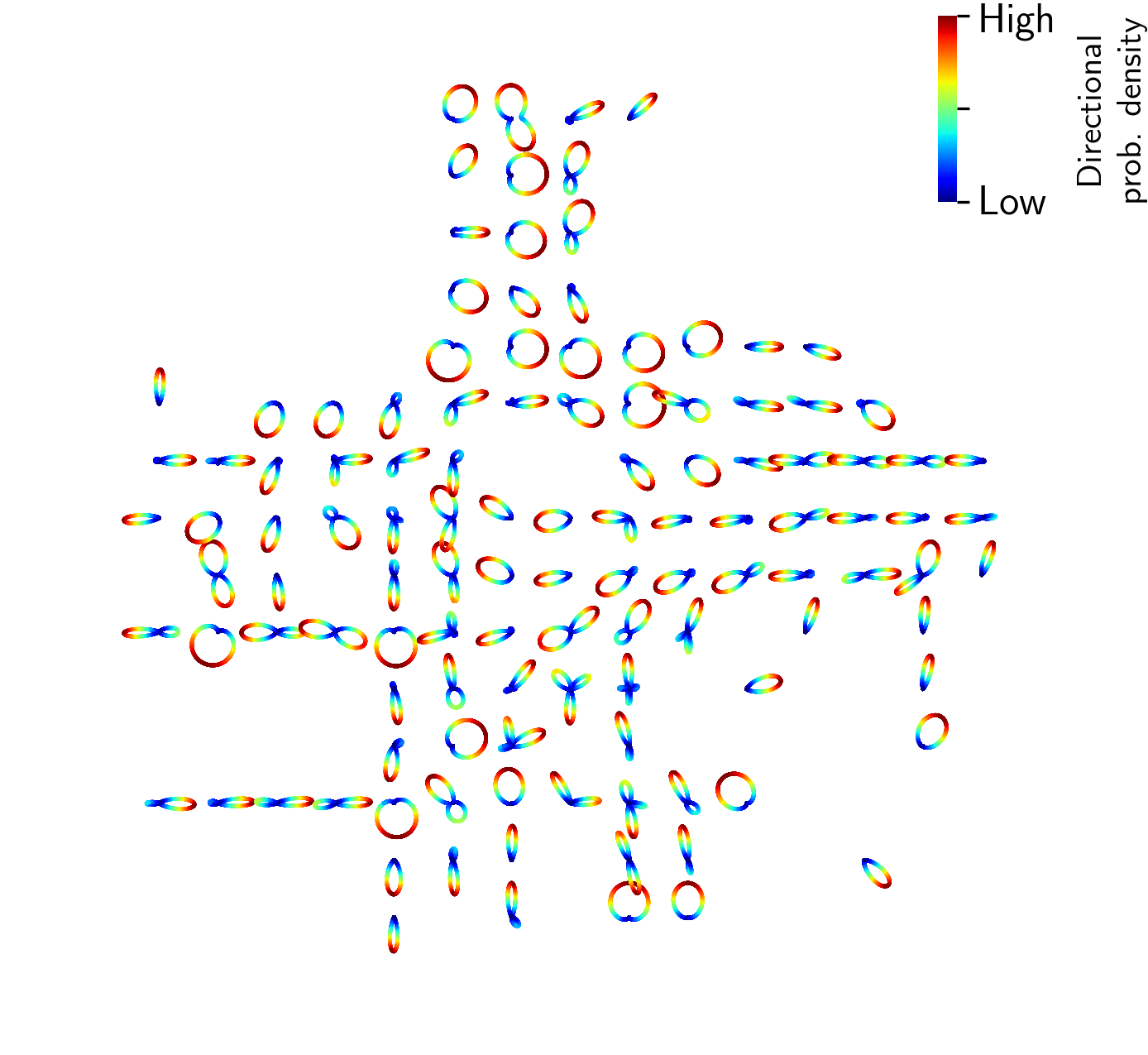

Roads in each environment were divided into cells depending on the road geometry. Von Mises and gamma distributions were then fitted to each cell as described in Sections III-A1 and III-A2, respectively. As shown in Figure 8, this results in a collection of von Mises distributions spread throughout the environment. For each directional mode in each cell, we also have an associated speed distribution. Figure 7 shows an example of a pair of speed distributions corresponding to a cell with a bimodal von Mises directional distribution.
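Before fitting, headings and speeds have to be extracted from raw trajectories and grouped by cell. A simple way to do this is sketched below, assuming time-stamped positions at a fixed interval `dt` and a uniform square grid (whereas the cells in these experiments depend on the road geometry); the function names are hypothetical.

```python
import numpy as np
from collections import defaultdict

def headings_and_speeds(track_xy, dt):
    """Heading (radians in [0, 2*pi)) and speed of each segment between consecutive positions."""
    d = np.diff(np.asarray(track_xy, dtype=float), axis=0)
    headings = np.mod(np.arctan2(d[:, 1], d[:, 0]), 2 * np.pi)
    speeds = np.hypot(d[:, 0], d[:, 1]) / dt
    return headings, speeds

def group_by_cell(track_xy, headings, speeds, cell_size):
    """Assign each segment to the square cell that contains its starting point."""
    cells = defaultdict(list)
    for (x, y), h, s in zip(np.asarray(track_xy, dtype=float)[:-1], headings, speeds):
        cells[(int(np.floor(x / cell_size)), int(np.floor(y / cell_size)))].append((h, s))
    return cells

track = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0)]
h, s = headings_and_speeds(track, dt=0.5)  # headings [0, pi/2], speeds [2, 4] m/s
cells = group_by_cell(track, h, s, cell_size=1.5)
```

The per-cell lists of `(heading, speed)` pairs are exactly the inputs the EM and gamma fits need.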

Table I: Average probability density on the test set (mean ± standard deviation).

| Dataset | Direction: Primitive | Direction: DGM | Direction: GP | Direction: Uninformative | Speed: Primitive |
|---|---|---|---|---|---|
| Carla roundabout | 1.891 ± 0.471 | 1.480 ± 0.727 | 1.484 ± 1.029 | 0.159 ± 0.000 | 0.304 ± 0.126 |
| Carla intersection | 1.893 ± 0.468 | 1.588 ± 0.758 | 0.657 ± 0.505 | 0.159 ± 0.000 | 0.269 ± 0.110 |
| NGSIM Lankershim | 1.726 ± 0.552 | 1.381 ± 0.742 | 0.114 ± 0.051 | 0.159 ± 0.000 | 0.324 ± 0.273 |
| Stanford Death Circle | 0.453 ± 0.409 | 0.387 ± 0.352 | 0.119 ± 0.044 | 0.159 ± 0.000 | 2.344 ± 5.615 |

Table II: Test-set likelihood of the prior, the observation, and their combination.

| Quantity | Roundabout | Intersection |
|---|---|---|
| Prior likelihood | 267.072 | 1288.951 |
| Observation likelihood | 187.271 | 1051.696 |
| Posterior likelihood | 280.905 | 1619.642 |
| Percentage improvement | 54.119% | 48.993% |

On average, the EM algorithm takes 82 milliseconds to learn the parameters of each mixture of von Mises distributions. To assess how well the distributions fit, we held out 10% of the dataset as a test set and computed the average probability density on it (Table I). Because the test set was selected randomly, more of its points are expected to fall in the high-density regions of a well-fitted distribution. Hence, the average probability density should be higher than that of a uniform benchmark distribution. For a well-fitted distribution, the higher the concentrations (i.e., the lower the dispersion), the higher the average density values would be. However, since this is a density estimation problem, designing a metric for evaluating the dispersion is challenging. We compared our results with DGM, Gaussian process directional estimation (GP) [38], and an uninformative von Mises distribution ($\kappa = 0$, i.e., the uniform density $1/(2\pi) \approx 0.159$). We used the sparse subset-of-data approximation to GPs [39] because the datasets are too large to fit a full GP in a reasonable time. The last column in Table I reports the average density for the gamma speed distributions.
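The evaluation metric is the mean of the fitted density over held-out angles. The sketch below (hypothetical function names, synthetic test angles) also shows why the uninformative baseline in Table I is constant: a von Mises distribution with $\kappa = 0$ is uniform, so its density is $1/(2\pi) \approx 0.159$ at every angle.

```python
import numpy as np
from scipy.special import i0e

def mixture_pdf(theta, weights, mus, kappas):
    """Von Mises mixture density, Eqs. (1)-(2), evaluated at each angle in theta."""
    theta = np.asarray(theta, dtype=float)[:, None]
    mus, kappas = np.asarray(mus, dtype=float), np.asarray(kappas, dtype=float)
    comps = np.exp(kappas * (np.cos(theta - mus) - 1.0)) / (2 * np.pi * i0e(kappas))
    return comps @ np.asarray(weights, dtype=float)

def average_density(test_angles, weights, mus, kappas):
    """Mean density of the held-out angles under a fitted mixture."""
    return float(np.mean(mixture_pdf(test_angles, weights, mus, kappas)))

test_angles = np.random.default_rng(1).vonmises(0.0, 8.0, size=1000)  # synthetic test set
print(average_density(test_angles, [1.0], [0.0], [0.0]))  # uninformative: about 0.159
print(average_density(test_angles, [1.0], [0.0], [8.0]))  # matching fit: much larger
```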

To assess how much accuracy is gained by combining the prior and current belief distributions in the example of Figure 4, we computed the RMSE between the true direction and 100 angles sampled from each distribution. The RMSE of the angles decreased after combining the prior and current estimates. As reported in Table II, we further evaluated the effectiveness of combining prior information with observations on the Carla roundabout and intersection datasets, using the improvement in likelihood as a metric. First, we randomly picked several locations in the environment and computed the prior likelihood on the test set. As a proxy for the observation, we used the most probable direction of the directional distribution in the cell immediately preceding the prior cell, and computed its likelihood as well. We then computed the combined prior-observation distribution using Eq. (4) and its posterior likelihood on the test set. The resulting percentage improvements in likelihood are listed in Table II.
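The text does not state how angular errors enter the RMSE. One reasonable choice, assumed here, is to wrap each error into $(-\pi, \pi]$ before squaring, so that headings of $359^\circ$ and $1^\circ$ differ by $2^\circ$ rather than $358^\circ$. The function name is hypothetical.

```python
import numpy as np

def angular_rmse(samples, true_angle):
    """RMSE between sampled angles and a true angle (radians), using wrapped errors (assumed)."""
    # np.angle returns the principal argument in (-pi, pi], which wraps each error.
    err = np.angle(np.exp(1j * (np.asarray(samples, dtype=float) - true_angle)))
    return float(np.sqrt(np.mean(err**2)))

print(np.rad2deg(angular_rmse([np.deg2rad(359.0)], np.deg2rad(1.0))))  # about 2 degrees
```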

We also simulated future trajectories of vehicles. Figure 8(a) shows, as black arrows, three trajectories generated from the fitted distributions, starting at three different locations around the roundabout. Although only one trajectory is shown for each starting location, many other trajectories a vehicle could follow can be generated using the sampling procedure of Algorithm 1.

V Discussion

In Section III, we divided the environment into cells. This process sometimes splits important areas of the road among several cells and disregards aspects of the underlying road network structure, limiting the accuracy of predictions and increasing the memory required to store the parameters. Therefore, it is important to develop an automatic tessellation technique that takes the road geometry into account. For that purpose, in future work, it is possible to use Voronoi cells, as in decentralized collision avoidance [40] and multi-robot coverage [41]. In $d$-dimensional space, a Voronoi diagram is a partition of the space based on the positions of a group of generators $\{\mathbf{p}_i\}_{i=1}^{N}$. The Voronoi cell $\mathcal{V}_i$ associated with the $i$th generator consists of all the points that are closer to $\mathbf{p}_i$ than to any other generator. If Voronoi cells $\mathcal{V}_i$ and $\mathcal{V}_j$ are adjacent, generators $i$ and $j$ are called Voronoi neighbors. The construction of a Voronoi cell depends only on the position of its own generator (the ego agent) and those of its Voronoi neighbors. Thus, the computation of the Voronoi partition is fully decentralized, and the computation time is linear in the number of neighbors. For the directional map, we can jointly learn the cell boundaries and the directional parameters by optimizing over the cell generators and all possible assignments of data points to cells.
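Membership of a Voronoi cell follows directly from its definition: each point belongs to the cell of its nearest generator. The brute-force sketch below uses a hypothetical function name and computes all pairwise distances centrally, unlike the decentralized construction described above; it could be used, for example, to assign trajectory points to cells once generator positions are chosen.

```python
import numpy as np

def voronoi_assign(points, generators):
    """Index of the Voronoi cell (nearest generator) for each point."""
    points = np.asarray(points, dtype=float)
    generators = np.asarray(generators, dtype=float)
    # Squared Euclidean distances with shape (n_points, n_generators).
    d2 = ((points[:, None, :] - generators[None, :, :]) ** 2).sum(axis=-1)
    return np.argmin(d2, axis=1)

labels = voronoi_assign([(1.0, 1.0), (9.0, -1.0), (4.9, 0.0)], [(0.0, 0.0), (10.0, 0.0)])
print(labels)  # [0 1 0]
```

For large point sets, a k-d tree (for instance `scipy.spatial.cKDTree.query`) gives the same nearest-generator assignment more efficiently.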

Rather than treating cells as independent, it is possible to learn the interactions between them. For instance, with reference to Figure 1, if a vehicle is observed moving straight along the main road, it can be inferred that the probability of a vehicle coming from either of the two side roads is relatively low. We can also consider temporal dependencies among cells. For example, similar to the spatial priors discussed in Section III-C, we can compute temporal priors. Such a formulation paves the way toward directional-speed Bayesian filtering [42].

VI Conclusion

We introduced directional primitives as a framework to represent uncertainty in the directions and speeds of vehicles in a road network. We showed that this prior information can be combined with current information about the vehicles observed in the environment to infer the possible directions a vehicle could turn. Future work will focus on optimizing road tessellation, incorporating spatiotemporal state dependencies, and employing computer vision models to estimate the current directional distributions of observed vehicles. These efforts will lead to safe decision-making algorithms for autonomous vehicles operating in urban environments [43, 44].

Acknowledgments

The authors thank Dr. Alex Koufos for discussions related to the Carla simulator. Toyota Research Institute (TRI) provided funds to assist the authors with their research, but this article solely reflects the opinions and conclusions of its authors and not TRI or any other Toyota entity.

References

- [1] Mao Shan, Charika De Alvis, Stewart Worrall and Eduardo Nebot “Extended Vehicle Tracking with Probabilistic Spatial Relation Projection and Consideration of Shape Feature Uncertainties” In IEEE Intelligent Vehicles Symposium (IV), 2019, pp. 1477–1483

- [2] Dieter Fox et al. “Bayesian filtering for location estimation” In IEEE Pervasive Computing 2.3, 2003, pp. 24–33

- [3] Karim A Tahboub “Intelligent human-machine interaction based on dynamic Bayesian networks probabilistic intention recognition” In Journal of Intelligent and Robotic Systems 45.1, 2006, pp. 31–52

- [4] A Polit and Emilio Bizzi “Characteristics of motor programs underlying arm movements in monkeys” In Journal of Neurophysiology 42.1, 1979, pp. 183–194

- [5] Tamar Flash and Binyamin Hochner “Motor primitives in vertebrates and invertebrates” In Current Opinion in Neurobiology 15.6 Elsevier, 2005, pp. 660–666

- [6] Alexandros Paraschos, Christian Daniel, Jan R Peters and Gerhard Neumann “Probabilistic movement primitives” In Advances in Neural Information Processing Systems (NIPS), 2013, pp. 2616–2624

- [7] Baris E Perk and Jean-Jacques E Slotine “Motion primitives for robotic flight control” In arXiv preprint cs/0609140, 2006

- [8] Alberto Lacaze, Yigal Moscovitz, Nicholas DeClaris and Karl Murphy “Path planning for autonomous vehicles driving over rough terrain” In IEEE International Symposium on Intelligent Control (ISIC), 1998, pp. 50–55

- [9] Mihail Pivtoraiko, Ross A Knepper and Alonzo Kelly “Differentially constrained mobile robot motion planning in state lattices” In Journal of Field Robotics 26.3 Wiley, 2009, pp. 308–333

- [10] Joseph Campbell, Simon Stepputtis and Heni Ben Amor “Probabilistic Multimodal Modeling for Human-Robot Interaction Tasks” In Robotics: Science and Systems (RSS), 2019

- [11] Alexandre Alahi et al. “Social LSTM: Human Trajectory Prediction in Crowded Spaces” In IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), 2016

- [12] Seong Hyeon Park et al. “Sequence-to-Sequence Prediction of Vehicle Trajectory via LSTM Encoder-Decoder Architecture” In IEEE Intelligent Vehicles Symposium (IV), 2018, pp. 1672–1678

- [13] Ransalu Senanayake, Lionel Ott, Simon O’Callaghan and Fabio Ramos “Spatio-Temporal Hilbert Maps for Continuous Occupancy Representation in Dynamic Environments” In Advances in Neural Information Processing Systems (NIPS), 2016

- [14] Ransalu Senanayake and Fabio Ramos “Bayesian Hilbert Maps for Dynamic Continuous Occupancy Mapping” In Conference on Robot Learning (CoRL), 2017

- [15] Ransalu Senanayake, Anthony Tompkins and Fabio Ramos “Automorphing Kernels for Nonstationarity in Mapping Unstructured Environments” In Conference on Robot Learning (CoRL), 2018

- [16] Wilko Schwarting et al. “Safe Nonlinear Trajectory Generation for Parallel Autonomy with a Dynamic Vehicle Model” In IEEE International Conference on Intelligent Transportation Systems (ITSC) 19.9, 2017, pp. 2994–3008

- [17] Ransalu Senanayake and Fabio Ramos “Directional grid maps: modeling multimodal angular uncertainty in dynamic environments” In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 3241–3248

- [18] William Zhi, Ransalu Senanayake, Lionel Ott and Fabio Ramos “Spatiotemporal Learning of Directional Uncertainty in Urban Environments with Kernel Recurrent Mixture Density Networks” In Robotics and Automation Letters (RA-L) 4.4, 2019, pp. 4306–4313

- [19] Tomáš Vintr et al. “Time-varying pedestrian flow models for service robots” In European Conference on Mobile Robots (ECMR) IEEE, 2019, pp. 1–7

- [20] Tomáš Vintr, Zhi Yan, Tom Duckett and Tomáš Krajník “Spatio-temporal representation for long-term anticipation of human presence in service robotics” In IEEE International Conference on Robotics and Automation (ICRA), 2019, pp. 2620–2626

- [21] Gerhard Kurz, Igor Gilitschenski, Simon Julier and Uwe D Hanebeck “Recursive Estimation of Orientation Based on the Bingham Distribution” In International Conference on Information Fusion (ICIF), 2013

- [22] Igor Gilitschenski, Gerhard Kurz, Simon J Julier and Uwe D Hanebeck “Unscented orientation estimation based on the Bingham distribution” In IEEE Transactions on Automatic Control 61.1, 2015, pp. 172–177

- [23] K. V. Mardia and R. Edwards “Weighted distributions and rotating caps” In Biometrika 69.180, 1982, pp. 323–330

- [24] Kanti V. Mardia and Peter E. Jupp “Directional Statistics” Wiley, 2006

- [25] B. Ivanovic, E. Schmerling, K. Leung and M. Pavone “Generative Modeling of Multimodal Multi-Human Behavior” In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018

- [26] Martin Ester, Hans-Peter Kriegel, Jörg Sander and Xiaowei Xu “A density-based algorithm for discovering clusters in large spatial databases with noise.” In ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD) 96.34, 1996, pp. 226–231

- [27] CW Anderson and WD Ray “Improved maximum likelihood estimators for the gamma distribution” In Communications in Statistics-Theory and Methods 4.5 Taylor & Francis, 1975, pp. 437–448

- [28] Gary Ulrich “Computer Generation of Distributions on the M-Sphere” In Journal of the Royal Statistical Society: Series C (Applied Statistics) 33.2 Wiley Online Library, 1984, pp. 158–163

- [29] Tim R. Davidson et al. “Hyperspherical Variational Auto-Encoders” In Conference on Uncertainty in Artificial Intelligence (UAI), 2018

- [30] Richard A Davis, Keh-Shin Lii and Dimitris N Politis “Remarks on some nonparametric estimates of a density function” In Selected Works of Murray Rosenblatt Springer, 2011, pp. 95–100

- [31] Peter W Glynn and Donald L Iglehart “Importance sampling for stochastic simulations” In Management science 35.11 INFORMS, 1989, pp. 1367–1392

- [32] George Casella, Christian P Robert and Martin T Wells “Generalized Accept-Reject Sampling Schemes” In Lecture Notes-Monograph Series JSTOR, 2004, pp. 342–347

- [33] George Casella and Roger L Berger “Statistical inference” Duxbury Pacific Grove, CA, 2002

- [34] Alexander T Ihler, Erik B Sudderth, William T Freeman and Alan S Willsky “Efficient multiscale sampling from products of Gaussian mixtures” In Advances in Neural Information Processing Systems (NIPS), 2004, pp. 1–8

- [35] Alexey Dosovitskiy et al. “CARLA: An open urban driving simulator” In arXiv preprint arXiv:1711.03938, 2017

- [36] Alexandre Robicquet, Amir Sadeghian, Alexandre Alahi and Silvio Savarese “Learning social etiquette: Human trajectory understanding in crowded scenes” In European Conference on Computer Vision (ECCV) Springer, 2016, pp. 549–565

- [37] Vassili Alexiadis et al. “The next generation simulation program” In Journal of the Institute of Transportation Engineers (ITE) 74.8 Institute of Transportation Engineers, 2004, pp. 22

- [38] Simon T O’Callaghan, Surya PN Singh, Alen Alempijevic and Fabio T Ramos “Learning navigational maps by observing human motion patterns” In IEEE International Conference on Robotics and Automation (ICRA), 2011, pp. 4333–4340

- [39] Ralf Herbrich, Neil D Lawrence and Matthias Seeger “Fast sparse Gaussian process methods: The informative vector machine” In Advances in Neural Information Processing Systems (NIPS), 2003, pp. 625–632

- [40] M. Wang, Z. Wang, S. Paudel and M. Schwager “Safe Distributed Lane Change Maneuvers for Multiple Autonomous Vehicles Using Buffered Input Cells” In IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 4678–4684

- [41] Alyssa Pierson, Lucas C Figueiredo, Luciano CA Pimenta and Mac Schwager “Adapting to sensing and actuation variations in multi-robot coverage” In International Journal of Robotics Research (IJRR) 36.3 SAGE Publications Sage UK: London, England, 2017, pp. 337–354

- [42] Sebastian Thrun, Wolfram Burgard and Dieter Fox “Probabilistic Robotics” MIT Press, 2000

- [43] Liting Sun, Wei Zhan, Ching-Yao Chan and Masayoshi Tomizuka “Behavior planning of autonomous cars with social perception” In IEEE Intelligent Vehicles Symposium (IV), 2019, pp. 207–213

- [44] Keqi Shu et al. “Autonomous Driving at Intersections: A Critical-Turning-Point Approach for Left Turns” In arXiv preprint arXiv:2003.02409, 2020