Dimension-free Relaxation Times of Informed MCMC Samplers on Discrete Spaces

Abstract

Convergence analysis of Markov chain Monte Carlo methods in high-dimensional statistical applications is increasingly recognized. In this paper, we develop general mixing time bounds for Metropolis-Hastings algorithms on discrete spaces by building upon and refining some recent theoretical advancements in Bayesian model selection problems. We establish sufficient conditions for a class of informed Metropolis-Hastings algorithms to attain relaxation times that are independent of the problem dimension. These conditions are grounded in high-dimensional statistical theory and allow for possibly multimodal posterior distributions. We obtain our results through two independent techniques: the multicommodity flow method and single-element drift condition analysis; we find that the latter yields a tighter mixing time bound. Our results and proof techniques are readily applicable to a broad spectrum of statistical problems with discrete parameter spaces.

Keywords: Drift condition; Finite Markov chains; Informed Metropolis-Hastings; Mixing time; Model selection; Multicommodity flow; Random walk Metropolis-Hastings; Restricted spectral gap

1 Introduction

1.1 Convergence of MCMC Algorithms

Approximating probability distributions with unknown normalizing constants is a ubiquitous challenge in data science. In Bayesian statistics, the posterior probability distribution can be obtained through the Bayes rule, but without conjugacy, calculating its normalizing constant is a formidable challenge. In such scenarios, Markov chain Monte Carlo (MCMC) methods are commonly employed to simulate a Markov chain whose stationary distribution coincides with the target distribution. The convergence assessment of MCMC methods usually relies on an empirical diagnosis of Markov chain samples, such as effective sample size (Robert et al., 1999; Gong and Flegal, 2016) and Gelman-Rubin statistic (Gelman and Rubin, 1992), but the results can sometimes be misleading due to the intrinsic difficulty in detecting the non-convergence (Cowles and Carlin, 1996). For this reason, there has been a growing recognition of the importance of taking into account algorithmic convergence when evaluating Bayesian statistical models. A widely used metric for assessing the convergence of MCMC methods is the mixing time, which indicates the number of iterations required for the sampler to get sufficiently close to the target posterior distribution in total variation distance. Most existing studies on the mixing of MCMC algorithms consider Euclidean spaces. In particular, a very rich theory for log-concave target distributions has been developed (Dwivedi et al., 2019; Durmus and Moulines, 2019; Cheng et al., 2018; Dalalyan, 2017; Chewi et al., 2021), providing useful insights into the sampling complexity and guidance on the tuning of algorithm parameters. These results encompass a wide range of statistical models owing to the Bernstein-von Mises theorem (Belloni and Chernozhukov, 2009; Tang and Yang, 2022), which suggests that the posterior distribution becomes approximately normal (and thus log-concave) as the sample size tends to infinity. In contrast, the literature on the complexity of MCMC samplers for discrete statistical models is limited. Further, since every discrete space comes with its own combinatorial structure, researchers often choose to investigate each problem on a case-by-case basis rather than formulating a unified theory for arbitrary discrete spaces. Notable examples include variable selection (Yang et al., 2016), community detection (Zhuo and Gao, 2021), structure learning (Zhou and Chang, 2023), and classification and regression trees (CART) (Kim and Rockova, 2023).

Zhou and Chang (2023) undertook the endeavor to establish a general theoretical framework for studying the complexity of MCMC sampling for high-dimensional statistical models with discrete parameter spaces. They assumed a mild unimodal condition on the posterior distribution, akin to the log-concavity assumption in continuous-space problems, and derived mixing time bounds for random walk Metropolis-Hastings (MH) algorithms that were sharper than existing ones. Here “unimodal” means that other than the global maximizer, every state has a “neighbor” with strictly larger posterior probability, where two states are said to be neighbors if the proposal probability from one to the other is nonzero. Selecting this neighborhood relation often entails a trade-off, and the result of Zhou and Chang (2023) shows that rapid mixing can be achieved if (i) the neighborhood is large enough so that the posterior distribution becomes unimodal, and (ii) the neighborhood is not too large so that the proposal probability of each neighboring state is not exceedingly small. Techniques from high-dimensional statistical theory can be used to establish the unimodality in a way similar to how posterior consistency is proved, but the analysis is often highly complicated and tailored to individual problems. We will not explore these statistical techniques in depth in this paper; instead, our focus will be the mixing time analysis under the unimodal condition or a weaker condition that will be introduced later.

1.2 Main Contributions of This Work

Our first objective is to extend the result of Zhou and Chang (2023) to more sophisticated MH schemes that do not use random walk proposals. We consider the informed MH algorithm proposed in Zanella (2020), which emulates the behavior of gradient-based MCMC samplers on Euclidean spaces (Duane et al., 1987; Roberts and Stramer, 2002; Girolami and Calderhead, 2011) and has gained increasing attention among the MCMC practitioners (Grathwohl et al., 2021; Zhang et al., 2022). The proposal schemes used in the informed algorithms always assess the local posterior landscape surrounding the current state and then tune the proposal probabilities to prevent the sampler from visiting states with low posterior probabilities. However, such informed schemes do not necessarily result in faster mixing, because the acceptance probabilities can be extremely low for proposed states, and it was shown in Zhou et al. (2022) that, for Bayesian variable selection, naive informed MH algorithms can even mix much more slowly than random walk MH algorithms. We will show that this acceptance probability issue can be overcome by generalizing the “thresholding” idea introduced in Zhou et al. (2022). Moreover, if the posterior distribution is unimodal and tails decay sufficiently fast, we prove that there always exists an informed MH scheme whose relaxation time can be bounded by a constant, independent of the problem dimension, which immediately leads to a nearly optimal bound on the mixing time. Our result significantly generalizes the finding of Zhou et al. (2022), which only considered the high-dimensional variable selection problem.

Delving into the more technical aspects, we investigate and compare two different approaches to obtaining sharp mixing time bounds on discrete spaces: the multicommodity flow method (Giné et al., 1996; Sinclair, 1992), and the single-element drift condition (Jerison, 2016). The former bounds the spectral gap of the transition matrix by identifying likely paths connecting any two distinct states. Compared to another path argument, known as “canonical path ensemble” and commonly used in the statistical literature (Yang et al., 2016; Zhuo and Gao, 2021; Kim and Rockova, 2023; Chang et al., 2022), the multicommodity flow method is more flexible and can yield tighter bounds. The drift condition method is based on a coupling argument and stands as the most popular technique for deriving the convergence rates of MCMC algorithms on general state spaces (Rosenthal, 1995; Roy and Hobert, 2007; Fort et al., 2003; Johndrow et al., 2020). A notable difference from the path method is that this approach directly bounds the total variation distance from the stationary distribution without analyzing the spectral gap. The existing literature suggests that both methods have their own unique strengths, and which method yields a better mixing time bound depends on the problem (Jerrum and Sinclair, 1996; Guruswami, 2000; Anil Kumar and Ramesh, 2001). To our knowledge, the two approaches have never been compared for analyzing MCMC algorithms for discrete-space statistical models. Indeed, the only works we are aware of that use drift conditions in these contexts are Zhou et al. (2022) and Kim and Rockova (2023), which considered variable selection and CART, respectively. We will demonstrate how the two methods can be applied under the general framework considered in this paper, and it will be shown that the drift condition approach yields a slightly sharper bound.

As the last major contribution of this work, we further extend our general theory beyond unimodal settings. For multimodal target distributions, while various mixing time bounds have been obtained (Guan and Krone, 2007; Woodard et al., 2009; Zhou and Smith, 2022), it is generally impossible to obtain rapid mixing results where the mixing time grows only polynomially with respect to the problem dimension. This is because the definition of mixing time considers the worst-case scenario regarding the choice of the initial distribution, and the chain can easily get stuck if it is initialized at a local mode. When these local modes (other than the global one) possess only negligible posterior mass and are unlikely to be visited by the sampler given a “warm” initialization, mixing time may provide an overly pessimistic estimate for the convergence rate. One possible remedy was described in the recent work of Atchadé (2021), who proposed to study initial-state-dependent mixing times using restricted spectral gap, a notion that generalizes the spectral gap of a transition matrix, and derived a rapid mixing result for Bayesian variable selection. We extend this technique to our setting and show that it can be integrated with the multicommodity flow method to produce sharp mixing time bounds for both random walk and informed MH schemes.

| Setting | Proof techniques | Random walk MH | Informed MH | Require |

| warm start | ||||

| Unimodal | Path method | Thm 1: | Thm 2: | No |

| Drift condition | Not considered (Remark 6) | Thm 3: | No | |

| Beyond | Path method + | Thm 4: | Thm 5: | Yes |

| unimodal | restricted spectral gap |

1.3 Organization of the Paper

In Section 2, we review some recent results about the Bayesian variable selection problem, which will be used as an illustrative example throughout this paper; this section can be skipped for knowledgeable readers. Section 3 presents the setup for our theoretical analysis and reviews the mixing time bounds for random walk MH algorithms. In Section 4, we consider unimodal target distributions and prove mixing time bounds for informed MH algorithms via both the path method and drift condition analysis. Section 5 generalizes our results to a potentially multimodal setting. Simulation studies are conducted in Section 6, and Section 7 concludes the paper with some further discussion. A summary of the mixing time bounds obtained in this work is presented in Table 1.

Most technical details are deferred to the appendix. In Appendix A, we review the path method used in Zhou and Chang (2023) for bounding the spectral gaps of MH algorithms. In Appendix B, we review the result about restricted spectral gaps obtained in Atchadé (2021) and develop the multicommodity flow method for bounding restricted spectral gaps. Appendix C gives a brief review of the single-element drift condition of Jerison (2016). Proofs for all results presented in the main text are given in Appendix D.

2 Working Example: Variable Selection

We review in this section some recent advancements in understanding the complexity of MCMC sampling for high-dimensional spike-and-slab variable selection, one of the most representative examples for discrete-space models in Bayesian statistics (Tadesse and Vannucci, 2021).

2.1 Target Posterior Distribution

We consider the standard linear regression model where a design matrix and a response vector are assumed to satisfy

| (1) |

where is the unknown vector of regression coefficients and denotes the multivariate normal distribution. Introduce the indicator vector such that indicates that the -th variable has a non-zero effect on the response. Variable selection is the task of identifying the true value of , that is, finding the subset of variables with nonzero regression coefficients. Given , we can write with , where and , respectively, denote the submatrix and subvector corresponding to those variables selected in . We will also call a model, and will be referred to as the empty model. Consider the following two choices for the space of allowed models:

| (2) |

where denotes the -norm and is a positive integer. The set is the unrestricted space, while represents a restricted space with some sparsity constraint. Let denote the cardinality of a set. It is typically assumed in the high-dimensional literature that the sparsity parameter increases to infinity with , in which case both and grow super-polynomially with .

Spike-and-slab variable selection is a Bayesian procedure for constructing a posterior distribution over or , which we denote by . In Section 6.1, we recall one standard approach to specifying the prior distribution for , which leads to the following closed-form expression for up to a normalizing constant.

| (3) |

In (3), are prior hyperparameters and is the coefficient of determination. Exact calculation of the normalizing constant is usually impossible, because it would require a summation over the entire parameter space, which involves super-polynomially many evaluations of .

2.2 MH Algorithms for Variable Selection

To find posterior probabilities of models of interest or evaluate integrals with respect to , the most commonly used method is to use an MH algorithm to generate samples from . The transition probability from to in an MH scheme can be expressed by

where is the probability of proposing to move from to , and the associated acceptance probability is given by

| (4) |

ensuring that is the stationary distribution of .

The efficiency of the MH algorithm depends on the choice of , and there are many strategies for selecting the proposal neighborhood and assigning the proposal probabilities. Recall that neighborhood refers to the support of the distribution for each , which we denote by . Let us begin by considering random walk proposals such that is simply the uniform distribution on . To illustrate the importance of selecting a proper proposal neighborhood, we first present two naive choices that are bound to result in slow convergence.

Example 1.

Let the state space be and the proposal be a uniform distribution on for each . In this case, we get an independent MH algorithm, which is clearly ergodic but usually mixes very slowly. For example, suppose the true model is and the data is extremely informative. Even if the chain is initialized at the empty model, it takes on average iterations to propose moving to .

Example 2.

Let be a one-to-one mapping defined by . Define . In words, we number all elements of from to , and the proposal is a simple random walk on . The resulting MH algorithm is also ergodic, but again the mixing is slow, since it requires at least steps to move from the empty model to the full model .

The neighborhood size is exponential in in Example 1 and is a fixed constant in Example 2. Both are undesirable, and it is better to use a neighborhood with size polynomial in . The following choice is common in practice:

| (5) |

The set contains all the models that can be obtained from by either adding or removing a variable. When the design matrix contains highly correlated variables, it is generally considered that is too small and introducing “swap” moves is beneficial, which means to remove one variable and add another one at the same time. The resulting random walk MH algorithm is often known as the add-delete-swap sampler; denote its neighborhood by , which is defined by

| (6) |

We will treat and as neighborhood relations defined on . When implementing the add-delete-swap sampler on the restricted space , one can still propose from and simply reject the proposal if . Note that .

We end this subsection with two comments. First, a popular alternative to MH algorithms is Gibbs sampling. Consider a random-scan Gibbs sampler that randomly picks and updates from its conditional posterior distribution given the other coordinates. It is not difficult to see that this updating is equivalent to randomly proposing and accepting with probability .111 In a general sense, this random-scan Gibbs sampler is also an MH scheme according to the original construction in Hastings (1970). By Peskun’s ordering (Mira, 2001), this Gibbs sampler is less efficient than the random walk MH algorithm with proposal neighborhood (George and McCulloch, 1997).

Second, for simplicity, we assume in this work that is a uniform distribution on for random walk proposals. But in practice, these proposals can be implemented in a more complicated, non-uniform fashion. For example, one can first choose randomly whether to add or remove a variable, and then given the type of move, a proposal of that type is generated with uniform probability. This distinction has minimal impact on all theoretical results we will develop.

2.3 Rapid Mixing of a Random Walk MH Algorithm

The seminal work of Yang et al. (2016) considered a high-dimensional setting with and , and they proved that, under some mild assumptions, the mixing time of the add-delete-swap sampler on the restricted space has order , polynomial in ; in other words, the sampler is rapidly mixing. To provide intuition about this result and proof techniques, which will be crucial to the understanding of the theory developed in this work, we construct a detailed illustrative example.

Example 3.

Let and be such that for , , and ; the three explanatory variables are highly correlated. Let be generated by

where is orthogonal to each explanatory variable, i.e., for each , and . We calculate the un-normalized posterior probabilities by (3) for all models with , and ; the values are given in Table 2.

| Local mode | Local mode | Local mode | |||

|---|---|---|---|---|---|

| w.r.t. | w.r.t. | w.r.t. | |||

| 1 | 0 | No | No | No | |

| 0.8704 | 63.98 | No | No | No | |

| 1 | -2.76 | No | No | No | |

| 0.8236 | 90.46 | No | No | No | |

| 0.64 | 207.70 | Global mode | Global mode | Global mode | |

| 0.8219 | 88.69 | No | No | No | |

| 0.7243 | 148.95 | No | Yes | No | |

| 0.64 | 204.90 | No | – | – |

The true model, , is the global mode of , which is expected since is small but is large. Further, we indicate in Table 2 if each model is a local mode with respect to the given search space and neighborhood relation. For example, we say is a local mode with respect to if for every . When there is only one local mode (which must be in this example), we say is unimodal. Table 2 shows that is unimodal with respect to , but it is multimodal with respect to and the other local mode is . A graphical illustration is given in Figure 1. Another interesting observation is that does not necessarily increase as gets closer to . We have , while the distance from each of the three models to is strictly decreasing. This happens due to the correlation structure among the three variables, and in high-dimensional settings, such collinearity is very likely to occur between some variables. We will frequently revisit this example in later discussions.

In Example 3, is unimodal with respect to . An important intermediate result of Yang et al. (2016) was that, with high probability, such a unimodality property still holds in the high-dimensional regime they considered, and the global mode coincides with the true model . This implies that the add-delete-swap sampler is unlikely to get stuck at any , since there always exists some neighboring state such that and thus . This is the main intuition behind the rapid mixing proof of Yang et al. (2016). Interestingly, it was shown in Yang et al. (2016) that the unimodality is unlikely to hold on the unrestricted space , since local modes can easily occur on the set , though it has negligible posterior mass. Since these local modes can easily trap the sampler for a huge number of iterations, they had to consider the restricted space in order to obtain a rapid mixing result. They also found that without using swap moves, local modes are likely to occur at the boundary of the restricted space. (In Example 3, is such a local mode.) This is exactly the reason why swaps are required for their rapid mixing proof. More generally, for the MCMC convergence analysis of high-dimensional model selection problems with sparsity constraints, examining the local posterior landscape near the boundary seems often a major technical challenge (Zhou and Chang, 2023). Nevertheless, the practical implications of this theoretical difficulty is largely unclear. Even if we choose as the search space, in practice, the sampler is typically initialized within , and if is large enough, we will probably never see the chain leave , and thus the landscape of on is unimportant.

The above discussion naturally leads to the following question: assuming a warm initialization, can we obtain a “conditional” rapid mixing result on the unrestricted space ? Here, “warm” means that the posterior probability of the model is not too small, and we use to denote such an estimator, which can often be obtained by a frequentist variable selection algorithm. An affirmative result was given in Pollard and Yang (2019), where a random walk MH algorithm on is constructed such that it proposes models from and is allowed to immediately jump back to whenever becomes too large. They assumed was obtained by the thresholded lasso method (Zhou, 2010). An even stronger result was obtained recently in Atchadé (2021), who showed that by only using addition and deletion proposals, the random walk MH algorithm on is still rapidly mixing given a warm start. The proof of Atchadé (2021) utilizes a novel technique based on restricted spectral gap, which we will discuss in detail later.

2.4 Rapid Mixing of an Informed MH Algorithm

Now consider informed proposal schemes (Zanella, 2020), which will be the focus of our theoretical analysis. Unlike random walk proposals which draw a candidate move indiscriminately from the given neighborhood, informed schemes compare the posterior probabilities of all models in the neighborhood and then assign larger proposal probabilities to those with larger posterior probabilities. One might conjecture that such an informed MH sampler may behave similarly to a greedy search algorithm and can quickly find the global mode if the target distribution is unimodal. But a naive informed proposal scheme may lead to performance even much worse than a random walk MH sampler, as illustrated in the following example.

Example 4.

Consider Example 3 and Table 2 again. Let the informed proposal be given by

| (7) |

where is the normalizing constant; that is, the probability of proposing to move from to is proportional to . Suppose that the chain is initialized at the empty model . A simple calculation yields where . But the acceptance probability is

Hence, we will probably never see the chain leave . In contrast, for the random walk MH algorithm with proposal neighborhood , the probability that the chain stays at is less than 1/3.

In order to avoid scenario similar to Example 4, Zhou et al. (2022) proposed to use informed schemes with bounded proposal weights. They constructed an informed add-delete-swap sampler and showed that, under the high-dimensional setting considered by Yang et al. (2016), the mixing time on the restricted space is , thus independent of the problem dimension parameter . Since each iteration of informed proposal requires evaluating the posterior probabilities of all models in the current neighborhood, the actual complexity of their algorithm should be , comparable to the mixing time of the random walk MH sampler. The intuition behind the proof of Zhou et al. (2022), which was based on a drift condition argument, is similar to that of Yang et al. (2016). The unimodality of implies that any has one or more neighboring models with larger posterior probabilities, and they showed that, for their sampler, the transition probability to such a “good” neighbor is bounded from below by a universal constant.

In the remainder of this paper, we develop general theories that extend the results discussed in this section to arbitrary discrete spaces.

3 Theoretical Setup and Preliminary Results

3.1 Notation and Setup for Theoretical Analysis

Let denote a probability distribution on a finite space . Let be endowed with a neighborhood relation , and we say is a neighbor of if and only if . We assume satisfies three conditions: (i) for each , (ii) whenever , and (iii) is connected.222“Connected” means that for any , there is a sequence such that for . For our theoretical analysis, we will assume the triple is given and analyze the convergence of MH algorithms that propose states from . Define

| (8) |

For most high-dimensional statistical problems, grows at a super-polynomial rate with respect to some complexity parameter , while only has a polynomial growth rate. Below are some examples.

-

(i)

Variable selection. As discussed in Section 2, we can let and , in which case we have . If we consider the restricted space , we have .

-

(ii)

Structure learning of Bayesian networks. Given variables, one can let be the collection of all -node Bayesian networks (i.e., labeled directed acyclic graphs). Let be the collection of all Bayesian networks that can be obtained from by adding, deleting or reversing an edge (Madigan et al., 1995). We have since the graph has at most edges, while is super-exponential in by Robinson’s formula (Robinson, 1977).

-

(iii)

Ordering learning of Bayesian networks. Every Bayesian network is consistent with at least one total ordering of the nodes such that node precedes node whenever there is an edge from to . To learn the ordering, we can let be all possible orderings of , which is the symmetric group with degree . Clearly, is super-exponential in . We may define as the set of all orderings that can be obtained from by a random transposition, which interchanges any two elements of while keeping the others unchanged (Chang et al., 2023; Diaconis and Shahshahani, 1981). This yields .

-

(iv)

Community detection. Suppose there are nodes forming communities. One can let be the collection of all label assignment vectors that specify the community label for each node, and define as the set of all assignments that differ from by the label of only one node (Zhuo and Gao, 2021). We have and .

-

(v)

Dyadic CART. Consider a classification or regression tree problem where the splits are selected from pre-specified locations. Assume for some integer , and consider the dyadic CART algorithm, a special case of CART, where splits always occur at midpoints (Donoho, 1997; Castillo and Ročková, 2021; Kim and Rockova, 2023). The search space is the collection of all dyadic trees with depth less than or equal to (in a dyadic tree, every non-leaf node has 2 child nodes). One can show that is exponential in . For , we define as the set of dyadic trees obtained by either a “grow” or “prune” operation; “grow” means to add two child nodes to one leaf node, and “prune” means to remove two leaf nodes with a common parent node. Then .

Let be the transition matrix of an irreducible, aperiodic and reversible Markov chain with stationary distribution . For all Markov chains we will analyze, moves on the graph ; that is, . Define the total variation distance between and another distribution by . For , let

| (9) |

which can be seen as the “conditional” mixing time of given the initial state . Define the mixing time of by

| (10) |

It is often assumed in the literature that , because one can show that for any (Levin and Peres, 2017, Chap. 4.5). However, since such an inequality does not hold for , we will treat as an arbitrary constant in in this work. We can quantify the complexity of an MH algorithm by the product of its mixing time and the complexity per iteration.

It is well known that mixing time can be bounded by the spectral gap (Sinclair, 1992). Our assumption on implies that it has real eigenvalues (Levin and Peres, 2017, Lemma 12.1). The spectral gap is defined as and satisfies the inequality

| (11) |

where . The quantity is known as the relaxation time. In our mixing time bounds, we will always work with the “lazy” version of , which is defined by . That is, for any , we set . Since all eigenvalues of are non-negative, always equals the second largest eigenvalue of .

3.2 Unimodal Conditions

To characterize the modality and tail behavior of , we introduce another parameter :

| (12) | ||||

| (13) |

If in the above equation is not uniquely defined, fix as one of the modes according to any pre-specified rule. If , we say is unimodal with respect to , since for any , there is some neighboring state such that . In our subsequent analysis of MH algorithms, we will consider unimodal targets with (for random walk MH) or (for informed MH). These conditions ensure that the tails of decay sufficiently fast. To see this, for , let denote the set of states that need at least steps to reach . Then, , which decreases to exponentially fast if .

As discussed in Section 2, for high-dimensional variable selection, the unimodal property has been rigorously established on with . A careful examination of the proof of Yang et al. (2016) reveals that, since is polynomial in , the proof for and that for are essentially the same; see the discussion in Section S3 of Zhou et al. (2022). The unimodal condition has also been proved for other high-dimensional statistical problems, including structure learning of Markov equivalence classes (Zhou and Chang, 2023), community detection (Zhuo and Gao, 2021), and dyadic CART (Kim and Rockova, 2023). It should be noted that how to choose a proper so that or can be a very challenging question (e.g., for structure learning), which we do not elaborate on in this paper.

In order to achieve rapid mixing, such unimodal conditions are arguably necessary, since for general multimodal targets, the chain can get trapped at some local mode for an arbitrarily large amount of time. We further highlight two reasons why the unimodal analysis is important and not as restrictive as it may seem. First, mixing time bounds for unimodal targets are often the building blocks for more general results in multimodal scenarios. One strategy that will be discussed shortly is to use restricted spectral gap. Another approach is to apply state decomposition techniques (Madras and Randall, 2002; Jerrum et al., 2004; Guan and Krone, 2007), which works for general multimodal targets; see, e.g., Zhou and Smith (2022). Second, as explained in Remark 4 of Zhou and Smith (2022), the condition is very similar to log-concavity on Euclidean spaces, since it implies unimodality and exponentially decaying tails. Moreover, as we have illustrated in Example 3, for a variable selection problem with fixed and sufficiently large , is typically unimodal, but may not increase as gets closer to the mode in distance. This suggests that our unimodal condition is conceptually more general than log-concavity, since the latter also implies unimodality in a slice taken along any direction.

For the analysis beyond the unimodal setting, we consider a subset and only impose unimodality on . Let denote restricted to , that is, . Mimicking the definition of , we define by restricting ourselves to :

| (14) | ||||

| (15) |

If , we say is unimodal on with respect to . Note that also implies that is connected. In Section 5, we will study the mixing times of MH algorithms assuming (or ) for some such that is sufficiently large.

3.3 Mixing Times of Random Walk MH Algorithms

We first review the mixing time bound for random walk algorithms obtained in Zhou and Chang (2023) under a unimodal condition. Recall that we assume the random walk proposal scheme can be expressed as

The resulting transition matrix of random walk MH can be written as

| (19) |

Yang et al. (2016) used (11) and the “canonical path ensemble” argument to bound the mixing time of the add-delete-swap sampler for high-dimensional variable selection. As observed in Zhou and Smith (2022) and Zhou and Chang (2023), the method of Yang et al. (2016) is applicable to the general setting we consider. The only assumption one needs is that the triple satisfies , which ensures that, for any , we can identify a path such that for each . A canonical path ensemble is a collection of such paths, one for each , and then a spectral gap bound can be obtained by identifying the maximum length of a canonical path and the edge subject to the most congestion. It was shown in Zhou and Smith (2022) that the bound of Yang et al. (2016) can be further improved by measuring the length of each path using a metric depending on (instead of counting the number of edges). The following result is a direct consequence of (11) and Lemma 3 of Zhou and Smith (2022).

Theorem 1.

Assume . Then, we have , and

| (20) |

where

| (21) |

Remark 1.

For high-dimensional statistical models, we say that a random walk MH algorithm is rapidly mixing if for fixed , scales polynomially with some complexity parameter . As discussed in Section 3.1, is typically polynomial in by construction. Hence, to conclude rapid mixing from Theorem 1, it suffices to establish two conditions: (i) is polynomial in , and (ii) , which implies . This line of argument is used in most existing works on the complexity of MCMC algorithms for high-dimensional model selection problems; see Yang et al. (2016); Zhou and Chang (2023); Zhuo and Gao (2021) and Kim and Rockova (2023). In particular, under common high-dimensional assumptions, often grows to infinity at a rate polynomial in . Note that if , we also have by Theorem 1, which is a consistency property of the underlying statistical model.

4 Dimension-free Relaxation Times of Informed MH Algorithms

4.1 Informed MH Algorithms

Informed MH algorithms generate proposal moves after evaluating the posterior probabilities of all neighboring states. Given a weighting function , we define the informed proposal by

| (22) |

that is, draws from the set with probability proportional to . Intuitively, one wants to let be non-decreasing so that states with larger posterior probabilities receive larger proposal probabilities. Choices such as or were analyzed in Zanella (2020). It was observed in Zhou et al. (2022) and also illustrated by our Example 4 that for problems such as high-dimensional variable selection, such choices could be problematic and lead to even worse mixing than random walk MH algorithms. To gain a deeper insight, we write down the transition matrix of the induced MH algorithm:

| (23) |

We expect that proposes with high probability some such that . But , which depends on the local landscape of on , can be arbitrarily small if is unbounded, causing the acceptance probability of the proposal move from to to be exceedingly small.

The solution proposed in Zhou et al. (2022) was to use some that is bounded both from above and from below. In this work, we consider the following choice of :

| (24) |

where are some constants. Henceforth, whenever we write or , it is understood that takes the form given in (24). We now revisit Example 3 and show that this bounded weighting scheme overcomes the issue of diminishing acceptance probabilities.

Example 5.

Consider Example 3. It was shown in Example 4 that if an unbounded informed proposal is used, the algorithm can get stuck at , since it keeps proposing of which the acceptance probability is almost zero. Now consider an informed proposal with defined in (24) and , . By Table 2, this yields proposal probability ; thus, receives larger proposal probability than in the random walk proposal. In contrast to the scenario in Example 4, the acceptance probability of this move equals , since

We can also numerically calculate that the spectral gaps of (random walk MH) and are 0.334 and 0.582, respectively, which shows that the informed proposal accelerates mixing. Since is very small in this example, the advantage of the informed proposal is not significant.

What we have observed in Example 5 is not a coincidence. The following lemma gives simple conditions under which an informed proposal is guaranteed to have acceptance probability equal to .

Lemma 1.

Let . Assume that and . Then,

That is, an informed proposal from to has acceptance probability .

Proof.

See Appendix D. ∎

The assumption used in Lemma 1 is weak, and it will be satisfied if has one neighboring state such that , which is true if .

4.2 Dimension-Free Relaxation Time Bound via the Multicommodity Flow Method

Using Lemma 1 and Theorem 2 of Zhou and Chang (2023), we can now prove a sharp mixing time bound for informed MH algorithms under the assumption .

Theorem 2.

Proof.

See Appendix D. ∎

Remark 2.

Recall that is called the relaxation time, which can be used to derive the mixing time bound by (11). If we consider an asymptotic regime where and , by Theorem 2, the relaxation time for informed MH algorithms is bounded from below by a universal constant (independent of the problem dimension). In particular, this relaxation time bound is improved by a factor of , compared to that for random-walk MH algorithms in Theorem 1. Since the spectral gap of a transition matrix cannot be greater than 2, the order of the relaxation time bound in Theorem 2 is optimal, and we say it is “dimension-free”.

Remark 3.

Lemma 1 and Theorem 2 provide useful guidance on the choice of in (24). For most problems, after specifying the proposal neighborhood , we can simply set , which is typically easy to calculate or bound. Regarding the choice of , according to Theorem 2, for unimodal targets one may use or for some small . For multimodal targets, the simulation studies in Zhou et al. (2022) suggest that one may want to choose such that the ratio is smaller; in other words, one wants to use a more conservative informed proposal that is not overwhelmingly in favor of the best neighboring state.

The proof of Theorem 2 utilizes the multicommodity flow method (Sinclair, 1992), which generalizes the canonical path ensemble argument and allows us to select multiple likely transition routes between any two states. If one uses the canonical path ensemble, the resulting relaxation time bound for informed MH algorithms will still involve a factor of as in Theorem 1. A detailed review of the multicommodity flow method is given in Appendix A.

4.3 Better Mixing Time Bounds via the Drift Condition

The mixing time analysis we have carried out so far relies on employing path methods to establish bounds on the spectral gap—an approach that is predominant in the literature on finite-state Markov chains. One exception was the recent work of Zhou et al. (2022), who used drift condition to study the mixing time of an informed add-delete-swap sampler for Bayesian variable selection. In this section, we show that their method can also be applied to general discrete spaces, and it can be used to improve the mixing time bound obtained in Theorem 2 in an asymptotic setting.

We still let . The strategy of the proof is to establish a drift condition on , which means that for some and ,

| (26) |

where Then, one can apply Theorem 4.5 of Jerison (2016) to obtain a mixing time bound of the order . The main challenge is to find an appropriate such that is as small as possible. After several trials, we find that the choice,

| (27) |

yields the desired mixing rate given in Theorem 3 below. This drift function is similar to but simpler than the one used in Zhou et al. (2022) for variable selection. By definition, always decreases as increases since , and is bounded on .

Theorem 3.

Assume . Choose and . If satisfies

| (28) |

then

| (29) |

Proof.

See Appendix D. ∎

Remark 4.

The order of the mixing time bound in Theorem 3 is typically better than that in Theorem 2. To see this, let denote the complexity parameter as introduced in Section 3.1 and assume and for constants with . Then, Theorem 3 implies

which improves the bound in Theorem 2 by a factor of . The additional condition on given in (28) is very weak. It holds as long as

In particular, (28) is satisfied if for some universal constant .

Remark 5.

The mixing time bound does not take into account the computational cost per iteration. When implementing informed MH algorithms in practice, one should consider the cost of posterior evaluation of all neighboring states in each proposal. Theorems 2 and 3 suggest that there at least exists some informed MH algorithm whose mixing rate is fast enough to compensate for the additional computation cost. When parallel computing resources are available, one can reduce the cost of informed proposals significantly by parallelizing the posterior evaluation.

Remark 6.

The drift function given in (27) cannot be used to study the mixing times of random walk MH algorithms under the unimodal setting we consider. To see this, suppose for all and that there exist such that (i) , and (ii) for any , , which implies . Then,

Assuming and , we can rewrite the above equation as

Hence, as long as , the right-hand side of the above equation is asymptotically positive. Consequently, is asymptotically greater than , which means that there does not exist satisfying (26).

5 Mixing Times in a Multimodal Setting

As we have seen in Section 2, when we let be the unrestricted search space for a high-dimensional statistical problem, we usually do not expect that is unimodal on . In particular, for high-dimensional model selection problems, local modes can easily occur at some non-sparse models. However, we can often establish the unimodality on some subset . Moreover, in reality, we typically initialize the MH algorithm at some state in (e.g. a sparse model), and if is large enough, we probably will not see the chain leave during the entire run. This suggests that the behavior of the chain on may not matter if we only care about the mixing of the chain given a good initialization. In this section, we measure the convergence of MH algorithms using the initial-state-dependent mixing time defined in (9) and generalize the previous results to a potentially multimodal setting where or . Combining the restricted spectral gap argument of Atchadé (2021) with the multicommodity flow method, we obtain the following theorem, which shows that the mixing will be fast if the chain has a warm start and is sufficiently small.

Theorem 4.

Proof.

See Appendix D. ∎

Remark 7.

We use variable selection to illustrate how Theorem 4 is typically utilized in high-dimensional settings. Set and . Since it was already shown in Yang et al. (2016) that under some mild assumptions, it only remains to verify conditions (ii) and (iii) in Theorem 4. If they can be established for some and such that is polynomial in , then is also polynomial in . The existing literature (Narisetty and He, 2014) suggests that condition (iii) is likely to hold for where is a relatively large fixed constant, and thus polynomial complexity of a random walk MH is guaranteed if . See Atchadé (2021) for a proof of warm start under the assumption that the initial model has bounded size and no false negative.

Similarly, we can also use the argument of Atchadé (2021) to extend Theorem 2 to the multimodal setting. However, this time we need an additional assumption on the behavior of at the boundary of the set . It is given in (31) below and is used to ensure that is sufficiently small for any at the boundary of .

Theorem 5.

Proof.

See Appendix D. ∎

6 Numerical Examples

6.1 Bayesian Variable Selection

We consider the Bayesian variable selection model studied in Yang et al. (2016), which assumes

and uses the following prior distribution on :

| (33) | ||||

By integrating out the model parameters , we obtain the marginal posterior distribution given in (3), which is a function of and prior hyperparameters. Since is singular if , we will work with the search space defined in (2), which is equivalent to setting for any such that .

Throughout our simulation studies, we set , , sample size and number of variables . We always generate by with . The first 5 elements of are set to

where we use the notation ; all the other elements of are set to zero. Let denote the true model, which satisfies . We let all rows of the design matrix be i.i.d from , and consider two choices the covariance matrix :

-

(i)

(moderate correlation) for ;

-

(ii)

(high correlation) for .

We set for in both cases.

In all the data sets we have simulated, regardless of the simulation setting, we find that is always the global mode of with significant posterior mass. Hence, we use the number of iterations needed to reach as an indicator of the mixing of the chain (Peres and Sousi, 2015). We compare three different MCMC methods.

-

(a)

RWMH: the random walk MH algorithm described in Section 3.3.

-

(b)

IMH: the informed MH algorithm described in Section 4 with and .

-

(c)

IMH-unclipped: the informed MH algorithm described in Section 4 with and .

For all three algorithms, we use as the proposal neighborhood; that is, the algorithms only propose the next model by adding or deleting a variable. For each integer , we use

to denote the set of all models involving variables. The initial model will be sampled from uniformly for some . We always run RWMH for 10,000 iterations and run each informed MH algorithm for 1,500 iterations. We report the following metrics.

-

•

Success: the number of runs where the algorithm samples the true model.

-

•

: the median number of iterations needed to sample the true model for the first time.

-

•

Time: the median wall time measured in seconds.

-

•

: the median wall time (in seconds) needed to sample the true model for the first time.

6.1.1 Moderately correlated design

We first generate one data set where the covariance matrix of exhibits moderate correlation. We initialize RWMH and IMH at models uniformly sampled from and study the effect of on the mixing. For each choice of , we run the algorithms 100 times. Results are presented in Figure 2 and Table 3.

We first observe that the mixing of the chain depends on the initialization, and local modes are likely to occur among non-sparse models. As illustrated by Figure 2, when the chain is initialized at some , it always gets stuck and barely moves. This is probably because a randomly generated is likely to be a local mode on . As argued in Yang et al. (2016), yields a perfect fit with , and for a neighboring model to have a larger posterior probability, it must achieve a nearly perfect fit, which is unlikely. If we sample the initial model from for smaller , the performance of the algorithms gets improved dramatically, as shown in both Figure 2 and Table 3. This aligns well with the theory developed in Section 5: seems to be (at least approximately) unimodal on for some much smaller than , and given a warm start, both RWMH and IMH mix quickly.

Another observation from Figure 2 and Table 3 is that, compared to RWMH, IMH needs a better initialization to achieve fast mixing. Specifically, for RWMH, we need for most runs to be “successful” (i.e., find the true model ), and for IMH, we need . We believe this is because informed algorithms, which behave similarly to gradient-based samplers on Euclidean spaces, have a stronger tendency to move to nearest local modes than RWMH. Consequently, the performance of IMH is more sensitive to the initialization. This observation also partially explains why the condition (31) is required in Theorem 5, which is not needed when we analyze RWMH.

Next, we consider a more realistic initialization scheme, where all samplers are started at some uniformly drawn from . We generate 100 replicates of under the moderately correlated setting, and compare the performance of RWMH, IMH and IMH-unclipped. The result is summarized in Table 4. Both RWMH and IMH hit within the specified total number of iterations, and IMH achieves this in much fewer iterations. This is expected, since Theorem 1 and Theorem 3 suggest that IMH mixes faster by a factor with the order of (for this variable selection problem, ).

We also note that IMH-unclipped exhibits very poor performance in Table 4, which is expected according to the reasoning explained in Example 4. It shows that selecting appropriate values for and in (24) is crucial to the performance of informed MH algorithms in applications like variable selection.

| Initialization | ||||||||

|---|---|---|---|---|---|---|---|---|

| RWMH | Success | 99 | 100 | 100 | 100 | 99 | 36 | 0 |

| 1548 | 2322 | 2584 | 2602 | 2620 | – | – | ||

| IMH | Success | 100 | 100 | 100 | 97 | 7 | 0 | 0 |

| 5 | 103 | 155 | 179 | – | – | – | ||

| RWMH | IMH | IMH-unclipped | |

|---|---|---|---|

| Success | 100 | 100 | 0 |

| 1811 | 27 | – | |

| Time | 13.6 | 9.3 | 35.3 |

| 9.1 | 7.5 | – |

6.1.2 Highly correlated design

When the design matrix exhibits a high degree of collinearity, we expect that can be highly multimodal, and local modes may occur on even for small . Hence, unlike in the moderately correlated setting where we only need to initialize the sampler at a sufficiently sparse model, we may need to impose much stronger assumptions on the initialization so that the samplers can quickly find the true model.

To investigate this, we generate 100 replicates of under the highly correlated setting, and consider two initialization schemes studied in Atchadé (2021). We denote them by and . Both and include 50 randomly sampled variables that are not in (i.e., false positives), and for each , while . Hence, satisfies and has 5 false negatives, and satisfies with no false negative. Table 5 summarizes the results. When started at , both samplers fail to find in most replicates, and IMH is still more sensitive to nearby local modes as in the simulation study with moderately correlated design. When started at , both algorithms recover quickly, and IMH has a slightly higher success rate than RWMH. This result suggests that is probably highly multimodal among models with false negatives (recall that in Example 3, the posterior probability can decrease when we add some variable that is in the true model), and the identification of requires a warm start that already includes as a submodel.

| RWMH | IMH | |||

| Initialization | ||||

| Success | 27 | 95 | 3 | 100 |

| – | 2198 | – | 51 | |

| Time | 13.2 | 13.5 | 13.1 | 19.6 |

| – | 9.5 | – | 8.7 | |

6.2 Bayesian Community Detection

Consider the community detection problem with only two communities, where the goal is to estimate the community assignment vector from an observed undirected graph represented by a symmetric adjacency matrix . Here denotes the community assignment of the -th node. We construct a posterior distribution by following the model and prior distribution used in Zhuo and Gao (2021) and Legramanti et al. (2022):

| (34) | ||||

where denotes the prior distribution of and is the edge connection probability between one node from community and another from community . By integrating out , we obtain a posterior distribution on the finite space . Note that due to label switching, we have , where is defined by .

In the simulation, we set and let the true community assignment vector be given by if and otherwise. We generate the observed graph by sampling each (with ) independently from when and from when . We use and so that the two communities are well separated in the observed graph.

We consider both random walk MH (RWMH) and informed MH (IMH) algorithms, whose proposal neighborhood is defined as , where is the Hamming distance. In words, the proposal only changes the community assignment of one node. For IMH, this time we use and (reason will be explained later). We simulate 100 data sets, and for each data set we run RWMH for 20,000 iterations and IMH for 2,000 iterations. We consider two different initialization schemes, denoted by and . The assignment vector is randomly generated such that half of the community assignments are incorrect, while is generated with only one third of the assignments being incorrect. We summarize the results in Table 6 where the four metrics are defined similarly to those used in Section 6.1. Note that we treat both and as the true model, where for each .

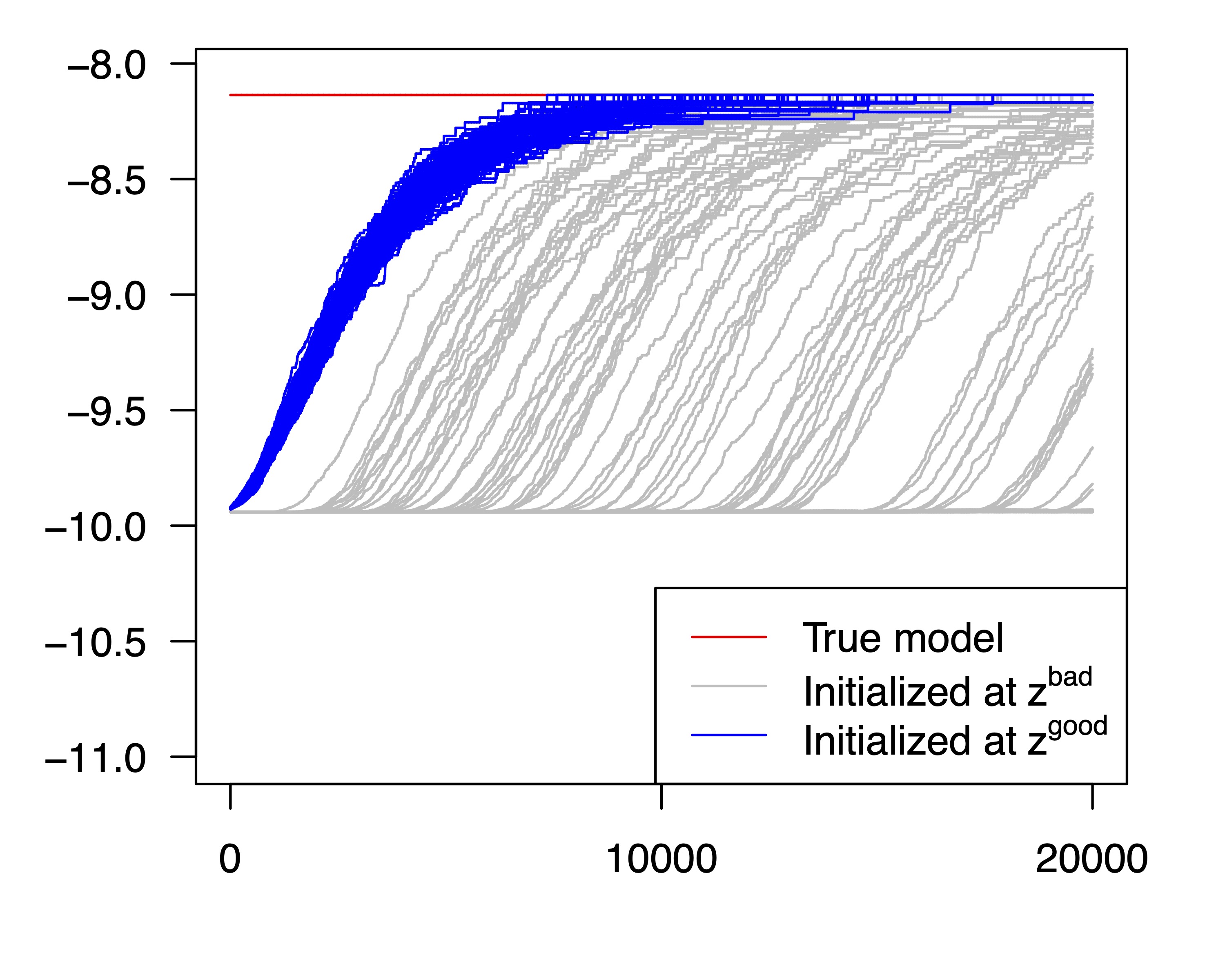

The result confirms our theoretical findings in Section 5 regarding the effect of initialization on the mixing. When we initialize the samplers at , fewer than 50 % of the runs reach the true assignment vector up to label switching, while all chains find the true assignment within a reasonable number of iterations when is used for initialization. In Figure 3, we plot the scaled log-posterior trajectories of 100 MCMC runs for one simulated data set. The figure shows that some runs initialized at get trapped near it, and when the chain is initialized at , it exhibits much faster mixing.

We have also tried IMH with and and observed that in all 100 replicates with initial state , the sampler fails to find the true assignment vector and gets stuck around . We have numerically examined the posterior landscape around and found that the ratio , where , is always very small (often around ). Hence, an informed proposal with only proposes with probability (and may get rejected due to small acceptance rates). For comparison, using assigns larger proposal probability to and sometimes does help IMH move away from , as shown in Figure 3.

| RWMH | IMH | |||

| Initialization | ||||

| Success | 41 | 100 | 49 | 100 |

| – | 10901 | – | 333 | |

| Time | 8.4 | 6.8 | 76.1 | 75.5 |

| – | 5.6 | – | 13.4 | |

7 Discussion and Concluding Remarks

Our theory suggests that an MH algorithm converges fast if it is initialized within a set such that is large and is unimodal on with respect to the given neighborhood relation . In practice, it is difficult to determine if is indeed unimodal with respect to , and thus a larger proposal neighborhood is sometimes preferred to prevent the chain from getting stuck at local modes. For example, in variable selection, one can enlarge the proposal neighborhood by allowing adding or removing multiple variables simultaneously, which is a common practice in the literature (Lamnisos et al., 2009; Guan and Stephens, 2011; Titsias and Yau, 2017; Zhou and Guan, 2019; Liang et al., 2022; Griffin et al., 2021). However, using a too large neighborhood is not desirable either, which is sometimes called the “Goldilocks principle” (Rosenthal, 2011). Our theory suggests that we should try to use the smallest neighborhood that leaves unimodal on . Developing diagnostics related to the unimodal condition could be an interesting direction for future work and provide guidance on choosing the neighborhood size in adaptive methods (Griffin et al., 2021; Liang et al., 2022).

One important technical contribution of this work is that we have demonstrated how to use multicommodity flow method and single-element drift condition to derive mixing time bounds in the context of discrete-space statistical models. To our knowledge, both methods have been rarely considered in the literature on high-dimensional Bayesian statistics. For random walk MH algorithms, the canonical path ensemble method may already provide a nearly optimal spectral gap bound, but for informed MH algorithms, the use of multicommodity flow is crucial to obtaining the dimension-free estimate of the relaxation time. Regarding the drift condition analysis, general theoretical results have been obtained for random walk or gradient-based MH algorithms on Euclidean spaces. In particular, the drift function ( denotes the Lebesgue density) has been used to prove geometric ergodicity (Roberts and Tweedie, 1996; Jarner and Hansen, 2000) and to derive explicit convergence rate bounds in the recent work of Bhattacharya and Jones (2023). This choice of is conceptually similar to the one we introduce in (27), , where the exponent is chosen deliberately to optimize the mixing time bound. It would be interesting to investigate whether one can also improve the mixing time estimates on Euclidean spaces by using a different exponent (though our choice will not be applicable since becomes zero on unbounded spaces).

Both our theory and simulation studies suggest that informed MH algorithms require stronger assumptions on the posterior distribution or initialization scheme to achieve fast mixing. An open question is whether informed samplers still have theoretical guarantees under the regime , which is not covered by our results such as Theorem 2. Note that by Theorem 1, random walk MH algorithms mix rapidly in this case.

Finally, we point out that MH sampling schemes may not be the most efficient way to utilize informed proposals. The informed importance tempering algorithm proposed in Zhou and Smith (2022) and Li et al. (2023), which generalizes the tempered Gibbs sampler of Zanella and Roberts (2019), allows one to always accept informed proposals and use importance weighting to correct for the bias. It was shown in Li et al. (2023) that this technique can also be applied to multiple-try Metropolis algorithms (Liu et al., 2000; Chang et al., 2022; Gagnon et al., 2023), making them rejection-free. These multiple-try methods enable the calculation of an informed proposal only on a random subset of , with the subset’s size adjustable according to available computing resources, which makes them highly flexible and easily applicable to general state spaces.

Acknowledgement

The authors were supported by NSF grants DMS-2311307 and DMS-2245591. They would like to thank Yves Atchadé for helpful discussion about restricted spectral gap.

Appendix

Appendix A Review of Path Methods

A.1 Spectral Gap and Path Methods

Let denote the transition probability matrix of an irreducible, aperiodic and reversible Markov chain on the finite state space . Let denote the stationary distribution and define for any . Recall that we define the spectral gap of as

and the relation between spectral gap and mixing time is described by the inequality (11). In words, if is close to zero, the chain requires a large number of steps to get close to the stationary distribution in total variation distance. Path methods are a class of techniques for finding bounds on by examining likely trajectories of the Markov chain. They are based on the following variational definition of (Levin and Peres, 2017, Lemma 13.6):

| (35) |

where , and is the Dirichlet form associated to the pair , defined as

| (36) |

where the inner product is defined by

We have the following equivalent characterizations of and (Levin and Peres, 2017, Lemma 13.6),

| (37) | |||

| (38) |

Given a sequence , we write with for , and we say is an edge of . We say is a path if does not contain duplicate edges. Let be the edge set for , which is defined by

| (39) |

Given , let

| (40) |

and let . Define by

| (41) |

We now present Proposition 1, which provides a bound on the spectral gap using paths and generalizes the multicommodity flow method of Sinclair (1992).

Proposition 1 (Theorem 3.2.9 in Giné et al. (1996)).

Let , , and for , define its -length by

| (42) |

Let a function satisfy that

| (43) |

Then , where

| (44) |

In Proposition 1, is called a weight function, and is called a flow function. Proposition 1 directly follows from (37), (38) and the following lemma, which will be used later for proving a similar result for restricted spectral gap in Section B (see Proposition 2).

Lemma 2.

Proof.

Given , let denote the increment of along . For any , we can write

By the Cauchy-Schwarz inequality,

Hence,

We sum over , which yields

which completes the proof. ∎

Remark 8.

Observe that in Lemma 2, one can replace with any function that maps from to . The conclusion still holds and the proof is identical.

A.2 Review of the Mixing Time Bound in Zhou and Chang (2023)

In this section, we review the proof techniques used in Zhou and Chang (2023) to derive the mixing time bound under the unimodal condition. Similar arguments will be used later in Section B.3 for proving some results of this work. Let the triple satisfy where and are defined in (12) and (8) respectively, and recall that we always assume

We begin by constructing the functions and for utilizing Proposition 1.

A.2.1 Constructing a flow

Assume that is unimodal on . We first present a general method for constructing flows by identifying likely paths leading to , and derive a simple upper bound on the maximum load of any edge. For this result, we only need to require .

Lemma 3 (Lemma B2 of Zhou and Chang (2023)).

Suppose satisfies . Let . Define

| (45) |

and let

| (46) |

be the resulting edge set. Write . There exists a flow such that for any ,

| (47) |

and for any and with ,

| (48) |

Proof.

Define an auxiliary transition matrix on by

| (49) |

Given , let be the reversed path of . We first construct a normalized flow function, denoted by , as follows.

-

(1)

If does not occur in or occurs at least twice in , let .

-

(2)

If , let .

-

(3)

If , let .

-

(4)

Define if for some , where and .

Remark 9.

This result can be generalized by replacing with any such that

-

(i)

for any ,

-

(ii)

whenever .

One can then define a transition matrix analogously to , which is a Markov chain with absorbing state . The same argument then completes the proof.

A.2.2 Defining a weight function

Consider defined in Lemma 3. For such that , we define

| (53) |

where is a constant and . Let be as defined in Lemma 3 with . For any with , we have

| (54) |

To obtain (54), we first decompose to two sub-paths such that and , which exists by our construction of . Letting , we obtain by the geometric series calculation that

Calculating in the same manner and using , we get (54).

A.2.3 Spectral gap bound

Using and constructed above, we can now derive a spectral gap bound.

Lemma 4.

Proof.

We first fix with . The case with can be analyzed in the same manner by symmetry. Observe that

For fixed with ,

where the first inequality is from (54) and the last inequality is due to (48) with the definition in (49).

Let denote the set of ancestors of in the graph (each edge is directed towards the state with larger posterior probability). Note that

only if or belongs to . Hence, summing over distinct and using for any , we get

Using , a routine geometric series calculation gives

(See also Lemma 3 of Zhou and Smith (2022).) Finally, set so that ; note that since . We then obtain the asserted result by taking maximum over . ∎

Appendix B Path Methods for Restricted Spectral Gaps

B.1 Restricted Spectral Gap

If there are isolated local modes, the spectral gap of the Markov chain can be extremely close to 0. This remains true even when all local modes (other than the global one) have negligible probability mass are highly unlikely to be visited by the chain if it is properly initialized. As a result, calculating the standard spectral gap bound yields an overly conservative estimate of the mixing time. To address this issue, we work with the restricted spectral gap, which was considered in Atchadé (2021).

We still use the notation introduced in Appendix A. Given a function , let

| (56) |

Given , define the -restricted spectral gap by

| (57) |

We note that if , coincides with . The following lemma is from Atchadé (2021), which shows that we can use restricted spectral gap to bound the mixing time given a sufficiently good initial distribution.

Lemma 5.

Let and be another distribution on . Define . Choose any , and let be such that . If satisfies

then for any such that

B.2 Multicommodity Flow Bounds

Given a subset , recall that we write

| (58) |

for each . Similarly, let

| (59) |

denote the edge set of restricted to . Given , let and denote the corresponding path sets. We provide the following proposition that extends the argument used in Proposition 1 to the restricted spectral gap.

Proposition 2.

Proof.

By Lemma 2, for any ,

where . If or , and thus . Hence,

Further, since ,

The result then follows from the definition of the restricted spectral gap . ∎

B.3 Application of the Multicommodity Flow Method under the Restricted Unimodal Condition

In this section, we assume and show that the multicommodity flow method reviewed in Section A.2 is also applicable to bounding the restricted spectral gap. For , we define

| (61) |

and

| (62) |

Let denote the resulting path set.

Lemma 6.

Appendix C Review of Single-element Drift Condition

The drift-and-minorization method is one of the most frequently used techniques for deriving the convergence rates of Markov chains on general state spaces. It directly bounds the total variation distance from the stationary distribution without using the spectral gap. For our purpose, we only need to use the single-element drift condition considered in Jerison (2016), which is particularly useful on discrete spaces.

Definition 1 (single-element drift condition).

The transition matrix satisfies a single-element drift condition if there exist an element , a function , and constants such that for all .

Note that in Jerison (2016), the single-element drift condition is proposed for general-state-space Markov chains, and one needs to further require that (this holds trivially in our case since is finite). Given this drift condition, we can bound the mixing time using the following result of Jerison (2016). Recall that we assume is reversible and thus its eigenvalues are all real.

Lemma 7 (Theorem 4.5 of Jerison (2016)).

Suppose has non-negative eigenvalues and satisfies the single-element drift condition given in Definition 1. Then, for all ,

Therefore, we have

Proof.

This directly follows from Theorem 4.5 of Jerison (2016) and the inequality for ∎

Appendix D Proofs

Proof of Lemma 1.

Since , we have

where the second equality follows from and . Combining the two inequalities, we get

Since and for any , we have

where the last inequality follows by assumption. ∎

Proof of Theorem 2.

Proof.

Fix any . By the definition of , there exists some such that . Hence, . Using and Lemma 1, we see that the acceptance probability for every equals one. Therefore, it only remains to prove that . On one hand, and implies that

On the other hand, since , we have

Since , , which completes the proof. ∎

Proof of Theorem 3.

For ,

Using , we can rewrite the above equation as

| (66) |

where we define

| (67) |

Since whenever , we have

| (68) | ||||

| (69) |

with and is given by (45). We now consider the two cases separately.

Case 2: . For , we simply use the worst-case bound . Since and , we have . It follows that

| (71) |

where the last inequality follows from the assumption . Since , we get

| (72) |

Proof of Theorem 4.

Let be the Dirac measure which assigns unit probability mass to some , and define . Hence, we can write . Applying Lemma 5 with , we obtain that

if . Now, we show that the restricted spectral gap is bounded as

We use Lemma 4 with the restricted edge set defined in (62) and Proposition 2. By setting in (63), we obtain

For any , define

The definition of implies that . Hence,

Using Proposition 2 yields the conclusion. ∎

References

- Anil Kumar and Ramesh [2001] VS Anil Kumar and Hariharan Ramesh. Coupling vs. conductance for the Jerrum–Sinclair chain. Random Structures & Algorithms, 18(1):1–17, 2001.

- Atchadé [2021] Yves F Atchadé. Approximate spectral gaps for Markov chain mixing times in high dimensions. SIAM Journal on Mathematics of Data Science, 3(3):854–872, 2021.

- Belloni and Chernozhukov [2009] Alexandre Belloni and Victor Chernozhukov. On the computational complexity of MCMC-based estimators in large samples. The Annals of Statistics, 37(4):2011–2055, 2009.

- Bhattacharya and Jones [2023] Riddhiman Bhattacharya and Galin L Jones. Explicit constraints on the geometric rate of convergence of random walk Metropolis-Hastings. arXiv preprint arXiv:2307.11644, 2023.

- Castillo and Ročková [2021] Ismael Castillo and Veronika Ročková. Uncertainty quantification for Bayesian CART. The Annals of Statistics, 49(6):3482–3509, 2021.

- Chang et al. [2022] Hyunwoong Chang, Changwoo Lee, Zhao Tang Luo, Huiyan Sang, and Quan Zhou. Rapidly mixing multiple-try Metropolis algorithms for model selection problems. Advances in Neural Information Processing Systems, 35:25842–25855, 2022.

- Chang et al. [2023] Hyunwoong Chang, James Cai, and Quan Zhou. Order-based structure learning without score equivalence. Biometrika, 2023. doi: https://doi.org/10.1093/biomet/asad052.

- Cheng et al. [2018] Xiang Cheng, Niladri S Chatterji, Peter L Bartlett, and Michael I Jordan. Underdamped Langevin MCMC: a non-asymptotic analysis. In Conference on learning theory, pages 300–323. PMLR, 2018.

- Chewi et al. [2021] Sinho Chewi, Chen Lu, Kwangjun Ahn, Xiang Cheng, Thibaut Le Gouic, and Philippe Rigollet. Optimal dimension dependence of the Metropolis-adjusted Langevin algorithm. In Conference on Learning Theory, pages 1260–1300. PMLR, 2021.

- Cowles and Carlin [1996] Mary Kathryn Cowles and Bradley P Carlin. Markov chain Monte Carlo convergence diagnostics: a comparative review. Journal of the American statistical Association, 91(434):883–904, 1996.

- Dalalyan [2017] Arnak S Dalalyan. Theoretical guarantees for approximate sampling from smooth and log-concave densities. Journal of the Royal Statistical Society Series B: Statistical Methodology, 79(3):651–676, 2017.

- Diaconis and Shahshahani [1981] Persi Diaconis and Mehrdad Shahshahani. Generating a random permutation with random transpositions. Zeitschrift für Wahrscheinlichkeitstheorie und verwandte Gebiete, 57(2):159–179, 1981.

- Donoho [1997] David L Donoho. CART and best-ortho-basis: a connection. The Annals of Statistics, 25(5):1870–1911, 1997.

- Duane et al. [1987] Simon Duane, Anthony D Kennedy, Brian J Pendleton, and Duncan Roweth. Hybrid Monte Carlo. Physics letters B, 195(2):216–222, 1987.

- Durmus and Moulines [2019] Alain Durmus and Eric Moulines. High-dimensional Bayesian inference via the unadjusted Langevin algorithm. Bernoulli, 25:2854–2882, 2019.

- Dwivedi et al. [2019] Raaz Dwivedi, Yuansi Chen, Martin J. Wainwright, and Bin Yu. Log-concave sampling: Metropolis-Hastings algorithms are fast. Journal of Machine Learning Research, 20(183):1–42, 2019.

- Fort et al. [2003] G Fort, E Moulines, Gareth O Roberts, and JS Rosenthal. On the geometric ergodicity of hybrid samplers. Journal of Applied Probability, 40(1):123–146, 2003.

- Gagnon et al. [2023] Philippe Gagnon, Florian Maire, and Giacomo Zanella. Improving multiple-try Metropolis with local balancing. Journal of Machine Learning Research, 24(248):1–59, 2023.

- Gelman and Rubin [1992] Andrew Gelman and Donald B Rubin. Inference from iterative simulation using multiple sequences. Statistical Science, 7(4):457–472, 1992.

- George and McCulloch [1997] Edward I George and Robert E McCulloch. Approaches for Bayesian variable selection. Statistica Sinica, pages 339–373, 1997.

- Giné et al. [1996] Evarist Giné, Geoffrey R Grimmett, and Laurent Saloff-Coste. Lectures on probability theory and statistics: École d’été de Probabilités de Saint-Flour XXVI-1996. Springer, 1996.

- Girolami and Calderhead [2011] Mark Girolami and Ben Calderhead. Riemann manifold Langevin and Hamiltonian Monte Carlo methods. Journal of the Royal Statistical Society Series B: Statistical Methodology, 73(2):123–214, 2011.

- Gong and Flegal [2016] Lei Gong and James M Flegal. A practical sequential stopping rule for high-dimensional Markov chain Monte Carlo. Journal of Computational and Graphical Statistics, 25(3):684–700, 2016.

- Grathwohl et al. [2021] Will Grathwohl, Kevin Swersky, Milad Hashemi, David Duvenaud, and Chris Maddison. Oops I took a gradient: scalable sampling for discrete distributions. In International Conference on Machine Learning, pages 3831–3841. PMLR, 2021.

- Griffin et al. [2021] Jim E Griffin, KG Łatuszyński, and Mark FJ Steel. In search of lost mixing time: adaptive Markov chain Monte Carlo schemes for Bayesian variable selection with very large . Biometrika, 108(1):53–69, 2021.

- Guan and Krone [2007] Yongtao Guan and Stephen M Krone. Small-world MCMC and convergence to multi-modal distributions: from slow mixing to fast mixing. The Annals of Applied Probability, 17(1):284–304, 2007.

- Guan and Stephens [2011] Yongtao Guan and Matthew Stephens. Bayesian variable selection regression for genome-wide association studies and other large-scale problems. The Annals of Applied Statistics, 5(3):1780, 2011.

- Guruswami [2000] Venkatesan Guruswami. Rapidly mixing Markov chains: A comparison of techniques. arXiv preprint arXiv:1603.01512, 2000.

- Hastings [1970] W Keith Hastings. Monte Carlo sampling methods using Markov chains and their applications. Biometrika, 57(1):97–109, 04 1970.

- Jarner and Hansen [2000] Søren Fiig Jarner and Ernst Hansen. Geometric ergodicity of Metropolis algorithms. Stochastic processes and their applications, 85(2):341–361, 2000.

- Jerison [2016] Daniel Jerison. The drift and minorization method for reversible Markov chains. Stanford University, 2016.

- Jerrum and Sinclair [1996] Mark Jerrum and Alistair Sinclair. The Markov chain Monte Carlo method: an approach to approximate counting and integration. Approximation algorithms for NP-hard problems, pages 482–520, 1996.

- Jerrum et al. [2004] Mark Jerrum, Jung-Bae Son, Prasad Tetali, and Eric Vigoda. Elementary bounds on Poincaré and log-Sobolev constants for decomposable Markov chains. The Annals of Applied Probability, pages 1741–1765, 2004.

- Johndrow et al. [2020] James Johndrow, Paulo Orenstein, and Anirban Bhattacharya. Scalable approximate MCMC algorithms for the horseshoe prior. Journal of Machine Learning Research, 21(73):1–61, 2020.

- Kim and Rockova [2023] Jungeum Kim and Veronika Rockova. On mixing rates for Bayesian CART. arXiv preprint arXiv:2306.00126, 2023.

- Lamnisos et al. [2009] Demetris Lamnisos, Jim E Griffin, and Mark FJ Steel. Transdimensional sampling algorithms for Bayesian variable selection in classification problems with many more variables than observations. Journal of Computational and Graphical Statistics, 18(3):592–612, 2009.

- Legramanti et al. [2022] Sirio Legramanti, Tommaso Rigon, Daniele Durante, and David B Dunson. Extended stochastic block models with application to criminal networks. The Annals of Applied Statistics, 16(4):2369, 2022.

- Levin and Peres [2017] David A Levin and Yuval Peres. Markov chains and mixing times, volume 107. American Mathematical Society, 2017.

- Li et al. [2023] Guanxun Li, Aaron Smith, and Quan Zhou. Importance is important: a guide to informed importance tempering methods. arXiv preprint arXiv:2304.06251, 2023.

- Liang et al. [2022] Xitong Liang, Samuel Livingstone, and Jim Griffin. Adaptive random neighbourhood informed Markov chain Monte Carlo for high-dimensional Bayesian variable selection. Statistics and Computing, 32(5):84, 2022.

- Liu et al. [2000] Jun S Liu, Faming Liang, and Wing Hung Wong. The multiple-try method and local optimization in Metropolis sampling. Journal of the American Statistical Association, 95(449):121–134, 2000.

- Madigan et al. [1995] David Madigan, Jeremy York, and Denis Allard. Bayesian graphical models for discrete data. International Statistical Review/Revue Internationale de Statistique, pages 215–232, 1995.

- Madras and Randall [2002] Neal Madras and Dana Randall. Markov chain decomposition for convergence rate analysis. The Annals of Applied Probability, pages 581–606, 2002.

- Mira [2001] Antonietta Mira. Ordering and improving the performance of Monte Carlo Markov chains. Statistical Science, pages 340–350, 2001.

- Narisetty and He [2014] Naveen Naidu Narisetty and Xuming He. Bayesian variable selection with shrinking and diffusing priors. The Annals of Statistics, 42(2):789–817, 2014.

- Peres and Sousi [2015] Yuval Peres and Perla Sousi. Mixing times are hitting times of large sets. Journal of Theoretical Probability, 28(2):488–519, 2015.

- Pollard and Yang [2019] David Pollard and Dana Yang. Rapid mixing of a Markov chain for an exponentially weighted aggregation estimator. arXiv preprint arXiv:1909.11773, 2019.

- Robert et al. [1999] Christian P Robert, George Casella, and George Casella. Monte Carlo statistical methods, volume 2. Springer, 1999.

- Roberts and Stramer [2002] Gareth O Roberts and Osnat Stramer. Langevin diffusions and Metropolis-Hastings algorithms. Methodology and computing in applied probability, 4:337–357, 2002.

- Roberts and Tweedie [1996] Gareth O Roberts and Richard L Tweedie. Geometric convergence and central limit theorems for multidimensional Hastings and Metropolis algorithms. Biometrika, 83(1):95–110, 1996.